Abstract

This research presents an approximate spectral method for the nonlinear time-fractional partial integro-differential equation with a weakly singular kernel (TFPIDE). The main idea of this approach is to set up a new Hilbert space whose elements satisfy the initial and boundary conditions. The new spectral collocation approach is applied to obtain accurate numerical approximations using new basis functions based on shifted first-kind Chebyshev polynomials (SCP1K). Furthermore, we support our study with a careful error analysis of the suggested shifted first-kind Chebyshev expansion. The results show that the new approach is highly accurate and effective.


1 Introduction

Spectral methods have been widely applied to obtain approximate solutions of various differential equations. Their underlying idea is to express the approximate solution of the problem as a truncated series of polynomials, which are often orthogonal. There are three well-known versions of spectral methods: Galerkin, tau, and collocation. In the Galerkin method, the basis functions are chosen to satisfy the initial and boundary conditions (Youssri et al. 2022; Atta et al. 2021, 2022a, b). When the basis functions do not satisfy these conditions, the tau method is a natural choice; see, for example, Azimi et al. (2022c), Atta et al. (2020, 2022d) and Lima et al. (2022). The collocation method is the most popular of the three and applies to a wide range of differential equations, including nonlinear ones. For articles that use the collocation approach, see Mahdy et al. (2022), Wu and Wang (2022), Taghipour and Aminikhah (2022a) and Atta et al. (2019).

Recently, fractional calculus has attracted the attention of many mathematicians and physicists. This branch studies differentiation and integration operators of arbitrary real order. Many physical systems are modeled by fractional partial differential equations; see Moghaddam and Machado (2017) and Mostaghim et al. (2018). An important class of problems involving fractional derivatives is the nonlinear TFPIDE. This problem arises in modeling heat transfer in materials with memory, population dynamics (Zheng et al. 2021), and nuclear reaction theory (Sanz-Serna 1988). It has also been studied numerically: for example, Guo et al. (2020) proposed a finite difference method for this problem, and Taghipour and Aminikhah (2022b) solved the nonlinear TFPIDE with a Pell collocation method.

The various kinds of Chebyshev polynomials have received great attention because of their importance in analysis, especially in numerical analysis. There are six classes of Chebyshev polynomials: first, second, third, fourth, fifth, and sixth kinds (Masjed-Jamei 2006). Many old and recent studies deal with these polynomials (Abd-Elhameed et al. 2016; Türk and Codina 2019; Abd-Elhameed and Youssri 2018, 2019; Abd-Elhameed 2021; Abd-Elhameed and Alkhamisi 2021; Atta et al. 2022a). In this paper, we focus on the first-kind Chebyshev polynomials and their shifted versions. These polynomials have many useful properties; in particular, they yield highly accurate approximations, and numerical methods built on them are simple to implement.

The main aims of this paper may be summarized as follows:

- To develop a new technique, based on the spectral collocation method, for solving the nonlinear TFPIDE via new basis functions built from the SCP1K.

- To analyze the error of the proposed method.

- To present some examples that check the applicability and accuracy of the scheme.

The paper is organized as follows: Section 2 summarizes the Caputo fractional derivative and some definitions and formulas related to the SCP1K. Section 3 presents a numerical technique for solving the nonlinear TFPIDE using the spectral collocation method. Section 4 studies the error analysis of the proposed double expansion. Section 5 gives some numerical examples that validate the theoretical results. Finally, Sect. 6 reports conclusions.

2 Preliminaries and essential relations

This section summarizes the Caputo fractional derivative and presents some properties and formulas related to a family of orthogonal polynomials, namely, the SCP1K.

2.1 Summary on the Caputo fractional derivative

Definition 1

(Podlubny 1998) The Caputo fractional derivative of order s is defined as:

\[ D_{z}^{s}f(z)=\frac{1}{\Gamma (m-s)}\int _{0}^{z}(z-t)^{m-s-1}f^{(m)}(t)\,{\textrm{d}}t, \]

where \(m-1\leqslant s<m,\quad m\in {\mathbb {N}}.\)

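The following properties are satisfied by the operator \(D_{z}^{s}\) for \(m-1\leqslant s<m,\quad m\in {\mathbb {N}}:\)

\[ D_{z}^{s}C=0\ \ (C \text{ a constant}),\qquad D_{z}^{s}z^{k}=\begin{cases} 0, & k\in {\mathbb {N}}_0,\ k<\lceil s\rceil ,\\ \dfrac{\Gamma (k+1)}{\Gamma (k+1-s)}\,z^{k-s}, & k\in {\mathbb {N}}_0,\ k\ge \lceil s\rceil , \end{cases} \]

where \({{\mathbb {N}}}=\{1,2,3,\ldots \},\) \({{\mathbb {N}}}_0=\{0\}\cup {\mathbb {N}} \) and the notation \(\lceil s\rceil \) denotes the ceiling function.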
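As a quick numerical check of the power rule, the following Python sketch (an illustration only, not the authors' Mathematica code) evaluates \(D_{z}^{s}z^{k}\) both from the closed form and directly from the integral definition with \(0<s<1\) (so \(m=1\)). The substitution \(u=(z-t)^{1-s}\) is our own choice: it cancels the weak singularity exactly, so plain composite Simpson quadrature suffices. The function names are hypothetical.

```python
from math import ceil, gamma

def caputo_power(k, s, z):
    """Closed-form Caputo derivative D_z^s of z**k (integer k >= 0) at z > 0."""
    if k < ceil(s):
        return 0.0  # terms of degree below ceil(s) are annihilated
    return gamma(k + 1) / gamma(k + 1 - s) * z ** (k - s)

def caputo_quadrature(df, s, z, n=2000):
    """Caputo derivative of order 0 < s < 1 from the definition with m = 1:
    (1/Gamma(1-s)) * int_0^z (z-t)**(-s) * f'(t) dt, where df is f'.

    Substituting u = (z - t)**(1 - s) cancels the kernel exactly, giving
    (1/(1-s)) * int_0^{z**(1-s)} f'(z - u**(1/(1-s))) du,
    evaluated with composite Simpson's rule (n must be even).
    """
    upper = z ** (1 - s)
    h = upper / n
    total = 0.0
    for i in range(n + 1):
        weight = 1 if i in (0, n) else (4 if i % 2 else 2)
        total += weight * df(z - (i * h) ** (1 / (1 - s)))
    return total * h / 3 / (1 - s) / gamma(1 - s)

# D^{0.5} of z**2 at z = 1: closed form versus quadrature of the definition.
print(caputo_power(2, 0.5, 1.0), caputo_quadrature(lambda t: 2 * t, 0.5, 1.0))
print(caputo_power(0, 0.5, 1.0))  # a constant is annihilated: 0.0
```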

2.2 Some properties and formulas of the SCP1K

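The SCP1K \(T^{*}_{m}(x)\) are defined on \([0,\ell ]\) as:

\[ T^{*}_{m}(x)=T_{m}\left( \frac{2\,x}{\ell }-1\right) , \]

where \(T_{m}\) denotes the first-kind Chebyshev polynomial of degree m. They satisfy the following orthogonality relation (Abd-Elhameed et al. 2016)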

where

and

Moreover, \(T^{*}_{m}(x)\) can be generated using the recurrence formula

\[ T^{*}_{m+1}(x)=2\left( \frac{2\,x}{\ell }-1\right) T^{*}_{m}(x)-T^{*}_{m-1}(x),\quad m\ge 1, \]

where \(T^{*}_{0}(x)=1,\quad T^{*}_{1}(x)=\frac{2\,x}{\ell }-1.\)
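The recurrence is straightforward to implement. The Python sketch below (hypothetical helper names) evaluates \(T^{*}_{m}(x)\) on \([0,\ell ]\) with the three-term recurrence and checks it against the trigonometric form \(\cos (m\arccos (2x/\ell -1))\). It also checks orthogonality numerically with Gauss-Chebyshev quadrature, assuming the standard weight \(1/\sqrt{\ell x-x^{2}}\); under that assumption the squared norms are \(\pi \) for \(m=0\) and \(\pi /2\) otherwise, which may differ from the normalization used in the paper.

```python
import math

def shifted_chebyshev(m, x, ell=1.0):
    """T*_m(x) on [0, ell] from T*_{k+1} = 2(2x/ell - 1) T*_k - T*_{k-1}."""
    y = 2.0 * x / ell - 1.0
    if m == 0:
        return 1.0
    prev, curr = 1.0, y  # T*_0(x), T*_1(x)
    for _ in range(m - 1):
        prev, curr = curr, 2.0 * y * curr - prev
    return curr

def weighted_inner(m, n, ell=1.0, nodes=32):
    """Gauss-Chebyshev approximation of int_0^ell T*_m T*_n / sqrt(ell*x - x**2) dx.

    Exact up to rounding whenever m + n <= 2*nodes - 1.
    """
    total = 0.0
    for k in range(1, nodes + 1):
        x = ell / 2.0 * (1.0 + math.cos((2 * k - 1) * math.pi / (2 * nodes)))
        total += shifted_chebyshev(m, x, ell) * shifted_chebyshev(n, x, ell)
    return math.pi / nodes * total

ell = 2.0
print(weighted_inner(0, 0, ell), weighted_inner(3, 3, ell), weighted_inner(2, 5, ell))
```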

One of the most important formulas of \(T^{*}_{m}(x)\) is the power form representation and its inversion formula (Abd-Elhameed and Youssri 2018)

where
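For reference, a commonly used explicit power form of the shifted first-kind Chebyshev polynomials on \([0,\ell ]\) is \(T^{*}_{m}(x)=m\sum _{k=0}^{m}(-1)^{m-k}\frac{(m+k-1)!\,2^{2k}}{(m-k)!\,(2k)!\,\ell ^{k}}\,x^{k}\) for \(m\ge 1,\) with \(T^{*}_{0}(x)=1\); the paper's notation for these coefficients may differ. The Python sketch below computes the coefficients exactly with `fractions.Fraction` and cross-checks them against the recurrence (function names are hypothetical).

```python
from fractions import Fraction
from math import factorial

def power_form(m, ell=1):
    """Exact coefficients c[k], lowest degree first, with T*_m(x) = sum_k c[k] x**k on [0, ell]."""
    if m == 0:
        return [Fraction(1)]
    ell = Fraction(ell)
    return [
        Fraction(m * (-1) ** (m - k) * factorial(m + k - 1) * 4 ** k,
                 factorial(m - k) * factorial(2 * k)) / ell ** k
        for k in range(m + 1)
    ]

def recurrence_form(m, ell=1):
    """Same coefficients from T*_{m+1} = 2(2x/ell - 1) T*_m - T*_{m-1}."""
    ell = Fraction(ell)
    prev, curr = [Fraction(1)], [Fraction(-1), 2 / ell]  # T*_0, T*_1
    if m == 0:
        return prev
    for _ in range(m - 1):
        nxt = [Fraction(0)] * (len(curr) + 1)
        for k, c in enumerate(curr):
            nxt[k] -= 2 * c            # -2 T*_m term
            nxt[k + 1] += 4 * c / ell  # (4x/ell) T*_m term
        for k, c in enumerate(prev):
            nxt[k] -= c                # -T*_{m-1} term
        prev, curr = curr, nxt
    return curr

print(power_form(2))  # [Fraction(1, 1), Fraction(-8, 1), Fraction(8, 1)]
```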

3 Collocation technique for handling the nonlinear TFPIDE

Herein, a numerical technique is presented to solve the nonlinear TFPIDE using the spectral collocation method.

Consider the following nonlinear TFPIDE:

subject to the following initial and boundary conditions:

where g(x, t) is the source term.

3.1 Basis functions

Suppose that

Then the orthogonality relations of these basis functions are given by:

and

where \(h_{\ell ,p}\) is as given in (1).

Now, define

then, any function \(\chi _{N}(x,t)\in \tilde{P_{N}}\) can be expanded as

where

and \({\hat{\varvec{\nu }}}=(\nu _{i,j})_{0\le i,j\le N}\) is the matrix of unknown coefficients of order \((N+1)\times (N+1).\)
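The trial space can be sketched concretely. The definitions of the basis functions are not reproduced above; as an assumption suggested by the factor \(x\,t\,(\ell -x)\) in Theorem 4, the Python sketch below takes \(\psi _{i}(x)=x(\ell -x)T^{*}_{i}(x)\) and \(\phi _{j}(t)=t\,T^{*}_{j}(t)\), with t also on \([0,\ell ]\) for simplicity. With these hypothetical choices, \(\chi _{N}(x,t)=\sum _{i,j}\nu _{i,j}\psi _{i}(x)\phi _{j}(t)\) vanishes at \(x=0,\) \(x=\ell \) and \(t=0\) for any coefficient matrix, which is the property the basis is designed to have.

```python
import math

def t_star(m, x, ell):
    """Shifted first-kind Chebyshev polynomial T*_m(x) = cos(m * arccos(2x/ell - 1))."""
    y = min(1.0, max(-1.0, 2 * x / ell - 1))  # clamp against rounding
    return math.cos(m * math.acos(y))

def psi(i, x, ell):
    # Hypothetical space basis: vanishes at x = 0 and x = ell.
    return x * (ell - x) * t_star(i, x, ell)

def phi(j, t, ell):
    # Hypothetical time basis: vanishes at t = 0.
    return t * t_star(j, t, ell)

def chi_N(nu, x, t, ell=1.0):
    """Trial solution sum_{i,j} nu[i][j] psi_i(x) phi_j(t), i.e. psi(x)^T V phi(t)."""
    n = len(nu)
    return sum(nu[i][j] * psi(i, x, ell) * phi(j, t, ell)
               for i in range(n) for j in range(n))

nu = [[0.3, -1.2, 0.5], [2.0, 0.1, -0.7], [0.4, 0.9, 1.1]]
print(chi_N(nu, 0.0, 0.6), chi_N(nu, 1.0, 0.6), chi_N(nu, 0.4, 0.0), chi_N(nu, 0.4, 0.6))
```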

3.2 Some formulas concerned with the basis functions

Lemma 1

(Abd-Elhameed et al. 2016) The first derivative of \(\psi _{r}(x)\) is

where

Theorem 1

The second derivative of \(\psi _{j}(x)\) is

where

Proof

Differentiating Eq. (4) with respect to the variable x, one has

where

Expanding the right-hand side of formula (5) and collecting similar terms leads to the following relation

where

Now, with the aid of Maple, the following sums can be reduced to the closed forms

and

and therefore, the following formula can be obtained

where

\(\square \)
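Because the basis functions are polynomials, formulas such as Lemma 1 and Theorem 1 can be spot-checked numerically. The Python sketch below, again assuming the hypothetical basis \(\psi _{r}(x)=x(\ell -x)T^{*}_{r}(x)\) (the paper's definition is not reproduced here), builds the power-basis coefficients of \(\psi _{r}\), differentiates them exactly, and compares the result with finite differences. It also checks \(\psi '_{r}(0)=\ell (-1)^{r}\), which follows from \(\psi '_{r}(0)=\ell \,T^{*}_{r}(0)\) under that assumption.

```python
def t_star_coeffs(m, ell):
    """Power-basis coefficients (lowest degree first) of T*_m on [0, ell], via the recurrence."""
    prev, curr = [1.0], [-1.0, 2.0 / ell]
    if m == 0:
        return prev
    for _ in range(m - 1):
        nxt = [0.0] * (len(curr) + 1)
        for k, c in enumerate(curr):
            nxt[k] -= 2.0 * c
            nxt[k + 1] += 4.0 * c / ell
        for k, c in enumerate(prev):
            nxt[k] -= c
        prev, curr = curr, nxt
    return curr

def poly_mul(a, b):
    out = [0.0] * (len(a) + len(b) - 1)
    for i, ai in enumerate(a):
        for j, bj in enumerate(b):
            out[i + j] += ai * bj
    return out

def poly_deriv(a):
    return [k * a[k] for k in range(1, len(a))] or [0.0]

def poly_eval(a, x):
    result = 0.0
    for c in reversed(a):  # Horner's scheme
        result = result * x + c
    return result

def psi_coeffs(r, ell):
    """Hypothetical basis psi_r(x) = x (ell - x) T*_r(x) = (ell x - x^2) T*_r(x)."""
    return poly_mul([0.0, ell, -1.0], t_star_coeffs(r, ell))

ell, x = 2.0, 0.7
for r in range(4):
    c = psi_coeffs(r, ell)
    print(r, poly_eval(poly_deriv(c), x), poly_eval(poly_deriv(poly_deriv(c)), x))
```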

Remark 1

Lemma 1 and Theorem 1 can be written in matrix form as:

where \({\textbf{Q}}=(q_{j,m})\) and \({\textbf{M}}=(m_{j,m})\) are matrices of order \((N+1) \times (N+1).\) In addition, \({\varvec{\zeta }}(x)=[\zeta _{0}(x),\zeta _{1}(x),\ldots ,\zeta _{N}(x)]^{\textrm{T}},\) \({\textbf{o}}(x)=[o_{0}(x),o_{1}(x),\ldots ,o_{N}(x)]^{\textrm{T}},\) and

Theorem 2

For \(0<\beta <1,\) the following approximation relation holds

where

Proof

Using the power form representation of \(\phi _{i}(t),\) we obtain

where

we can approximate \(t^{k+\beta +1}\) in the form

where

Using the power form representation of \(\phi _{i}(t)\) and integrating the right-hand side of Eq. (9) yields

Now, to reduce the summation in the right-hand side of Eq. (10), set

and use Zeilberger's algorithm (Koepf 1998) to show that \(H^{\beta }_{r,k}\) satisfies the following recurrence relation

which can be solved exactly to give

Therefore, Eq. (10) becomes

Now, inserting Eq. (8) into Eq. (6), the desired result of Theorem 2 may be obtained. \(\square \)
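The exact part of this argument rests on the Riemann-Liouville power rule \(I^{\beta }t^{n}=\frac{\Gamma (n+1)}{\Gamma (n+1+\beta )}t^{n+\beta }\), which, applied to the terms \(t^{k+1}\) in the power form of \(\phi _{i}(t)\), produces the powers \(t^{k+\beta +1}\) above. Assuming that the integral term of the TFPIDE is the Riemann-Liouville integral \(I^{\beta }f(t)=\frac{1}{\Gamma (\beta )}\int _{0}^{t}(t-s)^{\beta -1}f(s)\,{\textrm{d}}s\) (consistent with those powers, though the equation itself is not reproduced here), the Python sketch below checks the power rule against direct quadrature of the weakly singular integral. The substitution \(u=(t-s)^{\beta }\) is our own device for removing the singularity; all names are hypothetical.

```python
from math import gamma

def rl_integral_power(n, beta, t):
    """Riemann-Liouville integral I^beta of s**n at t: Gamma(n+1)/Gamma(n+1+beta) * t**(n+beta)."""
    return gamma(n + 1) / gamma(n + 1 + beta) * t ** (n + beta)

def rl_integral_quadrature(f, beta, t, n=2000):
    """(1/Gamma(beta)) * int_0^t (t-s)**(beta-1) f(s) ds for 0 < beta < 1.

    With u = (t - s)**beta the kernel cancels exactly:
    int_0^t (t-s)**(beta-1) f(s) ds = (1/beta) * int_0^{t**beta} f(t - u**(1/beta)) du,
    evaluated with composite Simpson's rule (n must be even).
    """
    upper = t ** beta
    h = upper / n
    total = 0.0
    for i in range(n + 1):
        weight = 1 if i in (0, n) else (4 if i % 2 else 2)
        total += weight * f(t - (i * h) ** (1 / beta))
    return total * h / 3 / beta / gamma(beta)

# With ell = 1, phi_1(s) = s * T*_1(s) = 2 s^2 - s, so I^beta acts term by term.
exact = 2 * rl_integral_power(2, 0.4, 0.8) - rl_integral_power(1, 0.4, 0.8)
print(exact, rl_integral_quadrature(lambda s: 2 * s ** 2 - s, 0.4, 0.8))
```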

Lemma 2

For \(0<\beta <1,\) the following approximation relation holds

where

Remark 2

Theorem 2 and Lemma 2 can be combined to give the following matrix form

where

Theorem 3

For \(0<\alpha <1,\) the following approximation formula is valid

where

Proof

Applying the operator \(D_{t}^{\alpha }\) to the power form representation of \(\phi _{i}(t)\) enables us to write

where \(\lambda _{k,i}\) is defined in (7).

Now, following steps similar to those in the proof of Theorem 2 to approximate \(t^{k+1-\alpha },\) the result of Theorem 3 can be easily obtained. \(\square \)
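The proofs of Theorems 2 and 3 replace the non-polynomial powers \(t^{k+\beta +1}\) and \(t^{k+1-\alpha }\) by truncated SCP1K expansions. The Python sketch below illustrates that step for \(t^{k+1-\alpha }\) with \(k=1,\) \(\alpha =0.5\). It assumes the standard Chebyshev weight and computes the expansion coefficients by Gauss-Chebyshev quadrature, whereas the paper obtains its coefficients in closed form; the helper names are hypothetical. The maximum error shrinks as more terms are kept.

```python
import math

def chebyshev_coeffs(f, M, ell=1.0, nodes=200):
    """Coefficients a_0..a_M of the truncated expansion f(t) ~ sum_j a_j T*_j(t) on [0, ell].

    a_j = (2 - delta_{j0}) / pi * int_0^ell f(t) T*_j(t) / sqrt(ell*t - t**2) dt, computed with
    a Gauss-Chebyshev rule in theta, where t = ell/2 * (1 + cos(theta)).
    """
    thetas = [(2 * k - 1) * math.pi / (2 * nodes) for k in range(1, nodes + 1)]
    values = [f(ell / 2 * (1 + math.cos(th))) for th in thetas]
    coeffs = []
    for j in range(M + 1):
        s = sum(v * math.cos(j * th) for v, th in zip(values, thetas))
        coeffs.append((1.0 if j == 0 else 2.0) * s / nodes)
    return coeffs

def eval_series(coeffs, t, ell=1.0):
    """Evaluate sum_j a_j T*_j(t) using T*_j(t) = cos(j * arccos(2t/ell - 1))."""
    theta = math.acos(min(1.0, max(-1.0, 2 * t / ell - 1)))
    return sum(a * math.cos(j * theta) for j, a in enumerate(coeffs))

def max_error(gamma_exp, M, ell=1.0, samples=401):
    """Maximum error on a uniform grid when t**gamma_exp is truncated after T*_M."""
    def f(t):
        return t ** gamma_exp
    coeffs = chebyshev_coeffs(f, M, ell)
    grid = [ell * i / (samples - 1) for i in range(samples)]
    return max(abs(eval_series(coeffs, t, ell) - f(t)) for t in grid)

# gamma_exp = k + 1 - alpha with k = 1 and alpha = 0.5
for M in (4, 8, 16):
    print(M, max_error(1.5, M))
```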

Lemma 3

For \(0<\alpha <1,\) the following approximation formula is valid

where

Remark 3

Theorem 3 and Lemma 3 can be combined to give the following matrix form

where

3.3 Collocation solution for nonlinear TFPIDE

Now, we are in a position to deduce our collocation scheme for treating the nonlinear TFPIDE in (2).

Remarks 1, 2, and 3 enable us to obtain the following approximations after approximating \(\chi (x,t)\) as in (3)

With the aid of the last relations (11), the residual \({\textbf{R}}(x,t)\) of Eq. (2) can be written as

Now, we require Eq. (12) to be satisfied exactly at the following roots

to obtain

Equation (13) produces a system of \((N+1)^{2}\) nonlinear algebraic equations in the \((N+1)^{2}\) unknown expansion coefficients \(\nu _{ij}\), which may be solved using Newton’s iterative method.
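Equation (13) is a square nonlinear system. The Python sketch below is a generic Newton iteration with a forward-difference Jacobian and Gaussian elimination, shown on a toy 2x2 system rather than the actual collocation equations, which depend on data not reproduced here. It also generates the roots of \(T^{*}_{N+1}\) on \([0,\ell ]\), assuming these are the collocation roots used in each variable. All names are hypothetical; a production solver would typically use an analytic Jacobian and a library linear solver.

```python
import math

def collocation_nodes(N, ell=1.0):
    """Roots of T*_{N+1} on [0, ell]: ell/2 * (1 + cos((2k+1) pi / (2N+2))), k = 0..N."""
    return [ell / 2 * (1 + math.cos((2 * k + 1) * math.pi / (2 * N + 2))) for k in range(N + 1)]

def solve_linear(A, b):
    """Gaussian elimination with partial pivoting on an augmented copy of [A | b]."""
    n = len(b)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[piv] = M[piv], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (M[r][n] - sum(M[r][c] * x[c] for c in range(r + 1, n))) / M[r][r]
    return x

def newton(F, v0, tol=1e-12, max_iter=50, h=1e-7):
    """Newton's method for F(v) = 0 with a forward-difference Jacobian."""
    v = list(v0)
    for _ in range(max_iter):
        Fv = F(v)
        if max(abs(f) for f in Fv) < tol:
            break
        n = len(v)
        J = [[0.0] * n for _ in range(n)]
        for j in range(n):
            vp = v[:]
            vp[j] += h
            Fp = F(vp)
            for i in range(n):
                J[i][j] = (Fp[i] - Fv[i]) / h
        delta = solve_linear(J, [-f for f in Fv])
        v = [vi + di for vi, di in zip(v, delta)]
    return v

# Toy system: x^2 + y^2 = 4 and x = y, whose positive root is (sqrt(2), sqrt(2)).
print(newton(lambda v: [v[0] ** 2 + v[1] ** 2 - 4.0, v[0] - v[1]], [1.0, 0.5]))
print(collocation_nodes(3))
```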

3.4 Transformation to homogeneous conditions

Consider the nonlinear TFPIDE

subject to the non-homogeneous initial and boundary conditions

Using the following transformation:

where \(f(0)=Z_{1}(0),\) the nonlinear TFPIDE (2) with non-homogeneous conditions is transformed into an equivalent problem with homogeneous conditions.
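The transformation itself is not reproduced above. One standard lifting with the same effect, which may differ from the paper's transformation, is \(w(x,t)=f(x)+(1-x/\ell )(Z_{1}(t)-Z_{1}(0))+(x/\ell )(Z_{2}(t)-Z_{2}(0))\). It assumes conditions of the form \(\chi (x,0)=f(x),\) \(\chi (0,t)=Z_{1}(t),\) \(\chi (\ell ,t)=Z_{2}(t)\) (the symbol \(Z_{2}\) is our own) and the compatibility relations \(f(0)=Z_{1}(0),\) \(f(\ell )=Z_{2}(0)\). Then \(\bar{\chi }=\chi -w\) satisfies homogeneous conditions, and substituting \(\chi =\bar{\chi }+w\) into (2) changes the equation only by known terms involving w. The Python sketch below checks this on data read off the exact solution of Example 4, with \(\ell =1\) assumed.

```python
import math

def lifting(f, Z1, Z2, ell):
    """Hypothetical lifting w(x, t) with w(x, 0) = f(x), w(0, t) = Z1(t), w(ell, t) = Z2(t).

    Valid when the data are compatible: f(0) = Z1(0) and f(ell) = Z2(0).
    """
    def w(x, t):
        return (f(x)
                + (1 - x / ell) * (Z1(t) - Z1(0))
                + (x / ell) * (Z2(t) - Z2(0)))
    return w

def chi(x, t):
    # Exact solution of Example 4.
    return t ** 3 * math.exp(x)

w = lifting(lambda x: 0.0, lambda t: t ** 3, lambda t: math.e * t ** 3, 1.0)

def chi_bar(x, t):
    # Shifted unknown; should satisfy homogeneous initial and boundary conditions.
    return chi(x, t) - w(x, t)

print(chi_bar(0.0, 0.5), chi_bar(1.0, 0.5), chi_bar(0.3, 0.0), chi_bar(0.5, 0.5))
```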

4 Error analysis

This section is devoted to the error analysis of the double expansion in the new SCP1K-based basis used in the approximation. The analysis builds on the results given in Abd-Elhameed et al. (2016). The expression \(z\lessapprox {\bar{z}}\) means \(z\le n\,{\bar{z}},\) where n is a generic positive constant independent of N and of any function.

Lemma 4

(Stewart 2015) Let f(x) be a continuous, positive, and decreasing function for \(x\ge n.\) If \(f(k)=a_{k}\) for \(k\ge n,\) the series \(\sum {a_{k}}\) is convergent, and \(R_{n}=\sum _{k=n+1}^{\infty }a_{k},\) then \(R_{n}\le \int _{n}^{\infty }f(x){\textrm{d}}\,x.\)
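A small numerical illustration of Lemma 4 in Python, with \(a_{k}=1/k^{2}\) and \(f(x)=1/x^{2}\), for which \(\int _{n}^{\infty }f(x)\,{\textrm{d}}x=1/n\). The tail is computed exactly from \(\sum _{k\ge 1}1/k^{2}=\pi ^{2}/6\); the lower bound \(R_{n}\ge \int _{n+1}^{\infty }f(x)\,{\textrm{d}}x=1/(n+1)\), which also follows from the integral test, is checked as well. The function names are hypothetical.

```python
import math

def tail_p2(n):
    """Exact tail R_n = sum_{k>n} 1/k**2, using sum_{k>=1} 1/k**2 = pi**2/6."""
    return math.pi ** 2 / 6 - sum(1.0 / k ** 2 for k in range(1, n + 1))

def integral_bound(n):
    """Lemma 4 with f(x) = 1/x**2: R_n <= int_n^inf dx/x**2 = 1/n."""
    return 1.0 / n

for n in (1, 10, 100, 1000):
    print(n, tail_p2(n), integral_bound(n))
```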

Theorem 4

Any function \(\chi (x,t)=x\,t\,(\ell -x)\,g_{1}(x)\,g_{2}(t)\in \tilde{P_{N}},\) where \(g_{1}(x)\) and \(g_{2}(t)\) have bounded second derivatives, can be expanded as:

The above series converges uniformly. Moreover, the expansion coefficients in (14) satisfy:

Proof

Using the separable form \(\chi (x,t)=x\,t\,(\ell -x)\,g_{1}(x)\,g_{2}(t)\) and following steps similar to those given in Abd-Elhameed et al. (2016), the desired result may be obtained. \(\square \)

Theorem 5

If \(\chi (x,t)\) satisfies the hypothesis of Theorem 4, and if \(\chi _{N}(x,t)=\sum _{r=0}^{N} \sum _{s=0}^{N}\nu _{rs}\, \psi _r(x)\,\phi _{s}(t),\) then the following truncation error estimate is satisfied

Proof

According to the definitions of \(\chi (x,t)\) and \(\chi _{N}(x,t),\) we have

Proceeding as in the proof of Theorem 4, which follows Abd-Elhameed et al. (2016), one easily obtains the following inequalities

Now, the direct application of inequalities (16), Theorem 4, and the two identities

leads to

Using Lemma 4 along with the following approximation

where f is a decreasing function, one has

This completes the proof of Theorem 5. \(\square \)

Remark 4

As Theorem 5 shows, the truncation error estimate (15) leads to an exponential rate of convergence.
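As a loose illustration only (it does not reproduce estimate (15)), the Python sketch below interpolates the smooth factor \(\sin (\pi x)\) from the exact solution of Example 1 at the roots of \(T^{*}_{N+1}\) on \([0,1]\) and reports the maximum error on a fine grid. For such an analytic function the error decreases geometrically in N. The barycentric formula with weights \((-1)^{j}\sin \theta _{j}\) for first-kind Chebyshev points is standard; the function name is hypothetical.

```python
import math

def cheb_interp_max_error(f, N, ell=1.0, samples=501):
    """Max error of the degree-N interpolant of f at the roots of T*_{N+1} on [0, ell]."""
    thetas = [(2 * j + 1) * math.pi / (2 * N + 2) for j in range(N + 1)]
    nodes = [ell / 2 * (1 + math.cos(th)) for th in thetas]
    # Barycentric weights for first-kind Chebyshev points; a common scale factor cancels.
    weights = [(-1) ** j * math.sin(th) for j, th in enumerate(thetas)]
    values = [f(x) for x in nodes]
    err = 0.0
    for i in range(samples):
        x = ell * i / (samples - 1)
        num = den = 0.0
        hit = None
        for xj, wj, fj in zip(nodes, weights, values):
            if x == xj:  # evaluation point coincides with a node
                hit = fj
                break
            c = wj / (x - xj)
            num += c * fj
            den += c
        p = hit if hit is not None else num / den
        err = max(err, abs(p - f(x)))
    return err

for N in (4, 6, 8, 10, 12):
    print(N, cheb_interp_max_error(lambda x: math.sin(math.pi * x), N))
```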

5 Illustrative examples

In this section, we apply the approximate spectral scheme presented in Sect. 3 to five examples. The results show that our method is applicable and effective, and they compare favorably with results in the literature, in particular those of Taghipour and Aminikhah (2022b).

Example 1

(Taghipour and Aminikhah 2022b; Guo et al. 2020) Consider the following equation

along with the following initial and boundary conditions:

where

The exact solution of this problem is \(\chi (x,t)=t^{3}\,\sin (\pi \,x).\)

Tables 1 and 2 compare the absolute errors of our method at \(N=12\) with those of the method developed in Taghipour and Aminikhah (2022b) for different values of \(\alpha \) and \(\beta .\) In addition, Fig. 1 shows the \(L_{\infty }\) error for the cases \(\alpha =0.7,\) \(\beta =0.4\) and \(\alpha =0.3,\) \(\beta =0.8\) at \(N=12.\) The approximate solution is very close to the exact one.

Fig. 1 The \(L_{\infty }\) error of Example 1

Example 2

(Taghipour and Aminikhah 2022b) Consider the following equation with the exact solution \(\chi (x,t)=t^2 \,x \,(1-x)\)

along with the following initial and boundary conditions:

where

Table 3 shows the absolute errors for different values of \(\alpha \) and \(\beta \) at \(N=1.\) These results show that our method is more accurate than the method of Taghipour and Aminikhah (2022b) (compare with Table 2 in that reference).

Example 3

(Akram et al. 2021; Guo et al. 2020) Consider the following equation

along with the following initial and boundary conditions:

where

The exact solution of this problem is \(\chi (x,t)=\left( t^{\frac{5}{2}}+1\right) \, x^2 \,(1-x)^2.\)

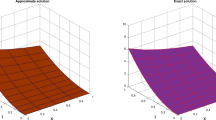

Table 4 compares the absolute errors of our method at \(N=12\) with those of the method developed in Akram et al. (2021) at \(\beta =0.15\) for different values of \(\alpha .\) Also, Fig. 2 shows the \(L_{\infty }\) error for \(\alpha =0.95,\) \(\beta =0.15\) and \(N=12.\)

Fig. 2 The \(L_{\infty }\) error of Example 3

Example 4

(Taghipour and Aminikhah 2022b) Consider the following equation

along with the following initial and boundary conditions:

where

The exact solution of this problem is \(\chi (x,t)=t^3\, e^x.\)

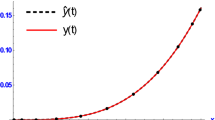

Table 5 shows the absolute errors at \(N=9\) and \(N=12\) for \(\alpha =\beta =0.5,\) and Table 6 presents the maximum absolute errors at \(\alpha =0.9\) and \(\beta =0.3.\) The results in Tables 5 and 6 show that our method is more accurate than the method of Taghipour and Aminikhah (2022b) (compare with Table 4 in that reference). Figure 3 shows the maximum absolute errors for small values of N, indicating that our method already agrees well with the analytical solution at low N.

Example 5

(Taghipour and Aminikhah 2022b) Consider the following equation

along with the following initial and boundary conditions:

where

The exact solution of this problem is \(\chi (x,t)=t^2 \,(1-x)\, \cos (x).\)

Fig. 3 The maximum absolute errors of Example 4

Fig. 4 (Left) Approximate solution, (Right) \(L_{\infty }\) error for Example 5

Figure 4 shows the approximate solution and the \(L_{\infty }\) error for \(\alpha =\beta =0.7\) at \(N=10.\) In addition, Table 7 displays the absolute errors at \(\alpha =0.6,\) \(\beta =0.9\) and \(N=10,\) and Table 8 shows the absolute errors for different values of N when \(\alpha =0.3,\) \(\beta =0.8.\) Finally, Table 9 compares the \(L_{2}\) errors at \(\alpha =\beta =0.5.\) The approximate solution is very close to the exact one.

6 Concluding remarks

This paper presents suitable basis functions for solving the nonlinear TFPIDE subject to homogeneous initial and boundary conditions. Based on these basis functions, we developed new operational matrices of differentiation and integration that yield the approximate spectral solution. Moreover, the error of the suggested approximate double expansion was analyzed in depth. Finally, the numerical examples illustrated the high accuracy, applicability, and efficiency of the presented technique. In future work, we aim to combine the theoretical results developed in this paper with suitable spectral methods to treat other problems numerically. All codes were written and debugged in Mathematica 11 on an HP Z420 Workstation (processor: Intel(R) Xeon(R) CPU E5-1620, 3.6 GHz; 16 GB DDR3 RAM; 512 GB storage).

References

Abd-Elhameed WM (2021) Novel expressions for the derivatives of sixth kind Chebyshev polynomials: spectral solution of the non-linear one-dimensional Burgers’ equation. Fractal Fract 5(2):53

Abd-Elhameed WM, Alkhamisi SO (2021) New results of the fifth-kind orthogonal Chebyshev polynomials. Symmetry 13(12):2407

Abd-Elhameed WM, Youssri YH (2018) Fifth-kind orthonormal Chebyshev polynomial solutions for fractional differential equations. Comput Appl Math 37:2897–2921

Abd-Elhameed WM, Youssri YH (2019) Sixth-kind Chebyshev spectral approach for solving fractional differential equations. Int J Nonlinear Sci Numer Simul 20(2):191–203

Abd-Elhameed WM, Doha EH, Youssri YH, Bassuony MA (2016) New Tchebyshev–Galerkin operational matrix method for solving linear and nonlinear hyperbolic telegraph type equations. Numer Methods Partial Differ Equ 32(6):1553–1571

Akram T, Ali Z, Rabiei F, Shah K, Kumam P (2021) A numerical study of nonlinear fractional order partial integro-differential equation with a weakly singular kernel. Fractal Fract 5(3):85

Atta AG, Moatimid GM, Youssri YH (2019) Generalized Fibonacci operational collocation approach for fractional initial value problems. Int J Appl Comput Math 5(1):1–11

Atta AG, Moatimid GM, Youssri YH (2020) Generalized Fibonacci operational tau algorithm for fractional Bagley–Torvik equation. Prog Fract Differ Appl 6:215–224

Atta AG, Abd-Elhameed WM, Moatimid GM, Youssri YH (2021) Shifted fifth-kind Chebyshev Galerkin treatment for linear hyperbolic first-order partial differential equations. Appl Numer Math 167:237–256

Atta AG, Abd-Elhameed WM, Moatimid GM, Youssri YH (2022a) A fast Galerkin approach for solving the fractional Rayleigh–Stokes problem via sixth-kind Chebyshev polynomials. Mathematics 10(11):1843

Atta AG, Abd-Elhameed WM, Youssri YH (2022b) Shifted fifth-kind Chebyshev polynomials Galerkin-based procedure for treating fractional diffusion-wave equation. Int J Mod Phys C 33(08):2250102

Azimi R, Mohagheghy Nezhad M, Pourgholi R (2022c) Legendre spectral tau method for solving the fractional integro-differential equations with a weakly singular kernel. Glob Anal Discret Math. https://doi.org/10.22128/GADM.2022.490.1063

Atta AG, Abd-Elhameed WM, Moatimid GM, Youssri YH (2022d) Advanced shifted sixth-kind Chebyshev tau approach for solving linear one-dimensional hyperbolic telegraph type problem. Math Sci. https://doi.org/10.1007/s40096-022-00460-6

Guo J, Xu D, Qiu W (2020) A finite difference scheme for the nonlinear time-fractional partial integro-differential equation. Math Methods Appl Sci 43(6):3392–3412

Koepf W (1998) Hypergeometric summation: an algorithmic approach to summation and special function identities. Vieweg, Braunschweig

Lima N, Matos JAO, Matos JMA, Vasconcelos PB (2022) A time-splitting tau method for PDE’s: a contribution for the spectral tau toolbox library. Math Comput Sci 16(1):1–11

Mahdy AMS, Mohamed MS, Al Amiri AY, Gepreel KA (2022) Optimal control and spectral collocation method for solving smoking models. Intell Autom Soft Comput 31(2):899–915

Masjed-Jamei M (2006) Some new classes of orthogonal polynomials and special functions: a symmetric generalization of Sturm–Liouville problems and its consequences. PhD thesis

Moghaddam BP, Machado JAT (2017) Time analysis of forced variable-order fractional van der Pol oscillator. Eur Phys J Spec Top 226(16):3803–3810

Mostaghim ZS, Moghaddam BP, Haghgozar HS (2018) Numerical simulation of fractional-order dynamical systems in noisy environments. Comput Appl Math 37(5):6433–6447

Podlubny I (1998) Fractional differential equations: an introduction to fractional derivatives, fractional differential equations, to methods of their solution and some of their applications. Elsevier, San Diego

Sanz-Serna JM (1988) A numerical method for a partial integro-differential equation. SIAM J Numer Anal 25(2):319–327

Stewart J (2015) Single variable calculus: early transcendentals. Cengage Learning, Boston

Taghipour M, Aminikhah H (2022a) A fast collocation method for solving the weakly singular fractional integro-differential equation. Comput Appl Math 41(4):1–38

Taghipour M, Aminikhah H (2022b) Pell collocation method for solving the nonlinear time-fractional partial integro-differential equation with a weakly singular kernel. J Funct Spaces 2022, Article ID 8063888. https://doi.org/10.1155/2022/8063888

Türk Ö, Codina R (2019) Chebyshev spectral collocation method approximations of the Stokes eigenvalue problem based on penalty techniques. Appl Numer Math 145:188–200

Wu C, Wang Z (2022) The spectral collocation method for solving a fractional integro-differential equation. AIMS Math 7(6):9577–9587

Youssri YH, Abd-Elhameed WM, Atta AG (2022) Spectral Galerkin treatment of linear one-dimensional telegraph type problem via the generalized Lucas polynomials. Arab J Math 11(3):601–615

Zheng X, Qiu W, Chen H (2021) Three semi-implicit compact finite difference schemes for the nonlinear partial integro-differential equation arising from viscoelasticity. Int J Model Simul 41(3):234–242

Funding

Open access funding provided by The Science, Technology & Innovation Funding Authority (STDF) in cooperation with The Egyptian Knowledge Bank (EKB).


Ethics declarations

Conflict of interest

The authors declare that they have no competing interests.

Additional information

Communicated by Hui Liang.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Atta, A.G., Youssri, Y.H. Advanced shifted first-kind Chebyshev collocation approach for solving the nonlinear time-fractional partial integro-differential equation with a weakly singular kernel. Comp. Appl. Math. 41, 381 (2022). https://doi.org/10.1007/s40314-022-02096-7


DOI: https://doi.org/10.1007/s40314-022-02096-7

Keywords

- Nonlinear time-fractional partial integro-differential equation with a weakly singular kernel

- First-kind Chebyshev polynomials

- Collocation method

- Error analysis