Abstract

Side weirs are widely used in irrigation channels, drainage systems and sewage disposal canals to control and adjust the flow in main channels. In this study, a new artificial intelligence model entitled “self-adaptive extreme learning machine” (SAELM) is developed for simulating the discharge coefficient of side weirs located on rectangular convergent channels. In addition, Monte Carlo simulations are implemented to assess the abilities of the numerical models, and the k-fold cross-validation approach is used to validate their results. Based on the parameters affecting the discharge coefficient, six artificial intelligence models are defined. The examination of the numerical models shows that they simulate the discharge coefficient values with acceptable accuracy. For instance, the mean absolute error and root mean square error of the superior model are 0.022 and 0.027, respectively. The best SAELM model predicts the discharge coefficient in terms of the Froude number (Fd), the ratio of the side weir height to the downstream depth (w/hd), the ratio of the downstream channel width to the downstream depth (bd/hd) and the ratio of the side weir length to the downstream depth (L/hd). Based on the sensitivity analysis results, the Froude number downstream of the side weir is identified as the most influential input parameter. Lastly, a matrix formulation is presented to estimate the discharge coefficient of side weirs on convergent channels.

Introduction

Side orifices, side sluice gates and side weirs are diversion structures usually installed on the wall of the main channel to divert and control the flow into a lateral channel. Such hydraulic structures have many applications in environmental engineering and irrigation practice and are also used in wastewater treatment plants, land drainage, sedimentation tanks, aeration ponds, irrigation networks and flocculation units. Numerous studies have been conducted on diversion structures. In particular, researchers such as Ghodsian (2003), Kra and Merkley (2004), Lewis et al. (2011), Granata et al. (2013), Azimi et al. (2014, 2015), Nezami et al. (2015), Maranzoni et al. (2017) and Karimi et al. (2018) have carried out laboratory, analytical and numerical investigations on the hydraulic behavior of side weirs. Hussein et al. (2010) experimentally studied the parameters affecting the discharge through circular sharp-crested side orifices. They derived a relationship for the discharge coefficient of this type of side orifice as a function of the Froude number and the ratio of the orifice diameter to the main channel width. Hussein et al. (2011) experimentally studied the properties of the flow in a main channel with rectangular sharp-crested side orifices, as well as the parameters affecting the flow through such orifices. They presented an equation for the discharge coefficient of these side orifices as a function of the Froude number and the ratio of the orifice width to the main channel width.

Recently, artificial intelligence techniques such as artificial neural networks and fuzzy logic have been used as powerful tools for modeling, pattern recognition and solving complex nonlinear problems (Zambrano et al. 2019; Gholami et al. 2019; Parsaie and Haghiabi 2019). Emiroglu et al. (2010) proposed an equation for the discharge coefficient of triangular labyrinth side weirs placed on a straight rectangular channel under subcritical flow conditions using an adaptive neuro-fuzzy inference system (ANFIS). Bilhan et al. (2010) compared the experimental discharge coefficients of sharp-crested side weirs located on the wall of a rectangular channel under subcritical flow conditions with those predicted by different neural network techniques. Dursun et al. (2012) estimated the discharge coefficient of semi-elliptical side weirs located on a straight rectangular channel using ANFIS.

As discussed above, side weirs are widely used to convey and divert excess flows in urban sewage disposal systems and serve as flow control structures in flood control systems.

Furthermore, Ebtehaj et al. (2015) predicted the discharge coefficient of rectangular side weirs in a main flume using gene expression programming (GEP). A hybrid meta-heuristic artificial intelligence approach was developed by Khoshbin et al. (2016) to estimate the discharge coefficient of side weirs located on rectangular canals. Akhbari et al. (2017) applied radial basis function neural networks (RBNN) and the M5′ approach to model the discharge coefficient of triangular weirs in a rectangular conduit. Azimi and Shabanlou (2018) simulated the flow turbulence and the water surface near a side weir in circular channels under supercritical flow using the renormalization group (RNG) k–ε turbulence model and the volume of fluid (VOF) method. Using the support vector machine (SVM), Azimi et al. (2019) modeled the discharge coefficients of side weirs installed on trapezoidal channels.

Furthermore, the use of various artificial intelligence (AI) techniques to model problems ranging from hydrology to hydraulics is increasing steadily. The discharge coefficient is one of the most important factors in the proper design of a side weir. Simulating the discharge coefficient of side weirs located on convergent channels with a new AI approach, the so-called “self-adaptive extreme learning machine”, is therefore of interest to both scholars and engineers. In this study, for the first time, the discharge coefficient of side weirs located on convergent channels is simulated with the novel self-adaptive extreme learning machine.

Materials and methods

Extreme learning machine (ELM)

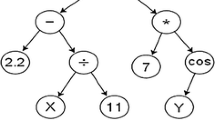

The extreme learning machine (ELM), a single-hidden-layer feed-forward neural network, was first proposed by Huang et al. (2004). The ELM assigns the input weights randomly and determines the output weights analytically. The only difference between the ELM and the conventional single-hidden-layer feed-forward neural network (SLFFNN) is that no bias is used for the output neuron. Each input layer neuron is connected to all hidden layer neurons, and each hidden layer neuron has a bias. The activation function of the hidden neurons can be any piecewise continuous function, whereas the output layer neurons use a linear function. Because the ELM computes the output weights analytically instead of tuning all weights and biases iteratively, the learning time of the network decreases. The output of a feed-forward neural network with L hidden nodes is expressed as follows (Huang et al. 2004):

\[ f_L(x) = \sum_{i=1}^{L} \beta_i \, G(a_i, b_i, x) \]

where βi is the weight between the ith hidden node and the output node, ai (\( a_{i} \in R^{n} \)) and bi are the learning parameters of the ith hidden node, and G(ai, bi, x) is the output of the ith hidden node for the input x. For an additive hidden node with activation function g(x) (which can take many forms), G(ai, bi, x) is written as follows (Huang et al. 2004):

\[ G(a_i, b_i, x) = g(a_i \cdot x + b_i) \]

Activation functions are used to calculate the response of neurons. The behavior of a neuron consists of two parts: the weighted sum of its inputs and the activation function. When a set of weighted input signals is applied, the activation function produces the response. Neurons of the same layer use the same activation function, which may be linear or nonlinear: a linear function plots as a straight line, whereas a nonlinear function plots as a curve. Since the number of input and output variables is not the same in nonlinear functions, classification issues are common among them (Pandey and Govind 2016). The nonlinear ELM activation functions discussed in this paper are the step function (hardlim), sigmoid (sig), sinusoidal (sin), triangular basis (tribas) and radial basis (radbas) functions. In the ELM, the weights between the input and hidden layer neurons and the biases of the hidden layer neurons are allocated randomly. For an ELM network with j neurons in the hidden layer, i input neurons and k learning samples, the activation of the hidden layer neurons for each learning sample is computed as follows (Huang et al. 2004):

\[ H_{jk} = g\left( \sum_{i} W_{ji} X_{ik} + B_{j} \right) \]
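For reference, the five activation functions named above can be written out explicitly. This sketch assumes the definitions of the MATLAB functions of the same names (hardlim, tribas, radbas) and the logistic sigmoid; the ELM code used in the study may differ in details such as the step value at zero.

```python
import math

# Hidden-neuron activation functions named in the text. Definitions follow the
# MATLAB functions of the same names (an assumption, not taken from the paper).

def sig(n: float) -> float:
    """Logistic sigmoid 1 / (1 + exp(-n)), written to avoid overflow for large |n|."""
    if n >= 0:
        return 1.0 / (1.0 + math.exp(-n))
    e = math.exp(n)
    return e / (1.0 + e)

def sin(n: float) -> float:
    """Sinusoidal activation."""
    return math.sin(n)

def hardlim(n: float) -> float:
    """Hard-limit (step) function: 1 for n >= 0, otherwise 0."""
    return 1.0 if n >= 0 else 0.0

def tribas(n: float) -> float:
    """Triangular basis function: 1 - |n| on [-1, 1], 0 elsewhere."""
    return max(0.0, 1.0 - abs(n))

def radbas(n: float) -> float:
    """Radial basis function: exp(-n^2)."""
    return math.exp(-n * n)

ACTIVATIONS = {"sig": sig, "sin": sin, "hardlim": hardlim,
               "tribas": tribas, "radbas": radbas}

if __name__ == "__main__":
    for name, g in ACTIVATIONS.items():
        print(name, [round(g(x), 3) for x in (-1.0, 0.0, 0.5)])
```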

where g(·) can be any nonlinear continuous activation function, Wji is the weight between the ith input neuron and the jth hidden layer neuron, Bj is the bias of the jth hidden layer neuron, Xik is the ith input of the kth learning sample and Hjk is the activation of the jth hidden layer neuron for the kth learning sample, so that the activations of all hidden layer neurons for all learning samples are collected in the matrix H. In this matrix, j and k index the columns and rows, respectively. H is referred to as the hidden layer output matrix of the neural network. The weights between the hidden and output layer neurons are obtained by a least-squares fit of the target values of the learning samples to the outputs of the hidden layer neurons. In matrix form (Huang et al. 2004):

\[ H\beta = T \]

where β denotes the weights between the hidden layer neurons and the output layer neuron, and the vector T contains the target values of the learning samples, expressed as follows (Huang et al. 2004):

Finally, the output weights are calculated as follows (Huang et al. 2004):

\[ \beta = H' T \]

where

In these equations, \( \tilde{a} = a_{1}, \ldots, a_{L};\ \tilde{b} = b_{1}, \ldots, b_{L};\ \tilde{x} = x_{1}, \ldots, x_{L} \), β is the weight vector between the hidden and output layer neurons, H′ is the Moore–Penrose inverse of the matrix H, and T is the vector of target values of the learning samples. The ELM learning process therefore consists of two stages: (1) random allocation of the input weights and biases of the hidden layer neurons and calculation of the hidden layer output matrix H, and (2) calculation of the output weights using the Moore–Penrose inverse of H and the target values of the learning samples. Computing the Moore–Penrose inverse of the hidden layer matrix H is fast and involves no nonlinear optimization step, so the procedure is faster than common iteration-based algorithms such as Levenberg–Marquardt. Therefore, the learning time of the network is noticeably reduced (Huang et al. 2006). The ELM relies on a large number of random nonlinear mappings of the input space, each hidden neuron being associated with a single random draw of input weights and bias.
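The two-stage procedure described in this paragraph can be sketched in a few lines of NumPy. This is a minimal illustration, not the authors' code: it assumes a sigmoid hidden layer, input weights and biases drawn uniformly from [-1, 1] and a single output, and it uses `np.linalg.pinv` for the Moore–Penrose inverse H′. The synthetic target is for illustration only.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def elm_fit(X, t, n_hidden, rng):
    """Train a single-output ELM.

    X: (N, n) inputs, t: (N,) targets.
    Stage 1: random input weights a_i and biases b_i (never tuned afterwards).
    Stage 2: output weights beta = pinv(H) @ T, the least-squares solution of H beta = T.
    """
    n_features = X.shape[1]
    A = rng.uniform(-1.0, 1.0, size=(n_features, n_hidden))  # input weights
    b = rng.uniform(-1.0, 1.0, size=n_hidden)                 # hidden biases
    H = sigmoid(X @ A + b)          # (N, L) hidden layer output matrix: rows = samples
    beta = np.linalg.pinv(H) @ t    # Moore-Penrose solution
    return A, b, beta

def elm_predict(X, A, b, beta):
    """f_L(x) = sum_i beta_i * g(a_i . x + b_i); linear output without bias."""
    return sigmoid(X @ A + b) @ beta

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    X = rng.uniform(0.0, 1.0, size=(200, 2))
    t = np.sin(2 * X[:, 0]) + 0.5 * X[:, 1]   # synthetic target, illustration only
    A, b, beta = elm_fit(X, t, n_hidden=20, rng=rng)
    rmse = np.sqrt(np.mean((elm_predict(X, A, b, beta) - t) ** 2))
    print(f"training RMSE: {rmse:.4f}")
```

No iterative weight update appears anywhere: the only "training" is one pseudo-inverse, which is where the speed advantage over gradient-based methods comes from.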

Differential evolution

The differential evolution (DE) method, proposed by Storn and Price (1997), is a relatively recent metaheuristic optimization technique. In recent years, DE has been recognized as a powerful and fast method for optimization problems in continuous spaces, with a good ability to optimize nonlinear and non-differentiable functions. Like other evolutionary algorithms, DE starts by creating an initial population. Then, mutation and crossover (recombination) operators are applied to form offspring (trial vectors). In the subsequent selection stage, each offspring is compared with its parent in terms of fitness, measured by the objective function, and the better of the two moves on to the next generation. This process continues until the desired results are achieved.
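The generic loop just described (initialization, mutation, crossover, selection) is sketched below as the classic DE/rand/1/bin variant of Storn and Price (1997), in plain Python. The control parameters F = 0.5 and CR = 0.9, the clipping to the search bounds and the fixed number of generations used as the stopping rule are illustrative assumptions; the sphere function in the example is only a test objective.

```python
import random

def differential_evolution(f, bounds, pop_size=20, F=0.5, CR=0.9,
                           generations=200, seed=0):
    """Minimize f over a box with the classic DE/rand/1/bin scheme.

    f: objective taking a list of floats; bounds: list of (low, high) pairs.
    Returns (best_vector, best_cost).
    """
    rng = random.Random(seed)
    dim = len(bounds)
    # Initial population: uniform random vectors inside the bounds.
    pop = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(pop_size)]
    cost = [f(x) for x in pop]
    for _ in range(generations):
        new_pop, new_cost = [row[:] for row in pop], cost[:]
        for i in range(pop_size):
            # Mutation: combine three distinct members other than the parent i.
            r1, r2, r3 = rng.sample([j for j in range(pop_size) if j != i], 3)
            mutant = [pop[r1][d] + F * (pop[r2][d] - pop[r3][d]) for d in range(dim)]
            # Binomial crossover; index j_rand always comes from the mutant.
            j_rand = rng.randrange(dim)
            trial = []
            for d, (lo, hi) in enumerate(bounds):
                v = mutant[d] if (rng.random() < CR or d == j_rand) else pop[i][d]
                trial.append(min(max(v, lo), hi))  # clip to the search box
            # Selection: the trial enters the next generation if it is no worse.
            c = f(trial)
            if c <= cost[i]:
                new_pop[i], new_cost[i] = trial, c
        pop, cost = new_pop, new_cost
    best = min(range(pop_size), key=lambda i: cost[i])
    return pop[best], cost[best]

if __name__ == "__main__":
    sphere = lambda x: sum(v * v for v in x)
    x_best, f_best = differential_evolution(sphere, [(-5.0, 5.0)] * 3)
    print(x_best, f_best)
```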

Self-adaptive extreme learning machine

A self-adaptive version of the differential evolution algorithm can overcome limitations such as the manual selection of the control parameters and of the trial vector generation strategy. On this basis, the self-adaptive extreme learning machine (SAELM) algorithm was proposed by Cao et al. (2012) to optimize the network input weights and hidden node biases. Given the training dataset, L hidden nodes and the activation function g(x), the SAELM algorithm is formulated as follows. First, an initial population of NP vectors is generated, each vector encoding the input weights and biases of the L hidden nodes.
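The population-based training described above can be sketched as follows. The sketch assumes a sigmoid hidden layer and uses the validation RMSE as fitness; each population vector stores the input weights and biases of the L hidden nodes, while the output weights are obtained from the Moore–Penrose inverse, as in the ELM. For brevity, the self-adaptation shown is a jDE-style rule in which each individual carries its own F and CR, occasionally resampled and kept only when they produce a better trial vector. This is a simpler, swapped-in scheme, not the adaptation of Cao et al. (2012), which also selects among several trial vector strategies. All names and settings are illustrative.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def decode(vec, n_in, n_hidden):
    """Split a population vector into input weights (n_in x L) and biases (L,)."""
    return vec[: n_in * n_hidden].reshape(n_in, n_hidden), vec[n_in * n_hidden:]

def output_weights(X, t, A, b):
    """ELM step: least-squares output weights via the Moore-Penrose inverse."""
    return np.linalg.pinv(sigmoid(X @ A + b)) @ t

def rmse(X, t, A, b, beta):
    return float(np.sqrt(np.mean((sigmoid(X @ A + b) @ beta - t) ** 2)))

def saelm(X_tr, t_tr, X_val, t_val, n_hidden=6, pop_size=20, generations=50, seed=0):
    rng = np.random.default_rng(seed)
    n_in = X_tr.shape[1]
    dim = n_in * n_hidden + n_hidden
    pop = rng.uniform(-1.0, 1.0, size=(pop_size, dim))
    F = np.full(pop_size, 0.5)    # per-individual scale factors
    CR = np.full(pop_size, 0.9)   # per-individual crossover rates

    def fitness(vec):
        A, b = decode(vec, n_in, n_hidden)
        return rmse(X_val, t_val, A, b, output_weights(X_tr, t_tr, A, b))

    cost = np.array([fitness(v) for v in pop])
    for _ in range(generations):
        for i in range(pop_size):
            # jDE-style self-adaptation (assumption, see lead-in).
            Fi = rng.uniform(0.1, 1.0) if rng.random() < 0.1 else F[i]
            CRi = rng.random() if rng.random() < 0.1 else CR[i]
            others = [j for j in range(pop_size) if j != i]
            r1, r2, r3 = rng.choice(others, 3, replace=False)
            mutant = pop[r1] + Fi * (pop[r2] - pop[r3])
            mask = rng.random(dim) < CRi
            mask[rng.integers(dim)] = True          # at least one mutant gene
            trial = np.where(mask, mutant, pop[i])
            c = fitness(trial)
            if c <= cost[i]:  # members replaced immediately; successful F, CR inherited
                pop[i], cost[i], F[i], CR[i] = trial, c, Fi, CRi
    best = int(np.argmin(cost))
    A, b = decode(pop[best], n_in, n_hidden)
    return A, b, output_weights(X_tr, t_tr, A, b), float(cost[best])

if __name__ == "__main__":
    rng = np.random.default_rng(1)
    X = rng.uniform(0.0, 1.0, size=(120, 2))
    t = np.sin(2 * X[:, 0]) + 0.5 * X[:, 1]   # synthetic data, illustration only
    A, b, beta, best = saelm(X[:80], t[:80], X[80:], t[80:], generations=20)
    print(f"best validation RMSE: {best:.4f}")
```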

Physical model

In the current study, two groups of data including the experimental values obtained by Bagheri et al. (2014) and Maranzoni et al. (2017) are used for validating the numerical results. Bagheri et al.’s (2014) model is composed of a prismatic channel with a side weir installed on its sidewall. Additionally, Maranzoni et al.’s (2017) experimental model consists of a rectangular convergent channel with a side weir attached to the convergence point of the channel and on the sidewall. The layout of the experimental models is illustrated in Fig. 1.

Discharge coefficient of side weirs located on convergent channels

Maranzoni et al. (2017) expressed the discharge over side weirs placed on convergent channels (Qw) as a function of the main channel width before the convergence (B), the main channel width after the convergence (bd), the weir height (w), the weir length (L), the crest thickness of the side weir (s), the channel bed slope (S0), the roughness coefficient of the channel (\( \varepsilon \)), the flow depth downstream of the side weir (hd), the discharge downstream of the side weir (Qd), the density of water (ρ), the specific weight (γ), the dynamic viscosity (μ) and the surface tension (\( \sigma \)) (Maranzoni et al. 2017):

\[ Q_w = f\left(B, b_d, w, L, s, S_0, \varepsilon, h_d, Q_d, \rho, \gamma, \mu, \sigma\right) \]

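By introducing six dimensionless groups, they expressed the discharge coefficient (Cd) of side weirs placed on convergent channels as follows (Maranzoni et al. 2017):

\[ C_d = f\left(F_d, \frac{L}{h_d}, \frac{w}{h_d}, \frac{b_d}{h_d}, \theta\right) \]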

In this relationship, θ is the convergence factor and Fd denotes the Froude number at the downstream of the side weir. Thus, the dimensionless parameters of this equation are taken into account as the input parameters of the numerical models. In this study, six different numerical models are defined and the combinations of input parameters are shown in Fig. 2.
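The model inputs can be formed from the measured quantities as sketched below. The sketch assumes a rectangular section downstream of the weir, so that the mean velocity is Qd/(bd hd) and Fd = Qd / (bd hd √(g hd)); θ is passed through unchanged because its definition follows Maranzoni et al. (2017) and is not reproduced here. The function name and the numerical values in the example are hypothetical.

```python
import math

G = 9.81  # gravitational acceleration, m/s^2

def dimensionless_inputs(Q_d, b_d, h_d, L, w, theta):
    """Return the five model inputs (Fd, L/hd, w/hd, bd/hd, theta).

    Assumes a rectangular section downstream of the side weir:
    V_d = Q_d / (b_d * h_d) and Fd = V_d / sqrt(g * h_d).
    Units: Q_d in m^3/s, lengths in m; theta is passed through as given.
    """
    V_d = Q_d / (b_d * h_d)
    F_d = V_d / math.sqrt(G * h_d)
    return {"Fd": F_d, "L/hd": L / h_d, "w/hd": w / h_d,
            "bd/hd": b_d / h_d, "theta": theta}

if __name__ == "__main__":
    # Hypothetical laboratory values, illustration only.
    print(dimensionless_inputs(Q_d=0.02, b_d=0.3, h_d=0.15, L=0.3, w=0.1, theta=0.1))
```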

In this paper, Monte Carlo simulations (MCs) are used to investigate the capability of the numerical models. The Monte Carlo method is a class of computational algorithms that rely on repeated random sampling to obtain their results. Monte Carlo methods are often used to simulate mathematical or physical systems. Because they rely on repeated calculations with random or pseudo-random numbers, Monte Carlo methods are usually run on a computer. They are particularly useful when the exact response cannot be calculated with deterministic algorithms, and when studying systems with many coupled degrees of freedom. In the present study, the numerical models have a set of coefficients that yield a single response per run. In general, the purpose of the Monte Carlo method here is to adjust these coefficients within a fixed range; the average over all runs (e.g., 1000 runs) is then presented as the optimized value. Without the Monte Carlo method, the coefficient values change at each run and do not follow a consistent pattern. In this study, Monte Carlo simulation is thus implemented in the programming environment to optimize the coefficients used in the other algorithms, and no statistical distribution is fitted.

In addition, the k-fold cross-validation method is used to evaluate the performance of the aforementioned models. In this method, the original sample is randomly divided into k subsamples of equal size. One subsample is used as the validation data and the remaining k − 1 subsamples as the training data. The procedure is repeated k times (once per fold), so that each of the k subsamples is used exactly once as validation data. The results of the k folds are averaged to produce a single estimate. The advantage of this method is that all observations are used for both training and validation, and each observation is used for validation exactly once. In this study, k is set to 4. The outline of the k-fold cross-validation method is shown in Fig. 3.
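The validation scheme above can be sketched in plain Python. Here `score_fold` is a hypothetical callable standing in for training a model on the training folds and returning its error on the validation fold. The Monte Carlo layer simply repeats the whole k-fold procedure with a different random seed per run and averages the results; this is one reading of the averaging described in the text, not the authors' code.

```python
import random
from statistics import mean

def k_fold_indices(n, k, rng):
    """Shuffle sample indices 0..n-1 and deal them into k folds of near-equal size."""
    idx = list(range(n))
    rng.shuffle(idx)
    return [idx[f::k] for f in range(k)]

def cross_validate(n, k, score_fold, seed=0):
    """k-fold CV: each fold is the validation set once; the other k-1 folds train.

    score_fold(train_idx, val_idx, rng) is a hypothetical user-supplied callable
    that fits a model on train_idx and returns its error on val_idx.
    """
    rng = random.Random(seed)
    folds = k_fold_indices(n, k, rng)
    scores = []
    for f in range(k):
        train = [i for g in range(k) if g != f for i in folds[g]]
        scores.append(score_fold(train, folds[f], rng))
    return mean(scores)

def monte_carlo_cv(n, k, score_fold, runs=1000):
    """Repeat the whole k-fold CV with a new random seed per run and average."""
    return mean(cross_validate(n, k, score_fold, seed=r) for r in range(runs))

if __name__ == "__main__":
    def check_split(train, val, rng):
        # Toy "score": checks the split and returns the validation fraction.
        assert not set(train) & set(val) and len(train) + len(val) == 100
        return len(val) / 100

    print(monte_carlo_cv(100, 4, check_split, runs=10))  # 0.25
```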

Results and discussion

Criteria for examining accuracy of numerical models

In this study, to study the accuracy of the self-adaptive extreme learning machine models, the statistical indices including mean absolute error (MAE), root mean square error (RMSE), the coefficient of determination (R2) and mean absolute percent error (MAPE) are used as follows:

In the above equations, \( R_{(\text{Observed})i} \), \( R_{(\text{Predicted})i} \), \( \bar{R}_{(\text{Observed})} \) and n are the experimental data, the values predicted by the numerical models, the average of the experimental data and the number of experimental measurements, respectively. MAE, RMSE and MAPE values close to zero, and R2 values close to one, indicate better accuracy.
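The four indices can be computed as below. Because the equations are not reproduced here, the sketch assumes the common definitions: MAPE in percent, and R2 as 1 − SSE/SST computed with the mean of the observed values (consistent with the symbols listed above, although some studies use the squared Pearson correlation instead). The numbers in the example are made up.

```python
import math

def mae(obs, pred):
    """Mean absolute error."""
    return sum(abs(o - p) for o, p in zip(obs, pred)) / len(obs)

def rmse(obs, pred):
    """Root mean square error."""
    return math.sqrt(sum((o - p) ** 2 for o, p in zip(obs, pred)) / len(obs))

def mape(obs, pred):
    """Mean absolute percent error in percent (observed values must be non-zero)."""
    return 100.0 / len(obs) * sum(abs((o - p) / o) for o, p in zip(obs, pred))

def r2(obs, pred):
    """Coefficient of determination, 1 - SSE/SST (assumed form)."""
    mean_obs = sum(obs) / len(obs)
    sse = sum((o - p) ** 2 for o, p in zip(obs, pred))
    sst = sum((o - mean_obs) ** 2 for o in obs)
    return 1.0 - sse / sst

if __name__ == "__main__":
    obs = [0.50, 0.40, 0.60]    # hypothetical observed discharge coefficients
    pred = [0.52, 0.38, 0.59]   # hypothetical predictions
    for fn in (mae, rmse, mape, r2):
        print(fn.__name__, round(fn(obs, pred), 4))
```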

The presented statistical indices do not provide a simultaneous comparison of average and variance of models. Thus, the Akaike information criterion (AIC) is introduced for comparing predicted and experimental discharge coefficients as follows:

where k is the number of estimated parameters in the numerical model. The AIC is a criterion for the goodness of fit of a statistical model. It is also a tool for selecting the model that best balances complexity and accuracy: the best model has the lowest AIC value.
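Since the AIC expression is not reproduced here, the sketch below assumes the common least-squares form AIC = n ln(SSE/n) + 2k; the authors' exact expression may differ, so absolute values from this sketch are not comparable with those reported. The helper `elm_param_count` is hypothetical and simply counts every input weight, hidden bias and output weight of a single-output ELM as an estimated parameter.

```python
import math

def aic(obs, pred, k):
    """Akaike information criterion, least-squares form (assumed):
    AIC = n * ln(SSE / n) + 2k, with k the number of estimated parameters.
    Lower values indicate a better accuracy/complexity trade-off.
    """
    n = len(obs)
    sse = sum((o - p) ** 2 for o, p in zip(obs, pred))
    return n * math.log(sse / n) + 2 * k

def elm_param_count(n_inputs, n_hidden):
    """Hypothetical parameter count for a single-output ELM:
    input weights + hidden biases + output weights (no output bias)."""
    return n_inputs * n_hidden + n_hidden + n_hidden

if __name__ == "__main__":
    obs = [0.50, 0.40, 0.60]    # hypothetical data
    pred = [0.52, 0.38, 0.59]
    k = elm_param_count(4, 6)   # e.g. four inputs and six hidden neurons
    print(k, round(aic(obs, pred, k), 3))
```

With identical errors, each extra parameter adds 2 to the AIC, which is how the criterion penalizes complexity.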

Number of hidden layer neurons

In this section, the number of hidden layer neurons is evaluated. Generally, the modeling accuracy increases with the number of hidden layer neurons. In this paper, the modeling of the discharge coefficient of side weirs placed on convergent channels starts with two hidden layer neurons. The number of neurons is then increased step by step, and the error at each step is calculated and compared with that of the previous step. This procedure continues until the error of the numerical model falls within an acceptable range. Figure 4 shows the changes in the statistical indices versus the number of hidden layer neurons. As shown in Fig. 4, once the number of hidden layer neurons reaches 6, the error of the numerical model falls within an acceptable range. For example, with six hidden layer neurons, the RMSE and MAPE values of the numerical model are 0.034 and 5.135, respectively, the MAE is 0.027 and the coefficient of determination is approximately 0.963. Therefore, six hidden layer neurons are used for the rest of the modeling process.
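The incremental search for the number of hidden neurons can be sketched as a simple loop. `evaluate` is a hypothetical callable that trains a model with L hidden neurons and returns its validation error, and the acceptance level `tol` is an assumed user choice, since the text does not state a numeric threshold.

```python
def select_hidden_size(evaluate, tol, start=2, max_size=30):
    """Grow the hidden layer one neuron at a time until the error is acceptable.

    evaluate(L): hypothetical callable returning a validation error (e.g. RMSE)
    for a model with L hidden neurons. tol: assumed acceptance level.
    Returns (chosen L, {L: error}) for the sizes tried.
    """
    history = {}
    for L in range(start, max_size + 1):
        history[L] = evaluate(L)
        if history[L] <= tol:
            return L, history
    # No size met the tolerance: fall back to the size with the lowest error.
    best = min(history, key=history.get)
    return best, history

if __name__ == "__main__":
    # Toy error curve that decreases with L, illustration only.
    L, hist = select_hidden_size(lambda L: 0.1 / L, tol=0.035)
    print(L, hist)
```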

Activation functions

In this section, the results of the activation functions of the self-adaptive extreme learning machine model are examined. As discussed previously, five activation functions are considered for the SAELM model: sigmoid, sine, hardlimit, triangular basis and radial basis. The statistical indices for the SAELM activation functions are arranged in Fig. 5 and Table 1, and the discharge coefficient values simulated with the sigmoid, sine, hardlim, tribas and radbas functions are given in Fig. 6. For example, the R2, RMSE and MAPE values for the sigmoid activation function are 0.963, 0.034 and 5.135, respectively, and its MAE is 0.027. For the sine activation function, the MAE is approximately 0.056, and the AIC and R2 values are −275.205 and 0.818, respectively. The MAE, R2 and AIC values for the hardlim activation function are 0.056, 0.812 and −273.169, respectively. The triangular basis activation function yields RMSE and MAPE values of 0.118 and 16.915, respectively. For the radial basis activation function, R2 is 0.548, and the MAE, MAPE and AIC values are 0.050, 8.317 and −296.206, respectively. As can be seen, the sigmoid activation function predicts the discharge coefficient values with higher accuracy than the other activation functions.

Results of sensitivity analysis

To conduct the sensitivity analysis, six SAELM models are introduced by combining the input parameters. The statistical indices calculated for the different models are shown in Fig. 7 and given in Table 2. The SAELM 1 model is a function of all input parameters; for this model, the R2, MAE and MAPE are 0.975, 0.023 and 4.369, respectively, and the AIC is −401.826. The scatter plots of the different SAELM models are depicted in Fig. 8. Next, the influence of each input parameter is removed in turn to identify the most effective input parameter. The SAELM 2 model is a function of L/hd, w/hd, bd/hd and θ; in other words, the influence of the Froude number downstream of the side weir (Fd) is neglected. For this model, the MAE and RMSE are 0.039 and 0.057, respectively, and the R2, MAPE and AIC values are 0.901, 6.438 and −326.181, respectively. The SAELM 2 model has the lowest accuracy of all the SAELM models; therefore, eliminating the Froude number significantly decreases the accuracy of the numerical model. In the SAELM 3 model, the influence of L/hd is neglected; that is, this model simulates the discharge coefficient in terms of Fd, w/hd, bd/hd and θ. The R2 value for this model is 0.962, and the MAE and RMSE are 0.026 and 0.035, respectively. Among the models with four input parameters, this model has the second-highest correlation with the experimental values, after the SAELM 6 model. The SAELM 4 model simulates the objective function values in terms of Fd, L/hd, bd/hd and θ; that is, the influence of w/hd is removed.
For this model, the R2 and AIC values are 0.951 and −299.019, respectively, and the RMSE, MAPE and MAE values are 0.040, 5.030 and 0.029, respectively. For the SAELM 5 model, the RMSE, MAPE and MAE are 0.037, 4.831 and 0.028, respectively, and the R2 and AIC values are 0.957 and −354.203, respectively. This model simulates the discharge coefficient as a function of Fd, L/hd, w/hd and θ, i.e., the influence of the dimensionless parameter bd/hd is eliminated. The SAELM 6 model predicts the discharge coefficient in terms of Fd, w/hd, bd/hd and L/hd, neglecting the influence of θ. For the SAELM 6 model, the R2, MAPE and RMSE values are approximately 0.977, 3.997 and 0.027, respectively, and the AIC value is −406.018. According to the modeling results, the SAELM 6 model is introduced as the superior model. The Froude number downstream of the side weir (Fd) and w/hd are identified as the most and second most influential input parameters, respectively.
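The ranking of the input parameters can be reproduced from the R2 values reported above for SAELM 1 to SAELM 6, taking the drop in R2 caused by removing an input as a simple importance measure. This measure is an assumption made for illustration; RMSE or AIC could be used in the same way.

```python
# R2 values reported in the text: the full model (SAELM 1) and the models
# with one input removed (SAELM 2 to SAELM 6).
r2_all = 0.975  # SAELM 1, all five inputs
r2_without = {"Fd": 0.901,     # SAELM 2
              "L/hd": 0.962,   # SAELM 3
              "w/hd": 0.951,   # SAELM 4
              "bd/hd": 0.957,  # SAELM 5
              "theta": 0.977}  # SAELM 6

# Importance = drop in R2 when the input is removed (larger = more influential).
drops = {name: r2_all - r2 for name, r2 in r2_without.items()}
ranking = sorted(drops, key=drops.get, reverse=True)

if __name__ == "__main__":
    for name in ranking:
        print(f"{name:6s} R2 drop = {drops[name]:+.3f}")
```

The negative drop for θ reflects that removing it slightly improves the fit, which is consistent with SAELM 6 being selected as the superior model.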

Superior model

The superior model (SAELM 6) estimates the discharge coefficient in terms of Fd, w/hd, bd/hd and L/hd and is more accurate than the other artificial intelligence models. For this model, the following formula is proposed for calculating the discharge coefficient of side weirs:

where In W, In V, BHN and Out W are the matrices of input weights, input variables, the bias of hidden neurons and output weights, respectively. Values of each of these matrices are presented as follows:
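The matrix formulation amounts to a single forward pass of the trained network. The sketch assumes the ELM structure described earlier: a sigmoid hidden layer (the activation selected above) and a linear output without bias, i.e. Cd = OutW · sig(InW · InV + BHN). The exact form, the ordering of the inputs and any input normalization used by the authors are not stated in this section, so the matrices in the example are random placeholders for checking shapes only, not the published values.

```python
import numpy as np

def saelm_cd(in_w, in_v, bhn, out_w):
    """Discharge coefficient from the matrix form of the superior model.

    Assumed form (sigmoid hidden layer, linear output without bias):
        Cd = OutW . sig(InW @ InV + BHN)
    in_w: (L, 4) input weights; in_v: (4,) inputs [Fd, w/hd, bd/hd, L/hd];
    bhn: (L,) hidden-neuron biases; out_w: (L,) output weights.
    """
    hidden = 1.0 / (1.0 + np.exp(-(in_w @ in_v + bhn)))
    return float(out_w @ hidden)

if __name__ == "__main__":
    rng = np.random.default_rng(1)
    L = 6  # six hidden neurons, as selected in the text
    # Placeholder matrices: NOT the published values, for shape-checking only.
    in_w = rng.uniform(-1, 1, (L, 4))
    bhn = rng.uniform(-1, 1, L)
    out_w = rng.uniform(-1, 1, L)
    in_v = np.array([0.4, 0.5, 2.0, 2.0])  # hypothetical Fd, w/hd, bd/hd, L/hd
    print(saelm_cd(in_w, in_v, bhn, out_w))
```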

Conclusions

In this study, the discharge coefficient of side weirs located on convergent flumes was simulated with a novel approach entitled “self-adaptive extreme learning machine” (SAELM). First, the parameters affecting the discharge coefficient of side weirs placed on convergent channels were introduced. The value of k for the k-fold cross-validation method was set to 6. Next, the number of hidden layer neurons was evaluated, and the optimal number was found to be 6. Subsequently, the results of the activation functions of the SAELM model were assessed; the sigmoid activation function predicted the discharge coefficient values with higher accuracy than the other activation functions. To perform a sensitivity analysis, six SAELM models were introduced by combining the input parameters, and the effect of each input parameter was eliminated in turn to detect the most influential one. According to the numerical modeling results, the SAELM 6 model, which approximates the discharge coefficient in terms of Fd, w/hd, bd/hd and L/hd, was identified as the superior model. For this model, the R2, MAPE and RMSE values were 0.977, 3.997 and 0.027, respectively. Based on the sensitivity analysis, the Froude number downstream of the side weir (Fd) and the ratio of the side weir height to the downstream depth (w/hd) were identified as the most and second most influential input parameters, respectively.

References

Akhbari A, Zaji AH, Azimi H, Vafaeifard M (2017) Predicting the discharge coefficient of triangular plan form weirs using radian basis function and M5’methods. J Appl Res Water Wastewater 4(1):281–289

Azimi H, Shabanlou S (2018) Numerical study of bed slope change effect of circular channel with side weir in supercritical flow conditions. Appl Water Sci 8(6):166

Azimi H, Shabanlou S, Salimi MS (2014) Free surface and velocity field in a circular channel along the side weir in supercritical flow conditions. Flow Meas Instrum 38:108–115

Azimi H, Hadad H, Shokati Z, Salimi MS (2015) Discharge and flow field of the circular channel along the side weir. Can J Civ Eng 42(4):273–280

Azimi H, Bonakdari H, Ebtehaj I (2019) Design of radial basis function-based support vector regression in predicting the discharge coefficient of a side weir in a trapezoidal channel. Appl Water Sci 9(4):78

Bagheri S, Kabiri-Samani AR, Heidarpour M (2014) Discharge coefficient of rectangular sharp-crested side weirs part II: Domínguez’s method. Flow Meas Instrum 35:116–121

Bilhan O, Emiroglu ME, Kisi O (2010) Application of two different neural network techniques to lateral outflow over rectangular side weirs located on a straight channel. Adv Eng Softw 41(6):831–837

Cao J, Lin Z, Huang GB (2012) Self-adaptive evolutionary extreme learning machine. Neural Process Lett 36(3):285–305

Dursun OF, Kaya N, Firat M (2012) Estimating discharge coefficient of semi-elliptical side weir using ANFIS. J Hydrol 426–427:55–62

Ebtehaj I, Bonakdari H, Zaji AH, Azimi H, Sharifi A (2015) Gene expression programming to predict the discharge coefficient in rectangular side weirs. Appl Soft Comput 35:618–628

Emiroglu ME, Kisi O, Bilhan O (2010) Predicting discharge capacity of triangular labyrinth side weir located on a straight channel by using an adaptive neuro-fuzzy technique. Adv Eng Softw 41(2):154–160

Ghodsian M (2003) Flow through side sluice gate. J Irrig Drain Eng 129(6):458–462

Gholami A, Bonakdari H, Zaji AH, Akhtari AA (2019) An efficient classified radial basis neural network for prediction of flow variables in sharp open-channel bends. Appl Water Sci 9(6):145

Granata F, de Marinis G, Gargano R, Tricarico C (2013) Novel approach for side weirs in supercritical flow. J Irrig Drain Eng 139(8):672–679

Huang GB, Zhu QY, Siew CK (2004) Extreme learning machine: a new learning scheme of feedforward neural networks. Proc Int Joint Conf Neural Netw 2:985–990

Huang GB, Zhu QY, Siew CK (2006) Extreme learning machine: theory and applications. Neurocomputing 70(1–3):489–501

Hussein A, Ahmad Z, Asawa GL (2010) Discharge characteristics of sharp-crested circular side orifices in open channels. Flow Meas Instrum 21(3):418–424

Hussein A, Ahmad Z, Asawa GL (2011) Flow through sharp-crested rectangular side orifices under free flow condition in open channels. Agric Water Manag 98:1536–1544

Karimi M, Attari J, Saneie M, Jalili Ghazizadeh MR (2018) Side weir flow characteristics: comparison of piano key, labyrinth, and linear types. J Hydraul Eng 144(12):04018075

Khoshbin F, Bonakdari H, Ashraf Talesh SH, Ebtehaj I, Zaji AH, Azimi H (2016) Adaptive neuro-fuzzy inference system multi-objective optimization using the genetic algorithm/singular value decomposition method for modelling the discharge coefficient in rectangular sharp-crested side weirs. Eng Optim 48(6):933–948

Kra EY, Merkley GP (2004) Mathematical modelling of open-channel velocity profiles for float method calibration. Agric Water Manag 70(3):229–244

Lewis JW, Wright SJ, Pribak M, Sherill J (2011) Bottom slot discharge outlet for combined sewer diversion structure. J Irrig Drain Eng 137(2):248–253

Maranzoni A, Pilotti M, Tomirotti M (2017) Experimental and numerical analysis of side weir flows in a converging channel. J Hydraul Eng 143(7):04017009

Nezami F, Farsadizadeh D, Nekooie MA (2015) Discharge coefficient for trapezoidal side weir. Alexandria Eng J 54(3):595–605

Pandey P, Govind R (2016) Analysis of randomized performance of bias parameters and activation function of extreme learning machine. Int J Comput Appl 135(1):23–28

Parsaie A, Haghiabi AH (2019) Evaluation of energy dissipation on stepped spillway using evolutionary computing. Appl Water Sci 9(6):144

Storn R, Price K (1997) Differential evolution: a simple and efficient heuristic for global optimization over continuous spaces. J Glob Optim 11(4):341–359

Zambrano J, Samuelsson O, Carlsson B (2019) Machine learning techniques for monitoring the sludge profile in a secondary settler tank. Appl Water Sci 9(6):146

Ethics declarations

Conflict of interest

All authors declare they have no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Gharib, R., Heydari, M., Kardar, S. et al. Simulation of discharge coefficient of side weirs placed on convergent canals using modern self-adaptive extreme learning machine. Appl Water Sci 10, 50 (2020). https://doi.org/10.1007/s13201-019-1136-0

DOI: https://doi.org/10.1007/s13201-019-1136-0