Abstract

Given a positive lower semi-continuous density f on \(\mathbb {R}^2\) the weighted volume \(V_f:=f\mathscr {L}^2\) is defined on the \(\mathscr {L}^2\)-measurable sets in \(\mathbb {R}^2\). The f-weighted perimeter of a set of finite perimeter E in \(\mathbb {R}^2\) is written \(P_f(E)\). We study minimisers for the weighted isoperimetric problem

$$\begin{aligned} I(v):=\inf \Big \{ P_f(E):E\text { is a set of finite perimeter in }\mathbb {R}^2\text { and }V_f(E)=v\Big \} \end{aligned}$$

for \(v>0\). Suppose f takes the form \(f:\mathbb {R}^2\rightarrow (0,+\infty );x\mapsto e^{h(|x|)}\) where \(h:[0,+\infty )\rightarrow \mathbb {R}\) is a non-decreasing convex function. Let \(v>0\) and B a centred ball in \(\mathbb {R}^2\) with \(V_f(B)=v\). We show that B is a minimiser for the above variational problem and obtain a uniqueness result.


1 Introduction

Let f be a positive lower semi-continuous density on \(\mathbb {R}^2\). The weighted volume \(V_f:=f\mathscr {L}^2\) is defined on the \(\mathscr {L}^2\)-measurable sets in \(\mathbb {R}^2\). Let E be a set of finite perimeter in \(\mathbb {R}^2\). The weighted perimeter of E is defined by

$$\begin{aligned} P_f(E):=\int _{\mathbb {R}^2}f\,d|D\chi _E|\in [0,+\infty ]. \end{aligned}\tag{1.1}$$

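We study minimisers for the weighted isoperimetric problem

$$\begin{aligned} I(v):=\inf \Big \{ P_f(E):E\text { is a set of finite perimeter in }\mathbb {R}^2\text { and }V_f(E)=v\Big \} \end{aligned}\tag{1.2}$$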

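for \(v>0\). To be more specific we suppose that f takes the form

$$\begin{aligned} f:\mathbb {R}^2\rightarrow (0,+\infty );\quad x\mapsto e^{h(|x|)} \end{aligned}\tag{1.3}$$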

where \(h:[0,+\infty )\rightarrow \mathbb {R}\) is a non-decreasing convex function. Our first main result is the following. It contains the classical isoperimetric inequality (cf. [9, 12]) as a special case; namely, when h is constant on \([0,+\infty )\).

Theorem 1.1

Let f be as in (1.3) where \(h:[0,+\infty )\rightarrow \mathbb {R}\) is a non-decreasing convex function. Let \(v>0\) and B a centred ball in \(\mathbb {R}^2\) with \(V_f(B)=v\). Then B is a minimiser for (1.2).
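As a numerical sanity check of this statement (an illustration, not part of the proof), the sketch below compares a centred disc with an off-centre disc of equal weighted volume. The profile \(h(t)=t^2\) is an assumption chosen for illustration, and the helper names and the midpoint quadrature are hypothetical, not taken from the article.

```python
import math

def h(t):
    # Sample profile (assumption for illustration): convex and non-decreasing on [0, +inf).
    return t * t

def density(x, y):
    # f(x) = exp(h(|x|)): a radial density of the form considered in the theorem.
    return math.exp(h(math.hypot(x, y)))

def weighted_perimeter(cx, cy, r, n=240):
    # Midpoint rule for the integral of f over the circle of radius r centred at (cx, cy).
    total = 0.0
    for i in range(n):
        theta = 2.0 * math.pi * (i + 0.5) / n
        total += density(cx + r * math.cos(theta), cy + r * math.sin(theta))
    return total * r * (2.0 * math.pi / n)

def weighted_volume(cx, cy, r, n=120, m=120):
    # Integrate the weighted length of concentric circles about (cx, cy) over radii in (0, r).
    total = 0.0
    for j in range(m):
        s = r * (j + 0.5) / m
        total += weighted_perimeter(cx, cy, s, n)
    return total * (r / m)

def radius_for_volume(cx, cy, v, lo=0.0, hi=3.0, iters=30):
    # Bisection: the weighted volume of a disc is increasing in its radius.
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if weighted_volume(cx, cy, mid) < v:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

# Centred unit disc versus a disc centred at (0.5, 0) with the same weighted volume.
v = weighted_volume(0.0, 0.0, 1.0)
r_off = radius_for_volume(0.5, 0.0, v)
p_centred = weighted_perimeter(0.0, 0.0, 1.0)
p_off = weighted_perimeter(0.5, 0.0, r_off)
print("weighted volume:", v)
print("centred perimeter:", p_centred)
print("off-centre radius and perimeter:", r_off, p_off)
```

For this profile the centred disc of radius r has the closed forms \(V_f=\pi (e^{r^2}-1)\) and \(P_f=2\pi re^{r^2}\), which gives an independent check on the quadrature.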

For \(x\ge 0\) and \(v\ge 0\) define the directional derivative of h in direction v by

$$\begin{aligned} h^\prime _+(x,v):=\lim _{t\downarrow 0}\frac{h(x+tv)-h(x)}{t} \end{aligned}$$

and define \(h^\prime _-(x,v)\) similarly for \(x>0\) and \(v\le 0\). We introduce the notation

on \((0,+\infty )\). The function h is locally of bounded variation and is differentiable a.e. with \(h^\prime =\rho \) a.e. on \((0,+\infty )\). Our second main result is a uniqueness theorem.

Theorem 1.2

Let f be as in (1.3) where \(h:[0,+\infty )\rightarrow \mathbb {R}\) is a non-decreasing convex function. Suppose that \(R:=\inf \{\rho >0\}\in [0,+\infty )\) and set \(v_0:=V(B(0,R))\). Let \(v>0\) and E a minimiser for (1.2). The following hold:

-

(i)

if \(v\le v_0\) then E is a.e. equivalent to a ball B in \(\overline{B}(0,R)\) with \(V(B)=V(E)\);

-

(ii)

if \(v>v_0\) then E is a.e. equivalent to a centred ball B with \(V(B)=V(E)\).
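The two regimes can be illustrated with a simple profile. The choice of h below is an assumption made for illustration, and \(V\) is read as \(V_f\); the computation only uses the statement of the theorem.

```latex
% Illustrative profile (assumption): h vanishes on [0,1] and is linear afterwards.
h(t) := \max\{t-1,\,0\}, \qquad t \ge 0 .
% h is convex and non-decreasing; h' = 0 on (0,1) and h' = 1 on (1,+\infty), so
R = \inf\{\rho > 0\} = 1 , \qquad f \equiv 1 \ \text{on } \overline{B}(0,1), \qquad
v_0 = V_f(B(0,1)) = \pi .
% Case (i), v \le \pi: any ball B \subset \overline{B}(0,1) with |B| = v satisfies
P_f(B) = 2\sqrt{\pi v},
% so the centre of a minimiser need not be the origin.
% Case (ii), v > \pi: the minimiser is a.e. equivalent to the centred ball B(0,r) with
V_f(B(0,r)) = \pi + 2\pi \int_1^r e^{t-1}\, t \, dt = v .
```

In this example uniqueness of the centre fails exactly in the range where the density is constant on the minimising ball.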

Theorem 1.1 is a generalisation of Conjecture 3.12 in [24] (due to K. Brakke) in the sense that less regularity is required of the density f: in the latter, h is supposed to be smooth on \((0,+\infty )\) as well as convex and non-decreasing. This conjecture springs in part from the observation that the weighted perimeter of a local volume-preserving perturbation of a centred ball is non-decreasing ([24] Theorem 3.10). In addition, the conjecture holds for log-convex Gaussian densities, which correspond to \(h:[0,+\infty )\rightarrow \mathbb {R};t\mapsto ct^2\) with \(c>0\) ([3, 24] Theorem 5.2). Partial forms of the conjecture were proved in subsequent work. In [19] it is shown to hold for large v provided that h is uniformly convex in the sense that \(h^{\prime \prime }\ge 1\) on \((0,+\infty )\) (see [19] Corollary 6.8). A complementary result is contained in [11] Theorem 1.1 which establishes the conjecture for small v on condition that \(h^{\prime \prime }\) is locally uniformly bounded away from zero on \([0,+\infty )\). The above-mentioned conjecture is proved in large part in [7] (see Theorem 1.1) in dimension \(n\ge 2\) (see also [4]). There it is assumed that the function h is of class \(C^3\) on \((0,+\infty )\) and is convex and even (meaning that h is the restriction of an even function on \(\mathbb {R}\) to \([0,+\infty )\)). A uniqueness result is also obtained ([7] Theorem 1.2). We obtain these results under weaker hypotheses in the 2-dimensional case and our proofs proceed along different lines.

We give a brief outline of the article. In Sect. 2 we discuss some preliminary material. In Sect. 3 we show that (1.2) admits an open minimiser E with \(C^1\) boundary M (Theorem 3.8). The argument draws upon the regularity theory for almost minimal sets (cf. [27]) and includes an adaptation of [21] Proposition 3.1. In Sect. 4 it is shown that the boundary M is of class \(C^{1,1}\) (and has weakly bounded curvature). This result is contained in [21] Corollary 3.7 (see also [8]) but we include a proof for completeness. This section also includes the result that E may be supposed to possess spherical cap symmetry (Theorem 4.5). Section 5 contains further results on spherical cap symmetric sets useful in the sequel. The main result of Sect. 6 is Theorem 6.5 which shows that the generalised (mean) curvature is conserved along M in a weak sense. In Sect. 7 it is shown that there exist convex minimisers of (1.2). Sections 8 and 9 comprise an analytic interlude and are devoted to the study of solutions of the first-order differential equation that appears in Theorem 6.6 subject to Dirichlet boundary conditions. Section 9 for example contains a comparison theorem for solutions to a Riccati equation (Theorem 9.15 and Corollary 9.16). These are new as far as the author is aware. Section 10 concludes the proof of our main theorems.

2 Some preliminaries

Geometric measure theory. We use \(|\cdot |\) to signify the Lebesgue measure on \(\mathbb {R}^2\) (or occasionally \(\mathscr {L}^2\)). Let E be an \(\mathscr {L}^2\)-measurable set in \(\mathbb {R}^2\). The set of points in E with density \(t\in [0,1]\) is given by

As usual \(B(x,\rho )\) denotes the open ball in \(\mathbb {R}^2\) with centre \(x\in \mathbb {R}^2\) and radius \(\rho >0\). The set \(E^1\) is the measure-theoretic interior of E while \(E^0\) is the measure-theoretic exterior of E. The essential boundary of E is the set \(\partial ^\star E:=\mathbb {R}^2{\setminus }(E^0\cup E^1)\).
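Two elementary computations may help fix these notions. They assume the usual definition of the density of E at x as the limit of \(|E\cap B(x,\rho )|/|B(x,\rho )|\) as \(\rho \downarrow 0\); the sets H and Q are hypothetical examples introduced only for illustration.

```latex
% Half-plane: H := \{x \in \mathbb{R}^2 : x_2 < 0\}. For x on the line \{x_2 = 0\},
\frac{|H \cap B(x,\rho)|}{|B(x,\rho)|} = \frac{1}{2} \quad \text{for every } \rho > 0,
\qquad \text{so } x \in H^{1/2} \subset \partial^\star H .
% Open unit square: Q := (0,1)^2. At the corner x = 0 and for 0 < \rho \le 1,
\frac{|Q \cap B(0,\rho)|}{|B(0,\rho)|} = \frac{\pi\rho^2/4}{\pi\rho^2} = \frac{1}{4},
\qquad \text{so } 0 \in Q^{1/4} \subset \partial^\star Q \ \text{ but } \ 0 \notin Q^{1/2} .
```

The corner therefore belongs to the essential boundary without being a point of density one half.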

Recall that an integrable function u on \(\mathbb {R}^2\) is said to have bounded variation if the distributional derivative of u is representable by a finite Radon measure Du (cf. [1] Definition 3.1 for example) with total variation |Du|; in this case, we write \(u\in \mathrm {BV}(\mathbb {R}^2)\). The set E has finite perimeter if \(\chi _E\) belongs to \(\mathrm {BV}_{\mathrm {loc}}(\mathbb {R}^2)\). The reduced boundary \(\mathscr {F}E\) of E is defined by

(cf. [1] Definition 3.54) and is a Borel set (cf. [1] Theorem 2.22 for example). We use \(\mathscr {H}^k\) (\(k\in [0,+\infty )\)) to stand for k-dimensional Hausdorff measure. If E is a set of finite perimeter in \(\mathbb {R}^2\) then

by [1] Theorem 3.61.

Let f be a positive locally Lipschitz density on \(\mathbb {R}^2\). Let E be a set of finite perimeter and U a bounded open set in \(\mathbb {R}^2\). The weighted perimeter of E relative to U is defined by

By the Gauss–Green formula ([1] Theorem 3.36 for example) and a convolution argument,

where we have also used [1] Propositions 1.47 and 1.23.

Lemma 2.1

Let \(\varphi \) be a \(C^1\) diffeomorphism of \(\mathbb {R}^2\) which coincides with the identity map on the complement of a compact set and \(E\subset \mathbb {R}^2\) with \(\chi _E\in \mathrm {BV}(\mathbb {R}^2)\). Then

-

(i)

\(\chi _{\varphi (E)}\in \mathrm {BV}(\mathbb {R}^2)\);

-

(ii)

\(\partial ^\star \varphi (E)=\varphi (\partial ^\star E)\);

-

(iii)

\(\mathscr {H}^1(\mathscr {F}\varphi (E)\varDelta \varphi (\mathscr {F}E))=0\).

Proof

Part (i) follows from [1] Theorem 3.16 as \(\varphi \) is a proper Lipschitz function. Given \(x\in E^0\) we claim that \(y:=\varphi (x)\in \varphi (E)^0\). Let M stand for the Lipschitz constant of \(\varphi \) and L stand for the Lipschitz constant of \(\varphi ^{-1}\). Note that \( B(y,r)\subset \varphi (B(x,Lr)) \) for each \(r>0\). As \(\varphi \) is a bijection and using [1] Proposition 2.49,

This means that

for \(r>0\) and this proves the claim. This entails that \(\varphi (E^0)\subset [\varphi (E)]^0\). The reverse inclusion can be seen using the fact that \(\varphi \) is a bijection. In summary \(\varphi (E^0)=[\varphi (E)]^0\). The corresponding identity for \(E^1\) can be seen in a similar way. These identities entail (ii). From (2.1) and (ii) we may write \(\mathscr {F}\varphi (E)\cup N_1=\varphi (\mathscr {F}E)\cup \varphi (N_2)\) for \(\mathscr {H}^1\)-null sets \(N_1,N_2\) in \(\mathbb {R}^2\). Item (iii) follows. \(\square \)

Curves with weakly bounded curvature. Suppose the open set E in \(\mathbb {R}^2\) has \(C^1\) boundary M. Denote by \(n:M\rightarrow \mathbb {S}^1\) the inner unit normal vector field. Given \(p\in M\) we choose a tangent vector \(t(p)\in \mathbb {S}^1\) in such a way that the pair \(\{t(p),n(p)\}\) forms a positively oriented basis for \(\mathbb {R}^2\). There exists a local parametrisation \(\gamma _1:I\rightarrow M\) where \(I=(-\delta ,\delta )\) for some \(\delta >0\) of class \(C^1\) with \(\gamma _1(0)=p\). We always assume that \(\gamma _1\) is parametrised by arc-length and that \(\dot{\gamma _1}(0)=t(p)\) where the dot signifies differentiation with respect to arc-length. Let X be a vector field defined in some neighbourhood of p in M. Then

if this limit exists and the divergence \(\mathrm {div}^M X\) of X along M at p is defined by

evaluated at p. Suppose that X is a vector field in \(C^1(U,\mathbb {R}^2)\) where U is an open neighbourhood of p in \(\mathbb {R}^2\). Then

at p. If \(p\in M{\setminus }\{0\}\) let \(\sigma (p)\) stand for the angle measured anti-clockwise from the position vector p to the tangent vector t(p); \(\sigma (p)\) is uniquely determined up to integer multiples of \(2\pi \).

Let E be an open set in \(\mathbb {R}^2\) with \(C^{1,1}\) boundary M. Let \(x\in M\) and \(\gamma _1:I\rightarrow M\) a local parametrisation of M in a neighbourhood of x. There exists a constant \(c>0\) such that

for \(s_1,s_2\in I\); a constraint on average curvature (cf. [10, 18]). That is, \(\dot{\gamma }_1\) is Lipschitz on I. So \(\dot{\gamma }_1\) is absolutely continuous and differentiable a.e. on I with

for any \(s_1,s_2\in I\) with \(s_1<s_2\). Moreover, \(|\ddot{\gamma }_1|\le c\) a.e. on I (cf. [1] Corollary 2.23). As \(\langle \dot{\gamma }_1,\dot{\gamma }_1\rangle =1\) on I we see that \(\langle \dot{\gamma }_1,\ddot{\gamma }_1\rangle =0\) a.e. on I. The (geodesic) curvature \(k_1\) is then defined a.e. on I via the relation

as in [18]. The curvature k of M is defined \(\mathscr {H}^1\)-a.e. on M by

whenever \(x=\gamma _1(s)\) for some \(s\in I\) and \(k_1(s)\) exists. We sometimes write \(H(\cdot ,E)=k\).

Let E be an open set in \(\mathbb {R}^2\) with \(C^{1}\) boundary M. Let \(x\in M\) and \(\gamma _1:I\rightarrow M\) a local parametrisation of M in a neighbourhood of x. In case \(\gamma _1\ne 0\) let \(\theta _1\) stand for the angle measured anti-clockwise from \(e_1\) to the position vector \(\gamma _1\) and \(\sigma _1\) stand for the angle measured anti-clockwise from the position vector \(\gamma _1\) to the tangent vector \(t_1=\dot{\gamma }_1\). Put \(r_1:=|\gamma _1|\) on I. Then \(r_1,\theta _1\in C^1(I)\) and

on I provided that \(\gamma _1\ne 0\). Now suppose that M is of class \(C^{1,1}\). Let \(\alpha _1\) stand for the angle measured anti-clockwise from the fixed vector \(e_1\) to the tangent vector \(t_1\) (uniquely determined up to integer multiples of \(2\pi \)). Then \(t_1=(\cos \alpha _1,\sin \alpha _1)\) on I so \(\alpha _1\) is absolutely continuous on I. In particular, \(\alpha _1\) is differentiable a.e. on I with \(\dot{\alpha }_1=k_1\) a.e. on I. This means that \(\alpha _1\in C^{0,1}(I)\). In virtue of the identities \(r_1\cos \sigma _1=\langle \gamma _1,t_1\rangle \) and \(r_1\sin \sigma _1=-\langle \gamma _1,n_1\rangle \) we see that \(\sigma _1\) is absolutely continuous on I and \(\sigma _1\in C^{0,1}(I)\). By choosing an appropriate branch we may assume that

on I. We may choose \(\sigma \) in such a way that \(\sigma \circ \gamma _1=\sigma _1\) on I.
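The angles introduced above can be checked on a centred circle. The computation below is a worked example under the stated orientation conventions; the sign convention \(\ddot{\gamma }_1=k_1n_1\) for the curvature is an assumption made for this illustration.

```latex
% E = B(0,R) with boundary M parametrised by arc-length:
\gamma_1(s) = R\big(\cos(s/R), \sin(s/R)\big), \qquad
t_1(s) = \big(-\sin(s/R), \cos(s/R)\big), \qquad
n_1(s) = -\big(\cos(s/R), \sin(s/R)\big).
% n_1 is the inner unit normal and \{t_1, n_1\} is positively oriented: \det[t_1\ n_1] = 1.
r_1 = R, \qquad \theta_1 = s/R, \qquad \sigma_1 = \pi/2, \qquad \alpha_1 = \theta_1 + \sigma_1 = s/R + \pi/2 .
% Consistency checks:
\dot{r}_1 = 0 = \cos\sigma_1, \qquad
r_1 \cos\sigma_1 = 0 = \langle \gamma_1, t_1 \rangle, \qquad
r_1 \sin\sigma_1 = R = -\langle \gamma_1, n_1 \rangle .
% Curvature:
\ddot{\gamma}_1 = -\tfrac{1}{R}\big(\cos(s/R), \sin(s/R)\big) = \tfrac{1}{R}\, n_1, \qquad
k_1 = \dot{\alpha}_1 = \tfrac{1}{R} .
```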

Flows. Recall that a diffeomorphism \(\varphi :\mathbb {R}^2\rightarrow \mathbb {R}^2\) is said to be proper if \(\varphi ^{-1}(K)\) is compact whenever \(K\subset \mathbb {R}^2\) is compact. Given \(X\in C^\infty _c(\mathbb {R}^2,\mathbb {R}^2)\) there exists a 1-parameter group of proper \(C^\infty \) diffeomorphisms \(\varphi :\mathbb {R}\times \mathbb {R}^2\rightarrow \mathbb {R}^2\) as in [20] Lemma 2.99 that satisfy

We often use \(\varphi _t\) to refer to the diffeomorphism \(\varphi (t,\cdot ):\mathbb {R}^2\rightarrow \mathbb {R}^2\).

Lemma 2.2

Let \(X\in C^\infty _c(\mathbb {R}^2,\mathbb {R}^2)\) and \(\varphi \) be the corresponding flow as above. Then

-

(i)

there exists \(R\in C^\infty (\mathbb {R}\times \mathbb {R}^2,\mathbb {R}^2)\) and \(K>0\) such that

$$\begin{aligned} \varphi (t,x)= \left\{ \begin{array}{ll} x+tX(x)+R(t,x) &{} \text { for }x\in \mathrm {supp}[X];\\ x &{} \text { for }x\not \in \mathrm {supp}[X];\\ \end{array} \right. \end{aligned}$$

where \(|R(t,x)|\le K t^2\) for \((t,x)\in \mathbb {R}\times \mathbb {R}^2\);

-

(ii)

there exists \(R^{(1)}\in C^\infty (\mathbb {R}\times \mathbb {R}^2,M_2(\mathbb {R}))\) and \(K_1>0\) such that

$$\begin{aligned} d\varphi (t,x)= \left\{ \begin{array}{ll} I+tdX(x)+R^{(1)}(t,x) &{} \text { for }x\in \mathrm {supp}[X];\\ I &{} \text { for }x\not \in \mathrm {supp}[X];\\ \end{array} \right. \end{aligned}$$

where \(|R^{(1)}(t,x)|\le K_1 t^2\) for \((t,x)\in \mathbb {R}\times \mathbb {R}^2\);

-

(iii)

there exists \(R^{(2)}\in C^\infty (\mathbb {R}\times \mathbb {R}^2,\mathbb {R})\) and \(K_2>0\) such that

$$\begin{aligned} J_2d\varphi (t,x)= \left\{ \begin{array}{ll} 1+t\,\mathrm {div}\,X(x)+R^{(2)}(t,x) &{} \text { for }x\in \mathrm {supp}[X];\\ 1 &{} \text { for }x\not \in \mathrm {supp}[X];\\ \end{array} \right. \end{aligned}$$

where \(|R^{(2)}(t,x)|\le K_2 t^2\) for \((t,x)\in \mathbb {R}\times \mathbb {R}^2\).

Let \(x\in \mathbb {R}^2\), v a unit vector in \(\mathbb {R}^2\) and M the line through x perpendicular to v. Then

-

(iv)

there exists \(R^{(3)}\in C^\infty (\mathbb {R}\times \mathbb {R}^2,\mathbb {R})\) and \(K_3>0\) such that

$$\begin{aligned} J_1d^{M}\varphi (t,x)= \left\{ \begin{array}{ll} 1+t(\mathrm {div}^{M}\,X)(x)+R^{(3)}(t,x) &{} \text { for }x\in \mathrm {supp}[X];\\ 1 &{} \text { for }x\not \in \mathrm {supp}[X];\\ \end{array} \right. \end{aligned}$$

where \(|R^{(3)}(t,x)|\le K_3 t^2\) for \((t,x)\in \mathbb {R}\times \mathbb {R}^2\).

Proof

(i) First notice that \(\varphi \in C^\infty (\mathbb {R}\times \mathbb {R}^2)\) by [16] Theorem 3.3 and Exercise 3.4. The statement for \(x\not \in \mathrm {supp}[X]\) follows by uniqueness (cf. [16] Theorem 3.1); the assertion for \(x\in \mathrm {supp}[X]\) follows from Taylor’s theorem. (ii) follows likewise: note, for example, that

where the subscript \(_,\) signifies partial differentiation. (iii) follows from (ii) and the definition of the 2-dimensional Jacobian (cf. [1] Definition 2.68). (iv) Using [1] Definition 2.68 together with the Cauchy–Binet formula [1] Proposition 2.69, \(J_1d^M\varphi (t,x)=|d\varphi (t,x)v|\) for \(t\in \mathbb {R}\) and the result follows from (ii). \(\square \)
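The expansion in Lemma 2.2 (i) can be checked on a flow that is known in closed form. The sketch below uses a rotation field damped by a smooth cut-off, a hypothetical example chosen because \(|x|\) is conserved along its integral curves, so the flow is a rotation through the angle \(t\,b(|x|)\). The names `bump`, `X`, `flow` and `remainder` are illustrative helpers, not taken from the article.

```python
import math

def bump(r):
    # Smooth radial cut-off b(r) = exp(-1/(1 - r^2)) for r < 1 and 0 otherwise.
    return math.exp(-1.0 / (1.0 - r * r)) if r < 1.0 else 0.0

def X(x, y):
    # X(x) = b(|x|) * (-x_2, x_1): smooth, supported in the closed unit disc, divergence-free.
    b = bump(math.hypot(x, y))
    return (-b * y, b * x)

def flow(t, x, y):
    # Exact flow of X: rotation of (x, y) through the angle t * b(|x|).
    a = t * bump(math.hypot(x, y))
    c, s = math.cos(a), math.sin(a)
    return (c * x - s * y, s * x + c * y)

def remainder(t, x, y):
    # |R(t, x)| where phi(t, x) = x + t X(x) + R(t, x).
    px, py = flow(t, x, y)
    vx, vy = X(x, y)
    return math.hypot(px - x - t * vx, py - y - t * vy)

# The ratio |R(t, x)| / t^2 stays bounded as t varies; the point (0.3, 0.4) is arbitrary.
for t in (0.001, 0.01, 0.1, 1.0, 10.0):
    print(t, remainder(t, 0.3, 0.4) / (t * t))
```

Since \(|\cos a-1|\le a^2/2\) and \(|\sin a-a|\le a^2/2\) for all real a, and \(b\le e^{-1}\), the remainder in this example satisfies \(|R(t,x)|\le t^2\) for every t, in line with the lemma.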

Let I be an open interval in \(\mathbb {R}\) containing 0. Let \(Z:I\times \mathbb {R}^2\rightarrow \mathbb {R}^2;(t,x)\mapsto Z(t,x)\) be a continuous time-dependent vector field on \(\mathbb {R}^2\) with the properties

-

(Z.1)

\(Z(t,\cdot )\in C^1_c(\mathbb {R}^2,\mathbb {R}^2)\) for each \(t\in I\);

-

(Z.2)

\(\mathrm {supp}[Z(t,\cdot )]\subset K\) for each \(t\in I\) for some compact set \(K\subset \mathbb {R}^2\).

By [16] Theorems I.1.1, I.2.1, I.3.1, I.3.3 there exists a unique flow \(\varphi :I\times \mathbb {R}^2\rightarrow \mathbb {R}^2\) such that

-

(F.1)

\(\varphi :I\times \mathbb {R}^2\rightarrow \mathbb {R}^2\) is of class \(C^1\);

-

(F.2)

\(\varphi (0,x)=x\) for each \(x\in \mathbb {R}^2\);

-

(F.3)

\(\partial _t\varphi (t,x)=Z(t,\varphi (t,x))\) for each \((t,x)\in I\times \mathbb {R}^2\);

-

(F.4)

\(\varphi _t:=\varphi (t,\cdot ):\mathbb {R}^2\rightarrow \mathbb {R}^2\) is a proper diffeomorphism for each \(t\in I\).

Lemma 2.3

Let Z be a time-dependent vector field with the properties (Z.1)–(Z.2) and \(\varphi \) be the corresponding flow. Then

-

(i)

for \((t,x)\in I\times \mathbb {R}^2\),

$$\begin{aligned} d\varphi (t,x)= \left\{ \begin{array}{ll} I+tdZ_0(x)+tR(t,x) &{} \text { for }x\in K;\\ I &{} \text { for }x\not \in K;\\ \end{array} \right. \end{aligned}$$

where \(\sup _K|R(t,\cdot )|\rightarrow 0\) as \(t\rightarrow 0\).

Let \(x\in \mathbb {R}^2\), v a unit vector in \(\mathbb {R}^2\) and M the line through x perpendicular to v. Then

-

(ii)

for \((t,x)\in I\times \mathbb {R}^2\),

$$\begin{aligned} J_1d^M\varphi (t,x)= \left\{ \begin{array}{ll} 1+t(\mathrm {div}^{M}\,Z_0)(x)+tR^{(1)}(t,x) &{} \text { for }x\in K;\\ 1 &{} \text { for }x\not \in K;\\ \end{array} \right. \end{aligned}$$

where \(\sup _K|R^{(1)}(t,\cdot )|\rightarrow 0\) as \(t\rightarrow 0\).

Proof

(i) We first remark that the flow \(\varphi :I\times \mathbb {R}^2\rightarrow \mathbb {R}^2\) associated to Z is continuously differentiable in t, x in virtue of (Z.1) by [16] Theorem I.3.3. Put \(y(t,x):=d\varphi (t,x)\) for \((t,x)\in I\times \mathbb {R}^2\). By [16] Theorem I.3.3,

for each \((t,x)\in I\times \mathbb {R}^2\) and \(y(0,x)=I\) for each \(x\in \mathbb {R}^2\) where I stands for the \(2\times 2\)-identity matrix. For \(x\in K\) and \(t\in I\),

Applying the mean-value theorem component-wise and using uniform continuity of the matrix \(\dot{y}\) in its arguments we see that

uniformly on K as \(t\rightarrow 0\). This leads to (i). Part (ii) follows as in Lemma 2.2. \(\square \)

Let E be a set of finite perimeter in \(\mathbb {R}^2\) with \(V_f(E)<+\infty \). The first variation of weighted volume resp. perimeter along \(X\in C^\infty _c(\mathbb {R}^2,\mathbb {R}^2)\) is defined by

whenever the limit exists. By Lemma 2.1 the f-perimeter in (2.14) is well-defined.

Convex functions. Suppose that \(h:[0,+\infty )\rightarrow \mathbb {R}\) is a convex function. For \(x\ge 0\) and \(v\ge 0\) define

$$\begin{aligned} h^\prime _+(x,v):=\lim _{t\downarrow 0}\frac{h(x+tv)-h(x)}{t} \end{aligned}$$

and define \(h^\prime _-(x,v)\) similarly for \(x>0\) and \(v\le 0\). For future use we introduce the notation

on \((0,+\infty )\). It holds that h is differentiable a.e. and \(h^\prime =\rho \) a.e. on \((0,+\infty )\). Define \([\rho ]:=\rho _+-\rho _-\). Then \([\rho ]\ge 0\) and vanishes a.e. on \((0,+\infty )\).

Lemma 2.4

Suppose that the function f takes the form (1.3) where \(h:[0,+\infty )\rightarrow \mathbb {R}\) is a convex function. Then

-

(i)

the directional derivative \(f^\prime _+(x,v)\) exists in \(\mathbb {R}\) for each \(x\in \mathbb {R}^2\) and \(v\in \mathbb {R}^2\);

-

(ii)

for \(v\in \mathbb {R}^2\),

$$\begin{aligned} f^\prime _+(x,v)= \left\{ \begin{array}{ll} f(x)h^\prime _+(|x|,\mathrm {sgn}\langle x,v\rangle )\frac{|\langle x,v\rangle |}{|x|} &{} \text { for }x\in \mathbb {R}^2{\setminus }\{0\};\\ f(0)h^\prime _+(0,+1)|v| &{} \text { for }x=0;\\ \end{array} \right. \end{aligned}$$

-

(iii)

if M is a \(C^1\) hypersurface in \(\mathbb {R}^2\) such that \(\cos \sigma \ne 0\) on M then f is differentiable \(\mathscr {H}^1\)-a.e. on M and

$$\begin{aligned} (\nabla f)(x)=f(x)\rho (|x|)\frac{\langle x,\cdot \rangle }{|x|} \end{aligned}$$

for \(\mathscr {H}^1\)-a.e. \(x\in M\).

Proof

The assertion in (i) follows from the monotonicity of chords property while (ii) is straightforward. (iii) Let \(x\in M\) and \(\gamma _1:I\rightarrow M\) be a \(C^1\)-parametrisation of M near x as above. Now \(r_1\in C^1(I)\) and \(\dot{r_1}(0)=\cos \sigma (x)\ne 0\) so we may assume that \(r_1:I\rightarrow r_1(I)\subset (0,+\infty )\) is a \(C^1\) diffeomorphism. The differentiability set D(h) of h has full Lebesgue measure in \([0,+\infty )\). It follows by [1] Proposition 2.49 that \(r_1^{-1}(D(h))\) has full measure in I. This entails that f is differentiable \(\mathscr {H}^1\)-a.e. on \(\gamma _1(I)\subset M\). \(\square \)
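A quick illustration of items (ii) and (iii), under the assumption (made only for this example) that \(h(t)=t\), so that \(f(x)=e^{|x|}\):

```latex
% At the origin, directly from the definition of the one-sided directional derivative:
f'_+(0,v) = \lim_{t \downarrow 0} \frac{e^{t|v|} - 1}{t} = |v| = f(0)\, h'_+(0,+1)\, |v| ,
% which is not linear in v, so f is not differentiable at 0.
% Away from the origin, for x \neq 0 and \langle x, v \rangle \ge 0:
f'_+(x,v) = e^{|x|}\, \frac{\langle x, v \rangle}{|x|}
          = f(x)\, h'_+(|x|,+1)\, \frac{|\langle x, v \rangle|}{|x|} ,
% in agreement with the gradient formula
(\nabla f)(x) = e^{|x|}\, \frac{\langle x, \cdot \rangle}{|x|}, \qquad x \neq 0 .
```

Here h is differentiable on \((0,+\infty )\) with derivative 1, so the only point at which f fails to be differentiable is the origin.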

3 Existence and \(C^1\) regularity

We start with an existence theorem.

Theorem 3.1

Assume that f is a positive radial lower-semicontinuous non-decreasing density on \(\mathbb {R}^2\) which diverges to infinity. Then for each \(v>0\),

Proof

See [22] Theorems 3.3 and 5.9. \(\square \)

The bulk of this section is devoted to a discussion of \(C^1\) regularity.

Proposition 3.2

Let f be a positive locally Lipschitz density on \(\mathbb {R}^2\). Let \(E\subset \mathbb {R}^2\) be a bounded set with finite perimeter. Let \(X\in C^\infty _c(\mathbb {R}^2,\mathbb {R}^2)\). Then

Proof

Let \(t\in \mathbb {R}\). By the area formula ([1] Theorem 2.71 and (2.74)),

and

The density f is locally Lipschitz and in particular differentiable a.e. on \(\mathbb {R}^2\) (see [1] 2.3 for example). By the dominated convergence theorem and Lemma 2.2,

by the generalised Gauss–Green formula [1] Theorem 3.36. \(\square \)

Proposition 3.3

Let f be a positive locally Lipschitz density on \(\mathbb {R}^2\). Let \(E\subset \mathbb {R}^2\) be a bounded set with finite perimeter. Let \(X\in C^\infty _c(\mathbb {R}^2,\mathbb {R}^2)\). Then there exist constants \(C>0\) and \(\delta >0\) such that

for \(|t|<\delta \).

Proof

Let \(t\in \mathbb {R}\). By Lemma 2.1 and [1] Theorem 3.59,

As \(\mathscr {F}E\) is countably 1-rectifiable ([1] Theorem 3.59) we may use the generalised area formula [1] Theorem 2.91 to write

For each \(x\in \mathscr {F}E\) and any \(t\in \mathbb {R}\),

where K is the Lipschitz constant of f on \(\mathrm {supp}[X]\). The result follows upon writing

and using Lemma 2.2. \(\square \)

Lemma 3.4

Let f be a positive locally Lipschitz density on \(\mathbb {R}^2\). Let \(E\subset \mathbb {R}^2\) be a bounded set with finite perimeter and \(p\in \mathscr {F}E\). For any \(r>0\) there exists \(X\in C^\infty _c(\mathbb {R}^2,\mathbb {R}^2)\) with \(\mathrm {supp}[X]\subset B(p,r)\) such that \(\delta V_f(X)=1\).

Proof

By (2.2) and [1] Theorem 3.59 and (3.57) in particular,

for any \(r>0\). By the variational characterisation of the f-perimeter relative to B(p, r) we can find \(Y\in C^\infty _c(\mathbb {R}^2,\mathbb {R}^2)\) with \(\mathrm {supp}[Y]\subset B(p,r)\) such that

where we make use of the generalised Gauss–Green formula (cf. [1] Theorem 3.36). Put \(X:=(1/c)Y\). Then \(X\in C^\infty _c(\mathbb {R}^2,\mathbb {R}^2)\) with \(\mathrm {supp}[X]\subset B(p,r)\) and \(\delta V_f(X)=1\) according to Proposition 3.2. \(\square \)

Proposition 3.5

Let f be a positive lower semi-continuous density on \(\mathbb {R}^2\). Let U be a bounded open set in \(\mathbb {R}^2\) with Lipschitz boundary. Let \(E, F_1, F_2\) be bounded sets in \(\mathbb {R}^2\) with finite perimeter. Assume that \(E\varDelta F_1\subset \subset U\) and \(E\varDelta F_2\subset \subset \mathbb {R}^2{\setminus }\overline{U}\). Define

Then F is a set of finite perimeter in \(\mathbb {R}^2\) and

Proof

The function \(\chi _E\vert _U\in \mathrm {BV}(U)\) and \(D(\chi _E\vert _U)=(D\chi _E)\vert _U\). We write \(\chi _E^U\) for the boundary trace of \(\chi _E\vert _U\) (see [1] Theorem 3.87); then \(\chi _E^U\in L^1(\partial U,\mathscr {H}^1\llcorner \partial U)\) (cf. [1] Theorem 3.88). We use similar notation elsewhere. By [1] Corollary 3.89,

From the definition of the total variation measure ([1] Definition 1.4),

where we also use the fact that \(\chi _{F_1}^U=\chi _E^U\) as \(E\varDelta F_1\subset \subset U\) and similarly for \(F_2\). The result now follows. \(\square \)

Proposition 3.6

Assume that f is a positive locally Lipschitz density on \(\mathbb {R}^2\). Let \(v>0\) and suppose that the set E is a bounded minimiser of (1.2). Let U be a bounded open set in \(\mathbb {R}^2\). There exist constants \(C>0\) and \(\delta >0\) with the following property. For any \(x\in U\) and \(0<r<\delta \),

where F is any set with finite perimeter in \(\mathbb {R}^2\) such that \(E\varDelta F\subset \subset B(x,r)\).

Proof

The proof follows that of [21] Proposition 3.1. We assume to the contrary that

in the language of quantifiers where we have taken some liberties with notation.

Choose \(p_1,p_2\in \mathscr {F}E\) with \(p_1\ne p_2\). Choose \(r_0>0\) such that the open balls \(B(p_1,r_0)\) and \(B(p_2,r_0)\) are disjoint. Choose vector fields \(X_j\in C^\infty _c(\mathbb {R}^2,\mathbb {R}^2)\) with \(\mathrm {supp}[X_j]\subset B(p_j,r_0)\) such that

as in Lemma 3.4 and Proposition 3.3. Put \(a:=\max \{a_1,a_2\}\). By (3.5),

So there exist \(\varepsilon >0\) and \(1>\eta >0\) such that

for \(|t|<\varepsilon \) and \(j=1,2\). In particular,

for \(|t|<\varepsilon \) and \(j=1,2\).

In (3.4) choose \(C=(1+a)/(1-\eta )\) and \(\delta >0\) such that

-

(a)

\(0<2\delta <\mathrm {dist}(B(p_1,r_0),B(p_2,r_0))\),

-

(b)

\(\sup \{V_f(B(x,\delta )):\,x\in U\}<(1-\eta )\,\varepsilon \).

Choose x, r and \(F_1\) as in (3.4). In light of (a) we may assume that \(B(x,r)\cap B(p_1,r_0)=\emptyset \). By (b),

From (3.6) and (3.8) we can find \(t\in (-\varepsilon ,\varepsilon )\) such that with \(F_2:=\varphi _t^{(1)}(E)\),

by the intermediate value theorem. From (3.4),

while from (3.7),

Let F be the set

Note that \(E\varDelta F_2\subset \subset B(p_1,r_0)\). By Proposition 3.5, F is a bounded set of finite perimeter in \(\mathbb {R}^2\) and

We then infer from (3.10), (3.11) and (3.9) that

On the other hand, \(V_f(F)=V_f(F_1)+V_f(F_2)-V_f(E)=V_f(E)\) by (3.9). We therefore obtain a contradiction to the f-isoperimetric property of E. \(\square \)

Let E be a set of finite perimeter in \(\mathbb {R}^2\) and U a bounded open set in \(\mathbb {R}^2\). The minimality excess is the function \(\psi \) defined by

where

as in [27] (1.9). We recall that the boundary of E is said to be almost minimal in \(\mathbb {R}^2\) if for each bounded open set U in \(\mathbb {R}^2\) there exists \(T>0\) and a positive constant K such that for every \(x\in U\) and \(r\in (0,T)\),

This definition corresponds to [27] Definition 1.5.

Theorem 3.7

Assume that f is a positive locally Lipschitz density on \(\mathbb {R}^2\). Let \(v>0\) and assume that E is a bounded minimiser of (1.2). Then the boundary of E is almost minimal in \(\mathbb {R}^2\).

Proof

Let U be a bounded open set in \(\mathbb {R}^2\) and \(C>0\) and \(\delta >0\) as in Proposition 3.6. The open \(\delta \)-neighbourhood of U is denoted \(I_\delta (U)\). Let \(x\in U\) and \(r\in (0,\delta )\). Put \(V:=I_{2\delta }(U)\). For the sake of brevity write \(m:=\inf _{B(x,r)}f\) and \(M:=\sup _{B(x,r)}f\). Let F be a set of finite perimeter in \(\mathbb {R}^2\) such that \(F\varDelta E\subset \subset B(x,r)\). By Proposition 3.6,

where L stands for the Lipschitz constant of the restriction of f to V. We then derive that

By [13] (5.14), \(\nu (E,B(x,r))\le \pi r\). The inequality in (3.13) now follows. \(\square \)

Theorem 3.8

Assume that f is a positive locally Lipschitz density on \(\mathbb {R}^2\). Let \(v>0\) and suppose that E is a bounded minimiser of (1.2). Then there exists a set \(\widetilde{E}\subset \mathbb {R}^2\) such that

-

(i)

\(\widetilde{E}\) is a bounded minimiser of (1.2);

-

(ii)

\(\widetilde{E}\) is equivalent to E;

-

(iii)

\(\widetilde{E}\) is open and \(\partial \widetilde{E}\) is a \(C^1\) hypersurface in \(\mathbb {R}^2\).

Proof

By [13] Proposition 3.1 there exists a Borel set F equivalent to E with the property that

By Theorem 3.7 and [27] Theorem 1.9, \(\partial F\) is a \(C^1\) hypersurface in \(\mathbb {R}^2\) (taking note of differences in notation). The set

satisfies (i)–(iii). \(\square \)

4 Weakly bounded curvature and spherical cap symmetry

Theorem 4.1

Assume that f is a positive locally Lipschitz density on \(\mathbb {R}^2\). Let \(v>0\) and suppose that E is a bounded minimiser of (1.2). Then there exists a set \(\widetilde{E}\subset \mathbb {R}^2\) such that

-

(i)

\(\widetilde{E}\) is a bounded minimiser of (1.2);

-

(ii)

\(\widetilde{E}\) is equivalent to E;

-

(iii)

\(\widetilde{E}\) is open and \(\partial \widetilde{E}\) is a \(C^{1,1}\) hypersurface in \(\mathbb {R}^2\).

Proof

We may assume that E has the properties listed in Theorem 3.8. Put \(M:=\partial E\). Let \(x\in M\) and U a bounded open set containing x. Choose \(C>0\) and \(\delta >0\) as in Proposition 3.6. Let \(0<r<\delta \) and \(X\in C^\infty _c(\mathbb {R}^2,\mathbb {R}^2)\) with \(\mathrm {supp}[X]\subset B(x,r)\). Then

for each \(t\in \mathbb {R}\). From the identity (3.2),

where K stands for the Lipschitz constant of f restricted to U. On dividing by t and taking the limit \(t\rightarrow 0\) we obtain

upon using Lemma 2.2 and Proposition 3.2. Replacing X by \(-X\) we derive that

where \(C_1=C\Vert f\Vert _{L^\infty (U)}+\sqrt{2}K\). Let \(\gamma _1:I\rightarrow M\) be a local \(C^1\) parametrisation of M near x. Suppose that \(Y\in C^1_c(I,\mathbb {R}^2)\) with \(\mathrm {supp}[Y]\subset I\) and that \(\gamma _1(I)\subset M\cap B(x,r)\). Note that there exists \(X\in C^\infty _c(\mathbb {R}^2,\mathbb {R}^2)\) with \(\mathrm {supp}[X]\subset B(x,r)\) such that \(X\circ \gamma _1=Y\) on I. The above estimate entails that

This means that the function \((f\circ \gamma _1)t\) belongs to \(\mathrm {BV}(I)\) and this implies in turn that \(t\in \mathrm {BV}(I)\). For \(s_1,s_2\in I\) with \(s_1<s_2\),

where \(1/c=\inf _{\overline{U}}f>0\). It follows that M is of class \(C^{1,1}\). \(\square \)

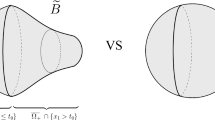

We turn to the topic of spherical cap symmetrisation. Denote by \(\mathbb {S}^1_\tau \) the centred circle in \(\mathbb {R}^2\) with radius \(\tau >0\). We sometimes write \(\mathbb {S}^1\) for \(\mathbb {S}^1_1\). Given \(x\in \mathbb {R}^2,v\in \mathbb {S}^1\) and \(\alpha \in (0,\pi ]\) the open cone with vertex x, axis v and opening angle \(2\alpha \) is the set

Let E be an \(\mathscr {L}^2\)-measurable set in \(\mathbb {R}^2\) and \(\tau >0\). The \(\tau \)-section \(E_\tau \) of E is the set \(E_\tau :=E\cap \mathbb {S}^1_\tau \). Put

and \(p(E) := \{\tau>0:L(\tau )>0\}\). The function L is \(\mathscr {L}^1\)-measurable by [1] Theorem 2.93. Given \(\tau >0\) and \(0<\alpha \le \pi \) the spherical cap \(C(\tau ,\alpha )\) is the set

and has \(\mathscr {H}^1\)-measure \(s(\tau ,\alpha ):=2\alpha \tau \). The spherical cap symmetral \(E^{sc}\) of the set E is defined by

where \(\alpha \in (0,\pi ]\) is determined by \(s(\tau ,\,\alpha )=L(\tau )\). Observe that \(E^{sc}\) is a \(\mathscr {L}^2\)-measurable set in \(\mathbb {R}^2\) and \(V_f(E^{sc})=V_f(E)\). Note also that if B is a centred open ball then \(B^{sc}=B{\setminus }\{0\}\). We say that E is spherical cap symmetric if \(\mathscr {H}^1((E\varDelta E^{sc})_\tau )=0\) for each \(\tau >0\). This definition is broad but suits our purposes.
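The construction can be sketched on a single \(\tau \)-section described by angular intervals. The code below is a minimal illustration with hypothetical helper names; it assumes that the spherical cap \(C(\tau ,\alpha )\) is the arc of \(\mathbb {S}^1_\tau \) of half-angle \(\alpha \) centred on the positive \(e_1\)-axis, a convention not recoverable from the text above.

```python
import math

def section_length(tau, arcs):
    # H^1-measure L(tau) of a section given as disjoint angular intervals (a, b) with a < b.
    return tau * sum(b - a for a, b in arcs)

def cap_half_angle(tau, arcs):
    # Half-angle alpha in (0, pi] determined by s(tau, alpha) = 2 * alpha * tau = L(tau).
    length = section_length(tau, arcs)
    if length <= 0.0:
        return None  # tau does not belong to p(E): the section is H^1-null
    return min(length / (2.0 * tau), math.pi)

def symmetral_section(tau, arcs):
    # Section of the spherical cap symmetral: one arc centred on the e_1-axis (assumed convention).
    alpha = cap_half_angle(tau, arcs)
    return [] if alpha is None else [(-alpha, alpha)]

# A section at radius 2 made of two arcs is replaced by a single arc of equal length.
arcs = [(1.0, 1.5), (3.0, 3.2)]
print(section_length(2.0, arcs), symmetral_section(2.0, arcs))
```

Because the section lengths are unchanged and the density is radial, each circle \(\mathbb {S}^1_\tau \) carries the same weighted length before and after symmetrisation, which is the mechanism behind \(V_f(E^{sc})=V_f(E)\).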

The result below is stated in [22] Theorem 6.2, where a sketch of the proof is given. A proof along the lines of [2] Theorem 1.1 can be found in [23]. First, let B be a Borel set in \((0,+\infty )\); then the annulus A(B) over B is the set \(A(B):=\{x\in \mathbb {R}^2:|x|\in B\}\).

Theorem 4.2

Let E be a set of finite perimeter in \(\mathbb {R}^2\). Then \(E^{sc}\) is a set of finite perimeter and

for any Borel set \(B\subset (0,\infty )\) and the same inequality holds with \(E^{sc}\) replaced by any set F that is \(\mathscr {L}^2\)-equivalent to \(E^{sc}\).

Corollary 4.3

Let f be a positive lower semi-continuous radial function on \(\mathbb {R}^2\). Let E be a set of finite perimeter in \(\mathbb {R}^2\). Then \( P_f(E^{sc})\le P_f(E) \).

Proof

Assume that \(P_f(E)<+\infty \). We remark that f is Borel measurable as f is lower semi-continuous. Let \((f_h)\) be a sequence of simple Borel measurable radial functions on \(\mathbb {R}^2\) such that \(0\le f_h\le f\) and \(f_h\uparrow f\) on \(\mathbb {R}^2\) as \(h\rightarrow \infty \). By Theorem 4.2,

for each h. Taking the limit \(h\rightarrow \infty \) the monotone convergence theorem gives \(P_f(E^{sc})\le P_f(E)\). \(\square \)
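The appeal to Theorem 4.2 in this proof can be unpacked as follows. This is only a sketch, and the notation is ours: we assume that \(P_f(E)=\int f\,d|D\chi _E|\), write \(|D\chi _E|(A)\) for the perimeter of E in a Borel set A, and represent each simple radial function as \(f_h=\sum _jc_j\chi _{A(B_j)}\) with constants \(c_j\ge 0\) and disjoint Borel sets \(B_j\subset (0,+\infty )\). The value of \(f_h\) at the origin is immaterial as a single point is \(|D\chi _E|\)-negligible.

$$\begin{aligned} \int f_h\,d|D\chi _{E^{sc}}|=\sum _jc_j\,|D\chi _{E^{sc}}|(A(B_j))\le \sum _jc_j\,|D\chi _E|(A(B_j))=\int f_h\,d|D\chi _E|\le P_f(E). \end{aligned}$$

The middle inequality is Theorem 4.2 applied to each \(B_j\) in turn, and the last step uses \(f_h\le f\).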

Lemma 4.4

Let E be an \(\mathscr {L}^2\)-measurable set in \(\mathbb {R}^2\) such that \(E{\setminus }\{0\}=E^{sc}\). Then there exists an \(\mathscr {L}^2\)-measurable set F equivalent to E such that

-

(i)

\(\partial F=\{x\in \mathbb {R}^2:0<|F\cap B(x,\rho )|<|B(x,\rho )|\text { for any }\rho >0\}\);

-

(ii)

F is spherical cap symmetric.

Proof

Put

We claim that \(E_1\) is spherical cap symmetric. For take \(x\in E_1\) with \(\tau =|x|>0\) and \(|\theta (x)|\in (0,\pi ]\). Now \(|E\cap B(x,\rho )|=|B(x,\rho )|\) for some \(\rho >0\). Let \(y\in \mathbb {R}^2\) with \(|y|=\tau \) and \(|\theta (y)|<|\theta (x)|\). Choose a rotation \(O\in \mathrm {SO}(2)\) such that \(OB(x,\rho )=B(y,\rho )\). As \(E{\setminus }\{0\}=E^{sc}\), \(|E\cap B(y,\rho )|=|O(E\cap B(x,\rho ))|=|E\cap B(x,\rho )|=|B(x,\rho )|=|B(y,\rho )|\). The claim follows. It follows in a similar way that \(\mathbb {R}^2{\setminus } E_0\) is spherical cap symmetric. It can then be seen that the set \(F:=(E_1\cup E){\setminus } E_0\) inherits this property. As in [13] Proposition 3.1 the set F is equivalent to E and enjoys the property in (i). \(\square \)

Theorem 4.5

Let f be as in (1.3) where \(h:[0,+\infty )\rightarrow \mathbb {R}\) is a non-decreasing convex function. Given \(v>0\) let E be a bounded minimiser of (1.2). Then there exists an \(\mathscr {L}^2\)-measurable set \(\widetilde{E}\) with the properties

-

(i)

\(\widetilde{E}\) is a minimiser of (1.2);

-

(ii)

\(L_{\widetilde{E}}=L\) a.e. on \((0,+\infty )\);

-

(iii)

\(\widetilde{E}\) is open, bounded and has \(C^{1,1}\) boundary;

-

(iv)

\(\widetilde{E}{\setminus }\{0\}=\widetilde{E}^{sc}\).

Proof

Let E be a bounded minimiser for (1.2). Then \(E_1:=E^{sc}\) is a bounded minimiser of (1.2) by Corollary 4.3 and \(L_E=L_{E_1}\) on \((0,+\infty )\). Now put \(E_2:=F\) with F as in Lemma 4.4 applied to \(E_1\). Then \(L_{E_2}=L\) a.e. on \((0,+\infty )\) as \(E_2\) is equivalent to \(E_1\); moreover, \(E_2\) is a bounded minimiser of (1.2) and \(E_2\) is spherical cap symmetric. Moreover, \(\partial E_2=\{x\in \mathbb {R}^2:0<|E_2\cap B(x,\rho )|<|B(x,\rho )|\text { for any }\rho >0\}\). As in the proof of Theorem 3.8, \(\partial E_2\) is a \(C^1\) hypersurface in \(\mathbb {R}^2\). Put

Then \(\widetilde{E}\) is equivalent to \(E_2\) so that (ii) holds, and is a bounded minimiser of (1.2); \(\widetilde{E}\) is open and \(\partial \widetilde{E}=\partial E_2\) is \(C^1\). In fact, \(\partial \widetilde{E}\) is of class \(C^{1,1}\) by Theorem 4.1. As \(E_2\) is spherical cap symmetric the same is true of \(\widetilde{E}\). But \(\widetilde{E}\) is open which entails that \(\widetilde{E}{\setminus }\{0\}=\widetilde{E}^{sc}\). \(\square \)

5 More on spherical cap symmetry

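Let

$$\begin{aligned} H:=\{x=(x_1,x_2)\in \mathbb {R}^2:x_2>0\} \end{aligned}$$

stand for the open upper half-plane in \(\mathbb {R}^2\) and

$$\begin{aligned} S:\mathbb {R}^2\rightarrow \mathbb {R}^2;x=(x_1,x_2)\mapsto (x_1,-x_2) \end{aligned}$$

for reflection in the \(x_1\)-axis. Let \(O\in \mathrm {SO}(2)\) represent rotation anti-clockwise through \(\pi /2\).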

Lemma 5.1

Let E be an open set in \(\mathbb {R}^2\) with \(C^1\) boundary M and assume that \(E{\setminus }\{0\}=E^{sc}\). Let \(x\in M{\setminus }\{0\}\). Then

-

(i)

\(Sx\in M{\setminus }\{0\}\);

-

(ii)

\(n(Sx)=Sn(x)\);

-

(iii)

\(\cos \sigma (Sx)=-\cos \sigma (x)\).

Proof

(i) The closure \(\overline{E}\) of E is spherical cap symmetric. The spherical cap symmetral \(\overline{E}\) is invariant under S from the representation (4.2). (ii) is a consequence of this last observation. (iii) Note that \(t(Sx)=O^\star n(Sx)=O^\star Sn(x)\). Then

as \(SO^\star S=O\) and \(O=-O^\star \). \(\square \)
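The two matrix identities used in (iii) can be checked directly. The matrix forms below are our own bookkeeping in the standard basis, read off from the descriptions of S as reflection in the \(x_1\)-axis and of O as anti-clockwise rotation through \(\pi /2\).

$$\begin{aligned} S=\begin{pmatrix}1&0\\ 0&-1\end{pmatrix},\quad O=\begin{pmatrix}0&-1\\ 1&0\end{pmatrix},\quad O^\star =\begin{pmatrix}0&1\\ -1&0\end{pmatrix}=-O,\quad SO^\star S=\begin{pmatrix}1&0\\ 0&-1\end{pmatrix}\begin{pmatrix}0&-1\\ -1&0\end{pmatrix}=\begin{pmatrix}0&-1\\ 1&0\end{pmatrix}=O. \end{aligned}$$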

We introduce the projection \(\pi :\mathbb {R}^2\rightarrow [0,+\infty );x\mapsto |x|\).

Lemma 5.2

Let E be an open set in \(\mathbb {R}^2\) with boundary M and assume that \(E{\setminus }\{0\}=E^{sc}\).

-

(i)

Suppose \(0\ne x\in \mathbb {R}^2{\setminus }\overline{E}\) and \(\theta (x)\in (0,\pi ]\). Then there exists an open interval I in \((0,+\infty )\) containing \(\tau \) and \(\alpha \in (0,\theta (x))\) such that \(A(I){\setminus }\overline{S}(\alpha )\subset \mathbb {R}^2{\setminus }\overline{E}\).

-

(ii)

Suppose \(0\ne x\in E\) and \(\theta (x)\in [0,\pi )\). Then there exists an open interval I in \((0,+\infty )\) containing \(\tau \) and \(\alpha \in (\theta (x),\pi )\) such that \(A(I)\cap S(\alpha )\subset E\).

-

(iii)

For each \(0<\tau \in \pi (M)\), \(M_\tau \) is the union of two closed spherical arcs in \(\mathbb {S}^1_\tau \) symmetric about the \(x_1\)-axis.

Proof

(i) We can find \(\alpha \in (0,\theta (x))\) such that \(\mathbb {S}^1_\tau {\setminus } S(\alpha )\subset \mathbb {R}^2{\setminus }\overline{E}\) as can be seen from definition (4.2). This latter set is compact so \(\mathrm {dist}(\mathbb {S}^1_\tau {\setminus } S(\alpha ),\overline{E})>0\). This means that the \(\varepsilon \)-neighbourhood of \(\mathbb {S}^1_\tau {\setminus } S(\alpha )\) is contained in \(\mathbb {R}^2{\setminus }\overline{E}\) for \(\varepsilon >0\) small. The claim follows. (ii) Again from (4.2) we can find \(\alpha \in (\theta (x),\pi )\) such that \(\overline{\mathbb {S}^1_\tau \cap S(\alpha )}\subset E\) and the assertion follows as before.

(iii) Suppose \(x_1,x_2\) are distinct points in \(M_\tau \) with \(0\le \theta (x_1)<\theta (x_2)\le \pi \). Suppose y lies in the interior of the spherical arc joining \(x_1\) and \(x_2\). If \(y\in \mathbb {R}^2{\setminus }\overline{E}\) then \(x_2\in \mathbb {R}^2{\setminus }\overline{E}\) by (i) and hence \(x_2\not \in M\). If \(y\in E\) we obtain the contradiction that \(x_1\in E\) by (ii). Therefore \(y\in M\). We infer that the closed spherical arc joining \(x_1\) and \(x_2\) lies in \(M_\tau \). The claim follows noting that \(M_\tau \) is closed. \(\square \)

Lemma 5.3

Let E be an open set in \(\mathbb {R}^2\) with \(C^1\) boundary M. Let \(x\in M\). Then

Proof

Assume for a contradiction that

There exists \(\eta \in (0,1)\) and a sequence \((y_h)\) in E such that \(y_h\rightarrow x\) as \(h\rightarrow \infty \) and

for each \(h\in \mathbb {N}\). Choose \(\alpha \in (0,\pi /2)\) such that \(\cos \alpha =\eta \). As M is \(C^1\) there exists \(r>0\) such that

By choosing h sufficiently large we can find \(y_h\in B(x,r)\) with the additional property that \(y_h\in C(x,-n(x),\alpha )\) by (5.1). We are thus led to a contradiction. \(\square \)

Lemma 5.4

Let E be an open set in \(\mathbb {R}^2\) with \(C^1\) boundary M and assume that \(E{\setminus }\{0\}=E^{sc}\). For each \(0<\tau \in \pi (M)\),

-

(i)

\(|\cos \sigma |\) is constant on \(M_\tau \);

-

(ii)

\(\cos \sigma =0\) on \(M_\tau \cap \{x_2=0\}\);

-

(iii)

\(\langle Ox,n(x)\rangle \le 0\) for \(x\in M_\tau \cap H\);

-

(iv)

\(\cos \sigma \le 0\) on \(M_\tau \cap H\);

and if \(\cos \sigma \not \equiv 0\) on \(M_\tau \) then

-

(v)

\(\tau \in p(E)\);

-

(vi)

\(M_\tau \) consists of two disjoint singletons in \(\mathbb {S}^1_\tau \) symmetric about the \(x_1\)-axis;

-

(vii)

\(L(\tau )\in (0,2\pi \tau )\);

-

(viii)

\(M_\tau =\{(\tau \cos (L(\tau )/2\tau ),\pm \tau \sin (L(\tau )/2\tau ))\}\).

Proof

(i) By Lemma 5.2, \(M_\tau \) is the union of two closed spherical arcs in \(\mathbb {S}^1_\tau \) symmetric about the \(x_1\)-axis. In case \(M_\tau \cap \overline{H}\) consists of a singleton the assertion follows from Lemma 5.1. Now suppose that \(M_\tau \cap \overline{H}\) consists of a spherical arc in \(\mathbb {S}^1_\tau \) with non-empty interior. It can be seen that \(\cos \sigma \) vanishes on the interior of this arc as \(0=r_1^\prime =\cos \sigma _1\) in a local parametrisation by (2.9). By continuity \(\cos \sigma =0\) on \(M_\tau \). (ii) follows from Lemma 5.1. (iii) Let \(x\in M_\tau \cap H\) so \(\theta (x)\in (0,\pi )\). Then \(S(\theta (x))\cap \mathbb {S}^1_\tau \subset \overline{E}\) as \(\overline{E}\) is spherical cap symmetric. Then

by Lemma 5.3. (iv) The adjoint transformation \(O^\star \) represents rotation clockwise through \(\pi /2\). Let \(x\in M_\tau \cap H\). By (iii),

and this leads to the result. (v) As \(\cos \sigma \not \equiv 0\) on \(M_\tau \) we can find \(x\in M_\tau \cap H\). We claim that \(\mathbb {S}^1_\tau \cap S(\theta (x))\subset E\). For suppose that \(y\in \mathbb {S}^1_\tau \cap S(\theta (x))\) but \(y\not \in E\). We may suppose that \(0\le \theta (y)<\theta (x)<\pi \). If \(y\in \mathbb {R}^2{\setminus }\overline{E}\) then \(x\in \mathbb {R}^2{\setminus }\overline{E}\) by Lemma 5.2. On the other hand, if \(y\in M\) then the spherical arc in H joining y to x is contained in M again by Lemma 5.2. This arc also has non-empty interior in \(\mathbb {S}^1_\tau \). Now \(\cos \sigma =0\) on its interior so \(\cos (\sigma (x))=0\) by (i) contradicting the hypothesis. A similar argument deals with (vi) and this together with (v) in turn entails (vii) and (viii). \(\square \)

Lemma 5.5

Let E be an open set in \(\mathbb {R}^2\) with \(C^1\) boundary M and assume that \(E{\setminus }\{0\}=E^{sc}\). Suppose that \(0\in M\). Then

-

(i)

\((\sin \sigma )(0+)=0\);

-

(ii)

\((\cos \sigma )(0+)=-1\).

Proof

(i) Let \(\gamma _1\) be a \(C^1\) parametrisation of M in a neighbourhood of 0 with \(\gamma _1(0)=0\) as above. Then \(n(0)=n_1(0)=e_1\) and hence \(t(0)=t_1(0)=-e_2\). By Taylor’s Theorem \(\gamma _1(s)=\gamma _1(0)+t_1(0)s+o(s)=-e_2s+o(s)\) for \(s\in I\). This means that \(r_1(s)=|\gamma _1(s)|=s+o(s)\) and

as \(s\rightarrow 0\) which entails that \((\cos \theta _1)(0-)=0\). Now \(t_1\) is continuous on I so \(t_1=-e_2+o(1)\) and \(\cos \alpha _1=\langle e_1,t_1\rangle =o(1)\). We infer that \((\cos \alpha _1)(0-)=0\). By (2.11), \(\cos \alpha _1=\cos \sigma _1\cos \theta _1-\sin \sigma _1\sin \theta _1\) on I and hence \((\sin \sigma _1)(0-)=0\). We deduce that \((\sin \sigma )(0+)=0\). Item (ii) follows from (i) and Lemma 5.4. \(\square \)

The set

plays an important rôle in the proof of Theorem 1.1.

Lemma 5.6

Let E be an open set in \(\mathbb {R}^2\) with \(C^1\) boundary M and assume that \(E{\setminus }\{0\}=E^{sc}\). Then \(\varOmega \) is an open set in \((0,+\infty )\).

Proof

Suppose \(0<\tau \in \varOmega \). Choose \(x\in M_\tau \cap \{\cos \sigma \ne 0\}\). Let \(\gamma _1:I\rightarrow M\) be a local \(C^1\) parametrisation of M in a neighbourhood of x such that \(\gamma _1(0)=x\) as before. By shrinking I if necessary we may assume that \(r_1\ne 0\) and \(\cos \sigma _1\ne 0\) on I. Then the set \(\{r_1(s):s\in I\}\subset \varOmega \) is connected and so an interval in \(\mathbb {R}\) (see for example [25] Theorems 6.A and 6.B). By (2.9), \(r_1^\prime (0)=\cos \sigma _1(0)=\cos \sigma (x)\ne 0\). This means that the set \(\{r_1(s):s\in I\}\) contains an open interval about \(\tau \). \(\square \)

6 Generalised (mean) curvature

Given a set E of finite perimeter in \(\mathbb {R}^2\) the first variation \(\delta V_f(Z)\) resp. \(\delta P_f^+(Z)\) of weighted volume and perimeter along a time-dependent vector field Z are defined as in (2.13) and (2.14).

Proposition 6.1

Let f be as in (1.3) where \(h:[0,+\infty )\rightarrow \mathbb {R}\) is a non-decreasing convex function. Let E be a bounded open set in \(\mathbb {R}^2\) with \(C^1\) boundary M. Let Z be a time-dependent vector field. Then

where \(Z_0:=Z(0,\cdot )\in C^1_c(\mathbb {R}^2,\mathbb {R}^2)\).

Proof

The identity (3.2) holds for each \(t\in I\) with M in place of \(\mathscr {F}E\). The assertion follows on appealing to Lemma 2.3 and Lemma 2.4 with the help of the dominated convergence theorem. \(\square \)

Given \(X,Y\in C^\infty _c(\mathbb {R}^2,\mathbb {R}^2)\) let \(\psi \) resp. \(\chi \) stand for the 1-parameter group of \(C^\infty \) diffeomorphisms of \(\mathbb {R}^2\) associated to the vector fields X resp. Y as in (2.12). Let I be an open interval in \(\mathbb {R}\) containing the point 0. Suppose that the function \(\sigma :I\rightarrow \mathbb {R}\) is \(C^1\). Define a flow via

Lemma 6.2

The time-dependent vector field Z associated with the flow \(\varphi \) is given by

for \((t,x)\in I\times \mathbb {R}^2\) and satisfies (Z.1) and (Z.2).

Proof

For \(t\in I\) and \(x\in \mathbb {R}^2\) we compute using (2.12),

and this gives (6.1). Put \(K_1:=\mathrm {supp}[X],K_2:=\mathrm {supp}[Y]\) and \(K:=K_1\cup K_2\). Then (Z.2) holds with this choice of K. \(\square \)

Let E be a bounded open set in \(\mathbb {R}^2\) with \(C^1\) boundary M. Define \(\varLambda :=(M{\setminus }\{0\})\cap \{\cos \sigma =0\}\) and

For future reference put \(\varLambda _1^{\pm }:=\varLambda _1\cap \{x\in M:\pm \langle x,n\rangle >0\}\).

Lemma 6.3

Let f be as in (1.3) where \(h:[0,+\infty )\rightarrow \mathbb {R}\) is a non-decreasing convex function. Let E be a bounded open set in \(\mathbb {R}^2\) with \(C^{1,1}\) boundary M and suppose that \(E{\setminus }\{0\}=E^{sc}\). Then

-

(i)

\(\varLambda _1\) is a countable disjoint union of well-separated open circular arcs centred at 0;

-

(ii)

\(\mathscr {H}^1(\overline{\varLambda _1}{\setminus }\varLambda _1)=0\);

-

(iii)

f is differentiable \(\mathscr {H}^1\)-a.e. on \(M{\setminus }\overline{\varLambda _1}\).

The term well-separated in (i) means the following: if \(\varGamma \) is an open circular arc in \(\varLambda _1\) with \(\varGamma \cap (\varLambda _1{\setminus }\varGamma )=\emptyset \) then \(d(\varGamma ,\varLambda _1{\setminus }\varGamma )>0\).

Proof

(i) Let \(x\in \varLambda _1\) and \(\gamma _1:I\rightarrow M\) a \(C^{1,1}\) parametrisation of M near x. By shrinking I if necessary we may assume that \(\gamma _1(I)\subset M\cap B(x,\rho )\) with \(\rho \) as in (6.2). So \(\cos \sigma =0\) \(\mathscr {H}^1\)-a.e. on \(\gamma _1(I)\) and hence \(\cos \sigma _1=0\) a.e. on I. This means that \(\cos \sigma _1=0\) on I as \(\sigma _1\in C^{0,1}(I)\) and that \(r_1\) is constant on I by (2.9). Using (2.10) it can be seen that \(\gamma _1(I)\) is an open circular arc centred at 0. By compactness of M it follows that \(\varLambda _1\) is a countable disjoint union of open circular arcs centred on 0. The well-separated property flows from the fact that M is \(C^1\). (ii) follows as a consequence of this property. (iii) Let \(x\in M{\setminus }\overline{\varLambda _1}\) and \(\gamma _1:I\rightarrow M\) a \(C^{1,1}\) parametrisation of M near x with properties as before. We assume that x lies in the upper half-plane H. By shrinking I if necessary we may assume that \(\gamma _1(I)\subset (M{\setminus }\overline{\varLambda _1})\cap H\). Let \(s_1,s_2, s_3\in I\) with \(s_1<s_2<s_3\). Then \(y:=\gamma _1(s_2)\in M{\setminus }\overline{\varLambda _1}\). So \(\mathscr {H}^1(M\cap \{\cos \sigma \ne 0\}\cap B(y,\rho ))>0\) for each \(\rho >0\). This means that for small \(\eta >0\) the set \(\gamma _1((s_2-\eta ,s_2+\eta ))\cap \{\cos \sigma \ne 0\}\) has positive \(\mathscr {H}^1\)-measure. Consequently, \(r_1(s_3)-r_1(s_1)=\int _{s_1}^{s_3}\cos \sigma _1\,ds<0\) bearing in mind Lemma 5.4. This shows that \(r_1\) is strictly decreasing on I. So h is differentiable a.e. on \(r_1(I)\subset (0,+\infty )\) in virtue of the fact that h is convex and hence locally Lipschitz. This entails (iii). \(\square \)

Proposition 6.4

Let f be as in (1.3) where \(h:[0,+\infty )\rightarrow \mathbb {R}\) is a non-decreasing convex function. Given \(v>0\) let E be a minimiser of (1.2). Assume that E is a bounded open set in \(\mathbb {R}^2\) with \(C^1\) boundary M and suppose that \(E{\setminus }\{0\}=E^{sc}\). Suppose that \(M{\setminus }\overline{\varLambda _1}\ne \emptyset \). Then there exists \(\lambda \in \mathbb {R}\) such that for any \(X\in C^1_c(\mathbb {R}^2,\mathbb {R}^2)\),

Proof

Let \(X\in C^\infty _c(\mathbb {R}^2,\mathbb {R}^2)\). Let \(x\in M\) and \(r>0\) such that \(M\cap B(x,r)\subset M{\setminus }\overline{\varLambda _1}\). Choose \(Y\in C^\infty _c(\mathbb {R}^2,\mathbb {R}^2)\) with \(\mathrm {supp}[Y]\subset B(x,r)\) as in Lemma 3.4. Let \(\psi \) resp. \(\chi \) stand for the 1-parameter group of \(C^\infty \) diffeomorphisms of \(\mathbb {R}^2\) associated to the vector fields X resp. Y as in (2.12). For each \((s,t)\in \mathbb {R}^2\) the set \(\chi _s(\psi _t(E))\) is an open set in \(\mathbb {R}^2\) with \(C^1\) boundary and \(\partial (\chi _s\circ \psi _t)(E)=(\chi _s\circ \psi _t)(M)\) by Lemma 2.1. Define

for \((s,t)\in \mathbb {R}^2\). We write \(F=(\chi _t\circ \psi _s)(E)\). Arguing as in Proposition 3.2,

with an application of the area formula (cf. [1] Theorem 2.71). This last varies continuously in (s, t). The same holds for partial differentiation with respect to s. Indeed, put \(\eta :=\chi _t\circ \psi _s\). Then noting that \(J_2d(\eta \circ \psi _h)=(J_2d\eta )\circ \psi _h J_2d\psi _h\) and using the dominated convergence theorem,

where the explanation for the last term can be found in the proof of Proposition 3.2. In this regard we note that \(d(d\chi _t)\) (for example) is continuous on \(I\times \mathbb {R}^2\) (cf. [1] Theorem 3.3 and Exercise 3.2) and in particular \(\nabla J_2d\chi _t\) is continuous on \(I\times \mathbb {R}^2\). The expression above also varies continuously in (s, t) as can be seen with the help of the dominated convergence theorem. This means that \(V(\cdot ,\cdot )\) is continuously differentiable on \(\mathbb {R}^2\). Note that

by choice of Y. By the implicit function theorem there exists \(\eta >0\) and a \(C^1\) function \(\sigma :(-\eta ,\eta )\rightarrow \mathbb {R}\) such that \(\sigma (0)=0\) and \(V(s,\sigma (s))=0\) for \(s\in (-\eta ,\eta )\); moreover,

by the Gauss–Green formula (cf. [1] Theorem 3.36).

The mapping

satisfies conditions (F.1)–(F.4) above with \(I=(-\eta ,\eta )\) where the associated time-dependent vector field Z is given as in (6.1) and satisfies (Z.1) and (Z.2); moreover, \(Z_0=Z(0,\cdot )=\sigma ^\prime (0)Y+X\). Note that \(Z_0=X\) on \(M{\setminus } B(x,r)\).

The mapping \(I\rightarrow \mathbb {R};t\mapsto P_f(\varphi _t(E))\) is right-differentiable at \(t=0\) as can be seen from Proposition 6.1 and has non-negative right-derivative there. By Proposition 6.1 and Lemma 6.3,

The identity then follows upon inserting the expression for \(\sigma ^\prime (0)\) above with \(\lambda =-\int _{M}f^\prime _+(\cdot ,Y) + f\,\mathrm {div}^M Y\,d\mathscr {H}^1\). The claim follows for \(X\in C^1_c(\mathbb {R}^2,\mathbb {R}^2)\) by a density argument. \(\square \)

Theorem 6.5

Let f be as in (1.3) where \(h:[0,+\infty )\rightarrow \mathbb {R}\) is a non-decreasing convex function. Given \(v>0\) let E be a minimiser of (1.2). Assume that E is a bounded open set in \(\mathbb {R}^2\) with \(C^{1,1}\) boundary M and suppose that \(E{\setminus }\{0\}=E^{sc}\). Suppose that \(M{\setminus }\overline{\varLambda _1}\ne \emptyset \). Then there exists \(\lambda \in \mathbb {R}\) such that

-

(i)

\(k+\rho \sin \sigma +\lambda =0\) \(\mathscr {H}^1\)-a.e. on \(M{\setminus }\overline{\varLambda _1}\);

-

(ii)

\(\rho _--\lambda \le k\le \rho _+-\lambda \) on \(\varLambda _1^+\);

-

(iii)

\(-\rho _+-\lambda \le k\le -\rho _--\lambda \) on \(\varLambda _1^-\).

The expression \(k+\rho \sin \sigma \) is called the generalised (mean) curvature of M.

Proof

(i) Let \(x\in M\) and \(r>0\) such that \(M\cap B(x,r)\subset M{\setminus }\overline{\varLambda _1}\). Choose \(X\in C^1_c(\mathbb {R}^2,\mathbb {R}^2)\) with \(\mathrm {supp}[X]\subset B(x,r)\). We know from Lemma 6.3 that f is differentiable \(\mathscr {H}^1\)-a.e. on \(\mathrm {supp}[X]\). Let \(\lambda \) be as in Proposition 6.4. Replacing X by \(-X\) we deduce from Proposition 6.4 that

The divergence theorem on manifolds (cf. [1] Theorem 7.34) holds also for \(C^{1,1}\) manifolds. So

where \(u=\langle n,X\rangle \). Combining this with the equality above we see that

for all \(X\in C^1_c(\mathbb {R}^2,\mathbb {R}^2)\). This leads to the result.

(ii) Let \(x\in M\) and \(r>0\) such that \(M\cap B(x,r)\subset \varLambda _1^+\). Let \(\phi \in C^1(\mathbb {S}^1_r)\) with support in \(\mathbb {S}^1_r\cap B(x,r)\). We can construct \(X\in C^1_c(\mathbb {R}^2,\mathbb {R}^2)\) with the property that \(X=\phi n\) on \(M\cap B(x,r)\). By Lemma 2.4,

on \(\varLambda _1\). Let us assume that \(\phi \ge 0\). As \(\langle \cdot ,n\rangle >0\) on \(\varLambda _1^+\) we have that \(f^\prime _+(\cdot ,X)=f\phi h^\prime _+(|x|,+1)=f\phi \rho _+\) so by Proposition 6.4,

We conclude that \(\rho _+-k-\lambda \ge 0\) on \(M\cap B(x,r)\). Now assume that \(\phi \le 0\). Then \(f^\prime _+(\cdot ,X)=-f\phi h^\prime _+(|x|,-1)=f\phi \rho _-\) so

and hence \(\rho _--k-\lambda \le 0\) on \(M\cap B(x,r)\). This shows (ii).

(iii) The argument is similar. Assume in the first instance that \(\phi \ge 0\). Then \(f^\prime _+(\cdot ,X)=f\phi h^\prime _+(|x|,-1)=-f\phi \rho _-\) so

We conclude that \(-\rho _--k-\lambda \ge 0\) on \(M\cap B(x,r)\). Next suppose that \(\phi \le 0\). Then \(f^\prime _+(\cdot ,X)=-f\phi h^\prime _+(|x|,+1)=-f\phi \rho _+\) so

and \(-\rho _+-k-\lambda \le 0\) on \(M\cap B(x,r)\). \(\square \)

Let E be an open set in \(\mathbb {R}^2\) with \(C^1\) boundary M and assume that \(E{\setminus }\{0\}=E^{sc}\) and that \(\varOmega \) is as in (5.2). Bearing in mind Lemma 5.4 we may define

The function

plays a key role.

Theorem 6.6

Let f be as in (1.3) where \(h:[0,+\infty )\rightarrow \mathbb {R}\) is a non-decreasing convex function. Given \(v>0\) let E be a bounded minimiser of (1.2). Assume that E is open with \(C^{1,1}\) boundary M and that \(E{\setminus }\{0\}=E^{sc}\). Suppose that \(M{\setminus }\overline{\varLambda _1}\ne \emptyset \) and let \(\lambda \) be as in Theorem 6.5. Then \(u\in C^{0,1}(\varOmega )\) and

a.e. on \(\varOmega \).

Proof

Let \(\tau \in \varOmega \) and x a point in the open upper half-plane such that \(x\in M_\tau \). There exists a \(C^{1,1}\) parametrisation \(\gamma _1:I\rightarrow M\) of M in a neighbourhood of x with \(\gamma _1(0)=x\) as above. Put \(u_1:=\sin \sigma _1\) on I. By shrinking the open interval I if necessary we may assume that \(r_1:I\rightarrow r_1(I)\) is a diffeomorphism and that \(r_1(I)\subset \subset \varOmega \). Note that \(\gamma =\gamma _1\circ r_1^{-1}\) and \(u=u_1\circ r_1^{-1}\) on \(r_1(I)\). It follows that \(u\in C^{0,1}(\varOmega )\). By (2.9),

a.e. on \(r_1(I)\). As \(\dot{\alpha }_1=k_1\) a.e. on I and using the identity (2.10) we see that \(\dot{\sigma }_1=\dot{\alpha }_1-\dot{\theta }_1=k_1-(1/r_1)\sin \sigma _1\) a.e. on I. Thus,

a.e. on \(r_1(I)\). By Theorem 6.5 there exists \(\lambda \in \mathbb {R}\) such that \(k+\rho \sin \sigma +\lambda =0\) \(\mathscr {H}^1\)-a.e. on \(M{\setminus }\overline{\varLambda _1}\). So

a.e. on \(r_1(I)\). The result follows. \(\square \)
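For orientation, the computation in this proof can be assembled as follows. This is a sketch that uses only relations quoted in the text, namely \(r_1^\prime =\cos \sigma _1\) from (2.9), the formula for \(\dot{\sigma }_1\) above and Theorem 6.5 (i); it presumably recovers the displayed identity of the theorem. With \(\tau =r_1(s)\),

$$\begin{aligned} u^\prime (\tau )=\frac{\dot{u}_1(s)}{r_1^\prime (s)}=\frac{\cos \sigma _1\,\dot{\sigma }_1}{\cos \sigma _1}=k_1-\frac{1}{r_1}\sin \sigma _1=-\rho (\tau )u(\tau )-\lambda -\frac{u(\tau )}{\tau }, \end{aligned}$$

that is, \(u^\prime +(1/\tau +\rho )u+\lambda =0\) a.e. on \(r_1(I)\).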

Lemma 6.7

Suppose that E is a bounded open set in \(\mathbb {R}^2\) with \(C^1\) boundary M and that \(E{\setminus }\{0\}=E^{sc}\). Then

-

(i)

\(\theta _2\in C^1(\varOmega )\);

-

(ii)

\(\theta _2^\prime =-\frac{1}{\tau }\frac{u}{\sqrt{1-u^2}}\) on \(\varOmega \).

Proof

Let \(\tau \in \varOmega \) and x a point in the open upper half-plane such that \(x\in M_\tau \). There exists a \(C^1\) parametrisation \(\gamma _1:I\rightarrow M\) of M in a neighbourhood of x with \(\gamma _1(0)=x\) as above. By shrinking the open interval I if necessary we may assume that \(r_1:I\rightarrow r_1(I)\) is a diffeomorphism and that \(r_1(I)\subset \subset \varOmega \). It then holds that

on \(r_1(I)\) by choosing an appropriate branch of \(\theta _1\). It follows that \(\theta _2\in C^1(\varOmega )\). By the chain-rule, (2.10) and (2.9),

on \(r_1(I)\). By Lemma 5.4, \(\cos (\sigma \circ \gamma )=-\sqrt{1-u^2}\) on \(\varOmega \). This entails (ii). \(\square \)
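The formula in (ii) can be traced through the chain rule as follows. This is a sketch: it uses \(r_1^\prime =\cos \sigma _1\) from (2.9) and \(\dot{\theta }_1=(1/r_1)\sin \sigma _1\), which is how (2.10) enters the proof of Theorem 6.6, and it assumes that \(\theta _2=\theta _1\circ r_1^{-1}\) on \(r_1(I)\). With \(\tau =r_1(s)\),

$$\begin{aligned} \theta _2^\prime (\tau )=\frac{\dot{\theta }_1(s)}{r_1^\prime (s)}=\frac{1}{\tau }\frac{\sin \sigma _1(s)}{\cos \sigma _1(s)}=\frac{1}{\tau }\frac{u(\tau )}{-\sqrt{1-u(\tau )^2}}=-\frac{1}{\tau }\frac{u(\tau )}{\sqrt{1-u(\tau )^2}}. \end{aligned}$$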

7 Convexity

Lemma 7.1

Let E be a bounded open set in \(\mathbb {R}^2\) with \(C^{1,1}\) boundary M and assume that \(E{\setminus }\{0\}=E^{sc}\). Put \(d:=\sup \{|x|:x\in M\}>0\) and \(b:=(d,0)\). Let \(\gamma _1:I\rightarrow M\) be a \(C^{1,1}\) parametrisation of M near b with \(\gamma _1(0)=b\). Then

Proof

For \(s\in I\),

and

by (2.6). By the Fubini–Tonelli Theorem,

for \(s\in I\). Assume for a contradiction that

for some \(l\in \mathbb {R}\). Then we can find \(\delta >0\) such that \(k_1<l\) a.e. on \([-\delta ,\delta ]\). So

as \(s\downarrow 0\) and

as \(s\downarrow 0\). Alternatively,

As \(1-dl>0\) we can find \(s\in I\) with \(r_1(s)>d\), contradicting the definition of d. \(\square \)

Lemma 7.2

Let f be as in (1.3) where \(h:[0,+\infty )\rightarrow \mathbb {R}\) is a non-decreasing convex function. Given \(v>0\) let E be a bounded minimiser of (1.2). Assume that E is open with \(C^{1,1}\) boundary M and that \(E{\setminus }\{0\}=E^{sc}\). Suppose that \(M{\setminus }\overline{\varLambda _1}\ne \emptyset \). Then \(\lambda \le -1/d-\rho _-(d)<0\) with \(\lambda \) as in Theorem 6.5.

Proof

We write M as the disjoint union \(M=(M{\setminus }\overline{\varLambda _1})\cup \overline{\varLambda _1}\). Let b be as above. Suppose that \(b\in \overline{\varLambda _1}\). Then \(b\in \varLambda _1\); in fact, \(b\in \varLambda _1^-\). By Theorem 6.5, \(\lambda \le -\rho _--k\) at b. By Lemma 7.1, \(\lambda \le -1/d-\rho _-(d)\) upon considering an appropriate sequence in M converging to b. Now suppose that b lies in the open set \(M{\setminus }\overline{\varLambda _1}\) in M. Let \(\gamma _1:I\rightarrow M\) be a \(C^{1,1}\) parametrisation of M near b with \(\gamma _1(I)\subset M{\setminus }\overline{\varLambda _1}\). By Theorem 6.5, \(k_1+\rho (r_1)\sin \sigma _1+\lambda =0\) a.e. on I. Now \(\sin \sigma _1(s)\rightarrow 1\) as \(s\rightarrow 0\). In light of Lemma 7.1, \(1/d+\rho (d-)+\lambda \le 0\) and \(\lambda \le -1/d-\rho _-(d)\). \(\square \)

Theorem 7.3

Let f be as in (1.3) where \(h:[0,+\infty )\rightarrow \mathbb {R}\) is a non-decreasing convex function. Given \(v>0\) let E be a bounded minimiser of (1.2). Assume that E is open with \(C^{1,1}\) boundary M and that \(E{\setminus }\{0\}=E^{sc}\). Suppose that \(M{\setminus }\overline{\varLambda _1}\ne \emptyset \). Then E is convex.

Proof

The proof runs along similar lines to [22] Theorem 6.5. By Theorem 6.5, \(k+\rho \sin \sigma +\lambda =0\) \(\mathscr {H}^1\)-a.e. on \(M{\setminus }\overline{\varLambda _1}\). By Lemma 7.2,

and \(k\ge 1/d\) \(\mathscr {H}^1\)-a.e. on \(M{\setminus }\overline{\varLambda _1}\). On \(\varLambda _1^+\), \(k\ge \rho _--\lambda \ge \rho _-+\rho _-(d)+1/d>0\); on the other hand, \(k<0\) on \(\varLambda _1^+\). So in fact \(\varLambda _1^+=\emptyset \). If \(b\in \varLambda _1^-\) then \(k=1/d\). On \(\varLambda _1^-\cap B(0,d)\), \(k\ge -\rho _+-\lambda \ge -\rho _++\rho _-(d)+1/d\ge 1/d\). Therefore \(k\ge 1/d>0\) \(\mathscr {H}^1\)-a.e. on M. The set E is then convex by a modification of [26] Theorem 1.8 and Proposition 1.4. It is sufficient that the function f (here \(\alpha _1\)) in the proof of the former theorem is non-decreasing. \(\square \)
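The lower bound \(k\ge 1/d\) on \(M{\setminus }\overline{\varLambda _1}\) can be seen as follows. This is a sketch under the standing facts that \(\rho \) is non-negative and non-decreasing (h being non-decreasing and convex), that \(\sin \sigma \le 1\), and that \(\rho (|x|)\le \rho _-(d)\) whenever \(|x|<d\). At \(\mathscr {H}^1\)-a.e. such point of \(M{\setminus }\overline{\varLambda _1}\), Theorem 6.5 (i) and Lemma 7.2 give

$$\begin{aligned} k=-\rho \sin \sigma -\lambda \ge -\rho _-(d)-\lambda \ge -\rho _-(d)+\frac{1}{d}+\rho _-(d)=\frac{1}{d}. \end{aligned}$$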

8 A reverse Hermite–Hadamard inequality

Let \(0\le a<b<+\infty \) and \(\rho \ge 0\) be a non-decreasing bounded function on [a, b]. Let h be a primitive of \(\rho \) on [a, b] so that \(h\in C^{0,1}([a,b])\) and introduce the functions

Then

a.e. on (a, b). Define

If \(\rho \) takes the constant value \(\mathbb {R}\ni \lambda \ge 0\) on [a, b] we use the notation \(m(\lambda ,a,b)\) and we write \(m_0=m(0,a,b)\). A computation gives

where \(A(a,b):=(a+b)/2\) stands for the arithmetic mean of a and b.

Lemma 8.1

Let \(0\le a<b<+\infty \) and \(\rho \ge 0\) be a non-decreasing bounded function on [a, b]. Then \(m_0\le m\).

Proof

Note that g is convex on [a, b] as can be seen from (8.3). By the Hermite–Hadamard inequality (cf. [15, 17]),

The inequality \((b-a)(g(a)+g(b))\le (a+b)(g(b)-g(a))\) entails

and the result follows on rearrangement. \(\square \)
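For reference, the classical Hermite–Hadamard inequality invoked in this proof reads as follows for a convex function \(\varphi \) on [a, b]. Here \(\varphi \) is a generic symbol of ours, to be replaced by the function g of the proof.

$$\begin{aligned} \varphi \Big (\frac{a+b}{2}\Big )\le \frac{1}{b-a}\int _a^b\varphi (t)\,dt\le \frac{\varphi (a)+\varphi (b)}{2}. \end{aligned}$$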

Lemma 8.2

Let \(0\le a<b<+\infty \) and \(\lambda >0\). Then \(m(\lambda ,a,b)<\lambda +A(a,b)^{-1}\).

Proof

First suppose that \(\lambda =1\) and take \(h:[a,b]\rightarrow \mathbb {R};t\mapsto t\). In this case,

and

The inequality in the statement is equivalent to

which in turn is equivalent to the statement \(\tanh [(b-a)/2]<(b-a)/2\) which holds for any \(b>a\).

For \(\lambda >0\) take \(h:[a,b]\rightarrow \mathbb {R};t\mapsto \lambda t\). Substitution gives

so from above

\(\square \)
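The elementary inequality \(\tanh x<x\) for \(x>0\), used in the proof above with \(x=(b-a)/2\), can be verified by a short monotonicity argument:

$$\begin{aligned} \frac{d}{dx}\big (x-\tanh x\big )=1-\mathrm {sech}^2x=\tanh ^2x>0\quad \text {for }x>0,\qquad \big (x-\tanh x\big )\big |_{x=0}=0. \end{aligned}$$

Hence \(x-\tanh x\) is strictly increasing on \([0,+\infty )\) and positive for \(x>0\).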

Theorem 8.3

Let \(0\le a<b<+\infty \) and \(\rho \ge 0\) be a non-decreasing bounded function on [a, b]. Then

-

(i)

\(m(\rho ,a,b)\le \rho (b-)+A(a,b)^{-1}\);

-

(ii)

equality holds if and only if \(\rho \equiv 0\) on [a, b).

Proof

(i) Define \(h:=\int _a^\cdot \rho \,d\tau \) on [a, b] so that \(h^\prime =\rho \) a.e. on (a, b). Define \(h_1:[a,b]\rightarrow \mathbb {R};t\mapsto h(b)-\rho (b-)(b-t)\). Then \(h_1(b)=h(b)\) and \(h_1^\prime =\rho (b-)\ge \rho =h^\prime \) a.e. on (a, b), and hence \(h\ge h_1\) on [a, b]. We derive

and

with obvious notation. This entails that \(m(\rho ,a,b)\le m(\rho (b-),a,b)\) and the result follows with the help of Lemma 8.2.

(ii) Suppose that \(\rho \not \equiv 0\) on [a, b). If \(\rho \) is constant on [a, b] the assertion follows from Lemma 8.2. Assume then that \(\rho \) is not constant on [a, b). Then \(h\not \equiv h_1\) on [a, b] in the above notation and \(\int _a^b te^{h(t)}\,dt>\int _a^b te^{h_1(t)}\,dt\) which entails strict inequality in (i). \(\square \)
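The comparison \(h\ge h_1\) used in part (i) can be made explicit. As \(\rho \) is non-decreasing, \(\rho (\tau )\le \rho (b-)\) for \(\tau \in [a,b)\), so for each \(t\in [a,b]\),

$$\begin{aligned} h(t)=h(b)-\int _t^b\rho \,d\tau \ge h(b)-\rho (b-)(b-t)=h_1(t). \end{aligned}$$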

With the above notation define

A computation gives

Lemma 8.4

Let \(0\le a<b<+\infty \) and \(\rho \ge 0\) be a non-decreasing bounded function on [a, b]. Then \(\hat{m}\ge \hat{m}_0\).

Proof

This follows by the Hermite–Hadamard inequality (8.6). \(\square \)

We prove a reverse Hermite–Hadamard inequality.

Theorem 8.5

Let \(0\le a<b<+\infty \) and \(\rho \ge 0\) be a non-decreasing bounded function on [a, b]. Then

-

(i)

\((b-a)\hat{m}(\rho ,a,b)\le 2+a\rho (a+)+b\rho (b-)\);

-

(ii)

equality holds if and only if \(\rho \equiv 0\) on [a, b).

This last inequality can be written in the form

comparing with (8.6) justifies naming this a reverse Hermite–Hadamard inequality.

Proof

(i) We assume in the first instance that \(\rho \in C^1((a,b))\). We prove the above result in the form

Put

for \(t\in [a,b]\) so that

Then using (8.3),

on (a, b) as

An integration over [a, b] gives the result.

Let us now assume that \(\rho \ge 0\) is a non-decreasing bounded function on [a, b]. Extend \(\rho \) to \(\mathbb {R}\) via

for \(t\in \mathbb {R}\). Let \((\psi _\varepsilon )_{\varepsilon >0}\) be a family of mollifiers (see e.g. [1] 2.1) and set \(\widetilde{\rho }_\varepsilon :=\widetilde{\rho }\star \psi _\varepsilon \) on \(\mathbb {R}\) for each \(\varepsilon >0\). Then \(\widetilde{\rho }_\varepsilon \in C^\infty (\mathbb {R})\) and is non-decreasing on \(\mathbb {R}\) for each \(\varepsilon >0\). Put \(\rho _\varepsilon :=\widetilde{\rho }_\varepsilon \mid _{[a,b]}\) for each \(\varepsilon >0\). Then \((\rho _\varepsilon )_{\varepsilon >0}\) converges to \(\rho \) in \(L^1((a,b))\) by [1] 2.1 for example. Note that \(h_{\varepsilon }:=\int _a^\cdot \rho _\varepsilon \,dt\rightarrow h\) pointwise on [a, b] as \(\varepsilon \downarrow 0\) and that \((h_\varepsilon )\) is uniformly bounded on [a, b]. Moreover, \(\rho _\varepsilon (a)\rightarrow \rho (a+)\) and \(\rho _\varepsilon (b)\rightarrow \rho (b-)\) as \(\varepsilon \downarrow 0\). By the above result,

for each \(\varepsilon >0\). The inequality follows on taking the limit \(\varepsilon \downarrow 0\) with the help of the dominated convergence theorem.

(ii) We now consider the equality case. We claim that

this entails the equality condition in (ii). First suppose that \(\rho \in C^1((a,b))\). In this case the inequality in (8.10) implies (8.11) upon integration. Now suppose that \(\rho \ge 0\) is a non-decreasing bounded function on [a, b]. Then (8.11) holds with \(\rho _\varepsilon \) in place of \(\rho \) for each \(\varepsilon >0\). The inequality for \(\rho \) follows by the dominated convergence theorem. \(\square \)

9 Comparison theorems for first-order differential equations

Let \(\mathscr {L}\) stand for the collection of Lebesgue measurable sets in \([0,+\infty )\). Define a measure \(\mu \) on \(([0,+\infty ),\mathscr {L})\) by \(\mu (dx):=(1/x)\,dx\). Let \(0\le a<b<+\infty \). Suppose that \(u:[a,b]\rightarrow \mathbb {R}\) is an \(\mathscr {L}^1\)-measurable function with the property that

The distribution function \(\mu _u:(0,+\infty )\rightarrow [0,+\infty )\) of u with respect to \(\mu \) is given by

Note that \(\mu _u\) is right-continuous and non-increasing on \((0,\infty )\) and \(\mu _u(t)\rightarrow 0\) as \(t\rightarrow \infty \).

Let u be a Lipschitz function on [a, b]. Define

By [1] Lemma 2.96, \(Z\cap \{u=t\}=\emptyset \) for \(\mathscr {L}^1\)-a.e. \(t\in \mathbb {R}\) and hence \(N:=u(Z)\subset \mathbb {R}\) is \(\mathscr {L}^1\)-negligible. We make use of the coarea formula ([1] Theorem 2.93 and (2.74)),

for any \(\mathscr {L}^1\)-measurable function \(\phi :[a,b]\rightarrow [0,\infty ]\).

Lemma 9.1

Let \(0\le a<b<+\infty \) and u a Lipschitz function on [a, b]. Then

(i) \(\mu _u\in \mathrm {BV}_{\mathrm {loc}}((0,+\infty ))\);

(ii) \(D\mu _u=-u_\sharp \mu \);

(iii) \(D\mu _u^a=D\mu _u|\!\_((0,+\infty ){\setminus } N)\);

(iv) \(D\mu _u^s=D\mu _u|\!\_N\);

(v) \(A:=\Big \{t\in (0,+\infty ):\mathscr {L}^1(Z\cap \{u=t\})>0\Big \}\) is the set of atoms of \(D\mu _u\) and \(D\mu _u^j=D\mu _u|\!\_A\);

(vi) \(\mu _u\) is differentiable \(\mathscr {L}^1\)-a.e. on \((0,+\infty )\) with derivative given by

$$\begin{aligned} \mu _u^\prime (t)=-\int _{\{u=t\}{\setminus } Z}\frac{1}{|u^\prime |}\,\frac{d\mathscr {H}^{0}}{\tau } \end{aligned}$$

for \(\mathscr {L}^1\)-a.e. \(t\in (0,+\infty )\);

(vii) \(\mathrm {Ran}(u)\cap [0,+\infty )=\mathrm {supp}(D\mu _u)\).

The notation above \(D\mu _u^a,D\mu _u^s,D\mu _u^j\) stands for the absolutely continuous resp. singular resp. jump part of the measure \(D\mu _u\) (see [1] 3.2 for example).

Proof

For any \(\varphi \in C^\infty _c((0,+\infty ))\) with \(\mathrm {supp}[\varphi ]\subset (\tau ,+\infty )\) for some \(\tau >0\),

by Fubini’s theorem; so \(\mu _u\in \mathrm {BV}_{\mathrm {loc}}((0,+\infty ))\) and \(D\mu _u\) is minus the push-forward of \(\mu \) under u, that is, \(D\mu _u=-u_\sharp \mu \) (cf. [1] 1.70). By (9.2),

for any \(\mathscr {L}^1\)-measurable set A in \((0,+\infty )\). In light of the above, we may identify \(D\mu _u^a=D\mu _u|\!\_((0,+\infty ){\setminus } N)\) and \(D\mu _u^s=D\mu _{u}|\!\_N\). The set of atoms of \(D\mu _{u}\) is defined by \(A:=\{t\in (0,+\infty ):D\mu _u(\{t\})\ne 0\}\). For \(t>0\),

and this entails (v). The monotone function \(\mu _u\) is a good representative within its equivalence class and is differentiable \(\mathscr {L}^1\)-a.e. on \((0,+\infty )\) with derivative given by the density of \(D\mu _{u}\) with respect to \(\mathscr {L}^1\) by [1] Theorem 3.28. Item (vi) follows from (9.2) and (iii). Item (vii) follows from (ii).\(\square \)
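The derivative formula in Lemma 9.1 (vi) can be checked numerically on a simple example. The sketch below assumes that the distribution function is \(\mu _u(t)=\mu (\{u>t\})\) and uses a hypothetical Lipschitz function \(u(x)=(x-3/2)^2\) on [1, 2], chosen only because each level set \(\{u=t\}\) with \(0<t<1/4\) consists of two points. The names `mu_u`, `mu_u_prime_formula` and `mu_u_prime_fd` are ours.

```python
import math

A, B = 1.0, 2.0  # hypothetical interval [a, b]


def u(x):
    """Hypothetical Lipschitz function on [A, B]."""
    return (x - 1.5) ** 2


def du(x):
    """Derivative of u."""
    return 2.0 * (x - 1.5)


def mu_u(t):
    """mu({u > t}) for 0 < t < 1/4, where mu(dx) = dx / x.

    For this u, {u > t} = [A, 1.5 - r) U (1.5 + r, B] with r = sqrt(t).
    """
    r = math.sqrt(t)
    return math.log((1.5 - r) / A) + math.log(B / (1.5 + r))


def mu_u_prime_formula(t):
    """Right-hand side of (vi): minus the sum of 1 / (|u'(x)| x) over {u = t}."""
    r = math.sqrt(t)
    level_set = [1.5 - r, 1.5 + r]
    return -sum(1.0 / (abs(du(x)) * x) for x in level_set)


def mu_u_prime_fd(t, h=1e-6):
    """Central finite-difference approximation of the derivative of mu_u."""
    return (mu_u(t + h) - mu_u(t - h)) / (2.0 * h)


if __name__ == "__main__":
    for t in (0.05, 0.1, 0.2):
        print(t, mu_u_prime_formula(t), mu_u_prime_fd(t))
```

The integral against \(d\mathscr {H}^0/\tau \) reduces here to a finite sum over the two preimages of t, each weighted by \(1/\tau \); the finite-difference quotient should agree with it up to discretisation error.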

Let \(0<a<b<+\infty \) and \(\rho \ge 0\) be a non-decreasing bounded function on [a, b]. Let \(\eta \in \{\pm 1\}^2\). We study solutions to the first-order linear ordinary differential equation

where \(u\in C^{0,1}([a,b])\) and \(\lambda \in \mathbb {R}\). In case \(\rho \equiv 0\) on [a, b] we use the notation \(u_0\).

Lemma 9.2

Let \(0<a<b<+\infty \) and \(\rho \ge 0\) be a non-decreasing bounded function on [a, b]. Let \(\eta \in \{\pm 1\}^2\). Then

(i) there exists a solution \((u,\lambda )\) of (9.4) with \(u\in C^{0,1}([a,b])\) and \(\lambda =\lambda _\eta \in \mathbb {R}\);

(ii) the pair \((u,\lambda )\) in (i) is unique;

(iii) \(\lambda _\eta \) is given by

$$\begin{aligned} -\lambda _{(1,1)}=\lambda _{(-1,-1)}=m;\,\lambda _{(1,-1)}=-\lambda _{(-1,1)}=\hat{m}; \end{aligned}$$

(iv) if \(\eta =(1,1)\) or \(\eta =(-1,-1)\) then u is uniformly bounded away from zero on [a, b].

Proof

(i) For \(\eta =(1,1)\) define \(u:[a,b]\rightarrow \mathbb {R}\) by

with m as in (8.4). Then \(u\in C^{0,1}([a,b])\) and satisfies (9.4) with \(\lambda =-m\). For \(\eta =(1,-1)\) set \(u=(-\hat{m}\int _a^\cdot g\,ds+g(a))/g\) with \(\lambda =\hat{m}\). The cases \(\eta =(-1,-1)\) and \(\eta =(-1,1)\) can be dealt with using linearity. (ii) We consider the case \(\eta =(1,1)\). Suppose that \((u_1,\lambda _1)\) resp. \((u_2,\lambda _2)\) solve (9.4). By linearity \(u:=u_1-u_2\) solves

where \(\lambda =\lambda _1-\lambda _2\). An integration gives that \(u=(-\lambda \int _a^\cdot g\,ds+c)/g\) for some constant \(c\in \mathbb {R}\) and the boundary conditions entail that \(\lambda =c=0\). The other cases are similar. (iii) follows as in (i). (iv) If \(\eta =(1,1)\) then \(u>0\) on [a, b] from (9.5) as \(m>0\). \(\square \)
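The integrating-factor construction in the proof can be illustrated numerically. The sketch below rests on assumptions that should be checked against (9.4) and Section 8: that (9.4) reads \(u^\prime +(1/x+\rho )u+\lambda =0\) with \(u(a)=\eta _1\), \(u(b)=\eta _2\); that \(g(x)=xe^{h(x)}\) with \(h^\prime =\rho \); and that the constants are the values forced by the boundary conditions. The choice \(\rho (x)=x\) on [1, 2] is hypothetical and is made only so that \(\int g\) has a closed form.

```python
import math

A, B = 1.0, 2.0  # hypothetical interval [a, b]


def rho(x):
    """Hypothetical non-decreasing, non-negative rho."""
    return x


def E(x):
    """e^{h(x)} with h(x) = x^2 / 2, so that h' = rho."""
    return math.exp(0.5 * x * x)


def g(x):
    """Assumed integrating factor g(x) = x e^{h(x)}; g'/g = 1/x + rho."""
    return x * E(x)


def G(x):
    """Closed form of the integral of g over [A, x]."""
    return E(x) - E(A)


# Constants forced by the boundary conditions (assumed to agree with m, m-hat).
M_HAT = (g(A) + g(B)) / G(B)  # gives u(B) = -1 when eta = (1, -1)
M = (g(B) - g(A)) / G(B)      # gives u(B) = +1 when eta = (1, 1)


def u_mixed(x):
    """Solution for eta = (1, -1), with lambda = M_HAT."""
    return (-M_HAT * G(x) + g(A)) / g(x)


def u_same(x):
    """Solution for eta = (1, 1), with lambda = -M."""
    return (M * G(x) + g(A)) / g(x)


def residual(u, lam, x, h=1e-6):
    """Finite-difference residual of u' + (1/x + rho) u + lambda at x."""
    du = (u(x + h) - u(x - h)) / (2.0 * h)
    return du + (1.0 / x + rho(x)) * u(x) + lam


if __name__ == "__main__":
    print(u_mixed(A), u_mixed(B), residual(u_mixed, M_HAT, 1.5))
    print(u_same(A), u_same(B), residual(u_same, -M, 1.5))
```

Under these assumptions \((gu)^\prime =-\lambda g\), which is the identity integrated in the proof; the residuals should vanish up to discretisation error and `u_same` stays positive, in line with item (iv).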

The boundary condition \(\eta _1\eta _2=-1\).

Lemma 9.3

Let \(0<a<b<+\infty \) and \(\rho \ge 0\) be a non-decreasing bounded function on [a, b]. Let \((u,\lambda )\) solve (9.4) with \(\eta =(1,-1)\). Then

(i) there exists a unique \(c\in (a,b)\) with \(u(c)=0\);

(ii) \(u^\prime <0\) a.e. on [a, c] and u is strictly decreasing on [a, c];

(iii) \(D\mu _u^s=0\).

Proof

(i) We first observe that \(u^\prime \le -\hat{m}<0\) a.e. on \(\{u\ge 0\}\) in view of (9.4). Suppose \(u(c_1)=u(c_2)=0\) for some \(c_1,c_2\in (a,b)\) with \(c_1<c_2\). We may assume that \(u\ge 0\) on \([c_1,c_2]\). This contradicts the above observation. Item (ii) is plain. (iii) For any \(\mathscr {L}^1\)-measurable set B in \((0,+\infty )\), \(D\mu _u^s(B)=\mu (\{u\in B\}\cap Z)=0\) using Lemma 9.1 and (ii). \(\square \)

Lemma 9.4

Let \(0<a<b<+\infty \) and \(\rho \ge 0\) be a non-decreasing bounded function on [a, b]. Let \((u,\lambda )\) solve (9.4) with \(\eta =(1,-1)\). Assume that

(a) u is differentiable at both a and b and that (9.4) holds there;

(b) \(u^\prime (a)<0\) and \(u^\prime (b)<0\);

(c) \(\rho \) is differentiable at a and b.

Put \(v:=-u\). Then

(i) \(\int _{\{v=1\}{\setminus } Z_v}\frac{1}{|v^\prime |}\frac{d\mathscr {H}^0}{\tau } \ge \int _{\{u=1\}{\setminus } Z_u}\frac{1}{|u^\prime |}\frac{d\mathscr {H}^0}{\tau }\);

(ii) equality holds if and only if \(\rho \equiv 0\) on [a, b).

Proof

First, \(\{u=1\}=\{a\}\) by Lemma 9.3. Further \(0<-au^\prime (a)=1+a[\hat{m}+\rho (a)]\) from (9.4). On the other hand \(\{v=1\}\supset \{b\}\) and \(0<bv^\prime (b)=-1+b[\hat{m}-\rho (b)]\). Thus

By Theorem 8.5, \(0\le 2+(a-b)\hat{m}+a\rho (a)+b\rho (b)\), noting that \(\rho (a)=\rho (a+)\) in virtue of (c) and similarly at b. A rearrangement leads to the inequality. The equality assertion follows from Theorem 8.5. \(\square \)

Theorem 9.5

Let \(0<a<b<+\infty \) and \(\rho \ge 0\) be a non-decreasing bounded function on [a, b]. Suppose that \((u,\lambda )\) solves (9.4) with \(\eta =(1,-1)\) and set \(v:=-u\). Assume that \(u>-1\) on [a, b). Then

(i) \(-\mu _v^\prime \ge -\mu _u^\prime \) for \(\mathscr {L}^1\)-a.e. \(t\in (0,1)\);

(ii) if \(\rho \not \equiv 0\) on [a, b) then there exists \(t_0\in (0,1)\) such that \(-\mu _v^\prime >-\mu _u^\prime \) for \(\mathscr {L}^1\)-a.e. \(t\in (t_0,1)\);

(iii) for \(t\in [-1,1]\),

$$\begin{aligned} \mu _{u_0}(t)=\log \Big \{\frac{-(b-a)t+\sqrt{(b-a)^2t^2+4ab}}{2a}\Big \} \end{aligned}$$

and \(\mu _{v_0}=\mu _{u_0}\) on \([-1,1]\);

in obvious notation.

Proof

(i) The set

(in obvious notation) is a null set in [a, b] and likewise for \(Y_v\). By [1] Lemma 2.95 and Lemma 2.96, \(\{u=t\}\cap (Y_u\cup Z_{1,u})=\emptyset \) for a.e. \(t\in (0,1)\) and likewise for the function v. Let \(t\in (0,1)\) and assume that \(\{u=t\}\cap (Y_u\cup Z_{1,u})=\emptyset \) and \(\{v=t\}\cap (Y_v\cup Z_{1,v})=\emptyset \). Put \(c:=\max \{u\ge t\}\). Then \(c\in (a,b)\) and \(\{u>t\}=[a,c)\) by Lemma 9.3, and u is differentiable at c with \(u^\prime (c)<0\). Put \(d:=\max \{v\le t\}=\max \{u\ge -t\}\). As u is continuous on [a, b] it holds that \(a<c<d<b\). Moreover, \(u^\prime (d)<0\) as \(v(d)=t\) and \(d\not \in Z_v\). Put \(\widetilde{u}:=u/t\) and \(\widetilde{v}:=v/t\) on [c, d]. Then

By Lemma 9.4,

By Lemma 9.1,

for \(\mathscr {L}^1\)-a.e. \(t\in (0,1)\) and a similar formula holds for v. The assertion in (i) follows.

(ii) Assume that \(\rho \not \equiv 0\) on [a, b). Put \(\alpha :=\inf \{\rho >0\}\in [a,b)\). Note that \(\max \{v\le t\}\rightarrow b\) as \(t\uparrow 1\), because \(v<1\) on [a, b) by assumption. Choose \(t_0\in (0,1)\) such that \(\max \{v\le t_0\}>\alpha \). Then for \(t>t_0\),

that is, the interval [c, d] with c, d as described above intersects \((\alpha ,b]\). So for \(\mathscr {L}^1\)-a.e. \(t\in (t_0,1)\),

by the equality condition in Lemma 9.4. The conclusion follows from the representation of \(\mu _u\) resp. \(\mu _v\) in Lemma 9.1.

(iii) A direct computation gives

for \(\tau \in [a,b]\); \(u_0\) is strictly decreasing on its domain. This leads to the formula in (iii). A similar computation gives

for \(t\in [-1,1]\). Rationalising the denominator results in the stated equality. \(\square \)
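The closed form in (iii) can be compared with a direct numerical evaluation of \(\mu (\{u_0>t\})\). The sketch below assumes that, for \(\rho \equiv 0\), equation (9.4) reduces to \(u^\prime +u/x+\lambda =0\) with \(u(a)=1\) and \(u(b)=-1\), which integrates to \(u_0(\tau )=(ab-\tau ^2)/((b-a)\tau )\); it also assumes \(\mu _u(t)=\mu (\{u>t\})\). The values a = 1, b = 3 and the helper names are hypothetical.

```python
import math


def u0(x, a, b):
    """Assumed explicit solution of (9.4) for rho = 0 and eta = (1, -1)."""
    return (a * b - x * x) / ((b - a) * x)


def mu_u0_closed(t, a, b):
    """Closed form of the distribution function stated in Theorem 9.5 (iii)."""
    s = math.sqrt((b - a) ** 2 * t * t + 4.0 * a * b)
    return math.log((-(b - a) * t + s) / (2.0 * a))


def mu_numeric(level_fn, t, a, b, n=200_000):
    """Midpoint-rule approximation of mu({level_fn > t}) with mu(dx) = dx / x."""
    h = (b - a) / n
    total = 0.0
    for i in range(n):
        x = a + (i + 0.5) * h
        if level_fn(x) > t:
            total += h / x
    return total


if __name__ == "__main__":
    a, b = 1.0, 3.0
    for t in (0.2, 0.5, 0.8):
        mu_u = mu_numeric(lambda x: u0(x, a, b), t, a, b)
        mu_v = mu_numeric(lambda x: -u0(x, a, b), t, a, b)
        print(t, mu_u0_closed(t, a, b), mu_u, mu_v)
```

Both numerical columns should agree with the closed form up to the grid resolution, reflecting the identity \(\mu _{v_0}=\mu _{u_0}\).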

Corollary 9.6

Let \(0<a<b<+\infty \) and \(\rho \ge 0\) be a non-decreasing bounded function on [a, b]. Suppose that \((u,\lambda )\) solves (9.4) with \(\eta =(1,-1)\) and set \(v:=-u\). Assume that \(u>-1\) on [a, b). Then

(i) \(\mu _u(t)\le \mu _v(t)\) for each \(t\in (0,1)\);

(ii) if \(\rho \not \equiv 0\) on [a, b) then \(\mu _u(t)<\mu _v(t)\) for each \(t\in (0,1)\).

Proof

(i) By [1] Theorem 3.28 and Lemma 9.3,

for each \(t\in (0,1)\) as \(\mu _u(1)=0\). On the other hand,

for each \(t\in (0,1)\). The claim follows from Theorem 9.5 noting that \(D\mu _v^s((t,1])\le 0\) as can be seen from Lemma 9.1. Item (ii) follows from Theorem 9.5 (ii). \(\square \)
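The strict inequality in (ii) can be observed on a concrete example. The sketch below assumes that (9.4) reads \(u^\prime +(1/x+\rho )u+\lambda =0\) with \(u(a)=1\), \(u(b)=-1\), that \(\mu _u(t)=\mu (\{u>t\})\), and takes the hypothetical data \(\rho (x)=x\) on [1, 2]. For this choice u is strictly decreasing on the whole interval, so \(\{u>t\}=[a,c)\) and \(\{v>t\}=(d,b]\) and both distribution functions reduce to logarithms of crossing points found by bisection.

```python
import math

A, B = 1.0, 2.0  # hypothetical interval [a, b]


def E(x):
    """e^{h(x)} with h(x) = x^2 / 2, i.e. rho(x) = x."""
    return math.exp(0.5 * x * x)


def g(x):
    """Assumed integrating factor g(x) = x e^{h(x)}."""
    return x * E(x)


# Constant forced by u(A) = 1 and u(B) = -1.
M_HAT = (g(A) + g(B)) / (E(B) - E(A))


def u(x):
    """Solution of the assumed form of (9.4) with eta = (1, -1)."""
    return (-M_HAT * (E(x) - E(A)) + g(A)) / g(x)


def crossing(level, lo=A, hi=B, iters=200):
    """Bisection for the point where the decreasing function u equals level."""
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if u(mid) > level:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def mu_u(t):
    """mu({u > t}) = log(c / A), where u(c) = t."""
    return math.log(crossing(t) / A)


def mu_v(t):
    """mu({v > t}) = mu({u < -t}) = log(B / d), where u(d) = -t."""
    return math.log(B / crossing(-t))


if __name__ == "__main__":
    for t in (0.1, 0.3, 0.5, 0.7, 0.9):
        print(t, mu_u(t), mu_v(t))
```

In this example the set where u is negative carries more \(\mu \)-mass at every level than the set where u is positive, as the corollary predicts for \(\rho \not \equiv 0\).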

Corollary 9.7

Let \(0<a<b<+\infty \) and \(\rho \ge 0\) be a non-decreasing bounded function on [a, b]. Suppose that \((u,\lambda )\) solves (9.4) with \(\eta =(1,-1)\). Assume that \(u>-1\) on [a, b). Let \(\varphi \in C^1((-1,1))\) be an odd strictly increasing function with \(\varphi \in L^1((-1,1))\). Then

(i) \(\int _{\{u>0\}}\varphi (u)\,d\mu <+\infty \);

(ii) \(\int _a^b\varphi (u)\,d\mu \le 0\);

(iii) equality holds in (ii) if and only if \(\rho \equiv 0\) on [a, b).

In particular,

(iv) \(\int _a^b\frac{u}{\sqrt{1-u^2}}\,d\mu \le 0\) with equality if and only if \(\rho \equiv 0\) on [a, b).

Proof

(i) Put \(I:=\{1>u>0\}\). The function \(u:I\rightarrow (0,1)\) is \(C^{0,1}\) and \(u^\prime \le -\hat{m}\) a.e. on I by Lemma 9.3. It has a \(C^{0,1}\) inverse \(v:(0,1)\rightarrow I\) with \(v^\prime =1/(u^\prime \circ v)\) and \(|v^\prime |\le 1/\hat{m}\) a.e. on (0, 1). By a change of variables,

from which the claim is apparent. (ii) The integral is well-defined because \(\varphi (u)^+=\varphi (u)\chi _{\{u>0\}}\in L^1((a,b),\mu )\) by (i). By Lemma 9.3 the set \(\{u=0\}\) consists of a singleton and has \(\mu \)-measure zero. So

where \(v:=-u\) as \(\varphi \) is an odd function. We remark that in a similar way to (9.3),

using oddness of \(\varphi \) and an analogous formula holds with v in place of u. Thus we may write

by Corollary 9.6 as \(\varphi ^\prime >0\) on (0, 1). (iii) Suppose that \(\rho \not \equiv 0\) on [a, b). Then strict inequality holds in the above by Corollary 9.6. If \(\rho \equiv 0\) on [a, b) the equality follows from Theorem 9.5. (iv) follows from (ii) and (iii) with the particular choice \(\varphi :(-1,1)\rightarrow \mathbb {R};t\mapsto t/\sqrt{1-t^2}\). \(\square \)
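Item (iv) can be illustrated numerically in both the equality and the strict case. The sketch below assumes that (9.4) reads \(u^\prime +(1/x+\rho )u+\lambda =0\) with \(u(a)=1\), \(u(b)=-1\), and compares \(\rho \equiv 0\) with the hypothetical choice \(\rho (x)=x\) on [1, 2]. The substitution \(s=\log x\) turns \(\mu (dx)=dx/x\) into ds; a midpoint rule is used so that the integrable endpoint singularities of \(t\mapsto t/\sqrt{1-t^2}\) are never evaluated.

```python
import math

A, B = 1.0, 2.0  # hypothetical interval [a, b]


def make_u(rho_is_zero):
    """Return the solution u of the assumed form of (9.4) with eta = (1, -1)."""
    if rho_is_zero:
        # rho = 0: u_0(x) = (ab - x^2) / ((b - a) x).
        return lambda x: (A * B - x * x) / ((B - A) * x)

    def E(x):
        # e^{h(x)} with h(x) = x^2 / 2, i.e. rho(x) = x.
        return math.exp(0.5 * x * x)

    def g(x):
        # Assumed integrating factor g(x) = x e^{h(x)}.
        return x * E(x)

    m_hat = (g(A) + g(B)) / (E(B) - E(A))
    return lambda x: (-m_hat * (E(x) - E(A)) + g(A)) / g(x)


def weighted_integral(u, n=200_000):
    """Midpoint rule for the integral of u / sqrt(1 - u^2) with respect to mu.

    The grid is uniform in s = log x, so that mu(dx) = dx / x becomes ds.
    """
    s0, s1 = math.log(A), math.log(B)
    h = (s1 - s0) / n
    total = 0.0
    for i in range(n):
        x = math.exp(s0 + (i + 0.5) * h)
        val = u(x)
        total += val / math.sqrt(1.0 - val * val) * h
    return total


if __name__ == "__main__":
    print("rho = 0 :", weighted_integral(make_u(True)))
    print("rho = x :", weighted_integral(make_u(False)))
```

For \(\rho \equiv 0\) the function \(u_0\) is odd under the reflection \(x\mapsto ab/x\), which the logarithmic grid respects, so the sum cancels up to rounding; for \(\rho (x)=x\) the integral is clearly negative.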

The boundary condition \(\eta _1\eta _2=1\). Let \(0<a<b<+\infty \) and \(\rho \ge 0\) be a non-decreasing bounded function on [a, b]. We study solutions of the auxiliary Riccati equation

with \(w\in C^{0,1}([a,b])\) and \(\lambda \in \mathbb {R}\). If \(\rho \equiv 0\) on [a, b] then we write \(w_0\) instead of w. Suppose \((u,\lambda )\) solves (9.4) with \(\eta =(1,1)\). Then \(u>0\) on [a, b] by Lemma 9.2 and we may set \(w:=1/u\). Then \((w,-\lambda )\) satisfies (9.6).

Lemma 9.8

Let \(0<a<b<+\infty \) and \(\rho \ge 0\) be a non-decreasing bounded function on [a, b]. Then

(i) there exists a solution \((w,\lambda )\) of (9.6) with \(w\in C^{0,1}([a,b])\) and \(\lambda \in \mathbb {R}\);

(ii) the pair \((w,\lambda )\) in (i) is unique;

(iii) \(\lambda =m\).

Proof

(i) Define \(w:[a,b]\rightarrow \mathbb {R}\) by

Then \(w\in C^{0,1}([a,b])\) and (w, m) satisfies (9.6). (ii) We claim that \(w>0\) on [a, b] for any solution \((w,\lambda )\) of (9.6). For otherwise, \(c:=\min \{w=0\}\in (a,b)\). Then \(u:=1/w\) on [a, c) satisfies

Integrating, we obtain