Abstract

Quantum machine learning has the potential to improve traditional machine learning methods and overcome some of the main limitations imposed by the classical computing paradigm. However, the practical advantages of using quantum resources to solve pattern recognition tasks are still to be demonstrated. This work proposes a universal, efficient framework that can reproduce the output of a wide range of classical supervised machine learning algorithms while exploiting the advantages of quantum computation. The proposed framework is named Multiple Aggregator Quantum Algorithm (MAQA) due to its capability to combine multiple and diverse functions to solve typical supervised learning problems. In its general formulation, MAQA can potentially be adopted as the quantum counterpart of all models that fall into the scheme of aggregation of multiple functions, such as ensemble algorithms and neural networks. From a computational point of view, the proposed framework generates an exponentially large number of different transformations of the input while increasing the depth of the corresponding quantum circuit only linearly. Thus, MAQA produces a model with substantial descriptive power, broadening the horizon of possible applications of quantum machine learning with a computational advantage over classical methods. As a second contribution, we discuss the adoption of the proposed framework as a hybrid quantum–classical algorithm and as a fault-tolerant quantum algorithm.

1 Introduction

Quantum computers are machines that leverage the properties of quantum mechanics to store and process information. There are many different ways to build these devices [1,2,3], and several algorithms have already been tested on real machines [4, 5]. Although a quantum advantage has already been shown in some domains (e.g. quantum chemistry [6] and multi-agent systems [7, 8]), it is still unclear whether quantum computing can be used efficiently in machine learning (ML).

The intersection between ML and quantum computing (QC) is known as quantum machine learning (QML). ML and QC can be combined in two ways. One approach is to run the learning process predominantly on a quantum computer so that the expensive subroutines can be executed efficiently. For this purpose, a rich collection of quantum algorithms for basic linear algebra subroutines has been proposed in the literature [9,10,11]. Some popular examples of this approach are QSVM [12] and QSplines [13], which obtain an exponential speed-up with respect to their classical counterparts. However, the protocols within this category usually assume the availability of a fault-tolerant quantum computer.

Alternatively, variational quantum algorithms can be considered machine learning models that can be trained using hybrid quantum–classical optimisation. In this case, a quantum algorithm is used to make a call to a function that allows estimating the target variable of interest given the input data and a set of rotation parameters [14, 15]. This approach requires a parametrised quantum circuit and a classical optimisation procedure to find the optimal set of parameters for a sequence of quantum gates. Although these techniques represent the most promising attempt to leverage near-term quantum technology, it is still unclear whether they can outperform classical algorithms.

Despite the remarkable success of ML in numerous real-world applications, the ever-increasing size of datasets and the high computational requirements of modern algorithms indicate that the current computational tools will no longer be sufficient in the future. In this work, we propose a novel and efficient quantum framework to reproduce a wide range of machine learning models using quantum computational advantages. The framework is called Multiple Aggregator Quantum Algorithm (MAQA) due to its capability to combine multiple and diverse functions to solve typical supervised learning tasks. Thanks to superposition, entanglement and interference, the MAQA framework can compute the weighted average of an exponentially large number of functions while increasing the depth of the corresponding quantum circuit only linearly. This allows for building quantum models with substantial descriptive power that might become a credible alternative to classical methods in the future.

2 Preliminaries

The objective of a supervised model is to find a useful approximation to the function \(f(x; \theta )\) that underlies the predictive relationship between the input x and output y for a fixed set of parameters \(\theta \). Assuming for simplicity an additive error, the model of interest can be expressed as follows:

\[ y = f(x; \theta ) + \epsilon \tag{1} \]

where \(\epsilon \) is a random variable whose conditional probability distribution given x is centred at zero. Although Eq. (1) provides a general mathematical formulation for supervised learning, several methods do not estimate a single function but explicitly calculate multiple and diverse functions. These functions belong to the same family but differ in either a set of parameters or the training data. In all these cases, the final output results from the weighted average of the estimated functions:

\[ f(x; \theta ) = \sum _{h=1}^{H} \beta _h \, g(x; \theta _h) \tag{2} \]

where \(f(x; \theta )\) is the final output and \(g(x; \cdot )\) describes the function component.

The calculation of \(g(x; \cdot )\) corresponds to a specific transformation of the data x based on \(\theta _h\), whose contribution to the final output is weighted by \(\beta _h\). Estimating a collection of function components produces an extremely flexible model, which is able to approximate the behaviour of complex patterns. Different choices for \(\beta \), \(g(x; \cdot )\) and \(\theta _h\) determine different supervised models commonly adopted in real-world applications.
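The aggregation scheme described above can be sketched in a few lines. This is a minimal illustration only: the component function `g` (a tanh of a linear combination), the parameter values and the weights are hypothetical choices made for the example and do not come from the paper.

```python
import numpy as np

def aggregate(x, component, thetas, betas):
    """Weighted aggregation f(x) = sum_h beta_h * g(x; theta_h)."""
    outputs = np.array([component(x, theta) for theta in thetas])
    return float(np.dot(betas, outputs))

# Hypothetical component function: tanh of a linear combination of the input.
def g(x, theta):
    return np.tanh(np.dot(theta, x))

x = np.array([0.5, -1.0])
thetas = np.array([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])  # H = 3 parameter sets
betas = np.array([0.2, 0.3, 0.5])                        # weights beta_h

f_x = aggregate(x, g, thetas, betas)
print(f_x)
```

Note that the classical cost is linear in the number of components H, since each `g(x; theta_h)` is evaluated explicitly.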

For instance, a single-layer neural network (or Single-Layer Perceptron—SLP) assumes as function component \(g(x; \cdot )\) the activation function \(\sigma _{\text {hidden}}\) applied to the linear combinations \(L(x;\theta _h)\) of the input vector x. In fact, an SLP with H hidden neurons is a two-stage model that takes as input training data x and H sets of linear coefficients and estimates the target variable as follows:

where \(\sigma _{\text {output}}\) is the identity function when the task is function approximation (Footnote 1).

Another classical supervised learning approach that falls into the schema of function aggregation is ensemble learning. In practice, ensemble methods reduce to computing several predictions \(g_{1}(x), g_{2}(x), \dots , g_{H}(x)\) using H different training sets, which are then averaged to obtain a single model:

In this case, the component functions \(g(x;\cdot )\) are weak classification/regression models and the choice of the weights depends on the type of the ensemble in use (boosting, bagging, randomisation).
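As an illustration of the ensemble case, the sketch below averages H base models trained on different bootstrap samples. It assumes a bagging-style ensemble with uniform weights, synthetic data and a least-squares base model; all of these are choices made for the example, not details taken from the paper.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic regression data (an assumption for illustration): y = 2x + noise.
N = 200
X = rng.uniform(-1.0, 1.0, size=(N, 1))
y = 2.0 * X[:, 0] + 0.1 * rng.normal(size=N)

def fit_linear(X_train, y_train):
    """Least-squares fit with intercept; returns a prediction function g_h."""
    A = np.hstack([X_train, np.ones((len(X_train), 1))])
    coef, *_ = np.linalg.lstsq(A, y_train, rcond=None)
    return lambda X_new: np.hstack([X_new, np.ones((len(X_new), 1))]) @ coef

H = 8
models = []
for _ in range(H):
    idx = rng.integers(0, N, size=N)          # bootstrap sample = one training set
    models.append(fit_linear(X[idx], y[idx]))

def f_ens(X_new):
    """Uniformly weighted average of the H base predictions."""
    return np.mean([g_h(X_new) for g_h in models], axis=0)

pred = f_ens(np.array([[0.5]]))
print(pred)
```

Boosting or randomisation would change how the training sets and the weights are chosen, but the final aggregation step keeps the same form.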

Other models that fit into the idea of multiple aggregations are Generalised Additive Models [16], Support Vector Machines and Decision trees [17].

Contribution. In this work, we propose a novel efficient quantum framework to reproduce the idea of machine learning models as function aggregators. The proposed architecture, named Multiple Aggregator Quantum Algorithm (MAQA), can potentially reproduce some of the most important classical supervised learning algorithms while introducing relevant computational advantages. In particular, MAQA propagates an input state to multiple quantum trajectories in superposition, and each trajectory describes a specific function \(g(x; \cdot )\) that represents a component function of the final model. The entanglement between the two quantum registers involved (data and control) allows for efficient averaging of those transformations, and the final result can be accessed by measuring only a subset of qubits. The proposed approach has two main advantages. From a classical perspective, it introduces an exponential scaling in the number of aggregated functions while increasing the time complexity of the corresponding quantum algorithm only linearly. From a quantum perspective, the framework opens the possibility of implementing a wide range of models not yet proposed in the literature. Finally, we discuss the adoption of MAQA to generalise some existing QML algorithms, considering both fault-tolerant settings and hybrid quantum–classical algorithms.

3 Multiple Aggregator Quantum Algorithm (MAQA)

In this section, we describe the MAQA framework that is able to reproduce the classical model expressed in Eq. (2). The algorithm leverages the three main properties of quantum computing (superposition, entanglement and interference) to encode in a quantum state the sum of different input transformations accessible by measuring a single quantum register. The proposed algorithm can potentially reproduce all those models that refer to the idea of functions aggregation and provide attractive computational advantages with respect to the classical counterparts.

The quantum algorithm adopts two quantum registers: data and control. The data register encodes the model’s input data, and the control register is used to generate multiple trajectories in superposition, where each trajectory represents a different transformation of data.

Starting from an n-qubit data register and a d-qubit control register, the Multiple Aggregator Quantum Algorithm (MAQA) involves four main steps: state preparation, multiple trajectories in superposition, transformation via interference and measurement.

(Step 1) State Preparation

State preparation consists of encoding the input in the data register and the initialisation of the control register whose amplitudes depend on a set of parameters \(\beta =\{\beta ^*_i\}_{i=1, \dots , 2^d}\):

We refer to \(S_{x}\) as a quantum routine to encode data into a quantum state, and \(S_{\beta }\) as a routine that transforms a d-qubit register from an all-zero state to a quantum state which depends on a set of parameters \(\beta \). Importantly, the computational cost of this step is not considered classically since any classical algorithm assumes the input x to be directly accessible.
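The state preparation step can be illustrated with a small classical simulation. The sketch below assumes amplitude encoding for \(S_x\) and a product state for the control register (one pair of amplitudes \(a_i, b_i\) per control qubit, as in the Appendix); the function names and the angle parametrisation are hypothetical.

```python
import numpy as np

def amplitude_encode(x):
    """Assumed S_x: map a real vector to a normalised state vector."""
    x = np.asarray(x, dtype=float)
    return x / np.linalg.norm(x)

def control_state(angles):
    """Assumed S_beta: tensor product of d single-qubit states a_i|0> + b_i|1>,
    with a_i = cos(t_i / 2) and b_i = sin(t_i / 2)."""
    state = np.array([1.0])
    for t in angles:
        state = np.kron(state, np.array([np.cos(t / 2), np.sin(t / 2)]))
    return state

x = [3.0, 4.0]                       # n = 1 data qubit
angles = [np.pi / 2, np.pi / 3]      # d = 2 control qubits

beta_star = control_state(angles)    # 2^d amplitudes beta*_h
data = amplitude_encode(x)           # |x>
phi0 = np.kron(beta_star, data)      # joint state: control (x) data

print(np.round(beta_star ** 2, 4))   # weights beta_h = |beta*_h|^2
```

The squared amplitudes of the control register are the weights that later appear in the weighted average, so they sum to one by construction.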

(Step 2) Multiple Trajectories in Superposition

The second step generates \(2^d\) different transformations of the input data in superposition, each entangled with a possible state of the control register. Each quantum state of the superposition encodes a specific transformation of the data that depends on a set of parameters \(\varTheta _h\). To this end, a unitary \(G(\theta _1, \dots , \theta _{2^d})\) that performs the following operation is assumed (Footnote 2):

where the implementation of \(G(\theta _1, \dots , \theta _{2^d})\) can be accomplished in only d steps. Each step consists in the entanglement of the ith \((i=1, \dots d)\) control qubit with two transformations \(G\left( \theta _{i,1}\right) \) and \(G\left( \theta _{i,2}\right) \) of \(\vert {x} \rangle \) based on two sets of parameters, \(\theta _{i, 1}\) and \(\theta _{i, 2}\). Let us consider a unitary \(G\left( \theta _{i,j}\right) \) that implements the transformation \(l\left( x; \theta _{i,j}\right) \). The most straightforward way to obtain the quantum state in Eq. (6) is to apply \(G\left( \theta _{i,j}\right) \) through controlled operations, using as control state the two basis states of the current control qubit. In particular, the generic ith step involves the following two transformations:

First, the controlled unitary \(C^{(1)}G\left( \theta _{i,1}\right) \) is executed to entangle the transformation \(G\left( \theta _{i,1}\right) \vert {x} \rangle \) with the excited state \(\vert {1} \rangle \) of the ith control qubit:

where \(a_i\) and \(b_i\) are the amplitudes of the ith control qubit and \(C^{(1)}G(\theta _{i,1})\) is a controlled operation that uses the excited state of the control qubit \(\vert {c_i} \rangle \) to transform the data register according to the unitary \(G(\theta _{i,1})\).

Then, a second controlled unitary \(C^{(0)}G\left( \theta _{i,2}\right) \) is executed. This time the control state is the \(\vert {0} \rangle \) basis state:

These two transformations are repeated for each qubit in the control register and two different unitaries \(G\left( \theta _{i,1}\right) \) and \(G\left( \theta _{i,2}\right) \) are applied, at each iteration. After d steps, the control and data registers are fully entangled and \(2^d\) different quantum trajectories in superposition are generated. The output of this procedure can be expressed as follows:

where \(G\left( \varTheta _h\right) \) results from the product of d unitary matrices \(G\left( \theta _{i,j}\right) \) and represents a single quantum trajectory. Each trajectory differs from the others in at least one unitary \(G\left( \theta _{i,j}\right) \) (Footnote 3).

When discussing specific implementations of QML algorithms (Sects. 4.1 and 4.2), we will see that, from a computational point of view, the possibility of generating \(2^d\) different transformations in only d steps potentially leads to an exponential scaling of the number of component functions with respect to classical methods, assuming an efficient implementation of the \(C^{(j)}G(\theta _{i,j})\).
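The d-step construction can be checked numerically on a small instance. The following sketch is a classical state-vector simulation under stated assumptions: a single data qubit, \(R_y\) rotations as stand-ins for the unitaries \(G(\theta _{i,j})\), and a product-state control register. It applies the 2d controlled gates and compares the result with the \(2^d\) trajectories built explicitly. The simulation itself costs exponential memory; it is meant only to verify the algebra, not to reflect the quantum cost.

```python
import functools
import itertools
import numpy as np

def ry(t):
    """Single-qubit Y rotation, used here as a stand-in for G(theta)."""
    c, s = np.cos(t / 2), np.sin(t / 2)
    return np.array([[c, -s], [s, c]])

def controlled(G, qubit, value, d):
    """Apply G to the data register when control `qubit` is in state |value>."""
    P = np.zeros((2, 2))
    P[value, value] = 1.0
    on = [np.eye(2)] * d
    off = [np.eye(2)] * d
    on[qubit] = P
    off[qubit] = np.eye(2) - P
    kron_all = lambda ops: functools.reduce(np.kron, ops)
    return np.kron(kron_all(on), G) + np.kron(kron_all(off), np.eye(G.shape[0]))

d = 3
rng = np.random.default_rng(0)
theta = rng.uniform(0, 2 * np.pi, size=(d, 2))  # theta[i, 0] -> G_{i,1}, theta[i, 1] -> G_{i,2}

# Control register as a product state; qubit i has amplitudes (a_i, b_i).
angles = rng.uniform(0, np.pi, size=d)
control = functools.reduce(
    np.kron, [np.array([np.cos(a / 2), np.sin(a / 2)]) for a in angles]
)
x = np.array([0.6, 0.8])                        # normalised data state |x>

state = np.kron(control, x)
for i in range(d):                              # d steps, two controlled gates each
    state = controlled(ry(theta[i, 0]), i, 1, d) @ state  # C^(1) G_{i,1}
    state = controlled(ry(theta[i, 1]), i, 0, d) @ state  # C^(0) G_{i,2}

# Reference: build each of the 2^d trajectories explicitly.
expected = np.zeros_like(state)
for h, bits in enumerate(itertools.product((0, 1), repeat=d)):
    G_h = np.eye(2)
    for i, bit in enumerate(bits):
        G_h = ry(theta[i, 0] if bit == 1 else theta[i, 1]) @ G_h
    expected[2 * h: 2 * h + 2] = control[h] * (G_h @ x)

print(np.allclose(state, expected))
```

Only 2d = 6 controlled gates are applied, yet the resulting state contains \(2^d = 8\) distinct transformations of \(\vert x\rangle\), each weighted by the amplitude of the corresponding control basis state.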

(Step 3) Transformation via Interference

Once multiple transformations \(l(x; \varTheta _h)\) of the input have been generated in superposition, the third step consists of transforming the data register through a generic quantum gate F that works via interference:

where \(H=2^d\). In Eq. (10), the assumption is that the sequential application of \(G(\varTheta _h)\) and F to the quantum state \(\vert {x} \rangle \) is equivalent to applying the function \(g_h^*\) to the input x. At this point, different values of the function \(g_h^*\) are entangled with different states of the control register.

It is important to notice that a single execution of F allows the computation of the function \(g_h^*\) for all the quantum trajectories in superposition. This is extremely useful when, during the computation, the same operations need to be applied to multiple inputs (e.g. when the activation function is applied to a huge number of neurons or in the case of ensemble learning, where the same classifier has to be executed to different sub-samples of the training set).
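The statement that one execution of F acts on every trajectory can be verified with a small simulation. The sketch below assumes a single data qubit, random trajectory states standing in for \(G(\varTheta _h)\vert x\rangle\), uniform control amplitudes and a Hadamard gate as a placeholder for F; all of these are illustrative choices.

```python
import numpy as np

rng = np.random.default_rng(2)
d = 2
H = 2 ** d

beta_star = np.full(H, 1 / np.sqrt(H))            # uniform control amplitudes

# Hypothetical trajectory states G(Theta_h)|x>, one row per h.
traj = rng.normal(size=(H, 2))
traj /= np.linalg.norm(traj, axis=1, keepdims=True)

# Joint state sum_h beta*_h |h> (x) G(Theta_h)|x>
state = (beta_star[:, None] * traj).reshape(-1)

F = np.array([[1.0, 1.0], [1.0, -1.0]]) / np.sqrt(2)  # placeholder for F

# One application of F on the data register only.
after = np.kron(np.eye(H), F) @ state

# Classical counterpart: apply F to each of the H trajectories separately.
per_traj = (beta_star[:, None] * (traj @ F.T)).reshape(-1)

print(np.allclose(after, per_traj))
```

In the circuit, `np.kron(np.eye(H), F)` corresponds to a single gate F on the data register, whereas the classical counterpart loops over all H trajectories.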

(Step 4) Measurement

The last step consists of measuring the data register, leaving the control register untouched:

where \(g\left( x; \varTheta _h\right) =\langle {g^*_h}\vert M \vert {g^*_h}\rangle \) for a measurement operator M, \(\beta _h = |\beta ^*_h|^2\) with \(\sum _h \beta _h =1\) and \(H=2^d\).

The expectation value \(\left\langle M \right\rangle \) stores the weighted average of the \(2^d\) functions \(g\left( x; \varTheta _h\right) \), which is accessible by measuring the data register. While extracting the single contribution \(g\left( x; \varTheta _h\right) \) would require an exponential number of measurements (since those values are in the superposition of \(2^d\) possible basis states), in a classical supervised learning scenario the measure of interest is the weighted average of all the functions which can be directly accessed by measuring the data register and leaving intact the control register.
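The identity behind the measurement step can be checked numerically. The sketch below assumes random normalised amplitudes \(\beta ^*_h\), random normalised data states \(\vert g^*_h\rangle\) on one qubit and the Pauli-Z operator as M; these are illustrative choices. It compares the expectation value of M on the data register with the weighted average \(\sum _h \beta _h \, g(x; \varTheta _h)\).

```python
import numpy as np

rng = np.random.default_rng(1)
d, n = 3, 1
H = 2 ** d

# Random normalised control amplitudes beta*_h (complex in general).
beta_star = rng.normal(size=H) + 1j * rng.normal(size=H)
beta_star /= np.linalg.norm(beta_star)

# Random normalised data states |g*_h>, one row per trajectory.
g = rng.normal(size=(H, 2 ** n)) + 1j * rng.normal(size=(H, 2 ** n))
g /= np.linalg.norm(g, axis=1, keepdims=True)

# Joint state sum_h beta*_h |h> (x) |g*_h>
state = (beta_star[:, None] * g).reshape(-1)

M = np.diag([1.0, -1.0])                          # Pauli-Z on the data qubit
expect_direct = np.vdot(state, np.kron(np.eye(H), M) @ state).real

beta = np.abs(beta_star) ** 2                     # weights beta_h
g_vals = np.einsum("hi,ij,hj->h", g.conj(), M, g).real  # <g*_h|M|g*_h>
expect_avg = float(beta @ g_vals)

print(expect_direct, expect_avg)
```

The two numbers agree because the control basis states are orthogonal, so the cross terms between different trajectories vanish when only the data register is measured.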

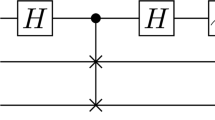

To summarise, the proposed architecture allows calculating the aggregation of multiple and diverse functions described in Eq. (2) using a quantum algorithm. In particular, it is possible to access the final result by measuring only the data register while obtaining the weighted average of \(2^d\) different transformations \(g(x;\cdot )\) of the input data x, where d is the size of the control register. Properly specifying \(S_{\beta }\), \(S_x\), \(\{G\left( \theta _{i,1}\right) , G\left( \theta _{i,2}\right) \}_{i=1, \dots , d}\) and F potentially allows reproducing the quantum version of all the ML algorithms discussed in Sect. 2. Furthermore, the framework is generic and can be adopted for both hybrid and fault-tolerant quantum computation. The quantum circuit for implementing MAQA is depicted in Fig. 1.

4 Discussion

As shown in the previous section, the MAQA allows obtaining a quantum state that reproduces the idea of ML models as aggregators of functions using the properties of quantum computing. From a classical ML perspective, relevant computational advantages are introduced. Given \(2^d\) component functions, any classical method that leverages the idea of functions aggregation scales linearly in \(2^d\) since it is necessary to compute those functions explicitly to obtain the overall average. Furthermore, in the worst-case scenario, each component function has to process all available data; this implies a linear cost in the training set size multiplied by \(2^d\). Using big-\(\mathcal {O}\) notation, given a dataset \((x_i,y_i)\) for \(i=1, \dots N\), where \(x_i\) is a p-dimensional vector, and \(y_i\) is the target variable of interest, the overall time complexity of a model based on the aggregation of \(2^d\) functions is:

In contrast, MAQA generates a superposition of \(2^d\) different transformations of the input in only d steps since the single transformations are not computed directly, but they result from the combination of different unitaries \(G(\theta _{i,j})\). Then, once the quantum state in Eq. (10) is generated, any operation (unitary F) is propagated to all the quantum trajectories with a single execution. Using big-\(\mathcal {O}\) notation, the time complexity of implementing the MAQA is:

where \(C_G\) is the cost of the controlled operation \(C^{(j)}G(\theta )\) and \(C_F\) is the cost of F. Note that the number of different functions grows exponentially with respect to the parameter d, which has a linear impact on the overall time complexity. This means that it is possible to generate an exponentially large number of different transformations of the input while obtaining their average efficiently, at the cost of increasing the depth of the corresponding quantum circuit linearly by a factor of \(2C_G\).
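A short worked example makes the scaling concrete. The cost expression below is a reading of the description above (two controlled unitaries per control qubit plus one application of F), not a formula quoted from the paper, and the symbol \(T_{\text{MAQA}}\) is introduced here only for illustration.

```latex
% Assumed cost model: 2 controlled unitaries per control qubit, one application of F.
T_{\text{MAQA}}(d) = 2\,d\,C_G + C_F ,
\qquad
H = 2^{d} \ \text{aggregated functions.}

% Example with d = 10 control qubits:
H = 2^{10} = 1024 ,
\qquad
T_{\text{MAQA}}(10) = 20\,C_G + C_F .

% Adding one control qubit (d \to d+1) doubles H and adds 2 C_G to the circuit depth:
T_{\text{MAQA}}(d+1) - T_{\text{MAQA}}(d) = 2\,C_G .
```

Under the same reading, a classical method evaluating the 1024 component functions explicitly would incur a cost proportional to 1024 function evaluations.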

However, these advantages come with some compromises. The first concerns the assumption about the nature of the operator \(G(\theta _{i,j})\): MAQA assumes that the product of the \(G(\theta _{i,j})\) for \(i=1, \dots , d\) produces a quantum gate \( G\left( \varTheta _{k}\right) \):

In practice, this means that multiple applications of the unitaries that depend on some set of parameters \(\theta _{i,j}\) result in a single transformation of the same nature that depends on a derived set of parameters \(\varTheta _k\). Although any quantum circuit can be expressed as the product of different unitary matrices, the design of these gates in the context of supervised learning needs to be accomplished such that the final measurement provides the target variable of interest.
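One simple gate family that satisfies this closure assumption is the set of single-qubit \(R_y\) rotations, whose product is again an \(R_y\) rotation with the summed angle. The sketch below checks this numerically; the choice of \(R_y\) and the angle values are illustrative assumptions, not the gates prescribed by the framework.

```python
import numpy as np

def ry(t):
    """Single-qubit Y rotation R_y(t)."""
    c, s = np.cos(t / 2), np.sin(t / 2)
    return np.array([[c, -s], [s, c]])

theta = [0.3, -1.1, 2.0]      # one angle per step i = 1..d along a given trajectory

product = np.eye(2)
for t in theta:
    product = ry(t) @ product  # later steps act on the left

derived = ry(sum(theta))       # same family, derived parameter Theta_k = sum of angles

print(np.allclose(product, derived))
```

For more general parametrised gates, the derived parameters \(\varTheta _k\) are usually not a simple sum, which is why the design of the gates has to be tailored to the learning task.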

Finally, when comparing classical and quantum algorithms, it is important to consider that quantum computation introduces a new complexity class, the Bounded-error Quantum Polynomial time, representing the class of problems solvable in polynomial time by an innately probabilistic quantum Turing machine [18]. Nevertheless, quantum algorithms need to be evaluated in terms of gate complexity. Thus, it is necessary that the exponential scaling introduced with respect to d is preserved when considering a specific QML model.

4.1 MAQA as quantum–classical hybrid algorithm

Recently, the idea of aggregating two different unitary operators to reproduce the output of a two-neuron single-layer neural network via a quantum circuit has been proposed (qSLP) [19]. Since multiple aggregations are the basis of both qSLP and MAQA, the latter can be seen as a natural extension of the former with an exponentially large number of neurons in the hidden layer. In fact, the entanglement between the control and data registers implies that the number of linear combinations equals the number of basis states of the control register. This, in turn, implies that the number of hidden neurons H scales exponentially with the number of qubits of the control register, since each hidden neuron is represented by a quantum trajectory. This exponential scaling might enable the construction of a qSLP with an arbitrarily large number of hidden neurons as the number of available qubits increases. In other words, by adopting MAQA to generalise the qSLP, we can build a model with large descriptive power that could act as a universal approximator.

From a computational point of view, given H hidden neurons and L training epochs, the training of a classical SLP scales (at least) linearly in H and L since the output of each hidden neuron has to be calculated explicitly to obtain the final output. Furthermore, if H is too large (a necessary condition for an SLP to be a universal approximator [19, 20]), the problem becomes NP-hard [21]. The adoption of MAQA to generalise the qSLP allows scaling linearly with respect to \(\log _2(H)=d\), thanks to the entanglement between the two quantum registers, which generates an exponentially large number of quantum trajectories in superposition.

However, the main challenge to tackle in the near future for qSLP-MAQA is still the design of a proper activation function, in the sense of the Universal Approximation Theorem, which is one of the significant open issues in building a complete quantum neural network. A recent proposal, QSplines [13], opened the possibility of approximating non-linear activation functions via a quantum algorithm. Even so, QSplines use the HHL algorithm as a subroutine, a fault-tolerant quantum algorithm that cannot be adopted in hybrid computation on NISQ devices.

Nevertheless, it has recently been shown that quantum feature maps combined with function aggregation are able to achieve universal approximation [22]. Thus, a possible direction for future work is to study the qSLP-MAQA on top of a quantum feature map, enabling it to act as a universal function approximator without implementing a non-linear quantum activation function.

4.2 MAQA as fault-tolerant quantum algorithm

Recently, a quantum algorithm that implements the idea of ensemble methods has been proposed [23] and further developed [24]. Looking at the specific quantum circuit in use, quantum ensembles can be considered a particular instance of MAQA in which the controlled unitaries in Eqs. (7) and (8) are implemented using only the basis state \(\vert {1} \rangle \) as control state, which is transformed through a Pauli-X gate at each iteration. Furthermore, while MAQA allows flexible quantum trajectories in terms of the parametrised quantum gates \(S_{\beta }\) and \(\{G\left( \theta _{i,1}\right) , G\left( \theta _{i,2}\right) \}_{i=1, \dots , d}\), in the quantum ensemble [24] the weights are fixed in advance (uniform superposition of the control register) and the transformations of the input data are represented by CSWAP operations. Thus, MAQA potentially extends the quantum ensemble, which is specifically defined for the bagging strategy, to other ensembles such as boosting and randomisation, where the parameters of the single base model and the corresponding weights are not fixed in advance. Still, the main drawback of the quantum ensemble remains the underlying assumption that the complete training and test sets are encoded into two different quantum registers and that a large number of trajectories in superposition is used to compute different subsamples of the training set. This would require a very large number of qubits in a fault-tolerant quantum computer.
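The fixed-weight case can be illustrated by preparing the control register in a uniform superposition. The sketch below assumes that \(S_{\beta }\) is a layer of Hadamard gates, which is one standard way to obtain a uniform superposition; the source only states that the superposition is uniform, so the gate choice is an assumption.

```python
import functools
import numpy as np

hadamard = np.array([[1.0, 1.0], [1.0, -1.0]]) / np.sqrt(2)

d = 3
zero = np.zeros(2 ** d)
zero[0] = 1.0                                   # all-zero control state |0...0>

# Assumed S_beta for the bagging-style ensemble: one Hadamard per control qubit.
S_beta = functools.reduce(np.kron, [hadamard] * d)
beta_star = S_beta @ zero                       # amplitudes beta*_h
beta = np.abs(beta_star) ** 2                   # weights beta_h

print(beta)
```

Every weight equals \(1/2^d\), which reproduces a plain average over the \(2^d\) base models. Non-uniform weights, as needed for boosting, would require a parametrised \(S_{\beta }\) instead.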

In this respect, the main challenge to tackle to make the ensemble effective (using MAQA) in the near future is the design of a quantum classifier based on interference that guarantees a more efficient data encoding strategy (e.g. amplitude encoding) and can process larger datasets.

5 Conclusions

The practical advantages of using quantum resources to solve machine learning tasks are still to be demonstrated. However, the ground provided by quantum mechanics is highly appealing since a low number of qubits allows accessing an exponentially large Hilbert space.

In this work, we took a further step towards understanding how machine learning can benefit from quantum computation. The proposed quantum framework, the Multiple Aggregator Quantum Algorithm (MAQA), is capable of reproducing some of the most important classical machine learning algorithms using quantum computing resources. MAQA can potentially improve, in terms of time complexity, all those models that require explicitly computing multiple and diverse functions to produce a final strong model. In particular, aggregating H different functions in classical machine learning has a computational cost linear in H. Instead, the proposed quantum architecture aggregates H functions in only \(\log _2(H)\) steps, under the assumption that each step has constant (unit) cost in terms of circuit complexity. The advantage comes directly from using superposition and entanglement as resources for generating different transformations of the input. Furthermore, quantum interference allows propagating the use of a specific unitary (gate F) to all the quantum trajectories in superposition. Hence, the application of F contributes additively to the overall time complexity, whereas the same operation would incur a multiplicative cost in classical computation.

In addition, we discussed how the proposed approach could be adopted as a fault-tolerant (quantum ensemble) and hybrid quantum–classical (quantum Single-Layer Perceptron) algorithm, though different technical aspects need to be further investigated for both cases.

We are still in an early stage of QML, and its contribution to solving real world problems in the context of machine learning is yet to be understood. However, many research findings, including this work, suggest that the potential of quantum computing is huge, and machine learning will likely benefit from it in the future.

Data availability

Data sharing is not applicable to this article as no datasets were generated or analysed during the current study.

Notes

When considering a neural network with multiple hidden layers, the only difference in Eq. (3) is that the function component \(g(x; \cdot )\) is, in turn, a neural network.

A detailed example with \(d=3\) is described in the Appendix.

References

Buluta, I., Ashhab, S., Nori, F.: Natural and artificial atoms for quantum computation. Rep. Prog. Phys. 74(10), 104401 (2011)

Obada, A.F., Hessian, H., Mohamed, A.A., Homid, A.H.: Quantum logic gates generated by sc-charge qubits coupled to a resonator. J. Phys. A: Math. Theor. 45(48), 485305 (2012)

Obada, A.-S., Hessian, H., Mohamed, A.-B., Homid, A.H.: A proposal for the realization of universal quantum gates via superconducting qubits inside a cavity. Ann. Phys. 334, 47–57 (2013)

Obada, A.-S.F., Hessian, H.A., Mohamed, A.-B.A., Homid, A.H.: Implementing discrete quantum fourier transform via superconducting qubits coupled to a superconducting cavity. JOSA B 30(5), 1178–1185 (2013)

Homid, A., Sakr, M., Mohamed, A.-B., Abdel-Aty, M., Obada, A.-S.: Rashba control to minimize circuit cost of quantum fourier algorithm in ballistic nanowires. Phys. Lett. A 383(12), 1247–1254 (2019)

Peruzzo, A., McClean, J., Shadbolt, P., Yung, M.H., Zhou, X.Q., Love, P.J., Aspuru-Guzik, A., O’Brien, J.L.: A variational eigenvalue solver on a photonic quantum processor. Nat. Commun. 5, 4213 (2014)

Venkatesh, S.M., Macaluso, A., Klusch, M.: Bilp-q: quantum coalition structure generation. In: Proceedings of the 19th ACM International Conference on Computing Frontiers, pp. 189–192. (2022)

Venkatesh, S.M., Macaluso, A., Klusch, M.: Gcs-q: Quantum graph coalition structure generation. arXiv preprint arXiv:2212.11372 (2022)

Biamonte, J., Wittek, P., Pancotti, N., Rebentrost, P., Wiebe, N., Lloyd, S.: Quantum machine learning. Nature 549(7671), 195 (2017)

Nakahara, M., Ohmi, T.: Quantum Computing: From Linear Algebra to Physical Realizations. CRC Press (2008)

Gillespie, T.A.: Spectral theory of linear operators. Proc. Edinburgh Math. Soc. (1980). https://doi.org/10.1017/S0013091500003886

Rebentrost, P., Mohseni, M., Lloyd, S.: Quantum support vector machine for big data classification. Phys. Rev. Lett. 113(13), 130503 (2014)

Macaluso, A., Clissa, L., Lodi, S., Sartori, C.: Quantum splines for non-linear approximations. In: Proceedings of the 17th ACM International Conference on Computing Frontiers, pp. 249–252 (2020)

Benedetti, M., Lloyd, E., Sack, S., Fiorentini, M.: Parameterized quantum circuits as machine learning models. Quantum Sci. Technol. 4(4), 043001 (2019)

Schuld, M., Bocharov, A., Svore, K., Wiebe, N.: Circuit-centric quantum classifiers. arXiv preprint arXiv:1804.00633 (2018)

Hastie, T.J., Tibshirani, R.J.: Generalized Additive Models, vol. 43. CRC Press (1990)

Hastie, T., Tibshirani, R., Friedman, J.: The Elements of Statistical Learning, Springer Series in Statistics, Springer. New York Inc., New York, NY, USA (2001)

Nielsen, M.A., Chuang, I.L.: Quantum Computation and Quantum Information: 10th Anniversary Edition, 10th edn. Cambridge University Press, USA (2011)

Macaluso, A., Clissa, L., Lodi, S., Sartori, C.: A variational algorithm for quantum neural networks. In: International Conference on Computational Science, Springer, pp. 591–604 (2020)

Hornik, K., Stinchcombe, M., White, H., et al.: Multilayer feedforward networks are universal approximators. Neural Netw. 2(5), 359–366 (1989)

Judd, J.S.: Neural Network Design and the Complexity of Learning. MIT Press (1990)

Goto, T., Tran, Q.H., Nakajima, K.: Universal approximation property of quantum feature map. arXiv preprint arXiv:2009.00298 (2020)

Macaluso, A., Lodi, S., Sartori, C.: Quantum algorithm for ensemble learning. In: Proceedings of the 21st Italian Conference on Theoretical Computer Science. (2020)

Macaluso, A., Clissa, L., Lodi, S., Sartori, C.: Quantum ensemble for classification. arXiv preprint arXiv:2007.01028 (2020)

Acknowledgements

This work has been partially funded by the German Ministry for Education and Research (BMB+F) in the project QAI2-QAICO under grant 13N15586.

Funding

Open Access funding enabled and organized by Projekt DEAL.

Ethics declarations

Conflict of interest

The authors declare no conflict of interest.

Appendix

We consider the MAQA architecture when \(d=3\). To make the notation simpler, we indicate the parametrised quantum gate \(G(\theta _{i,j})\) as \(G_{i,j}\).

Without loss of generality, we can express the quantum gate \(S_{\beta }\) as the tensor product of \(d=3\) unitary gates \(B_i\). Then, the state preparation step can be expressed as follows:

where \(\vert {c_{i}} \rangle \) is the ith control qubit and \(a_i\) and \(b_i\) are the parameters that determine its amplitudes:

Once the two registers are initialised, each qubit in the control register is entangled with two different random transformations of the data register. Thus, the first step after state preparation is the following:

Step 1 (\(i=1\))

First, the controlled-unitary \(C^{(1)}G_{1,1}\) is executed to entangle the transformation \(G_{1,1}\vert {x} \rangle \) with the excited state of \(\vert {c_3} \rangle \):

Then, a second controlled unitary \(C^{(0)}G_{1,2}\) is executed:

At this point, two different transformations, \(G_{1,1}\) and \(G_{1,2}\), of the initial state \(\vert {x} \rangle \) are generated in superposition and are entangled with the two basis states of the control qubit \(\vert {c_3} \rangle \).

Step 2 (\(i=2\))

The same operations are applied using \(\vert {c_2} \rangle \) as control qubit and different matrices, \(G_{2,1}\) and \(G_{2,2}\).

First, the controlled unitary \(C^{(1)}G_{2,1}\) is applied to entangle a transformation of \(\vert {x} \rangle \) with the excited state of \(\vert {c_2} \rangle \):

where the position of the gate \(C^{(1)}\) indicates the control qubit used for \(G_{2,1}\). Then, a second controlled-unitary \(C^{(0)}G_{2,2}\) is executed:

Notice that the entanglement performed in Step 2.1 influences the entanglement in Step 2.2, and each trajectory describes a different transformation of \(\vert {x} \rangle \). Equation (18) can be rewritten expressing the four basis states of the control register using natural numbers:

where \(G(\varTheta _{h})\) is the product of two unitaries \(G_{i,j}\) and each coefficient \(\beta ^*_h\) results from the product of two coefficients chosen among the \(a_i\) and \(b_i\). Thus, using two control qubits, four different quantum trajectories are generated, corresponding to four different transformations of the data state \(\vert {x} \rangle \).

Step 3 (\(i=3\))

Extending the same procedure when \(d=3\), the result is the following:

where each \(G(\varTheta _{h})\) is the product of 3 unitaries \(G_{i,j}\) for \(i=1,2,3\) and \(j=1,2\).

Repeating this procedure d times with different control qubits, the result is the following quantum state:

where each \(G(\varTheta _{h})\) is the product of \(d=3\) unitaries \(G_{i,j}\) for \(i=1, \cdots , d\) and \(j=1,2\).
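The eight trajectories for \(d=3\) can be enumerated symbolically. The sketch below assumes that step i selects either \(G_{i,1}\) or \(G_{i,2}\) and that later steps multiply from the left; it lists the resulting products without assigning them to specific basis states of the control register, since that mapping depends on the qubit ordering convention.

```python
import itertools

d = 3
trajectories = []
for choice in itertools.product((1, 2), repeat=d):
    # choice[i - 1] = j selects G_{i,j} at step i; later steps act on the left.
    factors = [f"G_{{{i},{j}}}" for i, j in enumerate(choice, start=1)]
    trajectories.append(" ".join(reversed(factors)))

for t in trajectories:
    print(t)
```

The loop produces \(2^d = 8\) distinct products from only \(2d = 6\) building blocks \(G_{i,j}\), which is the counting argument behind the exponential number of trajectories.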

Finally, gate F is applied, as shown in Eq. (10) and the measurement of the data register is performed.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Macaluso, A., Klusch, M., Lodi, S. et al. MAQA: a quantum framework for supervised learning. Quantum Inf Process 22, 159 (2023). https://doi.org/10.1007/s11128-023-03901-w
