Abstract

In this paper, we consider a stochastic SIR epidemic model with a general disease incidence rate, perturbed by nonlinear white noise and L\(\acute{e}\)vy jumps. First, we prove the existence and uniqueness of a global positive solution of the model. Then, by investigating the associated one-dimensional disease-free model, we establish a threshold \(\lambda \) that determines the extinction and persistence of the disease. To verify that the model has an ergodic stationary distribution, we adopt a new method that yields sufficient and almost necessary conditions for the extinction and persistence of the disease. Finally, numerical simulations are carried out to illustrate our theoretical results.


1 Introduction

The study of epidemic dynamics aims to establish mathematical models that reflect the biological mechanisms behind the occurrence and development of diseases and their response to environmental changes, and then to reveal how diseases evolve by studying the dynamics of these models. The theory of Kermack and McKendrick laid the foundation for the subsequent study of infectious disease dynamics and gave rise to the classic SIR epidemic model [1]. Since then, a large number of papers have focused on the dynamics of SIR infectious disease models [2,3,4,5,6]. This model is usually used to describe diseases with permanent immunity, such as herpes, rabies, syphilis, whooping cough, smallpox and measles; we refer the readers to [7,8,9] for more details. In this paper, we assume that the disease-induced mortality is not very high and that the average daily recruitment of the population over a period of time is constant. The classic epidemic model is then given by
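
$$\begin{aligned} \frac{dS(t)}{dt}&=\alpha -\beta S(t)I(t)-\mu S(t),\\ \frac{dI(t)}{dt}&=\beta S(t)I(t)-(\mu +\rho +\gamma )I(t),\\ \frac{dR(t)}{dt}&=\gamma I(t)-\mu R(t), \end{aligned}\tag{1}$$
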
where S(t), I(t), R(t) represent the density of susceptible individuals, infected individuals and individuals recovered from the disease at time t, respectively. The parameter \(\alpha \) denotes the recruitment rate of the population, \(\beta \) is the transmission coefficient between S(t) and I(t), \(\mu \) is the natural mortality rate, \(\rho \) is the mortality due to disease, and \(\gamma \) is the recovery rate. All of the parameters \(\alpha \), \(\beta \), \(\mu \), \(\rho \), \(\gamma \) are assumed to be positive.
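To make the deterministic model concrete, the following sketch integrates it with the forward Euler method. It assumes the standard form \(\frac{dS}{dt}=\alpha -\beta SI-\mu S\), \(\frac{dI}{dt}=\beta SI-(\mu +\rho +\gamma )I\), \(\frac{dR}{dt}=\gamma I-\mu R\), which matches the parameter descriptions above; the step size, horizon and parameter values are hypothetical and chosen only for illustration. Adding the three equations gives \(\frac{d(S+I+R)}{dt}=\alpha -\mu (S+I+R)-\rho I\), so with \(\rho =0\) and \(S+I+R=\alpha /\mu \) initially the total population should stay at \(\alpha /\mu \), which is a convenient sanity check.

```python
def sir_rhs(s, i, r, alpha, beta, mu, rho, gamma):
    """Right-hand side of the (assumed) deterministic SIR model (1)."""
    ds = alpha - beta * s * i - mu * s
    di = beta * s * i - (mu + rho + gamma) * i
    dr = gamma * i - mu * r
    return ds, di, dr


def integrate(s0, i0, r0, params, dt=0.01, n_steps=20_000):
    """Forward Euler integration; returns (S, I, R) at time n_steps * dt."""
    s, i, r = s0, i0, r0
    for _ in range(n_steps):
        ds, di, dr = sir_rhs(s, i, r, *params)
        s, i, r = s + dt * ds, i + dt * di, r + dt * dr
    return s, i, r


if __name__ == "__main__":
    # hypothetical (alpha, beta, mu, rho, gamma); rho = 0, so S + I + R stays at alpha / mu = 2
    params = (1.0, 0.6, 0.5, 0.0, 0.3)
    s, i, r = integrate(1.5, 0.5, 0.0, params)
    print(f"S={s:.4f}, I={i:.4f}, R={r:.4f}, N={s + i + r:.4f}")
```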

It is well known that the bilinear incidence rate \(\beta S(t)I(t)\) describes the number of people infected by all infected individuals per unit time (i.e., the number of new cases). However, studies have shown that many biological factors may make the transmission rate nonlinear (see [10] and the references therein). The nonnegligible interactions between individuals that give rise to nonlinear incidence have led many scholars to consider more complex incidence functions. For example, a study of the 1973 cholera epidemic in Bari, Italy, drew Capasso and Serio's attention to an SIR epidemic model with saturated incidence [11]. They proposed the nonlinear incidence rate \(\frac{\beta SI}{1+aI}\), which avoids the unboundedness of the contact rate in the cholera epidemic. This incidence rate captures the behavioral change and the saturation effect as the number of infected individuals increases; that is, \(\frac{\beta SI}{1+aI}\) tends to a saturation level when I is large. In addition, Chong et al. [12] considered a model of avian influenza with the half-saturated incidence \(\frac{\beta SI}{H+I}\), where \(\beta >0\) denotes the transmission rate and H is the half-saturation constant, i.e., the density of infected individuals in the population that yields a 50\(\%\) probability of contracting avian influenza. Huo and coworkers [13] proposed a rumor transmission model with the Holling-type II incidence rate \(\frac{\lambda SI}{m+S}\). Kashkynbayev and Rihan [14] studied the dynamics of a fractional-order epidemic model with general nonlinear incidence rate functionals and time delay, and proposed that their model applies to the incidence rate \(\frac{\beta S^{n}I}{1+\eta S^{n}}\), \(n \ge 2\). Furthermore, they adopted the Holling-type III functional response \(\frac{\beta S^{2} I}{1+\eta S^{2}}\) in numerical simulations to illustrate the theoretical results.
In [15], the authors assumed that the infection rate of HIV-1 is given by the Beddington–DeAngelis incidence function \(\frac{\beta SI}{1+aS+bI}\); for different values of a and b, this nonlinear incidence rate reduces to the Holling-type II or the saturated incidence function. Similarly, in a stability and numerical analysis of an SIR epidemic system for COVID-19, Alqahtani also adopted the Beddington–DeAngelis incidence function \(f(S,I)=\frac{\beta _{1}SI}{a_{1}+a_{2}S+a_{3}I}\) [16]. Besides, Ruan et al. proposed an epidemic model with the nonlinear incidence rate \(\frac{kI^{l}S}{1+\alpha I^{h}}\) in [17], where \(kI^{l}\) measures the infection force of the disease and \(\frac{1}{1+\alpha I^{h}}\) measures the inhibition effect from the behavioral change of the susceptible individuals when the number of infective individuals increases, or from the crowding effect of the infective individuals. In [18], Rohith and Devika modeled the COVID-19 transmission dynamics using a susceptible-exposed-infectious-removed model with the nonlinear incidence rate \(\frac{\beta _{0}SI}{1+\alpha I^{2}}\). Khan et al. [19] studied the dynamics of a fractional SIR model with a general incidence rate f(I)S, which contains several well-known generalized forms. There are many other studies on this subject (see [20,21,22,23,24,25,26,27]). In this paper, we take a more general incidence rate F(S, I)I, where F depends on both S(t) and I(t), so that it contains a number of common incidence rates used in previous studies. Specifically, model (1) becomes
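
$$\begin{aligned} \frac{dS(t)}{dt}&=\alpha -F(S(t),I(t))I(t)-\mu S(t),\\ \frac{dI(t)}{dt}&=F(S(t),I(t))I(t)-(\mu +\rho +\gamma )I(t),\\ \frac{dR(t)}{dt}&=\gamma I(t)-\mu R(t). \end{aligned}\tag{2}$$
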
Throughout this paper, we assume the general incidence rate F(S, I) has the following properties.

Assumption 1

Suppose that F(S(t), I(t)) is locally Lipschitz continuous in both variables with \(F(0,I)=0\) for all \(I \ge 0\). Furthermore, F is uniformly continuous at \(I=0\), that is,

Suppose further that F(S, I) is non-decreasing in S and non-increasing in I, and satisfies the following condition:

where c is a positive constant.

Remark 1

Note that F(S, I) covers all the disease incidence functions listed in this paper, including the bilinear incidence rate (\(F(S,I)=\beta S\)) [28], saturated incidence rate (\(F(S,I)=\frac{\beta S}{1+mI}\)) [11, 29, 30], half-saturated incidence rate (\(F(S,I)=\frac{\beta S}{m+I}\)) [12, 31], Holling-type II incidence rate (\(F(S,I)=\frac{\beta S}{m+S}\)) [13], Holling-type III incidence rate (\(F(S,I)=\frac{\beta S^{2}}{(m_{1}+S)(m_{2}+S)}\)) [14], the Beddington–DeAngelis incidence rate (\(F(S,I)=\frac{\beta S}{1+m_{1}S+m_{2}I}\)) [15, 32, 33] and other nonlinear incidence rates not listed here.
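The qualitative requirements of Assumption 1 can be spot-checked numerically for the families in Remark 1. The sketch below is a finite-grid sanity check, not a proof: the parameter values are hypothetical, and the constant c attached to each family is an elementary upper bound chosen so that \(F(S,I)\le cS\), the bound quoted in Remark 4 (for instance, \(\frac{\beta S^{2}}{(m_{1}+S)(m_{2}+S)}\le \frac{\beta }{m_{2}}S\)).

```python
import itertools

BETA, M, M1, M2 = 0.5, 2.0, 1.0, 3.0  # hypothetical parameter values

# Each entry: F(S, I) from Remark 1 and a constant c with F(S, I) <= c * S.
FAMILIES = {
    "bilinear": (lambda S, I: BETA * S, BETA),
    "saturated": (lambda S, I: BETA * S / (1 + M * I), BETA),
    "half-saturated": (lambda S, I: BETA * S / (M + I), BETA / M),
    "Holling II": (lambda S, I: BETA * S / (M + S), BETA / M),
    "Holling III": (lambda S, I: BETA * S**2 / ((M1 + S) * (M2 + S)), BETA / M2),
    "Beddington-DeAngelis": (lambda S, I: BETA * S / (1 + M1 * S + M2 * I), BETA),
}

GRID = [0.0, 0.1, 0.5, 1.0, 2.0, 5.0, 10.0, 50.0]  # increasing sample points


def satisfies_assumption(F, c, tol=1e-12):
    """Grid check of F(0, I) = 0, monotonicity in S and I, and F(S, I) <= c * S."""
    if any(F(0.0, I) != 0.0 for I in GRID):
        return False
    for S, I in itertools.product(GRID, GRID):
        if F(S, I) > c * S + tol:
            return False
    for a, b in zip(GRID, GRID[1:]):
        for x in GRID:
            if F(b, x) < F(a, x) - tol:  # must be non-decreasing in S
                return False
            if F(x, b) > F(x, a) + tol:  # must be non-increasing in I
                return False
    return True


if __name__ == "__main__":
    for name, (F, c) in FAMILIES.items():
        print(f"{name}: {satisfies_assumption(F, c)}")
```

A rate such as \(\beta S(1+I)\), which grows with I, fails this check, as expected.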

However, from the perspective of ecology and biology, the transmission of infectious diseases, contacts between people, population movement and so on are inevitably affected by various environmental disturbances [34], such as temperature, water supply or climate change, whereas the above deterministic model does not account for any random factors. May [35] revealed that the main parameters of epidemic models, such as birth rates, death rates and transmission rates, are affected by environmental noise to a certain extent. In addition, Brownian motion is the main choice for modeling random motion and noise in continuous-time systems. This choice rests on the favorable statistical properties of Brownian motion: it has finite moments of all orders and continuous sample paths, and powerful analytical tools (Itô calculus) are available for it. Thus, we consider a stochastic epidemic model obtained by adding white noise to the deterministic model (see [36, 37]).

To better simulate the impact of environmental noise during disease transmission, we follow the methods of Liu and Jiang [38] and consider nonlinear perturbations, because the random perturbations may depend on the squares of the state variables S, I and R. Specifically, we assume that the perturbations of S, I and R take the following forms, respectively:

where \(B_{1}(t), B_{2}(t)\) and \(B_{3}(t)\) are mutually independent standard Brownian motions and \(\sigma _{ij}^{2}>0\), \(i=1,2,3\), \(j=1,2\), are the intensities of the white noise. Thus, after taking the nonlinear white-noise perturbations into account, model (2) becomes

Brownian motion has many excellent properties, but in some situations these advantages become limitations. Population ecosystems inevitably suffer abrupt, massive disturbances. These may be major natural catastrophes, such as tsunamis, hurricanes, tornadoes, earthquakes and floods, or serious, large-scale disease outbreaks, such as avian influenza, COVID-19, SARS, dengue fever and hemorrhagic fever caused by the Ebola virus. Such events usually cause drastic fluctuations in the population of the affected region, and even sudden jumps in its size. In other words, these disturbances lead to discontinuous sample paths in the corresponding mathematical model, so Brownian motion alone cannot describe them. To capture these phenomena more accurately, stochastic differential equations with jumps should be used in the study of epidemic dynamics.

According to Liu et al. [39], the jump times are random, and the waiting times between jumps behave like those of L\(\acute{e}\)vy jumps. In addition, according to Eliazar and Klafter [40], L\(\acute{e}\)vy motions, i.e., stochastic processes with stationary and independent increments, constitute one of the most important and fundamental families of random motions. Consequently, many scholars have incorporated jump processes into their models, and a number of studies of epidemiological models with L\(\acute{e}\)vy jumps are now available. In [41], Bao et al. pioneered the study of competitive Lotka–Volterra population dynamics with jumps and revealed the effect of the jump process on the system. In [42], the authors used stochastic differential equations with jumps to study the asymptotic behavior of a stochastic SIR model. Other studies can be found in [43, 44] and the references therein. To the best of the authors' knowledge, there is little literature on stochastic SIR epidemic models with a general disease incidence rate and both second-order white-noise perturbations and L\(\acute{e}\)vy jumps. Motivated by the above, we extend model (3) with L\(\acute{e}\)vy jumps as follows:

where \(S(t^{-})\), \(I(t^{-})\), \(R(t^{-})\) are the left limits of S(t), I(t) and R(t), respectively, \(N(\cdot ,\cdot )\) is a Poisson counting measure with characteristic measure \(\lambda \) on a measurable subset \(\mathbb {Y}\) of \([0,\infty )\) with \(\lambda (\mathbb {Y}) < \infty \) (this measure \(\lambda (d u)\) should not be confused with the threshold \(\lambda \) defined in Sect. 4), and the compensated Poisson random measure is defined by \({\widetilde{N}}(d t, d u) = N(d t, d u) - \lambda (d u)d t\). Throughout this paper, we assume that \(B_{i}(t)\), \(i = 1, 2, 3\), and \(N(\cdot ,\cdot )\) are independent and that all coefficients of the system are positive. Since the recovered population has no impact on the disease transmission dynamics of model (4), we omit the third equation of system (4) for convenience.
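The compensated measure \({\widetilde{N}}(d t, d u) = N(d t, d u) - \lambda (d u)d t\) turns jump integrals into zero-mean martingales, and for a deterministic integrand g the variance of \(\int _{0}^{t}\int _{\mathbb {Y}}g(u){\widetilde{N}}(ds,du)\) is \(t\int _{\mathbb {Y}}g^{2}(u)\lambda (du)\); the same isometry underlies the bracket formula for the jump martingale used in Sect. 5. The Monte Carlo sketch below illustrates both facts under hypothetical choices that are not part of the model: \(\mathbb {Y}=[0,1]\), \(\lambda (du)=r\,du\) with finite total mass r, and \(g(u)=u\), so the predicted mean is 0 and the predicted variance is \(rt/3\).

```python
import random


def poisson_jump_count(rng, t, rate):
    """Number of jumps of a rate-`rate` Poisson process on [0, t] (exponential waiting times)."""
    count, clock = 0, rng.expovariate(rate)
    while clock <= t:
        count += 1
        clock += rng.expovariate(rate)
    return count


def compensated_jump_integral(rng, t, rate, g, g_mean):
    """One sample of int_0^t int_Y g(u) Ntilde(ds, du) with Y = [0, 1], lambda(du) = rate * du.

    Marks are uniform on [0, 1]; g_mean = int_0^1 g(u) du, so the compensator is t * rate * g_mean.
    """
    n = poisson_jump_count(rng, t, rate)
    jumps = sum(g(rng.random()) for _ in range(n))
    return jumps - t * rate * g_mean


def monte_carlo_moments(n_paths=20_000, t=1.0, rate=2.0, seed=42):
    """Sample mean and variance of the compensated integral for g(u) = u."""
    rng = random.Random(seed)
    xs = [compensated_jump_integral(rng, t, rate, lambda u: u, 0.5) for _ in range(n_paths)]
    mean = sum(xs) / n_paths
    var = sum((x - mean) ** 2 for x in xs) / (n_paths - 1)
    return mean, var


if __name__ == "__main__":
    mean, var = monte_carlo_moments()
    # theory: mean 0, variance t * rate * int_0^1 u^2 du = 2/3
    print(f"mean = {mean:.4f}, variance = {var:.4f}")
```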

Assumption 2

This assumption implies that \(\int _{\mathbb {Y}}\left( \ln (1+f_{ij}(u))\right) ^{2}\lambda (d u) < \infty \), i.e., the intensities of the L\(\acute{e}\)vy jumps are not too large.

As far as we know, few papers have studied SIR epidemic models with a general incidence rate perturbed by both nonlinear white noise and L\(\acute{e}\)vy jumps, and this combination poses considerable challenges for the theoretical analysis. The main contribution of this paper is a sufficient and almost necessary condition for the extinction and persistence of the disease. In a deterministic model, the persistence and extinction of the disease are usually reflected by the stability of equilibrium points, while in a stochastic model one usually studies the existence of a stationary distribution. The common way to prove the existence of an ergodic stationary distribution is the theory of Has’minskii [45], whose key step is to construct suitable Lyapunov functions. However, these conventional methods yield only sufficient conditions for the existence and uniqueness of an ergodic stationary distribution [46,47,48]. To sharpen these results, we adopt a novel method that combines classical Lyapunov functions with the methods introduced in [49]. As a result, we obtain the desired sufficient and almost necessary condition for the persistence of the disease in terms of a threshold \(\lambda \). Specifically, if \(\lambda <0\), the number of infected individuals tends to zero exponentially, which means that the disease dies out; if \(\lambda >0\), system (4) has an ergodic stationary distribution on \(\mathbb {R}_{+}^{2}\), which means that the disease persists in the population.

The rest of this paper is organized as follows. In Sect. 2, we give some preliminaries, including the exponential martingale inequality with L\(\acute{e}\)vy jumps and the strong law of large numbers for local martingales. Section 3 proves the existence and uniqueness of the global positive solution of system (4). In Sect. 4, to obtain a threshold that determines the extinction and persistence of the disease, we discuss the existence of an ergodic stationary distribution of the equation on the boundary where infected individuals are absent, and then define the key quantity \(\lambda \). The extinction of the disease and the ergodic stationary distribution of model (4) are studied in Sects. 5 and 6, respectively. Finally, several numerical examples are presented in Sect. 7 to illustrate our main results.

2 Preliminaries

Unless otherwise stated, throughout this paper, let (\(\varOmega \), \({\mathcal {F}}\), \(\{{\mathcal {F}}_t\}_{t\ge 0}\), P) be a complete probability space with a filtration \(\{{\mathcal {F}}_t\}_{t\ge 0}\) satisfying the usual conditions. We denote \(\mathbb {R}_+=[0,\infty )\) and \(\mathbb {R}_+^n=\{x=(x_{1},\ldots ,x_{n})\in \mathbb {R}^n:x_i>0,\ i=1,2,\ldots ,n\}\).

We now recall some basic facts about stochastic population systems with L\(\acute{e}\)vy jumps; more details on L\(\acute{e}\)vy processes can be found in [50].

Definition 1

X is a L\(\acute{e}\)vy process if:

(1) X(0) = 0 a.s.;

(2) X has independent and stationary increments;

(3) X is stochastically continuous, i.e., for all \(a > 0\) and \(s \ge 0\),

$$\begin{aligned} \lim _{t \rightarrow s}P(\left| X(t)-X(s) \right| > a) = 0. \end{aligned}$$

More generally, let x(t), \(t \ge 0\), be a d-dimensional process described by the following stochastic differential equation with L\(\acute{e}\)vy jumps

where \(f \in {\mathcal {L}}^{1}(\mathbb {R}_{+},\mathbb {R}^{d})\), \(g \in {\mathcal {L}}^{2}(\mathbb {R}_{+},\mathbb {R}^{d \times m})\) and \(\gamma \in {\mathcal {L}}^{1}(\mathbb {R}_{+} \times \mathbb {Y},\mathbb {R}^{d})\).

Here B(t)=\(\{\left( B_{t}^{1}, B_{t}^{2}, \cdots , B_{t}^{m}\right) ^{T}\}_{t \ge 0}\) is an m-dimensional Brownian motion defined on the complete probability space \((\varOmega , {\mathcal {F}} , P)\). Integrating both sides of (5) from 0 to t, we obtain

Let \(C^{2,1}(\mathbb {R}^{d} \times \mathbb {R}_{+} ; \mathbb {R})\) denote the family of all real-valued functions V(x, t) defined on \(\mathbb {R}^{d} \times \mathbb {R}_{+}\) that are twice continuously differentiable in x and once continuously differentiable in t. For any function \(U \in C^{2,1}(\mathbb {R}^{d} \times \mathbb {R}_{+}; \mathbb {R})\), define the differential operator \({\mathcal {L}}U(x(t),t)\) as follows:

where

By It\({\hat{o}}\)’s formula,

Next, we recall the exponential martingale inequality with jumps [41].

Definition 2

Assume that \(g \in {\mathcal {L}}^{2}(\mathbb {R}_{+},\mathbb {R}^{d \times m}), \gamma \in {\mathcal {L}}^{1}(\mathbb {R}_{+} \times \mathbb {Y},\mathbb {R}^{d})\). For any constants \(T , \alpha , \beta > 0 \),

For completeness, the following lemma, taken from [50], states the strong law of large numbers for local martingales.

Lemma 1

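Assume that M(t) is a local martingale vanishing at \(t=0\), and define

$$\begin{aligned} \rho _{M}(t):=\int _{0}^{t}\frac{d\left\langle M\right\rangle (s)}{(1+s)^{2}},\quad t\ge 0, \end{aligned}$$

where \(\left\langle M\right\rangle (t) :=\left\langle M, M \right\rangle (t) \) is Meyer’s angle bracket process.

If \(\lim _{t \rightarrow \infty }\rho _{M}(t) < \infty \;\;a.s.\), then

$$\begin{aligned} \lim _{t \rightarrow \infty }\frac{M(t)}{t}=0\quad a.s. \end{aligned}$$
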
We also recall the following proposition from [51].

Remark 2

Assume that

For any \(\gamma \in \varGamma _{loc}^{2}\),

then one can see that

where \(\left[ M \right] (t)=\left[ M,M\right] (t) \) denotes the quadratic variation process of M(t).
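To see how Lemma 1 is applied to the martingale terms in Sect. 5, assume that \(\rho _{M}\) is the usual quantity \(\rho _{M}(t)=\int _{0}^{t}d\left\langle M\right\rangle (s)/(1+s)^{2}\) and consider any local martingale whose bracket grows linearly, \(\left\langle M\right\rangle (t)=\kappa t\). This covers the Brownian term with \(\kappa =\sigma _{21}^{2}\) and, under Assumption 2, the jump term with \(\kappa =\int _{\mathbb {Y}}\left( \ln (1+f_{21}(u))\right) ^{2}\lambda (du)<\infty \):

$$\begin{aligned} \rho _{M}(t)=\int _{0}^{t}\frac{\kappa \,ds}{(1+s)^{2}}=\kappa \left( 1-\frac{1}{1+t}\right) \longrightarrow \kappa <\infty \quad (t\rightarrow \infty ), \end{aligned}$$

so Lemma 1 gives \(\lim _{t\rightarrow \infty }M(t)/t=0\) a.s. for each such term.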

3 Existence and uniqueness of the global positive solution

To study the dynamics of an epidemic system, the first question is whether the solution of model (4) is global and positive. The following result is a prerequisite for studying the long-time behavior of model (4).

Theorem 1

For any initial value \((S(0),I(0))\in \mathbb {R}_+^2\), stochastic system (4) has a unique positive solution \((S(t),I(t))\in \mathbb {R}_+^2\) on \(t\ge 0\), and the solution will remain in \(\mathbb {R}_+^2\) with probability one.

Proof

Our proof is motivated by the methods of [34]. Since the coefficients of model (4) are locally Lipschitz continuous, there is a unique local solution \(\left( S(t),I(t) \right) \) on \(t \in [ 0,\rho _{e} )\) for any given initial value \(\left( S(0),I(0) \right) \in \mathbb {R}_{+}^{2}\), where \(\rho _{e}\) is the explosion time. To show that this solution is global, we only need to prove that \(\rho _{e} = \infty \) a.s. Let \(k_{0} > 0\) be sufficiently large that both S(0) and I(0) lie within the interval \([ \frac{1}{k_{0}} , k_{0} ]\). For each integer \(k \ge k_{0}\), define the stopping time
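
$$\begin{aligned} \tau _{k}=\inf \left\{ t\in [0,\rho _{e}):\ \min \{S(t),I(t)\}\le \frac{1}{k}\ \text {or}\ \max \{S(t),I(t)\}\ge k\right\} , \end{aligned}$$

where we set \(\inf \emptyset =\infty \) (\(\emptyset \) denotes the empty set).
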
Clearly, \(\tau _{k}\) is nondecreasing as \(k \rightarrow \infty \). Set \(\tau _{\infty }=\lim _{k \rightarrow \infty }\tau _{k}\); then \(\tau _{\infty } \le \rho _{e}\) a.s. If we can show that \(\tau _{\infty }=\infty \) a.s., then \(\rho _{e}=\infty \) and \(\left( S(t), I(t) \right) \in \mathbb {R}_{+}^{2}\) for all \(t \ge 0\) a.s.

Suppose, to the contrary, that \(P(\tau _{\infty } < \infty ) > 0\). Then there exist constants \(T > 0\) and \(0< \varepsilon <1\) such that \(P( \tau _{\infty } \le T ) > \varepsilon \). Hence, there is an integer \(k_{1} \ge k_{0}\) such that \(P( \tau _{k} \le T ) \ge \varepsilon \) for all \(k \ge k_{1}\).

Define a \(C^{2}\)-function V: \(\mathbb {R}_+^2 \rightarrow \mathbb {R}_+\) as follows:

where \(0<p<1\). The nonnegativity of V(S, I) follows from \(x-1-\ln x \ge 0\) for \(x > 0\), and V is clearly twice continuously differentiable with respect to S and I.
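The elementary inequality behind the nonnegativity of V can be verified in one line; here x is a generic positive variable, not one of the model quantities:

$$\begin{aligned} h(x)&=x-1-\ln x,\quad x>0,\\ h'(x)&=1-\frac{1}{x},\qquad h''(x)=\frac{1}{x^{2}}>0, \end{aligned}$$

so h is strictly convex with its only critical point at \(x=1\), and therefore \(h(x)\ge h(1)=0\) for all \(x>0\).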

Applying It\({\hat{o}}\)’s formula to function V(S, I), we have

where

For any \(0< p <1\), by the inequality \(x^{r} \le 1+r(x-1)\) for \(x \ge 0\), \(0 \le r \le 1\), we have

By Assumption 1 and the above estimates, we obtain

where

Integrating both sides of (6) from 0 to \(\tau _{k}\wedge T\) and then taking expectations yields

Let \(\varOmega _{k}=\{ \tau _{k}\le T \}\) for \(k \ge k_{1}\); then \(P( \varOmega _{k} ) \ge \varepsilon \). Note that for every \(\omega \in \varOmega _{k}\), \(S( \tau _{k},\omega )\) or \(I( \tau _{k},\omega )\) equals either k or \(\frac{1}{k}\). Consequently,

where \(I_{\varOmega _{k}}\) is the indicator function of \(\varOmega _{k}\). Letting \(k \rightarrow \infty \), we obtain \(\infty > V\left( S(0),I(0)\right) +k_{3}T = \infty \), which is a contradiction. Therefore, \(\tau _{\infty } = \infty \) a.s., i.e., S(t) and I(t) do not explode in finite time with probability one. This completes the proof. \(\square \)

4 Exponential ergodicity for the system without disease

In this section, a threshold \(\lambda \) is defined by studying the exponential ergodicity of a one-dimensional disease-free system. To this end, we first consider the following equation, which describes the susceptible population when there are no infected individuals at time \(t = 0\):

By the comparison theorem, it is easy to check that \(S(t) \le {\hat{S}}(t)\) for all \(t \ge 0\) a.s., provided \(S(0) = {\hat{S}}(0) > 0\). To obtain the exponential ergodicity of model (7), we first recall the following lemma, which has been discussed in [38].

Lemma 2

The following equation

admits an ergodic stationary distribution with the density:

where Q is the normalizing constant such that \(\int _{0}^{\infty }\pi ^{*}(x)d x=1\). Moreover, \(\lim _{t \rightarrow \infty }\frac{1}{t}\int _{0}^{t}{\bar{S}}(\tau )d \tau =\int _{0}^{\infty }x\pi ^{*}(x)d x \;\;a.s.\)

Theorem 2

The Markov process \({\hat{S}}(t)\) is exponentially ergodic and has a unique stationary distribution, denoted by \({\bar{\pi }}\), on \(\mathbb {R}_+\).

Proof

To prove the existence of an ergodic stationary distribution of \({\hat{S}}(t)\), by [49] it suffices to verify the following two conditions: (a) the auxiliary process \({\bar{S}}(t)\) determined by (8) has a positive transition probability density with respect to the Lebesgue measure; (b) there exists a nonnegative \(C^{2}\)-function \(V({\hat{S}}(t))\) such that \({\mathcal {L}}V({\hat{S}}(t)) \le -H_{2}V({\hat{S}}(t))+H_{1}\), where \(H_{1}, H_{2}\) are positive constants. Condition (a) follows from Lemma 2, so it remains to verify condition (b).

Consider the Lyapunov function

where \(0< p < 1\).

Applying It\({\hat{o}}\)’s formula, one sees that

Using the inequalities \(\frac{1}{x}-1+\ln x \ge 0\) for \(x > 0\) and \(x^{r}\le 1+r(x-1)\) for \(x \ge 0\), \(0 \le r \le 1 \), we derive that

where

This completes the proof of the theorem. \(\square \)
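For the reader's convenience, both elementary inequalities used in the proof above can be checked directly (x is a generic variable):

$$\begin{aligned} g(x)&=\frac{1}{x}-1+\ln x,\quad g'(x)=\frac{x-1}{x^{2}}\ \Longrightarrow \ g(x)\ge g(1)=0\quad (x>0),\\ \phi (x)&=x^{r}\ \text {is concave on}\ [0,\infty )\ \text {for}\ 0\le r\le 1,\ \text {so}\ x^{r}\le \phi (1)+\phi '(1)(x-1)=1+r(x-1). \end{aligned}$$

The second line uses the fact that a concave function lies below its tangent line at \(x=1\).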

Remark 3

For any \({\bar{\pi }}\)-integrable f(x): \(\mathbb {R}_{+} \rightarrow \mathbb {R}\), the ergodicity of \({\hat{S}}(t)\) gives

Furthermore, integrating both sides of (7) from 0 to t and then taking expectations yields

Combining this with \(\lim _{t \rightarrow \infty }\frac{\mathbb {E}{\hat{S}}(t)}{t}=0\), we obtain

Remark 4

We now define a critical value that plays an important role in determining the extinction and persistence of the disease.

By Assumption 1, \(F(S,I) \le cS\); hence,

Therefore, \(\lambda \) is well defined.

5 Extinction of the disease

In this section, we present sufficient conditions for the extinction of the disease, which provide theoretical guidance for the prevention and control of its spread. The following theorem is one of the main results of this paper.

Theorem 3

Let \(\left( S(t),I(t)\right) \) be the solution of system (4) with any given positive initial value \(\left( S(0) , I(0)\right) \in \mathbb {R}_{+}^{2}\), then it has the property

In particular, if \(\lambda < 0\), then I(t) tends to zero exponentially with probability one.

Proof

Applying It\({\hat{o}}\)’s formula to \(\ln I(t)\), we have

Integrating both sides of (11) from 0 to t, we obtain

where

Clearly, \(\left\langle w_{1},w_{1}\right\rangle (t) =\sigma _{21}^{2}t \). By Remark 2, \(\left\langle w_{2},w_{2}\right\rangle (t)=t\int _{\mathbb {Y}}\left( \ln \left( 1+f_{21}(u)\right) \right) ^{2}\lambda (d u)\). Consequently, by the strong law of large numbers in Lemma 1, we have

Furthermore, applying the exponential martingale inequality of Definition 2 with \(T=n\), \(\alpha =1\) and \(\beta =2\ln n\), we obtain

Since \(\sum \frac{1}{n^{2}} < \infty \), the Borel–Cantelli lemma implies that there exist a set \(\varOmega _{0} \in {\mathcal {F}}\) with \(P(\varOmega _{0})=1\) and an integer-valued random variable \(n_{0}\) such that for every \(\omega \in \varOmega _{0}\),

That is, for all \(0 \le t \le n \) and \(n \ge n_{0}\) a.s., it follows

Substituting the above results into (12) then yields

for all \(0 \le t \le n \) and \(n \ge n_{0}\) a.s..
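The choice of constants in the Borel–Cantelli step can be checked directly, assuming that the exponential martingale inequality of Definition 2 has its usual right-hand side \(e^{-\alpha \beta }\):

$$\begin{aligned} e^{-\alpha \beta }\Big |_{\alpha =1,\ \beta =2\ln n}=e^{-2\ln n}=\frac{1}{n^{2}},\qquad \sum _{n=1}^{\infty }\frac{1}{n^{2}}\le 1+\int _{1}^{\infty }\frac{dx}{x^{2}}=2<\infty , \end{aligned}$$

so the exceptional events have summable probabilities, and only finitely many of them occur almost surely.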

Therefore, for almost all \(\omega \in \varOmega _{0}\), if \(n \ge n_{0}\) and \(0 < n-1 \le t \le n\), dividing both sides by t and letting \(t \rightarrow \infty \), we obtain

If \(\lambda < 0\), then \(\limsup _{t \rightarrow \infty }\frac{\ln I(t)}{t} < 0\), which implies \(\lim _{t \rightarrow \infty }I(t)=0\) a.s.; that is, the disease dies out in the long term. \(\square \)
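The step from a negative exponential growth rate to exponential decay can be made explicit. Assume that \(\limsup _{t\rightarrow \infty }\frac{\ln I(t)}{t}=-\kappa <0\) a.s. for some constant \(\kappa >0\) (a generic name introduced here for illustration). Then, for almost every \(\omega \),

$$\begin{aligned} \exists \, T(\omega ):\ \frac{\ln I(t)}{t}\le -\frac{\kappa }{2}\ \ \forall t\ge T(\omega )\ \Longrightarrow \ 0<I(t)\le e^{-\kappa t/2}\ \ \forall t\ge T(\omega ), \end{aligned}$$

so I(t) decays at least at the exponential rate \(\kappa /2\) along almost every sample path.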

6 Ergodic stationary distribution

In biology, the persistence of a disease is closely related to the balance and stability of the entire ecosystem. Unlike the deterministic model, the stochastic model has no endemic equilibrium point, so the desired result cannot be obtained by analyzing the stability of an equilibrium. In this section, we investigate the existence of an ergodic stationary distribution of model (4) with a new approach based on the methods in [53,54,55].

Theorem 4

Assume that \(\lambda > 0\). Then, for any initial value \(\left( S(0) , I(0)\right) \in \mathbb {R}_{+}^{2}\), system (4) has a unique stationary distribution \(\pi \), and it has the ergodic property.

Furthermore, the following assertions are valid.

(a) For any \(\pi \)-integrable f(x, y): \(\mathbb {R}_{+}^{2} \rightarrow \mathbb {R}\),

$$\begin{aligned}&\lim _{t \rightarrow \infty }\frac{1}{t}\int _{0}^{t}f(S(\tau ),I(\tau ))d \tau \\&\quad =\int _{\mathbb {R}_{+}^{2}} f(x,y)\pi (d x, d y) \quad a.s. \end{aligned}$$

(b) \(\lim _{t \rightarrow \infty }\Vert P(t,(S(0),I(0)),\cdot )-\pi \Vert =0\) for all \((S(0),I(0)) \in \mathbb {R}_{+}^{2}\), where \(P(t,(S(0),I(0)),\cdot )\) is the transition probability of (S(t), I(t)).

Proof

At first, we define a \(C^{2}\)-function

where \(p \in (0,1)\) and M is a positive constant satisfying \(-M\lambda + L \le -2\), with the constant L to be determined later. Since \({\hat{S}}(t)-S(t)\ge 0\) for all \(t \ge 0\), solving the equations obtained by setting the partial derivatives to zero shows that the following function attains a minimum, i.e.,

Then, we consider the following nonnegative function

Denote

Applying It\({\hat{o}}\)’s formula, one can see that

where Assumption 1 has been used.

Combining Eqs. (13) and (14), we obtain

Moreover,

Combining the above inequalities, we obtain

where

From the expression of G(S, I), we can deduce that

Case 1. If \(S \rightarrow 0^{+}\), then it is obvious that \(G(S,I) \rightarrow -\infty \);

Case 2. If \(S \rightarrow +\infty \), obviously we have \(G(S,I) \rightarrow -\infty \);

Case 3. If \(I \rightarrow +\infty \), then \(G(S,I) \rightarrow -\infty \);

Case 4. If \(I \rightarrow 0^{+}\), it is easy to see that

By Assumption 1, F(S, I) is uniformly continuous at \(I=0\), so \(F(S,0)-F(S,I)\) tends to 0 as I tends to \(0^{+}\). Consequently, we obtain

Now define the bounded closed set \(U_{\varepsilon }=\left\{ (S,I) \in \mathbb {R}_{+}^{2}: \varepsilon \le S \le \frac{1}{\varepsilon } , \varepsilon \le I \le \frac{1}{\varepsilon }\right\} \), where \(\varepsilon >0\) is sufficiently small. From the above discussion, it follows that

On the other hand, there exists a positive constant H such that \(G(S,I) \le H\) for all \((S,I) \in \mathbb {R}_{+}^{2}\). Consequently, we have

By the ergodicity of \({\hat{S}}(t)\), we get

which implies that

where \(P(t,(S(0),I(0)),\cdot )\) is the transition probability of (S(t), I(t)). Inequality (15) and the invariance of \(\mathbb {R}_{+}^{2}\) imply that there exists an invariant probability measure of system (S(t), I(t)) on \(\mathbb {R}_{+}^{2}\). Furthermore, the independence of the standard Brownian motions \(B_{i}(t)\), \(i=1,2,3\), implies that the diffusion matrix is non-degenerate. In addition, the existence of an invariant probability measure is equivalent to positive recurrence. Therefore, system (4) has a unique stationary distribution \(\pi \), and it has the ergodic property. Assertions (a) and (b) follow from [13, 56]. The proof is complete. \(\square \)

Lemma 3

Assume that \(\left( S(t), I(t)\right) \) is the positive solution of system (4) with initial value \(\left( S(0), I(0) \right) \in \mathbb {R}_{+}^{2} \), then for any \(0\le \theta \le 1\), there exists a positive constant \(K(\theta )\) such that

Proof

Consider the Lyapunov function

A direct calculation using It\({\hat{o}}\)’s formula gives

Applying It\({\hat{o}}\)’s formula to \(e^{\eta t}V(S(t),I(t))\), we have

where \(\eta \) is a positive constant which satisfies \(\eta >\mu \theta \).

Denote

Using the inequality

it follows

Integrating both sides of (16) from 0 to t and then taking expectations yields

This completes the proof. \(\square \)
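The role of the factor \(e^{\eta t}\) in the proof of Lemma 3 can be seen from the following generic computation. It assumes, for illustration only, that the estimate obtained after integrating (16) and taking expectations has the form \(\mathbb {E}\left[ e^{\eta t}V(S(t),I(t))\right] \le V(S(0),I(0))+K\int _{0}^{t}e^{\eta s}ds\) for some constant \(K>0\); the exact constant of the paper is not reproduced here. Dividing by \(e^{\eta t}\) gives

$$\begin{aligned} \mathbb {E}V(S(t),I(t))\le e^{-\eta t}V(S(0),I(0))+\frac{K}{\eta }\left( 1-e^{-\eta t}\right) \le V(S(0),I(0))+\frac{K}{\eta }, \end{aligned}$$

so the moments are bounded uniformly in t, and \(\limsup _{t\rightarrow \infty }\mathbb {E}V(S(t),I(t))\le K/\eta \).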

Remark 5

If \(\theta =1\), by virtue of Lemma 3 and Theorem 4, one can see that

Although the stationary distribution \(\pi \) is not known explicitly, the above result implies that S(t) and I(t) are persistent in the mean.

7 Examples and numerical simulations

7.1 Numerical simulation only with white noise

In this section, we give some numerical examples to illustrate the effect of disturbances on the SIR epidemic model. Since it is difficult to obtain an explicit value of \(\lambda \), we first consider the following model with a saturated incidence rate but without L\(\acute{e}\)vy jumps, i.e., \(f_{ij}=0\), \(i=1,2,3\), \(j=1,2\).

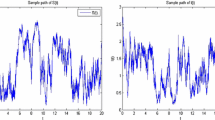

Fig. 1. Simulations of the solution of stochastic system (17) with white noise \(\sigma _{11}=\sigma _{12}=\sigma _{21}=\sigma _{22}=\sigma _{31}=\sigma _{32}=0.01\). All three sub-populations persist, which means that the disease keeps spreading in the population.

The parameter values of model (17) and the initial values of S, I and R are listed in Table 1.

Example 1

Consider model (17) with the parameters in Table 1 and white noise intensities \(\sigma _{ij}=0.01\), \(i=1,2,3\), \(j=1,2\). Using MATLAB, we compute

By Theorem 4, the disease persists and system (17) has a unique ergodic stationary distribution. The sample paths of S(t), I(t) and R(t) in Fig. 1 show that all three sub-populations fluctuate around nonzero levels, which means that the disease persists in the long term.

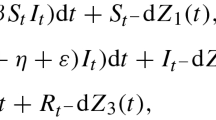

Next, we choose parameter values such that \(\lambda <0\), which indicates that the disease will die out in the long term. The only difference from Example 1 is the white noise intensity \(\sigma _{21}\): consider model (17) with \(\sigma _{11}=0.01, \sigma _{12}=0.01, \sigma _{21}=0.8, \sigma _{22}=0.01,\sigma _{31}=0.01,\sigma _{32}=0.01\), for which we obtain numerically \(\lambda \approx -0.274<0\). According to Theorem 3, I(t) tends to zero exponentially with probability one, while S(t) converges to the ergodic process \({\bar{S}}(t)\). The trajectories in Fig. 2 show that the infected and recovered populations eventually tend to zero, which implies that the disease is brought under control and stops spreading.

Fig. 2 Simulations of the solution of stochastic system (17) with white noise intensities \(\sigma _{11}=0.01, \sigma _{12}=0.01, \sigma _{21}=0.8, \sigma _{22}=0.01,\sigma _{31}=0.01, \sigma _{32}=0.01\). Both the infected and the recovered populations eventually decrease to zero, which means that the disease eventually disappears.

Fig. 3 Simulations of the solution of stochastic system (18) with white noise intensities \(\sigma _{ij}=0.01\) and jump intensities \(f_{ij}=0.01\), \(i=1,2,3\), \(j=1,2\). Susceptible, infected and recovered individuals are all persistently present.

Fig. 4 Simulations of the solution of stochastic system (18) with white noise intensities \(\sigma _{ij}=0.01\), \(i=1,2,3\), \(j=1,2\), and jump intensities \(f_{11}=f_{12}=f_{31}=f_{32}=0.01\) and \(f_{21}=f_{22}=0.8\). As time goes on, the numbers of infected and recovered individuals tend to zero, which means that the disease stops spreading and eventually disappears.

7.2 Numerical simulation with white noise and L\(\acute{e}\)vy jumps

Although an explicit expression for \(\lambda \) is not available at present, the corresponding dynamics can still be visualized by numerical simulation. We now add L\(\acute{e}\)vy jumps to study the effect of this type of noise. We first present the corresponding system (18).

Example 2

Based on the parameter values in Table 1, we set the white noise and L\(\acute{e}\)vy noise intensities to \(\sigma _{ij}=f_{ij}=0.01\), \(i=1,2,3\), \(j=1,2\). When the noise intensities are relatively small, external disturbances have only a weak effect on epidemic system (18), and the dynamics of the stochastic model are similar to those of the deterministic model. Fig. 3 shows that S(t), I(t) and R(t) are stable in the mean, which indicates that the disease persists in the long term under relatively weak noise.

We then increase the L\(\acute{e}\)vy noise intensity acting on the infected class to \(f_{21}=f_{22}=0.8\); this is the only difference from the previous simulation. The external noise now plays an important role in the transmission dynamics, and its influence on the stochastic system cannot be ignored. The trajectories in Fig. 4 show that the susceptible population remains stable on average, while both the infected and the recovered populations eventually disappear. This reflects that, under a strong external disturbance, the disease can be controlled and does not spread in the population.
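Jumps can be added to the same kind of simulation sketch. The code below is again hypothetical: it assumes a jump term \(\int _{\mathbb {U}} X(f_{i1}+f_{i2}X)\,{\tilde{N}}(dt,du)\) on each compartment X, with constant coefficients \(f_{ij}\) as in the simulations above, a compensated Poisson random measure \({\tilde{N}}\) on an assumed set \(\mathbb {U}\), and a finite L\(\acute{e}\)vy measure of total mass \(\nu (\mathbb {U})\) (the made-up parameter `nu_total`). Between jumps, the compensator contributes \(-\nu (\mathbb {U})(f_{i1}+f_{i2}X)\) to the per-capita drift; at each jump, X is multiplied by \(1+f_{i1}+f_{i2}X\). Jump counts per time step are drawn from a Poisson distribution with NumPy.

```python
import math
import numpy as np


def simulate_sir18(params, sigma, f, nu_total=1.0, x0=(4.0, 0.5, 0.1),
                   T=500.0, dt=0.01, seed=11):
    """Simulate an ASSUMED form of system (18) with constant jump coefficients.

    Compartment X_i (S, I, R) is assumed to satisfy
        dX = X*F_i dt + X*(s1 + s2*X) dB_i + X*(f1 + f2*X) dN~_i,
    where N~_i is a compensated Poisson process with rate nu_total (hypothetical).
    """
    alpha, beta, a, mu, rho, gamma = params
    rng = np.random.default_rng(seed)
    n = int(round(T / dt))
    dW = (rng.standard_normal((n, 3)) * math.sqrt(dt)).tolist()
    dN = rng.poisson(nu_total * dt, size=(n, 3)).tolist()  # jumps per step
    lnX = [math.log(v) for v in x0]
    out = np.empty((n + 1, 3))
    out[0] = x0
    for k in range(n):
        S, I, R = (math.exp(v) for v in lnX)
        F = (alpha / S - mu - beta * I / (1.0 + a * I),
             beta * S / (1.0 + a * I) - (mu + rho + gamma),
             gamma * math.exp(lnX[1] - lnX[2]) - mu)
        for i, X in enumerate((S, I, R)):
            (s1, s2), (f1, f2) = sigma[i], f[i]
            g = s1 + s2 * X
            # continuous part on the log scale, including the jump compensator
            lnX[i] += (F[i] - 0.5 * g * g - nu_total * (f1 + f2 * X)) * dt + g * dW[k][i]
            # each jump multiplies X by (1 + f1 + f2*X); assumes f1, f2 >= 0
            for _ in range(dN[k][i]):
                lnX[i] += math.log1p(f1 + f2 * math.exp(lnX[i]))
        out[k + 1] = [math.exp(v) for v in lnX]
    return out


if __name__ == "__main__":
    params = (1.0, 0.1, 0.1, 0.2, 0.05, 0.1)  # illustrative alpha, beta, a, mu, rho, gamma
    sig = ((0.01, 0.01),) * 3
    weak = simulate_sir18(params, sig, ((0.01, 0.01),) * 3, nu_total=2.0)
    strong = simulate_sir18(params, sig, ((0.01, 0.01), (0.8, 0.8), (0.01, 0.01)),
                            nu_total=2.0)
    print("mean I, weak jumps:   ", weak[len(weak) // 2:, 1].mean())
    print("final I, strong jumps:", strong[-1, 1])
```

Tracking \(\ln X\) rather than X is a design choice that keeps every compartment positive even when strong jumps drive I toward zero; it is not necessarily the discretization behind Figs. 3 and 4.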

The simulations above suggest that both white noise and L\(\acute{e}\)vy jumps can suppress the spread of the disease: when the intensity of the noise acting on the infected class (\(\sigma _{21}\), or \(f_{21}\) and \(f_{22}\)) is large enough, the disease eventually disappears.

8 Conclusion

Motivated by the pervasiveness of randomness in nature, which includes both mild noise and massive, abrupt fluctuations, this paper studies a stochastic SIR epidemic model with a general incidence rate and L\(\acute{e}\)vy jumps. We first prove that model (4) has a unique global positive solution. Then we investigate the exponential ergodicity of the corresponding one-dimensional disease-free system (7) and establish a threshold \(\lambda \), expressed in terms of the stationary distribution \({\bar{\pi }}\) of (7) and the parameters of model (4). The sign of this threshold determines whether the disease dies out or persists. Specifically, when \(\lambda <0\), the infected population tends to zero exponentially, which means that the disease eventually goes extinct, whereas when \(\lambda >0\), system (4) has an ergodic stationary distribution on \(\mathbb {R}_{+}^{2}\), which means that the disease persists.
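Because \(\lambda \) is expressed through the stationary distribution \({\bar{\pi }}\) of (7), an integral \(\int h\,d{\bar{\pi }}\) with no closed form can be approximated by a long-run time average \(\frac{1}{T}\int _0^T h(S(t))\,dt\), which converges to \(\int h\,d{\bar{\pi }}\) almost surely for an ergodic process and \({\bar{\pi }}\)-integrable h. The sketch below assumes, for illustration only, a white-noise version \(dS=(\alpha -\mu S)\,dt+S(\sigma _{11}+\sigma _{12}S)\,dB_1(t)\) of (7) with the jumps omitted; the integrand h and all parameter values are placeholders.

```python
import math
import random


def ergodic_average(h, alpha, mu, s11, s12, S0=1.0, T=2000.0, burn_in=100.0,
                    dt=0.01, seed=5):
    """Approximate the integral of h against the stationary law of an ASSUMED
    white-noise version of system (7),
        dS = (alpha - mu*S) dt + S*(s11 + s12*S) dB1,
    by a time average along one long path (ergodic theorem). Jumps are omitted.
    """
    rng = random.Random(seed)
    lnS, sq = math.log(S0), math.sqrt(dt)
    n_burn, n = int(round(burn_in / dt)), int(round(T / dt))
    total = 0.0
    for k in range(n_burn + n):
        S = math.exp(lnS)
        if k >= n_burn:  # discard the initial transient
            total += h(S)
        g = s11 + s12 * S
        # Euler-Maruyama step for ln S (Ito's formula keeps S positive)
        lnS += (alpha / S - mu - 0.5 * g * g) * dt + g * sq * rng.gauss(0.0, 1.0)
    return total / n


if __name__ == "__main__":
    # illustrative alpha = 1.0, mu = 0.2, sigma11 = sigma12 = 0.01
    print(ergodic_average(lambda s: s, 1.0, 0.2, 0.01, 0.01))  # close to alpha/mu
    print(ergodic_average(lambda s: 0.1 * s / (1.0 + 0.05 * s), 1.0, 0.2, 0.01, 0.01))
```

For \(h(s)=s\) the assumed system has stationary mean \(\alpha /\mu \), which gives a simple check of the estimator; for a nonlinear integrand such as the hypothetical \(h(s)=0.1s/(1+0.05s)\) in the second call, the same time average is one way a quantity like \(\lambda \) could be approximated numerically when \({\bar{\pi }}\) is not known explicitly.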

Since no explicit formula for the invariant measure \({\bar{\pi }}\) is available so far, \(\lambda \) cannot be written in closed form; nevertheless, the threshold still reveals a number of dynamical properties of model (4). A notable consequence of the expression for \(\lambda \) is that neither \(f_{11}(u)\) nor \(f_{12}(u)\) affects its value. In addition, the linear perturbation parameters \(\sigma _{21}\) of the white noise and \(f_{21}(u)\) of the L\(\acute{e}\)vy jumps both decrease \(\lambda \), while the second-order perturbation parameters have little effect.
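A heuristic calculation helps to see why the linear coefficients \(\sigma _{21}\) and \(f_{21}(u)\) lower \(\lambda \) while the second-order ones have little effect. Assume, for illustration only, that the infected equation of model (4) has the form in the first line below, with a general incidence \(F(S,I)\) satisfying \(F(S,0)=0\), a compensated Poisson random measure \({\tilde{N}}\) with L\(\acute{e}\)vy measure \(\nu \) on an assumed set \(\mathbb {U}\), and \(1+f_{21}(u)+f_{22}(u)I>0\). Itô's formula for jump diffusions applied to \(\ln I\) gives the second line; letting \(I\rightarrow 0\) and replacing the time average of S by an average under \({\bar{\pi }}\) suggests the third. This is a sketch under the stated form, not the exact threshold derived for model (4).

```latex
\begin{aligned}
dI &= \big[F(S,I) - (\mu+\rho+\gamma) I\big]\,dt + (\sigma_{21} I + \sigma_{22} I^{2})\,dB_2(t)
      + \int_{\mathbb{U}} \big(f_{21}(u) I + f_{22}(u) I^{2}\big)\,\tilde N(dt,du),\\
d\ln I &= \Big[\frac{F(S,I)}{I} - (\mu+\rho+\gamma) - \tfrac{1}{2}(\sigma_{21}+\sigma_{22} I)^{2}
      + \int_{\mathbb{U}} \big(\ln(1+f_{21}(u)+f_{22}(u) I) - f_{21}(u) - f_{22}(u) I\big)\,\nu(du)\Big]dt\\
&\quad + (\sigma_{21}+\sigma_{22} I)\,dB_2(t) + \int_{\mathbb{U}} \ln\big(1+f_{21}(u)+f_{22}(u) I\big)\,\tilde N(dt,du),\\
\lambda_{\mathrm{heur}} &= \int_{0}^{\infty} \frac{\partial F}{\partial I}(s,0)\,\bar\pi(ds) - (\mu+\rho+\gamma)
      - \frac{\sigma_{21}^{2}}{2} + \int_{\mathbb{U}} \big(\ln(1+f_{21}(u)) - f_{21}(u)\big)\,\nu(du).
\end{aligned}
```

Since \(\ln (1+x)-x\le 0\) for \(x>-1\), both the \(\sigma _{21}^{2}/2\) term and the jump integral can only lower \(\lambda _{\mathrm {heur}}\), while \(\sigma _{22}\) and \(f_{22}(u)\) multiply I and therefore drop out at the disease-free state.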

In our numerical simulations, the disease persists when the noise intensities are relatively small. As the noise intensity increases, the solution (S, I, R) of model (4) fluctuates more visibly, and when the noise intensity is relatively high, the numbers of infected and recovered individuals tend to zero, which indicates that the disease tends to disappear. In other words, both white noise and L\(\acute{e}\)vy jumps can suppress the outbreak of the disease.

Data availability

Data sharing is not applicable to this article as no datasets were generated or analyzed during the current study.

References

Kermack, W.O., McKendrick, A.G.: Contributions to the mathematical theory of epidemics (Part I). Proc. R. Soc. Lond. Ser. A 115, 700–721 (1927)

Dieu, N.T., Nguyen, D.H., Du, N.H., Yin, G.: Classification of asymptotic behavior in a stochastic SIR model. SIAM J. Appl. Dyn. Syst. 15, 1062–1084 (2016)

Jiang, D., Yu, J., Ji, C.: Asymptotic behavior of global positive solution to a stochastic SIR model. Math. Comput. Model. 54, 221–232 (2011)

Zhou, Y., Zhang, W., Yuan, S.: Survival and stationary distribution of a SIR epidemic model with stochastic perturbations. Appl. Math. Comput. 244, 118–131 (2014)

Liu, Z.: Dynamics of positive solutions to SIR and SEIR epidemic models with saturated incidence rates. Nonlinear Anal. Real World Appl. 14, 1286–1299 (2013)

Yang, Y., Jiang, D.: Long-time behavior of a stochastic SIR model. Appl. Math. Comput. 236, 1–9 (2014)

Brauer, F., Castillo-Chavez, C.: Mathematical Models in Population Biology and Epidemiology. Springer-Verlag, New York (2012)

Capasso, V.: Mathematical Structures of Epidemic Systems. Springer-Verlag, Berlin (1993)

Ma, Z., Zhou, Y., Wu, J.: Modeling and Dynamics of Infectious Diseases. Higher Education Press, Beijing (2009)

Hochberg, M.E.: Non-linear transmission rates and the dynamics of infectious disease. J. Theoret. Biol. 153, 301–321 (1991)

Capasso, V., Serio, G.: A generalization of the Kermack-McKendrick deterministic epidemic model. Math. Biosci. 42(1–2), 43–61 (1978)

Chong, N.S., Tchuenche, J.M., Smith, R.J.: A mathematical model of avian influenza with half-saturated incidence. Theory Biosci. 133, 23–38 (2014)

Ichihara, K., Kunita, H.: A classification of the second order degenerate elliptic operators and its probabilistic characterization. Z. Wahrsch. Verw. Gebiete 39, 81–84 (1977)

Kashkynbayev, A., Rihan, F.A.: Dynamics of fractional-order epidemic models with general nonlinear incidence rate and time-delay. Mathematics 9(15), 1829 (2021)

Huang, G., Ma, W., Takeuchi, Y.: Global properties for virus dynamics model with Beddington-DeAngelis functional response. Appl. Math. Lett. 22, 1690–1693 (2009)

Alqahtani, R.T.: Mathematical model of SIR epidemic system (COVID-19) with fractional derivative: stability and numerical analysis. Adv. Differ. Equ. 2021, 2 (2021)

Ruan, S., Wang, W.: Dynamical behavior of an epidemic model with a nonlinear incidence rate. J. Differ. Equ. 188, 135–163 (2003)

Rohith, G., Devika, K.B.: Dynamics and control of COVID-19 pandemic with nonlinear incidence rates. Nonlinear Dyn. 101, 2013–2026 (2020)

Khan, M.A., Ismail, M., Ullah, S., Farhan, M.: Fractional order SIR model with generalized incidence rate. AIMS Math. 5, 1856–1880 (2020)

Guo, K., Ma, W.B.: Global dynamics of an SI epidemic model with nonlinear incidence rate, feedback controls and time delays. Math. Biosci. Eng. 18, 643–672 (2020)

Bajiya, V.P., Bugalia, S., Tripathi, J.P.: Mathematical modeling of COVID-19: Impact of non-pharmaceutical interventions in India. Chaos 30, 113143 (2020)

Bugalia, S., Tripathi, J.P., Wang, H.: Mathematical modeling of intervention and low medical resource availability with delays: Applications to COVID-19 outbreaks in Spain and Italy. Math. Biosci. Eng. 18, 5865–5920 (2021)

Bugalia, S., Bajiya, V.P., Tripathi, J.P., Li, M.T., Sun, G.Q.: Mathematical modeling of COVID-19 transmission: the roles of intervention strategies and lockdown. Math. Biosci. Eng. 17, 5961–5986 (2020)

Tripathi, J.P., Abbas, S.: Global dynamics of autonomous and nonautonomous SI epidemic models with nonlinear incidence rate and feedback controls. Nonlinear Dyn. 86, 337–351 (2016)

Tang, Y., Huang, D., Ruan, S., Zhang, W.: Coexistence of limit cycles and homoclinic loops in a SIRS model with a nonlinear incidence rate. SIAM J. Appl. Math. 69, 621–639 (2008)

Huo, L., Jiang, J., Gong, S., He, B.: Dynamical behavior of a rumor transmission model with Holling-type II functional response in emergency event. Phys. A 450, 228–240 (2016)

Yang, Q., Jiang, D., Shi, N., Ji, C.: The ergodicity and extinction of stochastically perturbed SIR and SEIR epidemic models with saturated incidence. J. Math. Anal. Appl. 388, 248–271 (2012)

Wang, J.J., Zhang, J.Z., Jin, Z.: Analysis of an SIR model with bilinear incidence rate. Nonlinear Anal. Real World Appl. 11, 2390–2402 (2010)

Khan, M.A., Badshah, Q., Islam, S.: Global dynamics of SEIRS epidemic model with non-linear generalized incidences and preventive vaccination. Adv. Differ. Equ. 2015, 88 (2015)

Khan, M.A., Khan, Y., Islam, S.: Complex dynamics of an SEIR epidemic model with saturated incidence rate and treatment. Phys. A. 493, 210–227 (2018)

Shi, Z.F., Zhang, X.H., Jiang, D.Q.: Dynamics of an avian influenza model with half-saturated incidence. Appl. Math. Comput. 355, 399–416 (2019)

Beddington, J.R.: Mutual interference between parasites or predators and its effect on searching efficiency. J. Anim. Ecol. 44, 331–340 (1975)

DeAngelis, D.L., Goldstein, R.A., O’Neill, R.V.: A model for tropic interaction. Ecology 56, 881–892 (1975)

Mao, X., Marion, G., Renshaw, E.: Environmental Brownian noise suppresses explosions in population dynamics. Stoch. Process. Appl. 97, 95–110 (2002)

May, R.: Stability and Complexity in Model Ecosystems. Princeton University Press, Princeton (1973)

Du, N.H., Dieu, N.T., Nhu, N.N.: Conditions for permanence and ergodicity of certain SIR epidemic models. Acta Appl. Math. 160, 81–99 (2019)

Ji, C., Jiang, D., Shi, N.: The behavior of an SIR epidemic model with stochastic perturbation. Stoch. Anal. Appl. 30, 755–773 (2012)

Liu, Q., Jiang, D.: Stationary distribution and extinction of a stochastic SIR model with nonlinear perturbation. Appl. Math. Lett. 73, 8–15 (2017)

Liu, Q., Jiang, D.Q., Shi, N.Z., Hayat, T., Alsaedi, A.: Stochastic mutualism model with Lévy jumps. Commun. Nonlinear Sci. Numer. Simul. 43, 78–90 (2017)

Eliazar, I., Klafter, J.: Lévy, Ornstein-Uhlenbeck, and Subordination. J. Stat. Phys. 119, 165–196 (2005)

Bao, J., Mao, X., Yin, G., Yuan, C.: Competitive Lotka-Volterra population dynamics with jumps. Nonlinear Anal. Theory Methods Appl. 74, 6601–6616 (2011)

Zhang, X., Wang, K.: Stochastic SIR model with jumps. Appl. Math. Lett. 26(8), 867–874 (2013)

Liu, Q., Jiang, D., Shi, N., Hayat, T.: Dynamics of a stochastic delayed SIR epidemic model with vaccination and double disease driven by Lévy jumps. Phys. A. 492, 2010–2018 (2018)

Zhou, Y., Zhang, W.: Threshold of a stochastic SIR epidemic model with Lévy jumps. Phys. A. 446, 204–216 (2016)

Has’minskii, R.: Stochastic Stability of Differential Equations. Sijthoff & Noordhoff, Alphen aan den Rijn, The Netherlands (1980)

Liu, Q., Jiang, D., Hayat, T., Alsaedi, A., Ahmad, B.: A stochastic SIRS epidemic model with logistic growth and general nonlinear incidence rate. Phys. A. 551, 124152 (2020)

Yu, X., Yuan, S.: Asymptotic properties of a stochastic chemostat model with two distributed delays and nonlinear perturbation. Discrete Contin. Dyn. Syst. Ser. B 25, 2373–2390 (2020)

Wang, L., Jiang, D.: Ergodic property of the chemostat: A stochastic model under regime switching and with general response function. Nonlinear Anal. Hybrid Syst. 27, 341–352 (2018)

Xi, F.: Asymptotic properties of jump-diffusion processes with state-dependent switching. Stoch. Proc. Appl. 119, 2198–2221 (2009)

Lin, Y., Zhao, Y.: Exponential ergodicity of a regime-switching SIS epidemic model with jumps. Appl. Math. Lett. 94, 133–139 (2019)

Liptser, R.: A strong law of large numbers for local martingales. Stochastics 3, 217–228 (1980)

Kunita, H.: Itô’s stochastic calculus: its surprising power for applications. Stoch. Process. Appl. 120, 622–652 (2010)

Du, N.H., Dang, N.H., Yin, G.: Conditions for permanence and ergodicity of certain stochastic predator-prey models. J. Appl. Probab. 53, 187–202 (2016)

Has’minskii, R.: Stochastic Stability of Differential Equations. Sijthoff & Noordhoff, Alphen aan den Rijn (1980)

Mao, X.: Stochastic Differential Equations and Applications. Horwood Publishing, Chichester (1997)

Has’minskii, R.: Ergodic properties of recurrent diffusion processes and stabilization of the Cauchy problem for parabolic equations. Theory Probab. Appl. 5, 179–196 (1960)

Naik, P.A., Zu, J., Ghoreishi, M.: Stability analysis and approximate solution of SIR epidemic model with Crowley-Martin type functional response and Holling type-II treatment rate by using homotopy analysis method. J. Appl. Anal. Comput. 10, 1482–1515 (2020)

Dubey, P., Dubey, B., Dubey, U.S.: An SIR Model with Nonlinear Incidence Rate and Holling Type III Treatment Rate. In: Applied Analysis in Biological and Physical Sciences. Springer Proceedings in Mathematics & Statistics, vol. 186, pp. 63–81 (2016)

Funding

The authors thank the support of the National Natural Science Foundation of China (Grant nos. 11801566, 11871473) and the Fundamental Research Funds for the Central Universities of China (No. 19CX02059A).


Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.


About this article

Cite this article

Yang, Q., Zhang, X. & Jiang, D. Asymptotic behavior of a stochastic SIR model with general incidence rate and nonlinear Lévy jumps. Nonlinear Dyn 107, 2975–2993 (2022). https://doi.org/10.1007/s11071-021-07095-7


DOI: https://doi.org/10.1007/s11071-021-07095-7