Abstract

Data assimilation in reservoir modeling often involves model variables that are multimodal, such as porosity and permeability. Well established data assimilation methods such as ensemble Kalman filter and ensemble smoother approaches, are based on Gaussian assumptions that are not applicable to multimodal random variables. The selection ensemble smoother is introduced as an alternative to traditional ensemble methods. In the proposed method, the prior distribution of the model variables, for example the porosity field, is a selection-Gaussian distribution, which allows modeling of the multimodal behavior of the posterior ensemble. The proposed approach is applied for validation on a two-dimensional synthetic channelized reservoir. In the application, an unknown reservoir model of porosity and permeability is estimated from the measured data. Seismic and production data are assumed to be repeatedly measured in time and the reservoir model is updated every time new data are assimilated. The example shows that the selection ensemble Kalman model improves the characterisation of the bimodality of the model parameters compared to the results of the ensemble smoother.

Similar content being viewed by others

1 Introduction

The predictions of fluid flow reservoir models are generally affected by the uncertainty in the initial reservoir model of petrophysical properties, such as porosity and saturation. This model is usually built by integrating different types of data (core measurements, well logs, and surface seismic measurements) and by using geophysical data and statistical algorithms. The so-obtained static reservoir model is then used as an input for fluid flow simulators to predict the dynamic changes in fluid saturation during injection and production. However, there are several sources of uncertainties that might impact the accuracy of the static reservoir model, and as a consequence they affect the accuracy of the fluid flow predictions. Probability random fields can be used to represent the model uncertainty. When additional data become available, including production data or repeated seismic surveys, the static model is updated to reduce the initial uncertainty and improve the reservoir description (Oliver et al. 2008; Evensen 2009).

During injection and production, direct observations of the fluid saturation can be acquired at the well locations. However, this information is available only at sparse locations and cannot fully explain the local variations far away from the wells. Seismic data, repeatedly acquired at multiple times, are used to monitor the changes in seismic response during injection and production (Landrø 2001; Landrø et al. 2003; Dadashpour et al. 2010; Trani et al. 2012). The changes in fluid saturation affect the elastic response of the reservoir. Therefore, by measuring seismic and well data at different times, rock and fluid properties can be predicted by solving inverse problems. In reservoir modeling, this process is called history matching (Williams et al. 1998), which is a data assimilation problem where a set of unknown model variables are updated every time new data are available (Evensen 2009). Time-lapse seismic data have been previously used in history matching in reservoir applications (Huang et al. 1997; Landa et al. 1997; Aanonsen et al. 2003; Kretz et al. 2002; Dong et al. 2005). The integration of seismic data leads to several challenges associated with the resolution of the data and the dimensionality of the problem. In this work, the measured data include oil production and bottom hole pressure at the wells and surface seismic data, and the unknown model variables are the porosity and saturation fields, but the process can be extended to other rock and fluid properties.

Several methods have been presented to solve data assimilation problems, such as gradient-based techniques (e.g. Gauss–Newton), derivative-free optimization methods (e.g. Hooke–Jeeves direct search, genetic algorithm, and particle swarm optimization) and Monte Carlo methods (e.g. ensemble Kalman filter and particle filtering) as described in Oliver and Chen (2011). However, one of the main challenges in seismic and production data assimilation is the representation of the uncertainty. The Ensemble Kalman filter (EnKF) is a Bayesian updating method that relies on the Kalman filter predictor-corrector structure (Evensen 2009). This method has been successfully applied to several geoscience problems including subsurface monitoring (Nævdal et al. 2003; Oliver et al. 2008; Evensen 2009; Oliver and Chen 2011). Skjervheim et al. (2005) applied the EnKF approach to time-lapse seismic and production data in a three-dimensional field case.

Several variations of the ensemble approach have been presented to improve the accuracy of the predictions, including the ensemble smoother and the ensemble smoother with multiple data assimilation (Emerick and Reynolds 2013). Numerous attempts focusing on reproducing the uncertainty in the Kalman gain estimate have also been presented (Myrseth et al. 2010; Sætrom and Omre 2011). Mathematical formulations including localization, covariance inflation, and dimensionality reduction have also been tested for preventing overfitting, ensemble collapsing and spurious correlations and to make the approach applicable to practical applications, including non-linear models in large grids. However, ensemble based methods are generally based on Gaussian assumptions for the conditional distribution of the model, and therefore it is not theoretically applicable to models with multimodal random variables. A regression towards the mean occurs during the conditioning steps, thereby rendering posterior marginal distributions Gaussian. This is a challenging problem in reservoir simulation because subsurface properties, such as porosity and saturation, often show non-Gaussian distributions owing to the underlying geology. The focus on this study is on representing non-Gaussian features in the posterior ensemble of the variables of interest.

Statistical methods, such as Ensemble Randomized Likelihood (EnRML) (Chen and Oliver 2012), Gaussian anamorphosis (Bertino et al. 2003; Zhou et al. 2012), Gaussian mixture models (Dovera and Della Rossa 2011) and truncated pluri-Gaussian (Oliver and Chen 2018), have been developed to address this issue. EnRML and Gaussian anamorphosis attempt to modify the conditioning step to preserve the desirable features in the posterior ensemble, and they are compatible with any prior ensemble. Gausssian mixture and truncated pluri-Gaussian models assume specific prior distribution, and the ensemble algorithm is adapted to the prior model. Recent developments also include the indicator based data assimilation proposed in Kumar and Srinivasan (2018) and the hierarchical truncated pluri-Gaussian simulation (Silva and Deutsch 2019).

An alternative to Gaussian mixture models is the selection-Gaussian models (Arellano-Valle et al. 2006; Arellano-Valle and del Pino 2004; Omre and Rimstad 2018). These models have been applied to spatio-temporal inversion in Conjard and Omre (2020) and validated using synthetic examples. In this manuscript, the compatibility of the selection Kalman model framework detailed in Conjard and Omre (2020) is extended by integrating the selection Kalman model with the ensemble smoother. The resulting approach is named ’selection ensemble smoother’. The prior for the initial state vector is a selection-Gaussian distribution, but instead of sampling the initial ensemble directly from the selection-Gaussian distribution, the proposed approach takes advantage of the structure of the selection-Gaussian to sample from a Gaussian augmented state vector, thereby preventing unwanted regression towards the mean while keeping the computational cost to a minimum. A similar approach has been proposed for asymmetric priors using the closed skew Gaussian distribution (Naveau et al. 2005; Rezaie and Eidsvik 2014).

The proposed methodology is then tested on a two-dimensional channelized reservoir, with the goal of estimating the porosity and saturation fields based on seismic and production data. In Sect. 2, the problem is defined in a Bayesian formulation, the novel method is presented and the forward operator for the reservoir model is defined. In Sect. 3, a synthetic two-dimensional case study featuring a channelized reservoir with two facies is presented. In Sect. 4, conclusions are presented.

2 Methodology

The Methodology section describes the proposed framework for data assimilation in reservoir modeling. The Bayesian formulation of the selection ensemble smoother is first presented. Then, the forward operators for the geophysics and fluid flow modeling are summarized.

In this paper, \(f({\varvec{y}})\) denotes the probability density function (pdf) of a random variable \({\varvec{y}}\), \(\varphi _n({\varvec{y}};\varvec{\mu },\varvec{\Sigma })\) denotes the pdf of the Gaussian n-vector \({\varvec{y}}\) with expectation n-vector \(\varvec{\mu }\) and covariance \((n \times n)\)-matrix \(\varvec{\Sigma }\). Furthermore, \(\Phi _n(A;\varvec{\mu },\varvec{\Sigma })\) denotes the probability of the aforementioned Gaussian n-vector \({\varvec{y}}\) to be in \(A \subset {\mathbb {R}}^n\). Further, \({\varvec{i}}_n\) is used to denote the all-ones n-vector.

2.1 Hidden Markov Model

From a Bayesian perspective, the data assimilation can be assimilated in a hidden Markov model formulation, where at a given time the current state is updated based on the previous state and the current measurements. The state of the chain represents the values of the variables of interest. If a variable of interest is \({\varvec{r}}\) and the observable measurement is \({\varvec{d}}\), the goal of data assimilation is to predict the initial value of the variable of interest, i.e. the state of the chain \({\varvec{r}}_0={\varvec{r}}_{t=0}\) based on the data \({\varvec{d}}_t\) available at different times \(t=0,\ldots ,T\), assuming that the forward model and the likelihood function of the measurements are known.

Given the prior model of the initial state vector \({\varvec{r}}_0 \in {\mathbb {R}}^n\), the distribution of the vector of the following states \([{\varvec{r}}_{1:T+1}|{\varvec{r}}_{0}] =[{\varvec{r}}_{1} ,\ldots , {\varvec{r}}_{T+1}|{\varvec{r}}_{0}] \) is defined as

with

where \(\omega _t(\cdot )\in {\mathbb {R}}^n\) is the forward operator with random Gaussian n-vector \(\varvec{\epsilon }_t^{r}\), independent and identically distributed (iid) for each time t. The forward model defines a first-order Markov chain.

Consider, then, a set of measurements \({\varvec{d}}= \{{\varvec{d}}_1,\ldots ,{\varvec{d}}_T\}\) where \({\varvec{d}}_t \in {\mathbb {R}}^m, \forall t = 1,\ldots ,T\). It is assumed that the measured data depend on the variables of interest according to a likelihood model. The likelihood model for \([{\varvec{d}}|{\varvec{r}}]\) is conditionally independent and defined as

with

where \(\psi _t(\cdot ,\cdot )\in {\mathbb {R}}^m\) is the likelihood function with random Gaussian m-vector \(\varvec{\epsilon }_t^{d}\), iid for each t.

Let \(\Phi _t = \omega _t \circ \omega _{t-1} \circ \ldots \omega _1\) such that

then, it is possible to write \( [{\varvec{d}}_t|{\varvec{r}}_0]\) as

and consequently \([{\varvec{d}}|{\varvec{r}}_0]\) as

Equations 1 and 4 provide the framework for the EnKF, whereas Eq. 7 gives the framework for the ensemble smoother using the same hidden Markov model formulation.

2.2 Ensemble Smoother

The ensemble smoother is an inverse method that assimilates the measured data \({\varvec{d}}\) in a unique step by evaluating the likelihood \(f({\varvec{r}}_0|{\varvec{d}})\). The likelihood model described in Eq. 7 is represented as

where \(g({\varvec{r}}_0) =\begin{bmatrix} \psi _1 \circ \Phi _{0}({\varvec{r}}_0) \\ \ldots \\ \psi _T \circ \Phi _{T-1}({\varvec{r}}_0)\end{bmatrix}\) and \(\varvec{\delta } =\begin{bmatrix} \varvec{\epsilon }_1^d \\ \ldots \\ \varvec{\epsilon }_T^d\end{bmatrix}\).

In this data assimilation approach, an ensemble of \(n_e\) members \({\varvec{r}}^{p(i)}, i=1,\ldots ,n_e\) is generated from the prior distribution of the initial state \(f({\varvec{r}}_0)\) and the ensemble members are updated as

where \({\varvec{d}}^i\) represents the pseudo-data (i.e. the data predicted from the model according to the forward operator) generated for the ensemble member i as \({\varvec{d}}^{(i)} = g({\varvec{r}}^{p(i)}) + \varvec{\delta }\), and where the crosscovariance and covariance matrices, \( {\hat{\varvec{\Gamma }}}_{{r},d}\) and \( {\hat{\varvec{\Sigma }}}_{d}\), are computed as

with \(\overline{{\varvec{r}}}^{p}\) being the empirical mean from the initial ensemble and \(\overline{{\varvec{d}}}\) the empirical mean of the pseudo-data. Localization has been proposed in data assimilation problems to avoid spurious correlations and ensemble collapse (Houtekamer and Mitchell 1998; Chen and Oliver 2011). The method is based on distance-dependent functions to constrain the updates of the model variables and their variability to a specific area based on the observed data in that region. Localization should be applied on a scale larger than the correlation length of the spatial variograms of the model variables to avoid introducing biases on the spatial correlation and resolution of the model. In the case study, localization is used on both \({\hat{\varvec{\Gamma }}}_{{r},d}\) and \({\hat{\varvec{\Sigma }}}_{d}\) because, as is often the case, the size of the ensemble is small compared to the dimension of the state vector.

One of the most popular inverse methods in reservoir modeling simulation is the ensemble smoother with multiple data assimilation (ES-MDA) (Emerick and Reynolds 2013). In the MDA version of the ensemble smoother, the data are assimilated multiple times to improve convergence. This approach requires a limited number of runs of the forward operator and handles the non-linearity in the forward and likelihood models. The mathematical formulation of the MDA approach is detailed in Emerick and Reynolds (2013).

In the ensemble smoother, the prior distribution for the initial state can be chosen freely, however it is difficult to preserve non-Gaussian features in the posterior distribution owing to the linearized update (Eq. 9). The selection ensemble smoother attempts to represent non-Gaussian features in the posterior distribution.

2.3 Selection Ensemble Smoother

The selection-Gaussian pdf can represent multimodality, skewness, and heavy-tailed distributions; it is an extension of the skew-Gaussian distribution (Omre and Rimstad 2018). Consider a Gaussian \((n+n)\)-vector \([{\tilde{\varvec{r}}},\varvec{\kappa }]\)

where \(\varvec{\mu }_{{\tilde{r}}}\) and \(\varvec{\mu }_{\kappa }\) are n-vectors, \(\varvec{\Gamma }_{\kappa |{\tilde{r}}}\) is a \((n\times n)\)-matrix, and \(\varvec{\Sigma }_{{\tilde{r}}}\), \(\varvec{\Sigma }_{\kappa }\), and \(\varvec{\Sigma }_{\kappa |{\tilde{r}}}\) are three covariance \((n\times n)\)-matrices with \(\varvec{\Sigma }_{\kappa }= \varvec{\Gamma }_{\kappa |{\tilde{r}}}\varvec{\Sigma }_{{\tilde{r}}}\varvec{\Gamma }_{\kappa |{\tilde{r}}}^T+\varvec{\Sigma }_{\kappa |{\tilde{r}}}\).

If \({\varvec{r}}_0\) is defined as \({\varvec{r}}_0 = [{\tilde{\varvec{r}}}|\varvec{\kappa }\in {A}]\), where \(A \subset {\mathbb {R}}^n\) is a selection set of dimension n, then \({\varvec{r}}_0\) is distributed according to a selection-Gaussian pdf given by

The structure of the selection-Gaussian model provides a suitable model for non-Gaussian features of the posterior distribution of the inverse problem.

A selection-Gaussian random variable \({\varvec{r}}_A \in {\mathbb {R}}^n\) can be written as \([{\varvec{r}}|\varvec{\kappa } \in A]\) with \({\varvec{r}}\in {\mathbb {R}}^n\), \(\varvec{\kappa }\in {\mathbb {R}}^n\), \(A \subset {\mathbb {R}}^n\), and where \([{\varvec{r}},\varvec{\kappa }]\) is jointly Gaussian. When conditioning on data, it is always possible to consider \([{\varvec{r}},\varvec{\kappa }]\), condition first on the data \({\varvec{d}}\) and then on \(\varvec{\kappa } \in A\). Similarly when transforming \({\varvec{r}}_A : \rightarrow g({\varvec{r}}_A)\), one can show that the distribution of \(g({\varvec{r}}_A))\) is equal to that of \([g({\varvec{r}})|\varvec{\kappa } \in A]\). In any data assimilation process where a selection-Gaussian distribution is chosen as a prior, it is therefore possible to work on the augmented Gaussian vector \([{\varvec{r}},\varvec{\kappa }]\) throughout the assimilation step(s), and condition on the latent variable \(\varvec{\kappa }\) being in A afterwards. The selection Ensemble Kalman filter detailed in (Conjard and Omre 2020) adopts this approach.

The proposed selection ensemble smoother for data assimilation problems is defined by a prior distribution \(f({\varvec{r}}_0)\) that is selection-Gaussian such that \({\varvec{r}}_0= [{\tilde{\varvec{r}}}_0|\varvec{\kappa } \in A], A \subset {\mathbb {R}}^n\), and where the prior state vector is represented by the augmented vector \([{\tilde{\varvec{r}}}_0,\varvec{\kappa }]\). When assessing \([{\varvec{r}}_0|{\varvec{d}}_{1:T}]\), the selection ensemble smoother first evaluates \( [{\tilde{\varvec{r}}}_0,\varvec{\kappa }|{\varvec{d}}_{1:T}]\) and then adopts a Markov chain Monte-Carlo (MCMC) algorithm (Omre and Rimstad 2018) to calculate \([{\varvec{r}}_0|{\varvec{d}}_{1:T}] = [{\tilde{\varvec{r}}}_0|\varvec{\kappa } \in A,{\varvec{d}}_{1:T}]\). There are two advantages in using the augmented state vector. First, in ensemble methods, the update step is optimal if the prior is Gaussian and the prior and the data are jointly Gaussian. Therefore, by enforcing a Gaussian initial state vector, the optimal update conditions are ensured. Then, since the update is optimal only if the prior is Gaussian and the forward and likelihood models linear and Gaussian, the traditional update tends to make the posterior ensemble more and more Gaussian; however, conditioning on the latent variable after the assimilation is performed allows representing non-Gaussian features in the posterior distribution.

The selection ensemble smoother proceeds as follows. First, \(n_e\) ensemble members are generated

from the augmented Gaussian prior distribution of the initial state \(f({\varvec{r}}_0,\varvec{\kappa })\), and they are updated as

where \({\varvec{d}}^i\) represents the pseudo-data generated for the ensemble member i as \({\varvec{d}}^{(i)} = g({\tilde{\varvec{r}}}^{p(i)}) + \varvec{\delta }\), and where \( {\hat{\varvec{\Gamma }}}_{{{\tilde{r}}}\kappa ,d}\) and \({\hat{\varvec{\Sigma }}}_{d}\) are calculated as follows

with \(\overline{{\tilde{\varvec{r}}}}^{p}\) and \(\overline{\varvec{\kappa }}^{p}\) being the empirical means from each component of the initial ensemble and \(\overline{{\varvec{d}}}\) being the empirical mean of the pseudo-data. In the case study, localization is used on both \( {\hat{\varvec{\Gamma }}}_{{{\tilde{r}}}\kappa ,d}\) and \({\hat{\varvec{\Sigma }}}_{d}\) because the size of the ensemble is small compared to the dimension of the state vector.

After conditioning on the data, the ensemble represents \([{\varvec{r}}_0,\varvec{\kappa }|{\varvec{d}}]\) which is assumed to be Gaussian. The target distribution \(f({\varvec{r}}_0|\varvec{\kappa }\in A,{\varvec{d}})\) can be written as

Equation 20 shows how sampling from the posterior distribution is performed: first from the truncated Gaussian \(\varvec{\kappa }^* \sim [\varvec{\kappa }| \varvec{\kappa } \in A,{\varvec{d}}]\) and then from the Gaussian vector \({\varvec{r}}^* \sim [{\varvec{r}}_0|\varvec{\kappa }^*,{\varvec{d}}]\). Hence \({\varvec{r}}^*\) are distributed according to the target distribution \(f({\varvec{r}}_0|\varvec{\kappa }\in A,{\varvec{d}})\).

The challenging step is the sampling from the truncated Gaussian, which is performed using an MCMC sampling algorithm (Omre and Rimstad 2018). The MCMC algorithm, denoted by S, generates \(\varvec{\kappa }^*\)

where \({\hat{\varvec{\mu }}}_{\varvec{\kappa }|{\varvec{d}}}\) and \({\hat{\varvec{\Sigma }}}_{\varvec{\kappa }|{\varvec{d}}}\) are the empirical mean and covariance matrix of the posterior ensemble for \(\varvec{\kappa }\). Note that the selection set A is considered constant throughout the algorithm. The empirical covariance matrix estimate \({\hat{\varvec{\Sigma }}}_{\varvec{\kappa }|{\varvec{d}}}\) is known to be subject to sampling errors, which are addressed by using localization on \({\hat{\varvec{\Sigma }}}_{\varvec{\kappa }|{\varvec{d}}}\). The algorithm itself has to be tailored to the specific application as rank deficiency is to be expected in high-dimensional inverse problems. The original algorithm expected a positive definite covariance matrix, and even though localization does increase the rank of the localised matrix, it is unlikely that it becomes full rank after the transformation. Therefore, singular value decomposition is used to evaluate the pseudo inverse instead of outright inverting the matrix in the algorithm. The singular value decomposition of \({\hat{\varvec{\Sigma }}}_{\varvec{\kappa }|{\varvec{d}}}\) gives,

where \({\varvec{U}}\) and \({\varvec{V}}\) are \((n \times n)\) orthogonal matrices, and \(\varvec{\Sigma }\) is an \((n \times n)\) diagonal matrix whose diagonal entries are non-negative. The pseudo inverse \({{\hat{\varvec{\Sigma }}}_{\varvec{\kappa }|{\varvec{d}}}}^+\) of \({\hat{\varvec{\Sigma }}}_{\varvec{\kappa }|{\varvec{d}}}\) is then given by

where \(\varvec{\Sigma }^+\) is the pseudoinverse of \(\varvec{\Sigma }\), which is formed by replacing every non-zero diagonal entry by its inverse and transposing the resulting matrix.

2.4 Forward Operator

The data assimilation problem discussed in this work requires two operators: the fluid flow simulation operator used as a forward model and the geophysical operator used for the likelihood model of the measured data.

The forecast of the dynamic behavior of fluid displacement in the subsurface requires a mathematical-physical model generally called fluid flow simulation. For hydrocarbon-water two-phase flow in subsurface reservoirs, the model is based on Darcy’s equation and mass balance law and includes two partial differential equations (PDE) in two unknowns, namely water saturation \(s_\mathrm{w}(t)\) and water pressure \(p_\mathrm{w}(t)\). This model assumes that there are only two immiscible fluids, the flow is isothermal, and the capillary pressure is a function of saturation. For a system of oil and water, the PDE system can be written as follows

where k is the absolute permeability, \(\phi \) is the porosity, d is the depth, \(p_\mathrm{c}\) is the capillary pressure, \(q_\mathrm{w}\) is the water production rate, \(q_\mathrm{o}\) is the oil production rate and the constants \(k_\mathrm{w}\), \(B_\mathrm{w}\), \(\mu _\mathrm{w}\), \(\gamma _\mathrm{w}\), \(\gamma _\mathrm{w}\), \(k_\mathrm{o}\), \(B_\mathrm{o}\), \(\mu _\mathrm{o}\), and \(\gamma _\mathrm{o}\) are parameters associated with the fluid properties of oil and water. If porosity and permeability are known, then the system can be solved using finite difference methods to compute the water and saturation and pressure, \(s_\mathrm{w}(t)\) and \(p_\mathrm{w}(t)\), at any time t. The oil saturation and pressure can then be computed from water saturation and water pressure.

The permeability field is assumed to be a point-wise function of the porosity field given by Kozeny–Carman’s equation

where a is a geometrical factor estimated by fitting the true permeability field. This approximation allows removing \({\varvec{k}}\) from the state vector because it is then a known function of the porosity \(\varvec{\phi }\).

Similarly, the prediction of the geophysical response of subsurface models requires a mathematical-physical model that includes rock physics relations and seismic wave propagation models. Rock physics models allow computing P-wave and S-wave velocities and densities given the petrophysical properties, porosity, and fluid saturation. The seismic wave propagation model allows calculating the seismic response, in terms of amplitude and travel time, based on the velocities and densities. For a partially-saturated porous rock of porosity \(\phi \) and water saturation \(s_\mathrm{w}\), the density \(\rho (\phi ,s_\mathrm{w})\) can be computed as

and the P-wave and S-wave velocities, \(v_p(\phi ,s_\mathrm{w})\) and \(v_s(\phi ,s_\mathrm{w})\), are given by Raymer–Dvorkin’s relations (Dvorkin et al. 2014)

where \(\rho _\mathrm{m}\) is the density of the solid phase, \(\rho _\mathrm{w}\) is the density of water, \(\rho _\mathrm{o}\) is the density of oil, \(K_\mathrm{m}\) is the bulk modulus of the solid phase, \(G_\mathrm{m}\) is the shear modulus of the solid phase, \(K_\mathrm{w}\) is the bulk modulus of water, and \(K_\mathrm{o}\) is the bulk modulus of oil. All these parameters are assumed to be constant and known. The reflection coefficients \(r_{pp}(t, \theta )\) associated with seismic wave propagation are a function of the seismic travel time and incident angle \(\theta \) and depend on the relative change in P-wave and S-wave velocity and density. For small incident angles, the reflection coefficients can be approximated using Aki–Richards equations (Aki and Richards 1980) as

where

with c being a constant value equal to the square of the average \(v_s/v_p\) ratio.

The amplitudes \(s(t, \theta )\) of the seismogram are then approximated as a convolution of a wavelet \(w(t, \theta )\) and the reflection coefficients \(r_{pp}(t, \theta )\) as

where the wavelet \(w(t, \theta )\) is generally assumed to be known. As shown in Buland and Omre (2003), the convolution can be discretized by decomposing the matrix associated with the forward operator as \({\varvec{G}}=\varvec{WAD}\), where \({\varvec{W}}\) is the wavelet matrix, \({\varvec{A}}\) is the reflection coefficient matrix, and \({\varvec{D}}\) is the first order differential matrix.

In the current implementation, we adopted a forward model based on the convolution of the wavelet with the linearized approximation of Zoeppritz equations (Shuey 1985; Aki and Richards 1980) because it is mathematically tractable, and the computational cost is limited. The proposed approach can be extended to other forward operations including convolutional models based on full Zoeppritz equations, Kennett’s invariant imbedding method and the Born weak scattering approximation (Zoeppritz 1919; Kennett 1984; Russell 1988; Sheriff and Geldart 1995; Yilmaz 2001; Aki and Richards 1980). In theory, the same formulation can also be applied to the full waveform model for wave propagation, however, the computational cost of the approach is still prohibitive for field-scale applications. Applications of ensemble-based methods to full waveform inversion problems have been proposed Thurin et al. (2019) and Gineste et al. (2020), according to Gaussian assumptions of the error and model variables.

3 Application

The proposed method is applied to a two-dimensional synthetic case to demonstrate the validity of the selection ensemble smoother (S-ES) and the advantages compared to the traditional ensemble smoother (ES).

The synthetic example mimics the production of hydrocarbon in a reservoir by water injection as shown in Liu and Grana (2018, 2020). The geology of the reservoir includes four channels in the north-south direction with an average porosity of 0.2. The channels are surrounded by shale with effective porosity close to 0. It is assumed that the entire reservoir is filled by oil with an irreducible water saturation of 0.2. Water is injected in four wells and oil is produced from six wells. All the wells are located within the channels. Fluid flow occurs predominantly within the channels due to the low porosity and permeability of the surrounding shale, and the injected water displaces the oil in place towards the producing wells.

The reservoir model mimics the two-phase (oil and water) flow due to water injection and oil production in a geological system represented in a two-dimensional uniform grid of \(n=64 \times 64\) cells of dimension \(40 \times 40 \times 25\) m. Figure 3 shows the true porosity \(\varvec{\phi }_r\) and permeability \({\varvec{k}}_r\) fields. The reference porosity model is generated using the following geostatistical modeling approach: First, a facies model for a channelized system is generated using object modeling with randomly sampled parameters of the channel geometry (length, width, and tortuosity). Then a porosity model is generated using sequential Gaussian simulation within each facies using facies-dependent prior distributions to mimic medium-high porosity within the channels and low porosity in the background shale. Finally, permeability is simulated in the logarithmic domain, conditioned on the porosity field using sequential Gaussian co-simulation. Figure 3 shows the geostatistical realization used as the reference (true) model for the application. Figure 3 also shows the locations of the four water injection wells \((i_1,i_2,i_3,i_4)\) and the six producer wells \((p_1,\ldots ,p_6)\).

Fluid flow is then simulated to create the reference (true) saturation maps for a time period of 12 years using the Matlab Reservoir Simulation Toolbox (MRST) (Lie 2019). Figure 4 shows the evolution of the water saturation in the field for the reference porosity and permeability field specified in Fig. 3. The initial water saturation \({\varvec{s}}_{w0}\) is set constant to 0.2, then water propagates predominantly within the channels until it reaches, after 12 years, one of the producers. Figure 5 shows the bottom hole pressure (BHP) and the oil production rate (OPR) every six months at the injector and producer wells, respectively.

In addition to the saturation and production data, a synthetic geophysical dataset is generated for the data assimilation problem. To generate seismic data, two additional geological layers representing non-permeable shale formations are added above and below the reservoir model. The model then includes three layers (top shale, reservoir, and bottom shale) and two interfaces (top reservoir and bottom reservoir). Seismic data are then generated using the rock physics model with constant mineral properties in sand and shale and the seismic convolutional operator. The seismic data are calculated for two incident angles of \(8^{\circ }\) (near) and \(24^{\circ }\) (far). Figure 6 shows the seismic data computed 12 years after injection and production started. Because the reservoir properties are assumed to be vertically homogeneous within the model, two main reflections are generated in the seismic response, at the top and at the bottom of the reservoir. For this reason, for each trace, the dimension of the data is \(4 \times 1\) (two angles and two measurements per angle), the dimension of the vector of elastic variables is \(9 \times 1\) (three properties and three locations per property), and the dimension of the vector of porosity and initial saturation is \(6 \times 1\) (two properties and three locations per property). The seismic operator is represented by a matrix \({\varvec{G}}={\varvec{W}}{\varvec{A}}{\varvec{D}}\) of dimensions \(4\times 9\) (\({\varvec{W}}\) is \(4\times 4\), \({\varvec{A}}\) is \(4\times 6\), \({\varvec{D}}\) is \(6\times 9\)) and the rock physics model is a system of 3 equations (one for each elastic property) with two unknowns (porosity and saturation).

The focus is on the prediction of the porosity field assumed to be spatially variable but constant in time, and the water saturation field before and after the experiment. Following the formalism in Eq. 8, all the measured data, production and seismic are saved in a vector \({\varvec{d}}\) that contains OPR and BHP measurements every six months for twelve years and the seismic amplitudes measured after twelve years. Porosity and initial water saturation are stored in \({\varvec{r}}_0\). The forward model f generates the model predictions according to the discretization of Eqs. 24, 25, 30 and 34 . The measured data are perturbed by adding white noise represented by the Gaussian error term \(\varvec{\delta }\) with standard deviation 1, \(\sqrt{2}/2\) for BHP and OPR respectively. The seismic amplitudes have a signal to noise ratio of approximately 2.5.

In this case study, data assimilation is performed using two methods: S-ES and ES. Figure 2 displays a flowchart summarizing the data assimilation procedure. For the S-ES, the prior distribution of the porosity field \(\varvec{\phi }\) is defined on log-scale as \(f(\log (\varvec{\phi }))\) and is selection-Gaussian with parameters

The parameters \((\gamma ^{\phi },A)\) are chosen so that the prior marginal is bimodal, with modes approximately matching the average porosity values of the two facies in the reference field. The spatial correlation (\(n\times n\))-matrix \(\varvec{\Sigma }_{\cdot }^{\rho }\) is defined by the second order exponential spatial correlation function \(\rho (\tau ;d_x,d_y) = \exp {[-(\frac{\tau _x^2}{d_x^2}+\frac{\tau _y^2}{d_y^2})]}\). The parameters values are listed in Table 1. The chosen values for \(d_x\) and \(d_y\) assume longer correlation along the y-axis than along the x-axis. The prior distribution for the initial water saturation field is Gaussian centered at \(\varvec{\mu _{sw}} = 0.2\times {\varvec{i}}_n\) with covariance matrix \(\varvec{\Sigma }_{sw} = 0.0005*\varvec{\Sigma }_{{\tilde{r}}}^{\rho }\), where \(\varvec{\Sigma }^{\rho }_{{\tilde{r}}}\) is the covariance matrix of the porosity field.

An initial ensemble \({\varvec{e}}_0 = \{ {\varvec{r}}_0^{p(i)}, i =1,\ldots ,n_e\}\) of size \(n_e= 500\) is generated. Covariance localization (Gaspari and Cohn 1999) is used for the conditioning. The localization was chosen restrictive enough to preserve an acceptable rank in the estimated covariance matrix, thereby preventing ensemble collapse. The mean of the realizations and the marginal distributions are shown in Fig. 7. The spatial stationarity is shown in the maps, whereas the marginal distribution is displayed by the histograms, which show the bimodality of the selection-Gaussian prior distribution for porosity.

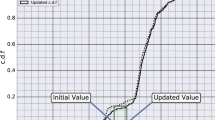

The posterior distributions of porosity and water saturation are then computed using the S-ES method. Figure 8 shows the posterior mean for the porosity field and the water saturation field after 12 years obtained by the S-ES. Comparing the posterior mean of the porosity field to the reference porosity field, it can be observed that channels are correctly identified with good accuracy. The predicted water saturation field is smoother than the reference water saturation, but the overall pattern is aptly reproduced. Figure 9 shows the prediction and \(95\%\) confidence interval for porosity and water saturation along a vertical and horizontal line of the two-dimensional field. Even though the uncertainty is slightly underestimated, the S-ES captures the characteristics of the variations of the porosity and water saturation fields. Inflation was not adopted to show the uncertainty quantification of the methods, but the use of inflation factors might mitigate the underestimation in the uncertainty quantification. Figure 10 shows realizations from the posterior distribution of the porosity field obtained with the S-ES. The channels are correctly predicted with some uncertainties on the boundaries. Figure 11 shows the spatial histograms of the predicted porosity field and water saturation field obtained with the S-ES and compares them the reference values. The marginal maximum a posteriori (MMAP) is used as a predictor due to the marginal bimodality of the posterior ensemble. The spatial histogram of the mean porosity field shows an accurate match with the reference porosity field, whereas the water saturation spatial histogram is slightly smoother than the references one. Both findings are consistent with the analysis of Fig. 8. Figures 12 and 13 show the predicted OPR and BHP for the S-ES respectively, showing a good agreement with the true data.

For the traditional ES approach, the prior ensemble is generated assuming that the prior distributions for the log-porosity and initial water saturation fields are Gaussian. The prior distribution for the porosity field is defined as \(f(\log (\varvec{\phi })) \sim \varphi _n(\log (\varvec{\phi }), \varvec{\mu }_{r},\sigma _r^2\times \varvec{\Sigma }_{{r}}^{\rho }),\) where \(\varvec{\mu }_{r} = -2.5 {\varvec{i}}_n\) and \(\sigma _r^2=0.2\) are chosen such that the marginal prior distribution approximates the spatial histogram of the reference porosity field. The covariance matrix \(\varvec{\Sigma }_{{r}}^{\rho }\) and the prior distribution for the initial water saturation field are the same as in the S-ES case. Figure 14 shows the results for the posterior ensemble for the porosity field and water saturation field obtained using the ES. The posterior mean of the porosity field seems to identify the channels but the porosity values are underestimated within the channels. The predicted water saturation is much smoother than the reference water saturation field and the prediction from the S-ES. The spatial histogram of the mean porosity field shows that the ES fails to capture the bimodal nature of the porosity field, while the spatial histogram of the water saturation field severely underestimates the number of locations where saturation remains equal to the initial value of 0.2 and overpredicts saturation in several locations. By analyzing the predictions of porosity and water saturation along the horizontal and vertical lines, the ES prediction fails to capture the sharp changes, even though the coverage of the confidence intervals is satisfactory. Figures 15 and 16 display the predicted OPR and BHP for the ES, showing a better match for OPR and a worse match for BHP compared to the S-ES results.

The two methods are compared using different statistics: the mean absolute error (MAE), the correlation, and the 80% coverage probability are considered. Let \(\text {MAE}_{\phi }\) and \(\text {corr}_{\phi }\) define, respectively, the MAE and the correlation between the predicted porosity field and the reference porosity field. Further, let \(\text {MAE}_{sw}\) and \(\text {corr}_{sw}\) define, respectively, the MAE and the correlation between the predicted water saturation field and the reference water saturation at the final time step. The 80% coverage probability is calculated for the predicted porosity field. The results are detailed in Table 2. The MAE measures for the ES are about 30% higher than those of the S-ES. The correlations for the porosity are comparable. The correlation for the water saturation for the S-ES is about 10% higher than that of the ES. The 80% coverage probability seems to indicates the ES underestimates the uncertainty more than the S-ES overestimates it. Overall these statistics are favorable to the S-ES. The sensitivity to the data does not seem to cause over-fitting. The use of multiple data assimilation and iterative methods could improve the data match for both the ensemble smoother and selection ensemble smoother. The S-ES has a higher computational demand than the ES because the posterior distribution needs to be sampled. On a \(64\times 64\) grid, it takes a few minutes to generate 200 samples, which is considered acceptable in view of the results. When the dimension of the data is very large, such as for field case reservoir models defined on 3D grids with millions of nodes, the matrix \({\hat{\varvec{\Sigma }}}_{d}\) in Eqs. 9 and 15 is too large to be stored, let alone inverted. In that case, one could propose a parametric approach where the covariance is defined by a few model parameters and maximum likelihood estimation is used to estimate those parameters (Skauvold and Eidsvik 2019). Alternatively, a methodological development along the lines of the parametric Kalman filter could be considered (Pannekoucke et al. 2016).

4 Conclusions

Seismic and production data assimilation is performed to predict the spatial distribution of porosity and water saturation using a novel method named ’selection ensemble smoother’, a Monte Carlo ensemble method that extends the ensemble smoother to selection-Gaussian models. By using a selection-Gaussian prior and an augmented initial state vector, the posterior distribution can represent multimodal posterior distributions. The main advantage of the selection ensemble smoother is the use of the augmented state vector which makes possible conditioning on the latent variable after data assimilation. The posterior distribution is then sampled assuming that the model is selection-Gaussian, thereby allowing multimodal features in the posterior distribution. The design of the experiment is optimal for the selection ensemble smoother approach since the channelized system leads to a bimodal porosity distribution. The results from the selection ensemble smoother are promising since the predictions are accurate and the data predictions match the measurements. The limited band-with of the seismic measurements, which limits the resolution of the data, prevents the inversion from clearly identifying the non-stationarity in the porosity field but still allows the selection ensemble smoother method to select the right mode, whereas the ensemble smoother results regress towards the mean.

References

Aanonsen SI, Aavatsmark I, Barkve T, Cominelli A, Gonard R, Gosselin O, Kolasinski M, Reme H et al (2003) Effect of scale dependent data correlations in an integrated history matching loop combining production data and 4D seismic data. In: Society of Petroleum Engineers, SPE reservoir simulation symposium

Aki K, Richards P (1980) Quantitative seismology: theory and methods. W. H. Freeman & Co, San Francisco

Arellano-Valle R, del Pino G (2004) From symmetric to asymmetric distributions: a unified approach. In: Genton M (ed) Skew-elliptical distributions and their applications: a journey beyond normality. Chapman & Hall / CRC, New York, pp 113–133

Arellano-Valle RB, Branco MD, Genton MG (2006) A unified view on skewed distributions arising from selections. Can J Stat 34(4):581–601

Bertino L, Evensen G, Wackernagel H (2003) Sequential data assimilation techniques in oceanography. Int Stat Rev 71(2):223–241

Buland A, Omre H (2003) Bayesian linearized AVO inversion. Geophysics 68(1):185–198

Chen Y, Oliver DS (2011) Localization of ensemble-based control-setting updates for production optimization. SPE J 17(01):122–136

Chen Y, Oliver DS (2012) Ensemble randomized maximum likelihood method as an iterative ensemble smoother. Math Geosci 44(1):1–26

Conjard M, Omre H (2020) Data assimilation in spatio-temporal models with non-Gaussian initial states-the selection ensemble Kalman model. Appl Sci 10(17):5742

Dadashpour M, Echeverria Ciaurri D, Mukerji T, Kleppe J, Landrø M (2010) A derivative-free approach for the estimation of porosity and permeability using time-lapse seismic and production data. J Geophys Eng 7(4):351–368

Dong Y, Oliver DS et al (2005) Quantitative use of 4D seismic data for reservoir description. SPE J 10(01):91–99

Dovera L, Della Rossa E (2011) Multimodal ensemble Kalman filtering using Gaussian mixture models. Comput Geosci 15(2):307–323

Dvorkin J, Gutierrez MA, Grana D (2014) Seismic reflections of rock properties. Cambridge University Press, Cambridge

Emerick AA, Reynolds AC (2013) Ensemble smoother with multiple data assimilation. Comput Geosci 55:3–15

Evensen G (2009) Data assimilation: the ensemble Kalman filter. Springer, Berlin

Gaspari G, Cohn S (1999) Construction of correlation functions in two and three dimensions. Q J R Meteorol Soc 125(554):723–757

Gineste M, Eidsvik J, Zheng Y (2020) Ensemble-based seismic inversion for a stratified medium. Geophysics 85(1):29–39

Houtekamer PL, Mitchell HL (1998) Data assimilation using an ensemble Kalman filter technique. Mon Weather Rev 126(3):796–811

Huang X, Meister L, Workman R et al (1997) Reservoir characterization by integration of time-lapse seismic and production data. In: SPE annual technical conference and exhibition, 38695, Society of Petroleum Engineers

Kennett BLN (1984) Guided wave propagation in laterally varying media, I. Theoretical development. Geophys J Int 79(1):235–255

Kretz V, Ravalec-Dupin L, Roggero F, et al (2002) An integrated reservoir characterization study matching production data and 4D seismic. In: SPE annual technical conference and exhibition, 77516, Society of Petroleum Engineers

Kumar D, Srinivasan S (2018) Ensemble-based assimilation of nonlinearly related dynamic data in reservoir models exhibiting non-Gaussian characteristics. Math Geosci 51:75–107

Landa J L, Horne R N, et al. (1997) A procedure to integrate well test data, reservoir performance history and 4-D seismic information into a reservoir description. In: SPE annual technical conference and exhibition, 38653, Society of Petroleum Engineers

Landrø M (2001) Discrimination between pressure and fluid saturation changes from time-lapse seismic data. Geophysics 66(3):836–844

Landrø M, Veire HH, Duffaut K, Najjar N (2003) Discrimination between pressure and fluid saturation changes from marine multicomponent time-lapse seismic data. Geophysics 68(5):1592–1599

Lie KA (2019) An introduction to reservoir simulation using MATLAB/GNU Octave: User guide for the MATLAB reservoir simulation toolbox (MRST). Cambridge University Press, Cambridge

Liu M, Grana D (2018) Ensemble-based seismic history matching with data reparameterization using convolutional autoencoder. In: SEG technical program expanded abstracts 2018, Society of Exploration Geophysicists, pp 3156–3160

Liu M, Grana D (2020) Time-lapse seismic history matching with an iterative ensemble smoother and deep convolutional autoencoder. Geophysics 85(1):15–31

Myrseth I, Omre H et al (2010) Hierarchical ensemble Kalman filter. SPE J 15(02):569–580

Nævdal G, Johnsen L M, Aanonsen S I, Vefring E H, et al. (2003) Reservoir monitoring and continuous model updating using ensemble Kalman filter. In: SPE annual technical conference and exhibition, 84372, Society of Petroleum Engineers

Naveau P, Genton MG, Shen X (2005) A skewed Kalman filter. J Multivar Anal 94(2):382–400

Oliver D, Chen Y (2018) Data assimilation in truncated Plurigaussian models: impact of the truncation map. Math Geosci 50:867–893

Oliver DS, Chen Y (2011) Recent progress on reservoir history matching: a review. Comput Geosci 15(1):185–221

Oliver DS, Reynolds AC, Liu N (2008) Inverse theory for petroleum reservoir characterization and history matching. Cambridge University Press, Cambridge

Omre H, Rimstad K (2018) Bayesian spatial inversion and conjugate selection Gaussian prior models. arXiv preprint arXiv:1812.01882

Pannekoucke O, Ricci S, Barthelemy S, Ménard R, Thual O, Tellus A (2016) Parametric Kalman filter for chemical transport models. Dyn Meteorol Oceanogr 68(1):31547

Rezaie J, Eidsvik J (2014) Kalman filter variants in the closed skew normal setting. Comput Stat Data Anal 75:1–14

Russell B H (1988) Introduction to seismic inversion methods. Society of Exploration Geophysicists

Sætrom J, Omre H (2011) Ensemble Kalman filtering with shrinkage regression techniques. Comput Geosci 15(2):271–292

Sheriff RE, Geldart LP (1995) Exploration seismology, 2nd edn. Cambridge University Press, Cambridge

Shuey RT (1985) A simplification of the Zoeppritz equations. Geophysics 50(4):609–614

Silva D, Deutsch C (2019) Multivariate categorical modeling with hierarchical truncated pluri-Gaussian simulation. Math Geosci 51:527–552

Skauvold J, Eidsvik J (2019) Parametric spatial covariance models in the ensemble Kalman filter. Spatial Stat 29:226–242

Skjervheim J A, Evensen G, Aanonsen S I, Ruud B O, Johansen T A, et al. (2005) Incorporating 4D seismic data in reservoir simulation models using ensemble Kalman filter. In: SPE annual technical conference and exhibition, 95789, Society of Petroleum Engineers

Thurin J, Brossier R, Métivier L (2019) Ensemble-based uncertainty estimation in full waveform inversion. Geophys J Int 219(3):1613–1635

Trani M, Arts R, Leeuwenburgh O et al (2012) Seismic history matching of fluid fronts using the ensemble Kalman filter. SPE J 18(01):159–171

Williams M, Keating J, Barghouty M et al (1998) The stratigraphic method: a structured approach to history matching complex simulation models. SPE Reserv Eval Eng 1(02):169–176

Yilmaz Ö (2001) Seismic data analysis: processing, inversion, and interpretation of seismic data. Society of Exploration Geophysicists

Zhou H, Li L, Franssen HJ, Gomez-Hernandez J (2012) Pattern recognition in a bimodal aquifer using the normal-score ensemble Kalman filter. Math Geosci 44:1–17

Zoeppritz K (1919) On the reflection and propagation of seismic waves. Gottinger Nachrichten 1(5):66–84

Acknowledgements

Authors acknowledge the Uncertainty in Reservoir Evaluation (URE) activity at the Norwegian University of Science and Technology (NTNU), the School of Energy Resources and the Department of Geology and Geophysics of the University of Wyoming for their support. Authors acknowledge Mingliang Liu (University of Wyoming) for developing the case study, Henning Omre (NTNU) for the helpful comments and suggestions, and SINTEF for providing the Matlab Reservoir Simulation Toolbox MRST.

Funding

Open access funding provided by NTNU Norwegian University of Science and Technology (incl St. Olavs Hospital - Trondheim University Hospital).

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Conjard, M., Grana, D. Ensemble-Based Seismic and Production Data Assimilation Using Selection Kalman Model. Math Geosci 53, 1445–1468 (2021). https://doi.org/10.1007/s11004-021-09940-2

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11004-021-09940-2