Abstract

Linear response theory, originally formulated for studying how near-equilibrium statistical mechanical systems respond to small perturbations, has developed into a formidable set of tools for investigating the forced behaviour of a large variety of systems, including non-equilibrium ones. Mathematically rigorous derivations of linear response theory have been provided for systems obeying stochastic dynamics as well as for deterministic chaotic systems. In this paper we provide a new angle on the problem. We study under which conditions it is possible to perform predictions of the response of a given observable of a forced system, using, as predictors, the response of one or more different observables of the same system. This allows us to bypass the need to know all the details of the acting perturbation. Thus, we break the rigid separation between forcing and response, which is key in linear response theory, and revisit the concept of causality. We find that that not all observables are equally good as predictors when a given forcing is applied. In fact, the surrogate Green function one constructs for predicting the response of an observable of interest using a “bad” observable as predictor has support that is not limited to the nonnegative time axis. We explain the mathematical reasons behind the fact that an observable is an inefficient predictor. We derive general explicit formulas that, in absence of such pathologies, allow one to reconstruct the response of an observable of interest to N independent external forcings by using as predictors N other observables, with \( N\geq 1 \). We provide a thorough test of the theory and of the possible pathologies by using numerical simulations of the paradigmatic Lorenz’96 model. Our results are potentially relevant for problems like the reconstruction of data from proxy signals, like in the case of paleoclimate, and, in general, the analysis of signals and feedbacks in complex systems where our knowledge on the system is limited, as in neurosciences. Our technique might also be useful for reconstructing the response to forcings of a spatially extended system in a given location by looking at the response in a separate location.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Response theory provides a powerful array of methods for predicting how the statistical properties of a system change when one or more of its parameters are changed or when new forcings are added to the system. The usefulness of the theory comes from the fact that the response operators can be constructed by considering suitably defined statistical properties of the unperturbed system. This feature is particularly prominent in the case of systems with N degrees of freedom possessing invariant measure in the unperturbed state that is absolutely continuous with respect to the corresponding Lebesgue measure in N dimension. This is the case of deterministic equilibrium physical systems, whose evolution is governed by Hamiltonian dynamics and, consequently, the volume of the phase space is preserved, and in stochastically perturbed dynamical systems. In the case of full invariant measure, the Fluctuation–Dissipation theorem allows one to construct response operators from a suitably defined correlation functions in the unperturbed state [1,2,3].

Things are more difficult in the case of deterministic chaotic systems whose dynamics is governed by equations of motions featuring, on the average, a contraction of the phase space. In such systems, which can be thought as providing good models of non-equilibrium statistical mechanical systems, the fluctuation–dissipation theorem cannot be readily applied because there is no correspondence between forced and free fluctuations. This was clarified by Ruelle, who also showed that, regardless of such a complication, it is possible to construct a rigorous response theory for Axiom A dynamical systems [4,5,6]. The response theory has been later recovered using methods based on the transfer operator formalism [7], and extended to more general dynamical systems than Axiom A ones [8]. We remark that Axiom A dynamical systems can be considered of general physical relevance despite their rather specific mathematical properties than to the chaotic hypothesis [9], and the predictions of linear response theory have been verified numerically for systems that are not strictly Axiom A [10,11,12,13].

The lack of correspondence between forced and free fluctuations makes a careful study of the response theory extremely relevant. In the case of climate, response theory has been shown to be a very useful tool for predicting climate change [14, 15] and for critically assessing geoengineering practices [16]. The prediction is performed by constructing response operators for each observable of interest (for one or more forcings) through a set of suitably planned test experiments, and then use such operators for predicting the response to a different time pattern of the same forcing. This approach is promising in the case one has full control of the system under investigation, thus acting is a sort of real or virtual lab, where forcing can be changed at pleasure, experiments can be repeated, and suitable observables can be measured with a good degree of precision. The direct construction of the response operator faces the extremely hard computational challenge of having to deal with contributions coming from the effects of the perturbations on the stable and on the unstable manifold of the unperturbed system, which have entirely different properties in terms of convergence [17].

Straightforward applications of the fluctuation–dissipation theorem in a chaotic system like the climate have had various degrees of success (see e.g. [18, 19]). The problems mostly come from the fact that if we try to reconstruct the forced response from the free fluctuations we commit an error (due to the properties of the stable manifold of the system) which is hard to bound and which depends in a very nontrivial way on the specific choice of the applied forcing and of the observable of interest. In a recent paradigmatic analysis performed on a simplified climate model, it has been shown that whereas for a certain forcing the fluctuation–dissipation relation leads to a fairly good estimate of the response operator, for another choice of the forcing its skill is negligible. In this second circumstance, the response of the perturbed system can be interpreted as featuring climatic surprises, in the form of weather patterns that are entirely absent in the unperturbed system [20].

Other authors have instead taken the point of view of stochastic dynamics: in this case, linear response theory can be shown to hold under very weak assumptions, as the noise takes care of regularizing a lot of the pathologies that can be encountered as a result of deterministic dynamics [21]. This has been taken as starting point to further derive the validity of linear response theory for high-dimensional deterministic chaotic systems, by considering stochastic limits for macroscopic observables [22]. The latter point of view seems quite relevant in many complex systems where one, instead of looking at the details of the microscopic dynamics, is de facto interested in studying coarse grained variables and effective, coarse grained dynamics, which is stochastic as a result of the action of the hidden, subscale variables [23,24,25,26,27]. See also the rather comprehensive and informative review given in [28].

In this paper we want to explore a rather different direction, namely we would like to relate the response of different observables of the system to the applied perturbation. The goal is to understand to what extent it is possible to use one or more forced signals as surrogates for the actual external forcing, and then develop a response theory for predicting the response for an observable of interest based on such surrogates. The reason for looking at the response from this angle lies in the fact that whereas in the case of a direct problem we are usually knowledgeable of the specific properties of the system (including its evolution equations) and of the nature and spatio-temporal pattern of the applied forcing, this is not true when considering an inverse problem where we need to interpret data and have a possibly moderate control or knowledge of the time pattern of the applied forcing, but, in fact, we might access to multiple observables on top of the one we are specifically interested into. Information on the observable we want to study might be less abundant or subject to uncertainties or experimental errors.

The former situation is quite relevant in the case we are able to prepare and observe a system in a lab, and are able to operate on it by changing some of its parameters and the applied forcings. This is the classical Gedankenexperiment for which the usual response theory is relevant. The latter situation applies when our control and knowledge of the system is inherently limited, i.e. we can have access to information to a (possibly small) subset of the degrees of freedom of the system, and we might suffer from the presence of unavoidable uncertainties or gaps in the available data. Some examples of such conditions are found in neurosciences and in geosciences.

Let’s expand on the specific case of geosciences, as it has provided the initial inspiration for this work. While, mathematically, one can often deal with a generic, well-behaved, observable, some observables are definitely more meaningful than other ones, as in the obvious case of energy when considering physical systems. Additionally, in the case of geosciences, where collecting observations and modelling the dynamics of systems is far from being trivial, our ability to observe and/or to suitably model some observables—possibly of great relevance—can be substantially more limited than for other observables. These observables can be quite different in terms of characteristic temporal (and possibly spatial) scales of variability, as when comparing atmospheric versus oceanic fields.

Such issues are particularly relevant when considering the problem of paleoclimatic reconstruction performed by using proxy data. In this case, one has access to time series (with a varying degree of uncertainty) of measurements of physical and chemical properties of natural recorders of climate variability and change (e.g. tree rings, sediments, air bubble inside ice cores, etc.). The goal is to find an approximate functional relationship between what we can actually measure—the proxy data—and what we are interested into –climate variables such as temperature, humidity, etc. [29].

Additionally, finding functional relationships between the response of different observable to climate change (‘emergent constraints”) and testing them against observations has been proposed as a way to assess the quality of model and reduce uncertainty on the climatic observables we have harder time to model accurately [30,31,32].

Moreover, our aim is to provide a basis for improving and extending the classical theory of feedbacks, which assumes instantaneous relations between the response of different climatic variables to forcings or, in the opposite limit, assumes that slow variables unidirectionally modulate the response of fast ones [33]. In some cases, the effect of an external forcing on a specific variable of interest is studied by looking at how it is mediated by the response of other variables and, in turn, on their influence of the variable of interest. Let’s give an example: usually, in the climate literature it is said that, in global warming conditions, the increase of global precipitations is caused by the increase in the average atmospheric temperatures. In turn, roughly speaking, because of the Clausius-Clapeyron relation, this leads to an increased capability of the atmosphere to retain water vapour [34]. Instead, both the increase of the atmospheric temperature and of the global precipitation are caused by the increase of CO2 concentration, which is the real forcing applied to the system. Nonetheless, the statement makes indeed physical sense, as a result of the time scales of the involved processes. Another more specific example: it is generally thought that under global warming conditions one might expect stronger (but less frequent for a complex set of reasons) hurricanes mostly as a result of higher surface temperatures of the tropical oceans [35]. In order to test such a hypothesis—and isolating the effect of the surface temperature change on the statistical properties of hurricanes—a typical (yet now somewhat outdated) strategy relies on performing simulations where, instead of using coupled global climate models with prescribed forcing due to changes in the CO2 concentration, one uses atmospheric general circulation models having as boundary conditions prescribed warmer sea surface temperature in the desired region [36].

We will try to provide a formal treatment of these problems based upon the linear response theory as discussed above, and we will try to define under which conditions the choice of an observable is suboptimal (or not so) in terms of the information it conveys. Can we distinguish between good observables, usable for predicting the response of other observables, and less useful ones? How do the time scales of the response of each observable to the applied forcing impact such property of bearing potential predictability? This entails defining, within a system, a (possibly, approximate) chain of causality associated to the forcing between different observables, or, in the case of spatially extended systems, between different regions of the system.

As mentioned above, response theory has been successfully used in the context of climate problems and we hope to provide an additional contribution in this direction, even if our treatment is more general and applications can be foreseen in other areas of science as well.

The paper is organised as follows. In Sect. 2 we provide a rapid summary of the formalism of response theory, presenting it in the context of deterministic dynamical systems. We will always assume that the chaotic hypothesis [9], so that we can assume, de facto, that the system is close enough (for our purposes) to being uniformly hyperbolic. Nonetheless, all the subsequent results and considerations apply a fortiori in the case of rather general stochastic systems, where the conditions for the applicability of response theory are quite relaxed, as mentioned above. Section 3 provides the basic example detailing how one can use the response of one generic observable to forcing as a predictor of the response of another generic observable, partly bypassing the need to know all the details of the forcing. Explicit formulas are derived by using complex analysis and linear algebra, under assumptions—discussed later—which are needed for performing such operations. Section 4 provides the generalisation of what shown in Sect. 3 to the case where N forcings are applied to the system. In this case, one needs the knowledge of the response of N generic observables to be able to predict the response of an observable of interest. Section 5 details the fundamental limitations of what discussed before. Not in all cases the operation is practically possible, as the functional relations one finds in the frequency space do not always lead to usable, causal Green functions for performing the predictions. Section 6 provides some examples of numerical experiments performed using the now classical Lorenz 96 model [37] to support our results. In Sect. 7 we present our conclusions and ideas for future investigations.

2 Response Theory Formalism

Let’s consider a smooth autonomous chaotic continuous-time dynamical system acting on a smooth compact manifold \( {\mathcal{M}} \) evolving from an initial condition \( x_{0} \) at time \( t = 0 \). We define \( x\left( {t,x_{0} } \right) = \varPi^{t} \left( {x_{0} } \right) \) its state at a generic time \( t \), where \( \varPi^{t} \) is the of evolution operator. Let’s now consider the evolution for a time \( t \) of a general observable \( O\left( x \right) \). We define the Koopman operator \( S^{t} \) operating on the observable \( O \) as \( S^{t} O = O \circ \varPi^{t} \), so that \( S^{t} \left( {O\left( {x_{0} } \right)} \right) = O\left( {\varPi^{t} \left( {x_{0} } \right)} \right) \) [38]. The corresponding set of differential equations can be customarily written as

where \( F\left( y \right) = d/d\tau \left. {\varPi^{\tau } \left( y \right)} \right|_{\tau = 0} \). The evolution of the measure \( \rho \) driven by the dynamical system given in Eq. (1a) is described by the Perron-Frobenius operator [38]. The operator \( {\mathcal{L}}^{t} \) defining the forward evolution of the measure for a time \(t\) is the adjoint of the operator \( S^{t} \), and is defined by \( \left\langle {{\mathcal{L}}^{t} \rho ,{\text{O}}} \right\rangle \equiv \left\langle {\rho ,{\text{S}}^{t} {\text{O}}} \right\rangle \), where \( \left\langle {\mu ,O} \right\rangle = \mathop \smallint \nolimits \mu (dx)O(x) \) indicates the expectation value of the observable O according to the measure \( \mu \). The family of operators \( \left\{ {{\mathcal{L}}^{t} } \right\}_{t\, \ge \,0} \), forms a one-parameter semigroup, such that \( {\mathcal{L}}^{t + s} = {\mathcal{L}}^{t} {\mathcal{L}}^{s} \) and \( {\mathcal{L}}^{0} = 1 \). The same applies for the family of operators \( \left\{ {S^{t} } \right\}_{t \ge 0} \). The invariant measure \( \rho_{0} \) of the dynamical system given in Eqs. (1a, 1b) is the eigenvector with eigenvalue 1 for all \( \left\{ {{\mathcal{L}}^{t} } \right\}_{t \ge 0} \).

Assuming strong continuity and boundedness of the semigroup given by \( \left\{ {{\mathcal{L}}^{t} } \right\}_{t \ge 0} \), we can introduce the Liouville operator L, such that \( {\mathcal{L}}^{t} = \exp \left( {tL} \right) \). Assuming that the measure is smooth with respect to Lebesgue, so that we can write \( \left\langle {\rho ,O} \right\rangle = \mathop \smallint \nolimits dx \rho (x)O(x), \) we can write the Liouville equation for the evolution of the density \( \rho \) mirroring the set of differential equations in Eq. (1a):

We now perturb the autonomous dynamics given in Eqs. (1a, 1b) by one specific forcing, so that the system is modified as follows:

where \( G_{1} (x) \) is the forcing and \( e_{1} \left( t \right) \) is its time modulation. Having in mind the example of climate change, one may think \( G_{1} (x) \) as the extra radiative forcing due to the anomaly of CO2 concentration, with \( e_1(t) \) defining its time-pattern; see [10, 11]. Assuming—as mentioned above—the chaotic hypothesis, response theory says that for any sufficiently smooth (e.g. C3) observable \( \varPsi_{1} (x) \) the (time-dependent) change in the expectation value with respect to the unperturbed case can be written in linear approximation as

where \( \varGamma_{{\varPsi_{1} ,G_{1} }} \left( \tau \right) \) is the (causal) Green function. \( \varGamma_{{\varPsi_{1} ,G_{1} }} \left( \tau \right) \) can be written in terms of the properties of the unperturbed flow and depends explicitly on \( \varPsi_{1} ,G_{1} \). Using Ruelle’s response theory, we have:

where \( \varTheta \left( t \right) \) is the Heaviside’s distribution. Note that in the case of a system possessing an invariant measure that is smooth with respect to Lebesgue, so that \( \rho_{0} \left( {dx} \right) = \rho_{0} \left( x \right)dx \), we can write:

where \( L_{{G_{1} }} \), if \( e_{1} \left( t \right) = 1 \), is the perturbation to the Liouville operator on the right hand side of Eq. (1b) due to the introduction of the extra forcing described by \( {\text{G}}_{1} (x) \). Equation (3c) provides a rather general form of the fluctuation–dissipation theorem.

Note that Eqs. (2)–(3a, 3b, 3c) indicate that the time-dependent version of the Ruelle response theory can be used for practically constructing the time-dependent measure defining the pullback attractor [39,40,41,42] of the non-autonomous system given in Eq. (2). The results can be extended beyond linear approximation [3, 43] and applies for small (in principle, infinitesimal) forcings. Response theory becomes of little utility when one is near critical transitions [44, 45], which can be seen as phase transitions for—in general—non-equilibrium systems.

After taking the Fourier Transform of Eq. (3a), we obtain:

where \( \widetilde{\varGamma }_{{\varPsi_{1} ,G_{1} }} \left( \omega \right) \) is usually referred to as susceptibility. We remark that any \( \widetilde{\varGamma }_{\varPsi ,G} \left( \omega \right) \), can, under suitable assumptions, be written in general as:

where \( \sigma_{k} \) are—for all choices of \( \varPsi ,G \)—the eigenvalues of the Liouvillian operator \( L \) introduced in Eq. (1b), while the factors \( \alpha_{k} \) depend on the choice of \(\Psi\) and \(G\) and are constructed by computing the projection of response operator on the corresponding eigenvectors. The constants \( \pi_{k} = i\sigma_{k} \) are usually referred to as Ruelle-Pollicott poles [7, 46, 47]. If we consider smooth enough observables, causality implies that \( \pi_{k} \) do not have positive imaginary component, i.e. \( {\Im }\left[ {\sigma_{k} } \right]\, = \,{\Re }\left( {\pi_{k} } \right)\, < \,0 \forall k \) [3,4,5]. As a result, the functions \( \widetilde{\varGamma }_{\varPsi ,G} \left( \omega \right) \) are analytic in the upper complex \( \omega \) plane (and have a meromorphic extension on a strip including the real axis in the lower complex \( \omega \) plane), which leads to the fact the functions \( \widetilde{\varGamma }_{\varPsi ,G} \left( \omega \right) \) obey Kramers–Kronig relations [48] for all choices of smooth \( \varPsi ,G \) [4,5,6, 43]. The presence of at least one Ruelle-Pollicott pole, say \( \pi_{1} , \) with real part very close to zero is associated to slow decay of correlations with time t of the form \( \approx \exp \left[ {{\Re }\left( {\pi_{1} } \right)t} \right] \) in the unperturbed system and, as first discussed in [49], can lead to breakdown of linear response for small forcings; see also a detailed analysis in the case of finite dimensional Markov chains in [50]. As recently clarified in [44, 45], such a condition can be considered in some cases as an effective flag for anticipating critical transitions.

One should note that the expression for the susceptibility given in Eq. (5) mirrors very closely the quantum expressions for the electric susceptibility for e.g. atoms or molecules. In this latter case, the summation involves all the pairs of the eigenstates of the unperturbed Hamiltonian operator, of the system, and in each term of the summation the imaginary part of the poles corresponds to the energy difference between the pair of considered eigenstates, the real part is the so-called line width of the transition (whose inverse is the life time), and the numerator is the so-called dipole strength for the considered transition [51]. The analytic properties of the electric susceptibility function can be associated to the fact that the system is energetically passive.

At practical level, it is important to underline that the formal similarities between linear response theory for equilibrium and nonequilibrium systems discussed in [46] allow in principle one to use algorithms developed for the analysis of electronic systems such as Vector Fitting (VF) [52] and the more advanced RKFIT [53] for the analysis of the response of multiple observables to perturbations for a general nonequilibrium system and, in particular for estimating the Ruelle–Pollicott resonances.

3 Observables as Predictors and Predictands: Causality, Locality, and Memory Effects

Now, let’s consider a second observable \( \varPsi_{2} (x) \). Repeating the derivation as in Eq. (4) above, we find:

By taking the ratio of Eqs. (4) and (6), it is easy to derive that

where this relation holds regardless of the time pattern of the forcing \( G_{1} \), but by just assuming its presence (i.e. \( e_{1} \left( t \right) \) cannot be identically zero). The previous equation can now be written as

where

or, in the time domain, after taking the inverse Fourier Transform, as

which, in general, is not local in time, but indeed linear. We refer to \( \widetilde{\text{H}}_{{2,1,G_{1} }} \left( \omega \right) \) as the surrogate susceptibility and to \( {\text{H}}_{{1,2,G_{1} }} \left( \tau \right) \) as the surrogate Green function. One can clearly exchange the roles of \( \varPsi_{1} \) and \( \varPsi_{2} \) and derive

where, clearly, \( \widetilde{\text{H}}_{{1,2,G_{1} }} \left( \omega \right) = 1/\widetilde{\text{H}}_{{2,1,G_{1} }} \left( \omega \right) \). We underline that the surrogate Green functions \( \widetilde{\text{H}}_{{1,2,G_{1} }} \left( \omega \right) \) and \( \widetilde{\text{H}}_{{2,1,G_{1} }} \left( \omega \right) \) depend on the specific choice of the forcing \( G_{1} \). Next, we find an expression of the integration kernels \( {\text{H}}_{{2,1,G_{1} }} \left( t \right) \) and \( {\text{H}}_{{1,2,G_{1} }} \left( t \right) \) in order to understand, e.g., whether one of the two observables can be seen as precursor of the other.

The first remark is that both functions \( \widetilde{\varGamma }_{{\varPsi_{1} ,G_{1} }} \left( \omega \right) \) and \( \widetilde{\varGamma }_{{\varPsi_{2} ,G_{1} }} \left( \omega \right) \) are analytic in the upper complex \( \omega \) plane, and so is their ratio, unless \( \widetilde{\varGamma }_{{\varPsi_{1} ,G_{1} }} \left( \omega \right) \) and \( \widetilde{\varGamma }_{{\varPsi_{2} ,G_{1} }} \left( \omega \right) \) possess complex zeros located there. For the moment we assume that no complex zeros are found in the upper complex \( \omega \) plane; we will study in Sect. 5 the important implications of relaxing such an assumption.

In the rest of Sect. 3, we make some assumptions on the functional form of \( \widetilde{\text{H}}_{{1,2,G_{1} }} \left( \omega \right) \) in order to derive explicit formulas that can be used to provide a more intuitive physical interpretation to our results. Nonetheless, the main findings of the paper do not depend on the approximations taken below.

If we neglect for the moment the essential spectrum of the operator L in Eq. (1b), and using Eq. (5), we propose to approximate \( \widetilde{\text{H}}_{{2,1,G_{1} }} \left( \omega \right) \) with a generic rational function of the form

where we can consider arbitrarily large values of N and M, and where all the \( \omega_{j} \)’s and \( \upsilon_{k} \)’s are—in general—distinct. Because of analyticity, all the constants \( \upsilon_{k} \)’s have negative imaginary part. Obviously, by the same token, also all the poles of \( \widetilde{\text{H}}_{{1,2,G_{1} }} \left( \omega \right)\, = \,1/\widetilde{\text{H}}_{{2,1,G_{1} }} \left( \omega \right) \) must be in the upper complex \( \omega \) plane, so that all the constants \( \omega_{j} \)’s must also have negative imaginary part. Note that Eq. (11) implies that for real values of \( \omega \) much larger in absolute value that the largest in absolute value among the \( \omega_{j} \)’s and \( \upsilon_{k} \)’s, we have \( \widetilde{\varGamma }_{{\varPsi_{1} ,G_{1} }} \left( \omega \right) \sim \widetilde{\varGamma }_{{\varPsi_{2} ,G_{1} }} \left( \omega \right)\omega^{N - M} \), so that \( \omega^{N - M} \) must correspond to the ratio between the asymptotic behaviours of the two susceptibilities. We can then express \( \widetilde{\text{H}}_{{2,1,G_{1} }} \left( \omega \right) \) as follows:

where we perform the standard division of polynomials, so that \( \widetilde{S}_{{2,1,G_{1} }} \left( \omega \right) \) is a polynomial of order \( N - M \) (\( {\text{if}}\,N \ge M \)) while \( \widetilde{R}_{{2,1,G_{1} }} \left( \omega \right) \) is of order strictly smaller than \( \widetilde{Q}_{{2,1,G_{1} }} \left( \omega \right) \). Clearly, \( \widetilde{S}_{{2,1,G_{1} }} \left( \omega \right) = 0 \) if \( N < M \). A standard partial fraction expansion gives us the following expression for \( \widetilde{\text{K}}_{{2,1,G_{1} }} \left( \omega \right) \)

where \( \alpha_{k} = \widetilde{P}_{{2,1,G_{1} }} \left( {\upsilon_{k} } \right)\left( {\left. {\frac{{d\widetilde{Q}_{{2,1,G_{1} }} \left( x \right)}}{dx}} \right|_{{x = \upsilon_{k} }} } \right)^{ - 1} \) and \( \delta_{i,j} \) is the standard Kronecker’s delta which is nonvanishing only when the two arguments are equal. We remark that the constant \( \upsilon_{k} \) have, in general, nothing to do with the Ruelle-Pollicott poles \( \pi_{k} = i\sigma_{k} \) defined above, because here we are constructing the properties of a constrained dynamics where one of the observables acts as surrogate forcing applied to the other one.

We recall that the inverse Fourier transform of \( \left( { - \,i\omega } \right)^{p} \) is \( \delta^{p} \left( t \right) \), where \( \delta^{p} \left( t \right) \) indicates the pth derivative of the Dirac’s \( \delta \) distribution, and we define: \( \widetilde{S}_{{1,2,G_{1} }} \left( \omega \right) = \mathop \sum \limits_{l = 0}^{N - M} \gamma_{l} \left( { - \,i\omega } \right)^{l} \). We also recall that, assuming that \( \upsilon_{k} \) has a negative imaginary part, the inverse Fourier transform of \( 1/\left( {\omega - \upsilon_{k} } \right) \) is \( \varTheta \left( t \right)\exp \left[ { - \,i\upsilon_{k} t} \right] \). We then obtain:

where we have separated the singular contribution \( S_{{2,1,G_{1} }} \left( \tau \right) \) to the Green function from the non-singular one, given by \( {\text{K}}_{{2,1,G_{1} }} \left( \tau \right) \). After performing the convolution integral shown in Eq. (9), we derive:

The terms in the first summation provide a local (in time) link between \( \delta \left\langle {\varPsi_{1} } \right\rangle^{\left( 1 \right)} \) and \( \delta \left\langle {\varPsi_{2} } \right\rangle^{\left( 1 \right)} \). The remaining terms are due to the non-singular component of the Green function and provide the non-local (in time) contribution, where memory effects are relevant. We recall that, asymptotically, the decay of the memory is controlled by the pole with the smallest (in absolute value) real part. At finite time, the coefficients \( \alpha_{k} \) contribute to determining which terms of the previous summation are dominant.

As discussed above, we have that \( \widetilde{\text{H}}_{{1,2,G_{1} }} \left( \omega \right) = 1/\widetilde{\text{H}}_{{2,1,G_{1} }} \left( \omega \right) \). Therefore, if the integration Kernel \( \widetilde{\text{H}}_{{2,1,G_{1} }} \left( \omega \right) = \widetilde{\text{K}}_{{2,1,G_{1} }} \left( \omega \right) \) contains purely non-local terms—e.g. if N < M discussed above, see Eqs. (12)–(13)—then we have that necessarily \( \widetilde{\text{H}}_{{1,2,G_{1} }} \left( \omega \right)\left( \tau \right) \) will be, instead, of the form given in Eq. (15), with non-vanishing contributions from the Dirac’s delta and its derivatives:

where we have used new symbols for the coefficient in front of the various terms and have inserted the appropriate poles \( \omega_{k} \)’s in the last summation. Note that we should not expect the set of poles \( \omega_{k} \) and \( \upsilon_{k} \) to be identical, and this reflects into the fact that different observables retain differently the properties of the memory of the system.

In this case, there is a clear difference between the two observables \( \delta \left\langle {\varPsi_{2} } \right\rangle^{\left( 1 \right)} \) and \( \delta \left\langle {\varPsi_{1} } \right\rangle^{\left( 1 \right)} \), because local (in time) information is relevant only in one direction of the inference. Nonetheless, it is important to note that inference is possible from any pair of observables, in both directions. Furthermore, we note that, in this case also \( \delta \left\langle {\varPsi_{1} } \right\rangle^{\left( 1 \right)} \left( t \right) \) also plays the role of a surrogate forcing, because it appears in the convolution integral. The memory terms in Eq. (16) drop out when \( \widetilde{P}_{{2,1,G_{1} }} \left( \omega \right) \) in Eq. (11) is a constant. This is in general realised when \( \widetilde{\text{K}}_{{2,1,G_{1} }} \left( \omega \right) \) has a constant numerator.

A clearer symmetry is established between \( \delta \left\langle {\varPsi_{1} } \right\rangle^{\left( 1 \right)} \) and \( \delta \left\langle {\varPsi_{2} } \right\rangle^{\left( 1 \right)} \) when N = M. In this case \( \delta \left\langle {\varPsi_{2} } \right\rangle^{\left( 1 \right)} \left( t \right) \) is given by a term proportional to \( \delta \left\langle {\varPsi_{1} } \right\rangle^{\left( 1 \right)} \left( t \right) \) plus a non-local term depending on \( \delta \left\langle {\varPsi^{\left( 1 \right)} } \right\rangle \left( t \right) \) at previous times, and the same reversing the applies reversing the roles of the two observables, even if the non-local rest term is clearly different in the two cases, compare Eqs. (15) and (16) setting N = M (in this case \( \varphi_{0} = 1/\gamma_{0} \)). In general, since the poles \( \omega_{k} \) and \( \upsilon_{k} \) are in general different, the time scales over which the memory acts in the two directions of prediction are in general different.

4 Generalizing the Results for More Complex Patterns of Forcing

We now wish to generalise the results presented above by treating the case where multiple independent forcings are applied to the system. The price to pay will be, as shown below, the need for considering multiple observables as predictors. Let’s consider the case of a more complex pattern of perturbations added to the system:

where \( G_{1} (x) \) and \( G_{2} \left( x \right) \) are non-parallel vector fields modulated by two different time patterns \( e_{1} \left( t \right) \) and \( e_{2} \left( t \right) \). We now consider three independent observables \( \varPsi_{1} \), \( \varPsi_{2} \), and \( \varPsi_{3} \), whose change in the expectation value due to the introduction of the forcing can be written as:

Following the same approach as before, we would like to be able to construct an effective equation for expressing the change in one of the observables as a function of the change in the other ones. Without loss of generality, we want to find a relationship

where we have slightly simplified the notation for matters of readability. We now substitute the right hand side of Eqs. (18a, 18b, 18c) in (19) and obtain:

Since \( e_{1} \left( \omega \right) \) and \( e_{2} \left( \omega \right) \) are arbitrary, we derive:

which gives:

As all the susceptibility functions are analytic in the upper complex a \( \omega \) plane, if one excludes complex zeros in the upper complex a \( \omega \) plane for the denominator in the fist factor on the right hand side of Eq. (22), one derives that also \( {\text{H}}_{3,1} \left( \omega \right) \) and \( {\text{H}}_{3,2} \left( \omega \right) \) are also analytic in the same region. We then derive the following integro-differential equation describing the time evolution of \( \delta \left\langle {\varPsi_{3} } \right\rangle^{\left( 1 \right)} \left( t \right) \) as a function of the past and present state of \( \delta \left\langle {\varPsi_{2} } \right\rangle^{\left( 1 \right)} \left( t \right) \) and \( \delta \left\langle {\varPsi_{1} } \right\rangle^{\left( 1 \right)} \left( t \right) \):

where \( {\text{H}}_{{{\text{i}},{\text{j}}}} \left( t \right) = {\text{S}}_{{{\text{i}},{\text{j}}}} \left( t \right) + {\text{K}}_{{{\text{i}},{\text{j}}}} \left( t \right) \), with \( {\text{S}}_{{{\text{i}},{\text{j}}}} \left( t \right) \) indicating the singular component of the integration kernel and \( {\text{K}}_{{{\text{i}},{\text{j}}}} \left( t \right) \) the non-singular one. Equation (23), similarly to Eq. (10), has the remarkable feature of applying regardless of the time patterns \( e_{1} \left( t \right) \) and \( e_{2} \left( t \right) \), which may be hard to access in many applications. We remark that, instead, \( \widetilde{\text{H}}_{3,1} \left( \omega \right) \) and \( \widetilde{\text{H}}_{3,2} \left( \omega \right) \) do depend on the vector fields \( G_{1} \left( x \right) \) and \( G_{2} \left( x \right) \).

The problem can be generalized to the case where N independent forcings are applied:

and we wish to express the response of one observable \( \delta \left\langle {\varPsi_{N + 1} } \right\rangle^{\left( 1 \right)} \) as a linear combination of the response of other N observables as follows:

By expressing \( \delta \left\langle {\widetilde{{\varPsi_{k} }}} \right\rangle^{\left( 1 \right)} \left( \omega \right) = \sum\nolimits_{l = 1}^{K} {\widetilde{\varGamma }_{{\varPsi_{k} ,G_{l} }} \left( \omega \right)\widetilde{e}_{l} \left( \omega \right)\,} {\text{for}}\,k = 1, \ldots ,N + 1,{\text{ where}}\,\,\widetilde{\varGamma }_{{\varPsi_{k} ,G_{l} }} \left( \omega \right) \) is the susceptibility of the observable \( \varPsi_{k} \) subjected to the forcing \( G_{l} \left( x \right) \), and inserting this in Eq. (24), we derive by equating all terms with the same factor \( \widetilde{e}_{l} \left( \omega \right),l = 1, \ldots ,N: \)

which gives as final solution:

which defines the N surrogate susceptibilities of the system. By applying the inverse Fourier transform to \( \widetilde{\text{H}}_{N + 1,k} \left( \omega \right),k = 1, \ldots ,N, \) we can generalise Eq. (23) as follows:

Equations (10), (23), and (28) provide, in increasing level of generality, a comprehensive way for reconstructing the response of one observable of the system when one knows the response of other observables. As of the derivation above, the theory seems extremely flexible, as, in principle, no constraints exist in the choice of the predictor(s) and of the predictand. An important—in fact, essential—caveat is reported in Sect. 5 below.

5 Remarks on the Effects of the Presence of Complex Zeros in the Effective Susceptibility Functions

Let’s give a new, critical look at Eqs. (8)–(10). Assume now that \( \widetilde{\varGamma }_{{\varPsi_{2} ,G_{1} }} \left( \omega \right) \) has a complex zero in the upper complex \( \omega \) plane. The occurrence of complex zeros is a very non-trivial property of complex functions, see [54], and has extremely relevant impacts in standard optical retrieval techniques [48]. This amounts to saying that \( \varPsi_{2} \) can have a zero response to specific bounded, physically realisable time patterns \( e_{1} \left( t \right) \) for the vector field \( G_{1} \left( x \right) \), with the ensuing result that some information on response of the system is lost if one looks at it through the lens of \( \delta \left\langle {\varPsi_{2} } \right\rangle^{\left( 1 \right)} \left( t \right) \). As a result, the surrogate susceptibility \( \widetilde{\text{H}}_{{2,1,G_{1} }} \left( \omega \right) \) is not analytic in the same domain. This marks a clear departure from the standard case investigated in response theory.

As a result, the surrogate Green function \( {\text{H}}_{{2,1,G_{1} }} \left( \tau \right) \) includes also non-causal contributions, so that its support is not limited to the non-negative subset of the real axis. This implies that in this case the knowledge of the past value of \( \delta \left\langle {\varPsi_{1} } \right\rangle^{\left( 1 \right)} \left( t \right) \) is not sufficient for predicting the future values of \( \delta \left\langle {\varPsi_{2} } \right\rangle^{\left( 1 \right)} \), or, in other terms \( \delta \left\langle {\varPsi_{1} } \right\rangle^{\left( 1 \right)} \left( t \right) \) is an imperfect predictor of the predictand \( \delta \left\langle {\varPsi_{2} } \right\rangle^{\left( 1 \right)} \left( t \right) \). One can say that the reduced system we are studying does not have a purely passive nature, but is instead also active, as a result of unstable feedbacks. Whereas response theory applies for all sufficiently well-behaved observables and cannot rule out the existence of susceptibility functions featuring complex zeros in the upper complex \( \omega \) plane, the procedure outlined here, given the presence of a specific forcing with spatial patterns \( G_{1} \left( x \right) \), allows one to discriminate between observables featuring or not predictive power on the future state of other observables.

Any prediction must be based only on the past data of the predictor. Therefore, in the case \( {\text{H}}_{{2,1,G_{1} }} \left( \tau \right) \) includes non-causal components, we perform predictions using the modified surrogate Green function:

where \( K^{\prime}_{{2,1,G_{1} }} \left( \tau \right) = \varTheta \left( \tau \right)K_{{2,1,G_{1} }} \left( \tau \right) \). Note that the Heaviside distribution takes care of enforcing causality.Footnote 1 In the case we are dealing with a problem where we need to reconstruct a signal (as in the case of proxy data in geosciences) rather than predict it, this problem is less relevant as the non-causal component of the surrogate Green function can also be used.

The same problems described here for the simple case of one forcing and one observable could emerge, a fortiori, in the case of N forcings and N observables, because the algebraic operations involved in constructing the inverse of the matrix in Eq. (30) can cause the emergence of poles in the upper complex \( \omega \) plane for one or more of the surrogate susceptibilities \( \widetilde{\rm K}_{k,1} \left( \omega \right) \). As a result, we have to replace in any actual prediction the integral kernel \( {\text{K}}_{{{\text{k}},1}} \left( \tau \right) \) with its causal component \( K^{\prime}_{k,1} \left( \tau \right) \), as described above. We will show below a case where, instead, using two observables as predictors (thus taking advantage of Eq. 23) cures the pathology associated with the presence of complex zeros in the susceptibility of one of such observables.

The fact that not all choices of predictors and predict ands are equally fortunate, makes, in fact, perfect intuitive sense, as implied in the classical concept of feedback on a system. What we show above is a mathematical characterization of such an intuition: when suitable predictors are chosen for predict and, the non-causal component of the integral kernel will be as small as possible (or even identically zero).

6 Numerical Experiments

In order to test the performance and explore potential pitfalls of the methodology proposed in this paper, we consider the now classic and widely used Lorenz 96 model [37]. This is a forced and dissipative model that represents metaphorically the main processes—advection, dissipation, and forcing—occurring in a latitudinal circle of the atmosphere. The model has rapidly become a standard testbed for many mathematical methods in geophysics [55,56,57,58], and most notably for data assimilation and parametrization schemes, and has recently gained relevance in the context of dynamical systems theory and statistical mechanics [12, 59,60,61]. In its simpler, one-level—version, the model is defined as follows:

with periodic boundary conditions \( x_{ - 1} = x_{N - 1} ,x_{0} = x_{N} ,\,{\text{and}}\,x_{1} = x_{N + 1} . \) One usually assumes \( \upsilon = 1 \) and \( F_{k} = F, \forall k = 1, \ldots ,N \). The first term on the right hand side describe the nonlinear advection, the second term describes the dissipation, and the last term describes the forcing. In the inviscid and unforced case \( \left( {\upsilon = 0\,{\text{and}}\,F = 0} \right) \), the total energy of the system \( E = \sum\nolimits_{k = 1}^{k = 1} {x_{k}^{2} /2} \) is a conserved quantity (but it is not the generator of the time translations, see [62]). When \( \upsilon = 1 \), the model exhibits chaotic behaviour and intensive properties for \( F \ge 6 \) and \( N \ge 20 \), approximately [61]. We select for our simulations the classical values of \( F = 8 \) and \( N = 36 \), which put the system well within the chaotic regime. We perform all of our simulations below taking advantage of the MATLAB2017™ software package and using an adaptive Runge–Kutta 4th order time integrator with absolute and relative precision of \( 10^{ - 8} \).

We first run a very long simulation of the unperturbed system lasting 106 time units from a random initial condition. We discard the data coming from the first 103 time units of the simulation, in order to remove transient effects and be safely within the steady state regime. We then consider—separately—the following perturbation vector fields:

-

\( G_{1,k} \left( x \right) = 1,\forall k = 1, \ldots ,N, \) corresponding to a change in the constant forcing;

-

\( G_{2,k} \left( x \right) = - x_{k} \forall k = 1, \ldots ,N, \) corresponding to a change in the viscosity.

We investigate the response of the system in terms of changes in the expectation value of the observables \( \phi_{j} = 1/N\sum\nolimits_{k = 1}^{N} {x_{k}^{j} /j} ,{\text{ j}} = 1,{ 2},{ 3} \); where, in particular, \( \varPsi_{1} \) is usually referred to as momentum of the system, and \( \varPsi_{2} \) is, as mentioned before, the energy of the system. In order to do so, we need to construct the Green functions \( \varGamma_{{\phi_{j} ,G_{k} }} \left( \tau \right) \) for all the \((j,k)\) combinations of observables and applied perturbations.

Following the definition given in Eq. (3b), the Green functions need to be estimated by taking an average over the unperturbed invariant measure. We approximate it by using 100,000 ensemble members, each chosen every 10 time units of the unperturbed run described above. We then proceed as follows. For each member of the ensemble, we introduce the perturbative vector field \( G_{1} \left( x \right) \) above with the time pattern \( e_{1} \left( t \right) = \varepsilon_{1} /2\delta \left( t \right) \), then \( e_{1} \left( t \right) = - \,\varepsilon_{1} /2\delta \left( t \right) \), (where \( \delta \left( t \right) \) is the Dirac’s delta) and take the difference of the two signals for each considered observable \( \phi_{j} , \) for j = 1,2,3. We compute the ensemble average of the signals and derive \( \varGamma_{{\phi_{j} ,G_{1} }} \left( \tau \right) \), for j = 1,2,3. We use \( \varepsilon_{1} = 1 \): while not being small, we have verified that such a choice sets us well within the range of linearity. Note that by computing the linear response as a centred difference, we eliminate the quadratic nonlinear term, as discussed in [20]. We then repeat the same procedure, using the same ensemble members, for the perturbative vector field \( G_{2} \left( x \right) \), and evaluate \( \varGamma_{{\phi_{j} ,G_{2} }} \left( \tau \right) \) by computing the semidifference between the response obtained the time pattern \( e_{2} \left( t \right) = \varepsilon_{2} /2\delta \left( t \right) \) and \( e_{2} \left( t \right) = - \varepsilon_{2} /2\delta \left( t \right) \), We choose here \( \varepsilon_{2} = 0.1 \), which, similarly, is well within the range of linearity.

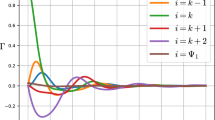

The Green functions obtained according to this procedure are presented in Fig. 1, where we show that the characteristic response time of the perturbation to the viscosity \( \upsilon \) and to the forcing F are comparable for all observables, as to be expected from Eq. (5). As quite intuitive from physical arguments and from the shape of the Green function, increasing the forcing has the effect of increasing the expectation value of the three considered observables, whereas the opposite holds for the viscosity.

All Green functions analysed here have non-vanishing values in the limit of \( t \) going to zero from positive values. Along the lines of [13], by using Eq. (3c) and the definition of \( \phi_{j} \), we can derive the following results:

See Table 1 for the numerical estimates of the expectation value of the observables \( \phi_{j} , j = 1,2,3 \) in the unperturbed state.

Following [13], we derive that at leading order, for large real values of \( \omega \):

We then proceed to constructing the functions \( \widetilde{\text{H}}_{{{\text{i}},{\text{j}},G_{k} }} \left( \omega \right) \) as defined in Eq. (8). As all the considered susceptibilities have the same asymptotic behaviour for large values of \( \omega \), all the functions \( \widetilde{\text{H}}_{{{\text{i}},{\text{j}},G_{k} }} \left( \omega \right) \) converge asymptotically for large values of \( \omega \) to a constant. One finds:

This leads to the presence of singular contributions \( {\text{S}}_{{{\text{i}},{\text{j}},G_{k} }} \left( \tau \right) \) in the corresponding integration kernels \( {\text{H}}_{{{\text{i}},{\text{j}},G_{k} }} \left( \tau \right) \). The singular contributions are in the form of Dirac’s deltas and have the following expression:

We then shift our attention to the non-local component of the surrogate Green functions, for which it is harder to derive explicit results. We first treat the case of perturbations to the forcing F of the system and we show in Fig. 2 the functions \( {\text{K}}_{{{\text{i}},{\text{j}},G_{1} }} \left( \tau \right) \), \( {\text{i}},{\text{j}} = 1,2,3. \) The surrogate Green functions have a slightly less trivial structure than those depicted in Fig. 1, as more complex features appear, but still broadly conform to the case of representing an exponential decay modulated by oscillations. We find that in this case it is possible to reconstruct the response of each observable from the knowledge of the present (see Table 1) and past (see Fig. 2) state of any other observable. In other terms, one can treat, e.g., \( \phi_{3} \) as surrogate forcing for \( \phi_{1} \), and viceversa. As discussed above, the fact that, e.g., both \( {\text{K}}_{{1,3,G_{1} }} \left( \tau \right) \) and \( {\text{K}}_{{3,1,G_{1} }} \left( \tau \right) \) are non-vanishing proves that the neither acts as a true external forcing.

We now consider the case of perturbations to the viscosity \( \upsilon \) of the system and we analyze the functions \( {\text{K}}_{{{\text{i}},{\text{j}},G_{2} }} \left( \tau \right) \), \( {\text{i}},{\text{j}} = 1,2,3 \). We find (Fig. 3) that the two functions \( {\text{K}}_{{2,1,G_{2} }} \left( \tau \right) \) and \( {\text{K}}_{{3,1,G_{2} }} \left( \tau \right) \) have a substantial non-causal component, and an extremely slow decay of correlations for positive times. The presence of a non-causal component must result from the existence of complex zeros for the function \( \widetilde{\varGamma }_{{\varPsi_{1} ,G_{2} }} \left( \omega \right) \) in the upper complex \( \omega \) plane. Note such an occurrence is due to the specific combination of the observable and of the applied perturbation, and cannot be easily ruled out (or predicted) a priori.

Non-singular components of the surrogate Green functions allowing to predict the response of one observable from the response of another observable, in the case the viscosity \( \varvec{\upsilon} \) is perturbed. Note the different scale of times in the x-axis compared to the previous two figures. Note that the functions \( {\mathbf{K}}_{{3,1,\varvec{G}_{2} }} \left(\varvec{\tau}\right) \) and \( {\mathbf{K}}_{{2,1,\varvec{G}_{2} }} \left(\varvec{\tau}\right) \) have a substantial non-causal component

We want to test the impact of the presence of a non-causal component in the surrogate Green function on our ability to predict the response of the system to a perturbation. We then consider a perturbation to the viscosity of the model (thus considering the vector field \( G_{2,k} \left( x \right) = - x_{k} \forall k = 1, \ldots ,N \)) modulated by

and compute over an ensemble comprising of 10,000 members (the first 10% of the ensemble members described before) the time dependent change in the expectation value of \( \phi_{3} \), shown by the black line in Fig. 4. Clearly, the choice of \( e_{2} \left( t \right) \) [just like \( e_{1} \left( t \right) \) below] is arbitrary. We then estimate \( \delta \left\langle {\phi_{3} } \right\rangle^{\left( 1 \right)} \left( t \right) \) by convolving the corresponding Green function \( \varGamma_{{\phi_{3} ,G_{2} }} \left( t \right) \) shown in Fig. 1 with \( e_{2} \left( t \right) \) in Eqs. (37a, 37b). The output of the prediction of ordinary linear response theory compares quite well with the observed value and is plotted as a blue line in Fig. 4.

Predicting the response of the observable \( \phi_{3} \) to changes in the value of \( {\varvec{\upupsilon}} \). Black Line: actual response of the system. Blue line: Prediction based on the surrogate Green function \( {\mathbf{H}}_{{3,2,\varvec{G}_{2} }} \left(\varvec{\tau}\right) \). Red line: Prediction based on classical linear response theory using the Green function \( {\varvec{\Gamma}}_{{3,\varvec{G}_{2} }} \left(\varvec{\tau}\right) \), Magenta line: Prediction based on the surrogate Green function \( {\mathbf{H}}_{{3,1,\varvec{G}_{2} }} \left(\varvec{\tau}\right) \). Only the causal components of the Green functions are considered (Color figure online)

We then estimate \( \delta \left\langle {\phi_{3} } \right\rangle^{\left( 1 \right)} \left( t \right) \) by performing the convolution of \( \delta \left\langle {\phi_{2} } \right\rangle^{\left( 1 \right)} \left( t \right) \) with the surrogate Green function \( {\text{H}}_{{3,2,G_{2} }} \left( \tau \right) \), whose non-singular component is causal and is shown in Fig. 3, while the singular component can be reconstructed by considering what is reported in Table 1 and Eq. (36). The prediction for \( \delta \left\langle {\phi_{3} } \right\rangle^{\left( 1 \right)} \left( t \right) \) obtained using \( \delta \left\langle {\phi_{2} } \right\rangle^{\left( 1 \right)} \left( t \right) \) as predictor is extremely good and is shown by the black line in Fig. 3.

Finally, we repeat the same procedure by using \( \delta \left\langle {\phi_{1} } \right\rangle^{\left( 1 \right)} \left( t \right) \) as predictor. We then convolve \( \delta \left\langle {\phi_{1} } \right\rangle^{\left( 1 \right)} \left( t \right) \) with the causal component of the surrogate Green function \( H^{\prime}_{{3,1,G_{2} }} \left( \tau \right) \), constructed as described in Eq. (29). Its Dirac’s \( \delta \) component can be reconstructed by looking at Table 1 and considering Eq. (36), while, for the non-singular component, the enforced causality compels us to set to zero the non-singular component \( {\text{K}}_{{3,1,G_{2} }} \left( \tau \right) \) reported in Fig. 3 for non-positive values of \( \tau . \) The result of the prediction is shown as the magenta line in Fig. 4. Clearly, this latter prediction is unsuccessful, and we conclude that \( \delta \left\langle {\phi_{1} } \right\rangle^{\left( 1 \right)} \left( t \right) \) is an inefficient predictor for \( \delta \left\langle {\phi_{3} } \right\rangle^{\left( 1 \right)} \left( t \right) \).

We then treat the case where two independent forcings act simultaneously on the system, by adding on top of the modulation to the viscosity described in Eq. (33) a perturbation to the forcing F. We then consider a perturbation vector field \( G_{1,k} \left( x \right) = 1 \forall k = 1, \ldots ,N \)) modulated by

Following Eq. (23), we aim at predicting \( \delta \left\langle {\phi_{3} } \right\rangle^{\left( 1 \right)} \left( t \right) \) by summing the convolution of \( \delta \left\langle {\phi_{2} } \right\rangle^{\left( 1 \right)} \left( t \right) \) with \( {\text{H}}_{3,2} \left( t \right) \) and of \( \delta \left\langle {\phi_{3} } \right\rangle^{\left( 1 \right)} \left( t \right) \) with \( {\text{H}}_{3,1} \left( t \right) \). From Eq. (22) we derive the following limits:

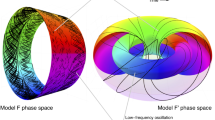

The singular components of \( {\text{H}}_{3,1} \left( t \right) \) and \( {\text{H}}_{3,2} \left( t \right) \) are given by \( {\text{S}}_{3,1} \left( t \right) = \mathop {\lim }\limits_{\omega \to \infty } \widetilde{\text{H}}_{3,1} \left( \omega \right)\delta \left( t \right) \) and \( {\text{S}}_{3,2} \left( t \right) = \mathop {\lim }\limits_{\omega \to \infty } \widetilde{\text{H}}_{3,2} \left( \omega \right)\delta \left( t \right) \), respectively. Instead, the non-singular components \( {\text{K}}_{3,1} \left( t \right) \) and \( {\text{K}}_{3,2} \left( t \right) \) are shown in Fig. 5 and are, somewhat unexpectedly, both causal. As a result, the prediction of \( \delta \left\langle {\phi_{3} } \right\rangle^{\left( 1 \right)} \left( t \right) \) performed using Eq. (23) is virtually perfect: compare the black and the red lines in Fig. 6.

Non-singular components of the integration kernels associated to the matrix given in Eq. (23)

Predicting the response of the observable \( \phi_{3} \) to changes in the value of \( {\text{F}} \) and \( \upsilon \). Black Line: actual response of the system. Red line: Prediction based on the surrogate Green functions \( {\text{H}}_{3,1} \left( \tau \right) \) and \( {\text{H}}_{3,2} \left( \tau \right) \), using Eq. ( 23 ). Magenta line: Prediction computed by convolving only \( {\text{H}}_{3,2} \left( \tau \right) \) with \( \delta \left\langle {\phi_{2} } \right\rangle^{\left( 1 \right)} \left( t \right) \). Blue line: Prediction computed by convolving only \( {\text{H}}_{3,1} \left( \tau \right) \) with \( \delta \left\langle {\phi_{1} } \right\rangle^{\left( 1 \right)} \left( t \right) \) (Color figure online)

Therefore, by combining two forcings and using two observables as predictors, the pathology associated to inability of the observable \( \delta \left\langle {\phi_{1} } \right\rangle^{\left( 1 \right)} \left( t \right) \) to be a good predictor for \( \delta \left\langle {\phi_{3} } \right\rangle^{\left( 1 \right)} \left( t \right) \) for the specific case of forcing to the viscosity is sorted out, because more information is available on the response of the system thanks to using both \( \delta \left\langle {\phi_{1} } \right\rangle^{\left( 1 \right)} \left( t \right) \) and \( \delta \left\langle {\phi_{2} } \right\rangle^{\left( 1 \right)} \left( t \right) \) as predictors. In Fig. 6 we also show that \( \delta \left\langle {\phi_{2} } \right\rangle^{\left( 1 \right)} \left( t \right) \) ends up being the most important predictor, with \( \delta \left\langle {\phi_{1} } \right\rangle^{\left( 1 \right)} \left( t \right) \) playing, instead, a relatively minor role.

A reasonable question to ask is whether Eq. (23) might be used also in the case where, in fact, only one between \( e_{1} \left( t \right) \) or \( e_{2} \left( t \right) \), which provide the time modulation of the perturbation vector fields \( G_{1} \left( x \right) \) and \( G_{2} \left( x \right) \), respectively, is different from zero. In this case, as discussed earlier, we could in principle use the simpler Eq. (10) for performing the prediction. We then decide to set \( e_{1} \left( t \right) = 0 \) and use \( \delta \left\langle {\phi_{1} } \right\rangle^{\left( 1 \right)} \left( t \right) \) and \( \delta \left\langle {\phi_{2} } \right\rangle^{\left( 1 \right)} \left( t \right) \) as predictors of \( \delta \left\langle {\phi_{3} } \right\rangle^{\left( 1 \right)} \left( t \right) \). Note that in Fig. 4 we had shown that in this case, using Eq. (10), \( \delta \left\langle {\phi_{1} } \right\rangle^{\left( 1 \right)} \left( t \right) \) cannot be used to predict \( \delta \left\langle {\phi_{3} } \right\rangle^{\left( 1 \right)} \left( t \right) \), while \( \delta \left\langle {\phi_{2} } \right\rangle^{\left( 1 \right)} \left( t \right) \) serves perfectly the scope.

We find that, if we use Eq. (23), we can predict to a very high accuracy \( \delta \left\langle {\phi_{3} } \right\rangle^{\left( 1 \right)} \left( t \right) \) and, more surprisingly, \( \delta \left\langle {\phi_{1} } \right\rangle^{\left( 1 \right)} \left( t \right) \) provides a contribution to the prediction. The results are shown in Fig. 7, where the black line indicates the actual value of \( \delta \left\langle {\phi_{3} } \right\rangle^{\left( 1 \right)} \left( t \right) \) (and matches the black line in Fig. 4), the red line shows the prediction performed using Eq. (23), while the blue line shows the contributions to the prediction coming from using \( \delta \left\langle {\phi_{1} } \right\rangle^{\left( 1 \right)} \left( t \right) \) as predictand. Clearly, \( \delta \left\langle {\phi_{2} } \right\rangle^{\left( 1 \right)} \left( t \right) \), which can by itself predict \( \delta \left\langle {\phi_{3} } \right\rangle^{\left( 1 \right)} \left( t \right) \) using Eq. (10), lends some role in the predictability to \( \delta \left\langle {\phi_{1} } \right\rangle^{\left( 1 \right)} \left( t \right) \) when the more complex Eq. (23) is used. We conjecture that using multiple predictors might increase the robustness of the prediction, as we might decrease the probability of encountering pathologies as the presence of complex zeros discussed above.

Predicting the response of the observable \( \phi_{3} \) to changes in the value of \( \upsilon \) (as in the case depicted in Fig. 4) using two predictands \( \delta \left\langle {\phi_{1} } \right\rangle^{\left( 1 \right)} \left( t \right) \) and \( \delta \left\langle {\phi_{2} } \right\rangle^{\left( 1 \right)} \left( t \right) \). Black Line: actual response of the system (same as the black line in Fig. 4). Red line: Prediction based on the use of Eq. (23). Blue line: Prediction computed by convolving only \( {\text{H}}_{3,1} \left( \tau \right) \) with \( \delta \left\langle {\phi_{1} } \right\rangle^{\left( 1 \right)} \left( t \right) \). Note that the difference between the black curve here and that in Fig. 6 is, clearly, the blue line in Fig. 6 (Color figure online)

7 Summary and Conclusions

Response theory is a well-established set of mathematical theorems or, in some cases, heuristically motivated yet often practically applicable formulas that allow one to predict how a large class of systems—including near equilibrium as well as far from equilibrium statistical mechanical systems—respond to applied perturbations. Specifically, response formulas give a prescription on how the expectation value of a generic observable will change as a result of the forcing, where such change in expressed in terms of the statistical properties of the unperturbed system. When the system has full Lebesgue measure, this boils down—in the linear case—to the celebrated fluctuation–dissipation relation. Response theory can be developed also in the case of time-dependent forcings: here, response theory provides a practical tool for approximating the time-dependent measure supported on the pullback attractor of the system under consideration. Finally, response theory—or better, the analysis of the conditions under which it breaks down—can be key for detecting and anticipating critical transitions.

While response theory is extremely powerful and widely used, it is not practically usable in some relevant scientific cases. In many complex systems we have only partial information on the state of the system, on its dynamics, on the acting forcing, and we might want to use some observables of the system as surrogate forcing. This might be motivated by practical reasons or by the knowledge of the acting feedbacks or by time scale arguments. Investigations in geosciences and neuroscience often face these challenges.

In order to address this, in this paper, we have proposed a reformulation and extension of linear response theory where we blur the distinction between forcing and response, by focusing on the definition of constitutive relations relating the response of different observables, in order to construct tools for performing prediction, partly bypassing the need for knowing the applied forcing in full detail. Our theoretical predictions are supported by extensive yet extremely simple and computationally cheap numerical simulation performed on the Lorenz’96 system using commercial software (namely, MATLAB2017™) on a commercial laptop in a matter of a couple of days. The goal of these—purposely low-tech—simulations is to show that what the mathematical aspects discussed here emerge quite naturally.

We first evaluate to what extent one can use one forced signal (the change in the expectation value of one observable) to predict another forced signal (the change in the expectation value of another observable), thus bypassing the need for knowing some details (in particular, the time pattern) of the applied forcing. This leads to defining surrogate susceptibilities and surrogate Green functions, which, in practice, amount to justifying the existence of general linear, yet, nontrivial, relations between the various forced signals. This point of view is very natural as it reflects the way one looks at feedbacks inside a complex systems, which often entails constructing approximate temporally ordered causal links describing how the effect of the forcing propagates between different components or parts of the system itself. We explain that, in some cases, one can predict the future state of observable \( \phi_{2} \) from the past and present knowledge of observable \( \phi_{1} \), and vice versa, so that it makes little sense to say what causes what.

Additionally, once a forcing is considered, in agreement with physical intuition, we prove that in some cases an observable \( \phi_{1} \) is not capable of acting as effective predictor for another generic observable \( \phi_{2} \). This results from the presence of complex zeros in the upper complex angular frequency plane of the susceptibility of \( \phi_{1} \). The presence of a complex zero implies that for some time-pattern of forcing, the change in the expectation value of the observable \( \phi_{1} \) is identically zero. As a result, the surrogate Green function is not causal, and \( \phi_{1} \) cannot be used for predicting any other observable \( \phi_{2} \). Such a loss of causality is obviously just apparent, because we are not relating the true forcing to a response. What we see is, in fact, the result of the loss of information due to using \( \phi_{1} \), a bad predictor, as surrogate for the forcing.

Finally, we show using standard linear algebra that, in absence of mathematical pathologies, as the one just mentioned above, if a system undergoes N different simultaneous forcings, it is possible to predict the response of an observable \( \phi_{N + 1} \) using N suitably defined surrogate Green functions convoluted with N other observables \( \phi_{1} , \ldots ,\phi_{N} \) without the need to know the time patterns of the actually applied forcings. As shown in our numerical example, such a strategy may overcome the difficulties associated to choosing a bad observable as predictor: the use of multiple predictors seems inherently more robust.

The surrogate Green functions discussed above can be derived from specific sets of experiments where only one of the forcings is applied at a time. Following [63], this can achieved also using stochastic perturbations.

This allows for a model-assisted reconstruction of signals where we have no or partial information on the time patterns of the forcings, or even if one or more of the forcings are, in fact, even inactive. This seems extremely promising for a variety of problems in the analysis of the response of complex models to perturbations where we might be interested in finding relationships between different forced signals, or when the actual forcings might be hard to control or measure.

We foresee applications of our results in problems relevant for climate science such as the intercomparison of climate models regarding their response to external forcing, the analysis of the relationship between forced and free variability, and, on a different note, on the reconstruction of climate readings from multiple proxy data. Additionally, our results seem relevant for studying, in spatially extended systems, the response of a part of the system to perturbations by looking at its response somewhere else. In order to do this, it is sufficient to select observables that have support in separate regions of the system. This might be relevant in fluid dynamical systems, in systems obeying diffusion laws, and neural systems, where the presence of a maximum speed of propagation of the information might lead to barrier to prediction if the considered parts of the system and/or the location of the forcings are too far away.

Finally, we believe that our results might have some relevance in the context of the theory of causal networks [64], because we are able to define whether or not one can assume causal relations between different observables or different regions of a system.

Notes

Note that \( \widetilde{{K^{\prime}}}_{{2,1,G_{1} }} \left( \omega \right) = \widetilde{K}_{{2,1,G_{1} }} \left( \omega \right)*\left( { - iP\left( {\frac{1}{\omega }} \right) + \pi \delta \left( \omega \right)} \right) \), where P indicates the principal part.

References

Kubo, R.: The fluctuation–dissipation theorem. Rep. Prog. Phys. 29, 255–284 (1966)

Marconi, U.M.B., Puglisi, A., Rondoni, A., Vulpiani, A.: Fluctuation–dissipation: response theory in statistical physics. Phys. Rep. 461, 111–195 (2008)

Lucarini, V., Colangeli, M.: Beyond the linear fluctuation–dissipation theorem: the role of causality. J. Stat. Mech. 5, P05013 (2012)

Ruelle, D.: Differentiation of SRB states. Comm. Math. Phys. 187, 227–241 (1997)

Ruelle, D.: General linear response formula in statistical mechanics, and the fluctuation–dissipation theorem far from equilibrium. Phys. Lett. A 245, 220–224 (1998)

Ruelle, D.: A review of linear response theory for general differentiable dynamical systems. Nonlinearity 22, 855–870 (2009)

Butterley, O., Liverani, C.: Smooth Anosov flows: correlation spectra and stability. J. Mod. Dyn. 1, 301–322 (2007)

Baladi, V.: The quest for the ultimate anisotropic Banach space. J. Stat. Phys. 166(3), 525 (2017)

Gallavotti, G., Cohen, E.G.D.: Dynamical ensembles in nonequilibrium statistical mechanics. Phys. Rev. Lett. 74, 2694–2697 (1995)

Reick, C.H.: Linear response of the Lorenz system. Phys. Rev. E 66, 036103 (2002). https://doi.org/10.1103/PhysRevE.66.036103

Cessac, B., Sepulchre, J.-A.: Linear response, susceptibility and resonances in chaotic toy models. Physica D 225, 13–28 (2007)

Lucarini, V.: Evidence of dispersion relations for the nonlinear response of Lorenz 63 system. J. Stat. Phys. 134, 381–400 (2009)

Lucarini, V., Sarno, S.: A statistical mechanical approach for the computation of the climatic response to general forcings. Nonlin. Processes Geophys. 18, 7–28 (2011)

Ragone, F., Lucarini, V., Lunkeit, F.: A new framework for climate sensitivity and prediction: a modelling perspective. Clim. Dyn. 46, 1459 (2016)

Lucarini, V., Lunkeit, F., Ragone, F.: Predicting climate change using response theory: global averages and spatial patterns. J. Stat. Phys. 166, 1036 (2017)

Bodai, T., Lucarini, V., Lunkeit, F.: Linear response theory applied to geoengineering, arXiv:1803.09606 (2018)

Abramov, R., Majda, A.: Blended response algorithms for linear fluctuation-dissipation for complex nonlinear dynamical systems. Nonlinearity 20(2007), 2793 (2007)

Gritsun, A., Branstator, G., Majda, A.: Climate response of linear and quadratic functionals using the fluctuation dissipation theorem. J. Atmos. Sci. 65, 2824 (2008)

Cooper, F.C., Haynes, P.H.: Climate Sensitivity via a nonparametric fluctuation–dissipation theorem. J. Atmos. Sci. 68, 937–953 (2011)

Gritsun, A., Lucarini, V.: Fluctuations, response, and resonances in a simple atmospheric model. Physica D 349, 62 (2017)

Hairer, M., Majda, A.J.: A simple framework to justify linear response theory. Nonlinearity 23, 909 (2010)

Worwell, C., Gottwald, G.A.: On the validity of linear response theory in high-dimensional deterministic dynamical systems, arXiv:1801.09377

Mori, H., Fujisaka, H., Shigematsu, H.: A new expansion of the master equation. Progress Theoret. Phys. 51, 109–122 (1974)

Zwanzig, R.: Ensemble method in the theory of irreversibility. J. Chem. Phys. 33, 1338–1341 (1960)

Chekroun, M.D., Liu, H., Wang, S.: Approximation of stochastic invariant manifolds. Springer, Cambridge (2015)

Chekroun, M.D., Liu, H., Wang, S.: Stochastic parameterizing manifolds and non-markovian reduced equations. Springer, Cambridge (2015)

Wouters, J., Lucarini, V.: Multi-level dynamical systems: connecting the ruelle response theory and the Mori-Zwanzig Approach. J. Stat. Phys. 151, 850–860 (2013)

Baiesi, M., Maes, C.: An update on the nonequilibrium linear response. New J. Phys. 15, 013004 (2013)

Saltzman, B.: Dynamical Paleoclimatology: Generalized Theory of Global Climate Change. Academic, London (2002)

Bracegirdle, T.J., Stephenson, D.B.: On the robustness of emergent constraints used in multimodel climate change projections of arctic warming. J. Climate 26, 669–678 (2013)

Cox, P.M., Huntingford, C., Williamson, M.S.: Emergent constraint on equilibrium climate sensitivity from global temperature variability. Nature 553, 319–322 (2018)

Nijsse, F.J.M.M., Dijkstra, H.A.: A mathematical approach to understanding emergent constraints. Dynam, Earth Syst (2018). https://doi.org/10.5194/esd-2018-15

Peixoto, J., Oort, A.: Physics of Climate. American Institute of Physics, New York (1992)

Stocker, T.F., et al.: IPCC, Climate Change 2013: The Physical Science Basis. Cambridge University Press, Cambridge (2013)

Knutson, T.R., et al.: Tropical cyclones and climate change. Nat. Geosci. 3, 157–163 (2010)

Bengsson, L.: Tropical cyclones in a warmer climate. WMO Bull. 56, 196–203 (2007)

Lorenz, E.N.: Predictability—a problem partly solved. In: Palmer, T., Hagedorn, R. (eds.) Predictability of Weather and Climate, pp. 40–58. Cambridge University Press, Cambridge (1996)

Baladi, V.: Positive transfer operators and decay of correlations. World Scientific Publishing Co. Inc., Singapore (2000)

Ghil, M., Chekroun, M.D., Simonnet, E.: Climate dynamics and fluid mechanics: natural variability and related uncertainties. Phys. D 237, 2111–2126 (2008)

Carvalho, A.N., Langa, J., Robinson, J.C.: The pullback attractor. Attractors for infinite-dimensional non-autonomous dynamical systems. Appl. Math. Sci. 182, 3–22 (2013)

Bódai, T., Károlyi, G.: T Tél (2011) A chaotically driven model climate: extreme events and snapshot attractors. Nonlinear Process Geophys. 18, 573–580 (2011)

Herein, M., Drótos, G., Bódai, T., Lunkeit, F., Lucarini, V.: Reconsidering the relationship of the El Niño–Southern Oscillation and the Indian monsoon using ensembles in Earth system models, arXiv:1803.08909 (2018)

Lucarini, V.: Response Theory for Equilibrium and Non-Equilibrium Statistical Mechanics: Causality and Generalized Kramers–Kronig relations. J. Stat. Phys. 131, 543–558 (2008)

Tantet, A., Lucarini, V., Lunkeit, F., Dijkstra, H.A.: Crisis of the chaotic attractor of a climate model: a transfer operator approach. Nonlinearity 31, 2221 (2018)

Tantet, A., Lucarini, V., Dijkstra, H.A.: Resonances in a chaotic attractor crisis of the lorenz flow. J. Stat. Phys. 170, 584–616 (2018)

Pollicott, M.: On the rate of mixing of Axiom A flows. Invent. Math. 81(3), 413 (1985)

Ruelle, D.: Resonances of chaotic dynamical systems. Phys. Rev. Lett. 56(5), 405 (1986)

Lucarini, V., Saarinen, J., Peiponen, K.-E., Vartiainen, E.: Kramers–Kronig relations in Optical Materials Research. Springer, New York (2005)

Chekroun, M.D., Neelin, J.D., Kondrashov, D., McWilliams, J.C., Ghil, M.: Rough parameter dependence in climate models and the role of Ruelle-Pollicott resonances. Proc. Natl Acad. Sci. U.S.A. 111, 1684 (2014)

Lucarini, V.: Response operators for Markov processes in a finite state space: radius of convergence and link to the response theory for axiom a systems. J. Stat. Phys. 162, 312 (2015)

Cohen-Tannoudji, C., Dupont-Roc, J., Grynberg, G.: Photons and atoms: introduction to quantum electrodynamics. Wiley, New York (1997)

Gustavsen, B., Semlyen, A.: Rational approximation of frequency domain responses by vector fitting. IEEE Trans. Power Delivery 14, 1052–1061 (1999)

Berljafa, M., Güttel, S.: The RKFIT algorithm for nonlinear rational approximation. SIAM J. Sci. Comput. 39, A2049–A2071 (2017)

Kravanja, P., Sakurai, T., Van Barel, M.: On locating clusters of zeros in analytic functions. BIT Num. Math. 39, 646–682 (1999)

Orrell, D.: Model error and predictability over different timescales in the Lorenz’96 systems. J. Atmos. Sci. 60(17), 2219–2228 (2003)

Trevisan, A., D’Isidoro, M., Talagrand, O.: Four-dimensional variational assimilation in the unstable subspace and the optimal subspace dimension. Q. J. R. Met. Soc. 136, 487–496 (2010)

Wilks, D.S.: Effects of stochastic parametrizations in the Lorenz’96 system. Q. J. R. Met. Soc. 131, 389–407 (2005)

Vissio, G., Lucarini, V.: A proof of concept for scale-adaptive parametrizations: the case of the Lorenz ‘96 model. Q. J. Royal Met. Soci. 144, 63–75 (2017)

Abramov, R.V., Majda, A.: New approximations and tests of linear fluctuation-response for chaotic nonlinear forced-dissipative dynamical systems. J. Nonlinear Sci. 18, 303–341 (2008)

Karimi, A., Paul, M.R.: Extensive chaos in the Lorenz-96 model. Chaos: an interdisciplinary. J. Nonlinear Sci. 20, 043105 (2010)

Gallavotti, G., Lucarini, V.: Equivalence of non-equilibrium ensembles and representation of friction in turbulent flows: the Lorenz 96 Model. J. Stat. Phys. 156, 1027–1065 (2014)

Blender, R., Wouters, J., Lucarini, V.: Avalanches, breathers, and flow reversal in a continuous Lorenz-96 model. Phys. Rev. E 88, 013201 (2013)

Lucarini, V.: Stochastic perturbations to dynamical systems: a response theory approach. J. Stat. Phys. 146, 774 (2012)

Pearl, J.: From Bayesian networks to causal networks. In: Coletti, G., Dubois, D., Scozzafava, R. (eds.) Mathematical Models for Handling Partial Knowledge in Artificial Intelligence. Springer, Boston (1995)

Acknowledgements