Abstract

The provision of accurate methods for predicting the climate response to anthropogenic and natural forcings is a key contemporary scientific challenge. Using a simplified and efficient open-source general circulation model of the atmosphere featuring O(\(10^5\)) degrees of freedom, we show how it is possible to approach such a problem using nonequilibrium statistical mechanics. Response theory allows one to practically compute the time-dependent measure supported on the pullback attractor of the climate system, whose dynamics is non-autonomous as a result of time-dependent forcings. We propose a simple yet efficient method for predicting—at any lead time and in an ensemble sense—the change in climate properties resulting from increase in the concentration of CO\(_2\) using test perturbation model runs. We assess strengths and limitations of the response theory in predicting the changes in the globally averaged values of surface temperature and of the yearly total precipitation, as well as in their spatial patterns. The quality of the predictions obtained for the surface temperature fields is rather good, while in the case of precipitation a good skill is observed only for the global average. We also show how it is possible to define accurately concepts like the inertia of the climate system or to predict when climate change is detectable given a scenario of forcing. Our analysis can be extended for dealing with more complex portfolios of forcings and can be adapted to treat, in principle, any climate observable. Our conclusion is that climate change is indeed a problem that can be effectively seen through a statistical mechanical lens, and that there is great potential for optimizing the current coordinated modelling exercises run for the preparation of the subsequent reports of the Intergovernmental Panel for Climate Change.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

The climate is a forced and dissipative nonequilibrium chaotic system with a complex natural variability resulting from the interplay of instabilities and re-equilibrating mechanisms, negative and positive feedbacks, all covering a very large range of spatial and temporal scales. One of the outstanding scientific challenges of the last decades has been to attempt to provide a comprehensive theory of climate, able to explain the main features of its dynamics, describe its variability, and predict its response to a variety of forcings, both anthropogenic and natural [1–3]. The study of the phenomenology of the climate system is commonly approached by focusing on distinct aspects like:

-

wave-like features such Rossby or equatorial waves, which have enormous importance in terms of predictability and transport of, e.g., energy, momentum, and water vapour;

-

particle-like features such as hurricanes, extratropical cyclones, or oceanic vortices, which are of great relevance for the local properties of the climate system and its subdomains;

-

turbulent cascades, which determine, e.g., dissipation in the boundary layer and development of large eddies through the mechanism of geostrophic turbulence.

While each of these points of view is useful and necessary, none is able to provide alone a comprehensive understanding of the properties of the climate system; see also discussion in [4].

On a macroscopic level, one can say that at zero order the climate is driven by differences in the absorption of solar radiation across its domain. The prevalence of absorption at surface and at the lower levels of the atmosphere leads, through a rich portfolio of processes, to compensating vertical energy fluxes (most notably, convective motions in the atmosphere and exchanges of infrared radiation), while the prevalence of absorption of solar radiation in the low latitudes regions leads to the set up of the large scale circulation of the atmosphere (with the hydrological cycle playing a key role), which allows for reducing the temperature differences between tropics and polar regions with respect to what would be the case in absence of horizontal energy transfers [5, 6].

It is important to stress that such organized motions of the geophysical fluids, which act as negative feedbacks but cannot be treated as diffusive Onsager-like processes, typically result from the transformation of some sort of available potential into kinetic energy, which is continuously dissipated through a variety of irreversible processes. See [7] for a detailed analysis of the relationship between response, fluctuations, and dissipation at different scales. Altogether, the climate can be seen as a thermal engine able to transform heat into mechanical energy with a given efficiency, and featuring many different irreversible processes that make it non-ideal [2, 8, 9].

Besides the strictly scientific aspect, much of the interest on climate research has been driven in the past decades by the accumulated observational evidence of the human influence on the climate system. In order to summarize and coordinate the research activities carried on by the scientific community, the United Nations Environment Programme (UNEP) and the World Meteorological Organization (WMO) established in 1988 the Intergovernmental Panel on Climate Change (IPCC). The IPCC reports, issued periodically about every 5 years, provide systematic reviews of the scientific literature on the topic of climate dynamics, with special focus on global warming and on the socio-economic impacts of anthropogenic climate change [10–12]. Along with such a review effort, the IPCC defines standards for the modellistic exercises to be performed by research groups in order to provide projections of future climate change with numerical models of the climate system. A typical IPCC-like climate change experiment consists in simulating the system in a reference state (a stationary preindustrial state with fixed parameters, or a realistic simulation of the present-day climate), raising the CO\(_2\) concentration (as well as, in general, the concentration of other greenhouse gases such as methane) in the atmosphere following a certain time modulation within a given time window, and then fixing the CO\(_2\) concentration to a final value, in order to observe the relaxation of the system to a new stationary state. Each time-modulation of the CO\(_2\) forcing defines a scenario, and it is a representation of the expected change in the CO\(_2\) concentration resulting from a specific path of industrialization and change in land use. Note that the attribution of unusual climatic conditions to specific climate forcing is far from being a trivial matter [13, 14].

While much progress has been achieved, we are still far from having a conclusive framework of climate dynamics. One needs to consider that the study of climate faces, on top of all the difficulties that are intrinsic to any nonequilibrium system, the following additional aspects that make it especially hard to advance its understanding:

-

the presence of well-defined subdomains—the atmosphere, the ocean, etc.—featuring extremely different physical and chemical properties, dominating dynamical processes, and characteristic time-scales;

-

the complex processes coupling such subdomains;

-

the presence of a continuously varying set of forcings resulting from, e.g., the fluctuations in the incoming solar radiation and the processes—natural and anthropogenic—altering the atmospheric composition;

-

the lack of scale separation between different processes, which requires a profound revision of the standard methods for model reduction/projection to the slow manifold, and calls for the unavoidable need of complex parametrization of subgrid scale processes in numerical models;

-

the impossibility to have detailed and homogeneous observations of the climatic fields with extremely high-resolution in time and in space, and the need to integrate direct and indirect measurements when trying to reconstruct the past climate state beyond the industrial era;

-

the fact that we can observe only one realization of the process.

Since the climate is a nonequilibrium system, it is far from trivial to derive its response to forcings from the natural variability realized when no time-dependent forcings are applied. This is the fundamental reason why the construction of a theory of the climate response is so challenging [3]. As already noted by Lorenz [15], it is hard to construct a one-to-one correspondence between forced and free fluctuations in a climatic context. Following the pioneering contribution by Leith [16], previous attempts on predicting climate response based broadly on applications of the fluctuation–dissipation theorem have had some degree of success [17–21], but, in the deterministic case, the presence of correction terms due to the singular nature of the invariant measure make such an approach potentially prone to errors [22, 23]. Adding noise in the equations in the form of stochastic forcing—as in the case of using stochastic parametrizations [24] in multiscale system—provides a way to regularize the problem, but it is not entirely clear how to treat the zero noise limit. Additionally, one should provide a robust and meaningful derivation of the model to be used for constructing the noise: a proposal in this direction is given in [2, 25, 26].

In this paper we want to show how climate change is indeed a well-posed problem at mathematical and physical level by presenting a theoretical analysis of how PLASIM [27], a general circulation model of intermediate complexity, responds to changes in the atmospheric composition mimicking increasing concentrations of greenhouse gases. We will frame the problem of studying the statistical properties of a non-autonomous, forced and dissipative complex system using the mathematical construction of the pullback attractor [28–30]—see also the closely related concept of snapshot attractor [31–34]—and will use as theoretical framework the Ruelle response theory [22, 35] to compute the effect of small time-dependent perturbations on the background state. We will stick to the linear approximation, which has proved its effectiveness in various examples of geophysical interest [23, 36]. The basic idea is to use a set of probe perturbations to derive the Green function of the system, and be then able to predict the response of the system to a large class of forcings having the same spatial structure. In this way, the uncertainties associated to the application of the fluctuation–dissipation theorem are absent and the theoretical framework is more robust. Note that, as shown in [37], one can practically implement the response theory also to treat the nonlinear effects of the forcing.

PLASIM has O\((10^5)\) degrees of freedom and provides a flexible tool for performing theoretical studies in climate dynamics. PLASIM is much faster (and indeed simpler) than the state of the art climate models used in the IPCC reports, but provides a reasonably realistic representation of atmospheric dynamics and of its interactions with the land surface and with the mixed layer of the ocean. The model includes a suite of efficient parametrization of small scale processes such as those relevant for describing radiative transfer, cloud formation, and turbulent transport across the boundary layer. It is important to recall that in climate science it is practically necessary and conceptually very sound to choose models belonging to different levels of a hierarchy of complexity [38] depending on the specific problem to be studied: the most physically comprehensive and computationally expensive model is not the best model to be used for all purposes; see discussion in [39].

In a previous work [36] we focused on the analysis of a globally averaged observable, and we considered a model set-up where the meridional oceanic heat transport was set to zero, with no feedback from the climate state. Such a limitation resulted in an extremely high increase of the globally averaged surface temperature resulting from higher CO\(_2\) concentrations. In this paper we extend the previous analysis by using a better model set-up and by considering a wider range of climate observables, able to provide a more complete picture of the climate response to CO\(_2\) concentration. In particular, we wish to show to what extent response theory is suited for performing projections of the spatial pattern of climate change.

The paper is organized as follows. In Sect. 2 we introduce the main concepts behind the theoretical framework of our analysis. We briefly describe the basic properties of the pullback attractor and explain its relevance in the context of climate dynamics. We then discuss the relevance of response theory for studying situations where the non-autonomous dynamics can be decomposed into a dominating autonomous component plus a small non-autonomous correction. In Sect. 3 we introduce the climate model used in this study, discuss the various numerical experiments and the climatic observables of our interest, and present the data processing methods used for predicting the climate response to forcings. In Sect. 4 we present the main results of our work. We focus on two observables of great relevance, namely the surface temperature and the yearly total precipitation, and investigate the skill of response theory in predicting the change in their statistical properties, exploring both changes in global quantities and spatial patterns of changes. We will also show how to flexibly use response theory for assessing when climate change becomes statistically significant in a variety of scenarios. In Sect. 5 we summarize and discuss the main findings of this work. In Sect. 6 we propose some ideas for potentially exciting future investigations.

2 Pullback Attractor and Climate Response

Since the climate system experiences forcings whose variations take place on many different time scales [40], defining rigorously what climate response actually is requires some care. It seems relevant to take first a step in the direction of considering the rather natural situation where we want to estimate the statistical properties of complex non-autonomous dynamical systems.

Let us then consider a continuous-time dynamical system

on a compact manifold \(\mathcal {Y}\subset \mathbb {R}^d\), where \(x(t)=\phi (t,t_0)x(t_0)\), with \(x(t=t_0)=x_{in}\in \mathcal {Y}\) initial condition and \(\phi (t,t_0)\) is defined for all \(t\ge t_0\) with \(\phi (s,s)=\mathbf{1}\). The two-time evolution operator \(\phi \) generates a two-parameter semi-group. In the autonomous case, the evolution operator generates a one-parameter semigroup, because of time translational invariance, so that \(\phi (t,s)=\phi (t-s)\) \(\forall t\ge s\). In the non-autonomous case, in other terms, there is an absolute clock. We want to consider forced and dissipative systems such that with probability one initial conditions in the infinite past are attracted at time t towards \(\mathcal {A}(t)\), a time-dependent family of geometrical sets. In more formal terms, we say a family of objects \(\cup _{t\in \mathbb R} \mathcal {A}(t)\) in the finite-dimensional, complete metric phase space \(\mathcal {Y}\) is a pullback attractor for the system \(\dot{x}=F(x,t)\) if the following conditions are obeyed:

-

\(\forall t\), \(\mathcal {A}(t)\) is a compact subset of \(\mathcal {Y}\) which is covariant with the dynamics, i.e. \(\phi (s,t)\mathcal {A}(t)=\mathcal {A}(s)\), \(s\ge t\).

-

\(\forall t\) \(\lim _{t_0\rightarrow -\infty }d_{\mathcal {Y}}(\phi (t,t_0)B,\mathcal {A}(t))=0\) for a.e. measurable set \(B\subset \mathcal {Y}\).

where \(d_\mathcal {Y}(P,Q)\) is the Hausdorff semi-distance between the \(P \subset \mathcal {Y}\) and \(Q\subset \mathcal {Y}\). We have that \(d_\mathcal {Y}(P,Q)=\sup _{x\in P}d_\mathcal {Y}(x,Q)\), with \(d_\mathcal {Y}(x,Q)=\inf _{y\in Q}d_\mathcal {Y}(x,y)\). We have that, in general, \(d_\mathcal {Y}(P,Q)\ne d_\mathcal {Y}(Q,P)\) and \(d_\mathcal {Y}(P,Q)=0\Rightarrow P\subset Q\). See a detailed discussion of these concepts in, e.g., [28–30]. Note that a substantially similar construction, the snapshot attractor, has been proposed and fruitfully used to address a variety of time-dependent problems, including some of climatic relevance [31–34].

In some cases, the geometrical set \(\mathcal {A}(t)\) supports a useful measure \(\mu _t(\text {d}x)\). Such a measure can be obtained as evolution at time t through the Ruelle–Perron–Frobenius operator [41] of the Lebesgue measure supported on B in the infinite past, as from the conditions above. Proposing a minor generalization of the chaotic hypothesis [42], we assume that when considering sufficiently high-dimensional, chaotic and non-autonomous dissipative systems, at all practical levels—i.e. when one considers macroscopic observables—the corresponding measure \(\mu _t(\text {d}x)\) constructed as above is of the SRB type. This amounts to the fact that we can construct at all times t a meaningful (time-dependent) physics for the system. Obviously, in the autonomous case, and under suitable conditions—e.g. in the case of of Axiom A system or taking the point of view of the chaotic hypothesis—\(\mathcal {A}(t)=\Omega \) is the attractor of the system (where the t-dependence is dropped), which supports the SRB invariant measure \(\mu (\text {d}x)\).

Note that when we analyze the statistical properties of a numerical model describing a non-autonomous forced and dissipative system, we often follow—sometimes inadvertently—a protocol that mirrors precisely the definitions given above. We start many simulations in the distant past with initial conditions chosen according to an a-priori distribution. After a sufficiently long time, related to the slowest time scale of the system, at each instant the statistical properties of the ensemble of simulations do not depend anymore on the choice of the initial conditions. A prominent example of this procedure is given by how simulations of past and historical climate conditions are performed in the modeling exercises such as those demanded by the IPCC [10–12], where time-dependent climate forcings due to changes in greenhouse gases, volcanic eruptions, changes in the solar irradiance, and other astronomical effects are taken into account for defining the radiative forcing to the system. Note that future climate projections are always performed using as initial conditions the final states of simulations of historical climate conditions, with the result that the covariance properties of the \(\mathcal {A}(t)\) set are maintained.

Computing the expectation value of measurable observables for the time dependent measure \(\mu _t(\text {d}x)\) resulting from the evolution of the dynamical system given in Eq. 1 is in general far from trivial and requires constructing a very large ensemble of initial conditions in the Lebesgue measurable set B mentioned before. Moreover, from the theory of pullback attractors we have no real way to predict the sensitivity of the system to small changes in the dynamics.

The response theory introduced by Ruelle [22, 35] (see also extensions and a different point of view summarized in, e.g. [43]) allows for computing the change in the measure of an Axiom A system resulting from weak perturbations of intensity \(\epsilon \) applied to the dynamics in terms of the properties of the unperturbed system. The basic concept behind the Ruelle response theory is that the invariant measure of the system, despite being supported on a strange geometrical set, is differentiable with respect to \(\epsilon \). See [44] for a discussion on the radius of convergence (in terms of \(\epsilon \)) of the response theory.

In this case, instead, our focus is on saying that the Ruelle response theory allows for constructing the time-dependent measure of the pullback attractor \(\mu _t(\mathrm {d}x)\) by computing the time-dependent corrections of the measure with respect to a reference state. In particular, let us assume that we can write

where \(|\epsilon X(x,t)|\ll |F(x)|\) \(\forall t\in \mathbb R\) and \(\forall x\in \mathcal {Y}\), so that we can treat F(x) as the background dynamics and \(\epsilon X(x,t)\) as a perturbation. Under appropriate mild regularity conditions, it is possible to perform a Schauder decomposition [45] of the forcing, so that we express \(X(x,t)=\sum _{k=1}^\infty X_k(x)T_k(t)\). Therefore, we restrict our analysis without loss of generality to the case where \(F(x,t)=F(x)+\epsilon X(x)T(t)\).

One can evaluate the expectation value of a measurable observable \(\Psi (x)\) on the time dependent measure \(\mu _t(\text {d}x)\) of the system given in Eq. 1 as follows:

where \(\langle \Psi \rangle _0 = \int \bar{\mu }(\mathrm {d}x) \Psi (x)\) is the expectation value of \(\Psi \) on the SRB invariant measure \(\bar{\mu }(\mathrm {d}{x})\) of the dynamical system \(\dot{x}=F(x)\). Each term \(\langle \Psi \rangle _0^{(j)}(t)\) can be expressed as time-convolution of the \(j^{th}\) order Green function \(G^{(j)}_\Psi \) with the time modulation T(t):

At each order, the Green function can be written as:

where \(\Lambda (\bullet )={X} \cdot \nabla (\bullet )\) and \(S_0^t(\bullet )=\exp (t {F}\cdot \nabla )(\bullet )\) is the unperturbed evolution operator while the Heaviside \(\Theta \) terms enforce causality. In particular, the linear correction term can be written as:

while, considering the Fourier transform of Eq. 6, we have:

where we have introduced the susceptibility \(\chi ^{(1)}_\Psi (\omega )=\mathcal {F}[G^{(1)}_\Psi ]\), defined as the Fourier transform of the Green function \(G^{(1)}_\Psi (t)\). Under suitable integrability conditions, the fact that the Green function \(G^{(t)}\) is causal is equivalent to saying that its susceptibility obeys the so-called Kramers–Kronig relations [23, 46], which provide integral constraints linking its real and imaginary part, so that \(\chi ^{(1)}(\omega )=\mathrm {i} \mathcal {P}(1/\omega )\star \chi ^{(1)}(\omega )\), where \(i=\sqrt{-1}\), \(\star \) indicates the convolution product, and \(\mathcal {P}\) indicates that integration by parts is considered. See also extensions to the case of higher order susceptibilities in [37, 47–49].

As discussed in [2, 23, 36], the Ruelle response theory provides a powerful language for framing the problem of the response of the climate system to perturbations. Clearly, given a vector flow F(x, t), it is possible to define different background states, corresponding to different reference climate conditions, depending on how we break up F(x, t) into the two contributions F(x) and \(\epsilon X(x,t)\) in the right hand side of Eq. 2. Nonetheless, as long as the expansion is well defined, the sum given in Eq. 3 does not depend on the reference state. Of course, a wise choice of the reference dynamics leads to faster convergence.

Note that once we define a background vector flow F(x) and approximate its invariant measure \(\bar{\mu }(\mathrm {d}x)\) by performing an ensemble of simulations, by using Eqs. 3–6 we can construct the time dependent measure \(\mu _t(\mathrm {d}x)\) for many different choices of the perturbation field \(\epsilon X(x,t)\), as long as we are within the radius of convergence of the response theory. Instead, in order to construct the time dependent measure following directly the definition of the pullback attractor, we need to construct a different ensemble of simulations for each choice of F(x, t).

One needs to note that constructing directly the response operator using the Ruelle formula given in Eq. 6 is indeed challenging, because of the different difficulties associated to the contribution coming from the unstable and stable directions [50]; nonetheless, recent applications of adjoint approaches [51] seem quite promising [52].

Instead, starting from Eqs. 6 and 7, it is possible to provide a simple yet general method for predicting the response the system for any observable \(\Psi \) at any finite or infinite time horizon t for any time modulation T(t) of the vector field X(x), if the corresponding Green function or, equivalently, the susceptibility, is known. Moreover, given a specific choice of T(t) and measuring \(\langle \Psi \rangle _0^{(1)}(t)\) from a set of experiments, the same equations allow one to derive the Green function. Therefore, using the output of a specific set of experiments, we achieve predictive power for any temporal pattern of the forcing X(x). In other terms, from the knowledge of the time dependent measure of one specific pullback attractor, we can derive the time dependent measures of a family of pullback attractors. We will follow this approach in the analysis detailed below. While the methodology is almost trivial in the linear case, it is in principle feasible also when higher order corrections are considered, as long as the response theory is applicable [37, 48, 49].

We remark that in some cases divergence in the response of a chaotic system can be associated to the presence of slow decorrelation for the measurable observable in the background state, which, as discussed in e.g. [53], can be related to the presence of nearby critical transitions. Indeed, we have recently investigated such an issue in [54], thus providing the statistical mechanical embedding for the classical problem of multistability of the Earth’s system, which had been previously studied using macroscopic thermodynamics in [55–57].

3 A Climate Model of Intermediate Complexity: The Planet Simulator—PLASIM

The Planet Simulator (PLASIM) is a climate model of intermediate complexity, freely available upon request to the group of Theoretical Meteorology at the University of Hamburg and includes a graphical user interface facilitating its use. By intermediate complexity we mean that the model is gauged in such a way to be parsimonious in terms of computational cost and flexible in terms of the possibility of exploring widely differing climatic regimes [39]. Therefore, the most important climatic processes are indeed represented, and the model is complex enough to feature essential characteristics of high-dimensional, dissipative, and chaotic systems, as the existence of a limited horizon of predictability due to the presence of instabilities in the flow. Nonetheless, one has to sacrifice the possibility of using the most advanced parametrizations for subscale processes and cannot use high resolutions for the vertical and horizontal directions in the representation of the geophysical fluids. Therefore, we are talking of a modelling strategy that differs from the conventional approach aiming at achieving the highest possible resolution in the fluid fields and the highest precision in the parametrization of the highest possible variety of subgrid scale processes [12], but rather focuses on trying to reduce the gap between the modelling and the understanding of the dynamics of the geophysical flows [58].

The dynamical core of PLASIM is based on the Portable University Model of the Atmosphere PUMA [59]. The atmospheric dynamics is modelled using the primitive equations formulated for vorticity, divergence, temperature and the logarithm of surface pressure. Moisture is included by transport of water vapour (specific humidity). The governing equations are solved using the spectral transform method [60, 61]. In the vertical, non-equally spaced sigma (pressure divided by surface pressure) levels are used. The parametrization of unresolved processes consists of long- [62] and short- [63] wave radiation, interactive clouds [64–66], moist [67, 68] and dry convection, large-scale precipitation, boundary layer fluxes of latent and sensible heat and vertical and horizontal diffusion [69–72]. The land surface scheme uses five diffusive layers for the temperature and a bucket model for the soil hydrology. The oceanic part is a 50 m mixed-layer (swamp) ocean, which includes a thermodynamic sea ice model [73].

The horizontal transport of heat in the ocean can either be prescribed or parametrized by horizontal diffusion. While in [36] the ocean transport was set to zero, in the experiments performed here we choose the second option, because having even a severely simplified representation of the feedbacks associated to the large scale heat transport performed by the ocean improves the realism of the response of the system. We remind that the ocean contributes to about 30 % of the total meridional heat transport in the present climate [6, 74, 75]. A detailed study of the impact of changing oceanic heat transports on the dynamics and thermodynamics of the atmosphere can be found in [76].

The model is run at T21 resolution (approximately \(5.6^{\circ }\times 5.6^{\circ }\)) with 10 vertical levels. While this resolution is relatively low, it is expected to be sufficient for obtaining a reasonable description of the large scale properties of the atmospheric dynamics, which are most relevant for the global features we are interested in. We remark that previous analyses have shown that using a spatial resolution approximately equivalent to T21 allows for obtaining an accurate representation of the major large scale features of the climate system. PLASIM features O \((10^5)\) degrees of freedom, while state-of-the-art Earth System Models boast easily over \(10^8\) degrees of freedom.

Long-term climatology of the PLASIM model. Control run performed with background value of CO\(_2\) concentration set to 360 ppm and solar constant defined as \(S=1365\) \(\mathrm{Wm}^{-2}\): a Surface temperature \(\langle T_S \rangle _0 \) (in K); b Yearly total precipitation \(\langle P_y \rangle _0 \) (in mm); c Zonally averaged values \(\langle [T_S] \rangle _0 \) (red line and red y-axis) and \(\langle [P_y]\rangle _0\) (blue line and blue y-axis) (Color figure online)

While the lack of a dynamical ocean hinders the possibility of having a good representation of the climate variability on multidecadal or longer timescales, the climate simulated by PLASIM is definitely Earth-like, featuring qualitatively correct large scale features and turbulent atmospheric dynamics. Figure 1 provides an outlook of the climatology of the model run with constant CO\(_2\) concentration of 360 ppm and solar constant set to \(S=1365\) \(\mathrm{Wm}^{-2}\). We show the long-term averages of the surface temperature \(T_S\) (panel a) and of the yearly total precipitation \(P_y\) (panel b) fields, plus their zonal averages \([T_S]\) and \([P_y]\).Footnote 1 Despite the simplifications of the model, one finds substantial agreement with the main features of the climatology obtained from observations and state-of-the-art model runs: the average temperature monotonically decreases as we move poleward, while precipitation peaks at the equator, as a result of the large scale convection corresponding to the intertropical convergence zone (ICTZ), and features two secondary maxima at the mid latitudes of the two hemispheres, corresponding to the areas of the so-called storm tracks [6]. As a result of the lack of a realistic oceanic heat transport and of too low resolution in the model, the position to the ICTZ is a bit unrealistic as it is shifted southwards compared to the real world, with the precipitation peaking just south of the equator instead of few degrees north of it.

Beside standard output, PLASIM provides comprehensive diagnostics for the nonequilibrium thermodynamical properties of the climate system and in particular for local and global energy and entropy budgets. PUMA and PLASIM have already been used for several theoretical climate studies, including a variety of problems in climate response theory [2, 36], climate thermodynamics [77, 78], analysis of climatic tipping points [54, 55], and in the dynamics of exoplanets [56, 57].

3.1 Experimental Setting

We want to perform predictions on the climatic impact of different scenarios of increase in the CO\(_2\) concentration with respect to a baseline value of 360 ppm, focusing on observables \(\Psi \) of obvious climatic interest such as, e.g. the globally averaged surface temperature \(T_S\). We wish to emphasise that most state-of-the-art general circulation models feature an imperfect closure of the global energy budget of the order of 1 \(\mathrm{Wm}^{-2}\) for standard climate conditions, due to inaccuracies in the treatment of the dissipation of kinetic energy and the hydrological cycle [2, 75, 79, 80]. Instead, PLASIM has been modified in such a way that a more accurate representation of the energy budget is obtained, even in rather extreme climatic conditions [55–57]. Therefore, we are confident of the thermodynamic consistency of our model, which is crucial for evaluating correctly the climate response to radiative forcing resulting from changes in the opacity of the atmosphere.

We proceed step-by-step as follows:

-

We take as dynamical system \(\dot{x}=F(x)\) the spatially discretized version of the partial differential equations describing the evolution of the climate variables in a baseline scenario with set boundary conditions and set values for, e.g., the CO\(_2 = 360\) ppm baseline concentration and the solar constant \(S=1365\) \(\mathrm{Wm}^{-2}\). We assume, for simplicity, that system does not feature daily or seasonal variations in the radiative input at the top of the atmosphere. We run the model for 2400 years in order to construct a long control run. Note that the model relaxes to its attractor with an approximate time scale of 20–30 years.

-

We study the impact of perturbations using a specific test case. We run a first set of \(N=200\) perturbed simulations, each lasting 200 years, and each initialized with the state of the model at year \(200 + 10k\), \(k=1,\ldots ,200\). We choose as perturbation field X(x) the additional convergence of radiative fluxes due to changes in the atmospheric CO\(_2\) concentration. Therefore, such a perturbation field has non-zero components only for the variables directly affected by such forcings, i.e. the values of the temperatures at the resolved grid points of the atmosphere and at the surface. In each of these simulation we perturbed the vector flow by doubling instantaneously the CO\(_2\) concentration. This corresponds to having \(\dot{x}=F(x)\rightarrow \dot{x}=F(x)+\epsilon \Theta (t)X(x)\). Note that the forcing is well known to scale proportionally to the logarithm of the CO\(_2\) concentration [6].

-

By plugging \(T(t)=T_a(t)=\Theta (t)\) into Eq. 6, we have that :

$$\begin{aligned} \frac{\mathrm {d}}{\mathrm {d}t}\langle \Psi \rangle _0^{(1)}(t)=\epsilon G_\Psi ^{(1)}(t) \end{aligned}$$(8)We estimate \(\langle \Psi \rangle _0^{(1)}(t)\) by taking the average of response of the system over a possibly large number of ensemble members, and use the previous equation to derive our estimate of \( G_\Psi ^{(1)}(t)\), by assuming linearity in the response of the system. In what follows, we present the results obtained using all the available \(N=200\) ensemble members, plus, in some selected cases, showing the impact of having a smaller number (\(N=20\)) of ensemble members. It is important to emphasize that framing the problem of climate change using the formalism of response theory gives us ways for providing simple yet useful formulas for defining precisely the climate sensitivity \(\Delta _\Psi \) for a general observable \(\Psi \), as \(\Delta _\Psi =\mathfrak {R}\{\chi ^{(1)}_{\Psi }(0)\}\). Furthermore, if we consider perturbation modulated by a Heaviside distribution, we have the additional simple and useful relation:

$$\begin{aligned} \Delta _\Psi =\frac{2}{\pi \epsilon }\int d\omega '\mathfrak {R}\big \{\langle \Psi \rangle _0^{(1)}{(\omega ')}\big \}, \end{aligned}$$(9)which relates climate response at all frequencies to its sensitivity, as resulting from the validity of the Kramers–Kronig relations. We remark that using a given set of forced experiments it is possible to derive information on the climate response to the given forcing for as many climatic observables as desired. It is important to note that, for a given finite intensity \(\epsilon \) of the forcing, the accuracy of the linear theory in describing the full response depends also on the observable of interest. Moreover, the signal to noise ratio and, consequently, the time scales over which predictive skill is good may change a lot from variable to variable.

-

We want to be able to predict at finite and infinite time the response of the system to some other pattern of CO\(_2\) forcing. Following [36], we choose as a pattern of forcing one of the classic IPCC scenarios, namely a 1 % yearly increase of the CO\(_2\) concentration up to its doubling, and we perform a set of additional \(N=200\) perturbed simulations performed according to such a protocol. Since, as mentioned above, the radiative forcing is proportional to the logarithm of the CO\(_2\) concentration, this corresponds to choosing a new time modulation that can be approximated as a linear ramp of the form

$$\begin{aligned} T_b^\tau (t)=\epsilon t/\tau , \quad 0\le t \le \tau , \quad T_b^\tau (t)=1, t>\tau , \end{aligned}$$(10)where \(\tau =100 \log (2)\) years \(\sim 70\) years is the doubling time. Therefore, for each observable \(\Psi \) we compare the result of convoluting the estimate of the Green function obtained in the previous step with time modulation \(T_b(t)\) with the ensemble average obtained from the new set of simulations.

4 Results

The response theory sketched above allows us in principle to study the change in the statistical properties of any well-behaved, smooth enough observable. Nonetheless, problems naturally emerge when we consider finite time statistics, finite number of ensemble members, and finite precision approximations of the response operators. The Green functions of interest are derived using Eq. 8 as time derivative of the ensemble averaged time series of the observed response to the probe forcing whose time modulation is given by the Heaviside distribution. Clearly, the response is not smooth unless the ensemble size \(N\rightarrow \infty \). Therefore, taking numerically the time derivative leads to having a very noisy estimate of the Green function, which might also depend heavily on the specific procedure used for computing the discrete derivative. This might suggest that the procedure is not robust. Instead, we need to keep in mind that we aim at using the Green function exclusively as a tool for predicting the climate response. Therefore, if we convolute with the Green function with a sufficiently smooth modulating factor T(t) as in Eq. 6, the small time scales fluctuations of the Green function, albeit large in size, become of no relevance, because they are averaged out. This is further eased if, instead of looking for predictions valid for observables defined at the highest possible time resolution of our model, we concentrate of suitably time averaged quantities. Clearly, while it is in principle possible to define mathematically the climate response on the time scale of, e.g., one second, this has no real physical relevance. Looking at the asymptotic behaviour of the susceptibility \(\chi ^{(1)}_\Psi (\omega )\) for \(\omega \rightarrow \infty \) it is possible to derive what is, depending on the signal-to-noise ratio, the time scale over which we can expect to be able to perform meaningful predictions; see discussion in [36].

In order to provide an overlook of the practical potential of the response theory in addressing the problem of climate change, we have decided to focus on two climatological quantities of general interest, namely the yearly averaged surface temperature \(T_S\) and the yearly total precipitation \(P_y\). Such quantities have obvious relevance for basically any possible impact study of climate change, and, while there is much more in climate change than studying the changes in \(T_S\) and \(P_y\), these are indeed the first quantities one considers when benchmarking the performance of a climate model and when assessing whether climate change signals can be detected.

Another issue one needs to address is the role of the spatial patterns of change in the considered quantities. The change in the globally average surface temperature \(\{ T_S\}\) Footnote 2 has undoubtedly gained prominence in the climate change debate and in the IPCC negotiations targets are tailored according to such an indicators. Nonetheless, the impacts of climate change are in fact local and one needs to investigate the spatial patterns of the change signals [12]. Evidently, one expects that coarse grained (in space) quantities will have a better signal-to-noise ratio and will allow for performing higher precision climate projection using response theory. On the other side, the performance of linear response theory at local scale might be hindered by the presence of local strongly nonlinear feedbacks, such as the ice-albedo feedback, which have less relevance when spatial averaging is performed. In what follows, we will consider observables constructed from the spatial fields of \(T_S\) and \(P_y\) by performing different levels of coarse graining. We will begin by looking into globally averaged quantities, and then address the problem of predicting the spatial patterns of climate change.

4.1 Globally Averaged Quantities

We begin our investigation by focusing on the globally averaged surface temperature \(\{T_S\}\) and the globally averaged yearly total precipitation \( \{P_y\} \). In what follows, we perform the analysis using the model output at the highest available resolution (1 day) but present, for sake of convenience and since we indeed focus on yearly quantities, data where a 1-year band pass filtering is performed.

4.1.1 From Green Functions to Climate Predictions

Figure 2 shows the ensemble average performed over \(N=200\) members of the change of \(\langle \{T_S\}\rangle \) (panel a) and \(\langle \{P_y\} \rangle \) (panel b) as a result of the instantaneous doubling of the CO\(_2\) concentration described in the previous section. We find that the asymptotic change in the surface temperature is given by the equilibrium climate sensitivity \(ECS=\Delta _{\{T_S\}}\sim 4.9\) K, which is just outside the likely range of values for the ECS elicited in [12]. Note that the models discussed in [12] include more complex physical and chemical processes and most notably a comprehensive representation of the dynamics of the ocean, plus featuring a seasonal and daily cycle of radiation, so that the comparison is a bit unfair. Nonetheless, we get the sense that PLASIM features an overall reasonable response to changes in the CO\(_2\). This is confirmed by looking at the long terms response of \(\langle \{P_y\} \rangle \) to \(\mathrm{CO}_2\) concentration doubling, for which we find \(\Delta _{\{P_y\}}\sim 125\) mm, which corresponds to about \(11.6\,\%\) of the initial value. These figures are also in good agreement with what reported in [12]. We will comment below on the relationship between the climate change signal for \(\{T_S\}\) and for \(\{P_y\}\).

Climate response to instantaneous \(\mathrm{CO}_2\) concentration doubling for a globally averaged mean annual surface temperature \(\langle \{ T_S\} \rangle \); and b the globally averaged annual total precipitation \(\langle \{P_y\}\rangle \) (b). The black line shows the \(N=200\) ensemble averaged properties of the doubling CO\(_2\) experiments. The light red shading indicates the variability as given by the \(2\sigma \) of the ensemble members. In each panel, the inset portrays the corresponding Green function (Color figure online)

In each panel of Fig. 2 we show as inset the corresponding Green function computed according to Eq. 8. We find that both Green functions have to first approximation an exponential behaviour, even if one can expect also important deviations, as discussed in [36]. We will not elaborate on this. Instead we note that \(G_{\{P_y\}}^{(1)}\) is more noisy that \(G_{\{T_S\}}^{(1)}\), as a result of the fact that \(\langle \{P_y\} \rangle ^{(1)}(t)\) has stronger high frequency contribution to its variability than \(\langle \{T_S\} \rangle ^{(1)}(t)\), i.e. \(\langle \{P_y\} \rangle ^{(1)}(t)\) has, unsurprisingly, has a much shorter decorrelation time, because it refers to the much faster hydrological cycle related processes.

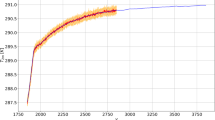

Climate change projections for a the globally averaged mean annual surface temperature \(\langle \{T_S\} \rangle \); and b the globally averaged annual total precipitation \(\langle \{P_y\}\rangle \). The black line shows the \(N=200\) ensemble averaged properties of the experiments where we have a \(1\,\%\) per year \(\mathrm{CO}_2\) concentration increase up to doubling. The light red shading indicates the variability as given by the \(2\sigma \) of the ensemble members. The blue shading indicates the interannual variability of the control run. The thick blue line is the projection obtained using the Green functions derived using \(N=200\) ensemble members of the instantaneous CO\(_2\) concentration doubling experiments. The dashed lines correspond to ten projections each obtained using Green functions derived from \(N=20\) ensemble members of the instantaneous CO\(_2\) concentration doubling experiments (Color figure online)

Figure 3 provides a comparison between the statistics of \(\langle \{T_S\} \rangle (t)\) and \(\langle \{P_y\} \rangle (t)\) obtained by performing \(N=200\) simulations where we increase the CO\(_2\) concentration by \(1\,\%\) per year until doubling, and the prediction of the response theory derived by performing the convolution of the Green functions shown in Fig. 2 with the ramp function given in Eq. 10. We have that the prediction of the ensemble average \(\langle \{T_S\} \rangle _0^{(1)}\) and of the ensemble average \(\langle \{P_y\} \rangle _0^{(1)}\) (blue thick lines) obtained using \(N=200\) ensemble members for the doubling CO\(_2\) experiments is in good agreement for both observables with the results of the direct numerical integrations.

More precisely, we have that the prediction given by the climate response lies within the variability of the \(N=200\) direct simulations for basically all time horizons. The range of variability is depicted with a light red shade, centered on the ensemble mean represented by the black line. Instead, within a time window of about 40–60 years, the response theory slightly underestimates the true change in both \(\{T_S\}\) and \(\{P_Y\}\): we will investigate below the reasons for this mismatch.

It is well known that it is hard to construct a very large set of ensemble members in the case of state-of-the-art climate models, due to the exorbitant computing costs associated with each individual run. Additionally, in general, in the modelling practise the continuous growth in computing and storing resources is typically invested in increasing the resolution and the complexity of climate models, rather than populating more attentively the statistics of the run of a given version of a model. Therefore, even in coordinated modelling exercises contributing to the latest IPCC report, the various modelling groups are requested to deliver a number of ensemble member of the order of 10 [12].

In order to partially address the problem of assessing how the number of ensemble members can affect our prediction, we present in Fig. 3 the result of the prediction of the climate change signal for \(\langle \{T_S\} \rangle \) and \(\langle \{P_y\} \rangle \) obtained by constructing the Green function using only \(N=20\) members of the ensemble of simulations of instantaneous CO\(_2\) concentration doubling. Ten thin dashed blue lines represent in each panel the result of such predictions. Interestingly, each of the prediction obtained with a reduced number of ensemble members also agrees with the direct numerical simulations when we consider \(\langle \{T_S\} \rangle \), because the spread around our best estimates obtained using the full set of ensemble members is minimal.

Instead, when looking at \(\langle \{P_y\} \rangle \), we have that only some of the predictions derived using reduced ensemble sets lie within the variability of the direct numerical simulations, with a much larger spread around the prediction obtained with the full ensemble set. This fact is closely related to the fact that the corresponding Green function is noisier, see Fig. 2, and suggests that in order to have good convergence of the statistical properties of the response operator a better sampling of the attractor is needed.

We conclude that the computational requirements for having good skill in predicting the changes in \(\{P_y\}\) are harder than in the case of \(\{T_S\}\). Indeed, the surface temperature is a good quantity in terms of our ability to predict it, and in terms of being a good indicator of climate change, as it allows one to find clear evidence of the departure of the statistics from the unperturbed climate conditions. This is in agreement with the actual practice of the climate community [12].

Climate response at different time horizons. a Time needed for detecting climate climate change: ensemble average of the response is outside the interannual variability with \(95\,\%\) statistical significance (black line); no overlap between the \(95\,\%\) confidence intervals representing the interannual variability of the control run and the ensemble variability of the projection (red line). b Transient climate sensitivity—TCS—as measured by the ensemble average of the \(\langle T_S \rangle \) at time \(\tau \) where doubling of the CO\(_2\) concentration is realized following an exponential increase at rate of \(r_\tau =100(2^{(1/\tau )}-1)\) % per year, where \(\tau \) is expressed in years. The equilibrium climate sensitivity (ECS) is indicated (Color figure online)

4.1.2 Climate Change Detection and Climate Inertia

We dedicate some additional care in studying the climate response in terms of changes in the globally averaged surface temperature. We wish to use the information gathered so far for assessing some features of climate change in different scenarios of modulation of the forcing. In particular, we focus on studying the properties of the following expression:

when different values of the CO\(_2\) concentration doubling time \(\tau \) are considered. This amounts to performing a family of climate projections where the rate of increase of the CO\(_2\) concentration is \(r_\tau =100(2^{(1/\tau )}-1)\) % per year (where \(\tau \) is expressed in years). As limiting cases, we have \(\tau =0\) — instantaneous doubling, as in fact described by the probe scenario \(T_a(t)\), and \(\tau \rightarrow \infty \), which provides the adiabatic limit of infinitesimally slow changes.

We want to show how response theory — and, in particular, Eq. 11 — can be used for providing a flexible tool in the problem of climate change detection. The definition of a suitable probabilistic framework for assessing whether an observed climate fluctuations is caused by a specific forcing is extremely relevant (including for legal and political reasons) and is since the early 2000s the subject of an intense debate [13, 14]. In this case, given our overall goals, we provide a rather unsophisticated treatment of the problem. In Fig. 4a we present our results, where different scenarios of forcings are considered. The black line tells us what is the time it takes for the projected change in the ensemble average to lie outside the \(95\,\%\) confidence interval of the statistics of the unperturbed control run, i.e. practically being outside its range of interannual variability. More precisely, the black line portrays the following escape time:

where \(\sigma (\{T_S\})_0\sim 0.24\) K is the standard deviation of the yearly averaged time series of \(\{T_S\}\) in the control run.

Nonetheless, we would like to be able to assess when not only the projected change in the ensemble average is distinguishable from the statistics of the control run, but, rather, when an actual individual simulation is incompatible with the statistics of the unperturbed climate, because we live in one of such realizations, and not on any averaged quantity. Obviously, in order to assess this, one would require performing an ensemble of direct simulations, thus giving no scope to any application of the response theory. We can observe, though, from Fig. 3a, that the interannual variability of the control run and the ensemble variability of the perturbed run are rather similar (being the same if no perturbation is applied). Therefore, we heuristically assume that the two confidence intervals have the same width. The red line portrays the second escape time

such that for \(t\ge t_{min,4}^\tau \) it is extremely unlikely that any realization of the climate change scenario due to a forcing of the form \(T_b^\tau \) perturbed run has statistics compatible with that of the control run. In other terms, \(t_{min,4}^\tau \) provides a robust estimate of when detection of climate change in virtually unavoidable from a single run, while \(t_{min,2}^\tau \) gives an estimate of the time horizon after which it makes sense to talk about climate change. We would like to remark that using the Green functions reconstructed from the reduced ensemble sets as shown in Fig. 3, one obtains virtually indistinguishable estimates for \(t\ge t_{min,2}^\tau \) and \(t\ge t_{min,4}^\tau \) for all values of \(\tau \). This suggests that these quantities are rather robust.

We can detect two approximate scaling regimes, with changeover taking place for \(r_\tau \sim 1\,\%\) per year.

-

for large values of \(r_\tau \) (\({\ge }1\,\%\) per year), we have that \(t_{min,2}^\tau , t_{min,4}^\tau \propto r_\tau ^{-0.6}\)

-

for moderate values of \(r_\tau \) (\({\le }1\,\%\) per year), we have that \(t_{min,2}^\tau , t_{min,4}^\tau \propto r_\tau ^{-1}\). The latter corresponds to the quasi-adiabatic regime and one finds that, correspondingly, \(t_{min,4}^\tau , t_{min,2}^\tau \propto \tau \).

A quantity that has attracted considerable interest in the climate community is the so-called transient climate sensitivity (TCS), which, as opposed to the ECS, which looks at asymptotic temperature changes, describes the change of \(\{T_S\}\) at the moment of \(\mathrm{CO}_2\) concentration doubling following a \(1\,\%\) per year increase [81]. The difference between ECS and TSC gives a measure of the inertia of the climate system in reaching the asymptotic increase of \(\{T_S\}\) realized with doubled CO\(_2\) concentration. Using response theory, we can extend the concept of transient climate sensitivity by considering any rate of exponential increase of the CO\(_2\) concentration, as discussed in [36]. Using Eq. 11, we have that:

describes the change in the expectation value of \(\{T_S\}\) at the end of the ramp of increase of CO\(_2\) concentration. As suggested by the argument proposed in [81], one expects that the TCS is a monotonically increasing function of \(\tau \) (see Fig. 4b), and that the difference between the ECS and TCS becomes very small for large values of \(\tau \), because we enter the quasi-adiabatic regime where the change in the CO\(_2\) is slower than the slowest internal time scale of the system.

4.1.3 A Final Remark

We would like to make a final remark of the properties of the response of the global observables \(\{T_S\}\) and \(\{P_y\}\). Looking at Figs. 2 and 3, one is unavoidably bound to observe that the temporal pattern of response of \(\{T_S\}\) and \(\{P_y\}\) are extremely similar. In agreement with [81] (see also [12]), we find that to a very good approximation the following scaling applies for all simulations:

where K is one degree Kelvin. In other terms, the two Green functions \(G_{\{T_S\}}^{(1)}\) and \(G_{\{P_y\}}^{(1)}\) are, to a very good approximation, proportional to each other when yearly averages are considered.

Note that this scaling relations does not agree with the naive scaling imposed by the Clausius–Clapeyron relation controlling the partial pressure of saturated water vapour. In fact, were the Clausius–Clapeyron scaling correct, one would have

The reasons why a scaling between changes in \(\{T_S\}\) and \(\{P_y\}\) exists at all, and why it looks like a modified version of a Clausius–Clapeyron-like law, are hotly debated in the literature [82–84].

4.2 Predicting Spatial Patterns of Climate Change

While there is a very strong link between the change in the globally averaged precipitation and of the globally averaged surface temperature, important differences emerge when looking at the spatial patterns of change of the two fields [83]. We will investigate the spatial features of climate response in the next subsection.

The methods of response theory allow us to treat seamlessly also the problem of predicting the climate response for (spatially) local observables. It is enough to define appropriately the observable \(\Psi \) and repeat the procedure described in Sect. 3.1. As a first step in the direction of assessing our ability to predict climate change at local scale, we concentrate the zonally (longitudinally) averaged fields \([T_S](\lambda )\) and \([P_y](\lambda )\), where we have made explicit reference to to the dependence on the latitude \(\lambda \). Studying these fields is extremely relevant because it allows us to look at the difference of the climate response at different latitudinal belts, which are well known to have entirely different dynamical properties, and, in particular, to look at equatorial-polar contrasts.

We show in Fig. 5 the long-term change in the climatology of the \([T_S]\), i.e., the climate sensitivity for each latitudinal band. We have confirmation of well-established findings: the response of the surface temperature is much stronger in the higher latitudes than in the tropical regions, as a result of the local ice-albedo feedback and, secondarily, of the increased transport resulting from changes in the circulation. Additionally, there is a clear asymmetry between the two hemispheres, with the response in the northern hemisphere being notably larger, as a result of the larger land masses [12]

Patterns of climate response—surface temperature \(T_S\). a Projection of the change of \([T_S]\). The black and red lines indicates the escape times as presented in Fig. 4 for \(\tau =70\). b Difference between the ensemble average of the direct numerical simulations and the predictions obtained using the response theory (Color figure online)

In this case, we need first to construct a different Green function for each latitude from the instantaneous CO\(_2\) doubling experiments. Then, we perform the convolution of the Green functions with the same ramp function and obtaining the prediction of the response to the \(1\,\%\) per year increase in the CO\(_2\) concentration for each latitude.

Figure 6 shows the result of our application of the response theory for predicting the response of the zonally averaged surface temperature to the considered forcing scenario: panel a displays the projection performed using response theory, and panel b shows the difference between the results obtained from the actual direct numerical simulations. We first observe that the agreement is extremely good except for the time window 20–60 y in the high latitudes of the Southern Hemisphere and 40–60 y in the high latitudes of the Northern Hemisphere, where the response theory underestimates the true amount of surface temperature increase.

Climate response at different time horizons for the \(T_S\) spatial field. a Projection obtained using response theory for a time horizon of 20 y. b Same as in a, but for 60 y. c Difference between the ensemble average of the direct numerical simulations and the prediction of the response theory for a time horizon of 20 y. d Same as in c, but for 60 y (Color figure online)

Something interesting happens when looking at the latitudinal profile of the time horizons of escape from the statistics of the control run presented in Fig. 4a given by \(t_{min,2}^\tau \) and \(t_{min,4}^\tau \), where \(\tau \) is 70 y. Interestingly, we find that, while the climate response is weaker in the tropics, one is able to detect climate change earlier than in the high latitude regions, the reason being that the interannual variability of the tropical temperature is much lower. Therefore, the signal-to-noise ratio is higher. We need to note that our model does not feature the processes responsible for important tropical variability like El-Niño-Southern Oscillation (ENSO), so this result might be not so realistic, yet it seems to have some conceptual merit.

It is also rather attractive the fact that we are now able to relate to specific region the cold bias of the prediction for \(\{T_S\}\) seen in Fig. 3. The reason for the presence of such discrepancies concentrated in the high latitude regions is relatively easy to ascertain. We can in fact attribute this to the inability of a linear method like the one used here to represent accurately the strong nonlinear ice-albedo feedback, which dominates the climate response of the polar regions, and especially over the sea areas.

This can be made even more clear by looking at the performance of the response theory in predicting the 2D patterns of change of \(T_S\). This is shown in Fig. 7. where we see more clearly the geographical features of the changes in \(T_S\) described above, and find confirmation that the sources of biases come exactly from the high-latitude sea-land margins, where sea ice is present. We also note that while for the time horizon of 20 y the bias between the simulations and the predictions of the response theory is comparable to the actual signal of response, the situation greatly improves for the time horizon of 60 y. As we see here, there is good hope in being able to predict quite accurately the climate response also at local scale, with no coarse graining involved, at least in the case of the \(T_S\) field.

As a final step, we wish to discuss climate projections for the quantity \([P_y]\). Figure 5 shows that our model give a picture of long-term climate change that is overall compatible with the findings of more complex and modern models: we foresee an increase of the precipitation in the tropical belt and in the regions of the storm tracks in the mid-latitude of the two hemispheres, whereas the change in small or negative in the remaining parts of the world. In other terms the regions that get a lot of rain are going to get even more, while drier regions do not benefit from the overall increase in the globally averaged precipitation. As we see, there is basically no correspondence between the patterns of change of \([P_y]\) and \([T_S]\) (despite the strong link between the response of the two globally averaged quantities) because precipitation patterns change as a result of a complex interplay of small and large scale processes, involving local thermodynamic exchanges, evaporation, as well as purely dynamical processes [83].

Patterns of climate response—yearly total precipitation \(P_y\). a Projection of the change of the zonal average of \(P_y\) for different time horizons. The black and red lines indicates the escape times as presented in Fig. 4 for \(\tau =70\). b Difference between the ensemble average of the direct numerical simulations and the predictions obtained using the response theory (Color figure online)

Panel a of Fig. 8 shows how response theory predicts the change in \([P_y]\) at different time horizons. We note that climate change is basically detectable only in the regions where strong increases of precipitation takes place, and the horizon of escape from the control is much later in time compared to the case of \([T_S]\), the basic reason being that the variability of precipitation is much higher.

Panel b of Fig. 8 shows the bias between the ensemble average of the numerical simulations and the prediction of the response theory. We notice that such biases are much stronger than in the case of \([T_S]\) (Fig. 6b). In particular, we find rather interesting features in the time horizon of 20–60 y for basically all latitudes. As we know from Fig. 3b, the global average of such biases is rather small, but they are quite large locally. The projections performed using response theory underestimate the effects of some (relevant) nonlinear phenomena that impact the latitudinal distribution of precipitation, such as:

-

Change in the relative size and symmetry of the ascending and descending branches of the Hadley cell, and in the position of its poleward extension: it is well known that strong warming can lead to a shift in the ITCZ, where where strong convective rain occurs, and to an extension of the dry areas of descending [85]. Correspondingly, the projections performed using the response theory are biased dry in the equatorial belt and biased wet in the subtropical band.

-

Impact on the water budget of the mid-latitudes of the increased water transport from the tropical regions taking place near the poleward extension of the Hadley cell, plus the change in the position of the stork track [86]. As a result, the projection performed using the response theory is biased dry compared to the actual simulations in the mid-latitudes.

Comparing Figs. 3a, b, 6b, and 8b makes it clear that the performance of methods based on linear response theory depends strongly on the specific choice of the observable. In fact, when we choose an observable whose properties are determined by processes that are rather sensitive to the applied forcing, higher order corrections will be necessary to achieve a good precision.

5 A Critical Summary of the Results

This paper has been devoted to providing a statistical mechanical conceptual framework for studying the problem of climate change. We find it useful to construct the statistical properties of an unavoidably non-autonomous system like the climate using the idea of the pullback attractor and of the time-dependent measure supported on it. Response theory allows to practically compute such a time-dependent measure starting from the invariant measure of a suitably chosen reference autonomous dynamics.

Using a the general circulation model PLASIM, we have developed response operators for predicting climate change resulting from an increase in the concentration of CO\(_2\). The model features only O\((10^5)\) degrees of freedom as opposed to O\((10^8)\) or more of state-of-the-art climate models [12], but, despite its simplicity, delivers a pretty good representation of Earth’s climate and of its long-term response to CO\(_2\) increase. PLASIM provides a low-resolution yet accurate representation of the dynamics of the atmosphere and of its coupling with the land surface, the ocean and the sea ice; it uses simplified but effective parametrizations for subscale processes including radiation, diffusion, dissipation, convection, clouds formation, evaporation, and precipitation in liquid and solid form. The main advantage of PLASIM is its flexibility and the relative low computer cost of launching a large ensemble of climate simulations. The main disadvantage is the lack of a representation of a dynamic ocean, which implies that we have a cut-off at the low frequencies, because we miss the multidecadal and centennial variability due to the ocean dynamics. We have decided to consider classic IPCC scenarios of greenhouse forcing in order to make our results as relevant as possible in terms of practical implications.

The construction of the time dependent measure resulting from varying concentrations of CO\(_2\) has been achieved by first performing a first set of simulations where \(N=200\) ensemble members sampled from a long control run undergo an instantaneous doubling of the initial CO\(_2\) concentration. Through simple numerical manipulations, we have been able to derive the linear Green function for any observable of interest, which makes it possible to perform predictions of climate change to an arbitrary pattern of change of the CO\(_2\) concentration using simple convolutions, under the hypothesis that linearity is obeyed to a good degree of approximation.

We have studied the skill of the response theory in predicting the change in the globally averaged quantities as well as the spatial patterns of change to a forcing scenario of \(1\,\%\) per year increase of CO\(_2\) concentration up to doubling. We have focused on observables describing the properties of two climatic quantities of great geophysical interest, namely the surface temperature and the yearly total precipitation. The predictions of the response theory have been compared to the results of additional \(N=200\) direct numerical simulations performed according this second scenario of forcing.

The performance of response theory in predicting the change in the globally averaged surface temperature and precipitation is rather good at all time horizons, with the predicted response falling within the ensemble variability of the direct simulations for all time horizons except for a minor discrepancy in the time window 40–60 y. Additionally, our results confirm the presence of a strong linear link in the form of modified Clausius–Clapeyron relation between changes in such quantities, as already discussed in the literature.

We have also studied how sensitive is the climate projection obtained using response theory to the size of the ensemble used for constructing the Green function. This is a matter of great practical relevance because it is extremely challenging to run a large number of ensemble members for specific scenarios using state-of-the-art climate models, given their extreme computational cost [12]. We have then tested the skill of projections of globally averaged surface temperature and of globally averaged yearly total precipitation performed using Green functions constructed using \(N=20\) ensemble members. We obtain that the quality of the projection is only moderately affected, and especially so in the case of the temperature observable.

By performing convolution of the Green function with various scenarios where the CO\(_2\) increases at different rates, we are able to study the problem of climate change detection, associating to each rate of increase a time frame when climate change becomes statistically significant. Another new aspect of climate response we are able to investigate thanks to the methods developed here is the rigorous definition of transient climate sensitivity, which basically measures how different is the actual climate response with respect to the case of quasi-adiabatic forcings, and defines the thermal inertia of climate.

Building upon the ideas proposed in [36], we have shown that response theory allows to put in a broader and well defined context concepts like climate sensitivity:

-

we understand that the equilibrium climate sensitivity relates to the zero-frequency response of the system to doubling of the CO\(_2\) concentration: it is then clear that if we are not able to resolve the slowest time scales of the climate system, we will find so-called state-dependency [87, 88] when estimating equilibrium climate sensitivity on slow (but not ultraslow) time scales, because we sample different regions of the climatic attractor;

-

we have that concepts like time-scale dependency [89] of the climate sensitivity are in fact related to the concept of inertia of the climate response, which can be explored by generalizing the idea of transient climate sensitivity [81] to all time scales of perturbations;

-

in the case of coupled atmosphere-ocean models, looking at the transient climate sensitivity for different rates of CO\(_2\) concentration increase can be useful for understanding their multiscale properties.

The analysis of the spatial pattern of climate change signal using response theory is entirely new and never attempted before. Clearly, when going from the global to local scale we have to expect lower signal-to-noise ration, as the variability is enhanced, and the possibility that linearity is a worse approximation, as a result of powerful local nonlinear effects. Response theory provides an excellent tool also for predicting the change in the zonal mean of the surface temperature, except for an underestimation of the warming in the very high latitude regions in the time horizons of 40–60 y. This is, in fact, the reason for the small bias found already when looking at the prediction of the globally averaged surface temperature. By looking at the 2D spatial patterns, we can associate such bias to a misrepresentation of the warming in the region where the presence of sea-ice is most sensitive to changing temperature patterns. The fact that linear response theory has problems in capturing the local features of a strongly nonlinear phenomenon like ice-albedo feedback makes perfect sense. It is remarkable that, instead, response theory is able to predict accurately the change in the surface temperature fields in most regions of the planet.

The prediction of the spatial patterns of change in the precipitation is much less successful, as a result of the complex nonlinear processes controlling the structure of the precipitative field. In particular, response theory is not able to deal effectively with describing the poleward shift of the storm tracks, in the widening of the Hadley cell, and in the change of the ICTZ.

This paper provides a possibly convincing case for constructing climate change predictions in comprehensive climate models using concepts and methods of nonequilibrium statistical mechanics. The use of response theory potentially allows to reduce the need for running many different scenarios of climate forcings as in [12], and to derive, instead, general tools for computing climate change to any scenario of forcings from few, selected runs of a climate model. Additionally, it is possible to deconstruct climate response to different sources of forcings apart from increases in CO\(_2\) concentration, e.g. changes in CH\(_4\) and aerosols concentration, in land surface cover, in solar irradiance—and recombine it to construct very general climate change scenarios. While this operation is easier in a linear regime, it is potentially doable also in the nonlinear case, see [49] for details.

6 Challenges and Future Perspectives

The limitations of this paper point at some potentially fascinating scientific challenges to be undertaken. Let’s list some of them:

-

A fundamental limitation of this study is the impossibility to resolve the centennial oceanic time scales. It is of crucial importance to test whether response theory is able to deal with prediction on a wider range of temporal scales, as required when ocean dynamics is included. We foresee future applications using a fully coupled yet efficient model like FAMOUS [90].

-

One should perform a systematic investigation of how appropriate linear approximation is in describing climate response to forcings, by computing estimates of the Green function using different level of CO\(_2\) increases and testing them against a wide range of time modulating functions describing different scenarios of forcings.

-

It is necessary to study in greater detail what is the minimum size of the ensemble needed for achieving a good precision in the construction of the Green function; the requirement depends on the specific choice of the observable, including how it is constructed in terms of spatial and temporal averages of the actual climatic fields.

-

It is crucial to look at the effect of considering multiple classes of forcings in the climate change scenarios and test how suitable combination of the individual Green functions associated to each separate forcing are able to predict climate response in general.

Different points of view on the problem of predicting climate response should as well be followed. The ab-initio construction of the linear response operator has proved elusive because of the difficulties associated with dealing effectively with both the unstable and stable directions in the tangent space. It is promising to try to approach the problem by using the formalism of covariant Lyapunov vectors (CLVs) [91–94] for having a convincing representation of the tangent space able to separate effectively and in an ordered manner the dynamics on the unstable and stable directions. CLVs have been recently shown to have great potential for studying instabilities and fluctuations in simple yet relevant geophysical systems [95]. By focusing on the contributions coming form the stable directions, one can also expect that such an approach might allow for estimating the — otherwise hard to control — error in the evaluation of the response operator introduced when applying the standard form of the fluctuation–dissipation theorem in the context of nonequilibrium systems possessing singular invariant measure. This would help understanding under which conditions climate-related applications of the fluctuation–dissipation theorem [17–21] have hope of being successful.

One of the disadvantages of the CLVs is that constructing them is rather demanding in terms of computing power and requires a global (in time) analysis of the dynamics of the system, in order to ensure covariance, thus requiring relevant resources in terms of memory. Additionally, one expects that all the CLVs of index up to approximately the Kaplan–Yorke dimension of the attractor of the system are relevant for computing the response. Such a number, albeit typically much lower than the number of degrees of freedom, can still be extremely large for an intermediate complexity or, a fortiori, in a comprehensive climate model.