Abstract

In this paper, we discuss and compare empirically various ways of computing multistep quantile forecasts of demand, with a special emphasis on the use of the quantile regression methodology. Such forecasts constitute a basis for production planning and inventory management in logistics systems optimized according to the cycle service level approach. Different econometric methods and models are considered: direct and iterated computations, linear and nonlinear (GARCH) models, simulation and non-simulation based procedures and parametric as well as semiparametric specifications. These methods are applied to compute multiperiod quantile forecasts of the monthly microeconomic time series from the popular M3 competition database. According to various accuracy measures for quantile predictions, the best procedures are based on simulation techniques using predictive distributions generated by either the quantile regression methodology combined with random draws from the uniform distribution or parametric and nonparametric bootstrap techniques. These methods lead to large reductions in the total costs of logistics systems as compared with non-simulation based procedures. For example, in the case of forecasting 12 months ahead, relatively short time series and a high cycle service level, the quantile regression based simulation approach reduces the average supply chain cost per unit of output by about 70–85%. At the shortest horizons, the GARCH model should be seriously considered among the preferred forecasting solutions for production and inventory planning.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Demand forecasting in supply chain and logistics management is important for several reasons, including service level targeting, costs minimization and managing the overall risk of supply chains. It constitutes the first step an organization should undertake in its strategic and operational planning (see, for example, Chopra and Meindl 2007, Chap. VII). Because of this, forecasting is among the most important components of logistics systems. The uncertainty concerning future demand makes supply chain management very challenging, while the forecast accuracy to a large extent defines the overall cost of a supply chain (see Syntetos et al. 2016, and compare, for example, Thomassey 2010), even if managers not always consider costs and key financial measures when evaluating their forecasts (see results of the survey studies discussed by McCarthy et al. 2006). From the perspective of risk forecasting and management, the so-called demand risk is considered as one of the most important components of the total risk faced by a supply chain, which consists of risks within a company such as process risks and control risks, risks outside of a company but within the supply chain, encompassing demand risks and supply risks, and risks outside of the supply chain—so-called environmental risks (see Pohl et al. 2010). Thus, the demand risks are considered as a part of the within supply chain risks. On the other hand, the supply chain risks are also divided into operational risks associated with some ‘usual’ uncertainties and so-called disruption risks of natural or anthropogenic kind such as natural disasters, terrorist attacks or economic crises (see, for example, Tang 2006; Choi and Chiu 2012, section 1.1). The ‘usual’ uncertainties defining operational risks are those resulting from demand and supply uncertainty or costs variability, and thus the demand risks are basically treated as operational uncertainties.Footnote 1 However, in principle, the ‘unusual’ uncertainties will also influence the variability of demand, the uncertainty of supply as well as the total variability of operational costs. This may call for considering demand risk within a different notion of uncertainty, namely that called Knightian uncertainty in recognition of the work of Knight (1921), in which case the probability model of a random variable of interest to the decision-maker is unknown and not estimable with a reasonable accuracy and which differs from the notion of risk under a known probability distribution. It also calls for a broader usage of semiparametric methods in more objectively dealing with what Knight (1921) names a third type of uncertainty and which is the error associated with estimating a probability distribution.

Quantile forecasts of demand are of interest to both supply chain managers, who want, for example, to find the optimal level of product availability or the supply chain’s production or distribution potential, as well as managers in a single firm willing to optimize the firm’s inventory level (see, for example, Pearson 2006; Chopra and Meindl 2007, Chap. XII; Ferbar et al. 2009; Tratar 2010, 2015; Wang and Petropoulos 2016; and the dynamic formulation of the newsvendor model in Alwan et al. 2016).Footnote 2 In particular, multiperiod quantile forecasts are indispensable under the forecast based periodic review (T, S) policy in the popular cycle service level (CSL) framework (see, for example, Strijbosch and Moors 2005; Syntetos and Boylan 2008; and Syntetos et al. 2010).Footnote 3 In such policy, the inventory position is reviewed at equidistant points in time and replenished to a certain level depending on the assumed service level requirements. To explicitly define this inventory policy, we introduce the following notation:

- T:

Prespecified review (replenishment) period (in months),

- S:

Order-up-to (target) inventory level (in units),

- L:

Constant lead time (in months),

- \( y_{t} \):

Random demand in period t,

- \( y_{t,n} \):

Cumulated random demand over n consecutive periods starting in t, i.e., \( y_{t,n} = \sum\nolimits_{i = 0}^{n - 1} {y_{t + i} }, \)

- \( \hat{y}_{t} ,\hat{y}_{t,n} \):

Forecasts of the mean values of \( y_{t} \) and \( y_{t,n} \), respectively, i.e., the conditional expectations \( E(y_{t} |\varOmega_{t - 1} ) \) and \( E(y_{t,n} |\varOmega_{t - 1} ) \) given the set of information available in period \( t - 1 \), \( \varOmega_{t - 1} , \)

- \( \hat{\sigma }_{t}^{2} ,\hat{\sigma }_{t,n}^{2} \):

Forecasts of the variances of \( y_{t} \) and \( y_{t,n} \), respectively, i.e., the conditional variances \( Var(y_{t} |\varOmega_{t - 1} ) = E(y_{t}^{2} |\varOmega_{t - 1} ) - E^{2} (y_{t} |\varOmega_{t - 1} ) \) and \( Var(y_{t,n} |\varOmega_{t - 1} ) = E(y_{t,n}^{2} |\varOmega_{t - 1} ) - E^{2} (y_{t,n} |\varOmega_{t - 1} ), \)

- \( \varPhi ( \cdot ),\varPhi_{n} ( \cdot ) \):

Standardized conditional cumulative distribution functions of \( y_{t} \) and \( y_{t,n} \), respectively, which are assumed to be absolutely continuous and constant over time, and

- CSL:

Quantile order of interest to the decision-maker, i.e., service level requirement in terms of the probability of no stockout during the replenishment cycle.

Then, the order-up-to level S is defined through the equation:

i.e., as the quantile of order CSL of the cumulated actual demand over the period \( T + L \). This is solved as:

Under the Gaussianity, stationarity and independence assumptions, (1) can be rewritten as:

The scaling or ‘square root of \( T + L \)’ method (2) is a popular textbook solution and an industry standard for computing quantile forecasts of demand, and is also used as an approximation in the case of correlated demand.

In this paper, we undertake the task of an empirical evaluation of different procedures of multiperiod quantile forecasting which do not require the stationarity and independence assumptions, encompassing direct and iterated approaches, maximum likelihood (ML) and quantile regression estimation, simulation and non-simulation based methods and models with homoscedastic as well as GARCH errors. The basis for this study constitutes a popular dataset of demand data—the monthly microeconomic time series from the M3 forecast competition database (see Makridakis and Hibon 2000). The main part of this paper is preceded by an introductory discussion concerning alternative decision-theoretic approaches that can be of use in supply chain and logistics forecasting. Then, Sect. 3 presents the analyzed methods together with our evaluation framework, encompassing different measures of accuracy for quantile forecasts and tests of quantile unbiasedness, and Sect. 4 discusses the main findings. Finally, the last section offers brief conclusions.

2 Forecasting for supply chain and logistics operations

It is worth recalling the basic fact that the CSL parameter can be optimized assuming that the costs of under- and overstocking are linear functions of the forecast error (see, for example, Chopra and Meindl 2007, Chap. XII). Thus, it is assumed that the supply chain cost is given as:

where \( c_{u} \) and \( c_{o} \) are the unit costs of under- and overstocking, and y and \( \hat{y} \) are the realization and the forecast, respectively. The function (3) is the well known LINLIN (doubly linear) forecast loss (cost) function, which is of the ‘prediction error’ type, i.e., it is an example of a forecast loss dependent solely on the forecast error. Then, applying to (3) the Bayes rule:

leads to optimal forecasts in the form of quantile predictions (see, for example, Gneiting 2011a, b; Komunjer 2013), i.e., the optimal forecast is as follows:

where Y is distributed according to the forecaster’s subjective or objective predictive distribution F of demand and, for simplification, we assume throughout the discussion that the distribution F is absolutely continuous and strictly increasing. Thus, under the loss function (3), optimal forecasts in a given logistics system take the form of quantiles of order \( CSL = \tau = \tfrac{{c_{u} }}{{c_{u} + c_{o} }} \) of the demand distribution.

An alternative way of optimizing a logistics system is based on the notions of Expected Shortage (ES) and Fill Rate (FR). The Expected Shortage is defined as the mean level of lost sales under a prespecified product availability S, i.e., as:

where f is the probability density function associated with F. On the other hand, the Fill Rate is the fraction of demand immediately available from stock. In its so-called infinite period version, it is defined as follows:

The Fill Rate (6) is also known as the type II service level, while the CSL is called the type I service level or the vendor service level (see, for example, Lewis 1997; Brandimarte and Zotteri 2007).

Then, the optimization of a logistics system according to the type II service level requires solving:

or, alternatively,

where \( \tau \in (0,\,1) \) denotes the required service level. In practice, this is often performed under the Gaussianity assumption, \( Y \sim N(m_{Y} ,\sigma_{Y} ) \), in which case we have:

with

where \( \varphi \) and \( \varPhi \) are the probability density function and the distribution function of the standard normal distribution.

On the contrary, the so-called single period version of the Fill Rate is given as (see Chen et al. 2003; Thomas 2005):

and, under the assumption that the demand variable is positive, fulfils the condition \( FR(S) \le FR^{1} (S) \) (see Chen et al. 2003). Thus, a calibration of a logistics system utilizing \( FR^{1} \) will require solving the equation:

It is worth noting that, similar to the case of the type I service level, we can specify forecast loss functions whose expected values are uniquely minimized at the statistical functionals of the distributions of demand which solve the Eqs. (7) and (10). In other words, these statistical functionals are elicitable in the sense of Gneiting (2011b). This is explicitly stated in the following two lemmas formulated with the help of the so-called identification function approach of Osband (1985) (for details, see Gneiting 2011b and the references therein).

Lemma 1

Let F be a class of absolutely continuous distributions with a positive mean value\( 0 < EY < \infty \), and let\( T:{\text{F}} \to R^{ + } \)be the functional defined according to the infinite period Fill Rate requirement of the form:

for a certain service level\( \tau \in (0,\,1) \). Then the loss function\( L:R \times R^{ + } \to R \):

where a is F-integrable and\( \varphi \)is two times differentiable and strictly convex with derivative\( \varphi^{\prime} \)and such that\( \varphi \,{\mathbf{1}}_{{( - \infty ,{\kern 1pt} x]}} \)is F-integrable for every\( x \in R \), is strictly consistent for T.

Proof

Let \( S = T(Y) \) for a certain distribution in F, i.e.,

and let \( f(y) \) be the probability density function of Y. In order to check that \( EL(Y,\hat{y}) \) is uniquely minimized at S, let us consider:

Taking the derivative with respect to \( \hat{y} \), we have:

The first-order condition is then as follows:

or, equivalently,

The thesis follows since \( \varphi \) is strictly convex and the expression in square brackets in (13) is negative for \( \hat{y} < S \) and positive for \( \hat{y} > S \). \( \square \)

Lemma 2

Let F be a class of absolutely continuous distributions on the positive half-axis such that\( E\left( {\frac{1}{Y}} \right) \)exists, and let\( T:{\text{F}} \to R^{ + } \)be the functional defined according to the single period Fill Rate requirement of the form:

for a certain service level\( \tau \in (0,\,1) \). Then the loss function\( L:R^{ + } \times R^{ + } \to R \):

where a is F-integrable and\( \varphi \)is two times differentiable and strictly convex with derivative\( \varphi^{\prime} \)and such that\( E\left( {\frac{\varphi (Y)}{Y}{\mathbf{1}}_{{\{ Y \le x\} }} } \right) \)exists for every\( x \in R^{ + } \), is strictly consistent for T.

Proof

Let \( S = T(Y) \), i.e.,

and let \( f(y) \) be the probability density function of Y. As previously, let us consider the expected value of the examined loss function:

Then, differentiating with respect to \( \hat{y} \), we arrive at:

Thus, the first-order condition is the following:

As previously, the thesis follows since \( \varphi \) is strictly convex and the expression in square brackets in (16) is negative for \( \hat{y} < S \) and positive for \( \hat{y} > S \). \( \square \)

Thus, for a large class of distributions fulfilling certain moment conditions, the functionals defined according to (7) and (10) are elicitable in the sense of Gneiting (2011b). If a statistical functional of interest to the decision-maker is elicitable, the loss function associated with it can be utilized at both the computation and evaluation steps of forecasting procedures in a way similar to the LINLIN loss and the MAPE (Mean Absolute Percentage Error) accuracy measure (see Weiss and Andersen 1984; Weiss 1996; Engle and Manganelli 2004; and Bruzda 2016, 2018) or the loss functions discussed recently in the context of the estimation and forecasting of financial risk (see Fissler and Ziegel 2016; Dimitriadis and Bayer 2017; Maume-Deschamps et al. 2017; Patton et al. 2017), even if such loss functions are not of the ‘prediction error’ type.Footnote 4

Example loss functions (12) and (15) are obtained if one takes \( \phi (y) = y^{2} \) and \( a(y) = \tau y^{2} \) in (12), and \( \phi (y) = \tfrac{1}{2}y^{2} \) and \( a(y) = \tfrac{1}{2}y \) in (15), which leads, respectively, to:

and

Loss functions such as (17) and (18) can be interpreted, for example, in terms of costs associated with maintaining a firm’s production and/or distribution potential.

In the next section we concentrate on supply chain and logistics forecasting under the LINLIN loss and discuss the many ways of computing multiperiod forecasts of this type. We also present our evaluation framework encompassing tests of quantile unbiasedness and different measures of accuracy for quantile forecasts. Similar considerations under loss functions such as (17) and (18) will constitute an interesting topic for further studies.

3 Quantile forecasts of demand

It is worth emphasizing that in the practice of supply chain management there is a need to compute two sorts of multiperiod quantile forecasts of demand. On the one hand, a decision-maker may be interested in quantiles of the predictive distribution of demand in some future period \( n + h \), i.e., in quantile forecasts of the variable \( D_{n + h} \), while, on the other, there is a need to forecast quantiles of sums of the variables \( D_{n + i} \) in the whole forecast horizon, i.e., quantiles of the variable \( \sum\nolimits_{i = 1}^{h} {D_{n + i} } \). The first sort of multiperiod quantile forecasts can be utilized in early warning systems signaling changes on the market and possible threads (compare De Nicolò and Lucchetta 2017), in medium- or long-term (production and distribution) capacity planning, in production planning if products are not stocked (for example, in the food sector) and will also be used in the case of delays in information flows required for operational decisions. The second type of quantile forecasts is required in inventory and production management systems operating under the CSL approach and, as was already mentioned, is often computed on the basis of the approximation (2), although exact solutions are also considered under prespecified data generating mechanisms of the ARMA type, for example, AR(1), MA(1) and ARMA(1,1) in Ali et al. (2012), and ARIMA(0,1,1) in Babai et al. (2013). Our further discussion is mainly focused on the second type of quantile forecasts, which are also exclusively considered later in the empirical part of this paper.

In the general linear case of (possibly nonstationary) ARMA processes, the exact forecasting solution is directly derived as follows. Let us consider an ARMA(p, q) process of the form:

where \( \varepsilon_{t} \sim i.i.d. \) The process (19) can be rewritten in the equivalent form of an infinite moving average:

Then, the forecasts of the sums of the process in the forecast horizon h are given as:

where \( \varOmega_{t} \) is the set of information available in period t, and the forecast error is expressed as:

Thus, the variance of the forecast error is the following:

and quantile forecasts of \( \sum\nolimits_{i = 1}^{h} {y_{t + i} } \) are obtained as follows:

where \( \Phi \) is the standardized conditional distribution function of demand.

In the case of ARMA processes with some sort of conditional heteroscedasticity,Footnote 5 the conditional variance of either \( y_{n + h} \) or \( \sum\nolimits_{i = 1}^{h} {y_{n + i} } \) can be derived directly from its definition. For example, for \( y_{n + h} \) we have:

However, when computing multiperiod quantile forecasts under conditional heteroscedasticity, one should resort to simulations since in such a case the conditional distribution becomes a nonlinear combination of the error terms \( \varepsilon_{t} \), rendering the use of, for example, quantiles of the standard normal distribution, \( z_{\tau } \), inappropriate. This, together with the need to account for estimation errors, sets simulation techniques in the centre of our interest when computing multiperiod forecasts of demand for inventory management applications.

The basis for our forecasting procedures in the empirical part of the paper constitute autoregressive models with polynomial trend functions, which is justified taking into account the results of unit root tests on the data used in this study, indicating (trend-)stationarity in the majority of cases. The maximum autoregression order is set to \( k = 6 \) and the maximum degree of polynomial trend functions is set to \( p = 2 \). The AICC criterion in its versions for the ML and quantile regression estimations is used in model selections. In the case of the direct approach to forecasting, the models are always chosen separately for each forecast horizon, as are also the quantile regression equations for different quantiles, utilized here either in the direct quantile forecasting or in a quantile regression based simulation approach. Since the analyzed data are trending, the approximation (2) has been excluded from the comparison.

The following forecasting procedures are evaluated:

- 1–2

The least squares estimation of models of the form:

$$ y_{t} = \sum\limits_{j = 0}^{q} {\alpha_{j} t^{j} } + \beta_{1} y_{t - 1} + \cdots + \beta_{m} y_{t - m} + \varepsilon_{t} $$(26)(\( q \le p = 2,\;m \le k = 6 \)) with quantile forecasts computed according to the formula (24), where quantiles of the standardized conditional distribution are obtained nonparametrically (1) or are taken from the standard normal distribution (2).Footnote 6

- 3–4

The least squares estimation of the ‘direct’ (MIDAS-type) equation:

$$ D_{t}^{T} = \sum\limits_{i = 0}^{T - 1} {y_{t + i} } = \sum\limits_{j = 0}^{q} {\alpha_{j} t^{j} } + \beta_{1} y_{t - 1} + \cdots + \beta_{m} y_{t - m} + \eta_{t} $$(27)(\( q \le p = 2,\;m \le k = 6 \)) with quantile correction computed nonparametrically (3) or assuming the normal distribution (4).

- 5.

A direct quantile regression approach, in which the quantile regression estimator (see Koenker and Bassett 1978; Koenker 2005) is applied to estimate the (27) and the quantile forecasts are computed in one step.

- 6.

A simulation approach utilizing the quantile regression estimation of the (26) for different quantile orders τ (compare the method of forecasting the Quantile Autoregressive—QAR—processes in Koenker 2011), specified here separately for each quantile order; in the first step, 1000 quantile regressions are estimated for quantile orders being equally spaced values between 0 and 1; the estimated equations are directly used to obtain the one-step ahead predictive distribution of \( y_{t} \); then, random sequences of quantile orders from those used in the first step are generated, the one-step ahead quantile forecasts are substituted for actuals in the quantile regressions chosen according to the random sequences of quantile orders to produce the two-step ahead predictive distribution, and the computations are continued in this way to obtain the h-step ahead predictive distribution; finally, the distributions are cumulated and the forecasts of \( \sum\nolimits_{i} {y_{t + i} } \) are computed with the Matlab function ‘quantile’.

- 7–8

The basic bootstrap method as presented in Clements (2005), §4.2.3, based on the percentile method of Efron and the approach suggested in Pascual et al. (2001) (see the formula (4.9) in Clements 2005), performed on the (26) without bias correction; the nonparametric (7) or parametric (8) bootstrap is used, i.e., to generate the bootstrap samples, either resampled residuals are utilized or innovations are generated assuming the normal distribution;Footnote 7 the predictive distributions of \( y_{t + i} \) are cumulated and the final forecasts are computed; the number of bootstrap samples is set to 1000.

- 9–10

The model (26) is estimated jointly with the GARCH(1,1) equation for the conditional variance assuming the conditional normal distribution; in the estimation, the Matlab functions ‘garchfit’ and ‘garchget’ from the Econometrics Toolbox are used; the predictive distributions of \( y_{t + i} \) are generated either by resampling standardized residuals (9) or through random draws from the standard normal distribution (10); the predictive distributions are then cumulated and the final forecasts are obtained; the estimation errors are not accounted for;Footnote 8 1000 replications.

- 1L–2L

The model (26) is estimated on logarithms of the data, and either resampled residuals (1L) or random draws from the normal distribution (2L) are used to generate the predictive distributions for logarithms of \( y_{t + i} \); the logarithmic transformation is then reversed, and the distributions are cumulated; 1000 replications.

- 6L–10L

As 6–10 but all estimations are performed on logarithms and the final forecasts are computed as in 1L–2L.

Our evaluation of the forecast accuracy is based on the following aggregate measures introduced in Bruzda (2018):

Relative LINLIN costs:

$$ LINLIN^{ * \tau } = \frac{1}{K}\sum\limits_{i = 1}^{K} {\frac{1}{T}\sum\limits_{t = 1}^{T} {\frac{{\left\{ {\tau {\kern 1pt} u_{it}^{ + } + (1 - \tau ){\kern 1pt} u_{it}^{ - } } \right\}}}{{\hat{y}_{it}^{\tau } }}} } = \frac{1}{K}\sum\limits_{i = 1}^{K} {\frac{1}{T}\sum\limits_{t = 1}^{T} {LINLIN_{it}^{ * \tau } } } , $$(28)$$ LINLIN^{ * * \tau } = \frac{1}{K}\sum\limits_{i = 1}^{K} {\frac{1}{T}\sum\limits_{t = 1}^{T} {\frac{{\left\{ {\tau {\kern 1pt} u_{it}^{ + } + (1 - \tau ){\kern 1pt} u_{it}^{ - } } \right\}}}{{y_{it} }}} } = \frac{1}{K}\sum\limits_{i = 1}^{K} {\frac{1}{T}\sum\limits_{t = 1}^{T} {LINLIN_{it}^{ * * \tau } } } , $$(29)Hit Ratio:

$$ Hit^{\tau } = \frac{1}{K}\sum\limits_{i = 1}^{K} {\frac{1}{T}\sum\limits_{t = 1}^{T} {I_{{\left\{ {y_{it} - \hat{y}_{it}^{\tau } > 0} \right\}}} } } , $$(30)Fill Rate:

$$ FR^{\tau } = \frac{1}{K}\sum\limits_{i = 1}^{K} {\frac{1}{T}\sum\limits_{t = 1}^{T} {\left\{ {1 - \frac{{(y_{it} - \hat{y}_{it}^{\tau } )^{ + } }}{{y_{it} }}} \right\}} } , $$(31)where \( \hat{y}_{it}^{\tau } \) and \( y_{it} \) are, respectively, the tth quantile forecast for quantile of order τ for the ith time series and the corresponding realization, \( u_{it}^{ + } \) and \( u_{it}^{ - } \) are the absolute values of the positive and negative forecast errors, K denotes the number of series, T is the number of (fixed horizon) forecasts for a single time series, and \( I_{A} \) is the indicator function for the set A.

It is worth underlining that the aggregate measures (28)–(31) may be interesting not only as a statistical approach to the evaluation of quantile forecasts, but they can also be interpreted in economic terms. In particular, (28) and (29) may be thought of as proportional to supply chain costs per unit of output and per unit of sales, respectively, if the unit costs of over- and understocking are equal across different products and over time or, alternatively, to supply chain costs per the (Dollar or Euro) values of output and sales if the unit costs of over- and understocking are proportional to the unit prices of products (see Bruzda 2018).

Furthermore, we also examine average ranks of our forecasting procedures in rankings based on the LINLIN loss:

and perform the Kupiec (1995) test for quantile unbiasedness, which is based on the following test statistic:

where N denotes the number of hits (violations) and \( K \cdot T \) is the total number of forecasts for a given quantile. Under the hypothesis that the Hit Ratio is equal to the nominal value \( 1 - \tau \), the statistic (33) has asymptotically the χ2(1) distribution. The forecast evaluation presented in the next section was executed after replacing all negative demand forecasts with zeros.

4 Empirical results and discussion

The dataset used in this study comprises the 474 monthly microeconomic time series from the M3 forecast competition (see Makridakis and Hibon 2000), which are described as ‘shipments’.Footnote 9 The time series are of different lengths—the first 277 of them are of length 68–69, while the last 197—of length 126. The ratio-to-moving average method with seasonal factors recomputed on the whole range of observations was applied to seasonally adjust the data if the given time series was treated as seasonal in the exponential smoothing procedures in the M3 competition.Footnote 10

The maximum forecast horizon h was set to 12, and all forecasting models were built on data shortened by 12 observations in order to facilitate a forecast evaluation. Since one forecast for each forecast horizon was calculated, in computing the aggregate accuracy measures we set \( T = 1 \). The following quantile orders τ were examined: 0.5; 0.75; 0.9; 0.95; 0.975 and 0.99, while the detailed statistics are presented mainly for 0.75 and 0.95.Footnote 11 Our presentation of the empirical outcomes is given separately for the two subsets of time series. It is worth underlining that the relative LINLIN costs and the Fill Rate statistics were also computed in trimmed (robustified) versions excluding 5 (10, 20)% of the forecasts (compare Bruzda 2018).

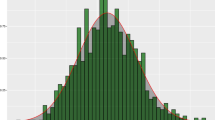

Turning to the analysis of our empirical results, first we note that, for the short time series of length 68–69 and the relative LINLIN costs (28) and (29), the dominant solution turns out to be the procedure 6L, making use of random draws of quantile orders and quantile regression estimation performed on logarithms of the data (see Tables 1 and 2 and, for the cost (29), also compare the first two panels in Fig. 1). Only in the case of one- or two-step ahead quantile forecasts (and up to four-step ahead for \( \tau = 0.99 \)) this procedure is outperformed by some other methods—most of all the bootstrap approach and, particularly for one-step ahead forecasting, the simulations based on GARCH models and the normal distribution (the procedures 10 and 10L). The dominance of the procedure 6L (and also the GARCH models one step ahead) over the other methods becomes even more visible if the robustified (trimmed) versions of the costs (28) and (29) are examined (not reported). The bootstrap procedures with ols estimation on logarithms or, alternatively, levels of the data appear to be the second best solution.

It is interesting to note that the simulation based procedures lead to large reductions in the total costs of logistics systems as compared with non-simulation based methods. In particular, in the case of forecasting 12 months ahead and τ = 0.95, the quantile regression based simulation approach reduces the average supply chain cost per unit of output by about 70% (see Table 1). For \( \tau \in \{ 0.975;\,0.99\} \) these reductions are even larger and for τ = 0.99 reach about 85%. This indicates that the choice of a forecasting procedure for production and inventory planning can have a substantial impact on the total costs of a logistics system.

On the other hand, in the case of the longer data, for which the mean relative costs are reported in Tables 3 and 4 (also compare the last two panels in Fig. 1), we observe that the simulations utilizing quantile regression (the procedures 6 and 6L) are able to outperform all the other methods usually exclusively for the lower-order quantiles among those considered and at shorter horizons (no longer than 8–9 months in the case of \( \tau \in \{ 0.5;\,0.75\} \), and exclusively for two- and three-step ahead forecasts for \( \tau = 0.9 \)). For longer forecast horizons, the dominant solution becomes the bootstrap method, most often when performed on levels of the data and in its parametric version (the method 8). For example, the method 8 produced the best forecasts on average at horizons in the range 7–12 for \( \tau = 0.9 \) (not reported). Thus, longer-term quantile forecasting in this dataset can be based on linear processes and the normal distribution, although accounting for estimation errors seems then to be crucial taking into account large discrepancies between the values of forecast accuracy measures for the different approaches (see Tables 3, 4). For example, for \( h = 12 \) and τ = 0.95, the methods 8 and 9 lead to about 60% reductions in the average supply chain costs per unit of output (see Table 3). In general, these reductions are somewhat smaller than those observed for the first dataset, and, furthermore, they also tend to differ to a large extent among the different procedures.

Besides, it is worth noticing that the GARCH models turn out to be very useful in short horizon forecasting (up to three steps ahead), particularly for large quantiles, more often producing the best outcomes than in the case of the shorter time series in the first dataset. For example, for \( h = 1 \) and τ = 0.95, the GARCH based procedure 10 reduces the average cost (28) by about 17% (or more) as compared with the non-GARCH based methods (see Table 3). On the contrary, for h lying in the middle of the range examined here, the linear methods 1 and 2 (especially 1) often outperform the other approaches. All of this is also confirmed to a large extent when the robustified accuracy measures are analyzed.

It is worth noting that the direct ols or quantile regression based forecasting approaches (the methods 3–5) often provide inferior solutions relative to the iterated ols based methods or the simulations utilizing quantile regression, in particular in the case of short time series, longer forecast horizons and large quantiles. Interestingly, we also observe that generating predictive distributions with a large number of quantile regressions estimated on levels (the method 6) can—and, in fact, often does—provide better one-step ahead quantile forecasts than the direct quantile forecasting (the method 5).

Turning to the analysis of the Hit Ratios, whose values transformed to the Kupiec statistics are collated for τ = 0.75 and 0.95 in Tables 5 and 6, first we see that, in terms of quantile unbiasedness, all the methods perform poorly in multistep forecasting. The only exception to this rule are median forecasts for the first dataset, in which case all procedures show excellent performance for almost all h. As can be seen in Table 5, this generally does not apply to larger quantiles, although it can be noted that, for this dataset, in the case of τ = 0.75, the bootstrap methods (7, 8, 7L, 8L) together with the procedure 6L are able to fulfill the assumed service level requirements at longer horizons, i.e., for h in the range 7–12. For quantiles above 0.75, the performance of the different forecasting procedures applied to the first dataset deteriorates further, with only few methods providing quantile unbiased forecasts at horizons beyond \( h = 1 \). However, with almost no exceptions, the unbiasedness at horizons longer than \( h = 2 \) can not be confirmed. Interestingly, the best performance in terms of longer horizon forecasting most often is shown by the parametric bootstrap methods, which produce the smallest and, at the same time, the closest to their nominal values Hit Ratio statistics for large h.

On the other hand, in the case of the second dataset (see the example statistics in Table 6), the performance of the different methods in terms of quantile unbiasedness at higher quantiles improves, although usually the unbiasedness does not hold for forecasts more than three steps ahead, with the exception of the procedure 1, which produces quantile unbiased forecasts up to eight steps ahead for \( \tau = 0.99 \) and up to six steps ahead for \( \tau = 0.975 \). At the longest horizons, the smallest (and, at the same time, the closest to their nominal values) Hit Ratios are for the bootstrap methods, especially when performed on levels of the data and in their parametric versions. Interestingly, for \( \tau = 0.75 \) a good performance at horizons from one to three in terms of the Hit Ratios is achieved under the Gaussianity assumption (i.e., with the procedures 2, 4 and 8).

Figure 2 shows average ranks of the different forecasting procedures for quantiles of orders 0.75 and 0.95. It turns out that, for the first dataset, this part of the forecast evaluation favors the method 6L (usually for forecast horizons starting from h = 5–7) and the bootstrap approach, particularly the one based on the normal distribution if shorter forecast horizons are considered. On the other hand, if the second dataset is analyzed, the favored approaches are all based on modeling levels and, for longer forecast horizons, take the form of the bootstrap method (particularly the parametric procedure 8, although for \( \tau = 0.95 \) the procedure 7 largely dominates the other methods), while in the case of shorter horizon forecasting, the ols based iterated procedures 1 and/or 2 lead to the best outcomes.

Finally, examining the Fill Rates, which are not reported to save space, we observe that, once more, the dominant solution for the time series of length 68–69 are the parametric simulation approach accounting for estimation errors and the simulations utilizing quantile regression, with the latter providing best forecasts at horizons 2–8 or even longer, while the methods 1, 2, 7 and 8 can be distinguished in the case of the second dataset, with the bootstrap approach performing particularly well at the longer horizons among those considered and more often in its parametric form. We also note that, after robustification, for the largest quantiles and particularly in the case of the methods indicated above, the Fill Rate statistics often reach 100%.

The detailed analysis presented above shows that, although certain discrepancies across the accuracy metrics and the datasets are observed, it is safe to say that the most promising approaches to multistep quantile forecasting of demand are those which either explicitly account for estimation errors or make use of predictive distributions generated with the help of quantile regression. In forecasting at the shortest horizons, especially based on larger estimation samples, the AR-GARCH models with trend functions are able to outperform the other approaches examined here. It is also worth underlining that, for our datasets, there are substantial discrepancies among the different methods’ performance in terms of the costs associated with forecasts, particularly in the case of longer-term forecasting, and that it is rarely the case that these methods produce quantile unbiased multistep predictions.

5 Conclusions

Multistep forecasting of demand in logistics systems optimized according to the cycle service level approach is often based on exact analytic formulas ignoring estimation errors. Simulation (Monte Carlo or bootstrap) procedures are rarely used to this end. Our empirical results obtained for the popular dataset of 474 monthly microeconomic time series from the M3 forecast competition indicate that simulation based procedures should certainly be taken into account when deciding upon a forecasting approach for supply chain management. In particular, the best forecasts at annual horizons were obtained either with simulation techniques utilizing quantile regression or with procedures able to explicitly account for estimation errors. It is worth underlining that, according to our results, a careful choice of a forecasting procedure for multistep quantile forecasting is crucial since huge discrepancies in the forecast accuracy were observed among the analyzed methods, indicating that such a choice will have a substantial influence on the total costs of a logistics system. From the cost perspective, it may be advisable to invest in fast computational tools, which will deliver better forecasts on a regular (for example, weekly or monthly) basis.

Our empirical study also shows that direct approaches to quantile forecasting (either ols or quantile regression based), which use disaggregated date to forecast aggregates, are often outperformed by some sort of iterated forecasting, especially in the case of high cycle service levels. Finally, we also found that GARCH models, particularly when estimated on longer time series, should be seriously considered among the preferred solutions for short horizon forecasting of demand in supply chain management applications.

Among future research challenges in the field of supply chain and logistics forecasting one can distinguish a comparison of different approaches to computing other types of optimal forecasts of demand, in particular those fulfilling the type II service level constraints, defined earlier in this paper. It will be worth investigating, for example, if the different functionals of demand can be better forecasted with the help of models belonging to the recently introduced class of generalized autoregressive score models (see Creal et al. 2013; Patton et al. 2017). Furthermore, it is also worth examining if some other characteristics of demand, such as single period Fill Rates for a given CSL used in performance forecasting, which are not elicitable in the sense of Gneiting (2011b) (for proof, see Bruzda 2018), can be made elicitable jointly with other functionals of demand. In a recent paper, Fissler and Ziegel (2016) have shown that Expected Shortfall is elicitable jointly with Value-at-Risk and that this fact has immense implications for the derivation, evaluation and testing of forecasts of this functional (also compare Dimitriadis and Bayer 2017; Patton et al. 2017). This finding opens a new chapter of applied forecasting in finance and sets further research questions concerning possible higher-order elicitability of other functionals of interest to decision makers, including popular demand characteristics. Some steps in this direction have been already taken in Bruzda (2018), but more is needed.

Notes

A comprehensive review of definitions and classifications of supply chain risks that were developed in the literature can be found in Sodhi et al. (2012) and Heckmann et al. (2015). There are also different mathematical approaches to mitigate supply chain risks. A non-exhaustive review of them is given in Choi and Chiu (2012, Chapter 1), and covers the use of such diversified concepts as von Neumann–Morgenstern utility functions, profit target probability measures, Value-at-Risk and Conditional Value-at-Risk, and mean-risk (mean–variance, mean-semivariance) analysis (see Choi and Chiu 2012, Table 1.1).

It is worth mentioning that quantile forecasts are also widely discussed in the context of energy forecasting. For example, the Global Energy Forecasting Competition (GEFC) is concerned with comparing probabilistic forecasting methods through the computation of forecasts of load or price at 99 quantiles on a rolling basis—see Hong et al. (2016) for a review of the winning solutions in the GEFC 2014, encompassing both popular and new more advanced methods (often making use of the quantile regression methodology). This discussion supplements the stream of research concerning the use of simplified and advanced solutions to the problem of constructing mean value forecasts in energy forecasting (see, for example, De Cosmis et al. 2013; Leopold 2016; Nalcaci et al. 2018).

Compare Dallery and Babai (2005), Syntetos et al. (2010) and Hill et al. (2015) for an introduction to quantile forecasting in a simple reorder point based system and certain approximate solutions to this task. Also note that reorder point systems will in principle require the use of continuous time models as in, for example, Silver et al. (2009).

Properties of optimal forecasts with loss functions of the ‘prediction error’ type, i.e., depending solely on the forecast error, have been established by Patton and Timmermann (2007). In particular, in the simple case of the conditional mean dynamics, such loss functions lead to optimal forecasts of the form ‘the conditional mean + a correction term’, where the correction term depends solely on the distribution of innovations and the loss function (also see Granger 1969; Christoffersen and Diebold 1997). For an introduction to the decision-theoretic forecasting in a more general case, see Clements (2005, Chap. VI); Granger (1969, 1999), Granger and Machina (2006), Patton and Timmermann (2010) and Gneiting (2011b).

A conditional heteroscedasticity in demand is usually dealt with smoothing the mean absolute deviation (MAD) and smoothing the mean squared error (MSE) as in, for example, Strijbosch and Moors (2005) and Syntetos et al. (2010a, b), but GARCH models have also been suggested and discussed in this context (Datta et al. 2007, 2009).

In the nonparametric quantile estimation in this paper, we rely on the Matlab function ‘quantile’.

Under the Gaussianity assumption, it is potentially possible to obtain an analytic solution accounting for estimation errors, but such a solution would be only approximate and rather tedious to derive.

Attempts to apply bootstrap methods to the AR(m)-GARCH(1,1) models with trend functions estimated on the data from the M3 competition were unsuccessful, leading to much worse results as compared with the simulation approach. Also, our experimentation with simulations based on linear ARCH (LARCH) models estimated as in Francq and Zakoïan (2010), which we considered to be close competitors to the simulations utilizing quantile regression estimation, turned out to provide inferior results compared to the other methods when evaluated on our dataset.

The other empirical results are available upon request.

References

Ali MM, Boylan JE, Syntetos AA (2012) Forecast errors and inventory performance under forecast information sharing. Int J Forecast 28:830–841

Alwan LC, Xu M, Yao D-Q, Yue X (2016) The dynamic newsvendor model with correlated demand. Decis Sci 47:11–30

Babai MZ, Ali MM, Boylan JE, Syntetos AA (2013) Forecasting and inventory performance in a two-stage supply chain with ARIMA(0,1,1) demand: theory and empirical analysis. Int J Prod Econ 143:463–471

Brandimarte P, Zotteri G (2007) Introduction to distribution logistics. Wiley, Hoboken

Bruzda J (2016) Quantile forecasting in operational planning and inventory management. An initial empirical verification. Dyn Econ Model 16:5–20

Bruzda J (2018) Quantile smoothing in supply chain and logistic forecasting. Working Paper, Nicolaus Copernicus University, Toruń. https://papers.ssrn.com/sol3/papers.cfm?abstract_id=3038014

Chen J, Lin DKJ, Thomas DJ (2003) On the single item fill rate for a finite horizon. Oper Res Lett 31:119–123

Choi T-M, Chiu C-H (2012) Risk analysis in stochastic supply chains. A mean-risk approach. Springer, New York

Chopra S, Meindl P (2007) Supply chain management strategy, planning, and operations, 3rd edn. Pearson Education, Upper Saddle River

Christoffersen PF, Diebold FX (1997) Optimal prediction under asymmetric loss. Econ Theory 13:808–817

Clements MP (2005) Evaluating econometric forecasts of economic and financial variables. Palgrave Macmillan, New York

Creal D, Koopman SJ, Lucas A (2013) Generalized autoregressive score models with applications. J Appl Econ 28:777–795

Dallery Y, Babai Z (2005) Inventory management: forecast based approach vs standard approach. In: International conference on industrial engineering and systems management IESM05, May 2005, Marrakech, Morocco, hal-00118642

Datta S, Graham DP, Sagar N, Doody P, Slone R, Hilmola O-P (2009) Forecasting and risk analysis in supply chain management: GARCH proof of concept. In: Wu T, Blackhurst JV (eds) Managing supply chain risk and vulnerability. Tools and methods for supply chain decision makers. Springer, London, pp 187–203

Datta S, Granger CWJ, Barari M, Gibbs T (2007) Management of supply chain: an alternative modelling technique for forecasting. J Oper Res Soc 58:1459–1469

De Cosmis S, De Leone R, Kropat E, Meyer-Nieberg S, Pickl S (2013) Electric load forecasting using support vector machines for robust regression. In: EAIA and MatH ‘13 Proceedings of the Emerging M&S Applications in Industry & Academia/Modeling and Humanities Symposium, Article No. 9

De Nicolò G, Lucchetta M (2017) Forecasting the tail risks. J Appl Econ 32:159–170

Dimitriadis T, Bayer S (2017) A joint quantile and expected shortfall regression framework. Working Paper, University of Konstanz, arXiv:170402213v1

Engle RF, Manganelli S (2004) CAViaR conditional autoregressive Value at Risk by regression quantilies. J Bus Econ Stat 22:367–381

Ferbar L, Čreslovnik D, Mojškerc B, Rajgelj M (2009) Demand forecasting methods in a supply chain: smoothing and denoising. Int J Prod Econ 118:49–54

Fissler T, Ziegel JF (2016) Higher order elicitability and Osband’s principle. Ann Stat 44:1680–1707

Francq C, Zakoïan J-M (2010) Inconsistency of the MLE and inference based on weighted LS for LARCH models. J Econ 159:151–165

Gneiting T (2011a) Quantiles as optimal point forecasts. Int J Forecast 27:197–207

Gneiting T (2011b) Making and evaluating point forecasts. J Am Stat Assoc 106:746–762

Granger CWJ (1969) Prediction with a generalized cost of error function. Oper Res Q 20:199–207

Granger CWJ (1999) Outline of forecast theory using generalized cost functions. Span Econ Rev 1:161–173

Granger CWJ, Machina MJ (2006) Forecasting and decision theory. In: Elliott G, Granger CWJ, Timmermann A (eds) Handbook of economic forecasting, vol 1. North Holland, Amsterdam, pp 81–98

Heckmann I, Comes T, Nickel S (2015) A critical review on supply chain risk—definition, measure and modeling. Omega 52:119–132

Hill AV, Zhang W, Burch GF (2015) Forecasting the forecastability quotient for inventory management. Int J Forecast 31:651–663

Hong T, Pinson P, Fan S, Zareipour H, Troccoli A, Hyndman RJ (2016) Probabilistic energy forecasting: global Energy Forecasting Competition 2014 and beyond. Int J Forecast 32:896–913

Knight FH (1921) Risk, uncertainty and profit. Houghton Mifflin Company, Boston

Koenker R (2005) Quantile regression. Cambridge University Press, Cambridge

Koenker R (2011) Quantile regression, London School of Economics short course, 16–17 May 2011

Koenker R, Bassett G Jr (1978) Regression quantiles. Economics 46:33–50

Komunjer I (2013) Quantile prediction. In: Elliot G, Timmermann A (eds) Handbook of economic forecasting, vol 2. North Holland, Amsterdam, pp 961–994

Kupiec P (1995) Techniques for verifying the accuracy of risk management models. J Deriv 3:73–84

Leopold A (2016) Energy related system dynamic models: a literature review. Cent Eur J Oper Res 24:231–261

Lewis CD (1997) Demand forecasting and inventory control. A computer aided learning approach, Woodhead Publishing, Abington

Makridakis S, Hibon M (2000) The M3-competition: results, conclusions and implications. Int J Forecast 16:451–476

Maume-Deschamps V, Rullière D, Said K (2017) Multivariate extensions of expectiles risk measures. Depend Model 5:20–44

McCarthy TM, Davis DF, Golicic SL, Mentzer JT (2006) The evolution of sales forecasting management: a 20-year longitudinal study of forecasting practices. J Forecast 25:303–324

Nalcaci G, Özmen A, Weber GW (2018) Long-term load forecasting: models based on MARS, ANN and LR methods. Cent Eur J Oper Res. https://doi.org/10.1007/s10100-018-0531-1

Osband KH (1985) Providing incentives for better cost forecasting. Ph.D. thesis, University of California, Berkeley

Pascual L, Romo J, Ruiz E (2001) Effects of parameter estimation uncertainty on prediction densities: a bootstrap approach. Int J Forecast 17:83–103

Patton AJ, Timmermann A (2007) Properties of optimal forecasts under asymmetric loss and nonlinearity. J Econ 140:884–918

Patton AJ, Timmermann A (2010) Generalized forecast errors, a change of measure, and forecast optimality conditions. In: Bollerslev Y, Russell JR, Watson MW (eds) Volatility and time series econometrics: essays in honor of Robert F Engle. Oxford University Press, Oxford, pp 194–212

Patton AJ, Ziegel JF, Chen R (2017) Dynamic semiparametric models for expected shortfall (and Value-at-Risk). Working Paper, arXiv:170705108v1

Pearson M (2006) The application of prediction capability and adaptive target control to the newspaper business. Eur J Oper Res 168:475–491

Pohl H-C, Köhler H, Thomas D (2010) State of the art in supply chain risk management research: empirical and conceptual findings and a roadmap for the implementation in practice. Logist Res 2:33–44

Silver EA, Naseraldin H, Bischak DP (2009) Determining the reorder point and order-up-to-level in a periodic review system so as to achieve a desired fill rate and a desired average time between replenishments. J Oper Res Soc 60:1244–1253

Sodhi MS, Son B-G, Tang CS (2012) Researchers’ perspectives on supply chain risk management. Prod Oper Manag 21:1–13

Strijbosch LWG, Moors JJA (2005) The impact of unknown demand parameters on (R, S)-inventory control performance. Europ J Oper Res 162:805–815

Syntetos AA, Babai Z, Boylan JE, Kolassa S, Nikolopoulos K (2016) Supply chain forecasting: theory, practice, their gap and the future. Eur J Oper Res 252:1–26

Syntetos AA, Babai MZ, Davies J, Stephenson D (2010a) Forecasting and stock control: a study in a wholesaling context. Int J Prod Econ 127:103–111

Syntetos AA, Boylan JE (2008) Demand forecasting adjustments for service-level achievement. IMA J Manag Math 19:175–192

Syntetos AA, Nikolopoulos K, Boylan JE (2010b) Judging the judges through accuracy-implication metrics: the case of inventory forecasting. Int J Forecast 26:134–143

Tang CS (2006) Perspectives in supply chain risk management. Int J Prod Econ 103:451–488

Thomas DJ (2005) Measuring item fill-rate performance in a finite horizon. Manuf Serv Oper Manag 7:74–80

Thomassey S (2010) Sales forecasts in clothing industry: the key success factor of the supply chain management. Int J Prod Econ 128:470–483

Tratar LF (2010) Joint optimisation of demand forecasting and stock control parameters. Int J Prod Econ 127:173–179

Tratar LF (2015) Forecasting method for noisy demand. Int J Prod Econ 161:64–73

Wang X, Petropoulos F (2016) To select or to combine? The inventory performance of model and expert forecasts. Int J Prod Res 54:5271–5282

Weiss AA (1996) Estimating time series models using the relevant cost function. J Appl Econ 11:539–560

Weiss AA, Andersen AP (1984) Estimating time series models using the relevant forecast evaluation criterion. J R Stat Soc Ser A 147:484–487

Other sources

The Forecasting Principles website at http://www.forecastingprinciples.com/index.php/data [accessed 30.06.2018]

The website of the International Institute of Forecasters at https://forecasters.org/resources/time-series-data/m3-competition/ [accessed 30.06.2018]

Acknowledgments

This research was supported by the Polish National Science Center under Grant Nos. DEC-2013/09/B/HS4/02716 and DEC-2017/27/B/HS4/01025. The author expresses her sincere gratitude to the Anonymous Reviewers for their helpful suggestions and advice.

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Bruzda, J. Multistep quantile forecasts for supply chain and logistics operations: bootstrapping, the GARCH model and quantile regression based approaches. Cent Eur J Oper Res 28, 309–336 (2020). https://doi.org/10.1007/s10100-018-0591-2

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10100-018-0591-2

Keywords

- Production planning

- Inventory management

- Supply chain forecasting

- Quantile forecasts

- LINLIN loss

- M3 competition