Abstract

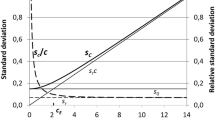

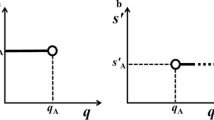

The interpretation and reporting of the results of measurements on materials in which the concentration of the analyte is close to, or may even be, zero has been the subject of much discussion, using such concepts as the limit of detection (LOD) and the limit of quantification (LOQ). While these concepts take the measurement uncertainty into account, they do not utilise the fact that the value of the measurand, i.e., the concentration, is constrained to be zero or greater. Taking this constraint into account, the distribution of values attributable to the measurand can be derived from the probability density function (PDF) that determines the distribution of the observed values. When this PDF is normal, the distribution of the values attributable to the measurand is a t distribution truncated at the lower limit \( t_L = -x_m/(s/\sqrt{n}) \) and re-normalised so that the total probability is one, where \( x_m \) is the mean of the n observed values and s is their standard deviation. When \( x_m \) is much greater than \( s/\sqrt{n} \), the distribution reverts to the unmodified t distribution. The probability that the value of the measurand is above or below a given limit can be calculated directly from this truncated t distribution, so interpreting the result does not require concepts such as LOD and LOQ. The approach also deals with the problem of negative observed values.
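The calculation summarised above can be sketched in Python. This is a minimal illustration under stated assumptions, not the authors' code: it assumes normally distributed observations, uses only the standard library, and evaluates the t cumulative distribution function by Simpson's rule instead of a statistics-library routine. The helper names (`t_pdf`, `t_cdf`, `prob_measurand_above`) and the data are hypothetical.

```python
import math
import statistics
from typing import Sequence


def t_pdf(t: float, nu: int) -> float:
    """Density of Student's t distribution with nu degrees of freedom."""
    log_c = (math.lgamma((nu + 1) / 2) - math.lgamma(nu / 2)
             - 0.5 * math.log(nu * math.pi))
    return math.exp(log_c - (nu + 1) / 2 * math.log1p(t * t / nu))


def t_cdf(t: float, nu: int) -> float:
    """CDF of Student's t via composite Simpson's rule on [0, |t|] and symmetry.

    Assumption: numerical integration stands in for a library CDF.
    """
    a = abs(t)
    steps = 2 * max(1000, math.ceil(100 * a))  # even number of sub-intervals
    h = a / steps
    total = t_pdf(0.0, nu) + t_pdf(a, nu)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, nu)
    half_area = total * h / 3  # integral of the density from 0 to |t|
    return 0.5 + half_area if t >= 0 else 0.5 - half_area


def prob_measurand_above(limit: float, x: Sequence[float]) -> float:
    """P(mu > limit) from the t distribution truncated at mu = 0 (hypothetical helper)."""
    if limit <= 0:
        return 1.0  # all of the re-normalised probability lies at mu >= 0
    n = len(x)
    x_m = statistics.mean(x)
    u = statistics.stdev(x) / math.sqrt(n)  # s / sqrt(n)
    t_L = -x_m / u                          # truncation point, mu = 0
    t_lim = (limit - x_m) / u
    nu = n - 1
    # upper-tail probability re-normalised by the mass above t_L
    return (1.0 - t_cdf(t_lim, nu)) / (1.0 - t_cdf(t_L, nu))


# Hypothetical replicate results close to zero (two of them negative).
x = [0.12, -0.05, 0.31, 0.08, -0.02]
p_above = prob_measurand_above(0.1, x)
p_below = 1.0 - p_above  # probability that mu lies below the limit
print(f"P(mu > 0.1) = {p_above:.3f}, P(mu < 0.1) = {p_below:.3f}")
```

Negative observations need no special handling: they simply enter \( x_m \) and s, and the constraint μ ≥ 0 is imposed only through the truncation point.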

References

1. Cowen S, Ellison SLR (2006) Analyst 131:710–717

2. Van der Veen AMH (2004) Accred Qual Assur 9:232–236

3. de Jongh WK (1986) International Laboratory, pp 62–65

4. ISO 11843

5. Currie LA, IAEA-TECDOC-1401, ISBN 92-0-108404-8, pp 9–33

6. Thomas J, Danish Atomic Energy Commission Research Establishment Risö, Report No. 70

7. Jeffreys H (1957) Scientific inference. Cambridge University Press, London

8. Lee PM (2004) Bayesian statistics, 3rd edn. Hodder Arnold, London, pp 63–64

Additional information

This Report was written by Alex Williams (e-mail: aw@camberley.demon.co.uk) for the Statistical Subcommittee and approved by the Analytical Methods Committee.

Appendix

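The PDF (that is, the uncertainty) of the values attributable to a measurand, taking into account the available prior information, can be calculated using Bayes' theorem, Eq. (1):

\[ \mathrm{d}H(\boldsymbol{\theta}\mid\mathbf{x})\,(\mathrm{d}\theta_1\cdots\mathrm{d}\theta_m) = \frac{G(\mathbf{x}\mid\boldsymbol{\theta})\,P(\boldsymbol{\theta})\,(\mathrm{d}\theta_1\cdots\mathrm{d}\theta_m)}{\int\cdots\int G(\mathbf{x}\mid\boldsymbol{\theta})\,P(\boldsymbol{\theta})\,\mathrm{d}\theta_1\cdots\mathrm{d}\theta_m} \qquad (1) \]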

where \( G(\mathbf{x}\mid\boldsymbol{\theta}) \) gives the probability of obtaining a set of observed values \( \mathbf{x} = [x_1, \ldots, x_n] \) in terms of the parameters \( \boldsymbol{\theta} = [\theta_1, \ldots, \theta_m] \) of the probability distribution G; \( \mathrm{d}H(\boldsymbol{\theta}\mid\mathbf{x})\,(\mathrm{d}\theta_1\cdots\mathrm{d}\theta_m) \) is the probability, given the observed values, that the parameters have values in the intervals \( \theta_1 \) to \( \theta_1 + \mathrm{d}\theta_1 \), etc.; and \( P(\boldsymbol{\theta})\,(\mathrm{d}\theta_1\cdots\mathrm{d}\theta_m) \) is the prior probability that the values of the parameters lie in the same intervals.

In principle, Eq. (1) can be used whatever the form of G, provided that the prior probabilities are known. Parameters that are not of interest are normally integrated over their possible values, giving dH for those parameters that are of interest. When G is normal there are only two parameters, the mean μ and the variance σ².
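Integrating out a parameter of no interest can be illustrated numerically. The sketch below is a hypothetical example, not taken from the report: it evaluates Bayes' theorem on a grid of (μ, σ) for normal observations, using the priors adopted later in this appendix (P(μ) constant, P(σ) = 1/σ), sums over σ, and compares the resulting PDF of μ with the t density. The data, grid limits and function names are illustrative assumptions.

```python
import math
import statistics


def t_pdf(t, nu):
    """Density of Student's t distribution with nu degrees of freedom."""
    log_c = (math.lgamma((nu + 1) / 2) - math.lgamma(nu / 2)
             - 0.5 * math.log(nu * math.pi))
    return math.exp(log_c - (nu + 1) / 2 * math.log1p(t * t / nu))


def marginal_posterior_mu(x, mu_grid, log_sigma_grid):
    """Posterior density of mu on an evenly spaced grid, sigma integrated out.

    Assumed priors: P(mu) constant and P(sigma) = 1/sigma. Because
    (1/sigma) d(sigma) = d(log sigma), summing over an even grid in
    log(sigma) applies the 1/sigma prior automatically.
    """
    n = len(x)
    d_mu = mu_grid[1] - mu_grid[0]
    d_lam = log_sigma_grid[1] - log_sigma_grid[0]
    unnormalised = []
    for mu in mu_grid:
        ss = sum((xi - mu) ** 2 for xi in x)
        # sigma**(-n) * exp(-ss / (2 sigma**2)) is the normal likelihood G
        # without the constant (2 pi)**(-n/2), which cancels on normalising
        integral = sum(math.exp(-n * lam - 0.5 * ss * math.exp(-2 * lam))
                       for lam in log_sigma_grid) * d_lam
        unnormalised.append(integral)
    norm = sum(unnormalised) * d_mu  # the denominator of Bayes' theorem
    return [v / norm for v in unnormalised]


# Hypothetical data; grid ranges are chosen by hand for this scale of data.
x = [0.12, -0.05, 0.31, 0.08, -0.02]
n = len(x)
x_m = statistics.mean(x)
u = statistics.stdev(x) / math.sqrt(n)  # s / sqrt(n)
mu_grid = [x_m + u * (-20 + 0.1 * i) for i in range(401)]
lo, hi = math.log(0.01), math.log(20.0)
log_sigma_grid = [lo + (hi - lo) * j / 399 for j in range(400)]

posterior = marginal_posterior_mu(x, mu_grid, log_sigma_grid)
t_density = [t_pdf((mu - x_m) / u, n - 1) / u for mu in mu_grid]
max_err = max(abs(p - q) for p, q in zip(posterior, t_density))
print(f"max |numerical - t| = {max_err:.2e} (peak density {max(t_density):.2f})")
```

The small residual difference comes mainly from cutting the μ grid off at ±20 s/√n, where the heavy tails of the t distribution with few degrees of freedom still hold a little probability.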

First, taking G as normal and using an approach similar to that of Jeffreys [7], the PDF dH(μ) will be calculated without the constraint on the values of the measurand, to show that this leads to the t distribution. It will then be shown that, when the prior information that μ ≥ 0 is included, the PDF becomes a truncated t distribution [8].

Assume that there are n independent random observations \( x_i \) drawn from a normal distribution with mean μ and variance σ², and that these values are used to determine the PDF dH(μ), where dH(μ) dμ is the probability that the value of the measurand lies between μ and μ + dμ.

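Using Eq. (1), with G the product of n normal densities (the constant factor \( (2\pi)^{-n/2} \) cancels between numerator and denominator),

\[ \mathrm{d}H(\mu,\sigma\mid\mathbf{x})\,\mathrm{d}\mu\,\mathrm{d}\sigma = \frac{\sigma^{-n}\exp\left[-\frac{1}{2\sigma^{2}}\sum_{i=1}^{n}(x_i-\mu)^2\right]P(\mu)\,P(\sigma)\,\mathrm{d}\mu\,\mathrm{d}\sigma}{\iint \sigma^{-n}\exp\left[-\frac{1}{2\sigma^{2}}\sum_{i=1}^{n}(x_i-\mu)^2\right]P(\mu)\,P(\sigma)\,\mathrm{d}\mu\,\mathrm{d}\sigma} \qquad (2) \]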

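But

\[ \sum_{i=1}^{n}(x_i-\mu)^2 = \sum_{i=1}^{n}(x_i-x_m)^2 + n(x_m-\mu)^2 \]

and

\[ \sum_{i=1}^{n}(x_i-x_m)^2 = (n-1)s^2, \]

where \( x_m \) is the mean of the observations \( x_i \) and s is their standard deviation.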
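The sum-of-squares identities can be checked numerically. This short sketch assumes \( t = (\mu - x_m)/(s/\sqrt{n}) \), the definition consistent with the truncation condition \( t \ge -x_m/(s/\sqrt{n}) \) used later in this appendix, and confirms that the sum of squared deviations about μ equals \( k = (n-1)s^2\left(1 + t^2/(n-1)\right) \). The observations and helper names are hypothetical.

```python
import math
import statistics


def sum_sq_about(x, mu):
    """Sum of squared deviations of the observations from a trial value mu."""
    return sum((xi - mu) ** 2 for xi in x)


def k_via_t(x, mu):
    """k = (n - 1) s^2 (1 + t^2 / (n - 1)), assuming t = (mu - x_m) / (s / sqrt(n))."""
    n = len(x)
    x_m = statistics.mean(x)
    s = statistics.stdev(x)  # sample standard deviation (divisor n - 1)
    t = (mu - x_m) / (s / math.sqrt(n))
    return (n - 1) * s ** 2 * (1 + t ** 2 / (n - 1))


x = [0.12, -0.05, 0.31, 0.08, -0.02]  # hypothetical observations
x_m = statistics.mean(x)
for mu in (-0.3, 0.0, x_m, 0.5):
    # decomposition: sum about mu = sum about x_m + n (x_m - mu)^2
    lhs = sum_sq_about(x, mu)
    rhs = sum_sq_about(x, x_m) + len(x) * (x_m - mu) ** 2
    print(f"mu={mu:+.3f}: {lhs:.6f} {rhs:.6f} {k_via_t(x, mu):.6f}")
```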

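Hence, writing \( t = (\mu - x_m)/(s/\sqrt{n}) \) and putting \( k = (n-1)s^2\left(1 + \frac{t^2}{n-1}\right) \), which is equal to \( \sum_{i=1}^{n}(x_i-\mu)^2 \), Eq. (2) becomes

\[ \mathrm{d}H(\mu,\sigma\mid\mathbf{x})\,\mathrm{d}\mu\,\mathrm{d}\sigma = \frac{\sigma^{-n}\exp\left(-\frac{k}{2\sigma^{2}}\right)P(\mu)\,P(\sigma)\,\mathrm{d}\mu\,\mathrm{d}\sigma}{\iint \sigma^{-n}\exp\left(-\frac{k}{2\sigma^{2}}\right)P(\mu)\,P(\sigma)\,\mathrm{d}\mu\,\mathrm{d}\sigma} \qquad (3) \]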

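Taking \( P(\sigma) = 1/\sigma \) for \( \sigma \ge 0 \) and \( P(\sigma) = 0 \) for \( \sigma < 0 \) (see, for example, Jeffreys [7]) and integrating Eq. (3) over σ, using \( \int_0^\infty \sigma^{-(n+1)}\exp\left(-\frac{k}{2\sigma^2}\right)\mathrm{d}\sigma = \frac{1}{2}\Gamma\left(\frac{n}{2}\right)\left(\frac{2}{k}\right)^{n/2} \propto k^{-n/2} \), gives

\[ \mathrm{d}H(\mu\mid\mathbf{x})\,\mathrm{d}\mu = \frac{\left(1+\frac{t^{2}}{n-1}\right)^{-n/2}P(\mu)\,\mathrm{d}\mu}{\int\left(1+\frac{t^{2}}{n-1}\right)^{-n/2}P(\mu)\,\mathrm{d}\mu} \]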

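Taking P(μ) as constant for −∞ < μ < ∞ and integrating over μ in the denominator, with \( \mathrm{d}\mu = (s/\sqrt{n})\,\mathrm{d}t \), gives the t distribution with n − 1 degrees of freedom for \( t = (\mu - x_m)/(s/\sqrt{n}) \), namely

\[ \mathrm{d}H(t)\,\mathrm{d}t = \frac{\Gamma\left(\frac{n}{2}\right)}{\sqrt{(n-1)\pi}\;\Gamma\left(\frac{n-1}{2}\right)}\left(1+\frac{t^{2}}{n-1}\right)^{-n/2}\mathrm{d}t, \qquad -\infty < t < \infty. \]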

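When μ is constrained to be non-negative, that is, when P(μ) is constant for μ ≥ 0 and zero for μ < 0, which is equivalent to \( t \ge t_L = -x_m/(s/\sqrt{n}) \), we have

\[ \mathrm{d}H(t)\,\mathrm{d}t = \begin{cases} \dfrac{\left(1+\frac{t^{2}}{n-1}\right)^{-n/2}\mathrm{d}t}{\int_{t_L}^{\infty}\left(1+\frac{v^{2}}{n-1}\right)^{-n/2}\mathrm{d}v}, & t \ge t_L, \\ 0, & t < t_L, \end{cases} \]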

which is the t distribution truncated at \( t_L = -x_m/(s/\sqrt{n}) \).
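The truncated, re-normalised t density can be sketched as follows. This is an illustrative implementation under stated assumptions, not the report's own: the normalising mass \( 1 - F(t_L) \) is obtained by Simpson's rule rather than a library CDF, and the summary statistics are hypothetical. The example checks that the density integrates to one above \( t_L \) and that, when \( x_m \) is much greater than \( s/\sqrt{n} \) (so \( t_L \) is very negative), it reverts to the ordinary t density.

```python
import math


def t_pdf(t, nu):
    """Density of Student's t distribution with nu degrees of freedom."""
    log_c = (math.lgamma((nu + 1) / 2) - math.lgamma(nu / 2)
             - 0.5 * math.log(nu * math.pi))
    return math.exp(log_c - (nu + 1) / 2 * math.log1p(t * t / nu))


def simpson(f, a, b, steps):
    """Composite Simpson's rule for the integral of f from a to b (steps even)."""
    h = (b - a) / steps
    total = f(a) + f(b)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * f(a + i * h)
    return total * h / 3


def make_truncated_t_pdf(nu, t_L):
    """Return the t density truncated below at t_L and re-normalised to one."""
    steps = 2 * max(1000, math.ceil(100 * abs(t_L)))
    # mass above t_L = 1 - F(t_L) = 0.5 - integral of f from 0 to t_L
    # (for t_L < 0 that integral is negative, so the mass exceeds 0.5)
    mass = 0.5 - simpson(lambda v: t_pdf(v, nu), 0.0, t_L, steps)

    def pdf(t):
        return t_pdf(t, nu) / mass if t >= t_L else 0.0

    return pdf


# Hypothetical summary statistics: n = 10 results, mean 0.02, s = 0.10.
n, x_m, s = 10, 0.02, 0.10
t_L = -x_m / (s / math.sqrt(n))
pdf = make_truncated_t_pdf(n - 1, t_L)
area = simpson(pdf, t_L, 60.0, 20000)  # upper limit 60 stands in for infinity
print(f"t_L = {t_L:.3f}, area = {area:.6f}, pdf(0) = {pdf(0.0):.4f}")
```

Computing the mass as 0.5 minus an integral from 0 avoids cutting off the heavy upper tail, which matters when n, and hence the number of degrees of freedom, is small.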

Cite this article

Analytical Methods Committee, The Royal Society of Chemistry. Measurement uncertainty evaluation for a non-negative measurand: an alternative to limit of detection. Accred Qual Assur 13, 29–32 (2008). https://doi.org/10.1007/s00769-007-0339-5
