Abstract

This study investigates three artificial neural network (ANN)-based models for predicting the unconfined compressive strength (UCS) of granite from three non-destructive test indicators, namely pulse velocity, Schmidt hammer rebound number, and effective porosity. For this purpose, a total of 274 datasets were compiled and used to train and validate three ANN models: an ANN trained with the Levenberg–Marquardt algorithm (ANN-LM), a combination of ANN and particle swarm optimization (ANN-PSO), and a combination of ANN and imperialist competitive algorithm (ANN-ICA). Based on the experimental findings, the ANN-LM model proved to be the most accurate. In the validation phase, the ANN-LM model achieved the best predictive performance, with R = 0.9607 and RMSE = 14.8272. Experimental results show that the developed ANN-LM model outperforms a number of existing models available in the literature. Furthermore, a Graphical User Interface (GUI) has been developed which can be readily used to estimate the UCS of granite through the ANN-LM model. The developed GUI is made available as supplementary material.

Highlights

- Estimation of unconfined compressive strength of granite using artificial neural networks.
- Representation of available proposals for correlating granite compressive strength.
- A comparative assessment of results using hybrid artificial neural network-based models.
- Pulse velocity, Schmidt hammer rebound number and effective porosity were considered.
- A closed-form prediction equation was derived and implemented in a Graphical User Interface for practical applications.


1 Introduction

The unconfined compressive strength (UCS) of rocks is a key input parameter of numerous constitutive models predicting the stress of rocks (Hoek and Brown 1980, 1997, 2019; Hoek 1983; Hoek et al. 2002). Determining the UCS of a rock sample in the laboratory requires specialist equipment and intact rock samples free of fissures and veins, which are generally not easy to obtain. A viable alternative is to associate the rock’s UCS with various physical and mechanical test indices, such as the pulse velocity (Vp), Schmidt hammer rebound number (Rn), effective porosity (ne), total porosity (nt), dry density (γd), point load index (Is50), shear wave velocity (Vs), Brazilian tensile strength (BTS), and slake durability index (SDI), using simple and multiple regression analyses (Sachpazis 1990; Tuğrul and Zarif 1999; Katz et al. 2000; Kahraman 2001; Yılmaz and Sendır 2002; Yaşar and Erdoğan 2004; Dinçer et al. 2004; Fener et al. 2005; Aydin and Basu 2005; Sousa et al. 2005; Shalabi et al. 2007; Çobanoğlu and Çelik 2008; Vasconcelos et al. 2008; Sharma and Singh 2008; Kılıç and Teymen 2008; Diamantis et al. 2009; Yilmaz and Yuksek 2009; Yagiz 2009; Moradian and Behnia 2009; Khandelwal and Singh 2009; Altindag 2012; Kurtulus et al. 2012; Bruno et al. 2013; Mishra and Basu 2013; Khandelwal 2013; Tandon and Gupta 2015; Karaman and Kesimal 2015; Ng et al. 2015; Armaghani et al. 2016a, b; Azimian 2017; Heidari et al. 2018; Çelik and Çobanoğlu 2019; Barham et al. 2020; Ebdali et al. 2020; Teymen and Mengüç 2020; Li et al. 2020). While a significant number of empirical relationships for predicting the UCS of rocks have been proposed in the literature, their predictive accuracy is generally significantly lower than that obtained using artificial neural networks (ANNs) (Yılmaz and Yuksek 2008, 2009; Dehghan et al. 2010; Yagiz et al. 2012; Ceryan et al. 2013; Minaeian and Ahangari 2013; Yesiloglu-Gultekin et al. 2013; Yurdakul and Akdas 2013; Momeni et al. 2015; Armaghani et al. 2016a, b; Madhubabu et al. 2016; Ferentinou and Fakir 2017; Barham et al. 2020; Pandey et al. 2020; Ceryan and Samui 2020; Ebdali et al. 2020; Teymen and Mengüç 2020; Moussas and Diamantis 2021; Armaghani et al. 2021). ANNs are advanced computational models that can simulate highly non-linear relationships between various input and output parameters, but their predictive ability is limited to the range of input parameter values on which they have been trained; that is, neural network (NN) models are unable to provide reliable predictions beyond the input data range on which they have been trained and developed. Within the input parameter value range, the frequency distribution of the input and output parameter values significantly affects the predictive accuracy of the NN model. A non-uniform input/output parameter value distribution, with the majority of the input/output values concentrated in a limited value range, does not constitute a statistically suitable database to train and develop ANN models.

This research aimed to train and develop three ANN models for the prediction of the UCS of granite by compiling a high-quality, data- and site-independent database spanning the weak to strong granite range, consolidating a significant number of non-destructive test results reported in the literature. As part of ongoing research, this study expands the work recently reported by Armaghani et al. (2021) by introducing the Rn as a third non-destructive test index and by extending the range of the input parameter values, thereby expanding the predictive capability of the NN model. A data- and site-independent database comprising 274 datasets correlating the Rn, Vp, and ne with the UCS of granite was compiled and used to train and develop various ANN models using the Levenberg–Marquardt (LM) algorithm and two widely used optimization algorithms (OAs), namely particle swarm optimization (PSO) and imperialist competitive algorithm (ICA).

2 Research Significance

Although a variety of semi-empirical/empirical expressions for predicting the UCS of rocks from non-destructive test parameters have been published in the literature, the majority of them provide neither a high degree of accuracy nor a generalized solution. This is mostly owing to an insufficient description of rock characteristics, the presence of complex correlations among the input parameters, too few experimental results, and inconsequential methods of calculation. Therefore, a more sophisticated method that is capable of capturing the complex behavior of rock UCS based on a large body of experimental results is required.

With their capability for non-linear modelling, machine learning (ML) algorithms can capture the complex behavior of influencing parameters and provide feasible tools for simulating many complex problems. In the past, several ML algorithms, namely ANN, adaptive neuro-fuzzy inference system (ANFIS), fuzzy inference system (FIS), extreme gradient boosting machine (XGBoost), multivariate adaptive regression splines (MARS), extreme learning machine (ELM), ensemble learning techniques, support vector machine (SVM), and so on, have been employed to estimate desired outputs including rock strength, landslide displacement, slope stability, the soil–water characteristic curve, soil and wall properties in braced excavation (by inverse analysis), concrete compressive strength, and so on (Armaghani et al. 2016a, b; Asteris and Kolovos 2017; Zhang et al. 2017, 2018, 2020, 2021, 2022a, b, c; Zhang and Phoon 2022; Zhang and Liu 2022; Wang et al. 2019).

A detailed review of the literature demonstrates the widespread applicability of ANNs in a variety of engineering disciplines, including the prediction of rock UCS. ANNs can simulate highly non-linear relationships between various input and output parameters and provide a quick solution. However, their predictive ability is limited to the range of input parameter values on which they have been trained. In this study, a data- and site-independent database comprising 274 datasets correlating the Rn, Vp, and ne with the UCS of granite was compiled and used to train and develop various ANN models.

3 Literature Review on the Available Proposals

This section presents and discusses a comprehensive review of prior research using semi-empirical and soft computing methodologies to predict the UCS of granite. Prior to the extended assessment of previous studies, a brief overview of experimental works is presented in the following sub-sections.

3.1 Experimental Works

The Schmidt hammer rebound number, i.e., Rn, is a hardness index of rock, which is obtained by pressing a piston perpendicular to the specimen surface and measuring the spring rebound. Depending on the applied impact energy, two Schmidt hammer types are available, the N-type (2.207 Nm impact energy) and the L-type hammer (0.735 Nm impact energy). The N-type Schmidt hammer was originally used to determine the rebound number of concrete cubes and was then also introduced by the ASTM standards for rock hardness testing, although it has been debated that the higher impact energy may activate fissures and cracks. Note that the Rn depends on the degree of weathering, water content, sample size, hammer axis orientation, and the data reduction techniques (Poole and Farmer 1980; Ballantyne et al. 1990; Katz et al. 2000; Sumner and Nel 2002; Basu and Aydin 2004; Demirdag et al. 2009; Niedzielski et al. 2009; Çelik and Çobanoğlu 2019).

The compressional wave velocity, i.e., Vp, is a mechanical index, which involves the propagation of compressional waves below the yield strain of rock. The Vp is determined from the travel time of the waves between the transmitter and the receiver. It depends on the mineralogy, texture, fabric, weathering grade, water content, and density of the rock (Yaşar and Erdoğan 2004; Kılıç and Teymen 2008; Dehghan et al. 2010; Mishra and Basu 2013; Tandon and Gupta 2015; Momeni et al. 2015; Ng et al. 2015; Heidari et al. 2018).

The effective porosity, i.e., ne, is a physical rock index which shows the amount of interconnected void space. The voids are quantified in the form of inter-granular space, micro-fractures at grain boundaries, joints and faults (Franklin and Dusseault 1991). The rock’s porosity depends on various parameters such as the particle size, particle shape and weathering grade, which may change the pore size distribution, pore geometry, and even result in new pore formation (Tuǧrul 2004).

3.2 Semi-empirical Proposals

The simple and multiple regression relationships correlating the UCS of granite with the three non-destructive test indices (i.e., Rn, Vp, and ne) reported in earlier research are summarized in Table 1. The UCS of granite is generally proposed to reduce exponentially with increasing effective porosity, whereas linear and exponential growth patterns are suggested for the relationships between UCS and both Rn and Vp. Most of the proposed relationships predict the rock UCS using only one input parameter, and only a limited number of relationships use two or three of the non-destructive test indices as input parameters for the prediction of the UCS of granite. The proposed relationships predict the UCS of weak to very strong granite (ISRM 2007).

3.3 Soft Computing Proposals

During the last decades, a significant number of soft computing models, namely ANN, ANFIS, back-propagation neural network (BPNN), FIS, radial basis function NN (RBFN), gene expression programming (GEP), extreme gradient boosting machine with firefly algorithm (XGBoost-FA), and SVM, have been reported for the prediction of the UCS of rocks (Meulenkamp and Grima 1999; Gokceoglu and Zorlu 2004; Yılmaz and Yuksek 2008; Dehghan et al. 2010; Monjezi et al. 2012; Yagiz et al. 2012; Mishra and Basu 2013; Yesiloglu-Gultekin et al. 2013; Momeni et al. 2015; Mohamad et al. 2015; Torabi-Kaveh et al. 2015; Teymen and Mengüç 2020; Barham et al. 2020; Ceryan and Samui 2020; Mahmoodzadeh et al. 2021; Yesiloglu-Gultekin and Gokceoglu 2022; Asteris et al. 2021). The proposed models predict the UCS of various rock types and formation methods spanning the very soft to hard rock range. Interestingly, few models have been proposed which specifically predict the UCS of granite (Yesiloglu-Gultekin et al. 2013; Armaghani et al. 2016a, b, 2021; Cao et al. 2021; Jing et al. 2021; Asteris et al. 2021; Mahmoodzadeh et al. 2021). Table 2 shows the ML algorithms, input parameters, database sizes, and prediction accuracies reported in the literature for the UCS of granite. While the type and number of input parameters generally vary, the Rn and Vp are included in most of the proposed models. In most of the reported models, the database is generally limited in size.

4 Experimental Database

The experimental database used in this research to train and develop soft computing models for the prediction of the UCS of granite was compiled from 274 datasets reported in the literature (Tuğrul and Zarif 1999; Mishra and Basu 2013; Ng et al. 2015; Koopialipoor et al. 2022). The database comprises three input parameters obtained from non-destructive tests, namely the Rn, Vp, and ne, and a single output parameter, the UCS of soft to hard granite (UCS = 20.30–211.9 MPa). The source references and the descriptive details of the collected datasets are presented in Tables 3 and 4, respectively.

The consolidated database only includes experimental results reported in compliance with international testing standards, which conform to the principles of statistical analysis regarding the definition of statistically significant sample size and statistical distribution of the reported test results. The robustness of the methodology used in this research to consolidate the test results reported by different researchers determines the reliability of the actual model prediction. A correlation matrix is presented in Fig. 1 to illustrate the degree of correlation (based on Pearson correlation coefficient) between the parameters. In addition, the comparative histograms of the input and output parameters are shown in Fig. 2. Note that the normalized values of input and output parameters were considered in illustrating comparative histograms.
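The two preprocessing steps mentioned above, min-max normalization and the Pearson correlation matrix, can be sketched as follows. This is a minimal illustration using NumPy; the function names and the four toy rows are hypothetical placeholders and are not values from the compiled database.

```python
import numpy as np

def minmax_normalize(x, lo=0.0, hi=1.0):
    """Rescale each column of x linearly onto the interval [lo, hi]."""
    x = np.asarray(x, dtype=float)
    x_min = x.min(axis=0)
    x_max = x.max(axis=0)
    return lo + (hi - lo) * (x - x_min) / (x_max - x_min)

def pearson_matrix(x):
    """Pearson correlation coefficients between the columns of x."""
    return np.corrcoef(np.asarray(x, dtype=float), rowvar=False)

# Placeholder rows ordered as [Rn, Vp, ne, UCS]; NOT values from the database.
toy = np.array([
    [30.0, 3000.0, 4.0, 40.0],
    [45.0, 4500.0, 2.0, 90.0],
    [60.0, 6000.0, 0.5, 180.0],
    [50.0, 5200.0, 1.0, 130.0],
])

scaled = minmax_normalize(toy, lo=-1.0, hi=1.0)  # each column now spans [-1, 1]
corr = pearson_matrix(toy)                       # 4 x 4 symmetric matrix
```

With real data, the last row or column of `corr` would give the correlation of each input with the UCS, which is the kind of information a correlation matrix plot conveys.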

5 Methodology

This section presents the theoretical background of ANNs, followed by a brief overview of the PSO and ICA algorithms. After that, the methodological development of the ANN-LM model and the hybrid ANN-PSO and ANN-ICA models is presented and discussed.

5.1 Artificial Neural Network (ANN)

ANNs are developed with the goal of learning from experimental or analytical data. These models may categorize data, forecast values, and aid in decision-making. ANN maps input parameters to a given output. In comparison to traditional numerical analysis processes (e.g., regression analysis), a trained ANN can deliver more trustworthy results with far less processing effort. ANN functions in the same way as the biological neural network in the human brain does. The basic building block in ANN is the artificial neuron, which is a mathematical model that seeks to replicate the activity of a biological neuron (Hornik et al. 1989; Hassoun 1995; Samui 2008; Das et al. 2011; Samui and Kothari 2011; Asteris and Plevris 2013, 2016; Nikoo et al. 2016, 2017, 2018; Asteris and Kolovos 2017; Asteris et al. 2017; Cavaleri et al. 2017; Psyllaki et al. 2018; Mohamad et al. 2019; Koopialipoor et al. 2020; Pandey et al. 2020; Apostolopoulou et al. 2018, 2019, 2020).

In an ANN, the input data are sent into the neurons, where they are processed using a mathematical function to produce an output. To imitate the random nature of the biological neuron, weights are assigned to the input parameters before the data reach the neuron. The architecture of a back-propagation neural network (BPNN) can be expressed as \(N-{H}_{1}-{H}_{2}-\cdots -{H}_{\mathrm{NHL}}-M\), where N is the number of input neurons (i.e., the number of input parameters), Hi is the number of neurons in the ith hidden layer for i = 1, …, NHL, NHL is the number of hidden layers, and M is the number of output neurons (output parameters). A typical structure of a single node (with the corresponding R-element input vector) of a hidden layer is presented in Fig. 3.
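As a small illustration of this notation, the number of trainable parameters implied by an architecture string can be counted as below. The sketch assumes a fully connected feed-forward network with one bias per hidden and output neuron; the function name is hypothetical.

```python
def count_parameters(layers):
    """Count weights and biases of a fully connected feed-forward network.

    layers: neuron counts per layer, e.g. [3, 11, 1] for a 3-11-1 network.
    """
    # One weight per connection between consecutive layers.
    weights = sum(n_in * n_out for n_in, n_out in zip(layers[:-1], layers[1:]))
    # One bias per neuron in every layer except the input layer (assumption).
    biases = sum(layers[1:])
    return weights + biases

n_params = count_parameters([3, 11, 1])  # 3*11 + 11 + 11*1 + 1
```

Under these assumptions, this count is the size of the search space that a training or optimization algorithm has to explore for a given architecture.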

For each neuron i, the individual inputs \({p}_{1}, \dots , { p}_{R}\) are multiplied by the corresponding weights \({w}_{i,1}, \dots , { w}_{i,R}\), and the weighted values are fed to the junction of the summation function, where the dot product (\(W\cdot p\)) of the weight vector \(W=\left[{w}_{i,1}, \dots , {w}_{i,R}\right]\) and the input vector \(p={\left[{p}_{1}, \dots , { p}_{R}\right]}^{T}\) is generated. The value of the bias b (threshold) is added to the dot product, forming the net input \(n\), which is the argument of the activation function ƒ:

\[ f\left(n\right)=f\left(W\cdot p+b\right)=f\left(\sum_{j=1}^{R}{w}_{i,j}\,{p}_{j}+b\right) \]
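The computation performed by a single neuron can be sketched in a few lines of NumPy. The hyperbolic tangent is used here only as an example activation, and the weights, inputs, and bias are illustrative numbers rather than values from any trained model.

```python
import numpy as np

def neuron_output(w, p, b, f=np.tanh):
    """Return f(W . p + b) for one neuron with weight vector w and bias b."""
    n = np.dot(w, p) + b  # net input: weighted sum of the inputs plus the bias
    return f(n)

w = np.array([0.5, -0.25, 0.1])  # illustrative weights
p = np.array([1.0, 2.0, 3.0])    # illustrative input vector (R = 3)
b = 0.2                          # illustrative bias

a = neuron_output(w, p, b)                       # tanh of the net input
net = neuron_output(w, p, b, f=lambda n: n)      # identity activation exposes n
```

Passing a different callable as `f` is how alternative transfer functions would be swapped in.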

The suitable selection of the activation/transfer function ƒ plays a key role during the training and development of ANN-based models, affecting both the structure and the prediction accuracy of the ANN. Although the hyperbolic tangent sigmoid function and the log-sigmoid function are the most commonly used activation functions, many other types of functions have been proposed in the last decade. In the research presented herein, an in-depth investigation of the effect of transfer functions on the performance of the trained and developed models has been conducted by studying ten different activation functions. The main goal during the development of an ANN model, and especially during its training, is to correlate the various input and output parameters and minimize the error (Psyllaki et al. 2018; Kechagias et al. 2018; Roy et al. 2019; Armaghani et al. 2019; Armaghani et al. 2019; Cavaleri et al. 2019; Chen et al. 2019; Xu et al. 2019; Yang et al. 2019; Pandey et al. 2020).

5.2 Optimization Algorithms

Kennedy and Eberhart (1995) introduced PSO, inspired by the behavior of social species in groups, such as bird flocks, fish schools, or ant colonies. PSO simulates the sharing of information between members. In the last two decades, PSO has been used in a variety of areas in conjunction with other techniques (Koopialipoor et al. 2019a, b; Moayedi et al. 2020). This approach searches for the best solution using particles whose trajectories are changed by a stochastic and a deterministic component. Each particle is influenced by its own best position obtained so far and by the best position achieved by the group, while its movement retains a random component. In PSO, a particle \(i\) is defined by its position vector, \(x\), and its velocity vector, \(v\). During the course of each iteration, each particle changes its position as follows:

\[ {v}_{i}^{k+1}=w\,{v}_{i}^{k}+{c}_{1}{r}_{1}\left({P}_{\mathrm{best},i}-{x}_{i}^{k}\right)+{c}_{2}{r}_{2}\left({g}_{\mathrm{best}}-{x}_{i}^{k}\right) \]

\[ {x}_{i}^{k+1}={x}_{i}^{k}+{v}_{i}^{k+1} \]

where \(w\), \({c}_{1}\), \({c}_{2}\), \({r}_{1}\), and \({r}_{2}\) are, respectively, the inertia weight, two positive constants, and two random parameters. Figure 4 illustrates a 2-D representation of the movement of a particle ‘i’ between two positions. It can be observed how the particle best position, Pbest, and the group best position, gbest, influence the velocity of the particle at the k + 1 iteration.
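A compact sketch of the standard PSO velocity and position update is given below, applied to a simple test function. It is not the configuration used in this study: the default values of `w`, `c1`, `c2`, the search bounds, and the sphere cost function are assumptions chosen only to make the example self-contained.

```python
import numpy as np

def pso_minimize(cost, dim, n_particles=30, n_iter=100,
                 w=0.7, c1=1.5, c2=1.5, bounds=(-5.0, 5.0), seed=0):
    """Minimize cost(x) with a basic global-best particle swarm."""
    rng = np.random.default_rng(seed)
    lo, hi = bounds
    x = rng.uniform(lo, hi, size=(n_particles, dim))  # particle positions
    v = np.zeros_like(x)                              # particle velocities
    pbest = x.copy()                                  # best position per particle
    pbest_cost = np.array([cost(xi) for xi in x])
    g = int(np.argmin(pbest_cost))
    gbest, gbest_cost = pbest[g].copy(), pbest_cost[g]
    for _ in range(n_iter):
        r1 = rng.random((n_particles, dim))           # stochastic components
        r2 = rng.random((n_particles, dim))
        # Inertia term + pull toward own best + pull toward group best.
        v = w * v + c1 * r1 * (pbest - x) + c2 * r2 * (gbest - x)
        x = x + v
        costs = np.array([cost(xi) for xi in x])
        improved = costs < pbest_cost
        pbest[improved] = x[improved]
        pbest_cost[improved] = costs[improved]
        g = int(np.argmin(pbest_cost))
        if pbest_cost[g] < gbest_cost:
            gbest, gbest_cost = pbest[g].copy(), pbest_cost[g]
    return gbest, gbest_cost

def sphere(z):
    """Simple convex test function with its minimum at the origin."""
    return float(np.sum(z ** 2))

best_x, best_cost = pso_minimize(sphere, dim=2)
```

In a hybrid ANN-PSO setting, `cost` would instead evaluate the prediction error of a network whose weights and biases are encoded in the position vector.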

Atashpaz-Gargari and Lucas (2007) proposed ICA as a global-search, population-based optimization algorithm. ICA begins with the creation of a randomly generated starting population whose members are known as countries. After N countries have been created, a specified number of the lowest-cost countries are chosen as imperialists. The remaining countries serve as colonies and are distributed among the empires. In ICA, imperialists are more powerful when they have more colonies. ICA is made up of three major operators: assimilation, revolution, and competition. In assimilation, the colonies are absorbed by (i.e., moved toward) their imperialists, whereas revolution introduces sudden, unexpected changes in the colonies. In the competition stage, the imperialists compete to acquire more colonies, and the empire that meets the required criteria finally wins. This procedure is continued until the target benchmark is reached. Further information concerning ICA is provided by Atashpaz-Gargari and Lucas (2007).

5.3 ANN-LM and Hybrid ANN Modelling

The basic steps of a holistic methodology were followed in order to obtain the optimum ANN model that estimates the UCS of granite. Typically, in the context of ANN training and development, the majority of researchers select in advance: (a) the method to be used to normalize the data, (b) the transfer function to be used, (c) the training algorithm to be used for the training of neural networks, (d) the number of hidden layers, and (e) the number of neurons for each hidden layer. Specifically, in order to estimate the layout of the neural network (i.e., hidden layers and neurons), several semi-empirical methodologies are available in the literature, taking into account the number of input parameters, the number of output parameters, the number of datasets used for training, or even combinations of the above. Table 5 presents a representative list of these semi-empirical relationships, which are widely accepted and commonly used in practice. In this work, however, rather than relying on empirical patterns, a different approach has been adopted to determine the most suitable layout of the neural network. Specifically, a thorough and in-depth investigation algorithm is employed, the steps of which are outlined as follows.

Step 1. Develop and train a plethora of ANN models: The development and training of multiple alternative ANNs takes place with varying number of hidden layers (1 or 2) and number of neurons (1–30). Furthermore, each alternative ANN is trained for ten different activation functions, as well as using Levenberg–Marquardt (LM) algorithm as training algorithm. Finally, the alternative variations are further expanded by examining ten different random initial values of weights and bias for each developed ANN model.

Step 2. Select the optimal architecture: From the previously developed ANNs trained with the LM algorithm, the architecture that achieved the best statistical performance indices using the training datasets was selected as the optimal one (ANN-LM).

Step 3. Apply the optimization algorithms: On the optimum architecture selected in the previous step, different optimization algorithms, namely PSO and ICA, were implemented so that optimized values of the weights and biases are obtained.

Step 4. Propose the optimum model: Depending on the performance indices achieved by the examined ANNs, the optimum one is selected and proposed.

The proposed algorithm is time consuming, since it requires the development and evaluation of numerous alternative ANN models, but provides higher credibility in reaching an optimum architecture upon which the optimization algorithms will be applied. An additional benefit of the proposed algorithm that is missing from the available semi-empirical methodologies in the literature is that the optimum combination of transfer functions is also obtained (besides the number of hidden layers, and the number of neurons).
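The exhaustive search described in Steps 1 and 2 can be sketched, for the one-hidden-layer case, as an enumeration of candidate configurations followed by selection of the lowest-error one. The activation names `f1` to `f10` are placeholders for the ten transfer functions, and `dummy_rmse` is a stand-in score, not a trained network; both are assumptions made purely for illustration.

```python
from itertools import product

# Placeholder labels for the ten transfer functions (hypothetical names).
ACTIVATIONS = [f"f{k}" for k in range(1, 11)]

def candidate_grid(max_neurons=30, n_seeds=10):
    """Yield (neurons, hidden_activation, output_activation, seed) tuples."""
    return product(range(1, max_neurons + 1), ACTIVATIONS, ACTIVATIONS,
                   range(n_seeds))

def select_best(evaluate, candidates):
    """Return the candidate with the lowest score from evaluate(candidate)."""
    return min(candidates, key=evaluate)

def dummy_rmse(candidate):
    # Stand-in score only; a real run would train the network with the LM
    # algorithm and return its RMSE on the chosen dataset.
    neurons, hidden_act, output_act, seed = candidate
    return (abs(neurons - 11) + ACTIVATIONS.index(hidden_act)
            + ACTIVATIONS.index(output_act) + seed)

n_candidates = sum(1 for _ in candidate_grid())   # 30 * 10 * 10 * 10
best = select_best(dummy_rmse, candidate_grid())
```

This grid contains 30,000 candidates; the larger number of one-hidden-layer models reported in the results also reflects settings listed in Table 6 that this sketch does not reproduce.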

5.4 Hybridization Procedure of ANN and OAs

In the last decade, many studies have been conducted in engineering applications to improve the ability of ANN models using OAs (Koopialipoor et al. 2019a, b; Moayedi et al. 2020; Golafshani et al. 2020). Specifically, the learning parameters (weights and biases) of ANNs are optimized using OAs. PSO and ICA, two extensively utilized OAs, were used to optimize the weights and biases of ANNs in this study. The ANN is combined with PSO and with ICA to provide prediction models for rock UCS. The methodological development of a hybrid ANN can be described as: (a) initialization of ANN; (b) set hyper-parameters such as number of hidden layers, number of hidden neurons, and activation function; (c) initialization of OA; (d) select swarm/particle size and other deterministic parameters of OA; (e) set terminating criteria; (f) training of ANN using training dataset; (g) calculate fitness; (h) select optimum values of weights and biases based on the performance criteria; and (i) testing of ANN. Figure 5a represents the entire process of hybrid ANN construction followed in this work.
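The central idea of the hybridization, namely that an optimizer sees the network only as a flat vector of weights and biases with an associated fitness value, can be sketched as follows. The 3-11-1 layout matches the architecture discussed later in this paper, but the tanh hidden activation and linear output are assumptions for illustration and are not the transfer functions selected in this study; all function names are hypothetical.

```python
import numpy as np

N_IN, N_HIDDEN, N_OUT = 3, 11, 1
N_PARAMS = N_IN * N_HIDDEN + N_HIDDEN + N_HIDDEN * N_OUT + N_OUT

def unpack(theta):
    """Split a flat parameter vector into weight matrices and bias vectors."""
    i = 0
    w1 = theta[i:i + N_HIDDEN * N_IN].reshape(N_HIDDEN, N_IN)
    i += N_HIDDEN * N_IN
    b1 = theta[i:i + N_HIDDEN]
    i += N_HIDDEN
    w2 = theta[i:i + N_OUT * N_HIDDEN].reshape(N_OUT, N_HIDDEN)
    i += N_OUT * N_HIDDEN
    b2 = theta[i:i + N_OUT]
    return w1, b1, w2, b2

def predict(theta, x):
    """Forward pass of the network encoded by theta for inputs x (n x 3)."""
    w1, b1, w2, b2 = unpack(theta)
    hidden = np.tanh(x @ w1.T + b1)       # assumed hidden activation
    return (hidden @ w2.T + b2).ravel()   # assumed linear output layer

def fitness(theta, x, y):
    """RMSE between predictions and targets; the quantity an OA minimizes."""
    return float(np.sqrt(np.mean((predict(theta, x) - y) ** 2)))
```

An optimizer such as PSO or ICA would then search over vectors of length `N_PARAMS`, calling `fitness` on the training data for every candidate.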

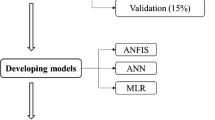

5.5 Performance Indices

The complete dataset of 274 observations was divided into training, validation, and testing datasets prior to computational modelling. Specifically, a total of 183 observations were used for the training of the ANN models, whereas 45 observations were used for validation and 46 for testing. The steps of computational modelling are illustrated in Fig. 5b. After the models had been constructed, their predictive accuracy was assessed using four widely used indices (Chandra et al. 2018; Raja and Shukla 2020, 2021a, b; Raja et al. 2021; Khan et al. 2021, 2022; Aamir et al. 2020; Bhadana et al. 2020), namely root mean square error (RMSE), mean absolute percentage error (MAPE), correlation coefficient (R), and variance accounted for (VAF). The mathematical expressions of these indices are given as follows:

\[ \mathrm{RMSE}=\sqrt{\frac{1}{n}\sum_{i=1}^{n}{\left({\widehat{y}}_{i}-{y}_{i}\right)}^{2}} \]

\[ \mathrm{MAPE}=\frac{100}{n}\sum_{i=1}^{n}\left|\frac{{\widehat{y}}_{i}-{y}_{i}}{{\widehat{y}}_{i}}\right| \]

\[ R=\frac{\sum_{i=1}^{n}\left({y}_{i}-\overline{y}\right)\left({\widehat{y}}_{i}-\overline{\widehat{y}}\right)}{\sqrt{\sum_{i=1}^{n}{\left({y}_{i}-\overline{y}\right)}^{2}\sum_{i=1}^{n}{\left({\widehat{y}}_{i}-\overline{\widehat{y}}\right)}^{2}}} \]

\[ \mathrm{VAF}=\left(1-\frac{\mathrm{var}\left({\widehat{y}}_{i}-{y}_{i}\right)}{\mathrm{var}\left({\widehat{y}}_{i}\right)}\right)\times 100 \]

where \(n\) denotes the total number of datasets, \({y}_{i}\) and \({\widehat{y}}_{i}\) represent the predicted and target values, respectively, an overbar denotes the mean value, and var denotes the variance. Recent research has highlighted the limitations of the RMSE, the MAPE, and the R in assessing the predictive accuracy of neural networks (Asteris et al. 2021; Armaghani et al. 2021). To this end, the a20-index was also used to estimate the model accuracy. Note that the a20-index is a recently proposed index which has a physical engineering meaning and can be used to ensure the reliability of a data-driven model (Apostolopoulou et al. 2019, 2020). The mathematical expression of this index is given by:

\[ a20\text{-index}=\frac{\mathrm{m}20}{M} \]

where M is the number of dataset samples and \(\mathrm{m}20\) is the number of samples whose ratio of experimental value to predicted value lies between 0.80 and 1.20. For a perfectly accurate predictive model, the a20-index would be equal to 1 (or 100%). The a20-index thus gives the proportion of samples whose predicted values lie within a deviation of ± 20% of the experimental values.
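The five indices can be computed with a few NumPy expressions, as sketched below. The implementation follows the common definitions of these indices (errors normalized by the target values, population variance for VAF), which is an assumption about the exact conventions used; the two short arrays are illustrative numbers only, not results of this study.

```python
import numpy as np

def rmse(target, pred):
    """Root mean square error."""
    return float(np.sqrt(np.mean((target - pred) ** 2)))

def mape(target, pred):
    """Mean absolute percentage error, relative to the target values."""
    return float(np.mean(np.abs((target - pred) / target)) * 100.0)

def corr_r(target, pred):
    """Pearson correlation coefficient between targets and predictions."""
    return float(np.corrcoef(target, pred)[0, 1])

def vaf(target, pred):
    """Variance accounted for, in percent."""
    return float((1.0 - np.var(target - pred) / np.var(target)) * 100.0)

def a20_index(target, pred):
    """Fraction of samples whose target/prediction ratio is within 0.80-1.20."""
    ratio = target / pred
    return float(np.mean((ratio >= 0.80) & (ratio <= 1.20)))

target = np.array([100.0, 150.0, 200.0, 50.0])  # illustrative values only
pred = np.array([110.0, 140.0, 150.0, 52.0])    # illustrative values only

scores = {
    "RMSE": rmse(target, pred),
    "MAPE": mape(target, pred),
    "R": corr_r(target, pred),
    "VAF": vaf(target, pred),
    "a20": a20_index(target, pred),
}
```

In this toy example, three of the four samples fall inside the 0.80 to 1.20 band, so the a20-index is 0.75 even though the single large error dominates the RMSE.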

6 Results and Discussion

6.1 Development of ANN-LM Models

As stated above, a total of 274 datasets were compiled and used to train and develop three ANN-based models. Of the 274 datasets in the database, 183 observations were used for the training of the ANN models, while 45 observations were used for validation and 46 for testing. The parameters presented in Table 6 were used for the training and development of the ANN models. The combinations of these parameters yield 240,000 different ANN architecture models with one hidden layer.
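A partition with these sizes can be produced as in the sketch below. The random shuffle with a fixed seed is an assumption made for reproducibility of the example; how the observations were actually assigned to each subset is not specified here.

```python
import numpy as np

def split_indices(n_total=274, n_train=183, n_val=45, seed=0):
    """Shuffle sample indices and cut them into train/validation/test parts."""
    rng = np.random.default_rng(seed)
    idx = rng.permutation(n_total)
    train = idx[:n_train]
    val = idx[n_train:n_train + n_val]
    test = idx[n_train + n_val:]          # the remaining samples
    return train, val, test

train_idx, val_idx, test_idx = split_indices()
```

The three index arrays are disjoint and together cover all 274 observations, so each sample is used in exactly one phase.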

The above ANN models were assessed based on the RMSE index using the testing datasets. Table 7 presents the 20 best ANN models. From the information shown in Table 7, it appears that the optimum ANN with one hidden layer is the ANN-LM 3-11-1 model, which corresponds to the minmax normalization technique in the range [− 1.00, 1.00], 11 neurons in the hidden layer, and the normalized radial basis transfer function and the symmetric saturating linear transfer function (SSL) as transfer functions. Table 8 presents the performance indices of the optimum ANN model for both the training and testing datasets, while its architecture is presented in Fig. 6. The model with the lowest RMSE and highest R values in the testing phase (RMSE = 14.8272 and R = 0.9607) was selected for the construction of the ANN-LM 3-11-1 model. The value of the a20-index for the optimum ANN-LM 3-11-1 model was determined to be 0.8470 and 0.7174 in the training and testing phases, respectively. To better illustrate the predictive outcomes, Fig. 7 presents a comparison of the actual and predicted values of granite UCS. Two different diagrams, viz., a scatter plot and a line diagram, are presented for the optimum ANN-LM 3-11-1 model.

6.2 Development of ANN-PSO Model

The PSO was used to optimize the weights and biases of the optimum ANN-LM 3-11-1 model, and the ANN-PSO 3-11-1 model was constructed. The basic settings for constructing the ANN-PSO 3-11-1 model are presented in Table 9. Specifically, the population size was varied between 10 and 100 in steps of 5, 50 different cases of random numbers were examined, and the maximum number of iterations was set to 100. Therefore, the total number of combinations was determined to be 95,000. Note that the RMSE was selected as the cost function. The convergence behavior of all the developed ANN-PSO 3-11-1 models is presented in Fig. 8. It can be seen from this figure that the ANN-PSO 3-11-1 model converged to a good solution within 30–40 iterations. A flat curve was observed beyond this range, indicating that no better solution was found. The influence of random number generation in ANN-PSO modelling is also illustrated in Fig. 9. The performance of all the developed ANN-PSO 3-11-1 models is presented in Table 10. In the training phase, the values of R and RMSE ranged from 0.9170 to 0.9335 and 0.2019 to 0.1815, respectively, while in the testing phase, these values ranged from 0.9293 to 0.9335 and 0.2020 to 0.1968, respectively. The performance of the most appropriate ANN-PSO 3-11-1 model is presented in Table 11. As can be observed from this table, the ANN-PSO 3-11-1 model achieved the desired predictive accuracy with a20-index = 0.5519, R = 0.9330, RMSE = 23.4893, MAPE = 20.95%, and VAF = 86.9750 in the training phase and a20-index = 0.5435, R = 0.9330, RMSE = 23.9205, MAPE = 24.50%, and VAF = 79.3740 in the testing phase. Figure 10 depicts a comparison of the actual and predicted granite UCS values in order to further illustrate the predictive outcomes. Two distinct diagrams, a scatter plot and a line diagram, are provided for the optimal ANN-PSO 3-11-1 model.
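One way to read the figure of 95,000 is as the product of the number of population sizes, the number of random-number cases, and the maximum number of iterations. The short check below follows that reading, which is an interpretation of the stated settings rather than something spelled out explicitly.

```python
population_sizes = range(10, 101, 5)  # 10, 15, ..., 100
n_random_cases = 50                   # different random-number cases
max_iterations = 100                  # maximum iterations per run

n_population_sizes = len(population_sizes)
total_combinations = n_population_sizes * n_random_cases * max_iterations
```

Stepping from 10 to 100 in increments of 5 gives 19 population sizes, and 19 × 50 × 100 reproduces the stated total.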

a, b Training and testing performance curves of ANN-PSO 3-11-1 models for different random numbers (1:1:100) with the population size held constant at the optimum value of 60; c, d training and testing performance curves of ANN-PSO 3-11-1 models for different population sizes (10:2:60) with the random number held constant at the optimum value of 60

6.3 Development of ANN-ICA Model

Analogous to PSO, the ICA was utilized to optimize the weights and biases of the optimal ANN-LM 3-11-1 model, and the ANN-ICA 3-11-1 model was created. Table 12 presents the settings of the hyper-parameters for building the ANN-ICA 3-11-1 model. In particular, the population size was varied between 10 and 100 in steps of 5, 10 different sets (1 to 10 in steps of 1) were used for random number generation, and the maximum number of epochs was set to 200. Therefore, the total number of possible combinations was 380,000. The RMSE was selected as the cost function in each case. Figure 11 depicts the convergence behavior of all produced ANN-ICA 3-11-1 models. This graph demonstrates that the ANN-ICA 3-11-1 model converged to a satisfactory solution within 160–180 iterations. Beyond this range, a flat curve was observed, indicating that no better solution was found. The influence of the random number (i.e., the initial values of weights and biases) on the performance of the ANN-ICA 3-11-1 model is presented in Fig. 12. Table 13 presents the performance of all produced ANN-ICA 3-11-1 models. During the training phase, the R and RMSE values varied between 0.9241 and 0.9317 and 0.1929 and 0.1832, respectively. During the testing phase, these values ranged between 0.9272 and 0.9286 and 0.2048 and 0.2030, respectively. Table 14 displays the performance of the most suitable ANN-ICA 3-11-1 model. The ANN-ICA 3-11-1 model achieved the desired predictive accuracy, as shown in the table, with a20-index = 0.6339, R = 0.9286, RMSE = 18.6663, MAPE = 21.21%, and VAF = 85.0725 in the training phase and a20-index = 0.6739, R = 0.9285, RMSE = 22.5228, MAPE = 16.48%, and VAF = 82.3275 in the testing phase. Figure 13 displays a comparison of the actual and predicted granite UCS values to further illustrate the predictive outcomes. The optimal ANN-ICA 3-11-1 model is shown by two separate diagrams: a scatter plot and a line diagram.

a, b Training and testing performance curves of ANN-ICA 3-11-1 models for different random numbers (1:1:10) with the population size held constant at the optimum value of 75 and the number of empires held constant at the optimum value of 12; c, d training and testing performance curves of ANN-ICA 3-11-1 models for different population sizes (15:5:100) with the random number held constant at the optimum value of 6 and the number of empires held constant at the optimum value of 12; e, f training and testing performance curves of ANN-ICA 3-11-1 models for different numbers of empires (2:2:20) with the random number held constant at the optimum value of 6 and the population size held constant at the optimum value of 75

A performance summary of the developed models is given in Table 15. In terms of the a20-index criterion, the ANN-LM 3-11-1 model achieved the highest precision, with accuracies of 84.70% and 71.74% in the training and testing phases, respectively. The values of the other indices also demonstrate that the proposed ANN-LM 3-11-1 model is efficient and robust. Based on the RMSE index, the ANN-LM 3-11-1 model outperforms the ANN-PSO 3-11-1 and ANN-ICA 3-11-1 models by 103.73% and 61.90%, respectively, in the training phase, and by 61.33% and 51.90%, respectively, in the testing phase. These results corroborate the aforementioned premise in every aspect.
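The RMSE percentages above appear to express how much larger the competing model's RMSE is relative to that of ANN-LM. The short check below is a sketch under that assumption. It uses the ANN-ICA testing RMSE reported in this section and the ANN-LM RMSE of 14.8272 quoted in the abstract for the validation phase, which is assumed here to be the same as the testing phase. The function name is illustrative.

```python
def relative_rmse_gap(rmse_other: float, rmse_reference: float) -> float:
    """Percentage by which rmse_other exceeds rmse_reference."""
    return (rmse_other - rmse_reference) / rmse_reference * 100.0


rmse_lm_test = 14.8272    # ANN-LM (abstract, validation phase; assumed = testing)
rmse_ica_test = 22.5228   # ANN-ICA, testing phase

gap = relative_rmse_gap(rmse_ica_test, rmse_lm_test)
print(f"{gap:.2f}%")  # 51.90%
```

The result reproduces the 51.90% quoted for ANN-ICA in the testing phase, which supports this reading of the percentages.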

To further demonstrate the overall performance of the optimum ANNs in a succinct manner, the Taylor diagram and the error histogram for both the training and testing stages are presented in this sub-section. The Taylor diagram is a two-dimensional mathematical diagram that provides a brief assessment of a model’s accuracy (Taylor 2001). It summarizes the association between the actual and estimated observations in terms of R, RMSE, and the ratio of standard deviations. In a Taylor diagram, each model is represented by a point; for an ideal model, the position of this point coincides with the reference point (shown as a black circle). The error histogram, in contrast, displays the distribution of the error between the actual and estimated values for a data-driven model. Figure 14 presents the Taylor diagrams of the optimum ANNs generated in this study, whereas Fig. 15 presents the error histograms between the estimated and actual UCS of granite. From these figures, the robustness of the proposed ANN-LM 3-11-1 model can be visualized.
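The three quantities shown on a Taylor diagram are linked by a law-of-cosines relation, which is what allows a single point to encode all of them. The sketch below computes them with NumPy and checks that relation numerically. The data are made-up illustrative values, not samples from the study's database. Note that the RMS quantity in this relation is the centred RMS difference (means removed), which differs from the plain RMSE when the predictions are biased.

```python
import numpy as np


def taylor_statistics(actual, predicted):
    """Return the statistics that position a model on a Taylor diagram."""
    y = np.asarray(actual, dtype=float)
    p = np.asarray(predicted, dtype=float)
    sd_ref = y.std()      # population standard deviation of the observations
    sd_model = p.std()    # population standard deviation of the predictions
    r = np.corrcoef(y, p)[0, 1]
    # Centred RMS difference: both series have their means removed first
    crmsd = np.sqrt(np.mean(((p - p.mean()) - (y - y.mean())) ** 2))
    return {"R": r, "sd_ref": sd_ref, "sd_model": sd_model,
            "sd_ratio": sd_model / sd_ref, "crmsd": crmsd}


# Made-up UCS values (MPa) for illustration only
actual = [45.0, 80.0, 120.0, 150.0, 190.0]
predicted = [50.0, 70.0, 125.0, 140.0, 200.0]
stats = taylor_statistics(actual, predicted)

# Law of cosines: E'^2 = sd_model^2 + sd_ref^2 - 2 * sd_model * sd_ref * R
lhs = stats["crmsd"] ** 2
rhs = (stats["sd_model"] ** 2 + stats["sd_ref"] ** 2
       - 2.0 * stats["sd_model"] * stats["sd_ref"] * stats["R"])
print(np.isclose(lhs, rhs))  # True
```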

6.4 Closed-Form Equations for Estimating Granite UCS Using Optimum ANN-LM 3-11-1 Model

Most published studies on the problem under consideration present only the architecture of the resulting optimal ANN model, together with the values of the statistical indicators used to evaluate its performance. This information alone makes it impossible to assess the reliability of the proposed mathematical model and, more importantly, prohibits any substantial comparison with other models available in the literature. To characterize the reliability of a proposed computational model, researchers need to provide all the pertinent data that clearly describe the model, so that it can be reproduced and checked by others. In the case of ANNs in particular, it is deemed necessary to provide, apart from the architecture, the transfer functions of the proposed model and, more importantly, the final values of its weights and biases. In addition, since a publication targets not only researchers but also practicing engineers, it is particularly useful to include a Graphical User Interface (GUI) implementing the proposed ANN-LM 3-11-1 model, so that it can be checked by experts and utilized by anyone interested in this problem.

To overcome this deficiency, the explicit mathematical equation that represents the optimum ANN-LM 3-11-1 model, along with the accompanying weights and biases, is provided in this study. The proposed model can therefore be readily reproduced (e.g., in a spreadsheet environment) by any interested third party. Even without an understanding of neural networks, the model can still be implemented, since the required calculation steps are defined by the explicit mathematical formula provided. In light of the above, the derived equations for the prediction of both the normalized (UCSnorm) and real (UCSreal) values of granite UCS, using the Rn, Vp, and ne values, are expressed by the following equations for the optimum ANN-LM 3-11-1 model:

where a = − 1.00 and b = 1.00 are the lower and upper limits of the minmax normalization technique applied to the data, and UCSmax = 211.90 and UCSmin = 20.30 are the maximum and minimum values of granite UCS in the database used for training and developing the ANN models. The satlins and radbasn are the symmetric saturating linear transfer function and the normalized radial basis transfer function, respectively, as discussed in Table 6. Their equations and graphs are presented in Table 18 of the Appendix.
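For orientation, the generic min-max mapping onto the interval [a, b] and its inverse, as suggested by the definitions above, can be sketched as follows. This is the standard form of the technique, written under the assumption that the study uses it unchanged. It is not a reproduction of the paper's numbered equations, and \(x\) stands for any input or output variable.

\[
x_{\mathrm{norm}} = a + \frac{\left(x - x_{\min}\right)\left(b - a\right)}{x_{\max} - x_{\min}}, \qquad
\mathrm{UCS}_{\mathrm{real}} = \mathrm{UCS}_{\min} + \frac{\left(\mathrm{UCS}_{\mathrm{norm}} - a\right)\left(\mathrm{UCS}_{\max} - \mathrm{UCS}_{\min}\right)}{b - a}
\]

With a = − 1.00, b = 1.00, UCSmin = 20.30, and UCSmax = 211.90, the inverse mapping reduces to \(\mathrm{UCS}_{\mathrm{real}} = 20.30 + 95.80\left(\mathrm{UCS}_{\mathrm{norm}} + 1\right)\), so that, for example, \(\mathrm{UCS}_{\mathrm{norm}} = 0\) corresponds to 116.10 MPa.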

Equation (48) describes the developed ANN-LM 3-11-1 model in a purely mathematical form, so that its reproduction becomes straightforward. In Eq. (48), \(\left[\mathrm{IW}\{1,1\}\right]\) is an 11 \(\times\) 3 matrix that contains the weights of the hidden layer; \(\left[\mathrm{LW}\{2,1\}\right]\) is a 1 \(\times\) 11 vector with the weights of the output layer; \(\left[\mathrm{IP}\right]\) is a 3 \(\times\) 1 vector with the three input variables; \(\left[\mathrm{B}\{1,1\}\right]\) is an 11 \(\times\) 1 vector that contains the bias values of the hidden layer; and \(\left[\mathrm{B}\{2,1\}\right]\) is a 1 \(\times\) 1 vector with the bias of the output layer. The \(\left[\mathrm{IP}\right]\) vector contains the three normalized values of the input variables Rn, Vp, and ne. It can be expressed as

where \(\mathrm{min}\left({n}_{e}\right)=0.06\), \(\mathrm{max}\left({n}_{e}\right)=7.23\); \(\mathrm{min}\left({V}_{p}\right)=1160\), \(\mathrm{max}\left({V}_{p}\right)=7943\); and \(\mathrm{min}\left({R}_{n}\right)=16.80\), \(\mathrm{max}\left({R}_{n}\right)=72\) are the minimum and maximum values of the input parameters (shown in Table 4). The final values of the weights and biases that make up the matrices \(\left[\mathrm{IW}\{1,1\}\right]\), \(\left[\mathrm{LW}\{2,1\}\right]\), \(\left[\mathrm{B}\{1,1\}\right]\), and \(\left[\mathrm{B}\{2,1\}\right]\) are presented in Table 16.
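The calculation steps described above (normalize the inputs, apply the hidden and output layers, de-normalize the result) can be sketched in a few lines of NumPy. Several points are assumptions and not statements from the paper: `satlins` and `radbasn` follow the usual definitions of the MATLAB functions of the same names; `radbasn` is assumed to act on the hidden layer and `satlins` on the output layer (with a single output neuron, a normalized radial basis would always return 1); and the weights are random placeholders, since the values of Table 16 are not reproduced here. The function and variable names are illustrative.

```python
import numpy as np

NORM_LO, NORM_HI = -1.0, 1.0                 # a and b from the text
X_MIN = np.array([16.80, 1160.0, 0.06])      # minima of Rn, Vp, ne
X_MAX = np.array([72.0, 7943.0, 7.23])       # maxima of Rn, Vp, ne
UCS_MIN, UCS_MAX = 20.30, 211.90             # MPa


def satlins(n):
    """Symmetric saturating linear function: clip values to [-1, 1]."""
    return np.clip(n, -1.0, 1.0)


def radbasn(n):
    """Normalized radial basis: exp(-n^2) divided by its sum over the layer."""
    r = np.exp(-np.square(n))
    return r / r.sum()


def predict_ucs(rn, vp, ne, IW, B1, LW, B2):
    """Forward pass of a 3-11-1 network followed by de-normalization."""
    x = np.array([rn, vp, ne], dtype=float)
    # Min-max normalization of the inputs onto [NORM_LO, NORM_HI]: the [IP] vector
    ip = NORM_LO + (x - X_MIN) * (NORM_HI - NORM_LO) / (X_MAX - X_MIN)
    hidden = radbasn(IW @ ip + B1)           # assumed hidden-layer transfer function
    ucs_norm = satlins(LW @ hidden + B2)     # assumed output-layer transfer function
    ucs_real = UCS_MIN + (ucs_norm - NORM_LO) * (UCS_MAX - UCS_MIN) / (NORM_HI - NORM_LO)
    return float(ucs_real[0])


# Placeholder weights and biases with the stated shapes, NOT the trained values
rng = np.random.default_rng(0)
IW = rng.normal(size=(11, 3))    # hidden-layer weights, 11 x 3
B1 = rng.normal(size=11)         # hidden-layer biases, 11 x 1
LW = rng.normal(size=(1, 11))    # output-layer weights, 1 x 11
B2 = rng.normal(size=1)          # output-layer bias, 1 x 1

ucs = predict_ucs(rn=50.0, vp=5000.0, ne=1.0, IW=IW, B1=B1, LW=LW, B2=B2)
print(f"Illustrative output with placeholder weights: {ucs:.2f} MPa")
```

Because the output passes through `satlins`, the de-normalized value always stays within the 20.30 to 211.90 MPa range of the training database, whatever the weights.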

In this form of matrix multiplication, Eq. (49) can be easily programmed in an Excel spreadsheet and, therefore, it can be more easily evaluated and used in practice. It is worth noting that such an implementation can be used by various interested parties (i.e., researchers, students, and engineers) without heavy requirements in effort and time. Figure 16 presents the developed Graphical User Interface (GUI), implementing Eqs. (48) and (49). The developed GUI is also provided as supplementary material and can be used as an alternative tool to estimate the UCS of rocks. Researchers and practitioners can utilize it for estimating the rock UCS in the preliminary stages of major or minor projects such as tunneling works, railway projects, and highway projects.

6.5 Comparison of the Optimum ANN-LM 3-11-1 Model with Semi-empirical Relationships Available in the Literature

The accuracy of the optimum ANN-LM 3-11-1 model developed in this study to predict the UCS of granite is compared with the prediction accuracy of the top nine models available in the literature. The prediction accuracy of the various models is assessed using a range of statistical indices, including the a20-index, R, RMSE, MAPE, and VAF. The comparison is presented for the testing dataset, because a model that makes more accurate predictions in the testing phase is considered more accurate and can be accepted with more conviction. Table 17 shows that the optimum ANN-LM 3-11-1 model significantly outperforms the top nine models reported in the literature. The ANN-LM 3-11-1 model predicts the UCS of granite with less than ± 20% deviation from the experimental data for 71.74% of the specimens, while the second-ranked model, proposed by Mishra and Basu (2013), does so for 54.35% of the specimens. The variation of the statistical indices suggests that the a20-index is the index most sensitive to prediction accuracy. For example, whereas the Pearson correlation coefficients of the first- and second-ranked models differ by only 16%, their a20-indexes differ by 32%.
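The five indices named above can be computed with a few NumPy expressions. The sketch below uses their commonly cited definitions, which are assumed here to match those of the study: the a20-index is the fraction of samples whose predicted-to-actual ratio lies within 0.80 to 1.20, and VAF is one minus the ratio of the error variance to the variance of the actual values, in percent. The sample values are made up for illustration.

```python
import numpy as np


def evaluate(actual, predicted):
    """Compute a20-index, R, RMSE, MAPE (%), and VAF (%) for a set of predictions."""
    y = np.asarray(actual, dtype=float)
    p = np.asarray(predicted, dtype=float)
    ratio = p / y
    return {
        # Fraction of samples predicted within +/- 20% of the actual value
        "a20": np.mean((ratio >= 0.80) & (ratio <= 1.20)),
        "R": np.corrcoef(y, p)[0, 1],
        "RMSE": np.sqrt(np.mean((y - p) ** 2)),
        "MAPE": np.mean(np.abs((y - p) / y)) * 100.0,
        "VAF": (1.0 - np.var(y - p) / np.var(y)) * 100.0,
    }


# Made-up UCS values (MPa) for illustration only
actual = [50.0, 100.0, 150.0, 200.0]
predicted = [55.0, 130.0, 140.0, 190.0]
metrics = evaluate(actual, predicted)

print(metrics["a20"])  # 0.75
```

In this toy case three of the four predictions fall within ± 20% of the actual value, so the a20-index is 0.75. The single poor prediction lowers the a20-index by a full quarter, which illustrates why this index reacts strongly to individual large deviations.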

It should be reiterated that the optimum ANN-LM 3-11-1 model can predict the UCS of granite strictly within the range of values on which it has been trained, which is presented in detail in Table 4. The prediction accuracy of the proposed model is particularly high in the range where the distribution of the parameter values is dense, which typically comprises 5% of the total parameter values.

7 Summary and Conclusion

The aim of this research was to estimate the UCS of rocks using three non-destructive test indicators, namely Rn (L), Vp, and ne. For this purpose, a total of 274 datasets was compiled and used to train and validate three ANN-based models, namely ANN-LM 3-11-1, ANN-PSO 3-11-1, and ANN-ICA 3-11-1. Specifically, the performance of the constructed ANNs was evaluated first, followed by a comparison with the prediction accuracy of models currently available in the literature. Existing models in the literature employ only Vp and/or ne as input parameters for predicting the UCS of granites, whereas the ANN-based models developed here utilize three test indicators, viz., Rn (L), Vp, and ne. Based on the a20-index, R, RMSE, MAPE, and VAF criteria, the ANN-LM 3-11-1 was found to be the highest performing model in both the training and testing phases of UCS prediction. Furthermore, the ANN-LM 3-11-1 model proposed in this study delivers a more accurate prediction of the UCS of granite than the existing models in the literature. Considering the experimental results, the developed GUI based on the constructed ANN-LM 3-11-1 model can be used as a tool to estimate the UCS of granites.

The goal of this study was not only to build a high-performance ML model, but also to provide adequate details of the numerous proposals available in the literature. Details of previous studies employing soft computing models to predict granite UCS are also provided and discussed. The main advantages of the study include (a) a closed-form equation; (b) a GUI platform available as a ready-made tool for estimating rock UCS; and (c) the most efficient model. However, the optimum ANN-LM 3-11-1 model can only predict the UCS of soft to hard granite within the range of 20.30 to 211.90 MPa, which is one of the limitations of this study. In addition, the prediction accuracy of the ANN-LM 3-11-1 model could be influenced by any variation in the distribution of the input data relative to that on which it was trained and developed. The descriptive statistics of the input parameters demonstrate that the number of samples with Vp ranging between 1000 and 3000 m/s is relatively limited. Therefore, extending the database in this range would require a recalibration of the optimum ANN-LM 3-11-1 model. Future work could include (a) a comprehensive evaluation of the ANN-LM model’s accuracy relative to other soft computing models using real-world data from a variety of fields; (b) an evaluation of the ANN-LM 3-11-1 model’s superiority over other hybrid ANNs constructed with different optimization algorithms; and (c) implementation of dimension reduction techniques such as principal component analysis (PCA), independent component analysis, and kernel-PCA for a comprehensive evaluation of results. Nonetheless, to the best of the authors’ knowledge, this is the first research to employ three non-destructive test indicators (Rn, Vp, and ne) to predict the UCS of granites.

Data Availability

The raw/processed data required to reproduce these findings will be made available on request.

References

Aamir M, Tolouei-Rad M, Vafadar A, Raja MNA, Giasin K (2020) Performance analysis of multi-spindle drilling of Al2024 with TiN and TiCN coated drills using experimental and artificial neural networks technique. Appl Sci 10(23):8633

Altindag R (2012) Correlation between P-wave velocity and some mechanical properties for sedimentary rocks. J Southern Afr Inst Min Metall 112(3):229–237

Apostolopoulou M, Armaghani DJ, Bakolas A, Douvika MG, Moropoulou A, Asteris PG (2019) Compressive strength of natural hydraulic lime mortars using soft computing techniques. Proc Struct Integrit 17:914–923. https://doi.org/10.1016/j.prostr.2019.08.122

Apostolopoulou M, Asteris PG, Armaghani DJ, Douvika MG, Lourenço PB, Cavaleri L, Bakolas A, Moropoulou A (2020) Mapping and holistic design of natural hydraulic lime mortars. Cement Concrete Res 136:106167. https://doi.org/10.1016/j.cemconres.2020.106167

Apostolopoulou M, Douvika MG, Kanellopoulos IN, Moropoulou A, Asteris PG (2018) Prediction of compressive strength of mortars using artificial neural networks. In: Proceedings of the 1st International Conference TMM_CH, Transdisciplinary Multispectral Modelling and Cooperation for the Preservation of Cultural Heritage, Athens, Greece (pp. 10-13)

Armaghani DJ, Mohamad ET, Hajihassani M, Yagiz S, Motaghedi H (2016a) Application of several non-linear prediction tools for estimating uniaxial compressive strength of granitic rocks and comparison of their performances. Eng Comput 32(2):189–206

Armaghani D, Tonnizam Mohamad E, Momeni E et al (2016b) Prediction of the strength and elasticity modulus of granite through an expert artificial neural network. Arab J Geosci 9:48

Armaghani DJ, Hatzigeorgiou GD, Karamani Ch, Skentou A, Zoumpoulaki I, Asteris PG (2019) Soft computing-based techniques for concrete beams shear strength. Proc Struct Integrit 17:924–933

Armaghani DJ, Mamou A, Maraveas C, Roussis PC, Siorikis VG, Skentou AD, Asteris PG (2021) Predicting the unconfined compressive strength of granite using only two non-destructive test indexes. Geomech Eng 25(4):317–330

Asteris PG, Roussis PC, Douvika MG (2017) Feed-forward neural network prediction of the mechanical properties of sandcrete materials. Sensors 17(6):1344

Asteris PG, Kolovos KG (2017) Self-compacting concrete strength prediction using surrogate models. Neural Comput Appl 31(1):409–424

Asteris PG, Plevris V (2013) Neural network approximation of the masonry failure under biaxial compressive stress. In: Proceedings of the 3rd South-East European Conference on Computational Mechanics (SEECCM III), an ECCOMAS and IACM Special Interest Conference, Kos Island, Greece (pp. 12-14)

Asteris PG, Plevris V (2016) Anisotropic masonry failure criterion using artificial neural networks. Neural Comput Appl 28(8):2207–2229

Asteris PG, Mamou A, Hajihassani M, Hasanipanah M, Koopialipoor M, Le TT, Kardani N, Armaghani DJ (2021) Soft computing based closed form equations correlating L and N-type Schmidt hammer rebound numbers of rocks. Transport Geotech 29:100588. https://doi.org/10.1016/j.trgeo.2021.100588

Atashpaz-Gargari E, Lucas C (2007) Imperialist competitive algorithm: an algorithm for optimization inspired by imperialistic competition. In: 2007 IEEE congress on evolutionary computation (pp 4661–4667). IEEE

Aydin A, Basu A (2005) The Schmidt hammer in rock material characterization. Eng Geol 81(1):1–14

Azimian A (2017) Application of statistical methods for predicting uniaxial compressive strength of limestone rocks using nondestructive tests. Acta Geotech 12(2):321–333

Ballantyne CK, Black NM, Finlay DP (1990) Use of the Schmidt test hammer to detect enhanced boulder weathering under late-lying snowpatches. Earth Surf Processes Landf 15:471–474

Barham WS, Rabab’ah SR, Aldeeky HH, Al Hattamleh OH (2020) Mechanical and physical based artificial neural network models for the prediction of the unconfined compressive strength of rock. Geotech Geol Eng 38(5):4779–4792

Basu A, Aydin A (2004) A method for normalization of Schmidt hammer rebound values. Int J Rock Mech Min Sci 41(7):1211–1214

Bhadana V, Jalal AS, Pathak P (2020) A comparative study of machine learning models for COVID-19 prediction in India. In: 2020 IEEE 4th conference on information and communication technology (CICT) (pp 1–7). IEEE

Bruno G, Vessia G, Bobbo L (2013) Statistical method for assessing the uniaxial compressive strength of carbonate rock by Schmidt hammer tests performed on core samples. Rock Mech Rock Eng 46(1):199–206

Cao J, Gao J, Nikafshan Rad H, Mohammed AS, Hasanipanah M, Zhou J (2021) A novel systematic and evolved approach based on XGBoost-firefly algorithm to predict Young’s modulus and unconfined compressive strength of rock. Eng Comput 1–17. https://doi.org/10.1007/s00366-020-01241-2

Cavaleri L, Chatzarakis GE, Di Trapani FD, Douvika MG, Roinos K, Vaxevanidis NM, Asteris PG (2017) Modeling of surface roughness in electro-discharge machining using artificial neural networks. Adv Mater Res 6(2):169

Cavaleri L, Asteris PG, Psyllaki PP, Douvika MG, Skentou AD, Vaxevanidis NM (2019) Prediction of surface treatment effects on the tribological performance of tool steels using artificial neural networks. Appl Sci 9(14):2788

Çelik SB, Çobanoğlu İ (2019) Comparative investigation of Shore, Schmidt, and Leeb hardness tests in the characterization of rock materials. Environ Earth Sci 78(18):1–16

Ceryan N, Samui P (2020) Application of soft computing methods in predicting uniaxial compressive strength of the volcanic rocks with different weathering degree. Arab J Geosci 13(7):1–18

Ceryan N, Okkan U, Kesimal A (2013) Prediction of unconfined compressive strength of carbonate rocks using artificial neural networks. Environ Earth Sci 68(3):807–819

Chandra S, Agrawal S, Chauhan DS (2018) Soft computing based approach to evaluate the performance of solar PV module considering wind effect in laboratory condition. Energy Rep 4:252–259

Chen H, Asteris PG, Armaghani DJ, Gordan B, Pham BT (2019) Assessing dynamic conditions of the retaining wall using two hybrid intelligent models. Appl Sci 9:1042. https://doi.org/10.3390/app9061042

Çobanoğlu İ, Çelik SB (2008) Estimation of uniaxial compressive strength from point load strength, Schmidt hardness and P-wave velocity. Bull Eng Geol Env 67(4):491–498

Das SK, Samui P, Sabat AK (2011) Application of artificial intelligence to maximum dry density and unconfined compressive strength of cement stabilized soil. Geotech Geol Eng 29(3):329–342

Dehghan S, Sattari GH, Chelgani SC, Aliabadi MA (2010) Prediction of uniaxial compressive strength and modulus of elasticity for Travertine samples using regression and artificial neural networks. Min Sci Technol 20(1):41–46

Demirdag S, Yavuz H, Altindag R (2009) The effect of sample size on Schmidt rebound hardness value of rocks. Int J Rock Mech Min Sci 46(4):725–730

Diamantis K, Gartzos E, Migiros G (2009) Study on uniaxial compressive strength, point load strength index, dynamic and physical properties of serpentinites from Central Greece: test results and empirical relations. Eng Geol 108(3–4):199–207

Dinçer I, Acar A, Çobanoğlu I, Uras Y (2004) Correlation between Schmidt hardness, uniaxial compressive strength and Young’s modulus for andesites, basalts and tuffs. Bull Eng Geol Environ 63(2):141–148

Ebdali M, Khorasani E, Salehin S (2020) A comparative study of various hybrid neural networks and regression analysis to predict unconfined compressive strength of travertine. Innov Infrastruct Solut 5(3):1–14

Fener M, Kahraman S, Bilgil A, Gunaydin O (2005) A comparative evaluation of indirect methods to estimate the compressive strength of rocks. Rock Mech Rock Eng 38(4):329–343

Ferentinou M, Fakir M (2017) An ANN approach for the prediction of uniaxial compressive strength, of some sedimentary and igneous rocks in eastern KwaZulu-Natal. Procedia Eng 191:1117–1125

Franklin JA, Dusseault MB (1991) Rock engineering applications. McGraw-Hill, New York

Gokceoglu C, Zorlu K (2004) A fuzzy model to predict the uniaxial compressive strength and the modulus of elasticity of a problematic rock. Eng Appl Artif Intell 17(1):61–72

Golafshani EM, Behnood A, Arashpour M (2020) Predicting the compressive strength of normal and High-Performance Concretes using ANN and ANFIS hybridized with Grey Wolf Optimizer. Constr Build Mater 232:117266

Hassoun MH (1995) Fundamentals of artificial neural networks. MIT Press

Hecht-Nielsen R (1987) Kolmogorov’s mapping neural network existence theorem. In: IEEE First Annual Int. Conf. on Neural Networks, San Diego, vol 3, pp 11–13

Heidari M, Mohseni H, Jalali SH (2018) Prediction of uniaxial compressive strength of some sedimentary rocks by fuzzy and regression models. Geotech Geol Eng 36(1):401–412

Hoek E (1983) Strength of jointed rock masses. Geotechnique 33(3):187–223. https://doi.org/10.1680/geot.1983.33.3.187

Hoek E, Brown ET (1980) Empirical strength criterion for rock masses. J Geotech Geoenviron Eng 106:15715. https://doi.org/10.1061/AJGEB6.0001029

Hoek E, Brown ET (1997) Practical estimates of rock mass strength. Int J Rock Mech Min Sci 34(8):1165–1186. https://doi.org/10.1016/S1365-1609(97)80069-X

Hoek E, Brown ET (2019) The Hoek-Brown failure criterion and GSI–2018 edition. J Rock Mech Geotech Eng 11(3):445–463

Hoek E, Carranza-Torres C, Corkum B (2002) Hoek-Brown failure criterion-2002 edition. Proc NARMS-Tac 1(1):267–273

Hornik K, Stinchcombe M, White H (1989) Multilayer feedforward networks are universal approximators. Neural Netw 2:359–366

Hunter D, Yu H, Pukish MS III, Kolbusz J, Wilamowski BM (2012) Selection of proper neural network sizes and architectures—a comparative study. IEEE Trans Industr Inf 8(2):228–240

Jing H, Nikafshan Rad H, Hasanipanah M, JahedArmaghani D, Qasem SN (2021) Design and implementation of a new tuned hybrid intelligent model to predict the uniaxial compressive strength of the rock using SFS-ANFIS. Eng Comput 37(4):2717–2734

Kaastra I, Boyd M (1996) Designing a neural network for forecasting financial and economic time series. Neurocomputing 10:215–236. https://doi.org/10.1016/0925-2312(95)00039-9

Kahraman S (2001) Evaluation of simple methods for assessing the uniaxial compressive strength of rock. Int J Rock Mech Min Sci 38(7):981–994

Kanellopoulos I, Wilkinson GG (1997) Strategies and best practice for neural network image classification. Int J Remote Sens 18:711–725. https://doi.org/10.1080/014311697218719

Karaman K, Kesimal A (2015) A comparative study of Schmidt hammer test methods for estimating the uniaxial compressive strength of rocks. Bull Eng Geol Env 74(2):507–520

Katz O, Reches Z, Roegiers JC (2000) Evaluation of mechanical rock properties using a Schmidt Hammer. Int J Rock Mech Min Sci 37(4):723–728

Kechagias J, Tsiolikas A, Asteris P, Vaxevanidis N (2018) Optimizing ANN performance using DOE: application on turning of a titanium alloy. In: Proceedings of the 22nd International Conference on Innovative Manufacturing Engineering and Energy, Chisinau, Moldova. In: MATEC Web of Conferences (Vol. 178, p. 01017). EDP Sciences

Kennedy J, Eberhart R (1995) Particle swarm optimization. In: Proceedings of ICNN'95-international conference on neural networks (Vol 4, pp 1942–1948). IEEE

Khan MUA, Shukla SK, Raja MNA (2021) Soil–conduit interaction: an artificial intelligence application for reinforced concrete and corrugated steel conduits. Neural Comput Appl 33(21):14861–14885

Khan MUA, Shukla SK, Raja MNA (2022) Load-settlement response of a footing over buried conduit in a sloping terrain: a numerical experiment-based artificial intelligent approach. Soft Comput 26:6839–6856

Khandelwal M (2013) Correlating P-wave velocity with the physico-mechanical properties of different rocks. Pure Appl Geophys 170(4):507–514

Khandelwal M, Singh TN (2009) Correlating static properties of coal measures rocks with P-wave velocity. Int J Coal Geol 79(1–2):55–60

Kılıç A, Teymen A (2008) Determination of mechanical properties of rocks using simple methods. Bull Eng Geol Env 67(2):237–244

Koopialipoor M, Jahed Armaghani D, Hedayat A, Marto A, Gordan B (2019a) Applying various hybrid intelligent systems to evaluate and predict slope stability under static and dynamic conditions. Soft Comput 23(14):5913–5929

Koopialipoor M, Fallah A, Armaghani DJ, Azizi A, Mohamad ET (2019b) Three hybrid intelligent models in estimating flyrock distance resulting from blasting. Eng Comput 35(1):243–256

Koopialipoor M, Murlidhar BR, Hedayat A, Armaghani DJ, Gordan B, Mohamad ET (2020) The use of new intelligent techniques in designing retaining walls. Eng Comput 36(1):283–294

Koopialipoor M, Asteris PG, Mohammed AS, Alexakis DE, Mamou A, Armaghani DJ (2022) Introducing stacking machine learning approaches for the prediction of rock deformation. Transport Geotech 34:100756. https://doi.org/10.1016/j.trgeo.2022.100756

Kurtulus C, Bozkurt A, Endes H (2012) Physical and mechanical properties of serpentinized ultrabasic rocks in NW Turkey. Pure Appl Geophys 169(7):1205–1215

Li D, Armaghani DJ, Zhou J, Lai SH, Hasanipanah M (2020) A GMDH predictive model to predict rock material strength using three non-destructive tests. J Nondestruct Eval 39(4):1–14

Li JY, Chow TW, Yu YL (1995) The estimation theory and optimization algorithm for the number of hidden units in the higher-order feedforward neural network. In: Proceedings of ICNN'95-International Conference on Neural Networks, vol. 3. IEEE. pp 1229–1233

Madhubabu N, Singh PK, Kainthola A, Mahanta B, Tripathy A, Singh TN (2016) Prediction of compressive strength and elastic modulus of carbonate rocks. Measurement 88:202–213

Mahmoodzadeh A, Mohammadi M, Ibrahim HH, Abdulhamid SN, Salim SG, Ali HFH, Majeed MK (2021) Artificial intelligence forecasting models of uniaxial compressive strength. Transport Geotech 27:100499

Mahmoodzadeh A, Mohammadi M, Ghafoor Salim S, Farid Hama Ali H, Hashim Ibrahim H, NarimanAbdulhamid S, Nejati HR, Rashidi S (2022) Machine learning techniques to predict rock strength parameters. Rock Mech Rock Eng 1–21

Masters T (1993) Practical neural network recipes in C++, 1st edn. Academic Press Professional, Inc.

Meulenkamp F, Grima MA (1999) Application of neural networks for the prediction of the unconfined compressive strength (UCS) from Equotip hardness. Int J Rock Mech Min Sci 36(1):29–39

Minaeian B, Ahangari K (2013) Estimation of uniaxial compressive strength based on P-wave and Schmidt hammer rebound using statistical method. Arab J Geosci 6(6):1925–1931

Mishra DA, Basu A (2013) Estimation of uniaxial compressive strength of rock materials by index tests using regression analysis and fuzzy inference system. Eng Geol 160:54–68

Moayedi H, Moatamediyan A, Nguyen H, Bui XN, Bui DT, Rashid ASA (2020) Prediction of ultimate bearing capacity through various novel evolutionary and neural network models. Eng Comput 36(2):671–687

Mohamad ET, JahedArmaghani D, Momeni E, AlaviNezhad Khalil Abad SV (2015) Prediction of the unconfined compressive strength of soft rocks: a PSO-based ANN approach. Bull Eng Geol Env 74(3):745–757

Mohamad ET, Koopialipoor M, Murlidhar BR, Rashiddel A, Hedayat A, Armaghani DJ (2019) A new hybrid method for predicting ripping production in different weathering zones through in situ tests. Measurement 147:106826

Momeni E, Armaghani DJ, Hajihassani M, Amin MFM (2015) Prediction of uniaxial compressive strength of rock samples using hybrid particle swarm optimization-based artificial neural networks. Measurement 60:50–63

Monjezi M, AminiKhoshalan H, YazdianVarjani A (2012) Prediction of flyrock and backbreak in open pit blasting operation: a neuro-genetic approach. Arab J Geosci 5(3):441–448

Moradian ZA, Behnia M (2009) Predicting the uniaxial compressive strength and static Young’s modulus of intact sedimentary rocks using the ultrasonic test. Int J Geomech 9(1):14–19

Moussas VM, Diamantis K (2021) Predicting uniaxial compressive strength of serpentinites through physical, dynamic and mechanical properties using neural networks. J Rock Mech Geotech Eng 13(1):167–175

Ng IT, Yuen KV, Lau CH (2015) Predictive model for uniaxial compressive strength for Grade III granitic rocks from Macao. Eng Geol 199:28–37

Niedzielski T, Migoń P, Placek A (2009) A minimum sample size required from Schmidt hammer measurements. Earth Surf Process Landforms 34(13):1713–1725

Nikoo M, Ramezani F, Hadzima-Nyarko M, Nyarko EK, Nikoo M (2016) Flood-routing modeling with neural network optimized by social-based algorithm. Nat Hazards 82(1):1–24

Nikoo M, Hadzima-Nyarko M, KarloNyarko E, Nikoo M (2018) Determining the natural frequency of cantilever beams using ANN and heuristic search. Appl Artif Intell 32(3):309–334

Nikoo M, Sadowski L, Khademi F, Nikoo M (2017) Determination of damage in reinforced concrete frames with shear walls using self-organizing feature map. Appl Comput Intell Soft Comput 1–10

Pandey SK, Sodum VR, Janghel RR, Raj A (2020) ECG arrhythmia detection with machine learning algorithms. In: Data Engineering and Communication Technology. Springer, Singapore, pp 409–417

Paola JD, Schowengerdt RA (1995) A review and analysis of backpropagation neural networks for classification of remotely-sensed multi-spectral imagery. Int J Remote Sens 16:3033–3058. https://doi.org/10.1080/01431169508954607

Poole RW, Farmer IW (1980) Consistency and repeatability of Schmidt hammer rebound data during field testing. Int J Rock Mech Min Sci Geomech Abstr 17:167–171

Psyllaki P, Stamatiou K, Iliadis I, Mourlas A, Asteris P, Vaxevanidis N (2018) Surface treatment of tool steels against galling failure. In: Proceedings of the 5th International Conference of Engineering against failure, MATEC Web of Conferences, 188, 04024, Chios, Greece

Raja MNA, Shukla SK, Khan MUA (2021) An intelligent approach for predicting the strength of geosynthetic-reinforced subgrade soil. Int J Pavement Eng 49(5):1280–1293

Raja MNA, Shukla SK (2021a) Multivariate adaptive regression splines model for reinforced soil foundations. Geosynth Int 28(4):368–390

Raja MNA, Shukla SK (2021b) Predicting the settlement of geosynthetic-reinforced soil foundations using evolutionary artificial intelligence technique. Geotext Geomembr 49(5):1280–1293

Ripley BD (2008) Pattern recognition and neural networks, 1st edn. Cambridge University Press, Cambridge

Rogers LL, Dowla FU (1994) Optimization of groundwater remediation using artificial neural networks with parallel solute transport modeling. Water Resour Res 30:457–481. https://doi.org/10.1029/93WR01494

Roy P, Mahapatra GS, Dey KN (2019) Forecasting of software reliability using neighborhood fuzzy particle swarm optimization based novel neural network. IEEE/CAA J Automatica Sinica 6(6):1365–1383

Sachpazis CI (1990) Correlating Schmidt hardness with compressive strength and Young’s modulus of carbonate rocks. Bull Int Assoc Eng Geol 42(1):75–83

Samui P (2008) Support vector machine applied to settlement of shallow foundations on cohesionless soils. Comput Geotech 35(3):419–427. https://doi.org/10.1016/j.compgeo.2007.06.014

Samui P, Kothari DP (2011) Utilization of a least square support vector machine (LSSVM) for slope stability analysis. Scientia Iranica 18(1):53–58

Shalabi FI, Cording EJ, Al-Hattamleh OH (2007) Estimation of rock engineering properties using hardness tests. Eng Geol 90(3–4):138–147

Sharma PK, Singh TN (2008) A correlation between P-wave velocity, impact strength index, slake durability index and uniaxial compressive strength. Bull Eng Geol Env 67(1):17–22

Shibata K, Ikeda Y (2009) Effect of number of hidden neurons on learning in large-scale layered neural networks. In: 2009 ICCAS-SICE. IEEE, pp 5008–5013

Sousa LM, del Río LMS, Calleja L, de Argandona VGR, Rey AR (2005) Influence of microfractures and porosity on the physico-mechanical properties and weathering of ornamental granites. Eng Geol 77(1–2):153–168

Sumner P, Nel W (2002) The effect of rock moisture on Schmidt hammer rebound: tests on rock samples from Marion Island and South Africa. Earth Surf Process Landforms 27(10):1137–1142

Tamura SI, Tateishi M (1997) Capabilities of a four-layered feedforward neural network: four layers versus three. IEEE Trans Neural Netw 8(2):251–255

Tandon RS, Gupta V (2015) Estimation of strength characteristics of different Himalayan rocks from Schmidt hammer rebound, point load index, and compressional wave velocity. Bull Eng Geol Env 74(2):521–533

Taylor KE (2001) Summarizing multiple aspects of model performance in a single diagram. J Geophys Res Atmos 106:7183–7192

Teymen A, Mengüç EC (2020) Comparative evaluation of different statistical tools for the prediction of uniaxial compressive strength of rocks. Int J Min Sci Technol 30(6):785–797

Torabi-Kaveh M, Naseri F, Saneie S, Sarshari B (2015) Application of artificial neural networks and multivariate statistics to predict UCS and E using physical properties of Asmari limestones. Arab J Geosci 8(5):2889–2897

Tuğrul A (2004) The effect of weathering on pore geometry and compressive strength of selected rock types from Turkey. Eng Geol 75(3–4):215–227. https://doi.org/10.1016/j.enggeo.2004.05.008

Tuğrul A, Zarif IH (1999) Correlation of mineralogical and textural characteristics with engineering properties of selected granitic rocks from Turkey. Eng Geol 51(4):303–317

Vasconcelos G, Lourenço PB, Alves CAS, Pamplona J (2008) Ultrasonic evaluation of the physical and mechanical properties of granites. Ultrasonics 48(5):453–466

Wang L, Zhang W, Chen F (2019) Bayesian approach for predicting soil-water characteristic curve from particle-size distribution data. Energies 12(15):2992

Wang C (1994) A theory of generalization in learning machines with neural network applications. PhD thesis, University of Pennsylvania

Xu H, Zhou J, Asteris PG, Armaghani DJ, Tahir M (2019) Supervised machine learning techniques to the prediction of tunnel boring machine penetration rate. Appl Sci 9(18):3715. https://doi.org/10.3390/app9183715

Yagiz S (2009) Predicting uniaxial compressive strength, modulus of elasticity and index properties of rocks using the Schmidt hammer. Bull Eng Geol Env 68(1):55–63

Yagiz S, Sezer EA, Gokceoglu C (2012) Artificial neural networks and nonlinear regression techniques to assess the influence of slake durability cycles on the prediction of uniaxial compressive strength and modulus of elasticity for carbonate rocks. Int J Numer Anal Met 36(14):1636–1650

Yang H, Koopialipoor M, Armaghani DJ, Gordan B, Khorami M, Tahir MM (2019) Intelligent design of retaining wall structures under dynamic conditions. Steel Compos Struct Int J 31(6):629–640

Yaşar E, Erdoğan Y (2004) Estimation of rock physicomechanical properties using hardness methods. Eng Geol 71(3–4):281–288

Yesiloglu-Gultekin N, Gokceoglu C (2022) A comparison among some non-linear prediction tools on indirect determination of uniaxial compressive strength and modulus of elasticity of basalt. J Nondestr Eval 41(1):1–24

Yesiloglu-Gultekin N, Gokceoglu C, Sezer EA (2013) Prediction of uniaxial compressive strength of granitic rocks by various nonlinear tools and comparison of their performances. Int J Rock Mech Min Sci 62:113–122

Yılmaz I, Sendır H (2002) Correlation of Schmidt hardness with unconfined compressive strength and Young’s modulus in gypsum from Sivas (Turkey). Eng Geol 66(3–4):211–219

Yılmaz I, Yuksek AG (2008) An example of artificial neural network (ANN) application for indirect estimation of rock parameters. Rock Mech Rock Eng 41(5):781–795. https://doi.org/10.1007/s00603-007-0138-7

Yilmaz I, Yuksek G (2009) Prediction of the strength and elasticity modulus of gypsum using multiple regression, ANN, and ANFIS models. Int J Rock Mech Min Sci 46(4):803–810

Yurdakul M, Akdas H (2013) Modeling uniaxial compressive strength of building stones using non-destructive test results as neural networks input parameters. Constr Build Mater 47:1010–1019

Zhang W, Zhang Y, Goh AT (2017) Multivariate adaptive regression splines for inverse analysis of soil and wall properties in braced excavation. Tunn Undergr Space Technol 64:24–33

Zhang W, Zhang R, Goh AT (2018) Multivariate adaptive regression splines approach to estimate lateral wall deflection profiles caused by braced excavations in clays. Geotech Geol Eng 36(2):1349–1363

Zhang W, Zhang R, Wu C, Goh ATC, Lacasse S, Liu Z, Liu H (2020) State-of-the-art review of soft computing applications in underground excavations. Geosci Front 11(4):1095–1106

Zhang W, Li H, Li Y, Liu H, Chen Y, Ding X (2021) Application of deep learning algorithms in geotechnical engineering: a short critical review. Artif Intell Rev 54(8):5633–5673

Zhang W, Li H, Tang L, Gu X, Wang L, Wang L (2022b) Displacement prediction of Jiuxianping landslide using gated recurrent unit (GRU) networks. Acta Geotech 17(4):1367–1382

Zhang W, Li H, Han L, Chen L, Wang L (2022a) Slope stability prediction using ensemble learning techniques: a case study in Yunyang County, Chongqing, China. J Rock Mech Geotech Eng 14(4):1089–1099

Zhang W, Gu X, Tang L, Yin Y, Liu D, Zhang Y (2022c) Application of machine learning, deep learning and optimization algorithms in geoengineering and geoscience: comprehensive review and future challenge. Gondwana Res 109:1–17

Zhang W, Phoon KK (2022) Editorial for Advances and applications of deep learning and soft computing in geotechnical underground engineering. J Rock Mech Geotech Eng 14(3):671–673

Zhang W, Liu Z (2022) Editorial for machine learning in geotechnics. Acta Geotech 17:1017. https://doi.org/10.1007/s11440-022-01563-z

ISRM (2007) The complete ISRM suggested methods for rock characterization, testing and monitoring: 1974–2006. In: Suggested methods prepared by the Commission on Testing Methods, International Society for Rock Mechanics. Turkish National Group, Ankara, Turkey

Funding

Open access funding provided by HEAL-Link Greece. The authors received no financial support for the research, authorship, and/or publication of this article.

Author information

Contributions

ADS: formal analysis and investigation, and writing—original draft preparation; AB: methodology, software, writing—original draft preparation, and writing—review and editing; AM: formal analysis and investigation; MEL: formal analysis and investigation; GK: writing—review and editing; PS: writing—review and editing; DJA: conceptualization, writing—review and editing, and supervision; PGA: conceptualization, methodology, software, writing—review and editing, and supervision.

Ethics declarations

Conflict of Interest

The authors declare that they have no conflict of interest.

Informed Consent

Informed consent was obtained from all individual participants included in the study.

Supplementary Materials

Graphical User Interface (GUI) for estimating UCS of granite.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Skentou, A.D., Bardhan, A., Mamou, A. et al. Closed-Form Equation for Estimating Unconfined Compressive Strength of Granite from Three Non-destructive Tests Using Soft Computing Models. Rock Mech Rock Eng 56, 487–514 (2023). https://doi.org/10.1007/s00603-022-03046-9

DOI: https://doi.org/10.1007/s00603-022-03046-9