Abstract

Background

A variety of human–computer interfaces are used by robotic surgical systems to control and actuate camera scopes during minimally invasive surgery. The purpose of this review is to examine the different user interfaces used in both commercial systems and research prototypes.

Methods

A comprehensive scoping review of scientific literature was conducted using PubMed and IEEE Xplore databases to identify user interfaces used in commercial products and research prototypes of robotic surgical systems and robotic scope holders. Papers related to actuated scopes with human–computer interfaces were included. Several aspects of user interfaces for scope manipulation in commercial and research systems were reviewed.

Results

Scope assistance was classified into robotic surgical systems (for multiple-port, single-port, and natural orifice surgery) and robotic scope holders (for rigid, articulated, and flexible endoscopes). The benefits and drawbacks of different control interfaces, such as foot, hand, voice, head, eye, and tool tracking, were outlined. The review found that hand control, owing to its familiarity and intuitiveness, is the most widely used interface in commercially available systems. Foot control, head tracking, and tool tracking are increasingly used to address limitations of hand interfaces, such as interruptions to the surgical workflow.

Conclusion

Integrating a combination of different user interfaces for scope manipulation may provide the greatest benefit for surgeons. However, achieving a smooth transition between interfaces may be challenging when controls are combined.

Camera scopes provide surgeons with extensive visualization of internal organs during minimally invasive surgery. Traditionally, the operating surgeon relies on a human assistant to move the camera to obtain optimal views. The assistant must hold the scope steadily to avoid shaky views of the operating field. During long operations, visualization can be interrupted by fatigue, tremor, miscommunication, and the increased need for cleaning when the lens accidentally touches nearby organs. Poor maneuvering of the camera scope by human assistants can complicate procedures [1].

Camera assistant roles are often assigned to junior surgical residents. Handling the scope requires complex psychomotor skills such as visual-spatial processing, hand–eye coordination, and knowledge of the surgical procedure. Camera navigation skills, such as target centering and smooth movement, are assessed using structured tools or simulators designed to differentiate between experienced and inexperienced assistants [2]. The skills required vary with the procedure; for example, assistants need more advanced navigation skills for colorectal resections than for cholecystectomies. Because surgeons depend entirely on camera views during laparoscopic surgery, any unstable views, smudges on the lens, or collisions with instruments caused by the human assistant can prolong operating time and may compromise patient safety [3]. Inexperienced assistants may also unintentionally rotate the camera scope, affecting the surgeon’s visual perception. This can cause misidentification of anatomic structures and lead to intraoperative injuries [4].

Issues with human camera assistance can be addressed by using scope holders. Scope holders that replace the human assistant provide images free from hand tremor. Passive scope holders are repositioned manually between fixed camera positions; although they provide clear, tremor-free views, smooth movement of the scope can be challenging [5, 6]. To overcome this, robotic scope holders that offer visual stability and full control by the operating surgeon have become commonplace. Compared with a human camera assistant, an active robotic scope holder provides the operating surgeon with a flexible and steady view, in addition to reducing operating time and cost [5]. Whereas optimal views in human-assisted laparoscopy depend on the training and experience of the assistant, robot-assisted procedures depend less on these factors [7]. Robotic scope holders also offer improved ergonomics for surgeons [8]. Although musculoskeletal disorders are prevalent among laparoscopic surgeons because of posture and repetitive movements, reports of physical discomfort, such as wrist, shoulder, back, and neck pain, are much lower in robotic surgery [9].

In robot-assisted surgical procedures, the surgeon controls the slave robot using a master interface. Robotic systems use a variety of user interfaces, including control by foot, hand, voice, head, and eyes, and image-based tracking of surgical tools. (Detailed descriptions of each user interface type are presented in the first part of the Results section.) To reduce the surgeon’s cognitive load, interface movements should map naturally and directly to the motion of the robotic actuator. An ideal interface is intuitive, ergonomic, and user-friendly [10, 11]. Intuitive interfaces decrease the time required to position the endoscope tip, which is essential when performing advanced surgical interventions [12].

Surgical robotic systems (and hence the user interfaces that control them) vary with the intervention site. Surgical sites close to an entry port may require only rigid or semi-rigid scopes for visualization. However, complex procedures in the gastrointestinal tract, such as endoscopic submucosal dissection (ESD), require robotically actuated flexible scopes for manipulation and optimal positioning [13]. Biopsies of peripheral pulmonary lesions benefit from robotic bronchoscopy, which allows the scope to be navigated under direct visualization through bronchi that branch at different angles and become progressively narrower deeper in the lungs [14]. The improved surgical precision, which allows fine dissection, makes robot assistance favorable for urological and colorectal surgery.

To our knowledge, the current literature does not provide a detailed review of the different scope user interfaces in robotic surgery. This review aims to provide an overview of user interfaces for robotically actuated camera scopes. The Results section describes the common user interfaces used by robotic systems for visualization during surgery and the different robotic surgical systems that actuate scopes. It further maps the user interfaces to the robotic systems and to the surgeries performed in different specialties. The Discussion section describes the evolution of user interfaces over time and compares the key features of different user interfaces.

Methods

The review follows the Preferred Reporting Items for Systematic Reviews and Meta-Analyses extension for Scoping Reviews (PRISMA-ScR) guidelines [15]. An extensive search of the scientific literature was conducted using the PubMed and IEEE Xplore databases to identify articles describing user interfaces for robotic scope control in surgery. The search strategy for PubMed is given in Supplementary Content 1. Additional records were identified through citation searches, websites, and patents. A total of 720 records were screened using the Rayyan app (https://www.rayyan.ai/). Articles on surgical systems using actuated scopes with user interfaces published between 1995 and 2022 were included. Duplicate reports, non-robotic passive systems, soft robots, systems not related to endoscopic or laparoscopic visualization, and papers not in English were excluded. Data extracted from the records were categorized by user interface and type of robotic system. Additional sources (such as company websites) were cited to provide the technical specifications of the robotic systems. Papers comparing different user interfaces were also identified.

Results

A total of 127 articles describing 67 different robot-assisted surgical platforms were included in the review after identification and screening (Fig. 1). The platforms were grouped by (a) the six user interfaces used to provide scope maneuvering commands (Fig. 2) and (b) six categories based on the scope actuation mechanism (Fig. 3). Various characteristics of each robotic system were also extracted, including (a) visualization type (stereo vision, high definition, camera size, resolution), (b) degree(s) of freedom (DOF), (c) manipulation type (insertion, retraction, pan, tilt, rotation), (d) actuation method (motor-driven, pneumatically driven), (e) control type (teleoperated, cooperative), (f) control interface, (g) development stage (commercial, research), (h) year, and (i) clinical application.

The primary findings are presented in the following three sections. The first describes the user interfaces for actuated scope control. The second presents robot-assisted surgical platforms grouped by scope manipulation. The third gives a more detailed account of the user interfaces used with different robot-assisted surgical platforms and in different surgeries.

User interfaces to provide scope maneuvering commands

Robotic systems improve the performance of camera scopes by filtering tremor and executing precise movements. Intuitive user interfaces have been developed to control these systems. They can be categorized by mode of input: control by foot, hand, voice, head, and eyes, and image-based tracking of surgical tools, as illustrated in Fig. 2.
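Tremor filtering and motion scaling can be pictured with a minimal sketch. It assumes a first-order low-pass filter (an exponential moving average) followed by a fixed scale factor; the function names, the coefficient `alpha`, and the scale value are illustrative assumptions rather than parameters of any system covered in this review, and commercial systems may filter quite differently.

```python
from typing import List


def low_pass(samples: List[float], alpha: float) -> List[float]:
    """First-order exponential moving average; a smaller alpha smooths more strongly."""
    if not 0.0 < alpha <= 1.0:
        raise ValueError("alpha must be in (0, 1]")
    out: List[float] = []
    state = samples[0] if samples else 0.0
    for x in samples:
        # Each output moves only a fraction alpha toward the new sample,
        # so rapid back-and-forth (tremor-like) components are attenuated.
        state = alpha * x + (1.0 - alpha) * state
        out.append(state)
    return out


def scale_motion(values: List[float], scale: float) -> List[float]:
    """Map operator motion to proportionally smaller scope motion (hypothetical scaling)."""
    return [scale * v for v in values]


if __name__ == "__main__":
    # Hypothetical input (mm): a slow drift plus an alternating +/-0.5 mm "tremor".
    raw = [i * 0.2 + (0.5 if i % 2 else -0.5) for i in range(10)]
    commands = scale_motion(low_pass(raw, alpha=0.3), scale=0.25)
    print([round(c, 3) for c in commands])
```

A lower `alpha` suppresses more tremor but also makes the scope lag further behind intentional movements.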

Foot control

Foot pedals are often used as a clutch to activate scope control through handles such as finger loops or joysticks [16]; the camera position remains fixed unless the clutch is engaged. Foot pedals may also act as an independent control, as in the consoles developed by Yang et al. [17] and Huang et al. [16], in which a novel foot interface controls the scope in four degrees of freedom (DOF). Foot control frees the hands for controlling surgical instruments. However, foot pedals may distract surgeons, who may need to look down to distinguish the correct pedal from those used to operate an electric knife or other instruments [18].
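For the independent foot control described above, one way to picture a four-DOF pedal interface is a lookup from each pedal to a (DOF, direction) pair. The pedal names, the layout, and the summing rule below are hypothetical and are not taken from the consoles of Yang et al. [17] or Huang et al. [16]; the sketch only shows how several pressed pedals could be combined into one velocity command.

```python
from dataclasses import dataclass
from typing import Dict, Set, Tuple

# Hypothetical pedal layout: each pedal drives one scope DOF in one direction.
PEDAL_MAP: Dict[str, Tuple[str, int]] = {
    "left": ("pan", -1), "right": ("pan", +1),
    "toe_up": ("tilt", +1), "heel_down": ("tilt", -1),
    "slide_fwd": ("insert", +1), "slide_back": ("insert", -1),
    "twist_cw": ("roll", +1), "twist_ccw": ("roll", -1),
}


@dataclass
class ScopeVelocity:
    pan: float = 0.0
    tilt: float = 0.0
    insert: float = 0.0
    roll: float = 0.0


def pedals_to_velocity(pressed: Set[str], speed: float = 1.0) -> ScopeVelocity:
    """Sum the contributions of all pressed pedals; opposing pedals cancel out."""
    v = ScopeVelocity()
    for pedal in pressed:
        if pedal not in PEDAL_MAP:
            raise KeyError(f"unknown pedal: {pedal}")
        dof, sign = PEDAL_MAP[pedal]
        setattr(v, dof, getattr(v, dof) + sign * speed)
    return v
```

With eight inputs for four DOF, the operator has many pedals to keep track of underfoot, which is consistent with the observation that surgeons may look down to find the right one.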

Hand control

The hand control devices adopted by commercially available systems include joysticks, buttons, finger loops, touch pads, and trackballs. These allow operating surgeons to control the visualization independently, without relying on human assistance. However, the application of this type of interface is limited because surgeons cannot operate the scope and their instruments simultaneously [16]. Surgical flow is interrupted as the operating surgeon switches between controlling the surgical instruments and the camera scope. Additionally, pain in the fingers and thumb is commonly reported during prolonged use in robotic surgery [9].

Voice control

In voice-controlled systems, the surgeon speaks commands such as “up”, “down”, “in”, and “out” to move the camera scope. Voice control mimics the default method of communication between the operating surgeon and the assistant, and it causes no physical fatigue [19]. Background noise, however, can reduce voice recognition accuracy. The need to repeat voice commands, which causes considerable delays in scope movement, makes voice control unfavorable for surgeons [20]. A typical task time of 2 s has been reported for voice control [21].
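A minimal sketch of how recognized words could be turned into discrete scope moves, assuming a speech recognizer (not shown) that returns a transcript and a confidence score. The vocabulary, the 0.8 threshold, and the function name `interpret` are assumptions for illustration only. Rejecting low-confidence utterances avoids unintended motion but forces the surgeon to repeat the command, one plausible source of the delays described above.

```python
from typing import Dict, Optional, Tuple

# Hypothetical command vocabulary: spoken word -> (axis, direction).
COMMANDS: Dict[str, Tuple[str, int]] = {
    "up": ("tilt", +1), "down": ("tilt", -1),
    "left": ("pan", -1), "right": ("pan", +1),
    "in": ("insert", +1), "out": ("insert", -1),
}


def interpret(transcript: str, confidence: float,
              min_confidence: float = 0.8) -> Optional[Tuple[str, int]]:
    """Return (axis, direction) for a recognized command, or None to ignore the utterance.

    Low-confidence or out-of-vocabulary utterances are rejected rather than guessed,
    trading occasional repetition for fewer unintended scope movements.
    """
    word = transcript.strip().lower()
    if confidence < min_confidence or word not in COMMANDS:
        return None
    return COMMANDS[word]
```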

Head control

Head motion tracking provides a non-verbal, intuitive control method that uses the surgeon’s head position as input. Recognition of facial gestures [22] and the use of head-mounted displays [23] allow smooth scope control without interrupting surgical tasks. However, intuitively controlling the depth of the endoscope with head movements can be challenging [24].
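As an illustration, head rotation relative to a neutral pose could be mapped to pan and tilt rates with a dead zone, so that small unintentional movements are ignored. The function name, dead-zone width, gain, and rate limit below are hypothetical. The sketch deliberately maps only rotations: insertion depth has no obvious head-motion counterpart, which reflects the depth-control difficulty noted above [24].

```python
import math
from typing import Tuple


def head_to_scope_rate(yaw_deg: float, pitch_deg: float,
                       deadzone_deg: float = 5.0, gain: float = 0.5,
                       max_rate: float = 10.0) -> Tuple[float, float]:
    """Map head yaw/pitch (relative to a neutral pose) to (pan, tilt) rates in deg/s."""

    def axis(angle: float) -> float:
        if abs(angle) <= deadzone_deg:
            return 0.0  # small, unintentional head movements are ignored
        excess = abs(angle) - deadzone_deg
        # Rate grows with the angle beyond the dead zone, capped at max_rate.
        return math.copysign(min(gain * excess, max_rate), angle)

    return axis(yaw_deg), axis(pitch_deg)
```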

Eye tracking

Eye tracking navigates the scope by gaze control, with gaze estimated by measuring reflections from the cornea. Although eye tracking frees the hands for surgical instruments, it can be distracting. In a study [25] reporting surgeons’ opinions on interfaces, three of five surgeons rated eye tracking unfavorably.
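One way to limit unintended gaze-driven motion is a dwell rule: the scope moves only if the gaze stays away from the center of the image for a sustained period. The sketch below assumes gaze samples already expressed in image pixel coordinates (corneal-reflection gaze estimation itself is not shown); the function name, central-region size, and dwell length are illustrative assumptions, not values from [25].

```python
from typing import List, Optional, Tuple


def gaze_command(gaze_history: List[Tuple[float, float]], width: int, height: int,
                 central_fraction: float = 0.5, dwell_samples: int = 30
                 ) -> Optional[Tuple[float, float]]:
    """Return a normalized (dx, dy) pan/tilt direction, or None if no move is warranted."""
    if len(gaze_history) < dwell_samples:
        return None
    recent = gaze_history[-dwell_samples:]
    cx, cy = width / 2, height / 2
    half_w = central_fraction * width / 2
    half_h = central_fraction * height / 2
    # Require a dwell: every recent sample must lie outside the central region.
    if any(abs(x - cx) <= half_w and abs(y - cy) <= half_h for x, y in recent):
        return None
    mx = sum(x for x, _ in recent) / dwell_samples
    my = sum(y for _, y in recent) / dwell_samples
    # Direction from the image center toward the mean gaze point, scaled to [-1, 1].
    return (mx - cx) / (width / 2), (my - cy) / (height / 2)
```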

Tool tracking

Tool tracking, when activated, uses image analysis to continuously detect the surgical instruments and adjust the scope position accordingly. Computer-based instrument tip tracking enables automatic view centering and zoom adaptation. However, surgeons may have different priorities regarding what they want to see while using instrument tracking [26]. This control can also be challenging for tasks that do not involve surgical tools.
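Automatic centering and zoom adaptation can be sketched as a simple proportional rule on detected instrument tip positions. Instrument detection is assumed to happen elsewhere (for example, in an image-analysis module that is not shown), and the function name, gain, dead band, target spread, and zoom sign convention are all hypothetical. Returning `None` when no tip is visible mirrors the limitation for tasks without surgical tools.

```python
from typing import List, Optional, Tuple

Point = Tuple[float, float]


def tracking_command(tips: List[Point], width: int, height: int, gain: float = 0.8,
                     deadband: float = 0.05,
                     target_spread: Tuple[float, float] = (0.2, 0.6)
                     ) -> Optional[Tuple[float, float, float]]:
    """Return normalized (pan, tilt, zoom) rates, or None when no instrument is visible."""
    if not tips:
        return None  # no tool in view: nothing to track, so hold the scope still
    # Centering: proportional to the offset of the tips' centroid from the image center.
    cx = sum(x for x, _ in tips) / len(tips)
    cy = sum(y for _, y in tips) / len(tips)
    ex = (cx - width / 2) / (width / 2)
    ey = (cy - height / 2) / (height / 2)
    pan = gain * ex if abs(ex) > deadband else 0.0
    tilt = gain * ey if abs(ey) > deadband else 0.0
    # Zoom: keep the horizontal spread of the tips within a target band.
    zoom = 0.0
    if len(tips) >= 2:
        xs = [x for x, _ in tips]
        spread = (max(xs) - min(xs)) / width
        low, high = target_spread
        if spread > high:
            zoom = -1.0  # tips far apart: zoom out (retract)
        elif spread < low:
            zoom = 1.0   # tips close together: zoom in (insert)
    return pan, tilt, zoom
```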

Robot-assisted surgical platforms based on scope manipulation

This section presents the robot-assisted surgical platforms that use the aforementioned user interfaces to visualize the operative field during surgery. As depicted in Fig. 3, two main categories were used: (i) robotic surgical systems (grouped by access to the surgical site: multiple port, single port, and natural orifice) and (ii) robotic scope holders (grouped by flexibility of the scope used: rigid, articulated, and flexible endoscopes).

Robotic surgical systems for multiple-port surgeries

Compared with conventional laparoscopic surgery, robotic surgery provides enhanced visualization, dexterity, and ergonomics. Systems designed for multiple-port surgery use several incisions to gain access to the target area [27]. A surgeon console, either closed or open, with controllers is used to teleoperate the robotic arm holding the camera scope. The surgeon may also switch ports during the procedure. Robotic systems for multiple-port surgery (Table 1), such as the da Vinci Xi (Intuitive Surgical Inc., USA) and Senhance (Asensus Surgical, USA), are used for a wide variety of clinical applications, including colorectal, general, gynecological, thoracic, and urological surgery [28,29,30].

Robotic surgical systems for single-port surgeries

Compared with multiple-port procedures, single-port surgery reduces invasiveness and significantly benefits patients through less scarring, shorter recovery time, and reduced postoperative pain [56]. Robotic systems developed for single-incision laparoscopic surgery, as detailed in Table 2, usually have a single arm from which multiple instruments and a visualization scope extend. The incision size depends on the system used and the procedure. Single-port surgery may be challenging for the surgeon because of poor ergonomics. To avoid collisions, distally actuated arms that achieve triangulation of the instruments around the target organ are often required [57]. As with systems for multiple-port surgery, these systems use either a closed or an open surgeon console with controllers to manipulate the robotic arm. The da Vinci SP (Intuitive Surgical Inc., USA) has US Food and Drug Administration (FDA) approval for urologic and transoral otolaryngology procedures. Other platforms under development target gynecological and general surgery applications.

Robotic surgical systems for natural orifice procedures

Further reducing surgical invasiveness, robotic systems for natural orifice procedures approach the site of interest through natural openings in the body, such as the mouth or anus [67]. This is especially beneficial when the patient has a compromised immune system. The robot consists of a highly flexible and dexterous arm that can be steered toward intricate structures. An open surgeon console or a bedside controller is used to manipulate the arm and, correspondingly, the camera. Table 3 describes robotic systems used for transoral applications, such as vocal cord lesion resection and bronchoscopy, as well as for colorectal surgery. Systems aimed at ESD in the gastrointestinal tract and at ear, nose, and throat (ENT) surgery are under development.

Robotic scope holders for rigid scopes

Minimally invasive surgery employs rigid scopes that are either zero-degree (forward-viewing) or angulated, providing a wide range of view. Robotic scope holders, which hold and maneuver rigid scopes, provide a tremor-free, stable view that is directly controlled by the operating surgeon, eliminating the need to communicate desired changes in scope position to an assistant [84]. Several holders have been developed for rigid scopes, with AESOP (Computer Motion, USA), which used hand, foot, and voice control, being one of the earliest robotic scope holders. As described in Table 4, these holders are used extensively in general, urological, gynecological, and colorectal surgery. SOLOASSIST II (AKTORmed, Germany) also has applications in transoral thyroid surgery.

Robotic scope holders for articulated scopes

Articulated scopes have a flexible distal end that improves visualization around complex anatomy and reduces the chance of interference with surgical instruments inserted through the same port. Research prototypes of scope holders described by Li et al. [121] and Huang et al. [26] target thoracic surgery applications (Table 5). These research prototypes tend to use a variety of control interfaces for scope manipulation.

Robotic scope holders for flexible endoscopes

Flexible endoscopes are highly dexterous and widely used in gastroscopy and colonoscopy, and they require more complex movements than rigid scopes [127]. Few robotic scope holders have been developed for forward-viewing flexible endoscopes (Table 6). In some of these systems, certain motions, such as rotation, are still controlled manually. Most of these scope holders are used exclusively for colonoscopy and gastroscopy. The Avicenna Roboflex (ELMED Medical Systems, Türkiye) also has applications in urology.

User interfaces used in robot-assisted surgical platforms

The robot-assisted surgical platforms presented above use different user interfaces for scope manipulation. Overall, the results presented in Fig. 4a and Table 7 suggest that robotic surgical systems predominantly use hand control interfaces, whereas robotic scope holders use, and experiment with, a wider variety of interfaces, including tool tracking. In robotic surgical systems for multiple-port, single-port, and natural orifice surgery, closed consoles require the surgeon to place their head against the stereo viewer. This limits the surgeon’s range of movement, making hand controllers appropriate for scope control. Most commercially available robotic scope holders offer a hand control interface because of its familiarity and intuitiveness, which are important when performing surgical procedures. Advantages such as user-friendliness, easy hand–eye coordination, and low cognitive load make hand control popular.

As shown in Fig. 4b and Table 8, all categories of interfaces are used in general, urological, and gynecological surgery. Otolaryngology, which focuses on the ear, nose, and throat, predominantly uses hand control and has the least variety of interfaces. Figure 5 illustrates the key surgical applications of the robotic systems and the entry port sites. About 85% of prostatectomies in the USA are performed with robot assistance [148]. Both the complexity of the procedure and the surgeon’s prior experience with related technology affect the learning curve in robotic surgery [25].

Discussion

The use of robot assistance in surgery has increased over the past decade. Early appearances of user interfaces in research and commercial robotic systems are illustrated in Fig. 6. Between 1990 and 2010, commercial systems were chiefly controlled using foot, hand, voice, and head interfaces, whereas the period 2010–2020 saw the emergence of eye-gaze and tool tracking interfaces for scope control. The AESOP and ZEUS systems (Computer Motion Inc., USA), developed during the mid to late 1990s, both used voice commands as input [32], mimicking the default communication between surgeon and assistant. Computer Motion Inc. was acquired by Intuitive Surgical, which uses hand interfaces for its da Vinci systems and has been the market leader since the early 2000s [149]. Head motion for rigid scope control was first used in EndoSista (Armstrong Healthcare, UK) during the mid-1990s [150] and was later commercialized by FreeHand Surgical (UK) in 2008. Tool tracking, as implemented in the AutoLap system (MST Medical Surgery Technologies, Israel) in 2016, has received more attention recently.

Few studies have compared different user interfaces, and those that have focus on robotic scope holders for rigid scopes. A summary of these studies is presented in Table 9, which illustrates that surgeons increasingly prefer scope control interfaces that free their hands to control surgical instruments and do not interrupt surgical tasks. Voice control has been favored for reducing operating time and improving concentration [151]. However, foot control was preferred in multiple studies. In studies [19,20,21] comparing foot and voice control, both of which keep the surgeon’s hands free, foot control was preferred because voice commands had a higher chance of misinterpretation. In addition to task completion time, Allaf et al. [19] measured operator-interface failures, defined as occasions on which the surgeon had to focus on the interface rather than on the surgical field. The protocol was repeated after two weeks to assess the percentage of improvement retained, and foot control was found to be easier to learn. In a comparison of the AESOP and ViKY systems [21], voice commands had to be repeated because of speech recognition failures. In an evaluation of RoboLens [20], voice control was affected by pronunciation. The system was assessed on procedure completion time, need for cleaning, image stability, and centering of the procedure field during several laparoscopic cholecystectomies, and a significant lag between voice command and scope movement was observed. Although foot control is preferred over voice control, eye–foot coordination may not be ideal, and surgeons often looked down to choose the right pedal from among several [151]. Tool tracking is increasingly preferred because controlling the scope does not interrupt surgery. In a study by Avellino et al. [120] comparing a hand-controlled joystick, body posture tracking, and tool tracking, surgeons evaluated the interfaces on a defined set of tasks. The joystick received good ratings but was ranked behind tool tracking, while posture tracking was found suitable for tasks requiring short-distance movements. Despite concerns about tasks that do not involve surgical instruments, tool tracking was well regarded.

Overall, actuated scopes use a variety of user interfaces, such as foot, hand, voice, head, eye, and tool tracking, to provide stable views and smooth control during minimally invasive surgery. Hand control is the most popular interface across all categories of surgical systems because it is familiar and intuitive and requires less mental load. However, various other interfaces are being investigated to address the interruption to surgical workflow caused by hand control. Head tracking interfaces are being explored in research prototypes such as the multiple-port system by Jo et al. [48]. This helps address the interruption caused by hand interfaces when control is switched between surgical instrument and scope. Breaks in surgical workflow can lead to longer operating times and an increased risk of patient injury [48]. Surgeons and gastroenterologists consider an easy-to-use, intuitive single-person interface important for scope control [152]. In teleoperated systems, where the surgeon is away from the patient, an open surgeon console is preferred. In an open console, the surgeon views the video feedback on a head-up display rather than through an enclosed stereo viewer. Compared with a closed console, an open platform offers greater situational awareness, enables the expert surgeon to mentor interns effectively, and improves team communication [153, 154]. Preferences for working position, either sitting or standing, vary among surgeons [152].

Most systems that use hand controllers (such as da Vinci by Intuitive Surgical, Revo-i by Revo Surgical Solutions, and Enos by Titan Medical) or head-motion-based controllers (such as the FreeHand system and the MTG-H100 by HIWIN) require a foot pedal to activate the scope control mechanism. In these multimodal user interfaces, the foot pedal has two functions. First, it acts as an on–off switch that triggers scope motion. For hand controllers, it lets the operator switch control from surgical instrument motion (to operate on the tissue) to scope maneuvering (to navigate the operative field). For head-motion-based controllers, the scope moves only while the foot pedal is pressed, allowing surgeons to move their head freely during the rest of the procedure [155, 156]. Second, the foot pedal acts as a clutch that facilitates ergonomic repositioning of the hand controllers or the head [157]. Head-mounted display (HMD) devices are another example of a multimodal user interface for scope control. HMDs have been used in the operating room for surgical navigation and planning [158, 159]. For actuated scope maneuvering, the HMD renders the operative field in a virtual reality or mixed reality environment, while head motions detected by the device’s sensors are used to maneuver the scope [160,161,162]. Unlike a physical screen, an HMD gives the surgeon the flexibility to place the virtual view of the operative field ergonomically anywhere in the operating room [5, 163, 164]. It reduces the surgeon’s shifts of focus between the screen and the operating site [165, 166] and may thus help reduce prolonged strain in the neck and lower back caused by poor monitor positioning [167, 168]. Further end-user clinical studies are required to assess the potential of HMD devices as a multimodal user interface, that is, to immerse the operator in information about the operative field and to evaluate control of the robotic system [169, 170].
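The two pedal functions described above (an on–off switch and a clutch) can be sketched together for a head-motion or hand controller. This is a minimal sketch under stated assumptions: the class name `PedalClutch`, the two-axis pose, and the rule that the scope follows input motion relative to the pose at the moment the pedal is pressed are all hypothetical, not the behavior of any named product. For hand controllers, releasing the pedal would additionally route input back to the instruments, which the sketch omits.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

Vec = Tuple[float, float]


@dataclass
class PedalClutch:
    """Hypothetical pedal-gated mapping from an input pose (hand or head) to the scope.

    While the pedal is held, the scope follows the input relative to the pose at the
    moment the pedal was pressed. Releasing the pedal freezes the scope, so the input
    device (or head) can be repositioned freely before the next press.
    """
    scope: Vec = (0.0, 0.0)
    _ref_input: Optional[Vec] = None  # input pose when the pedal was pressed
    _ref_scope: Vec = (0.0, 0.0)      # scope pose when the pedal was pressed

    def press(self, input_pose: Vec) -> None:
        self._ref_input = input_pose
        self._ref_scope = self.scope

    def release(self) -> None:
        self._ref_input = None

    @property
    def engaged(self) -> bool:
        return self._ref_input is not None

    def update(self, input_pose: Vec) -> Vec:
        if self._ref_input is not None:  # on-off switch: move only while engaged
            dx = input_pose[0] - self._ref_input[0]
            dy = input_pose[1] - self._ref_input[1]
            self.scope = (self._ref_scope[0] + dx, self._ref_scope[1] + dy)
        return self.scope
```

Because motion is always measured from the pose at the last press, re-engaging after repositioning does not make the scope jump, which is the clutch behavior.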

Limitations of this review include the exclusion of non-English literature, which may have limited the breadth of representation and insight. The methodological quality of the included studies was also not assessed. Additionally, no studies have compared all the different user interfaces on the same surgical task and scenario, which would have enabled an equal assessment.

In conclusion, the observations in this review indicate that integrating multiple control interfaces for camera control would be ideal, especially for scope holders used in bedside procedures. Because each interface has its own benefits, merging different control types enables the surgeon to benefit from each interface in different surgical steps [120]. The surgeon would be free to choose the appropriate control type at different stages of the surgical procedure. Integrating head tracking, which is efficient for 3D navigation, or tool tracking, which lowers cognitive load, would be advantageous. Nevertheless, merging several controls may introduce limitations such as redundancy, and achieving a seamless transition when changing interfaces may be challenging for the surgeon. Future studies should further explore the impact of different user interfaces on surgical outcomes.
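The transition problem raised in the conclusion can be made concrete with a toy arbitration rule. The sketch below is purely hypothetical and is not proposed by any of the reviewed studies: one interface "owns" the scope at a time, it keeps control while it is commanding motion, and another interface may take over only once the owner issues a zero command, with dictionary order acting as a fixed priority.

```python
from typing import Dict, Optional, Tuple

Command = Tuple[float, float, float]  # hypothetical (pan, tilt, zoom) rates
ZERO: Command = (0.0, 0.0, 0.0)


class InterfaceArbiter:
    """Hypothetical rule: one interface owns the scope at a time.

    The owner keeps control while it is commanding motion; another interface can take
    over only once the owner goes idle, so control never switches mid-movement.
    """

    def __init__(self) -> None:
        self.owner: Optional[str] = None

    def step(self, commands: Dict[str, Command]) -> Command:
        if self.owner is not None:
            current = commands.get(self.owner, ZERO)
            if current != ZERO:
                return current  # competing commands from other interfaces are ignored
        # Owner idle or absent: the first interface (in dict order) with a non-zero
        # command takes over, so dict order acts as a fixed priority.
        for name, cmd in commands.items():
            if cmd != ZERO:
                self.owner = name
                return cmd
        return ZERO
```

Even this simple rule exposes the trade-offs: ignoring a second interface while the first is active resolves redundancy but may feel unresponsive, and a fixed priority order may not match the surgeon's intent at a given surgical step.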

References

Ali JM, Lam K, Coonar AS (2018) Robotic camera assistance: the future of laparoscopic and thoracoscopic surgery? Surg Innov 25:485–491

Ishimaru T, Deie K, Sakai T, Satoh H, Nakazawa A, Harada K, Takazawa S, Fujishiro J, Sugita N, Mitsuishi M, Iwanaka T (2018) Development of a skill evaluation system for the camera assistant using an infant-sized laparoscopic box trainer. J Laparoendosc Adv Surg Tech 28:906–911

Huettl F, Lang H, Paschold M, Watzka F, Wachter N, Hensel B, Kneist W, Huber T (2020) Rating of camera navigation skills in colorectal surgery. Int J Colorectal Dis 35:1111–1115

Zhu A, Yuan C, Piao D, Jiang T, Jiang H (2013) Gravity line strategy may reduce risks of intraoperative injury during laparoscopic surgery. Surg Endosc 27:4478–4484

Ohmura Y, Suzuki H, Kotani K, Teramoto A (2019) Comparative effectiveness of human scope assistant versus robotic scope holder in laparoscopic resection for colorectal cancer. Surg Endosc 33:2206–2216

Kim JS, Park WC, Lee JH (2019) Comparison of short-term outcomes of laparoscopic-assisted colon cancer surgery using a joystick-guided endoscope holder (Soloassist II) or a human assistant. Ann Coloproctol 35:181–186

Ngu JC-Y, Teo N-Z (2021) A novel method to objectively assess robotic assistance in laparoscopic colorectal surgery. Int J Med Robot Comput Assist Surg 17:e2251

Wijsman PJM, Molenaar L, van’t Hullenaar CDP, van Vugt BST, Bleeker WA, Draaisma WA, Broeders IAMJ (2019) Ergonomics in handheld and robot-assisted camera control: a randomized controlled trial. Surg Endosc 33:3919–3925

Wee IJY, Kuo L-J, Ngu JC-Y (2020) A systematic review of the true benefit of robotic surgery: ergonomics. Int J Med Robot Comput Assist Surg 16:e2113

Ruiter JG, Bonnema GM, van der Voort MC, Broeders IAMJ (2013) Robotic control of a traditional flexible endoscope for therapy. J Robot Surg 7:227–234

Velazco-Garcia JD, Navkar NV, Balakrishnan S, Abi-Nahed J, Al-Rumaihi K, Darweesh A, Al-Ansari A, Christoforou EG, Karkoub M, Leiss EL, Tsiamyrtzis P, Tsekos NV (2021) End-user evaluation of software-generated intervention planning environment for transrectal magnetic resonance-guided prostate biopsies. Int J Med Robot 17:1–12

Rozeboom E, Ruiter J, Franken M, Broeders I (2014) Intuitive user interfaces increase efficiency in endoscope tip control. Surg Endosc 28:2600–2605

Zorn L, Nageotte F, Zanne P, Legner A, Dallemagne B, Marescaux J, de Mathelin M (2018) A novel telemanipulated robotic assistant for surgical endoscopy: preclinical application to ESD. IEEE Trans Biomed Eng 65:797–808

Chen AC, Pastis NJ Jr, Mahajan AK, Khandhar SJ, Simoff MJ, Machuzak MS, Cicenia J, Gildea TR, Silvestri GA (2021) Robotic bronchoscopy for peripheral pulmonary lesions: a multicenter pilot and feasibility study (BENEFIT). Chest 159:845–852

Tricco AC, Lillie E, Zarin W, O’Brien KK, Colquhoun H, Levac D, Moher D, Peters MDJ, Horsley T, Weeks L, Hempel S, Akl EA, Chang C, McGowan J, Stewart L, Hartling L, Aldcroft A, Wilson MG, Garritty C, Lewin S, Godfrey CM, Macdonald MT, Langlois EV, Soares-Weiser K, Moriarty J, Clifford T, Tunçalp Ö, Straus SE (2018) PRISMA extension for scoping reviews (PRISMA-ScR): checklist and explanation. Ann Intern Med 169:467–473

Huang Y, Lai W, Cao L, Liu J, Li X, Burdet E, Phee SJ (2021) A three-limb teleoperated robotic system with foot control for flexible endoscopic surgery. Ann Biomed Eng 49:2282–2296

Yang YJ, Udatha S, Kulić D, Abdi E (2020) A novel foot interface versus voice for controlling a robotic endoscope holder. In: 2020 8th IEEE RAS/EMBS International Conference for Biomedical Robotics and Biomechatronics (BioRob), pp 272–279

Berkelman P, Cinquin P, Boidard E, Troccaz J, Létoublon C, Long J-a (2005) Development and testing of a compact endoscope manipulator for minimally invasive surgery. Comput Aided Surg 10:1–13

Allaf ME, Jackman SV, Schulam PG, Cadeddu JA, Lee BR, Moore RG, Kavoussi LR (1998) Laparoscopic visual field: voice vs foot pedal interfaces for control of the AESOP robot. Surg Endosc 12:1415–1418

Mirbagheri A, Farahmand F, Meghdari A, Karimian F (2011) Design and development of an effective low-cost robotic cameraman for laparoscopic surgery: RoboLens. Sci Iran 18:105–114

Gumbs AA, Crovari F, Vidal C, Henri P, Gayet B (2007) Modified robotic lightweight endoscope (ViKY) validation in vivo in a porcine model. Surg Innov 14:261–264

Nishikawa A, Hosoi T, Koara K, Negoro D, Hikita A, Asano S, Kakutani H, Miyazaki F, Sekimoto M, Yasui M, Miyake Y, Takiguchi S, Monden M (2003) FAce MOUSe: a novel human-machine interface for controlling the position of a laparoscope. IEEE Trans Robot Autom 19:825–841

Zinchenko K, Komarov O, Song K (2017) Virtual reality control of a robotic camera holder for minimally invasive surgery. In: 2017 11th Asian Control Conference (ASCC), pp 970–975

Kuo JY, Song KT (2020) Human interface and control of a robotic endoscope holder based on an AR approach. In: 2020 International Automatic Control Conference (CACS), pp 1–6

Aaltonen IE, Wahlström M (2018) Envisioning robotic surgery: surgeons’ needs and views on interacting with future technologies and interfaces. Int J Med Robot Comput Assist Surg 14:e1941

Huang Y, Li J, Zhang X, Xie K, Li J, Liu Y, Ng CSH, Chiu PWY, Li Z (2022) A Surgeon preference-guided autonomous instrument tracking method with a robotic flexible endoscope based on dVRK platform. IEEE Robot Autom Lett 7:2250–2257

Chen Y, Zhang C, Wu Z, Zhao J, Yang B, Huang J, Luo Q, Wang L, Xu K (2021) The SHURUI system: a modular continuum surgical robotic platform for multiport, hybrid-port, and single-port procedures. IEEE/ASME Trans Mechatron 27:3186

Millan B, Nagpal S, Ding M, Lee JY, Kapoor A (2021) A scoping review of emerging and established surgical robotic platforms with applications in urologic surgery. Soc Int d’Urol J 2:300–310

Khandalavala K, Shimon T, Flores L, Armijo PR, Oleynikov D (2019) Emerging surgical robotic technology: a progression toward microbots. Ann Laparosc Endosc Surg 5:3

Peters BS, Armijo PR, Krause C, Choudhury SA, Oleynikov D (2018) Review of emerging surgical robotic technology. Surg Endosc 32:1636–1655

Schurr MO, Buess G, Neisius B, Voges U (2000) Robotics and telemanipulation technologies for endoscopic surgery. Surg Endosc 14:375–381

Kakeji Y, Konishi K, Ieiri S, Yasunaga T, Nakamoto M, Tanoue K, Baba H, Maehara Y, Hashizume M (2006) Robotic laparoscopic distal gastrectomy: a comparison of the da Vinci and Zeus systems. Int J Med Robot Comput Assist Surg 2:299–304

Da Vinci Instruments. Intuitive Surgical. https://www.intuitive.com/en-us/products-and-services/da-vinci/instruments. Accessed 25 Apr 2022

Wang Y, Li Z, Yi B, Zhu S (2022) Initial experience of Chinese surgical robot “Micro Hand S”-assisted versus open and laparoscopic total mesorectal excision for rectal cancer: short-term outcomes in a single center. Asian J Surg 45:299–306

Pappas T, Fernando A, Nathan M (2020) 1—Senhance surgical system: robotic-assisted digital laparoscopy for abdominal, pelvic, and thoracoscopic procedures. In: Abedin-Nasab MH (ed) Handbook of robotic and image-guided surgery. Elsevier, Amsterdam, pp 1–14

Koukourikis P, Rha KH (2021) Robotic surgical systems in urology: What is currently available? Investig Clin Urol 62:14–22

Kawashima K, Kanno T, Tadano K (2019) Robots in laparoscopic surgery: current and future status. BMC Biomed Eng 1:12

Lim JH, Lee WJ, Choi SH, Kang CM (2021) Cholecystectomy using the Revo-i robotic surgical system from Korea: the first clinical study. Updat Surg 73:1029–1035

Lee HK, Lee KE, Ku J, Lee KH (2021) Revo-i: the competitive Korean surgical robot. Gyne Robot Surg 2:45–52

Bitrack. Rob Surgical. https://www.robsurgical.com/bitrack/. Accessed 25 Apr 2022

avateramedical GmbH. What makes avatera so special? https://www.avatera.eu/en/avatera-system. Accessed 08 Jun 2022

Liatsikos E, Tsaturyan A, Kyriazis I, Kallidonis P, Manolopoulos D, Magoutas A (2022) Market potentials of robotic systems in medical science: analysis of the Avatera robotic system. World J Urol 40:283–289

Morton J, Hardwick RH, Tilney HS, Gudgeon AM, Jah A, Stevens L, Marecik S, Slack M (2021) Preclinical evaluation of the versius surgical system, a new robot-assisted surgical device for use in minimal access general and colorectal procedures. Surg Endosc 35:2169–2177

Kawasaki Group (2021) Flying high in achieving a medical revolution: The hinotori™ robotic-assisted surgery system. Scope, Kawasaki Heavy Industries Quarterly Newsletter 127. https://global.kawasaki.com/en/scope/pdf_e/scope127_01.pdf. Accessed 28 Apr 2022

Nature Research Custom Media, Medicaroid. A new era of robotic-assisted surgery. Springer Nature Limited. https://www.nature.com/articles/d42473-021-00164-w. Accessed 28 Apr 2022

Chassot J, Friedrich M, Schoeneich P, Salehian M (2021) Surgical robot systems comprising robotic telemanipulators and integrated laparoscopy. European Patent Office, EP3905980A2

Wessling B (2022) Distalmotion, the company behind Dexter, raises $90 million in funding. The Robot Report. https://www.therobotreport.com/distalmotion-the-company-behind-dexter-raises-90-million-in-funding/. Accessed 27 Jun 2022

Jo Y, Kim YJ, Cho M, Lee C, Kim M, Moon H-M, Kim S (2020) Virtual reality-based control of robotic endoscope in laparoscopic surgery. Int J Control Autom Syst 18:150–162

MicroPort Scientific Corporation (2020) MicroPort MedBot’s Toumai® endoscopic surgical system completes first robot-assisted extraperitoneal radical prostatectomy. https://microport.com/news/microport-medbots-toumai-endoscopic-surgical-system-completes-first-robot-assisted-extraperitoneal-radical-prostatectomy#. Accessed 21 Jul 2022

Shu Rui cracks the “Da Vinci Code”, and the localization of endoscopic surgical robots goes further. https://www.hcitinfo.com/axzwe1m00kzc.html. Accessed 28 Apr 2022

Nature Research Custom Media, Shu Rui. Getting to grips with enhanced dexterity. https://www.nature.com/articles/d42473-020-00269-8. Accessed 10 May 2022

Medtronic. Hugo™ RAS System. https://www.medtronic.com/covidien/en-gb/robotic-assisted-surgery/hugo-ras-system.html. Accessed 16 May 2022

Whooley S (2022) The road to a robot: Medtronic’s development process for its Hugo RAS system. MassDevice. https://www.massdevice.com/the-road-to-a-robot-medtronics-development-process-for-hugo-ras-system/

Digital Innovation Hub Healthcare Robotics (DIH-HERO). Surgical robot with DLR technology on the market. https://dih-hero.eu/surgical-robot-with-dlr-technology-on-the-market/. Accessed 27 Jun 2022

SS Innovations. SSI Mantra. https://ssinnovations.com/home/technology/. Accessed 21 Jul 2022

Brodie A, Vasdev N (2018) The future of robotic surgery. Ann R Coll Surg Engl 100:4–13

Omisore OM, Han S, Xiong J, Li H, Li Z, Wang L (2022) A review on flexible robotic systems for minimally invasive surgery. IEEE Trans Syst Man Cybern Syst 52:631–644

Kneist W, Stein H, Rheinwald M (2020) Da Vinci Single-Port robot-assisted transanal mesorectal excision: a promising preclinical experience. Surg Endosc 34:3232–3235

Xu K, Zhao J, Fu M (2015) Development of the SJTU unfoldable robotic system (SURS) for single port laparoscopy. IEEE/ASME Trans Mechatron 20:2133–2145

Vicarious Surgical US, Inc. Vicarious Surgical Robotic System. https://www.vicarioussurgical.com/. Accessed 26 May 2022

Sachs A, Khalifa S (2017) Virtual reality surgical device. Vicarious Surgical Inc., Cambridge, MA

Ren H, Chen CX, Cai C, Ramachandra K, Lalithkumar S (2017) Pilot study and design conceptualization for a slim single-port surgical manipulator with spring backbones and catheter-size channels. In: 2017 IEEE International Conference on Information and Automation (ICIA), pp 499–504

Li C, Gu X, Xiao X, Lim CM, Ren H (2019) A robotic system with multichannel flexible parallel manipulators for single port access surgery. IEEE Trans Ind Inf 15:1678–1687

Titan Medical Inc. Discover Enos Technology. https://titanmedicalinc.com/technology/. Accessed 24 Apr 2022

Seeliger B, Diana M, Ruurda JP, Konstantinidis KM, Marescaux J, Swanström LL (2019) Enabling single-site laparoscopy: the SPORT platform. Surg Endosc 33:3696–3703

Virtual Incision Corporation. Virtual Incision announces approval to complete clinical study enrollment for its MIRA® platform. https://virtualincision.com/approval-to-complete-clinical-study-enrollment/. Accessed 24 Apr 2022

Zhu J, Lyu L, Xu Y, Liang H, Zhang X, Ding H, Wu Z (2021) Intelligent soft surgical robots for next-generation minimally invasive surgery. Adv Intell Syst 3:2100011

Johnson PJ, Serrano CMR, Castro M, Kuenzler R, Choset H, Tully S, Duvvuri U (2013) Demonstration of transoral surgery in cadaveric specimens with the medrobotics flex system. Laryngoscope 123:1168–1172

Maloney L (2016) A twist for surgical robotics. GlobalSpec. https://insights.globalspec.com/article/3544/a-twist-for-surgical-robotics. Accessed 12 Jun 2022

Graetzel CF, Sheehy A, Noonan DP (2019) Robotic bronchoscopy drive mode of the Auris Monarch platform. In: 2019 International Conference on Robotics and Automation (ICRA), pp 3895–3901

da Veiga T, Chandler JH, Lloyd P, Pittiglio G, Wilkinson NJ, Hoshiar AK, Harris RA, Valdastri P (2020) Challenges of continuum robots in clinical context: a review. Prog Biomed Eng 2:032003

Johnson & Johnson (2022) Ethicon’s MONARCH® endoscopic robotic platform receives FDA 510(k) clearance for urology procedures. https://www.jnjmedtech.com/en-US/news-events/ethicons-monarch-endoscopic-robotic-platform-receives-fda-510k-clearance-urology. Accessed 18 May 2022

Auris Health, Inc. Monarch™ Platform user manual. https://usermanual.wiki/Auris-Surgical-Robotics/MONARCH-3852937.pdf. Accessed 12 Jun 2022

Berthet-Rayne P, Gras G, Leibrandt K, Wisanuvej P, Schmitz A, Seneci CA, Yang G-Z (2018) The i2Snake robotic platform for endoscopic surgery. Ann Biomed Eng 46:1663–1675

Caycedo A (2021) Intuitive Ion endoluminal system—a robotic-assisted endoluminal platform for minimally invasive peripheral lung biopsy. SAGES, Los Angeles

Agrawal A, Murgu S. Robot-assisted bronchoscopy. World Association for Bronchology and Interventional Pulmonology (WABIP) Newsletter 7(3). https://www.wabip.com/misc/497-tech-7-3. Accessed 24 Apr 2022

Food and Drug Administration (FDA), Department of Health and Human Services. K182188 Ion™ Endoluminal System (Model IF1000) 510(k) premarket notification. https://www.accessdata.fda.gov/scripts/cdrh/cfdocs/cfpmn/pmn.cfm?ID=K182188. Accessed 24 Apr 2022

Kume K, Sakai N, Ueda T (2019) Development of a novel gastrointestinal endoscopic robot enabling complete remote control of all operations: endoscopic therapeutic robot system (ETRS). Gastroenterol Res Pract 2019:6909547

Hwang M, Kwon D-S (2020) K-FLEX: a flexible robotic platform for scar-free endoscopic surgery. Int J Med Robot Comput Assist Surg 16:e2078

Olympus Corporation. Olympus GIF Type 2T160. http://www.olympus-ural.ru/files/GIF-2T160.pdf. Accessed 11 May 2022

Tay G, Tan H-K, Nguyen TK, Phee SJ, Iyer NG (2018) Use of the EndoMaster robot-assisted surgical system in transoral robotic surgery: a cadaveric study. Int J Med Robot Comput Assist Surg 14:e1930

Atallah S, Sanchez A, Bianchi E, Larach S (2021) Video demonstration of the ColubrisMX ELS robotic system for local excision and suture closure in a preclinical model. Tech Coloproctol 25:1333

EndoQuest Robotics. Endoluminal Robotic Surgical System. https://endoquestrobotics.com/next-generation-robotic-surgery.html. Accessed 09 Feb 2023

Li Z, Chiu PWY (2018) Robotic endoscopy. Visc Med 34:45–51

Taylor RH, Funda J, Eldridge B, Gomory S, Gruben K, LaRose D, Talamini M, Kavoussi L, Anderson J (1995) A telerobotic assistant for laparoscopic surgery. IEEE Eng Med Biol Mag 14:279–288

Schneider A, Feussner H (2017) Chapter 10—mechatronic support systems and robots. In: Schneider A, Feussner H (eds) Biomedical engineering in gastrointestinal surgery. Academic Press, Cambridge, pp 387–441

Buess GF, Arezzo A, Schurr MO, Ulmer F, Fisher H, Gumb L, Testa T, Nobman C (2000) A new remote-controlled endoscope positioning system for endoscopic solo surgery. Surg Endosc 14:395–399

Polet R, Donnez J (2004) Gynecologic laparoscopic surgery with a palm-controlled laparoscope holder. J Am Assoc Gynecol Laparosc 11:73–78

Polet R, Donnez J (2008) Using a laparoscope manipulator (LAPMAN) in laparoscopic gynecological surgery. Surg Technol Int 17:187–191

Pisla D, Gherman BG, Suciu M, Vaida C, Lese D, Sabou C, Plitea N (2010) On the dynamics of a 5 DOF parallel hybrid robot used in minimally invasive surgery. In: Pisla D, Ceccarelli M, Husty M, Corves B (eds) New trends in mechanism science. Springer, Dordrecht, pp 691–699

Yamada K, Kato S (2008) Robot-assisted thoracoscopic lung resection aimed at solo surgery for primary lung cancer. Gen Thorac Cardiovasc Surg 56:292–294

Takahashi M, Takahashi M, Nishinari N, Matsuya H, Tosha T, Minagawa Y, Shimooki O, Abe T (2017) Clinical evaluation of complete solo surgery with the “ViKY®” robotic laparoscope manipulator. Surg Endosc 31:981–986

Gossot D, Grigoroiu M, Brian E, Seguin-Givelet A (2017) Technical means to improve image quality during thoracoscopic procedures. J Vis Surg 3:53

Voros S, Haber GP, Menudet JF, Long JA, Cinquin P (2010) ViKY robotic scope holder: initial clinical experience and preliminary results using instrument tracking. IEEE/ASME Trans Mechatron 15:879–886

Gossot D, Abid W, Seguin-Givelet A (2018) Motorized scope positioner for solo thoracoscopic surgery. Video-Assist Thorac Surg 3:47

FreeHand Surgical. FreeHand. https://www.freehandsurgeon.com/. Accessed 28 Apr 2022

Herman B, Dehez B, Duy KT, Raucent B, Dombre E, Krut S (2009) Design and preliminary in vivo validation of a robotic laparoscope holder for minimally invasive surgery. Int J Med Robot Comput Assist Surg 5:319–326

Trévillot V, Sobral R, Dombre E, Poignet P, Herman B, Crampette L (2013) Innovative endoscopic sino-nasal and anterior skull base robotics. Int J Comput Assist Radiol Surg 8:977–987

Sina Robotics & Medical Innovators Co., Ltd. RoboLens: Laparoscopic Surgery Assistant Robot (Standalone model). https://sinamed.ir/robotic-tele-surgery/robolens-stand-alone-model/. Accessed 16 Jun 2022

Shervin T, Haydeh S, Atousa J, Zahra A, Alireza M, Ali J, Faramarz K, Farzam F (2014) Comparing the operational related outcomes of a robotic camera holder and its human counterpart in laparoscopic ovarian cystectomy: a randomized control trial. Front Biomed Technol 1:48

Alireza M, Farzam F, Borna G, Keyvan Amini K, Sina P, Mohammad Javad S, Mohammad Hasan O, Faramarz K, Karamallah T (2015) Operation and human clinical trials of RoboLens: an assistant robot for laparoscopic surgery. Front Biomed Technol 2:184

Wijsman PJM, Broeders IAMJ, Brenkman HJ, Szold A, Forgione A, Schreuder HWR, Consten ECJ, Draaisma WA, Verheijen PM, Ruurda JP, Kaufman Y (2018) First experience with THE AUTOLAP™ SYSTEM: an image-based robotic camera steering device. Surg Endosc 32:2560–2566

Wijsman PJM, Voskens FJ, Molenaar L, van’t Hullenaar CDP, Consten ECJ, Draaisma WA, Broeders IAMJ (2022) Efficiency in image-guided robotic and conventional camera steering: a prospective randomized controlled trial. Surg Endosc 36:2334–2340

Riverfield Inc. EMARO Pneumatic Endoscope Manipulator Robot. https://www.riverfieldinc.com/en/products/emaro/. Accessed 28 Apr 2022

Tadano K, Kawashima K (2015) A pneumatic laparoscope holder controlled by head movement. Int J Med Robot Comput Assist Surg 11:331–340

Yoshida D, Maruyama S, Takahashi I, Matsukuma A, Kohnoe S (2020) Surgical experience of using the endoscope manipulator robot EMARO in totally extraperitoneal inguinal hernia repair: a case report. Asian J Endosc Surg 13:448–452

HIWIN Technologies Corp. Medical Equipments. https://www.hiwin.tw/download/tech_doc/me/Medical_Equipment(E).pdf. Accessed 11 May 2022

Zinchenko K, Wu C, Song K (2017) A study on speech recognition control for a surgical robot. IEEE Trans Ind Inf 13:607–615

Friedrich DT, Sommer F, Scheithauer MO, Greve J, Hoffmann TK, Schuler PJ (2017) An innovate robotic endoscope guidance system for transnasal sinus and skull base surgery: proof of concept. J Neurol Surg B Skull Base 78:466–472

Aesculap AG. EinsteinVision® Aesculap® 3D Laparoscopy. https://www.bbraun.dk/content/dam/catalog/bbraun/bbraunProductCatalog/CW_DK/da-dk/b5/einsteinvision-3dlaparoscopy.pdf. Accessed 15 Jun 2022

Beckmeier L, Klapdor R, Soergel P, Kundu S, Hillemanns P, Hertel H (2014) Evaluation of active camera control systems in gynecological surgery: construction, handling, comfort, surgeries and results. Arch Gynecol Obstet 289:341–348

AKTORmed GmbH. SOLOASSIST II. https://aktormed.info/en/products/soloassist-ii. Accessed 27 Apr 2022

Kristin J, Kolmer A, Kraus P, Geiger R, Klenzner T (2015) Development of a new endoscope holder for head and neck surgery—from the technical design concept to implementation. Eur Arch Otorhinolaryngol 272:1239–1244

Kristin J, Geiger R, Kraus P, Klenzner T (2015) Assessment of the endoscopic range of motion for head and neck surgery using the SOLOASSIST endoscope holder. Int J Med Robot Comput Assist Surg 11:418–423

Park J-O, Kim M, Park Y, Kim M-S, Sun D-I (2020) Transoral endoscopic thyroid surgery using robotic scope holder: our initial experiences. J Minimal Access Surg 16:235–238

Zimmer Biomet. ROSA ONE® Brain: robotic neurosurgery. https://www.zimmerbiomet.com/en/products-and-solutions/zb-edge/robotics/rosa-brain.html. Accessed 06 Jul 2022

De Pauw T, Kalmar A, Van De Putte D, Mabilde C, Blanckaert B, Maene L, Lievens M, Van Haver A-S, Bauwens K, Van Nieuwenhove Y, Dewaele F (2020) A novel hybrid 3D endoscope zooming and repositioning system: design and feasibility study. Int J Med Robot Comput Assist Surg 16:e2050

Zhong F, Li P, Shi J, Wang Z, Wu J, Chan JYK, Leung N, Leung I, Tong MCF, Liu YH (2020) Foot-controlled robot-enabled EnDOscope manipulator (FREEDOM) for sinus surgery: design, control, and evaluation. IEEE Trans Biomed Eng 67:1530–1541

Chan JYK, Leung I, Navarro-Alarcon D, Lin W, Li P, Lee DLY, Liu Y-h, Tong MCF (2016) Foot-controlled robotic-enabled endoscope holder for endoscopic sinus surgery: a cadaveric feasibility study. Laryngoscope 126:566–569

Avellino I, Bailly G, Arico M, Morel G, Canlorbe G (2020) Multimodal and mixed control of robotic endoscopes. In: Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems, Association for Computing Machinery, pp 1–14

Li Z, Zin Oo M, Nalam V, Duc Thang V, Ren H, Kofidis T, Yu H (2016) Design of a novel flexible endoscope—cardioscope. J Mech Robot. https://doi.org/10.1115/1.4032272

Li Z, Ng CSH (2016) Future of uniportal video-assisted thoracoscopic surgery—emerging technology. Ann Cardiothorac Surg 5:127–132

Omori T, Arai M, Moromugi S (2021) A prototype of a head-mounted input device for robotic laparoscope holders using lower jaw exercises as command signals detected by a photoreflector array. In: 2021 IEEE 30th International Symposium on Industrial Electronics (ISIE), pp 1–6

Arai M, Omori T, Moromugi S, Adachi T, Kosaka T, Ono S, Eguchi S (2019) A robotic laparoscope holder operated by jaw movements and triaxial head rotations. In: 2019 IEEE International Symposium on Measurement and Control in Robotics (ISMCR), pp A1–5–1-A1–5–6

Legrand J, Ourak M, Van Gerven L, Vander Poorten V, Vander Poorten E (2022) A miniature robotic steerable endoscope for maxillary sinus surgery called PliENT. Sci Rep 12:2299

Ma X, Song C, Qian L, Liu W, Chiu PW, Li Z (2022) Augmented reality-assisted autonomous view adjustment of a 6-DOF robotic stereo flexible endoscope. IEEE Trans Med Robot Bionics 4:356–367

Iwasa T, Nakadate R, Onogi S, Okamoto Y, Arata J, Oguri S, Ogino H, Ihara E, Ohuchida K, Akahoshi T, Ikeda T, Ogawa Y, Hashizume M (2018) A new robotic-assisted flexible endoscope with single-hand control: endoscopic submucosal dissection in the ex vivo porcine stomach. Surg Endosc 32:3386–3392

Eickhoff A, Van Dam J, Jakobs R, Kudis V, Hartmann D, Damian U, Weickert U, Schilling D, Riemann JF (2007) Computer-assisted colonoscopy (the neoguide endoscopy system): results of the first human clinical trial (“pace study”). Am J Gastroenterol 102:261–266

Food and Drug Administration (FDA), Department of Health and Human Services (2017) K162330 Flex Robotic System and Flex Colorectal Drive. https://www.accessdata.fda.gov/cdrh_docs/pdf16/K162330.pdf. Accessed 13 Jun 2022

Sekhon Inderjit Singh HK, Armstrong ER, Shah S, Mirnezami R (2021) Application of robotic technologies in lower gastrointestinal tract endoscopy: a systematic review. World J Gastrointest Endosc 13:673–697

Food and Drug Administration (FDA), Department of Health and Human Services (2017) K070622 NeoGuide Endoscopy System, special 510(K) device modifications summary. https://www.accessdata.fda.gov/cdrh_docs/pdf7/K070622.pdf. Accessed 28 Jun 2022

Era Endoscopy SRL. Endotics System. http://www.endotics.com/index.php. Accessed 13 Jun 2022

Cosentino F, Tumino E, Passoni GR, Morandi E, Capria A (2009) Functional Evaluation of the endotics system, a new disposable self-propelled robotic colonoscope: in vitro tests and clinical trial. Int J Artif Organs 32:517–527

ECE Medical Systems. endodrive®. http://www.endodrive.de/. Accessed 27 Apr 2022

Lim SG (2020) The development of robotic flexible endoscopic platforms. Int J Gastrointest Interv 9:9–12

Rassweiler J, Fiedler M, Charalampogiannis N, Kabakci AS, Saglam R, Klein J-T (2018) Robot-assisted flexible ureteroscopy: an update. Urolithiasis 46:69–77

ELMED Medical Systems. Avicenna Roboflex. https://elmed-as.com/products/avicenna-roboflex/. Accessed 19 Jun 2022

Reilink R, de Bruin G, Franken M, Mariani MA, Misra S, Stramigioli S (2010) Endoscopic camera control by head movements for thoracic surgery. In: 2010 3rd IEEE RAS & EMBS International Conference on Biomedical Robotics and Biomechatronics, pp 510–515

GI View. Aer-O-Scope GI Endoscopic System. https://www.giview.com/aer-o-scope. Accessed 12 Jun 2022

Vucelic B, Rex D, Pulanic R, Pfefer J, Hrstic I, Levin B, Halpern Z, Arber N (2006) The Aer-O-Scope: proof of concept of a pneumatic, skill-independent, self-propelling, self-navigating colonoscope. Gastroenterology 130:672–677

Food and Drug Administration (FDA), Department of Health and Human Services (2016) K161791 Aer-O-Scope Colonoscope System. https://www.accessdata.fda.gov/cdrh_docs/pdf16/K161791.pdf. Accessed 28 Jun 2022

Groth S, Rex DK, Rösch T, Hoepffner N (2011) High cecal intubation rates with a new computer-assisted colonoscope: a feasibility study. Am J Gastroenterol 106:1075–1080

Food and Drug Administration (FDA), Department of Health and Human Services (2016) K161355 invendoscopy E200 System. https://www.accessdata.fda.gov/cdrh_docs/pdf16/K161355.pdf. Accessed 28 Jun 2022

Li Y, Liu H, Hao S, Li H, Han J, Yang Y (2017) Design and control of a novel gastroscope intervention mechanism with circumferentially pneumatic-driven clamping function. Int J Med Robot Comput Assist Surg 13:e1745

Kume K, Sakai N, Goto T (2018) Haptic feedback is useful in remote manipulation of flexible endoscopes. Endosc Int Open 6:E1134–E1139

Kume K, Sakai N, Goto T (2015) Development of a novel endoscopic manipulation system: the Endoscopic Operation Robot ver.3. Endoscopy 47:815–819

Sivananthan A, Kogkas A, Glover B, Darzi A, Mylonas G, Patel N (2021) A novel gaze-controlled flexible robotized endoscope; preliminary trial and report. Surg Endosc 35:4890–4899

Han J, Davids J, Ashrafian H, Darzi A, Elson DS, Sodergren M (2022) A systematic review of robotic surgery: from supervised paradigms to fully autonomous robotic approaches. Int J Med Robot Comput Assist Surg 18:e2358

Takács Á, Nagy D, Rudas I, Haidegger T (2016) Origins of surgical robotics: From space to the operating room. Acta Polytech Hung 13:13–30

Finlay PA, Ornstein MH (1995) Controlling the movement of a surgical laparoscope. IEEE Eng Med Biol Mag 14:289–291

Mettler L, Ibrahim M, Jonat W (1998) One year of experience working with the aid of a robotic assistant (the voice-controlled optic holder AESOP) in gynaecological endoscopic surgery. Hum Reprod 13:2748–2750

Kranzfelder M, Schneider A, Fiolka A, Koller S, Wilhelm D, Reiser S, Meining A, Feussner H (2014) What do we really need? Visions of an ideal human-machine interface for NOTES mechatronic support systems from the view of surgeons, gastroenterologists, and medical engineers. Surg Innov 22:432–440

Avellino I, Bailly G, Canlorbe G, Belgihti J, Morel G, Vitrani M-A (2019) Impacts of telemanipulation in robotic assisted surgery. In: Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems, Association for Computing Machinery, Glasgow, Scotland UK, pp 583

Hares L, Roberts P, Marshall K, Slack M (2019) Using end-user feedback to optimize the design of the Versius Surgical System, a new robot-assisted device for use in minimal access surgery. BMJ Surg Interv Health Technol 1:e000019

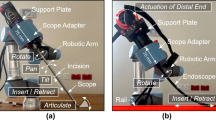

Khorasani M, Abdurahiman N, Padhan J, Zhao H, Al-Ansari A, Becker AT, Navkar N (2022) Preliminary design and evaluation of a generic surgical scope adapter. Int J Med Robot. https://doi.org/10.1002/rcs.2475

Abdurahiman N, Khorasani M, Padhan J, Baez VM, Al-Ansari A, Tsiamyrtzis P, Becker AT, Navkar NV (2023) Scope actuation system for articulated laparoscopes. Surg Endosc. https://doi.org/10.1007/s00464-023-09904-z

Abdurahiman N, Padhan J, Zhao H, Balakrishnan S, Al-Ansari A, Abinahed J, Velasquez CA, Becker AT, Navkar NV (2022) Human-computer interfacing for control of angulated scopes in robotic scope assistant systems. In: 2022 IEEE International Symposium on Medical Robotics (ISMR), pp 1–7

Velazco-Garcia JD, Navkar NV, Balakrishnan S, Younes G, Abi-Nahed J, Al-Rumaihi K, Darweesh A, Elakkad MSM, Al-Ansari A, Christoforou EG, Karkoub M, Leiss EL, Tsiamyrtzis P, Tsekos NV (2021) Evaluation of how users interface with holographic augmented reality surgical scenes: interactive planning MR-Guided prostate biopsies. Int J Med Robot 17:e2290

Mojica CMM, Garcia JDV, Navkar NV, Balakrishnan S, Abinahed J, El Ansari W, Al-Rumaihi K, Darweesh A, Al-Ansari A, Gharib M, Karkoub M, Leiss EL, Seimenis I, Tsekos NV (2018) A prototype holographic augmented reality interface for image-guided prostate cancer interventions. In: Eurographics Workshop on Visual Computing for Biology and Medicine, pp 17–21

Hong N, Kim M, Lee C, Kim S (2019) Head-mounted interface for intuitive vision control and continuous surgical operation in a surgical robot system. Med Biol Eng Comput 57:601–614

Qian L, Song C, Jiang Y, Luo Q, Ma X, Chiu PW, Li Z, Kazanzides P (2020) FlexiVision: teleporting the surgeon’s eyes via robotic flexible endoscope and head-mounted display. In: 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), pp 3281–3287

Mak YX, Zegel M, Abayazid M, Mariani MA, Stramigioli S (2022) Experimental evaluation using head motion and augmented reality to intuitively control a flexible endoscope. In: 2022 9th IEEE RAS/EMBS International Conference for Biomedical Robotics and Biomechatronics (BioRob), pp 1–7

Zorzal ER, Gomes JMC, Sousa M, Belchior P, da Silva PG, Figueiredo N, Lopes DS, Jorge J (2020) Laparoscopy with augmented reality adaptations. J Biomed Inform 107:103463

Jayender J, Xavier B, King F, Hosny A, Black D, Pieper S, Tavakkoli A (2018) A novel mixed reality navigation system for laparoscopy surgery. Springer, Berlin, pp 72–80

Park A, Lee G, Seagull FJ, Meenaghan N, Dexter D (2010) Patients benefit while surgeons suffer: an impending epidemic. J Am Coll Surg 210:306–313

Monfared S, Athanasiadis DI, Umana L, Hernandez E, Asadi H, Colgate CL, Yu D, Stefanidis D (2022) A comparison of laparoscopic and robotic ergonomic risk. Surg Endosc 36:8397–8402

Sari V, Nieboer TE, Vierhout ME, Stegeman DF, Kluivers KB (2010) The operation room as a hostile environment for surgeons: physical complaints during and after laparoscopy. Minim Invasive Ther Allied Technol 19:105–109

Catanzarite T, Tan-Kim J, Whitcomb EL, Menefee S (2018) Ergonomics in surgery: a review. Female Pelvic Med Reconstr Surg 24:1–12

Velazco-Garcia JD, Navkar NV, Balakrishnan S, Abinahed J, Al-Ansari A, Darweesh A, Al-Rumaihi K, Christoforou E, Leiss EL, Karkoub M, Tsiamyrtzis P, Tsekos NV (2020) Evaluation of interventional planning software features for MR-guided transrectal prostate biopsies. In: 2020 IEEE 20th International Conference on Bioinformatics and Bioengineering (BIBE), pp 951–954

Velazco-Garcia JD, Navkar NV, Balakrishnan S, Abinahed J, Al-Ansari A, Younes G, Darweesh A, Al-Rumaihi K, Christoforou EG, Leiss EL, Karkoub M, Tsiamyrtzis P, Tsekos NV (2019) Preliminary evaluation of robotic transrectal biopsy system on an interventional planning software. In: 2019 IEEE 19th International Conference on Bioinformatics and Bioengineering (BIBE), pp 357–362

Acknowledgements

This work was supported by NPRP award (NPRP13S-0116-200084) from the Qatar National Research Fund (a member of The Qatar Foundation) and IRGC-04-JI-17-138 award from Medical Research Center (MRC) at Hamad Medical Corporation (HMC). All opinions, findings, conclusions, or recommendations expressed in this work are those of the authors and do not necessarily reflect the views of our sponsors.

Funding

This work was supported by NPRP award (NPRP13S-0116-200084) from the Qatar National Research Fund (a member of The Qatar Foundation) and IRGC-04-JI-17-138 award from Medical Research Center (MRC) at Hamad Medical Corporation (HMC). Open Access funding provided by the Qatar National Library. All opinions, findings, conclusions, or recommendations expressed in this work are those of the authors and do not necessarily reflect the views of our sponsors.

Ethics declarations

Disclosures

The authors of this submission, Hawa Hamza, Victor M. Baez, Abdulla Al-Ansari, Aaron T. Becker, and Nikhil V. Navkar, have no conflict of interest or financial ties to disclose.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Hamza, H., Baez, V.M., Al-Ansari, A. et al. User interfaces for actuated scope maneuvering in surgical systems: a scoping review. Surg Endosc 37, 4193–4223 (2023). https://doi.org/10.1007/s00464-023-09981-0
