Abstract

Neuroimaging for dementia has made remarkable progress in recent years, shedding light on diagnostic subtypes of dementia, predicting prognosis and monitoring pathology. This review covers some updates in the understanding of dementia using structural imaging, positron emission tomography (PET), structural and functional connectivity, and using big data and artificial intelligence. Progress with neuroimaging methods allows neuropathology to be examined in vivo, providing a suite of biomarkers for understanding neurodegeneration and for application in clinical trials. In addition, we highlight quantitative susceptibility imaging as an exciting new technique that may prove to be a sensitive biomarker for a range of neurodegenerative diseases. There are challenges in translating novel imaging techniques to clinical practice, particularly in developing standard methodologies and overcoming regulatory issues. It is likely that clinicians will need to lead the way if these obstacles are to be overcome. Continued efforts applying neuroimaging to understand mechanisms of neurodegeneration and translating them to clinical practice will complete a revolution in neuroimaging.

Introduction

Brain imaging in dementia is undergoing a revolution that is transforming neuroimaging research from merely describing changes in the brain to understanding what those changes mean. This revolution has been driven primarily by a need for biomarkers to evaluate potential disease modifying treatments, leading to a better understanding of the association between neuroimaging changes and underlying pathology. The result has been a suite of neuroimaging methods and analytics that help with:

- identifying diagnostic subtypes
- predicting prognosis
- monitoring pathology in vivo.

The benefits of using neuroimaging in this way may find their way to memory clinics in the near future. Neuroimaging for the clinical diagnosis of dementia has traditionally been used to rule out alternative causes of cognitive impairment. Times are changing: nearly all the diagnostic criteria for neurodegenerative diseases now include neuroimaging as a supportive criterion, and in some cases, such as Frontotemporal Dementia [1], imaging changes are part of the core criteria. However, these criteria remain vague on the specific sequences or measures required to support a diagnosis, usually specifying only ‘atrophy’ in a region of interest. As automation and quantification become more prevalent in the evaluation of neuroimaging, it is likely that future criteria will become more specific about the extent of change that suggests a specific diagnosis and the type of neuroimaging required as evidence.

In this review, we discuss a few of the most significant recent advances in neuroimaging, what they mean for our understanding of dementia, and their potential relevance for clinical practice. Due to space constraints we cannot cover the whole field. Topics we omit include the use of neurophysiology with MEG [2], the application of arterial spin labelling as a promising disease measure [3, 4], and insightful new approaches using combinations of imaging modalities to investigate disease aetiology [5].

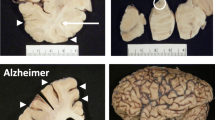

Structural imaging

Since seminal studies of progressive hippocampal atrophy in Alzheimer’s disease in the 1990s [6], structural MRI has been the workhorse of neuroimaging in dementia. Structural imaging has revealed atrophy decades prior to the onset of symptoms in cohorts of people with Mendelian forms of Alzheimer’s Disease [7] and Frontotemporal Dementia [8].

Changes in brain structure continue to provide insight, particularly on the subtypes of disease. For example, distinct atrophy patterns have been identified that are associated with different rates of disease progression in Alzheimer’s disease and Frontotemporal Dementia [9]. The SuStaIn model used in this work adds to an understanding of clinical heterogeneity in disease progression by associating differential rates of change over time with specific patterns of atrophy.

Despite its long history, structural MRI is not currently recommended for the early diagnosis of Alzheimer’s disease in people with mild cognitive impairment (MCI). A recent Cochrane review of 33 studies found a high false negative rate of 27% and a false positive rate of 29% [10]. Most of the data came from studies looking solely at the hippocampus, and overall the data were sparse, with insufficient evidence to establish whether, for example, total brain volume could better differentiate between MCI and Alzheimer’s disease.
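To make these error rates concrete, they can be converted into sensitivity, specificity and predictive values. The sketch below is illustrative only: the function name `predictive_values` is hypothetical, and the 30% proportion of people with MCI who have underlying Alzheimer’s disease is an assumed value chosen for the example, not a figure taken from the Cochrane review.

```python
def predictive_values(false_negative_rate, false_positive_rate, prevalence):
    """Convert error rates into sensitivity, specificity, PPV and NPV.

    prevalence is the assumed proportion of the tested group who truly
    have the condition (an illustrative input, not a reported value).
    """
    sensitivity = 1.0 - false_negative_rate
    specificity = 1.0 - false_positive_rate

    # Expected proportions of the tested group in each outcome cell.
    true_pos = sensitivity * prevalence
    false_neg = false_negative_rate * prevalence
    false_pos = false_positive_rate * (1.0 - prevalence)
    true_neg = specificity * (1.0 - prevalence)

    return {
        "sensitivity": sensitivity,
        "specificity": specificity,
        "ppv": true_pos / (true_pos + false_pos),  # P(disease | positive scan)
        "npv": true_neg / (true_neg + false_neg),  # P(no disease | negative scan)
    }


# Error rates quoted in the text; the prevalence of 0.30 is an assumption.
result = predictive_values(0.27, 0.29, prevalence=0.30)
for name, value in result.items():
    print(f"{name}: {value:.2f}")
```

Under this assumed prevalence, only about half of positive scans would correspond to true disease (a positive predictive value of roughly 0.52), which illustrates why these error rates are considered too high for structural MRI to be relied on alone.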

Although this seems a disappointing finding, there may yet be more sensitive analytical tools or mathematical approaches using machine learning that can pick up patterns of structural change that may prove useful as a diagnostic biomarker [11].

An alternative approach to identifying a more sensitive imaging biomarker is to use a more powerful scanner, such as 7 T MRI. The “Tesla” refers to the strength of the magnetic field of an MRI scanner, with 1.5 T the one most often used in clinical practice, and 3 T MRI scanners being used routinely for research at academic centres. Although 7 T scanners are less common, shared protocols, such as those published by the UK7T network [12], are helping to facilitate data-sharing and build larger cohorts across 7 T centres. The stronger magnetic field is particularly useful in identifying vascular abnormalities, including cerebral microbleeds, which were the focus of much of the early work with 7 T MRI in neurodegeneration [13, 14]. One study found that 78% of people with early Alzheimer’s disease/MCI had cerebral microbleeds [15], presumably relating to some form of amyloid angiopathy. These findings strengthen the argument for an early and important role for vascular abnormalities in Alzheimer’s disease.

The increased imaging power offered by 7 T MRI also allows for better resolution of small structures in the brain, including subfields of the hippocampus. Analysis of the hippocampus in this way has suggested that the pre-subiculum is the earliest subfield to be involved in Alzheimer’s disease [16], and that atrophy is greatest in the pre-subiculum and subiculum in this condition, a finding that is supported by other imaging approaches as well as pathological studies [17, 18]. This level of resolution is now getting to a point where we may be able to image pathology in vivo, a claim strengthened by the possibility of identifying cortical layers in vivo using 7 T MRI [19].

Other small structures relevant to less common neurodegenerative diseases are now also amenable to measurement using 7 T MRI, for example the locus coeruleus in progressive supranuclear palsy [20]. An atlas of the locus coeruleus in an older population is freely available for this purpose (https://www.nitrc.org/projects/lc_7t_prob/).

High-field MRI is therefore playing an increasing role in evaluating structural brain changes at ever-increasing levels of resolution, now approaching the level needed to delineate pathology in situ.

Positron emission tomography (PET)

The pathology of dementia in vivo promises to be revealed by the fastest growing player in dementia imaging: Positron Emission Tomography (PET). PET ligands for beta-amyloid are now well established [21], though their use in elderly populations is limited given the high rates of false positives; in an Australian cohort of cognitively normal people the proportion of positive beta-amyloid scans was 18% at 60–69, rising to 65% over the age of 80 [22]. To determine the clinical value of beta-amyloid PET, a real world study of 11,409 participants in the US aims to assess its utility in memory clinics (https://www.ideas-study.org/). The study is ongoing, but initial results demonstrate that an amyloid PET scan led to a change in patient management in 60.2% of people with MCI and 63.5% of people with dementia, with approximately three quarters of the change being the commencement of a drug for Alzheimer’s disease [23]. It is hard to know whether this is encouraging, in that a potential biomarker has had such a large impact, or concerning, given that the high false positive rate could lead to over-diagnosis and unnecessary treatment. In this respect, the planned follow-up studies, which will assess whether this widespread use of amyloid PET and the associated change in clinical practice lead to a beneficial clinical outcome, are much anticipated.

Ligands targeting proteins other than beta-amyloid have also been developed, with tau being the most advanced. First-generation ligands such as AV-1451 [24] are particularly useful in Alzheimer’s disease, which is associated with abnormally hyperphosphorylated and misfolded tau that contains both 3 and 4 repeats of exon 10 of the MAPT gene, so-called 3R/4R tau. Other tauopathies such as progressive supranuclear palsy (4R tau), corticobasal degeneration (4R tau), frontotemporal dementia (3R tau) and chronic traumatic encephalopathy (3R/4R tau) are characterised by different and distinct types and conformations of abnormally folded tau [25, 26]. The tau PET ligands bind less avidly to these alternative forms of tau [27]. Furthermore, there are issues of off-target binding: for example, AV-1451 binds to the TDP-43 protein found in Semantic Dementia, motor neurone disease and a proportion of people with frontotemporal dementia [28, 29], and to monoamine oxidase in the basal ganglia [30, 31]. Second generation tau ligands are emerging with less off-target binding, but none have yet demonstrated good affinity for non-Alzheimer tauopathies [32]. Despite their limitations, the first-generation tau ligands do show changes in expected brain regions in tauopathies including progressive supranuclear palsy [33] and apraxia of speech (a subtype of non-fluent variant primary progressive aphasia) [34]. Therefore, at the present time, tau PET is most relevant for Alzheimer’s disease as a potential diagnostic biomarker but may be useful for tracking changes in pathology for both Alzheimer’s disease and other tauopathies.

Other PET ligands target potentially important disease mechanisms. Inflammation is a very active research topic in dementia at the present time, in particular the role of microglia and their function in driving the disease state [35]. Activated microglia express the protein TSPO that has been a target for PET ligands [36]. Applying the first generation of these ligands has suggested an early role for inflammation in a number of neurodegenerative diseases, including dementia with Lewy bodies [37], Alzheimer’s disease [38, 39], corticobasal syndrome [40], progressive supranuclear palsy [41] and frontotemporal dementia [42]. The second generation ligands are more specific, but limited by the fact that approximately 30% of the population has a genetic variation meaning the ligand will not bind to TSPO [43].

Other ligands have emerged as possibly being useful in dementia states, for example UCB-J to measure synaptic density [44]. The UCB-J ligand has been looked at in Alzheimer’s disease [45] and revealed reduced synaptic density in the hippocampus that was correlated with episodic memory, although this study only used a group of 10 people.

Perhaps surprisingly in this field, there has not yet been a successful ligand to target alpha-synuclein as found in Parkinson’s Disease, Dementia with Lewy Bodies and Multiple System Atrophy, although efforts are ongoing [46, 47].

PET will continue to add to our knowledge of human in vivo neurodegeneration as ligands improve and the range of targeted ligands broadens. The relationship of PET to cognition and neuropathology needs to be clarified further, but one imagines this field will mature very quickly. It is likely to provide a source of biomarkers for trials of disease modifying treatments.

Despite revealing pathology, PET does not necessarily explain why specific brain regions are affected or how the brain compensates for the presence of pathology, something on which connectivity analysis studies promise to shed light.

Structural and functional connectivity

The field of connectivity uses neuroimaging to examine connections between brain regions: either functional connections [48, 49], assessed from time series data (functional MRI, EEG, MEG), or structural connections, assessed with measures such as diffusion tensor imaging and cortical thickness. The importance of brain networks was highlighted by pioneering work demonstrating that brain networks are a template for atrophy in various neurodegenerative diseases [50, 51]; for example, the default mode network is associated with Alzheimer’s disease [52] and the salience network with frontotemporal dementia [53].
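As a minimal sketch of how functional connections can be derived from time series data, the example below computes the Pearson correlation between every pair of regional signals. Pearson correlation is only one commonly used measure and is an assumption here; the cited studies may use other metrics and extensive preprocessing. The region names and signal values are invented for illustration.

```python
import math


def pearson(x, y):
    """Pearson correlation between two equal-length time series."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sd_x = math.sqrt(sum((a - mean_x) ** 2 for a in x))
    sd_y = math.sqrt(sum((b - mean_y) ** 2 for b in y))
    return cov / (sd_x * sd_y)


def connectivity_matrix(series):
    """Return a nested dict of pairwise correlations between regions."""
    names = list(series)
    return {a: {b: pearson(series[a], series[b]) for b in names} for a in names}


# Toy regional signals (hypothetical values, not real imaging data).
series = {
    "region_A": [1.0, 2.0, 3.0, 4.0, 5.0],
    "region_B": [2.0, 4.0, 6.0, 8.0, 10.0],  # rises and falls with region_A
    "region_C": [5.0, 4.0, 3.0, 2.0, 1.0],   # moves opposite to region_A
}

fc = connectivity_matrix(series)
for a in fc:
    print(a, [round(fc[a][b], 2) for b in fc])
```

In this toy case region_A and region_B are perfectly correlated and region_A and region_C are perfectly anti-correlated. With real data, the resulting matrix is typically treated as a weighted network in which regions are nodes and correlations are edges.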

It has been tempting to link these networks to the theory of protein templating and spread of pathological proteins through connected brain regions in a prion-like fashion [54], and indeed the distribution of tau has been linked to functional networks [55]. This concept of ‘prion-like’ spread posits that abnormally conformed proteins cross synapses between connected brain regions to cause normal proteins to become abnormal in the “infected” region. Alternatively it may be that regions within these brain networks share a common susceptibility to disease linked to neurodevelopmental changes laid down through genetic variance that associates with later disease states [56]. Ultimately, it is likely that both spread and susceptibility play a part in neurodegeneration, but have different roles in different disorders; for example we have used functional connectivity and PET to examine the distribution of tau in neurodegeneration, finding that the pattern in Alzheimer’s disease was more in keeping with trans-synaptic spread, and in PSP was more in keeping with susceptibility of metabolically active regions [57].

As well as providing susceptibility to disease, there is some evidence that brain networks can be helpful in compensating for the effects of early pathology, maintaining cognition in the long presymptomatic phase during which atrophy is detectable on scanning but cognition remains normal [58]. This is in line with the idea of cognitive reserve, that early life education, social interactions and genetic factors protect the brain from the effects of dementia [59, 60]. Cognitive reserve appears to be associated with a specific network of brain regions, though the implications for neurodegeneration are not yet fully understood [61]. However, FDG-PET studies have demonstrated a role for cognitive reserve in Alzheimer’s disease [62], Dementia with Lewy Bodies [63] and Corticobasal Degeneration [64], and the TMEM106B genotype has been found to modulate the protective effect of cognitive reserve in genetic forms of frontotemporal dementia [65].

It remains to be seen whether connectivity measures are reliable enough to be useful as diagnostic or longitudinal biomarkers, but they are beginning to reveal a complex interaction between brain structure and function in neurodegeneration.

Big data and artificial intelligence

The field of neuroimaging has led the scientific community in open science initiatives, particularly in the creation of large repositories of open data [66, 67]. The most widely used datasets in the field of neurodegeneration are the Alzheimer’s Disease Neuroimaging Initiative (ADNI) [68], the Dominantly Inherited Alzheimer Network (DIAN) [69], the Parkinson’s Progression Markers Initiative (PPMI) [70], the Genetic Frontotemporal Initiative (GenFI) [8], and the ARTFL-LEFTDS Longitudinal Frontotemporal Lobar Degeneration (ALLFTD) cohort [71]. Given some of these datasets consist of over 1000 participants, they have attracted the attention of groups working with Artificial Intelligence (AI) methods.

AI describes a set of mathematical tools for identifying relationships and patterns within data and is particularly suitable for the complex and non-linear relationships found in neuroimaging data [72]. These methods need large datasets to pick up subtle patterns and to work out what might be ‘signal’ and what might be ‘noise’. Machine learning methods are a subset of AI tools that have been used to predict the path of cognition in people with early signs of cognitive change with reasonable success [73, 74], and in one study these findings were replicable in a second cohort of patients [75]. Hence, machine learning methods are showing some promise in predicting cognitive change but do need to be translated to the clinical setting, which may require a cultural shift by clinicians who will need to adapt to using new information available from AI algorithms [76].

More complex models have been applied to the challenge of diagnosis using a subset of machine learning methods called ‘deep learning’ algorithms. Theoretically, these methods can detect a wider variety of features within a dataset, and they do indeed achieve a high accuracy (up to 96.0%) in the diagnosis of people with dementia [11]. But this improved accuracy comes at a cost of interpretability, i.e. it is not clear what parts of the scan are being used to assign people to a diagnostic group. It could be argued that the lack of transparency doesn’t matter, as long as the answer is correct. But, if these methods are going to be transferred into routine clinical practice, the clinician must have an answer as to ‘why’ the algorithm assigns someone to having dementia or not. There may be other explanations for the brain changes picked out by the algorithm that could lead to misclassification; for example, a person may have hippocampal atrophy caused by hippocampal sclerosis rather than Alzheimer’s disease. A few emerging methods may address the challenge of interpretation in deep learning, such as the DeepLight method that has successfully been applied to functional MRI data in the human connectome project [77]. Another way to overcome these challenges is by brute force: larger training datasets in the tens or hundreds of thousands of scans, rather than the few hundred to a couple of thousand that we currently have available. These larger datasets will allow the deep learning algorithm to have ‘seen’ a particular abnormality multiple times before, even if it is uncommon, and the error rates will fall as a result. Even so, it may be that doctors are reluctant to trust an algorithm they do not fully understand.

Translation

The advances in imaging shedding light on diagnosis, prognosis and pathology are welcome in the research world, but as yet they have made very little impact on clinical practice.

One critical issue in achieving clinical impact is the standardisation of methodologies for neuroimaging data collection, preprocessing and analysis. Standard work schemes are beginning to emerge, driven by the Organisation for Human Brain Mapping [78] and large datasets such as the UK biobank (https://imaging.ukbiobank.ac.uk/) and the Human Connectome Project [79]. However, even with standard methods there remain significant regulatory hurdles to clear before these methods can be properly assessed and registered as medical products. This process takes time and energy, probably beyond the scope of the scientists who develop them for their own academic work. Companies would usually take on and scale up such products but may be reluctant to take on methods that are already in the public domain through (hugely valuable) open science initiatives. It may, therefore, fall to clinicians to take the lead to ensure that methods considered standard in academic circles are translated to clinical practice.

The next big thing…?

It is, of course, notoriously difficult to predict future trends in any field. But one modality of MRI that is receiving increasing attention is Quantitative Susceptibility Mapping (QSM). This technique is sensitive to iron, calcium and other magnetic substances [80]. The deposition of iron measured using QSM has been linked with cognition in Alzheimer’s disease [81] and Parkinson’s disease [82]. In Alzheimer’s disease, iron is found in the plaques and tangles that characterise the disease, though there is still some work required to establish what exactly is being identified, for example whether ferrous or ferric iron is picked up by QSM. Iron chelation therapy is now being trialled in some of these conditions, but the drugs can cause life-threatening complications of agranulocytosis and neutropenia, which tempers one’s enthusiasm for them [83]. Nevertheless, this imaging modality may yet shed light on the role of iron in the aetiology of neurodegeneration, and prove to be a useful non-invasive biomarker.

Conclusions

In conclusion, the field of neuroimaging is maturing from the simple measurement of volume and structure to a host of methods that can better monitor disease progression and pathology, help us to understand patterns of neurodegeneration, and uncover mechanisms that protect cognitive function in the face of neuropathology. As these methods mature, it will be up to clinicians to lead their translation to the clinical world. If that can be achieved, the revolution in neuroimaging will be complete.

References

Rascovsky K, Hodges JR, Knopman DS et al (2011) Sensitivity of revised diagnostic criteria for the behavioural variant of frontotemporal dementia. Brain 134:2456–2477. https://doi.org/10.1093/brain/awr179

de Haan W, van der Flier WM, Wang H et al (2012) Disruption of functional brain networks in Alzheimer’s disease: what can we learn from graph spectral analysis of resting-state magnetoencephalography? Brain Connect 2:45–55. https://doi.org/10.1089/brain.2011.0043

Yoshiura T, Hiwatashi A, Noguchi T et al (2009) Arterial spin labelling at 3-T MR imaging for detection of individuals with Alzheimer’s disease. Eur Radiol 19:2819–2825. https://doi.org/10.1007/s00330-009-1511-6

Bron EE, Steketee RME, Houston GC et al (2014) Diagnostic classification of arterial spin labeling and structural MRI in presenile early stage dementia. Hum Brain Mapp 35:4916–4931. https://doi.org/10.1002/hbm.22522

Iturria-Medina Y, Carbonell FM, Sotero RC et al (2017) Multifactorial causal model of brain (dis)organization and therapeutic intervention: application to Alzheimer’s disease. NeuroImage 152:60–77. https://doi.org/10.1016/j.neuroimage.2017.02.058

Fox NC, Freeborough PA, Rossor MN (1996) Visualisation and quantification of rates of atrophy in Alzheimer’s disease. Lancet 348:94–97. https://doi.org/10.1016/S0140-6736(96)05228-2

Tondelli M, Wilcock GK, Nichelli P et al (2012) Structural MRI changes detectable up to ten years before clinical Alzheimer’s disease. Neurobiol Aging 33:825.e25–825.e36. https://doi.org/10.1016/j.neurobiolaging.2011.05.018

Rohrer JD, Nicholas JM, Cash DM et al (2015) Presymptomatic cognitive and neuroanatomical changes in genetic frontotemporal dementia in the Genetic Frontotemporal Dementia Initiative (GENFI) study: a cross-sectional analysis. Lancet Neurol 14:253–262. https://doi.org/10.1016/S1474-4422(14)70324-2

Young AL, Marinescu R-VV, Oxtoby NP et al (2017) Uncovering the heterogeneity and temporal complexity of neurodegenerative diseases with subtype and stage inference. Nat Commun 9:4273. https://doi.org/10.1101/236604

Lombardi G, Crescioli G, Cavedo E et al (2020) Structural magnetic resonance imaging for the early diagnosis of dementia due to Alzheimer’s disease in people with mild cognitive impairment. Cochrane Database of Syst Rev. https://doi.org/10.1002/14651858.CD009628.pub2

Jo T, Nho K, Saykin AJ (2019) Deep learning in Alzheimer’s disease: diagnostic classification and prognostic prediction using neuroimaging data. Front Aging Neurosci 11:220. https://doi.org/10.3389/fnagi.2019.00220

Clarke WT, Mougin O, Driver ID et al (2020) Multi-site harmonization of 7 tesla MRI neuroimaging protocols. NeuroImage 206:116335. https://doi.org/10.1016/j.neuroimage.2019.116335

Theysohn JM, Kraff O, Maderwald S et al (2011) 7 tesla MRI of microbleeds and white matter lesions as seen in vascular dementia. J Magn Reson Imaging 33:782–791. https://doi.org/10.1002/jmri.22513

Conijn MMA, Geerlings MI, Luijten PR et al (2010) Visualization of cerebral microbleeds with dual-echo T2*-weighted magnetic resonance imaging at 7.0 T. J Magn Reson Imaging 32:52–59. https://doi.org/10.1002/jmri.22223

Brundel M, Heringa SM, de Bresser J et al (2012) High prevalence of cerebral microbleeds at 7 Tesla MRI in patients with early Alzheimer’s disease. J Alzheimers Dis 31:259–263. https://doi.org/10.3233/JAD-2012-120364

Parker TD, Cash DM, Lane CAS et al (2019) Hippocampal subfield volumes and pre-clinical Alzheimer’s disease in 408 cognitively normal adults born in 1946. PLoS ONE 14:e0224030. https://doi.org/10.1371/journal.pone.0224030

Davies DC, Wilmott AC, Mann DMA (1988) Senile plaques are concentrated in the subicular region of the hippocampal formation in Alzheimer’s disease. Neurosci Lett 94:228–233. https://doi.org/10.1016/0304-3940(88)90300-X

Carlesimo GA, Piras F, Orfei MD et al (2015) Atrophy of presubiculum and subiculum is the earliest hippocampal anatomical marker of Alzheimer’s disease. Alzheimers Dement Diagn Assess Dis Monit 1:24–32. https://doi.org/10.1016/j.dadm.2014.12.001

Trampel R, Bazin P-L, Pine K, Weiskopf N (2019) In-vivo magnetic resonance imaging (MRI) of laminae in the human cortex. NeuroImage 197:707–715. https://doi.org/10.1016/j.neuroimage.2017.09.037

Kaalund SS, Passamonti L, Allinson KSJ et al (2020) Locus coeruleus pathology in progressive supranuclear palsy, and its relation to disease severity. Acta Neuropathol Commun 8:11. https://doi.org/10.1186/s40478-020-0886-0

Klunk WE, Engler H, Nordberg A et al (2004) Imaging brain amyloid in Alzheimer’s disease with Pittsburgh Compound-B. Ann Neurol 55:306–319. https://doi.org/10.1002/ana.20009

Rowe CC, Ellis KA, Rimajova M et al (2010) Amyloid imaging results from the Australian Imaging, Biomarkers and Lifestyle (AIBL) study of aging. Neurobiol Aging 31:1275–1283. https://doi.org/10.1016/j.neurobiolaging.2010.04.007

Rabinovici GD, Gatsonis C, Apgar C et al (2019) Association of amyloid positron emission tomography with subsequent change in clinical management among medicare beneficiaries with mild cognitive impairment or dementia. JAMA 321:1286–1294. https://doi.org/10.1001/jama.2019.2000

Lowe VJ, Curran G, Fang P et al (2016) An autoradiographic evaluation of AV-1451 Tau PET in dementia. Acta Neuropathol Commun 4:58. https://doi.org/10.1186/s40478-016-0315-6

Falcon B, Zivanov J, Zhang W et al (2019) Novel tau filament fold in chronic traumatic encephalopathy encloses hydrophobic molecules. Nature 568:420–423. https://doi.org/10.1038/s41586-019-1026-5

Zhang W, Tarutani A, Newell KL et al (2020) Novel tau filament fold in corticobasal degeneration. Nature. https://doi.org/10.1038/s41586-020-2043-0

Passamonti L, Rodríguez PV, Hong YT et al (2018) PK11195 binding in Alzheimer disease and progressive supranuclear palsy. Neurology 90:e1989–e1996. https://doi.org/10.1212/WNL.0000000000005610

Bevan-Jones WR, Cope TE, Jones PS et al (2018) [18F]AV-1451 binding in vivo mirrors the expected distribution of TDP-43 pathology in the semantic variant of primary progressive aphasia. J Neurol Neurosurg Psychiatry 89:1032–1037. https://doi.org/10.1136/jnnp-2017-316402

Makaretz SJ, Quimby M, Collins J et al (2018) Flortaucipir tau PET imaging in semantic variant primary progressive aphasia. J Neurol Neurosurg Psychiatry 89:1024–1031. https://doi.org/10.1136/jnnp-2017-316409

Drake LR, Pham JM, Desmond TJ et al (2019) Identification of AV-1451 as a weak, nonselective inhibitor of monoamine oxidase. ACS Chem Neurosci 10:3839–3846. https://doi.org/10.1021/acschemneuro.9b00326

Murugan NA, Chiotis K, Rodriguez-Vieitez E et al (2019) Cross-interaction of tau PET tracers with monoamine oxidase B: evidence from in silico modelling and in vivo imaging. Eur J Nucl Med Mol Imaging 46:1369–1382. https://doi.org/10.1007/s00259-019-04305-8

Leuzy A, Chiotis K, Lemoine L et al (2019) Tau PET imaging in neurodegenerative tauopathies—still a challenge. Mol Psychiatry 24:1112–1134. https://doi.org/10.1038/s41380-018-0342-8

Passamonti L, Vázquez Rodríguez P, Hong YT et al (2017) 18F-AV-1451 positron emission tomography in Alzheimer’s disease and progressive supranuclear palsy. Brain 140:781–791. https://doi.org/10.1093/brain/aww340

Utianski RL, Whitwell JL, Schwarz CG et al (2018) Tau-PET imaging with [18F]AV-1451 in primary progressive apraxia of speech. Cortex 99:358–374. https://doi.org/10.1016/j.cortex.2017.12.021

Hickman S, Izzy S, Sen P et al (2018) Microglia in neurodegeneration. Nat Neurosci 21:1359–1369. https://doi.org/10.1038/s41593-018-0242-x

Endres CJ, Pomper MG, James M et al (2009) Initial evaluation of 11C-DPA-713, a novel TSPO PET ligand, in humans. J Nucl Med 50:1276–1282. https://doi.org/10.2967/jnumed.109.062265

Nicastro N, Mak E, Williams GB et al (2020) Correlation of microglial activation with white matter changes in dementia with Lewy bodies. NeuroImage Clin 25:102200. https://doi.org/10.1016/j.nicl.2020.102200

Parbo P, Ismail R, Sommerauer M et al (2018) Does inflammation precede tau aggregation in early Alzheimer’s disease? A PET study. Neurobiol Dis 117:211–216. https://doi.org/10.1016/j.nbd.2018.06.004

Parbo P, Ismail R, Hansen KV et al (2017) Brain inflammation accompanies amyloid in the majority of mild cognitive impairment cases due to Alzheimer’s disease. Brain 140:2002–2011. https://doi.org/10.1093/brain/awx120

Gerhard A, Watts J, Trender-Gerhard I et al (2004) In vivo imaging of microglial activation with [11C](R)-PK11195 PET in corticobasal degeneration. Mov Disord 19:1221–1226. https://doi.org/10.1002/mds.20162

Gerhard A, Trender-Gerhard I, Turkheimer F et al (2006) In vivo imaging of microglial activation with [11C](R)-PK11195 PET in progressive supranuclear palsy. Mov Disord 21:89–93. https://doi.org/10.1002/mds.20668

Cagnin A, Rossor M, Sampson EL et al (2004) In vivo detection of microglial activation in frontotemporal dementia. Ann Neurol 56:894–897. https://doi.org/10.1002/ana.20332

Kreisl WC, Jenko KJ, Hines CS et al (2013) A genetic polymorphism for translocator protein 18 kDa affects both in vitro and in vivo radioligand binding in human brain to this putative biomarker of neuroinflammation. J Cereb Blood Flow Metab 33:53–58. https://doi.org/10.1038/jcbfm.2012.131

Nabulsi NB, Mercier J, Holden D et al (2016) Synthesis and preclinical evaluation of 11C-UCB-J as a PET tracer for imaging the synaptic vesicle glycoprotein 2A in the brain. J Nucl Med 57:777–784. https://doi.org/10.2967/jnumed.115.168179

Chen M-K, Mecca AP, Naganawa M et al (2018) Assessing synaptic density in Alzheimer disease with synaptic vesicle glycoprotein 2A positron emission tomographic imaging. JAMA Neurol 75:1215–1224. https://doi.org/10.1001/jamaneurol.2018.1836

Yousefi BH, Shi K, Arzberger T et al (2019) Translational study of a novel alpha-synuclein PET tracer designed for first-in-human investigating. In: Nuklearmedizin. Georg Thieme Verlag KG, p L25

Maurer A, Leonov A, Ryazanov S et al (2020) 11C Radiolabeling of anle253b: a putative PET tracer for Parkinson’s disease that binds to α-synuclein fibrils in vitro and crosses the blood–brain barrier. ChemMedChem 15:411–415. https://doi.org/10.1002/cmdc.201900689

Pievani M, de Haan W, Wu T et al (2011) Functional network disruption in the degenerative dementias. Lancet Neurol 10:829–843. https://doi.org/10.1016/S1474-4422(11)70158-2

Bullmore ET, Sporns O (2009) Complex brain networks: graph theoretical analysis of structural and functional systems. Nat Rev Neurosci 10:186–198. https://doi.org/10.1038/nrn2575

Zhou J, Gennatas ED, Kramer JH et al (2012) Predicting regional neurodegeneration from the healthy brain functional connectome. Neuron 73:1216–1227. https://doi.org/10.1016/j.neuron.2012.03.004

Zhou J, Greicius MD, Gennatas ED et al (2010) Divergent network connectivity changes in behavioural variant frontotemporal dementia and Alzheimer’s disease. Brain 133:1352–1367. https://doi.org/10.1093/brain/awq075

Greicius MD, Srivastava G, Reiss AL, Menon V (2004) Default-mode network activity distinguishes Alzheimer’s disease from healthy aging: evidence from functional MRI. Proc Natl Acad Sci USA 101:4637–4642. https://doi.org/10.1073/pnas.0308627101

Day GS, Farb NAS, Tang-Wai DF et al (2013) Salience network resting-state activity: prediction of frontotemporal dementia progression. JAMA Neurol 70:1249–1253. https://doi.org/10.1001/jamaneurol.2013.3258

Walsh DM, Selkoe DJ (2016) A critical appraisal of the pathogenic protein spread hypothesis of neurodegeneration. Nat Rev Neurosci 17:251–260. https://doi.org/10.1038/nrn.2016.13

Ossenkoppele R, Iaccarino L, Schonhaut DR et al (2019) Tau covariance patterns in Alzheimer’s disease patients match intrinsic connectivity networks in the healthy brain. NeuroImage Clin 23:101848. https://doi.org/10.1016/j.nicl.2019.101848

Rittman T, Rubinov M, Vértes PE et al (2016) Regional expression of the MAPT gene is associated with loss of hubs in brain networks and cognitive impairment in Parkinson’s disease and progressive supranuclear palsy. Neurobiol Aging 48:153–160. https://doi.org/10.1016/j.neurobiolaging.2016.09.001

Cope TE, Rittman T, Borchert RJ et al (2018) Tau burden and the functional connectome in Alzheimer’s disease and progressive supranuclear palsy. Brain 141:550–567. https://doi.org/10.1093/brain/awx347

Rittman T, Borchert R, Jones MS et al (2019) Functional network resilience to pathology in presymptomatic genetic frontotemporal dementia. Neurobiol Aging 77:169–177. https://doi.org/10.1016/j.neurobiolaging.2018.12.009

Stern Y (2009) Cognitive reserve. Neuropsychologia 47:2015–2028. https://doi.org/10.1016/j.neuropsychologia.2009.03.004

Stern Y, Arenaza-Urquijo EM, Bartrés-Faz D et al (2018) Whitepaper: defining and investigating cognitive reserve, brain reserve, and brain maintenance [in press]. Alzheimers Dement. https://doi.org/10.1016/j.jalz.2018.07.219

Stern Y, Gazes Y, Razlighi Q et al (2018) A task-invariant cognitive reserve network. NeuroImage 178:36–45. https://doi.org/10.1016/j.neuroimage.2018.05.033

Perneczky R (2006) Schooling mediates brain reserve in Alzheimer’s disease: findings of fluoro-deoxy-glucose-positron emission tomography. J Neurol Neurosurg Psychiatry 77:1060–1063. https://doi.org/10.1136/jnnp.2006.094714

Perneczky R, Haussermann P, Diehl-Schmid J et al (2007) Metabolic correlates of brain reserve in dementia with Lewy bodies: an FDG PET study. Dement Geriatr Cogn Disord 23:416–422. https://doi.org/10.1159/000101956

Isella V, Grisanti SG, Ferri F et al (2018) Cognitive reserve maps the core loci of neurodegeneration in corticobasal degeneration. Eur J Neurol 25:1333–1340. https://doi.org/10.1111/ene.13729

Premi E, Grassi M, van Swieten J et al (2017) Cognitive reserve and TMEM106B genotype modulate brain damage in presymptomatic frontotemporal dementia: a GENFI study. Brain 140:1784–1791. https://doi.org/10.1093/brain/awx103

Eickhoff S, Nichols TE, Van Horn JD, Turner JA (2016) Sharing the wealth: neuroimaging data repositories. NeuroImage 124:1065–1068. https://doi.org/10.1016/j.neuroimage.2015.10.079

Van Horn JD, Toga AW (2014) Human neuroimaging as a “Big Data” science. Brain Imaging Behav 8:323–331. https://doi.org/10.1007/s11682-013-9255-y

Mueller SG, Weiner MW, Thal LJ et al (2005) The Alzheimer’s disease neuroimaging initiative. Neuroimaging Clin N Am 15:869–877. https://doi.org/10.1016/j.nic.2005.09.008

Bateman RJ, Xiong C, Benzinger TLS et al (2012) Clinical and biomarker changes in dominantly inherited Alzheimer’s disease. N Engl J Med 367:795–804. https://doi.org/10.1056/NEJMoa1202753

Parkinson Progression Marker Initiative (2011) The Parkinson progression marker initiative (PPMI). Prog Neurobiol 95:629–635. https://doi.org/10.1016/j.pneurobio.2011.09.005

Boeve B, Bove J, Brannelly P et al (2019) The longitudinal evaluation of familial frontotemporal dementia subjects protocol: framework and methodology. Alzheimers Dement. https://doi.org/10.1016/j.jalz.2019.06.4947

Hainc N, Federau C, Stieltjes B et al (2017) The bright, artificial intelligence-augmented future of neuroimaging reading. Front Neurol. https://doi.org/10.3389/fneur.2017.00489

Fisher CK, Smith AM, Walsh JR (2019) Machine learning for comprehensive forecasting of Alzheimer’s disease progression. Sci Rep 9:1–14. https://doi.org/10.1038/s41598-019-49656-2

Archetti D, Ingala S, Venkatraghavan V et al (2019) Multi-study validation of data-driven disease progression models to characterize evolution of biomarkers in Alzheimer’s disease. NeuroImage Clin 24:101954. https://doi.org/10.1016/j.nicl.2019.101954

Giorgio J, Landau S, Jagust W et al (2020) Modelling prognostic trajectories of cognitive decline due to Alzheimer’s disease. NeuroImage Clin. https://doi.org/10.1016/j.nicl.2020.102199

Bruffaerts R (2018) Machine learning in neurology: what neurologists can learn from machines and vice versa. J Neurol 265:2745–2748. https://doi.org/10.1007/s00415-018-8990-9

Thomas AW, Heekeren HR, Müller K-R, Samek W (2019) Analyzing neuroimaging data through recurrent deep learning models. Front Neurosci 13:1321. https://doi.org/10.3389/fnins.2019.01321

Nichols TE, Das S, Eickhoff SB et al (2017) Best practices in data analysis and sharing in neuroimaging using MRI. Nat Neurosci 20:299–303. https://doi.org/10.1038/nn.4500

Glasser MF, Smith SM, Marcus DS et al (2016) The Human Connectome Project’s neuroimaging approach. Nat Neurosci 19:1175–1187. https://doi.org/10.1038/nn.4361

Acosta-Cabronero J, Milovic C, Tejos C, Callaghan MF (2018) A multi-scale approach to quantitative susceptibility mapping (MSDI). NeuroImage 183:7–24. https://doi.org/10.1016/J.NEUROIMAGE.2018.07.065

Ayton S, Fazlollahi A, Bourgeat P et al (2017) Cerebral quantitative susceptibility mapping predicts amyloid-β-related cognitive decline. Brain 140:2112–2119. https://doi.org/10.1093/brain/awx137

Thomas GEC, Leyland LA, Schrag A-E et al (2020) Brain iron deposition is linked with cognitive severity in Parkinson’s disease. J Neurol Neurosurg Psychiatry 91:418–425. https://doi.org/10.1136/jnnp-2019-322042

Sammaraiee Y, Banerjee G, Farmer S et al (2020) Risks associated with oral deferiprone in the treatment of infratentorial superficial siderosis. J Neurol 267:239–243. https://doi.org/10.1007/s00415-019-09577-6

Funding

TR is funded by the NIHR Cambridge Biomedical Research Centre and the Cambridge Centre for Parkinson's Plus.

Ethics declarations

Conflicts of interest

TR has received honoraria from NICE (National Institute for Health and Care Excellence, UK) and Oxford Biomedica (Oxford, UK).

Ethics approval

Not applicable.

Consent to participate

Not applicable.

Consent for publication

Not applicable.

Availability of data and material

Not applicable.

Code availability

Not applicable.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Rittman, T. Neurological update: neuroimaging in dementia. J Neurol 267, 3429–3435 (2020). https://doi.org/10.1007/s00415-020-10040-0

DOI: https://doi.org/10.1007/s00415-020-10040-0