Abstract

Recent technological advances in optical atomic clocks are opening new perspectives for the direct determination of geopotential differences between any two points at a centimeter-level accuracy in geoid height. However, so far detailed quantitative estimates of the possible improvement in geoid determination when adding such clock measurements to existing data are lacking. We present a first step in that direction with the aim and hope of triggering further work and efforts in this emerging field of chronometric geodesy and geophysics. We specifically focus on evaluating the contribution of this new kind of direct measurements in determining the geopotential at high spatial resolution (\(\approx \)10 km). We studied two test areas, both located in France and corresponding to medium-altitude (Massif Central) and high (Alps) mountainous terrain. These regions are interesting because the gravitational field strength varies greatly from place to place at high spatial resolution due to the complex topography. Our method consists of first generating a synthetic high-resolution geopotential map, then drawing synthetic measurement data (gravimetry and clock data) from it, and finally reconstructing the geopotential map from those data using least squares collocation. The quality of the reconstructed map is then assessed by comparing it to the original one used to generate the data. We show that adding only a few clock data points (fewer than 1% of the number of gravimetry data points) reduces the bias significantly and improves the standard deviation by a factor of 3. The effect of the data coverage and data quality on the results is investigated, and the trade-off between the measurement noise level and the number of data points is discussed.

1 Introduction

Chronometry is the science of the measurement of time. As the rate of a clock depends on the surrounding gravity field through the relativistic gravitational redshift predicted by Einstein (Landau and Lifshitz 1975), chronometric geodesy considers the use of clocks to directly determine Earth’s gravitational potential differences. Instead of using state-of-the-art Earth’s gravitational field models to predict frequency shifts between distant clocks (Pavlis and Weiss 2003; ITOC project), the principle is to reverse the problem and ask whether the comparison of frequency shifts between distant clocks can improve our knowledge of Earth’s gravity and geoid (Bjerhammar 1985; Mai 2013; Petit et al. 2014; Shen et al. 2016; Kopeikin et al. 2016). For example, two clocks with an accuracy of \(10^{-18}\) in terms of relative frequency shift would detect a 1-cm geoid height variation between them, corresponding to a geopotential variation \(\varDelta W\) of about \(0.1~\hbox {m}^{2}\hbox {s}^{-2}\) (for more details, see, e.g., Delva and Lodewyck 2013; Mai 2013; Petit et al. 2014).
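The order of magnitude quoted above can be checked with a short sketch. It assumes the first-order redshift relation, in which the fractional frequency shift equals the geopotential difference divided by the speed of light squared, and a mean surface gravity of 9.81 m/s^2 to convert a potential difference into a height. The function names and the gravity value are illustrative choices, not part of the original study.

```python
# Sketch: convert a fractional frequency shift between two clocks into a
# geopotential difference and an equivalent geoid height difference.
C_LIGHT = 299_792_458.0   # speed of light in m/s (exact SI value)
G_MEAN = 9.81             # assumed mean surface gravity in m/s^2

def potential_difference(frac_freq_shift):
    """Geopotential difference (m^2/s^2) from Delta f / f, to first order."""
    return frac_freq_shift * C_LIGHT ** 2

def geoid_height_difference(frac_freq_shift, gravity=G_MEAN):
    """Equivalent height difference (m), using Delta H = Delta W / g."""
    return potential_difference(frac_freq_shift) / gravity

delta_w = potential_difference(1e-18)      # roughly 0.09 m^2/s^2
delta_h = geoid_height_difference(1e-18)   # roughly 0.009 m, i.e. about 1 cm
```

With these assumptions, a relative frequency accuracy of \(10^{-18}\) maps to slightly less than \(0.1~\hbox {m}^{2}\hbox {s}^{-2}\), or about 1 cm in height, in line with the figures given in the text.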

Until recently, the performance of optical clocks was not sufficient for practical applications in the determination of Earth’s gravity potential. However, the rapid ongoing development of optical clocks is opening these possibilities. Chou et al. (2010) demonstrated the ability of the new generation of atomic clocks, based on optical transitions, to sense geoid height differences with a 30-cm level of accuracy. To date, the best of these instruments reach a stability of \(1.6 \times 10^{- 18}\) (NIST, RIKEN + Univ. Tokyo, Hinkley et al. 2013) after 7 hours of integration time. More recently, an accuracy of \(2.1 \times 10^{- 18}\) (JILA, Nicholson et al. 2015) has been obtained, equivalent to geopotential differences of \(0.2~\hbox {m}^{2}\hbox {s}^{-2}\), or 2 cm on the geoid. In addition, Takano et al. (2016) demonstrated the feasibility of cm-level chronometric geodesy. By connecting clocks separated by 15 km with a long telecom fiber, they found that the height difference between the distant clocks determined by chronometric leveling (see Vermeer 1983) was in agreement with the classical leveling measurement within the clock uncertainty of 5 cm. Other related work using optical fiber or coaxial cable time-frequency transfer can be found in Shen (2013) and Shen and Shen (2015).

Such results raise the question of what we can learn about Earth’s gravity and mass sources using clocks that we cannot easily derive from existing gravimetric data. Recent studies address this question; for example, Bondarescu et al. (2012) discussed the value and future applicability of chronometric geodesy for direct geoid mapping on continents and joint gravity potential surveying to determine subsurface density anomalies. They find that a geoid perturbation caused by a 1.5-km radius sphere with a 20% density anomaly buried at 2 km depth in the Earth’s crust is already detectable by atomic clocks with present-day accuracy. They also investigate other applications, related to earthquake prediction and volcanic eruptions (Bondarescu et al. 2015b), or to the monitoring of vertical surface motion due to magmatic, post-seismic, or tidal deformations (Bondarescu et al. 2015a, c).

Here we will consider the “static” or “long-term” component of Earth’s gravity. Our knowledge of Earth’s gravitational field is usually expressed through geopotential grids and models that integrate all available observations, globally or over an area of interest. These models are, however, not based on direct observations of the potential itself, which has to be reconstructed or extrapolated by integrating measurements of its derivatives. Yet this quantity is needed in its own right, for instance when a high-resolution geoid is used as a reference for heights on land and for dynamic topography over the oceans (Rummel and Teunissen 1988; Rummel 2002, 2012; Sansò and Venuti 2002; Zhang et al. 2008; Sansò and Sideris 2013; Marti 2015).

The potential is reconstructed with a centimetric accuracy at resolutions of the order of 100 km from GRACE and GOCE satellite data (Pail et al. 2011; Bruinsma et al. 2014) and integrated from near-surface gravimetry for the shorter spatial scales. As a result, the standard deviation (rms) of differences between geoid heights obtained from a global high-resolution model such as EGM2008 and from a combination of GPS/leveling data reaches up to 10 cm in areas well covered by surface data (Gruber 2009). The uneven distribution of surface gravity data, especially in transitional zones (coasts, borders between different countries) and with important gaps in areas that are difficult to access, indeed limits the accuracy of the reconstruction when aiming at centimeter-level precision. This is an important issue, as large gravity and geoid variations over a range of spatial scales are found in mountainous regions, and because high accuracy in height determination is crucial in coastal zones. Airborne gravity surveys are thus carried out in such regions (Johnson 2009; Douch et al. 2015); local clock-based geopotential determination could be another way to overcome these limitations.

In this context, we investigate to what extent clocks could help fill the gap between the satellite and near-surface gravity spectral and spatial coverages in order to improve our knowledge of the geopotential and gravity field at all wavelengths. By nature, potential data are smoother and more sensitive to mass sources at large scales than gravity data, which are strongly influenced by local effects. Thus, they could naturally complement existing networks in sparsely covered places and could also help reveal possible systematic error patterns in older gravity data sets. We address the question through test case examples of high-resolution geopotential reconstructions in areas with different characteristics, leading to different variabilities of the gravity field. We consider the Massif Central in France, marked by smooth, moderate altitude mountains and volcanic plateaus, and an Alps–Mediterranean zone, comprising high reliefs and a land/sea transition.

Throughout this work, we will treat clock measurements as direct determinations of the disturbing potential T (see below and Sect. 3 for details). We implicitly assume that the actual measurements are the potential differences between the clock location and some reference clock(s) within the area of interest. These measurements are obtained by comparing the two clocks over distances of up to a few 100 km. Currently two methods are available for such comparisons: fiber links (Lisdat et al. 2016) and free space optical links (Deschênes et al. 2016). The free space optical links are the most promising for the applications considered here, but are presently still limited to short (few km) distances. Projects for extending these methods with airborne or satellite relays are under way, but still require some effort in technology development.

The paper is organized as follows. In Sect. 2, we briefly outline the method. In Sect. 3, we describe the regions of interest and the construction of the high-resolution synthetic data sets used in our tests. In Sect. 4, we present the methodology to assess the contribution of new clock data in the potential recovery, in addition to ground gravity measurements. Numerical results are shown in Sect. 5. Finally, in Sect. 6, we discuss the influence of different parameters such as the data noise level and coverage.

2 Method

The rapid progress in optical clock performance opens new perspectives for the use of clocks in geodesy and geophysics. While they were until recently built only as stationary laboratory devices, several transportable optical clocks are currently under construction or test (see, e.g., Bongs 2015; Origlia et al. 2016; Vogt et al. 2016). The technological step toward state-of-the-art transportable optical clocks is likely to take place within the next decade. In parallel, in order to assess the capabilities of this upcoming technology, we chose an approach based on numerical simulations to investigate whether atomic clocks can improve the determination of the geopotential. Since ground optical clocks are more sensitive than gravity data to the longer wavelengths of the gravitational field around them, our method is adapted to the determination of the geopotential at regional scales. Figure 1 shows a scheme of the method used in this paper:

1. In the first step, we generate a high spatial resolution grid of the gravity disturbance \(\overline{\delta {g}}\) and the disturbing potential \(\overline{T}\), considered as our reference solutions. This is done using a state-of-the-art geopotential model (EIGEN-6C4) and by removing low and high frequencies. It is described in detail in Sect. 3.

2. In the second step, we generate synthetic measurements \(\delta {g}\) and T from a realistic spatial distribution, and then we add random noise representative of the measurement noise. This is described in detail in Sect. 4.

3. In the third step, we estimate the disturbing potential \(\widetilde{T}\) from the synthetic measurements \(\delta {g}\) and/or T on a regular grid using the least squares collocation (LSC) method. The spatial interpolation of the data relies on an assumption about the a priori regularity of the gravity field over the target area, as described in Sect. 5. This prior is expressed by the covariance function of the gravity potential and its derivatives. It allows the disturbing potential to be predicted on the output grid from the observations, using the signal correlations among the data points and between the data points and the estimated potential.

4. Finally, we evaluate the potential recovery quality for different data distribution sets, noise levels, and types of data, by comparing the statistics of the residuals \(\delta \) between the estimated values \(\widetilde{T}\) and the reference model \(\overline{T}\).

Let us underline that in this work, we use synthetic potential data, whereas a network of clocks would give access to potential differences between the clocks. We indeed assume that the clock-based potential differences have been connected to one or a few reference points, without introducing additional biases larger than the assumed clock uncertainties. Note that these reference points are absolute potential points determined by other methods (GNSS/geoid for example).

In this differential method, significant residuals \(\delta \) (higher than the machine precision) can have several origins, depending on the parameters of the simulation that can be varied:

1. The modeled instrumental noise added to the reference model at step 2. This noise can be changed in order to determine, for instance, whether it is better to reduce gravimetry noise by one order of magnitude, rather than using clock measurements.

2. The data distribution chosen in step 2. This is useful to check for instance the effect of the number of clock measurements on the residuals or to find an optimal coverage for the clock measurements.

3. The potential estimation error, due to the intrinsic imperfection of the covariance model chosen for the geopotential. In our case, this is due to the low-frequency content of the covariance function chosen for the least squares collocation method (see Sect. 5).

All these sources of error are somewhat entangled with one another, so that a careful analysis is required when varying the parameters of the simulation. This is discussed in detail in Sect. 6.

3 Regions of interest and synthetic gravity field reference models

3.1 Gravity data and distribution

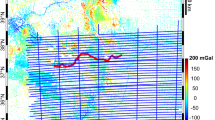

Our study focuses on two different areas in France. The first region is the Massif Central, located between \(43^{\circ }\,\hbox {to}\,47^{\circ }\hbox {N}\) and \(1^{\circ }\,\hbox {to}\,5^{\circ }\hbox {E}\), which consists of plateaus and a low mountain range, see Fig. 2. The second target area, much more hilly and mountainous, is the French Alps with a portion of the Mediterranean Sea, located at the border between several countries and bounded by \(42^{\circ }\,\hbox {to}\,47^{\circ }\hbox {N}\) and \(4.5^{\circ }\,\hbox {to}\,9^{\circ }\hbox {E}\), see Fig. 3. Topography is obtained from the 30-m digital elevation model over France by IGN, completed with Smith and Sandwell (1997) bathymetry and SRTM data.

Available surface gravity data in these areas, from the BGI (International Gravimetric Bureau), are shown in Figs. 2b and 3b. Note that the BGI gravity values themselves are not used in this study; only their spatial distribution is used, in order to generate realistic distributions in the synthetic tests. These figures show that the gravity data are unevenly distributed: the plains are densely surveyed, while the mountainous regions are poorly covered because they are mostly inaccessible to conventional gravity surveys. The wide range of free-air gravity anomalies (see Moritz 1980; Sansò and Sideris 2013) reflects the complex structure of the gravity field in these regions, which means that the gravitational field strength varies greatly from place to place at high resolution. The scarcity of gravity data in the hilly regions is thus a major limitation in deriving accurate high-resolution geopotential models.

3.2 High-resolution synthetic data

Here, we describe how we simulate the synthetic gravity disturbances \(\overline{\delta {g}}\) and disturbing potentials \(\overline{T}\) by removing the long- and short-wavelength contributions of the gravity field from a high-resolution global geopotential model.

The generation of the synthetic data \(\overline{\delta {g}}\) and \(\overline{T}\) at the Earth’s topographic surface was carried out, in ellipsoidal approximation, with the FORTRAN program GEOPOT (Smith 1998) of the National Geodetic Survey (NGS). This program computes gravity field-related quantities at given locations using a geopotential model and additional information such as the parameters of the ellipsoidal normal field and the tide system. The ellipsoidal normal field is defined by the parameters of the geodetic reference system GRS80 (Moritz 1984). As input, we used the static global gravity field model EIGEN-6C4 (Förste et al. 2014). It is a combined model up to degree and order (d/o) 2190 containing satellite, altimetry, terrestrial gravity, and elevation data. By using the spherical harmonics (SH) coefficients up to d/o 2000, it allows us to map gravity variations down to 10 km resolution. Thus, these synthetic data do not represent the full geoid signal. The choice is motivated by the fact that at a centimeter level of accuracy, we expect a large benefit from clocks at wavelengths \(\ge \)10 km.

Our objective is to study how clocks can advance knowledge of the geoid beyond the resolution of the satellites. In a first step, as illustrated in Fig. 4, the long wavelengths of the gravity field covered by the satellites and longer than the extent of the local area are completely removed up to the degree \(n_{\text {cut}} = 100\) (200 km resolution). This data reduction is necessary for the determination of the local covariance function in order to have centered data, or close to zero, as detailed in Knudsen (1987, 1988). Between degrees 101 and 583, the gravity field is progressively filtered using three Poisson wavelet spectra (Holschneider et al. 2003), while its full content is preserved above degree 583. In this way, we obtain a smooth transition between the wavelengths covered by the satellites and those constrained by the surface data.
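The correspondence between spherical harmonic degree and spatial resolution, and the shape of a progressive spectral filter, can be sketched as follows. The half-wavelength rule (resolution is about \(\pi R / n\)) and the mean Earth radius are standard approximations. The cosine ramp in `taper_weight` is only a stand-in chosen for simplicity; it is not the Poisson wavelet filter used in the study, and both function names are hypothetical.

```python
import math

EARTH_RADIUS_KM = 6371.0  # assumed mean Earth radius

def half_wavelength_km(degree):
    """Approximate spatial resolution (km) of spherical harmonic degree n."""
    return math.pi * EARTH_RADIUS_KM / degree

def taper_weight(degree, n_cut=100, n_full=583):
    """Weight applied to the coefficients of a given degree.

    Degrees up to n_cut are removed, degrees from n_full upward are kept,
    and a cosine ramp (an illustrative substitute for the Poisson wavelet
    spectra) links the two.
    """
    if degree <= n_cut:
        return 0.0
    if degree >= n_full:
        return 1.0
    x = (degree - n_cut) / (n_full - n_cut)
    return 0.5 * (1.0 - math.cos(math.pi * x))

res_cut = half_wavelength_km(100)    # close to 200 km
res_max = half_wavelength_km(2000)   # close to 10 km
```

Under these assumptions, degree 100 corresponds to about 200 km and degree 2000 to about 10 km, which matches the resolutions quoted in the text.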

To subtract the terrain effects included in EIGEN-6C4, we used the topographic potential model dV_ELL_RET2012 (Claessens and Hirt 2013) truncated at d/o 2000. Complete up to d/o 2160, this model provides, in ellipsoidal approximation, the gravitational attraction due to the topographic masses anywhere on the Earth’s surface. This data reduction yields the reference fields \(\overline{\delta {g}}\) and \(\overline{T}\) for both regions, shown in Figs. 5 and 6.

Fig. 5 Synthetic reference fields of gravity disturbances \(\overline{\delta {g}}\) and disturbing potential \(\overline{T}\) in the Massif Central area. Anomalies are computed at the Earth’s topographic surface from the EIGEN-6C4 model up to d/o 2000 after removal of the low and high frequencies of the gravity field

Fig. 6 Synthetic reference fields of gravity disturbances \(\overline{\delta {g}}\) and disturbing potential \(\overline{T}\) in the Alps–Mediterranean area. Anomalies are computed at the Earth’s topographic surface from the EIGEN-6C4 model up to d/o 2000 after removal of the low and high frequencies of the gravity field

Figures 5 and 6 show the different characteristics of the residual field in these two regions. The residual anomalies have smaller amplitudes in the Massif Central area when compared to the Alps. In addition, the presence of high mountains on part of the latter zone results in an important spatial heterogeneity of the residual gravity anomalies, with large signals also at intermediate resolutions.

4 Data set selection and synthetic noise

4.1 Gravimetric location points selection

Our goal is to reproduce a realistic spatial distribution of the gravity points. The BGI gravity data sets contain hundreds of thousands of points for the target regions (see Figs. 2b and 3b). In order to reduce the size of the problem and make it numerically more tractable, we build a distribution with no more than several thousand points from the original one.

Starting from the spatial distribution of the BGI gravity data sets, a grid \(\overline{\delta {g}}\) of N cells is built with a regular step of about 6.5 km. Each cell contains \(n_i\) points with \(i=\{1,2,\dots ,N\}\). These \(n_i\) points are replaced by a single point whose location is the geometric barycenter of the \(n_i\) points, provided that \(n_i>0\). If \(n_i=0\), cell i contains no point. Figure 7 shows the new distributions of gravimetric data for the Massif Central and the Alps regions; they have, respectively, 4374 and 4959 location points. These new spatial distributions reflect the initial BGI gravity data distribution but are more homogeneous. They will be used in what follows.
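The thinning of the gravity point distribution can be illustrated with a minimal sketch. It assumes planar coordinates in km and square cells, whereas the study works with geographic positions; the function name `thin_to_cell_barycenters` and the sample points are hypothetical.

```python
from collections import defaultdict

def thin_to_cell_barycenters(points, step):
    """Replace all points falling in one square cell by their barycenter.

    points: iterable of (x, y) planar coordinates in km (an assumption).
    step:   cell size in km (about 6.5 km in the text).
    Returns two dicts keyed by cell index (ix, iy): the barycenter of the
    cell and the number n_i of original points it contained. Empty cells
    simply do not appear in the output.
    """
    cells = defaultdict(list)
    for x, y in points:
        cells[(int(x // step), int(y // step))].append((x, y))
    barycenters = {}
    counts = {}
    for key, members in cells.items():
        n = len(members)
        barycenters[key] = (sum(p[0] for p in members) / n,
                            sum(p[1] for p in members) / n)
        counts[key] = n
    return barycenters, counts

# Three sample points: two share a cell, the third sits in the next cell.
pts = [(1.0, 1.0), (3.0, 5.0), (7.0, 1.0)]
bary, counts = thin_to_cell_barycenters(pts, step=6.5)
```

Keeping the per-cell counts \(n_i\) alongside the barycenters is convenient because the clock site selection of Sect. 4.2 relies on them.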

4.2 Chronometric location points selection

We choose to put clock measurements only where existing land gravity data are located. Indeed, these data mainly follow the roads and valleys which could be accessible for a clock comparison. Then, we use a simple geometric approach in order to put clock measurements in regions where the gravity data coverage is poor. Since the potential varies smoothly compared to the gravity field, a clock measurement is affected by masses at a larger distance than in the case of a gravimetric measurement. For that reason, a clock point will be able to constrain longer wavelengths of the geopotential than a gravimetric point. This is particularly interesting in areas poorly surveyed by gravity measurement networks. Finally, in order to avoid having clocks too close to each other, we define a minimal distance d between them. We chose d greater than the correlation length of the gravity covariance function (in this work \(\lambda \sim 20\) km, see Table 1).

Here we give more details about our algorithm to select the clock locations:

1. First, we initialize the clock locations on the nodes of a regular grid \(\overline{T}\) with a fixed interval d. This grid is included in the target region at a setback distance of about 30 km from each edge (outside possible boundary effects).

2. Second, we move each clock point to the position of the nearest gravity point from the grid \(\overline{\delta {g}}\), located in cell i (see the previous paragraph); cell i contains \(n_i\) points of the initial BGI gravity data distribution.

3. Finally, we remove all the clock points located in cells where \(n_i > n_\mathrm{max}\). This is a simple way to keep only the clock points located in areas with few gravimetric measurements.

This method makes it possible to simulate different realistic clock measurement coverages by changing the values of d and \(n_\mathrm{max}\). The number of clock measurements increases when the distance d decreases or when the threshold \(n_\mathrm{max}\) increases, and vice versa. It is also possible to obtain different spatial distributions with the same number of clock measurements for different sets of d and \(n_\mathrm{max}\).
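The three selection steps described above can be sketched as follows. Planar coordinates in km, a rectangular target region, and the function name `select_clock_sites` are assumptions made for illustration; the sample sites and counts are invented test values, not data from the study.

```python
import math

def select_clock_sites(gravity_sites, counts, bounds, d, n_max, setback=30.0):
    """Return the indices of the gravity sites retained as clock sites.

    gravity_sites: list of (x, y) thinned gravity locations in km.
    counts:        list of n_i, the number of original gravity points in
                   the cell of each site.
    bounds:        (xmin, xmax, ymin, ymax) of the target region in km.
    """
    xmin, xmax, ymin, ymax = bounds
    chosen = set()
    # Step 1: nodes of a regular grid of spacing d, kept away from the edges.
    x = xmin + setback
    while x <= xmax - setback:
        y = ymin + setback
        while y <= ymax - setback:
            # Step 2: snap the node to the nearest gravity site.
            nearest = min(range(len(gravity_sites)),
                          key=lambda i: math.dist((x, y), gravity_sites[i]))
            chosen.add(nearest)
            y += d
        x += d
    # Step 3: keep only the sites lying in sparsely surveyed cells.
    return sorted(i for i in chosen if counts[i] <= n_max)

sites = [(31.0, 29.0), (69.0, 31.0), (30.0, 72.0), (71.0, 71.0), (50.0, 50.0)]
n_i = [5, 20, 10, 15, 1]
clock_idx = select_clock_sites(sites, n_i, (0.0, 100.0, 0.0, 100.0),
                               d=40.0, n_max=15)
```

In this toy configuration the four grid nodes snap to the first four sites, and the second one is discarded because its cell holds more than \(n_\mathrm{max}\) original points. Using a set for the snapped indices also avoids placing two clocks at the same gravity site.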

In Fig. 7, we show an example of clock coverage used hereafter for both target regions, with 33 and 32 clock locations, respectively, in the Massif Central and the Alps, corresponding to \(\sim \)0.7% of the gravity data coverage. For the chosen distributions, the value of d is about 60 km and \(n_\mathrm{max} = 15\).

4.3 Synthetic measurements simulation

For each data point, the synthetic values of \(\delta {g}\) and T are computed by applying the data reduction presented in Sect. 3.2. It is important to note that the location points of the simulated data T are not necessarily at the same place as the estimated data \(\overline{T}\).

A Gaussian white noise model is used to simulate the instrumental noise of the measurements. We chose, for the main tests in the next section, a standard deviation \(\sigma _{\delta {g}}=1\) mGal for the gravity data and \(\sigma _{T}=0.1\) m\(^2\)/s\(^2\) for the potential data. In terms of geoid height, the latter noise level is equivalent to 1 cm. Other tests with different noise levels are discussed in Sect. 6.
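A minimal sketch of this noise model is given below. The two standard deviations are those quoted in the text; the function name `add_white_noise`, the fixed random seed, and the use of a zero-valued placeholder signal are assumptions made only to keep the example reproducible.

```python
import random
import statistics

SIGMA_DG = 1.0   # mGal, noise standard deviation for gravity data
SIGMA_T = 0.1    # m^2/s^2, noise standard deviation for potential data

def add_white_noise(values, sigma, rng):
    """Add independent zero-mean Gaussian noise of std sigma to each value."""
    return [v + rng.gauss(0.0, sigma) for v in values]

rng = random.Random(42)           # fixed seed, for reproducibility only
clean_T = [0.0] * 20000           # placeholder noise-free potential values
noisy_T = add_white_noise(clean_T, SIGMA_T, rng)

# Sample statistics of the simulated noise (the clean signal is zero here).
noise_mean = statistics.fmean(noisy_T)
noise_std = statistics.pstdev(noisy_T)
```

Because the noise is white, the corresponding noise covariance matrix is diagonal, with the variance of each data type on the diagonal.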

5 Numerical results

In this section, we present our numerical results showing the contribution of clock data in regional recovery of the geopotential from realistic data points distribution in the Massif Central and the Alps. The reconstruction of the disturbing potential is realized from the synthetic measurements \(\delta {g}\) and T, and by applying the least squares collocation (LSC) method.

5.1 Planar Least Squares Collocation

The LSC method, described in Moritz (1972, 1980), is a suitable tool in geodesy to combine heterogeneous data sets in gravity field modeling. Assuming that the measured values are linear functionals of the disturbing potential T, this approach allows us to estimate any gravity field parameter based on T from many types of observables.

Consider \(\mathbf {l} = [ \mathbf {l}_T, \mathbf {l}_{\delta {g}} ] = l_k\) a data vector composed of p data T and q data \(\delta {g}\), affected by measurement errors \(\varepsilon _k\), with \(k=\{1,2,\ldots ,p+q\}\). The estimation of the disturbing potential \(\widetilde{T}_P\) at point P from the data \(\mathbf {l}\) can be performed with the relation

\[\widetilde{T}_P = \mathbf {C}_{T_P,l} \left( \mathbf {C}_{l,l} + \omega \, \mathbf {C}_{\epsilon ,\epsilon } \right) ^{-1} \mathbf {l}\]

with \(\mathbf {C}_{l,l}\) the covariance matrix of the measurement vector \(\mathbf {l}\), \(\mathbf {C}_{\epsilon ,\epsilon }\) the covariance matrix of the noise, \(\mathbf {C}_{T_P,l}\) the cross-covariance matrix between the estimated signal \(T_P\) and the data \(\mathbf {l}\), and \(\omega \) the Tikhonov regularization factor (Neyman 1979), also called weight factor.

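In practice, the data \(\mathbf {l}\) are synthesized as described in Sects. 3 and 4. Therefore, the measurement noise is known to be a Gaussian white noise. Noise and signal (errorless part of \(l_k\)) are assumed to be uncorrelated, and the covariance matrix of the noise can be written as

\[\mathbf {C}_{\epsilon ,\epsilon } = \begin{pmatrix} \sigma _{T}^{2}\, \mathbf {I}_p &amp; \mathbf {0} \\ \mathbf {0} &amp; \sigma _{\delta {g}}^{2}\, \mathbf {I}_q \end{pmatrix}\]

with \(\mathbf {I}_n\) the identity matrix of size n.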

Fig. 8 Empirical ACF of \(\delta {g}\) and best-fitting covariance function. Values of the parameters are given in Table 1. a Massif Central, b Alps–Mediterranean

Because \(\mathbf {C}_{l,l}\) can be very ill-conditioned, the noise covariance matrix \(\mathbf {C}_{\epsilon ,\epsilon }\) plays an important role in its regularization before inversion, since positive constant values are added to the elements of the main diagonal of \(\mathbf {C}_{l,l}\). To avoid an iterative search for an optimal value of \(\omega \) in cases where the matrix \(\mathbf {C}_{l,l}\) is not positive definite, we chose to fix the weight factor \(\omega = 1\) and to compute the pseudo-inverse of the matrix by singular value decomposition (SVD). As shown in Rummel et al. (1979), these two approaches are similar.
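As an illustration of the collocation estimate with the weight factor fixed to 1 and an SVD-based pseudo-inverse, here is a minimal NumPy sketch. It evaluates the product of the cross-covariance matrix, the pseudo-inverse of the regularized data covariance matrix, and the data vector. The one-dimensional geometry, the Gaussian covariance function `gaussian_cov`, and all numerical values are assumptions made for illustration. The study itself uses the logarithmic covariance model of Forsberg (1987) and combines potential and gravity data, which requires the corresponding cross-covariances.

```python
import numpy as np

def lsc_predict(C_Pl, C_ll, C_ee, l, omega=1.0):
    """Collocation estimate C_Pl (C_ll + omega * C_ee)^+ l.

    np.linalg.pinv computes the pseudo-inverse through an SVD.
    """
    A = C_ll + omega * C_ee
    return C_Pl @ np.linalg.pinv(A) @ l

def gaussian_cov(xa, xb, c0=1.0, length=20.0):
    """Toy isotropic covariance (NOT the logarithmic model of the paper)."""
    dist = np.abs(xa[:, None] - xb[None, :])
    return c0 * np.exp(-(dist / length) ** 2)

x_obs = np.linspace(0.0, 100.0, 11)   # observation sites along a line, km
x_new = np.array([25.0, 55.0])        # prediction sites, km
signal = np.sin(x_obs / 30.0)         # synthetic potential-like observations
sigma = 1e-4                          # assumed noise standard deviation

C_ll = gaussian_cov(x_obs, x_obs)
C_ee = sigma ** 2 * np.eye(x_obs.size)   # white noise: diagonal covariance

# Prediction at new sites, and back-prediction at the observation sites.
T_hat = lsc_predict(gaussian_cov(x_new, x_obs), C_ll, C_ee, signal)
T_back = lsc_predict(C_ll, C_ll, C_ee, signal)
```

With a noise level this small, the back-prediction `T_back` stays very close to the input observations, which is a convenient sanity check before predicting on an output grid.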

Fig. 9 Accuracy of the disturbing potential T reconstruction on a regular 10-km step grid in the Massif Central, obtained by comparing the reference model and the reconstructed one. In a, the estimation is realized from the 4374 gravimetric data \(\delta g\) only and in b by adding 33 potential data T to the gravity data. a Without clock data, b With clock data

5.2 Estimation of the covariance function

Implementation of the collocation method requires computing the covariance matrices \(\mathbf {C}_{T_{P},l}\) and \(\mathbf {C}_{l,l}\). This step has been carried out using the logarithmic spatial covariance function of Forsberg (1987), see “A Covariance function.” This stationary and isotropic model is well adapted to our analysis. Indeed, it provides the auto-covariances (ACF) and cross-covariances (CCF) of the disturbing potential T and its derivatives in 3 dimensions with simple closed-form expressions.

The spatial correlations of the gravity field are analyzed with the program GPFIT (Forsberg and Tscherning 2008). The variance \(C_0\) is directly computed from the gravity data on the target area, and the parameters \(\alpha \) and \(\beta \) (see “A Covariance function”) are estimated by fitting the a priori covariance function to the empirical ACF of the gravity disturbances \(\delta {g}\).
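The notion of an empirical, isotropic auto-covariance function can be illustrated with a short sketch. This is not the GPFIT program: the function name `empirical_covariance`, the planar distances, and the simple distance binning are assumptions made for illustration.

```python
import math

def empirical_covariance(points, values, bin_width, n_bins):
    """Isotropic empirical auto-covariance of centered values.

    For each distance bin, average the products of centered values over
    all pairs of points whose separation falls in that bin. Pairs of a
    point with itself fall in the first bin, so when no two distinct
    points are closer than bin_width the first value is the variance.
    """
    mean = sum(values) / len(values)
    resid = [v - mean for v in values]
    sums = [0.0] * n_bins
    pair_counts = [0] * n_bins
    for i in range(len(points)):
        for j in range(i, len(points)):
            k = int(math.dist(points[i], points[j]) // bin_width)
            if k < n_bins:
                sums[k] += resid[i] * resid[j]
                pair_counts[k] += 1
    return [s / c if c else float("nan") for s, c in zip(sums, pair_counts)]

# Alternating values on a line: covariance flips sign with each unit step.
pts = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0), (3.0, 0.0)]
vals = [1.0, -1.0, 1.0, -1.0]
acf = empirical_covariance(pts, vals, bin_width=1.0, n_bins=3)
```

A parametric covariance model would then be fitted to such binned values. The double loop scales quadratically with the number of points, which is acceptable for a few thousand sites.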

Results of the optimal regression analysis for both regions are given in Fig. 8 and Table 1. The estimated covariance models reflect the different characteristics of the gravity signals in the two areas and the data sampling, which is less dense in high relief areas. Finally, the gravity anomaly covariances show similar correlation lengths, with a larger variance for the case of the Alps; their shapes, however, slightly differ, with a broader spectral coverage for the Alps.

Knowing the parameter values of the covariance model, we can now estimate the potential anywhere on the Earth’s surface.

5.3 Contribution of clocks

The contribution of clock data in the potential recovery is evaluated by comparing the residuals of two solutions with respect to the reference potential on a regular grid with a 10-km step. The first solution corresponds to the errors between the estimated potential model computed solely from gravity data and the potential reference model, while the second solution uses combined gravimetric and clock data. To avoid boundary effects in the estimated potential recovery, a 30-km-wide band along the grid edges has been removed from the solutions.
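The two summary statistics used to compare the solutions can be computed as in the sketch below. It assumes planar grid coordinates in km and a rectangular region, and it computes the standard deviation about the mean residual; the function name `residual_statistics` and the sample values are hypothetical.

```python
import math

def residual_statistics(estimated, reference, coords, bounds, cutoff=30.0):
    """Bias and standard deviation of (estimated - reference).

    Only grid nodes lying at least `cutoff` km inside the region bounds
    (xmin, xmax, ymin, ymax) are used, to discard boundary effects.
    """
    xmin, xmax, ymin, ymax = bounds
    res = [e - r for e, r, (x, y) in zip(estimated, reference, coords)
           if xmin + cutoff <= x <= xmax - cutoff
           and ymin + cutoff <= y <= ymax - cutoff]
    bias = sum(res) / len(res)
    std = math.sqrt(sum((v - bias) ** 2 for v in res) / len(res))
    return bias, std

coords = [(10.0, 50.0), (40.0, 50.0), (50.0, 50.0), (60.0, 50.0)]
estimated = [9.0, 1.0, 2.0, 3.0]
reference = [0.0, 0.0, 0.0, 0.0]
bias, std = residual_statistics(estimated, reference, coords,
                                (0.0, 100.0, 0.0, 100.0))
```

In this toy case the first node lies inside the 30-km edge band and is ignored, so the statistics are computed from the three interior residuals only.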

Fig. 10 Accuracy of the disturbing potential T reconstruction on a regular 10-km step grid in the Alps, obtained by comparing the reference model and the reconstructed one. In a, the estimation is realized from the 4959 gravimetric data \(\delta g\) only and in b by adding 32 potential data T to the gravity data. a Without clock data, b With clock data

For the Massif Central region, the disturbing potential is estimated with a bias \(\mu _T \approx 0.041~\hbox {m}^{2}\hbox {s}^{-2}\) (4.1 mm) and an rms \(\sigma _T \approx 0.25~\hbox {m}^{2}\hbox {s}^{-2}\) (2.5 cm) using only the 4374 gravimetric data, see Fig. 9a. When we reconstruct T by adding the 33 potential measurements to the gravimetric measurements, the bias is improved by one order of magnitude (\(\mu _T \approx -0.002~\hbox {m}^{2}\hbox {s}^{-2}\) or \(-0.2\) mm) and the standard deviation by a factor of 3 (\(\sigma _T \approx 0.07~\hbox {m}^{2}\hbox {s}^{-2}\) or 7 mm), see Fig. 9b.

For the Alps (Fig. 10), the potential is estimated with a bias \(\mu _T \approx 0.23~\hbox {m}^{2}\hbox {s}^{-2}\) (2.3 cm) and a standard deviation \(\sigma _T \approx 0.39~\hbox {m}^{2}\hbox {s}^{-2}\) (3.9 cm) using only the 4959 gravimetric data. When adding the 32 potential measurements, we note that the bias is improved by a factor of 4 (\(\mu _T \approx -0.069~\hbox {m}^{2}\hbox {s}^{-2}\) or \(-6.9\) mm) and the standard deviation by a factor of 2 (\(\sigma _T \approx 0.18~\hbox {m}^{2}\hbox {s}^{-2}\) or 1.8 cm).

It can be noticed that the residuals in both areas differ. This results from the covariance function that is less well modeled when the data survey has large spatial gaps. It should also be stressed that a trend appears in the reconstructed potential with respect to the original one when no clock data are added in both regions. This effect is discussed in Sect. 6.

6 Discussion

6.1 Effect of the number of clock measurements

Figure 11 shows the influence of the number of clock data on the potential recovery and, therefore, of their spatial distribution density. We vary the number and distribution of clock data by changing the mesh grid size d, which represents the minimum distance between clock data points (see Sect. 4). The particular cases shown in detail in Sect. 5 are included. We characterize the performance of the potential reconstruction by the standard deviation and mean of the differences between the original potential on the regular grid and the reconstructed one. When increasing the density of the clock network, the standard deviation of the differences tends toward the centimeter level for the Massif Central case, and the bias can be reduced by up to 2 orders of magnitude. Note that we have not optimized the clock locations so as to maximize the improvement in potential recovery. The chosen locations are simply based on a minimum distance and a maximum coverage of gravity data (cf. Sect. 4). An optimization of clock locations would likely lead to further improvement, but is beyond the scope of this work and will be the subject of future studies.

Moreover, the results indicate that it is not necessary to have a large number of clock data to improve the reconstruction of the potential. We can see that only a few tens of clock data, i.e., less than 1% of the gravity data coverage, are sufficient to obtain centimeter-level standard deviations and large improvements in the bias. When continuing to increase the number of clock data, the standard deviation curve seems to flatten at the cm-level.

Fig. 11 Performance of the potential reconstruction (expressed by the standard deviation and mean of the differences between the original potential on the regular grid and the reconstructed one) with respect to the number of clocks. In green, the number of clock data as a percentage of the number of \(\delta {g}\) data. a Massif Central area, b Alps area

Fig. 12 Effect of the number of gravity data combined with 38 clock data on the disturbing potential recovery in the Massif Central region. Panel a: absolute value of the mean of the residuals of T; panel b: rms of the residuals. The measurement noise is 1 mGal for \(\delta {g}\) and \(0.1~\hbox {m}^{2}\hbox {s}^{-2} \) for T. Note that for each coverage of gravity data, a new covariance model is fitted to the empirical covariance function

6.2 Effect of the number of gravity measurements

We performed numerical tests to study the influence of the density of gravity measurements on the reconstructed disturbing potential, with and without clocks. We take the case of the Massif Central region and set up simulations in which the clock coverage is fixed (either no clocks, or 38 clocks at fixed locations where we also have gravity data). We then progressively increase the spatial resolution of the gravity data, from 91 to 6889 points, and evaluate as before the quality of the potential reconstruction with and without clocks. Here, in contrast with the tests presented in the previous section, the gravity points are randomly drawn from a complete 5-km step grid. Figure 12 shows the results of these tests. Comparing the rms values of the configurations with and without clocks, we observe that the results behave similarly overall and are improved by the clocks. The interpolation error, due to a gravity data resolution that is too low with respect to the scales of the field variations, dominates when we have fewer than \(\sim \)1500 gravity measurements, leading to large rms values even with clocks. Above this number, the large-scale reconstruction errors contribute significantly to the rms of the residuals, which explains why the rms decreases further only when clocks are added. Looking at the bias between the reconstructed and original potential, we can see that it depends only weakly on the number of gravity data in the tests without clocks. This probably reflects the fact that these data are more sensitive to the smaller-scale components of the gravity potential. When we add clocks, the improvement in the bias is always substantial, which is consistent with the fact that the higher sensitivity of clocks to the longer wavelengths of the field significantly reduces the trend arising from the modeling error.
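The random thinning of a complete grid described above can be sketched as follows. The square 83 x 83 layout is an assumption chosen only because it gives 6889 nodes at a 5-km step; the function name `subsample_grid` and the intermediate sample sizes are likewise hypothetical.

```python
import numpy as np

def subsample_grid(n_side, step_km, n_keep, seed=0):
    """Draw n_keep distinct nodes at random from a regular
    n_side x n_side grid with spacing step_km (coordinates in km)."""
    axis = np.arange(n_side) * step_km
    gx, gy = np.meshgrid(axis, axis)
    grid = np.column_stack([gx.ravel(), gy.ravel()])
    rng = np.random.default_rng(seed)
    idx = rng.choice(grid.shape[0], size=n_keep, replace=False)
    return grid[np.sort(idx)]

# From the sparsest to the complete coverage quoted in the text.
for n in (91, 1500, 6889):
    pts = subsample_grid(83, 5.0, n)
    print(f"{n} gravity points, {100.0 * n / 83**2:.1f}% of the full grid")
```

Sampling without replacement guarantees that no grid node is used twice, so the densest case reproduces the complete grid.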

6.3 Covariance function consistency

In Figs. 10a and 11a, a trend appears in the residuals, but disappears when gravimetric and clock data are combined. This is due to the fact that the covariance function does not have the same spectral coverage as the data generated from the gravity field model EIGEN-6C4. Indeed, the covariance function contains low frequencies, whereas we have removed them from the synthetic data. Therefore, some low-frequency content is present in the recovered potential. While the issue could be avoided by using a parametric covariance model from which the low-frequency content can be removed in a way that is perfectly consistent with the data generation (e.g., a closed-form Tscherning–Rapp model; Tscherning and Rapp 1974; Tscherning 1976), it is not obvious that the corresponding results would allow realistic conclusions. Indeed, real surface observations, after removal of the lower frequencies using a global spherical harmonics model, may still retain some unknown low-frequency content. As a consequence, it is not straightforward to match their spectral content to that of a single covariance function, and perfect consistency can only be achieved with synthetic data. We chose to keep this mismatch, thereby investigating the value of clocks for high-resolution geopotential determination when our prior knowledge of the signal and noise components of the surface data is not perfect. More detailed studies of this issue are beyond the scope of our paper, which presents a first step toward quantifying the possible use of clock measurements in potential recovery.

6.4 Influence of the measurement noise

We have also investigated the effect of the noise levels applied to the synthetic data (see Tables 2 and 3) by using various standard deviations to simulate white measurement noise: \(\sigma _T = \{1, 0.1\}~\hbox {m}^{2}\,\hbox {s}^{-2} \) for the clock measurements and \(\sigma _{\delta {g}} = \{1, 0.1, 0.01\}\) mGal for the gravimetric measurements. These results were obtained under the same conditions as in Sect. 5, i.e., 33 (resp. 32) clock data points and 4374 (resp. 4959) gravity data points for the Massif Central (resp. Alps).
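A minimal sketch of how white noise with these standard deviations can be applied to synthetic observations is given below. The zero-valued "true" signals are placeholders, and the helper name `add_white_noise` is an assumption; only the noise levels are taken from the text.

```python
import numpy as np

MGAL = 1e-5  # 1 mGal expressed in m s^-2

def add_white_noise(values, sigma, rng):
    """Return values plus zero-mean Gaussian noise of standard deviation sigma."""
    values = np.asarray(values, dtype=float)
    return values + rng.normal(0.0, sigma, size=values.shape)

rng = np.random.default_rng(42)
dg_true = np.zeros(4374)  # placeholder gravity disturbances (m s^-2)
t_true = np.zeros(33)     # placeholder clock potentials (m^2 s^-2)

for sigma_dg_mgal in (1.0, 0.1, 0.01):
    for sigma_t in (1.0, 0.1):
        dg_obs = add_white_noise(dg_true, sigma_dg_mgal * MGAL, rng)
        t_obs = add_white_noise(t_true, sigma_t, rng)
        print(f"sigma_dg = {sigma_dg_mgal} mGal, sigma_T = {sigma_t} m2/s2: "
              f"sample std dg = {dg_obs.std() / MGAL:.3f} mGal")
```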

We can see that adding clocks improves the potential recovery (smaller standard deviation \(\sigma \) and bias \(\mu \) of the residuals) for both regions and whatever the noise of the gravimetric or clock measurements.

We observe that decreasing the noise of the gravity data by up to 2 orders of magnitude improves the standard deviation \(\sigma \) of the residuals of the recovered potential by only comparatively small amounts (less than a factor 2). This is probably due to the fact that the covariance function does not reflect the gravity field correctly in these regions, combined with a limited data coverage. Note that the low-frequency content in the covariance function (see above) is unlikely to be the main cause here, as the comparatively small reduction of \(\sigma \) is also observed when clocks are present, in spite of the fact that they remove the low-frequency trend (cf. Figs. 10b and 11b).

When adding clocks, the standard deviations are decreased by up to a factor 3.7 with low clock noise (\(0.1~\hbox {m}^{2}\hbox {s}^{-2}\) or 1 cm) and a factor 1.5 with higher clock noise (\(1~\hbox {m}^{2}\hbox {s}^{-2}\) or 10 cm). The effect is stronger in the Massif Central region than in the Alps. We attribute this again to the mismatch between the covariance function and the complex structure of the gravity field, which is larger in the Alps.

Basically, the simulations show that the solutions depend on two types of errors: the measurement error and the representation error. Indeed, if we increase the number of gravity data at high spatial resolution, we reduce the modeling error, which solves the problem of data interpolation; conversely, the modeling error becomes larger if the coverage is poor and contains gaps. But the quality of the covariance model also depends on the quality of the measurements, as illustrated by the first column of Tables 2 and 3, where we have used a high noise level for the gravity measurements, as discussed in the next section.
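Both error types enter the collocation estimate explicitly: the signal covariance carries the model of the field, and the noise covariance carries the measurement accuracy. The sketch below shows this with a one-dimensional toy problem. It uses a Gaussian covariance as a stand-in for the logarithmic model of the Appendix and a single observation type, so it is an illustration of the estimator only; all names and numbers are assumptions.

```python
import numpy as np

def gauss_cov(xa, xb, c0, length):
    """Toy signal covariance between two sets of 1D positions."""
    d = xa[:, None] - xb[None, :]
    return c0 * np.exp(-0.5 * (d / length) ** 2)

def lsc_predict(x_obs, l_obs, sigma_noise, x_new, c0, length):
    """Least squares collocation: s_hat = C_sl (C_ll + C_nn)^-1 l."""
    c_ll = gauss_cov(x_obs, x_obs, c0, length)       # signal model
    c_nn = sigma_noise ** 2 * np.eye(x_obs.size)     # white measurement noise
    c_sl = gauss_cov(x_new, x_obs, c0, length)
    return c_sl @ np.linalg.solve(c_ll + c_nn, l_obs)

x_obs = np.array([0.0, 10.0, 20.0, 30.0])  # positions in km
l_obs = np.array([1.0, -0.5, 0.3, 0.8])    # hypothetical observations
x_new = np.linspace(0.0, 30.0, 7)

for sigma in (0.0, 0.5):
    print(sigma, np.round(lsc_predict(x_obs, l_obs, sigma, x_new, 1.0, 10.0), 3))
```

With zero noise the estimate passes through the observations, and a larger noise variance pulls it toward zero; changing `length` changes the interpolation between points, which is the toy counterpart of a covariance model that does not match the field.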

Thus, optical clocks with an accuracy of only \(1~\hbox {m}^{2}\hbox {s}^{-2}\) (or 10 cm) are valuable whatever the quality of the gravity data. With an accuracy of \(0.1~\hbox {m}^{2}\hbox {s}^{-2}\) (or 1 cm), we can expect a gain of up to a factor 4 in the estimated potential with respect to simulations using no clock data. Of course, this gain depends on the number of clocks and the geometry of the clock coverage. For several tested configurations, we observed that the same gain in terms of rms can be obtained with fewer clocks (e.g., about 10 clocks), but with a slightly larger bias. Additionally, different spatial distributions of the same number of clocks can degrade or improve the quality of the determination of T.
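The correspondence between potential accuracy, geoid height and clock accuracy used throughout this section follows from two standard relations. As a rough check, taking a mean gravity \(\gamma \approx 9.81~\hbox {m}\,\hbox {s}^{-2}\) and the speed of light \(c \approx 2.998\times 10^{8}~\hbox {m}\,\hbox {s}^{-1}\) (rounded values and symbols introduced here for illustration), a potential difference \(\varDelta W = 0.1~\hbox {m}^{2}\hbox {s}^{-2}\) gives

\[ \varDelta N \approx \frac{\varDelta W}{\gamma } = \frac{0.1~\hbox {m}^{2}\hbox {s}^{-2}}{9.81~\hbox {m}\,\hbox {s}^{-2}} \approx 1.0~\hbox {cm}, \qquad \frac{\varDelta f}{f} \approx \frac{\varDelta W}{c^{2}} = \frac{0.1}{(2.998\times 10^{8})^{2}} \approx 1.1\times 10^{-18}. \]

Both quantities scale linearly with \(\varDelta W\), so \(1~\hbox {m}^{2}\hbox {s}^{-2}\) corresponds to about 10 cm and to a relative frequency shift of about \(1.1\times 10^{-17}\).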

6.5 Aliasing of the very high-resolution components

We have studied the aliasing of gravity variations at scales shorter than 10 km spatial resolution that would be present in real data but under-sampled by the finite spatial density of the surveys. Errors in the topographic corrections may reach a few mGal for a digital terrain model (DTM) sampled at a resolution of hundreds of meters (Tziavos et al. 2009), while local geological sources may lead to gravity signals of up to \(\sim 10\) mGal (Yale et al. 1998; Bondarescu et al. 2012; Castaldo et al. 2014). Furthermore, we have analyzed the Bouguer gravity anomalies from the BGI database along profiles in the Massif Central and the Alps, and found, after smoothing the profiles at 10 km resolution, high-resolution components with rms \(\sim \)1 mGal in the Massif Central, and \(\sim \)3 mGal in the Alps. An order of magnitude of the corresponding geoid variations can be derived by assuming that the gravity signals at a given spatial scale are created by a point mass at the corresponding depth. We find that a 5-km width, 5 mGal (resp. 10 mGal) gravity anomaly corresponds to a 1.3-cm (resp. 2.6 cm) geoid variation, which is indeed above the centimeter level.
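The point-mass estimate can be sketched as follows. For a point mass at depth D, the peak gravity signal is \(\delta g = GM/D^{2}\) and the potential directly above it is \(T = GM/D = \delta g\, D\), so the geoid variation is \(N = \delta g\, D/\gamma \). The choice of a depth equal to half the anomaly width (2.5 km) and the rounded \(\gamma = 9.8~\hbox {m}\,\hbox {s}^{-2}\) are assumptions of this example; they give values close to those quoted above.

```python
GAMMA = 9.8   # rounded mean gravity, m s^-2 (assumption)
MGAL = 1e-5   # 1 mGal expressed in m s^-2

def point_mass_geoid(dg_mgal, depth_m, gamma=GAMMA):
    """Geoid variation (m) above a point mass at depth_m whose peak
    gravity signal at the surface is dg_mgal: N = dg * D / gamma."""
    return dg_mgal * MGAL * depth_m / gamma

# Depth taken as half of a 5-km anomaly width (assumption).
for dg in (5.0, 10.0):
    n_cm = 100.0 * point_mass_geoid(dg, 2500.0)
    print(f"{dg:.0f} mGal at 2.5 km depth -> {n_cm:.1f} cm geoid variation")
```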

We simulate these previously neglected signals beyond 10 km resolution by increasing the noise level on the gravity data in our tests, up to 5 mGal in the Massif Central, and 10 mGal in the Alps. Note that these rms values are large with respect to the observed high-resolution variabilities in the data. As previously, numerical simulations are performed with and without adding clocks, and the results are presented in the first column of Tables 2 and 3. We can see that decreasing the accuracy of the gravimetric measurements increases the residuals as compared to the previous solutions. This is due to the fact that the signal-to-noise ratio decreases, degrading the covariance function modeling. However, our previous conclusions on the benefit of clocks remain the same, even in the presence of significant signals at the shortest spatial scales.

7 Conclusions

Optical clocks provide a tool to directly measure potential differences and determine the geopotential at high spatial resolution. We have shown that the recovery of the potential from gravity and clock data with the LSC method can improve the determination of the geopotential at high spatial resolution, beyond what is available from satellites. Compared to a solution that does not use the clock data, the standard deviation of the disturbing potential reconstruction can be improved by a factor 3, and the bias can be reduced by up to 2 orders of magnitude with only a few tens of clock data. This demonstrates the benefit of this potential new geodetic observable, which could be put into practice in the medium term, once the first transportable optical clocks and appropriate time transfer methods have been developed (see Bongs et al. 2015; Lisdat et al. 2016; Deschênes et al. 2016; Vogt et al. 2016). Since clocks are sensitive to the low frequencies of the gravity field, this method is particularly well suited to hilly and mountainous regions, where the gravity coverage is sparser, making it possible to fill in areas not covered by the classical geodetic observables (gravimetric measurements). Additionally, adding new observables helps to reduce the modeling errors, e.g., those coming from a mismatch between the covariance function used and the real gravity field.

In the same way, GPS and leveling data have been used, in combination with gravity data, to derive high-resolution gravimetric geoids (Kotsakis and Sideris 1999; Duquenne 1999; Denker et al. 2000; Duquenne et al. 2005; Nahavandchi and Soltanpour 2006). Using clocks is, however, different from performing GPS and leveling measurements. Clocks provide information of a similar nature to the gravity data, in contrast with these geometric observations. The latter are affected by different sources of errors (e.g., Duquenne 1998; Marti et al. 2001) and are quite expensive in the case of leveling campaigns. We can expect that clocks could help identify and reduce errors in the gravity and GPS/leveling data through their joint analysis for geopotential determination. Beyond the application considered in this work, clocks can also contribute to the unification of height system realizations (Shen et al. 2011, 2016; Denker 2013; Kopeikin et al. 2016; Takano et al. 2016), connecting distant points to a high-resolution reference potential network.

To our knowledge, this is the first detailed quantitative study of the improvement in field determination that can be expected from chronometric geodesy observables. It provides first estimates and paves the way for more detailed and in-depth future work in this promising new field.

To overcome some limitations in the a priori model, as discussed in the previous section, we intend in forthcoming work to investigate the imperfections of the covariance function model in more detail. Moreover, as the gravity field is in reality non-stationary in mountainous areas or near the coast, some numerical tests with non-stationary covariance functions will be conducted. Another promising source of improvement could be the optimization of the positioning of the clock data. For example, the correlation lengths and the variations of the gravity field could be used as constraints. A genetic algorithm could also be considered to solve this location problem. Finally, it will be interesting to focus on improving the quality of the potential recovery by combining other types of observables, such as leveling data and gradiometric measurements. As knowledge of the geopotential provides access to height differences, this could be a way to estimate the errors of the GNSS technique in vertical positioning or to contribute to the unification of regional height systems.

References

Bjerhammar A (1985) On a relativistic geodesy. Bull Géod 59(3):207–220. doi:10.1007/BF02520327

Bondarescu R, Bondarescu M, Hetényi G, Boschi L, Jetzer P, Balakrishna J (2012) Geophysical applicability of atomic clocks: direct continental geoid mapping. Geophys J Int 191(1):78–82. doi:10.1111/j.1365-246X.2012.05636.x

Bondarescu M, Bondarescu R, Jetzer P, Lundgren A (2015a) The potential of continuous, local atomic clock measurements for earthquake prediction and volcanology. In: European Physical Journal Web of Conferences, vol 95, p 4009. doi:10.1051/epjconf/20159504009. arXiv:1506.02853

Bondarescu R, Schärer A, Jetzer P, Angélil R, Saha P, Lundgren A (2015b) Testing general relativity and alternative theories of gravity with space-based atomic clocks and atom interferometers. In: European Physical Journal Web of Conferences, vol 95, p 2002. doi:10.1051/epjconf/20159502002. arXiv:1412.2045

Bondarescu R, Schärer A, Lundgren A, Hetényi G, Houlié N, Jetzer P, Bondarescu M (2015c) Ground-based optical atomic clocks as a tool to monitor vertical surface motion. Geophys J Int 202:1770–1774. doi:10.1093/gji/ggv246. arXiv:1506.02457

Bongs K, Singh Y, Smith L, He W, Kock O, Świerad D, Hughes J, Schiller S, Alighanbari S, Origlia S, Vogt S, Sterr U, Lisdat C, Targat RL, Lodewyck J, Holleville D, Venon B, Bize S, Barwood GP, Gill P, Hill IR, Ovchinnikov YB, Poli N, Tino GM, Stuhler J, Kaenders W (2015) Development of a strontium optical lattice clock for the SOC mission on the ISS. C R Phys 16(5):553–564. doi:10.1016/j.crhy.2015.03.009

Bruinsma SL, Förste C, Abrikosov O, Lemoine JM, Marty JC, Mulet S, Rio MH, Bonvalot S (2014) ESA’s satellite-only gravity field model via the direct approach based on all GOCE data. Geophys Res Lett 41(21):7508–7514. doi:10.1002/2014GL062045

Castaldo R, Fedi M, Florio G (2014) Multiscale estimation of excess mass from gravity data. Geophys J Int p ggu082

Chou CW, Hume DB, Rosenband T, Wineland DJ (2010) Optical clocks and relativity. Science 329(5999):1630–1633. doi:10.1126/science.1192720

Claessens SJ, Hirt C (2013) Ellipsoidal topographic potential: new solutions for spectral forward gravity modeling of topography with respect to a reference ellipsoid. J Geophys Res Solid Earth 118(11):5991–6002. doi:10.1002/2013JB010457

Delva P, Lodewyck J (2013) Atomic clocks: new prospects in metrology and geodesy. Acta Futura 7:67–78. arXiv:1308.6766

Denker H (2013) Regional gravity field modeling: theory and practical results. Springer, Berlin. doi:10.1007/978-3-642-28000-9_5

Denker H, Torge W, Wenzel G, Ihde J, Schirmer U (2000) Investigation of different methods for the combination of gravity and GPS/levelling data. In: Geodesy Beyond 2000, Springer, Berlin, pp 137–142

Deschênes JD, Sinclair LC, Giorgetta FR, Swann WC, Baumann E, Bergeron H, Cermak M, Coddington I, Newbury NR (2016) Synchronization of distant optical clocks at the femtosecond level. Phys Rev X 6(2):021016. doi:10.1103/PhysRevX.6.021016

Douch K, Panet I, Pajot-Métivier G, Christophe B, Foulon B, Lequentrec-Lalancette MF, Diament M (2015) Error analysis of a new planar electrostatic gravity gradiometer for airborne surveys. J Geod 89:1217–1231. doi:10.1007/s00190-015-0847-8

Duquenne H (1998) QGF98, a new solution for the quasigeoid in France. In: Proceedings of the Second Continental Workshop on the Geoid in Europe. Reports of the Finnish Geodetic Institute, vol 98, pp 251–255

Duquenne H (1999) Comparison and combination of a gravimetric quasigeoid with a levelled GPS data set by statistical analysis. Phys Chem Earth Part A Solid Earth Geod 24(1):79–83. doi:10.1016/S1464-1895(98)00014-3

Duquenne H, Everaerts M, Lambot P (2005) Merging a gravimetric model of the geoid with GPS/levelling data : an example in Belgium. Springer, Berlin. doi:10.1007/3-540-26932-0_23

Forsberg R (1987) A new covariance model for inertial gravimetry and gradiometry. J Geophys Res 92:1305–1310. doi:10.1029/JB092iB02p01305

Forsberg R, Tscherning CC (2008) An overview manual for the GRAVSOFT. University of Copenhagen, Denmark

Förste C, Bruinsma S, Abrikosov O, Flechtner F, Marty JC, Lemoine JM, Dahle C, Neumayer H, Barthelmes F, König R, Biancale R (2014) EIGEN-6C4 - The latest combined global gravity field model including GOCE data up to degree and order 1949 of GFZ Potsdam and GRGS Toulouse. In: EGU General Assembly Conference Abstracts, vol 16, p 3707

Gruber T (2009) Evaluation of the EGM2008 gravity field by means of GPS-levelling and sea surface topography solutions. External quality evaluation reports of EGM08, Newton’s Bulletin 4, Bureau Gravimétrique International (BGI) / International Geoid Service (IGeS)

Hinkley N, Sherman JA, Phillips NB, Schioppo M, Lemke ND, Beloy K, Pizzocaro M, Oates CW, Ludlow AD (2013) An atomic clock with \(10^{-18}\) instability. Science 341(6151):1215–1218. doi:10.1126/science.1240420

Holschneider M, Chambodut A, Mandea M (2003) From global to regional analysis of the magnetic field on the sphere using wavelet frames. Phys Earth Planet Inter 135(2–3):107–124. doi:10.1016/S0031-9201(02)00210-8

Johnson B (2009) NOAA project to measure gravity aims to improve coastal monitoring. Science 325(5939):378–378. doi:10.1126/science.325_378

Knudsen P (1988) Determination of local empirical covariance functions from residual terrain reduced altimeter data. Tech. rep, DTIC Document

Knudsen P (1987) Estimation and modelling of the local empirical covariance function using gravity and satellite altimeter data. Bull Géod 61(2):145–160. doi:10.1007/BF02521264

Kopeikin SM, Kanushin VF, Karpik AP, Tolstikov AS, Gienko EG, Goldobin DN, Kosarev NS, Ganagina IG, Mazurova EM, Karaush AA, Hanikova EA (2016) Chronometric measurement of orthometric height differences by means of atomic clocks. Gravit Cosmol 22(3):234–244. doi:10.1134/S0202289316030099

Kotsakis C, Sideris MG (1999) On the adjustment of combined GPS/levelling/geoid networks. J Geod 73(8):412–421

Landau L, Lifshitz EM (1975) The Classical Theory of Fields. No. vol. 2 in Course of theoretical physics, Butterworth-Heinemann

Lisdat C, Grosche G, Quintin N, Shi C, Raupach SMF, Grebing C, Nicolodi D, Stefani F, Al-Masoudi A, Dörscher S, Häfner S, Robyr JL, Chiodo N, Bilicki S, Bookjans E, Koczwara A, Koke S, Kuhl A, Wiotte F, Meynadier F, Camisard E, Abgrall M, Lours M, Legero T, Schnatz H, Sterr U, Denker H, Chardonnet C, Le Coq Y, Santarelli G, Amy-Klein A, Le Targat R, Lodewyck J, Lopez O, Pottie PE (2016) A clock network for geodesy and fundamental science. Nat Commun 7:12443. doi:10.1038/ncomms12443

Mai E (2013) Time, atomic clocks, and relativistic geodesy. Deutsche Geodätische Kommission bei der Bayerischen Akademie der Wissenschaften, Reihe A, Theoretische Geodäsie, Beck

Marti U (2015) Gravity, Geoid and Height Systems: Proceedings of the IAG Symposium GGHS2012, October 9-12, 2012, Venice, Italy. International Association of Geodesy Symposia, Springer, Berlin https://books.google.fr/books?id=2f8qBgAAQBAJ

Marti U, Schlatter A, Brockmann E (2001) Combining levelling with GPS measurements and geoid information

Moritz H (1972) Advanced least-squares methods. Department of Geodetic Science, Ohio State University

Moritz H (1980) Advanced physical geodesy

Moritz H (1984) Geodetic reference system 1980. Bulletin géodésique 58(3):388–398. doi:10.1007/BF02519014

Nahavandchi H, Soltanpour A (2006) Improved determination of heights using a conversion surface by combining gravimetric quasi-geoid/geoid and GPS-levelling height differences. Studia Geophysica et Geodaetica 50(2):165–180. doi:10.1007/s11200-006-0010-3

Neyman YM (1979) The variational method of physical geodesy. Bulletin géodésique. Nedra Publishers, Moscow

Nicholson TL, Campbell SL, Hutson RB, Marti GE, Bloom BJ, McNally RL, Zhang W, Barrett MD, Safronova MS, Strouse GF, Tew WL, Ye J (2015) Systematic evaluation of an atomic clock at \(2 {\times } 10^{-18}\) total uncertainty. Nat Commun 6:6896. doi:10.1038/ncomms7896. arXiv:1412.8261

Origlia S, Schiller S, Pramod MS, Smith L, Singh Y, He W, Viswam S, Świerad D, Hughes J, Bongs K, Sterr U, Lisdat C, Vogt S, Bize S, Lodewyck J, Le Targat R, Holleville D, Venon B, Gill P, Barwood G, Hill IR, Ovchinnikov Y, Kulosa A, Ertmer W, Rasel EM, Stuhler J, Kaenders W, SOC2 consortium contributors (2016) Development of a strontium optical lattice clock for the SOC mission on the ISS. arXiv:1603.06062

Pail R, Bruinsma S, Migliaccio F, Förste C, Goiginger H, Schuh WD, Höck E, Reguzzoni M, Brockmann JM, Abrikosov O, Veicherts M, Fecher T, Mayrhofer R, Krasbutter I, Sansò F, Tscherning CC (2011) First GOCE gravity field models derived by three different approaches. J Geod 85(11):819–843. doi:10.1007/s00190-011-0467-x

Pavlis NK, Weiss MA (2003) The relativistic redshift with \(3 \times 10^{-17}\) uncertainty at NIST, Boulder, Colorado, USA. Metrologia 40(2):66

Petit G, Wolf P, Delva P (2014) Atomic time, clocks, and clock comparisons in relativistic spacetime: a review. In: Kopeikin SM (ed) Frontiers in Relativistic Celestial Mechanics, vol 2: Applications and Experiments. De Gruyter Studies in Mathematical Physics, De Gruyter, pp 249–279

Rummel R (2002) Global Unification of Height Systems and GOCE. Springer, Berlin. pp 13–20 doi:10.1007/978-3-662-04827-6_3

Rummel R, Schwarz KP, Gerstl M (1979) Least squares collocation and regularization. Bull Geod 53:343–361. doi:10.1007/BF02522276

Rummel R (2012) Height unification using GOCE. J Geod Sci 2:355–362. doi:10.2478/v10156-011-0047-2

Rummel R, Teunissen P (1988) Height datum definition, height datum connection and the role of the geodetic boundary value problem. Bull Géod 62(4):477–498. doi:10.1007/BF02520239

Sansò F, Sideris M (2013) Geoid Determination: Theory and Methods. Lecture Notes in Earth System Sciences, Springer, Berlin

Sansò F, Venuti G (2002) The height datum/geodetic datum problem. Geophys J Int 149(3):768–775. doi:10.1093/gji/149.3.768

Shen WB (2013) Orthometric height determination based upon optical clocks and fiber frequency transfer technique. In: 2013 Saudi International Electronics, Communications and Photonics Conference, pp 1–4, doi:10.1109/SIECPC.2013.6550987

Shen W, Ning J, Liu J, Li J, Chao D et al (2011) Determination of the geopotential and orthometric height based on frequency shift equation. Nat Sci 3(05):388

Shen Z, Shen WB, Zhang S (2016) Formulation of geopotential difference determination using optical-atomic clocks onboard satellites and on ground based on Doppler cancellation system. Geophys J Int. doi:10.1093/gji/ggw198

Shen Z, Shen W (2015) Geopotential difference determination using optic-atomic clocks via coaxial cable time transfer technique and a synthetic test. Geodesy and Geodynamics 6(5):344–350. doi:10.1016/j.geog.2015.05.012, http://www.sciencedirect.com/science/article/pii/S1674984715000816

Smith DA (1998) There is no such thing as “the” EGM96 geoid: subtle points on the use of a global geopotential model. IGeS Bull 8:17–28

Smith WHF, Sandwell DT (1997) Global sea floor topography from satellite altimetry and ship depth soundings. Science 277(5334):1956–1962. doi:10.1126/science.277.5334.1956

Takano T, Takamoto M, Ushijima I, Ohmae N, Akatsuka T, Yamaguchi A, Kuroishi Y, Munekane H, Miyahara B, Katori H (2016) Geopotential measurements with synchronously linked optical lattice clocks. Nat Photon 10(10):662–666. doi:10.1038/nphoton.2016.159

Tscherning CC (1976) Covariance expressions for second and lower order derivatives of the anomalous potential. Tech. Rep. 225, Ohio State University. Department of Geodetic Science

Tscherning CC, Rapp RH (1974) Closed covariance expressions for gravity anomalies, geoid undulations, and deflections of the vertical implied by anomaly degree variance models. Tech. Rep. 208, Ohio State University. Department of Geodetic Science

Tziavos IN, Vergos GS, Grigoriadis VN (2009) Investigation of topographic reductions and aliasing effects on gravity and the geoid over Greece based on various digital terrain models. Surv Geophys 31(1):23. doi:10.1007/s10712-009-9085-z

Vermeer M (1983) Chronometric Levelling. Reports of the Finnish Geodetic Institute, Geodeettinen Laitos, Geodetiska Institutet

Vogt S, Häfner S, Grotti J, Koller S, Al-Masoudi A, Sterr U, Lisdat C (2016) A transportable optical lattice clock. Journal of Physics: Conference Series 723(1):012020. http://stacks.iop.org/1742-6596/723/i=1/a=012020

Yale MM, Sandwell DT, Herring AT (1998) What are the limitations of satellite altimetry? The Lead Edge 17(1):73–76

Zhang L, Li F, Chen W, Zhang C (2008) Height datum unification between Shenzhen and Hong Kong using the solution of the linearized fixed-gravimetric boundary value problem. J Geod 83(5):411. doi:10.1007/s00190-008-0234-9

Acknowledgements

We thank René Forsberg for providing us with the FORTRAN code of the logarithmic covariance function. We gratefully acknowledge financial support from Labex FIRST-TF, ERC AdOC (Grant No. 617553) and EMRP ITOC (EMRP is jointly funded by the EMRP participating countries within EURAMET and the European Union). We thank Olivier Jamet and Matthias Holschneider for discussions about the collocation method. We thank Gwendoline Pajot-Métivier for discussions on high-resolution gravity signals. We thank the anonymous reviewers and the associate editor for their useful comments on this manuscript.

Appendix: Covariance function

Let us consider two points P and Q with the Cartesian coordinates \((x_P,y_P,z_P)\) and \((x_Q,y_Q,z_Q)\), respectively. To compute the ACF and CCF of the disturbing potential T and its derivatives, Forsberg (1987) proposed a planar attenuated logarithm covariance model with upward continuation that can be expressed in the generic form

with

This model is characterized by three parameters: \(C_0\), the variance of the gravity disturbance \(\delta {g}\), and two scale factors acting as high- and low-frequency attenuators, \(\alpha \), the shallow depth parameter, and \(\beta \), the compensating depth, respectively. The function \(K=K(x,y,z_i)\) is a logarithmic function modeling the covariances between the gravity field quantities. For example, by putting \(r_i = \sqrt{d^2 + \alpha _i^2}\) and \(d = \sqrt{x^2 + y^2}\), the ACF of \(\delta {g}\) and T can be evaluated, respectively, with

and the CCF between T and \(\delta {g}\) with

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Lion, G., Panet, I., Wolf, P. et al. Determination of a high spatial resolution geopotential model using atomic clock comparisons. J Geod 91, 597–611 (2017). https://doi.org/10.1007/s00190-016-0986-6

DOI: https://doi.org/10.1007/s00190-016-0986-6