Abstract

As robotic systems transition from traditional setups to collaborative work spaces, the prevalence of physical Human Robot Interaction has risen in both industrial and domestic environments. A popular representation for robot behavior is movement primitives which learn, imitate, and generalize from expert demonstrations. While there are existing works in context-aware movement primitives, they are usually limited to contact-free human robot interactions. This paper presents physical Human Robot Interaction Primitives (pHRIP), which utilize only the interaction forces between the human user and robot to estimate user intent and generate the appropriate robot response during physical human robot interactions. The efficacy of pHRIP is evaluated through multiple experiments based on target-directed reaching and obstacle avoidance tasks using a real seven degree of freedom robot arm. The results are validated against Interaction Primitives which use observations of robotic trajectories, with discussions of future pHRI applications utilizing pHRIP.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Traditional robots rely on their accuracy, reliability, and strength to perform tasks. However, the variety of tasks performed with a single robot is generally constrained due to financial and time costs to adapt or replace its current program. The same costs exist for control methods that achieve safe Human Robot Interaction (HRI) using industrial robots with fine parameter tuning and heavily pre-determined environments (Djuric et al. 2016).

Recent advances in collaborative robots have paved the way for current research in collaborative interactions between humans and robots due to their inherent safety and improvements in productivity (Bloss 2016). The increasing adoption of collaborative robots has also boosted the prevalence of HRI applications in both industrial and domestic environments. Physical Human–Robot Interactions (pHRI) is a sub-field of research which includes interactions with direct physical contact or an exchange of forces with a robotic system. Applications with pHRI aim to augment the dexterity of human operators with the precision and repeatability of robotic systems to achieve various tasks, with common applications in haptic feedback (Carmichael et al. 2017), disturbance categorization (Haddadin et al. 2017), and system stabilization (Ferraguti et al. 2019).

To improve the robustness of robots in collaborative work spaces, the ability to learn, generalize, and adapt to different tasks is crucial. Two umbrella terms for frameworks which employ expert-learner techniques to generalize robotic motions to different tasks are Programming by Demonstration (PbD) (Billard et al. 2008) and Learning from Demonstration (LfD) (Argall et al. 2009). Task-oriented measures are regularly used to tackle variability exhibited by human users during pHRI applications (Carmichael et al. 2019; Lai et al. 2018; Hamaya et al. 2017). However, for non-expert users, these measures may degrade the interactions since the user may have no prior knowledge on the dynamics of the system.

Intuitively, humans exert forces to indicate their intention during physical interactions in everyday situations such as target-reaching tasks. Thus, the integration of interaction forces in Movement Primitives (MP) may improve the estimation of user intent when generating the appropriate robotic response. More importantly, a correct estimate of user intent in pHRI applications contribute towards the efficacy of the robotic response. Furthermore, probabilistic models of the interaction artifacts can capture system noise and user variability simultaneously.

Prior works for HRI, such as Interaction Primitives (IP) (Ben Amor et al. 2014), have utilized observations of the human hand to infer intent and generate an appropriate robotic trajectory in response. However, in a coupled human–robot dyad, the position of the hand and the robot endpoint are identical, precluding the use of this method. Thus, there is a need for a method to integrate interaction forces during pHRI.

This article introduces physical Human Robot Interaction Primitives (pHRIP), extending upon IP, to integrate dense interaction forces observed in a coupled human–robot dyad during interactions. The parameters for robotic motion are inferred without the robot endpoint position, conditioning only upon partial observations of the interaction forces, representing user intention. The proposed method is validated through: (a) a user-directed reaching experiment; and (b) a planar and a Cartesian target-reaching task.

This article is organized as follows: Sect. 2 consists of a review of related works, followed by the methodology for pHRIP in Sect. 3. Section 4 outlines the system setup for all validations. The user-directed reaching experiment is outlined in Sect. 5, with the planar and Cartesian target-reaching task presented in Sects. 6 and 7. Section 8 discusses the limitations of the proposed method, the results, and future work, before concluding the article in Sect. 9.

2 Review of related works

Movement Primitives (MP) are a family of elementary operations to represent robotic trajectories for motion planning. Inspired by human locomotion (Hogan and Sternad 2013), MPs are compact representations of complex locomotion in multi-degree of freedom (DoF) systems, forming the basis for various robotic capabilities: e.g. learning, imitation, and generalization of trajectories.

One popular MP used in the robotics community is Dynamic Movement Primitives (DMP), which capture acceleration-based dynamics using low dimensional basis functions. DMP leverages expert demonstrations to model the motion using a few parameters, with favorable properties such as trajectory dilation, rotational invariance, and temporal scaling (Ijspeert 2008).

Adaptation of the original formulation to overcome limitations (Hoffmann et al. 2009) have made DMPs suitable for many domestic and industrial applications: e.g. obstacle avoidance (Ginesi et al. 2019), industrial assembly (Karlsson et al. 2017), surface wiping (Gams et al. 2016), collaborative object transfer (Sidiropoulos et al. 2019; Prada et al. 2013), and co-operative sawing (Peternel et al. 2018). While there are adaptations or new formulations of MP such as Kernelized Movement Primitives (Huang et al. 2019b) (for further compression of trajectory representation), Interactive DMPs (Kulvicius et al. 2013) (for reaching equilibrium trajectories based on interaction forces), and Coupling Movement Primitives (Gams et al. 2014) (for achieving equal interaction forces), traditional DMPs remain popular due to their versatility and track record.

Given that there is inherent variability in human locomotion (Sternad 2018), applications for HRI and pHRI require some form of generalization which capture system noise and covariances across the different DoFs. This is especially crucial in multi-DoF systems for both the human and the robot, leading to applications with probabilistic models over DMP parameters, e.g. generating movement in a musculoskeletal system (Rückert and d’Avella 2013), table tennis swinging (Matsubara et al. 2010), and stiffness sensitive tasks (Denisa et al. 2016; Nemec et al. 2018).

Due to the robustness of probabilistic models, there is a significant amount of interest in probabilistic approaches to LfD and PbD, allowing for context-driven responses based on MPs (Cui et al. 2016; Paraschos et al. 2018; Maeda et al. 2017b). These probabilistic models rely on a Bayesian context to perform LfD and PbD (Fig. 1). Probabilistic modeling also paved the way for multi-model and multi-modal applications with a single model such as dust sweeping (Pervez and Lee 2018), teleoperation (Pervez et al. 2019; Yang et al. 2018), domestic feeding (Calinon et al. 2010), industrial assembly (Kyrarini et al. 2019), and ball throwing (Zhou et al. 2020).

A probabilistic approach to trajectory generation and user intent estimation are popular due to probabilistic properties of the model, with notable applications for HRI utilizing Interaction Primitives (IP) and its extensions: e.g. human robot gestures (Ben Amor et al. 2014), collaborative object covering (Cui et al. 2019), and hand shaking (Campbell et al. 2019). The probabilistic model embeds the user intent in their outputs, making it suitable to utilize probabilistic operators to adapt the model for goal and trajectory adaptation (Bajcsy et al. 2017; Koert et al. 2019), sequential intent estimation (Matsubara et al. 2015), and stiffness adaptation (Rozo et al. 2016).

While MP-based frameworks such as Coupled Cooperative Primitives (Huang et al. 2019a) have been applied to exoskeletons to minimize interaction forces between the user and exoskeleton, tightly coupled systems remain a challenge for MPs in pHRI applications. Rather than the robot system initiating the task process, Compliant Parametric Dynamic Movement Primitives (Ugur and Girgin 2020) actively incorporate user input by adapting stiffness based on the variance of the trajectory at that phase. However, such reliance on the human user to take over control might not be suitable for certain applications such as post-injury assistive robotics.

Applications of MPs in pHRI have generally relied on the modification of DMP formulations to embed interaction forces when motion planning for the robot arm. Despite the appeal for bespoke MP formulations, probabilistic approaches for MPs (without any modifications) provide robust solutions for a larger range of pHRI applications. Probabilistic operators also provide flexibility for concatenation and pruning of different objectives and observations. In the context of this work, the integration of interaction forces leverages probabilistic operators when building the model.

3 Methodology

3.1 Dynamic movement primitives

Dynamic Movement Primitives (DMP) generate globally stable trajectories by treating the trajectory as a spring-damper system with an attractor system to encode non-linear dynamics (Schaal et al. 2005). The attractor landscape is represented by a linear system of basis functions across time or phase.

While the original DMP formulation produced stable trajectories, a modified formulation overcame several undesired artifacts during edge case reproductions such as trajectory “mirroring” and accelerations that are beyond the capabilities of existing robots (Pastor et al. 2009). Each degree of freedom in the system is modeled as

The phase of the trajectory, s, is modeled as a first-order system with parameter \(\alpha \) for temporal scaling \(\tau {\dot{s}} = -\alpha s\). The attractor landscape, represented by the forcing function, f, is encoded using the weighted sum of Gaussian basis functions with centers, \({\varvec{c}}\), and is spread evenly across the trajectory’s temporal phase,

producing a \(M\times 1\) vector of weights, \({{\varvec{\omega }}}\), for each DoF of the trajectory. While the Gaussian kernel has been used in DMPs, any smooth functions or mollifiers could alternatively be used Ginesi et al. (2021).

Representing the forcing function (from T observations) as a linear system allows the weights to be obtained using linear least squares regression

3.2 Interaction primitives for HRI

For HRI tasks with non-physical interactions, Interaction Primitives (IP) (Ben Amor et al. 2014) used demonstrations to: (1) build a parameter distribution, \(p({\varvec{\theta }})\); and (2) use partial observations of phase-aligned trajectories to obtain the predictive distribution over DMP parameters.

IP models a distribution, \(p({\varvec{\theta }})\), over the parameters of a DMP, \(\omega \), along with the goal of the trajectory, g. Given multiple demonstrations, an estimated Gaussian distribution over the parameters, \({\varvec{\theta }}\), is generated. The predictive distribution is then obtained by using partial observations, \(\tau _o\) of a trajectory to obtain the conditional distribution, \(p({\varvec{\theta }}\vert \tau _o)\), by applying Bayes rule.

The likelihood, \(p(\tau _o\vert {\varvec{\theta }})\), is obtained by computing the forcing function of the observed trajectory, \(\tau _o\), and the weighting matrix, \(\varOmega \). The conditional distribution is then obtained by applying Gaussian conditioning on the joint distribution, \(p(\tau _o,{\varvec{\theta }})\), and the IP parameters generate the robotic response using DMP.

IP couples multiple DoF systems by extending the parameter set, \({\varvec{\theta }}\), to incorporate the weights and goals for each DoF, enabling multi-agent interactions. We note that IP requires the observations of the partial trajectories of either agent to predict the most likely set of parameters.

For de-coupled HRI systems, this spatial and temporal correlation allows for seamless interactions. However, in a coupled human–robot system, the endpoint of the robot and human are identical since they are located the the point of contact. A naïve approach to integrate the interaction forces in IP is to apply DMPs to the observed forces.

However, since DMPs are acceleration-based dynamical systems, the resultant parameters from interaction forces would be very noisy. Furthermore, the need for a goal in IP makes the use of interaction forces counter-intuitive. Thus, this limits the application of IP to physical interactions during pHRI.

3.3 Physical human robot interaction primitives

Physical Human Robot Interaction Primitives (pHRIP) aims to predict user intent by using interaction artifacts available in coupled human–robot dyads during pHRI. pHRIP extend upon IP to: (1) integrate observed interaction forces during demonstrations into the parameter distribution, \(p({\varvec{\theta }})\); and (2) use partial observations of phase-aligned interactions, \(\chi \), to obtain the predictive distribution over the pHRIP parameter set, \(p({\varvec{\theta }}\vert \chi )\), and generate a robotic response matching the user’s intent.

3.3.1 Building the pHRIP parameter distribution

As discussed in Sect. 3.1, a DMP encodes a single trajectory using a set of weights, \(\omega \), represented by M Gaussian basis functions equally spaced across the phase of the trajectory. In each trajectory, the observed interaction forces (T samples) are re-sampled into z samples, giving a \((z\times 1)\) vector, F. This phase-aligns the interaction forces to the trajectory.

For a n-DoF robotic system with d-DoF of observed interaction forces, the pHRIP parameters for a single trajectory is a \(((nM + dz) \times 1)\) vector:

We note that, in contrast to IP, the goal for each demonstrated trajectory is not included in \({{\varvec{\theta }}}\). Thus, given K demonstrated trajectories, the distribution over the pHRIP parameters, \(p({\varvec{\theta }})\), follows as:

3.3.2 Phase estimation for partial observations

Once the pHRIP distribution is built, partial observations are then used to predict the user’s intent. The observed interaction forces, \(F^*\), are re-sampled to the same frequency as the robotic system.

To understand the context of the partial observations, the current phase of the interaction is needed. This is performed using a multi-dimensional Dynamic Time Warping (DTW) algorithm (Shokoohi-Yekta et al. 2017), comparing a \((r\times n)\) reference trajectory, R, against the \((v\times n)\) sub-sequence observed, \(\nu \).

During the DTW process, the matrix used to compare R and \(\nu \) provides a \((v\times r)\) warping map, W, indicating the distance from one observation from R to another in \(\nu \). Using this warping map, a \((v\times 1)\) index vector \(\rho \) is obtained which outlines the phase alignment for each observation in the observed sub-sequence \(\nu \):

where col(.) is a function to obtain the column number of the vector/matrix.

3.3.3 pHRIP parameter set inference

To infer a set of pHRIP parameters which represents the user’s intent, the predictive distribution is obtained using partial observations, \(\chi \), between the user and the robot during pHRI. Applying Bayes rule, we obtain: \(p({{\varvec{\theta }}}\vert \chi ) \propto p(\chi \vert {{\varvec{\theta }}})p({{\varvec{\theta }}})\).

The pHRIP distribution consists of the interaction forces and robotic trajectory encoded using a \((dz\times 1)\) vector representing the phase alignment of the interaction forces, and the \((nM\times 1)\) Gaussian basis function weights from DMPs. Thus, the likelihood distribution, \(p(\chi \vert {{\varvec{\theta }}})\), is modeled using a Gaussian distribution:

where \(\sigma ^2\) is the observation variance, and \(\varOmega \) is a \((v(d+n)\times (dz+nM))\) weight matrix for the observations using only the interaction forces:

The weight matrix for each DoF of the phase-aligned interaction forces, \(\lambda \), is a \((v\times z)\) matrix, starting off as a zero matrix and filled based on the index vector \(\rho \). For each row of \(\lambda \), corresponding with each sample v of \(\nu \), the value of the column numbered closest to integer-rounded \(z\rho _v\) is filled:

If the trajectory of the robot is available from partial observations, pHRIP can utilize both interaction forces along with robotic trajectory, where the weight matrix, \(\varOmega _{I+T}\), is defined as:

where \({\varvec{\phi }}\) is a \((\nu \times M)\) matrix representing the Gaussian basis functions for each dimension of the robot.

Given the likelihood, \(p(\chi \vert {{\varvec{\theta }}})\), is modeled with the robotic trajectory, the unavailable trajectories are set to 0, giving \(\chi = [{F_1^*}^T,\ldots ,{F_d^*}^T, {{\varvec{0}}}_{(1\times vn)}]^T\) where \(F^*\) are the interaction forces observed. The joint distribution is then defined as:

where \(A = \sigma ^2I + \varOmega \varSigma _{{\varvec{\theta }}}\varOmega ^T\), and the mean and variance of conditional distribution, \(p({{\varvec{\theta }}}\vert \chi )\) is derived as

A new set of pHRIP parameters, \(\theta _n\), is then sampled from this conditional distribution. The robot then generates a new trajectory using the subset DMP parameters and the estimated phase of the final observation, \(\rho _v\), continuing on from its last position. The endpoint of the reference trajectory is used as the goal position of the new trajectory.

4 System setup

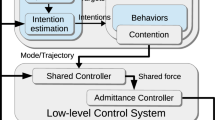

A coupled human–robot dyad is used to validate pHRIP since this is an arrangement commonly seen in pHRI applications. The robotic system in the coupled dyad, shown in Fig. 2, consists of a 7 DoF Sawyer robotic manipulator (HAHN Robotics, Germany) with a 6-axis Axia80 force-torque sensor (ATI Industrial Automation, USA) affixed between the endpoint and a bespoke handle. Robotic data from the Sawyer robotic manipulator are recorded at 100 Hz while wrench data from the force-torque sensor is recorded at 125 Hz.

The robotic arm utilizes Rethink Robotics’ proprietary software, Intera SDK, and an endpoint velocity threshold of 2.5 cm s\(^{-1}\) is used to determine the start and end of the demonstration. In all trials, generated trajectories were sent to the robot’s native motion controller interface to be performed in an open-loop fashion. While it is possible to integrate low-level robotic feedback controllers with pHRIP, this article focuses on the integration of interaction forces for high-level trajectory generation which reflects the user’s intention.

5 User-directed reaching experiment

An experiment based on user-directed reaching movements was conducted to validate pHRIP across different users. Four participants (3 male and 1 female) took part in the experiment. Additional analysis was conducted to compare the results when applied to Cartesian space (pHRIP) and joint state space (pHRIP-q). During user interactions, the robotic arm was set up to enter an orientation-locked gravity compensation mode.

Participants were instructed to move the handle from a defined starting position to one of two target surfaces (Fig. 2) in a “natural manner”. Since DTW is used to phase-align the observed interaction forces, a reference trajectory is recorded for each trial to facilitate this function and obtain the end pose. No other part of the reference trajectory is utilized once the phase estimate of the interaction forces are obtained. Each trial consists of a reference and a test trajectory, with participants instructed to perform both trajectories “consistently”

A total of 90 training demonstrations were recorded (45 for each target surface) using kinesthetic teaching via the bespoke handle for participants. During the test trajectory, participants were instructed to release the handle after 0.5–1.0 s while the new trajectory is generated and performed by the robot arm.

Partial observations for IP consist of Cartesian trajectory while pHRIP utilized Cartesian interaction forces only. Partial observations of both interaction forces and trajectory were used to perform further comparisons. Prior to the start of the experiment, participants were given a 5-min window to interact with the robotic manipulator to familiarize themselves with the setup.

The conditional weights obtained conditioned upon partial observations of: a trajectory; and b interaction forces. These correlate with the generated trajectories seen in Fig. 3b, c, respectively. c Shows the variance in the training weights, noting the large variance towards the end of the trajectory contributing to conflicting IP conditional weights

5.1 Results

A total of 28 trials were conducted in the experiment. For each trial, participants demonstrated a reference trajectory as shown in Fig. 3a. Partial observations from the test trajectory were then used to generate new robotic trajectories. Trajectories were generated post hoc using IP and pHRIP. Partial observations of trajectories were used for IP and the resultant output shown in Fig. 3b. For pHRIP, partial observations of interaction forces were used with the resultant outputs shown in Fig. 3c.

Visual inspection of the trajectories between IP and pHRIP indicates the advantage of pHRIP over IP despite no information on the robot trajectory. Using only interaction forces, the trajectories generated from pHRIP followed the shape of those in the reference trajectories. This is evident in the DTW scores in Table 1 of the generated trajectories indicating a better match in shape to the reference trajectory.

The trajectories generated by IP are quite ill-formed, with completely erroneous trajectory shapes despite empirical evidence on its efficacy (Ben Amor et al. 2014). We posit the variance in the training demonstrations towards the end of the trajectory contributed towards the sensitivity of the distribution. Figure 4c highlights the large variances of the basis function weights in all three axis at different sections of the trajectory. The effect of this variance can be seen in the generated conditional distribution shown in Fig. 4a, noting that weights which are completely erroneous occur during the sections of trajectories with high variance.

In contrast, the interaction forces overcome this sensitivity, generating a better conditional distribution as highlighted in Fig. 4b despite the high variance. Therefore, looking at the results for pHRIP in Table 1, the use of force and trajectory observations account for the degradation in performance.

5.2 Cartesian versus joint trajectories

While pHRIP has been shown to generate trajectories that reflect the user’s intention in Cartesian space, for most robotic arm control systems, action policies generally operate in joint space. Further analysis was conducted using the data from the user-directed reaching experiment to investigate the application of pHRIP in joint state space (pHRIP-q). The joint states of the robot and the Cartesian interaction forces at the endpoint were used to build the weight distribution.

When assessing the performance of the pHRIP-q variant, the robotic response (joint states) are re-mapped to Cartesian space (endpoint) using forward kinematics. Comparisons and analysis of the pHRIP-q are all based off the robot endpoint rather than the joint states.

A visualization of the discrepancy between the endpoint in the reference trajectories and those generated from IP, pHRIP, and pHRIP-q. Ellipsoid fitting indicating the spread is performed using Li and Griffiths (2004)

For both the original human target reaching experiment and this analysis, identical parameters were used with 20 basis functions, \(K = 80\) N/m, \(D = 20\) Ns/m, \(\tau = 0.35\), \(h = 0.0008\), and \(\alpha = 1\). Forward kinematics was performed for the pHRIP-q trajectories to obtain their Cartesian trajectories, showing similar trajectory shapes to the reference trajectories (see Fig. 3d). This is supported by the mean RMSE and DTW distances as tabulated in Table 1.

Initial observations of the overall results suggest that pHRIP-q is the better variant. However, mapping out the vectors showing the difference between the reference and resultant endpoint, as seen in Fig. 5, suggest that the appropriate pHRIP variant will depend on the priority of the task. For example, pick and place operations of heavy objects will prioritize the precise endpoint of the trajectory, making pHRIP more appropriate. Conversely, if the task is to conform to the shape of a trajectory performed by an expert, as is commonly seen during physical rehabilitation, it may be more suitable to use the joint variant of pHRIP.

In this experiment, the trajectory of the endpoint is relatively small when considering the total work space of the robotic arm. In joint space, this may result in certain joints which have a small range across the demonstrations, a phenomenon exacerbated by the redundancy introduced with the 7-DoF robot and orientation-locked endpoint trajectories (4 redundant joints). This can account for the similarity in the resultant RMSE and DTW distances in pHRIP-q when considering the inclusion of observing joint trajectories when obtaining a conditional set of pHRIP parameters. For a lower DoF robotic system, the necessity for all joints to move when moving in Cartesian space may mitigate this effect.

While the results show that appropriate consideration is required when choosing which variant to use, they show promising indications for the integration of haptic information during motor skill learning in pHRI applications. One potential for pHRI application is in training and development systems, where expert demonstrations may be collected remotely via a haptic interface, providing intuitive motor skill learning remotely. Learning from the interaction forces on the haptic interface is transferable across various platforms provided kinesthetic teaching of the robotic response is performed.

5.2.1 Influence of observation length

The influence of the observation length between trajectories generated using IP and pHRIP can be seen in Fig. 6, indicating the DTW distances for trajectories generated using pHRIP are much lower than those of IP. As observation lengths increase, errors from the generated trajectories would approach zero, giving diminishing returns for pHRIP or IP. While a longer observation can improve the performance of HRI applications using movement primitives, this is undesirable in pHRI applications since the goal of the robot is to contribute meaningfully as soon as possible. Trajectories from pHRIP consistently produce better trajectories when compared against IP, reinforcing our hypothesis that using interaction forces during pHRI can help reduce uncertainty.

The results from the planar obstacle avoidance task. a The box plot and spread for each observation length highlights the RMSE spread between IP and pHRIP for the 20 testing trajectories across varying observation lengths; b displays the training trajectories recorded to avoid the obstacle; and c shows the trajectories generated by pHRIP and IP when 30% of the trajectory is observed

We note that the exponential fit used to model the DTW distance and the observation length phase produces a profile for pHRIP-q which is vastly different to pHRIP. As mentioned above, it may be possible that this particular task is well-suited for the joint state variant for pHRIP. One other possible explanation is that the model is over-fitted, with not enough samples to accurately determine the correct profile for pHRIP-q. However, the results from pHRIP-q still improve upon IP, supporting the motivation for pHRIP.

6 Planar target-reaching task

A planar target-reaching task was conducted to validate pHRIP, comparing against IP, with the setup shown in Fig. 7. An object was place in between the start and the end of the trajectory, creating two distinct paths for the robot to reach the end position. A total of 30 training trajectories and 20 testing trajectories were recorded with, an even split for each path, while the robotic arm is setup to enter an orientation-locked zero-g mode constrained to the XY plane.

The analysis and trajectory generation for the planar obstacle avoidance experiment were conducted post-hoc. Thus, all recorded trajectories and interaction forces were re-sampled to 400 and 500 samples respectively (matching the 100 Hz and 125 Hz data collection frequency). A comparison between IP and pHRIP is performed using partial observations of: (a) trajectory only (IP); and (b) interaction force only (pHRIP).

6.1 Results

For the 20 test trials conducted in the planar validation, the RMSE between the generated trajectories and their respective reference trajectories was calculated. The pHRIP and DMP tests were conducted post-hoc against observation lengths (as a % of the total trajectory) varying from 10 to 50%.

Results shown in Fig. 8a highlight the ability for pHRIP to address ambiguities in the trajectories, utilizing only interaction forces to generate the intended path. The critical advantage of pHRIP over DMPs is shown when there are less observations such as those when only 10% of the trajectory is observed.

While the results may indicate that the advantage of pHRIP diminishes as more observations are obtained, the generated trajectories highlight an aspect of motor skill learning which is not inherited through DMPs. The task to avoid the static obstacle is redundant, meaning there are multiple ways to complete the task. During the recording of training trajectories, two distinct paths were taught kinesthetically by the demonstrator, showing this phenomenon as seen in Fig. 8b. From Fig. 8c, trajectories generated by DMP (in red) all collide with the obstacle severely while the same only occur to 25% of pHRIP trajectories. Observations of the pHRIP trajectories also show decreased severity during collisions, with only two trajectories (10%) clearly going through the obstacle.

The results in Fig. 8c highlight the limitation of IPs in multi-modal distributions which is overcome by using the interaction forces in pHRIP. We observe that IPs, built only upon trajectories, create an “averaging” effect. By adding additional DoF from interaction forces, pHRIP requires less observations to achieve the correct trajectory, reinforcing our belief that integrating interaction artifacts into conventional frameworks improve motor skill learning for pHRI systems.

7 Cartesian target-reaching application

Further experiments were conducted to reinforce the applicability of pHRIP to estimate user intention during pHRI. The user is tasked with moving the endpoint from the same starting position to various end regions. In total, 4 configurations were setup using a number of blocks which simulate changing task parameters and environments, as is common in pHRI applications. For each configuration, 10 training trajectories were recorded and are shown in Fig. 9, while the 4 configuration setups are seen in Fig. 10. The pHRIP parameters from all 40 trajectories are built into a single distribution, \(p(\theta )\).

To validate pHRIP in this Cartesian target-reaching task, a total of 12 trials were conducted in Cartesian space. Similar to the user-directed target reaching task, the user releases the handle after 0.5–1.0 s, allowing the robot to perform the trajectory generated by pHRIP. Successful trials are defined as generated trajectories that reach their intended end zone and follow the shape of the reference trajectory. For all trials, identical sets of parameters were used with 30 basis functions, \(K = 80\) N/m, \(D = 20\) Ns/m, \(h = 0.0008\), and \(\alpha = 0.8\).

One issue which arises when the number of samples for each trajectory are different in DMP is the sum of activation from the basis functions. A static value of \(\tau \) will affect the quality of the reproduced trajectory based on the sample length. An exponential model is used to determine the relationship between the trajectory length (number of samples \(\gamma \)) and a \(\tau \) value. Assuming that the other DMP parameters are constant, this ensures that the sum of Gaussian basis function activation, across all samples, is above 0.5. For all trials in the Cartesian obstacle avoidance experiment, the model and its coefficients used are

For each of the 12 trials, an estimate of \(\tau \) parameter is performed using the reference trajectory length.

7.1 Results

Of the 12 trials, there were 4 unsuccessful trajectories, which all veered away from the intended end zone towards the end of the trajectory, as seen in Fig. 11a. Visual observations of the trajectories show that unsuccessful trajectories were caused by inaccurate estimates of the \(\tau \) parameter. This phenomenon is evident in Fig. 11b where the forcing function value for the unsuccessful trajectories drops to 0, causing the trajectories to deviate significantly. For the successful trials, pHRIP was able to correctly infer the user’s intent when generating trajectories to avoid the obstacles. This is supported by the measures of similarity as shown in Table 2, and the image sequences taken from the experiments highlight successful and unsuccessful trials (Fig. 12).

8 Discussion

The motivation of pHRIP is to build upon IPs by integrating physical interaction forces in a coupled system during pHRI. As IPs utilize observed trajectories, the identical location of the user endpoint and the robot endpoint precludes its use in pHRI applications. Both methods encode trajectories using DMP parameters.

A similar method for encoding trajectories is Probabilistic Movement Primitives (ProMP) (Paraschos et al. 2013). Rather than encoding the attractor landscape (also known as the “forcing function”) and generating accelerations in DMP, ProMP encodes the raw position and velocity separately using Gaussian basis functions equally spaced across the phase of the trajectory. Removing the reliance on a dynamical system enables primitive blending and co-activation of multiple primitives during the execution and generation of a new trajectory (Paraschos et al. 2018). This independence also better facilitates the modeling of a sequence of primitives for multi-MP based frameworks.

Between ProMP, IP, and pHRIP, all three methods utilize probabilistic operators to couple multiple DoFs of data together. However, the reliance on the DMP framework provides properties which are associated with the dynamical system. This facilitates a representative comparison between IP and pHRIP to demonstrate pHRIP’s ability to extend upon IP and integrate interaction forces during trajectory generation. A similar method to integrate interaction forces into ProMPs can be seen in prior works (Dermy et al. 2017).

Other DMP-based probabilistic methods for capturing user intent include stylistic DMPs (Matsubara et al. 2010) and Associative Skill Memories (ASM) (Pastor et al. 2012). While both use similar probabilistic operators to capture variances across multiple demonstrations, their integration of user intent and input differ greatly from pHRIP.

Stylistic DMP embeds a weighting factor in the Gaussian kernels when encoding the attractor landscape to provide parametric distinction of different intent. The discrete nature of the parameters precludes its use in coupled human–robot systems since there are many factors which influence the observed interaction forces. While the interaction forces could be parameterized, such as using the maximum or RMS value, these metrics are time-dependent and are influenced by the observation window, making it unsuitable.

ASM constrains user intent by attaching a Dynamic Bayesian Network (DBN) to probabilistic infer the next state of the human–robot system. Integrating observed interaction forces in this DBN can infer user intent, however, a similar issue exist for parameterizing the interaction forces. Furthermore, the DBN would be more akin to hierarchical frameworks which utilize individual DMP systems such as seen in Mei et al. (2017), where an arbitration occurs to determine the appropriate DMP.

The behavior of pHRIP for known setups. *Indicates an unsuccessful trial with the red ellipse showing the collisions. All collisions occurred at the end of their trajectory due to inaccurate estimates of the \(\tau \) parameter as discussed in Sect. 7.1 (Color figure online)

8.1 Limitations and future work

One limitation on pHRIP is the inheritance of DMP parameters. While they provide various functionalities, such as temporal invariance and amplitude control, the parameters (K, D, \(\tau \), and \(\alpha \)) are still empirically obtained, with various factors that influence which set of parameters is best suited for any particular application. The original DMP framework was designed for one-shot LfD, tracking the start and goal positions while encoding the non-linear “forcing function”. However, for multiple trajectories, each with their own start and goal positions, generalizing the DMP weights is non-trivial.

Probabilistic methods are popular since a distribution of DMP weights can be generated, allowing probabilistic operators to infer a new set of DMP weights. A contemporary non-probabilistic method in Ginesi et al. (2021) aligns the DMP-based weights from each trajectory into the same start and end points, and calculates a generalized set of DMP weights using linear regression. Temporal scaling of the aligned DMPs enable trajectories of various lengths to be captured in the generalized set of DMP weights.

A constraint for pHRIP is the reliance on DTW to phase align the partial observations, requiring a reference trajectory. For a multi-modal task, it is possible to bypass this constraint by using a single reference trajectory for each trajectory mode. However, the performance of DTW degrades if there are any adaptations to the task at hand. A common approach is to use Bayesian statistics to embed the phase of the trajectory into the built distribution, such that observations can perform phase estimation and user intent simultaneously. This has been realized using Interactive ProMPs (Maeda et al. 2017a) and Bayesian IPs (Campbell and Ben Amor 2017).

Future work for pHRIP will look to address these limitations, finding ways to handle DMP parameters and phase estimation without the need for additional reference trajectories. Furthermore, more complex scenarios need to be considered in future work, focusing on the robustness of pHRIP during challenging circumstances which are present in pHRI. These include sparse physical interactions which is limited by the need for phase estimation using DTW. In the context of human biomechanics, the interaction forces during pHRI can also be derived from external systems decoupled from the robotic platform such as biomechanical models (Rückert and d’Avella 2013) and physiological measures (Peternel et al. 2018). Thus, future work will also explore context-dependent observations for pHRIP, leveraging knowledge on the non-linear behavior of human locomotion and force generation (D’Avella et al. 2003; Hogan 1985).

9 Conclusion

This article introduces physical Human Robot Interaction Primitives (pHRIP), a framework that infers the intent of the user to generate the appropriate robotic response during physical human robot interactions using only the interaction forces. pHRIP extends upon IPs by embedding interaction forces in the distribution over the robotic response to allow for user intent inference when generating robotic trajectories.

A series of experiments based on target reaching tasks were conducted to validate the efficacy of pHRIP, showing accurate inference of user intent with a small number of observations. Comparisons of a planar task demonstrated the advantage of utilizing interaction forces in pHRIP, instead of the robot trajectory in IPs, during multi-modal tasks for pHRI. The adaptation of pHRIP to novel situations also demonstrates its adaptability.

While the experiments to validate pHRIP are approached through the lenses of motion planning to reflect user intent, developing a control system which derives from pHRIP outputs will create opportunities to improve user assistance during pHRI.

References

Argall, B. D., Chernova, S., Veloso, M., & Browning, B. (2009). A survey of robot learning from demonstration. Robotics and Autonomous Systems, 57(5), 469–483. https://doi.org/10.1016/j.robot.2008.10.024.

Bajcsy, A., Losey, D. P., & O’malley, M. K., & Dragan, A. D. (2017). Learning robot objectives from physical human interaction. Proceedings of Machine Learning Research,78, 217–226.

Ben Amor, H., Neumann, G., Kamthe, S., Kroemer, O., & Peters, J. (2014). Interaction primitives for human–robot cooperation tasks. In Proceedings—IEEE international conference on robotics and automation (pp. 2831–2837). Institute of Electrical and Electronics Engineers Inc. https://doi.org/10.1109/ICRA.2014.6907265

Billard, A., Calinon, S., Dillmann, R., & Schaal, S. (2008). Robot programming by demonstration. In B. Siciliano & O. Khatib (Eds.), Springer handbook of robotics (pp. 1371–1394). Berlin: Springer. https://doi.org/10.1007/978-3-540-30301-5_60.

Bloss, R. (2016). Collaborative robots are rapidly providing major improvements in productivity, safety, programing ease, portability and cost while addressing many new applications. Industrial Robot, 43(5), 463–468. https://doi.org/10.1108/IR-05-2016-0148.

Calinon, S., D’Halluin, F., Sauser, E. L., Caldwell, D. G., & Billard, A. G. (2010). Learning and reproduction of gestures by imitation. IEEE Robotics and Automation Magazine,17(2), 44–54. https://doi.org/10.1109/MRA.2010.936947

Campbell, J., & Ben Amor, H. (2017). Bayesian interaction primitives: A SLAM approach to human–robot interaction. In S. Levine, V. Vanhoucke & K. Goldberg (Eds.) Proceedings of the 1st annual conference on robot learning, proceedings of machine learning research, PMLR (Vol. 78, pp. 379–387).

Campbell, J., Stepputtis, S., & Ben Amor, H. (2019). Probabilistic multimodal modeling for human–robot interaction tasks. In Proceedings of robotics: Science and systems. FreiburgimBreisgau, Germany. https://doi.org/10.15607/RSS.2019.XV.047

Carmichael, M. G., Liu, D., & Waldron, K. J. (2017). A framework for singularity-robust manipulator control during physical human–robot interaction. The International Journal of Robotics Research, 36(5–7), 861–876. https://doi.org/10.1177/0278364917698748.

Carmichael, M. G., Aldini, S., Khonasty, R., Tran, A., Reeks, C., Liu, D., Waldron, K. J., & Dissanayake, G. (2019). The ANBOT: An intelligent robotic co-worker for industrial abrasive blasting. In 2019 IEEE/RSJ international conference on intelligent robots and systems (IROS) (pp. 8026–8033). IEEE, Macau, China. https://doi.org/10.1109/iros40897.2019.8967993

Cui, Y., Poon, J., Matsubara, T., Miro, J. V., Sugimoto, K., & Yamazaki, K. (2016). Environment-adaptive interaction primitives for human–robot motor skill learning. In IEEE-RAS international conference on humanoid robots (pp. 711–717). IEEE Computer Society. https://doi.org/10.1109/HUMANOIDS.2016.7803352

Cui, Y., Poon, J., Miro, J. V., Yamazaki, K., Sugimoto, K., & Matsubara, T. (2019). Environment-adaptive interaction primitives through visual context for human–robot motor skill learning. Autonomous Robots, 43(5), 1225–1240. https://doi.org/10.1007/s10514-018-9798-2.

D’Avella, A., Saltiel, P., & Bizzi, E. (2003). Combinations of muscle synergies in the construction of a natural motor behavior. Nature Neuroscience,6(3), 300–308. https://doi.org/10.1038/nn1010.

Denisa, M., Gams, A., Ude, A., & Petric, T. (2016). Learning compliant movement primitives through demonstration and statistical generalization. IEEE/ASME Transactions on Mechatronics, 21(5), 2581–2594. https://doi.org/10.1109/TMECH.2015.2510165.

Dermy, O., Paraschos, A., Ewerton, M., Peters, J., Charpillet, F., & Ivaldi, S. (2017). Prediction of intention during interaction with iCub with probabilistic movement primitives. Frontiers Robotics AI, 4, 45. https://doi.org/10.3389/frobt.2017.00045.

Djuric, A. M., Urbanic, R. J., & Rickli, J. L. (2016). A framework for collaborative robot (CoBot) integration in advanced manufacturing systems. SAE International Journal of Materials and Manufacturing, 9(2), 457–464.

Ferraguti, F., Talignani Landi, C., Sabattini, L., Bonfé, M., Fantuzzi, C., & Secchi, C. (2019). A variable admittance control strategy for stable physical human–robot interaction. The International Journal of Robotics Research,38(6), 747–765. https://doi.org/10.1177/0278364919840415.

Gams, A., Nemec, B., Ijspeert, A. J., & Ude, A. (2014). Coupling movement primitives: Interaction with the environment and bimanual tasks. IEEE Transactions on Robotics, 30(4), 816–830. https://doi.org/10.1109/TRO.2014.2304775.

Gams, A., Petrič, T., Do, M., Nemec, B., Morimoto, J., Asfour, T., & Ude, A. (2016). Adaptation and coaching of periodic motion primitives through physical and visual interaction. Robotics and Autonomous Systems, 75, 340–351. https://doi.org/10.1016/j.robot.2015.09.011.

Ginesi, M., Meli, D., Calanca, A., Dall’Alba, D., Sansonetto, N., & Fiorini, P. (2019). Dynamic movement primitives: Volumetric obstacle avoidance. In 2019 19th international conference on advanced robotics (ICAR) (pp. 234–239). Institute of Electrical and Electronics Engineers (IEEE). https://doi.org/10.1109/icar46387.2019.8981552

Ginesi, M., Sansonetto, N., & Fiorini, P. (2021). Overcoming some drawbacks of Dynamic Movement Primitives. Robotics and Autonomous Systems, 144, 103844. https://doi.org/10.1016/J.ROBOT.2021.103844.

Haddadin, S., De Luca, A., & Albu-Schäffer, A. (2017). Robot collisions: A survey on detection, isolation, and identification. IEEE Transactions on Robotics, 33(6), 1292–1312. https://doi.org/10.1109/TRO.2017.2723903.

Hamaya, M., Matsubara, T., Noda, T., Teramae, T., & Morimoto, J. (2017). Learning assistive strategies for exoskeleton robots from user–robot physical interaction. Pattern Recognition Letters, 99, 67–76. https://doi.org/10.1016/j.patrec.2017.04.007.

Hoffmann, H., Pastor, P., Park, D. H., & Schaal, S. (2009). Biologically-inspired dynamical systems for movement generation: Automatic real-time goal adaptation and obstacle avoidance. In 2009 IEEE international conference on robotics and automation (pp. 2587–2592). Institute of Electrical and Electronics Engineers (IEEE). https://doi.org/10.1109/robot.2009.5152423

Hogan, N. (1985). The mechanics of multi-joint posture and movement control. Biological Cybernetics, 52(5), 315–331. https://doi.org/10.1007/BF00355754.

Hogan, N., & Sternad, D. (2013). Dynamic primitives in the control of locomotion. Frontiers in Computational Neuroscience, 7, 71. https://doi.org/10.3389/fncom.2013.00071.

Huang, R., Cheng, H., Qiu, J., & Zhang, J. (2019). Learning physical human–robot interaction with coupled cooperative primitives for a lower exoskeleton. IEEE Transactions on Automation Science and Engineering, 16(4), 1566–1574. https://doi.org/10.1109/TASE.2018.2886376.

Huang, Y., Rozo, L., Silvério, J., & Caldwell, D. G. (2019). Kernelized movement primitives. The International Journal of Robotics Research, 38(7), 833–852. https://doi.org/10.1177/0278364919846363.

Ijspeert, A. J. (2008). Central pattern generators for locomotion control in animals and robots: A review. Neural Networks, 21(4), 642–653. https://doi.org/10.1016/j.neunet.2008.03.014.

Karlsson, M., Carlson, F. B., Robertsson, A., & Johansson, R. (2017). Two-degree-of-freedom control for trajectory tracking and perturbation recovery during execution of dynamical movement primitives. IFAC-PapersOnLine, 50(1), 1923–1930. https://doi.org/10.1016/j.ifacol.2017.08.383.

Koert, D., Pajarinen, J., Schotschneider, A., Trick, S., Rothkopf, C., & Peters, J. (2019). Learning intention aware online adaptation of movement primitives. IEEE Robotics and Automation Letters, 4(4), 3719–3726. https://doi.org/10.1109/LRA.2019.2928760.

Kulvicius, T., Biehl, M., Aein, M. J., Tamosiunaite, M., & Wörgötter, F. (2013). Interaction learning for dynamic movement primitives used in cooperative robotic tasks. Robotics and Autonomous Systems, 61(12), 1450–1459. https://doi.org/10.1016/j.robot.2013.07.009.

Kyrarini, M., Haseeb, M. A., Ristić-Durrant, D., & Gräser, A. (2019). Robot learning of industrial assembly task via human demonstrations. Autonomous Robots, 43(1), 239–257. https://doi.org/10.1007/s10514-018-9725-6.

Lai, Y., Sutjipto, S., Clout, M. D., Carmichael, M. G., & Paul, G. (2018). GAVRe2: Towards data-driven upper-limb rehabilitation with adaptive-feedback gamification. In 2018 IEEE international conference on robotics and biomimetics (ROBIO), IEEE (pp. 164–169). https://doi.org/10.1109/ROBIO.2018.8665105

Li, Q., & Griffiths, J. G. (2004). Least squares ellipsoid specific fitting. In Proceedings—geometric modeling and processing 2004 pp. 335–340. https://doi.org/10.1109/gmap.2004.1290055

Maeda, G., Ewerton, M., Neumann, G., Lioutikov, R., & Peters, J. (2017). Phase estimation for fast action recognition and trajectory generation in human–robot collaboration. The International Journal of Robotics Research, 36(13–14), 1579–1594. https://doi.org/10.1177/0278364917693927.

Maeda, G. J., Neumann, G., Ewerton, M., Lioutikov, R., Kroemer, O., & Peters, J. (2017). Probabilistic movement primitives for coordination of multiple human–robot collaborative tasks. Autonomous Robots, 41(3), 593–612. https://doi.org/10.1007/s10514-016-9556-2.

Matsubara, T., Hyon, S. H., & Morimoto, J. (2010). Learning stylistic dynamic movement primitives from multiple demonstrations. In IEEE/RSJ 2010 international conference on intelligent robots and systems, IROS 2010—conference proceedings (pp. 1277–1283). https://doi.org/10.1109/IROS.2010.5651049

Matsubara, T., Miro, J. V., Tanaka, D., Poon, J., & Sugimoto, K. (2015). Sequential intention estimation of a mobility aid user for intelligent navigational assistance. In Proceedings—IEEE international workshop on robot and human interactive communication (Vol. 2015-November, pp. 444–449). Institute of Electrical and Electronics Engineers Inc. https://doi.org/10.1109/ROMAN.2015.7333580

Mei, Z., Chen, Y., Jiang, M., Wu, H., & Cheng, L. (2017). Mobile robots path planning based on dynamic movement primitives library. In Chinese control conference, CCC (pp. 6906–6911). IEEE Computer Society. https://doi.org/10.23919/ChiCC.2017.8028446

Nemec, B., Likar, N., Gams, A., & Ude, A. (2018). Human robot cooperation with compliance adaptation along the motion trajectory. Autonomous Robots, 42(5), 1023–1035. https://doi.org/10.1007/s10514-017-9676-3.

Paraschos, A., Daniel, C., Peters, J. R., & Neumann, G. (2013). Probabilistic Movement Primitives. In: C. J. C. Burges, L. Bottou, M. Welling, Z. Ghahramani & K. Q. Weinberger (Eds.) Advances in neural information processing systems (Vol. 26, pp. 2616–2624). Curran Associates, Inc

Paraschos, A., Daniel, C., Peters, J., & Neumann, G. (2018). Using probabilistic movement primitives in robotics. Autonomous Robots, 42(3), 529–551. https://doi.org/10.1007/s10514-017-9648-7.

Pastor, P., Hoffmann, H., Asfour, T., & Schaal, S. (2009). Learning and generalization of motor skills by learning from demonstration. In IEEE international conference on robotics and automation (pp. 763–768). Institute of Electrical and Electronics Engineers (IEEE). https://doi.org/10.1109/robot.2009.5152385

Pastor, P., Kalakrishnan, M., Righetti, L., & Schaal, S. (2012). Towards associative skill memories. In IEEE-RAS international conference on humanoid robots (pp. 309–315). IEEE. https://doi.org/10.1109/HUMANOIDS.2012.6651537

Pervez, A., & Lee, D. (2018). Learning task-parameterized dynamic movement primitives using mixture of GMMs. Intelligent Service Robotics, 11(1), 61–78. https://doi.org/10.1007/s11370-017-0235-8.

Pervez, A., Latifee, H., Ryu, J. H., & Lee, D. (2019). Motion encoding with asynchronous trajectories of repetitive teleoperation tasks and its extension to human-agent shared teleoperation. Autonomous Robots, 43(8), 2055–2069. https://doi.org/10.1007/s10514-019-09853-4.

Peternel, L., Tsagarakis, N., Caldwell, D., & Ajoudani, A. (2018). Robot adaptation to human physical fatigue in human–robot co-manipulation. Autonomous Robots, 42(5), 1011–1021. https://doi.org/10.1007/s10514-017-9678-1.

Prada, M., Remazeilles, A., Koene, A., & Endo, S. (2013). Dynamic movement primitives for human–robot interaction: Comparison with human behavioral observation. In IEEE international conference on intelligent robots and systems (pp. 1168–1175). https://doi.org/10.1109/IROS.2013.6696498

Rozo, L., Calinon, S., Caldwell, D. G., Jiménez, P., & Torras, C. (2016). Learning physical collaborative robot behaviors from human demonstrations. IEEE Transactions on Robotics, 32(3), 513–527. https://doi.org/10.1109/TRO.2016.2540623.

Rückert, E., & d’Avella, A. (2013). Learned parametrized dynamic movement primitives with shared synergies for controlling robotic and musculoskeletal systems. Frontiers in Computational Neuroscience. https://doi.org/10.3389/fncom.2013.00138.

Schaal, S., Peters, J., Nakanishi, J., & Ijspeert, A. (2005). Learning movement primitives. Springer Tracts in Advanced Robotics, 15, 561–572. https://doi.org/10.1007/11008941_60.

Shokoohi-Yekta, M., Hu, B., Jin, H., Wang, J., & Keogh, E. (2017). Generalizing DTW to the multi-dimensional case requires an adaptive approach. Data Mining and Knowledge Discovery, 31(1), 1–31. https://doi.org/10.1007/s10618-016-0455-0.

Sidiropoulos, A., Karayiannidis, Y., & Doulgeri, Z. (2019). Human–robot collaborative object transfer using human motion prediction based on dynamic movement primitives. In 2019 18th European control conference, ECC 2019 (pp. 2583–2588). Institute of Electrical and Electronics Engineers Inc. https://doi.org/10.23919/ECC.2019.8796249

Sternad, D. (2018). It’s not (only) the mean that matters: Variability, noise and exploration in skill learning. Current Opinion in Behavioral Sciences,20, 183–195. https://doi.org/10.1016/J.COBEHA.2018.01.004.

Ugur, E., & Girgin, H. (2020). Compliant parametric dynamic movement primitives. Robotica, 38(3), 457–474. https://doi.org/10.1017/S026357471900078X.

Yang, C., Zeng, C., Fang, C., He, W., & Li, Z. (2018). A DMPs-based framework for robot learning and generalization of humanlike variable impedance skills. IEEE/ASME Transactions on Mechatronics, 23(3), 1193–1203. https://doi.org/10.1109/TMECH.2018.2817589.

Zhou, Y., Gao, J., & Asfour, T. (2020). Movement primitive learning and generalization: Using mixture density networks. IEEE Robotics and Automation Magazine, 27(2), 22–32. https://doi.org/10.1109/MRA.2020.2980591.

Acknowledgements

The authors would like to thank the editors and reviewers of this manuscript for their detailed feedback for the article. The authors would also like to thank Jaime Valls Miros & Marc Carmichael for their insightful comments, discussions, and feedback about the article. This research is supported by an Australian Government Research Training Program scholarship and a UTS FEIT HDR Collaboration Experience Award.

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Lai, Y., Paul, G., Cui, Y. et al. User intent estimation during robot learning using physical human robot interaction primitives. Auton Robot 46, 421–436 (2022). https://doi.org/10.1007/s10514-021-10030-9

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10514-021-10030-9