Abstract

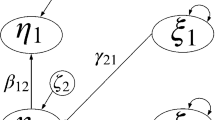

Generalized structured component analysis (GSCA) is a structural equation modeling (SEM) procedure that constructs components as weighted sums of observed variables and confirmatorily examines the regression relationships among them. This research proposes an exploratory version of GSCA, called exploratory GSCA (EGSCA). EGSCA is analogous to exploratory SEM (ESEM), which was developed as an exploratory factor-based SEM procedure; EGSCA seeks the relationships between the observed variables and the components by orthogonal rotation of the parameter matrices. The indeterminacy of orthogonal rotation in GSCA is first shown as theoretical support for the proposed method. The whole EGSCA procedure is then presented, together with a new rotational algorithm specialized to EGSCA, which aims at simultaneous simplification of all parameter matrices. Two numerical simulation studies revealed that EGSCA followed by the proposed rotation successfully recovered the true values of the parameter matrices and was superior to the existing GSCA procedure. EGSCA was applied to two real datasets, and the model suggested by the EGSCA results was shown to be better than the model proposed in previous research, which demonstrates the effectiveness of EGSCA in model exploration.

References

Adachi, K. (2009). Joint Procrustes analysis for simultaneous nonsingular transformation of component score and loading matrices. Psychometrika, 74, 667–683.

Adachi, K. (2013). Generalized joint Procrustes analysis. Computational Statistics, 28, 2449–2464.

Alamer, A. (2022). Exploratory structural equation modeling (ESEM) and bifactor ESEM for construct validation purposes: Guidelines and applied example. Research Methods in Applied Linguistics, 1, 100005.

Asparouhov, T., & Muthén, B. (2009). Exploratory structural equation modeling. Structural Equation Modeling: A Multidisciplinary Journal, 16, 397–438.

Bartholomew, D. J., Knott, M., & Moustaki, I. (2011). Latent variable models and factor analysis: A unified approach (3rd ed.). New York: Wiley.

Bernaards, C. A., & Jennrich, R. I. (2003). Orthomax rotation and perfect simple structure. Psychometrika, 68, 585–588.

Bentler, P. M. (1980). Multivariate analysis with latent variables: Causal modeling. Annual Review of Psychology, 31, 419–456.

Bentler, P. M. (1986). Structural modeling and Psychometrika: An historical perspective on growth and achievements. Psychometrika, 51, 35–51.

Bergami, M., & Bagozzi, R. P. (2000). Self-categorization, affective commitment and group self-esteem as distinct aspects of social identity in the organization. British Journal of Social Psychology, 39, 555–577.

Browne, M. W. (1972). Orthogonal rotation to a partially specified target. British Journal of Mathematical and Statistical Psychology, 25, 115–120.

Browne, M. W. (1972). Oblique rotation to a partially specified target. British Journal of Mathematical and Statistical Psychology, 25, 207–212.

Browne, M. W. (2001). An overview of analytic rotation in exploratory factor analysis. Multivariate Behavioral Research, 36, 111–150.

Esposito Vinzi, V., & Russolillo, G. (2013). Partial least squares algorithms and methods. Wiley Interdisciplinary Reviews: Computational Statistics, 5, 1–19.

Goodall, C. (1991). Procrustes methods in the statistical analysis of shape. Journal of the Royal Statistical Society: Series B (Methodological), 53, 285–321.

Gower, J. C., & Dijksterhuis, G. B. (2004). Procrustes problems (Vol. 30). Oxford: OUP.

Harris, C. W., & Kaiser, H. F. (1964). Oblique factor analytic solutions by orthogonal transformations. Psychometrika, 29, 347–362.

Hwang, H. (2009). Regularized Generalized Structured Component Analysis. Psychometrika, 74, 517–530.

Hwang, H., Cho, G., Jung, K., Falk, C. F., Flake, J. K., Jin, M. J., & Lee, S. H. (2021). An approach to structural equation modeling with both factors and components: Integrated generalized structured component analysis. Psychological Methods, 26, 273.

Hwang, H., Desarbo, W. S., & Takane, Y. (2007). Fuzzy Clusterwise Generalized Structured Component Analysis. Psychometrika, 72, 181–198.

Hwang, H., Kim, S., Lee, S., & Park, T. (2017). gesca: Generalized Structured Component Analysis (GSCA). R package version 1.0.4. https://CRAN.R-project.org/package=gesca

Hwang, H., & Takane, Y. (2004). Generalized structured component analysis. Psychometrika, 69, 81–99.

Hwang, H., & Takane, Y. (2014). Generalized structured component analysis: A component-based approach to structural equation modeling. Boca Raton: CRC Press.

Hwang, H., Takane, Y., & Jung, K. (2017). Generalized structured component analysis with uniqueness terms for accommodating measurement error. Frontiers in Psychology, 8, 2137.

Jennrich, R. I. (1974). Simplified formulae for standard errors in maximum-likelihood factor analysis. British Journal of Mathematical and Statistical Psychology, 27, 122–131.

Jennrich, R. I. (2007). Rotation methods, algorithms, and standard errors. In Factor analysis at 100 (pp. 329–350). Routledge.

Jöreskog, K. G., & Sörbom, D. (1996). LISREL 8: User’s reference guide. Scientific Software International.

Kaiser, H. F. (1958). The Varimax criterion for analytic rotation in factor analysis. Psychometrika, 23, 187–200.

Kaiser, H. F. (1974). An index of factorial simplicity. Psychometrika, 39, 31–36.

Kiers, H. A. L. (1994). Simplimax: Oblique rotation to an optimal target with simple structure. Psychometrika, 59, 567–579.

Kiers, H. A. L. (1998). Joint orthomax rotation of the core and component matrices resulting from three-mode principal components analysis. Journal of Classification, 15, 245–263.

Lorenzo-Seva, U. (2003). A factor simplicity index. Psychometrika, 68, 49–60.

Lorenzo-Seva, U., & Ten Berge, J. M. (2006). Tucker’s congruence coefficient as a meaningful index of factor similarity. Methodology: European Journal of Research Methods for the Behavioral and Social Sciences, 2, 57.

Marsh, H. W., Guo, J., Dicke, T., Parker, P. D., & Craven, R. G. (2020). Confirmatory factor analysis (CFA), exploratory structural equation modeling (ESEM), and set-ESEM: Optimal balance between goodness of fit and parsimony. Multivariate Behavioral Research, 55, 102–119.

Marsh, H. W., Lüdtke, O., Muthén, B., Asparouhov, T., Morin, A. J., Trautwein, U., & Nagengast, B. (2010). A new look at the big five factor structure through exploratory structural equation modeling. Psychological Assessment, 22, 471–491.

Marsh, H. W., Morin, A. J. S., Parker, P. D., & Kaur, G. (2014). Exploratory structural equation modeling: An integration of the best features of exploratory and confirmatory factor analysis. Annual Review of Clinical Psychology, 10, 85–110.

McLarnon, M. J. (2022). Into the heart of darkness: A person-centered exploration of the Dark Triad. Personality and Individual Differences, 186, 111354.

Mulaik, S. A. (1986). Factor analysis and Psychometrika: Major developments. Psychometrika, 51, 23–33.

Pianta, R. C., Lipscomb, D., & Ruzek, E. (2022). Indirect effects of coaching on pre-K students’ engagement and literacy skill as a function of improved teacher-student interaction. Journal of School Psychology, 91, 65–80.

Poier, S., Nikodemska-Wołowik, A. M., & Suchanek, M. (2022). How higher-order personal values affect the purchase of electricity storage–Evidence from the German photovoltaic market. Journal of Consumer Behaviour.

Ten Berge, J. M. (1993). Least squares optimization in multivariate analysis. Leiden: DSWO Press, Leiden University.

Tenenhaus, M. (2008). Component-based structural equation modelling. Total Quality Management, 19, 871–886.

Tenenhaus, M., Vinzi, V. E., Chatelin, Y. M., & Lauro, C. (2005). PLS path modeling. Computational Statistics & Data Analysis, 48, 159–205.

Tucker, L. R. (1951). A method for synthesis of factor analysis studies (Personnel research section report no. 984). Department of the Army.

Trendafilov, N. T. (2014). From simple structure to sparse components: A review. Computational Statistics, 29, 431–454.

Trendafilov, N. T., Fontanella, S., & Adachi, K. (2017). Sparse exploratory factor analysis. Psychometrika, 82, 778–794.

Wang, J., & Wang, X. (2019). Structural equation modeling: Applications using Mplus. New York: Wiley.

Wold, S., Sjöström, M., & Eriksson, L. (2001). PLS-regression: A basic tool of chemometrics. Chemometrics and Intelligent Laboratory Systems, 58, 109–130.

Yang, Y., Chen, M., Wu, C., Easa, S. M., & Zheng, X. (2020). Structural equation modeling of drivers’ situation awareness considering road and driver factors. Frontiers in Psychology, 11, 1601.

Acknowledgements

The author is deeply grateful to the reviewers and the associate editor for their careful reviews and constructive comments, which improved the quality of the paper. The author also thanks Professor Henk Kiers at the University of Groningen for his helpful advice.

Funding

This research was supported by JSPS KAKENHI Grant Number 23K16854.

Ethics declarations

Conflict of interest

The author has no conflicts of interest to disclose.

Data Availability

R source code for the proposed method can be obtained from the author on request.

Appendices

Appendix A

In this appendix, we consider the minimization problem

over \(\textbf{W}_1\),\(\textbf{W}_2\),\(\textbf{C}_1\),\(\textbf{C}_2\),\(\textbf{B}_1\), and \(\textbf{B}_2\), subject to the constraint

Minimizing the loss function in (38) is equivalent to minimizing (10) with \(\tilde{\textbf{B}}_1\) and \(\tilde{\textbf{B}}_2\) being matrices filled with zeros, as assumed in the EGSCA procedure. The component scores are constrained to be orthonormal within each variable set in order to avoid the standardization issue after the rotation of the parameter matrices. The estimated parameter matrices are used as the initial solution for the EGSCA procedure. All elements in the parameter matrices are treated as free parameters to be estimated, whereas some are fixed in the conventional GSCA procedure.
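The displayed forms of (38) and (39) are not reproduced above. The block below is a reconstruction, offered as an assumption rather than a quotation: it is the form that is consistent with the definition of \(\textbf{M}_1\), with the constraint \(\textbf{W}_1^{\prime }\textbf{Z}_1^{\prime }\textbf{Z}_1\textbf{W}_1 = \textbf{I}_{D_1}\), and with the \(D_2 \times D_1\) size of \(\textbf{B}_2\) stated later in this appendix.

```latex
\phi = \Vert \textbf{Z}_1 - \textbf{Z}_1\textbf{W}_1\textbf{C}_1 \Vert^2
     + \Vert \textbf{Z}_2 - \textbf{Z}_2\textbf{W}_2\textbf{C}_2 \Vert^2
     + \Vert \textbf{Z}_2\textbf{W}_2 - \textbf{Z}_1\textbf{W}_1\textbf{B}_1 \Vert^2
     + \Vert \textbf{Z}_1\textbf{W}_1 - \textbf{Z}_2\textbf{W}_2\textbf{B}_2 \Vert^2,
\qquad
\textbf{W}_1^{\prime}\textbf{Z}_1^{\prime}\textbf{Z}_1\textbf{W}_1 = \textbf{I}_{D_1},
\quad
\textbf{W}_2^{\prime}\textbf{Z}_2^{\prime}\textbf{Z}_2\textbf{W}_2 = \textbf{I}_{D_2}.
```

Under this assumed form, every term that is quadratic in \(\textbf{W}_1\) becomes a constant once the constraint is imposed, so the part of \(\phi \) that depends on \(\textbf{W}_1\) is \(-2\,\textrm{tr}\,\textbf{W}_1^{\prime }\textbf{M}_1\), which matches the role \(\textbf{M}_1\) plays in the derivation of the update for \(\textbf{W}_1\).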

The following iterative algorithm is used for minimizing (38).

1. Initialize \(\textbf{W}_2\), \(\textbf{C}_1\), \(\textbf{C}_2\), \(\textbf{B}_1\), and \(\textbf{B}_2\).
2. Update \(\textbf{W}_1\) by the one minimizing \(\phi \) with other parameter matrices kept fixed.
3. Update \(\textbf{W}_2\) by the one minimizing \(\phi \) with other parameter matrices kept fixed.
4. Update \(\textbf{C}_1\) and \(\textbf{C}_2\) by the ones minimizing \(\phi \) with other parameter matrices kept fixed.
5. Update \(\textbf{B}_1\) and \(\textbf{B}_2\) by the ones minimizing \(\phi \) with other parameter matrices kept fixed.
6. Terminate the algorithm if the decrement of \(\phi \) value is less than \(\epsilon \), otherwise go back to Step 2.

The loss function is guaranteed not to increase at Steps 2–5. To reduce the risk of local minima, the algorithm is started from \(M_{ST}\) initial values. \(M_{ST} = 100\) and \(\epsilon = 1.0 \times 10^{-6}\) were used for all the simulation studies and the applications.

The update formulae for Steps 2–5 are presented below.

First, consider minimizing \(\phi \) over \(\textbf{W}_1\) subject to (39). \(\phi \) is expanded as

where c denotes the constant irrelevant to the parameter matrices. Equation (40) indicates that the minimization of \(\phi \) over \(\textbf{W}_1\) is equivalent to maximizing

where \(\textbf{M}_1 = \textbf{Z}_1^{\prime }\textbf{Z}_1\textbf{C}_1^{\prime } + \textbf{Z}_1^{\prime }\textbf{Z}_2\textbf{W}_2(\textbf{B}_1^{\prime } + \textbf{B}_2)\) is a \(J_1 \times D_1\) matrix. Here, using \(\tilde{\textbf{W}}_1 = (\textbf{Z}_1^{\prime }\textbf{Z}_1)^{1/2}\textbf{W}_1\), the first constraint in (39) can be rewritten as

and \(\phi _{\textbf{W}_1}\) becomes

Thus, the minimization of \(\phi \) over \(\textbf{W}_1\) subject to \(\textbf{W}_1^{\prime }\textbf{Z}_1^{\prime }\textbf{Z}_1\textbf{W}_1 = \textbf{I}_{D_1}\) is equivalent to maximizing \(\phi _{\textbf{W}_1}\) over the column-orthonormal matrix \(\tilde{\textbf{W}}_1\). \(\phi _{\textbf{W}_1}\) is maximized as

using the singular value decomposition

where \(\textbf{K}_{1}\) and \(\textbf{L}_{1}\) are the matrices of the left and right singular vectors of \((\textbf{Z}_1^{\prime }\textbf{Z}_1)^{-1/2}\textbf{M}_1\), respectively, and \({\varvec{\Lambda }}_{1}\) is the diagonal matrix of the singular values arranged in descending order (Ten Berge, 1993). The equality in (45) holds when \(\tilde{\textbf{W}}_1 = \textbf{K}_{1}\textbf{L}_{1}^{\prime }\), which leads to

as the update formula for \(\textbf{W}_1\) in Step 2.
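The Step 2 update can be sketched in NumPy as follows. This is a minimal illustration, not the author's R code: the function names `inv_sqrt_spd` and `update_W1` are hypothetical, the data are random, and \(\textbf{Z}_1^{\prime }\textbf{Z}_1\) is assumed to be nonsingular. It forms \((\textbf{Z}_1^{\prime }\textbf{Z}_1)^{-1/2}\textbf{M}_1\), takes its thin singular value decomposition, and maps \(\textbf{K}_1\textbf{L}_1^{\prime }\) back to \(\textbf{W}_1\).

```python
import numpy as np

def inv_sqrt_spd(S):
    """Inverse symmetric square root of a symmetric positive-definite matrix."""
    vals, vecs = np.linalg.eigh(S)
    return (vecs / np.sqrt(vals)) @ vecs.T

def update_W1(Z1, M1):
    """Maximize tr(W1' M1) subject to W1' Z1' Z1 W1 = I via a thin SVD."""
    S_inv_half = inv_sqrt_spd(Z1.T @ Z1)
    K, _, Lt = np.linalg.svd(S_inv_half @ M1, full_matrices=False)
    return S_inv_half @ K @ Lt  # (Z1'Z1)^{-1/2} K1 L1'

# Illustrative random inputs (not data from the paper).
rng = np.random.default_rng(0)
N, J1, D1 = 50, 6, 2
Z1 = rng.standard_normal((N, J1))
M1 = rng.standard_normal((J1, D1))

W1 = update_W1(Z1, M1)
constraint_gap = np.abs(W1.T @ Z1.T @ Z1 @ W1 - np.eye(D1)).max()
best = np.trace(W1.T @ M1)

# Compare with random matrices that also satisfy the constraint.
S_inv_half = inv_sqrt_spd(Z1.T @ Z1)
others = []
for _ in range(200):
    Q, _ = np.linalg.qr(rng.standard_normal((J1, D1)))  # column-orthonormal
    others.append(np.trace((S_inv_half @ Q).T @ M1))
max_other = max(others)
```

In this sketch `best` equals the sum of the singular values of \((\textbf{Z}_1^{\prime }\textbf{Z}_1)^{-1/2}\textbf{M}_1\), the upper bound attained by \(\tilde{\textbf{W}}_1 = \textbf{K}_1\textbf{L}_1^{\prime }\), and no random feasible candidate exceeds it.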

The update formula for \(\textbf{W}_2\) in Step 3 is similarly given by

where the columns of \(\textbf{K}_2\) and \(\textbf{L}_2\) are the left and right singular vectors of \((\textbf{Z}_2^{\prime }{} \textbf{Z}_2)^{-1/2}(\textbf{Z}_2^{\prime }{} \textbf{Z}_2\textbf{C}_2^{\prime } + \textbf{Z}_2^{\prime }{} \textbf{Z}_1\textbf{W}_1(\textbf{B}_1 + \textbf{B}_2^{\prime }))\), respectively.

The \(\textbf{C}_1\) and \(\textbf{C}_2\) minimizing \(\phi \) are simply obtained by multivariate regression:

The update formulae for \(\textbf{B}_1\) and \(\textbf{B}_2\) in Step 5 are similarly given by

The second example in the fourth section fixes \(\textbf{B}_2\) as \(_{D_2}\textbf{O}_{D_1}\), which is accomplished by setting \(\textbf{B}_2 = \ _{D_2}\textbf{O}_{D_1}\) in Step 1 and suppressing the update of \(\textbf{B}_2\) in Step 5.
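The whole of Steps 1–6 can be sketched as below, under a loudly stated assumption: since the displayed loss is not reproduced here, the code takes \(\phi \) to be the sum of two measurement terms, \(\Vert \textbf{Z}_d - \textbf{Z}_d\textbf{W}_d\textbf{C}_d\Vert ^2\) for \(d = 1, 2\), and two between-set path terms, \(\Vert \textbf{Z}_2\textbf{W}_2 - \textbf{Z}_1\textbf{W}_1\textbf{B}_1\Vert ^2\) and \(\Vert \textbf{Z}_1\textbf{W}_1 - \textbf{Z}_2\textbf{W}_2\textbf{B}_2\Vert ^2\), which is the form consistent with \(\textbf{M}_1\) and with the matrix whose singular vectors define the \(\textbf{W}_2\) update. With orthonormal component scores, the regression updates then reduce to cross-products. All function and argument names (`fit_once`, `fit`, `n_starts`, `max_iter`, `fix_B2`) are hypothetical, the data are random, and only five starts are used for speed rather than \(M_{ST} = 100\).

```python
import numpy as np

def inv_sqrt_spd(S):
    """Inverse symmetric square root of a symmetric positive-definite matrix."""
    vals, vecs = np.linalg.eigh(S)
    return (vecs / np.sqrt(vals)) @ vecs.T

def constrained_weights(H, M):
    """argmax of tr(W' M) subject to W' S W = I, where H = S^{-1/2}."""
    K, _, Lt = np.linalg.svd(H @ M, full_matrices=False)
    return H @ K @ Lt

def loss(Z1, Z2, W1, W2, C1, C2, B1, B2):
    """ASSUMED loss: two measurement terms plus two between-set path terms."""
    G1, G2 = Z1 @ W1, Z2 @ W2  # component scores
    return (np.sum((Z1 - G1 @ C1) ** 2) + np.sum((Z2 - G2 @ C2) ** 2)
            + np.sum((G2 - G1 @ B1) ** 2) + np.sum((G1 - G2 @ B2) ** 2))

def fit_once(Z1, Z2, D1, D2, rng, eps=1e-6, max_iter=500, fix_B2=False):
    """One run of Steps 1-6 from a random start; returns parameters and loss trace."""
    J1, J2 = Z1.shape[1], Z2.shape[1]
    S11, S22, S12 = Z1.T @ Z1, Z2.T @ Z2, Z1.T @ Z2
    H1, H2 = inv_sqrt_spd(S11), inv_sqrt_spd(S22)
    # Step 1: random initial values (W2 is mapped onto the constraint set).
    W2 = constrained_weights(H2, rng.standard_normal((J2, D2)))
    C1 = rng.standard_normal((D1, J1))
    C2 = rng.standard_normal((D2, J2))
    B1 = rng.standard_normal((D1, D2))
    B2 = np.zeros((D2, D1)) if fix_B2 else rng.standard_normal((D2, D1))
    trace = []
    for _ in range(max_iter):
        W1 = constrained_weights(H1, S11 @ C1.T + S12 @ W2 @ (B1.T + B2))    # Step 2
        W2 = constrained_weights(H2, S22 @ C2.T + S12.T @ W1 @ (B1 + B2.T))  # Step 3
        C1, C2 = W1.T @ S11, W2.T @ S22      # Step 4: regression on orthonormal scores
        B1 = W1.T @ S12 @ W2                 # Step 5
        if not fix_B2:                       # B2 stays at zero when it is fixed
            B2 = W2.T @ S12.T @ W1
        trace.append(loss(Z1, Z2, W1, W2, C1, C2, B1, B2))
        if len(trace) > 1 and trace[-2] - trace[-1] < eps:                   # Step 6
            break
    return (W1, W2, C1, C2, B1, B2), trace

def fit(Z1, Z2, D1, D2, n_starts=5, seed=0, **kwargs):
    """Multi-start wrapper: keep the run with the smallest final loss."""
    rng = np.random.default_rng(seed)
    runs = [fit_once(Z1, Z2, D1, D2, rng, **kwargs) for _ in range(n_starts)]
    return min(runs, key=lambda run: run[1][-1])

# Illustrative random data (not data from the paper).
rng = np.random.default_rng(1)
Z1 = rng.standard_normal((100, 6))
Z2 = rng.standard_normal((100, 5))
params, trace = fit(Z1, Z2, D1=2, D2=2)
params_fixed, trace_fixed = fit(Z1, Z2, D1=2, D2=2, fix_B2=True)
```

Each entry of `trace` is the loss after one full sweep of Steps 2–5, so the sequence should be non-increasing. Passing `fix_B2=True` mirrors the treatment described above for the second example, where \(\textbf{B}_2\) is set to zero in Step 1 and never updated.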

Appendix B

About this article

Cite this article

Yamashita, N. Exploratory Procedure for Component-Based Structural Equation Modeling for Simple Structure by Simultaneous Rotation. Psychometrika (2023). https://doi.org/10.1007/s11336-023-09942-5

DOI: https://doi.org/10.1007/s11336-023-09942-5