Abstract

Machine learning is a broad field of study that can be applied in many areas of science. In mining, it has already been used in many cases, for example, in the mineral sorting process, in resource modeling, and for the prediction of metallurgical variables. In this paper, we use for defining estimation domains, which is one of the first and most important steps to be taken in the entire modeling process. In unsupervised learning, cluster analysis can provide some interesting solutions for dealing with the stationarity in defining domains. However, choosing the most appropriate technique and validating the results can be challenging when performing cluster analysis because there are no predefined labels for reference. Several methods must be used simultaneously to make the conclusions more reliable. When applying cluster analysis to the modeling of mineral resources, geological information is crucial and must also be used to validate the results. Mining is a dynamic activity, and new information is constantly added to the database. Repeating the whole clustering process each time new samples are collected would be impractical, so we propose using supervised learning algorithms for the automatic classification of new samples. As an illustration, a dataset from a phosphate and titanium deposit is used to demonstrate the proposed workflow. Automating methods and procedures can significantly increase the reproducibility of the modeling process, an essential condition in evaluating mineral resources, especially for auditing purposes. However, although very effective in the decision-making process, the methods herein presented are not yet fully automated, requiring prior knowledge and good judgment.

You have full access to this open access chapter, Download conference paper PDF

Similar content being viewed by others

Keywords

1 Introduction

1.1 Machine Learning in Mining

Machine learning (ML), a term introduced by [31], enables computers to learn from data to perform tasks then and make decisions based on the mathematic models that were built, without giving specific commands to these computers. It has been heavily applied in many fields of science and industry. Although not a new subject, it has enjoyed high popularity and a sharp increase in development over the last decades, thanks to the growth of computer power and easy access to the public.

ML is a complex and broad scientific field and must be used with caution. It can offer interesting solutions, especially regarding complex problems with big databases and high dimensionality, when properly applied. In the mining industry, it has already been applied in several tasks, for example in the definition of domains for resource modeling (e.g., [12, 18, 19, 27]), in the mineral analysis and sorting process (e.g., [6, 14, 33]) and for the prediction of metallurgical variables (e.g., [16, 22, 23]).

This paper specifically addresses the matter of defining domains for modeling using cluster analysis. We compare different algorithms and elaborate on the formal validation of the spatial distribution of the resulting clusters using correlograms of the indicators, as suggested in [21].

Additionally, we discuss and apply supervised learning algorithms for the automatic classification of new samples. Consequently, incorporating new data into the database respects the same rules used to define those domains, which has been suggested by [27] and is now applied in a real case scenario with a phosphate-titanium illustration case.

1.2 Stationarity in the Context of Mineral Resource Modeling

The concept of stationarity is closely related to the homogeneity of geological bodies. We will assume that a phenomenon is stationary when it shows constant expected values, covariance moments, and autocorrelation structures across the study area in any given location, a simplified definition based on [15]. However, these characteristics are rarely present over large volumes of in situ natural materials. Therefore, it becomes necessary to distinguish more homogeneous portions inside mineral deposits, the so-called “modeling domains”. Hence, the geologic block models are more accurate to the reality that they intend to represent.

Proper data statistics and a good understanding of the geological context are essential for an adequate segmentation of a mineral deposit into domains for modeling. Unsupervised machine learning techniques, specifically cluster analysis algorithms, can be especially suited for this matter. These methods can divide the available data into groups based on similarities and dissimilarities.

1.3 Types of Clustering Algorithms and Background

Traditionally, cluster analysis uses the relationships that are present in the data based only on statistical parameters, that is, the relationships in the attribute (multivariate) space (e.g., [7, 17, 34]), without considering the spatial connectivity or the geological aspects [29]. Thus, using such traditional algorithms on geostatistical datasets encounters considerable limitations, as statistical similarity does not necessarily imply geographic continuity.

In the last decades, new methods were introduced to analyze the clustering of spatial data. These algorithms offer the possibility of grouping data into spatially contiguous clusters and, at the same time, respect the statistical relationships between the variables (e.g., [3, 8, 18, 24, 27, 32]).

The method presented by Oliver and Webster [24] uses spatial analysis to determine the scale of spatial variation. This is then applied for clustering the samples into spatial contiguous groups. Ambroise et al. [1] introduced a technique where spatial constraints are applied to the expectation–maximization algorithm [5]. Scrucca [32] presented a method based on the autocorrelation statistics developed by Getis and Ord [13] and Ord and Getis [25].

Romary et al. [28] applied spatial clustering based on the traditional agglomerative hierarchical method and, later, Romary et al. [27] added a new approach, inspired on the spectral clustering algorithm. Fouedjio [8, 9] applied a non-parametric kernel estimator, developing an algorithm also based on the spectral approach. Fouedjio [10] then extended the spectral clustering approach presented by Fouedjio [8] to be applied to large scale geostatistical datasets.

Martin and Boisvert [18] introduced a method that involves: (i) introduction of a new algorithm based on clustering ensembles and (ii) a metric that combines the spatial and the multivariate character of the data.

D`Urso and Vitale [3] proposed a modification of the technique that was presented by Fouedjio [9], aiming at neutralizing eventual spatial outliers. [11] reviewed many of the spatial clustering techniques, categorizing them according to their characteristics.

1.4 Discussions on the Validation Process

All unsupervised techniques aim at finding patterns in unlabeled data, allowing the definition of groups based on their similarities/dissimilarities. This fact is what brings up one of the most significant challenges in cluster analysis: validation. Because there are no true values for reference, validation is a subjective task.

Some specific validation techniques, such as the Silhouette [30], the Davies-Bouldin [4], and the Calinski-Harabaz [2] methods can aid in this task, but only access the effectiveness of the clustering process in the multivariate space. Martin and Boisvert [18] proposed an alternative approach for measuring the clustering quality in the multivariate and geographic spaces simultaneously: the “dual-space metrics”.

An additional challenge is to choose the most appropriate number of clusters. An excessive number of domains can unnecessarily complicate the subsequent steps in the modeling workflow (e.g., contour modeling, estimation, simulation). On the other hand, very few domains can imply the mixing of statistical populations, compromising the accuracy of the resulting models.

Therefore, there is no ultimate methodology for cluster analysis when applied to geostatistical datasets. The results have to be tested and different scenarios compared in both multivariate and geographic spaces. Nevertheless, what is being tested is not the veracity of the clusters, but their relative quality, their practical sense, in other words, whether or not it was possible to group the data satisfactorily.

1.5 Supervised Learning Applied to the Classification of New Samples

Mining is a dynamic activity, and new information is constantly added to the database in every operation. Performing the entire cluster analysis each time that new samples are collected would be somewhat impractical. Furthermore, as the clustering techniques are based on the search for complex relationships in the data, other configurations could arise, slightly different from those already defined, which would require the revision of the whole modeling process, including the definition of contours and the analysis of the spatial continuity (i.e. variography).

At the same time, the new samples must be labeled according to the previously conducted cluster analysis [27]. In other words, they are classified according to the same rules so that a new sample, when assigned to a particular group, is more similar to the other samples of the same cluster than to samples from other clusters. As these designations do not follow simple rules, classifying new samples is not a trivial task, and supervised machine learning techniques are especially suitable for this matter. This can be achieved with algorithms such as decision trees, random forests, support vector machines, and artificial neural networks.

Figure 1 presents a flowchart with the proposed steps for the integrated cluster analysis and automatic classification of samples in a mineral resource modeling context [20]. Sporadically, as the database grows in number, the definition of groups can be updated, as indicated by the dashed line in the flowchart of Fig. 1, using samples that had not been previously used in the cluster analysis. The supervised classifier must then be updated so that it incorporates the new information to be used in the classification of new samples in a continuous process.

2 Methods and Workflow

2.1 Clustering Algorithms

In order to observe the behavior of different types of clustering approaches, four algorithms were applied in this study. Two of them do not consider the spatial distribution of the samples. The other two are of the spatial type, which account for the relationships of data in the attribute space as well as for the position of the samples in the geographic space:

-

(i)

k-means [17]—one of the most traditional and widely used clustering algorithms;

-

(ii)

Agglomerative hierarchical [34]—another widely used technique, firstly introduced in the field of taxonomy, and later adopted in many fields of science;

-

(iii)

Dual-space clusterer [18]—a specially designed method to deal with spatial data, herein referred to as dsclus,

-

(iv)

Autocorrelation-based clusterer [32]—another algorithm for handling spatial data, based on the clustering of local autocorrelation statistics, herein mentioned as acclus.

2.2 Validation Methods

The following validation methods were applied to evaluate the results and help to choose the best clustering algorithm and configuration:

-

(i)

The Silhouette [30], the Calinski-Harabasz [2], and the Davies-Bouldin [4] scores;

-

(ii)

The dual space metrics [18], which comprehends spatial entropy (H) and within clusters sum of squares (wcss);

-

(iii)

Indicators correlograms to assess the geographic continuity of the clusters (as suggested in [21]);

-

(iv)

Visual inspection of the spatial distribution and statistical evaluation of the clusters.

The metrics indicated on item (i) measure how well the elements are clustered inside the respective groups using Euclidean distances in the multivariate space. Higher values of both Silhouette and Calinski-Harabasz scores and lower Davies-Bouldin scores are desired, indicating more organized clusters.

Martin and Boisvert [18] proposed the dual-space metrics for simultaneously verifying multivariate and spatial cohesion of clusters. H and wcss are calculated independently but simultaneously evaluated, so the goodness of the clusterings are evaluated in geographic and multivariate spaces.

Generally, the best configurations for spatial data clustering are not those with the lowest multivariate scores nor those with the lowest spatial entropy, but ones with intermediate values. Lower H and wcss are desired. However, a tradeoff between these two metrics is usually observed. As already pointed out by Oliver and Webster [24] and was later empirically confirmed by Martin and Boisvert [18], higher cohesion in the multivariate space usually leads to the geographic fragmentation of the clusters. The solution is to combine both metrics and evaluate the results comparatively.

In order to further analyze and validate the geographic connectivity of the clusters, we used the mapping of the spatial continuity of the indicators based on Modena et al. [19]. However, those authors used variograms, which may be noisy in short distances. Here we use correlograms, as these are standardized, which gives more stability to the results, especially regarding short distances. In this method, a binary variable is defined, assuming value 1 for samples within the cluster being evaluated and 0 for all others. The correlogram for this binary variable is then plotted for different lags. Continuous clusters will result in structured correlograms and, fragmented clusters, in noisy correlograms and/or high nugget effects.

Choosing the adequate clustering configuration is a very subjective task. It is important to observe the statistical distributions of the clusters using tools such as histograms, boxplots, and scatter plots. Additionally, comparisons between the defined clustering domains and some geological aspects of the samples (e.g., rock type, alteration patterns) are essential.

2.3 Automatic Classification of New Samples

In order to perform the automatic classification, first the clustering-labeled dataset must be used to calibrate (test and define the parameters) the classifier. Differently from what happens in unsupervised techniques, validating the performance of a supervised algorithm is straightforward, as the data is already labeled, as it will be demonstrated in the illustration case that follows. In order to do so, the dataset must be divided into the training and test subsets. The quality of the classifier can be assessed with confusion matrices and with the examination of global metrics such as recall, precision, and accuracy. A prevalent and proper validation technique for supervised learning applications is the k-fold cross-validation, which splits the dataset into train and test sets several times, each of these with different sets of data to avoid overfitting.

2.4 Workflow

For a better understanding, the proposed workflow can be organized as follows:

-

(i)

Exploratory data analysis;

-

(ii)

Data standardization according to

$$Z= \frac{(X-m)}{s}$$(1)where Z is the standardized value, X is the original value, m is the mean, and s, the standard deviation of the original values;

-

(iii)

Computation of the mean scores of the Silhouette, Calinski-Harabasz, Davies-Bouldin, wcss, and H;

-

(iv)

Selection of the best scenarios based on the resulting scores from step (iii);

-

(v)

Verification of the geographic contiguity of the clusters, using the correlograms of the indicators defined by the classes;

-

(vi)

Visual inspection and statistical analysis of the scenarios selected in step (v);

-

(vii)

Selection of the best-suited scenario based on the previous steps;

-

(viii)

Calibration of a supervised classifier and automatic classification of new samples.

All methods were run using a Jupyter Notebook, under Windows 10, 64 bit with Python 3.6.5 installed via Anaconda; processor Intel® i7-3.20Ghz, with 24.0 GB RAM.

3 Case Study

3.1 Exploratory Data Analysis

The techniques mentioned in the previous sections were applied to a three-dimensional isotopic dataset from a phosphate-titanium deposit, containing 19.344 samples assayed for 12 oxides (P2O5, Fe2O3, MgO, CaO, Al2O3, SiO2, TiO2, MnO, Na2O, K2O, BaO, and Nb2O5) and loss on ignition (LoI). The samples also carry two categorical attributes: weathering patterns (Fig. 2a) and rock types (Fig. 2b). Figure 3 shows the histograms of the continuous variables, and Fig. 4, the bar charts with the sample counts of the two categorical variables. Tables 1 and 2 show brief descriptions of each of the typologies.

The distributions of each continuous variable are quite different, and some of them show considering asymmetry. Besides, it can be observed that complex relationships are present from the scatterplots of the most relevant variables (Fig. 5). These complexities and the differences in the scale of the raw variables can lead to inconsistencies when applying machine learning algorithms, so all continuous variables were standardized according to Eq. 1.

3.2 Applying Cluster Analysis and Verifying the Results

The four clustering algorithms (k-means, hierarchical, dsclus and acclus) were applied in seven different scenarios, each corresponding to a different number of clusters, from two to seven. The validation methods mentioned in the previous sections were applied in order to evaluate the clustering configurations.

To run the hierarchical and k-means techniques, we used the algorithms available on the Scikit-learn library [26]. For the first, we used the ward’s proximity distances and for the latter, the k-means++ option for the centroid initialization, which seeks to maximize the separation between the initial centroids, increasing speed and accuracy.

For dsclus and acclus, we used the algorithms available on GitHub, hosted in the account mentioned in Martin and Boisvert [18]. The dsclus being a clustering ensemble technique, we used 100 realizations, with 20 nearest neighbors and search volume = (0, 0, 0, 400, 400, 12). For acclus, 30 nearest neighbors were set, also with a search volume = (0, 0, 0, 400, 400, 12).

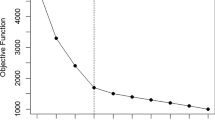

Figure 6 shows the results of the multivariate metrics and the spatial entropy for all four algorithms in all scenarios. It becomes evident that the traditional algorithms produce better results than the spatial techniques in the multivariate space, with k-means outperforming the hierarchical method. On the other hand, spatial techniques show better results in the geographic space, with dsclus outperforming acclus in almost every case. Therefore, it is noticeable that dsclus is preferable, as it shows more balanced results between the multivariate and the geographic aspects.

To verify geographic connectivity and multivariate organization simultaneously, the dual space metrics of [18]—wcss and spatial entropy—can be plotted in a scatter plot (Fig. 7). As already stated, low values for both H and wcss, are desirable. However, it is apparent in the figure that these two metrics are inversely proportional. The solution is to look for intermediate configurations that present a better balance between multivariate and geographic cohesion.

As for choosing the adequate number of clusters (k), intermediate values of all metrics are also preferable. Thus, even though the scenarios with two or three clusters show good scores, these configurations can lead to the statistical mixing of populations, which must be avoided, especially concerning a complex situation with high dimensionality. On the other hand, grouping the data in seven, eight, or even six clusters can take unnecessary complications in the following steps of the modeling process, such as the definition of contours and estimation, as some groups will be somewhat redundant. Therefore, the dsclus-clustered data with four or five groups seem to be better options and will be the only scenarios to be considered from now on.

Figure 8 shows the spatial distribution of the samples, colored by clustering code, to understand the clusters’ layout better. It becomes clear that the distribution in each case is comparable to the distributions of the weathering patterns and rock type (see Fig. 2), with a dominant horizontal layout.

A statistical evaluation of samples was also performed, and, as can be seen in the boxplots of Figs. 9 and 10, both scenarios show different statistical distributions, depending on the considered variable.

Finally, a sample count was performed to compare clusters and the categorical variables—weathering and rock type—and Fig. 11 presents the bar charts with the results.

3.3 Discussions on the Results of the Cluster Analysis

From the statistical analysis and the comparisons between clusters, weathering patterns, and rock types, a description of each cluster can be drawn so that they can compose modeling domains (Tables 3 and 4).

The scenario with four domains can be compared to the model used at the mine site in the case study. What is considered phosphate and titanium ores are saprolite materials comprised in our domains 0 and 2, respectively. The overburden waste, composed of soil, clay, and laterite, corresponds to our domain 3. Altered and fresh rocks from the base of the deposit are grouped in our domain 1. In the mine, semi-altered rocks can also be mined as phosphate ore, depending on P2O5 grades and CaO/P2O5 ratios.

Although the four-cluster scenario is the most similar to the model practiced in the mine, this resemblance shows some inconsistencies, mainly regarding the rocky materials (RSA and RSI). In the mine, fresh and altered rock materials are included in different modeling domains. This is due to a technical artifact: this way, particularly low grades of P2O5 and/or high grades of CaO will not contaminate the estimates of blocks classified as an altered rock in the model, which can be mined as ore in certain areas. In the global perspective of the cluster analysis, as conducted in this study, there is no evidence that RSA and RSI should constitute different modeling domains. Besides, the four-cluster configuration implies considerable losses of materials that could be mined as ore (included in our domain 1).

In the five-domain scenario, domain 0 comprises the rocky waste and phosphate-bearing rocks with high carbonate contents. Domain 1 is the phosphate ore, domain 4 is the titanium ore, and domain 2 is the overburden. Domain 3, although mainly composed of overburden, can be an alternate source of titanium. We conclude that this configuration is adequate compared to the four-domain scenario.

Another important observation is that these comparisons between the clustering results and the mine model are subjective evaluations because the geologic classifications (weathering patterns and rock types) are products of the personal interpretations of the company’s personnel. Thus, those classifications should not be taken as true data labels, although the matches with the clustering domains show a remarkable similarity.

3.4 Supervised Learning Applied to the Automatic Classification of New Samples

Once the most suitable scenario is chosen, the clustered data can be used to train a supervised classifier to be applied in the classification of new samples. Many algorithms can be used, such as k-nearest neighbors, decision trees, and random forests. In this paper, the latter was chosen.

First, the 19.344 labeled dataset was segmented into stratified training and testing sets in a proportion of 85%–15% (17.252 and 2.902 samples, respectively). As this is a case where the spatial configuration is important in defining clusters, the xyz coordinates were also used as input variables, along with all continuous attributes.

The input variables were standardized according to Eq. 1. The ‘Randomized Search CV’ (Pedregosa et al., 2012) method was used to define the best parameters of the Random Forest classifier. Fivefold stratified cross-validation was applied, and results are shown in Figs. 12 and 13.

The model was run with the 2.902-sample test set. It was verified that the results (Fig. 14) are consistent to those observed in the fivefold stratified cross validation, attesting that there is no under or overfitting in the model. The statistical affinities between some groups lead to some misclassifications, but results are acceptable, with a 92% overall accuracy.

As a final evaluation, the statistical distribution and the visual inspection of the automatically classified samples from the test set were checked. Figures 15 and 16 show that the results are consistent (see Figs. 8b and 10 for comparison).

4 Conclusions

Although very efficient in many cases, the application of traditional clustering algorithms is quite limited in modeling mineral resources since they only consider data relationships in the multivariate space, neglecting their geographic distribution. Thus, techniques that also consider the geographic distribution of samples are more appropriate, as demonstrated in the case study.

Parameterization and the validation of the clustering results are still complex decisions and entirely subjective. Therefore, despite being very effective in the decision-making process, those methods are not yet fully automated, requiring specialized knowledge and good judgment.

The geological characteristics of a mineral deposit should guide the classification of the samples. However, traditionally, these characteristics lead to subjective classifications, resulting from personal interpretation in the data acquisition phase. Such classifications should therefore not be given as unquestionable labels, and differences should arise compared to the results of clustering algorithms. It is evident that the geological characteristics must be reflected in the clusters in some way.

Once the best scenario has been defined, the codes of each group can be fed as labels in supervised learning algorithms (e.g., decision trees, random forests, k-nearest neighbors) for the calibration of mathematical models for the automatic classification of new samples. Periodically, the complete analysis should be revisited with all samples, and the supervised classifier, updated.

One of the most significant advantages of applying machine learning to mining problems is their ability to make measurements in a multidimensional space, using complex mathematical relationships, which is practically impossible for a human analyst. Apart from the subjectivity of the validation and final decision, this automation significantly increases reproducibility in the modeling processes, which is essential in evaluating mineral resources, especially for audition purposes.

References

Ambroise, C., Dang, M., Govaert, G.: Clustering of spatial data by the EM algorithm. In: Proceedings of GEOEV I—Geostatistics for Environmental Applications, pp. 493–504 (1997). https://doi.org/10.1007/978-94-017-1675-8_40

Calinski, T., Harabasz, J.: A dendrite method for cluster analysis. Commun. Stat. Theory Methods 3(1), 1–27 (1974). https://doi.org/10.1080/03610927408827101

D`Urso, P., Vitale, V.: A robust hierarchical clustering for georeferenced data. Spatial Stat., 35 (2020)

Davies, D.L., Bouldin, D.W.: A cluster separation measure. IEEE Trans. Pattern Anal. Mach. Intell. 2, 224–227 (1979). https://doi.org/10.1109/TPAMI.1979.4766909

Dempster, A.P., Laird, N.M., Rubin, D.B.: Maximum likelihood from incomplete data via the EM algorithm. J. Roy. Stat. Soc.: Ser. B (Methodol.) 39(1), 1–22 (1977). https://doi.org/10.1111/j.2517-6161.1977.tb01600.x

Drumond, D. A.: Estimativa e classificação de variáveis geometalúrgicas a partir de técnicas de aprendizado de máquinas. Universidade Federal do Rio Grande do Sul (2019). Retrieved from https://www.lume.ufrgs.br/handle/10183/202480

Ester, M., Kriegel, H.-P., Sander, J., Xu, X.: A density-based algorithm for discovering clusters in large spatial databases with noise. In: Proceedings of the 2nd International Conference on Knowledge Discovery and Data Mining, pp. 226–231 (1996). https://www.aaai.org/Papers/KDD/1996/KDD96-037.pdf

Fouedjio, F.: A hierarchical clustering method for multivariate geostatistical data. Spatial Stat. 18, 333–351 (2016). https://doi.org/10.1016/j.spasta.2016.07.003

Fouedjio, F.: A spectral clustering approach for multivariate geostatistical data. Int. J. Data Sci. Anal. 4(4), 301–312 (2017). https://doi.org/10.1007/s41060-017-0069-7

Fouedjio, F.: A spectral clustering method for large-scale geostatistical datasets. In: Proceedings of the Thirteenth International Conference in Machine Learning and Data Mining in Pattern Recognition, vol. 10358 LNAI, pp. 248–261 (2017). https://doi.org/10.1007/978-3-319-62416-7_18

Fouedjio, F.: Clustering of multivariate geostatistical data. Wiley Interdiscip. Rev. Comput. Stat. 1–13 (2020). https://doi.org/10.1002/wics.1510

Fouedjio, F., Hill, E.J., Laukamp, C.: Geostatistical clustering as an aid for ore body domaining: case study at the Rocklea Dome channel iron ore deposit, Western Australia. Appl. Earth Sci. Trans. Inst. Mining Metallurgy 127(1), 15–29 (2018). https://doi.org/10.1080/03717453.2017.1415114

Getis, A., Ord, J.K.: The analysis of spatial association by use of distance statistics. Geogr. Anal. 24(3), 189–206 (1992). https://doi.org/10.1111/j.1538-4632.1992.tb00261.x

Gülcan, E., Gülsoy, Ö.Y.: Performance evaluation of optical sorting in mineral processing—A case study with quartz, magnesite, hematite, lignite, copper and gold ores. Int. J. Miner. Process. (2017). https://doi.org/10.1016/j.minpro.2017.11.007

Journel, A.G., Huijbregts, C.: Mining geostatistics. Academic Press Limited, London (1978)

Lishchuk, V., Lund, C., Ghorbani, Y.: Evaluation and comparison of different machine-learning methods to integrate sparse process data into a spatial model in geometallurgy. Miner. Eng. 134, 156–165 (2019). https://doi.org/10.1016/j.mineng.2019.01.032

MacQueen, J.: Some methods for classification and analysis of multivariate observations. In: Proceedings of the Fifth Berkeley Symposium on Mathematical Statistics and Probability, vol. 1, pp. 281–296 (1967).

Martin, R., Boisvert, J.: Towards justifying unsupervised stationary decisions for geostatistical modeling: Ensemble spatial and multivariate clustering with geomodeling specific clustering metrics. Comput. Geosci. 120, 82–96 (2018). https://doi.org/10.1016/j.cageo.2018.08.005

Modena, R.C.C., Moreira, G. de C., Marques, D.M., Costa, J.F.C.L.: Avaliação de técnicas de agrupamento para definição de domínios estacionários com o auxílio de geoestatística. In: Proceedings of the Twentieth Mining Symposium at the Fifth ABM Week, pp. 91–100 (2019). São Paulo. https://doi.org/10.5151/2594-357x-33405

Moreira, G.C.: Análise de agrupamento aplicada à definição de domínios de estimativa para a modelagem de recursos minerais. Universidade Federal do Rio Grande do Sul (2020). Retrieved from https://lume.ufrgs.br/handle/10183/212457

Moreira, G.C., Costa, J.F.C.L., Marques, D.M.: Defining geologic domains using cluster analysis and indicator correlograms: a phosphate-titanium case study. Appl. Earth Sci. 129(4), 176–190 (2020). https://doi.org/10.1080/25726838.2020.1814483

Nakhaei, F., Mosavi, M.R., Sam, A., Vaghei, Y.: Recovery and grade accurate prediction of pilot plant flotation column concentrate: Neural network and statistical techniques. Int. J. Miner. Process. 110, 140–154 (2012). https://doi.org/10.1016/j.minpro.2012.03.003

Niquini, F.G.F., Costa, J.F.C.L.: Mass and metallurgical balance forecast for a zinc processing plant using artificial neural networks. Nat. Resour. Res. (2020). https://doi.org/10.1007/s11053-020-09678-4

Oliver, M.A., Webster, R.: A geostatistical basis for spatial weighting in multivariate classification. Math. Geol. 21(1), 15–35 (1989). https://doi.org/10.1007/BF00897238

Ord, J.K., Getis, A.: Local spatial autocorrelation statistics: distributional issues and an application. Geogr. Anal. 27, 286–306 (1995). https://doi.org/10.1111/j.1538-4632.1995.tb00912.x

Pedregosa, F., Varoquaux, G., Gramfort, A., Michel, V., Thirion, B., Grisel, O., et al.: Scikit-learn: machine learning in python. J. Mach. Learn. Res. 12, 2825–2830 (2011)

Romary, T., Ors, F., Rivoirard, J., Deraisme, J.: Unsupervised classification of multivariate geostatistical data: two algorithms. Comput. Geosci. 85, 96–103 (2015). https://doi.org/10.1016/j.cageo.2015.05.019

Romary, T., Rivoirard, J., Deraisme, J., Quinones, C., Freulon, X.: Domaining by clustering multivariate geostatistical data. In: Geostatistics Oslo, pp. 455–466 (2012). https://doi.org/10.1007/978-94-007-4153-9_37

Rossi, M.E., Deutsch, C.V.: Mineral resource estimation. Mineral Resource Estimation. Springer Science & Business Media (2014). https://doi.org/10.1007/978-1-4020-5717-5

Rousseeuw, P.J.: Silhouettes: A graphical aid to the interpretation and validation of cluster analysis. J. Comput. Appl. Math. 20(C), 53–65 (1987). https://doi.org/10.1016/0377-0427(87)90125-7

Samuel, A.L.: Some studies in machine learning using the game of checkers. IBM J. Res. Dev. 3(3), 210–229 (1959)

Scrucca, L.: Clustering multivariate spatial data based on local measures of spatial autocorrelation. Quaderni del Dipartimento di Economia, Finanza e Statistica 20(1), 1–25 (2005). http://www.ec.unipg.it/DEFS/uploads/spatcluster.pdf

Shu, L., Osinski, G.R., McIsaac, K., Wang, D.: An automatic methodology for analyzing sorting level of rock particles. Comput. Geosci. 120, 97–104 (2018). https://doi.org/10.1016/j.cageo.2018.08.001

Sokal, R.R., Sneath, P.H.A.: Principles of numerical taxonomy. J. Mammal. 46(1), 111 (1965). https://doi.org/10.2307/1377831

Acknowledgements

The authors would like to thank the Mineral Exploration and Mining Planning Laboratory (LPM) at the Federal University of Rio Grande do Sul (UFRGS), for providing the necessary conditions for developing this work. Luiz Englert Foundation (FLE), the Coordination for the Improvement of Higher Education Personnel (Capes) and the National Council for Scientific and Technological Development (CNPq) are acknowledged for their financial support. We would also like to thank Dr. Ryan Martin for giving access to his codes and Mosaic Fertilizantes for providing the data.

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Open Access This chapter is licensed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license and indicate if changes were made.

The images or other third party material in this chapter are included in the chapter's Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the chapter's Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder.

Copyright information

© 2023 The Author(s)

About this paper

Cite this paper

de Castro Moreira, G., Costa, J.F.C.L., Marques, D.M. (2023). Applying Clustering Techniques and Geostatistics to the Definition of Domains for Modelling. In: Avalos Sotomayor, S.A., Ortiz, J.M., Srivastava, R.M. (eds) Geostatistics Toronto 2021. GEOSTATS 2021. Springer Proceedings in Earth and Environmental Sciences. Springer, Cham. https://doi.org/10.1007/978-3-031-19845-8_16

Download citation

DOI: https://doi.org/10.1007/978-3-031-19845-8_16

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-19844-1

Online ISBN: 978-3-031-19845-8

eBook Packages: Earth and Environmental ScienceEarth and Environmental Science (R0)