Abstract

The ultimate aim of radiobiological research is to establish a quantitative relationship between the radiation dose absorbed by a biological sample (whether a cell, a tissue, an organ, or a body) and the effect caused. Radiobiological investigations therefore need to be supported by accurate and precise dosimetric measurements. Rigorous, standardized methodologies have been established to assess and quantify the radiation dose absorbed by biological samples, and these are reviewed and discussed in this chapter. Dosimetric concepts at the macro- and microscopic levels are discussed with a focus on key physical quantities, their measurement technologies, and the link to biological damage and response. The chapter also describes state-of-the-art irradiation facilities (e.g., mini- and micro-beams) used for probing the mechanisms underpinning radiobiological responses. Finally, the link between energy deposition events and detectable biological effects (from the molecular to the organism level) is investigated using Monte Carlo simulation codes and macroscopic radiobiological models.

Fabiana Da Pieve is currently employed by the European Research Council Executive Agency, European Commission, BE-1049 Brussels, Belgium. The views expressed are purely those of the authors and may not in any circumstances be regarded as stating an official position of the European Commission

Learning Objectives

-

To understand the concept of dose and the notion of energy deposition and energy transfer.

-

To learn the working principles of the main types of detectors used for radiation dosimetry.

-

To understand how the dose is measured in micrometric volumes and what the importance of microdosimetry is in radiobiology.

-

To understand how early DNA damage is generated by ionizing radiation and how this can be simulated using Monte Carlo (MC) track structure codes.

-

To get an overview of the state of the art of the mechanistic simulation and the DNA damage scoring methods.

-

To get to know micro-beams and mini-beams, their production and use, and why they are important for radiobiological research.

-

To understand the underlying assumptions and derivation of target theory, which is the basis for all stochastic dose-response models at molecular and cellular level.

-

To learn about the linear quadratic model, the strengths and limitations of the model as well as the different interpretations of the model with respect to the underlying biology.

-

To understand the difficulties in modeling stochastic effects for whole organisms and for different dose rates.

4.1 Principles of Radiation Dosimetry

4.1.1 Energy Deposition and Transfer

4.1.1.1 Fluence

Particle fluence, or planar fluence, Φ, is defined as the number of ionizing particles which traverse a finite plane in space some distance from the source. If dN particles are incident on a planar surface of area, dA, then the fluence is Φ = dN/dA [1, 2].

We may also define the energy fluence, Ψ, which is the radiant energy, dR, which crosses a plane of area, dA, as Ψ = dR/dA. The radiant energy, R, of a radiation field is defined as the total energy of the particles that cross the plane, excluding their rest mass energy. The fluence rate may be defined in terms of energy fluence or planar fluence and is simply the rate at which either energy fluence or planar fluence cross unit area. In the context of the amount of radiant energy absorbed in matter, these concepts provide the basis from which all the remaining dosimetric quantities are defined [1, 2].

4.1.1.2 Exposure

Exposure, X, is defined as the total charge which is liberated per unit mass in air by ionizing radiation [1, 2]. Its unit is the Roentgen, R, where one Roentgen is 2.58 × 10⁻⁴ C kg⁻¹. Exposure is related to energy fluence, Ψ, by the following equation:

\[ X=\Psi {\left(\frac{\mu_{\mathrm{en}}}{\rho}\right)}_{\mathrm{air}}\frac{e}{W_{\mathrm{air}}} \qquad (4.1) \]

where \( {\left({\mu}_{\mathrm{en}}/\rho \right)}_{\mathrm{air}} \) is the mass energy absorption coefficient of air, which defines the fraction of the energy of a beam of particles that is absorbed per unit mass of air at a particular beam energy, \( {W}_{\mathrm{air}} \) = 33.97 eV is the energy required to produce an ion pair in air, and e is the charge of the electron.
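As a minimal numerical sketch of the relation between exposure and energy fluence, the following Python snippet evaluates X = Ψ (μen/ρ)air (e/Wair) in SI units. The function names, the energy fluence, and the coefficient value are hypothetical placeholders chosen for illustration only; in practice the mass energy absorption coefficient must be taken from tabulated data for the beam energy of interest.

```python
# Sketch: exposure in air from energy fluence, X = Psi * (mu_en/rho)_air * (e / W_air).
# W_air = 33.97 eV per ion pair is numerically equal to W_air/e = 33.97 J per coulomb.
W_AIR_J_PER_C = 33.97
ROENTGEN_C_PER_KG = 2.58e-4  # 1 R = 2.58e-4 C/kg (value quoted in the text)


def exposure_c_per_kg(energy_fluence_j_per_m2, mu_en_rho_air_m2_per_kg):
    """Exposure (C/kg) from energy fluence (J/m^2) and (mu_en/rho)_air (m^2/kg)."""
    return energy_fluence_j_per_m2 * mu_en_rho_air_m2_per_kg / W_AIR_J_PER_C


def to_roentgen(exposure):
    """Convert an exposure in C/kg to Roentgen."""
    return exposure / ROENTGEN_C_PER_KG


# Hypothetical inputs, NOT taken from the chapter:
psi = 1.0               # J/m^2, assumed energy fluence
mu_en_rho_air = 2.8e-3  # m^2/kg, illustrative placeholder coefficient
x = exposure_c_per_kg(psi, mu_en_rho_air)
print(f"X = {x:.3e} C/kg = {to_roentgen(x):.3f} R")
```

Expressing Wair/e in J C⁻¹ keeps the whole calculation in SI units, so the result is obtained directly in C kg⁻¹ before conversion to Roentgen.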

4.1.1.3 Kerma

Kerma, K, is defined as the kinetic energy released per unit mass of material by a specific combination of an incident radiation field and an absorbing material. Kerma is related to energy fluence, Ψ, by the following equation:

\[ K=\Psi \left(\frac{\mu_{\mathrm{tr}}}{\rho}\right) \qquad (4.2) \]

where \( {\mu}_{\mathrm{tr}}/\rho \) is the mass energy transfer coefficient, which defines the fraction of the incident radiant energy which is released as kinetic energy in charged particles in a given volume of material. More strictly, the Kerma is the amount of energy liberated through ionization in the volume encompassed by a unit mass of an absorbing material [1, 2]. This energy is transferred through ionization of the material at the atomic level and is ultimately manifested in the kinetic energy of ionization electrons in the material. As may be seen from Fig. 4.1, energetic charged particles or photons created in collisions between incident ionizing particles and the material may not deposit their energy in the mass volume. Therefore, Kerma is a measure of the amount of ionizing energy offered for absorption in the material, which in this case is the initial kinetic energy of the primary electron.

4.1.1.4 Energy Imparted

The energy imparted, ϵ, by ionizing radiation to the matter in a volume is given by the following equation [3]:

\[ \epsilon =\sum {R}_{\mathrm{in}}-\sum {R}_{\mathrm{out}}+\sum Q \qquad (4.3) \]

where the first and second terms in the equation, respectively, describe the sums of all the radiant energies of all ionizing radiations entering and leaving a particular volume. The third term denotes the sum of all the mass energies of all the particles produced during the interactions of the ionizing radiations with the matter to which it is imparting energy. In diagnostic radiology, the photon energy is not sufficient to instigate pair production (production of positrons and electrons in the vicinity of a strongly positive nucleus), and therefore particle production does not occur. Thus, for diagnostic energies, the third term on the right-hand side of Eq. (4.3) is zero [1, 4]. Energy Imparted is quoted in the units of energy, the Joule, J.

A distinction must be made between the term “Energy Imparted” and the term “Imparted Energy.” Energy Imparted is the term for a gross quantity or concept, where the energy is imparted to matter that has a macroscopic size. Imparted Energy is the energy that is imparted in a single interaction between any particle and the matter in a given volume. The Imparted Energy, dϵ, in an interaction is a stochastic quantity, and is difficult to measure, and impossible to infer with any great accuracy [3, 5]. Thus, the Energy Imparted is also a stochastic quantity. However, repeated measurements can establish mean energy imparted, \( \overline{\varepsilon} \), which is a non-stochastic quantity (Box 4.1).

Box 4.1 Dosimetry Quantities: Kerma and Exposure

-

Kerma, K, is defined as the kinetic energy released per unit mass of material by a specific radiation field and it is related to energy fluence, Ψ.

-

Exposure, X, is defined as the total charge which is liberated per unit mass in air by ionizing radiation. Its unit is the Roentgen, R.

4.1.2 Absorbed Dose

The absorbed dose (sometimes referred to simply as “dose”) is the radiant ionizing energy absorbed per unit mass of absorbing material. It is therefore defined as:

\[ D=\frac{\mathrm{d}\overline{\varepsilon }}{\mathrm{d}m} \]

The quantity ε/m is sometimes referred to as the specific energy. It is stochastic in the same way that the imparted energy in a given interaction is stochastic, but with repeated measurements, and on macroscopic scales involving many single particle interactions, it becomes a measurable quantity ([1], p. 86; [2, 4]). The unit of Absorbed dose is the Gray, Gy, which is equal to J kg⁻¹.

The Kerma in Eq. (4.2) may be split into two parts depending on the ways in which the kinetic energy transferred to charged particles is subsequently lost in the material [1]. The charged particles set in motion by the photons may lose their energy either through collision interactions, in which excitation and ionization of the stopping material occur, or through radiative processes, in which their energy is radiated away as photons. Thus, the Kerma can be expressed as:

\[ K={K}_{\mathrm{c}}+{K}_{\mathrm{r}} \]

where \( {K}_{\mathrm{c}} \) is the portion transferred through collisions, and \( {K}_{\mathrm{r}} \) is the portion transferred through radiative interactions [1]. Radiative interactions generally occur in situations in which charged particles are incident on a material [1]. In the case of diagnostic radiology, Kerma is released through collision interactions, with Collision Kerma therefore given by:

\[ {K}_{\mathrm{c}}=\Psi \left(\frac{\mu_{\mathrm{en}}}{\rho}\right) \]

In diagnostic radiology, a simple relationship between Kerma and Absorbed dose may be derived. When charged particle equilibrium (CPE) exists in a medium, the number of charged particles leaving a unit mass volume is replaced by an equal number entering from other mass volumes. In such a situation, which occurs at the photon energies in diagnostic radiology, all Kerma is absorbed in the unit mass volume. It has been shown by Attix that, for a medium of uniform density and atomic composition, such a situation does indeed exist for a field of X-ray photons and a uniformly irradiated medium [1, 2]. In this case the Absorbed dose, D, and Collision Kerma, \( {K}_{\mathrm{c}} \), are equal, such that

\[ D={K}_{\mathrm{c}}=\Psi \left(\frac{\mu_{\mathrm{en}}}{\rho}\right) \]
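The chain from particle fluence to absorbed dose under charged particle equilibrium can be sketched numerically as follows. This is an illustration under stated assumptions rather than a dosimetry protocol: the beam is taken to be monoenergetic, the function names are hypothetical, and the fluence, photon energy, and mass energy absorption coefficient are placeholder values that are not taken from this chapter.

```python
# Sketch: absorbed dose under CPE for a monoenergetic photon beam.
# Psi = Phi * E, K_c = Psi * (mu_en/rho), and D = K_c when CPE holds.
MEV_TO_J = 1.602176634e-13  # joules per MeV


def energy_fluence_j_per_cm2(fluence_per_cm2, photon_energy_mev):
    """Energy fluence Psi (J/cm^2) of a monoenergetic beam."""
    return fluence_per_cm2 * photon_energy_mev * MEV_TO_J


def collision_kerma_gy(psi_j_per_cm2, mu_en_rho_cm2_per_g):
    """Collision kerma in Gy; the factor 1000 converts J/g to J/kg."""
    return psi_j_per_cm2 * mu_en_rho_cm2_per_g * 1000.0


def absorbed_dose_cpe_gy(psi_j_per_cm2, mu_en_rho_cm2_per_g):
    """Absorbed dose in Gy, assuming CPE so that D equals K_c."""
    return collision_kerma_gy(psi_j_per_cm2, mu_en_rho_cm2_per_g)


# Hypothetical inputs for illustration only:
phi = 1.0e10      # photons per cm^2 (assumed)
energy = 0.05     # MeV (assumed diagnostic-range photon energy)
mu_en_rho = 0.04  # cm^2/g, placeholder; use tabulated data in practice
psi = energy_fluence_j_per_cm2(phi, energy)
print(f"Psi = {psi:.3e} J/cm^2, D = {absorbed_dose_cpe_gy(psi, mu_en_rho):.3e} Gy")
```

For a polyenergetic spectrum the same calculation would be repeated per energy bin and summed, since both Ψ and μen/ρ depend on photon energy.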

Box 4.2 Dosimetry Quantities: Absorbed Dose

-

The Absorbed Dose is the energy absorbed per unit mass of material. The unit of Absorbed Dose is the Gray, Gy, which is equal to J kg⁻¹.

4.1.3 Radiation Detectors

In general, radiation detectors operate by providing the means to measure the energy deposited over time in the detector absorbing material from exposure to a source of ionizing radiation. This is typically measured as the quantity of charge, Q, over time elicited from an absorbing medium forming the main component of the detecting element. An ideal radiation detector is one that gives spatial resolution, temporal resolution, information regarding the energy of the particle, and information regarding the identity of the radiation. In reality, single detectors of this type are difficult to construct such that practical detectors that are used in the field have a focused range of capabilities which should be taken into consideration when a detector is chosen for a particular application [6, 7].

4.1.3.1 Ionization Chambers

Ionization chambers are designed to measure the number and/or total energy deposited as a result of the ionizations produced when a charged particle or ionizing photon traverses the detector medium. Therefore, they are not suitable for the detection or analysis of neutral particles. Ionization detectors consist of an isolated detection medium, generally a gas such as air that can be easily ionized (i.e., has a low ionization potential), which is placed between two oppositely charged electrodes (Fig. 4.2). The medium should be chosen so that it does not respond adversely to ionization and its characteristics do not change with use.

The charged particle will ionize the detector medium along its path and these ions will then be accelerated towards the detector electrodes. In general, a high electric field is applied between the electrodes to prevent the recombination of ions produced by the traversal of the charged particle. As the charged particle traverses the sensitive region of the detector (i.e., the gas) it produces multiple electron-ion pairs, which begin to drift along the electric field lines and reach the plates of the detector. These ions may produce further ionization of the neutral gas atoms via further collision, ultimately producing a small current that induces a voltage drop across the resistor. These chambers typically generate very low measurement currents per ionizing particle, and therefore require low noise amplifiers to improve their operating performance.

The amplified output signal from the detector may be used to trigger a counting mechanism to measure the number of incident charged particles or ionizing photons (i.e., exposure), or its pulse height may be analyzed to determine the total energy within the beam (i.e., dose). The amount of ionization that is detected depends on the nature of the gas used in the detector, the level of the applied electrical field, and the characteristics of the plates used in the detector. Whether the chamber operates as a device for the measurement of absolute energy deposition or of the number of charged particles incident on the detector depends on the high-voltage (HV) level applied to the detector, as depicted in Fig. 4.3 [7].

Fig. 4.3 Signal response to ionization as a function of the applied voltage for heavily ionizing (top curve) and weakly ionizing particles (lower curve). In the Geiger region, the output depends neither on the voltage nor on the amount of deposited energy or initial ionization. [Adapted from Fig. 4.12 Martin and Shaw (2006). Copyright (2006), Wiley Publishers]

When the applied voltage is small, the electrons and ions can recombine soon after they are produced and only a small fraction of the ions reach their respective plates in the detector (Fig. 4.3). As the applied voltage between the plates is increased, a region is reached where the output pulse reflects the amount of ionization seen in the chamber (Ionization region). When the voltage is increased still further, the electrons and positive ions released by the initial ionization can themselves cause further ionizations in the medium and thus amplify the ionization pulse (Proportional region). Increasing the applied field still further (Geiger region) creates an avalanche effect and a highly amplified output signal. Any further increases in the applied voltage lead to a continuous discharge of the detector [6].

It is possible to determine the typical output current that will be generated by an ionization chamber in the presence of a source of known activity. Consider the case of an in-air ionization chamber (where air has an ionization potential of 30 eV) which is exposed to alpha particles (Eα = 5.486 MeV) from a 10 MBq Am-241 source. The total number of ionizations produced by a single Am-241 α-particle will be the ratio of the energy of the alpha particle to the ionization potential of air:

\[ {N}_{\alpha }=\frac{E_{\alpha }}{30\ \mathrm{eV}}=\frac{5.486\times {10}^6\ \mathrm{eV}}{30\ \mathrm{eV}}\approx 1.829\times {10}^5 \]

In this case, the total number of ionizations produced will be the product of the activity of the source and the number of ionizations produced by a single alpha particle, or N = 1.829 × 10¹² ionizations, which are observed in a single second (as the unit of activity, the Bq, is s⁻¹ in SI units). The final step is then to compute the product of the total number of ionizations per second with the charge on the electron, such that the total current observed will be:

\[ I= Ne=1.829\times {10}^{12}\ {\mathrm{s}}^{-1}\times 1.602\times {10}^{-19}\ \mathrm{C}\approx 2.93\times {10}^{-7}\ \mathrm{A} \]
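The Am-241 estimate can be reproduced with a few lines of Python. The sketch below uses the values quoted in the text (5.486 MeV alpha particles, 30 eV per ion pair, 10 MBq) and assumes, as the text implicitly does, that every alpha particle deposits all of its energy in the chamber gas and that all of the liberated charge is collected. The function names are illustrative.

```python
# Sketch: ionization chamber current for an alpha source, using the values in the text.
E_CHARGE_C = 1.602176634e-19  # elementary charge in coulombs


def ion_pairs_per_particle(particle_energy_ev, energy_per_ion_pair_ev):
    """Number of ion pairs created if the particle stops completely in the gas."""
    return particle_energy_ev / energy_per_ion_pair_ev


def chamber_current_a(activity_bq, particle_energy_ev, energy_per_ion_pair_ev):
    """Current (A) assuming full energy deposition and complete charge collection."""
    pairs = ion_pairs_per_particle(particle_energy_ev, energy_per_ion_pair_ev)
    return activity_bq * pairs * E_CHARGE_C


pairs = ion_pairs_per_particle(5.486e6, 30.0)      # about 1.829e5 per alpha particle
current = chamber_current_a(10e6, 5.486e6, 30.0)   # about 2.93e-7 A
print(f"{pairs:.4g} ion pairs per alpha, I = {current:.3e} A")
```

The result, roughly 0.3 μA, is an upper bound for a real chamber: only alpha particles emitted into the sensitive volume contribute, and some recombination always occurs.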

Frequently, ionization chambers are open to the air to allow for changes in ambient pressure which could collapse or expand a sealed chamber, damaging the thin chamber walls. As a consequence, chamber outputs must be adjusted for changes in ambient temperature, T, and pressure, P, from those at which the chamber was calibrated, \( {T}_{\mathrm{n}} \) and \( {P}_{\mathrm{n}} \), respectively. In practice, we can multiply the chamber output by the following correction factor to adjust for ambient conditions (where all temperatures are expressed in Kelvin and pressures in Pascals [6]):

\[ {k}_{TP}=\frac{T}{T_{\mathrm{n}}}\cdot \frac{P_{\mathrm{n}}}{P} \]
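A minimal sketch of this temperature and pressure correction is given below. The factor follows from the ideal gas law: the mass of air in a vented chamber scales as P/T, so the reading is rescaled by (T/Tn)(Pn/P). The function names and the reference conditions used in the example (293.15 K and 101 325 Pa) are assumptions made for illustration; the actual reference values are those stated on the chamber's calibration certificate.

```python
# Sketch: temperature-pressure correction for a vented ionization chamber.
def temperature_pressure_correction(t_kelvin, p_pascal, t_ref_kelvin, p_ref_pascal):
    """Return (T / T_n) * (P_n / P); temperatures in kelvin, pressures in pascal."""
    if min(t_kelvin, p_pascal, t_ref_kelvin, p_ref_pascal) <= 0:
        raise ValueError("temperatures and pressures must be positive")
    return (t_kelvin / t_ref_kelvin) * (p_ref_pascal / p_pascal)


def corrected_reading(raw_reading, t_kelvin, p_pascal, t_ref_kelvin, p_ref_pascal):
    """Chamber reading adjusted to the calibration conditions."""
    k = temperature_pressure_correction(t_kelvin, p_pascal, t_ref_kelvin, p_ref_pascal)
    return raw_reading * k


# Assumed reference conditions (hypothetical calibration certificate):
T_REF, P_REF = 293.15, 101325.0
k_tp = temperature_pressure_correction(298.15, 100000.0, T_REF, P_REF)
print(f"k_TP = {k_tp:.4f}")  # warmer, lower-pressure air gives a factor above 1
```

A warmer room or lower pressure means less air mass in the cavity and hence a smaller signal for the same dose, which is why the factor exceeds unity in the example.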

4.1.3.2 Proportional Counters

While the ionization chamber provides a device for the measurement of absolute energy deposition, it does not provide information on directionality. Proportional counters are ionization chambers that may be used both for measuring absolute energy deposition (through a measurement of the pulse height) and for giving directional information on the path of charged particles (through the output of a given anode wire, each of which is independently amplified).

Multi-wire proportional chambers (MWPCs) such as those shown in Fig. 4.4 are used in high-energy particle physics experiments as a means of tracking the path of charged particles. Anode wires (typically with a ~2 mm separation) are positioned between the cathode plates of the chamber (which have a typical separation of 1 mm) and the construct is sandwiched between thin mylar windows or some other superstructure, with an operating gas infused into the region between the plates. In practice, several individual chambers may then be joined together to provide fine detail on the direction of passage of individual charged particles: pulses are produced on the anode electrodes closest to the path of the charged particle through the detector as a result of ionization of the gas in the region closest to each anode. MWPCs can be used to infer further information on the momentum of beams of charged particles via the degree of their deflection in a magnetic field (which is typically how they have been used in collider experiments such as those at the LHC at CERN [6]).

4.1.3.3 Scintillators and Photomultiplier Tubes

Scintillators are materials that react to the passage of a charged particle by emitting a very small flux of photons of light. Charged particles may excite electrons within atoms of the scintillating material to a higher energy state; these atoms then emit photons as they de-excite to their ground state. Scintillators can be developed from organic (e.g., naphthalene or anthracene) or inorganic (including sodium iodide or cesium iodide) materials and have applications as the first detection element in gamma cameras used in nuclear medicine.

Scintillating materials typically need to be chosen to detect photons of a specific wavelength and may often be doped to achieve specific wavelength sensitivity. They are generally coupled to photomultiplier tubes (PMT) to amplify the intensity of the weak photon signal output from the scintillator, either for photon counting or imaging applications.

In Fig. 4.5, a schematic of a photomultiplier tube is shown. An incoming charged particle or ionizing photon impacts the scintillator, which emits a photon flux towards a photocathode (typically constructed as a negatively charged plate covered by a photosensitive material such as gallium–arsenide or indium–gallium–arsenide). Here, the photon flux is converted to an electron flux that enters the inner (evacuated) environment of the PMT. These electrons are accelerated towards the first of several dynodes by the high-voltage field between the photocathode and the anode. When each electron collides with a dynode, it causes the emission of several electrons (typically 5–10), which are then accelerated towards subsequent dynodes, amplifying the electron flux through repeated collision and reemission. At the detector anode, a significant and measurable electric current is then generated as a result of the acquisition of a single photon. Apart from a small degree of signal fluctuation, the current seen at the anode is linearly proportional to the photon flux seen at the photocathode [6, 7].
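The multiplication along the dynode chain can be illustrated with a short calculation. The sketch below assumes that every dynode has the same secondary emission factor δ and that no electrons are lost between stages, so the overall gain is δ raised to the number of dynodes. The number of dynodes (10) is a hypothetical choice, since the text only states that there are several; the emission factor of 5 is the lower end of the range quoted above.

```python
# Sketch: idealized photomultiplier gain, assuming identical dynodes and no losses.
E_CHARGE_C = 1.602176634e-19  # elementary charge in coulombs


def pmt_gain(secondary_emission_factor, n_dynodes):
    """Overall electron multiplication: delta ** n for n identical dynodes."""
    return secondary_emission_factor ** n_dynodes


def anode_charge_c(n_photoelectrons, secondary_emission_factor, n_dynodes):
    """Charge (C) arriving at the anode for a given number of photoelectrons."""
    gain = pmt_gain(secondary_emission_factor, n_dynodes)
    return n_photoelectrons * gain * E_CHARGE_C


gain = pmt_gain(5.0, 10)  # hypothetical 10-dynode tube with delta = 5
print(f"gain = {gain:.3g}, charge per photoelectron = {anode_charge_c(1, 5.0, 10):.3e} C")
```

Because the gain grows exponentially with the number of stages, small changes in δ (and hence in the supply voltage) produce large changes in output, which is why PMT high-voltage supplies need to be well stabilized.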

4.1.3.4 Semiconductor Detectors

Semiconductor detectors based upon p–n junction diodes offer a practical and robust option for detector construction, operating in a similar manner to an ionization chamber [6, 7]. Here a p–n junction is constructed by joining a piece of p-type semiconductor (such as silicon or germanium) to a piece of n-type semiconductor. P-type material is doped with atoms of a material with one vacant outer-electron state, such as boron, B, while n-type material is doped with atoms of a material with an extra “free” electron in its outermost energy level, such as antimony, Sb. At the junction between the two materials a “depletion layer” is formed where electrons from the n-type material migrate to the p-type material to fill vacant energy states or “holes,” leaving behind holes in the n-type material surrounding the junction. This creates a region around the junction that is depleted of electrons and holes and acts as a barrier to conduction. For the purposes of photon or particle detection, the depletion region is the sensitive portion of the electronic detector. These detectors are typically operated in reverse bias (Fig. 4.6a, b), with a voltage of 100 V, which enlarges the depletion layer and therefore the sensitive region of the detector [6, 7].

When the sensitive element of the detector is exposed to a charged particle or ionizing photon, electrons within the depletion layer are promoted from the valence band to the conduction band (Fig. 4.7) and are conducted through the junction towards the positive terminal of the detector. Here, the valence band is equivalent to the energy level of the outermost electron, while the conduction band is the energy level of the next vacant energy state above the valence band. A current is produced which is proportional to the energy lost by the charged particle or photon within the depletion layer [6, 7].

The creation of an electron–hole pair in a silicon or germanium semiconductor requires as little as ~3 eV in comparison to the 30 eV required for in-air ionization chambers. Detectors can be constructed and tuned to radiation of a specific wavelength, λ, by altering the energy difference between the valence and conduction bands, or the band gap, Egap, as shown in Fig. 4.7 and Table 4.1.
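Two consequences of these numbers can be sketched in a few lines: the larger number of charge carriers produced per unit of deposited energy compared with a gas, and the longest photon wavelength that can bridge a given band gap, λ = hc/Egap. The function names are illustrative. The band gap used in the example (1.12 eV, a commonly quoted room-temperature value for silicon) is an assumption introduced here and is not taken from Table 4.1.

```python
# Sketch: charge carrier yield and cutoff wavelength for a semiconductor detector.
HC_EV_NM = 1239.84  # Planck constant times speed of light, in eV nm


def carrier_pairs(deposited_energy_ev, energy_per_pair_ev):
    """Mean number of charge carrier pairs created by a given energy deposit."""
    return deposited_energy_ev / energy_per_pair_ev


def cutoff_wavelength_nm(band_gap_ev):
    """Longest photon wavelength (nm) able to excite an electron across the gap."""
    return HC_EV_NM / band_gap_ev


deposit = 5.486e6  # eV, the alpha particle energy used earlier in the text
print("semiconductor pairs (~3 eV each):", carrier_pairs(deposit, 3.0))
print("in-air ion pairs (30 eV each):   ", carrier_pairs(deposit, 30.0))
print(f"cutoff for a 1.12 eV gap: {cutoff_wavelength_nm(1.12):.0f} nm")
```

For the same deposited energy the semiconductor yields roughly ten times more carriers than air, which improves the statistical precision of the measured signal.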

Semiconducting materials, when incorporated in radiation detectors, can therefore produce a large signal in response to irradiation with a small photon flux. The detectors can be constructed very thinly (as little as 200–300 μm) for the detection of ionizing particles, or thicker for the stopping of photons. Their performance is approximately linear if an electric field is applied that prevents the recombination of the electrons and holes formed by the radiation [6, 7].

4.1.3.5 Cerenkov Detectors

The phenomenon of Cerenkov radiation was first observed and described by Pavel Cerenkov in 1934 and characterized by Frank and Tamm in 1937. This work resulted in all three being awarded the Nobel Prize in Physics in 1958.

To understand the operation of Cerenkov detectors we must first describe the effect itself. Suppose that we have a charged particle traveling at a relatively low velocity through a static medium. As the particle travels slowly relative to the speed at which the ions/molecules of the material can orient and reorient themselves as it passes, the ions/molecules will orient themselves such that the part of the ion/molecule that is charged opposite to the charge of the ionizing projectile points in the direction of the particle (Fig. 4.8a). The molecules are displaced in an isotropic conformation relative to the position and direction of movement of the charged particle, and therefore there is no overall change in the energy of the medium locally [6, 7].

Fig. 4.8 (a) Passage of a charged particle through a medium of refractive index n at velocities that polarize the medium. (b) The generation of coherent light waves via the Cerenkov effect. (c) The formation of a cone of Cerenkov light along the path of the charged particle through a medium with positive and (d) negative refractive index. [Taken from Shaffer et al., Nature Nanotechnology, 12, 106–117 (2017). Copyright Springer Nature]

However, in instances where the velocity of the particle is v ~ c/n or v > c/n, the molecules of the medium that are displaced by the passage of the charged particle are generally anisotropic relative to the position and direction of movement of the charged particle (Fig. 4.8b). By Huygens' principle of wavelets, each of the reoriented molecules of the medium can reradiate the energy delivered to them and do so as point wavelet sources. These sources will be coherent along a direction as shown in Fig. 4.8c. If ϑ is the angle at which the point sources reradiate, then it may be shown that

\[ \cos \vartheta =\frac{1}{\beta n} \]

where n is the refractive index of the medium and β = v/c, where v is the velocity of the particle and c is the speed of light.
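The standard Cerenkov relation cos ϑ = 1/(βn) implies an emission threshold at β = 1/n, below which no light is produced. The sketch below evaluates the emission angle and the corresponding threshold kinetic energy. The function names are illustrative, and the refractive index of water (n ≈ 1.33) and the electron rest energy (0.511 MeV) are standard values introduced here as assumptions for the example; they are not taken from the text.

```python
# Sketch: Cerenkov emission angle and threshold kinetic energy.
import math


def cherenkov_angle_deg(beta, refractive_index):
    """Emission angle in degrees from cos(theta) = 1/(beta*n); None below threshold."""
    cos_theta = 1.0 / (beta * refractive_index)
    if cos_theta > 1.0:
        return None  # particle slower than light in the medium: no emission
    return math.degrees(math.acos(cos_theta))


def threshold_kinetic_energy_mev(rest_energy_mev, refractive_index):
    """Kinetic energy at which beta = 1/n (requires n > 1)."""
    beta_threshold = 1.0 / refractive_index
    gamma_threshold = 1.0 / math.sqrt(1.0 - beta_threshold ** 2)
    return rest_energy_mev * (gamma_threshold - 1.0)


N_WATER = 1.33              # assumed refractive index of water
ELECTRON_REST_MEV = 0.511   # electron rest energy
print("angle at beta = 1:", cherenkov_angle_deg(1.0, N_WATER))
print("electron threshold (MeV):", threshold_kinetic_energy_mev(ELECTRON_REST_MEV, N_WATER))
```

Because the threshold energy scales with the rest energy of the particle, heavier particles need far more kinetic energy than electrons to radiate in the same medium, which is the basis of threshold-type particle identification.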

It is therefore possible to discriminate the identity of high energy charged particles purely based on the angle of Cerenkov emission or the threshold value of n at which Cerenkov emission is observed [6, 7]. In particle physics experiments, materials of various refractive indices are typically used to provide several potential Cerenkov thresholds for the detection of a variety of radiation types. The weak Cerenkov photons can be detected using PMTs or electronic photodetectors. Cerenkov photons are also observable as a result of the passage of charged particles through human tissue, and Cerenkov imaging has recently been applied to in vivo dosimetry in radiotherapy [8].

4.1.3.6 Calorimeters

Calorimeters allow the estimation of the total energy of a high-energy charged particle or ionizing photon by absorbing it completely. The energy is deposited via successive ionization of the detector material in a process termed a particle shower (Fig. 4.9), so the detector must be capable of absorbing all of the incident radiation. These devices may be ionization chambers as described earlier, semiconductor detectors, or a combination of the two. Depending on its nature and identity, the incident particle can create ionizing photons through bremsstrahlung or produce further “hard” ionizing particles that may not be stopped easily in detectors with unsuitable absorbing characteristics; a single detector type may therefore not achieve the experimental objectives (Box 4.3).

Box 4.3 Radiation Detectors

-

Radiation detectors measure the energy deposited over time in the detector-absorbing material

-

An ideal radiation detector provides spatial resolution, temporal resolution, information regarding the energy of the particle, and information regarding the identity of the radiation. No single detector can offer these simultaneously

-

Ionization chambers are common dosimeters that measure the ionizations produced when a charged particle or ionizing photon traverses the detector medium (generally a gas, requiring temperature and pressure corrections). An electric field is applied between the electrodes to prevent the recombination of ions produced.

-

Proportional counters are ionization chambers that also provide directional information on the path of charged particles

-

Scintillator materials are also used as dosimeters by relating the flux of photons emitted to the energy deposited. They are generally coupled to photomultiplier tubes (PMT) to amplify the intensity of the photon signal

-

Semiconductor detectors measure the number of charge carriers produced by the radiation in the detector material. Semiconductor materials are used due to the small energy required to produce electron-hole pairs

-

Cerenkov detectors record light produced by charged particles traveling through materials at a velocity greater than that at which light can travel through the material

-

Calorimeters quantify the absolute dose absorbed by measuring the increase in temperature produced by radiation

4.1.4 Monte Carlo Methods

The Monte Carlo (MC) method is a numerical calculation method based on random draws. A succession of draws is carried out to sample the random variables of the problem and deduce a value of interest. Repeated many times, this procedure yields a distribution of the values of interest and thus an estimate of their mean and associated confidence interval. The number of samples must be sufficiently large for the empirical mean to be a precise estimator of the expectation of the quantity of interest and for its distribution to approach the normal distribution predicted by the central limit theorem. In this process, the quality of the random number generator is essential. In practice, only pseudo-random numbers (which have a finite period) can be generated, and each Monte Carlo calculation code uses its own mathematical algorithm for that purpose.
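The principle can be illustrated with a deliberately simple, non-dosimetric example: estimating π from random points in the unit square, together with a 95% confidence interval based on the central limit theorem. The sketch uses Python's built-in pseudo-random generator with a fixed seed; the function names and the choice of problem are illustrative assumptions and do not correspond to any of the dosimetry codes discussed in this chapter.

```python
# Sketch: Monte Carlo estimate of a mean with a normal-approximation confidence interval.
import math
import random


def mc_estimate(draw, n_samples, seed=0):
    """Return (mean, half-width of the 95% confidence interval) over n_samples draws."""
    rng = random.Random(seed)  # pseudo-random generator with a reproducible seed
    total = 0.0
    total_sq = 0.0
    for _ in range(n_samples):
        value = draw(rng)
        total += value
        total_sq += value * value
    mean = total / n_samples
    variance = max((total_sq - n_samples * mean * mean) / (n_samples - 1), 0.0)
    half_width = 1.96 * math.sqrt(variance / n_samples)
    return mean, half_width


def inside_quarter_circle(rng):
    """Score 1 if a uniform point in the unit square lies inside the quarter circle."""
    x, y = rng.random(), rng.random()
    return 1.0 if x * x + y * y <= 1.0 else 0.0


mean, half_width = mc_estimate(inside_quarter_circle, 100_000)
print(f"pi is approximately {4 * mean:.4f} +/- {4 * half_width:.4f}")
```

The half-width shrinks only as the inverse square root of the number of samples, so gaining one decimal digit of precision costs roughly a hundred times more draws. This slow convergence is the reason why simulation time is a central concern for MC dose calculations.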

The Monte Carlo method is currently used in many fields of physics to model the interactions of particles in a medium. In particular, it is used in dosimetry to estimate the energy loss of the particles in the medium and thus the absorbed dose.

To simulate the course of the particles, MC codes use the notion of cross-sections, expressed in barn (b) (1 barn = 10⁻²⁴ cm²). The cross-section, σ, is a physical quantity representing the probability of collision between an incident particle and a target; it is given by the ratio between the interaction rate (T) and the product of the incoming particle fluence rate (φ) and the number of target particles:

\[ \sigma =\frac{T}{\varphi\, {N}_{\mathrm{target}}}=\frac{T}{\varphi\, S\, {s}_{\mathrm{target}}} \]

with \( {N}_{\mathrm{target}} \) the number of target particles in the target volume, corresponding to the surface S of the beam intercepting the target, and \( {s}_{\mathrm{target}} \) the number of target particles per surface unit.

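Therefore, we can calculate the probability p for a particle to interact with the target in the following way:

\[ p=\frac{T}{\varphi\, S}=\sigma\, {s}_{\mathrm{target}}=\sigma \frac{N_{\mathrm{A}}\,\rho\, d}{A} \]

with \( {N}_{\mathrm{A}} \) Avogadro's constant, ρ the target medium density, d the target thickness, A the atomic mass of the target medium, and σ the interaction cross-section.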

We see that the probability of interaction depends directly on the quantity (ρ ⋅ d), which has the unit of g cm⁻². Moreover, since p is dimensionless, σ has the dimensions of a surface. One can imagine σ as a geometrical surface: a particle striking the target within this area would interact, while outside this area it would cross the target without scattering (Fig. 4.10).

From this concept of interaction cross-section, it is possible to define the mean free path (λ) of a particle by means of the equation:

\[ \lambda =\frac{A}{N_{\mathrm{A}}\,\rho\, \sigma } \]
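The quantities introduced so far can be combined in a short numerical sketch. It assumes the thin-target relation p = σ NA ρ d / A and the mean free path λ = A / (NA ρ σ), with ρ in g cm⁻³, A in g mol⁻¹, d in cm, and σ in cm². The function names and the target parameters in the example (a cross-section of 1 barn in a medium with ρ = 1 g cm⁻³ and A = 18 g mol⁻¹) are hypothetical values chosen only to show the orders of magnitude.

```python
# Sketch: thin-target interaction probability and mean free path from a cross-section.
N_AVOGADRO = 6.02214076e23  # particles per mole
BARN_CM2 = 1.0e-24          # 1 barn expressed in cm^2


def number_density_per_cm3(density_g_cm3, molar_mass_g_mol):
    """Number of target particles per cm^3: N_A * rho / A."""
    return N_AVOGADRO * density_g_cm3 / molar_mass_g_mol


def interaction_probability(sigma_cm2, density_g_cm3, thickness_cm, molar_mass_g_mol):
    """Thin-target probability p = sigma * N_A * rho * d / A (valid when p << 1)."""
    n = number_density_per_cm3(density_g_cm3, molar_mass_g_mol)
    return sigma_cm2 * n * thickness_cm


def mean_free_path_cm(sigma_cm2, density_g_cm3, molar_mass_g_mol):
    """Mean free path lambda = A / (N_A * rho * sigma), in cm."""
    n = number_density_per_cm3(density_g_cm3, molar_mass_g_mol)
    return 1.0 / (n * sigma_cm2)


# Hypothetical target: sigma = 1 b, rho = 1 g/cm^3, A = 18 g/mol, d = 1 cm
p = interaction_probability(1.0 * BARN_CM2, 1.0, 1.0, 18.0)
lam = mean_free_path_cm(1.0 * BARN_CM2, 1.0, 18.0)
print(f"p = {p:.4f}, mean free path = {lam:.2f} cm")
```

The linear expression for p is only a good approximation when the target is thin compared with the mean free path; for thicker targets the probability of at least one interaction is 1 − exp(−d/λ).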

This mean free path corresponds to the average value of a random variable representing the path traveled by a particle between two interactions (l). The probability density of this random variable is given by \( p(l)=\frac{1}{\lambda }{e}^{-l/\lambda } \). This probability density allows the distance traveled by a particle between two interactions to be sampled using a random variable ξ0 uniformly distributed between 0 and 1, as l = −λ ln ξ0.
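The sampling rule l = −λ ln ξ0 is an instance of inverse transform sampling and can be checked numerically, as in the following sketch. The function names, the seed, and the mean free path value are arbitrary illustrative choices. The uniform variate is taken as 1 − ξ, with ξ in [0, 1), to avoid evaluating the logarithm at zero; this does not change the sampled distribution.

```python
# Sketch: sampling free path lengths from p(l) = exp(-l/lambda) / lambda.
import math
import random


def sample_free_path(mean_free_path, rng):
    """Draw one path length using l = -lambda * ln(xi), with xi uniform in (0, 1]."""
    xi = 1.0 - rng.random()  # rng.random() is in [0, 1), so xi is in (0, 1]
    return -mean_free_path * math.log(xi)


def mean_sampled_path(mean_free_path, n_samples, seed=1):
    """Average of n_samples sampled path lengths; should approach the mean free path."""
    rng = random.Random(seed)
    total = sum(sample_free_path(mean_free_path, rng) for _ in range(n_samples))
    return total / n_samples


lam = 2.0  # cm, hypothetical mean free path
print(f"sample mean = {mean_sampled_path(lam, 200_000):.3f} cm (expected close to {lam})")
```

In a full transport code this draw is repeated after every interaction, with λ recomputed from the total cross-section at the current particle energy and in the current material.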

Cross-sections used in the MC codes are either obtained experimentally or calculated from theoretical scattering models. They are then used to determine the probability distributions of the random variables related to a trajectory, such as the mean free path, the type of interaction, and the energy loss.

A key point of these MC calculations is the simulation of electron (and positron) interactions. These particles lose a very small part of their energy at each interaction they undergo and thus generate a very large number of events before finally being absorbed in the medium. The detailed simulation of this cascade of interactions and of these small energy deposits is particularly slow. Most Monte Carlo codes therefore apply simplifying theories called “condensed histories” or “multiple scattering” that summarize a certain number of interactions in a single step, reducing the simulation time. The compromise between the detail of the simulation and the speed of the calculation determines the performance of the calculation code. Among the most used MC codes in dosimetry are PENELOPE (Penetration and ENErgy LOss of Positrons and Electrons), EGSnrc (Electron Gamma Shower), MCNP6 and MCNPX (Monte Carlo N-Particle eXtended), and Geant4 (GEometry ANd Tracking) [9,10,11,12,13].

Thanks to their capacity to include a large part of the physical processes involved in radiation–matter interactions and the possibility of taking into account all the different components of the experimental geometry of the problems, MC codes have clear advantages since they can provide information on the values of certain quantities that cannot be determined experimentally.

In radiotherapy, the dose must be delivered to the tumor with an uncertainty of 5% or less [14]. Prescribed dose metrology involves the determination of quantities characterizing the transfer and absorption of energy in the irradiated media. In principle, Monte Carlo simulations allow the dose to be calculated with the required accuracy using phantoms or even voxelized patient images, and thus provide information on the dose distribution in the organ volume. However, this requires accurate knowledge of the beam characteristics, and therefore a detailed model of each accelerator, including head shielding and structural components; building such a model is very time-consuming, and the necessary details are often subject to trade secrecy. Therefore, up to now, Monte Carlo codes have been mainly used to calculate the correction factors, often close to unity, to be applied to the experimental values obtained at the hospital during this metrological control.

Nevertheless, the Monte Carlo technique is increasingly used for clinical treatment planning through MC-based algorithms, which are applied in situations where the conventional analytical methods of the Treatment Planning Systems (TPS) of the machines are not sufficient. To decrease the computation time, most implementations for radiotherapy divide the calculation into two steps. The first step consists of simulating the head of the treatment machine. Since this part is fixed and independent of the ballistics of an individual patient treatment, a phase space can be recorded at the exit of the treatment head and reused. The second step consists of tracking the particles previously recorded in the phase space through the specific geometry of a patient for a specific treatment. Both parts must, of course, be experimentally verified by comparison with percentage depth dose (PDD) curves and absorbed dose profiles at various depths in water, or with measurements in situations where electronic equilibrium does not hold, for example, at the interfaces of materials of different densities (Box 4.4).

Box 4.4 Monte Carlo Simulation Method

- The Monte Carlo (MC) method is a numerical calculation method used to estimate the dose deposited through simulation of the stochastic events through which radiation deposits energy
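As a minimal illustration of the principle summarized in this box, the sketch below samples the free path of photons in a homogeneous slab from an exponential distribution and compares the simulated fraction of interacting photons with the analytic expectation. The attenuation coefficient and slab thickness are illustrative assumptions, not values taken from this chapter, and no secondary particles are followed.

```python
import math
import random


def simulate_interacting_fraction(mu_per_cm, thickness_cm, n_photons, seed=0):
    """Estimate the fraction of photons that interact inside a slab."""
    rng = random.Random(seed)
    interacted = 0
    for _ in range(n_photons):
        # Sample the free path from an exponential distribution of mean 1/mu.
        # 1 - random() lies in (0, 1], which avoids log(0).
        free_path = -math.log(1.0 - rng.random()) / mu_per_cm
        if free_path < thickness_cm:
            interacted += 1
    return interacted / n_photons


mu = 0.07          # assumed attenuation coefficient in 1/cm (illustrative)
thickness = 10.0   # assumed slab thickness in cm
estimate = simulate_interacting_fraction(mu, thickness, 100_000)
analytic = 1.0 - math.exp(-mu * thickness)
print(f"Monte Carlo: {estimate:.4f}  analytic: {analytic:.4f}")
```

The statistical uncertainty of such an estimate decreases as the inverse square root of the number of simulated histories, which is why detailed simulations are computationally expensive.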

4.2 Radiation Microdosimetry

Microdosimetry was first introduced by H.H. Rossi in 1955 and is a fundamental and evolving research field in experimental radiation science [15, 16]. It studies the interaction between radiation and matter in micrometric volumes of cell-like dimensions taking into account the stochastic nature of the energy deposition process.

4.2.1 Definition, Concepts, and Units

The interaction between radiation and matter is a stochastic process that manifests itself as energy deposition, δ-electron production, or nuclear reactions. The latter produce charged particles, called secondaries, which in turn interact with surrounding matter, releasing energy, as δ-electrons do. The fundamental quantity in microdosimetry is the lineal energy, y, which quantifies the individual energy deposition events. The energy deposit εi is a stochastic quantity defined as the energy deposited in a single interaction i:

\[ \varepsilon_i = T_{\mathrm{in}} - T_{\mathrm{out}} + Q_{\Delta m} \]

where Tin is the energy of the incident ionizing particle (exclusive of rest mass), Tout is the sum of the energies of all ionizing particles leaving the interaction site (exclusive of rest mass), and QΔm is the change of rest mass energy of the atom and all particles involved in the interaction. εi is usually expressed in eV. The lineal energy, y, is therefore defined in ICRU Report 36 ([17], p. 36) as the quotient of εtot by \( \overline{l} \), where εtot is the total energy imparted to a volume of matter by a single energy deposition event and \( \overline{l} \) is the mean chord length in that volume:

\[ y = \frac{\varepsilon_{\mathrm{tot}}}{\overline{l}} \]

The lineal energy is usually expressed in keV/μm. A single energy deposition event denotes the energy imparted by correlated charged particles. Due to the stochastic nature of radiation interactions, each particle traversal gives rise to a different lineal energy value, thus producing a probability distribution. Such probability distributions fully characterize the irradiation at a given point. The individual energy deposition events (appropriately corrected for the detector charge collection efficiency and converted into energy deposited in tissue-equivalent material) are collected in the form of a spectrum [f (ε) vs ε], where f (ε) is the probability of an energy deposition event ε. From these energy spectra, the lineal energy spectra [f (y) vs y] can be calculated by dividing the energy of each event by the mean chord length of the detector, which is the average distance that a particle traverses in the detector. For a spherical detector, this can be shown to be 2/3 of the diameter, while for thin plate detectors in a unidirectional particle beam, it can be approximated by the detector thickness [18]. The probability density function f (y), also called the lineal energy frequency distribution, is independent of the absorbed dose and dose rate. Its expectation value \( \overline{y}_F \) is called the frequency-mean lineal energy and, being a mean value, is no longer a stochastic quantity.

As the biological damage caused by radiation is proportional to the dose delivered, it is useful to consider also the lineal energy dose distribution d (y), as it provides the fraction of the total absorbed dose in the interval [y, y + δy]. The dose-weighted lineal energy distribution d (y) is therefore given by:

\[ d(y) = \frac{y\, f(y)}{\overline{y}_F} \]

By definition, this distribution is normalized and is generally plotted as d (y) vs log (y) to make it easier to appreciate the relative contribution of various energy deposition events (see Fig. 4.11).

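Similar to the frequency-mean lineal energy \( \overline{y}_F \), the dose-weighted mean lineal energy \( \overline{y}_D \) can be defined as

\[ \overline{y}_D = \int_0^\infty y\, d(y)\, dy = \frac{1}{\overline{y}_F} \int_0^\infty y^2 f(y)\, dy \]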

This quantity provides the average lineal energy value when each energy deposition event is weighted based on its contribution to the total dose.
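The two mean values can be illustrated with a short sketch. It assumes that the spectrum is available either as a list of single-event lineal energies or as a binned, normalized frequency distribution; the function names and the numerical values are hypothetical and serve only as an example.

```python
def frequency_mean(y_events):
    """Frequency-mean lineal energy: arithmetic mean over single events."""
    return sum(y_events) / len(y_events)


def dose_mean(y_events):
    """Dose-mean lineal energy: each event is weighted by its own lineal energy."""
    return sum(y * y for y in y_events) / sum(y_events)


def dose_distribution(bin_centers, f_values):
    """Return d(y) = y f(y) / y_F for a binned f(y) normalized so that sum(f) == 1."""
    y_f = sum(y * f for y, f in zip(bin_centers, f_values))
    return [y * f / y_f for y, f in zip(bin_centers, f_values)]


# Hypothetical lineal energies (keV/um) of five single events
events = [1.0, 2.0, 2.0, 5.0, 10.0]
y_F = frequency_mean(events)
y_D = dose_mean(events)

# The same events expressed as a binned frequency distribution
centers = [1.0, 2.0, 5.0, 10.0]
f = [0.2, 0.4, 0.2, 0.2]
d = dose_distribution(centers, f)
print(y_F, y_D, d)
```

Because large events are weighted more strongly, the dose-mean value is never smaller than the frequency-mean value for the same spectrum.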

A crucial parameter for the calculation of the lineal energy is the mean chord length (\( \overline{l} \)), as the energy lost by a charged particle traversing a finite volume is proportional to the path traveled (track length) in that volume. The chord length, however, is itself a random quantity; for microdosimetric calculations, its mean can be estimated through Monte Carlo simulations or, for convex volumes, using the Cauchy formula \( \overline{l} \) = 4 V/S, where V is the volume of the body and S is its surface area.
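The Cauchy formula is easy to check numerically. The sketch below applies it to a sphere and to a right cylinder (helper names are hypothetical); it assumes a convex body traversed by uniformly and isotropically distributed straight tracks, which is the condition under which the formula holds.

```python
import math


def mean_chord_length(volume, surface_area):
    """Cauchy formula for a convex body in a uniform isotropic field of tracks."""
    return 4.0 * volume / surface_area


def sphere_mean_chord(diameter):
    r = diameter / 2.0
    return mean_chord_length(4.0 / 3.0 * math.pi * r**3, 4.0 * math.pi * r**2)


def cylinder_mean_chord(diameter, height):
    r = diameter / 2.0
    volume = math.pi * r**2 * height
    surface = 2.0 * math.pi * r**2 + 2.0 * math.pi * r * height
    return mean_chord_length(volume, surface)


# For a sphere the result is 2/3 of the diameter, as stated in the text.
print(sphere_mean_chord(1.0))
# A right cylinder with height equal to its diameter gives the same mean chord.
print(cylinder_mean_chord(1.0, 1.0))
```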

4.2.2 Technologies and Detectors

The first microdosimeter was designed and developed by Rossi in 1955 [16]. It was a spherical proportional counter with tissue-equivalent plastic walls, filled with low-pressure tissue-equivalent gas (Tissue Equivalent Proportional Counter, TEPC). The low pressure makes it possible to simulate micrometer-sized volumes with a millimeter-sized chamber (10–150 mm diameter), which is easier to handle and manufacture. Methane- or propane-based gases are typically used at a pressure of ~0.9 kPa to simulate volumes a few micrometers in diameter. The electrons produced by the traversal of the radiation through the chamber are amplified and collected by an electric field. Every radiation traversal therefore generates a small current that gives rise to a pulse, which is then processed by the acquisition electronics. This allows the quantification of the energy deposited in micrometer volumes by individual radiation events. TEPCs are still the most common detectors for microdosimetric measurements. Their main limitations are wall effects, which are events generated by the interaction of the incoming radiation with the walls of the device, and the difficulty of controlling the electron avalanche process caused by the high electric fields required to simulate very small volumes. New devices address these limitations with wall-less TEPCs, in which specially designed electrodes are aligned to generate an electric field within a confined volume and reduce electron avalanches (avalanche confinement TEPCs). The new devices are also less cumbersome than the first TEPC designed by Rossi and can be operated in clinically relevant radiation beams.
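The scaling between the gas cavity and the simulated tissue site can be sketched as follows. The example assumes that a site is simulated when the product of density and diameter is the same in gas and in tissue, that the gas behaves as an ideal gas, and that it can be approximated by pure propane at room temperature; real tissue-equivalent gases are mixtures, so the numbers are indicative only, and all names are hypothetical.

```python
R_GAS = 8.314462618  # molar gas constant in J/(mol K)


def gas_density(pressure_pa, molar_mass_kg_per_mol, temperature_k=293.15):
    """Ideal-gas density in kg/m^3."""
    return pressure_pa * molar_mass_kg_per_mol / (R_GAS * temperature_k)


def simulated_site_diameter_um(cavity_diameter_mm, gas_density_kg_m3,
                               tissue_density_kg_m3=1000.0):
    """Tissue diameter (um) with the same density-thickness product as the gas cavity."""
    cavity_diameter_um = cavity_diameter_mm * 1000.0
    return cavity_diameter_um * gas_density_kg_m3 / tissue_density_kg_m3


# Assumption: pure propane (44.1 g/mol) at 0.9 kPa and 20 degrees Celsius
rho_gas = gas_density(900.0, 0.0441)
site_um = simulated_site_diameter_um(60.0, rho_gas)
print(f"gas density: {rho_gas:.4f} kg/m^3, simulated site: {site_um:.2f} um")
```

Under these assumptions, a cavity of a few tens of millimeters corresponds to a tissue site of about one micrometer, and changing the gas pressure changes the simulated site size proportionally.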

More recently, solid-state detectors have been employed as microdosimeters, taking advantage of their compact size, low cost, and low sensitivity to vibrations, which make them particularly suitable for the clinical environment. Their working principle is based on the electron-hole pairs produced by the radiation as it crosses the sensitive volume of the semiconductor crystal. The number of electron-hole pairs (N) is proportional to the total energy deposited (ΔE) and inversely proportional to the ionization energy of the crystal (W; the average energy required to produce an electron-hole pair):

\[ N = \frac{\Delta E}{W} \]

The ionization energy is specific for each crystal and in the order of a few eV for typical semiconductor materials, which is an order of magnitude lower than that required for gas detectors. Furthermore, it is largely independent of the energy of the incoming radiation. Similar to TEPCs, the current generated by the collection of the produced electrons is used to quantify the energy deposition events. As the sensitive volume of the detector can be of a few micrometers, the pulses generated provide a microdosimetric spectrum of the incident radiation. In order to serve as a microdosimeter, solid-state detectors need to have well-defined and micrometer-sized sensitive volumes coupled to an efficient charge collection mechanism, as the electrical signal generated can be very small. A potential drawback of solid-state microdosimeters is their non-tissue equivalence, which generally requires additional conversion calculations provided by Monte Carlo simulations.
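To give a feeling for the orders of magnitude, the sketch below compares the mean number of charge carriers created by the same energy deposit in different detector media. The W values are approximate, commonly quoted figures used here as assumptions (about 3.6 eV for silicon, about 13 eV for diamond, and about 30 eV for a tissue-equivalent gas); they are not taken from this chapter.

```python
# Approximate mean energies per charge-carrier pair in eV (assumed values)
W_EV = {
    "silicon": 3.6,
    "diamond": 13.0,
    "tissue-equivalent gas": 30.0,
}


def mean_charge_carriers(energy_deposit_ev, w_ev):
    """Mean number of electron-hole (or ion) pairs for a given energy deposit."""
    return energy_deposit_ev / w_ev


deposit_ev = 10_000.0  # a 10 keV energy deposition event
for medium, w in W_EV.items():
    print(f"{medium}: {mean_charge_carriers(deposit_ev, w):.0f} pairs")
```

The larger number of carriers per event in a semiconductor partly compensates for the absence of the internal gas gain available in a proportional counter.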

Silicon and diamond microdosimeters have been realized with sensitive volumes as small as 1 μm in thickness and a few hundred μm² in area, and with charge collection efficiencies approaching 100%. Their small size also provides high spatial resolution and the possibility of measuring at full therapeutic beam intensities, as the electronic chain is not saturated by the large number of particles required for clinical use. A main limitation of semiconductor microdosimeters is electronic noise, as the devices work with little or no internal gain because only a small voltage can be applied to the small-volume semiconductor. This limits the lowest-energy events that can be detected. The fixed size of the crystal also implies that different detectors may be needed to obtain microdosimetric information for different sensitive volumes, whereas gas-based detectors can achieve this by varying the gas pressure. The advantages and disadvantages of both detector types are compared in Table 4.2.

4.2.3 Biological Relationship Response

In the framework of radiation biology, either the linear energy transfer (LET) or the lineal energy can be used to specify the radiation quality. While the LET is frequently used, at least for broad classifications of different radiation qualities (high vs low LET, i.e., densely vs sparsely ionizing radiation), the use of the lineal energy is less common due to the limited experimental data and the complexity of analyzing microdosimetric spectra. However, the use of LET to determine radiation quality has some intrinsic limitations; for example, particles of different mass and energy can have the same LET and still be characterized by a different energy distribution of the secondary electrons. In general, microdosimetric spectra provide information that is not captured by the LET, which may be very beneficial for fundamental radiobiological studies aimed at linking biological response to energy deposition events, as well as for radiation protection and clinical work, e.g., predicting treatment efficacy.

Several LET-based RBE models have been developed over the years. The Microdosimetric Kinetic Model (MKM) is a model based on the dual radiation action theory and specifically developed to link microdosimetric measurements to radiobiological effects [19]. The central hypothesis of the dual radiation action theory is that the number of lethal lesions is related, through a linear quadratic relationship, to the specific energy deposited in a microscopic site. The specific energy (z) is defined as the ratio between the energy imparted (ε) and the mass of the microscopic volume (m) [17]:

\[ z = \frac{\varepsilon}{m} \]

As both the specific energy (z) and the lineal energy (y) measured by microdosimetry are related to a microscopic volume, the two quantities are linked through the mass (m) and the mean chord length (l) of that volume, here written for a spherical site:

\[ z = \frac{\overline{l}}{m}\, y = \frac{y}{\rho \pi r_d^2} \]

with l, m, ρ, and rd the mean chord length, the mass, the density, and the radius of the microscopic volume, respectively.

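Kase et al. [20] formalized the link between the cell survival fraction SF and the microdosimetric measurements:

\[ SF = \exp\left[ -\left( \alpha_0 + \beta_0 \frac{y^*}{\rho \pi r_d^2} \right) D - \beta_0 D^2 \right] \]

with

\[ y^* = \frac{y_0^2 \int_0^\infty \left[ 1 - \exp\left( -y^2 / y_0^2 \right) \right] f(y)\, dy}{\int_0^\infty y\, f(y)\, dy} \]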

where α0 and β0 are the linear quadratic parameters specific for each cell line (usually taken from X-ray measurements), D is the macroscopic dose absorbed by the cell and ρ is the cell density. y0 and rd are fixed parameters accounting for the overkill effect observed at high lineal energy values (usually set at y0 = 150 keV/μm) and for the sensitive critical volume of the specific cell line, respectively. The parameter y* includes the measured microdosimetric spectrum (f (y)) providing therefore a direct link between radiobiological response and physical measurements. The use of the MKM and microdosimetry is the only approach providing a link between physical and biological measurements, considering that LET values cannot be experimentally determined. Supported by the fast development of technologies that will facilitate microdosimetric measurements, there is renewed interest in this approach. However, the precise estimation of the y0 and rd parameters requires further investigation (Box 4.5).
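The chain from a measured spectrum to a predicted survival fraction can be sketched in a few lines. The example assumes the commonly quoted form of the model, in which the linear coefficient is α0 + β0 z* with z* = y*/(ρπrd²) and y* = y0² ⟨1 − exp(−y²/y0²)⟩/⟨y⟩, the averages being taken over single events. The event list and the cell parameters are hypothetical illustration values, and the unit conversion factor follows from expressing 1 keV per (g/cm³ · μm³) in Gy.

```python
import math

# 1 keV deposited in 1 um^3 of material of density 1 g/cm^3, expressed in Gy
KEV_PER_UM3_TO_GY = 0.1602


def saturation_corrected_y(y_events, y0=150.0):
    """y* = y0^2 <1 - exp(-y^2 / y0^2)> / <y> over single events (keV/um)."""
    # -expm1(-x) equals 1 - exp(-x) and stays accurate for small x
    numerator = sum(-math.expm1(-(y / y0) ** 2) for y in y_events)
    return y0 ** 2 * numerator / sum(y_events)


def mkm_alpha(y_star, alpha0, beta0, rd_um, rho_g_cm3=1.0):
    """Linear coefficient alpha0 + beta0 * z*, with z* in Gy for a spherical site."""
    z_star = KEV_PER_UM3_TO_GY * y_star / (rho_g_cm3 * math.pi * rd_um ** 2)
    return alpha0 + beta0 * z_star


def survival_fraction(dose_gy, alpha, beta0):
    """Linear quadratic survival for a given macroscopic dose."""
    return math.exp(-alpha * dose_gy - beta0 * dose_gy ** 2)


# Hypothetical single-event lineal energies (keV/um) and cell parameters
events = [1.0, 2.0, 2.0, 5.0, 10.0]
y_star = saturation_corrected_y(events)
alpha = mkm_alpha(y_star, alpha0=0.13, beta0=0.05, rd_um=0.42)
print(y_star, alpha, survival_fraction(2.0, alpha, 0.05))
```

For spectra well below y0, the saturation correction is negligible and y* approaches the dose-mean lineal energy; the correction only becomes important when a substantial part of the dose is delivered by events with lineal energies comparable to or larger than y0.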

Box 4.5 Microdosimetry

- Microdosimetry quantifies individual energy deposition events through the lineal energy

- Microdosimetry is performed through tissue equivalent proportional counters (TEPC) or solid state microdosimeters

- Microdosimetry is able to directly link radiobiological response to physical measurements

4.3 From Track Structure to Early DNA Damage

4.3.1 Introduction

When ionizing radiation (IR) interacts with a biological sample (which is composed of ~70% water in weight and the rest biological molecules), it can either directly hit the biological molecules or the water molecules. In both cases, these interactions can lead to an energy deposition at the interaction point (inelastic scattering) and the production of secondary particles, mostly electrons that can, in turn, also interact with the target. The ionized or excited molecules, particularly those of water, generate radicals (water radiolysis) that also can attack the biological unit molecules or aggregates, leading to subsequent structural damage of these molecules, which could ultimately have consequences on the functioning of the cell and its outcome.

In this section, we will review the current state of knowledge concerning the different stages that lead from the physical interactions between ionizing radiation and biological matter (known as the physical stage) to the formation of damage to biomolecules as schematically depicted in Fig. 4.12. As indicated above, this includes the description of the production of radicals and their chemical interactions with molecules (chemical stage) but also the consideration of the geometrical and chemical structure of the target molecules. In particular, we will look at the effect of these interactions on the DNA contained in the cell nuclei, because it is well established that the DNA is a privileged target with regard to the effects of radiation on cells [21]. This continuous description will reveal differences at each stage level between the various types of radiation that will enable us to categorize them according to their capacity to produce these damages in terms of number and complexity.

Different experimental techniques have been developed and used in recent years to measure this damage, as we will see in Sect. 4.3.4. However, in most cases, these techniques do not give access to the total number of strand breaks or double strand breaks, to the base damage, or to the complexity of the damage clusters. Thus, the Monte Carlo simulation method has become the “gold standard” for the prediction of this damage. This means, however, that all the data describing these stages must be known, with the least possible uncertainty, in order to feed the codes. In Sect. 4.3.5, we will detail what these data or parameters are, their current uncertainties, and therefore the current simulation capabilities of the different codes.

4.3.2 Physical Stage (Direct Damage)

Ionizing radiation interacts with the exposed target through a cascade of random interactions with the atoms of the medium. The outcome depends as much on the interactions of the primary radiation as on the slowing down of the secondary radiation emerging from them. During these interactions, radiation can be scattered or absorbed, gradually losing energy. If these processes involve sufficiently high energy transfers (typically >10 eV in radiobiology), they can lead to the ejection of electrons and modify the electron shells of atoms and molecules and their chemical properties, which gives the radiation the ability to induce effects. IR can be categorized as directly or indirectly ionizing depending on whether it is composed of charged or uncharged particles, respectively, but in all cases it has enough energy to produce ions in matter.

While ionization is considered the most important physical phenomenon to explain radiation-induced effects, excitation, a phenomenon in which electrons are transferred to higher atomic or molecular levels, is also considered a possible precursor of radiation-induced effects. It is assumed that the ratio of the energy lost by ionization to that lost by excitation is stable between radiations of different natures and energies and, therefore, that the measurement of ionizations alone is sufficient. This approximation is important for the validity of reference dosimetric and microdosimetric measurement techniques using gas detectors. These techniques only “see” the ionizations but apply global physical data, such as the average ionization energy (W), that account for both phenomena. It is generally recognized that the distinction between ionization and excitation is more blurred in condensed states, which are ultimately the ones of interest in dosimetry with measurement methods other than gas detectors, and in radiobiology.

From a mechanistic perspective, if one wants to identify which energy deposition will result in damage to the structure of the target biomolecules, this proportionality between the number of ionizations in a volume (however small it may be) and the deposited energy is therefore not detailed enough. Indeed, in this context, it is necessary to “zoom in” on the scale of the target’s constituents at the nanometric scale to look at all the energy deposits (or energy transfers) produced by the initial radiation, as well as the secondary particles, notably the electrons. This is the study of the so-called track structure of radiation. At this scale, the differences between the spatial distribution of energy deposits defined by the tracks produced by different types of radiation (photons, electrons, energetic ions of different energies, etc.) lead to variations in early damage sufficient to produce a great diversity of later effects at both the cellular and tissue levels.

Thus, for example, in the case of irradiation by high energy ions, we can look at the track they produce as being formed by a “core” and a “penumbra” region. The core is formed by the energy deposits of the projectile itself and is almost straight as elastic scattering does not have an important influence on the ion direction at energies under 10 MeV. The penumbra region is formed by the energy deposits of secondary electrons produced during ionizations with energies of ~1–100 MeV, interacting with many molecules in the target [22, 23].

However, when the primary particle ionizes water molecules, the main component of biological matter, many of the electrons are produced with low energy [24, 25]. Indeed, the energy of the emitted electrons for a given material is mainly determined by the oscillator-strength distribution of its valence electronic structure. The long range of the Coulomb interaction and a cross section that peaks at ~20–30 eV and decreases to very low values at 100 eV lead to the formation of electrons with energies that are, in general, less than 100 eV [26]. These low energy electrons (more broadly defined as those ≤10 keV) have a small penetration range (<1 μm) and a short inelastic mean free path (IMFP) (<10 nm) in typical condensed media [27] such as water or DNA components. Therefore, most of the direct damage is produced around the track and, more specifically, at the track ends, where these electrons are produced in large numbers.

In fact, the electrons below ~20 eV seem to be particularly effective because, in addition to participating in the production of direct damage by ionizations or excitations of the constituents of the DNA, they can undergo resonant scattering with molecules, generating reactive radicals and molecular species, which can themselves contribute to DNA breaks [28] and oxidative damage. Experiments have indicated that electrons (or photons) with energies as low as ~10 eV can still induce double strand breaks, possibly through a resonance mechanism [29, 30].

4.3.3 Physicochemical and Chemical Stages (Indirect Effect)

In the previous section, we considered the interactions between IR and the target molecule (DNA) and how some of these interactions can cause damage directly. However, IR interacts in the same way with the surrounding water and induces local electronic instability. The physicochemical stage corresponds to the set of rapid electronic and atomic modifications through which the medium readjusts in order to return to thermal equilibrium. Thus, water molecules that are in an excited or ionized state can dissociate into new chemical species (radiolysis), including the OH° and H° radicals, hydrated electrons, and H3O+ ions.

Among these species, the OH° (hydroxyl) radical is particularly interesting in radiobiology, because it can be the origin of DNA damages that are difficult to repair by the cell. This radical is mainly produced from the radiolysis of pure water following different mechanisms (dissociation directly after an ionization or an excitation of the water molecule).

Moreover, sub-excitation electrons (with an energy lower than the lowest electronic excitation level of the water molecule, 8.22 eV) undergo elastic scattering and continue to lose energy through vibrational and rotational interactions until they reach the thermal energy of the medium (thermalization). This thermalization process competes with two electron capture processes, either by a neutral water molecule (“dissociative attachment”) or by an ionized water molecule (“geminate recombination”), and is assumed to be completed within a picosecond after the irradiation.

Beyond the picosecond, the newly created radiolytic species are free to diffuse randomly in the medium and to interact with each other; this is the chemical stage. Initially localized around the energy deposits of the track, they spread and become more homogeneously distributed in the medium as time evolves. The initial distribution of species depends strongly on the LET of the incident particle. In the case of high-energy electron projectiles (low LET), the initial distribution in the form of separate clusters is more strongly marked than in the case of ions, for which the LET is higher and the energy depositions are therefore more homogeneously distributed along the whole track. It is generally accepted that beyond the microsecond, most of the reactions between different clusters are completed and the chemical stage can be considered finished for a given track.

As indicated, all the simultaneous reactions are thus in competition and the temporal evolution of the chemical species, as shown in the example in Fig. 4.13, can strongly depend on the initial parameters. These reactions are very numerous in a liquid water medium [31] and increase even more in complex biological media. Thus, even in rigorous radiation chemistry experiments studying the kinetics of elementary chemical reactions, it can be difficult to measure the impact of secondary and competing reactions. In this context, simulation becomes a powerful tool to predict the complex dynamics of macroscopic observables, starting from elementary mechanisms [32].

To do so, one category of numerical simulations divides the modeling into two phases with different levels of granularity and acceptable simplifying assumptions. In the first phase, each radical species is considered individually, and the quantities of interest are the reaction rates, the diffusion coefficients, or the branching ratios. This first phase can be simulated using molecular dynamics (such as Born–Oppenheimer or Car–Parrinello) and/or quantum mechanical calculations such as TD-DFT. However, this approach is prohibitive in terms of computation time for a large number of molecules, which limits its application to systems as large as a cell. In the second phase, approximations can be made to significantly reduce the computation time. For example, molecules of the same species can be grouped in order to describe their evolution by a single variable (their concentration), and two types of methods are often applied: either probabilistic (Gillespie algorithms) or based on the solution of differential equations.
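As an illustration of the probabilistic option, the sketch below applies a Gillespie-type stochastic simulation to a single recombination channel of the form A + A → B (for instance, two identical radicals forming a molecular product). It assumes a well-mixed volume, and the per-pair rate constant and the initial number of radicals are hypothetical values chosen only for the example. With a single channel, no reaction selection step is needed; with several channels, the next reaction would be drawn in proportion to the individual propensities.

```python
import math
import random


def gillespie_dimerization(n_radicals, c_per_pair_per_s, t_end_s, seed=0):
    """Simulate A + A -> B in a well-mixed volume; return a list of (t, n_A, n_B)."""
    rng = random.Random(seed)
    t, n_a, n_b = 0.0, n_radicals, 0
    history = [(t, n_a, n_b)]
    while n_a >= 2:
        # Propensity = rate per pair times the number of distinct A-A pairs
        propensity = c_per_pair_per_s * n_a * (n_a - 1) / 2.0
        # Waiting time to the next reaction is exponentially distributed
        tau = -math.log(1.0 - rng.random()) / propensity
        if t + tau > t_end_s:
            break
        t += tau
        n_a -= 2
        n_b += 1
        history.append((t, n_a, n_b))
    return history


# Hypothetical values: 100 radicals, 1e7 per pair per second, followed for 1 microsecond
history = gillespie_dimerization(100, 1.0e7, 1.0e-6)
print(history[-1])
```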

A second category of numerical simulations consists of describing the medium as a solvent or continuum and calculating the diffusion and chemical reactions of only the species of interest. This method is well adapted when the number of molecules is relatively small and, more particularly, when their distribution is inhomogeneous, as is the case here. Therefore, most of the track structure codes that simulate the chemical stage use this approach (Sect. 4.3.5), with further simplifications such as treating each molecule as a sphere that diffuses independently of the other molecules. Within this diffusion-reaction model, the diffusion of the species in the medium can be described with the Green's function of the diffusion equation (GFDE). A reaction between two particles is considered to occur when the interparticle distance is smaller than their reaction radius. The reactions can be either fully or partially diffusion-controlled and involve neutral or charged particles. This gives four classes of reactions, which were introduced by Green et al. [33]. For totally diffusion-controlled reactions (type I), the rate constant is assumed to be infinite, meaning that the particles react whenever they collide. In this case, the GFDE solution can be calculated using the Smoluchowski boundary conditions in three dimensions [34]. This reaction mechanism is the one most often triggered when radiolytic species diffuse and encounter a reactive site representing either another radical or a DNA constituent (sugar-phosphate backbone or bases with high rate constants). For the other reaction types, including those representing scavenger effects, the reader is referred to the literature [35, 36].

Within this framework (GFDE), different stochastic simulation techniques have been proposed to calculate the probability that a reaction occurs, depending on the position of each molecule at a given time [33, 37, 38], such as the step-by-step method or the Independent Reaction Time (IRT) method.
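For a totally diffusion-controlled reaction between two neutral species, the probability that a pair initially separated by r0 has reacted by time t is commonly written as W(r0, t) = (R/r0) erfc[(r0 − R)/√(4Dt)], where R is the reaction radius and D the sum of the two diffusion coefficients. The sketch below, given under this assumption, evaluates that probability and inverts it to draw an independent reaction time for one pair, which is the basic operation of an IRT-type scheme. The function names and the numerical values (in nm and ns) are hypothetical.

```python
import math
from statistics import NormalDist


def reaction_probability(r0_nm, reaction_radius_nm, d_rel_nm2_per_ns, t_ns):
    """Probability that a totally diffusion-controlled pair has reacted by time t."""
    if r0_nm <= reaction_radius_nm:
        return 1.0  # the pair is already in contact
    if t_ns <= 0.0:
        return 0.0
    x = (r0_nm - reaction_radius_nm) / math.sqrt(4.0 * d_rel_nm2_per_ns * t_ns)
    return (reaction_radius_nm / r0_nm) * math.erfc(x)


def sample_reaction_time(r0_nm, reaction_radius_nm, d_rel_nm2_per_ns, u):
    """Invert the probability above for a uniform random number u in (0, 1].

    Returns the reaction time in ns, or None if the pair never reacts.
    """
    if r0_nm <= reaction_radius_nm:
        return 0.0
    p_infinity = reaction_radius_nm / r0_nm  # reaction probability at infinite time
    if u >= p_infinity:
        return None
    # erfc(x) = 2 * Phi(-sqrt(2) * x), hence x = -Phi^{-1}(p / 2) / sqrt(2)
    p = u / p_infinity
    x = -NormalDist().inv_cdf(p / 2.0) / math.sqrt(2.0)
    return (r0_nm - reaction_radius_nm) ** 2 / (4.0 * d_rel_nm2_per_ns * x ** 2)


# Hypothetical pair: initial separation 1 nm, reaction radius 0.5 nm, D = 5 nm^2/ns
t_react = sample_reaction_time(1.0, 0.5, 5.0, u=0.2)
print(t_react, reaction_probability(1.0, 0.5, 5.0, t_react))
```

In a full simulation, a time would be drawn in this way for every possible pair, the pairs would be sorted by reaction time, and the reactions would be processed in that order, removing the reactants involved at each step.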

Indirect damages are the consequence of these reactions for the DNA molecule and can represent between 30 and 90% of the total DNA damage depending on the LET of the irradiation. Among them, of importance are the strand breaks produced by the hydroxyl radical capturing the hydrogen of the deoxyribose at the C4 position or the addition of hydroxyl radical to a nitrogenous base, resulting in base alterations. These altered bases are often unstable and can either decompose or react with environmental molecules and radiolytic species. The underlying reactions are therefore multiple and complex [39]. DNA-protein or DNA–DNA bridging can also occur under the effect of radical species produced by radiation [40].

It should be noted that the description of the chemical stage given above becomes much more complex if we take into account a more realistic chemistry of the cellular environment, adding factors such as the pH, the oxygen concentration, or the presence of more complex molecules around the DNA, commonly called “scavengers” because of their action on the radical species. In particular, the concentration of oxygen has been shown to have a significant impact on radiation resistance: hypoxic cancer cells are 2–3 times more resistant to radiation than healthy, normoxic cells. This “oxygen effect” is also believed to be one of the possible explanations for the protective effect on healthy tissue in FLASH radiotherapy, as the depletion of oxygen during irradiation could create a temporary hypoxic environment for both healthy and cancer cells. Nevertheless, this hypothesis is not yet fully proven, and the mechanism behind the FLASH effect remains unknown [41].

4.3.4 Biological Stage (Early DNA Damage Scoring)

Radiation-induced damage takes multiple forms and depends on numerous factors such as the type of radiation, the DNA configuration, or the condition of the irradiated medium. It results from the physical, physicochemical, and chemical processes explained in the previous sections and is thus generated either by direct or by indirect effects. The main types of DNA damage are strand breaks (single, double, or clustered), base alterations, and protein-DNA and DNA–DNA bridges. Of these, the radiobiology and simulation communities have historically been most interested in double strand breaks (DSB) or clustered damage including at least one DSB. Indeed, in most repair models this type of DNA damage is called “lethal” or “semi-lethal,” as it is considered to lead to misrepair and cell death [42,43,44]. In all cases, and even if this complex damage can sometimes be correctly repaired by cellular repair mechanisms, it is established that it can have important consequences for the survival or functioning of the cell. Moreover, DSB can be detected experimentally and compared with the predictions of simulations. Several detection techniques exist, which are adapted to the irradiation configuration, the dose used, or the cell type. Historically, the comet assay or pulsed-field gel electrophoresis (PFGE) has been used with high-dose irradiation in order to generate DNA fragments that can be separated and measured, from which the number of DSB is derived. Data obtained in this way, for example, for proton irradiations at different energies or for gamma rays [40, 45], have been used extensively to validate codes such as PARTRAC [46], KURBUC [47], or, more recently, Geant4-DNA [48].
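On the simulation side, scoring consists of classifying the simulated strand breaks into single and double strand breaks. The sketch below illustrates one simple convention, stated here as an assumption rather than as the criterion of any particular code: two breaks on opposite strands separated by at most 10 base pairs are counted as one DSB, each break is used at most once, and all remaining breaks are counted as single strand breaks (SSB). The input format and the function name are hypothetical.

```python
def score_strand_breaks(breaks, max_separation_bp=10):
    """Classify strand breaks into SSB and DSB.

    breaks: list of (base_pair_index, strand) tuples, with strand equal to 1 or 2.
    Returns a tuple (number_of_ssb, number_of_dsb).
    """
    ordered = sorted(breaks)
    used = [False] * len(ordered)
    dsb = 0
    for i, (pos_i, strand_i) in enumerate(ordered):
        if used[i]:
            continue
        # Look downstream for the nearest unused break on the opposite strand
        for j in range(i + 1, len(ordered)):
            pos_j, strand_j = ordered[j]
            if pos_j - pos_i > max_separation_bp:
                break
            if not used[j] and strand_j != strand_i:
                used[i] = used[j] = True
                dsb += 1
                break
    ssb = used.count(False)
    return ssb, dsb


# Hypothetical break positions along a DNA segment
breaks = [(100, 1), (104, 2), (300, 1), (350, 2), (500, 1), (505, 1)]
print(score_strand_breaks(breaks))
```

In this example, only the pair at positions 100 and 104 lies on opposite strands within the chosen distance, so the remaining four breaks are scored as SSB. Other conventions, for example grouping all breaks within a given distance into a single cluster to describe its complexity, would give different counts for the same input.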

Other techniques, used at low dose, rely on immunofluorescent probes to localize the radiation-induced DSB within the genome. In H2AX immunofluorescence, for example, detection relies on the H2AX variant of histone H2A (which accounts for approximately 25% of H2A histones and is evenly distributed in the DNA): the H2AX histones close to a double strand break are phosphorylated. This phosphorylation is revealed using specific antibodies labeled with a fluorochrome, making the double strand breaks appear as luminous points called “foci” or IRIF (ionizing radiation-induced foci) [49].

A large amount of experimental data has been obtained recently using this technique or other fluorescent biomarkers such as the 53BP1 protein, which makes it possible to quantify the DSB produced by different types of radiation and to compare them with simulation results. However, an important bias of this technique is that, in general, one detectable focus does not correspond to a single DSB formed in the DNA [50], and therefore the irradiation conditions and the geometry of the target must be explicitly considered in the simulation for such validations [51, 52].

4.3.5 Track Structure Monte Carlo Codes

As described earlier in this chapter, particle transport through matter in MC codes is generally handled via a “condensed history” (CH) approach [53], which is currently used for dosimetry and for the majority of microdosimetry applications involving very energetic particles. In such a CH approach, many scattering events are grouped into fewer artificial steps, much longer than the mean free path of the particle, using multiple-scattering theories and a continuous energy loss along those steps. However, in order to simulate the physics at the nanoscale and possibly to link the biological effects of radiation to track structure properties in the nm regime [54], event-by-event tracking of the individual physical interactions is necessary to achieve better spatial resolution. Therefore, so-called track structure codes have been developed for applications in micro- and, mostly, nanodosimetry. In Table 1 taken from [55], we present the list of the main track structure codes that have been developed since the 1980s. This table indicates whether each code can simulate the chemical stage and which materials are available for the simulation of the physical stage.

Indeed, in order to model all the interactions taking place in the physical stage, these codes need to include cross sections for simulating ionization, electronic excitations below the ionization threshold, and, ideally, vibrational or rotational excitations of the medium, in principle for all the interacting particles but particularly for secondary electrons, for the reasons explained in Sect. 4.3.2. Therefore, track structure codes rely on either pre-parameterized or tabulated sets of total and differential elastic and inelastic cross sections in order to calculate the energy deposition in condensed matter. An important point to consider is that, at these low energies, the interaction cross sections depend not only on the composition of the material but also on its state: the cross sections are not the same for a medium in a gaseous or a condensed state. This is a particular difficulty because it is very difficult, if not almost impossible, to obtain experimental cross sections for biological media in their condensed state [24]. Only a few data obtained under very specific conditions exist for liquid water [56, 57], and these data are the basis for the set of models utilized to calculate the cross sections used by most track structure codes.
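As an illustration of how tabulated total cross sections are typically used during event-by-event transport, the sketch below interpolates a table on a log-log grid and then selects the interaction type with a probability proportional to its cross section. The table values are placeholders invented for this example (they are not physical data), and the function names are hypothetical rather than taken from any specific code.

```python
import bisect
import math
import random

# Placeholder table, NOT real data: cross sections in arbitrary units.
ENERGIES_EV = [10.0, 100.0, 1000.0]
TABLE = {
    "ionization": [1.0, 10.0, 2.0],
    "excitation": [2.0, 3.0, 0.5],
    "elastic": [20.0, 5.0, 0.5],
}

def interpolate_loglog(energy_ev, energies, values):
    """Log-log interpolation of a tabulated cross section."""
    if not energies[0] <= energy_ev <= energies[-1]:
        raise ValueError("energy outside the tabulated range")
    hi = max(1, bisect.bisect_left(energies, energy_ev))
    lo = hi - 1
    slope = math.log(values[hi] / values[lo]) / math.log(energies[hi] / energies[lo])
    return values[lo] * (energy_ev / energies[lo]) ** slope

def sample_process(energy_ev, rng):
    """Pick the next interaction type in proportion to its cross section."""
    sigmas = {name: interpolate_loglog(energy_ev, ENERGIES_EV, vals)
              for name, vals in TABLE.items()}
    pick = rng.random() * sum(sigmas.values())
    cumulative = 0.0
    for name, sigma in sigmas.items():
        cumulative += sigma
        if pick < cumulative:
            return name
    return name  # guard against rounding at the upper edge

rng = random.Random(0)
print(sample_process(100.0, rng))
```

In a real track structure code the same selection step would be followed by sampling the differential cross sections to obtain the energy loss and the scattering angle.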

Nevertheless, some track structure codes still use atomic ionization and electronic excitation cross sections [58] obtained in the gas phase even though, in principle, these are not suitable for low-energy excitations of valence electrons in water, since such excitations are sensitive to the electronic structure of the target [54, 59, 60, 61].

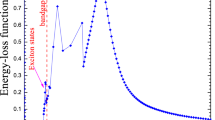

Nevertheless, most of the theoretical models used to calculate the cross sections in these codes are based on the first Born approximation within the dielectric formalism. Here, the response of a given material to inelastic interactions with charged particles is described by the so-called Energy Loss Function (ELF), from which the mean free path and thus the inelastic cross sections can be calculated. However, this function depends on both the energy and the momentum transferred by the charged particle. As the existing experimental data have been obtained in the optical limit (i.e., for zero momentum transfer), the function must be extended to non-zero momentum transfers. Different dispersion algorithms based on the electron gas theory [53] are then used to redistribute the imaginary part of the function between the different ionization and excitation levels while preserving the agreement of their sum with the initial experimental data.
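For orientation, the relation between the ELF and the inelastic mean free path of an electron is commonly written, in the non-relativistic first Born approximation, in the form given below. The notation is chosen here for illustration and is not taken from this chapter: $T$ is the kinetic energy of the electron, $W$ the energy transfer, $\hbar q$ the momentum transfer, $\varepsilon(W,q)$ the dielectric function, $a_0$ the Bohr radius, $m$ the electron mass, and $W_{\max}$ the largest energy transfer allowed by the chosen model.

```latex
\lambda^{-1}(T) \;=\; \frac{1}{\pi a_0 T}
\int_{0}^{W_{\max}} \mathrm{d}W
\int_{q_-}^{q_+} \frac{\mathrm{d}q}{q}\,
\mathrm{Im}\!\left[\frac{-1}{\varepsilon(W,q)}\right],
\qquad
q_\pm \;=\; \frac{\sqrt{2m}}{\hbar}\left(\sqrt{T} \pm \sqrt{T-W}\right)
```

The ELF is the quantity $\mathrm{Im}[-1/\varepsilon(W,q)]$. Optical measurements constrain it only at $q = 0$, whereas the integral runs over $q_- \le q \le q_+$ with $q_- > 0$, which is why a dispersion algorithm is needed to evaluate it.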

However, the inelastic scattering results obtained with different dispersion algorithms for extrapolating optical data to finite momentum transfer differ by about a factor of 2 in the range 50–200 eV (and by even more at lower energies) [62], and these differences propagate to the simulation results. Recent studies have reported a potentially relevant effect on ionization clustering [63] and DNA damage induction [64].

The description of the dielectric function of water also continues to be studied. Only recently have works been published that address exchange and correlation effects based on the electron gas model or that improve the description of effects beyond the first Born approximation [27, 65]. The objectives are to improve previous dispersion algorithms [66], to develop new TS codes [67, 68], and to clarify differences in inelastic scattering between different condensed phases [69]. In addition, other authors are working on measuring cross sections for targets other than water, or on adapting the theoretical models (using, for example, pre-parameterized models [70]) to obtain them, so that they can be included in TS codes.

Concerning the elastic scattering models for low-energy electrons, different theoretical approaches have also been developed and included in TS codes. Some use screening parameters derived from experiments to extend the applicability of the first Born approximation [71], while others use the Dirac partial wave analysis [72, 73].

Overall, the accuracy of the results for water at energies below 100 eV remains questionable. It would be desirable to have results for the dielectric function, the electron energy loss, and the inelastic mean free path from ab initio TD-DFT approaches, which have no free parameters and are therefore likely to have predictive power and to be extendable to a variety of targets (Table 4.3).

4.3.6 Simulation of DNA Damage

DNA damage is calculated from the energy depositions at the nanometric scale in liquid water, simulated with track structure codes and overlaid onto DNA models. The geometrical description of the DNA can be as simple as a cylindrical model [74, 75] or as complex as a full atomistic description of human chromosomal DNA [76]. Nowadays, some of these models are directly included in the simulation of the physical stage (see Fig. 4.14) [48, 78], so that cross sections for DNA material can be used instead of those of liquid water when they are available in the MC TS code. In addition, subcellular structures are implemented in some TS codes [79], for instance to calculate the energy deposited in mitochondria or cellular membranes.

Example of a DNA target geometrical model used in the mechanistic simulation of radiation-induced DNA damage with the Geant4-DNA code [48]. This geometrical model was generated with the DNAFabric software [77], from the nucleotide description up to the complete genome of a eukaryotic cell nucleus in the G0/G1 phase

From the resulting energy deposition values or interactions registered in the DNA volumes, direct damage is calculated using different approaches depending on the TS code. For instance, in some cases, an energy threshold (often 17.5 eV) deposited in the nucleotide backbone is used to define a direct strand break [30, 80]. Other codes, such as PARTRAC, use a break probability that increases linearly between 5 and 37.5 eV [81] to calculate the resulting direct strand breaks, taking into account that very small energy depositions from vibrational excitations can also lead to this kind of DNA damage.
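The two criteria quoted above can be written as break-probability functions of the energy deposited in the backbone volume. The following is a minimal sketch, not the implementation of any particular code: the function names are hypothetical, the threshold is assumed to be inclusive, and the linear model is assumed to rise from a probability of 0 at 5 eV to a probability of 1 at 37.5 eV.

```python
import random

THRESHOLD_EV = 17.5     # threshold value quoted in the text
LINEAR_LOW_EV = 5.0     # lower bound of the linear model quoted in the text
LINEAR_HIGH_EV = 37.5   # upper bound of the linear model quoted in the text

def break_probability_threshold(energy_ev):
    """Step model: a break occurs if the deposit reaches the threshold (assumed inclusive)."""
    return 1.0 if energy_ev >= THRESHOLD_EV else 0.0

def break_probability_linear(energy_ev):
    """Linear model: assumed to go from 0 at 5 eV to 1 at 37.5 eV."""
    if energy_ev <= LINEAR_LOW_EV:
        return 0.0
    if energy_ev >= LINEAR_HIGH_EV:
        return 1.0
    return (energy_ev - LINEAR_LOW_EV) / (LINEAR_HIGH_EV - LINEAR_LOW_EV)

def is_direct_break(energy_ev, probability_model, rng):
    """Decide stochastically whether a given energy deposit produces a strand break."""
    return rng.random() < probability_model(energy_ev)

rng = random.Random(0)
for energy in (4.0, 10.0, 17.5, 30.0, 40.0):
    print(energy,
          break_probability_threshold(energy),
          round(break_probability_linear(energy), 3),
          is_direct_break(energy, break_probability_linear, rng))
```

Under these assumptions, a 10 eV deposit never produces a break in the step model but does so in about 15% of cases in the linear model, which illustrates why strand break yields from different codes cannot be compared without knowing the criterion used.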

After the simulation of the physical stage, the geometrical model of the DNA target (essentially the position of all its constituents) as well as the positions of the surrounding ionized or excited liquid water molecules are “translated” into chemical species and passed to the code for the simulation of the chemical stage, as described in Sect. 4.3.3. Here also, different codes use different parameters for the definition or the calculation of the indirect strand breaks, depending on the DNA geometrical model, the number of included reactions, or the duration of the chemical stage simulation [32].

Finally, in order to quantify the results, an important issue is the definition of double strand breaks and, above all, of clustered damage. These notions are fundamental for comparing the simulation predictions with experimental data, which represent either the fragments produced (PFGE, comet assay) or the signaling of a repair process set in motion by the cell (foci). The way of quantifying the damage predicted by the modeling of the physical, physicochemical, and chemical stages must thus be adapted each time to the characteristics of the experimental observable used for the validation. For a relative comparison of different radiations, however, other types of classification can be used. Likewise, in order to extend the modeling to later stages and include the repair mechanisms, the scoring method must be adapted to the initial damage definitions of each repair model. The definition of a double strand break is relatively well established: two breaks in the sugar-phosphate group on opposite strands separated by less than 10 base pairs (bp). Definitions of more complex breaks or clustered damage, in contrast, are very author-dependent: for instance, DSBs accompanied by altered bases or single strand breaks at less than 10 bp, or two double strand breaks separated by less than 25 bp [82], or more complete schemes such as the classification proposed by Nikjoo et al. [83].
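The double strand break definition given above translates directly into a scoring routine. The sketch below is one possible implementation under explicit assumptions: strand breaks are given as (strand, position in bp) pairs on a single DNA segment, each break is used in at most one DSB, and breaks are paired greedily from the lowest position upward. The function name and the pairing rule are hypothetical choices, and clustered damage beyond simple DSBs is not classified.

```python
def score_breaks(strand_breaks, max_separation_bp=10):
    """Classify strand breaks into SSBs and DSBs.

    strand_breaks: iterable of (strand, position_bp), with strand equal to 1 or 2.
    A DSB is scored for two breaks on opposite strands separated by
    less than max_separation_bp base pairs.
    """
    ordered = sorted(strand_breaks, key=lambda brk: brk[1])
    used = [False] * len(ordered)
    dsb_sites = []
    for i, (strand_i, pos_i) in enumerate(ordered):
        if used[i]:
            continue
        for j in range(i + 1, len(ordered)):
            strand_j, pos_j = ordered[j]
            if pos_j - pos_i >= max_separation_bp:
                break  # breaks are sorted, so all later ones are too far away
            if not used[j] and strand_j != strand_i:
                used[i] = used[j] = True
                dsb_sites.append((pos_i, pos_j))
                break
    return {"SSB": used.count(False), "DSB": len(dsb_sites), "DSB_sites": dsb_sites}

breaks = [(1, 100), (2, 104), (1, 300), (2, 320), (1, 500), (1, 503)]
print(score_breaks(breaks))
```

In this example only the breaks at positions 100 and 104 form a DSB: the pair at 300 and 320 is too far apart, and the pair at 500 and 503 lies on the same strand. Changing `max_separation_bp` or the pairing rule changes the DSB yield, which is one reason why scoring conventions must be reported together with simulation results.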

Recently, a standardized format for the simulation output [84] has been proposed by different researchers in this community, in order to preserve as much information as possible on the DNA damage simulated by the different codes and on its location in the genome. This standard output amounts to a map of the individual lesions produced (together with their direct or indirect origin), which can then be adapted to the scoring required for each use of the code, whether validation against experimental data or use as input to repair models (Box 4.6).

Box 4.6 Radiation Track Structures

- MC track structure codes developed over the years allow the simulation of energy deposition at the nanometric scale
- From these results and a DNA geometrical target model, direct DNA damage can be calculated
- Chemical reactions between radiation-induced chemical species in the cell nucleus and the DNA target generate the so-called indirect effects that account for up to 70–90% of the total strand breaks
- The way of considering damage and its complexity must be adapted to the different experimental methods

4.4 Micro-Beams and Minibeams

4.4.1 Micro-Beams and Minibeams

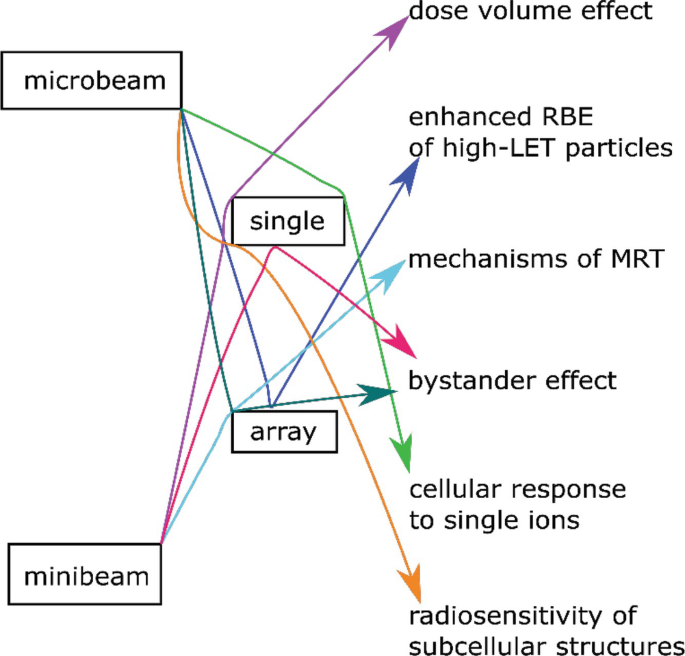

Conventional radiobiological studies use broad irradiation fields (in the range of cm) to irradiate a whole cell population with a homogeneous dose, so that the average reaction of this population to radiation can be assessed. As early as the 1950s, the reaction of single cells to homogeneous irradiation, or even to irradiation of subcellular parts, became of interest [85].

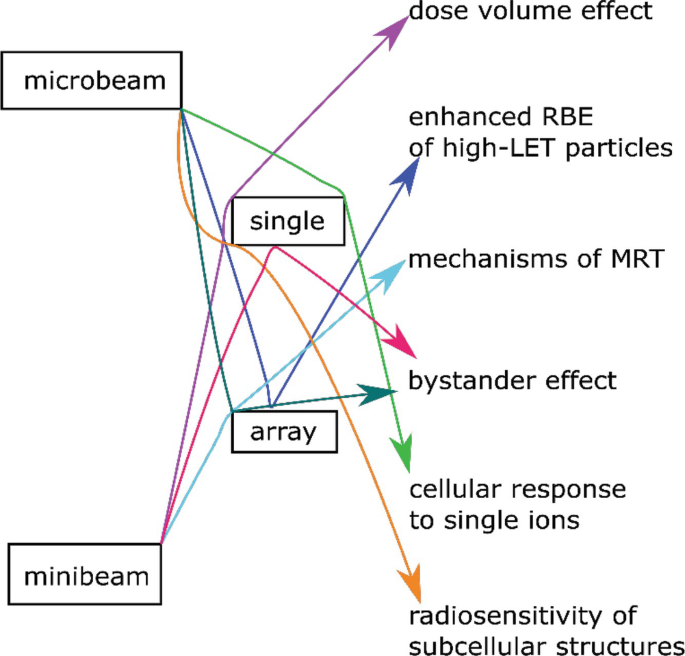

Furthermore, in the 1990s the question arose whether non-irradiated cells react when they are located close to an irradiated one, the so-called bystander effect. To address these and other related topics, it is necessary to be able to apply a single, subcellular-sized radiation beam (from sub-micron to a few microns) with a targeting accuracy of one to a few μm. This is the field of microbeam research, where the term microbeam is used for beam sizes at full width at half maximum in the range of ~1 to ~10 μm, for photon as well as particle beams. Additionally, the development of micro-beams makes it possible to apply not only single beams but also arrays of beams, which can then be used to directly study the kinetics of DNA repair, the movement of damage sites, the connection to chromatin organization, and their relation to radiation quality and outcome.

When beam sizes get larger (~100 μm to ~1 mm), the beam or beam array is termed a minibeam or minibeam array. Here, the beam size becomes large compared to the cell size, and the relevant differences in effect shift from the single-cell level to the level of cell populations. An effect in this size range was described in the 1980s as the so-called dose-volume effect [86].

This effect is exploited in modern radiotherapy approaches such as microbeam radiation therapy (MRT), which uses photon beams with a beam size around 100 μm, and particle minibeam radiotherapy (MBRT), which uses submillimeter-sized beams of protons or heavier ions (Table 4.4).

4.4.1.1 Micro-Beams

A new worldwide wave of interest in the use of micro-beams in radiation biology in the 1990s led to the development of a number of tools that eventually evolved into facilities with potential clinical utility [87, 88]. Single cell micro-beams provide a unique opportunity to control precisely the dose to individual cells in vitro and the localization of the dose within the cell. This makes it possible to study a number of important radiobiological processes in ways that cannot be achieved by other methods. Figure 4.15 shows such micro-beams, applied singly or as an array, visualized on fluorescent nuclear track detectors and also via the foci of the 53BP1 repair protein in human HeLa cells.

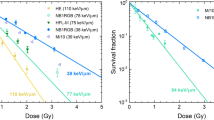

Proton microbeam with a size of 0.8 μm (fwhm) visualized by a fluorescent nuclear track detector. Array of proton micro-beams with a point distance of 5 μm in both directions. 53BP1 accumulation in HeLa cells after microbeam array irradiation with a single carbon ion per point [beam size 0.8 μm (fwhm) and point distance 5 μm]
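To relate the beam size quoted as a full width at half maximum (FWHM) to the targeting of individual points, the sketch below converts the FWHM to a standard deviation and computes the fraction of particles that land within a given radius of the beam axis. It assumes a circularly symmetric Gaussian beam profile, which is an idealization not stated in the text, and it ignores scattering in the sample. The function names are hypothetical.

```python
import math

def fwhm_to_sigma(fwhm_um):
    """Standard deviation of a Gaussian profile with the given FWHM."""
    return fwhm_um / (2.0 * math.sqrt(2.0 * math.log(2.0)))

def fraction_within_radius(radius_um, fwhm_um):
    """Fraction of a circular 2D Gaussian beam landing within radius_um of its axis."""
    sigma = fwhm_to_sigma(fwhm_um)
    return 1.0 - math.exp(-radius_um ** 2 / (2.0 * sigma ** 2))

fwhm = 0.8      # beam size in micrometers, as quoted in the figure caption
spacing = 5.0   # point distance in micrometers, as quoted in the figure caption

print("sigma (um):", round(fwhm_to_sigma(fwhm), 3))
print("fraction within FWHM/2:", round(fraction_within_radius(fwhm / 2.0, fwhm), 3))
print("fraction within half the point distance:",
      fraction_within_radius(spacing / 2.0, fwhm))
```

Under the Gaussian assumption, exactly half of the particles fall within a radius of FWHM/2, and essentially all of them fall within half the point distance, so neighboring irradiation points in such an array would not overlap.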