Abstract

Despite decades of research to understand the biological effects of ionising radiation, there is still much uncertainty over the role of dose rate. Motivated by a virtual workshop on the “Effects of spatial and temporal variation in dose delivery” organised in November 2020 by the Multidisciplinary Low Dose Initiative (MELODI), here, we review studies to date exploring dose rate effects, highlighting significant findings, recent advances and to provide perspective and recommendations for requirements and direction of future work. A comprehensive range of studies is considered, including molecular, cellular, animal, and human studies, with a focus on low linear-energy-transfer radiation exposure. Limits and advantages of each type of study are discussed, and a focus is made on future research needs.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

Introduction

In the current system of radiological protection, risk to a specific organ or tissue is considered to depend on the absorbed energy averaged over the target mass exposed. The biological outcome of the exposure is determined not only by the total absorbed dose but also by the time frame of the dose delivery, and by the type of ionising radiation responsible for the energy deposition (radiation quality). To account for the effects of dose and the temporal variation in dose delivery, a single dose and dose rate effectiveness factor (DDREF) is currently applied for the purposes of radiological protection. However, the evidence base for this judgement continues to be debated, as reflected by previous and ongoing work performed in Task Group 91 of the International Commission on Radiological Protection (ICRP) (Rühm et al. 2015, 2016; Wakeford et al. 2019).

The EU MELODI (Multidisciplinary Low Dose Initiative) platform is considering inhomogeneity in dose delivery, both at the temporal and spatial level, as a priority research area. Mechanisms responsible for biological effects of different dose rates or of inhomogeneous spatial dose deposition are not fully characterised. At the cellular level, such effects are investigated with in vitro studies, but when it comes to how they finally affect human health risk (both cancer and non-cancer diseases), few relevant experimental models or validated datasets exist (https://melodi-online.eu/). To cover the topic of the effects of spatial and temporal variation in dose delivery, a digital workshop was conducted in November 2020 evaluating what is known on the effect of dose rate, among other aspects. This publication builds on the outcomes of this meeting.

The present paper summarises current evidence for the influence of dose rate upon radiation-related effects. Endpoints considered include molecular, cellular, organism, and human studies. Emphasis will be placed on dose rates relevant for radiological protection settings. We focus on low linear-energy-transfer (LET) external exposures, since for internal contamination with radionuclides, a decrease in dose rate with time will occur to varying extents due to the physical and biological half-lives of the involved radionuclides, complicating the interpretation of results.

The manuscript structure includes the history of low-dose rate definition, ongoing work on DDREF under ICRP TG91, presentations of experimental work (in vitro and in vivo), and epidemiological studies. Limits and advantages of each approach are discussed, and a focus is made on future research needs.

The dose rate concept

Definition of low-dose rate

The United Nations Scientific Committee on the Effects of Atomic Radiation (UNSCEAR) first defined low-dose rate (LDR) with respect to radiation-related cancer in its 1986 Report (UNSCEAR 1986). For all types of radiation, LDR were < 0.05 mGy/min (3 mGy/h) and high-dose rate (HDR) were > 0.05 Gy/min (3000 mGy/h), with dose rates in between defined as “intermediate dose rates”. These definitions were reiterated in the UNSCEAR (1988) Report. The UNSCEAR (1993) Report, Annex F comprehensively discussed how dose rates might be classified according to a number of approaches: microdosimetric considerations, cellular experiments, animal experiments, and human epidemiology. UNSCEAR (1993) concluded that information on LDR relevant to assessing radiation carcinogenesis in humans could be obtained from animal experiments. On the basis of animal studies, UNSCEAR (1993) was of the view that following exposure to low-LET radiation, a dose rate effectiveness factor should be applied to reduce the excess cancer risk per unit dose if the dose rate was < 0.1 mGy/min (when averaged over about an hour), whatever the total dose received.

Of interest is the position adopted by ICRP in Publication 60, the 1990 Recommendations (ICRP 1991). In ICRP Publication 60, in the context of stochastic health effects, an LDR was defined as < 0.1 Gy/h (equivalent to 1.67 mGy/min), a dose rate that was a factor of 33 larger than the < 0.05 mGy/min defined by UNSCEAR in its 1988 Report, but no explanation was provided as to how this value was derived or why it differed substantially from the definition then recently adopted by UNSCEAR (1988). The ICRP Publication 60 definition of an LDR as < 0.1 Gy/h contrasts with that of < 0.1 Gy/day adopted by the UK National Radiological Protection Board (NRPB) in 1988 in its report NRPB-R226 (Stather et al. 1988) and referred to in the UNSCEAR 1993 Report (UNSCEAR 1993). A definition of an LDR as < 0.1 Gy/day is equivalent to < 0.07 mGy/min, which is very close to the definition given in the UNSCEAR 1988 Report of < 0.05 mGy/min. The ICRP 2007 Recommendations, ICRP Publication 103 (ICRP 2007), although frequently referring to LDR in the context of a low-dose rate effectiveness factor, does not define the range of dose rates considered to be LDR. However, the recently published ICRP Publication 147 (Harrison et al. 2021a, 2021b) states that a DDREF should not be applied to reduce solid cancer risks if the dose rate for low-LET radiation exceeds 5 mGy/h, implying a definition of LDR of 0.1 mGy/min when averaged over approximately 1 h, which is the definition of LDR as restated in the UNSCEAR 2019 Report (UNSCEAR 2019) and in the 2020/2021 Report (UNSCEAR 2021) defines a low dose rate for hight-LET radiation as "no more than one high-LET track traversal per cell per hour".

The above definitions of LDR have been established with respect to stochastic effects, especially cancers. We note, however, that ICRP Publication 60 mentions in the context of deterministic effects that dose rates lower than 0.1 Gy/min of low-LET radiation “result in progressively less cell killing until a dose rate of about 0.1 Gy/h or less is reached for mammalian cells” (ICRP 1991).

Low-dose rate in the current system of radiological protection

In the current scheme of radiological protection recommended by ICRP, following the definitions used by UNSCEAR, for an exposure to a low dose (conventionally < 100 mGy of low-LET radiation) or for an exposure at an LDR (< 0.1 mGy/min of low-LET radiation when averaged over about 1 h, i.e., approximately 5 mGy/h), the excess risk of adverse stochastic health effects (cancer in the exposed individual and hereditary disease in the subsequently conceived descendants of the exposed individual) is taken to be directly proportional to the dose of radiation received with no-threshold dose below which there is an absence of excess risk. This is the linear no-threshold (LNT) dose–response model.

For low-level exposures (low doses or LDR), the current ICRP recommendations incorporate a DDREF, which reduces the risk per unit dose when risk estimates derived from exposures to moderate-to-high doses received at an HDR are applied to exposures to low doses or LDR. Risk estimates for solid cancers obtained from the Japanese atomic-bomb survivors are halved (corresponding to a DDREF of 2) when applied to low-level exposures. A DDREF is not applied to leukaemia, because a linear-quadratic dose–response model is used (rather than linear dose–response models used for solid cancers), which is implicitly consistent with a reduction of risk at low levels of exposure (Cléro et al. 2019).

The DDREF can be considered a combination of a low-dose effectiveness factor (LDEF) and a dose rate effectiveness factor (DREF). The LDEF essentially addresses the degree of upward curvature of the dose–response following a range of doses received from acute exposures to low-LET radiation, whereas the DREF compares the risk per unit dose following high and LDR exposures. Here, epidemiological evidence will be examined to assess the degree of support for the application of a DREF (and specifically, a DREF of 2) to the risk per unit dose obtained from the Japanese atomic-bomb survivors to obtain the risk per unit dose appropriate for LDR exposures.

Recent positions on DDREF

The numerical value of the DDREF is internationally debated. ICRP, in its Publication 60, proposed a value of 2 for low-LET radiation (ICRP 1991). This value was also adopted by UNSCEAR in 1993 (UNSCEAR 1993). While ICRP has confirmed this value in their most recent general recommendations in Publication 103 (ICRP 2007), other expert bodies came to different conclusions. For example, around the same time, the US National Academy of Sciences proposed a value of 1.5 with a range from 1.1 to 2.3 (NRC 2006). While UNSCEAR did not apply a DDREF in their analysis of solid cancers for the UNSCEAR 2006 Report, a linear-quadratic dose–response model was used, which implicitly considers a reduction of risk at low doses (UNSCEAR 2008). DDREF was not directly considered in the report of the French Academy of Sciences, but variations of radiation effects with dose rate were considered as an additional source of uncertainty in the assessment of risks at low doses (Averbeck 2009; Tubiana 2005). Later, the World Health Organisation applied no reduction factor (i.e., a DDREF of 1) in its report on health risk assessment after the Fukushima accident (WHO 2013); and the German Radiation Protection Commission (SSK) opted to abolish the DDREF, corresponding to an implicit value of 1 (SSK 2014). The historical development has been briefly reviewed by Rühm et al. (2015). More recently, UNSCEAR emphasised that while the DDREF is a concept to be used for radiological protection purposes, extrapolation of radiation risks from moderate or high doses and HDR to low doses or LDR may depend on various factors and, consequently, cannot—from a scientific point of view—be described by a single factor (UNSCEAR 2017). For use in probability of causation calculations, values between 1.1 and 1.3 have recently been proposed (Kocher et al. 2018), although the methodology has been questioned (Wakeford et al. 2019).

To review the use of the DDREF for radiological protection purposes, ICRP has initiated Task Group 91 on Radiation Risk Inference at Low-dose and Low-dose Rate Exposure for Radiological Protection Purposes. Since 2014, this group is reviewing the current scientific evidence on low dose and LDR effects, including radiation-induced effects from molecular and cellular studies, studies on experimental animals, and epidemiological studies on humans. Results of this activity have been published regularly in the peer-reviewed literature (Haley et al. 2015; Rühm et al. 2015, 2016, 2017, 2018; Shore et al. 2017; Tran and Little 2017; Wakeford et al. 2019; Little et al. 2020).

Experimental evidence of a dose rate effect

Experimental setup

The difficulty to study biological effects of different dose rates is well illustrated in Elbakrawy et al. (2019), where micronucleus formation was used as an endpoint. HDR exposure is usually short (less than an hour), whereas LDR exposures can last hours to reach the same dose. For this reason, additional groups were added with HDR irradiation performed in parallel at the start of LDR exposure or at the end. When comparing LDR to HDR effects, they found a difference between LDR and HDR when HDR is done at the beginning of LDR exposure and no difference when performed at the end.

As the time between point/period of exposure and biological endpoint measured impacts the result, performing robust experimentations to understand dose rate effects is challenging. Experimental setups should consider cumulative doses, duration of exposure but also the delay between the start and the end of exposure. For such reasons, experimental design should be well conducted with an appropriate statistical analysis and parallel controls always included.

Due to these difficulties in studying LDR, alternative approaches to detect some differences might be necessary. This includes increasing the dose, making the comparison of LDR and HDR effects less relevant for considering radiological protection. Another possible approach is to apply an adaptive response scheme, where the modulation of the response to a challenging dose due to a priming LDR treatment is used to evidence LDR effects (Satta et al. 2002; Carbone et al. 2009; Elmore et al. 2008).

Dedicated infrastructures

In Europe, there are several facilities for in vitro and in vivo exposures to low-dose rates. In the framework of the CONCERT EJP-WG Infrastructure activities, information on some of them has been published in AIR2 bulletins (https://www.concert-h2020.eu/en/Concert_info/Access_Infrastructures/Bulletins).

Among European LDR exposure infrastructures, it is worth mentioning the FIGARO facility, located at the Norwegian University of Life Sciences (NMBU), that allows gamma irradiation of up to 150 mice at 2 mGy/h and larger numbers at lower dose rates (AIR2 No. 1, 2015). In addition, three facilities with similar features located at the UK Health Security Agency (UKHSA, Harwell), Istituto Superiore di Sanità (ISS, Rome, Italy), and Stockholm University (Sweden) are available for irradiation of cells and/or small animals in a dose rate range 2 µGy/h–100 mGy/h (AIR2 No. 11, 2016; AIR2 No. 16, 2016). Another platform, the MICADO’LAB, is located at the French Institute for Radiological Protection and Nuclear Safety (IRSN, France). It has been designed to study the effects on ecosystems of chronic exposure to ionising radiation and is able to accommodate experimental equipment for the exposure of different biological models (cell cultures, plants, and animals at dose rates ranging from 5 µGy/h to 100 mGy/h (AIR2, No. 19, 2017).

Other interesting facilities where studies at extremely low-dose rates have been carried out are Deep Underground Laboratories (DULs) where dose rates are significantly lower than on the Earth’s surface. Although the main research activity in these infrastructures concerns the search for rare events in astroparticle physics and neutrino physics, DULs offer a unique opportunity to run experiments in astrobiology and biology in extreme environments (Ianni 2021) highlighting biological mechanisms impacted by differences in dose rates. The large majority of data have been collected so far in Italy at the Gran Sasso National laboratory (LNGS, AIR2, No. 3, 2015), and in the US at the Waste Isolation Pilot Plant (WIPP). Recently, the interest in this field has been shared by many other DULs where underground biology experiments already started or are planned (SNOLAB Canada, CANFRANC Spain, MODANE France, CJML/JINPING China, BNO Russia, ANDES Argentina). Compared to that at the Earth’s surface, inside DULs, the dose/dose rate contribution due to photons and directly ionising low-LET (mostly muons) cosmic rays can be considered negligible, being reduced by a factor between 104 and 107 depending upon shielding. Radiation exposure due to neutrons is also extremely low, being reduced by a factor between 102 and 104. One further contribution to the overall dose/dose rate can come from radon decay products, but it depends upon the radon concentration, which can be kept at the same levels of the reference radiation environment by a suitable ventilation system. Terrestrial gamma rays represent the major contribution to the dose/dose rate inside the DULs (Morciano et al. 2018b).

Dedicated cellular and animal models

MELODI embarked on a large effort beginning around 2008 to collect all archives and tissues from animal irradiation studies done in Europe. The result of this was the European Radiobiological Archive (ERA) that is available to all investigators worldwide, and some of the animal studies included in this collection and database include low-dose rate studies (Birschwilks et al. 2012); www.bfs.de/EN/bfs/science-research/projects/era/era_node.html. In the US, the Department of Energy (DOE) collected archived tissue samples and databases from long-term studies involving approximately 49,000 mice, 28,000 dogs, and 30,000 rats. Data from many of these studies are available on the website janus.northwestern.edu/wololab. While many of these experimental animal studies had been done at low-dose rates and studies were published, the ability to re-analyse them with new statistical and computational approaches allowed for the assessment of the data from new perspectives.

Rodents are particularly radioresistant and wild-type strains will not develop some pathologies of interest, such as atherosclerosis. Therefore, to study some specific mechanisms, the use of transgenic mice can be beneficial for understanding effects observed in humans. Most transgenic mouse studies are limited by the fact that they are imperfect models of the human situation. For example, animals with oncogenic mutations develop caners, but they are often similar but not identical to the human disease (Cheon and Orsulic 2011). Another limitation is that most human disorders that are modelled in transgenic situations have multi-genic causes, but the creation of a transgenic mouse often assumes that a single gene is responsible for the disease. In fact, the transgenic model is a means of testing the molecular consequences of a particular genetic alteration, but the mimicking of disease may be limited. Limitations of models have been pointed out for virtually all animal models that have been studied (Shanks et al. 2009). Finally, one can argue that mice (or indeed any experimental animal) may not adequately model human diseases.

Similar limitations are present in cellular models. Any in vitro experiment is limited by observable endpoints and sensitivity of assays. Despite these limitations, valuable insights can be generated from such experiments, if these findings are not extrapolated beyond the context of the model and experimental setup.

Dose rate effects at molecular and cellular level

The studies listed in Tables 1, 2, 3, 4 have been selected from the literature to draw some conclusions about radiation biology studies and are explained in some detail below.

Gene expression, protein modification, and cell cycle effects

There have been several studies that have examined gene and protein expression in animals prone to particular conditions (either genetically engineered or having background genetic mutations) using LDR exposures (Ina and Sakai 2005; Ebrahimian et al. 2018a; Mathias et al. 2015; Ishida et al. 2010). These all showed differences in gene expression patterns between LDR and HDR exposed mice and differences in lymphocyte activation and cytokine expression. A tissue-specific response has been identified among tissues linked to the difference in DNA damage repair processes (Taki et al. 2009). Changes in cell cycle progression have also been reported to show dose rate effects with increases in survival, accumulation of cells in G2 phase following LDR, and delays of DNA synthesis (Matsuya et al. 2018, 2017).

In addition to mice, other animals and cultured cells have been analysed for gene expression and protein modifications after LDR exposures (see Table 1).

Alterations in several genes related to ribosomal proteins, membrane transport, respiration, and antioxidant regulation for increased reactive oxygen species (ROS) removal were also observed in experiments carried out inside DUL on mammalian cell cultures and organisms (Smith et al. 1994; Fratini et al. 2015; Van Voorhies et al. 2020; Liu et al. 2020b; Zarubin et al. 2021; Castillo et al. 2015). In a recent paper, Fischietti et al. (2020) reported that pKZ1 A11 mouse hybridoma cells growing underground at the LNGS display a qualitatively different response to stress; induced by over-growth with respect to the external reference laboratory. Analysis of proteins known to be implicated in the cell stress response has shown that after 96 h of growth, the cell culture kept in the external laboratory shows an increase in PARP1 cleavage, an early marker of apoptosis, while the cells grown underground present a switch from apoptosis toward autophagy, which appears to be mediated by p53. This behaviour is not affected by a further reduction of the gamma radiation dose by shielding. Interestingly, this effect reverted when, after 4 weeks of underground culture, cells were moved to the reference radiation environment for 2 more weeks, indicating a plasticity of cells in their response to the low-radiation environment. Transcriptomic and methylation analysis are presently underway to understand the genetic and epigenetic bases of the observed effects. Of crucial importance is also trying to identify the component(s) of the radiation spectrum triggering the biological response.

Overall, the data suggest that biological systems are very good sensors of changes in environmental radiation exposure, in particular regarding dose rate effects, and also support the hypothesis that environmental radiation contributes to the development and maintenance of defence response in cells and cultured organisms. Nevertheless, it should be noted that extrapolation from experimental cell or animal models to humans is very challenging, because it depends upon many parameters, including the model, endpoints, and radiation exposure type. More work is needed to determine which models are best for certain human endpoints.

Mutation

Early studies were done by William and Leanne Russell at Oak Ridge National Laboratory in the 1960s examining the development of coat colour mutations in mice following exposure to gamma rays (Russell 1963, 1965; Russell et al. 1958). This work is now considered classic and helped establish that LDR exposure (8 mGy/min or less) induced fewer hereditary mutations in mice compared to the same dose administered at HDR. Later, this work was confirmed by Lyon et al. (1979) and Favor et al. (1987).

In contrast, an inverse dose rate effect for survival was originally observed initially in both S3HeLa and V79 cells in culture (Mitchell et al. 1979). This initial work was expanded to include experiments on mutation induction by LDR carried out in the 1990s. Among them, the work of Amundson and Chen (1996) reported an inverse dose rate effect in syngeneic human TK6 and p53-deficient WTK1 lymphoblastoid cell lines exposed to continuous LDR γ-irradiation. These data have been interpreted on the basis of the assumption that at low-dose rates, cell cycling can cause mutated cells to progress to resistant phases before they are killed, resulting in previously resistant surviving cells progressing to a sensitive part of the cycle, where they can undergo mutagenesis (Brenner et al. 1996). Different results have been obtained by Furuno-Fukushi et al. (1996), who using WIL2-NS human lymphoblasts did not find an inverse dose rate effect.

The studies cited above, along with other published data on HPRT mutation in various rodent and mammalian cells, were re-analysed by Vilenchik and Knudson (2000). They showed that for both somatic and germ-line mutations, there is an opposite, inverse dose rate effect, with reduction from low to very low-dose rate, the overall dependence of induced mutations being parabolically related to dose rate, with a minimum in the range of 0.1 to 1.0 cGy/min (60 to 600 mGy/h). They suggested that this general pattern could be attributed to an optimal induction of error-free DNA repair in a dose rate region of minimal mutability. This study also predicts on a quantitative level that induction of DNA repair and/or antioxidant enzymes by radiation depends not only on the level, but also on the rate of production, of certain DNA lesions and ROS, with an optimal response to an increase of 10–100% above the “spontaneous’’ background rates.

In human telomere reverse transcriptase (TERT)-immortalised fibroblast cells obtained from normal individuals, Nakamura et al. (2005) demonstrated that the genetic effects (HPRT mutation induction and size of the deletions induced) of low-dose rate radiation were much lower in nonproliferating human cells than those seen after high-dose rate irradiation, suggesting that LDR radiation-induced damage was repaired efficiently and correctly with a system that was relatively error-free compared to that repairing damage caused by HDR irradiation.

Koana et al. (2007), investigated mutation induction in Drosophila spermatocytes after low and high X-ray doses delivered at two different dose rates (0.05 Gy min and 0.5 Gy/min). They obtained evidence of error-free DNA repair functions activated by low dose of low-dose-rate radiation (0.2 Gy; 0.05 Gy/min) able to repair spontaneous DNA damage (detectable in the sham sample). This was not observed at the higher dose rate. After a high-dose exposure (10 Gy), a significant increase in the mutation frequency with respect to the sham-irradiated group was observed, independently on the dose rate (0.5 Gy/min or 0.05 mGy/min). The authors proposed the presence at low-dose rate of a threshold between 0.2 and 10 Gy below which no increase in mutation frequency is detected.

Mutation experiments have also been carried out at the LNGS underground laboratory. The first evidence was obtained in yeasts, which showed a high frequency of recombination when grown underground as compared to above ground (Satta et al. 1995). Afterwards, using Chinese hamster V79 lung cells, an increased mutation frequency at the hprt locus was observed before (spontaneous level) and after irradiation with challenging X-ray doses in cultures kept for 10 months underground compared to those kept above ground (Satta et al. 2002), suggesting more damage at a very low-dose-rate exposure. Further long-term experiments provided evidence against mutant selection and in favour of the involvement of epigenetic regulation in the observed increase of spontaneous hprt mutation frequency after 10 months of growth underground and other 6 months above ground (Fratini et al. 2015). Biochemical measurements of antioxidant enzymatic activity have shown that cells maintained in the presence of “reference” background radiation are more efficient in removing ROS than those cultured in the underground environment.

A summary of the experiments described here can be found in Table 2.

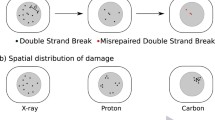

DNA and chromosomal damages

The dose rate effect on chromosomal aberrations (CA) after ex vivo blood exposure is well known, since Scott et al. (1970) reported fewer chromosomal aberration yield when the dose rate decreases. More recent data (Bhat and Rao 2003b) have confirmed the linear-quadratic response for chromosomal damage induction (micronuclei) after acute (high does rate) exposure (178.2 Gy/h) and the trend to a linearity when the dose rate decreases to reach a linear dose response for the lower dose rate (125 mGy/h).

However, the in vitro studies used to establish this dose rate effect have mainly been performed using a dose rate of the order of Gy/min, which is much higher than that received in the environment or by workers, and is more in the area of high- and medium-dose rate as defined by UNSCEAR.

In vitro experiments have shown an increase in radiation-induced micronuclei frequency (2 Gy challenging dose) in TK6 lymphoblasts after six months of continuous growth in reduced environmental radiation background at the LNGS underground laboratory as compared to the external reference laboratory at the ISS (Carbone et al. 2009).

In vivo experimental studies have measured dicentrics and translocations produced in mice after much lower dose rates starting from 1 mGy/day. One of them compares induction of chromosomal damage after exposure to ~ 1 mGy/day, ~ 20 mGy/day, ~ 400 mGy/day (16.7 mGy/h) with 890 mGy/min (53,400 mGy/h) as an acute group; cumulative doses ranged from 125 mGy to 8 Gy. The dose rate effect on both types of CAs was confirmed and a dose rate effect was even measured when comparing translocations and dicentrics induced after 20 mGy/day and 1 mGy/day exposure but also with a higher translocations yield after 1 mGy/d exposure compared to the control group (Tanaka et al. 2013, 2014). This was also confirmed in another study (Sorensen et al. 2000) comparing 50 mGy/day with 200 mGy/day and 400 mGy/day (duration of exposure up to 90 days with cumulative doses up to 3.6 Gy). No difference among the chronically exposed group was identified but again a difference from the acute exposed group was detected.

The main limitation of both studies is that cumulative doses and/or duration of exposures are different among the groups. When the analysis was restricted to doses more compatible with what could be received in the whole exposure time of an individual (between 0.3 and 1 Gy), then the difference in dose rate was not so important and, consequently, it is very difficult to draw any conclusions on whether there is or not a dose rate effect.

Some DDREFs have been derived from the above studies based on the modelling of dose rate relationship without excluding the higher doses which drives the beta coefficient of the curves. Based on Tanaka et al.’s (2013) data sets, the DDEF values calculated ranged from 2.3 (translocation for 100 mGy) to 17.8 (dicentrics for 1000 mGy).

Other in vivo studies do not find a dose rate effect. No significant dose rate effect for micronuclei induction frequency across the dose range has been observed as examined by Turner et al. (2015) in spite of approximately 300 times difference between the two dose rates compared of 1.03 Gy/min and 186 mGy/h, but these dose rates are much higher than those used in Tanaka et al. (2013) and close to the in vitro studies.

A summary of selected experiments can be found in Table 3.

Epigenetics and ageing

Epigenetics is the study of the mitotically and/or meiotically heritable changes in gene activity and transcript architecture, including splicing variation, that cannot be explained solely by changes in DNA sequence. Epigenetic alterations include DNA methylation, chromatin remodelling, histones’ modifications, and microRNA-regulated transcriptional silencing. Their impact appears to be greater with low-dose rates than acute exposure. Genetic and epigenetic mechanisms appear to have their common origin in the radiation-induced ROS and/or reactive nitrogen species. Both mechanisms contribute to the complex response to radiation exposure and underlie non-linear phenomena (e.g., adaptive responses), particularly relevant at low doses/LDR (Vaiserman 2011; Schofield and Kondratowicz 2018; Belli and Tabocchini 2020).

Kovalchuk et al. reported different patterns of radiation-induced global genome DNA methylation in C57/Bl mice after whole-body exposure to 50 mGy/day over a period of 10 days or an acute X-ray irradiation of 500 mGy. This was found in the liver and muscle of exposed male and female mice, with hypomethylation induced in the muscle of both males and females, but not in the liver tissue. Sex- and tissue-specific differences in methylation of the p16INKa promoter were also observed (Kovalchuk et al. 2004). A role of DNA hypermethylation was suggested to be involved in adaptive response induced by long-term exposure to low-dose γ-irradiation of human B lymphoblast cells. A novel mechanism of radiation-induced adaptive response was proposed involving the global genomic DNA methylation which is crucial for cell proliferation, gene expression, and maintenance of genome stability, but also important for maintenance of chromatin structure and regulation of cellular radiation response (Ye et al. 2013).

Other laboratory and field studies have demonstrated changes in overall DNA methylation and trans-generational effects in organisms, including C. elegans and zebrafish, exposed chronically to ionising radiation (Kamstra et al. 2018; Horemans et al. 2019).

Post-translational modifications on histone proteins controlling the organisation of chromatin and hence transcriptional responses that ultimately affect the phenotype have been observed in fish (zebrafish and Atlantic salmon). Results from selected loci suggest that ionising radiation can affect chromatin structure and organisation in a dose rate-dependent manner, and that these changes can be detected in F1 offspring, but not in subsequent generations (Lindeman et al. 2019).

A peculiar aspect of low dose/LDR exposure is that related to the ionising radiation background. Experiments carried out in DULs using cultured cells or organisms suggest that very low levels of chronic exposure, such as the natural background, may trigger a defence response without genetic change, therefore mediated by epigenetic mechanisms (Fratini et al. 2015; Morciano et al. 2018a, 2018b). This explanation is consistent with the hypothesis of the epigenetic origin of responses such as adaptive response and non-targeted effects.

Chronic radiation exposure of primary human cells to gamma-radiation between 6 and 20 mGy/h over 7 days has been demonstrated to reduce histone levels in a dose rate-dependent manner (Lowe et al. 2020). This is linked to the induction of senescence, which is a key cellular outcome of LDR radiation exposure (Loseva et al. 2014). Since senescence is linked to many age-related pathologies, including cardiovascular disease, the increase of senescent cells with a tissue following chronic radiation exposure would be expected to cause premature ageing. However, there is contradictory evidence. First, some animal experiments have shown (albeit rarely) that lifespan has been extended by chronic radiation exposure, albeit at much lower dose rates than these in vitro experiments. Second, the development of an epigenetic clock to measure biological age using changes in DNA methylation (Horvath 2013) has demonstrated that cells cultured while being exposed to dose rates between 1 mGy/h and 50 mGy/h do not show any difference in epigenetic age (Kabacik et al. 2022).

Studies specifically showing dose rate dependence of epigenetic effects are summarised in Table 4.

Discussion

Dose rate effects are evident when examining gene expression and protein modifications; nevertheless, a comparison of such studies demonstrates that there are broad differences in gene and protein expression depending upon cell type, radiation conditions, culture conditions, and others. This suggests that the endpoints of gene/protein expression may be sensitive markers of radiation effects, but that they are influenced by many factors making broad application of the results difficult. In addition, most changes are observed shortly after exposure and cannot necessarily be linked to adverse health effects among humans. Similarly, no clear response can be highlighted from epigenetic studies. In vitro and in vivo studies have investigated the dose rate effect on mutations, allowing meta-analyses to be conducted, which broadly support an inverse dose rate response.

The study of LDR with in vitro models is limited as such models can only be exposed for durations from minutes to weeks and late endpoints might be affected by too many parameters. The impact of dose rate generally observed shortly after exposure might not be reflected on later endpoints.

Conclusions from dose rate effects at molecular and cellular level

For chromosomal aberrations, a dose rate effect is well described but only clear for cumulative doses over 0.5 Gy when an increase in aberrations is observed. An inverse dose rate effect has been reported consistently for limited endpoints including mutations and cell survival.

Overall, evidence from studies at cellular and molecular level suggests potential positive cellular effects and minimal adverse genetic effects at low-radiation dose rates, as long as a total cumulative dose remains low.

Dose rate effects on lifespan, cancer, and non-cancer endpoints

Many endpoints are impossible to study in vitro; therefore, it is necessary to use animal models to observe specific end points and systematic effects. Here, we describe radiation dose rate and its effects on lifespan, cancer, and non-cancer endpoints. Again, key studies we have considered are summarised in Table 5.

Lifespan and cancer-related end points

The development of a meta-analysis of animals from large-scale databases permitted a reassessment of the DDREF as had been reported by the BEIR VII Committee in the US (Haley et al. 2015). It determined that the values used were based on the use of low doses without direct comparisons of dose rate, so were considered inaccurate. These studies used lifespan as an endpoint. More recent comparisons used rodents in a large-scale multi-year single study that were exposed to protracted vs acute exposures. Considering cancer mortality, the authors concluded that the ratio of HDR to LDR (< 5 mGy/h) gamma dose–response slopes, for many tumour sites was in the range 1.2–2.3, albeit not statistically significantly elevated from one (Tran and Little 2017). These studies used non-cancer and cancer causes of death in their determinations. Based on the work of Tanaka et al. and Zander et al. (see Table 5), animals exposed to LDR lived longer cancer-free than similar mice exposed to the same dose at HDR. Causes of death were similar for control and gamma-exposed animals, although the time to expression of cancer in these animals was more rapid in the gamma-exposed animals than in the controls (Zander et al. 2020). Interestingly, animals sham-irradiated with 120 fractions (i.e., taken to the chamber but not irradiated) had a significant increase in lymphoma incidence over other sham-irradiated animals (i.e., fewer trips to the chamber), and also when compared to non-sham-irradiated animals; this suggests that controls must be carefully considered and any radiation effect may be minimal compared to such environmental factors. Animals exposed to 120 fractions of radiation were not included in this analysis. They had an apparently a lower incidence than the sham-irradiated, but more work is needed to understand this. This study highlights the necessity to have suitable control groups. LDR studies with large numbers of animals were also performed at the IES facility in Aomori Prefecture in Japan. A comparison of males revealed that mice exposed to LDR (0.4 Gy over 400 fractions for 22 h per day, 1.1 mGy/day) had similar causes of death as animals that received high-dose-rate exposures (8 Gy over 400 fractions for 22 h per day, 21 mGy/d) (Tanaka et al. 2007, 2017; Braga-Tanaka et al. 2018a). Female mice, on the other hand, had some dose rate-specific differences noted in the digestive system and circulatory system, which were higher in the animals receiving the higher dose rate than those exposed to a lower dose rate. A comparison of their studies to those by Zander et al. (2020) revealed remarkable similarities in both sexes except in digestive system, respiratory system, and non-neoplastic endpoints. It is possible that differences in ventilation, bedding, and diet could have contributed to these differences.

Studies carried out on flies in parallel above ground (at the reference laboratory at L’Aquila University) and below ground (at the LNGS underground laboratory) have shown that the maintenance in extremely low-radiation environment prolongs the life span, limits the reproductive capacity of both male and female flies, and affects the response to genotoxic stress. These effects were observed as early as after one generation time (10–15 days) and are retained in a trans-generational manner (at least for two more generations) (Morciano et al. 2018a). It is interesting to note that organisms well known to be radioresistant can sense such small changes in the environmental radiation.

Developmental and morphometric endpoints were also investigated in DULs. Data so far obtained on lake whitefish embryos have shown a significant increase in body length and body weight of up to 10% in embryos reared underground, suggesting that incubating embryos inside the SNOLAB can have a subtle yet significant effect on embryonic growth and development (Thome et al. 2017; Pirkkanen et al. 2021). Experiments were also performed using the nematode Caenorhabditis elegans at WIPP have shown that worms growing in the below normal radiation environment had faster rates of larval growth and earlier egg laying; furthermore, more than 100 genes were differentially regulated, compared to normal background radiation levels (Van Voorhies et al. 2020).

Based on these studies, it is clear that at least some examined dose rate effects are evident at the whole organism level.

Non-cancer endpoints: inflammation and other systemic effects

The influence of LDR exposures on inflammatory responses was studied using two different animal models: ApoE−/− mice that develop atherosclerosis at a high frequency (Mitchel et al. 2011, 2013; Mathias et al. 2015; Ebrahimian et al. 2018b) and MRL-lpr/lpr mice (Ina and Sakai 2005) that develop a systemic lupus erythematosus-like syndrome. While one can argue that both mouse models have only a moderate relationship to human disease, the effects of radiation exposures particularly at low doses were interesting. In all cases, exposure of animals to LDR radiation exposure demonstrated enhanced life expectancy, in most cases accompanied by either a reduction in pro-inflammatory responses (Mathias et al. 2015) or by an enhanced expression of anti-inflammatory effects (Ebrahimian et al. 2018b). These were evident at lower dose rates but not high-dose rates when they were compared within the study. The protective effects of LDR exposures were not dependent on p53 (Mitchel et al. 2013). Taken together, these results suggest that LDR radiation can inhibit inflammatory responses under the appropriate conditions.

Non-cancer endpoints: cataract

Acute exposure to ionising radiation has provided clear evidence of an increased incidence of cataract. However, limited studies have been carried out specifically to address the effect of dose rate on radiation-induced cataract. The most comprehensive study to date (Barnard et al. 2019) exposed C57BL/6 mice to gamma-radiation at 0.84, 3.7, or 18 Gy/h, and found an inverse dose rate response in cataract formation in the lens of the eye. This supports previous epidemiological evidence as reviewed in Hamada et al. (2016).

Discussion

In addition to studies described here, there are other non-cancer effects of ionising radiation, particularly cardiovascular disease, that have been well studied using acute radiation exposure. However, specific experiments to establish the effect, if any, of dose rate have yet to be addressed.

Animal research is always dependent on control studies, ensuring that sham-irradiated animals are appropriately tested and that accurately matched controls are being examined. Numerous and extensive studies have documented the impact of the mouse strain on results, since strain-specific differences in pathology (particularly cancer type) and even radiation sensitivity have been noted in the literature (Reinhard et al. 1954; Lindsay et al. 2007). Cross-comparisons of animals from one study to another may be limited by these concerns. In addition, long-term low-dose experiments often require very large animal populations to identify significance of potentially small effects. In addition, LDR studies require not only large numbers of animals but also housing of animals sometimes for years to reach cancer and lifespan endpoints.

Despite these limitations, animal studies have the advantage of examining the total body experience, keeping cells in the context of the tissue, including immune, circulatory, and other systems of the body. This allows for studies on multiple impacts on endpoints and not just single-cell impacts examined in cells in culture. The ability to manipulate specific genes through transgenic mice provides a mechanism by which one can examine the impact of under- or over-expression of these genes. Animal studies also have the advantage (over human epidemiologic work) of having carefully controlled conditions to allow for the best assessment of radiation effects.

Conclusions from animal studies

There have been several large-scale animal studies examining dose rate effects. In general, animals exposed to the same dose of radiation at LDR survived longer than those exposed HDR. In addition, the major cause of death in these animals was cancer induction (Tran and Little 2017), although the type of cancer differed in different mouse strains. Studies of inflammatory responses suggest that LDR radiation exposure may inhibit inflammation under appropriate conditions, which, along with an adaptive response, could explain the extended lifespan seen at low-dose rates. Cataract induction (much like results shown for mutations in cellular studies) points to the existence of an inverse dose rate effect.

While radiation exposure has been shown to modulate cancer induction differently in male and female mice (with certain cancers predominating in each sex depending in part on mouse strain), there were few dose rate-specific differences observed in cancer induction between the two sexes. Some non-cancer endpoints, such as digestive system disorders and respiratory disorders, were shown to have sex-specific differences with LDR exposure.

Dose rate effects in human populations

Cancer risk epidemiology

To date, most epidemiological studies have focused on risk of cancer after exposure to ionising radiation. These studies of exposure to ionising radiation have included persons who have experienced a wide range of doses received at a wide range of dose rates (McLean et al. 2017; Kamiya et al. 2015). On the one hand, there are the Japanese survivors of the atomic bombings of Hiroshima and Nagasaki and patients treated with radiotherapy, who received a range of doses at an HDR, and on the other hand, there is the general population chronically exposed to a range of LDR of terrestrial gamma and cosmic background radiation. In addition, there are other groups, such as patients undergoing exposure to radiation for medical diagnostic purposes and workers who have experienced a series of low-level exposures in their workplaces.

A-bomb survivors

The Japanese atomic-bomb survivors are usually adopted as the reference group for HDR exposures, because the Life Span Study (LSS) cohort has been the subject of careful study and there is little ambiguity in considering a group that has experienced an excess risk of cancer as a result of receiving moderate-to-high doses during a brief exposure to radiation of a few seconds.

More specifically, because of the atomic-bomb explosions over Hiroshima and Nagasaki, radiation exposures of the inhabitants of both cities were due to prompt and delayed radiation, primary and secondary radiation, and gamma and neutron radiation. At a distance of 1000 m from the hypocentre in Hiroshima, for example, the highest contribution to kerma free-in-air (2.77 Gy) was from delayed gamma radiation (gamma radiation produced by the decay of fission products in the rising fireball), which lasted for about 10 s and, consequently, resulted in a dose rate of 0.277 Gy/s. The second highest contribution to kerma free-in-air (1.38 Gy) was from prompt secondary gamma radiation (from prompt neutrons produced during the explosion that resulted in additional gamma radiation when they were transported through the atmosphere and interacted with air and soil), which lasted for about 0.2 s and, consequently, resulted in a dose rate of about 6.9 Gy/s. The third highest contribution to kerma free-in-air (0.24 Gy) was from prompt neutrons (which were produced during the explosion and transported through the atmosphere to the ground), which lasted for only about 10 µs and, consequently, resulted in an HDR of 2.4 × 104 Gy/s. Finally, the fourth highest contribution to kerma free-in-air (0.07 Gy) was from prompt primary gamma radiation (which was produced during the explosion), which lasted for only about 1 µs and, consequently, resulted in a HDR of 7 × 104 Gy/s. Table 6 summarises these dose and dose rate contributions for distances from the hypocentre of 1000 and 2000 m. Similar values for kerma free-in-air hold for Nagasaki. Kerma is calculated here as sum of the kerma from gamma radiation and neutron radiation; for details, see Rühm et al. (2018).

Furthermore, the survivors experienced an exposure that was effectively a uniform whole-body exposure to gamma radiation (although there was a generally small component of exposure to high-LET neutrons that needs to be borne in mind), so that all organs/tissues were exposed at doses that are approximately equal (although smaller for organs/tissues that are deeper within the body).

Finally, a survivor located at 1000 m distance from the hypocentre at time of bombing had experienced a mean dose rate of 2.4 × 103 Gy/s (8.6 × 109 mGy/h) if the dose rates of the four components given in Table 6 were weighted by their corresponding free-in-air kerma values. Similarly, a survivor at 2000 m had experienced a mean dose rate of 5.2 × 101 Gy/s (1.9 × 108 mGy/h). If the contribution from prompt neutrons is multiplied by a factor 10 to account for an increased relative biological effectiveness of neutrons as compared to gamma radiation, these mean dose rates translate to 8.8 × 104 Gy/s (3.2 × 1011 mGy/h) and 6.9 × 101 Gy/s (2.5 × 108 mGy/h) at 1000 m and 2000 m, respectively.

Medically exposed cohorts

Medical exposures for diagnostic purposes involve doses that are much lower than the (usually localised) doses received during radiotherapy. While the doses received from discrete external exposure radio-imaging procedures are likely to be low, a series of diagnostic exposures, such as computed tomography (CT) scans, could produce cumulative doses that are > 100 mGy (Rehani et al. 2019). It is important to consider that the highest doses may be confined to tissues that are in the vicinity of that part of the body under scrutiny, and the individual exposures could be temporally separated by periods of days. Nonetheless, dose rates during exposure are likely to be moderate-to-high. This potential mix of low dose and HDR effects could lead to difficulties of interpretation, because the two effects described by the two factors, LDEF and DREF, cannot be distinguished.

Considering that the typical tissue dose received during a CT-scan is about 10 mGy and although an examination lasts between 5 and 20 s or so, the vast majority of the dose is delivered as while passing through the ring (under the direct beam), which usually takes less than 1 s. The dose rate is therefore of the order of 10 to 20 mGy/s. Obviously, precautions should be taken as this estimated dose rate is variable depending upon the patient's corpulence, the location of the organ/tissue, and, of course, the scanner settings (current, tube voltage and rotation speed of the X-ray tube, table movement speed, collimation, and filtration) among other considerations.

Studies of those being treated with radiotherapy pose rather more problems of interpretation, because the exposure is generally more localised and being used to treat diseased tissue. This results in high doses to tissues where the radiation is being directed and a gradient of doses to normal tissue away from the focus of treatment, producing a range of doses to healthy tissues being exposed, mainly from scattered radiation.

A classic radiotherapy treatment corresponds to a dose of 2 Gy per fraction (perhaps 20 or more fractions) localised as much as possible to the tumour, with a treatment duration typically around a few minutes depending on the treatment technique. The mean dose rates delivered by the linear accelerators used for radiotherapy treatment are limited to 6 Gy/min and can reach dose rates of up to 24 Gy/min for flattening filter free photon beam. Also, some recent techniques in development of FLASH radiotherapy produce dose rates that are still higher, at mean dose rates in excess > of 40 Gy/s. Flash radiotherapy is based on a series of very short pulses (with a duration of a few microseconds) delivered over a total duration of some milliseconds. Therefore, within one of these pulses, the dose rate can reach extreme values of several 105 Gy/s (Esplen et al. 2020). The competing effects of cell killing at high doses and HDR will depress the risk per unit dose of cancer, which is why comparisons between effects in patients receiving high-level exposure as therapy and those in groups exposed at lower levels need to be conducted with considerable care. A further complication is that the disease being treated with radiotherapy and other therapies in the treatment regimen (chemotherapy, for example) could affect the risk posed by radiation exposure.

Long-term health effects of radiotherapy have been demonstrated for both cancer (Berrington de Gonzalez et al. 2013) and non-cancer diseases, especially diseases of the circulatory system (Little 2016). For lower doses associated with medical exposure, induction of DNA damage by a CT-scan examination has been demonstrated (Jánošíková et al. 2019). Several epidemiological studies investigated the effects of radiation exposure due to CT scans in childhood. Even if the estimated risks are influenced by potential biases and are associated with large uncertainties, accumulated results show that CT exposure in childhood appears to be associated with increased risk of (at least, certain types of) cancer (Abalo et al. 2021). Nevertheless, all these results derived from medical studies relate to radiation exposures at high- or very-high-dose rate.

Occupationally and environmentally exposed cohorts

Many studies have been published dealing with exposure of various groups of individuals to low-dose rates of ionising radiation. Among these are occupationally exposed cohorts such as, for example, air crew (Hammer et al. 2014), Western nuclear workers (Leuraud et al. 2015; Richardson et al. 2015), Russian Mayak workers (Sokolnikov et al. 2015, 2017; Kuznetsova et al. 2016), Chernobyl emergency workers (Ivanov et al. 2020a), and others (Shore et al. 2017). Table 7 summarises the typical cumulative doses and dose rates for these cohorts. Groups of individuals exposed to high natural background radiation have also been investigated, especially in Kerala, India (Nair et al. 2009; Jayalekshmi et al. 2021), and Yangjiang, China (Tao et al. 2012), as well as those exposed to man-made contaminations, such as the Techa River population in the Southern Urals of Russia (Krestinina et al. 2013; Davis et al. 2015) and the inhabitants of buildings containing 60Co contaminated steel in Taiwan (Hsieh et al. 2017).

Occupational exposures are predominantly received at an LDR, albeit that cumulative doses can be moderate or even high, but consisting of a series of many discreet, small doses received over a working lifetime (Wakeford 2021). Of particular importance are the studies of the workers at the Mayak nuclear complex in Russia and the International Nuclear Workers Study (INWORKS). INWORKS is an international collaborative study of mortality in nuclear workers from the UK, France, and five sites in the USA (Leuraud et al. 2015; Richardson et al. 2015). These are powerful studies involving large numbers of workers, some of whom have accumulated moderate-to-high doses, and the findings of these studies can offer substantial information on dose rate effects when compared with those of the Japanese atomic-bomb survivors.

Shore et al. (2017) made a detailed examination of the excess relative risk (ERR) per unit dose (ERR/Gy) reported by LDR studies (mainly occupational) for solid cancer (all cancers excluding leukaemia, lymphoma, and multiple myeloma) in comparison with the ERR/Gy found in equivalent analyses of the LSS cohort of the Japanese atomic-bomb survivors. Although the results of the Mayak workforce provided support for a DREF of 2, the other occupational studies did not indicate that a reduction in ERR/Gy to account for lower dose rates was required. In particular, the ERR/Gy estimate for INWORKS was compatible with a DREF of 1. When excluding studies with mean doses above 100 mSv (therefore excluding the Mayak worker cohort and the Kerala study), then the estimated DREF was compatible with a value of 1 (Shore et al. 2017).

Recently, Leuraud et al. (2021) made a detailed comparison of the ERR/Gy estimate for solid cancer obtained from INWORKS and from the LSS, selecting subgroups from these studies that were as closely aligned as possible. The ERR/Gy estimates for INWORKS and the LSS were very close, confirming that INWORKS offers little support for any reduction in ERR/Gy for solid cancer derived from the Japanese atomic-bomb survivors when applied to LDR exposures. However, the potential influence of baseline cancer risk factors upon radiation-related risks must be borne in mind when making such comparisons (Wakeford 2021).

Preston et al. (2017) conducted a similar exercise for Mayak workers, comparing the ERR/Gy for mortality from solid cancers excluding lung, liver, and bone cancers (the cancers expected to be associated with plutonium deposition) in the Mayak workforce with that obtained from the LSS cohort members exposed as adults. The ratio of the Mayak and LSS risk estimates pointed to a DREF of 2–3. Similar conclusions were reached by Hoel (2018).

Another recent synthesis considered cancer in epidemiological studies with mean cumulative doses below 100 mGy; therefore excluding, for instance, the Mayak worker cohort and the Kerala natural background radiation cohort (Hauptmann et al. 2020). When focusing on adulthood exposure, the meta-analysis included only LDR studies. The meta-analysis of these studies produced an ERR at 100 mGy of 0.029 (95% CI 0.011 to 0.047) for solid cancers (based on 13 LDR studies) and of 0.16 (95% CI 0.07 to 0.25) for leukaemia (based on 14 LDR studies). The authors concluded that these LDR epidemiological studies directly support excess cancer risks from low doses of ionising radiation, at a level compatible with risk estimates derived from the Japanese atomic-bomb survivors (Hauptmann et al. 2020).

A further strand of evidence on the DREF and DDREF comes from consideration of the findings of studies of those exposed to radiation in the environment. Foremost among these studies are those of residents of areas of high natural background gamma radiation in Yangjiang, China (Tao et al. 2012), and Kerala, India (Nair et al. 2009; Jayalekshmi et al. 2021), and of riverside communities along the Techa River, which was heavily contaminated by radioactive discharges from the Mayak installation in the late-1940s and 1950s (Davis et al. 2015; Krestinina et al. 2013). The Yangjiang and Kerala studies offer little evidence for an excess risk of solid cancer resulting from high natural background gamma radiation. In particular, the latest findings from Kerala (Jayalekshmi et al. 2021) suggest that the ERR/Gy for the incidence of all cancers excluding leukaemia following chronic exposure to LDR gamma radiation may be significantly less than that following acute exposure during the atomic bombings of Japan, although some criticisms have been expressed about the quality of the data used in the Kerala study (Hendry et al. 2009). Also, it is puzzling that the latest analysis of cancer incidence in Kerala (Jayalekshmi et al. 2021) includes 135 cases of leukaemia, but that no quantitative findings for leukaemia are presented.

In contrast, analysis of the Techa River data for mortality (Schonfeld et al. 2013), and incidence of (Davis et al. 2015), solid cancers provides evidence for an excess risk related to enhanced exposure to radiation as a result of radioactive contamination, but the ERR/Gy estimates are similar to those for the LSS and so do not indicate a DREF greater than 1. This conclusion is supported by the study of Preston et al. (2017), comparing solid cancer incidence and mortality in the Techa River and LSS cohorts, which found that ERR/Gy estimates for the two cohorts were very similar for both solid cancer incidence and mortality.

Contamination of construction steel with cobalt-60 in Taiwan in the early 1980s led to several thousand people being exposed at an LDR to elevated levels of gamma radiation over a period of about 10 years (Hsieh et al. 2017). In a cohort of exposed people, indications of excess incidence rates of leukaemia (excluding CLL) and solid cancers (particularly breast and lung cancers) that are related to estimated doses from 60Co have been reported (Hsieh et al. 2017), but the precision of risk estimates is insufficient to draw conclusions about an effect of dose rate.

Several large case–control studies have been conducted recently of childhood cancer, in particular childhood leukaemia, in relation to natural background radiation exposure. This interest principally arises because of the prediction of standard leukaemia risk models derived from LSS data that around 15–20% of childhood leukaemia cases in the UK might be caused by background radiation exposure (Wakeford 2004; Wakeford et al. 2009) and that sufficiently large case–control studies should be capable of detecting such an effect (Little et al. 2010). However, the results of large nationwide studies have been mixed and further work is required before reliable conclusions can be drawn (Mazzei-Abba et al. 2020).

A summary of the ERR/Gy estimates reported from main studies of occupational and environmental exposure to radiation at an LDR (and comparisons with the ERR/Gy estimates from the LSS, where available) is provided in Table 8. However, it must be borne in mind that differences in baseline cancer rates in these populations may affect the ERR/Gy estimates, as well as any effect of different dose rates (see discussion further below).

Dose rates due to cosmic radiation for astronauts

For completeness and comparison with other situations of human exposure discussed in this review, some typical traits from space exposure are given below. This is despite astronaut exposures being governed by mostly high-LET radiation.

Recently, the radiation dose on the surface of the moon was measured as part of the Chinese Chang’E 4 mission which landed on the moon on 3 January 2019. The mission included the Lunar Lander Neutrons and Dosimetry experiment, which provided a mean dose equivalent rate from galactic cosmic radiation (GCR) of 57.1 ± 10.6 µSv/h. For comparison, at the same time period, the dose equivalent rate onboard the International Space Station (ISS) was 731 µSv/d or about 30 µSv/h when averaging over the contributions from the GCR and from protons in the South Atlantic Anomaly (Zhang et al. 2020a).

As for Mars, data measured by the Mars Science Laboratory during a cruise to Mars indicate dose equivalent rates of about 1.8 mSv/d (75 µSv/h) (Zeitlin et al. 2013), while the Curiosity Rover measured dose equivalent rates of about 0.6 mSv/d (25 µSv/h) on the Mars surface (Hassler et al. 2014). Hence, a total mission to Mars (taking 180 d to Mars, 500 d on Mars, and another 180 d back to Earth) would roughly accumulate 1 Sv (Hassler et al. 2014).

During a large solar particle event, dose rates can be even higher, albeit only during a short period of time. Based on measurements of the Cosmic Ray Telescope for the Effects of Radiation (CRaTER), Schwadron and co-workers estimated the dose rates obtained by astronauts from solar energetic particles (SEPs). For the SEP event that occurred in September 2017, they found that during an extravehicular activity, an astronaut would have received a dose of 170 mGy ± 9 mGy in 3 h (average of 57 mGy/h). Extreme events could result in significantly higher dose rates (Schwadron et al. 2018). This compares to dose rates reported by Dyer et al. who estimated retrospectively that for a hypothetical Concorde flight in 1956 during the event on February 23, dose rates at an altitude of 17 km might have been as high as 0.5 mSv/h, which is about a 100 times higher than those at typical flight altitudes (Dyer et al. 2003).

Epidemiology for non-cancer endpoints

Evidence for increased risks of incidence and mortality from Diseases of the Circulatory System (DCS) and specific types of DSC (particularly ischaemic heart disease, myocardial infarction, and stroke) were observed in populations exposed to HDR, especially in patients treated with radiation therapy and survivors of atomic bombings at Hiroshima and Nagasaki (Shimizu et al. 2010; Darby et al. 2005; McGale et al. 2011) about 10–20 years ago. ICRP Publication 118 (Stewart et al. 2012) classified DCS as tissue reactions, with a suggested threshold due to acute and fractionated/prolonged exposures of 0.5 Gy (absorbed dose to the brain and blood vessels) for radiological protection purposes. In the last decades, several studies of populations exposed at LDR also demonstrated associations between cumulated dose and DCS risk, in the Mayak worker cohort (Azizova et al. 2018, 2015), in other groups of nuclear workers (Gillies et al. 2017a, b; Zhang et al. 2019; de Vocht et al. 2020), and Chernobyl liquidators (Kashcheev et al. 2016, 2017). However, uncertainties relating to the shape of the dose–response in the low-dose region are considerable, and there are broader issues concerning the interpretation of these epidemiological studies (Wakeford 2019). Up to now, available data do not allow a precise quantification of a potential modifying impact of dose rate on the dose–risk relationships.

Excess risks of posterior subcapsular and cortical lens opacities (cataract) at low-to-moderate doses and dose rates have also been reported in Chernobyl liquidators, US Radiologic Technologists and Russian Mayak nuclear workers (Little et al. 2021). Nevertheless, determination of a potential modifying impact of dose rate on the dose–risk relationship from these data is difficult to assess.

Discussion

Summary of results

At present, the results of epidemiological studies that relate to dose rate effects for human health outcomes following radiation exposure suggest a DREF in a range of 1 to 3. Of the large occupational studies, INWORKS points to no dose rate effect for solid cancer mortality after protracted exposure to LDR in the workplace. Conversely, the Mayak workers cohort provides some evidence of a lower ERR/Gy estimate than directly predicted by the LSS data, by a factor of around 2–3. The seemingly different conclusions on DREF reached from a comparison of the ERR/Gy estimates derived from the LSS with those from INWORKS and Mayak is an important issue that remains to be resolved (Wakeford 2021). Of environmental exposure studies, the Techa River residents provide some evidence for a raised ERR/Gy estimate for solid cancer (incidence and mortality) that is compatible with the LSS data, but with no indication of a lower ERR/Gy estimate, although the power to reveal a dose rate reduction factor of around 2 is limited. On the other hand, the Kerala study does not indicate a raised risk of solid cancer incidence from chronic exposure to raised levels of natural background gamma radiation, and this finding provides evidence of a lower ERR/Gy estimate for solid cancer than the equivalent estimate derived from the LSS (or that there is no increased risk from these levels of exposure). Interestingly, recent meta-analyses of data restricted to low cumulative doses (mean doses below 100 mSv) led to DREF estimates close to 1.

Limitation and advantages of low-dose-rate studies

Clearly, knowledge about the effect of dose rate improved substantially over the last 2 decades, thanks to new published results from populations exposed chronically to radiation. Nevertheless, at low doses, the expected risks are small, and difficult to demonstrate. There are still some limitations of those studies addressing low levels of exposure to radiation: accuracy of dose estimates (particularly when doses have had to be reconstructed from historical data), the quality of some cancer incidence data, lack of control of confounding factors (such as smoking), and for many studies, there is still limited statistical power to assess any dose rate effect. Issues, such as improved dosimetry and better control of confounding, must be addressed if the results of these studies are to be properly interpreted. Nonetheless, the construction of large studies such as INWORKS has notably improved the situation in recent years, and efforts to expand these studies to include more study subjects and extend follow-up will inevitably increase power. A systematic analysis of potential impact of biases (confounding and selection bias, sources of dose errors, loss of follow-up and outcome uncertainty, lack of study power, and model misspecification) concluded that the recent epidemiological results showing increased cancer risk at low doses were not likely to be due to methodological bias (Berrington de Gonzalez et al. 2013; Hauptmann et al. 2020). Differences in the relative biological effectiveness (RBE) of radiation between various exposure situations can also play a role in the observed differences—there is some evidence that low-energy photons (X-rays) are more effective than high-energy photons (gamma rays) at causing DNA damage relevant to stochastic effects (NCRP 2018). Also, it should be underlined that, with a few exceptions (Techa River cohort, Taiwanese contaminated dwellings), most of the available data relate to adulthood exposure, and so data on children are clearly lacking.

Excess relative risk versus excess absolute risk models

All results presented above have been obtained using excess relative risk models. Wakeford (2021) points out that not only ERR/Gy should be considered when comparing the results of studies of low-dose rates with those of the Japanese atomic-bomb survivors, but also Excess Absolute Risk per unit dose (EAR/Gy). Comparison of ERR/Gy implicitly assumes that it is valid to compare the proportional increase in risk per unit dose between different populations, which is correct if radiation interacts multiplicatively with those other risk factors that are largely responsible for generating baseline cancer rates, but if the baseline rates in the comparison populations differ and the interaction of radiation with other risks is sub-multiplicative, then the ERR/Gy estimates will differ as a consequence of the difference in the baseline rates. This is reflected in the way excess radiation-related risk is transferred from one population to another—in the ICRP system, for most cancers, a 50/50 mixture of ERR/Gy and EAR/Gy is assumed in the transfer of risk (ICRP 2007; Cléro et al. 2019; Zhang et al. 2020b), which is important when baseline rates differ, as they do between the LSS, Mayak and INWORKS cohorts. Consequently, a difference in ERR/Gy between cohorts may be due to a difference in dose rates to which the members were exposed, but it may also be due to a difference in baseline cancer rates if the interaction between radiation and other risk factors is sub-multiplicative, as is the assumption of ICRP for most types of cancer. Therefore, epidemiological findings in relation to dose rates must be interpreted with substantial caution, and should not depend solely upon comparisons of ERR/Gy when baseline cancer rates differ between the populations under study (Wakeford 2021). This point has also been highlighted in a recent UNSCEAR report, comparing the application of different models to specific exposure situations (UNSCEAR 2020).

Conclusions from epidemiological studies

At high-dose rates, such as people exposed to radiation from atomic bombs and therapeutic radiation, an increase in cancer incidence is clearly observed, particularly for leukaemia and also for some solid cancers. At low-dose rates, knowledge about cancer risks substantially improved over the last 2 decades. Recent epidemiological studies showed an increased risk of leukaemia and solid cancers, even if risk estimates are associated with large uncertainties (Rühm et al. 2022). A dose–risk relationship is clearly demonstrated for diseases of the circulatory diseases at high doses and high-dose rates. However, there are insufficient data at present to conclude if non-cancer effects are affected by dose rate.

Conclusions and future needs to understand radiation dose rate effects

Summary of results and conclusions

The present article presents a comprehensive assessment of radiation dose rate studies to date, including epidemiological studies, and in vitro and in vivo experimental studies.

Figure 1 illustrates the dose rates covered in the studies mentioned in this publication. Note the log scale of dose rates and therefore huge range of dose rates considered.

Representation of dose rate ranges (log scale in mGy/h) considered by the different studies presented separately for human, in vivo and in vitro studies. The range of external dose rates received in the general population is shown along with the average 2.4 mGy/h exposure rate worldwide (blue dashed line) (UNSCEAR 2000). LDR definition corresponds to 5 mGy/h and HDR to 0.05 Gy/min (solid red lines). For epidemiological studies, average dose rates in specific situations are shown and represent radiation exposure above background (white diamonds). In comparison, dose rates from the LSS (given in terms of free-in-air kerma) are large and of the order of 1.9 × 108 mGy/h–8.6 × 109 mGy/h, for atomic-bomb survivors located at 2000 m and 1000 m distance from the Hiroshima hypocentre at time of the incident, respectively (for details, see text). For medical exposure situations, average dose rates to the tumours have been considered for radiotherapy and to the area of the body explored for CT-scan. Note that for exposures of atomic-bomb survivors and patients due to diagnostic and therapeutic procedures, times of exposure are short and, therefore, dose rates given in terms of mGy/h may be misleading. For in vivo and in vitro studies, a range is shown that is representative of the dose rates used in selected publications discussed. Data from experiments carried out in Deep Underground Laboratories (DULs) are also reported (grey bars between 1 × 10–6 and 1 × 10–5 mGy/h)

Of importance is that no in vitro studies have been carried out at the dose rate range corresponding to nuclear worker level or LDR definition. Data from in vitro studies are all performed with dose rates higher than the UNSCEAR LDR definition (0.1 mGy/min or 5 mGy/h) or with extremely low-dose rates for experiments conducted in DUL facilities. This representation also highlights the fact that few data are available outside of epidemiology for dose-rate levels pertinent for radiological protection. At the upper end of the scale, the high-dose rate delivered by nuclear bombing is only partly covered by radiobiology studies. Environmental and occupational human exposures are all around or just above typical background levels, whereas medical exposures are above 1 Gy/h, albeit for short durations. Except for potential astronaut exposure (not included in Fig. 1, for details), there are practically no situations of human exposure in the range around 1–1000 mGy/h. In vivo studies currently have the largest representation of dose rate range.

In conclusion, dose rate effects have indeed been observed. The most compelling evidence comes from:

-

1.

In vivo experiments that generally show reduced inflammation at low-dose rates, unlike higher doses that generate a pro-inflammatory response, particularly at higher dose rates, which usually result in higher total doses. However, it is worth noting that these have typically been seen in models with already increased inflammation.

-

2.

In vivo experiments, very-low-dose rates have generally been shown to increase lifespan through a mechanism thought to be via adaptive response; an inverse dose rate response appears to exist for mutation and cataract formation.

-

3.

In vitro and in vivo experiments carried out below the natural background radiation, where inverse dose rate response has been observed for DNA-related end points (i.e., mutation and DNA/chromosome damage).

-

4.

Certain animal studies demonstrated increased cancer incidence with high-dose rates compared to exposures at low-dose rates when similar total doses were compared particularly when total doses exceeded 0.5 Gy.

-

5.

Changes have been observed in chromosome damage and gene expression with increased dose rates; however, these studies vary in their conclusions and are often difficult to distinguish dose rate from total dose effects.

-

6.

Epidemiological studies, which show no or little reduction of the dose–risk relationship at LDR compared to high-dose rates for cancers (results compatible with an absence of reduction or a reduction by a factor of about 2). There are currently insufficient data to conclude about an effect of dose rate on non-cancer risks.

Perspectives and recommendations

Considerations and requirements for in vitro and in vivo experiments to determine dose rate effects

Based on our experience and review of the literature, it was possible to highlight some recommendations for conducting dose rate experiments to provide informative data (Table 9).