Abstract

Psychologists are increasingly interested in whether treatment effects vary in randomized controlled trials. A number of tests have been proposed in the causal inference literature to test for such heterogeneity, which differ in the sample statistic they use (either using the variance terms of the experimental and control group, their empirical distribution functions, or specific quantiles), and in whether they make distributional assumptions or are based on a Fisher randomization procedure. In this manuscript, we present the results of a simulation study in which we examine the performance of the different tests while varying the amount of treatment effect heterogeneity, the type of underlying distribution, the sample size, and whether an additional covariate is considered. Altogether, our results suggest that researchers should use a randomization test to optimally control for type 1 errors. Furthermore, all tests studied are associated with low power in case of small and moderate samples even when the heterogeneity of the treatment effect is substantial. This suggests that current tests for treatment effect heterogeneity require much larger samples than those collected in current research.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

In the last decades, psychologists have conducted a large number of randomized controlled trials and observational studies to test the effectiveness of specific interventions, such as, for example, an educational support program or a psychotherapy (Kravitz et al., 2004). In almost all of these studies, the parameter of interest is the average treatment effect, a measure of the overall impact of treatment. However, researchers and practitioners know that treatment effects can be highly heterogeneous across study participants. For example, students with a low social status may benefit more from an educational support program than students with a higher social status, or certain patients may respond more to a specific treatment than other patients.

Knowing the variables that are responsible for the heterogeneity in treatment effects is very interesting from an applied perspective because this would allow to better tailor the results of randomized controlled trials to particular persons (e.g., to identify the right educational support program for a student or the right treatment for a patient; see Deaton & Cartwright, 2018, but also Cook, 2018). Hence, there is a growing interest in statistical approaches that allow to detect whether – and if so, why – treatment effects vary in randomized controlled trials. Specifically, multiple methods have been suggested for detecting how the treatment effect varies based on variables that are measured prior to treatment. Among these approaches are standard linear methods such as the moderated regression model (Cox, 1984), but also different machine learning methods such as causal forests (Athey et al., 2019), causal boosting (Powers, Qian, and et al., 2018), and various meta-learners (e.g., the S-Learner, the T-Learner, or the X-Learner, see Künzel et al., 2019; Salditt et al., 2023; Wendling et al., 2018).

However, in some settings, the relevant variables may not have been measured, so that these methods cannot be applied. Then, researchers may simply want to assess whether the average treatment effect observed in their randomized controlled trial varies across participants to such a degree that it is of substantive importance. If it does not, this would indicate that the treatment can be applied to all individuals. Conversely, if it does vary, this would indicate the need for further research to investigate which variables are driving the heterogeneity. A number of tests have been proposed to assess the null hypothesis of homogeneity of treatment effects in the causal inference literature to answer this question. Despite the increasing focus on heterogeneity of treatment effects, only some of these tests are known in psychology (see Kaiser et al., 2022 for a meta-analytic application of one of these tests), and to date there are no simulation studies that have examined and compared the performance of all of these tests in different settings.

In this article we aim to present the different tests suggested in the causality literature for detecting heterogeneous treatment effects. Furthermore, we conducted a simulation study to examine their performance subject to the amount of treatment effect heterogeneity, the sample size, and the inclusion of an additional covariate when performing the test. In the following, we first introduce the average treatment effect using the potential outcomes framework (Imbens & Rubin, 2015). We then describe the different test procedures to test the null hypothesis that the average treatment effect is constant across persons. Afterwards, we present the results of the simulation study, and then conclude with a discussion of the results and questions for future research.

Potential outcome framework and heterogeneous treatment effects

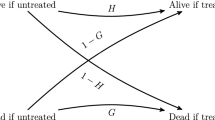

We use the potential outcome framework to define homogeneous and heterogeneous treatment effects (see Hernan & Robins, 2020; Imbens & Rubin, 2015; Rosenbaum, 2010 for introductions). To this end, let \(A_i\) be the binary treatment variable with 0 indicating that person i is in the control group and 1 that she is in the experimental group. The potential outcome corresponding to the outcome person i would have experienced had she received the treatment is denoted by \(Y_{i}(1)\) and the outcome had she been in the control condition is \(Y_{i}(0)\). The causal effect of the treatment for the ith person then is

The stable unit treatment value assumption (SUTVA, see Imbens & Rubin2015, 2015; Rosenbaum, 2002) entails that the observed outcome equals the potential outcome under the condition actually received:

Since a single person i can only be assigned to either the experimental or the control group, only \(Y_{i}(1)\) or \(Y_{i}(0)\) can be observed, such that it is impossible to observe \(\tau _{i}\) (see Holland, 1986). However, under certain additional assumptions, such as the assumption that the potential outcomes are independent from treatment (i.e., \(A_{i} \perp \!\!\!\!\perp \lbrace Y_{i}(0),Y_{i}(1) \rbrace \)), we can estimate the average of each potential outcome using the average of the observed outcome values in the experimental and control group, respectively:

This in turn allows to estimate the average of the individual treatment effects:

When the treatment effect is homogeneous across all persons, that is, \(\tau _{i} = \tau \) for all \(i = 1, \dots , n\), the average treatment effect equals the constant effect, \(\mathbb {E}[\tau _{i}] = \tau \). Thus, the treatment increases the mean difference between the experimental and control group by amount \(\tau \):

Tests for heterogeneous treatment effects

The goal of all test procedures suggested in the causal inference literature is to test the null hypothesis that the treatment effect is constant for all persons:

The proposed tests differ in the sample statistics they use and in whether they make distributional assumptions for the potential outcomes. Concerning sample statistics, the different tests use estimates of the variance parameters in the control and the experimental group, the empirical distribution functions of the two groups, or they compare a grid of quantiles estimated in the two groups. Concerning the distributional assumptions, some tests presume that the potential outcomes, and therefore the observed outcomes, are normally distributed, while other tests belong to the class of Fisher randomization tests (FRT) that do not rely on any distributional assumptions (Imbens & Rubin, 2015; Rosenbaum, 2002).

Comparing variance terms

There are several ways to implement a comparison of variance terms for testing treatment effect heterogeneity. All of these tests are based on the observation that when the treatment effect is constant across persons, it does not influence the variance of the potential outcomes:

where the first equality follows from Eq. 1 and the second uses the fact that the variance of a constant is zero. Under the assumptions introduced above, \(\mathbb {V}[Y_{i}(1)]\) and \(\mathbb {V}[Y_{i}(0)]\) equal the variance of the observed values in the experimental and the control group, respectively:

Variance ratio

Using Eq. 8, a potential test statistic to test for treatment effect heterogeneity consists of computing the ratio of the two estimates of the aforementioned variance terms:

When we assume that each potential outcome is normally distributed, \(T_{V}\) is F-distributed with degrees of freedom \(n_{1} - 1\) and \(n_{0} - 1\) and testing \(T_{V}\) equals the F-test for two variance terms (e.g., Casella & Berger, 2002). Alternatively, one can use a heterogeneous regression model in which the residual variance is modeled as a function of the treatment variable (Bloome & Schrage, 2021; Western & Bloome, 2009):

Here, the estimate \(\hat{\gamma }_1\) measures the difference between the variance of the two groups on a log-scale and a test statistic \(T_{\gamma }\) is obtained by dividing \(\hat{\gamma }_1\) by its standard error. The resulting test statistic can be used to test the presence of a heterogeneous treatment effect (Bloome & Schrage, 2021), and we will simply refer to this test as \(T_{\gamma }\) in the simulation study reported later. Importantly, \(\hat{\gamma }_1\) equals \(T_{V}\) after exponentiation, which is why we only consider \(T_{\gamma }\) and not the F-test for \(T_{V}\) as variance-ratio-based test that assumes normally distributed data, because the two tests yield the same decision concerning the null hypothesis.

A test that does not rely on such distributional assumptions can be obtained when using \(T_{V}\) in a Fisher randomization test (FRT, Imbens & Rubin, 2015; Rosenbaum, 2002; 2010). The basis of the FRT is that – given the aforementioned null hypothesis – the missing potential outcome values can be imputed assuming that we know the treatment effect. Thus, when person i belongs to the experimental group, her observed value is \(Y_i = Y_i(1)\) and the missing potential outcome is \(Y_i(0) = Y_i(1) - \tau = Y_i - \tau \). Alternatively, when person i is in the control group, her missing potential outcome is \(Y_i(1) = Y_i + \tau \).

Using this, the FRT constructs a sampling distribution of the test statistic (\(T_{V}\) in this case) by randomly permuting the observed values of the treatment variable. To illustrate, when the observed treatment values are 0, 1, 1, 0, 0, and 1 for person one to six, a randomly permuted treatment variable would be 1, 0, 0, 1, 1, and 0. The test statistic is then computed again using the group assignments in the permuted treatment variable. This process is repeated a large number of times (e.g., B = 1000) and the resulting distribution of the test statistic (e.g., the distribution of the \(T_{V}\) values) is used to obtain a p value by computing the relative frequency of the values of the test statistic that are greater than the observed test statistic:

where \(T_{\textrm{obs}}\) is the observed test statistic (i.e., \(T_{V}\) here) and \(T_j\) is the value of the test statistic in the jth randomization sample. As said above, the basis of the FRT is that given the null hypothesis of a constant treatment effect, the missing potential outcome values can be imputed assuming that one knows the true average treatment effect. In case of \(T_{V}\) the assumption of knowing the true treatment effect is not important, because the test compares two variance parameters that – given the null hypothesis – are not affected by the ‘constant’ \(\tau \).

Variance difference

\(T_{V}\) and \(T_{\gamma }\) use the ratio of the two variance terms. Another way to test the null hypothesis of a constant treatment effect consists of taking the absolute difference between the two variance terms

and to use \(T_{D}\) in a FRT (we refer to this test as \(T_{D}\) in the simulation study reported later). Alternatively, when one assumes normally distributed potential outcomes, one can implement a test of the variance difference in a random coefficient regression model or in the multiple group structural equation model framework. In a random coefficient regression model, the slope of a predictor variable is allowed to vary across individuals (as in the case of a multilevel model), although only one score is available for a single participant (in econometrics this model is called the Hildreth–Houck model, see Hildreth & Houck, 1968; Muthén et al., 2017). Applied to the present context, the slope of the treatment variable is modeled to differ between persons:

where \(u_i\) is a normally distributed error term with expectation zero and variance \(\sigma _{u}^{2}\). When the (normally distributed) error term is uncorrelated with \(u_i\), the conditional variance of \(y_i\) is given by

Thus, the variance in the control group is \(\mathbb {V}[y_{i}|A_i = 0] = \sigma _{\epsilon }^{2}\) and in the experimental group the variance is \(\mathbb {V}[y_{i}|A_i = 1] = \sigma _{u}^{2} + \sigma _{\epsilon }^{2}\), showing that the difference between the two is \(\sigma _{u}^{2}\). When we fit the model to the data (using Mplus, see below and Muthen & Muthen, 1998-2017), a Wald test can then be employed to check whether the sample estimate \(\hat{\sigma }_{u}^{2}\) is significantly different from zero. In the simulation study, we call this test \(T_{\beta }\).

The second way to implement the variance difference test when assuming normally distributed data is to use a multiple group structural equation model (Tucker-Drob, 2011). Specifically, one fits a structural equation model to the data of the experimental group and another one to the control group and compares the fit of this unconstrained multiple group model to the fit of a constrained multiple group model in which the variance terms of the outcome variable are restricted to be equal. Formally, the fit of the two models is compared using a chi-squared difference test that is a likelihood-ratio (LR) test. The LR-test is asymptotically equivalent to a Wald-test that compares the difference between the two variance terms (Bollen, 1989; Muthén et al., 2017). Later, we abbreviate this test with \(T_{LR}\).

Variance ratio and variance differences with rank data

All tests described so far use the observed data to compute the respective test statistic. However, in the case of testing the null hypothesis of no average treatment effect, simulation studies showed that a FRT that uses rank data has good error rates across different simulation conditions (Imbens & Rubin, 2015; Rosenbaum, 2002, 2010). To conduct such a rank-based FRT, one first transforms the outcome to ranks and then computes the test statistic on the resulting rank data. To examine whether the good performance of the rank-based FRTs for testing the average treatment effects against zero generalizes to the context of heterogeneous treatment effects, we also consider rank-based versions of the FRTs in the simulation study (referred to as \(T^{R}_{V}\) and \(T^{R}_{D}\), respectively).

Comparing the cumulative distribution functions

A second group of tests is based on the two-sample Kolmogorov–Smirnov test statistic. The idea is to compare the marginal distribution function in the control group with the marginal distribution function in the experimental group, because under the null hypothesis of a constant treatment effect, the two distribution functions differ only by the average treatment effect \(\tau \). Thus, if \(\tau \) would be known, one could use (Ding et al., 2016)

where \(\hat{F}_{0}\) and \(\hat{F}_{1}\) denotes the empirical cumulative distribution functions of the outcome in the control and experimental group, respectively. \(T_{\textrm{KS}}\) presumes that the true treatment effect \(\tau \) is known. Because this is not the case, an obvious fix would be to use the estimated average treatment effect \(\hat{\tau }\), resulting in the ’shifted’ test statistic:

However, (Ding et al., 2016) show that the sampling distribution of \(T_{\textrm{SKS}}\) is not equivalent to the sampling distribution of the standard KS test statistic (see Wasserman, 2004 for the function) and therefore yields either inflated or deflated false-positive and false-negative error rates. To deal with this problem, they suggest to use \(T_{\textrm{SKS}}\) in a FRT. To this end, one first computes the potential outcomes \(Y_{i}(0)\) and \(Y_{i}(1)\) for each participant by plugging in the estimated average treatment effect \(\hat{\tau }\). That is, \(Y_{i}(0) = Y_i\) and \(Y_{i}(1) = Y_i + \hat{\tau }\) for the persons in the control group, and \(Y_{i}(0) = Y_i - \hat{\tau }\) and \(Y_{i}(1) = Y_i\) for the persons in the experimental group. These potential outcomes are then used as the basis for the FRT in which \(T_{\textrm{SKS}}\) is computed in each randomization sample. The resulting distribution of the \(T_{\textrm{SKS}}\) scores is then used to test the null hypothesis (see Eq. 11).

Ding et al. (2016) call this the FRT-Plug in test (henceforth, FRT-PI) and argue that the procedure should yield valid results when the estimated average treatment effect is close to the true average treatment effect (e.g., when the sample size is large). However, as there are no guarantees that this is the case, Ding et al. (2016) suggest a second FRT in which not only one value for the hypothesized constant treatment effect is plugged in, but rather a range of plausible average treatment effects. Specifically, they suggest to construct a 99.9% confidence interval for the estimated average treatment effect and to use a grid of values covering this interval in the FRT. That is, for each of these treatment effects, the FRT is performed and one then finds the maximum p value over all the resulting randomization tests. When this p value is smaller than the significance level, one rejects the null hypothesis. Following Ding et al. (2016), we henceforth call this test FRT-CI.

Comparing quantiles

A third test was suggested by Chernozhukov & Fernandez-Val (2005) and is based on a comparison of quantiles instead of the cumulative distribution functions. Specifically, the test is based on the observation that

where \(F^{-1}_{0}\) and \(F^{-1}_{1}\) is the inverse of the cumulative distribution function in the control and experimental group, respectively, q is a certain quantile, and \(\tau (q)\) is the average treatment effect at the qth quantile. When the null hypothesis of a constant treatment effect is true, \(\tau (q)\) would be constant across all quantiles q. Therefore, Chernozhukov & Fernandez-Val (2005) suggest testing the null hypothesis with a type of KS-statistic in which one first obtains estimates of the treatment effect at certain quantiles and then takes the largest difference between these values and the average treatment effect:

In their implementation of the test, Chernozhukov & Fernandez-Val (2005) use quantile regression (Hao & Naiman, 2007), in which the outcome Y is regressed on the treatment indicator A at a quantile q, to obtain an estimate of \(\hat{\tau }(q)\).

Similar to the shifted KS statistic (see Eq. 16), \(T_{\textrm{sub}}\) uses an estimate of the true average treatment effect. Therefore, Chernozhukov & Fernandez-Val (2005) suggest to use a subsampling approach to obtain a valid test statistic. In subsampling, the sampling distribution of a statistic is obtained by drawing subsamples of a certain size b without replacement from the current dataset. In each subsample, the respective statistic is computed (\(T_{\textrm{sub}}\) in our case) and the resulting sampling distribution is then used – similar to the bootstrap – for inferences concerning the statistic. Chernozhukov & Fernandez-Val (2005) show that their subsampling approach is asymptotically correct. They also found their approach to yield valid type 1 error rates in a simulation study.

Comparing coefficients of variation

Finally, it was also suggested to compare the coefficients of variation (CV) instead of the variance terms to detect heterogeneous treatment effects (Nakagawa et al., 2015; Volkmann et al., 2020), because the magnitude of the variance depends on the mean (e.g., the larger the mean on a bounded scale, the lower a variable’s reachable variance). The coefficient of variation is the ratio of the standard deviation \(\sigma \) of a variable to its mean \(\mu \)

and a potential test statistic then is to compare the estimated CVs from the two groups

However, a problem with using \(T_{CV}\) is that the test statistic is not only affected by a heterogeneous treatment effect (e.g., \(\hat{\sigma }_{1} \ne \hat{\sigma }_{0}\)), but also by whether there is a nonzero average treatment effect. For instance, when \(\hat{\sigma }_{1}\) = \(\hat{\sigma }_{0}\) = 1 and \(\hat{\mu }_{1} = \hat{\mu }_{0} + 1\), then \(T_{CV}\) would be equal to 0.5. Thus, \(T_{CV}\) cannot be used to test the null hypothesis of a constant treatment effect, at least when the average treatment effect is nonzero.Footnote 1 Therefore, we do not consider this test statistic in our simulation.

Considering covariates

So far we assumed that data is available for the treatment variable and the outcome only. However, when a randomized controlled trial is conducted in practice, researchers may also have assessed a number of person-level pretreatment covariates. When testing the average treatment effect against zero, it is well known (e.g., Murnane & Willett, 2011) that considering these covariates in the model can increase the precision of the treatment effect estimate and power. In a similar way, considering covariates may help to increase the power of the treatment heterogeneity tests.

In case of the test based on the heterogeneous regression model (\(T_{\gamma }\), see Eq. 10), the random coefficient model (\(T_{\beta }\), see Eq. 13), and the structural equation model (\(T_{LR}\)), one can consider such covariates by including them as predictors of the outcome variable.Footnote 2 For the other tests, the outcome variable is first regressed on the covariates. The residuals of this regression can then be used in the FRT approaches or in the tests based on the cumulative distribution function. In the latter case, one then computes a ‘regression-adjusted KS statistic’ (cf., Ding et al., 2016). For the quantile-based test (i.e., \(T_{\textrm{sub}}\)), Koenker and Xiao (2002) suggested to estimate \(\tau (q)\) in a quantile regression of the outcome on the treatment variable and the covariates and to use these estimates when computing \(T_{\textrm{sub}}\).

The present research

The foregoing discussion showed that a number of tests were proposed in the causal inference literature to test for heterogeneity in treatment effects (see Table 1 for a summary) and simulation studies conducted so far found that some of the proposed tests keep their nominal type 1 error rates while also preserving low type 2 error rates. Bloome and Schrage (2021), for example, examined the performance of \(T_{\gamma }\) in case of normally distributed potential outcomes and a sample size of 200 or 1000 persons per group. They found that \(T_{\gamma }\) has good type 1 error rates at both sample sizes and that the type 2 error rate gets smaller the larger the size of the two groups. Ding et al. (2016) compared their proposed FRT approaches (i.e., FRT-PI and FRT-CI) with the subsampling approach of (Chernozhukov & Fernandez-Val, 2005) at sample sizes of 100 or 1000 persons in each group. When the treatment effect is homogeneous, all three tests yielded good type 1 error rates. Furthermore, the power of the tests to detect heterogeneous treatment effects was low for all three methods when the size of the samples was small. However, as expected, the power increased with increasing sample sizes and with increasing treatment effect heterogeneity.

The simulation study reported here is aimed at replicating and extending these results. Specifically, we conducted the simulation study with four purposes. First, in our review we described several tests whose suitability for testing treatment effect heterogeneity has not yet been investigated (e.g., the random coefficient model, rank-based FRTs), neither alone nor in comparison to the other tests. We wanted to compare all of the proposed tests in one simulation study, rather than evaluating a subset of the tests only. We believe that this is important because so far psychologists often compare – if at all – the variance terms to test the presence of heterogeneous treatment effects and seldomly use (rank-based) FRT versions. Thus, if the other tests have higher power, applied researchers would currently make a type 2 error unnecessarily often (i.e., they would underestimate the frequency of heterogeneous treatment effects). Second, we wanted to examine the error rates at smaller sample sizes. So far, sample sizes of 100, 200, or 1000 persons per group have been investigated. However, randomized controlled trials sometimes involve fewer persons per condition and it is thus important to evaluate the performance of the tests at such smaller sample sizes. Third, to the best of our knowledge, there is no simulation study that has investigated whether and to what extent the inclusion of covariates affects the power of the various tests. Finally, we also wanted to investigate whether (and how) the error rates are affected when variables are not normally distributed, but stem from distributions in which more extreme values can occur, or which are skewed.

Simulation study

We performed a simulation study to assess the type 1 and type 2 error rates in different sample size conditions and in different conditions of treatment effect heterogeneity. We also varied the size of the average treatment effect and the distribution of the potential outcomes, and we examined whether the consideration of a covariate increase the power of the tests. The R code for the simulation study can be found at https://osf.io/nbrg2/.

Simulation model and conditions

The population model that we used was inspired by the model used in Chernozhukov and Fernandez (2005) and Ding et al. (2016). Specifically, the model used to generate the data was an additive treatment effect model:

To manipulate the size of the heterogeneous treatment effect, we followed (Ding et al., 2016) by setting \(\sigma _{\tau }\) to 0, 0.25, or 0.5, corresponding to a constant treatment effect across participants, a moderate, or a large heterogeneous treatment effect. Although no conventions with regard to the size of heterogeneous treatment effect exist, we call the two conditions moderate and large, because setting \(\sigma _{\tau }\) to 0.25 (0.50) implies that the variance in the experimental group is about 1.5 (2) times larger than the variance in the control group (i.e., \(\sigma ^2_{0} = 1\) vs. \(\sigma ^2_{1} = 1.56\) and \(\sigma ^2_{1} = 2.25\), respectively). To manipulate the type of underlying distribution, \(\epsilon _{i}\) was generated from a standard normal distribution (i.e., \(\epsilon _{i} \sim \mathcal {N}(0,1)\)), from a t-distribution with 5 degrees of freedom, or from a log-normal distribution with mean 0 and standard deviation 1 (both on a log-scale). The t-distribution is a symmetric distribution that has thicker tails compared to the normal distribution. The chance that extreme values occur in a random sample are thus larger. The log-normal distribution, by contrast, is an asymmetric distribution. Using this distribution thus allows us to examine how skewness of the data influences the five tests. Furthermore, the average treatment effect \(\tau \) was set to either 0 or 1. The latter manipulation was examined to examine whether the performance of the tests is affected by the presence of an nonzero average treatment effect and we set \(\tau \) to this rather large value (i.e., in the normal case the implied d is 1) to not miss any effects that an (incorrect) estimation of the average treatment effect might have.

The number of participants was set to 25, 50, 100, or 250 in each group. A sample size of 25 per group is a rather small sample size for a randomized controlled trial and 250 participants per group can be considered a large sample (reflecting, for example, the growing number of multi-center studies). The other two sample sizes are in between and were included to examine the error rates in case of more moderate sample sizes. Finally, to examine the effect of including a covariate on the power of the tests, we generated a standard normal variable \(X_i\) and used

to generate \(Y_{i}(0)\) in the first step of the respective conditions. In the case where \(\epsilon _{i}\) is drawn from a standard normal distribution, this implies that the correlation between \(X_i\) and each of the potential outcomes is 0.30, which corresponds to a moderate effect.

Tests and dependent variables

For each of the 3 (size of heterogeneity) \(\times \) 3 (type of distribution) \(\times \) 2 (zero versus nonzero average treatment effect) \(\times \) 4 (no. of participants in a group) \(\times \) 2 (zero versus nonzero covariate) = 144 conditions, we generated 1000 simulated data sets. For each condition, the 1000 replications were analyzed by computing the ten test statistics, that is, \(T_{\gamma }\), \(T_{V}\), \(T^{R}_{V}\), \(T_{LR}\), \(T_{\beta }\), \(T_{D}\), \(T^{R}_{D}\), FRT-PI, FRT-CI, and \(T_{\textrm{sub}}\).Footnote 3\(T_{V}\), \(T^{R}_{V}\), \(T_{D}\), and \(T^{R}_{D}\) were each implemented as a FRT. We used B = 2000 randomization samples to obtain the sampling distribution of the statistic in each replication. This distribution was then used to obtain the p value for this replication. When the p value was smaller than \(\alpha = .05\), the null hypothesis of a constant treatment effect was rejected, otherwise it was accepted.

We used the functions of Ding et al. (2016) to compute the FRT-PI and the FRT-CI, respectively. For both tests, we proceeded the same way as for \(T_{V}\), but with the difference that we followed the suggestions of Ding et al. (2016) and set the number of randomization samples to B = 500. In case of FRT-CI, a grid of 150 values was used to compute the maximum p value (this is the default value in the functions of Ding et al. 2016). \(T_{\gamma }\) was obtained by regressing the treatment variable on the outcome variable in a regression model. Furthermore, the residual variance of this model (see Eq. 10) was also modeled as function of the treatment variable and the latter coefficient was used to test for heterogeneous treatment effects. Specifically, if the absolute value of the estimate divided by its standard error was greater than 1.96, the null hypothesis of a constant treatment effect was counted as rejected. The heterogeneous regression model was estimated using remlscore function in the R package statmod (Giner & Smyth, 2016). We used Mplus (Muthén & Muthén, 1998-2017) to compute the random coefficient regression model to obtain \(T_{\beta }\). Mplus implements a maximum likelihood estimator for the model’s parameters (see, Muthén et al., 2017. In the simulation, we used the R package MplusAutomation (Hallquist & Wiley, 2018) to control Mplus from R. To obtain \(T_{LR}\), we fitted two multiple group structural equation models with the lavaan package (Rosseel, 2012). The first model was an unconstrained model, in which the variance of the outcome variable in the experimental and the control group were allowed to differ, and in the second, constrained model, they were restricted to be equal. The LR-test is then obtained by comparing the fit of the two models with a chi-square difference test. Finally, we used the functions of Chernozhukov and Fernandez-Val (2005) with their default settings to obtain \(T_{\textrm{sub}}\) (resulting in a subsampling size of \(b \approx \) 20).

In each replication, we determined whether a test statistic rejected the null hypothesis of a constant treatment effect and used this information to calculate the rejection rate per simulation condition. When the standard deviation \(\sigma _{\tau }\) is 0, this rate measures the type 1 error rate. If \(\sigma _{\tau }\) is greater than 0, the rejection rate is an indicator of the power of a test.

Results

We first discuss the results for the case that no covariate was considered in the tests. The results for the different tests concerning the two error rates were very similar for an average treatment effect of zero and one. Therefore, we focus on the results for the conditions of a zero average treatment effect here and later discuss some noticeable differences from the conditions in which the treatment effect is one.

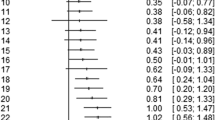

Table 2 presents the results concerning type 1 error and power rates when no covariate was considered when performing the tests. Turning to the number of type 1 errors first (i.e., the columns with \(\sigma _{\tau } = 0\)), \(T_{V}\) \(T_{V}^{R}\), \(T_{D}\), \(T_{D}^{R}\), \(T_{\gamma }\), \(T_{LR}\) and the FRT-PI yielded exact or almost exact rejection rates when the potential outcomes were normally distributed, irrespective of the size of the two groups. For FRT-CI, \(T_{\textrm{sub}}\), and \(T_{\beta }\) the rates were too conservative, but they almost approached the nominal \(\alpha \)-level as the sample size increased. When the data followed a t-distribution, all FRT-based except FRT-CI tests (i.e., \(T_{V}\), \(T_{V}^{R}\), \(T_{D}\), \(T_{D}^{R}\), FRT-PI) were still near the nominal value. For the latter, the type 1 error rate again approached the nominal value with an increasing sample size, while the rates for \(T_{\textrm{sub}}\) and \(T_{\beta }\) remained largely too conservative. For \(T_{\gamma }\) and \(T_{LR}\), rejection rates were too liberal. In case of the log-normal distribution, \(T_{\textrm{sub}}\) and \(T_{\beta }\) yielded even higher type 1 error rates. Interestingly, while FRT-PI showed a good size for normally and t-distributed potential outcomes, the test was too liberal when the potential outcomes were log-normally distributed and when the samples size was small to moderate. In this case, the FRT-CI yielded more exact rejection rates. Finally, \(T_{V}\) \(T_{V}^{R}\), \(T_{D}\), and \(T_{D}^{R}\) were again near the nominal value, and the rates for \(T_{\textrm{sub}}\) and \(T_{\beta }\) were again largely unaffected by the sample size.

With regard to the power of the tests (see columns with \(\sigma _{\tau } = 0.25\) and \(\sigma _{\tau } = 0.50\) in Table 2), all tests were more powerful the larger the size of the effect and the larger the size of the two groups. However, there were notable differences between the tests depending on conditions. Specifically, \(T_{V}\), \(T_{D}\), \(T_{\gamma }\), \(T_{LR}\), and \(T_{\beta }\) were the most powerful tests in case of normally distributed data, irrespective of sample size and level of treatment effect variation. The rank-based FRT versions \(T_{V}^{R}\) and \(T_{D}^{R}\) were always less powerful than their raw-data counterparts \(T_{D}\) and \(T_{V}\), respectively, and \(T_{\textrm{sub}}\) was more powerful than FRT-CI, but not FRT-PI. However, the latter three tests reached power rates above 80% only at 250 participants per group and when treatment effect variation was large. All other tests reached these values prior to these three tests when treatment effect variation was moderate and large, but even these tests did not reach a sufficient power for the small sample size condition. A similar result pattern occurred in conditions in which the potential outcomes were t-distributed. Again, \(T_{V}\), \(T_{D}\), \(T_{\gamma }\), \(T_{LR}\) and \(T_{\beta }\) were the most powerful tests, although the result for \(T_{\gamma }\) and \(T_{LR}\) has to be considered in light of the large type 1 error rates when there was no effect variation. Interestingly, \(T_{\beta }\), although also based on normal-theory, performed similar to \(T_{V}\) and \(T_{D}\). Furthermore, and in contrast to the normal distribution condition, FRT-PI was more powerful than FRT-CI and \(T_{\textrm{sub}}\) and the rank-based versions of \(T_{V}\) and \(T_{D}\) had the same power rates as their raw-data counterparts. Finally, when the potential outcomes were log-normally distributed, \(T^{R}_{V}\), \(T^{R}_{D}\), \(T_{\gamma }\), \(T_{LR}\) and FRT-PI were the most powerful tests. Again, for \(T_{\gamma }\) and \(T_{LR}\) this result has to be considered in light of the worse performance of the two tests in case of no treatment effect variation. Similarly, the results concerning the rank-based tests should be interpreted in comparison to their performance when the average treatment effect is one, where \(T^{R}_{V}\) and \(T^{R}_{D}\) yielded high rejection rates when the treatment effect was constant (see the discussion below).

The type 1 and type 2 error rates of the tests when considering an additional covariate were, unexpectedly, very similar to the error rates when not considering the covariate (see Table 3 for the specific results). When we examine the small sample size condition only (i.e., 25 participants per condition) and average across all tests, we find that for normally distributed data the mean rejection rate is 0.038 when \(\sigma _{\tau }\) is zero, it is .137 when \(\sigma _{\tau }\) is 0.25, and it is 0.331 when \(\sigma _{\tau }\) is 0.50. These values differ only slightly from the values that we obtain when we consider the tests without covariates, \(\sigma _{\tau }\) = 0: 0.038, \(\sigma _{\tau }\) = 0.25: .133, \(\sigma _{\tau }\) = 0.50: 0.333. Very similar results are obtained when we consider the conditions in which the data was t- or log-normal-distributed, and also when we consider each specific tests. Thus, in the conditions studied here, taking a covariate into account leads, if at all, only to a small improvement in the power of the tests, when the correlation between the covariate and the dependent variable does not exceed 0.3.

Finally, as mentioned above, the results are very similar for the conditions in which the average treatment effect is one (see Tables 6 and 7 in the Appendix for the specific error rates of the considered tests). Exceptions were the results for the rank tests \(T^{R}_{V}\) and \(T^{R}_{D}\) when the data were log-normally distributed. Here, rejection rates were unacceptably high when there was no treatment effect variation (i.e., \(\sigma _{\tau }\) = 0). To better understand these results, we conducted another small simulation study in which we examined the performance of a FRT when testing the null hypotheses that the average treatment effect is zero for log-normal data. The results showed (see Table 4) that the rank based test performed better than the raw-data based test when there was no treatment effect heterogeneity (i.e., \(\sigma _{\tau }\) = 0). However, when the treatment effect was zero, we found that rejection rates of the rank test increased the larger the variation in the treatment effect, while the raw-data based test had the correct size. This suggests that nonzero treatment effect heterogeneity results in a difference between the two outcome means in the rank data, which is actually not present, and this increases with higher heterogeneity. This ’bias’ also affects the rank-test of the variances (analogous to the test using the coefficient of variation, as discussed above). Finally, when the average treatment is one, then the actual difference in means is ’added’ to this bias, leading to the exceptional rejection rates.

Discussion

Psychological researchers are increasingly interested in methods that allow them to detect whether a treatment effect investigated in a study is non-constant across participants. Based on the potential outcome framework, a number of procedures were suggested to test the null hypothesis of a homogeneous treatment effect. These tests can be distinguished according to whether they make distributional assumptions and to which sampling statistics are used in the test. The present study was done to examine the type 1 and type 2 error rates of the suggested tests as a function of the amount of treatment heterogeneity, the presence of an average causal effect, the distribution of the potential outcomes, the sample size, and of whether a covariate is considered when conducting the test.

With regard to the type 1 error rate, our results replicate the findings of earlier simulation research (Bloome & Schrage, 2021; Ding et al., 2016) by showing that the majority of tests were close to the nominal \(\alpha \)-level regardless of sample size when the data was normally distributed. However, extending prior research we also found that the variance tests in the heterogeneous regression model \(T_{\gamma }\) and the LR test implemented with a multiple group structural equation model \(T_{LR}\) rejected the null hypothesis too often in the case of non-normally distributed data. Furthermore, we found that the subsampling procedure \(T_{sub}\) and the test in the random coefficient regression model \(T_{\beta }\) decided too conservatively in these conditions, and that the FRT-CI performed best in case of skewed data. Overall, these results suggest that, regardless of sample size, applied researchers should use a Fisher randomization test with variance ratios or variance differences (i.e., \(T_{V}\), \(T_{D}\)) or the empirical distribution function (i.e., FRT-CI), because they protect well against false positive decisions. When the data are normally distributed, they could, again regardless of sample size, use the variance tests in the heterogeneous regression model \(T_{\gamma }\) and the LR test, but should use one of the FRT tests as a sensitivity check if there is any doubt as to whether the normality assumption is met.

Concerning the power of the tests, the results of the simulation – at least for the small sample sizes of 25 and 50 persons per group – are quite sobering, because none of the tests reached satisfactory power levels even when size of treatment heterogeneity was stronger. When samples sizes were larger, our results were consistent with earlier research showing that the variance test of the heterogeneous regression model is characterized by good type 2 error rates in case of normally distributed data (Bloome & Schrage, 2021). They also show that the LR-test and the test of the random coefficient regression model achieved good power. Power rates of all three tests were slightly larger than the power rates of the variance ratio and the variance difference tests, but this is to be expected given that the parametric assumptions are met in these conditions. Furthermore, the power of FRT-PI increased with larger sample sizes and with larger treatment effect heterogeneity. The same pattern occurred for FRT-CI, although it performed better (in terms of type 2 and type 1 error rates) than FRT-PI in case of skewed data. For practitioners these results suggest that they should use the FRT variance tests, the variance test in the heterogeneous regression model \(T_{\gamma }\) or the LR test when the data is normally distributed. However, they should keep in mind that the power is only acceptable for sample sizes greater than or equal to 50 participants per group in case of large heterogeneity in treatment effects, or with 250 participants per group in case of moderate heterogeneity. In the case of non-normally distributed data, they should use the FRT variance tests or the FRT-PI test. Once again, however, when interpreting the result, it is important to bear in mind that the power is only sufficiently high for very large samples (250 people per group in case of high heterogeneity).

We also examined the performance of rank-based versions of the variance ratio and the variance difference tests, because in the case of the average treatment effect, simulation studies show that an FRT that uses rank data (i.e., the initial data is transformed into ranks in a first step) performs well across many simulation conditions (Imbens & Rubin, 2015; Rosenbaum, 2002, 2010). In our simulations, these tests performed similar (in case of t-distributed data) or worse (in case of normally distributed data) than their raw-data based counterparts. Furthermore, in the case of log-normal distributed data, their performance was unsatisfactory. Thus, we provisionally conclude that the performance advantage that is observed for the average treatment effect does not generalize to the detection of a heterogeneous treatment effect. However, our results have to be replicated in future simulation studies to reach a final conclusion.

Finally, we also investigated whether the power of the tests is increased by considering a relevant covariate. However, our results show only a tiny effect of including a covariate. The obvious explanation is that the influence of the covariate was too small in our simulation to detect an effect on power. We used a moderate effect, because we think this is the most plausible value for the considered setting in which participants are randomly assigned to treatment. Nevertheless, future research should replicate and also extend our results by additionally examining larger sizes of the covariate’s effect. Another point to consider is that covariates are often not measured with perfect reliability, which in turn may also affect the error rates of the tests for treatment effect heterogeneity. In fact, we assumed here that the outcome variable is not subject to measurement error and it remains unclear how a violation of this assumption would affect the different tests. We think that this is also an interesting question to examine in future research.

Furthermore, in our simulation study, we focused on tests that have been proposed in the causal inference literature to test for treatment effect heterogeneity. However, in the context of methods that compare variance terms, the statistical literature suggests a number of further tests that are aimed to be more robust to violations of the normal distribution, such as the Levene test or the Brown and Forsythe test (see Wilcox, 2017b, for an overview) or tests that are based on bootstrapping (see Lim & Loh, 1996; Wilcox, 2017a). We expect these tests to perform similarly to the FRT tests, though this assumption should be validated in a future simulation study. Finally, in the case of the average treatment effect, previous research has found that the meta-analytical integration of the results of many small-sample studies can match a single, large-sample study in terms of power (e.g., Cappelleri et al., 1996). This suggests that the pooled results of several heterogeneous treatment effect tests may have a sufficient power, even when the single studies are underpowered due to using small samples. We think that testing this hypothesis in future research is not only interesting from an applied perspective, but also from a methodological point of view: Although meta-analytic methods for the variance ratio statistic exist (see Nakagawa et al., 2015; Senior et al., 2020)), it is currently unclear, at least to our knowledge, how to best pool the results of the FRT tests or the quantile tests (i.e., whether Fisher’s or Stouffer’s method is appropriate, for example, when there is substantial between-study heterogeneity).Footnote 4

To summarize, our results suggest that researchers often fail to reject the null hypothesis of a constant treatment effect in a single study even when there is actual heterogeneity in effects present in the data. We think that this result is relevant for applied researchers from multiple points of view. To begin with, currently the sample sizes are determined a priori in such a way that a pre-specified average (causal) effect can be tested with as much power as possible. Thus, assuming that future studies will extend their focus to treatment effect heterogeneity, much larger samples sizes have to be assessed, at least when the variables that are responsible for treatment effect heterogeneity are not known before conducting the RCT.

Specifically, in our study we examined the setting that researchers have not assessed or do not know the heterogeneity-generating variables and therefore perform one of the (global) tests considered here. However, researchers may sometimes know these variables prior to the randomization and may then, assuming that they have assessed them and that a linear model is true, compute a regression model with an interaction term to test whether the treatment effect varies with this variable. In this case, the power of the interaction test is larger than the power of the tests considered in the simulation. For instance, when the population model is linear and the interaction term has a moderate effect (\(R^2\) about .13), the power of the interaction test is higher than the power of the variance ratio and the variance difference tests (see Table 5).Footnote 5 For applied researchers this result suggests, second, that they should already consider which variables can explain potential variation in the average treatment effect during the planning stage of the study, because in this case a much higher power can be achieved at lower samples sizes.

A final recommendation for applied researchers pertains to the case that one of the tests studied here leads to the rejection of the null hypothesis of a constant treatment effect. Then, researchers will be motivated to detect the person-level variables that explain differences in the treatment effect. In the introduction we mentioned that there are a number of statistical approaches available (classical as well as more modern approaches from machine learning) that can then be used for this task. When performing these (post-hoc) analyses, however, it has to be taken into account that one potentially conducts a large number of statistical tests, which may lead to many false-positive results (Schulz and Grimes, 2005; Sun et al., 2014). Thus, one has to investigate in other studies how stable (in terms of replicability) and generalizable (in terms of external validity) the resulting findings are, also given that the sample sizes are (typically) optimized with respect to the statistical test of the average treatment effect.

To conclude, we conducted a simulation study to investigate the type 1 and type 2 error rates of different tests of treatment effect heterogeneity that were suggested in the causal inference literature. The results suggest that a randomization test should be used in order to have good control of the type 1 error. Furthermore, all tests studied are associated with high type 2 error rates when sample sizes are small to moderate. Thus, to detect heterogeneous treatment effects with sufficient power, much larger samples are needed than those typically collected in current studies, or new test procedures must be developed that have higher power even with smaller samples.

Notes

We also computed \(T_{CV}\) in selected conditions of our simulation. When the average treatment effect and the variance of the treatment effects was 0, the rejection rate of \(T_{CV}\) was near the nominal value (i.e., 0.05). However, when the average treatment effect was 1, but the variance of the treatment effects was 0, the rejection rate was 0.935.

The heterogeneous regression model, the random coefficient model, and the structural equation model assume a linear relationship between the covariates and the outcome. When this assumption is suspected to be violated, one could also first predict the outcome with the covariates using any machine learning model and then proceed with the residuals from this model.

Chernozhukov and Fernandez (2005) also suggest a bootstrap-variant of their approach that we also included in the simulation. However, the performance of the test with regard to the type 1 and type 2 error rates was unsatisfactory (see Ding et al., 2016, for similar findings), so that we decided to not report results concerning this test.

In a preliminary simulation study, we examined the power of a pooled variance ratio test depending on the size of treatment effect heterogeneity in the single studies, the number of the studies to be pooled, and the amount of between-study heterogeneity. Specifically, to generate the data for a single study, we used the same population model as in the simulation study reported in the main text. The differences were that we generated data for a small, a moderate, and a large heterogeneous treatment effect (i.e., the standard deviation of \(\sigma _{\tau }\) was set to 0.10, 0.25, or 0.50; see Eq. 21), and that we considered the small sample size condition only (i.e., 25 persons in the experimental and the control group in a single study). In addition, we varied the amount of systematic variation of the heterogeneous treatment effect across studies (i.e., the between-study heterogeneity was either 0 or 0.10) and we varied the number of studies that are meta-analytically integrated (i.e., 10 vs. 30 vs. 50 studies). In each of the 1000 replications generated for a simulation condition, we computed a random-effect meta-analysis (using the metafor package, see Viechtbauer, 2010) and checked whether the pooled variance ratio was significantly different from zero. The results showed that when the size of the heterogeneous treatment effect was small, the power of the pooled test was adequate, when the number of aggregated studies was 50 and when there was no between-study heterogeneity (i.e., the power was .816). When between-study heterogeneity was 0.10, the power was lower than .80 when the number of to-be-aggregated studies was 50 (i.e., the power was .746). Furthermore, when the heterogeneous treatment effect was moderate or large, the power of the pooled variance ratio test was already adequate when the number of studies was 10 (i.e., the power was near 1.00 in almost all conditions), independent upon the amount of between-study heterogeneity. The exception was the moderate heterogeneous treatment effect condition in which between-study heterogeneity was 0.10; here, the nominal power level was not reached (i.e., the power was .750). However, we note that these results were obtained under rather optimal conditions (i.e., correct test statistic; distributional assumptions are met) and that they have to be replicated in future simulation research.

The model used to generate the data was

$$\begin{aligned} Y = 0.5 \cdot A \cdot X + \epsilon \end{aligned}$$(23)were A is the treatment variable (with prevalence 0.5) and X is a standard normal variable. The residual terms were also standard normally distributed.

References

Athey, S., Wager, S., & Tibshirani, J. (2019). Generalized random forests. Annals of Statistics, 47, 1148–1178. https://doi.org/10.1214/18-AOS1709

Bloome, D., & Schrage, D. (2021). Covariance regression models for studying treatment effect heterogeneity across one or more outcomes: Understanding how treatments shape inequality. Sociological Methods & Research, 50, 1034–1072. https://doi.org/10.1177/0049124119882449

Bollen, K. A. (1989). Structural equations with latent variables. West Sussex: John Wiley & Sons.

Cappelleri, J. C., & loannidis, J.P.A., Schmid, C.H., de Ferranti, S.D., Aubert, M., Chalmers, T.C., Lau, J. (1996). Large trials vs meta-analysis of smaller trials: How do their results compare? JAMA, 276, 1332–1338. https://doi.org/10.1001/jama.1996.03540160054033

Casella, G., & Berger, R. L. (2002). Statistical inference. Duxbury Press.

Chernozhukov, V., & Fernandez-Val, I. (2005). Subsampling inference on quantile regression processes. Sankhya: The Indian Journal of Statistics, 67, 253–276.

Cook, T. D. (2018). Twenty-six assumptions that have to be met if single random assignment experiments are to warrant ‘gold standard’ status: A commentary on deaton and cartwright. Social science & medicine, 210, 37–40. https://doi.org/10.1016/j.socscimed.2018.04.031

Cox, D. R. (1984). Interaction. International Statistics Review, 52, 1–24. https://doi.org/10.2307/1403235

Deaton, A., & Cartwright, N. (2018). Understanding and misunderstanding randomized controlled trials. Social Science & Medicine, 210, 2–21. https://doi.org/10.1016/j.socscimed.2017.12.005

Ding, P., Feller, A., & Miratrix, L. (2016). Randomization inference for treatment effect variation. Journal of the Royal Statistical Society, Section B, 78, 655–671.

Giner, G., & Smyth, G. K. (2016). statmod: Probability calculations for the inverse gaussian distribution. R Journal, 8, 339–351.

Hallquist, M. N., & Wiley, J. F. (2018). MplusAutomation: An R package for facilitating large-scale latent variable analyses in Mplus. Structural Equation Modeling, 621–638,. https://doi.org/10.1080/10705511.2017.1402334

Hao, L., & Naiman, D. Q. (2007). Quantile regression. Thousand Oaks, California: Sage.

Hernan, M., & Robins, J. M. (2020). Causal inference: What if. Boca Raton: Chapman & Hall/CRC.

Hildreth, C., & Houck, J. P. (1968). Some estimators for a linear model with random coefficients. Journal of the American Statistical Association, 63, 584–595. https://doi.org/10.2307/2284029

Holland, P. W. (1986). Statistics and causal inference. Journal of the American Statistical Association, 81, 945–960. https://doi.org/10.2307/2289064

Imbens, G. W., & Rubin, D. (2015). Causal inference for statistics, social, and biomedical sciences: An introduction. Cambridge: Cambridge University Press.

Kaiser, T., Volkmann, C., Volkmann, A., Karyotaki, E., Cuijpers, P., & Brakemeier, E.- L. (2022). Heterogeneity of treatment effects in trials on psychotherapy of depression. Clinical Psychology: Science and Practice.https://doi.org/10.1037/cps0000079

Koenker, R., & Xiao, Z. (2002). Inference on the quantile regression process. Econometrica, 70, 1583–1612. https://doi.org/10.1111/1468-0262.00342

Kravitz, R. L., Duan, N., & Braslow, J. (2004). Evidence-based medicine, heterogeneity of treatment effects, and the trouble with averages. The Milbank quarterly, 82, 661–687. https://doi.org/10.1111/j.0887-378X.2004.00327.x

Künzel, S. R., Sekhon, J. S., Bickel, P. J., & Yu, B. (2019). Metalearners for estimating heterogeneous treatment effects using machine learning. Proceedings of the National Academy of Science, 116, 4156–4165. https://doi.org/10.1073/pnas.1804597116

Lim, T.- S., & Loh, W.- Y. (1996). A comparison of tests of equality of variances. Computational Statistics & Data Analysis, 22, 287–301. https://doi.org/10.1016/0167-9473(95)00054-2

Murnane, R. J., & Willett, J. B. (2011). Methods matter: Improving causal inference in educational and social science research. Oxford: Oxford University Press.

Muthén, B. O., Muthén, L. K., & Asparouhov, T. (2017). Regression and mediation analyses using mplus. Los Angeles: Muthén & Muthén.

Muthén, B. O., Muthén, L. K., & Asparouhov, T. (2017). Regression and mediation analyses using mplus. Los Angeles, CA: Muthén & Muthén.

Nakagawa, S., Poulin, R., Mengersen, K., Reinhold, K., Engqvist, L., Lagisz, M., & Senior, A. M. (2015). Meta-analysis of variation: Ecological and evolutionary applications and beyond. Methods in Ecology and Evolution, 6, 143–152. https://doi.org/10.1111/2041-210X.12309

Powers, S., Qian, J., K.J., et al. (2018). Some methods for heterogeneous treatment effect estimation in high dimensions. Statistics in Medicine, 37, 1767–1787. https://doi.org/10.1002/sim.7623

Rosenbaum, P. R. (2002). Observational studies. New York: Springer.

Rosenbaum, P. R. (2010). Design of observational studies. New York: Springer.

Rosseel, Y. (2012). Lavaan: An R package for structural equation modeling. Journal of Statistical Software, 48, 1–36. https://doi.org/10.18637/jss.v048.i02

Salditt, M., Eckes, T., & Nestler, S. (2023). A tutorial introduction to heterogeneous treatment effect estimation with meta-learners. Administration and Policy in Mental Health and Mental Health Services Research. https://doi.org/10.1007/s10488-023-01303-9

Schulz, K. F., & Grimes, D. A. (2005). Multiplicity in randomised trials ii: subgroup and interim analyses. The Lancet, 365, 1657–1661. https://doi.org/10.1016/S0140-6736(05)66516-6

Senior, A. M., Viechtbauer, W., & Nakagawa, S. (2020). Revisiting and expanding the meta-analysis of variation: The log coefficient of variation ratio. Research Synthesis Methods, 11, 553–567. https://doi.org/10.1002/jrsm.1423

Sun, X., Ioannidis, J. P., Agoritsas, T., Alba, A. C., & Guyatt, G. (2014). How to use a subgroup analysis: Users’ guide to the medical literature. Jama, 311, 405–411. https://doi.org/10.1001/jama.2013.285063

Tucker-Drob, E. M. (2011). Individual difference methods for randomized experiments. Psychological Methods, 16, 298–318. https://doi.org/10.1037/a0023349

Viechtbauer, W. (2010). Conducting meta-analyses in R with the metafor package. Journal of Statistical Software, 36(3), 1–48. https://doi.org/10.18637/jss.v036.i03

Volkmann, C., Volkmann, A., & Müller, C. A. (2020). On the treatment effect heterogeneity of antidepressants in major depression: A bayesian meta-analysis and simulation study. Plos One, 15, e0241497. https://doi.org/10.1371/journal.pone.0241497

Wasserman, L. (2004). All of statistics. New York: Springer.

Wendling, T., Jung, K., Callahan, A., Schuler, A., Shah, N. H., & Gallego, B. (2018). Comparing methods for estimation of heterogeneous treatment effects using observational data from health care databases. Statistics in Medicine, 37, 3309–3324. https://doi.org/10.1002/sim.7820

Western, B., & Bloome, D. (2009). Variance function regressions for studying inequality. Sociological Methodology, 39, 293–326. https://doi.org/10.1111/j.1467-9531.2009.0122

Wilcox, R. R. (2017). Introduction to robust estimation and hypothesis testing. West Sussex: John Wiley & Sons.

Wilcox, R. R. (2017). Understanding and applying basic statistical methods using R. West Sussex: John Wiley & Sons.

Funding

Open Access funding enabled and organized by Projekt DEAL.

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Additional materials for this article can be found at https://osf.io/nbrg2/. The materials on the OSF include the R code for the simulation study and the Mplus code to estimate the random coefficient regression model.

Appendix

Appendix

Additional results

Here, we present additional results of the simulation study. Table 6 displays the results for the examined tests when no cova-riates are considered and when the average treatment effect is 1, and Table 7 shows the results for the tests with covariates considered and for an average treatment effect of 1.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Nestler, S., Salditt, M. Comparing type 1 and type 2 error rates of different tests for heterogeneous treatment effects. Behav Res (2024). https://doi.org/10.3758/s13428-024-02371-x

Accepted:

Published:

DOI: https://doi.org/10.3758/s13428-024-02371-x