Abstract

The pupil of the eye provides a rich source of information for cognitive scientists, as it can index a variety of bodily states (e.g., arousal, fatigue) and cognitive processes (e.g., attention, decision-making). As pupillometry becomes a more accessible and popular methodology, researchers have proposed a variety of techniques for analyzing pupil data. Here, we focus on time series-based, signal-to-signal approaches that enable one to relate dynamic changes in pupil size over time with dynamic changes in a stimulus time series, continuous behavioral outcome measures, or other participants’ pupil traces. We first introduce pupillometry, its neural underpinnings, and the relation between pupil measurements and other oculomotor behaviors (e.g., blinks, saccades), to stress the importance of understanding what is being measured and what can be inferred from changes in pupillary activity. Next, we discuss possible pre-processing steps, and the contexts in which they may be necessary. Finally, we turn to signal-to-signal analytic techniques, including regression-based approaches, dynamic time-warping, phase clustering, detrended fluctuation analysis, and recurrence quantification analysis. Assumptions of these techniques, and examples of the scientific questions each can address, are outlined, with references to key papers and software packages. Additionally, we provide a detailed code tutorial that steps through the key examples and figures in this paper. Ultimately, we contend that the insights gained from pupillometry are constrained by the analysis techniques used, and that signal-to-signal approaches offer a means to generate novel scientific insights by taking into account understudied spectro-temporal relationships between the pupil signal and other signals of interest.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

Introduction

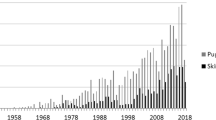

Technological advances in the last half-century, progressing from manual photography to infrared camera and eye-tracking computers, have made pupillometry an increasingly low-cost and popular methodology. The size of the pupil became the focus of interest in psychology about 50 years ago with studies on mental effort and motivational interest. The list of applications of the pupillometric method includes psychiatric and clinical studies (Rukmini et al., 2019; Kremen et al., 2019; Joyce et al., 2018; Granholm et al., 2017; Lim et al., 2016; Steinhauer & Hakerem, 1992), developmental and animal psychology research (Chatham et al., 2009; Hepach et al., 2015), neurophysiology (Gamlin et al., 2007; Reimer et al., 2016; Joshi et al., 2016), and cognitive neuroscience (Schwalm & Jubal, 2017; Urai et al., 2017).

The key processes associated with changes in pupil size are summarized in Table 1. As can be seen, the pupil is associated with a variety of states, some of which may, on their face, seem to bear no similarity to each other (e.g., fatigue and uncertainty); however, most of these states can be conceived of in relation to arousal (fatigue = low arousal; uncertainty = high arousal). Indeed, much of the interest in pupillometry stems from the proposed relationship between the pupil and the noradrenergic system of cognitive arousal. A better understanding of the neural underpinnings of changes in pupil size will make it clearer why these myriad processes are associated – still in a largely mysterious way – with small movements of the pupil and may be driven by the same system or by a few interacting systems (see “Neuralunderpinnings of pupil dynamics”).

We first briefly summarize the historical context of pupillometry in psychological research, as well as the neural underpinnings of changes in pupil size, before moving to our key concern in this article: the analysis of pupil data. We briefly outline possible data pre-processing steps, with a focus on why, how, and in what context each step might be employed; we do not attempt to define a standard but rather to increase awareness around the function of each possible pre-processing step and for which kinds of later analyses it may or may not be relevant. We then provide a sampling of pupil analysis approaches that are epoch- and/or condition-based, to situate a discussion of why one might want to employ more dynamic, signal-to-signal analysis approaches.

Though analyzing mean pupil size in a temporal window of interest has served psychology well for the last half century (and will undoubtedly continue to do so), we aim to show that a variety of powerful inferences may be possible by using more complex analysis techniques which take into account the temporal and/or spectral dynamics of the pupil signal. We are particularly focused on methods to relate the dynamic (i.e., changing over time) pupil signal to a dynamic stimulus (e.g., music, speech). Details about each analysis method and links to further reading and code implementations are provided. Additionally, we provide a code-based tutorial to recreate some of the key examples discussed in this paper. Our goal is to provide a concise and practical overview of existing methods, for those who are interested in pursuing pupillometry research but may lack the appropriate background, either in terms of the history of pupillometry or the conceptual understanding of difficult analysis techniques.

Pupillometry

Irene Loewenfeld, in her monumental monograph on pupillometry in two volumes (1999), pointed out that there is centuries-old anecdotal and semi-scientific knowledge that the diameter of the pupil changes not only in relation to the amount of light entering the eye but also – sometimes visibly – to an individual’s internal states. The pupils were early described poetically by Joshua Sylvester (1563-1618) as “windows of the soul.” It is now common lore that dilated pupils convey the impression of someone looking both “interested” and “interesting” (explaining the cosmetic use of the herbal substance ‘belladonna’ in the Renaissance (Simms, 1967), an idea further popularized by the pioneer of pupillometry in psychology, (Hess, 1975a)).

Until the invention of infrared eye trackers, by which dynamic changes in the size of the pupil can be measured accurately, pupillary changes were simply observed with the naked eye (e.g., during neurological or ophthalmological examinations (e.g., Wilhelm et al., 1999; 2002) or by filming the eye at close range and measuring frame-by-frame the pupil diameter from the film projection (e.g., as done in the classic studies by Hess and Polt (1960, 1964) and Kahnemann and Beatty (1966; 1967). The modern infrared technology was initially developed by physiologists but was revolutionized by the development of computerized systems linked to infrared camera and specialized software for basic eye-data analysis and visualizations. Infrared light cameras also have the advantage of obtaining images in virtual darkness (for the human eye) and independently of eye colors (which vary in iris contrast on standard film).

Modern infrared eye-trackers provide raw data about pupil diameters as samples (either coded by sample frequency or by the computer clock’s time) expressed in arbitrary values (like the number of pixels of the camera) or in ‘mapped’ millimeters (after a calibration routine that also establishes head and eye distance from the camera). Nowadays, several types of eye trackers are commercially available. Broadly, they are stationary (by positioning the camera close to a computer screen) or mobile (head-mounted or integrated into glasses-like frames or within virtual reality goggles). These computerized infrared systems are capable of measuring not only how pupil size may change on average but also the dynamic movements of the pupil over time.

Within psychology, pupillometry has become the standard term, especially after Janisse’s book by the same name (Janisse, 1977). The method entered experimental and cognitive psychology due to several influential publications (Hess & Polt, 1960, 1964; Kahneman & Beatty, 1966; Beatty & Wagoner, 1978; Ahern & Beatty, 1979b). In ’Attention and Effort,’ Kahneman (1973) put forward the idea that pupillometry is a measure of attention and, specifically, of an important aspect of it: load on capacity. He proposed a psychophysiological model of ‘cognitive effort’ where pupil diameters reflect, first of all, the general physiological arousal at each moment but, more specifically, how intensively the cognitive system ‘works’ at a specific moment in time and draws on its limited resources. Subsequent physiological advances on the role of the noradrenergic system of the brain (Aston-Jones & Cohen, 2005) have provided a functional neural basis for cognitive arousal and its energizing role (tonic and phasic) on the activity of various neural systems, all concomitantly reflected in changes of the diameter of the pupil. Hence, both arousal and pupillary size are relevant variables within the current cognitive and affective neurosciences (Aston-Jones et al., 2007), with animal studies able to directly probe the activity in the noradrenergic system of the brain and its relation to pupillary changes (see e.g., Joshi & Gold, 2020 and “Neural underpinnings of pupildynamics” below).

In psychology, the appeal of pupillometry may be also due to pupillary changes being difficult to control voluntarily, unlike other oculomotor dependent measures. Control of pupil size seems only possible after extensive training (Eberhardt et al., 2021) and/or the use of indirect methods or strategies (Loewenfeld & Lowenstein, 1999). This feature of automaticity or reflexive response of the pupil to internal states seems to offer a “window into the innermost mind” (Hess, 1975b) and into mental processes that generally occur below the threshold of consciousness (Laeng et al., 2012; Fink et al., 2018). Since the initial studies within experimental psychology, pupillometry has spread into social (e.g., Goldinger et al., 2009) and developmental psychology (e.g., Hepach et al., 2015). Pupillometry is relevant in the study of low-level processes (e.g., light reflex, near response), mid-level processes (alerting and orienting), and high-level processes (executive functioning), as recently summarized by Strauch et al. (2022). Readers wishing to learn more about the basics of pupillometry are directed to the existing relevant reviews (e.g., Beatty, 1982b; Mathôt, 2018; Zekveld et al., 2018; Winn et al., 2018; Steinhauer et al., 2022; Strauch et al. 2022) or book chapters (e.g., Beatty et al. (2000); Einhäuser (2017); Laeng and Alnaes (2019)).

Neural underpinnings of pupil dynamics

Pupil dilation and constriction are controlled by the smooth dilator and sphincter muscles of the iris, respectively. The sphincter muscle is innervated by parasympathetic axons from the Edinger–Westphal nucleus, while the dilator muscle is enervated by sympathetic axons from the superior cervical ganglion (Loewenfeld & Lowenstein, 1999; Samuels & Szabadi, 2008b, a; Szabadi, 2012). The contribution of these two different pathways can be experimentally dissociated by conducting experiments in a dark room, which reduces parasympathetic tone such that the majority of the pupil dilation response is a result of sympathetic activity (Steinhauer & Hakerem, 1992; Steinhauer et al., 2004), or by using pharmacological agents that block cholinergic or adrenergic receptors in the iris, or mydriasis eye drops (e.g., tropicamide). Such studies have confirmed, for example, that the observed pupil dilation response to an alerting stimulus can be dissociated into two components: an earlier parasympathetic one (600–900 ms) and a later sympathetic one (\(\sim \)1200 ms) (Steinhauer & Hakerem, 1992), and that transient decreases in parasympathetic arousal precede perceptual switches (Nakano et al., 2021) (see Steinhauer et al. (2022)’s Section 1.2.4 for additional discussion).

Neural activity in the locus coeruleus (LC) is highly correlated with changes in pupil size, in both animals and humans (Aston-Jones & Cohen, 2005; Alnæs et al., 2014), corroborating the belief that changes in pupil size reflect the functioning of the locus coeruleus–noradrenergic (LC-NA) system (Aston-Jones & Cohen, 2005; Aston-Jones et al., 1994; Minzenberg et al., 2008; Murphy et al., 2014; Nassar et al., 2012; Rajkowski, 1993). LC-NA activity affects the pupil dilation pathway, with direct stimulation of the LC resulting in a dilation of the pupil within a few hundreds of milliseconds (Joshi et al., 2016).

However, recent evidence suggests that the activity of a variety of brain regions, in addition to LC, is correlated with pupil size changes and may even be capable of driving dilation (Joshi et al., 2016; Wang et al., 2014). For example, Wang et al. (2014) show that the pupil exhibits a similar multiphasic, transient response to both visual and auditory stimuli, and assert that the intermediate layer of the superior colliculus (SCi) is likely the brain region responsible for integrating auditory and visual stimuli and interacting with the nuclei controlling pupil size. In their study, stimulation of the SCi yielded a similar pupillary response to that evoked by visual stimuli; this effect was not observed when stimulating in the superficial layers of the SC. Wang et al. (2014) offer a neuroanatomical model outlining the neural circuitry likely involved in mediating the pupillary response, which they later refine in Wang and Munoz (2015). According to their neurophysiological model, cognitively driven changes in pupil size could occur without any involvement of the LC, as could sensory-driven changes. Wang and Munoz (2015) position the mesencephalic cuneiform nucleus as the critical area receiving signals from the SC and communicating with the pathways controlling pupil dilation and constriction.

Joshi et al. (2016) also highlight some important issues with the LC-NA model. In their study of non-human primates, Joshi et al. (2016) micro-stimulated sites in the LC/subcoeruleus, inferior colliculus, and SCi. They showed that stimulation in each site caused a transient pupil dilation within 1 s (Joshi et al., 2016). They analyzed pupil vs. neural activity on multiple time scales and during spontaneous vs. evoked (tone burst) activity and showed that the LC is not necessarily the region in control. The delay until pupil dilation after LC stimulation was slow enough (500 ms) to suggest the involvement of an indirect pathway. In a recent review paper, Joshi and Gold (2020) summarize evidence suggesting that pupil size modulations can occur via three possible pathways, involving the LC, SC, or pretectal olivary nucleus (PON), respectively. The PON pathway is a direct one (i.e., there exist direct anatomical connections from the retina to the PON and back to Edinger–Westphal nucleus) and is known to be involved in pupil constriction and the pupillary light reflex; the SCi pathway is thought to be both direct and indirect, and is involved in the orienting or saliency response; the LC-NA pathway also seems to have direct and indirect connections to pupil dilation and constriction and influences pupil-linked arousal and cognition (please see Joshi & Gold, 2020 for anatomical diagrams and further details).

The neuromodulatory influences over pupil size may also be more complex than previously thought. Cholinergic (Reimer et al., 2016), dopaminergic (de Gee et al., 2014), and serotonergic (Schmid et al., 2015) activity have all been shown to correlate with changes in pupil size. However, it is possible that the activity of these three neuromodulatory systems may nonetheless be tied to LC-NA activity. Noradrenergic neurons from LC project to ACh neurons in the basal forebrain (Jones, 2004), dopaminergic nuclei are connected with the LC (Sara, 2009), and serotonergic effects on pupil size may be the result of interactions with the LC-NA system (see Larsen & Waters, 2018 for further discussion on this topic). Future studies are needed to definitively determine the complex interactions of the neuromodulatory systems and neuroanatomical pathways capable of influencing pupil size.

Summarizing across the psychological and neural underpinnings of pupillometry, readers may be left with the question of what the purpose of the pupil response is. Why is it that “higher” level processes like mental effort should be connected with the neural systems that control a light reflex, all indexed by pupil size? One way to explain the commonalities is by the underlying neurophysiological processes that are based on an activation-inhibition circuit. The LC modulates the activity of the Edinger–Westphal nucleus by inhibiting it – hence reducing the activity that leads to constrictions of the pupils – while at the same time providing excitatory signals to sympathetic circuits that directly stimulate the dilator muscles of the pupil. In other words, whenever the ascending arousal system – of which the noradrenergic LC is a key center – becomes active (e.g., because of cognitive or affective processing) the pupil dilates in proportion of the LC activation (e.g., Alnæs et al., 2014). Another way to think about commonalities is in terms of behavioral relevance. The pupil is part of an active visual system, which helps us to better explore or detect stimuli so that larger pupils provide higher sensitivity for faint stimuli or when illumination is low, whereas smaller pupils provide sharper acuity. Such an over-arching principle helps us to understand the connection between low and medium level pupillary responses, such as attentional orienting, and several scholars (e.g., Laeng & Alnaes, 2019; Mathôt, 2018) have pointed out that, even with respect to higher level processes, the nervous system, as a whole, should prime itself for an optimal response. For example, when the system is already nearer to load capacity, increased pupil size (due to load) might act as a compensatory mechanism for making sure that important changes in the environment are not missed. A possibility is that, at some evolutionary point, enlarging the pupil would enhance sensitivity to numerosity, which could confer advantages in a prey/predator situation (e.g., Castaldi et al., 2021). Of additional relevance, at least in mice, it has been shown that pupil dilation alters visual sensitivity via a switch from rod to cone-dominated visual responses – a result of the change in the amount of light hitting the retina (Franke et al., 2022). This alteration in spectral sensitivity is causally related to pupil size changes and naturally occurs during periods of increased behavioral activity, in this case locomotion, and is thought to be a behaviorally relevant mechanism to aid in predator detection (Franke et al., 2022). Further research and theorizing are, of course, required in humans. To that end, a better understanding of the relationship between pupil dynamics and other ocular motor behaviors will help in forming a more integrated view of the nervous system. Similarly, alternative analysis techniques may help to elucidate or differentiate specific pupillary functions.

The relationship between pupillary activity and other oculomotor behaviors

As might be implied by the seemingly critical role of the superior colliculus (SC) described above, changes in pupil size have a special relationship to other oculomotor behaviors, such as saccadic movements and blinks.

Saccades and microsaccades

Visual processing is not uniformly distributed throughout the visual field, which makes it necessary that the eyes move to acquire visual information via foveation. Saccades are rapid, conjugate eye movements that occur about 2–3 times per second. Visual processing mostly occurs in between two saccades, when the eyes are seemingly still. However, eye movements are always present and three types of fixational eye movements have been defined: slow movement (drift), superimposed by high-frequency jitter (tremor), interrupted by high-velocity movements (microsaccades). Fixational eye movements, traditionally regarded as noise, have been demonstrated to strongly contribute to high visual acuity (see Rucci & Poletti, 2015 for a review of these concepts). Here, we focus on saccades and microsaccades, which seem to share the function of foveating regions of interest (Rucci & Poletti, 2015).

Both saccades and microsaccades are controlled by the superior colliculus and are linked to shifts in covert attention (Hafed et al., 2009). The SC – more specifically, the intermediate layers of the SC (SCi) – are thought to be an integral part of the pupil dilation response circuit (Joshi et al., 2016; Wang et al., 2014). The SCi receives input from visual, auditory, somatosensory, and fronto-parietal areas, as well as from the superficial layers of the SC (which only receive early visual input). In line with the notion that the SCi is crucial for multi-sensory integration, (Wang et al., 2017) find that pupil dilation, saccade response time, and microsaccade inhibition are correlated variables, and all exhibit greater responses in audiovisual orienting tasks, compared to solely audio or visual tasks. In earlier studies, they showed that microstimulation of the SCi (but not superficial SC layers) in monkeys led to transient pupil dilation (Wang et al., 2012, 2014; Wang & Munoz, 2014) and argue that the SCi acts as a coordinator of orienting responses, which can be measured via pupil, saccades, and microsaccades (Wang et al., 2017).

However, while saccades, microsaccades, and pupil size changes become correlated in response to a salient stimulus, it is not necessarily the case that they correlate at rest. For instance, Joshi et al. (2016) report that during stable fixation only a small proportion of the measured pupil events contained microsaccades and that those microsaccades did not occur with any consistent phase angle to the timing of pupil change events. As always, context is an important factor to consider.

The relationship between ocular motor behaviors likely changes as a function of the task at hand, tonic level of arousal, and other autonomic factors, such as the heartbeat. It has recently been shown that both microsaccades (Ohl et al., 2016) and changes in pupil size are coupled to heart rate (Wang et al., 2018). Further, Ohl et al. (2016) posit that heartbeat-evoked neural responses are capable of creating fluctuations in the oculomotor map of the SC. Such fluctuation would in turn affect the generation of saccades, microsaccades, and possibly changes in pupil size. Indeed, some have used variations in pupil size to reconstruct the heart rate rhythm and shown that pupil size variation is synchronized with very low frequency (0.0033\(-\)0.04 Hz), low frequency (0.04\(-\)0.15 Hz), and high frequency (0.15\(-\)0.4 Hz) cardiac rhythms (Park et al., 2018).

Blinks

Spontaneous blink generation has been linked to striatal do- paminergic functioning (Colzato et al., 2009; Esteban et al., 2004; Jongkees & Colzato, 2016), cf., (Sescousse et al., 2018; Dang et al., 2017), with disruptions in typical eyeblink patterning observed in clinical conditions involving timing and motor impairments (Deuschl & Goddemeier, 1998; Esteban et al., 2004; Karson et al., 1990; Nakano et al., 2011; Shultz et al., 2011; Tavano & Kotz, 2021). Blinks are thought to index endogenous attention; they increase in frequency in conjunction with an increase in Default Mode Network activity and decrease in Dorsal Attention Network activity (Nakano, 2015; Nakano et al., 2013). Blinks are known to occur at structurally salient breaks during reading and speech (Cummins, 2012; Hall, 1945) and to be indicators of cognitive event chunking, as well as cognitive load (Siegle et al., 2008; Stern et al., 1984). Blinks increase whilst speaking, in conjunction with increased facial motor activity (Orchard & Stern, 1991) and are likely to become synchronized between speakers (Nakano & Kitazawa, 2010).

With regard to pupil size, Siegle et al. (2008) show that the proportion of blinks at any given moment in time (averaged across trials, per sample) closely mirrors the pupillary response, during a cognitive load task. Their data suggest that an increase in blink activity precedes an increase in pupil size and that instances of greater blink activity tend to occur when the pupil signal is stationary in terms of acceleration (i.e., not accelerating or decelerating, in other words, when the second derivative of pupil size nears 0). A sustained increase in proportion of blinks was observed following pupil dilation. Interestingly, Siegle et al. (2008) find that blinks at the beginning of a trial are correlated with pupil dilation at a later stage (4–10 s later in a Stroop task), suggesting that the cognitive load indexed by pupil dilation is proportional to the blink response at initiation of the cognitive event. Though blinks and pupil size changes were correlated, Siegle et al. (2008) suggest that they provide unique information, with blinks being more sensitive to event onsets and offsets, and pupil dilation more sensitive to on-going processing.

Such a finding of blinks correlating with the pupil signal, even seconds later, is in line with the more recent work of Klingner et al. (2011) and Knapen et al. (2016). Knapen et al. (2016) show that blinks explain approximately 40% of the variance in pupil data, and show pupil effects lasting approximately 5 s after a blink. In their data, a blink causes a rapid decrease in pupil size, followed by a seconds-long increase. To correct for this, they model the pupillary response to a blink with a double gamma function, find instances of blinks in the pupil data, and deconvolve the blink-related pupil response from the data. Klingner et al. (2011) also determined that blinks affect the timing and magnitude of the pupil signal, and show differences in the pupillary response to the blink which depend on the duration of the blink. To counteract the possibility that blinks were systematically biasing their pupil data, they grouped blinks by duration and calculated an average pupillary response for each blink duration, which they then subtracted from the relevant portion of their pupil data. This average response typically consisted of a brief dilation, followed by constriction, followed by an approximately 2-s return to baseline pupil size, though the timing and magnitude of the changes were a function of blink duration. Interestingly, Klingner et al. (2011) report that their pupil results remained the same whether using their blink correction method as described above or only using standard blink interpolation. Similarly, Zénon (2017) reports a significant negative relationship between pupil dilation and blinks (i.e., pupil constriction after a blink) but shows that, even when accounting for such correlations in a statistical model, the main results related to pupil dilation and arousing images are not affected. Quirins et al. (2018) also find little difference in their results depending on whether they reject blink trials or interpolate pupil data during blinks.

In sum, these studies speak to the importance of at least checking the relationship between blinks and pupil dilation and quite possibly correcting for it using a subtractive or regressive technique. In particular, for tasks in which blink activity is highly correlated with stimulus events in one condition but not another, not correcting for blinks may significantly bias the results. Nonetheless, a balance between theoretical considerations and pragmatic ones must be struck. We discuss such issues further in the pre-processing considerations below.

Pre-processing pupil data

We outlined the neural underpinnings of changes in pupil dynamics, as well as the relationship between changes in pupil size and other oculomotor behaviors to stress the importance of understanding what exactly is being measured when recording pupil size and what can and cannot be inferred from changes in pupillary activity. However, equally important is to note that the insights one can gain from pupillometry are constrained by the analysis techniques one uses. Before arriving at analysis approaches, a consideration of pre-processing steps is necessary. However, we emphasize that the pre-processing steps one chooses should be dependent on one’s planned analyses. For example, many time-series based techniques discussed below require the pupil signal to be evenly sampled and contiguous. This means that pupil data during blinks, saccades, or other moments of data loss should be imputed or interpolated. Further, all pupil data for all participants should be at the same sampling rate and ideally contain the same number of samples. However, depending on the eventual statistical model one wishes to use, some pre-processing steps might become unnecessary: for example, gaze position (van Rij et al., 2019) or blinks (Zénon, 2017) can be entered into statistical models as co-variates, rather than corrected for in the pupil signal; interpolation and filtering should be avoided when using GAMMs, as they increase autocorrelation in model residuals (van Rij et al., 2019; Wood, 2020). Below, we outline possible components of a possible pre-processing pipeline – not a required list. We provide basic details about each possible pre-processing operation and discuss considerations with respect to eventual analysis techniques. Regardless of the pre-processing steps implemented, we cannot stress enough the importance of visualizing data and checking for outlying samples, spikes, etc. In the accompanying code tutorial, readers can explore pupil data for different participants’ and think through potential issues and sources of noise (see code tutorial section Explore basics of time series).

Discarding trials in which too many pupil data points are missing or noisy

Missing data occurs when the pupil size goes to zero, resulting either from a blink or from the eye-tracker’s loss of the pupil. Noisy or problematic data are typically registered via a flag output by the eye-tracker for each pupil sample indicating whether it is valid or invalid, or, alternatively, a continuous measure of tracking quality or confidence (N.B. eye-trackers handle this procedure differently, depending on the manufacturer’s choice and scientific tradition). Missing and invalid pupil data should be set to “not a number” (NaN) for future pre-processing (i.e., interpolation). One way of automating such a process would be to set a threshold-based rule, like, “if greater than x% of the pupil data are missing, the run is discarded.” Note that there is no decisive rule for percent missing data permissible; note also, that, if baseline periods are being used, missing data may need to be evaluated separately for baselines vs. trials (see also “Baseline correctingpupil data”).

Removing improbable data

Mathôt et al. (2018) suggest setting a cutoff threshold (based on visualization of pupil size distributions, not predetermined rules like 2 standard deviations) and removing outlying data points. Perhaps more broadly applicable, Kret and Sjak-Shie (2019) suggest removing outlying pupil data points that 1) contain unrealistic changes in dilation speed or 2) are isolated from surrounding data (e.g., a sparse data point that my occur in the midst of a blink when the eye-tracker erroneously measures the pupil for a few samples or tracks other elements of the face, like eye lashes, especially if the participant has applied mascara). The authors provide equations and code for enacting these cleaning procedures. Please note that though the word “removing” is used, we do not literally mean removing those data points and shortening the signal, we mean setting problematic data points to “empty” or NaN. These empty data points can later be interpolated.

Interpolating missing data

Interpolation involves fitting a line, or quadratic function, to fill in missing data between existing data points. If not due to poor recording quality or participant movement, there will always be brief periods of loss, or extreme values, in the pupil signal due to blinks. Typically, these periods, plus some padding on both sides (usually 50–200 ms), are set to NaN (see section above), then interpolated. Whether to use linear or cubic spline interpolation is a matter of personal preference, as there seems to be no consensus in the extant literature. While fitting a quadratic function (cubic spline interpolation) may more closely mimic the natural fluctuations of the pupil, it can also lead to a wider variety of introduced artifacts as compared to the fitting of a simple line.

Note, however, an alternative to interpolation would be to leave all missing samples as NaN, especially if one only needs to compute the average pupil dilation response in an epoch, or if one plans to use GAMMs. On the contrary, many signal processing techniques (e.g., a fast Fourier transform) require continuous data and cannot handle a time series with empty samples as input. Therefore, in such cases, interpolation becomes a necessity. Readers are thus reminded to think carefully about their particular use case before applying such corrections, and to visualize any signal transformations they employ, such as interpolation, to be sure that no artefacts have been introduced in the process.

Modeling the pupillary response to blinks and saccades using regression

As foreshadowed by the discussion of the relationship between pupillary activity and other oculomotor behaviors above (“The relationship between pupillary activity and otheroculomotor behaviors”), it is important to control for a variety of other oculomotor parameters when analyzing changes in pupil size. One solution is removal and/or interpolation (outlined above). Another solution is Knapen et al. (2016)’s method to model the pupillary response to both blinks and (micro)saccades and deconvolve those stereotyped responses from the pupil data (for more details about the basics of convolution see “Pupillary response function”). Because they show that the effect of blinks and saccades on pupil size lasts approximately 5 s, a method like interpolation will not remove the long-term artifact caused by these oculomotor behaviors, making the deconvolution method a possibly necessary step (notice that interpolation should still be conducted beforehand). For those interested in implementing Knapen et al. (2016)’s finite-impulse-response fitting method, Python code and tutorials are provided alongside the original paper.

As Knapen et al. (2016)’s artifact removal method has only recently been suggested, it is not yet widely adopted. One potential issue is that one needs enough observations of blink and saccade-related pupil activity to estimate a valid deconvolution kernel for those events (i.e., to build a model of saccade and blink-related pupil activity, respectively). Such models will be difficult to estimate if participants rarely blink, for example. One may need to employ specific experimental design choices to ensure enough blinks for a valid model (e.g., allowing participants to blink freely, having a long baseline period in which blinks are sure to occur, etc.). Pragmatically speaking, if blinks or saccades rarely occur, they are probably negligible. Even though they may add measurable noise to the pupil signal, such noise may make no significant difference in terms of statistical results. Nonetheless, the relative frequency and magnitude of blinks and saccades should be assessed. The most important check is that blinks and saccades do not occur in some experimental condition with greater frequency and magnitude vs. another, thus possibly biasing pupil results and interpretations. If significant differences exist for blinks or saccades in certain conditions, those should be reported and the researcher should be careful to control for such confounds in the pupil data.

Filtering

A high-pass filter can be used to remove large-scale (low frequency) drift in pupil data, while a low-pass filter can be used to remove physiologically irrelevant high frequency noise in the data. However, with either high- or low-pass filtering, it is important to be sure that the filtering functions being used do not affect the phase of the pupil signal or create ringing artifacts, which might later appear as activity of interest (see de Cheveigné & Nelken, 2019 for a discussion of this issue and filtering advice). Similarly, this artifactually introduced autocorrelation in the signal can be a problem for some statistical modeling approaches one might later wish to use (e.g., GAMMs; see van Rij et al., 2019). It is also important to note that low frequency information in the pupil signal might actually be of interest, since it may signal changes in tonic activity in the LC-NA system that is meaningful in terms of cognitive processing. In this case, very low or no high-pass filtering should be employed (e.g., for infraslow activity see Blasiak et al. (2013); Okun et al. (2019); for time-on-task effects, see “Accounting for time-on-task”; or for detrended fluctuation analysis see “Detrended fluctuation analysis (DFA)”). Additional examples and discussion can also be found in accompanying code tutorial section Filtering.

Gaze correcting pupil data

When gaze changes occur during a task (e.g., during free-viewing or reading tasks), it is of critical importance to correct for the pupil foreshortening error (Hayes & Petrov, 2016; Gagl et al., 2011; Brisson et al., 2013) – that is, when the pupil falsely appears to have changed size due to the now different angle of the pupil to the eye-tracking camera, as a function of gaze position change. The correction technique of Hayes and Petrov (2016) is fairly straightforward but requires taking measurements of distances from the eye-tracking camera to the eye, to the screen, etc. to be used in calculating an appropriate model. Though such measurements could be easily computed in most cases, they might not be possible if the data are being accessed in an open-source context that has not documented such information. In the event that these measurements are unknown or participants are constantly presented with a fixation cross and nothing else on the screen (e.g., a purely auditory task), an alternative to gaze correction would be to remove any periods in which the eye is greater than a few degrees away from the center fixation cross (Korn & Bach, 2016). If the task only involves changes in horizontal gaze position (e.g., during text reading), then the synthetic correction function of Gagl et al. (2011) can be applied. Alternatively still, rather than correcting pupil size in the pre-processing stage, one can include x and y gaze position as regressors in a later statistical model (see e.g., van Rij et al. (2019), who include x and y gaze as nonlinear interaction terms in a generalized additive mixed model). Similarly, based on gaze position, Madsen et al. (2021) regressed out both local and global luminance from every subject’s pupil data while watching a video. The global luminance was the luminance of the entire frame, while the local luminance was a small, defined radius around the point of gaze. Note, however, that in typical cognitive psychology pupillometry experiments, the general recommendation is for eye position to remain constant between conditions (please see Mathôt & Vilotijević, 2022 for detailed discussion of relevant experimental design principals).

Normalizing pupil data

To compare variance in the pupillary time course related to the task at hand, normalizing pupil can be useful. Several studies normalized their data in some way – for example, by calculating percent change from mean pupil size over the course of a trial (e.g., Lavín et al., 2014), by z-scoring the pupil data for each trial (e.g., Colizoli et al., 2018a; Kawaguchi et al., 2018; Fink et al., 2018; Wainstein et al., 2020), or by using dynamic range normalization (e.g., Piquado et al., 2010 employed a pre-test to ascertain differences in pupil response ranges between younger and older adults and correct trial data based on these individual ranges). Perhaps the most critical aspect of normalization is to clearly report the equation used so that others can easily replicate results or understand how results might diverge based on different normalization choices. To provide a few concrete examples: Fink et al. (2018) report the following equation, normalizing based on the mean and standard deviation of the trial:

while Piquado et al. (2010) report the following equation, normalizing based on the minimum and maximum range of the pupil:

In deciding about data normalization, one should consider what kind of variability is relevant for the research question at hand, and operate accordingly. Again, later statistical modeling approaches that include random effects for individuals may preclude the need to normalize data.

By definition, a normalization procedure will convert the pupil data from the raw measured units to arbitrary or standardized ones. While such a transformation can have advantages for cross-participant or group comparisons, it also has some downsides. For example, the true pupil diameter value in millimeters may provide additional insights as to which type of process underlies pupillary change. Steps up or down of light intensity can change the pupil with constrictions as small as one third of its diameter or dilations that are twice as large as the diameter of the resting state. Such pupillary responses to light increments or decrements are very dramatic, compared to pupillary change due to psychological factors (like mental work or emotional states), best observed when luminance is kept constant. Psychological changes are rarely greater than 0.5 mm\(^3\) or approximately 15 to \(20\%\) increments from rest. Moreover, given that pupil size can range between 2 and 8 mm (Watson & Yellott, 2012) and that pupil changes driven purely by sensory information (e.g., luminance or nearness) are greater than psychosensory responses makes meaningful checking the true values in millimeters (if available), since these may be an important data quality check (Mathôt, 2018). Pupils being part of human anatomy, there is an obvious advantage in expressing pupil size according to real-world dimensions, as is recommended by Steinhauer et al. (2022). However, though some eye-trackers output pupil size in millimeters, others output pupil size in arbitrary units. In the case of arbitrary units, some algorithms to convert to mm exist, if particular parameters are known (e.g., distance to the screen; see Hayes & Petrov, 2016, Fig. 4), otherwise, normalizing can offer the possibility to put pupil data recorded in arbitrary units onto the same scale across participants.

Baseline correcting pupil data

While normalization re-scales a signal based on measured or statistical constants, baseline-correction refers to altering the pupil signal based on measurements taken during a baseline period. Such correction does not necessarily change the unit of pupil measurement (i.e., it can still be in millimeters), but it does make the reported pupil measure relative (to the baseline). The assumption is that, by taking the mean or median of the pupil size during the pre-stimulus period and subtracting (or dividing) it from the stimulation period, aspects of the pupil signal unrelated to the stimulus are removed. Such “aspects” might be person-specific (e.g., general arousal level) and/or stimulus-specific (e.g., luminance). However, Mathôt et al. (2018) show through a series of simulations that baseline correction can create large distortions in the measured pupil data (particularly if a blink occurred during the baseline period) and bias statistical results. Ultimately, they suggest using a subtractive, rather than divisive, baseline correction, as it is less susceptible to artifact. They also provide suggestions for visually inspecting baseline-corrected pupil data to check for artifacts (e.g., rapid changes in pupil size occurring in less than 200 ms following the baseline period are suspect). Similarly, Laeng and Alnaes (2019) suggest a subtractive method and advise against percentage-based corrections, in line with the first generation of researchers in pupillometry (Beatty, 1977). See also Reilly et al. (2019) for further discussion of baseline procedures and the need for standard procedures.

An alternative to baseline-correcting the pupil data of interest, is to include baseline pupil size as a regressor in a final statistical model (van Rij et al., 2019). This approach circumvents the possible issues noted above and is an elegant means to account for a variety of possible baseline effects. For example, Widmann and colleagues illustrate how such an approach unites divergent findings related to the effect of baseline pupil diameter and luminance levels on subsequent pupil diameter changes (Widmann et al., 2022). Combined with a factor analysis separating the pupil trace into parasympathetic and sympathetic components, they show that baseline pupil size has a negative linear relationship with parasympathetically mediated pupil size changes, while the sympathetic component exhibits an inverted U-shaped function. They also suggest that, given the effect of luminance level on the possible range of evoked pupil sizes, pupil data recorded at different luminance levels cannot be directly compared and should always be reported.

Example of using convolution (A) or deconvolution (B) to predict the recorded pupil trace or precipitating events, respectively. Note that one could use the canonical pupillary response (PRF) for each participant or estimate the PRF individually for each participant. Alternatively, estimating the PRF could be the goal of analysis in its own right (see “Temporal response function” below)

Accounting for temporal lag

One possible limitation of pupillometry is the lag between external and/or cognitive events and the subsequent change in pupil dynamics. Such lag is still less than the lag in blood-oxygen level dependent (BOLD) signal in functional magnetic resonance imaging (fMRI), but is considerably larger than the lag of electroencephalographic (EEG) or magnetoencephalographic (MEG) signals. While such a lag should not deter one from conducting pupillometry studies, it should be carefully considered in experimental design (e.g., making sure there is enough time between the presentation of any two successive events for the pupil to return to baseline) and/or analyses (e.g., correcting pupillary responses which may have summed in time due to events occurring rapidly; see e.g., Wierda et al. (2012) and “Pupillary responsefunction”). To date, a few main approaches exist for handling lag in pupil analyses: (1) using convolution or deconvolution with a pupillary response function (PRF), (2) calculating the first derivative of the pupil signal, or (3) separately analyzing a fast and slow pupillary component. We discuss all three approaches in turn.

Pupillary response function

Given the various possible top-down and bottom-up influences on changes in pupil size, it is difficult to ascertain which external or cognitive events drive pupillary changes, and at what time lag. To address this issue, Hoeks and Levelt (1993) asked participants to listen to auditory tones and respond with a button press. They fit the averaged pupillary responses of participants with an Erlang gamma function. The function was estimated to have parameters \(m=1\) (linear exponent), \(n=10.1 +/- 4.1\) (numbers of steps in the signaling cascade) and \(t_{\max } = 930 ms +/- 190 ms\) (latency of maximum pupil response). Such a function can be used to model how the pupil will respond, given some input stimulus. However, with only eight participants, during one type of task, the parameters of this pupillary response function (PRF) remain to be more widely studied in different contexts and with a larger number of participants.

More recent reports have noted that, when no motor response is required, the maximum pupil response latency is around 500 ms, and that, when a motor response is required, two peaks are present in the pupil signal, the first around 750 ms and the second, bigger peak around 1400 ms after tone onset (McCloy et al., 2016). Still others continue to refine PRF models by adding free parameters into the response function (Fan & Yao, 2010) or disregarding the biophysical reality and finding the best fitting model (Korn & Bach, 2016). Additionally, recent work shows that the time to maximum pupil dilation varies across participants but is consistent within participant, suggesting the need to fit a PRF separately for each participant (Denison et al., 2020), rather than using one canonical model for all participants.

The advantage of using a PRF is that it allows one to either forward (convolution) or reverse (deconvolution) model the predicted pupil time series or the correlated cognitive or stimulus events, respectively (see Fig. 1). The (de)convolution technique has been used in a variety of studies to show that the pupil reflects fluctuations in attention and decision-making at a fine temporal resolution; see for example: Wierda et al. (2012); de Gee et al. (2014); Kang and Wheatley (2015); Korn and Bach (2016); Korn et al. (2017); Fink et al. (2018); Denison et al. (2020). Generally defined, convolution is the integral of the product of two functions – in our case, our two times series of interest, with one reversed and shifted along the length of the other. It could also be thought of as the moving dot product calculated at each moment in time when one signal is reversed and shifted in time along the other. Still also, convolution can be thought of as a type of filter or weighting function. For example, in Fig. 1A, we see the amplitude of an acoustic signal. When we convolve that signal with our PRF, or “kernel,” we see that the output signal is now much lower in frequency content (i.e., high frequencies have been removed and the input signal is now weighted by the response properties of the pupil; in other words, in the temporal range of the pupil). To help readers gain a deeper understanding of (de)convolution, we provide an interactive demo in the accompanying code tutorial; see code sections Convolution, Building intuition about how convolution works, Deconvolution, and Predicting pupil data.

Alternative to using pure convolution, one could do the same type of analysis by optimizing a fit between the two signals, using regression-based techniques. Please see “Temporal response function” for more details about such an approach and for more information about using the pupillary response function as a dependent measure, rather than as a means to account for lag between pupil activity and the signal of interest, as explained above.

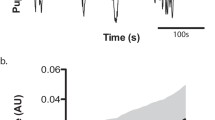

Pupillary difference signals

Depending on the research question, the number of pupillary changes, or the time points of change, may indeed be more interesting than evoked pupil size. For instance, in the simulated data plotted in Fig. 3C, The first and second traces show opposite polarity of pupil size (i.e., when one increases in size, the other decreases); however analyzing the derivative of both signals would show similar instances of pupillary change. Further, analyzing the derivative of pupil size allows one to examine instances of pupillary change which occur on a faster time scale. In relation to preceding events, de Gee et al. (2020) show that, in humans, the first derivative of pupil change can be observed as early as about 240 ms after stimulus onset, bringing the pupil time series onto a much faster timescale, potentially more suitable for certain types of analyses or research questions.

Beyond increasing the temporal resolution of the pupil signal, pupil derivative metrics may be interesting dependent measures in their own right, for instance in classifying clinical conditions (Fotiou et al., 2009), predicting lapses in task performance (van den Brink et al., 2016), or studying attention to auditory sequences (Milne et al., 2021). One could also count the number of changes in pupil size between conditions as a dependent measure, as has been done in both macaque (Joshi et al., 2016) and human studies (Jagiello et al., 2019; Schneider et al., 2016). Note that most of the analysis techniques discussed below in “Analysis techniques” can be conducted on the standard pupil signal or its derivative(s).

Pupillary components

Because the pupil is driven by both parasympathetic and sympathetic activity, another approach to understanding the temporal lag or dynamics in the system is to separate the pupil signal into different components, typically using principal components analysis (PCA; e.g., Steinhauer & Hakerem, 1992; Steinhauer et al., 2004). Such an approach has been used, for example, by Widmann et al. (2018) to show that emotionally arousing music acts on pupil dilation specifically through the sympathetic branch. In addition to segregating by sympathetic and parasympathetic, one could also separate the pupil signal into components thought to be driven by cognitive events, such as an early attentional orienting or sensory component vs. a later executive control one (see e.g., Geva et al., 2013; Geng et al., 2015). Note that PCA is typically used on the pupil dilation response over a somewhat short time window (e.g., 3 s), and to date has not been used over longer time scales. Nonetheless, we discuss it here as one might still wish to employ some of the time series methods discussed below on these short component traces, or to attempt application of PCA to pupil time series of longer duration.

Accounting for time-on-task

Prolonged task performance results in changes in tonic pupil diameter (i.e., time-on-task effects). For example, van den Brink et al. (2016) showed that time-on-task can impose relationships between pupil diameter and task performance that obscure the more nuanced effects of task on pupil dilation. Thus, in addition to revealing interesting phenomena, such as lapses in attention (Kristjansson et al., 2009) or changes in pupil size decrements depending on emotional content of auditory text excerpts (Kaakinen & Simola, 2020), it may be important to control for time-on-task effects in pupil diameter analysis over long time scales.

One way to take into account such effects is to apply a sliding window to the behavioral performance (e.g., accuracy or response times) and pupil data and to extract the average performance as well as pupil diameter and/or velocity (i.e., the first order temporal derivative) within each window. To examine whether the pupillary signal shows time-on-task effects, van den Brink et al. (2016) fitted a straight line to the pupil time series obtained by the moving average and used the slope of the fitted line as an index of linear trend over time. The distribution of slopes across task blocks for each participant was then compared to zero using a t test. Relationships between the time series of pupillary and performance measures can be examined by comparing these measures with multiple regression (see e.g., van den Brink et al., 2016). Including quadratic regressors in statistical models can reveal non-linear relationships between variables, such as the typical Yerkes–Dodson (i.e., the inverted U-shaped) relationship between pupil dilation and task performance, which is compatible with the adaptive gain theory of LC-NE function (Aston-Jones & Cohen, 2005). Such effects may be obscured if the time-on-task effects are not statistically partialled out.

Related to time-on-task, one may also wish to consider the sleepiness of participants. For example, Lüdtke et al. (1998) analyzed slow (0.0\(-\)0.8 Hz) pupillary oscillations as indices of participants’ fatigue. They detected slow waves by applying a fast Fourier transformation for consecutive segments of 82 s over the entire 11-min recording and plotted the power spectrum estimate for each data segment. Slow oscillations (fatigue waves) were more prominent for participants who scored high on self-rated sleepiness. They used a pupillary unrest index (PUI: cumulative changes in pupil diameter based on mean values of consecutive data sequences) to further characterize the differences between alert and sleepy participants. The median power and PUI scores were both higher in the sleepy as compared to the alert participants. Both slow oscillations reflecting fatigue and changes in pupil diameter over time-on-task thus increased when participants were sleepy. Similar observations were made in a seminal paper by Lowenstein et al. (1963). Note that the PUI may also be an interesting dependent measure in its own right, depending on the research question (see e.g., Schumann et al., 2020) and that that these low frequency oscillations have alternatively been referred to as hippus (Bouma & Baghuis, 1971) or fatigue waves (Lowenstein et al., 1963). These < 0.15 Hz oscillations are thought to be mediated mostly by parasympathetic activity, though Schumann et al. (2020) also show a relation with sympathetic measures, namely, the amplitude of pupillary responses, vagal heart rate variability, and spontaneous skin conductance fluctuations.

While one solution to account for time on task would be including regressors in statistical models, other solutions are also available. van den Brink et al. (2016) found that the derivative of pupil diameter (see “Pupillary differencesignals”) was robust to time-on-task effects, suggesting that this measure offers a potential marker of attentional performance that does not require correcting for time on task. Additionally, working with shorter (e.g., 1 s) epochs and z-scoring them accounts for time-on-task effects (see e.g., Madore et al., 2020). Another alternative is to restrict the analyses to pupillary responses from a subset of trials that are not affected by the time on task (see e.g., Aminihajibashi et al., 2020), or to use a high-pass filter to correct pupil drift over time (see “Filtering”). Yet another approach is to think about time-on-task effects as a special case of temporal dependency in the signal; in this case, statistical models that account for autocorrelated errors can be employed (see “Single trialmodels” and van Rij et al. (2019)).

Analysis techniques

Whether analyzing the raw pupil trace, pupil derivative, pupil components, or (de)convolved pupil signal, the eventual goal is to characterize similarities or differences between pupil responses in different conditions, within / between participants, with respect to a given stimulus, or with respect to predicted pupil data (see Fig. 3 for examples). To date, most pupillometry papers have compared mean pupil size or the pupil dilation response across different epoched conditions of interest. This section first outlines those traditional methods based on means, before moving into ways to analyze single-trial pupil signals in both the time and frequency domains, in linear and non-linear ways. While the overall focus and interest of this paper is on signal-to-signal analysis approaches (e.g., comparing the continuous pupil signal to a continuous speech or music signal; see Fig. 3), it is critical to understand epoch-based approaches when considering whether and when to use alternative, continuous, signal-to-signal ones. Additionally, with an appropriate experimental design and planned statistical model, some signal-to-signal measures may be used within epoch-based frameworks.

Table 2 provides a summary of each of the signal-to-signal analysis techniques we discuss below and the type of question they can help to answer. In the subsection for each technique, we aim to provide (1) a conceptual understanding of the mathematical concept, (2) its application in pupillometry, and (3) references to key papers, tutorials, or code toolboxes to learn more about the technique. All code required to recreate every figure in the paper and to step through the analysis techniques with the provided toy data set, is available on GitHub and on Code Ocean.Footnote 1

A brief review of epoch-based approaches

The first and still widely used method for analyzing the pupil diameter (see Laeng & Alnaes, 2019 for a review) either disregards pupil data as time series or approximates it by dividing the pupil response into epochs or bins, typically based on an equal number of samples (e.g., Bianco et al., 2019; Bochynska et al., 2021; Zavagno et al., 2017). However, we wish to note that time is never really “ignored;” rather, the researcher makes the implicit assumption that the window over which they have averaged is the only relevant temporal scale of interest, thereby discarding experiment-wise changes in response patterns.

Many classical and influential studies used a statistical approach which did not take pupillary changes over time into account, although they also often presented graphs of the pupil waveform as an illustration (e.g., Kahneman & Beatty, 1966; Ahern & Beatty, 1979a), relying on the readers’ ability to perform “eyeball statistics” (i.e., viewing that some portions of the waveform belonging to different conditions or groups of participants were visibly above or below one another). In fact, some of the seminal studies by Hess and Polt (1960, 1964), which introduced the method of pupillometry into psychology, did not analyze the pupil with formal, inferential, statistics but simply showed average data in either a table or a bar graph (without any metric of error).

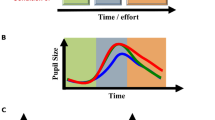

Note that, though most previous studies solely analyzed mean pupil size within an epoched window (sometimes referred to as a task-evoked pupillary response, or TEPR), recent studies have turned to a variety of new epoched measures, such as maximum evoked dilation, latency until maximum dilation, dilation velocity, sustained amplitude, delay until return to baseline, or area under the curve of the dilation response (see e.g., Wang et al., 2014). Visualizations of some of these metrics are provided in Fig. 2, panel A. Panels B and C highlight cases where specific measures differ. An important point of interest to highlight is in Panel C, where taking the mean pupil size in the 3-s epoch would yield the same result for the two pupil traces (solid black and dotted pink), perhaps leading a researcher to conclude that there are no significant differences between the two conditions that correspond to those two traces. However, visualization of the pupil waveform clearly shows some potentially important differences with respect to response onset latency, peak dilation, dilation velocity, etc. We, therefore, urge researchers to visualize their pupil waveforms, rather than blindly taking means within epochs. Such visualization is also important for considering the appropriate epoch duration to choose (Steinhauer et al., 2022).

Examples of possible metrics of interest within a pre-defined epoch (A). Panel B shows two simulated pupil traces, corresponding to two hypothetical conditions of interest (solid black line vs. pink line). These two traces represent an example of when the pupil dilation response differs in mean and peak amplitude, duration of response, area under the curve, etc., but not dilation velocity. Panel C, on the other hand, shows example pupil dilation responses with the same mean amplitude but different response onset latencies, dilation velocities, and sustained dilations. See “A brief review of epoch-based approaches” for more details. All data are simulated from a canonical pupillary response function; see accompanying code tutorial section Fig. 2

While these other metrics can clearly provide alternative insights, compared to means alone, they also present some new challenges. For example, how to define peak pupil dilation. In the black traces in each panel in Fig. 2 the peak pupil amplitude is quite obvious; however, what about the pink trace in Panel C – when exactly does the peak occur? Defining the peak also influences other possible metrics of interest, such as latency to peak or peak to baseline latency, or a metric not pictured here – referred to as peak-to-peak amplitude – which would be relevant if the pupil exhibited a positive peak, followed by a negative one. Thus, defining the peak is an important problem. Looking for the maximum value of pupil size within the epoch is, of course, the easiest way to define the peak amplitude; however such an approach can be susceptible to artifacts. An alternative might be taking a mean within the window of time between two changes in slope (see e.g., Reilly et al., 2019). However, it is also important to keep in mind that averaging waveforms can result in distorted peaks and latencies. Thus, finding peaks on the single trial level then averaging, or constructing a statistical model with single trial peak amplitudes included (see “Single trial models”), may be preferable to finding the peak of an averaged waveform. In general, pupil dilation responses can be conceived of analogously to event-related potentials (ERPs) in EEG analysis. In the ERP literature, the possible pitfalls of making assumptions from averaged waveforms (actually composed of different underlying component waveforms) and analyzing waveform peaks have both been discussed extensively. Please see Luck (2014) for thorough explanations and advice regarding best practices.

If epoched analyses, with statistical inferences, are the sole analysis aims of the reader, many recently developed software tools will work off-the-shelf. For example, CHAP (Hershman et al., 2019), written in MATLAB, provides an easy-to-use and standardized starting point. CHAP can parse input files from a variety of different eye-tracking systems and can deal with basic pre-processing steps (outlying samples, interpolation during blinks, exclusion of outlying trials, and exclusion of outlying participants). In a graphical user interface (GUI), the user can define preferred parameters for exclusion and subsequently define the trial and group level variables that are relevant for analyses. CHAP will provide epoch-based statistics and plots, with respect to the entire epoch or to changes over time during the epoch. For those wishing for programmatic usage of a MATLAB pupil pre-processing toolbox, the recently published PuPl (Kinley & Levy, 2021) offers both GUI and programmatic solutions, and can also be used in the open-source MATLAB alternative, Octave (Eaton, 2002). Further, it provides the possibility to process epoched or continuous data, and to correct pupil size for gaze position. For Python users, PyTrack (Ghose et al., 2020) and Mathôt and Vilotijević (2022) provide similar functionality, while gazeR (Geller et al., 2020) or pupillometryR (Forbes, 2020) will do the job in R.

Single trial models

Rather than calculating epoch averages per condition of interest, and running statistics on these group averages, a more recent trend in pupillometry is to model single trial pupil data. While differences in means between populations or conditions form the foundation of psychological research, single-trial analyses – which take variance within subjects into account – can provide insights impossible to observe on the mean level (for a special issue on this topic, see Pernet et al., 2011). For example, one could analyze fluctuations in task performance over trials as a function of pupil diameter, assess the relationship between stimulus and pupil for each trial (see time series methods below), classify the task or state a participant was in during each trial, etc. Importantly, by reporting both within and between subjects and trials variance, a more full picture of the experimental process under consideration can be obtained.

To date in pupillometry research, a variety of single-trial analysis approaches have been used. In some cases, summary statistics like the ones discussed above (e.g., mean pupil size; peak dilation) have been calculated in some time window and entered into a multi-level model, such as a generalized linear mixed model (GLMM). Such approaches allow for nested, hierarchical data and the possibility to model participants, stimuli, participant-by-condition interactions, etc., as random effects. They also allow one to control for co-variates like baseline pupil size and gaze position. However, such an approach still collapses information across time. For a discussion of the limitations of this single-value approach, see Hershman et al. (2022). Possible alternatives include entering time bin as an additional predictor (and calculating the same pupil metric repeatedly in different time windows), or modeling the parameters of the pupillary time course from the data of the full trial. This latter approach has multiple potential instantiations. For example, some have used growth curve analysis (GCA; see e.g., McLaughlin et al., 2022; Wagner et al., 2019; McGarrigle et al., 2017; Geller et al., 2019). Others have used generalized additive mixed model (GAMM; see van Rij et al., 2019 for detailed review and tutorial). And still others have used Bayesian approaches with repeated t tests across the time courses of two conditions (see Hershman et al., 2022 for an overview). For further discussion of the influence of time window selection on statistical results, please see Peelle et al. (2021).

Typically, such analyses are focused on differences between conditions, measured via pupil diameter, whether that is in a single-value framework, or with respect to dynamic changes over time. Below, we switch focus to approaches that can be referred to as “signal-to-signal”; that is, analytic techniques that define some relationship between the dynamic pupil signal and a dynamic stimulus of interest (e.g., the amplitude envelope of music or speech). Such approaches are different from the measures shown in Fig. 2 in that they define a relationship between the pupil and some other signal(s), rather than being exclusively based on the pupil signal alone. Please note that these approaches do not represent final statistical models. The output from these signal-to-signal techniques might be chosen to be calculated in a time-binned or single-valued way and entered into any number of final statistical models, based on the researchers’ chosen theoretical framework (e.g., frequentist, Bayesian, linear, non-linear, etc.).

Correlation

Rather than looking at central tendency measures in epoched time windows, there are instances in which one might want to analyze the dynamics of the pupil signal over time. For example, one may wish to compare two or more pupil traces with one another, with a predictive model, or with an attended stimulus (see Fig. 3 for examples), to answer questions such as “Does pupil size change with changes in stimulus feature X?” or “Do participants’ pupil traces synchronize with the stimulus?” The most appropriate analytic technique to answer such questions will depend on the characteristics of the data, as well as the specific mathematical properties underlying the question one wishes to address (see Table 2). It is our goal to provide an overview of the types of signal-to-signal analyses that have been applied in pupillometry and the contexts in which one might wish to use them, so that readers can come to their own informed decisions about what technique to apply to their data. Here, we start with the simple case of computing a correlation, before moving on to more complex methods.

Examples of time series one might wish to compare. A Recorded pupil data (black) vs. predicted pupil data (green). B Pupil traces of many different participants perceiving the same stimulus. C Pupil traces of one participant perceiving the same stimulus. Simulated data; see accompanying code tutorial section Fig. 3

Pearson’s correlation coefficient, which ranges from -1 to 1, is used to index the strength of linear covariance between two times series. The coefficient is calculated as the covariance of the two signals, divided by the product of their standard deviations. The Pearson correlation coefficient is scale-invariant (i.e., X or Y can be transformed by some constant and the correlation coefficient will not change) and symmetric (i.e., \(corr(X, Y) = corr(Y,X)\)). While the sign of the correlation coefficient (positive or negative) can be used to understand the relationship of the effects, the square of the correlation coefficient (i.e., the coefficient of determination) is often used as a measure of the proportion of variance one variable can explain in another, ranging between 0 and 1. For example, say one is interested in the correlation between the pupil time series and the amplitude envelope of some audio signal to which a participant was listening. We get a correlation r of .6, which we can interpret to mean that when the amplitude envelope of the sound increases so does the pupil size (and vice versa). We can then square this coefficient and say that the amplitude envelope explains \(36\%\) of the variance in our pupil time series. Note that, when using Pearson’s product-moment correlation, the two time series to be correlated should be normally distributed and the analysis will only capture a linear relationship between X and Y (i.e., it cannot be used to analyze nonlinear relations which might exist in the data).

Depending on the properties of the signals (e.g., what stimulus was presented while the pupil trace was recorded, duration of the recording, etc.), it may be that the assumption of stationarity (constant mean and variance over time) is violated. In such a case, one could instead calculate the correlation coefficient over moving time windows (in which the signal could be assumed to be stationary). Such an approach is referred to as a ‘moving,’ ‘rolling,’ or ‘sliding window’ correlation, and yields a time series of correlation coefficients, with which one can then do further analyses.

Time-domain techniques for analyzing the similarity/difference between two or more signals. Panels A and B correspond to two possible signals of interest. Panel C shows the moving window correlation between both signals at window sizes of 500 ms, 1, 2, and 3 s. Panel D plots the cross-correlation function between the two signals, with their optimal lag highlighted by the cyan dot, while panel E visualizes the two signals against one another at their ideal lag (with zero-padding on either side). In this case, the highest correlation (r = -.25) occurs when the pupil lags the amplitude envelope by 3.55 s. Instead of using a constant lag, one can allow for variable lag between the two signals by using dynamic time warping (Panel F). Here, the distance between the two signals is 2020. Note that the x axis of F is now extended to 60 s. Please see the in-text sections corresponding to each method for more details. Analysis code to reproduce all examples in this figure is provided in the accompanying code tutorial, section Fig. 4

Figure 4, shows two example signals to be correlated (panels A and B). In the current case, the example signals are from the toy data set associated with this paper, which includes the pupil traces of multiple participants listening to the same except of Duke Ellington’s “Take the A Train.” Panel A shows the upper amplitude envelope of this music, while panel B shows the average pupil trace across participants. The Pearson correlation coefficient between both example signals in their entirety is r = -.207, p < .001. Panel C shows the moving window correlation between the two signals at window sizes of 500 ms, 1, 2, and 3 s. As can easily be visualized, the choice of window size will affect results; the four traces are not always in agreement, with respect to the correlation coefficient at each moment in time. As is also obvious in the plot: the larger the window size, the more smoothed out the variation in correlation coefficient will be. The choice of window size should be made according to what the experimenter deems to be the most relevant temporal scale, given the experiment parameters. Note that, when using a windowed moving correlation approach, if a p value for each moment in time is needed, then it is necessary to correct said p values for multiple comparisons. Such correction could be accomplished via Monte Carlo simulations or data permutations to find a critical p value (though see “Appropriate controls” below about permutation considerations).

When the assumption of normality is violated, Spearman’s rank correlation coefficient can be used to assess the monotonic, but not necessarily linear, relationship between two signals. To calculate Spearman’s correlation coefficient, the two raw signals are first converted to ranks, and then the Pearson correlation of the rank sequences is computed. Due to the conversion of samples to ranks, Spearman’s correlation reduces the effect of outlying data points (e.g., the data point with the highest value will have the highest rank, regardless of the magnitude of the raw value). Spearman’s correlation has also been shown to be more robust for distributions with heavy tails; see de Winter et al. (2016) for simulations and discussion, or Schober et al. (2018) for an accessible tutorial with visualizations. In the toy example in Fig. 4, the Spearman correlation coefficient between both example signals in their entirety is r = -.229, p < .001. The moving window analyses could also be conducted using Spearman, instead of Pearson, correlation. To re-run these analyses, see accompanying code tutorial section Fig. 4.

Most programming languages have easy-to-install statistics packages which include correlation and cross-correlation (see “Cross-correlation”) functions, including the possibility to select the “type” of correlation to use (e.g., Pearson, Spearman). For example, in Python, one could find such functions in the NumPy, SciPy, or Pandas libraries, while, in R, the stats or tseries packages would be good starting points. The same code recommendations apply for cross-correlation functions (discussed below in “Cross-correlation”).

Cross-correlation

Like for correlation, the cross-correlation between two signals can be used to understand the degree to which they change together, however it additionally reveals the correlation at varying temporal lags between the two signals. For example, you might hypothesize a relationship between the amplitude envelope of your stimulus and your pupil data, but you likely do not think the relationship is instantaneous, and may be interested in knowing at which temporal lag your pupil data are most highly correlated with your stimulus.