Abstract

What Works Clearinghouse (WWC, 2022) recommends a design-comparable effect size (D-CES; i.e., gAB) to gauge an intervention in single-case experimental design (SCED) studies, or to synthesize findings in meta-analysis. So far, no research has examined gAB’s performance under non-normal distributions. This study expanded Pustejovsky et al. (2014) to investigate the impact of data distributions, number of cases (m), number of measurements (N), within-case reliability or intra-class correlation (ρ), ratio of variance components (λ), and autocorrelation (ϕ) on gAB in multiple-baseline (MB) design. The performance of gAB was assessed by relative bias (RB), relative bias of variance (RBV), MSE, and coverage rate of 95% CIs (CR). Findings revealed that gAB was unbiased even under non-normal distributions. gAB’s variance was generally overestimated, and its 95% CI was over-covered, especially when distributions were normal or nearly normal combined with small m and N. Large imprecision of gAB occurred when m was small and ρ was large. According to the ANOVA results, data distributions contributed to approximately 49% of variance in RB and 25% of variance in both RBV and CR. m and ρ each contributed to 34% of variance in MSE. We recommend gAB for MB studies and meta-analysis with N ≥ 16 and when either (1) data distributions are normal or nearly normal, m = 6, and ρ = 0.6 or 0.8, or (2) data distributions are mildly or moderately non-normal, m ≥ 4, and ρ = 0.2, 0.4, or 0.6. The paper concludes with a discussion of gAB’s applicability and design-comparability, and sound reporting practices of ES indices.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

Single-case experimental designs (SCEDs) are research designs that can be used to determine whether there exists a causal or functional relationship between the introduction of an intervention and changes in outcome behavior(s). SCED studies have been used to evaluate the effectiveness of interventions in psychology, education, speech pathology, medicine, sports and athletic performance, to name a few (Barker et al., 2011; Byiers et al., 2012; Franklin et al., 1996; Horner et al., 2005; Kunze et al., 2021; Morgan & Morgan, 2009; Vlaeyen et al., 2020). SCED typically employs a small number of cases who serve as their own controls. Among the variety of SCEDs, the multiple baseline (MB) design was by far the most popular design accounting for nearly 50% of published SCED studies (Hammond & Gast, 2010; Horner & Odom, 2014; Pustejovsky et al., 2019; Shadish & Sullivan, 2011; Smith, 2012; Tanious & Onghena, 2021). An MB design consists of one A phase and one B phase across multiple cases, multiple behaviors of one case, or multiple settings for the same behavior of a case. During the A phase, a case (or a behavior) is observed to be stabilized before an intervention is introduced to that case (or that behavior). An intervention in an MB design is successively administered to all cases (behaviors or settings) until all cases (all behaviors, or a behavior in all settings) are intervened to allow for an assessment of its effectiveness.

Advances in SCED methodology

Traditionally, systematic visual analysis of SCED data has been used to determine whether a behavioral change is due to the introduction of an intervention, but not chance fluctuations (Horner et al., 2005; Kazdin, 2011; Wolfe & McCammon, 2022). In recent years, advanced approaches have been applied to quantify intervention effects in SCED studies (Chen et al., 2019; Kazdin, 2019; Tanious & Manolov, 2022; WWC, 2022). Many scholarly journals published special issues to devote exclusively to these advanced approaches, such as Journal of School Psychology (2014, Volume 52, Issue 2), Remedial and Special Education (2017, Volume 38, Issue 6), Developmental Neurorehabilitation (2018, Volume 21, Issue 4), and Perspectives on Behavior Science (2022, Volume 45, Issue 1). The advanced approaches include (1) quantifying an intervention effect with standardized/unstandardized indices (e.g., Hedges et al., 2012, 2013; Moeyaert et al., 2013; Pustejovsky et al., 2014; Ugille et al., 2012, 2014) or with non-overlapping ESs (e.g., Michiels & Onghena, 2019; Parker & Vannest, 2009), (2) employing different methods to estimate an intervention effect (e.g., the method of moments by Hedges et al., 2012, 2013; the restricted maximum likelihood method by Pustejovsky et al., 2014; the Bayesian method by Natesan, 2019 or Natesan & Hedges, 2017), and (3) inferring an intervention effect based on a statistical model (e.g., hierarchical linear modeling by Pustejovsky et al., 2014) or a design (e.g., randomization tests by Michiels & Onghena, 2019, and Onghena, 2020). Among the advanced approaches, design-comparable ESs (D-CESs) were proposed as standardized indices to synthesize intervention effects across SCED and group studies, or over different outcome measures, based on a statistical model (Hedges et al., 2012, 2013; Pustejovsky et al., 2014; Shadish et al., 2014; Zelinsky & Shadish, 2018).

Version 5.0 of the What Works Clearinghouse Procedures and Standards Handbook (WWC, 2022) specifically recommends reporting D-CES indices, along with visual analysis, when assessing an intervention effect in SCED studies. Yet to the best of our knowledge, no published research has investigated D-CES’s statistical assumptions in SCED contexts. If statistical assumptions, such as normality, are not met or not robust, inferences derived from D-CES lack statistical validity. Furthermore, the small sample sizes and limited number of measurements used in most SCED studies render the normality assumption unlikely to be robust, if it is violated.

Small sample sizes and limited number of measurements are also a central concern when an effective intervention is to be generalized to another sample, setting, location, behavior, or measurement (Horner et al., 2005). That is, how does one know that an intervention is generalizable beyond the effect already documented in a SCED study? One way to address, or even enhance, the generalizability of an effect is to systematically replicate an intervention in different contexts using different participants, behaviors, and parallel measurements (Horner et al., 2005; Kazdin, 2011). Replication results are subsequently synthesized using meta-analysis methods (Becraft et al., 2020; Beretvas & Chung, 2008; Moeyaert et al., 2020; Onghena et al., 2018). To this end, methodologists have devised various approaches to perform SCED meta-analysis (Jamshidi et al., 2022; Vlaeyen et al., 2020). According to Becraft et al. (2020) and Moeyaert et al. (2021), there has been a dramatic increase from 1987 to 2019 in the number of scholarly publications on SCED intervention studies and their meta-analyses.

Definition of g AB for SCED studies

One D-CESFootnote 1, namely gAB, was proposed by Pustejovsky et al. (2014) to quantify intervention effects within and across primary SCED studies (Hedges et al., 2012, 2013; Pustejovsky et al., 2014; Shadish et al., 2014; WWC, 2022; Zelinsky & Shadish, 2018). A cursory search of the literature since 2014 has found gAB reported in numerous primary studies or meta-analysis (Anaby et al., 2020; Grasley-Boy et al., 2021; Lee et al., 2022; Peltier et al., 2021; Peltier et al., 2020a, b; Rincón et al., 2021; Rivera Pérez et al., 2022; Romano & Windsor, 2020; Romano et al., 2021; Ruiz et al., 2018; Saul & Norbury, 2021; Teh et al., 2021; Thurmann-Moe et al., 2021). gAB is the D-CES specifically recommended by the What Works Clearinghouse Procedures and Standards Handbook, Version 5.0 (WWC, 2022) for SCED studies.

g AB is a sample estimator of the population standardized mean difference (δAB) between an A phase and a B phase, similar to Cohen’s d or Hedges’ g used in group studies (Pustejovsky et al., 2014; WWC, 2022). The δAB is defined according to Eq. 1:

where μA is the population mean of Phase A measurements, μB is the population mean of Phase B measurements, σ2 is the variance of measurements within cases, and τ2 is the variance of measurements across cases. Thus, (σ2 + τ2) is the total variance of measurements within and across all cases.

Among the three MB design variations mentioned earlier, gAB is suitable only for MB designs across three or more cases of the same behavior. It is a product of a bias correction factor [J(ν)] and the sample estimate of δAB (\({\hat{\updelta}}_{\textrm{AB}}\)), as in Eq. 2:

Both J(ν) and \({\hat{\updelta}}_{\textrm{AB}}\) are estimated by the restricted maximum likelihood (REML) method (Pustejovsky et al., 2014) which we explain in the “Method” section. The performance of gAB under normal and non-normal distributions is the focus of the present simulation study.

Five MB models formulated by Pustejovsky et al. (2014)

Pustejovsky et al. (2014) formulated five models for MB data. They are sequentially named MB1 to MB5 in this paper. All five models permit cases to vary in Phase A levels. They differ in how the intervention effect and the trends in A or B phase are modeled across cases. The five MB models are hierarchical linear models in which Level-1 parameters model the individual data and Level-2 parameters model how the Level-1 parameters vary across cases. Being the simplest and most restrictive model, MB1 assumes a fixed intervention effect for all cases with no trend in either A or B phase. MB1 is recommended by the What Works Clearinghouse Procedures and Standards Handbook, Version 5.0 (WWC, 2022) as a “starting point” (p. 182) for assessing an intervention effect.

MB2 to MB5 are more flexible and extensible than MB1, due to additional parameters and fewer restrictions (Pustejovsky et al., 2014). MB2 assumes a varying immediate effect due to intervention across cases, with no trend in either A or B phase. MB3 assumes a fixed intervention effect with a fixed linear trend in either A, B, or both phases. MB4 assumes a fixed intervention effect with a varying linear trend in the A phase and a fixed linear trend in the B phase. Being the most complex model, MB5 assumes a fixed intervention effect with a varying linear trend in both A and B phases. It is worth noting that MB2 is the only model among the five proposed that allows the immediate effect of intervention to vary across cases. Pustejovsky et al. (2014) conducted three simulation studies under MB1, MB2, and MB4 to provide empirical evidence to support the reporting of gAB. Results from the three simulation studies are summarized next.

Three simulation studies of g AB under MB1, MB2, and MB4

The first simulation study (Study 1) was conducted under MB1, Study 2 under MB2, and Study 3 under MB4. In all three studies, data were simulated from normal distributions. For Studies 1 and 2, four levels of number of cases (m) were used: 3, 4, 5, and 6. For Study 3, two additional levels were added to m: 3, 4, 5, 6, 9, and 12. The number of measurements (N) was either 8 or 16, the within-case reliability (ρ = the ratio of between-case variance to the total variance within and between cases) ranged from 0 to 0.8, and the first-order autocorrelation (ϕ) ranged from −0.7 to 0.7 in Studies 1 to 3. For Studies 2 and 3, the ratio of variance components (λ) was either 0.1 or 0.5. λ was defined in Study 2 as the variance of all cases’ level shifts between A and B phases as a fraction of the variance of all cases’ Phase A levels. In Study 3, λ was defined as the variance of all cases’ baseline slopes as a fraction of the variance of all cases’ Phase A levels. Four criteria were used in all three studies to assess the performance of gAB: relative bias, relative bias of variance estimators, MSE, and coverage rate of the 95% CIs. The 95% CI was constructed using two methods: the symmetric and the noncentral t.

Results from Study 1 of Pustejovsky et al. (2014) showed that relative bias of gAB under MB1 was small. At the smallest m = 3 and N = 8, the relative bias was no more than 4.3%, yet the relative bias of gAB’s variance estimator was 16%. As both m and N increased, gAB’s variance estimate was very close to the true variance. Between the two CI methods, the average coverage rate of the symmetric method was closer to the nominal level of 95% than the noncentral t method.

Results from Study 2 showed that gAB’s average relative bias was small under MB2. Relative bias was generally greater when N = 8 than when N = 16. At the smallest m = 3 and N = 8, the relative bias was no more than 7.3%. For m = 4, the relative bias was always less than 4.9%. The relative bias decreased to no more than 2.9% when m ≥ 5. The variance of gAB was overestimated. The relative bias in gAB’s variance estimator was as large as 43% when m = 3 and N = 16. Even when m = 6 (the largest under MB2) and N =16, the relative bias was still 14%. The MSEs under MB2 ranged from 0.092 when m = 6 and N = 16, to 0.290 when m = 3 and N = 8. The MSEs generally increased as ρ, λ, and ϕ increased. Between the two CI methods, the symmetric method maintained an average coverage rate closer to 95% than the noncentral t method. Based on these results, Pustejovsky et al. (2014) recommended gAB for meta-analysis with m ≥ 4 and the symmetric method for constructing CIs of gAB under MB2.

Results from Study 3 revealed the same pattern under MB4 as under MB2, namely small relative bias. As with results of MB2, gAB as a point estimator was suitable for studies with m ≥ 4. MSE obtained under MB4 was large, compared with those obtained under MB1, especially when m was small. Unlike results obtained under MB1 and MB2, gAB’s variance was underestimated, except when m = 3 and N = 8. The variance’s underestimation was more pronounced when N = 16 than when N = 8. The average MSE of gAB under MB4 ranged from 0.066 when m = 12 and N = 16, to 0.596 when m = 3 and N = 8. For a given m and N, MSE derived under MB4 were larger than those under MB1 or MB2, especially when m was small. MSEs generally increased as ρ, λ, and ϕ increased. The 95% CI based on the symmetric method approached the nominal level when m was large (i.e., ≥ 9). The CI based on the noncentral t method tended to substantially undercover the population δAB when m = 3, 4, 5, 6, or 9.

Based on Studies 1 to 3, Pustejovsky et al. (2014) concluded that the relative bias of gAB was reasonably small, even with very few cases. Yet large sample sizes were needed in order to yield precise point estimates, reasonably accurate SE estimates and CIs under a complex model, namely MB4. Pustejovsky et al. (2014) further cautioned not to rely on model-based SE estimates in meta-analysis, because inaccurate SE estimates lead to inaccurate weights for primary studies and inaccurate estimates of between-study heterogeneity for meta-analysis.

The normality assumption of g AB and the REML method

As previously mentioned, data in Studies 1 to 3 of Pustejovsky et al. (2014) were simulated only from normal distributions. Indeed, gAB assumes that data within and across cases are normally distributed. Yet non-normal data are quite common in SCED studies (e.g., Au et al., 2017; Brosnan et al., 2018; Ferron et al., 2014; Joo, 2017; Stewart & Hall, 2017). Furthermore, due to asymptotic normalityFootnote 2 of the REML method, voluminous data are needed in order to yield an acceptable gAB for δAB. The ML method, of which the REML is a special case, is known to perform poorly when data are limited, even under normal conditions (Braunstein, 1992). Yet small sample sizes (or cases) and limited numbers of measurements are the norm rather than an exception in SCED studies. According to Shadish and Sullivan (2011), 73.5% of 809 SCED studies employed one to 13 participants with an average of 3.64 cases per study. Tanious and Onghena (2021) reported that the median sample size used in 210 MB studies was 4 with an interquartile range of 4. As for the number of measurements, Shadish and Sullivan (2011) reported that 90.6% of 809 SCED studies used 49 or fewer measurements with a median of 20 measurements. Pustejovsky et al.’s (2019) review of 303 SCED studies found a median of 7 measurements in initial baseline phases, with an interquartile range of 7.

Pustejovsky et al. (2014) did not investigate the performance of gAB under non-normal conditions. Furthermore, sample sizes and the number of measurements used in their simulation studies were small for the REML method. It remains unknown whether gAB performs satisfactorily under non-normal conditions with small samples and limited numbers of measurements (Maas & Hox, 2004; Man et al., 2022; Raudenbush & Bryk, 2002). To the best of our knowledge, no published study has systematically investigated the singular impact of non-normality, or the joint impact of non-normality with other data features (e.g., sample size, autocorrelation), on the performance of gAB in primary SCED studies and their meta-analyses.

Aims of the present simulation

The present study aimed to fill the voids in the literature by investigating how distributions of data singularly and jointly impacted the performance of gAB under MB2. We focused on MB2 because MB2 is more flexible, but less researched, than MB1. MB2 is also the only model among the five proposed by Pustejovsky et al. (2014) that allows the immediate effect of an intervention to vary across cases.

The singular and joint impacts of data distribution on gAB were investigated by simulating data from normal and non-normal distributions, and by manipulating five data features (number of cases, number of measurements, autocorrelation, within-case reliability, and ratio of variance components). The five data features were also manipulated in Pustejovsky et al. (2014). The performance of gAB was evaluated by the same four criteria as Pustejovsky et al. (2014): relative bias, relative bias of variance, MSE, and coverage rate. These four criteria have been routinely used to assess a statistic (e.g., gAB) in primary studies (e.g., Algina et al., 2005; Hoogland & Boomsma, 1998), or for meta-analysis (e.g., American Psychological Association, 2020; Hoogland & Boomsma, 1998; Pustejovsky et al., 2014). Based on the evaluation of the four criteria, we identified conditions in which gAB performed acceptably for primary MB studies and meta-analysis.

In sum, the present study aimed to answer two research questions under MB2:

-

RQ1: What is the impact of data distribution, number of cases, number of measurements, within-case reliability, ratio of variance component, and autocorrelation on the performance of gAB as measured by relative bias, relative bias of variance, MSE, and coverage rate?

-

RQ2: What are the conditions in which gAB performed acceptably for primary MB studies and meta-analysis?

Findings from the present study should provide empirical evidence to extend the recommendation made by the What Works Clearinghouse Procedures and Standards Handbook, Version 5.0 (WWC, 2022) to MB2. They should also inform practitioners and researchers about the suitability of gAB for MB studies and their meta-analysis. To this end, we provide general recommendations on conditions under which it is appropriate to use gAB to assess intervention effects. This paper concludes with a discussion of gAB’s applicability in SCED contexts, its design-comparability across SCED and group studies, and sound reporting practices of ES indices including gAB.

Method

In this section, we present MB2 and its assumptions first, followed by the definition of the population standardized mean difference (δAB) under MB2. Next, we describe the simulation design, justifications for the manipulated conditions, and an outline of seven steps for simulating and analyzing simulated data. Details of each step are provided following the outline.

MB2

As previously stated, MB2 assumes that cases vary in the average score of the A phase and also in the immediate intervention effect between A and B phases. Pustejovsky et al. (2014) referred to the average score of the A phase as Phase A level, and the immediate intervention effect as a level shift between A and B phases. MB2 also assumes that there is no linear trend in either A or B phase (Pustejovsky et al., 2014). Thus, for the jth measurement of the ith case, the score Yij is modeled by a within-case model according to Eq. 3:

where β0i = Phase A level for Case i; β1i = level shift for Case i = the immediate change in Case i’s measurement due to intervention; Dij = a dummy variable that equals 0 (for Phase A measurements) or 1 (for Phase B measurements); εij = Level-1 error; i = 1, 2, . . ., m; j = 1, 2, . . ., N; m = the number of cases; and N = the total number of measurements in A and B phases combinedFootnote 3.

Because MB2 assumes a varying Phase A level and a varying level shift between A and B phases across cases, β0i and β1i are further modeled by a between-case model according to Eqs. 4 and 5:

where γ00 = the average Phase A level, γ10 = the average level shift between A and B phases, and η0i and η1i are Level-2 errors.

Substituting Eq. 4 for β0i and Eq. 5 for β1i into Eq. 3, we obtain Eq. 6 of fixed and random effects for the distribution of Yij—the jth measurement of the ith case—under MB2:

Statistical and design assumptions for MB2 are stated in (a) to (f) below, according to Pustejovsky et al. (2014). The present study investigated normality assumptions stated in (a) and (d).

-

(a)

Within cases, εijs are normally distributed with a mean of 0 and a variance of σ2.

-

(b)

Within cases, εijs are correlated with a first-order autocorrelation ϕ, or Cov (εij, εik) = ϕ|k−j|σ2.

-

(c)

Across cases, εijs are homoscedastic and independently distributed, namely, Var(εij) = Var(εhk) = σ2 and Cov (εij, εhk) = 0 for all i ≠ h.

-

(d)

(η0i, η1i) are multivariate normally distributed with mean (0, 0) and a covariance matrix T2×2 = \(\left[\begin{array}{cc}{\uptau}_0^2& {\uptau}_{10}\\ {}{\uptau}_{10}& {\uptau}_1^2\end{array}\right]\), where \({\uptau}_0^2\) is the variance of all cases’ Phase A levels, \({\uptau}_1^2\) is the variance of all cases’ level shifts between A and B phases, and τ10 is the covariance between Phase A levels and level shiftsFootnote 4.

-

(e)

Level-1 errors (εijs) are independent of Level-2 errors (η0i and η1i).

-

(f)

Measurements are equally spaced over time.

Definition of δAB

Under MB2, the population mean difference = (γ00 + γ10) – γ00 = γ10, and the total variance within and across cases = σ2 + \({\uptau}_0^2\). Hence, δAB is defined by Eq. 7:

Equation 7 is identical to Eq. 1, except for the notation differences (Pustejovsky et al., 2014). Guided by Pustejovsky et al. (2014), we setFootnote 5 γ00 = 0, γ10 = 1, and \({\upsigma}^2+{\uptau}_0^2\) = 1. Therefore, δAB = 1 for all simulated conditions in this study and also in Studies 1 to 3 of Pustejovsky et al. (2014).

Simulation design

The present study manipulated six factors according to Table 1. The first factor (Dist or distribution of data) was unique to the present study. The next four factors, namely, m, N, ρ, and λ were manipulated identicallyFootnote 6 as in Study 2 of Pustejovsky et al. (2014). The sixth factor (ϕ) was manipulated slightly differently from Study 2 of Pustejovsky et al. (2014). Justifications for manipulated conditions are given in the next section. A total of 1792 conditions (= 4 × 4 × 2 × 4 × 2 × 7) were manipulated. Table 2 presents the start points for the intervention across cases. The start points were identical to those used in Study 2 of Pustejovsky et al. (2014).

Justifications for manipulated conditions

The distribution of data was manipulated through the joint manipulation of Level-1 and Level-2 error distributions in Eq. 6. Four distributions—one normal and three non-normal—were simulated as the distributions of sums of Level-1 and Level-2 errors. Because of the large number of conditions (= 1792) investigated in this study, we did not simulate Level-1 and Level-2 errors separately from two different distributions (e.g., normal for Level-1 errors and non-normal for Level-2 errors). Each distribution was specified through the specification of its skewness and kurtosis (Joo & Ferron, 2019; Man et al., 2022; Owens & Farmer, 2013). For the normal distribution, we specified skewness = kurtosis = 0. For the nearly normal distribution, skewness = 0 and kurtosis = 0.35 were specified. For the mildly non-normal distribution, skewness = 1 and kurtosis = 0.35 were specified. For the moderately non-normal distribution, we specified skewness = 1 and kurtosis = 3. The four marginal distributions are shown in File 1Footnote 7 at https://osf.io/hsvwu/.

We decided on these four distributions on the basis of empirical skewness and kurtosis of SCED data (Joo, 2017; Solomon, 2014) and conditions manipulated in Owens and Farmer (2013). Joo (2017) reported empirical skewness to range from −0.71 to 1.91 and empirical kurtosis from −1.07 to 3.01, based on 20 MB data sets published in the Journal of Applied Behavior Analysis. Solomon (2014) reported empirical skewness to range from 0.46 to 2.89 and empirical kurtosis from 0.49 to 1.57, based on 104 SCED studies of school-based interventions. Owens and Farmer (2013) investigated Level-2 normality assumption for MB data using multilevel modeling. They manipulated six Level-2 unimodal distributions ranging from normal (skewness = 0, kurtosis = 0), (0, −1), (0, 2), (0, 3.75), (1, 2), to most non-normal (1, 3.75). Of the six, four were symmetric and two were positively skewed. Of the four unimodal distributions manipulated in this study, two were symmetric and two were positively skewed. The three non-normal distributions of the present study were specified with skewness and kurtosis well within their respective empirical ranges reported in Joo (2017) and Solomon (2014). We confirmed that skewness and kurtosis of our simulated data matched closely with those specified in Table 1 (see File 2 at https://osf.io/hsvwu/).

Regarding m and N, Pustejovsky et al. (2014, supplemental materialsFootnote 8) justified their conditions by spreading the intervention start points as evenly as possible over measurements, while keeping at least three measurements in each phase. The within-case reliability (\(\uprho ={\uptau}_0^2/\left({\upsigma}^2+{\uptau}_0^2\right)\), also called the intra-class correlation or ICC) was varied from 0.2 to 0.8 in increments of 0.2. A ρ of 0.2 represented a low between-case variance in levels (\({\uptau}_0^2\)) relative to the within-case variance (σ2), hence a low within-case reliability. A ρ of 0.8 represented a high between-case variance in levels relative to the within-case variance, hence a high within-case reliability. The ratio of variance components (λ = \({\uptau}_1^2\)/\({\uptau}_0^2\)) was set to either 0.1 or 0.5. According to Pustejovsky et al. (2014, supplementary materials), a λ of 0.1 represented a moderate level of the between-case variation in level shifts, relative to the between-case variation in Phase A levels. A λ of 0.5 represented a high level of the between-case variation in level shifts, relative to the between-case variation in Phase A levels.

Because of the repeated observation of the same behavior in SCED studies, each case’s measurements are correlated. Such a correlation is quantified by the first-order autocorrelation (ϕ). Pustejovsky et al. (2014) manipulated the autocorrelation under MB2 to range from −0.7 to 0.7, in increments of 0.2. To ensure that our manipulation of autocorrelation was plausible for MB2 under non-normal distributions, we tested a range of autocorrelations based on empirical and simulation studies (Joo, 2017; Joo & Ferron, 2019; Solomon, 2014). We eventually decided on seven levels of ϕ: −0.4, −0.3, −0.1, 0, 0.1, 0.3, 0.4 for the present study (see File 3).

For each of the 1792 conditions, 20,000 replications were generated. We modified the R scdhlm package (Pustejovsky et al., 2021) for this simulation study. The modified R scdhlm package can be found in File 4 at https://osf.io/hsvwu/. File 4.1 is the superordinate R program to establish simulation conditions, execute File 4, and check convergence of each simulation. Under normal distributions, the modified R scdhlm package produced results comparable to those obtained from Study 2 of Pustejovsky et al. (2014) (see Appendix A).

The simulation and analysis procedures are outlined in seven steps. Details on each step are presented following the outline.

Outline of simulation and analysis procedures

-

Step 1: Generate 20,000 random seeds which were used to create 20,000 replications for the 1792 conditions.

-

Step 2: Given a random seed from Step 1, simulate a replication under a specific condition of MB2.

-

Step 3: Use the REML method to compute gAB based on data generated in Step 2.

-

Step 4: Repeat Steps 2 and 3 until 20,000 replications and 20,000 gABs were obtained for each of the 1792 conditions.

-

Step 5: Compute four criteria as indicators of the performance of gAB.

-

Step 6: Analyze the impact of the six factors on the four criteria.

-

Step 7: Identify conditions in which gAB performed acceptably for MB studies and meta-analysis.

Step 1: Generate 20,000 random seeds for the 1792 conditions

Before the simulation began, we assessed the adequacy of R = 20,000 replications used in Pustejovsky et al. (2014) by examining its Monte Carlo SE. A Monte Carlo SE provides an estimate of the empirical SE resulted from R replications. The Monte Carlo SE for the expected coverage rate of 95% CIs based on 20,000 replications was computed according to Eq. 8:

where 0.95 = the expected coverage rate of 95% CIs. Such a Monte Carlo SE was deemed acceptable by Morris et al. (2019), who suggested keeping the Monte Carlo SE below 0.005. We therefore considered 20,000 replications adequate for the present study. And 20,000 random seeds were generated and used in each condition.

Step 2: Simulate a replication under a specific condition

Given Eq. 6 as the distribution of Yij and start points in Table 2, we generated data from one of the four distributions specified in Table 1. For N scores of a case, Eq. 6 can be expressed in matrix notations as Eq. 9:

The fixed effects in Eq. 6 are expressed as the product of the design matrix (DN×2) and a fixed-effect vector (γ2×1) in Eq. 9. The random effects in Eq. 6 are expressed as an error vector (eN×1) in Eq. 9. The eN×1 vector consists of Level-2 errors [η0i + (η1i × Dij) in Eq. 6] and Level-1 errors (εij in Eqs. 3 and 6).

As previously stated, sums of Level-1 and Level-2 errors followed a normal or non-normal distribution that was specified by its skewness and kurtosis. To generate random errors of eN×1 from a multivariate normal distribution, we specified skewness = 0, kurtosis = 0, and a variance-covariance matrix of errors (ΣN×N) in the mvrnonnorm function of the semTools package (Jorgensen et al., 2021). The ΣN×N is written in matrix notation as Eq. 10:

where DTDT = the variance-covariance matrix of Level-2 errors, (1 – ρ)∙AR(1) = the variance-covariance matrix of Level-1 errors, D = design matrix from Eq. 9, \({\textbf{T}}_{2\times 2}=\left[\begin{array}{cc}\rho & 0\\ {}0& \rho \times \lambda \end{array}\right]=\left[\begin{array}{cc}{\uptau}_0^2& 0\\ {}0& {\uptau}_1^2\end{array}\right]\) (see Footnote 4), and AR(1) is the matrix of first-order autocorrelations with 1s along the diagonal and ϕ|k−j| off-diagonal. Once skewness, kurtosis, and ΣN×N were specified, the mvrnonnorm function produced multivariate normal errors using the Vale and Maurelli method (Vale & Maurelli, 1983). Appendix B describes details in generating a replication of 3 (= m) cases from a normal distribution (Dist = normal) with 8 (= N) measurements, within-case reliability (ρ) = 0.2, ratio of variance components (λ) = 0.1, and first-order autocorrelation (ϕ) = −0.4.

Errors were similarly generated from the other three distributions by specifying their corresponding skewness and kurtosis, plus a ΣN×N in the mvrnonnorm function (see File 4 at https://osf.io/hsvwu/). After data were generated for m cases, a replication was formed and a gAB was computed.

Step 3: Use the REML method to compute g AB

To explain the details of Step 3, we reformulate δAB in matrix notation. Next, we describe the estimation of δAB by gAB and the estimation of the variance of gAB.

Reformulating δAB in matrix notation

Using Pustejovsky et al.’s (2014) matrix notations, we define the vector of fixed effects of MB2 as γ2×1 = (γ00, γ10)T and the vector of variance components as ω5×1 = (\({\upsigma}^2,\upphi, {\uptau}_0^2,{\uptau}_1^2,{\uptau}_{10}\))T. The ω5×1 vector includes the within-case variance σ2, the first-order autocorrelation ϕ, Level-2 variances \({\uptau}_0^2\) and \({\uptau}_1^2\), and the covariance τ10 (see Footnote 4). With two constant vectors defined as p2×1 = (0, 1)T and r5×1 = (1, 0, 1, 0, 0)T, the δAB of Eq. 7 is reformulated as Eq. 11:

Estimating δAB by g AB

g AB is the product of the bias correction factor, J(ν), multiplied with the REML estimate of δAB (i.e., \({\hat{\updelta}}_{\textrm{AB}}\)), as in Eq. 12:

where J(ν) = 1− 3/(4ν −1) and ν is determined from Eq. 13.

where \(\textbf{C}\left(\hat{\boldsymbol{\upomega}}\right)\) is the estimated covariance matrix of \(\hat{\boldsymbol{\upomega}}\), and \(\hat{\boldsymbol{\upomega}}\) is the REML estimate of ω. When m and N both approach infinity, \(\hat{\boldsymbol{\upomega}}\) approaches ω, \(\textbf{C}\left(\hat{\boldsymbol{\upomega}}\right)\) approaches a null matrix, ν approaches infinity, and J(ν) approaches 1; hence, the need for bias correction diminishes.

By plugging γ’s REML estimate (\(\hat{\boldsymbol{\upgamma}}\)) and \(\hat{\boldsymbol{\upomega}}\) into Eq. 11, we obtain \({\hat{\updelta}}_{\textrm{AB}}\) from Eq. 14:

The REML algorithm estimated the random effects (i.e., ω) iteratively using a non-linear maximization approach. The algorithm stopped when it met a pre-specified convergence criterion (i.e., tolerance = 10─6), or when it reached a pre-specified number of iterations (= 50). If the REML algorithm did not converge to the convergence criterion after 50 iterations, we re-simulated data (see File 4.1)Footnote 9. After obtaining the estimated random effects (\(\hat{\boldsymbol{\upomega}}\)), the algorithm estimated fixed effects (\(\hat{\boldsymbol{\upgamma}}\)) using the generalized least squares estimator (Jiang, 2007).

Estimating the variance of g AB

The variance of gAB is estimated from Eq. 15 (Hedges, 2007; Pustejovsky et al., 2014):

where J(ν) and gAB are computed from Eqs. 12 and 14, ν by Eq. 13, and κ by Eq. 16.

where \(\textbf{C}\left(\hat{\boldsymbol{\upgamma}}\right)\) is the estimated covariance matrix of \(\hat{\boldsymbol{\upgamma}}\).

Step 4: Repeat Steps 2 and 3 until 20,000 replications and 20,000 g AB s are obtained

Steps 2 and 3 were repeated until all the data for the present study were generated. At the end of this step, we obtained 20,000 replications and 20,000 gABs in each of the 1792 conditions.

Step 5: Compute four criteria

We applied the same four criteria as those used in Pustejovsky et al. (2014) to assess the performance of gAB. The four criteria were relative bias, relative bias of gAB’s variance estimator, MSE, and coverage rate of symmetric 95% CI. They are abbreviated as RB, RBV, MSE, and CR respectively. Each criterion is defined below.

RB (relative bias)

The RB of gAB was calculated according to Eq. 17:

where \({\overline{g}}_{\textrm{AB}}\) was the mean of 20,000 gABs obtained in each condition. Because δAB = 1, bias and RB were the same. We refer to them both as RB. Based on Hoogland and Boomsma (1998), we interpreted |RB| < 5% as acceptable and |RB| ≥ 5% as unacceptable. In addition, RB < −5% was interpreted as unacceptable underestimate and RB > 5% as unacceptable overestimate.

RBV (relative bias of g AB ’s variance estimator)

The RBV of gAB was calculated according to Eq. 18Footnote 10,

where \({\overline{V}}_{g_{\textrm{AB}}}\) was the mean of 20,000 \({V}_{g_{\textrm{AB}}}\)s obtained under each condition with each \({V}_{g_{\textrm{AB}}}\)computed from Eq. 15, and Var(gAB) was the Monte Carlo variance of 20,000 gABs computed from Eq. 19,

The Monte Carlo variance, or Var(gAB), was used in Eq. 18 as a proxy for the true variance of gAB. Based on Hoogland and Boomsma (1998), we interpreted |RBV| < 21% as acceptable and |RBV| ≥ 21% as unacceptableFootnote 11. In addition, RBV < −21% was interpreted as unacceptable underestimate and RBV > 21% as unacceptable overestimate.

MSE (mean square error)

MSE measured the precision of gAB as a point estimator. MSE is the sum of the squared bias plus variance of gAB which we verified. MSE was calculated according to Eq. 20:

where δAB = 1 and R = 20,000.

To assess the magnitude of MSE, we examined MSE’s distribution in terms of its mean, median, and maximum. As a point of comparison suggested by Pustejovsky et al. (2014), we compared MSE’s mean and median with estimated MSEs of Hedges’ g (Hedges, 1981) obtained from a balanced, two-group experiment with m × N participants when the population ES is 1. The estimated MSE of Hedges’ g with mg participants in a balanced two-group experiment, when the population ES = 1, is given by Eq. 21:

where mdf = mg – 2, \(c\ \left({m}_{df}\right)=\frac{\Gamma \left[\frac{m_{df}}{2}\right]}{\sqrt{\frac{m_{df}}{2}}\times \Gamma \left[\frac{m_{df}-1}{2}\right]}\), and Γ = gamma function.

For m = 3 and N = 8, the MSE of Hedges’ g is estimated to be 0.212 by setting mg = 24 (= 3 × 8) into Eq. 21. Hence, 0.212 was used as a point of comparison when m = 3 and N = 8. Similarly, for m = 4 and N = 8, we specified mg = 32 (= 4 × 8) to obtain a point of comparison = 0.154. For m = 5 and N = 8, the point of comparison = 0.121. For m = 6 and N = 8, the point of comparison = 0.099. For m = 3, 4, 5, 6 and N = 16, the points of comparison = 0.099, 0.073, 0.058, and 0.048, respectively. Additionally, we noted in Table S4 of Pustejovsky et al. (2014), supplemental materials) that maximum MSEs were approximately twice as large as the mean MSEs across levels of m and N under normal conditions. The overall maximum MSE (= 0.664) from Table S4 was approximately four times as large as the overall mean (= 0.167). Extremely large MSEs indicated imprecision. And conditions in which these large MSEs occurred needed to be identified. Hence, we decided to identify unacceptable MSEs as those greater than the 75th percentile of all MSEs.

CR (coverage rate of symmetric 95% CI)

Guided by Pustejovsky et al.’s (2014) findings that symmetric CIs of gAB were closer to the nominal level of 95% than noncentral CIs, we constructed the symmetric 95% CI for δAB using Eq. 22:

where t0.025,υ is the critical value from the t distribution with df = υ (Eq. 13), and \({V}_{{\textrm{g}}_{\textrm{AB}}}\) is computed from Eq. 15. CR was defined as the percentage of the 95% CIs that covered δAB. We defined an acceptable CR to fall between 0.925 (lower bound) and 0.975 (upper bound), according to Algina et al. (2005). A CR outside the range of [0.925, 0.975] was deemed unacceptable. In addition, CR < 0.925 was interpreted as unacceptable under-coverage and CR > 0.975 as unacceptable over-coverage.

Step 6: Analyze the impact of the six factors on four criteria

The impact of the six factors on four criteria (RQ1) was analyzed by four ANOVAs and six plots depicting trends of acceptable and unacceptable criterion values. For each criterion, the ANOVA analyzed the six main effects of Dist, m, N, ρ, λ, and ϕ, plus five two-way interactions of Dist with m, N, ρ, λ, and ϕ, respectively. All effects were treated as fixed. Because each condition yielded one criterion value, the three-way and higher-order interactions were pooled to form the error term in ANOVAsFootnote 12. We defined effects with p-values < 0.05 and eta-squares > 5.9% as having a significant impact on a criterion. An eta-square > 5.9% was labeled by Cohen (1988) as a medium ES.

Step 7: Identify conditions acceptable for MB studies and meta-analysis

To identify conditions in which gAB performed acceptably for MB studies and meta-analysis (RQ2), we applied acceptability standards to the four criteria in each condition. Conditions that yielded all acceptable criteria were identified as acceptable conditions (e.g., Algina et al., 2005; APA, 2020; Hoogland & Boomsma, 1998; Pustejovsky et al., 2014).

Results

Results pertaining to RQ1 are presented first. These include the ANOVA results of the four criteria (RB, RBV, MSE, and CR) and trends of acceptable and unacceptable criterion values. The ANOVA results are presented in the section titled “ANOVA results of the four criteria” and trends of criterion values are presented in the section titled “Trends of acceptable and unacceptable criterion values.” Results pertaining to RQ2 are presented in the section titled “Acceptable conditions.” We summarize all results in “Summary of findings.”

ANOVA results of the four criteria

The ANOVA results presented in Table 3 are eta-squares of the six main effects of Dist, m, N, ρ, λ, ϕ and the five two-way interactions of Dist with m, N, ρ, λ, and ϕ on the four criteria. Eta-squares of effects having a significant impact (p-values < 0.05 and eta-squares > 5.9%) are shown in bold. According to Table 3, RB’s variance was best explained by all effects with a total eta-square of 91.0%. This was followed by 89.7% of MSE’s variance and 83.1% of CR’s variance. RBV’s variance was least explained with an eta-square of 71.5%.

The Dist factor had a significant impact on RB, RBV, and CR, accounting for most variance of RB (explaining 48.7% of its variance) and RBV (27.4%). Furthermore, Dist had the second greatest impact on CR (23.9%). The m factor had a significant impact on RBV, MSE, and CR with the greatest impact on MSE (34.4%) and the second greatest impact on RBV (22.2%). It is evident that Dist and m had greater impact on the four criteria than other factors. The N factor had a significant impact on RBV and CR with the greatest impact on CR (29.5%). The ρ factor had a significant impact on RB and MSE; its impact on MSE (33.9%) was the second greatest, only slightly smaller than the greatest impact by m (34.4%). The λ factor had a significant impact on RB and RBV. The ϕ factor had a significant impact on CR only.

Regarding two-way interactions of Dist with m, N, ρ, λ, and ϕ, Dist interacted with ρ in impacting RB significantly (8.2% of its variance). Dist did not interact with other factors in impacting RBV, MSE, or CR significantly.

Trends of acceptable and unacceptable criterion values

Based on the ANOVA results, we plot trends of acceptable and unacceptable criterion values as indicators of the performance of gAB. Figures 1 and 2 plot trends of RB and RBV, respectively. Figures 3 and 4 plot trends of MSE. Figure 5 plots trends of CR for N = 8, whereas Fig. 6 plots trends of CR for N = 16.

RB (relative bias)

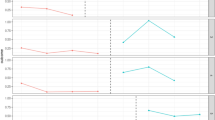

According to Table 3, RB was significantly impacted by Dist, ρ, λ, and the interaction of Dist with ρ. Figure 1 plots RB by Dist, ρ, and λ. Acceptable RBs are inside blue shaded areas and unacceptable RBs are outside blue shaded areas, based on the cutoff for an unacceptable RB (|RB| ≥ 5%) established in the “Method” section.

According to Fig. 1, RBs were all acceptable when Dist was normal, nearly normal, or mildly non-normal. When Dist was moderately non-normal, RBs were acceptable when (1) ρ ≤ 0.4 and λ = 0.1, or (2) ρ ≤ 0.6 and λ = 0.5. Moreover, unacceptable RBs were all overestimates.

RB increased as ρ increased and Dist was mildly or moderately non-normal. Given a fixed Dist and ρ, RB was smaller when λ = 0.5 than when λ = 0.1.

RBV (relative bias of g AB’s variance estimator)

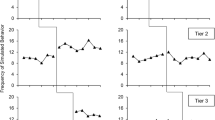

According to Table 3, RBV was significantly impacted by Dist, m, N, and λ. Figure 2 plots RBV by Dist, m, N, and λ. Acceptable RBVs are inside blue shaded areas and unacceptable RBVs are outside blue shaded areas, based on the cutoff for an unacceptable RBV (|RBV| ≥ 21%) established in the “Method” section.

According to Fig. 2, when Dist was normal, RBVs were acceptable only when m = 6 and N = 16. When Dist was nearly normal, RBVs were acceptable when m = 6 and N = 16, or when m = 5, N = 16, and λ = 0.1. When Dist was mildly non-normal, RBVs were acceptable if (1) λ = 0.1, (2) m ≥ 5, N = 8, λ = 0.5, or (3) m ≥ 4, N = 16, and λ = 0.5. When Dist was moderately non-normal, RBVs were acceptable if (1) m ≥ 4, N = 8, and λ = 0.1, (2) m ≥ 5, N = 8, and λ = 0.5, (3) N = 16 and λ = 0.1, or (4) m ≥ 4, N = 16, and λ = 0.5. Moreover, unacceptable RBVs were all overestimates.

RBVs obtained from normal and nearly normal Dists were similar; they were noticeably larger than those obtained from mildly or moderately non-normal Dist. RBV decreased as m increased from 3 to 6, N increased from 8 to 16, or λ decreased from 0.5 to 0.1.

MSE (mean square error)

Table 4 presents means, medians, and maximums of gAB’s MSE and points of comparison based on estimated MSEs of Hedges’ g. It was evident that means and medians of gAB’s MSE were noticeably larger than MSEs of Hedges’ g. When N = 8, gAB’s mean MSEs were approximately 1.5 times larger than MSEs of Hedges’ g. When N = 16, gAB’s mean MSEs were approximately 2.5 times larger than MSEs of Hedges’ g. Similar to Pustejovsky et al.’s (2014) findings, extremely large MSEs were uncovered in this study. Maximum MSEs were approximately twice as large as mean MSEs across levels of m and N. The overall maximum MSE (= 0.808) was approximately four times as large as the overall mean (= 0.194). Thus, we decided that it was justified to use the 75th percentile (= 0.241) of all MSEs as a cutoff to identify unacceptable MSEs.

According to Table 3, MSE was significantly impacted by m and ρ. Figure 3 plots MSE by m, ρ, and Dist. Acceptable MSEs are inside the blue shaded areas and unacceptable MSEs are outside the blue shaded areas, based on the cutoff for an unacceptable MSE (MSE > 0.241).

According to Fig. 3, MSE decreased as m increased for a fixed ρ, regardless of data distribution. At a fixed m, MSE increased as ρ increased, again regardless of data distribution. When m = 6, all MSEs were acceptable. When m = 4 or 5, all MSEs were acceptable, except when ρ = 0.8 and Dist was mildly or moderately non-normal. When m = 3, MSEs were acceptable only when ρ = 0.2, or when ρ = 0.4 and Dist was normal or nearly normal.

Figure 4 presents boxplots of MSEs for each combination of m and ρ across levels of Dist, N, λ, and ϕ. Three reference lines are overlaid corresponding to the 95th percentile (= 0.401), 75th percentile (= 0.241), and median (= 0.170) of the 1792 MSEs, respectively. According to Fig. 4, when m = 3 (the smallest) and ρ = 0.8 (the largest), all MSEs were larger than 0.241 (= P75). When m = 3 and ρ = 0.6, or when m = 4 and ρ = 0.8, more than 75% of MSEs were larger than 0.241 (= P75). Under all other combinations of m and ρ, more than 50% MSEs were smaller than 0.241 (= P75). The exact P25, median, mean, P75, and P95 of each boxplot shown in Fig. 4 are presented in File 7.

CR (coverage rate of symmetric 95% CI)

According to Table 3, CR was significantly impacted by Dist, m, N, and ϕ. Figure 5 plots CR by Dist, m, and ϕ for N = 8, whereas Fig. 6 plots CR by the same factors for N = 16. Acceptable CRs are inside the blue shaded areas and unacceptable CRs are outside the blue shaded areas, based on the cutoff for unacceptable CR (CR < 0.925 or > 0.975) established in the “Method” section.

When N = 8 and m = 5 or 6, CRs were mostly acceptable across the four Dists. As m decreased from 5, 4 to 3, CRs became increasingly unacceptable and overcovering, especially under normal and nearly normal distributions. When m = 3 or 4 under mildly and moderately non-normal Dists, CRs were mostly unacceptable when ϕ ≥ 0.

When N = 16, CRs were mostly acceptable across the four Dists. A few unacceptable overcovering CRs were found when (1) m = 3 or 4 and Dist was normal or nearly normal, or (2) m = 3, ϕ ≥ 0, and Dist was mildly or moderately non-normal. A few unacceptable undercovering CRs were identified when m = 5 or 6, ϕ ≤ −0.3, and Dist was moderately non-normal.

Acceptable conditions

To answer RQ2, we integrated findings across four criteria in Tables 5, 6, 7 and 8. Specifically, Tables 5 and 6 present acceptable conditions for λ = 0.1 and N = 8 or 16, respectively. Tables 7 and 8 present acceptable conditions for λ = 0.5 and N = 8 or 16, respectively. A circle (O) in Tables 5, 6, 7 and 8 indicates that gAB performed acceptably on all four criteria in that condition; hence, gAB was acceptable for MB studies and meta-analysis in that condition (e.g., Algina et al., 2005; APA, 2020; Hoogland & Boomsma, 1998; Pustejovsky et al., 2014). Actual values of the four criteria are shown in File 5 at https://osf.io/hsvwu/.

According to Tables 5, 6, 7 and 8, the number of acceptable conditions marked by O ranged from 55 (normal), 90 (nearly normal), 223 (moderately non-normal), to 233 (mildly non-normal). gAB’s performance was acceptable in far more mildly or moderately non-normal conditions than normal or nearly normal conditions. At fixed λ and N, normal and nearly normal Dists yielded similar patterns, and mildly and moderately non-normal Dists yielded similar patterns.

The number of acceptable conditions increased as m or N increased. When m increased from 3, 4, 5 to 6, the number of acceptable conditions increased from 51, 113, 182, to 255, respectively. When N increased from 8 to 16, the number of acceptable conditions increased from 220 to 381.

In general, the number of acceptable conditions decreased as λ, ρ or ϕ increased. When λ increased from 0.1 to 0.5, the number of acceptable conditions decreased from 320 to 281. When ρ increased from 0.2, 0.4, 0.6 to 0.8, the number of acceptable conditions in general decreased from 172, 180, 157, to 92, respectively. When ϕ increased from −0.4, −0.3, −0.1, 0, 0.1, 0.3 to 0.4, the number of acceptable conditions gradually decreased from 110, 106, 87, 82, 81, 68, to 67, respectively.

According to Table 5, when λ = 0.1 and N = 8, most of the acceptable conditions were associated with (1) ρ = 0.2 or 0.4, m ≥ 4, and mildly or moderately non-normal Dist, (2) ρ = 0.6, m ≥ 5, and mildly non-normal Dist, or (3) ρ = 0.8, m = 6, and normal, nearly normal, or mildly non-normal Dist. According to Table 6, when λ = 0.1 and N = 16, most of the acceptable conditions were associated with (1) ρ = 0.2 or 0.4, and mildly or moderately non-normal Dist, (2) ρ = 0.6, m ≥ 4, and normal, nearly normal, or mildly non-normal Dist, or (3) ρ = 0.8, m ≥ 5, and normal or nearly normal Dist.

According to Table 7, when λ = 0.5 and N = 8, most of the acceptable conditions were associated with (1) ρ = 0.2, m ≥ 4, and moderately non-normal Dist, or (2) ρ = 0.4 or 0.6, m ≥ 5, and mildly or moderately non-normal Dist. According to Table 8, when λ = 0.5 and N = 16, most of the acceptable conditions were associated with (1) ρ = 0.2, and moderately non-normal Dist, (2) ρ = 0.2, m = 3 or 6, and mildly non-normal Dist, (3) ρ = 0.4 or 0.6 , m ≥ 4, and mildly or moderately non-normal Dist, (4) ρ = 0.4, 0.6, or 0.8, m = 6, and normal, nearly normal, or mildly non-normal Dist, or (5) ρ = 0.8, m = 5, and nearly normal Dist.

Summary of findings

Results presented above indicated that gAB as a point estimator was fairly unbiased even under non-normal distributions. gAB’s variance was generally overestimated and its 95% CI was over-covered, especially when data distribution was normal or nearly normal combined with m = 3 or 4, and N = 8. The imprecision of gAB, as measured by MSE, was quite large when m = 3 or 4 and ρ = 0.6 or 0.8 across the four distributions.

Indeed, data distribution played a vital role in impacting gAB’s performance for MB studies and meta-analysis. gAB performed far better under mildly and moderately non-normal distributions than under normal and nearly normal distributions. This was because more RBVs were acceptable under mildly or moderately non-normal distribution than under normal or nearly normal distribution (see Fig. 2). Additionally, data distribution interacted with ρ in impacting the performance of gAB significantly. Under normal or nearly normal distribution, gAB performed more acceptably when ρ = 0.6 or 0.8 than when ρ = 0.2 or 0.4. Under mildly or moderately non-normal distribution, gAB performed more acceptably when ρ = 0.2 or 0.4 than when ρ = 0.8. When ρ = 0.6 and λ = 0.5, gAB performed equally acceptably under mildly and moderately distributions. When ρ = 0.6 and λ = 0.1, gAB performed more acceptably under the mildly non-normal than under the moderately non-normal distribution. The negative impact of ρ on gAB under any data distribution was mitigated by doubling N from 8 to 16 and/or by increasing m from 3 to 6.

Discussion

The What Works Clearinghouse Procedures and Standards Handbook, Version 5.0 (WWC, 2022) recommends gAB as a D-CES (1) to gauge an intervention effect in SCED studies, and also (2) to synthesize findings from SCED and group studies in meta-analysis. As an estimator of its parameter δAB, gAB’s interpretation is conditioned on its assumptions. Yet there have been no published studies that examined gAB’s normality assumption. The present study aimed to investigate the impact of data distributions on the performance of gAB by expanding Study 2 of Pustejovsky et al. (2014) to non-normal data. Our study applied the same REML method to compute gAB and the same four criteria to evaluate the performance of gAB, as Pustejovsky et al. (2014) did. The four criteria were: relative bias (RB), relative bias of gAB’s variance estimator (RBV), mean square error (MSE), and coverage rate of symmetric 95% CI (CR). The present study differed from Pustejovsky et al. (2014) in two aspects. First, we analyzed converged results, whereas Pustejovsky et al. (2014) analyzed both converged and non-converged results. Second, we specified cutoffs for acceptable and unacceptable RB, RBV, MSE, and CR, whereas Pustejovsky et al. (2014) did not establish such standards.

Two research questions (RQ1 and RQ2) were raised concerning the impact of data distributions on the performance of gAB. RQ1 explored the extent to which data distribution (Dist), the number of cases (m), the number of measurements (N), within-case reliability (ρ), ratio of variance components (λ), autocorrelation (ϕ) and the interactions of Dist with m, N, ρ, λ, and ϕ impacted each of the four criteria. Dist was manipulated in this study to range from normal, nearly normal, mildly non-normal to moderately non-normal. The other five factors (m, N, ρ, λ, and ϕ) were manipulated identically or similarly as in Pustejovsky et al. (2014). We answered RQ1 by analyzing the impact of the six main effects and five interactions on each criterion in an ANOVA framework (Table 3) and by plotting trends of acceptable and unacceptable RB (Fig. 1), RBV (Fig. 2), MSE (Figs. 3 and 4), and CR (Figs. 5 and 6).

We answered RQ2 by applying acceptability standards to all four criteria in each condition (Tables 5, 6, 7 and 8). The suitability of gAB for MB studies and meta-analysis (RQ2) was answered by identifying conditions that yielded acceptable results on all four criteria. Our results indicated that gAB as a point estimator was fairly unbiased even under non-normal distributions. Yet gAB’s variance was generally overestimated, and its symmetric 95% CI was over-covered, especially when data distribution was normal or nearly normal combined with m = 3 or 4, and N = 8. The imprecision of gAB, as measured by MSE, was a concern when m = 3 or 4 and ρ = 0.6 or 0.8, regardless of data distribution.

Under normal or nearly normal data distribution, bias in variance estimates of gAB was mostly unacceptable. In contrast, more RBVs were acceptable under mildly or moderately non-normal distribution than under normal or nearly normal distribution. Consequently, gAB was suitable for MB studies and meta-analysis in more conditions under mildly or moderately non-normal distribution than under normal or nearly normal distribution. It may seem counterintuitive as to why more RBVs were unacceptable under normal and nearly normal Dists than under mildly and moderately non-normal Dists. One explanation is given by Eq. 15 on the estimated variance of gAB, or \({V}_{g_{\textrm{AB}}}\). Though Eq. 15 is derived under normality (Pustejovsky et al., 2014), its approximation to the true variance of gAB is poor when m and N are small due to asymptotic normality of the REML method (see Footnote 2). Our results revealed that the largest m (= 6) and N (= 16) in this study were not large enough to yield acceptable estimates of the variance of gAB under normal and nearly normal DistsFootnote 13. Our results also showed that Eq. 15 yielded more biased estimates of gAB’s variance under small m (= 3) and N (= 8) than under large m (= 6) and N (= 16). These findings are consistent with the mathematical derivation of Eq. 15 under the normality assumption. Given the substantial relative bias in gAB’s variance estimator in the present study and in Pustejovsky et al. (2014), Eq. 15 needs to be improved in future research. We offer a few viable alternative methods under “Limitations and future research directions.”

Our results revealed a complex and joint impact of data distribution, along with number of cases, number of measurements, ratio of variance components, within-case reliability, and autocorrelation on the suitability of gAB for MB studies and meta-analysis. According to the ANOVA results, data distribution contributed to approximately 49% of variance in RB and 25% of variance in both RBV and CR. Furthermore, data distribution interacted with within-case reliability in impacting 8% of variance in RB. Number of cases and within-case reliability each contributed to 34% of variance in MSE. Among the four criteria, RB was most accounted for (91%) and RBV was least accounted for (72%) by all effects combined.

Our findings showed that the performance of gAB, as assessed by RB, MSE, RBV, and CR, depended on the distribution of data and five data features investigated in the present study. Of the six data features, the number of cases and measurements are within the control of a SCED researcher or interventionist, whereas data distribution, within-case reliability, ratio of variance components, and autocorrelation are not. In fact, our results demonstrated a mitigating effect of increased cases and measurements on the negative impact due to increased ratio of variance components, within-case reliability, and first-order autocorrelation on the performance of gAB. The mitigating effect of increased m or N on gAB was particularly evident for normal and nearly normal distributions.

Furthermore, large number of cases and measurements are required by the REML method to yield acceptable estimates of gAB’s variance. Therefore, SCED researchers and interventionists should be encouraged to design a study with sufficiently large number of cases and measurements. In light of our findings, we offer general recommendations of gAB for primary MB studies and meta-analysis. The preamble to our recommendations is that each SCED study, for which gAB is applicable, had been well constructed, conducted, and documented (Chen et al., 2020; Kratochwill et al., 2013, 2021; Peng et al., 2013).

Our recommendations

We recommend gAB for primary MB studies and meta-analysis when each study with at least 16 measurements, meets one of the following two conditions:

-

(1)

m = 6, the within-case reliability is 0.6 or 0.8, and the shape, skewness, and kurtosis of the data distribution are similar to the normal or nearly normal distribution investigated in this study;

-

(2)

m ≥ 4, the within-case reliability is 0.2, 0.4, or 0.6, and the shape, skewness, and kurtosis of the data distribution are similar to the mildly or moderately non-normal distribution investigated in this study.

The shape, skewness and kurtosis may be determined from raw data and compared to the four data distributions manipulated in the current study (see File 1 at https://osf.io/hsvwu/ for the four marginal distributions). Alternatively, the shape, skewness and kurtosis of empirical data distributions concerning a specific intervention or outcome measure may be conjectured from review studies, such as Joo (2017), Shadish et al. (2014), and Solomon (2014). Likewise, the within-case reliability of a study’s data may be computed from the ratio of the between-case variance in levels over the sum of the within-case variance plus the between-case variance in levels, and then compared to the four levels manipulated in the current study.

In empirical SCED studies, there may well be exceptions to the conditions recommended above when gAB performs satisfactorily. When applied researchers find their data to not meet the recommended conditions, a cautionary note should be added for interpreting the magnitude of gAB. Practitioners are advised to consider context effects of an intervention holistically when interpreting gAB and its reasonableness.

Limitations and future research directions

As with any simulation study, findings and recommendations presented here are based on specific manipulations of the six factors investigated and acceptable standards established in the present study. Of the six factors, the number of cases (m), the within-case reliability (ρ), and the autocorrelation (ϕ) were well represented by levels commonly found in empirical MB studies. The number of measurements (N = 8 or 16) and the ratio of variance components (λ = 0.1 or 0.5) were represented by only two levels.

The manipulation of data distributions in the present simulation study ranged from normal to moderately non-normal. All four distributions were unimodal, either mesokurtic or leptokurtic. And the two non-normal distributions were both positively skewed. Future studies can examine performance of gAB using discrete or count/frequency data with one or more modes, or different distributions at Level-1 and Level-2.

We fitted MB2 to normal and non-normal data. Hence, the impact of non-normality on gAB has not been investigated under MB1, MB3, MB4, or MB5 (Pustejovsky et al., 2014). Simulation studies conducted under models other than MB2 should yield useful information to inform SCED researchers and interventionists about gAB in broader contexts. Studies on the impact of model misspecification should facilitate our understanding of the application of gAB.

Relative bias in gAB’s variance estimator was substantial in the present study and in Pustejovsky et al. (2014). Such relative bias has impeded gAB’s utility in meta-analysis. In the context of hierarchical modeling of SCED data, alternative methods, such as the Bayesian method (Baek et al., 2020; Joo & Ferron, 2019), have been proposed to improve the variance estimation of a random effect. However, biased variance estimation remains an unresolved issue in meta-analysis of SCED studies.

In sum, future studies should consider: (a) generating data from non-normal distributions not considered in the present study (e.g., platykurtic, discrete, count/frequency, bimodal/multimodal); (b) simulating data from different distributions for Level-1 and Level-2 errors separately; (c) increasing the number of cases > 6 and the number of measurements ≥ 20 to improve the convergence of REML estimates (see File 8 for non-convergence rates); (d) fitting a model different from MB2 to data; and (e) developing Bayesian or robust estimators of gAB’s variance (e.g., Chen & Pustejovsky, 2022; Park & Beretvas, 2019; Tipton, 2015; Verbeke & Lesaffre, 1996; Yuan & Bentler, 2002), or applying the Box-Cox or square root transformation of gAB’s variance (Man et al., 2022).

Concluding remarks

The gAB statistic has been endorsed as a D-CES by the What Works Clearinghouse Procedures and Standards Handbook, Version 5.0 (WWC, 2022) to gauge an intervention effect in a SCED study and to synthesis findings from multiple SCED studies, or across SCED and group studies. Such an endorsement will surely increase the reporting of gAB in published reports. Thus, it is crucial to examine the performance of gAB under non-normal distributions with different data features in order to render appropriate reporting and interpretation of gAB. Findings from our study highlight the importance of data distributions and features in determining the suitability of gAB for primary MB studies and meta-analysis.

Based on gAB’s definition and its REML estimation method, it is worth pointing out several issues associated with gAB’s applicability in SCED contexts. First, gAB is applicable to MB designs across three or more cases of the same behavior, as previously mentioned. gAB is equally applicable to ABk designs with at least three cases of the same behavior. Second, as a study-level ES index, gAB does not permit the examination of factors that may vary between cases within a study, as in moderator analyses (Kratochwill et al., 2021). Third, gAB’s computation and interpretation depend on a model. Such a model may be misspecified, or overly simplistic (Maggin et al., 2022; Valentine et al., 2016)Footnote 14, and the model assumptions (e.g., normality, equally spaced measurements, no trend) may be untenable (Maggin et al., 2022) or non-robust. Fourth, its applicability beyond the “starting point,” or MB1, recommended by the What Works Clearinghouse Procedures and Standards Handbook, Version 5.0 (WWC, 2022) requires extensive research, especially under non-normal distributions with small number of cases and measurements.

In addition to issues discussed above, Kratochwill et al. (2021) raised concerns about the compatibility of constructs underlying outcomes measured in SCED and group studies. Such concerns cast doubt on the design-comparability of gAB across SCED and group studies. Maggin et al. (2022) demonstrated several misleading conclusions based exclusively on ES indices. Thus, Maggin et al. (2022) emphasized the importance of conducting systematic visual analyses of single-case data in terms of level, trend, variability, immediacy of change, overlap, and consistency of data patterns both within and across phases.

Given the issues and concerns summarized above, we caution readers not to rely solely on the magnitude of a D-CES, such as gAB, when determining an intervention’s effectiveness. Instead, a detailed description of an intervention study should be reviewed thoroughly when assessing an intervention. A study description should provide sufficient information regarding the definition of the construct being intervened, the construct validity of its measurements, the verification of the study’s design standards, the examination of the study’s operational issues, visual and quantitative demonstrations of the intervention’s effectiveness. As Chen et al. (2020), Kratochwill et al. (2021), and Wrigley and McCusker (2019) advocated, which we agree with, that it is more meaningful to ask, “For whom and under what conditions does an intervention work?” than to ask, “Does an intervention work?” A sound reporting of gAB, or any ES index, should include (A) a thorough interpretation of the ES magnitude based on similar studies (i.e., construct and population of interest, treatment/intervention introduced, and measurements of outcomes), and (B) clinical or practical importance of the intervention effect.

The data and materials for the simulation study are available at https://osf.io/hsvwu/.

Notes

Asymptotic normality is a property of the REML or ML method in which the normal approximation to the sampling distribution of a ML estimator (or gAB in this study) is valid when an asymptotically large sample of data is used (McNeish, 2017).

N refers to the total number of measurements per case. It was set to be identical for all cases in each condition.

The covariance between Phase A levels and level shifts (τ10) was set to 0 in this study, as Pustejovsky et al. (2014) did.

For the purposes of this simulation study, we set \({\upsigma}^2+{\uptau}_0^2\) = 1, as Pustejovsky et al. (2014) did. σ2 and \({\uptau}_0^2\) were not specified separately.

The present study did not include ρ = 0, because ρ = 0 leads to an undefined λ. Study 2 of Pustejovsky et al. (2014) included ρ = 0.

Supplemental materials of the present study include nine files; they are available at https://osf.io/hsvwu/. File 1 presents the four marginal distributions. File 2 summarizes procedures used to confirm data generation under non-normal distributions. File 3 explains the decisions for the seven levels of ϕ. File 4 presents the modified R scdhlm package. File 4.1 is the superordinate R program to establish simulation conditions, execute File 4, and check convergence of each simulation. File 5 contains the actual values of the four criteria under each condition. File 6 presents alternative three-way ANOVA results including interactions of Dist with two of the other five factors in the model. File 7 presents the P25, P50, mean, P75, P95 of MSEs under each combination of m and ρ. File 8 presents results from converged and non-converged replications and non-convergence rates.

Pustejovsky et al.’s (2014) supplemental materials are available from https://www.jepusto.com/files/Effect-sizes-in-multiple-baseline-designs-Simulation-results.pdf.

Our decision to discard non-converged results was guided by Paxton et al. (2001) who stated, “If the purpose of the Monte Carlo analysis is to provide realistic information to users of the technique, then non-converged samples, which are rarely assessed in practice, will provide irrelevant information and subsequently threaten external validity” (pp. 301–302). In other words, a non-converged result could not be viewed as an optimal REML estimate of δAB. Authors of several simulation studies held similar views on non-converged results, including Bandalos and Leite (2013, p. 655), Bolin et al. (2019, p. 226), Bollen et al. (2014, p. 7), and Fan and Fan (2005, p. 131). Hence, we decided to retain only converged results until we obtained 20,000 replications in each condition. File 8 presents results from converged and non-converged replications and non-convergence rates.

Pustejovsky et al.’s (2014) supplemental materials defined RBV as \(\frac{{\overline{V}}_{g_{\textrm{AB}}}}{\textrm{Var}\left({g}_{\textrm{AB}}\right)}\). They stated, “We assessed the performance of proposed variance estimators using relative bias; for an effect size estimator g [= gAB in the present paper] with associated variance estimator Vg, the relative bias of the variance estimator is the ratio of the expected value of the variance estimator E(Vg) to the true variance of the effect size estimator Var(g). Relative biases close to one mean that the variance estimator is unbiased.” (p. 4).

Hoogland and Boomsma (1998) used 0.10 as the cutoff for an acceptable relative bias in a SE. If a sample SE is denoted as \(\hat{\uptheta}\) and its parameter as θ, Hoogland and Boomsma’s cutoff is expressed as (\(\hat{\uptheta}\) − θ)/ θ < 0.1. Hence, \(\hat{\uptheta}\)/θ < 1.1 or (\(\hat{\uptheta}\)/θ)2 < 1.21. Therefore, (\(\hat{\uptheta}\)2 – θ2)/ θ2 < 0.21 or 21%.

Alternative three-way ANOVA results that included interactions of Dist with two of the five factors are presented in File 6 at https://osf.io/hsvwu/. The alternative three-way ANOVA identified the same main effects and interactions as the two-way ANOVA, that had significant impacts on the four criteria.

The fact that more RBVs were acceptable under mildly and moderately non-normal conditions may be explained by the compensation of non-normality for REML’s tendency to overestimate the true variance of gAB under normal and nearly normal conditions.

Valentine et al. (2016) stated, “In general, when specifying the phase time trends used in the REML model for estimating design-comparable effect sizes, we recommend that users balance prior theory, visual inspection of the data, and parsimony …. we suggest that most users will probably focus on just the two simplest options of no trends or linear trends …. If the user is planning to include the effect size in a synthesis, we recommend that similar model specifications (i.e., phase time trends, and fixed/random effects, as described below) be used for all studies included in the synthesis” (pp. 20-21).

References

Algina, J., Keselman, H. J., & Penfield, R. D. (2005). An alternative to Cohen’s standardized mean difference effect size: A robust parameter and confidence interval in the two independent groups case. Psychological Methods, 10(3), 317–328. https://doi.org/10.1037/1082-989X.10.3.317

American Psychological Association (2020). JARS—Quant Table 9: Quantitative meta-analysis article reporting standards: Information recommended for inclusion in manuscripts reporting quantitative meta-analyses. https://apastyle.apa.org/jars/quant-table-9.pdf

Anaby, D., Avery, L., Gorter, J. W., Levin, M. F., Teplicky, R., Turner, L., Cormier, I., & Hanes, J. (2020). Improving body functions through participation in community activities among young people with physical disabilities. Developmental Medicine & Child Neurology, 62(5), 640–646. https://doi.org/10.1111/dmcn.14382

Au, T. M., Sauer-Zavala, S., King, M. W., Petrocchi, N., Barlow, D. H., & Litz, B. T. (2017). Compassion-based therapy for trauma-related shame and posttraumatic stress: Initial evaluation using a multiple baseline design. Behavior Therapy, 48(2), 207–221. https://doi.org/10.1016/j.beth.2016.11.012

Baek, E., Beretvas, S. N., Van den Noortgate, W., & Ferron, J. M. (2020). Brief research report: Bayesian versus REML estimations with noninformative priors in multilevel single-case data. The Journal of Experimental Education, 88(4), 698–710. https://doi.org/10.1080/00220973.2018.1527280

Bandalos, D. L., & Leite, W. (2013). Use of Monte Carlo studies in structural equation modeling research. In G. R. Hancock, & R. D. Mueller (Eds.), Structural equation modeling: A second course (2nd ed., pp. 625–666). Information Age Publishing.

Barker, J., McCarthy, P., Jones, M., & Moran, A. (2011). Single-case research methods in sport and exercise psychology (1st ed.). Routledge. https://doi.org/10.4324/9780203861882

Barton, E. E., Meadan, H., & Fettig, A. (2019). Comparison of visual analysis, non-overlap methods, and effect sizes in the evaluation of parent implemented functional assessment based interventions. Research in Developmental Disabilities, 85, 31–41. https://doi.org/10.1016/j.ridd.2018.11.001

Becraft, J. L., Borrero, J. C., Sun, S., & McKenzie, A. A. (2020). A primer for using multilevel models to meta-analyze single case design data with AB phases. Journal of Applied Behavior Analysis, 53(3), 1799–1821. https://doi.org/10.1002/jaba.698

Beretvas, S. N., & Chung, H. (2008). A review of meta-analyses of single-subject experimental designs: Methodological issues and practice. Evidence-Based Communication Assessment and Intervention, 2(3), 129–141. https://doi.org/10.1080/17489530802446302

Bolin, J. H., Finch, W. H., & Stenger, R. (2019). Estimation of random coefficient multilevel models in the context of small numbers of level 2 clusters. Educational and Psychological Measurement, 79(2), 217–248. https://doi.org/10.1177/0013164418773494

Bollen, K. A., Harden, J. J., Ray, S., & Zavisca, J. (2014). BIC and alternative Bayesian information criteria in the selection of structural equation models. Structural Equation Modeling: A Multidisciplinary Journal, 21(1), 1–19. https://doi.org/10.1080/10705511.2014.856691

Braunstein, S. L. (1992). How large a sample is needed for the maximum likelihood estimator to be approximately Gaussian? Journal of Physics A: Mathematical and General, 25, 3813–3826.

Brosnan, J., Moeyaert, M., Newsome, K. B., Healy, O., Heyvaert, M., Onghena, P., & Van den Noortgate, W. (2018). Multilevel analysis of multiple-baseline data evaluating precision teaching as an intervention for improving fluency in foundational reading skills for at risk readers. Exceptionality, 26(3), 137–161. https://doi.org/10.1080/09362835.2016.1238378

Byiers, B. J., Reichle, J., & Symons, F. J. (2012). Single-subject experimental design for evidence-based practice. American Journal of Speech-Language Pathology, 21(4), 397–414. https://doi.org/10.1044/1058-0360(2012/11-0036)

Chen, L.-T., 丁麒文, 謝承佑, 陳奕凱, 江宇珊, 黃思婧, 楊同榮, 鄭澈, 劉佩艷, 彭昭英 (2020). 效果量在臺灣心理與教育期刊的應用:回顧與再思[Effect size reporting practices in Taiwanese psychology and education journals: Review and beyond]. 中華心理學刊[Chinese Journal of Psychology], 62(4), 553–592. http://www.cjpsy.com/_i/assets/upload/files/pg066%2B(1).pdf.pdf

Chen, L.-T., Wu, P.-J., & Peng, C.-Y. J. (2019). Accounting for baseline trends in intervention studies: Methods, effect sizes and software. Cogent Psychology, 6(1), Article 1679941. https://doi.org/10.1080/23311908.2019.1679941

Chen, M., & Pustejovsky, J. E. (2022). Multilevel meta-analysis of single-case experimental designs using robust variance estimation. Psychological Methods. Advance online publication. https://doi.org/10.1037/met0000510

Christoffersen, P. F. (2004). Elements of financial risk management (1st ed.). Academic Press. https://doi.org/10.1016/B978-0-12-174232-4.X5000-4

Cohen, J. (1988). Statistical power analysis for the behavioral sciences (2nd ed.). Lawrence Erlbaum Associates.

Cotter, J., & Hanly, J. (2012). Re-evaluating hedging performance for asymmetry: The case of crude oil. In J. Batten & N. F. Wagner (Eds.), Derivative securities pricing and modeling. Contemporary Studies in Economic and Financial Analysis (Vol. 94, pp. 259–280). https://doi.org/10.1108/S1569-3759(2012)0000094013

Darbyshire, P., & Hampton, D. (2012). Hedge fund modelling and analysis using Excel and VBA (1st ed.). Wiley.

Fan, X., & Fan, X. (2005). Power of latent growth modeling for detecting linear growth: Number of measurements and comparison with other analytic approaches. The Journal of Experimental Education, 73(2), 121–139. https://doi.org/10.3200/JEXE.73.2.121-139

Ferron, J. M., Moeyaert, M., Van den Noortgate, W., & Beretvas, S. N. (2014). Estimating causal effects from multiple-baseline studies: Implications for design and analysis. Psychological Methods, 19(4), 493–510. https://doi.org/10.1037/a0037038

Franklin, R. D., Allison, D. B., & Gorman, B. S. (1996). Introduction. In R. D. Franklin, D. B. Allison, & B. S. Gorman (Eds.), Design and analysis of single-case research (pp. 1–12). Lawrence Erlbaum Associates, Inc.