Abstract

Psychophysical paradigms measure visual attention via localized test items to which observers must react or whose features have to be discriminated. These items, however, potentially interfere with the intended measurement, as they bias observers’ spatial and temporal attention to their location and presentation time. Furthermore, visual sensitivity for conventional test items naturally decreases with retinal eccentricity, which prevents direct comparison of central and peripheral attention assessments. We developed a stimulus that overcomes these limitations. A brief oriented discrimination signal is seamlessly embedded into a continuously changing 1/f noise field, such that observers cannot anticipate potential test locations or times. Using our new protocol, we demonstrate that local orientation discrimination accuracy for 1/f filtered signals is largely independent of retinal eccentricity. Moreover, we show that items present in the visual field indeed shape the distribution of visual attention, suggesting that classical studies investigating the spatiotemporal dynamics of visual attention via localized test items may have obtained a biased measure. We recommend our protocol as an efficient method to evaluate the behavioral and neurophysiological correlates of attentional orienting across space and time.

Introduction

Only a fraction of the information flooding the visual system every time we open our eyes can be processed. Visual attention allows us to selectively focus on specific locations or features while ignoring other aspects of the available information by biasing the neuronal representation of the visual scene (Carrasco, 2011; Treue, 2001). Depending on the attentional state of the observer, the same retinal input elicits different neurophysiological responses (Gandhi et al., 1999; Hillyard & Anllo-Vento, 1998; Reynolds et al., 2000). As a behavioral consequence of these modulations, attention increases spatial resolution (Jigo et al., 2021; Yeshurun & Carrasco, 1998), enhances contrast sensitivity (Jigo & Carrasco, 2020; Lee et al., 1999; Pestilli et al., 2009), and even alters visual appearance (Carrasco & Barbot, 2019; Rolfs & Carrasco, 2012). Given that stimuli presented in the focus of attention are recognized faster than those appearing outside the focus (Posner et al., 1980), the spatial deployment of attention is frequently deduced from manual response times. Reaction times, however, reflect the combined effect of detection-, decision-, and response-dependent processes (Santee & Egeth, 1982), which can only be differentiated with special methods (e.g., speed–accuracy trade-off) and models (e.g., drift-diffusion model). A more direct behavioral correlate of visual attention can be obtained by measuring the sensitivity to discriminate visual features (Macmillan & Creelman, 1991). Studies measuring discrimination performance can isolate the different components by ruling out speed-accuracy trade-offs and/or relying on signal detection theory (SDT), which indexes sensitivity and criterion separately. In a typical paradigm, the spatiotemporal dynamics of visual attention are measured by briefly presenting a test stimulus (commonly among several distractors) at a specific point in time and at one out of several pre-specified locations across the visual field. 
Observers are instructed to discriminate a target-specific feature or its identity. Since attention enhances visual processing, a higher discrimination performance (measured as %-correct responses) or increased visual sensitivity (measured as d-prime) for a particular item reflects the allocation of attention toward its location.
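As a hedged illustration of how SDT separates sensitivity from criterion, the following Python sketch computes d′ and the criterion c from a hit rate and a false-alarm rate under the standard equal-variance Gaussian model. The function name and the example rates are hypothetical and are not taken from the study.

```python
from statistics import NormalDist


def dprime_and_criterion(hit_rate, fa_rate):
    """Equal-variance Gaussian SDT: d' = z(H) - z(FA), c = -(z(H) + z(FA)) / 2."""
    z = NormalDist().inv_cdf  # inverse of the standard normal CDF
    z_hit, z_fa = z(hit_rate), z(fa_rate)
    return z_hit - z_fa, -0.5 * (z_hit + z_fa)


# Hypothetical rates, chosen only for illustration.
d, c = dprime_and_criterion(0.80, 0.20)
print(f"d' = {d:.2f}, |c| = {abs(c):.2f}")
```

Rates of exactly 0 or 1 have no finite z-score, so they would need a correction before being passed to this function.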

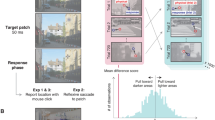

The discrimination approach has become highly popular and a variety of different discrimination features, such as stimulus identity (e.g., Deubel & Schneider, 1996), orientation angle (e.g., Rolfs & Carrasco, 2012), or motion direction (e.g., Szinte et al., 2015) have been employed (see Hanning et al., 2019a for a comparison of the most popular stimuli). However, these conventional approaches face a common shortcoming: They all rely on localized test stimuli to determine the deployment of visual attention. The potential problem with this approach is that these stimuli could bias visual perception by structuring the visual field (Puntiroli et al., 2018; Shurygina et al., 2021; Szinte et al., 2019; Taylor et al., 2015) and may thus affect what they are intended to measure: the spatial distribution of attention. In a paradigm using a typical stimulus configuration as displayed in Fig. 1a, attention is likely biased towards the presented stimuli (as compared to locations in between or further in- or outside), since those are the only locations containing potentially task-relevant visual information (Fig. 1b). This bias could occur automatically, in a bottom-up fashion, but might also have a top-down component, in that observers strategically deploy their attention to potential test locations to benefit their task performance. Critically, these biases are not necessarily linear and are hard to estimate. They can unsystematically affect different experimental manipulations and thus distort the inferences drawn from the study.

Rationale of the dynamic 1/f noise protocol. (a) Example of a typical paradigm to investigate visual attention (adapted from Rolfs et al., 2011). Observers discriminate a test stimulus (a clockwise or counterclockwise oriented Gabor) presented along with several distractors (here vertical Gabors). (b) The stimulus configuration shown in (a) reveals potential test locations, to which observers’ attention might be biased (visualized by colored blobs). (c) The dynamic 1/f noise protocol. Observers discriminate the tilt angle of an orientation-filtered noise patch embedded in an unfiltered 1/f noise background (here at 3 o’clock, tilted counterclockwise relative to vertical). The oriented patch can appear at any location in the noise field; potential test locations remain unknown to the observer. (d) Examples of orientation filters with different widths (σ; upper row) and the resulting filtered noise image (bottom row). By varying orientation filter width, the difficulty of the discrimination task can be continuously adjusted (the narrower the width, the stronger the signal and the easier the task)

To overcome such attentional biases induced by the experimental design, we developed a dynamic, item-free 1/f (“pink”) noise protocol that allows us to assess visual performance across the scene without object-like visual structures. By embedding an orientation discrimination signal into full-field 1/f noise (Fig. 1c), observers’ visual sensitivity can be probed at any location in the noise field without revealing a specified set of potential test locations. The local discrimination signal, composed of orientation-filtered 1/f noise (displaying, for example, a clockwise or counterclockwise orientation relative to vertical), is inserted into the 1/f noise field with a soft boundary and can take any shape and size. By altering the orientation filter width, the strength of the discrimination signal can be continuously manipulated (Fig. 1d), which allows us to precisely adjust the overall difficulty of the perceptual discrimination task. Since sudden local changes in the stimulus display capture attention (e.g., Müller & Rabbitt, 1989; Theeuwes, 1991) and potentially bias the temporal dynamics of attention allocation (Hanning et al., 2019a), the full-field noise stimulus (including the embedded orientation signal) continuously changes over time. This dynamic approach also makes it possible to present the orientation signal at any time and for any desired duration without providing observers with temporal information regarding test signal occurrence. See Methods for a detailed description and example code.

We selected orientation-filtered 1/f spatial noise as the discrimination signal for two major reasons. First, the 1/f falloff of the amplitude spectrum is “naturalistic” in the sense that real-world scenes and textures typically show a similar spatial frequency dependence (Field, 1987; Simoncelli & Olshausen, 2001). Second, and this is a decisive advantage of the pink noise stimulus over conventional approaches, this particular type of test signal should be scale-invariant and yield discrimination thresholds that are constant across retinal eccentricities. Spatial acuity decays with increasing distance from the fovea (the center of gaze), i.e., the visual system becomes less sensitive to higher spatial frequencies farther into the periphery (Anton-Erxleben & Carrasco, 2013; Itti & Koch, 2001). As argued in a seminal study by Field (1987), early visual processing can be approximated by assuming an array of spatial band-pass filters (spatial frequency channels) representing the response properties of cortical cells, in which the spatial frequency bandwidth is constant in octaves (e.g., 1 to 2 c/deg, 2 to 4 c/deg, etc.). Under this assumption, a 1/f amplitude fall-off will yield equal energy in equal octaves (e.g., the energy between 1 and 2 c/deg will be equal to the energy between 2 and 4 c/deg; for a detailed analysis see Field, 1987). Since peripheral vision can be well modeled by eccentricity (cortical magnification / M-) scaling (Virsu & Rovamo, 1979), it follows that relative energy in the spatial frequency channels is also eccentricity-invariant. As we use orientation filtering that is homogeneous across all spatial frequencies (i.e., the orientation signal contains the full 1/f frequency spectrum), perceptual orientation discrimination thresholds should be largely independent of the eccentricity at which the test signal is presented.
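The equal-energy argument can be made explicit with a short derivation. This is a sketch that assumes an isotropic two-dimensional amplitude spectrum \(A(f) = c/f\) over radial spatial frequency \(f\); the constant \(c\) and the band edges \(f_1, f_2\) are notation introduced here for illustration.

\[
E(f_1, f_2) = \int_0^{2\pi}\!\int_{f_1}^{f_2} \left(\frac{c}{f}\right)^{2} f \,\mathrm{d}f \,\mathrm{d}\theta = 2\pi c^{2} \ln\frac{f_2}{f_1}
\]

For any octave band, \(f_2 = 2 f_1\), so \(E = 2\pi c^{2} \ln 2\) regardless of \(f_1\): the band from 1 to 2 c/deg carries the same energy as the band from 2 to 4 c/deg.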

We conducted two experiments to demonstrate the applicability and effectiveness of the dynamic 1/f noise protocol. In Experiment 1 we establish that (1) orientation discrimination accuracy continuously scales with the width of the orientation filter, allowing us to adjust the discrimination task difficulty (via altering orientation filter width) to individual observers’ capabilities; (2) the embedded orientation signal itself does not capture attention, as it is not discriminable when presented outside the focus of attention; (3) local discrimination accuracy is, as predicted, largely independent of the visual eccentricity at which the test signal is presented, enabling direct comparison of visual performance from central to peripheral locations. In Experiment 2 we demonstrate that (4) items in fact structure the visual field and markedly bias visual perception. This emphasizes the relevance of the item-free 1/f noise protocol and its unique capability of measuring the unbiased distribution of visual attention across time and space.

Methods

1/f noise protocol

The noise stimulus comprises a dynamically changing 1/f noise background, gradually changing from one noise image to another, in which a local orientation signal of desired shape and size is neatly embedded for a desired duration. We generated the local orientation signal in the following way. Let fx, fy denote the spatial frequencies in the x- and y-direction. The local orientation signal O(fx, fy) for a given preferred orientation ϕ0 was computed in the frequency domain from the dynamically changing (Fourier-transformed) pink noise S(fx, fy) by multiplication with a Gaussian weighting function that only depended on signal orientation:

σ then represents the width of the orientation filter. The smaller σ, the better the local orientation signal can be discriminated from the unfiltered background. It should be noted that the filtering leaves the signal unaffected in the radial direction \({f}_r=\sqrt{{f_x}^2+{f_y}^2}\), such that its pink noise characteristics are retained.
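Written out, the Gaussian orientation weighting described above takes a form such as the following. This is a reconstruction from the verbal description, not a quotation of the original equation: the factor of 2 in the exponent, the absence of a normalization constant, and the definition of \(\phi\) via the two-argument arctangent are assumptions.

\[
O(f_x, f_y) = S(f_x, f_y)\,\exp\!\left(-\frac{(\phi - \phi_0)^{2}}{2\sigma^{2}}\right), \qquad \phi = \operatorname{atan2}(f_y, f_x)
\]

Because the weight depends only on the angle \(\phi\) and not on \(f_r\), all radial frequencies along a given orientation are scaled by the same factor, which is why the 1/f falloff is preserved. In an implementation, the angular difference \(\phi - \phi_0\) would presumably be wrapped to respect the 180° periodicity of orientation in the spectrum of a real-valued image.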

We normalized the orientation-filtered image to the amplitude range of the original image before embedding the corresponding subpart of the filtered image at the respective location in the unfiltered image. For the experiments presented here we used a circular window of radius r, with softened boundaries that we created with a cosine ramp of width w. To generate the dynamic nature of the stimulus, we present a series of 1/f noise images that gradually change from one image (A) to another image (B) to the next image (C, etc.), within a certain number of steps (e.g., four) and at a certain refresh rate. The image statistics (e.g., contrast, mean luminance) of “hybrid” noise images, created by combining two noise images (e.g., AAB, AB, ABB), were adjusted to the original 1/f noise images.
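The windowing and the gradual image transitions can be sketched in a few lines of Python. This is an illustration under stated assumptions, not the authors' MATLAB implementation: the helper names (`cosine_window`, `embed`, `hybrid`) are hypothetical, the ramp is assumed to occupy the outer w of the radius r, the "adjustment" of hybrid images is assumed to mean restoring mean and standard deviation, and white Gaussian noise stands in for real 1/f images.

```python
import math
import random
from statistics import mean, pstdev


def cosine_window(dist, r, w):
    """Weight of the filtered image at distance `dist` from the patch center.

    Assumption: the cosine ramp occupies the outer `w` of the radius `r`.
    """
    if dist <= r - w:
        return 1.0
    if dist >= r:
        return 0.0
    return 0.5 * (1.0 + math.cos(math.pi * (dist - (r - w)) / w))


def embed(background_px, filtered_px, dist, r, w):
    """Blend one filtered pixel into the background through the soft window."""
    a = cosine_window(dist, r, w)
    return a * filtered_px + (1.0 - a) * background_px


def hybrid(img_a, img_b, weight_b):
    """Mix two images, then restore the mean and SD of image A."""
    mixed = [(1.0 - weight_b) * a + weight_b * b for a, b in zip(img_a, img_b)]
    m, s = mean(mixed), pstdev(mixed)
    target_m, target_s = mean(img_a), pstdev(img_a)
    return [(x - m) / s * target_s + target_m for x in mixed]


# Stand-in data: white Gaussian noise, not real 1/f images.
rng = random.Random(0)
img_a = [rng.gauss(0.5, 0.1) for _ in range(4096)]
img_b = [rng.gauss(0.5, 0.1) for _ in range(4096)]

# Four steps from A towards B: A, AAB, AB, ABB (B then starts the next cycle).
frames = [hybrid(img_a, img_b, k / 4) for k in range(4)]
```

The rescaling step matters because averaging two independent noise images lowers contrast: an equal mix of two uncorrelated images has roughly 71% of the original standard deviation, which would otherwise make the display pulsate during transitions.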

Custom MATLAB code for generating the stimulus is available on GitHub (https://github.com/nmhanning/oofnoise).

Empirical data and experimental procedure

Observers

Sample sizes were determined based on previous work (Hanning & Deubel, 2020; Hanning et al., 2019a; Hanning et al., 2019b). Six observers (ages 19–28 years, four female) completed Experiment 1a and Experiment 1b, and six observers (ages 20–31 years, five female) completed Experiment 2. All observers were healthy, had normal vision, and, except for one author (N.M.H., who participated in Experiment 2), were naive as to the purpose of the experiments. The protocols for the study were approved by the ethical review board of the Faculty of Psychology and Education of the Ludwig-Maximilians-Universität München (approval number 13_b_2015), in accordance with German regulations and the Declaration of Helsinki. All observers gave written informed consent.

Apparatus

Gaze position of the dominant eye was recorded using an SR Research EyeLink 1000 Desktop Mount eye tracker (Osgoode, Ontario, Canada) at a sampling rate of 1 kHz. Manual responses were recorded via a standard keyboard. The experimental software was implemented in MATLAB (MathWorks, Natick, MA), using the Psychophysics (Brainard, 1997; Pelli, 1997) and EyeLink toolboxes (Cornelissen et al., 2002). Observers sat in a dimly illuminated room with their head positioned on a chin-forehead rest. Stimuli were presented at a viewing distance of 60 cm on a 21-inch gamma-linearized Sony GDM-F500R CRT screen (Tokyo, Japan) with a spatial resolution of 1024 by 768 pixels and a vertical refresh rate of 120 Hz.

Experimental design

In Experiment 1a (see Fig. 2a), each trial began with observers fixating a central black (~0 cd/m²) fixation dot (radius 0.1°) on a gray background (~60 cd/m²). Once stable fixation was detected within a 1.75° radius virtual circle centered on fixation for at least 200 ms, the trial started with the presentation of a circular dynamic 1/f noise background (r = 14.32°; average luminance ~60 cd/m²) that extended across the screen height and was windowed by a circular cosine ramp (width w = 2.39°). The background noise (including the later embedded orientation-filtered patch) was updated at 60 Hz, changing gradually from one noise image to another in four steps. After a random fixation period between 400 and 800 ms, in cued trials (half of trials, randomly intermixed) a circular pink color cue appeared for 75 ms, indicating the location of the upcoming discrimination signal (RGB: [204, 0, 102], ~60 cd/m², same dimensions as the upcoming discrimination signal). Following a delay of 100 ms, a local orientation signal, oriented 40° clockwise or counterclockwise from vertical and windowed by a circular raised cosine (r = 1.75°, w = 0.875°; see Footnote 1), was embedded in the background noise at a randomly selected position at one out of four retinal eccentricities (0°, 3.5°, 7°, or 10.5°). The orientation filter width σ was randomly selected for each trial (9 linear σ-steps between 30° and 70°). In the uncued trials, no color cue was presented, and the discrimination signal occurred at an unpredictable location (same eccentricities, timing, and specifications as in cued trials). After 50 ms, the orientation signal was masked by the reappearance of non-oriented noise for 500 ms, before the background noise disappeared, and observers reported the perceived orientation via button press (two-alternative forced choice). They were informed that their orientation report was non-speeded, and they received auditory feedback for incorrect responses.

Experiment 1. (a) Experiment 1a. Observers (N = 6) fixated a central fixation dot on dynamic 1/f noise background and aimed to discriminate a local orientation signal. This signal was presented at different eccentricities (see b) and either was preceded by a 100% valid color pre-cue (cued; results in d), or was not (uncued; results in c). (b) Visualization of the tested eccentricities. Color code matches the psychometric functions in c, d, and f. (c, d) Group-averaged psychometric functions (discrimination performance as a function of orientation filter width σ) for uncued (c) and cued trials (d). 75% discrimination thresholds (Th75) derived from individual observers’ psychometric functions are shown at the bottom of each plot with filled dots. Error bars denote the SEM. (e) Experiment 1b. The same observers fixated a central fixation dot on a uniform gray background and aimed to discriminate the orientation of a Gabor. The Gabor had the same size as the orientation signal in Experiment 1a and was presented at the same eccentricities (see b). (f) Group-averaged psychometric functions for Experiment 1b. Conventions as in c and d

Experiment 1b (see Fig. 2e). Task and timing were identical to Experiment 1a with the following differences: No dynamic 1/f noise background was presented. Instead, upon trial start a local stimulus stream alternating at 20 Hz between a vertical Gabor patch (2 cpd, random phase, full contrast, ~60 cd/m²) and a Gaussian pixel noise mask (composed of ~0.25°-wide pixels ranging randomly from black to white, ~60 cd/m²) was presented (Hanning et al., 2019a; Wollenberg et al., 2018). The stream had the same size and raised cosine window (r = 1.75°, w = 0.875°) and was presented at the same retinal eccentricities as the 1/f orientation signal in Experiment 1a (0°, 3.5°, 7°, or 10.5°). For a duration of 50 ms, the stream contained an orientation signal: a Gabor patch rotated clockwise or counterclockwise relative to the vertical. The tilt angle β was randomly selected for each trial (9 linear β-steps between 25° and 1°). To avoid apparent motion effects, after orientation signal presentation the stream continued with alternating noise patches and blanks. Observers indicated via button press in a non-speeded manner whether they had perceived the orientation to be tilted clockwise or counterclockwise, and received auditory feedback for incorrect responses.

Observers performed 15 experimental blocks (10 of Experiment 1a, 5 of Experiment 1b), each of 180 trials. Trials in which we detected blinks, accidental eye movements, or broken eye fixation (gaze deviating further than 1.75° from the instructed fixation location) were discarded. In total we included 10,223 trials in the analysis of the behavioral results for Experiment 1a (1704 ± 81 trials per observer; mean ± standard error of the mean [SEM]) and 5328 trials for Experiment 1b (888 ± 3 trials per observer).

Experiment 2 (see Fig. 3a). On a rectangular dynamic 1/f noise field (height 2.5° × width 20°, average luminance ~60 cd/m²) we presented five black (~0 cd/m²) circular frames (radius 1.0°), evenly spaced on the horizontal midline (± 0°, 4°, and 8° relative to noise background center). Observers were required to fixate the center of the middle frame. Once stable fixation was detected within a 1.75° radius around the fixation location for at least 200 ms, the trial started with a 300–1000 ms fixation period (duration randomly chosen), after which we embedded a local orientation signal (tilted ± 40° relative to vertical) in the background noise, windowed by a circular raised cosine (r = 1.0°, w = 0.875°). The orientation signal was presented at one out of nine evenly spaced horizontal positions (± 0°, 2°, 4°, 6°, and 8° relative to central fixation), and thus either centered within one of the frames or in between them. Observers were informed that the orientation signal would occur at each of the nine test locations with equal probability. After ~42 ms, the orientation signal was masked by the reappearance of non-oriented noise. Following a masking period of 300–650 ms, the dynamic 1/f background noise disappeared, and observers indicated via button press in a non-speeded manner whether they had perceived the orientation to be tilted clockwise or counterclockwise.

Experiment 2. (a) Experimental design. Observers (N = 6) fixated the central of five black frames presented on dynamic 1/f noise background. A brief orientation signal (here clockwise) was embedded either within or in between the frames. (b) Group average orientation discrimination performance (green line) as a function of horizontal test signal position (corresponding stimulus arrangement shown on top). The colored area indicates the SEM

To ensure a comparable performance level across individual observers, the orientation signal strength was adjusted via the width of the orientation filter in a threshold task preceding the experiment. The design of the threshold task resembled the main experiment, but no circular frames were presented, and the orientation signal was preceded by a 100% valid spatial pre-cue (same cue as in Experiment 1a). For each trial we randomly selected the orientation filter width out of five linearly spaced values (σ = 10° to 50°; note that we used narrower filter values compared with Exp. 1a due to the shorter presentation time and smaller size of the orientation signal). We determined the filter width corresponding to 90% discrimination accuracy individually for each observer by fitting cumulative Gaussian functions to the average discrimination performance per filter width σ (collapsed across signal locations) via maximum likelihood estimation, and used the determined filter width for the main experiment.

Observers performed 150 trials of the threshold task and 347–415 trials of the main experiment. Trials in which we detected blinks, accidental eye movements, or broken eye fixation (gaze deviating further than 1.75° from the required fixation position) were discarded. In total we included 2289 trials in the analysis of the behavioral results for Experiment 2 (382 ± 11 trials per observer).

Behavioral data analysis and visualization

For Experiment 1, we fitted cumulative Gaussian functions to each observer’s average discrimination accuracy (% correct) per filter width σ (Exp. 1a, Fig. 2c, d; also Exp. 2—threshold task) or tilt angle β (Exp. 1b, Fig. 2f), separately for each test eccentricity. Based on these psychometric functions, we estimated each observer’s 75% discrimination threshold (Th75) and plotted the averaged estimates (group-mean ± SEM) together with a group-averaged psychometric function. To quantify the effect of test signal eccentricity on visual performance, we conducted repeated-measures ANOVAs. We report Greenhouse-Geisser corrected p-values when the sphericity assumption was not met. To follow up null findings, we performed Bayesian statistical analyses for ANOVA designs (Rouder et al., 2012) using the default settings of the bayesFactor MATLAB toolbox (Krekelberg, 2022). Bayes factor values of B01 > 3 indicate evidence in favor of the null hypothesis (Kass & Raftery, 1995).
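A minimal Python sketch of such a psychometric fit is given below. It is not the analysis code used in the study: the parameterization (guess rate fixed at 0.5, no lapse rate), the coarse grid search in place of a numerical optimizer, the helper names, and the trial counts are all assumptions made for illustration.

```python
import math
from statistics import NormalDist


def p_correct(x, mu, s, guess=0.5):
    """Accuracy falls from 1.0 towards `guess` as filter width x grows."""
    return guess + (1.0 - guess) * NormalDist().cdf((mu - x) / s)


def neg_log_likelihood(params, widths, n_correct, n_trials):
    """Binomial negative log-likelihood of the counts under (mu, s)."""
    mu, s = params
    nll = 0.0
    for x, k, n in zip(widths, n_correct, n_trials):
        p = min(max(p_correct(x, mu, s), 1e-9), 1.0 - 1e-9)
        nll -= k * math.log(p) + (n - k) * math.log(1.0 - p)
    return nll


def fit_grid(widths, n_correct, n_trials):
    """Coarse maximum-likelihood fit by exhaustive search over integer (mu, s)."""
    grid = [(mu, s) for mu in range(20, 81) for s in range(2, 41)]
    return min(grid, key=lambda g: neg_log_likelihood(g, widths, n_correct, n_trials))


def threshold(mu, s, target=0.75, guess=0.5):
    """Filter width at which the fitted function crosses `target` accuracy."""
    q = (target - guess) / (1.0 - guess)
    return mu - s * NormalDist().inv_cdf(q)


# Hypothetical counts (correct out of 20 trials) at 9 widths from 30 to 70 deg.
widths = [30, 35, 40, 45, 50, 55, 60, 65, 70]
n_trials = [20] * 9
n_correct = [20, 19, 19, 17, 15, 13, 12, 10, 10]

mu_hat, s_hat = fit_grid(widths, n_correct, n_trials)
th75 = threshold(mu_hat, s_hat)
```

With a guess rate of 0.5 and no lapses, 75% correct is the midpoint of the function, so Th75 coincides with the fitted mean; other criteria, such as 90% correct, fall at narrower filter widths.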

For Experiment 2, we visualized discrimination accuracy across space by interpolating between the group-averaged discrimination accuracy (% correct) for each test location (± 0°, 2°, 4°, 6°, and 8° relative to central fixation). We used permutation tests to determine significant performance differences between two conditions or locations. We resampled our data to create a permutation distribution by randomly rearranging the labels of the respective conditions for each observer and computed the difference in sample means for 1000 permutation resamples (iterations). We then derived p-values by locating the actually observed difference (the difference between the group averages of the two conditions) on this permutation distribution; i.e., the p-value corresponds to the proportion of permuted differences in sample means that fell below or above the actually observed difference. All p-values were Bonferroni-corrected for multiple comparisons.
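One common reading of this procedure can be sketched in Python as follows. The sketch makes several assumptions that the description leaves open: labels are swapped on per-observer means (the study may have permuted at the trial level), the test is two-sided, and the function names and accuracy values are hypothetical.

```python
import random
from statistics import mean


def paired_permutation_p(cond_a, cond_b, n_perm=1000, seed=0):
    """Two-sided p-value from swapping condition labels within observers."""
    rng = random.Random(seed)
    diffs = [a - b for a, b in zip(cond_a, cond_b)]
    observed = abs(mean(diffs))
    extreme = 0
    for _ in range(n_perm):
        # Swapping the two labels for one observer flips the sign of that difference.
        permuted = [d if rng.random() < 0.5 else -d for d in diffs]
        if abs(mean(permuted)) >= observed:
            extreme += 1
    return extreme / n_perm


def bonferroni(p, n_comparisons):
    """Bonferroni correction, capped at 1."""
    return min(1.0, p * n_comparisons)


# Hypothetical per-observer accuracies (% correct) for six observers.
framed = [78.0, 71.5, 80.2, 69.0, 83.1, 75.4]
unframed = [54.0, 50.5, 56.3, 49.8, 55.0, 51.2]
p = bonferroni(paired_permutation_p(framed, unframed), n_comparisons=3)
```

Fixing the seed makes the resampling reproducible; the number of comparisons passed to the correction should match the number of tests actually run.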

Results

To demonstrate that the noise stimulus can accurately measure visual sensitivity across the scene, in Experiment 1a we tested local 1/f noise orientation discrimination performance at various retinal eccentricities with and without spatial attention focused on the test signal location. Observers fixated a central fixation target presented on a circular, dynamic 1/f noise background (Fig. 2a). After a fixation period, a local orientation signal was presented for 50 ms, either centered on the fixation or at a randomly chosen position 3.5°, 7°, or 10.5° (degree of visual angle) away from fixation (Fig. 2b). In half of the trials (cued trials; see Supplementary Video S1), we manipulated the allocation of spatial attention by presenting a brief, salient color pre-cue that indicated the test signal location shortly before signal onset (100% valid); in the other half of trials (uncued trials; see Supplementary Video S2) no information regarding signal location or presentation time was provided. At the end of each trial, observers indicated via button press whether they had perceived an orientation clockwise or counterclockwise relative to vertical (two-alternative forced choice task).

When attention was not directed via the spatial pre-cue (uncued trials; Fig. 2c), discrimination performance scaled with the width of the orientation filter (used to create the orientation signal) if the orientation signal was presented at the center of gaze (0° eccentricity from fixation): the narrower the filter (i.e., the stronger the orientation signal), the higher the proportion of correct orientation judgements. However, for all peripheral test locations (3.5°, 7.0°, and 10.5° eccentricity), observers’ accuracy was low and hardly increased with decreasing filter width. Thus, when observers were unaware of test location and presentation time, they were able to successfully discriminate the orientation signal only when it occurred right at the center of their gaze.

The superior discrimination performance at the central versus peripheral locations may not seem surprising, considering that the visual system's acuity and perceptual sensitivity are highest at the fovea and decay towards the periphery (Anton-Erxleben & Carrasco, 2013; Itti & Koch, 2001). As a consequence, we clearly perceive fine details in the center of gaze (where we are sensitive to high spatial frequencies) that we cannot distinguish when viewing them peripherally (where we are more sensitive to lower spatial frequencies). The 1/f property of the orientation signal, however, should allow equal orientation discriminability across eccentricities. This was in fact the case when the 1/f orientation signal was preceded by a salient, 100% valid pre-cue, which can be assumed to automatically attract exogenous spatial attention to its location (Anton-Erxleben & Carrasco, 2013; Carrasco, 2011). Discrimination accuracy in the cued trials (Fig. 2d) increased with decreasing filter width in a highly similar fashion for all tested eccentricities. The derived 75% perceptual discrimination thresholds (see Methods) remained stable from the fovea (Th75 0°: σ = 52.84° ± 4.83°; mean ± SEM) up to the furthest tested retinal eccentricity (Th75 3.5°: σ = 52.11° ± 2.91°, 7.0°: σ = 52.91° ± 3.50°, 10.5°: σ = 49.13° ± 2.08°). Accordingly, a repeated-measures ANOVA with the factor eccentricity (0°, 3.5°, 7°, 10.5°) yielded a nonsignificant main effect, F(3, 15) = 0.677, p = 0.579, suggesting a similar discrimination performance from fovea to periphery. A subsequent Bayesian analysis quantifying this null finding supported the absence of an eccentricity effect (B01 = 6.00), indicating that observers discriminated orientation signals presented up to 10.5° in the periphery as well as in their center of gaze.
This shows that local 1/f orientation discrimination performance is largely independent of test signal eccentricity—as long as the signal is presented within the focus of attention. When not experimentally manipulated, attention is normally coupled to the focus of gaze, which explains why the orientation signal could only be well discriminated at the central fixation location. This location is also where observers should anchor their attention focus to maximize chances of capturing the discrimination signal occurring anywhere in the surrounding noise field. The observation that even strong 1/f orientation signals (created with a narrow filter) could not be discriminated peripherally without spatial attention being deployed towards them (Fig. 2c, uncued trials 3.5°–10.5°) demonstrates that the orientation signals do not “pop out,” i.e., they do not themselves attract attention.

In summary, we established a consistent relationship between orientation filter width and discrimination accuracy. Discrimination task difficulty thus can be continuously manipulated by adjusting the orientation filter width. Moreover, and of particular relevance for the utility of the noise protocol, local 1/f orientation discrimination performance is largely independent of visual eccentricity. This is a key advantage over conventional psychophysical protocols, for which discrimination performance naturally decreases with increasing distance from fixation.

We illustrate this common issue in Experiment 1b, using an experimental design that was matched to Experiment 1a (Fig. 2e). The same observers were asked to discriminate the orientation (clockwise vs. counterclockwise) of Gabor patches that had a fixed size, contrast, and spatial frequency and were presented at the same retinal eccentricities as in Experiment 1a. Akin to manipulating orientation filter width (Experiment 1a), we varied the tilt angle, a common approach to titrate orientation discrimination task difficulty in a Gabor protocol. Fig. 2f shows the typical eccentricity dependence seen in conventional perceptual tasks: Foveal discrimination performance sharply increased as a function of tilt angle, yielding a low 75% threshold (Th75 0°: β = 5.80° ± 2.10°). Across visual eccentricities, however, orientation discrimination accuracy decreased, requiring larger tilt angles for more eccentric test locations to achieve equivalent 75% discrimination accuracy (Th75 3.5°: β = 11.84° ± 4.29°, 7.0°: β = 24.35° ± 8.54°, 10.5°: β = 19.30° ± 2.96°). A repeated-measures ANOVA revealed a significant main effect of eccentricity, F(3, 15) = 6.157, p = 0.006. This decay of visual sensitivity with increasing distance from the fovea is common for conventional perceptual measures and can be counteracted by upscaling the stimulus size (according to cortical magnification), increasing contrast, or lowering spatial frequency information. Experiment 1a shows that such adjustments are not necessary with the pink noise stimulus, since the 1/f property ensures that the same orientation signal can be discriminated equally well at foveal and peripheral locations.

We made use of this feature in Experiment 2, in which we evaluated the protocol’s capability of assessing discrimination performance across the visual field, as well as the potential shaping influence of items on this measurement. We presented five circular frames on a rectangular dynamic 1/f noise field and asked observers to fixate the center of the middle item (Fig. 3a). Following a fixation period, we briefly presented a local 1/f orientation signal at one out of nine locations along the horizontal (± 0°, 2°, 4°, 6°, or 8° relative to the center)—either inside one of the frames or between them. After a masking period, observers indicated their orientation discrimination judgement. Critically, the presented frames were completely task-irrelevant, and the critical orientation signal was equally likely to occur at any of the evenly spaced locations (independent of whether the location was framed by an outline or not). To ensure a comparable discrimination performance across observers, orientation signal strength was individually adjusted prior to the experiment (see Methods).

As suspected, the presence of the visual outlines selectively modulated perception. Compared with the average discrimination accuracy for orientation signals presented outside the frames (52.53 ± 1.91%; mean ± SEM), local discrimination performance was significantly improved whenever the orientation signal occurred within one of the frames. For each observer, we found spatially specific peaks in orientation discrimination accuracy inside frames at all three tested eccentricities (Fig. 3b; framed signal at central 0°: 76.67 ± 5.48% vs. no-frame: p = 0.003; framed signal at ± 4°: 61.71 ± 2.18% vs. no-frame: p = 0.003; framed signal at ± 8°: 69.99 ± 4.27% vs. no-frame: p = 0.003). The consistent sensitivity benefit for signals presented inside a visual structure demonstrates that our perception is markedly shaped by the presence of items in the visual scene. Critically, this item-induced bias was uneven across different eccentricities (effect size 0°: d = 2.63; 4°: d = 2.01; 8°: d = 2.36). This demonstrates that visual structures pose the risk of compromising the attention measurement in a non-systematic way. In line with previous work showing the impact of placeholder objects on attentional modulations of visual perception (Puntiroli et al., 2018; Szinte et al., 2019; Taylor et al., 2015), these findings underline the necessity of an unbiased, item-free approach to map perceptual dynamics across the visual field in the absence of object-like structures.
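For readers who want to compute a comparable effect size on their own data, here is a minimal sketch of a paired-samples Cohen's d (mean of the within-observer differences divided by their standard deviation). Which variant of d was used for the values above is not stated in this section, so this choice is an assumption, and the accuracy values in the example are invented purely for illustration.

```python
import statistics

def cohens_d_paired(condition_a, condition_b):
    """Paired-samples effect size: mean difference / SD of the differences."""
    diffs = [a - b for a, b in zip(condition_a, condition_b)]
    return statistics.mean(diffs) / statistics.stdev(diffs)

# Hypothetical per-observer accuracies (proportion correct), not real data.
framed_acc = [0.78, 0.70, 0.85, 0.66, 0.74, 0.81]
unframed_acc = [0.55, 0.50, 0.58, 0.49, 0.52, 0.54]

d = cohens_d_paired(framed_acc, unframed_acc)
print(f"paired Cohen's d = {d:.2f}")
```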

Discussion

Conventional psychophysical paradigms for assessing visual attention rely on localized test items, which may have the adverse side effect of distorting the attention measurement by shaping visual perception across the scene. We created a full-field 1/f noise stimulus that allows for an unbiased investigation of attention across space and time by meeting the following criteria: (1) The protocol assesses visual attention without object-like structures, to avoid artificially biasing attention towards potential test locations. This is achieved by seamlessly integrating the orientation signal into full-field 1/f noise, which prevents both automatic bottom-up biases (i.e., prioritized processing of locations containing visual items as compared with “empty” locations) and strategic top-down biases (i.e., observers anticipating potential test locations and selectively deploying processing resources towards them). (2) To ensure that no information is conveyed about presentation time and duration of the test stimulus, the orientation signal is temporally embedded into a continuously changing full-field noise background. We achieved this by dynamically updating the 1/f noise field, such that local signal onset and offset are concealed by continuous full-field changes. (3) The overall discrimination task difficulty can be precisely adjusted. In the 1/f noise protocol, task difficulty scales with orientation filter width: a narrower filter yields a stronger, and thus better distinguishable, orientation signal. (4) Finally, the orientation signal blends sufficiently well into the background pattern, both spatially and temporally, so that it does not “pop out” (i.e., attract attention by itself), as conventional abrupt-onset stimuli do (Jonides & Yantis, 1988; Müller & Rabbitt, 1989; Theeuwes, 1991; White et al., 2014).
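Criterion (3) can be illustrated in the frequency domain. The sketch below assigns each spatial-frequency component an amplitude that falls off as 1/f and, for the signal patch, keeps only components whose orientation lies within a band around the target orientation. The hard-edged band, the function names, and the parameters `center_deg` and `width_deg` are assumptions made for illustration; the filter actually used in the protocol may be shaped differently.

```python
import math

def pink_amplitude(fx, fy):
    """Amplitude of a spatial-frequency component, assumed to fall off as 1/f."""
    f = math.hypot(fx, fy)
    return 0.0 if f == 0 else 1.0 / f  # the DC component is dropped

def orientation_gain(fx, fy, center_deg, width_deg):
    """1.0 if the component's orientation lies within the pass band, else 0.0.

    Orientation is measured in the frequency plane and is axial
    (theta and theta + 180 deg are equivalent), hence the modulo 180.
    """
    theta = math.degrees(math.atan2(fy, fx)) % 180.0
    dist = abs(theta - (center_deg % 180.0))
    dist = min(dist, 180.0 - dist)  # shortest axial distance
    return 1.0 if dist <= width_deg / 2.0 else 0.0

def signal_amplitude(fx, fy, center_deg, width_deg):
    """Amplitude of a component inside the orientation-filtered signal patch."""
    return pink_amplitude(fx, fy) * orientation_gain(fx, fy, center_deg, width_deg)

def passed_fraction(center_deg, width_deg, steps=360):
    """Fraction of orientations (sampled on a half circle) that pass the filter."""
    passed = 0
    for i in range(steps):
        angle = math.radians(180.0 * i / steps)
        passed += orientation_gain(math.cos(angle), math.sin(angle), center_deg, width_deg)
    return passed / steps

print(passed_fraction(45.0, 20.0), passed_fraction(45.0, 60.0))
```

In this sketch, reducing `width_deg` restricts the signal patch to orientations closer to the target orientation, which is the sense in which a narrower filter yields a more distinguishable signal.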

We conducted a first experiment to verify that the 1/f noise protocol in fact meets the criteria established above. Experiment 1a demonstrates that discrimination accuracy indeed scales with orientation filter width: When presented in the focus of attention, narrower orientation signals were discriminated with increasingly higher accuracy. This validates that task difficulty can be flexibly calibrated, an essential requirement to prevent floor and ceiling effects (i.e., the discrimination task is too hard or too easy) and to account for inter-individual performance differences as well as potential learning effects across multiple test sessions or experiments (Hanning et al., 2019a). The relationship between orientation filter width and discrimination performance is reminiscent of the link between contrast strength and visual performance: Increasing stimulus contrast, similar to narrowing the orientation filter, results in enhanced orientation discrimination accuracy (Nachmias, 1967; Pestilli et al., 2009; Skottun et al., 1987). While the effect of stimulus contrast on visual performance has already been linked to neurophysiology (Boynton et al., 1999), future studies need to evaluate a potentially similar relationship between 1/f orientation filter width (and visual performance) and neural responses. Such a link, akin to the established contrast response function, would qualify the 1/f noise stimulus to investigate the computational and neurophysiological mechanisms underlying the effects of different types of attention on visual perception.

Our results furthermore show that the orientation signal blends sufficiently well with the background that it can only be successfully discriminated when it is attended: If the signal was not preceded by a salient cue biasing spatial attention to the test signal location, observers' discrimination performance remained at chance level. This verifies that the orientation signal, as intended, did not “pop out”—which is a common undesired side effect of transient visual signals (Jonides & Yantis, 1988; Müller & Rabbitt, 1989; Theeuwes, 1991; White et al., 2014). Discrimination signals that “pop out” can be assumed to attract attention and therefore to bias the attention measurement. In conventional paradigms, attentional pop-out is typically avoided by reducing the overall baseline performance by increasing the number of distractor items presented alongside the discrimination target (Jonikaitis & Deubel, 2011; Szinte et al., 2018; Wollenberg et al., 2018). Our data demonstrate that the 1/f noise stimulus enables a subtle assessment of attention without employing any distractors. It therefore is a promising tool for the investigation of spatial and temporal perceptual dynamics, also in the context of motor actions. Discrimination signals that capture attention not only prevent valid conclusions about the actual distribution of attention in space, but also interfere with motor programming: We recently observed increased saccade latencies when sudden-onset test targets were presented during movement preparation (Hanning et al., 2019a). This effect is compatible with the phenomenon of saccadic inhibition, whereby a transient change in the scene causes a depression in saccadic frequency approximately 100 ms thereafter (Buonocore & McIntosh, 2008; Reingold & Stampe, 2002). 
Given the tight coupling of eye movement preparation and visual attention (Deubel & Schneider, 1996; Kowler et al., 1995; Montagnini & Castet, 2007; Rolfs et al., 2011), interrupting saccade preparation likely also affects the temporal dynamics of visual attention. Embedding the discrimination signal in a continuously changing display, such as a dynamic noise background, prevents this potential interference (Hanning et al., 2019a).

The evaluation of perceptual thresholds across retinal eccentricities confirms that 1/f orientation signals can be equally well distinguished at foveal and peripheral locations. This is an outstanding property specific to the 1/f characteristic of the noise stimulus. As exemplified in Experiment 1b, sensitivity for most visual features naturally decreases with increasing distance from the center of gaze (Itti & Koch, 2001; Levi et al., 1985). Spatiotemporal maps of visual attention created with conventional test stimuli are affected by these perceptual inhomogeneities, which prevents a direct comparison of attentional effects on foveal and peripheral visual performance. To account for this confound, the discrimination signal strength needs to be adjusted separately for each tested retinal eccentricity (e.g., Koenig-Robert & VanRullen, 2011; Szinte et al., 2019), which is time-consuming, comparatively more error-prone, and less flexible. Since local 1/f orientation sensitivity is largely constant across visual eccentricities, the noise stimulus is resistant to visual sensitivity changes across space and thus offers the unique opportunity to directly map attentional modulations of visual perception continuously across space, without the need to increase discrimination signal strength with increasing test eccentricity.
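For comparison, this is roughly what the per-eccentricity adjustment looks like for conventional stimuli when it is done by size scaling. The sketch uses the common inverse-linear description of cortical magnification, in which stimulus size is multiplied by (1 + E / E2). The value of `e2_deg` and the foveal size are placeholders chosen for illustration, not parameters from this study.

```python
def m_scaled_size(foveal_size_deg, eccentricity_deg, e2_deg=2.0):
    """Stimulus size that compensates for cortical magnification.

    Assumes the inverse-linear form M(E) = M0 / (1 + E / E2); E2 is the
    eccentricity at which the size has to double. The default is a placeholder.
    """
    return foveal_size_deg * (1.0 + eccentricity_deg / e2_deg)

# Eccentricities tested in Experiment 1b; the foveal size of 1 deg is hypothetical.
for ecc in (0.0, 3.5, 7.0, 10.5):
    print(f"{ecc:4.1f} deg -> scaled size {m_scaled_size(1.0, ecc):.2f} deg")
```

With the 1/f orientation signal, no such eccentricity-dependent adjustment is needed according to the results of Experiment 1a.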

Another key advantage of the noise protocol is its capability of assessing visual sensitivity without actual test items. In Experiment 2 we demonstrate why this is crucial for an unbiased assessment of visual performance across space: The circular outlines that we presented on the otherwise item-free noise field markedly biased visual perception, as they elicited consistent, spatially specific performance benefits inside each visual structure. This verifies the hypothesized shaping impact of even task-irrelevant items on visual sensitivity across space. It is to be expected that conventional paradigms that rely on the presentation of discrete test and distractor items likewise bias processing resources towards the presented stimuli (as compared with item-free space). In addition to this automatic, bottom-up component, observers likely strategically prioritize potential target locations, since those may contain task-relevant information. It has been shown that (un)certainty concerning the target stimulus location—experimentally manipulated by indicating the size of the area to be attended (i.e., the “attention field”) with placeholder items—affects the mechanisms by which attention modulates visual perception and the underlying neuronal response (Herrmann et al., 2010; Li et al., 2021; Reynolds & Heeger, 2009). This demonstrates that the spatial distribution of processing resources across the visual field is markedly shaped by scene-structuring objects—affecting both behavioral and neurophysiological correlates of visual perception—and highlights the relevance of a protocol that allows us to measure attention in an item-free, unbiased manner.

Since the noise protocol is not bound to local test items, the orientation signal can take any shape and size, and can be embedded into its background with soft boundaries for any desired presentation duration. This offers a new opportunity to directly investigate the temporal and spatial properties of the focus of attention and to reconcile previous findings, based on which it has been characterized as a moving spotlight (e.g., Shulman et al., 1979; Tsal, 1983), a zoom lens (e.g., Eriksen & James, 1986), a gradient of processing resources (e.g., LaBerge & Brown, 1989), or a Mexican hat (e.g., Cutzu & Tsotsos, 2003; Müller et al., 2005). The noise protocol also allows us to (re)examine the size of the attended area, for which previous studies have yielded divergent results (e.g., Castiello & Umiltà, 1990; Eriksen & Hoffman, 1972; Nakayama & Mackeben, 1989; Tkacz-Domb & Yeshurun, 2018). Moreover, it can shed new light on a fundamental question of attention research, namely whether attention is space-based or object-based (e.g., Duncan, 1984; Hanning & Deubel, 2022; Lavie & Driver, 1996; Moore et al., 1998; for a review, see Chen, 2012). Finally, accurate estimation of the size and shape of the attention focus, as well as its temporal and spatial dynamics during voluntary (endogenous) or automatic (exogenous) attentional orienting, or prior to goal-directed actions, has crucial implications for conceptual and computational models of visual attention (e.g., Denison et al., 2021; Jigo et al., 2021; Maunsell, 2015; Reynolds & Heeger, 2009; Schneider, 1995).

Objects undeniably define the scene (Bar, 2004; Võ et al., 2019) and guide our actions and memories (Draschkow & Võ, 2017; Helbing et al., 2020; Torralba et al., 2006). For this reason, there are numerous scientific questions for which an object-based measurement is preferable. Several other questions, however, are better investigated in the absence of localized test items, for instance when evaluating the distribution of attention surrounding salient or relevant objects (exogenous and endogenous attention) or when assessing the spread of attention across visual structures (object-based attention) or between presented items (e.g., during visual search). Moreover, the item-free 1/f noise protocol is particularly suitable to study attentional modulations in the context of motor actions (premotor attention; for a recent proof of concept, see Hanning & Deubel, 2022) and offers promising clinical applications, as it allows one, for instance, to precisely map out regions with perceptual or attentional deficits.

To conclude, the presented protocol offers several decisive advantages over conventional paradigms that rely on transient, localized test stimuli to measure the perceptual correlates of visual attention. By conveying neither spatial nor temporal information on where or when the test signal is presented, the stimulus does not bias observers’ expectations and thus enables an undistorted attention assessment. Since visual sensitivity for local 1/f orientation signals does not fall off with retinal eccentricity, the protocol allows one to directly compare performance from fovea to periphery, without the need to scale the signal strength with retinal distance. These unique features render the dynamic noise protocol an ideal tool to map the distribution of visual attention across space and time—and to address research questions whose investigation has been limited or impossible with conventional, object-based approaches.

Notes

The orientation signal was presented inside a circular window of radius r, with softened boundaries that were created with a cosine ramp of width w.
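The windowing described in this note can be sketched as a radial blend weight. The code below assumes that the cosine ramp occupies the outer `w` of the radius `r`, so that the weight is 1 up to `r - w` and falls to 0 at `r`; whether the ramp lies inside or outside the radius is not specified here, so this placement, like the function names, is an assumption.

```python
import math

def window_weight(distance, r, w):
    """Blend weight of the signal at a given distance from the window center.

    1 on the inner plateau, 0 beyond radius r, raised-cosine ramp in between.
    The ramp is assumed to occupy the outer w of the radius.
    """
    if w <= 0:
        return 1.0 if distance <= r else 0.0  # hard-edged window
    if distance <= r - w:
        return 1.0
    if distance >= r:
        return 0.0
    return 0.5 * (1.0 + math.cos(math.pi * (distance - (r - w)) / w))

def blend(signal_px, background_px, distance, r, w):
    """Mix a signal pixel into the background according to the window weight."""
    a = window_weight(distance, r, w)
    return a * signal_px + (1.0 - a) * background_px

# Weight profile for a hypothetical window of radius 3 with a ramp of width 1.
print([round(window_weight(d / 4.0, 3.0, 1.0), 3) for d in range(0, 14)])
```

A smooth ramp of this kind avoids a visible edge between the filtered patch and the surrounding noise, in line with the soft boundaries mentioned in the Discussion.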

References

Anton-Erxleben, K., & Carrasco, M. (2013). Attentional enhancement of spatial resolution: linking behavioural and neurophysiological evidence. Nature Reviews Neuroscience, 14(3), 188–200.

Bar, M. (2004). Visual objects in context. Nature Reviews Neuroscience, 5(8), 617–629.

Boynton, G. M., Demb, J. B., Glover, G. H., & Heeger, D. J. (1999). Neuronal basis of contrast discrimination. Vision Research, 39(2), 257–269.

Brainard, D. H. (1997). The psychophysics toolbox. Spatial Vision, 10(4), 433–436.

Buonocore, A., & McIntosh, R. D. (2008). Saccadic inhibition underlies the remote distractor effect. Experimental Brain Research, 191(1), 117–122.

Carrasco, M. (2011). Visual attention: The past 25 years. Vision Research, 51(13), 1484–1525.

Carrasco, M., & Barbot, A. (2019). Spatial attention alters visual appearance. Current Opinion in Psychology, 29, 56–64.

Castiello, U., & Umiltà, C. (1990). Size of the attentional focus and efficiency of processing. Acta Psychologica, 73(3), 195–209.

Chen, Z. (2012). Object-based attention: A tutorial review. Attention, Perception, & Psychophysics, 74(5), 784–802.

Cornelissen, F. W., Peters, E. M., & Palmer, J. (2002). The Eyelink Toolbox: eye tracking with MATLAB and the Psychophysics Toolbox. Behavior Research Methods, Instruments, & Computers, 34(4), 613–617.

Cutzu, F., & Tsotsos, J. K. (2003). The selective tuning model of attention: psychophysical evidence for a suppressive annulus around an attended item. Vision Research, 43(2), 205–219.

Denison, R. N., Carrasco, M., & Heeger, D. J. (2021). A dynamic normalization model of temporal attention. Nature Human Behaviour, 1–12.

Deubel, H., & Schneider, W. X. (1996). Saccade target selection and object recognition: Evidence for a common attentional mechanism. Vision Research, 36(12), 1827–1837.

Draschkow, D., & Võ, M. L. H. (2017). Scene grammar shapes the way we interact with objects, strengthens memories, and speeds search. Scientific Reports, 7(1), 1–12.

Duncan, J. (1984). Selective attention and the organization of visual information. Journal of Experimental Psychology: General, 113(4), 501.

Eriksen, C. W., & Hoffman, J. E. (1972). Temporal and spatial characteristics of selective encoding from visual displays. Perception & Psychophysics, 12(2), 201–204.

Eriksen, C. W., & James, J. D. S. (1986). Visual attention within and around the field of focal attention: A zoom lens model. Perception & Psychophysics, 40(4), 225–240.

Field, D. J. (1987). Relations between the statistics of natural images and the response properties of cortical cells. Journal of the Optical Society of America A, 4(12), 2379–2394.

Gandhi, S. P., Heeger, D. J., & Boynton, G. M. (1999). Spatial attention affects brain activity in human primary visual cortex. Proceedings of the National Academy of Sciences, 96(6), 3314–3319.

Hanning, N. M., & Deubel, H. (2020). Attention capture outside the oculomotor range. Current Biology, 30(22), R1353–R1355.

Hanning, N. M., & Deubel, H. (2022). The effect of spatial structure on presaccadic attention costs and benefits assessed with dynamic 1/f noise. Journal of Neurophysiology, 127(6), 1586–1592.

Hanning, N. M., Deubel, H., & Szinte, M. (2019a). Sensitivity measures of visuospatial attention. Journal of Vision, 19(12), 17.

Hanning, N. M., Szinte, M., & Deubel, H. (2019b). Visual attention is not limited to the oculomotor range. Proceedings of the National Academy of Sciences, 116(19), 9665–9670.

Helbing, J., Draschkow, D., & Võ, M. L. H. (2020). Search superiority: Goal-directed attentional allocation creates more reliable incidental identity and location memory than explicit encoding in naturalistic virtual environments. Cognition, 196, 104147.

Herrmann, K., Montaser-Kouhsari, L., Carrasco, M., & Heeger, D. J. (2010). When size matters: attention affects performance by contrast or response gain. Nature Neuroscience, 13(12), 1554–1559.

Hillyard, S. A., & Anllo-Vento, L. (1998). Event-related brain potentials in the study of visual selective attention. Proceedings of the National Academy of Sciences, 95(3), 781–787.

Itti, L., & Koch, C. (2001). Computational modelling of visual attention. Nature Reviews Neuroscience, 2(3), 194–203.

Jigo, M., & Carrasco, M. (2020). Differential impact of exogenous and endogenous attention on the contrast sensitivity function across eccentricity. Journal of Vision, 20(6), 11.

Jigo, M., Heeger, D. J., & Carrasco, M. (2021). An image-computable model of how endogenous and exogenous attention differentially alter visual perception. Proceedings of the National Academy of Sciences, 118(33), e2106436118.

Jonides, J., & Yantis, S. (1988). Uniqueness of abrupt visual onset in capturing attention. Perception & Psychophysics, 43(4), 346–354.

Jonikaitis, D., & Deubel, H. (2011). Independent allocation of attention to eye and hand targets in coordinated eye-hand movements. Psychological Science, 22(3), 339–347.

Kass, R. E., & Raftery, A. E. (1995). Bayes factors. Journal of the American Statistical Association, 90(430), 773–795.

Koenig-Robert, R., & VanRullen, R. (2011). Spatiotemporal mapping of visual attention. Journal of Vision, 11(14), 12.

Kowler, E., Anderson, E., Dosher, B., & Blaser, E. (1995). The role of attention in the programming of saccades. Vision Research, 35(13), 1897–1916.

Krekelberg, B. (2022). A Matlab package for Bayes Factor statistical analysis. GitHub. https://zenodo.org/badge/latestdoi/162604707.

LaBerge, D., & Brown, V. (1989). Theory of attentional operations in shape identification. Psychological Review, 96(1), 101–124.

Lavie, N., & Driver, J. (1996). On the spatial extent of attention in object-based visual selection. Perception & Psychophysics, 58(8), 1238–1251.

Lee, D. K., Itti, L., Koch, C., & Braun, J. (1999). Attention activates winner-take-all competition among visual filters. Nature Neuroscience, 2(4), 375–381.

Levi, D. M., Klein, S. A., & Aitsebaomo, A. P. (1985). Vernier acuity, crowding and cortical magnification. Vision Research, 25(7), 963–977.

Li, H. H., Pan, J., & Carrasco, M. (2021). Different computations underlie overt presaccadic and covert spatial attention. Nature Human Behaviour, 1–14.

Macmillan, N. A., & Creelman, C. D. (1991). Detection theory: A user’s guide. Cambridge University Press.

Maunsell, J. H. (2015). Neuronal mechanisms of visual attention. Annual Review of Vision Science, 1, 373–391.

Montagnini, A., & Castet, E. (2007). Spatiotemporal dynamics of visual attention during saccade preparation: Independence and coupling between attention and movement planning. Journal of Vision, 7(14), 8.

Moore, C. M., Yantis, S., & Vaughan, B. (1998). Object-based visual attention: Evidence from perceptual completion. Psychological Science, 9, 104–110.

Müller, H. J., & Rabbitt, P. M. (1989). Reflexive and voluntary orienting of visual attention: time course of activation and resistance to interruption. Journal of Experimental Psychology: Human Perception and Performance, 15(2), 315–330.

Müller, N. G., Mollenhauer, M., Rösler, A., & Kleinschmidt, A. (2005). The attentional field has a Mexican hat distribution. Vision Research, 45(9), 1129–1137.

Nachmias, J. (1967). Effect of exposure duration on visual contrast sensitivity with square-wave gratings. Journal of the Optical Society of America, 57(3), 421–427.

Nakayama, K., & Mackeben, M. (1989). Sustained and transient components of focal visual attention. Vision Research, 29(11), 1631–1647.

Pelli, D. G. (1997). The VideoToolbox software for visual psychophysics: Transforming numbers into movies. Spatial Vision, 10(4), 437–442.

Pestilli, F., Ling, S., & Carrasco, M. (2009). A population-coding model of attention’s influence on contrast response: Estimating neural effects from psychophysical data. Vision Research, 49(10), 1144–1153.

Posner, M. I., Snyder, C. R., & Davidson, B. J. (1980). Attention and the detection of signals. Journal of Experimental Psychology, 109(2), 160–174.

Puntiroli, M., Kerzel, D., & Born, S. (2018). Placeholder objects shape spatial attention effects before eye movements. Journal of Vision, 18(6), 1.

Reingold, E. M., & Stampe, D. M. (2002). Saccadic inhibition in voluntary and reflexive saccades. Journal of Cognitive Neuroscience, 14(3), 371–388.

Reynolds, J. H., & Heeger, D. J. (2009). The normalization model of attention. Neuron, 61(2), 168–185.

Reynolds, J. H., Pasternak, T., & Desimone, R. (2000). Attention increases sensitivity of V4 neurons. Neuron, 26(3), 703–714.

Rolfs, M., & Carrasco, M. (2012). Rapid simultaneous enhancement of visual sensitivity and perceived contrast during saccade preparation. Journal of Neuroscience, 32(40), 13744–13752.

Rolfs, M., Jonikaitis, D., Deubel, H., & Cavanagh, P. (2011). Predictive remapping of attention across eye movements. Nature Neuroscience, 14(2), 252–256.

Rouder, J. N., Morey, R. D., Speckman, P. L., & Province, J. M. (2012). Default Bayes factors for ANOVA designs. Journal of Mathematical Psychology, 56(5), 356–374.

Santee, J. L., & Egeth, H. E. (1982). Do reaction time and accuracy measure the same aspects of letter recognition? Journal of Experimental Psychology: Human Perception and Performance, 8(4), 489–501.

Schneider, W. X. (1995). VAM: A neuro-cognitive model for visual attention control of segmentation, object recognition, and space-based motor action. Visual Cognition, 2(2-3), 331–376.

Shulman, G. L., Remington, R. W., & Mclean, J. P. (1979). Moving attention through visual space. Journal of Experimental Psychology: Human Perception and Performance, 5(3), 522–526.

Shurygina, O., Pooresmaeili, A., & Rolfs, M. (2021). Pre-saccadic attention spreads to stimuli forming a perceptual group with the saccade target. Cortex, 140, 179–198.

Simoncelli, E. P., & Olshausen, B. A. (2001). Natural image statistics and neural representation. Annual Review of Neuroscience, 24(1), 1193–1216.

Skottun, B. C., Bradley, A., Sclar, G., Ohzawa, I., & Freeman, R. D. (1987). The effects of contrast on visual orientation and spatial frequency discrimination: a comparison of single cells and behavior. Journal of Neurophysiology, 57(3), 773–786.

Szinte, M., Carrasco, M., Cavanagh, P., & Rolfs, M. (2015). Attentional trade-offs maintain the tracking of moving objects across saccades. Journal of Neurophysiology, 113(7), 2220–2231.

Szinte, M., Jonikaitis, D., Rangelov, D., & Deubel, H. (2018). Pre-saccadic remapping relies on dynamics of spatial attention. eLife, 7, e37598.

Szinte, M., Puntiroli, M., & Deubel, H. (2019). The spread of presaccadic attention depends on the spatial configuration of the visual scene. Scientific Reports, 9, 14034.

Taylor, J. E. T., Chan, D., Bennett, P. J., & Pratt, J. (2015). Attentional cartography: mapping the distribution of attention across time and space. Attention, Perception, & Psychophysics, 77(7), 2240–2246.

Theeuwes, J. (1991). Exogenous and endogenous control of attention: The effect of visual onsets and offsets. Perception & Psychophysics, 49(1), 83–90.

Tkacz-Domb, S., & Yeshurun, Y. (2018). The size of the attentional window when measured by the pupillary response to light. Scientific Reports, 8, 11878.

Torralba, A., Oliva, A., Castelhano, M. S., & Henderson, J. M. (2006). Contextual guidance of eye movements and attention in real-world scenes: the role of global features in object search. Psychological Review, 113(4), 766–786.

Treue, S. (2001). Neural correlates of attention in primate visual cortex. Trends in Neurosciences, 24(5), 295–300.

Tsal, Y. (1983). Movement of attention across the visual field. Journal of Experimental Psychology: Human Perception and Performance, 9(4), 523–530.

Virsu, V., & Rovamo, J. (1979). Visual resolution, contrast sensitivity, and the cortical magnification factor. Experimental Brain Research, 37(3), 475–494.

Võ, M. L.-H., Boettcher, S. E. P., & Draschkow, D. (2019). Reading scenes: How scene grammar guides attention and aids perception in real-world environments.

White, A. L., Lunau, R., & Carrasco, M. (2014). The attentional effects of single cues and color singletons on visual sensitivity. Journal of Experimental Psychology: Human Perception and Performance, 40(2), 639–652.

Wollenberg, L., Deubel, H., & Szinte, M. (2018). Visual attention is not deployed at the endpoint of averaging saccades. PLoS Biology, 16(6), e2006548.

Yeshurun, Y., & Carrasco, M. (1998). Attention improves or impairs visual performance by enhancing spatial resolution. Nature, 396(6706), 72–75.

Acknowledgements

We are grateful to the members of the Deubel lab, in particular to David Aagten-Murphy, and to Dejan Draschkow for helpful comments and discussions.

Funding

Open Access funding enabled and organized by Projekt DEAL. This research was supported by grants of the Deutsche Forschungsgemeinschaft (DFG) to HD (DE336/5-1) and a Marie Skłodowska-Curie individual fellowship by the European Commission to NMH (898520).

Author information

Contributions

Conceptualization and methodology: NMH, HD; Software: NMH; Investigation: NMH; Formal analysis: NMH; Visualization: NMH; Writing—original draft: NMH; Writing—review & editing: NMH, HD; Funding acquisition: NMH, HD.

Ethics declarations

Competing interests

The authors declare no competing interests.

Ethics approval

The protocols for the study were approved by the ethical review board of the Faculty of Psychology and Education of the Ludwig-Maximilians-Universität München (approval number 13_b_2015), in accordance with German regulations and the Declaration of Helsinki.

Consent to participate

All observers gave written informed consent.

Additional information

Open Practices Statement

Eyetracking and behavioral data are publicly available at the Open Science Framework (OSF) via the following link: https://osf.io/34j6d. Custom MATLAB code for generating the 1/f stimulus is available on GitHub: https://github.com/nmhanning/oofnoise. None of the experiments was preregistered.

Significance statement

Where (and when) we focus our attention can be experimentally quantified via visual sensitivity: attending to a certain visual signal results in better detection and feature discrimination performance. This approach is widely used, but poses an unrecognized dilemma: the test signal itself, typically a grating or letter stimulus, biases observers’ perception and expectations—and thus also the attention measurement. We developed a stimulus that does not require test items. The signal to measure attention is seamlessly embedded in a dynamic 1/f noise field, so that neither spatial nor temporal information about signal presentation is conveyed. Unlike with conventional approaches, perception and expectations in this new protocol remain unbiased, and the undistorted spatial and temporal spread of visual attention can be measured.

Supplementary Information

Demonstration of stimuli and design of Experiment 1a (cued trial). (MP4 11495 kb)

Demonstration of stimuli and design of Experiment 1a (uncued trial). (MP4 11433 kb)

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Hanning, N.M., Deubel, H. A dynamic 1/f noise protocol to assess visual attention without biasing perceptual processing. Behav Res 55, 2583–2594 (2023). https://doi.org/10.3758/s13428-022-01916-2

DOI: https://doi.org/10.3758/s13428-022-01916-2