Abstract

Event detection is the conversion of raw eye-tracking data into events (such as fixations, saccades, glissades, and blinks) that are relevant for researchers. In eye-tracking studies, event detection algorithms can have a serious impact on higher-level analyses, although most studies do not report their settings accurately. We developed a data-driven eyeblink detection algorithm (Identification-Artifact Correction [I-AC]) for 50-Hz eye-tracking protocols. I-AC works by first correcting blink-related artifacts in pupil diameter values and then estimating blink onset and offset. Artifact correction relies on data-driven thresholds and yields more reliable pupil data. Blink parameters are defined according to previous studies on blink-related visual suppression. Blink detection performance was tested on experimental data by visually checking the correspondence between I-AC output and participants’ eye images, which the eyetracker recorded simultaneously with gaze data. Results showed a correct detection rate of 97%.



Introduction

Accurate eyeblink detection is a crucial issue in scientific research for at least two reasons. First, researchers in various fields (e.g., HCI, psychopathology, ergonomics, and cognitive psychology) are directly interested in the eyeblink, since it is a complex phenomenon believed to reflect the influence of higher nervous processes (Bentivoglio et al., 1997; Fogarty & Stern, 1989; Goldstein, Bauer, & Stern, 1992; Orchard & Stern, 1991; Stern, Walrath, & Goldstein, 1984). Within the scientific literature, it is well known that eyeblink rate is a good indicator of fatigue: Previous research has reported that the number of blinks increases as a function of time on task (Fukuda, Stern, Brown, & Russo, 2005; Stern, Boyer, & Schroeder, 1994). However, blink rate may also be related to visual (Brookings, Wilson, & Swain, 1996; Veltman & Gaillard, 1996, 1998) and mental (Holland & Tarlow, 1972, 1975; Recarte, Pérez, Conchillo, & Nunes, 2008) workload. Other parameters, such as eyeblink waveform and duration, have also been investigated. For example, very long blinks are known indicators of drowsiness, whereas very short ones are often related to sustained attention (Ahlstrom & Friedman-Berg, 2006; Caffier, Erdmann, & Ullsperger, 2003; Ingre, Åkerstedt, Peters, Anund, & Kecklund, 2006; Marshall, 2008).

Second, other researchers must deal with the eyeblink indirectly, since it is a frequent source of artifacts in both electroencephalographic (EEG) and eye-tracking data. For the former, blinks constitute a serious problem, since their signals can be orders of magnitude larger than brain-generated electrical potentials, and methods for eyeblink artifact removal have been proposed (Joyce, Gorodnitsky, & Kutas, 2004). For the latter, such artifacts can lead to significant data alterations, especially in pupil diameter (PD) recordings, since eye-tracking devices typically output unusually high and low PD values on either side of a blink (Marshall, 2000).

Our new algorithm for eyeblink detection originated from the need for clean signal analysis in research on cognitive workload detection, in which PD was chosen as the dependent measure. It is widely known that the human pupil dilates as a result of cognitive effort (Bailey, Busbey, & Iqbal, 2007; Beatty, 1982; Beatty & Lucero-Wagoner, 2000; Goldwater, 1972; Kahneman, 1973), and most studies have averaged PD values for each experimental condition, which yields information only at an ordinal level (e.g., average pupil size is larger in task A than in task B). More recently, a patented method, the index of cognitive activity (ICA; Marshall, 2000), has been introduced, which has two major advantages: First, it separates the pupil light reflex from the dilation reflex; second, it locates cognitive load precisely in time (Marshall, 2002). However, blink artifacts severely affect both averaged and ICA data. In the first case, abnormal values distort both the mean and the variance; in the second, artifact-related high values may lead to false detections of cognitive load, since the ICA is derived from the identification of abrupt positive changes in the PD signal by means of wavelet analysis. Thus, the raw data must be preprocessed to identify such artifacts and replace them with linearly interpolated values.

Eyeblink detection is therefore a relevant task in eye-tracking research, from both a research and an artifact-handling perspective. However, as for most event detection tasks (e.g., fixation, saccade, and glissade detection), no de facto standards exist, which makes comparing different studies more difficult (Nyström & Holmqvist, 2010). Moreover, as stated above, blinks (like other eye movements) are believed to reflect human cognitive processing, and the algorithms employed for event detection can strongly affect higher-level analyses, so that different event detection algorithms can lead to different interpretations even when the same raw data are analyzed (Salvucci & Anderson, 2001; Salvucci & Goldberg, 2000).

Eyeblink detection methods

Contact-based recording

Contact-based recording methods have the great advantage of providing accurate eyelid position data and are best suited when the primary focus of the research is the analysis of eyeblink parameters. Different recording methods have been used in eyeblink research, such as attaching lever systems or reflecting mirrors for mechanical or optical transduction of eyelid motion, attaching a string to the lid and measuring closure and opening with a potentiometer, or electromyographic (EMG) recording from the muscles involved in lid movement (Stern et al., 1984).

Generally, when researchers are directly interested in the eyeblink event, electrodes attached to the skin above and below the eye ensure precise blink data gathering. Stern et al. (1984) claimed that this technique, known as vertical electrooculography (VEOG), faithfully reproduces eyelid position. In fact, most eyeblink-related studies have been conducted with VEOG, given the relative ease of identifying blink events from the morphological features of VEOG signals. Unfortunately, no standards exist, and different studies have reported heterogeneous definitions of various blink parameters, such as start point, endpoint, and baseline.

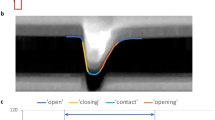

A fairly detailed definition of blink waveform parameters has been proposed by Caffier et al. (2003), which, we believe, could be a good basis for outlining a standard. Caffier et al. proposed a schematic representation of a blink signal (Fig. 1a) from which higher-level parameters (such as opening time, closing time, and, consequently, blink duration) can be computed by first identifying lower-level parameters such as baseline, peak amplitude, closing flank, and reopening flank. Although Caffier et al. used a self-developed portable device that differed from VEOG, the signal obtained from that device clearly reflected all the main features of a VEOG signal. It should be noted that this kind of signal cannot be obtained with any contact-free eye-tracking apparatus based on the corneal reflection technique, such as the one employed in the present study. Other methods for eyeblink detection exist, such as infrared oculography (Calkins, Katsanis, Hammer, & Iacono, 2001) and various ad hoc solutions (see, e.g., Caffier et al., 2003; Kennard & Glaser, 1964). A detailed review of such methods is beyond the scope of the present article, which focuses on eyeblink detection in low-speed eye-tracking protocols.

Fig. 1 a Theoretical eyeblink waveform. Regression lines (ā, ē) are fitted to both flanks of the original waveform; the blink start point and endpoint result from the intersections (α, β) of these lines with the baseline. If a 50% peak amplitude threshold is used, the start point and endpoint change to (γ, δ), and the measured blink duration is consequently shorter. b Actual blink waveform recorded with VEOG

Contact-free recording

Online blink detection with computer vision

Many recent computer vision studies have described algorithms for online eyeblink detection, in which eyeblinks are identified by image recognition in a video stream from a standard camera (Chau & Betke, 2005; Cohn, Xiao, Moriyama, Ambadar, & Kanade, 2003; Kawato & Tetsutani, 2004; Lalonde, Byrns, Gagnon, Teasdale, & Laurendeau, 2007; Morris, Blenkhorn, & Zaidi, 2002; Noguchi, Nopsuwanchai, Ohsuga, & Kamakura, 2007; Ohno, Mukawa, & Kawato, 2003; Smith, Shah, & da Vitoria Lobo, 2003; Sukno, Pavani, Butakoff, & Frangi, 2009). This solution has the advantage of being highly unobtrusive, and blinks, together with other facial features, can be tracked in real time. Sukno et al. tested their algorithm against ground truth obtained from manual annotations of 861 blinks, which were visually inspected by the authors. Blink parameters (start point and endpoint) were accurately estimated in that study, whose great merit is a consistent test of the algorithm against human-inspected images. However, this kind of solution is appropriate for drowsiness detection but not for cognitive workload assessment, since accurate monitoring of the human pupil requires the eye to be illuminated with infrared light, which is not the case with a standard camera. Computer vision is best suited for online eyeblink detection in applied research contexts, where detection needs to be performed without physical constraints.

Offline blink detection in eye-tracking protocols

Protocols can be defined as sequences of actions recorded during the execution of some task; researchers use them to investigate people’s cognitive strategies (Salvucci & Anderson, 2001). In eye-tracking studies, protocols are stored as log files that can be analyzed offline; the actions they contain (e.g., fixations, saccades, and blinks) correspond to oculomotor events that need to be detected from the raw gaze data with dedicated algorithms. An eye-tracking log file is a table in which each row contains the data recorded at a given moment, at intervals that depend on the sampling rate of the eyetracker (Table 1). With 50-Hz sampling, we get 50 samples per second; that is, we have a data point (i.e., a table row) every 20 ms. Each column of the table corresponds to a specific variable: one column for the time stamp, one for the pupil diameter, and so forth. The number of columns depends on the eyetracker type, as well as on the settings adopted by the researcher. In the examples given in the present study, the following columns are used (Table 1):

- Column 1. Time stamp: time stamp in microseconds since the eye-tracking system was started. The 50-Hz sampling rate can be verified from the increment of approximately 20,000 μs from each row to the next (Table 1).
- Column 2. L Dia X: measured horizontal (X) pupil diameter (Dia) of the left eye (L).
- Column 3. L Dia Y: measured vertical (Y) pupil diameter (Dia) of the left eye (L).
- Column 4. L POR X: measured point of regard (POR), horizontal coordinate (X).
- Column 5. L POR Y: measured point of regard (POR), vertical coordinate (Y).

The POR coordinates tell us where the participant is looking at a given moment; think of the PC screen as a Cartesian plane with its origin in the upper left corner. Since the SMI RED system outputs identical L Dia X and L Dia Y values, we programmed the I-AC to work on column 2. Thus, column 3 can be used as a reference for comparing the results of the I-AC with the originally recorded data. Moreover, visual inspection of the eye images (see the supplemental material) reveals whether or not the rules that were applied actually achieved their goal.
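As a minimal sketch of the log structure described above, the following Python snippet holds one row per sample and checks the sampling rate from the median time-stamp increment. The class and field names are hypothetical, and the snippet assumes the log has already been parsed into numbers; it does not reproduce the SMI export format.

```python
from dataclasses import dataclass
from statistics import median


@dataclass
class LogRow:
    """One row of the eye-tracking log (columns 1-5 above).

    Field names are hypothetical; real exports use their own headers.
    """
    timestamp_us: int  # column 1: microseconds since system start
    l_dia_x: float     # column 2: horizontal pupil diameter, left eye
    l_dia_y: float     # column 3: vertical pupil diameter, left eye
    l_por_x: float     # column 4: point of regard, horizontal coordinate
    l_por_y: float     # column 5: point of regard, vertical coordinate


def estimate_sampling_rate_hz(rows: list[LogRow]) -> float:
    """Estimate the sampling rate from the median time-stamp increment."""
    if len(rows) < 2:
        raise ValueError("need at least two rows")
    steps = [b.timestamp_us - a.timestamp_us for a, b in zip(rows, rows[1:])]
    return 1_000_000 / median(steps)
```

Using the median rather than the mean keeps an occasional irregular increment from distorting the estimate; with increments of about 20,000 μs the function returns approximately 50 Hz.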

The eyeblink-related information contained in eye-tracking protocols differs from both that obtained with VEOG and that obtained with image recognition software: In eye tracking, we have no direct measurement of eyelid motion or position, so eyeblink information must be estimated from other sources. Although published blink detection algorithms for eye-tracking protocols are scarce, the most common approach is to detect blinks as samples in which the pupil is not detected (e.g., Smilek, Carriere, & Cheyne, 2010). This sounds quite straightforward; however, preprocessing of pupil data is essential to avoid high false positive detection percentages (see the Results section). Ideally, while the eyelid covers the pupil during a blink, the eyetracker should register zero values in all the columns depicted in Table 1 except the time stamp; if so, eyeblinks can easily be detected as contiguous zero observations. Unfortunately, as the eyelid passes over the eyeball, abnormal pupil values, besides zeros, are often recorded, because this situation heavily challenges the eyetracker’s image recognition (Table 1, column 3). By visually inspecting log files, we noted that such artifacts were systematic; thus, we decided to use them as a starting point for eyeblink detection.
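The naive approach mentioned above, in which every run of zero pupil samples counts as one blink, can be sketched in a few lines of Python. The function name is hypothetical, and no artifact handling is attempted, which is precisely the weakness discussed in this section.

```python
def zero_runs(pupil: list[float]) -> list[tuple[int, int]]:
    """Return (first, last) indices of each run of contiguous zero samples.

    This is the naive 'pupil not detected' detector: every run of zeros
    is reported as one blink, with no artifact handling at all.
    """
    runs = []
    start = None
    for i, value in enumerate(pupil):
        if value == 0 and start is None:
            start = i                    # a zero run begins
        elif value != 0 and start is not None:
            runs.append((start, i - 1))  # the zero run just ended
            start = None
    if start is not None:                # run reaching the end of the data
        runs.append((start, len(pupil) - 1))
    return runs
```

For a hypothetical trace such as [3.1, 3.0, 0, 0, 7.9, 0, 0, 3.2], in which one abnormal value interrupts a single blink, the function reports two runs, (2, 3) and (5, 6), instead of one.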

A new algorithm for eyeblink detection

Eye-tracking equipment manufacturers provide integrated software for data analysis, and blink detection is often part of it. Unfortunately, the source code is not provided, so researchers can neither modify it nor gain detailed knowledge of how the algorithm works. Therefore, our new algorithm, I-AC, is described hereinafter in pseudocode that can easily be translated into Microsoft’s Excel VBA, a programming language available on most laptop and desktop computers.

From an implementation perspective, the I-AC algorithm can be seen as having two parts: a preprocessing phase and a blink detection phase.

During the preprocessing phase, pupil diameter artifacts within blink observations are detected and replaced with zero values. This ensures that zero pupil values correspond to actual moments of full pupil occlusion by the eyelid; the authors checked this correspondence manually by visually inspecting the eye images captured by the eyetracker camera. This check showed that the method works with high accuracy.

The blink detection phase finally detects the eyeblinks as contiguous zero observations, calculating a blink start point and endpoint and, consequently, the blink duration.

Preprocessing

Calculating thresholds

Artifacts are typically abnormal pupil diameter values; that is, they can be seen as outliers of the pupil diameter distribution. To classify an observation as an artifact, we first calculate the mean (μ) and the standard deviation (σ) of this distribution (zero values are excluded from these calculations). Second, we set a low threshold (low_threshold) and a high threshold (high_threshold) as follows:

The thresholding method has the advantage of being adaptive, since thresholds are calculated for each log file and are tailored to its specific set of pupil values. Thus, we obtain different thresholds for each experimental trial of each participant. For the I-AC, we assume that each observation o_i has a preceding (o_p) and a following (o_f) observation. We consider an observation o_i an artifact, o_a, when its value is nonzero and either below low_threshold or above high_threshold.
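The exact threshold formula is not reproduced in this section, so the following sketch assumes symmetric thresholds of the form μ ± k·σ, with a hypothetical multiplier k = 2 and the population standard deviation; both choices are assumptions, not the published settings. The sketch does follow the two rules stated above: zeros are excluded from μ and σ, and an artifact is a nonzero value outside the thresholds.

```python
from statistics import mean, pstdev


def thresholds(pupil: list[float], k: float = 2.0) -> tuple[float, float]:
    """Return (low_threshold, high_threshold) as mean -/+ k * SD of nonzero samples.

    ASSUMPTION: the multiplier k and the use of the population SD are
    hypothetical choices made for this sketch.
    """
    nonzero = [v for v in pupil if v != 0]  # zero values are excluded
    mu = mean(nonzero)
    sigma = pstdev(nonzero)
    return mu - k * sigma, mu + k * sigma


def is_artifact(value: float, low_threshold: float, high_threshold: float) -> bool:
    """An observation is an artifact if it is nonzero and outside the thresholds."""
    return value != 0 and (value < low_threshold or value > high_threshold)
```

Because the thresholds are recomputed from each log file, the same function yields trial-specific values, which is the adaptive property described above.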

Setting artifacts to zero

Zero observations

The first set of rules searches for zero observations o_0 in the pupil diameter column. When a zero observation o_0 is encountered, two different rules are applied to set an artifact o_a to zero:

- a) If the preceding observation o_p is an artifact o_a, then o_p is set to zero.
- b) If the following observation o_f is an artifact o_a, then o_f is set to zero.
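Rules a) and b) can be sketched as a single pass over the pupil column. In this hypothetical Python version, the thresholds are passed in, samples at the edges of the recording are handled by skipping the missing neighbour (the text assumes every observation has both neighbours), and the pass writes to a copy, so one application does not cascade; repeated application is left to the caller.

```python
def rules_a_b(pupil: list[float], low_threshold: float, high_threshold: float) -> list[float]:
    """One pass of rules a) and b): around every zero sample o_0, set an
    artifact neighbour (o_p before, o_f after) to zero.

    Reads from `pupil` and writes to a copy, so zeros created in this pass
    only take effect when the rule is applied again.
    """

    def is_artifact(value: float) -> bool:
        # Nonzero and outside the [low, high] band.
        return value != 0 and (value < low_threshold or value > high_threshold)

    out = list(pupil)
    for i, value in enumerate(pupil):
        if value != 0:
            continue
        if i > 0 and is_artifact(pupil[i - 1]):  # rule a): preceding o_p
            out[i - 1] = 0
        if i + 1 < len(pupil) and is_artifact(pupil[i + 1]):  # rule b): following o_f
            out[i + 1] = 0
    return out
```

With hypothetical thresholds of 2.0 and 5.0, the trace [3.0, 8.5, 0, 0, 0.4, 3.1] becomes [3.0, 0, 0, 0, 0, 3.1]: the high artifact before the blink and the low artifact after it are both zeroed.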

Aligning data

The second set of rules searches, in the pupil diameter column, for observations o_i that are preceded or followed by a zero observation o_0. In these two cases, the following rules are applied (Table 1b):

- c) If the preceding observation o_p is a zero observation o_0 and the current observation o_i has no valid gaze point, then o_i is set to zero.
- d) If the following observation o_f is a zero observation o_0 and the current observation o_i has no valid gaze point, then o_i is set to zero.

Here, a gaze point is defined as invalid when the sum of its coordinates (L POR X + L POR Y) is lower than 10. We adopted this definition because we noted that artifacts may occur even in the gaze coordinate columns; in these cases, values between 0 and 1, or even negative values, are recorded (Table 1a). These values may differ with other eyetrackers, since eye-tracking systems handle the loss of eye position information in a variety of ways (Gitelman, 2002).
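A sketch of rules c) and d), using the invalid-gaze definition just given (summed POR coordinates below 10). The function names and the column-per-list input format are assumptions made for illustration; like the other rules, one call performs a single pass and writes to a copy.

```python
def has_valid_gaze(por_x: float, por_y: float, min_sum: float = 10.0) -> bool:
    """Gaze is valid unless the summed POR coordinates fall below 10."""
    return por_x + por_y >= min_sum


def rules_c_d(pupil: list[float], por_x: list[float], por_y: list[float]) -> list[float]:
    """One pass of rules c) and d): a nonzero sample o_i with invalid gaze
    is set to zero when its preceding or following sample is zero."""
    out = list(pupil)
    n = len(pupil)
    for i in range(n):
        if pupil[i] == 0 or has_valid_gaze(por_x[i], por_y[i]):
            continue
        prev_zero = i > 0 and pupil[i - 1] == 0      # rule c): o_p is o_0
        next_zero = i + 1 < n and pupil[i + 1] == 0  # rule d): o_f is o_0
        if prev_zero or next_zero:
            out[i] = 0
    return out
```

In a hypothetical trace where the sample after a blink has pupil value 3.4 but gaze coordinates (0.5, -2.0), that sample is zeroed, whereas neighbours with ordinary screen coordinates are left untouched.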

Validating data recording

The third rule directly searches for artifacts o_a and applies the following:

- e) If the artifact o_a has no valid gaze point, then o_a is set to zero.
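Rule e) combines the artifact test with the gaze-validity test. The following hypothetical one-pass sketch assumes that the thresholds have already been computed and reuses the summed-coordinate criterion (below 10) for invalid gaze.

```python
def rule_e(
    pupil: list[float],
    por_x: list[float],
    por_y: list[float],
    low_threshold: float,
    high_threshold: float,
) -> list[float]:
    """One pass of rule e): set every artifact o_a with invalid gaze to zero."""
    out = list(pupil)
    for i, value in enumerate(pupil):
        artifact = value != 0 and (value < low_threshold or value > high_threshold)
        invalid_gaze = por_x[i] + por_y[i] < 10  # summed POR below 10
        if artifact and invalid_gaze:
            out[i] = 0
    return out
```

An out-of-range pupil value with plausible gaze coordinates is kept, so this rule alone does not remove every outlier; it only removes those that coincide with a gaze-tracking failure.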

Once the preprocessing phase has been carried out, all the data points corresponding to instants of full pupil occlusion by the eyelid should contain a zero in the pupil diameter column. The three steps may be executed in a different order, and each rule should be iterated, passing over the log file repeatedly until no rule changes the pupil data any further. In the present study, each rule was iterated nine times before the next rule was triggered. We used the following order:
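The iteration scheme can be sketched generically: each rule is applied repeatedly, up to nine passes as in the present study, before moving on to the next. Stopping a rule early once a pass changes nothing is an addition of this sketch, and because the rule order used in the study is not reproduced here, the order of `rules` is left to the caller. The toy rule included for demonstration is hypothetical.

```python
from typing import Callable, Sequence

# A rule takes the pupil column and returns a (possibly) modified copy.
Rule = Callable[[list[float]], list[float]]


def apply_rules(pupil: list[float], rules: Sequence[Rule], passes_per_rule: int = 9) -> list[float]:
    """Apply each rule up to `passes_per_rule` times before the next rule.

    A rule stops early once a pass leaves the data unchanged.
    """
    data = list(pupil)
    for rule in rules:
        for _ in range(passes_per_rule):
            updated = rule(data)
            if updated == data:
                break  # fixed point reached for this rule
            data = updated
    return data


def toy_spread_rule(pupil: list[float]) -> list[float]:
    """Hypothetical demo rule: zero any value above 5 that follows a zero."""
    out = list(pupil)
    for i in range(1, len(pupil)):
        if pupil[i - 1] == 0 and pupil[i] > 5:
            out[i] = 0
    return out
```

Since each pass of the demo rule zeroes only one more sample, a run of three abnormal values after a zero needs three passes; limiting the rule to two passes leaves the last abnormal value in place, which illustrates why repeated passes are needed.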

Blink detection

The blink detection routine searches for zero observations o_0 in the preprocessed pupil data. When an o_0 is found, a blink event is created. Blink start points and endpoints must then be estimated, since the eyetracker provides no direct eyelid position data.

The choice of start point and endpoint is important, since it directly affects the measured blink duration, and VEOG studies in the literature have adopted different definitions, when they report one at all (Stern et al., 1984). Since the exact point where the blink ends cannot be accurately estimated (Kennard & Glaser, 1964; Stern et al., 1984), the most common approach is to use a fixed threshold, usually set to 50% or 70% of blink amplitude (Fig. 1a). Blink start and end are then derived from the intersections of this threshold with the closing and reopening flanks of the VEOG blink signal. Thus, the higher the threshold, the shorter the resulting blink duration, and vice versa.

From our perspective, which is driven by a human–machine interaction research framework, the criterion for defining blink start point and endpoint relies on blink-related visual suppression, since our concern is whether or not the person can see information (e.g., on a screen) at a given time. Thus, we define blink start as the presumed moment at which blink-related visual suppression begins; blink end is similarly defined, on the basis of previous studies of human visual suppression, as the presumed moment at which the person actually regains visual perception.

Blink start estimation

We set blink start (b_s) at 60 ms before the first zero observation o_0 of the blink in the preprocessed data, that is, 60 ms before the eyelid fully obscures the pupil. Several reasons support this choice. First, visual inspection of eye images revealed that the lids actually start their descent at that moment in the majority of participants, with only some people starting the descent 40 ms before the first o_0. The 60-ms value could even be set adaptively for each participant; however, this would require prior visual inspection to adjust the algorithm setting for each participant. Second, we can reasonably assume that vision is already inhibited at b_s, since several studies have demonstrated that the onset of vision inhibition occurs slightly before the descending upper lid begins to cover the pupil (Volkmann, Riggs, Ellicott, & Moore, 1982; Volkmann, Riggs, & Moore, 1980; Wibbenmeyer, Stern, & Chen, 1983). This phenomenon, similar to saccadic suppression (Zuber & Stark, 1966), may reach its maximum 30–40 ms before the upper lid begins to cover the pupil, and recovery from suppression is gradual over a period of 100–200 ms after blink onset (Volkmann, 1986). Third, results show good overall accuracy when the 60-ms value is selected (see the Results section).

Blink end estimation

We define blink end (b_e) as the first observation o_i following the contiguous zero observations o_0 of the blink in the preprocessed data. At this time, the eyelid should be in the reopening phase, partially covering the pupil, but in such a way that the participant is already able to gain visual information. Support for this definition comes from the fact that blink-related visual suppression is systematically more pronounced during the closing phase than during the reopening phase (Volkmann, 1986). Moreover, by the time the pupil is being uncovered again, suppression has substantially diminished, through a mechanism that seems to enable rapid recapture of the visual scene as the eye reopens (Volkmann et al., 1982). This phenomenon can be observed in front of a mirror. Close your eyes, then start reopening them slowly. You will notice that you are already able to see (with some blur caused by the eyelashes) even though your pupil is partially covered by the eyelid and full visual acuity has not yet been recovered. Your (voluntary) blink has now ended.

Blink duration calculation

Blink duration b_d is computed as the difference between the blink end b_e and the blink start b_s time stamp values:

\( b_d = b_e - b_s \)
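The start, end, and duration definitions of this section can be combined into a short detection routine over the preprocessed pupil column. This Python sketch assumes time stamps in microseconds (as in column 1) and a fixed 60-ms lead for b_s; skipping a zero run that reaches the end of the recording, where no b_e exists, is a choice made for this sketch, and the dictionary keys are hypothetical.

```python
def detect_blinks(
    timestamps_us: list[int], pupil: list[float], onset_lead_us: int = 60_000
) -> list[dict[str, int]]:
    """Detect blinks in preprocessed pupil data as runs of contiguous zeros.

    b_s: 60 ms before the first zero of the run.
    b_e: time stamp of the first nonzero observation after the run.
    b_d: b_e - b_s.
    """
    blinks = []
    i, n = 0, len(pupil)
    while i < n:
        if pupil[i] != 0:
            i += 1
            continue
        first_zero = i
        while i < n and pupil[i] == 0:  # skip over the zero run
            i += 1
        if i == n:
            break  # run reaches the end of the recording: no b_e, skipped
        b_s = timestamps_us[first_zero] - onset_lead_us
        b_e = timestamps_us[i]
        blinks.append({"start_us": b_s, "end_us": b_e, "duration_us": b_e - b_s})
    return blinks
```

With 20,000-μs sampling, a zero run starting at 80,000 μs and followed by a nonzero sample at 160,000 μs gives b_s = 20,000 μs, b_e = 160,000 μs, and b_d = 140,000 μs (140 ms).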

Thus, the computed b_d should correspond to the actual interruption of visual intake caused by the blink. Once the blink detection phase is complete, the zero data points o_0 can easily be replaced by linear interpolation. Additionally, artifacts o_a lying outside blink events may be linearly interpolated to obtain a smoother pupil diameter signal.
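A minimal sketch of the linear interpolation step, assuming interpolation in time between the nearest nonzero samples on either side of each gap. Leaving leading or trailing zeros untouched is an assumption, since they lack a neighbour on one side; artifacts outside blinks could be handled the same way by first setting them to zero.

```python
def interpolate_zeros(timestamps: list[float], pupil: list[float]) -> list[float]:
    """Replace zero pupil samples by linear interpolation in time between the
    nearest nonzero samples on either side. Zeros at the very start or end of
    the recording have only one valid neighbour and are left unchanged.
    """
    out = list(pupil)
    valid = [i for i, v in enumerate(pupil) if v != 0]
    for left, right in zip(valid, valid[1:]):
        if right - left < 2:
            continue  # adjacent valid samples: no gap to fill
        t0, t1 = timestamps[left], timestamps[right]
        v0, v1 = pupil[left], pupil[right]
        for k in range(left + 1, right):
            frac = (timestamps[k] - t0) / (t1 - t0)
            out[k] = v0 + frac * (v1 - v0)
    return out
```

Interpolating on time stamps rather than on sample indices keeps the result correct if a sample is occasionally dropped and the spacing is not exactly 20 ms.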

Data used for algorithm evaluation

The data for testing the algorithm come from a laboratory experiment whose primary aim was the detection of mental workload. Besides recording the eye-tracking log files, we recorded the participants’ eye images captured by the eyetracker cameras. In this way, we obtained a database of images that we could visually inspect to see whether or not our algorithm was actually detecting eyeblinks (see the supplemental material).

Participants

Twenty-four undergraduate students (13 male; mean age = 26 years, SD = 4, range = 21–33) of the Technische Universität Berlin took part in the experiment. They were informed that they could withdraw at any time, for any reason, during the experiment. All participants were given a €5 reward for every 30 min spent in the laboratory.

Equipment

An SMI RED binocular remote eyetracker was used, with nine-point calibration and a 50-Hz sampling rate. This system tracks the participant’s gaze coordinates and provides pupil diameter values by means of corneal reflection and infrared illumination of the eye, respectively. The RED was attached to the lower side of a 17-in. (38 × 30 cm) LCD screen (1,280 × 1,024 pixels), on which the stimuli were presented by means of self-developed software. The screen stood on a 72-cm-high office desk; the average distance between participants’ eyes and the screen was 65 cm. Participants were given a two-button remote controller so that they could respond manually to the experimental stimuli.

All the participants were equipped with a Brain Products ActiCap for EEG recording with 32 channels and a sampling rate of 1000 Hz. Four additional electrodes were placed for recording the vertical EOG signal of the right eye and the horizontal EOG signal of both eyes.

Task

The N-back task (Kirchner, 1958) was chosen: During each 3-min trial, the participants were shown 60 one-digit numbers. Each number was displayed for 1 s, and 2 s separated the offset of each number from the onset of the next. For each number, the participants’ task was to report whether the current number was the same as the preceding one (match) or not (mismatch). Participants responded by pressing the left (match) or right (mismatch) button on the remote controller. Of the 60 numbers shown in each trial, only 18 were targets.

Independent variables

N-back difficulty

We manipulated working memory load by introducing two levels of difficulty of the N-back task (Fig. 2). In the N1 condition, participants had to report whether the currently displayed number matched the previous one. In the N2 condition, they had to report whether it matched the number shown two positions earlier; thus, they needed to manipulate more information in working memory.
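The match/mismatch labelling for the two difficulty levels can be expressed compactly. The function below is a hypothetical helper, not the self-developed stimulus software; it simply marks a stimulus as a target when it equals the digit shown n positions earlier (n = 1 for N1, n = 2 for N2).

```python
def nback_targets(digits: list[int], n: int) -> list[bool]:
    """Label each stimulus as a match (True) if it equals the digit shown
    n positions earlier; the first n stimuli cannot be matches."""
    return [i >= n and digits[i] == digits[i - n] for i in range(len(digits))]
```

For the sequence [3, 3, 5, 3, 5], the N1 labels mark only the second digit as a match, whereas the N2 labels mark the fourth and fifth digits, so the same sequence can yield different target counts at the two levels.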

N-back visual presentation

We developed two versions of the N-back task. In the scattered version, the numbers appeared at pseudorandom locations all over the screen; in the nonscattered version, all the numbers always appeared in the center of the screen. To minimize the light reflex of the participants’ pupils, the N-back task was always presented on a black background. The digits appeared in black within a 52 × 72 pixel white rectangle.

Ambient lighting

An office fluorescent lamp (OSRAM L 58 W/840 Lumilux Cool White) mounted on the ceiling (280 cm above the floor) served as the light source. The lamp was mounted longitudinally and centered laterally with respect to the participant’s seat. We used an AEG luxmeter to measure horizontal (sensor placed on the desk in front of the screen) and vertical (sensor placed at the participant’s eye position) illuminance. In the light condition (lights on), horizontal and vertical illuminance were 450 and 150 lux, respectively. In the dark condition (lights off), we measured 0.2 lux horizontally and 0.6 lux vertically. During the measurements in both conditions, the screen was switched on with the N-back task software running, so that we measured the same lighting that participants would experience during the experimental trials.

Experimental plan

Crossing the three independent variables, each with two levels, yields eight conditions: N1_n_D, N1_n_L, N1_s_D, N1_s_L, N2_n_D, N2_n_L, N2_s_D, and N2_s_L. All participants experienced all eight conditions in random order.

Results

Visual inspection of eye images from seven experimental trials with different participants served as ground truth data for evaluating I-AC detection performance (Table 2). Using the SMI RED software during data recording, we were able to save the participants’ eye images as .jpeg files, each image corresponding to a single data point. Unambiguous correspondence between a .jpeg and a data point is ensured by the time stamp (see the supplemental material). The I-AC output consists of a table containing, for each eyeblink detected, details such as start point and endpoint, duration, and progressive count.

For each eyeblink visible in the eye images and each blink reported by the I-AC, we checked their correspondence using the following criteria:

- Correct detection. An algorithm detection was marked as correct if the eyeblink was actually visible in the corresponding eye images.
- Fake. An algorithm detection was marked as fake if no eyeblink was visible in the corresponding eye images.
- Missed. A blink was marked as missed if it was visible in the eye images but absent from the algorithm output log file.
- Correct duration estimation. An algorithm detection was marked as correctly estimated if its start point and endpoint (as defined in the Blink Detection section) corresponded to the actual ones in the eye images, with a maximum error of 40 ms each.
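The criteria above do not specify how an algorithm detection is paired with a blink seen in the eye images. The following sketch assumes that two blinks correspond when their time intervals overlap and that each visually identified blink can be claimed only once; times are in milliseconds, the 40-ms tolerance follows the correct duration criterion, and the function name is hypothetical.

```python
def evaluate(
    algorithm_blinks: list[tuple[float, float]],
    visual_blinks: list[tuple[float, float]],
    tolerance_ms: float = 40,
) -> dict[str, int]:
    """Classify algorithm detections against visually inspected blinks.

    Blinks are (start_ms, end_ms) pairs. ASSUMPTION: a detection matches a
    visual blink when the intervals overlap; each visual blink can be
    claimed once, so a split detection counts as one correct plus one fake.
    """
    claimed: set[int] = set()
    correct = fake = correct_duration = 0
    for a_start, a_end in algorithm_blinks:
        match = next(
            (k for k, (v_start, v_end) in enumerate(visual_blinks)
             if k not in claimed and a_start <= v_end and v_start <= a_end),
            None,
        )
        if match is None:
            fake += 1  # no unclaimed visible blink overlaps this detection
            continue
        claimed.add(match)
        correct += 1
        v_start, v_end = visual_blinks[match]
        if abs(a_start - v_start) <= tolerance_ms and abs(a_end - v_end) <= tolerance_ms:
            correct_duration += 1
    missed = len(visual_blinks) - len(claimed)
    return {"correct": correct, "fake": fake, "missed": missed,
            "correct_duration": correct_duration}
```

The one-claim-per-blink assumption means that a blink split into two detections, as in the Table 1 examples, is scored as one correct detection and one fake.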

The most striking result is the average correct detection percentage of 97% for the I-AC, which is linked to the low average fake and miss detection percentages: 4% and 2%, respectively (Table 2). Using the I-AC, we correctly detected 324 of 334 actual eyeblinks. The duration of 267 (79%) of the 324 detected blinks was correctly estimated, with a maximum error of ±40 ms. The same test on the eye-tracking manufacturer’s algorithm returned a 75% correct detection percentage, with higher fake (24%) and miss (25%) detection percentages. Comparing correct duration estimation percentages would be unfair, since that algorithm may have adopted a different definition of blink start point and endpoint.

Percentage values were computed as follows:

- 1) \( \mathrm{correct\_detections\_\%} = \frac{\mathrm{blinks\_detected\_algorithm} - \mathrm{fake}}{\mathrm{blinks\_detected\_visual\_inspection}} \times 100 \)

Normalized correct detection percentages, marked with stars in Table 2, were computed when the number of blinks detected by the algorithm exceeded the number of blinks detected by visual inspection. This was done to avoid percentage values above 100%, using the following formula:

- 2) \( \mathrm{normalized\_correct\_detections\_\%} = \frac{\mathrm{blinks\_detected\_algorithm} - 2 \times \mathrm{fake}}{\mathrm{blinks\_detected\_visual\_inspection}} \times 100 \)
- 3) \( \mathrm{fake\_\%} = \frac{\mathrm{fake}}{\mathrm{blinks\_detected\_algorithm}} \times 100 \)
- 4) \( \mathrm{missed\_\%} = \frac{\mathrm{missed}}{\mathrm{blinks\_detected\_visual\_inspection}} \times 100 \)
- 5) \( \mathrm{correct\_duration\_estimation\_\%} = \frac{\mathrm{correct\_duration\_estimation}}{\mathrm{blinks\_detected\_algorithm}} \times 100 \)
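The five percentage formulas translate directly into code. In this sketch, the normalized formula (2) replaces formula (1) whenever the algorithm reports more blinks than visual inspection, following the condition stated above; the function and key names are hypothetical, and any counts passed in are the user's own, not data from Table 2.

```python
def detection_percentages(
    detected_algorithm: int,
    detected_visual: int,
    fake: int,
    missed: int,
    correct_duration: int,
) -> dict[str, float]:
    """Compute the detection percentages of formulas (1)-(5)."""
    if detected_algorithm > detected_visual:
        # Formula (2): normalized correct detections.
        correct = (detected_algorithm - fake * 2) / detected_visual * 100
    else:
        # Formula (1): correct detections.
        correct = (detected_algorithm - fake) / detected_visual * 100
    return {
        "correct_detections_%": correct,
        "fake_%": fake / detected_algorithm * 100,                              # (3)
        "missed_%": missed / detected_visual * 100,                             # (4)
        "correct_duration_estimation_%": correct_duration / detected_algorithm * 100,  # (5)
    }
```

Note that the fake and duration percentages share the algorithm count as their denominator, whereas the correct and missed percentages use the visual-inspection count, so the five values need not sum to any fixed total.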

Discussion

The blink detection algorithm presented in this study was developed to remove artifacts from the pupil dilation signal recorded with a remote eyetracker. This is essential for the subsequent linear interpolation of blink-contaminated pupil data that yields a clean pupil signal. Since remote corneal reflection eyetrackers provide no direct eyelid position data, contact-based methods are preferable in studies in which eyeblink parameters are of primary concern. It is important to bear in mind the distinction between blink detection performed with contact-based and with contact-free apparatuses. With the former, a direct measurement of eyelid movement can be obtained; thus, blink occurrence and duration can be calculated, even though rigorous identification of the point at which the blink ends is especially difficult (Kennard & Glaser, 1964; Stern et al., 1984). With the latter, a further distinction exists between computer vision detection and eye-tracking protocol analysis, the former being well suited to online detection, the latter to post hoc analyses. While computer vision studies on facial expression recognition and blink detection have grown in number and variety, especially in the last decade (see the Eyeblink Detection Methods section), very little effort has been devoted to blink detection in eye-tracking protocols.

The importance of the preprocessing phase is highlighted by the high fake and miss detection percentages of a simpler algorithm that would directly mark each single zero pupil observation (or each set of contiguous zeros) as a blink. Regarding fakes, column 3 of Tables 1a and 1b shows why many fake detections would occur in that case: Compared with the corresponding preprocessed data (column 2), two (Table 1a) and three (Table 1b) blinks would be detected instead of one, a pattern systematically found in the manufacturer’s event detector output. Regarding misses, Table 1c shows that zero pupil observations may not occur at all in very short blinks. In fact, this blink was missed by the eyetracker manufacturer’s event detector; such short blinks are typically those described by Kennard and Glaser (1964), in which the eyelid does not completely occlude the pupil.

Concerning blink endpoint estimation according to the definition adopted by Caffier et al. (2003), one important issue needs to be taken into account. The blink waveform in Fig. 1a has a perfectly linear baseline, and it seems quite straightforward to detect the start point and endpoint from its intersections with the closing- and reopening-flank regression lines (see Note 1). However, not all blink events have such a regular form, especially with respect to the baseline, which is often at a different level in the closing and reopening portions (Fig. 1b). In a more naturalistic task, it would be much more difficult to identify the point at which the reopening phase terminates. Intuitively, this should be the point at which the lid returns to the position it occupied before blink initiation. However, as Kennard and Smyth (1963) underlined, the location of the fixation point in the vertical plane affects eyelid position (i.e., the lower the point, the lower the eyelid). Moreover, changes in vertical eye position following a blink were found to be particularly likely. These two facts clearly suggest that a return to the initial eyelid position cannot be used as the termination criterion (Stern et al., 1984). This is illustrated by the two baselines (baseline 1 and baseline 2) in Fig. 1b, which lead to two different 50%-of-peak-amplitude thresholds (Ō₁, Ū₁); whenever a percentage-of-peak-amplitude threshold is used, these thresholds directly affect the blink start point and endpoint (γ₁, δ₁). Thus, when our definition of blink start point and endpoint (see the Blink Detection section) is adopted, we suggest that these points correspond, in a VEOG measurement, to α₁ and δ₁, respectively.
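The dependence of percentage-of-peak thresholds on the baseline can be illustrated with a small Python sketch. It computes a 50%-of-peak threshold on each flank of a hypothetical VEOG-like waveform, once with separate pre- and post-blink baselines and once with a single shared baseline, and reports the first and last samples at or above threshold. The waveform, its units, and the function name are assumptions; the sketch does not implement our α₁/δ₁ definition, it only shows how a shifted baseline moves the estimated endpoint.

```python
def half_amplitude_points(signal, baseline_before, baseline_after):
    """Return (start, end): the first sample on the closing flank and the last
    sample on the reopening flank that reach 50% of the peak amplitude, with
    each amplitude measured from its own baseline."""
    peak_index = max(range(len(signal)), key=lambda i: signal[i])
    peak = signal[peak_index]
    start_threshold = baseline_before + 0.5 * (peak - baseline_before)
    end_threshold = baseline_after + 0.5 * (peak - baseline_after)
    # scan the closing flank forward from the first sample up to the peak
    start = next(i for i in range(peak_index + 1)
                 if signal[i] >= start_threshold)
    # scan the reopening flank backward from the last sample down to the peak
    end = next(i for i in range(len(signal) - 1, peak_index - 1, -1)
               if signal[i] >= end_threshold)
    return start, end


# Hypothetical 50-Hz waveform whose post-blink baseline (20) differs from
# its pre-blink baseline (0), as with baselines 1 and 2 in Fig. 1b
veog = [0, 0, 40, 80, 100, 70, 50, 30, 20, 20]
print(half_amplitude_points(veog, 0, 20))  # (3, 5): two baselines
print(half_amplitude_points(veog, 0, 0))   # (3, 6): single baseline assumed
```

At 50 Hz, the one-sample shift in the endpoint amounts to a 20-ms difference in the estimated blink duration, produced solely by the choice of baseline.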

Since we implemented preprocessing and blink detection as separate modules (see the supplemental material), the preprocessing module could be reused even with different blink detection methods. Our definition of blink start point and endpoint comes from a human–machine interaction context, in which the blink has historically been considered primarily as a phenomenon that disturbs visual intake and is therefore inhibited during highly visually demanding tasks. This inhibition is in turn challenged by fatigue, which leads to higher blink rates, and by sleepiness, which is associated with longer blink durations. Nonetheless, several other factors, such as emotional excitement, verbalization, and possibly mental workload, may be indexed by the endogenous eyeblink (Stern et al., 1984), and recent studies have shown a growing and varied use of the eyeblink measure with different event detection settings (Chermahini & Hommel, 2010; Leal & Vrij, 2008; Smilek et al., 2010). Whatever the research field, a widely shared definition of eyeblink detection methods and parameters for VEOG and eye-tracking studies could be a first step toward making comparisons of such a complex variable possible across studies and research areas.

Testing algorithms against ground truth is time-consuming and perhaps inconvenient; consequently, little effort has been made to gain such insight into event detection. Although we believe visual inspection to be an effective test for blink events in particular, though not for fixations and saccades, we argue that visual inspection or any other appropriate ground truth comparison should be carried out on sufficiently representative samples. This allows event detection algorithms in eye-tracking to be validated consistently, which is important given the dramatic impact such algorithms have on higher level analyses (Salvucci & Anderson, 2001; Salvucci & Goldberg, 2000).
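One way to tabulate such a ground truth comparison is sketched below in Python. The overlap criterion, the greedy one-to-one matching, and the function name are hypothetical choices made for illustration, not the scoring procedure used in this study. Greedy matching can also be suboptimal when intervals overlap in complicated ways; an optimal assignment would be preferable in that case.

```python
def score_detections(truth, detected):
    """Score detected blink intervals against ground truth (e.g., blinks
    identified by visual inspection of eye images).

    Intervals are (start, end) sample indices, end exclusive. Each detection
    is greedily matched to the first still-unmatched ground-truth blink it
    overlaps; a detection with no such partner counts as a fake, so one blink
    split into two detections yields one hit and one fake.
    """
    if not truth:
        raise ValueError("ground truth must contain at least one blink")

    def overlaps(a, b):
        return a[0] < b[1] and b[0] < a[1]

    matched = set()
    fakes = 0
    for d in detected:
        idx = next((j for j, t in enumerate(truth)
                    if j not in matched and overlaps(d, t)), None)
        if idx is None:
            fakes += 1                 # spurious or duplicate detection
        else:
            matched.add(idx)           # this ground-truth blink is now taken
    hits = len(matched)
    return {
        "hits": hits,
        "misses": len(truth) - hits,
        "fakes": fakes,
        "correct_pct": 100.0 * hits / len(truth),
    }


print(score_detections([(2, 7)], [(2, 4), (5, 7)]))
# {'hits': 1, 'misses': 0, 'fakes': 1, 'correct_pct': 100.0}
```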

The algorithm we propose was developed and tested with data sampled at 50 Hz; further testing is required to determine whether the approach is suitable for higher sampling rates. Ideally, higher sampling rates would yield less artifact-contaminated data or possibly richer, entirely new information for improved blink detection. Moreover, the I-AC needs further refinement to bring miss and fake detection percentages as close to zero as possible. Rather than as a final solution, the I-AC should primarily be regarded as an approach to eye-tracking data preprocessing; once such preprocessing has been carried out, blink detection and missing-value interpolation should return more accurate output.

Visual inspection of the eyeblink images confirmed features described in previous studies. First, in all participants we noted a slight difference between left- and right-eye blink onset; that is, the movements of the two eyelids are not perfectly synchronized (Kennard & Glaser, 1964). Second, 1 participant systematically made partial blinks, in which the upper eyelid never fully covered the pupil during its descent (Kennard & Glaser, 1964; Kennard & Smyth, 1963). Should these partial blinks be considered normal blinks, or are they something different? Finally, a few participants exhibited shorter lid closing times (circa 40 ms instead of 60 ms). For this reason, in our implementation of the I-AC, we set closing time as a variable that can be adjusted post hoc for each participant. However, we do not know whether this difference is simply interindividual or is related to other factors.
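Making closing time a per-participant parameter is straightforward; the Python sketch below shows one hypothetical way to do it. The participant IDs, the dictionary-based override, and the round-up rule are assumptions for illustration. The sketch also makes the temporal resolution explicit: at 50 Hz one sample spans 20 ms, so the 40-ms and 60-ms closing times differ by a single sample, whereas at a hypothetical 250 Hz they would differ by five.

```python
import math

SAMPLING_RATE_HZ = 50        # rate used in this study
DEFAULT_CLOSING_MS = 60      # typical lid closing time observed here

# Hypothetical per-participant overrides, set post hoc after visual inspection
participant_closing_ms = {"P01": 60, "P02": 40}


def closing_samples(closing_ms, rate_hz=SAMPLING_RATE_HZ):
    """Convert a lid closing time in ms to samples, rounding up so that the
    closing phase is never shortened by the conversion."""
    return math.ceil(closing_ms * rate_hz / 1000)


def closing_for(participant, rate_hz=SAMPLING_RATE_HZ):
    """Closing time in samples for one participant, falling back to the default."""
    ms = participant_closing_ms.get(participant, DEFAULT_CLOSING_MS)
    return closing_samples(ms, rate_hz)


print(closing_for("P01"), closing_for("P02"), closing_for("P99"))  # 3 2 3
print(closing_samples(40, 250), closing_samples(60, 250))           # 10 15
```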

Conclusions

The present study was primarily concerned with eyeblink-related artifact correction in the pupil diameter signal. We developed a new blink detection algorithm (I-AC) for 50-Hz eye-tracking protocols and tested its performance against 334 eyeblink images recorded in an experiment: I-AC correctly detected 97% of these 334 eyeblinks. I-AC consists of two phases, preprocessing and blink detection, and this two-phase design has two major advantages. First, the preprocessing phase works with adaptive pupil size thresholds that are calculated separately for each log file, so researchers need not adjust thresholds for each participant or for each experimental condition. Once the preprocessing phase has been completed, pupil values corresponding to upper lid occlusion are set to zero; that is, all blink-related artifacts in the protocol are removed. Second, the blink detection phase was developed with constraints imposed by theoretical aspects of the interruption of visual intake caused by the blink. Since the two phases are implemented separately, other researchers may develop different blink detection phases based on different constraints or research needs while retaining the advantage of data-driven preprocessing.
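The idea of per-log-file adaptive thresholding can be sketched as follows. The rule used here, which zeroes non-positive samples and samples more than k median absolute deviations below the median of the valid samples (k = 3 by default), is a generic robust-statistics stand-in chosen for illustration; it is not the I-AC threshold rule, and the function name and parameter k are hypothetical.

```python
from statistics import median


def adaptive_zeroing(pupil, k=3.0):
    """Zero out implausibly small pupil samples using a threshold computed
    from this log file alone.

    Stand-in rule (NOT the published I-AC thresholds): a sample is treated as
    blink-contaminated if it is non-positive or lies more than k median
    absolute deviations (MAD) below the median of the positive samples.
    """
    valid = [p for p in pupil if p > 0]
    if not valid:
        return [0.0] * len(pupil)       # nothing usable in this log file
    med = median(valid)
    mad = median(abs(p - med) for p in valid)
    lower = med - k * mad               # threshold adapts to each log file
    return [p if p > 0 and p >= lower else 0.0 for p in pupil]


# Hypothetical log file: a blink whose edges contain partially occluded samples
log = [3.0, 3.1, 2.9, 3.0, 1.1, 0.0, 0.0, 1.4, 3.0, 3.1]
print(adaptive_zeroing(log))
# [3.0, 3.1, 2.9, 3.0, 0.0, 0.0, 0.0, 0.0, 3.0, 3.1]
```

Because the threshold is recomputed from each log file, no per-participant or per-condition tuning is needed. Like the I-AC, however, this sketch presumes roughly constant lighting within a file, since a lighting-driven change in pupil size would shift the median on which the threshold depends. The zeroed output can then be passed to any blink detection module.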

One limitation of this study is that we used only one eye-tracking device for data recording. At present, we do not know how the I-AC would perform on data logs from different eyetrackers, and such a comparison would be of great value. Furthermore, the adaptive thresholding method described assumes that ambient lighting is kept constant within each experimental trial, that is, within each log file examined by the algorithm. If the amount of light reaching the pupil changes during a trial, further processing is needed to correct the pupil size values for momentary lighting variations.

Notes

1. This could be related to the experimental task, since Caffier et al. (2003) asked participants to look at static 25 × 35 cm pictures, a task that presumably does not require abrupt vertical gaze shifts.

References

Ahlstrom, U., & Friedman-Berg, F. J. (2006). Using eye movement activity as a correlate of cognitive workload. International Journal of Industrial Ergonomics, 36, 623–636. doi:10.1016/j.ergon.2006.04.002

Bailey, B. P., Busbey, C. W., & Iqbal, S. T. (2007). TAPRAV: An interactive analysis tool for exploring workload aligned to models of task execution. Interacting with Computers, 19, 314–329. doi:10.1016/j.intcom.2007.01.004

Beatty, J. (1982). Task-evoked pupillary responses, processing load, and the structure of processing resources. Psychological Bulletin, 91, 276–292. doi:10.1037/0033-2909.91.2.276

Beatty, J., & Lucero-Wagoner, B. (2000). The pupillary system. In J. T. Cacioppo, L. G. Tassinary, & G. G. Berntson (Eds.), Handbook of psychophysiology (2nd ed., pp. 142–162). Cambridge: Cambridge University Press.

Bentivoglio, A. R., Bressman, S. B., Cassetta, E., Carretta, D., Tonali, P., & Albanese, A. (1997). Analysis of blink rate patterns in normal subjects. Movement Disorders, 12, 1028–1034. doi:10.1002/mds.870120629

Brookings, J. B., Wilson, G. F., & Swain, C. R. (1996). Psychophysiological responses to changes in workload during simulated air traffic control. Biological Psychology, 42, 361–377. doi:10.1016/0301-0511(95)05167-8

Caffier, P. P., Erdmann, U., & Ullsperger, P. (2003). Experimental evaluation of eye-blink parameters as a drowsiness measure. European Journal of Applied Physiology, 89, 319–325. doi:10.1007/s00421-003-0807-5

Calkins, M. E., Katsanis, J., Hammer, M. A., & Iacono, W. G. (2001). The misclassification of blinks as saccades: Implications for investigations of eye movement dysfunction in schizophrenia. Psychophysiology, 38, 761–767. doi:10.1111/1469-8986.3850761

Chau, M., & Betke, M. (2005). Real time eye tracking and blink detection with USB cameras (Tech. Rep. 2005-12). Boston: Boston University Computer Science.

Chermahini, S. A., & Hommel, B. (2010). The (b)link between creativity and dopamine: Spontaneous eye blink rates predict and dissociate divergent and convergent thinking. Cognition, 115, 458–465. doi:10.1016/j.cognition.2010.03.007

Cohn, J. F., Xiao, J., Moriyama, T., Ambadar, Z., & Kanade, T. (2003). Automatic recognition of eye blinking in spontaneously occurring behavior. Behavior Research Methods, Instruments, & Computers, 35, 420–428.

Fogarty, C., & Stern, J. A. (1989). Eye movements and blinks: Their relationship to higher cognitive processes. International Journal of Psychophysiology, 8, 35–42. doi:10.1016/0167-8760(89)90017-2

Fukuda, K., Stern, J. A., Brown, T. B., & Russo, M. B. (2005). Cognition, blinks, eye-movements, and pupillary movements during performance of a running memory task. Aviation, Space, and Environmental Medicine, 76(7), 75–85.

Gitelman, D. R. (2002). ILAB: A program for postexperimental eye movement analysis. Behavior Research Methods, Instruments, & Computers, 34, 605–612.

Goldstein, R., Bauer, L. O., & Stern, J. A. (1992). Effect of task difficulty and interstimulus interval on blink parameters. International Journal of Psychophysiology, 13, 111–117. doi:10.1016/0167-8760(92)90050-L

Goldwater, B. C. (1972). Psychological significance of pupillary movements. Psychological Bulletin, 77, 340–355. doi:10.1037/h0032456

Holland, M. K., & Tarlow, G. (1972). Blinking and mental load. Psychological Reports, 31, 119–127.

Holland, M. K., & Tarlow, G. (1975). Blinking and thinking. Perceptual and Motor Skills, 41, 403–406.

Ingre, M., Åkerstedt, T., Peters, B., Anund, A., & Kecklund, G. (2006). Subjective sleepiness, simulated driving performance and blink duration: Examining individual differences. Journal of Sleep Research, 15, 47–53. doi:10.1111/j.1365-2869.2006.00504.x

Joyce, C. A., Gorodnitsky, I. F., & Kutas, M. (2004). Automatic removal of eye movement and blink artifacts from EEG data using blind component separation. Psychophysiology, 41, 313–325. doi:10.1111/j.1469-8986.2003.00141.x

Kahneman, D. (1973). Attention and effort. Englewood Cliffs: Prentice Hall.

Kawato, S., & Tetsutani, N. (2004). Detection and tracking of eyes for gaze-camera control. Image and Vision Computing, 22, 1031–1038. doi:10.1016/j.imavis.2004.03.013

Kennard, D. W., & Glaser, G. H. (1964). An analysis of eyelid movements. The Journal of Nervous and Mental Disease, 139, 31–48.

Kennard, D. W., & Smyth, G. L. (1963). Interaction of mechanisms causing eye and eyelid movement. Nature, 197, 50–52.

Kirchner, W. K. (1958). Age differences in short-term retention of rapidly changing information. Journal of Experimental Psychology, 55, 352–358. doi:10.1037/h0043688

Lalonde, M., Byrns, D., Gagnon, D., Teasdale, N., & Laurendeau, D. (2007). Real-time eye blink detection with GPU-based SIFT tracking. In Proceedings of the 4th Canadian Conference on Computer and Robot Vision (CRV) (pp. 481–487). Montreal, Quebec. doi:10.1109/CRV.2007.54

Leal, S., & Vrij, A. (2008). Blinking during and after lying. Journal of Nonverbal Behavior, 32, 187–194. doi:10.1007/s10919-008-0051-0

Marshall, S. P. (2000). Method and apparatus for eye tracking and monitoring pupil dilation to evaluate cognitive activity. US Patent No. 6,090,051.

Marshall, S. P. (2002). The index of cognitive activity: Measuring cognitive workload. In Proceedings of the 7th Conference on Human Factors and Power Plants (pp. 7.5–7.9). Los Alamitos, CA: IEEE Computer Society. doi:10.1109/HFPP.2002.1042860

Marshall, S. P. (2008). Mental alertness level determination. US Patent No. 7,344,251 B2.

Morris, T., Blenkhorn, P., & Zaidi, F. (2002). Blink detection for real-time eye tracking. Journal of Network and Computer Applications, 25, 129–143. doi:10.1006/jnca.2002.0130

Noguchi, Y., Nopsuwanchai, R., Ohsuga, M., & Kamakura, Y. (2007). Classification of blink waveforms towards the assessment of driver’s arousal level—An approach for HMM based classification from blinking video sequence. In D. Harris (Ed.), Engineering psychology and cognitive ergonomics (HCII 2007, LNAI 4562) (pp. 779–786). Berlin: Springer. doi:10.1007/978-3-540-73331-7_85

Nyström, M., & Holmqvist, K. (2010). An adaptive algorithm for fixation, saccade, and glissade detection in eye-tracking data. Behavior Research Methods, 42, 188–204. doi:10.3758/BRM.42.1.188

Ohno, T., Mukawa, N., & Kawato, S. (2003). Just blink your eyes: A head-free gaze tracking system. In Extended abstract of the ACM Conference on Human Factors in Computing Systems (CHI2003) (pp. 950–951). Ft. Lauderdale, FL. doi:10.1145/765891.766088

Orchard, L. N., & Stern, J. A. (1991). Blinks as an index of cognitive activity during reading. Integrative Physiological and Behavioral Science, 26, 108–116. doi:10.1007/BF02691032

Recarte, M. A., Pérez, E., Conchillo, A., & Nunes, L. M. (2008). Mental workload and visual impairment: Differences between pupil, blink, and subjective rating. The Spanish Journal of Psychology, 11, 374–385.

Salvucci, D. D., & Anderson, J. R. (2001). Automated eye-movement protocol analysis. Human–Computer Interaction, 16, 39–86. doi:10.1207/S15327051HCI1601_2

Salvucci, D. D., & Goldberg, J. H. (2000). Identifying fixations and saccades in eye-tracking protocols. In A. T. Duchowski (Ed.), Proceedings of the Eye Tracking Research and Applications Symposium (pp. 71–78). New York: ACM Press. doi:10.1145/355017.355028

Smilek, D., Carriere, J. S. A., & Cheyne, J. A. (2010). Out of mind, out of sight: Eye blinking as indicator and embodiment of mind wandering. Psychological Science, 20, 1–4. doi:10.1177/0956797610368063

Smith, P., Shah, M., & da Vitoria Lobo, N. (2003). Determining driver visual attention with one camera. IEEE Transactions on Intelligent Transportation Systems, 4, 205–218. doi:10.1109/TITS.2003.821342

Stern, J. A., Walrath, L. C., & Goldstein, R. (1984). The endogenous eyeblink. Psychophysiology, 21, 22–33. doi:10.1111/j.1469-8986.1984.tb02312.x

Stern, J. A., Boyer, D., & Schroeder, D. (1994). Blink rate: A possible measure of fatigue. Human Factors, 36, 285–297.

Sukno, F. M., Pavani, S., Butakoff, C., & Frangi, A. F. (2009). Automatic assessment of eye blinking patterns through statistical shape models. In Proceedings of the 7th International Conference on Computer Vision Systems (pp. 33–42). Berlin: Springer. doi:10.1007/978-3-642-04667-4

Veltman, J. A., & Gaillard, A. W. K. (1996). Physiological indices of workload in a simulated flight task. Biological Psychology, 42, 323–342. doi:10.1016/0301-0511(95)05165-1

Veltman, J. A., & Gaillard, A. W. K. (1998). Physiological workload reactions to increasing levels of task difficulty. Ergonomics, 41, 656–669.

Volkmann, F. C. (1986). Human visual suppression. Vision Research, 26, 1401–1416. doi:10.1016/0042-6989(86)90164-1

Volkmann, F. C., Riggs, L. A., & Moore, R. K. (1980). Eyeblinks and visual suppression. Science, 207, 900–902.

Volkmann, F. C., Riggs, L. A., Ellicott, A. G., & Moore, R. K. (1982). Measurement of visual suppression during opening, closing and blinking of the eyes. Vision Research, 22, 991–996. doi:10.1016/0042-6989(82)90035-9

Wibbenmeyer, R., Stern, J. A., & Chen, S. C. (1983). Elevation of visual threshold associated with eyeblink onset. The International Journal of Neuroscience, 18, 279–286.

Zuber, B. L., & Stark, L. (1966). Saccadic suppression: Elevation of visual threshold associated with saccadic eye movements. Experimental Neurology, 16, 65–79. doi:10.1016/0014-4886(66)90087-2

Acknowledgments

The authors would like to thank Dipl.-Inform. Stefan Damke and Dipl.-Ing. Mario Lasch for the technical support provided during the experimental setup, Dipl.-Inform. Christian Kothe for the N-back software implementation, and the anonymous reviewers for their contributions.

About this article

Cite this article

Pedrotti, M., Lei, S., Dzaack, J. et al. A data-driven algorithm for offline pupil signal preprocessing and eyeblink detection in low-speed eye-tracking protocols. Behav Res 43, 372–383 (2011). https://doi.org/10.3758/s13428-010-0055-7

DOI: https://doi.org/10.3758/s13428-010-0055-7