Abstract

Aging is associated with changes in cognitive and affective functioning, which likely shape older adults’ social cognition. As the neural and psychological mechanisms underlying age differences in social abilities remain poorly understood, the present study aims to extend the research in this field. To this purpose, younger (n = 30; Mage = 26.6), middle-aged (n = 30; Mage = 48.4), and older adults (n = 29; Mage = 64.5) performed a task designed to assess affective perspective-taking, during an EEG recording. In this task, participants decided whether a target facial expression of emotion (FEE) was congruent or incongruent with that of a masked intervener of a previous scenario, which portrayed a neutral or an emotional scene. Older adults showed worse performance in comparison to the other groups. Regarding electrophysiological results, while younger and middle-aged adults showed higher late positive potentials (LPPs) after FEEs congruent with previous scenarios than after incongruent FEEs, older adults had similar amplitudes after both. This insensitivity of older adults’ LPPs in differentiating congruent from incongruent emotional context-target FEE may be related to their difficulty in generating information about others’ inner states and using that information in social interactions.

Emotional perspective-taking is an important source of human empathy and is considered an indispensable element in the fully developed, mature Theory of Mind (ToM; Decety & Jackson, 2004). It is defined as the ability to take the perspective of another person, and determines the success of social interactions (Derntl et al., 2009). Through perspective-taking, one is able to infer, understand, and foresee others’ mental and emotional states – ToM – as well as to share and to respond appropriately to such states – empathy.

Investigations on perspective-taking abilities during aging have been mainly conducted with the perspective-taking subscale of the Interpersonal Reactivity Index (IRI; Davis, 1983). While one study showed that older adults reported better perspective-taking than younger adults but worse than middle-aged adults (O’Brien, Konrath, Grühn, & Hagen, 2012), another study revealed that this ability seems not to change with aging (Yi et al., 2017). However, considering that aging appears to affect ToM abilities (Henry, Phillips, Ruffman, & Bailey, 2013; Moran, 2013), it is important to understand the neural and psychological mechanisms underlying such age-related differences. According to a meta-analysis, younger adults outperform older adults in all types of ToM tasks (Henry et al., 2013). However, studies that included matched control tasks showed that age-related deficits are similar in both ToM and non-ToM conditions, leaving it unclear whether group differences are specific to ToM or secondary to age-related cognitive decline (Henry et al., 2013). A comprehensive review suggested that older adults’ performance in tasks using visual stimuli is relatively independent of cognitive deficits, while performance in tasks using verbal stories is more dependent on cognitive abilities, such as working memory, processing speed, and executive function (Moran, 2013).

Neuroimaging studies suggested that older adults compensate for impairments in domain-specific ToM by using domain-general processing skills (Moran, 2013). For instance, while younger and older adults activated the right inferior frontal gyrus (IFG) during a ToM task, an area associated with facial visual encoding (Kelley et al., 1998), older adults also had higher activation of the left IFG (Castelli et al., 2010), an area typically associated with verbal memory (Kelley et al., 1998). Other studies also found that older adults had reduced activation of brain areas associated with ToM, such as the anterior cingulate cortex (Castelli et al., 2010), medial prefrontal cortex, and temporoparietal junction (Moran, Jolly, & Mitchell, 2012).

Functional MRI studies have allowed localizing several regions implicated in perspective-taking processes (e.g., David et al., 2008; Jackson, Meltzoff, & Decety, 2006). However, considering the poor temporal resolution of fMRI, tracking the temporal nature of these processes is crucial to understand neuroimaging and behavioral results better. Studies using event-related potentials (ERPs), which have very precise temporal resolution, are well suited to this function (Liu, Sabbagh, Gehring, & Wellman, 2004). However, to the best of our knowledge, older adults were not included in any of the previous studies examining brain potentials related to ToM (Bowman, Liu, Meltzoff, & Wellman, 2012; Liu et al., 2004; Liu, Meltzoff, & Wellman, 2009; Meinhardt, Sodian, Thoermer, Döhnel, & Sommer, 2011; Sabbagh & Taylor, 2000; Wang et al., 2010), or to perspective-taking abilities (Decety, Yang, & Cheng, 2010; Li & Han, 2010; McCleery, Surtees, Graham, Richards, & Apperly, 2011).

In an attempt to fill this gap, we examine age-related differences in behavioral and neural responses to a perspective-taking task, which assesses participants’ ability to infer the emotional states of others. To this purpose, we adapted a task previously used in an fMRI study (Derntl et al., 2009), in which participants observe scenarios showing two persons involved in social interactions, portraying emotional and neutral scenes. One person in each scenario is masked, and participants must infer his/her affective mental state. Then, a target facial expression of emotion (FEE) is displayed, and participants have to decide if the emotion presented is congruent or incongruent with the emotion inferred during the scenario. In half of the trials the FEEs are congruent with the affective state of the person with the masked expression (congruent condition), whereas in the other half they are not (incongruent condition). This experimental design was based on previous studies that assessed contextual congruency (Diéguez-Risco, Aguado, Albert, & Hinojosa, 2013), defined here as the matching between a target emotion portrayed in a facial expression and the emotion portrayed by the previous scenario. Unlike in previous studies, an accurate decision in our task requires an accurate inference of the emotional state of the masked intervener. That is, participants have to accurately infer the emotion of a masked actor in a given scenario, compare it to the emotion presented in a target FEE, and decide whether the inferred and visualized emotions are congruent or incongruent. Thus, with this experimental manipulation, we investigate perspective-taking abilities through behavioral performance, while we assess how these abilities modulate two ERP components that are typically influenced by affective and evaluative congruency: the N170 and the late positive potential (LPP).

The N170 is an occipitotemporal negative deflection that usually appears at ~170 ms after stimulus onset (Rossion & Jacques, 2008). This component appears to reflect the earliest stage of facial structure encoding (Bentin, Allison, Puce, Perez, & McCarthy, 1996), but is also sensitive to the emotional content (Almeida et al., 2016; Hinojosa, Mercado, & Carretié, 2015). Regarding contextual effects, N170 amplitude is usually larger in congruent trials, at least when pictures are used as contextual stimuli (Hietanen & Astikainen, 2013; Righart & de Gelder, 2006; Righart & de Gelder, 2008a, 2008b). This component appears not to be affected by aging (Gao et al., 2009). While one study showed reduced lateralization (typically evidenced to the right hemisphere), interpreted as compensation for age-related decline (Komes, Schweinberger, & Wiese, 2014), another study showed that older adults had an increased N170 for negative faces and a similar N170 for positive faces (Liao, Wang, Lin, Chan, & Zhang, 2017). Indeed, a study from our group showed that younger, middle-aged, and older adults had similar performances in an emotional identification task, which was accompanied by an increased N170 amplitude in the older group (Gonçalves et al., 2018b).

The LPP is a centro-parietal positive deflection that usually appears between 300 and 700 ms after the stimulus onset (Herring, Taylor, White, & Crites, 2011). This component reflects facilitated attention to emotional stimuli, and its amplitude is greater after emotionally arousing compared to neutral pictures (Foti & Hajcak, 2008). In studies such as ours, which manipulated congruency between a prime and a target, the LPP indexes the processing of affective or evaluative congruency, and its amplitude is larger to incongruent targets compared to congruent ones (Morioka et al., 2016). This component is also modulated by cognitive appraisal and motivated attention (Weinberg & Hajcak, 2010), helping scenes (Cowell & Decety, 2015), and morally good actions (Yoder & Decety, 2014). Previous studies showed reduced LPP amplitude in older adults during the presentation of unpleasant stimuli, and augmented amplitude during pleasant scenes (Kisley, Wood, & Burrows, 2007; Wood & Kisley, 2006). However, another study showed reduced LPP for older adults in all emotional scenes, regardless of content (Renfroe, Bradley, Sege, & Bowers, 2016). A study from our group found that, in comparison with younger adults, older adults showed a reduced LPP during the visualization of scenarios displaying harmful action, which participants had to evaluate as intentional or accidental (Pasion et al., 2018).

In our experiment, we used visual stimuli to assess perspective-taking independently of general cognition (Moran, 2013). However, to confirm this assumption, neurocognitive measures were collected, and the scores were correlated with behavioral and electrophysiological results. Based on previous findings about the effects of aging on ToM, we predicted poorer performance of older adults in our perspective-taking task. Regarding electrophysiological results, we predicted the influence of perspective-taking processes on early and later stages of the affective and evaluative processing, indexed by a modulation of N170 and LPP components. The N170 should be enhanced in congruent trials (Hietanen & Astikainen, 2013; Righart & de Gelder, 2006; Righart & de Gelder, 2008a, 2008b), while the LPP should be enhanced in incongruent trials (Diéguez-Risco et al., 2013; Dozolme, Brunet-Gouet, Passerieux, & Amorim, 2015; Herring et al., 2011). As we expected a decreased perspective-taking in the older group, we hypothesized that these ERP modulations would be absent in this group.

Method

Participants

One hundred and eighty-three participants were recruited from the local community and assigned to three age groups: younger adults (age range: 20−35 years), middle-aged adults (age range: 40−55 years), and older adults (age range: 60−75 years). We excluded participants with scores lower than 22 (n = 6) in the Montreal Cognitive Assessment (MoCA; Nasreddine et al., 2005; Portuguese norms by Freitas, Simões, & Santana, 2014), as well as participants who reported uncorrected visual impairments (n = 3), use of psychotropic medication (n = 15), history of brain injury, alcohol or drug abuse, and neurological or psychiatric diagnosis (n = 25). Besides the participants excluded, 45 participants dropped out of the study before the electrophysiological assessment, leading to a final sample composed of 30 younger (15 female; Mage = 26.6, SD = 4.05, Meducation = 16.3, SD = 4.05), 30 middle-aged (14 female; Mage = 48.4, SD = 5.50, Meducation = 15.4, SD = 4.81), and 29 older (18 female; Mage = 64.5, SD = 4.10, Meducation = 14.1, SD = 4.84) adults. All participants were Caucasian and the groups were statistically matched for years of formal education, F(2,88) = 1.96, p = .148, sex, χ2 (2, N = 89) = 0.92, p = .631, and handedness, χ2 (2, N = 89) = 2.77, p = .250.

A formal sample size calculation was not performed due to the exploratory nature of this investigation and the lack of information regarding the effects of perspective-taking abilities in the ERP components of interest. However, prior to conducting the study, we targeted a sample size of 30 for each age group, somewhat higher than in previous studies using ERP methodologies applied to perspective-taking or ToM tasks (Bowman et al., 2012; Decety et al., 2010; Li & Han, 2010; Liu et al., 2004; Liu et al., 2009; Meinhardt et al., 2011; McCleery et al., 2011; Wang et al., 2010). The current study was part of a larger research project (Fernandes et al., 2018; Gonçalves et al., 2018b; Pasion et al., 2018), which was approved by the local Ethics Committee. Participants provided written informed consent in accordance with the Declaration of Helsinki and were compensated with a fixed amount of 20€ (as a gift card) for their time.

Instruments and tasks

Neuropsychological measures

The MoCA was used as a screening instrument since it was specifically developed for the assessment of milder forms of cognitive impairment (Freitas et al., 2014). According to a validation study performed with the Portuguese population, this instrument has an optimal cutoff point of 22 for mild cognitive impairment (Freitas et al., 2014). In addition, we also assessed: (a) executive functioning through the Trail Making Test (TMT = Part B - Part A; Armitage, 1946; Portuguese norms by Cavaco et al., 2013b) and the Institute of Cognitive Neurology Frontal Screening (IFS; Torralva, Roca, Gleichgerrcht, Lopez, & Manes, 2009; Portuguese norms by Moreira, Lima, & Vicente, 2014); (b) visuospatial short-term memory through the Corsi Block-Tapping Task (Wechsler, 2008); (c) non-motor processing speed and language production through the Semantic and Phonemic Fluency tests (Strauss, Sherman, & Spreen, 2006; Portuguese norms by Cavaco et al., 2013a); and (d) learning, immediate and episodic memory through the Auditory Verbal Learning Test (AVLT; Boake, 2000; Portuguese norms by Cavaco et al., 2015). Finally, anxiety and depression were evaluated by the Hospital Anxiety and Depression Scale (HADS; Snaith & Zigmond, 1994; Portuguese norms by Pais-Ribeiro et al., 2007), and psychopathological symptoms were assessed through the Brief Symptom Inventory (BSI; Derogatis, 1982/1993; Portuguese norms by Canavarro, 1999).

Emotional perspective-taking task

This task was adapted from a previous fMRI study that assessed perspective-taking (Derntl et al., 2009). In order to assess this ability during an ERP experiment, we adapted the protocol used by Derntl et al. (2009) according to experimental designs that were previously used in studies of contextual congruency (Diéguez-Risco et al., 2013; Dozolme et al., 2015; Herring et al., 2011; Hietanen & Astikainen, 2013; Righart & de Gelder, 2006; Righart & de Gelder, 2008a, 2008b). In the present study, contextual congruency is defined by the matching between a target FEE and the emotion portrayed by a masked face in a previous scenario. To judge contextual congruency accurately, participants had to engage perspective-taking abilities: first, they had to see the scenario and analyze it from the other’s point of view; then they had to judge whether an FEE displayed afterward was congruent or not with the expected emotion of the masked person.

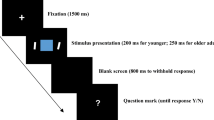

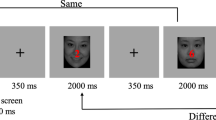

To this purpose, participants viewed 360 pictures depicting scenes of two persons (Caucasians) involved in social interactions. In each scenario, the face of one of the actors was masked, and participants were asked to infer her/his emotional expression (see Fig. 1 for an example). The scenarios portrayed emotional (anger, fear, disgust, sadness, happiness) and neutral scenes. After the scenario, a target FEE was displayed, and participants had to decide if it was congruent or incongruent with the inferred FEE of the masked character, based on their perspective-taking abilities. Participants were instructed to respond only when the response slide was displayed (to prevent preparatory response potentials from overlapping with the ERP components of interest) using two response buttons held in the right and left hand. Half of the sample used the left button to respond “yes” and the right button to respond “no,” while the other half used the opposite response scheme. The structure of each trial is depicted in Fig. 1.

Schematic representation of a trial of the perspective-taking task. In this example, the scenario was a picture portraying a “disgust” scene. The scenario was followed by an interstimulus interval (displaying a “+” in the center of the screen for 250 ms) and by a facial expression of emotion (FEE) congruent (a) or incongruent (b) with the emotion that was portrayed. The FEE was followed by an interstimulus interval (500 ms) and by the response slide. The next trial started after an intertrial interval (500 ms)

The original set of stimuli (Derntl et al., 2009) included ten scenarios for each emotional condition, and each one was repeated six times. In the congruent conditions, the FEE was randomly selected from all alternative actors with the congruent emotion. In the incongruent conditions, the FEE was randomly selected from all the incongruent alternatives. This led to 30 congruent trials (in which the target FEE was congruent with the emotion portrayed in the scenario) and 30 incongruent trials (in which the target FEE was incongruent with the emotion portrayed in the scenario) for each emotional condition. The FEEs were selected from the NimStim Face Stimulus Set (Tottenham et al., 2009), and displayed adult Caucasians with closed mouth and direct eye contact. For each emotional condition, we selected the five most accurately identified facial expressions according to the original study (Tottenham et al., 2009), resulting in 30 female and 30 male facial stimuli.
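The trial counts described above can be checked with a short sketch. It is only an illustration under stated assumptions: the text reports ten scenarios per condition, six repetitions per scenario, and 30 congruent plus 30 incongruent trials per condition, but it does not state how congruency was distributed across the repetitions of a given scenario. The even split (three congruent and three incongruent presentations per scenario), the function name `build_trials`, and the dictionary keys are hypothetical.

```python
import random

EMOTIONS = ["anger", "fear", "disgust", "sadness", "happiness", "neutral"]
N_SCENARIOS = 10      # scenarios per emotional condition (from the text)
N_REPETITIONS = 6     # presentations of each scenario (from the text)


def build_trials(seed=0):
    """Return a shuffled list of trial dicts for the whole task."""
    rng = random.Random(seed)
    trials = []
    for emotion in EMOTIONS:
        for scenario in range(N_SCENARIOS):
            for rep in range(N_REPETITIONS):
                # Assumption: half of the repetitions of each scenario
                # are paired with a congruent target expression.
                congruent = rep < N_REPETITIONS // 2
                if congruent:
                    target = emotion
                else:
                    # Incongruent target drawn from the other conditions.
                    others = [e for e in EMOTIONS if e != emotion]
                    target = rng.choice(others)
                trials.append({
                    "scenario_emotion": emotion,
                    "scenario_id": scenario,
                    "target_emotion": target,
                    "congruent": congruent,
                })
    rng.shuffle(trials)
    return trials


trials = build_trials()
n_congruent_fear = sum(
    t["congruent"] for t in trials if t["scenario_emotion"] == "fear"
)
```

Under these assumptions the list contains 6 × 10 × 6 = 360 trials, with 30 congruent and 30 incongruent trials for each of the six conditions, in line with the totals reported in the text.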

The task was programmed and delivered in E-Prime 2.0 (2011, Psychology Software Tools, Inc., Sharpsburg, PA, USA), and was composed of two experimental blocks of 180 trials, with a pause between them. The stimuli were presented on a 17-in. screen with 6.67° × 8.55° visual angle and a refresh rate of 60 Hz.
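For readers who wish to reproduce the display geometry, the relation between visual angle, physical size, and viewing distance can be sketched as follows. This is a minimal illustration that assumes the reported 6.67° × 8.55° refers to the stimuli as seen from the viewing distance of approximately 115 cm reported under Procedures; the function names are hypothetical, and the text does not state which value is the horizontal extent.

```python
import math

VIEWING_DISTANCE_CM = 115.0   # approximate distance reported under Procedures


def size_from_visual_angle(angle_deg, distance_cm=VIEWING_DISTANCE_CM):
    """Physical extent (cm) that subtends angle_deg at distance_cm."""
    return 2.0 * distance_cm * math.tan(math.radians(angle_deg) / 2.0)


def visual_angle(size_cm, distance_cm=VIEWING_DISTANCE_CM):
    """Visual angle (degrees) subtended by size_cm at distance_cm."""
    return math.degrees(2.0 * math.atan(size_cm / (2.0 * distance_cm)))


# Assumption: the two reported angles are the two sides of the stimulus.
side_a_cm = size_from_visual_angle(6.67)
side_b_cm = size_from_visual_angle(8.55)
```

With these inputs the two sides come out at roughly 13 cm and 17 cm, which would fit within a 17-in. display.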

Procedures

Participants were tested individually in two experimental sessions to avoid fatigue effects. The first session included the neuropsychological assessment and the second included the electrophysiological experiment. During the first session, participants gave informed consent and completed a semi-structured interview followed by the MoCA. The remaining tests and self-report measures were administered afterward, in a random order between participants. The AVLT was applied in the second session so that words presented in other tests of the neuropsychological assessment would not interfere with its subtests. During the second session, participants sat inside an EEG chamber, with ~115 cm between them and the screen where the experimental task was displayed. After the placement of the EEG cap, participants read the instructions and completed six practice trials. Whenever possible, data collection sessions were conducted in the middle of the morning or afternoon, and the participants from the three groups were equally distributed in this schedule.

EEG recording and processing

The electroencephalographic (EEG) data were recorded through the NetStation V4.5.2 (2008, Electrical Geodesics Inc., Eugene, OR, USA – EGI), using a 128-electrode Hydrocel Geodesic Sensor Net, connected to a Net Amps 300 amplifier. Impedances were kept below 50 kOhm for all electrodes (since this is a high impedance system). The EEG data were recorded with a sampling rate of 500 Hz, filtered with a notch filter of 50 Hz and referenced to the Cz.

During the pre-processing, conducted in EEGLAB version 11 (Delorme & Makeig, 2004), a MATLAB toolbox (2010, The Mathworks Inc., Natick, MA, USA), the continuous EEG signal was downsampled to 250 Hz and bandpass filtered (0.2−30 Hz). The EEG signal underwent Independent Components Analysis, and eyeblink, saccade, and heart rate artifacts were corrected by subtracting the respective Independent Component activity from the data. Channels with artifacts were interpolated (maximum of 10% of the sensors) using the spherical spline interpolation method (Perrin, Pernier, Bertrand, & Echallier, 1989). At this point, the EEG signal was re-referenced to the average of all electrodes and segmented into epochs (-200 to 800 ms) time-locked to the onset of the target FEE. All segments were visually inspected and epochs containing alpha activity, electrode drifts, and cardiac, muscle, and ocular artifacts were rejected. Trials in which participants gave incorrect responses were also excluded, resulting in a significantly different number of valid trials between groups, F(2, 86) = 7.30, p = .001 (see Footnote 1). All epochs were baseline-corrected (200 ms pre-stimulus) and averaged by congruency (congruent, incongruent) and emotion (anger, fear, disgust, sadness, happiness, neutral).
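The segmentation and baseline-correction steps can be illustrated with a minimal sketch. It is not the EEGLAB pipeline used in the study; it only shows, for a single channel stored as a plain list, how a -200 to 800 ms epoch at 250 Hz maps onto samples and how the mean of the 200 ms pre-stimulus interval is subtracted. All function names and the toy signal are hypothetical.

```python
SAMPLING_RATE_HZ = 250        # after downsampling (from the text)
EPOCH_START_MS = -200         # epoch limits relative to FEE onset
EPOCH_END_MS = 800


def ms_to_samples(duration_ms, rate_hz=SAMPLING_RATE_HZ):
    """Convert a duration in ms to a whole number of samples."""
    return duration_ms * rate_hz // 1000


def extract_epoch(signal, onset_index):
    """Cut the samples from 200 ms before to 800 ms after the onset."""
    start = onset_index + ms_to_samples(EPOCH_START_MS)
    stop = onset_index + ms_to_samples(EPOCH_END_MS)
    return signal[start:stop]


def baseline_correct(epoch):
    """Subtract the mean of the pre-stimulus samples from the epoch."""
    n_baseline = ms_to_samples(-EPOCH_START_MS)
    baseline_mean = sum(epoch[:n_baseline]) / n_baseline
    return [value - baseline_mean for value in epoch]


# Toy single-channel signal: offset of 5.0 before onset, 7.0 afterwards.
signal = [5.0] * 100 + [7.0] * 300
epoch = extract_epoch(signal, onset_index=100)
corrected = baseline_correct(epoch)
```

In this toy case the epoch spans 250 samples, the 50 baseline samples become zero after correction, and the post-onset samples retain only their 2.0 difference from baseline.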

Based on previous studies and visual inspection of grand-average waveforms and topographical maps, three time-windows and three regions of interest (ROIs; see Footnote 2) were selected for statistical analysis (Fig. 2). The N170 component reaches its highest amplitude bilaterally at occipitotemporal regions, specifically at P7/P8 and PO7/PO8, and is more pronounced at inferior locations such as P9/P10 and PO9/PO10 (Rossion & Jacques, 2008). The visual inspection of our topographical maps showed a negative maximum over these regions, leading us to select a ROI including these and a cluster of surrounding electrodes, in order to increase the signal-to-noise ratio (Musial, Baker, Gerstein, King, & Keating, 2002; Zeman, Till, Livingston, Tanaka, & Driessen, 2007). Thus, the N170 peak amplitudes were calculated between 150 and 250 ms after FEE onset, at right (electrodes 83, 84, 89, 90 [PO8], 91, 95 [P10], 96 [P8]) and left (70, 66, 69, 65 [PO7], 64 [P9], 58 [P7], 59) ROIs. Similarly, previous studies showed that the LPP reaches its largest amplitude over centro-parietal sites such as CPz and Pz (Herring et al., 2011). As our topographical maps are consistent with this evidence (see below), we measured the mean LPP amplitudes at the centro-parietal ROI (54, 55 [CPz], 61, 62 [Pz], 78, 79). As the LPP shows a temporally broad distribution (e.g., Diéguez-Risco et al., 2015), we divided its corresponding time-window into an early (LPPe; 300–500 ms after FEE onset) and late component (LPPl; 500–700 ms).
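The two amplitude measures described above (a peak measure for the N170 and mean amplitudes for the LPPe and LPPl) can be sketched as follows. This is an illustration under assumptions rather than the procedure actually run: the text does not state whether the N170 peak was taken on the ROI-averaged waveform or per electrode, so the sketch averages the ROI channels first; epochs are assumed to be 250-sample lists starting 200 ms before FEE onset; and all function names and the toy waveform are hypothetical.

```python
SAMPLING_RATE_HZ = 250
BASELINE_MS = 200             # epoch starts 200 ms before FEE onset

# Electrode numbers of the three ROIs as listed in the text.
RIGHT_ROI = [83, 84, 89, 90, 91, 95, 96]
LEFT_ROI = [70, 66, 69, 65, 64, 58, 59]
CENTRO_PARIETAL_ROI = [54, 55, 61, 62, 78, 79]


def window_indices(start_ms, end_ms):
    """Sample indices covering [start_ms, end_ms) after FEE onset."""
    first = (start_ms + BASELINE_MS) * SAMPLING_RATE_HZ // 1000
    last = (end_ms + BASELINE_MS) * SAMPLING_RATE_HZ // 1000
    return first, last


def roi_average(epochs_by_channel, roi):
    """Average the waveforms of the ROI channels sample by sample."""
    waves = [epochs_by_channel[channel] for channel in roi]
    return [sum(samples) / len(samples) for samples in zip(*waves)]


def n170_peak(wave):
    """Most negative value between 150 and 250 ms (assumed peak rule)."""
    first, last = window_indices(150, 250)
    return min(wave[first:last])


def mean_amplitude(wave, start_ms, end_ms):
    """Mean amplitude within the given post-onset window."""
    first, last = window_indices(start_ms, end_ms)
    segment = wave[first:last]
    return sum(segment) / len(segment)


# Toy waveform: a -4.0 deflection near 172 ms and a +3.0 plateau
# from 300 to 700 ms, copied to every channel of interest.
toy = [0.0] * 250
toy[93] = -4.0
for i in range(125, 225):
    toy[i] = 3.0
channels = RIGHT_ROI + LEFT_ROI + CENTRO_PARIETAL_ROI
epochs_by_channel = {channel: list(toy) for channel in channels}

n170_right = n170_peak(roi_average(epochs_by_channel, RIGHT_ROI))
lpp_wave = roi_average(epochs_by_channel, CENTRO_PARIETAL_ROI)
lppe = mean_amplitude(lpp_wave, 300, 500)
lppl = mean_amplitude(lpp_wave, 500, 700)
```

Averaging across a cluster of electrodes before measuring is one common way to obtain the signal-to-noise benefit mentioned in the text; measuring per electrode and averaging afterwards would be an equally plausible reading.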

Statistical analysis

The results of the neuropsychological tests were compared through independent one-way ANOVAs, using group (younger, middle-aged, older adults) as a between-participants factor. Perspective-taking results were obtained from the accuracy rates (percentage of correct responses in relation to the total number of trials), computed by participant and condition. To investigate the effects of emotion, congruency, and group on perspective-taking results, we performed a mixed ANOVA, with emotion (anger, fear, disgust, sadness, happiness, neutral) and congruency (congruent, incongruent) as within-participants factors, and group (younger, middle-aged, older adults) as between-participants factor. The same model was used for reaction times.
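The accuracy measure entered into the mixed ANOVA can be illustrated with a brief sketch that computes the percentage of correct responses per participant and condition. The trial records, field names, and the function `accuracy_rates` are hypothetical and serve only to make the definition concrete.

```python
from collections import defaultdict


def accuracy_rates(trials):
    """Percent correct per (participant, emotion, congruency) cell."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for trial in trials:
        key = (trial["participant"], trial["emotion"], trial["congruency"])
        total[key] += 1
        correct[key] += int(trial["correct"])
    return {key: 100.0 * correct[key] / total[key] for key in total}


# Hypothetical responses of one participant in two cells of the design.
example_trials = [
    {"participant": "p01", "emotion": "fear", "congruency": "congruent", "correct": True},
    {"participant": "p01", "emotion": "fear", "congruency": "congruent", "correct": True},
    {"participant": "p01", "emotion": "fear", "congruency": "congruent", "correct": False},
    {"participant": "p01", "emotion": "fear", "congruency": "congruent", "correct": True},
    {"participant": "p01", "emotion": "fear", "congruency": "incongruent", "correct": False},
    {"participant": "p01", "emotion": "fear", "congruency": "incongruent", "correct": True},
]
rates = accuracy_rates(example_trials)
```

Each participant would contribute twelve such values (six emotions by two congruency levels) to the analysis.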

Regarding electrophysiological results, the N170 was analyzed in a mixed ANOVA, with hemisphere (left, right), congruency, and emotion as within-participants factors, and group as between-participants factor. The LPPs were analyzed in a mixed ANOVA, with congruency and emotion as within-participants factors, and group as between-participants factor. Exploratory analyses using gender as an additional between-participants factor were performed for the perspective-taking and electrophysiological results (described in the Online Supplementary Material).

To test whether group differences were correlated with age-related differences in cognitive abilities, age-specific Pearson’s correlations were computed between neurocognitive scores in which groups differed significantly and perspective-taking and ERP results (averaged by congruency). Significant correlations were explored through linear regression models, conducted by group, in which the neurocognitive scores were entered as main predictors of perspective-taking and electrophysiological results.

The threshold for statistical significance was set at α = .05, and the p-values reported for all analyses are from two-tailed tests. Statistical analysis was performed using SPSS 24 (IBM Corp., Armonk, NY, USA). Violations of sphericity were corrected via the Greenhouse-Geisser method, and post-hoc pairwise comparisons were corrected for multiple comparisons using the Sidak procedure.
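For reference, the Sidak adjustment and the effect size reported throughout the Results can be written compactly. The sketch below uses the standard formulas: the Sidak-adjusted p-value for m comparisons is 1 − (1 − p)^m, and partial eta squared can be recovered from an F ratio and its degrees of freedom as F·df_effect / (F·df_effect + df_error). The function names are hypothetical, and the snippet is not the SPSS implementation used for the analyses.

```python
def sidak_adjust(p_value, n_comparisons):
    """Sidak-adjusted p-value for a family of n_comparisons tests."""
    return min(1.0, 1.0 - (1.0 - p_value) ** n_comparisons)


def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F ratio and its dfs."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# Three pairwise comparisons among the three age groups.
adjusted = sidak_adjust(0.01, 3)

# Check against the main effect of group on accuracy, F(2, 86) = 27.3.
eta_group_accuracy = partial_eta_squared(27.3, 2, 86)
```

Applied to the main effect of group on accuracy, F(2, 86) = 27.3, the second function gives a value of about .388, which agrees with the effect size reported for that test.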

Results

Neuropsychological results

The groups did not differ in Semantic and Phonemic Fluency, anxiety, depression, or psychopathological symptomatology. Significant differences emerged in the MoCA, IFS, Corsi Block-Tapping Task, TMT, and the Learning, Delayed Recall, and Delayed Recognition subtests of the AVLT. Younger adults outperformed older adults in all of these neuropsychological tests, while middle-aged adults outperformed older adults in the MoCA, TMT, Corsi Block-Tapping Task, and the Learning and Delayed Recall subtests of the AVLT. Descriptive statistics and one-way ANOVA results are presented in Table 1 of the Online Supplementary Material.

Emotional perspective-taking task

Behavioral results

For accuracy, we found a main effect of group, F(2, 86) = 27.3, p < .001, η2p = .388, revealing that older adults were significantly less accurate than younger (p < .001) and middle-aged adults (p < .001). Middle-aged adults were marginally less accurate than younger adults (p = .054). We found a main effect of emotion, F(5, 430) = 102, p < .001, η2p = .542, ε = .980, revealing accuracy rates significantly higher following scenarios portraying happiness (p < .001), sadness, and neutral scenes, without significant differences between the latter (p > .998). Scenarios portraying anger, disgust, and fear elicited similar accuracy rates (all p > .988). We also found a main effect of congruency, F(1, 86) = 9.77, p = .002, η2p = .102, revealing higher accuracy rates in congruent than in incongruent trials (p = .002). The congruency*group interaction was non-significant (F < 1), but we found a significant emotion*congruency interaction, F(5, 430) = 18.9, p < .001, η2p = .180, along with a significant emotion*congruency*group interaction, F(10, 430) = 2.69, p = .003, η2p = .059. Post-hoc comparisons (represented in Fig. 3) revealed that the three groups obtained similar results in the congruent condition portraying happiness (all p > .534), but younger adults outperformed older adults in the remaining congruent and incongruent conditions (all p < .033). Younger and middle-aged adults had similar performances in all congruent and incongruent conditions (all p > .091), except for the incongruent condition portraying disgust (p = .011), in which younger adults had higher accuracy rates. Middle-aged adults outperformed older adults in all incongruent conditions (all p < .024), except for the incongruent condition portraying disgust (p = .074). Regarding congruent conditions, middle-aged adults outperformed older adults in conditions portraying disgust, neutrality, and sadness (all p < .022), and performed similarly in conditions portraying anger, fear, and happiness (all p > .186). Descriptive statistics of perspective-taking results are presented in Table 2 of the Online Supplementary Material.

Average rates of accuracy (%) for each emotional and congruence condition. Asterisks and letters represent significant differences (a) between younger and older adults; (b) between middle-aged and older adults; and (c) between younger and middle-aged adults. Error bars indicate 95% confidence intervals

Regarding reaction times, we obtained a main effect of group, F(2, 86) = 3.43, p < .001, η2p = .177, revealing faster responses for younger (p = .007) and middle-aged adults (p < .001) than for older adults. We also found a main effect of emotion, F(5, 430) = 3.43, p = .020, η2p = .038, ε = .597, revealing faster responses in scenarios portraying happiness than in the remaining scenarios (all p < .027), with the exception of trials portraying disgust (p = .322). We found a marginal main effect of congruency, F(1, 86) = 3.23, p = .076, η2p = .036, but no significant interactions emerged (all F < 1). Descriptive statistics of reaction times are presented in Table 3 of the Online Supplementary Material.

The results of the Pearson’s correlations are presented in Table 4 of the Online Supplementary Material. According to the linear regression models (Table 5 of the Online Supplementary Material), the scores of the IFS were significant predictors of the results obtained in the congruent conditions for younger adults, while the scores of the Learning subtest of the AVLT were significant predictors of the results obtained in the congruent conditions for older adults. The scores of the IFS were significant predictors of the results obtained in the incongruent conditions for older adults.

Electrophysiological results (see Footnote 3)

The statistical analysis of the N170 showed a significant main effect of group, F(2, 86) = 13.2, p < .001, η2p = .236, revealing N170 amplitudes that were significantly lower for younger than for middle-aged (p = .017) and older adults (p < .001). Middle-aged adults had N170 amplitudes marginally lower than older adults (p = .065). We found a main effect of hemisphere F(1, 86) = 9.67, p = .003, η2p = .101, revealing N170 amplitudes significantly higher at the right than at the left hemisphere. The main effect of emotion was marginally significant, F(5, 430) = 2.00, p = .078, η2p = .023, but the main effect of congruency was not significant (F < 1). We found a significant emotion*congruency interaction, F(5, 430) = 4.54, p < .001, η2p = .050, along with a significant emotion*congruency*hemisphere*group interaction, F(10, 430) = 2.19, p = .017, η2p = .048. No other significant interactions emerged (all F < 1.79, p > .060). Since our aim was to explore age differences in the processing of FEEs congruent and incongruent with previous scenarios, we explored this three-way interaction through three repeated measures ANOVAs, conducted by group (Fig. 4). Results of younger and middle-aged adults showed marginally significant emotion*congruency interactions, respectively F(5, 145) = 2.66, p = .050, η2p = .084, ε = .720 and F(5, 140) = 2.15, p = .069, η2p = .112. In older adults this interaction was significant, F(5, 140) = 3.52, p = .011, η2p = .112, ε = .887, revealing N170 amplitudes significantly higher for incongruent than for congruent conditions portraying fear (p = .007). Descriptive statistics of N170 amplitudes are presented in Table 6 of the Online Supplementary Material.

Statistical analysis of the LPPe showed a main effect of group, F(2, 86) = 3.79, p = .027, η2p = .081, revealing that younger adults had amplitudes significantly higher than middle-aged adults (p = .042), but marginally higher than older adults (p = .083). A main effect of congruency emerged, F(1, 86) = 29.5, p < .001, η2p = .255, revealing higher amplitudes for congruent than for incongruent conditions. However, we did not find a main effect of emotion, F(5, 430) = 2.38, p = .232, η2p = .016, ε = .947. The emotion*congruency*group was not significant, F(10, 430) = 1.03, p = .421, η2p = .023, but we found a significant congruency*group interaction, F(2, 86) = 5.24, p = .007, η2p = .109. Pairwise comparisons (Fig. 5) revealed that congruent and incongruent conditions elicited similar LPPe amplitudes for older adults (p = .313), but younger (p < .001) and middle-aged adults (p = .006) showed LPPe amplitudes significantly higher for congruent than for incongruent conditions.

Regarding the LPPl, we found a main effect of group, F(2, 86) = 3.27, p = .046, η2p = .071, revealing that younger adults had amplitudes marginally higher than older adults (p = .072). We did not find a main effect of emotion, F(5, 430) = 1.37, p = .236, η2p = .016, ε = .829, but we found a significant main effect of congruency, F(1, 86) = 33.4, p < .001, η2p = .279, revealing higher amplitudes for congruent than for incongruent conditions. The emotion*congruency*group interaction was not significant, F(10, 430) = 1.17, p = .312, η2p = .026, but we found a significant congruency*group interaction, F(2, 86) = 6.91, p = .002, η2p = .138. Pairwise comparisons (Fig. 5) revealed that congruent and incongruent conditions elicited similar LPPl amplitudes for older adults (p = .674), but younger (p < .001) and middle-aged adults (p < .001) showed LPPl amplitudes significantly higher for congruent than for incongruent conditions. The LPPs are plotted in Fig. 6, and descriptive statistics are presented in Table 7 of the Online Supplementary Material.

(a) Grand-average of LPPe (300−500 ms) and LPPl (500−700 ms) for younger, middle-aged, and older adults obtained in the centro-parietal region of interest. (b) Topographical maps for event-related potentials elicited by congruent and incongruent conditions at 300−500 ms (above) and 500−700 ms (below). Note: Since we did not find a main effect of emotion, all emotional conditions were averaged by congruency

For younger adults, the N170 amplitudes elicited by incongruent trials in the left hemisphere were positively correlated with the scores of the Delayed Recognition subtest of the AVLT (Table 8 of the Online Supplementary Material). The LPPe amplitudes elicited by congruent trials were positively correlated with the scores of the Learning subtest of the AVLT for younger adults, while the LPPe amplitudes elicited by incongruent trials were negatively correlated with the scores of the TMT for older adults. The LPPl amplitudes elicited by both congruent and incongruent trials were positively correlated with the scores of the Learning subtest of the AVLT for middle-aged adults, and negatively correlated with the scores of the Delayed Recognition subtest of the AVLT for older adults.

Discussion

Aging seems to affect ToM, but its effect on emotional perspective-taking is less well studied. Few studies have focused on the neural mechanisms underlying emotional perspective-taking, and the rare ones that investigated this ability with ERP methodology did not include older adults. Considering this gap, we adapted a perspective-taking task previously used in an fMRI study to build a context-target congruency task that could elicit two ERPs shown to be modulated by affective and evaluative congruency: the N170 and the LPP. Our aim was to examine how perspective-taking abilities – assessed in the present study as the accuracy in inferring the emotion of a masked actor in a given scenario and deciding its congruency with a target facial expression of emotion – could modulate the neural responses to congruent and incongruent context-target trials in different age groups.

Considering previous results found in ToM, we formulated two main predictions. We expected poorer performance of older adults in comparison to middle-aged and younger adults (Henry et al., 2013), independent of neurocognitive performance (Moran, 2013). We also predicted the influence of perspective-taking on earlier and later stages of affective processing, indexed by a modulation in the N170 and LPP components. The N170 should be enhanced in congruent trials (Hietanen & Astikainen, 2013; Righart & de Gelder, 2006, 2008a, 2008b), while the LPP should be enhanced in incongruent trials (Diéguez-Risco et al., 2013; Dozolme, Brunet-Gouet, Passerieux, & Amorim, 2015; Herring et al., 2011). As we expected a decreased perspective-taking in the older group, we predicted an absence of these ERP modulations in this group.

The behavioral results were consistent with our hypothesis. Younger adults outperformed older adults in all conditions except congruent trials portraying happiness, in which the groups performed similarly. This finding may be explained by the aging positivity effect (Mather & Carstensen, 2005), which postulates that older adults allocate more attention to, and remember better, positive than negative emotional stimuli (Nashiro, Sakaki, & Mather, 2012). Our results did not completely support this effect, since older adults showed similar rather than greater accuracy in trials portraying happiness. However, the positivity effect may manifest as a preserved ability to infer happiness during aging, in contrast to what happens with neutral and negative emotions. The worse performance of older adults in the remaining conditions is in accordance with previous findings. For instance, two meta-analyses showed that an age-related decline in emotional recognition is the predominant pattern across all emotions, with increased difficulties for anger, fear, and sadness (Gonçalves et al., 2018a; Ruffman, Henry, Livingstone, & Phillips, 2008). Middle-aged adults also outperformed older adults, but in fewer conditions than younger adults did.

Interestingly, performance in the congruent trials was significantly predicted by better executive functioning for younger adults and by better working memory for older adults. Performance in incongruent trials was also predicted by better executive function, but only for older adults. This was an unexpected finding if we consider results obtained in ToM tasks. According to a comprehensive review (Moran, 2013), older adults’ performance in ToM tasks using visual stimuli is relatively independent of cognitive abilities, while performance in tasks using verbal stories is more dependent on neurocognition. However, the complexity of our task may account for the increased impact of cognitive abilities on perspective-taking performance. Visual stimuli used previously in ToM tasks usually did not require the interpretation of stories (e.g., see the “reading the mind in the eyes” task by Baron-Cohen, Jolliffe, Mortimore, & Robertson, 1997). In contrast, tasks using verbal stimuli (e.g., see “strange stories” by Happé, 1994) may be more demanding on cognitive abilities, since they comprise situations where people say things that are not literally interpretable. In these stories, to understand the speaker’s intention, participants may have to sidestep the literal meaning of the sentence and infer the speaker’s mental state. Similarly, in our task, participants may have had to set aside their own perspective to respond accurately, which may explain the role of executive functioning in behavioral performance. Moreover, an accurate answer also depended on the ability to maintain in working memory the emotion inferred while viewing the scenario, which was then compared with the emotion displayed by the target FEE. Thus, as performance was predicted by better results in executive functions, our results suggest that the involvement of cognitive abilities in empathic tasks may depend on the complexity of the task, particularly on the demands on inhibitory control.

Regarding electrophysiological results, we found significantly more negative N170 amplitudes in the right hemisphere, which is a systematic finding (e.g., Rossion & Jacques, 2008). Interestingly, N170 amplitudes of older adults were significantly higher than those of younger adults and marginally higher than those of middle-aged adults. This is consistent with the results of a previous study conducted by our group, in which we examined age-related differences in emotion identification abilities (Gonçalves et al., 2018b). In that study, such results occurred together with equivalent performance in emotion identification, which is consistent with the compensation hypothesis (Cabeza, 2002; Reuter-Lorenz & Cappell, 2008). According to this hypothesis, additional neural activity serves a beneficial, compensatory function during aging, without which performance decline would occur. Taken together, these findings suggest that older adults may have a preserved ability to encode facial structure and emotion, supported by increased neural resources employed during this function.

The main effect of emotion was marginally significant, which may be explained by the lack of instructions to identify the emotion displayed by the target FEE. A previous study found an N170 modulated by emotion only in a task demanding emotional discrimination, in comparison with a task demanding sex discrimination (Wronka & Walentowska, 2011). This component was not modulated by the congruency between the emotional contexts and the target’s FEE, which was unexpected considering that previous studies that used pictures as contexts found a systematically higher N170 in congruent trials (Hietanen & Astikainen, 2013; Righart & de Gelder, 2006, 2008a, 2008b). Nonetheless, this modulation is less consistent in tasks using sentences as contexts. Two previous studies did not find a congruency modulation in the N170 (Diéguez-Risco et al., 2013; Dozolme et al., 2015), while one study showed a higher N170 in incongruent trials (Diéguez-Risco et al., 2015). Once again, it is possible that our results are closer to those obtained with verbal stimuli; an equivalent demand on interpretation would result only in the modulation of later ERP components.

Taking previous findings into account, the results concerning the emotion by congruency interaction are difficult to interpret. Righart and de Gelder (2006, 2008a, 2008b) found larger N170 amplitudes after fearful faces congruent with fearful scenarios, and Hietanen and Astikainen (2013) reported equivalent results in sad and happy conditions. However, Diéguez-Risco et al. (2015) found larger N170s for anger and happiness incongruent conditions, and we found similar results, but only for older adults and in the fear condition. These discrepant results may arise from differences in the task demands. While the earlier studies required participants to identify the emotion displayed by the target FEE, in the study by Diéguez-Risco et al. (2015), as in our study, participants had to judge the congruency between the target FEE and the preceding context. Thus, an enhanced N170 after incongruent trials could reflect the influence of deliberative processes that would involve the interaction of face-processing systems with previous knowledge about the emotional reactions that are expected in different situations. According to this interpretation, perceptual processing would be increased for stimuli involving a violation of expectations, since their emotional meaning is inconsistent with the context (Diéguez-Risco et al., 2015). This interpretation is plausible, but it does not fully explain why this result was found only for older adults and in such a specific emotional category. Such a discrepancy highlights that further work is needed to clarify the influence of different contextual stimuli on N170 modulation.

The results obtained in the LPPs were consistent between the early and late time-windows, showing higher amplitudes in congruent than in incongruent trials. The direction of this effect is unexpected, since previous studies using context-target congruency tasks found larger LPPs after incongruent FEEs, in conditions involving both implicit (Diéguez-Risco et al., 2013; Morioka et al., 2016) and explicit processing of congruency (Diéguez-Risco et al., 2015). Higher LPP amplitudes elicited by incongruent trials are systematically reported in the priming literature, namely in tasks requiring an evaluative congruity between primes and facial (Herring et al., 2011; Werheid, Alpay, Jentzsch, & Sommer, 2005) or verbal targets (Zhang, Li, Gold, & Jiang, 2010). This effect is interpreted as a spreading activation of affective priming, in which an affective context activates the associated affective evaluation, facilitating the processing of affectively congruent stimuli (Hietanen & Astikainen, 2013). For instance, in evaluative priming paradigms, a prime word such as “champion” activates pleasant concepts in memory, which produce a quicker response to target-congruent words (e.g., “saint”) compared to target-incongruent words (e.g., “killer”). In such tasks, incongruent targets would evoke larger LPPs, reflecting the more demanding retrieval required to evaluate congruency.

As we found an opposite pattern of activation, it is possible that our task evoked neural processes other than the ones underlying the priming effects reported by previous studies (Diéguez-Risco et al., 2013, 2015; Morioka et al., 2016). For instance, Diéguez-Risco et al. (2013, 2015) used happy- or anger-inducing sentences that might have worked as primes during the processing of FEEs. Similarly, in Morioka and colleagues’ study (2016), stimuli were sentences describing moderately affective events relevant to participants. In contrast, we instructed participants to attend to the scenes, infer the mental state of the masked interveners, and decide whether the FEE displayed after the scene matched the one previously inferred. As the LPP is also modulated by the explicit recognition of stimuli, being larger for recognized than for new stimuli (Danker et al., 2008; Münte, Urbach, Duzel, & Kutas, 2000), the increased amplitudes to congruent stimuli may reflect the recognition of the masked person’s emotion in the one displayed by the target FEE. This recruitment of memory resources to perform the perspective-taking task may also explain the unexpected influence of executive functioning on the behavioral results.

In line with our hypothesis, older adults did not show the LPP modulation found in younger and middle-aged adults. That is, while younger and middle-aged adults showed higher LPP amplitudes after FEEs congruent with previous scenarios than after incongruent FEEs, older adults had similar amplitudes after both. It is worth noting that this insensitivity of older adults’ LPP to the congruency of the trials is not explained by an age-related decline in face encoding, bearing in mind the results obtained with the N170 amplitudes (Gonçalves et al., 2018b). Moreover, this was observed independently of accuracy, since only correct trials were considered in the analysis of the ERPs. This result may therefore be interpreted as a difficulty of older adults in inferring accurate emotional states while viewing the scenarios. Thus, while younger and middle-aged adults are able to infer the actor’s appropriate emotional state during the observation of the scenario, which they maintain in working memory and then compare with the emotion displayed in the target FEE, older adults may only reach the correct response after observing the target FEE, which may act as a clue. This may underlie older adults’ difficulties in using the facial expressions of others to understand their inner states and take their emotional perspective, as well as to understand the relation between their mental states and the ongoing social events.

Considering that previous studies suggest there are differences in empathy between males and females (e.g., Christov-Moore et al., 2014), we performed an exploratory analysis comparing the behavioral and electrophysiological results of males and females. Although we did not find gender differences (Supplementary Material), a recent review showed quantitative gender differences in the basic networks involved in affective and cognitive forms of empathy, as well as qualitative differences in how emotional information is integrated to support decision-making processes (Christov-Moore et al., 2014). Our contrasting results therefore point to the need for further research in this field; designs with greater statistical power and convergent tasks may help to uncover the nature of gender differences in empathy.

Our results bring important contributions to the state of the art in the research field of aging and perspective-taking. Aging is typically associated with impairments in ToM, which can lead to difficulties in social functioning (Moran, 2013). As emotional perspective-taking is an indispensable element in the fully developed ToM (Decety & Jackson, 2004), research on this ability may help to better understand older adults’ difficulties during social interactions. Moreover, study of the modulation of ERPs by perspective-taking may unveil the time course of the processing of the information regarding contexts and the eliciting emotions, as well as the stage of this processing in which older adults’ difficulties emerged.

Interestingly, middle-aged adults appear to be at an intermediate level between younger and older adults, at least behaviorally. They had better results than older adults, but in fewer conditions than younger adults did. The lack of previous studies with a similar group design makes these results more difficult to interpret. The existing literature typically compares younger and older adults, but introducing middle-aged adults allows better analysis of the development of perspective-taking abilities across adulthood.

Notes

As a consequence, the statistical analyses performed on the ERP results were repeated using the number of valid trials as a covariate.

The electrode notation used for the ROIs corresponds to the 128-channel Geodesic Sensor Net (EGI). Electrodes given in brackets are their 10-10 International System equivalents.

The statistical analyses performed on the ERP results were repeated using the number of valid trials as a covariate. The ANCOVA results revealed no effect of the covariate on N170, LPPe, or LPPl amplitudes (all F < 1).

References

Almeida, P. R., Ferreira-Santos, F., Chaves, P. L., Paiva, T. O., Barbosa, F., & Marques-Teixeira, J. (2016). Perceived arousal of facial expressions of emotion modulates the N170, regardless of emotional category: Time domain and time–frequency dynamics. International Journal of Psychophysiology, 99, 48-56. https://doi.org/10.1016/j.ijpsycho.2015.11.017

Armitage, S. G. (1946). An analysis of certain psychological tests used for the evaluation of brain injury. Psychological Monographs, 60(1), 1-48. https://doi.org/10.1037/h0093567

Baron-Cohen, S., Jolliffe, T., Mortimore, C., & Robertson, M. (1997). Another advanced test of theory of mind: Evidence from very high functioning adults with autism or Asperger syndrome. Journal of Child Psychology and Psychiatry, 38, 813-822. https://doi.org/10.1111/j.1469-7610.1997.tb01599.x

Bentin, S., Allison, T., Puce, A., Perez, E., & McCarthy, G. (1996). Electrophysiological studies of face perception in humans. Journal of Cognitive Neuroscience, 8(6), 551-565. https://doi.org/10.1162/jocn.1996.8.6.551

Boake, C. (2000). Édouard Claparède and the Auditory Verbal Learning Test. Journal of Clinical and Experimental Neuropsychology, 22(2), 286–292. https://doi.org/10.1076/1380-3395(200004)22:2;1-1;FT286

Bowman, L. C., Liu, D., Meltzoff, A. N., & Wellman, H. M. (2012). Neural correlates of belief-and desire-reasoning in 7-and 8-year-old children: an event-related potential study. Developmental Science, 15(5), 618-632. https://doi.org/10.1111/j.1467-7687.2012.01158.x

Cabeza, R. (2002). Hemispheric asymmetry reduction in older adults: the HAROLD Model. Psychology and Aging, 17(1), 85-100.

Canavarro, M. C. (1999). Inventário de sintomas psicopatológicos - BSI. In M. G. M. R. Simões, Testes e Provas Psicológicas em Portugal (Vol. II) (pp. 87-109). Braga: SHO/APPORT.

Castelli, I., Baglio, F., Blasi, V. A., Falini, A., Liverta-Sempio, O., & Marchetti, A. (2010). Effects of aging on mindreading ability through the eyes: an fMRI study. Neuropsychologia, 48(9), 2586–2594. https://doi.org/10.1016/j.neuropsychologia.2010.05.005

Cavaco, S., Gonçalves, A., Pinto, C., Almeida, E., Gomes, F., Moreira, I., … Teixeira-Pinto, A. (2013a). Semantic fluency and phonemic fluency: regression-based norms for the Portuguese population. Archives of Clinical Neuropsychology, 28(3), 262-271. https://doi.org/10.1093/arclin/act001

Cavaco, S., Gonçalves, A., Pinto, C., Almeida, E., Gomes, F., Moreira, I., … Teixeira-Pinto, A. (2013b). Trail Making Test: Regression-based norms for the Portuguese population. Archives of Clinical Neuropsychology, 28(2), 189-198. https://doi.org/10.1093/arclin/acs

Cavaco, S., Gonçalves, A., Pinto, C., Almeida, E., Gomes, F., Moreira, I., … Teixeira-Pinto, A. (2015). Auditory Verbal Learning Test in a large nonclinical Portuguese population. Applied Neuropsychology: Adult, 22(5), 321-331. https://doi.org/10.1080/23279095.2014.927767

Christov-Moore, L., Simpson, E. A., Coudé, G., Grigaityte, K., Iacoboni, M., & Ferrari, P. F. (2014). Empathy: gender effects in brain and behavior. Neuroscience & Biobehavioral Reviews, 46, 604-627.

Cowell, J. M., & Decety, J. (2015). Precursors to morality in development as a complex interplay between neural, socioenvironmental, and behavioral facets. Proceedings of the National Academy of Sciences, 112(41), 12657-12662. https://doi.org/10.1073/pnas.1508832112

Danker, J. F., Hwang, G. M., Gauthier, L., Geller, A., Kahana, M. J., & Sekuler, R. (2008). Characterizing the ERP Old–New effect in a short-term memory task. Psychophysiology, 45, 784-793. https://doi.org/10.1111/j.1469-8986.2008.00672.x

David, N., Aumann, C., Santos, N. S., Bewernick, B. H., Eickhoff, S. B., Newen, A., ... & Vogeley, K. (2008). Differential involvement of the posterior temporal cortex in mentalizing but not perspective taking. Social Cognitive and Affective Neuroscience, 3(3), 279-289. https://doi.org/10.1093/scan/nsn023

Davis, M. H. (1983). Measuring individual differences in empathy: Evidence for a multidimensional approach. Journal of Personality and Social Psychology, 44(1), 113. https://doi.org/10.1037/0022-3514.44.1.113

Decety, J., & Jackson, P. L. (2004). The functional architecture of human empathy. Behavioral and Cognitive Neuroscience Reviews, 3(2), 71-100.

Decety, J., Yang, C. Y., & Cheng, Y. (2010). Physicians down-regulate their pain empathy response: an event-related brain potential study. Neuroimage, 50(4), 1676-1682. https://doi.org/10.1016/j.neuroimage.2010.01.025

Delorme, A., & Makeig, S. (2004). An open source toolbox for analysis of single-trial EEG dynamics including independent component analysis. Journal of Neuroscience Methods, 134(1), 9–21. https://doi.org/10.1016/j.jneumeth.2003.10.009

Derntl, B., Finkelmeyer, A., Toygar, T. K., Hülsmann, A., Schneider, F., Falkenberg, D. I., & Habel, U. (2009). Generalized deficit in all core components of empathy in schizophrenia. Schizophrenia Research, 108(1), 197-206. https://doi.org/10.1016/j.schres.2008.11.009

Derogatis, L. R. (1982/1993). BSI: Brief Symptom Inventory (3rd ed.). Minneapolis: National Computers Systems.

Diéguez-Risco, T., Aguado, L., Albert, J., & Hinojosa, J. A. (2013). Faces in context: modulation of expression processing by situational information. Social Neuroscience, 8(6), 601–620. https://doi.org/10.1080/17470919.2013.834842

Diéguez-Risco, T., Aguado, L., Albert, J., & Hinojosa, J. A. (2015). Judging emotional congruency: Explicit attention to situational context modulates processing of facial expressions of emotion. Biological Psychology, 112, 27-38. https://doi.org/10.1016/j.biopsycho.2015.09.012

Dozolme, D., Brunet-Gouet, E., Passerieux, C., & Amorim, M. (2015). Neuroelectric correlates of pragmatic emotional incongruence processing: empathy matters. PLOS One, 10(6), e0129770. https://doi.org/10.1371/journal.pone.0129770

Fernandes, C., Pasion, R., Gonçalves, A. R., Ferreira-Santos, F., Barbosa, F., Martins, I. P., & Marques-Teixeira, J. (2018). Age differences in neural correlates of feedback processing after economic decisions under risk. Neurobiology of Aging, 65, 51-59.

Foti, D., & Hajcak, G. (2008). Deconstructing reappraisal: Descriptions preceding arousing pictures modulate the subsequent neural response. Journal of Cognitive Neuroscience, 20(6), 977-988. https://doi.org/10.1162/jocn.2008.20066

Freitas, S., Simões, M. R., & Santana, I. (2014). Montreal Cognitive Assessment (MoCA): Pontos de corte no Défice Cognitivo Ligeiro, Doença de Alzheimer, Demência Frontotemporal e Demência Vascular. Sinapse, 14, 18-30.

Gao, L., Xu, J., Zhang, B., Zhao, L., Harel, A., & Bentin, S. (2009). Aging effects on early-stage face perception: An ERP study. Psychophysiology, 46(5), 970-983. https://doi.org/10.1111/j.1469-8986.2009.00853.x

Gonçalves, A. R., Fernandes, C., Pasion, R., Ferreira-Santos, F., Barbosa, F., & Marques-Teixeira, J. (2018a). Effects of age on the identification of emotions in facial expressions: a meta-analysis. PeerJ, 6, e5278.

Gonçalves, A. R., Fernandes, C., Pasion, R., Ferreira-Santos, F., Barbosa, F., & Marques-Teixeira, J. (2018b). Emotion identification and aging: Behavioral and neural age-related changes. Clinical Neurophysiology, 129(5), 1020-1029. https://doi.org/10.1016/j.clinph.2018.02.128

Happé, F. G. (1994). An advanced test of theory of mind: Understanding of story characters' thoughts and feelings by able autistic, mentally handicapped, and normal children and adults. Journal of Autism and Developmental Disorders, 24, 129-154.

Henry, J. D., Phillips, L. H., Ruffman, T., & Bailey, P. E. (2013). A meta-analytic review of age differences in theory of mind. Psychology and Aging, 28(3), 826-839. https://doi.org/10.1037/a0030677

Herring, D. R., Taylor, J. H., White, K. R., & Crites, S. L. (2011). Electrophysiological responses to evaluative priming: the LPP is sensitive to incongruity. Emotion, 11(4), 794–806. https://doi.org/10.1037/a0022804

Hietanen, J. K., & Astikainen, P. (2013). N170 response to facial expressions is modulated by the affective congruency between the emotional expression and preceding affective picture. Biological Psychology, 92(2), 114-124. https://doi.org/10.1016/j.biopsycho.2012.10.005

Hinojosa, J. A., Mercado, F., & Carretié, L. (2015). N170 sensitivity to facial expression: A meta-analysis. Neuroscience & Biobehavioral Reviews, 55, 498-509. https://doi.org/10.1016/j.neubiorev.2015.06.002

Jackson, P. L., Meltzoff, A. N., & Decety, J. (2006). Neural circuits involved in imitation and perspective-taking. Neuroimage, 31(1), 429-439. https://doi.org/10.1016/j.neuroimage.2005.11.026

Kelley, W. M., Miezin, F. M., McDermott, K. B., Buckner, R. L., Raichle, M. E., Cohen, N. J., … Petersen, S. E. (1998). Hemispheric specialization in human dorsal frontal cortex and medial temporal lobe for verbal and nonverbal memory encoding. Neuron, 20(5), 927-936. https://doi.org/10.1016/S0896-6273(00)80474-2

Kisley, M. A., Wood, S., & Burrows, C. L. (2007). Looking at the sunny side of life: Age-related change in an event-related potential measure of the negativity bias. Psychological Science, 18(9), 838-843.

Komes, J., Schweinberger, S. R., & Wiese, H. (2014). Preserved fine-tuning of face perception and memory: evidence from the own-race bias in high-and low-performing older adults. Frontiers in Aging Neuroscience, 6, 60. https://doi.org/10.3389/fnagi.2014.00060

Li, W., & Han, S. (2010). Perspective taking modulates event-related potentials to perceived pain. Neuroscience Letters, 469(3), 328-332. https://doi.org/10.1016/j.neulet.2009.12.021

Liao, X., Wang, K., Lin, K., Chan, R. C., & Zhang, X. (2017). Neural temporal dynamics of facial emotion processing: age effects and relationship to cognitive function. Frontiers in Psychology, 8, 1110. https://doi.org/10.3389/fpsyg.2017.01110

Liu, D., Meltzoff, A. N., & Wellman, H. M. (2009). Neural Correlates of Belief-and Desire-Reasoning. Child Development, 80(4), 1163-1171. https://doi.org/10.1111/j.1467-8624.2009.01323.x

Liu, D., Sabbagh, M. A., Gehring, W. J., & Wellman, H. M. (2004). Decoupling beliefs from reality in the brain: an ERP study of theory of mind. NeuroReport, 15, 991-995. https://doi.org/10.1097/01.wnr.0000123388.87650.06

Mather, M., & Carstensen, L. L. (2005). Aging and motivated cognition: The positivity effect in attention and memory. Trends in Cognitive Sciences, 9(10), 496-502. https://doi.org/10.1016/j.tics.2005.08.005

McCleery, J. P., Surtees, A. D., Graham, K. A., Richards, J. E., & Apperly, I. A. (2011). The neural and cognitive time course of theory of mind. Journal of Neuroscience, 31(36), 12849-12854. https://doi.org/10.1523/JNEUROSCI.1392-11.2011

Meinhardt, J., Sodian, B., Thoermer, C., Döhnel, K., & Sommer, M. (2011). True-and false-belief reasoning in children and adults: An event-related potential study of theory of mind. Developmental Cognitive Neuroscience, 1(1), 67-76. https://doi.org/10.1016/j.dcn.2010.08.001

Moran, J. M. (2013). Lifespan development: The effects of typical aging on theory of mind. Behavioural Brain Research, 237, 32-40. https://doi.org/10.1016/j.bbr.2012.09.020

Moran, J. M., Jolly, E., & Mitchell, J. P. (2012). Social-cognitive deficits in normal aging. Journal of Neuroscience, 32(16), 5553-5561.

Moreira, H. S., Lima, C. F., & Vicente, S. G. (2014). Examining Executive Dysfunction with the Institute of Cognitive Neurology (INECO) Frontal Screening (IFS): normative values from a healthy sample and clinical utility in Alzheimer's disease. Journal of Alzheimer's Disease, 42(1), 261-273. https://doi.org/10.3233/JAD-132348

Morioka, S., Osumi, M., Shiotani, M., Nobusako, M., Maeoka, H., Okada, Y., … Matsuo, A. (2016). Incongruence between Verbal and Non-Verbal Information Enhances the Late Positive Potential. PloS One, 11, 1-11. https://doi.org/10.1371/journal.pone.0164633

Münte, T. F., Urbach, T. P., Duzel, E., & Kutas, M. (2000). Event-related brain potentials in the study of human cognition and neuropsychology. In F. Boller, G. J., & G. Rizzolatti, Handbook of Neuropsychology, Vol. 1, 2nd edition (pp. 1–97). Netherlands: Elsevier Science Publishers.

Musial, P. G., Baker, S. N., Gerstein, G. L., King, E. A., & Keating, J. G. (2002). Signal-to-noise ratio improvement in multiple electrode recording. Journal of Neuroscience Methods, 115(1), 29-43. https://doi.org/10.1016/S0165-0270(01)00516-7

Nashiro, K., Sakaki, M., & Mather, M. (2012). Age differences in brain activity during emotion processing: Reflections of age-related decline or increased emotion regulation? Gerontology, 58(2), 156-163. https://doi.org/10.1159/000328465

Nasreddine, Z. S., Phillips, N. A., Bédirian, V., Charbonneau, S. V., Whitehead, I., Collin, I., … Chertkow, H. (2005). The Montreal Cognitive Assessment, MoCA: a brief screening tool for mild cognitive impairment. Journal of the American Geriatrics Society, 53(4), 695-699. https://doi.org/10.1111/j.1532-5415.2005.53221.x

O’Brien, E., Konrath, S. H., Grühn, D., & Hagen, A. L. (2012). Empathic concern and perspective taking: Linear and quadratic effects of age across the adult life span. Journals of Gerontology Series B: Psychological Sciences and Social Sciences, 68(2), 168-175. https://doi.org/10.1093/geronb/gbs055

Pais-Ribeiro, J., Silva, I., Ferreira, T., Martins, A., Meneses, R., & Baltar, M. (2007). Validation study of a Portuguese version of the Hospital Anxiety and Depression Scale. Psychology, Health & Medicine, 12(2), 225-237. https://doi.org/10.1080/13548500500524088

Pasion, R., Fernandes, C., Gonçalves, A. R., Ferreira-Santos, F., Páscoa, R., Barbosa, F., & Marques-Teixeira, J. (2018). The effect of aging on the (mis)perception of intentionality: an ERP study. Social Neuroscience. https://doi.org/10.1080/17470919.2018.1430614

Perrin, F., Pernier, J., Bertrand, O., & Echallier, J. F. (1989). Spherical splines for scalp potential and current density mapping. Electroencephalography and Clinical Neurophysiology, 72(2), 184-187. https://doi.org/10.1016/0013-4694(89)90180-6

Renfroe, J. B., Bradley, M. M., Sege, C. T., & Bowers, D. (2016). Emotional modulation of the late positive potential during picture free viewing in older and young adults. PloS One, 11(9), e0162323. https://doi.org/10.1371/journal.pone.0162323

Reuter-Lorenz, P. A., & Cappell, K. A. (2008). Neurocognitive aging and the compensation hypothesis. Current Directions in Psychological Science, 17(3), 177-182.

Righart, R., & de Gelder, B. (2006). Context influences early perceptual analysis of faces: an electrophysiological study. Cerebral Cortex, 16(9), 1249–1257. https://doi.org/10.1093/cercor/bhj066

Righart, R., & de Gelder, B. (2008a). Rapid influence of emotional scenes on encoding of facial expressions: an ERP study. Social Cognitive and Affective Neuroscience, 3(3), 270–278. https://doi.org/10.1093/scan/nsn021

Righart, R., & de Gelder, B. (2008b). Recognition of facial expressions is influenced by emotional scene gist. Cognitive, Affective, and Behavioral Neuroscience, 8(3), 264–272. https://doi.org/10.3758/CABN.8.3.264

Rossion, B., & Jacques, C. (2008). Does physical interstimulus variance account for early electrophysiological face sensitive responses in the human brain? Ten lessons on the N170. Neuroimage, 39(4), 1959-1979. https://doi.org/10.1016/j.neuroimage.2007.10.011

Ruffman, T., Henry, J. D., Livingstone, V., & Phillips, L. H. (2008). A meta-analytic review of emotion recognition and aging: Implications for neuropsychological models of aging. Neuroscience & Biobehavioral Reviews, 32(4), 863-881. https://doi.org/10.1016/j.neubiorev.2008.01.001

Sabbagh, M. A., & Taylor, M. (2000). Neural correlates of theory-of-mind reasoning: an event-related potential study. Psychological Science, 11(1), 46-50.

Snaith, R. P., & Zigmond, A. P. (1994). The Hospital Anxiety and Depression Scale Manual. Windsor: NFER-Nelson.

Strauss, E., Sherman, E. M., & Spreen, O. (2006). A compendium of neuropsychological tests: Administration, norms, and commentary (3rd ed.). New York: Oxford University Press.

Torralva, T., Roca, M., Gleichgerrcht, E., Lopez, P., & Manes, F. (2009). INECO Frontal Screening (IFS): A brief, sensitive, and specific tool to assess executive functions in dementia. Journal of the International Neuropsychological Society, 15(5), 777-786. https://doi.org/10.1017/S1355617709990415

Tottenham, N., Tanaka, J. W., Leon, A. C., McCarry, T., Nurse, M., Hare, T. A., … Nelson, C. (2009). The NimStim set of facial expressions: judgments from untrained research participants. Psychiatry Research, 168(3), 242-249. https://doi.org/10.1016/j.psychres.2008.05.006

Wang, Y. W., Lin, C. D., Yuan, B., Huang, L., Zhang, W. X., & Shen, D. L. (2010). Person perception precedes theory of mind: an event related potential analysis. Neuroscience, 170(1), 238-246. https://doi.org/10.1016/j.neuroscience.2010.06.055

Wechsler, D. (2008). WMS - III. Escala de memória de Wechsler. Lisboa: CEGOC-TEA.

Weinberg, A., & Hajcak, G. (2010). Beyond good and evil: The time-course of neural activity elicited by specific picture content. Emotion, 10(6), 767.

Werheid, K., Alpay, G., Jentzsch, I., & Sommer, W. (2005). Priming emotional facial expressions as evidenced by event-related brain potentials. International Journal of Psychophysiology, 55(2), 209-219. https://doi.org/10.1016/j.ijpsycho.2004.07.006

Wood, S., & Kisley, M. A. (2006). The negativity bias is eliminated in older adults: age-related reduction in event-related brain potentials associated with evaluative categorization. Psychology and Aging, 21(4), 815. https://doi.org/10.1037/0882-7974.21.4.815

Wronka, E., & Walentowska, W. (2011). Attention modulates emotional expression processing. Psychophysiology, 48(8), 1047-1056. https://doi.org/10.1111/j.1469-8986.2011.01180.x

Yi, D., Chu, K., Ko, H., Lee, Y., Byun, M. S., Lee, J. H., & Lee, D. Y. (2017). Changes in perspective taking ability in aging process are dependent on gender and Alzheimer-related cognitive decline. Alzheimer's & Dementia: The Journal of the Alzheimer's Association, 13(7), P369. https://doi.org/10.1016/j.jalz.2017.06.319

Yoder, K. J., & Decety, J. (2014). Spatiotemporal neural dynamics of moral judgment: a high-density ERP study. Neuropsychologia, 60, 39-45. https://doi.org/10.1016/j.neuropsychologia.2014.05.022

Zeman, P. M., Till, B. C., Livingston, N. J., Tanaka, J. W., & Driessen, P. F. (2007). Independent component analysis and clustering improve signal-to-noise ratio for statistical analysis of event-related potentials. Clinical Neurophysiology, 118(12), 2591-2604. https://doi.org/10.1016/j.clinph.2007.09.001

Zhang, Q., Li, X., Gold, B. T., & Jiang, Y. (2010). Neural correlates of cross-domain affective priming. Brain Research, 1329, 142-151. https://doi.org/10.1016/j.brainres.2010.03.021

Acknowledgements

This research was supported by a grant from the BIAL Foundation. Carina Fernandes was supported by a doctoral fellowship from the Fundação para a Ciência e a Tecnologia (SFRH/BD/112101/2015). We thank Birgit Derntl for her permission to use the stimuli displayed as scenarios in the emotional perspective-taking task, and Nim Tottenham for her permission to use the face stimuli displayed as target FEE. Development of the MacBrain Face Stimulus Set was overseen by Nim Tottenham and supported by the John D. and Catherine T. MacArthur Foundation Research Network on Early Experience and Brain Development. Please contact Dr. Nim Tottenham for more information concerning the stimulus set (nlt7@columbia.edu; http://danlab7.wixsite.com/nimstim). We also thank Programa de Estudos Universitários para Seniores and Associação de Aposentados Pensionistas e Reformados for their help in participant recruitment.

Cite this article

Fernandes, C., Gonçalves, A.R., Pasion, R. et al. Age-related decline in emotional perspective-taking: Its effect on the late positive potential. Cogn Affect Behav Neurosci 19, 109–122 (2019). https://doi.org/10.3758/s13415-018-00648-1

DOI: https://doi.org/10.3758/s13415-018-00648-1