Abstract

Gaze stimuli can shape attention in a peculiar way as compared to non-social stimuli. For instance, in a spatial Stroop task, gaze stimuli elicit a reversed congruency effect (i.e., faster responses on incongruent than on congruent trials) as compared to arrows, for which a standard congruency effect emerges. Here, we tested whether the reversed congruency effect observed for gaze can emerge for other social signals such as pointing gestures. Participants discriminated the direction (left or right) indicated by gaze and pointing finger stimuli that appeared leftwards or rightwards with respect to a central fixation spot. Arrows were also employed as control non-social stimuli. A reversed congruency effect emerged for the gaze, whereas a standard congruency effect emerged for both the pointing finger and the arrows. This suggests that the reversed congruency effect is specific to gaze stimuli and does not embrace all social signals conveying spatial information.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

Introduction

Gaze stimuli can deeply shape visual attention (e.g., Capozzi & Ristic, 2018; Dalmaso et al., 2020a). One of the most investigated phenomena is the tendency to orient visual attention towards the same spatial location gazed at by others (a form of social attention known as ‘gaze cueing’; e.g., Friesen & Kingstone, 1998). At the behavioural level, several studies reported that, in healthy participants, the magnitude of gaze cueing can be virtually identical to the cueing effect elicited by arrow stimuli, which are non-social stimuli known to induce attentional shifts in an observer (e.g., Dalmaso et al., 2020b; Hermens & Walker, 2010; Kuhn & Kingstone, 2009). For some authors, the strong similarities between gaze and arrow cueing would reflect a domain-general mechanism involved in the processing of the two spatial cues (e.g., Callejas et al., 2014).

In recent years, different tasks have been proposed to investigate whether dissociations between gaze and arrow can emerge. One approach is to explore the processing of gaze and arrow stimuli when they are targets rather than cues for spatial attention, as in the case of a spatial Stroop task in which participants are asked to discriminate the direction indicated by arrow or eye-gaze stimuli (Cañadas & Lupiáñez, 2012). The stimulus (either arrow or gaze) can appear to the left or right of a central fixation point, although its spatial location is task-irrelevant. The typical results show that when the direction indicated by the arrow (e.g., left) is congruent with its spatial location (left), responses are faster than when it appears on the opposite location (right), consistent with the classic results reported in spatial Stroop tasks (see Lu & Proctor, 1995). However, this standard congruency effect (SCE) is generally reversed for gaze stimuli, with faster responses emerging when the direction indicated by the gaze is incongruent, rather than congruent, with its spatial location. This reversed congruency effect (RCE) appears as a robust phenomenon, replicated under different experimental conditions and by different groups (e.g., Chacón-Candia et al., 2020; Hemmerich et al., 2022; Ishikawa et al., 2021; Jones, 2015; Tanaka et al., 2023). Nevertheless, the origins of these effects are still debated. According to Marotta et al. (2018), possible explanations call into question different – yet complementary – mechanisms involved in social attention such as, for instance, ‘eye contact’: on incongruent trials, gaze stimuli would be perceived as actually looking at the participants, thus becoming particularly relevant signals that are able to capture attention more strongly than gaze stimuli looking elsewhere. Another explanation discussed by Marotta et al. (2018) relies on ‘joint attention’, which refers to the situation where two individuals are looking towards the same object. In this regard, attention would be oriented more strongly towards faces that establish an episode of joint attention with an observer rather than towards faces looking elsewhere (Edwards et al., 2015). In the spatial Stroop task, on incongruent trials, the peripheral stimulus is pointing towards the object to which participants’ attention is expected to be allocated (i.e., the fixation cross). This could explain why the RCE emerges for gaze but not for arrow stimuli, which, due to their symbolic nature, lack any social intentionality. In other words, as for the specific case of gaze stimuli, an incongruent trial creates a context in which someone else is looking towards the same location attended by the observer. That is, by definition, an episode of joint attention between two individuals (which does not take place in the case of arrows) that would cause an attentional prioritisation towards the joint gaze. More recently, Hemmerich et al. (2022) have proposed a joint distraction account according to which, on congruent trials, eye-gaze stimuli would be unique in their ability to withdraw attention from the relevant task area, thus increasing response times.

The social nature of the RCE has been also supported by the observation that such a phenomenon is influenced by facial expressions (e.g., Jones, 2015; Torres-Marín et al., 2017) and, unlike arrows, by individual social anxiety (Ishikawa et al., 2021). Critically, the RCE does not occur when non-social stimuli resembling faces are used and participants are asked to appraise them as social targets (Cañadas & Lupiáñez, 2012). However, eye gaze is not the only social signal communicating spatial meaning. In everyday interactions, humans make extensive use of pointing gestures, such as when they use the index finger to signal relevant objects in the environment. Indeed, pointing gestures are essential in both social development and communicative skills (e.g., Matthews et al., 2012), and can also orient attention in an observer (Ariga & Watanabe, 2009; Gregory & Hodgson, 2012). Moreover, like arrows, in healthy participants the spatial shifts of attention elicited by pointing gestures appear to be quantitatively similar to those elicited by eye-gaze stimuli (e.g., Cazzato et al., 2012; Dalmaso et al., 2015). Hence, pointing gestures can be considered reliable communicative social signals that can be used in conjunction with (or alternatively to) eye gaze to establish joint attention with others.

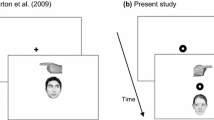

In the present study, we explored whether the RCE for gaze emerging from the spatial Stroop task can also be detected for pointing gestures, with the aim of testing whether the RCE is specific for eye-gaze stimuli or it extends to other social signals. We developed a modified version of the spatial Stroop task proposed by Marotta et al. (2018) in which three different target stimuli were used: Arrow, eye gaze and pointing finger. For the arrow stimulus we expected to observe the SCE, whereas for the eye-gaze stimulus we expected to observe the RCE, consistent with previous studies (e.g., Marotta et al., 2018). Most relevant is the analysis of the effect related to pointing finger stimuli. In this regard, two opposite hypotheses can be proposed. On the one hand, the presence of the RCE, like that expected for the gaze, would support the view that pointing fingers and eye gaze are processed similarly, as they both represent social cues that are informative about the allocation of attention of another individual. On the other hand, the presence of the SCE for pointing fingers (similar to that expected for arrows) would be consistent with the notion that not all social stimuli conveying a spatial meaning are processed similarly and that eye gaze is unique in shaping attentional responses.

Methods

Participants

The sample size was determined by considering the guidelines (Brysbaert & Stevens, 2018) suggested for linear mixed-effect models (see Results section): A minimum of 1,600 observations per experimental cell should be collected. Given our experimental design, the minimum required sample size was about 67 participants. Students at the University of Padova participated on a voluntary basis. Data collection was carried out online and was closed after about 1 week in which no new respondents were recorded, once verified that the minimum number of participants had been met. The final sample consisted of 192 participants (mean age = 25 years, SD = 9.18, 52 males, 16 left-handed). All participants signed an informed consent form. The study was conducted in accordance with the guidelines laid down in the Declaration of Helsinki.

Procedure

The experiment was programmed with PsychoPy and delivered online with Pavlovia, which guarantees reliable data (Bridges et al., 2020). Each trial started with a central black fixation cross (Arial font, 0.1 of normalised units), on a white background, lasting 1,000 ms (see Fig. 1). Then, the target stimulus could appear either leftwards or rightwards with respect to the central cross (± 0.2 of normalised units; timeout: 1,500 ms). Three targets were used: The face of a young adult male, with a neutral expression and gaze averted leftwards or rightwards (about 300 px width × 480 px height), a hand with the index finger pointing leftwards or rightwards (about 170 px width × 80 px height), and two black arrows both pointing leftwards or rightwards (each arrow was about 40 px width × 50 px height). For all targets, the parts conveying spatial information (e.g., eye gaze for the facial stimulus) were roughly the same size. The face stimulus was extracted from the standardised MR2 face database (Strohminger et al., 2016; see also Dalmaso et al., 2021). A single face was used because only one type of arrow and pointing finger stimuli were also utilised. Participants were asked to classify, as quickly and accurately as possible, the direction in which the target was pointing at by pressing the ‘F’ key (with the left index finger) for ‘left’ and the ‘K’ key (with the right index finger) for ‘right’. They were also asked to keep their eyes at fixation and to ignore the location (left or right) in which the target could appear, as it was task-irrelevant. Missing and incorrect responses were signalled by a 500-ms visual feedback (the words ‘TOO SLOW’ and ‘NO’, respectively) and correct responses were followed by a 500-ms blank screen. The three targets were presented in three distinct blocks selected in random order. Each experimental block included 48 trials and was preceded by eight practice trials.

Examples of trials and stimuli (not drawn to scale). Arrows (A), a pointing finger (B), or a face with averted gaze (C) appeared either leftwards or rightwards with respect to the fixation cross. Participants classified the direction (left or right) in which the stimulus was pointing at. Each target type was presented in a different block of trials. In all blocks, visual feedback was provided in case of slow/incorrect responses, whereas correct responses were followed by a blank screen

Results

Trials with missing and incorrect responses (0.5% and 2.9% of total trials, respectively) were discarded.Footnote 1 Correct trials with a latency shorter or longer than 3 SDs of each participant’s mean (calculated separately for each experimental condition) were considered outliers and discarded (0.97% of total trials). After data cleaning, there were, for each experimental condition, a minimum of 4,281 observations, and therefore statistical power was adequate (Brysbaert & Stevens, 2018).

Data were analysed through a linear mixed-effects model by using the R package ‘lme4’ (Bates et al., 2015). Fixed effects were Congruency, Target stimulus, and their interaction. Random effects were the intercepts for participants and the by-participant random slopes for the effects of Congruency and Target stimulus. This was the model associated with the best fitting data established by a likelihood ratio test, which compared different models with an increasing level of complexity (i.e., from the null to the saturated model). A Type 1 ANOVA (implementing Satterthwaite’s approximation for degrees of freedom) for linear mixed-effects models was then used to analyse the model. Effect sizes were calculated by using a standard procedure to get a more direct comparison with the previous works employing this task. When necessary, paired comparisons (Tukey’s HSD) for linear mixed-effects models were computed. Congruency yielded a significant effect, F(1, 187.7) = 154.972, p < .001, η2p = .442, due to shorter RTs on congruent trials (M = 511 ms, SE = 5.13) than on incongruent trials (M = 538 ms, SE = 5.11). Target stimulus yielded a significant effect, F(2, 189.2) = 36.672, p < .001, η2p = .178, due to similar RTs (p = .08) for arrows (M = 508 ms, SE = 5.41) and pointing fingers (M = 517 ms, SE = 5.67), while RTs for gaze were larger (M = 548 ms, SE = 5.97) than for any of the other two stimuli (ps < .001). The slower responses to eye-gaze stimuli are consistent with previous works (e.g., Marotta et al., 2018, 2019; Román-Caballero et al., 2021a), and could reflect the presence of social content as well as the greater perceptual complexity associated with eye-gaze stimuli. Importantly, the Congruency × Target stimulus interaction was also significant, F(1, 25684.7) = 207.374, p < .001, η2p = .387. The paired comparisons showed that RTs were shorter on congruent trials than on incongruent trials for both the arrow target (Mcong = 484 ms, SE = 5.55; Mincong = 531 ms, SE = 5.61, p < .001, d = -1.097) and the pointing finger target (Mcong = 497 ms, SE = 5.83; Mincong = 536 ms, SE = 5.82, p < .001, d = -.919), whereas the reversed pattern emerged for the eye-gaze target, with RTs being shorter on incongruent trials (M = 545 ms, SE = 6.07) than on congruent trials (M = 551 ms, SE = 6.16, p = .022, d = .142; see also Fig. 2).

General discussion

A spatial Stroop task was used to investigate whether pointing gestures would elicit the RCE documented for eye-gaze stimuli or, instead, the SCE similar to that observed for arrows. Participants were asked to discriminate the direction of eye gaze and pointing finger stimuli appearing leftwards or rightwards with respect to a central fixation cross. The spatial location of the stimuli was irrelevant to the task. Arrow stimuli were also used as a control, non-social condition. Consistent with previous studies (e.g., Hemmerich et al., 2022; Marotta et al., 2018), the RCE emerged for gaze stimuli whereas the SCE emerged for arrows. Importantly, pointing finger elicited the SCE. The robustness of the observed pattern has been further supported by a recent study addressing the effects elicited by pointing gestures in a spatial Stroop task (Bonventre & Marotta, 2023). Taken together, these results confirm the peculiarities of eye gaze in shaping visuo-perceptual mechanisms in this task. The observation that pointing fingers and arrows elicited similar effects provides additional insight into the comprehension of the RCE elicited by eye gaze. Indeed, it is important to recall that when arrows, pointing gestures and eye-gaze stimuli are used in tasks designed to study spatial cueing of attention, they generally lead to similar results in healthy participants (e.g., Cazzato et al., 2012; Dalmaso et al., 2015). One possibility is that using these stimuli as targets rather than accessory, task-irrelevant, spatial cues resulted in increasing the likelihood of a full processing of the three types of items, which in turn resulted in different behavioural results. More specifically, spatial cueing paradigms often rely on the explicit instruction to ignore cue stimuli, whereas in the spatial Stroop task the stimuli themselves provide the information for selecting the appropriate response.

Pointing gestures are social stimuli in that, similar to eye gaze (and unlike arrows), they typically belong to intentional agents. From a pointing finger, we may rapidly derive information about where another individual wants us to allocate our attention. Despite the strong social nature of this communicative cue, it is noteworthy that the observed pattern of findings paralleled the one that emerged in the case of arrows, further suggesting the likely uniqueness of eye gaze. One possibility is that, unlike eye gaze, pointing gestures and arrows may both serve a prescriptive function. Hence, while eye gaze provides information about the location presumably attended by others (with no explicit request to orient our attention accordingly), pointing gestures and arrows more typically signal the location where we are somehow ‘pushed’ to shift our attention. This common feature may somehow overrule the social meaning of pointing gestures and play a role in the observed differences with respect to eye stimuli in the behavioural data. An alternative possibility is that the different pattern concerning pointing gestures and eye gaze may in part reflect low-level differences in processing perceptual features of the two stimuli (e.g., figure-background segregation; Román-Caballero et al., 2021a, b). Because the empirical evidence is mixed and there are indeed findings not entirely consistent with this view (Cañadas & Lupiáñez, 2012), future research will need to address this issue more directly.

The notion that eye-gaze stimuli can shape visuo-attentional mechanisms peculiarly has been confirmed by a large bulk of evidence at both the ontogenetic and the phylogenetic levels. For example, humans appear to be already equipped at birth with specific mechanisms devoted to detect geometrical patterns resembling eye gaze (e.g., Reid et al., 2017). Sensitivity to eye-gaze stimuli can also be detected in several animal species, while the sensitivity to pointing gestures, although it can still be observed, is less evident and unambiguous (see Shepherd, 2010).

The relevance of eye-gaze stimuli over pointing gestures appears to be also confirmed by converging neuroimaging evidence, indicating the presence of a widespread neural architecture devoted to eye-gaze processing (e.g., Stephenson et al., 2021). In particular, the superior temporal sulcus (STS) appears as the brain area dedicated to the elaboration of the changeable aspects of faces, such as, precisely, eye-gaze direction, although there is also evidence reporting activation of STS even in response to pointing gestures (Sato et al., 2009). Unfortunately, the neural underpinnings of the congruency effect emerging from the spatial Stroop task with social stimuli have been little explored. In this regard, the only work conducted so far recorded electrophysiological measures (ERPs) in response to arrow and gaze stimuli and showed that, while a common interference modulation would emerge for gaze and arrow stimuli early after target onset, opposite conflict effects would be detectable at later stages of processing (Marotta et al., 2019). Future works based on neuroimaging techniques (e.g., fMRI) could hopefully provide new insights to better qualify the results emerging from the spatial Stroop task employed here.

Another avenue for future work would be the idea of employing the spatial Stroop task in specific populations characterized by atypical responses to eye gaze (e.g., attention-deficit hyperactivity disorder (ADHD); see, e.g., Marotta et al., 2013) or pointing gestures (e.g., anorexia nervosa; see, e.g., Dalmaso et al., 2015), to test the generalizability of the RCE for gaze also in clinical contexts.

To conclude, this work confirmed the presence of the RCE for eye gaze in a spatial Stroop task, which appears not to embrace other social stimuli conveying a spatial meaning, such as pointing gestures. This speaks in favour of the possible uniqueness of gaze for the cognitive mechanisms that support social interactions.

Notes

Although accuracy analyses are not particularly informative here given the very low percentage of errors, no evidence for a speed-accuracy tradeoff emerged in the data (see also Table 1).

References

Ariga, A., & Watanabe, K. (2009). What is special about the index finger?: The index finger advantage in manipulating reflexive attentional shift. Japanese Psychological Research, 51(4), 258–265. https://doi.org/10.1111/j.1468-5884.2009.00408.x

Bates, D., Mächler, M., Bolker, B. M., Walker, S. C. (2015). Fitting linear mixed-effects models using lme4. Journal of Statistical Software, 67(1). https://doi.org/10.18637/jss.v067.i01

Bonventre, S., & Marotta, A. (2023). Is the reversed congruency effect unique to the eye-gaze? Investigating the effects of finger pointing, eye-gaze and arrows stimuli on spatial interference. Frontiers in Cognition, 2, 1135435. https://doi.org/10.3389/fcogn.2023.11354

Bridges, D., Pitiot, A., MacAskill, M. R., & Peirce, J. W. (2020). The timing mega-study: Comparing a range of experiment generators, both lab-based and online. PeerJ, 8, e9414. https://doi.org/10.7717/peerj.9414

Brysbaert, M., & Stevens, M. (2018). Power analysis and effect size in mixed effects models: A tutorial. Journal of Cognition, 1(1), 1–20. https://doi.org/10.5334/joc.10

Callejas, A., Shulman, G. L., & Corbetta, M. (2014). Dorsal and ventral attention systems underlie social and symbolic cueing. Journal of Cognitive Neuroscience, 26(1), 63–80. https://doi.org/10.1162/jocn_a_00461

Cañadas, E., & Lupiáñez, J. (2012). Spatial interference between gaze direction and gaze location: A study on the eye contact effect. Quarterly Journal of Experimental Psychology, 65(8), 1586–1598. https://doi.org/10.1080/17470218.2012.659190

Capozzi, F., & Ristic, J. (2018). How attention gates social interactions. Annals of the New York Academy of Sciences, 1426(1), 179–198. https://doi.org/10.1111/nyas.13854

Cazzato, V., Macaluso, E., Crostella, F., & Aglioti, S. M. (2012). Mapping reflexive shifts of attention in eye-centered and hand-centered coordinate systems. Human Brain Mapping, 33(1), 165–178. https://doi.org/10.1002/hbm.21202

Chacón-Candia, J. A., Lupiáñez, J., Casagrande, M., & Marotta, A. (2020). Sex differences in attentional selection following gaze and arrow cues. Frontiers in Psychology, 11, 95. https://doi.org/10.3389/FPSYG.2020.00095/BIBTEX

Dalmaso, M., Castelli, L., Franchetti, L., Carli, L., Todisco, P., Palomba, D., & Galfano, G. (2015). Altered orienting of attention in anorexia nervosa. Psychiatry Research, 229(1–2), 318–325. https://doi.org/10.1016/j.psychres.2015.06.044

Dalmaso, M., Castelli, L., & Galfano, G. (2020a). Social modulators of gaze-mediated orienting of attention: A review. Psychonomic Bulletin & Review, 27(5), 833–855. https://doi.org/10.3758/s13423-020-01730-x

Dalmaso, M., Castelli, L., & Galfano, G. (2020b). Early saccade planning cannot override oculomotor interference elicited by gaze and arrow distractors. Psychonomic Bulletin & Review, 27(5), 990–997. https://doi.org/10.3758/s13423-020-01768-x

Dalmaso, M., Castelli, L., & Galfano, G. (2021). Increased gaze cueing of attention during COVID-19 lockdown. iScience, 24(11), 103283. https://doi.org/10.1016/j.isci.2021.103283

Edwards, S. G., Stephenson, L. J., Dalmaso, M., & Bayliss, A. P. (2015). Social orienting in gaze leading: A mechanism for shared attention. Proceedings of the Royal Society B: Biological Sciences, 282(1812), 20151141. https://doi.org/10.1098/rspb.2015.1141

Friesen, C. K., & Kingstone, A. (1998). The eyes have it! Reflexive orienting is triggered by nonpredictive gaze. Psychonomic Bulletin & Review, 5(3), 490–495. https://doi.org/10.3758/BF03208827

Gregory, N. J., & Hodgson, T. L. (2012). Giving subjects the eye and showing them the finger: Socio-biological cues and saccade generation in the anti-saccade task. Perception, 41(2), 131–147. https://doi.org/10.1068/p7085

Hemmerich, K., Narganes-Pineda, C., Marotta, A., Martín-Arévalo, E., Jiménez, L., & Lupiáñez, J. (2022). Gaze elicits social and nonsocial attentional orienting: An interplay of shared and unique conflict processing mechanisms. Journal of Experimental Psychology: Human Perception and Performance, 48(8), 824–841. https://doi.org/10.1037/XHP0001015

Hermens, F., & Walker, R. (2010). Gaze and arrow distractors influence saccade trajectories similarly. Quarterly Journal of Experimental Psychology, 63(11), 2120–2140. https://doi.org/10.1080/17470211003718721

Ishikawa, K., Oyama, T., & Okubo, M. (2021). The malfunction of domain-specific attentional process in social anxiety: Attentional process of social and non-social stimuli. Cognition and Emotion, 35(6), 1163–1174. https://doi.org/10.1080/02699931.2021.1935217

Jones, S. (2015). The mediating effects of facial expression on spatial interference between gaze direction and gaze location. Journal of General Psychology, 142(2), 106–117. https://doi.org/10.1080/00221309.2015.1009822

Kuhn, G., & Kingstone, A. (2009). Look away! Eyes and arrows engage oculomotor responses automatically. Attention, Perception, and Psychophysics, 71(2), 314–327. https://doi.org/10.3758/APP.71.2.314

Lu, C.-H., & Proctor, R. W. (1995). The influence of irrelevant location information on performance: A review of the Simon and spatial Stroop effects. Psychonomic Bulletin & Review, 2(2), 174–207. https://doi.org/10.3758/BF03210959

Marotta, A., Casagrande, M., Rosa, C., Maccari, L., Berloco, B., & Pasini, A. (2013). Impaired reflexive orienting to social cues in attention deficit hyperactivity disorder. European Child & Adolescent Psychiatry, 23, 649–657. https://doi.org/10.1007/s00787-013-0505-8

Marotta, A., Román-Caballero, R., & Lupiáñez, J. (2018). Arrows don’t look at you: Qualitatively different attentional mechanisms triggered by gaze and arrows. Psychonomic Bulletin & Review, 25, 2254–2259. https://doi.org/10.3758/s13423-018-1457-2

Marotta, A., Lupiáñez, J., Román-Caballero, R., Narganes-Pineda, C., & Martín-Arévalo, E. (2019). Are eyes special? Electrophysiological and behavioural evidence for a dissociation between eye-gaze and arrows attentional mechanisms. Neuropsychologia, 129, 146–152. https://doi.org/10.1016/j.neuropsychologia.2019.03.017

Matthews, D., Behne, T., Lieven, E., & Tomasello, M. (2012). Origins of the human pointing gesture: A training study. Developmental Science, 15(6), 817–829. https://doi.org/10.1111/J.1467-7687.2012.01181.X

Reid, V. M., Dunn, K., Young, R. J., Amu, J., Donovan, T., & Reissland, N. (2017). The human fetus preferentially engages with face-like visual stimuli. Current Biology, 27(12), 1825-1828.e3. https://doi.org/10.1016/j.cub.2017.05.044

Román-Caballero, R., Marotta, A., & Lupiáñez, J. (2021a). Target–background segregation in a spatial interference paradigm reveals shared and specific attentional mechanisms triggered by gaze and arrows. Journal of Experimental Psychology: Human Perception and Performance, 47(11), 1561–1573. https://doi.org/10.1037/XHP0000953

Román-Caballero, R., Marotta, A., & Lupiáñez, J. (2021b). Spatial interference triggered by gaze and arrows. The role of target background on spatial interference. Psicológica, 42, 192–209. https://doi.org/10.2478/psicolj-2021-0010

Sato, W., Kochiyama, T., Uono, S., & Yoshikawa, S. (2009). Commonalities in the neural mechanisms underlying automatic attentional shifts by gaze, gestures, and symbols. NeuroImage, 45(3), 984–992. https://doi.org/10.1016/j.neuroimage.2008.12.052

Shepherd, S. V. (2010). Following gaze: Gaze-following behavior as a window into social cognition. Frontiers in Integrative Neuroscience, 4, 5. https://doi.org/10.3389/fnint.2010.00005

Stephenson, L. J., Edwards, S. G., & Bayliss, A. P. (2021). From gaze perception to social cognition: The Shared-Attention System. Perspectives on Psychological Science, 16(3), 553–576. https://doi.org/10.1177/1745691620953773

Strohminger, N., Gray, K., Chituc, V., Heffner, J., Schein, C., & Heagins, T. B. (2016). The MR2: A multiracial, mega-resolution database of facial stimuli. Behavior Research Methods, 48(3), 1197–1204. https://doi.org/10.3758/s13428-015-0641-9

Tanaka, Y., Ishikawa, K., Oyama, T., & Okubo, M. (2023). Face inversion does not affect the reversed congruency effect of gaze. Psychonomic Bulletin & Review, 30, 974–982. https://doi.org/10.3758/S13423-022-02208-8

Torres-Marín, J., Carretero-Dios, H., Acosta, A., & Lupiáñez, J. (2017). Eye contact and fear of being laughed at in a gaze discrimination task. Frontiers in Psychology, 8, 1954. https://doi.org/10.3389/fpsyg.2017.01954

Funding

Open access funding provided by Università degli Studi di Padova within the CRUI-CARE Agreement.

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Open practices statement

Experiment code, stimuli and data are publicly available on the Open Science Framework at https://doi.org/10.17605/OSF.IO/U7FVY. The study was not preregistered.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Dalmaso, M., Galfano, G. & Castelli, L. Are eyes special? Gaze, but not pointing gestures, elicits a reversed congruency effect in a spatial Stroop task. Atten Percept Psychophys 85, 2547–2552 (2023). https://doi.org/10.3758/s13414-023-02774-6

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.3758/s13414-023-02774-6