Abstract

It is well known that time-dependent Hamilton–Jacobi–Isaacs partial differential equations (HJ PDEs) play an important role in analyzing continuous dynamic games and control theory problems. An important tool for such problems when they involve geometric motion is the level set method (Osher and Sethian in J Comput Phys 79(1):12–49, 1988). This was first used for reachability problems in Mitchell et al. (IEEE Trans Autom Control 50(171):947–957, 2005) and Mitchell and Tomlin (J Sci Comput 19(1–3):323–346, 2003). The cost of these algorithms and, in fact, of all PDE numerical approximations is exponential in the space dimension and time. In Darbon (SIAM J Imaging Sci 8(4):2268–2293, 2015), some connections between HJ PDEs and convex optimization in many dimensions are presented. In this work, we propose and test methods for solving a large class of HJ PDEs relevant to optimal control problems without the use of grids or numerical approximations. Rather, we use the classical Hopf formulas for solving initial value problems for HJ PDEs (Hopf in J Math Mech 14:951–973, 1965). We have noticed that if the Hamiltonian is convex and positively homogeneous of degree one (the latter holds for all geometrically based level set motion and for control and differential game problems), then very fast methods exist to solve the resulting optimization problem. This is closely related to fast methods for solving problems in compressive sensing based on \(\ell _1\) optimization (Goldstein and Osher in SIAM J Imaging Sci 2(2):323–343, 2009; Yin et al. in SIAM J Imaging Sci 1(1):143–168, 2008). We seem to obtain methods which are polynomial in the dimension. Our algorithm is very fast, requires very low memory and is totally parallelizable. We can evaluate the solution and its gradient in very high dimensions at \(10^{-4}\)–\(10^{-8}\) s per evaluation on a laptop.
We carefully explain how to compute numerically the optimal control from the numerical solution of the associated initial value HJ PDE for a class of optimal control problems. We show that our algorithms compute all the quantities we need to obtain the controller easily. In addition, as a step often needed in this procedure, we have developed a new and equally fast way to find, in very high dimensions, the closest point y lying in the union of a finite number of compact convex sets \(\Omega \) to any point x exterior to \(\Omega \). We can also compute the distance to these sets much faster than Dijkstra type “fast methods,” e.g., Dijkstra (Numer Math 1:269–271, 1959). The term “curse of dimensionality” was coined by Bellman (Adaptive control processes, a guided tour. Princeton University Press, Princeton, 1961; Dynamic programming. Princeton University Press, Princeton, 1957), when considering problems in dynamic optimization.


1 Introduction to Hopf formulas, HJ PDEs and level set evolutions

We briefly introduce Hamilton–Jacobi equations with initial data and the Hopf formulas to represent the solution. We give some examples to show the potential of our approach, including examples to perform level set evolutions.

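Given a continuous function \(H:\mathbb {R}^n\rightarrow \mathbb {R}\) bounded from below by an affine function, we consider the HJ PDE

\[ \frac{\partial \varphi }{\partial t}(x,t) + H(\nabla _x \varphi (x,t)) = 0 \quad \text{in } \mathbb {R}^n\times (0,+\infty ), \tag{1} \]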

where \(\frac{\partial \varphi }{\partial t} \) and \(\nabla _x \varphi \) respectively denote the partial derivative with respect to t and the gradient vector with respect to x of the function \(\varphi \). We are also given some initial data

\[ \varphi (x,0) = J(x) \quad \text{for all } x \in \mathbb {R}^n, \tag{2} \]

where \(J:\mathbb {R}^n\rightarrow \mathbb {R}\) is convex. For the sake of simplicity, we only consider functions \(\varphi \) and J that are finite everywhere. Results presented in this paper can be generalized for \(H:\mathbb {R}^n\rightarrow \mathbb {R}\cup \{ +\infty \}\) and \(J:\mathbb {R}^n\rightarrow \mathbb {R}\cup \{ +\infty \}\) under suitable assumptions. We also extend our results to an interesting class of nonconvex initial data in Sect. 2.2.

We wish to compute the viscosity solution [11, 12] for a given \(x \in \mathbb {R}^n\) and \(t > 0\).

Using grid-based numerical approximations is essentially impossible for \(n \ge 4\): the number of grid points, and hence the complexity of a finite difference scheme, is exponential in n. This remains true even with sophisticated methods such as ENO, WENO or DG [31, 42, 52]; high-order accuracy is no cure for this curse of dimensionality [3, 4].

We propose and test a new approach, borrowing ideas from convex optimization, in particular from the \(\ell _1\)-regularized convex problems [25, 51] used in compressive sensing [6, 17]. It has been shown experimentally that these \(\ell _1\)-based methods converge quickly when Bregman and split Bregman iterative methods are used. These are essentially the same as augmented Lagrangian methods [26] and the alternating direction method of multipliers [24]. These and related first-order and splitting techniques have enjoyed a renaissance since they were recently used very successfully for these \(\ell _1\) and related problems [25, 51]. One explanation for their rapid convergence is the “error forgetting” property discovered and analyzed in [43] for \(\ell _1\) regularization.

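We will solve the initial value problem (1)–(2) without discretization, using the Hopf formula [30]

\begin{align} \varphi (x,t) &= (J^* + tH)^*(x) \tag{3}\\ &= -\min _{v\in \mathbb {R}^n} \left\{ J^*(v) + tH(v) - \langle x, v\rangle \right\} , \tag{4} \end{align}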

where the Fenchel–Legendre transform \(f^*:\mathbb {R}^n\rightarrow \mathbb {R}\cup \{ +\infty \}\) of a convex, proper, lower semicontinuous function \(f:\mathbb {R}^n\rightarrow \mathbb {R}\cup \{ +\infty \}\) is defined by [19, 29, 44]

\[ f^*(v) = \sup _{x\in \mathbb {R}^n} \left\{ \langle v, x\rangle - f(x)\right\} , \tag{5} \]

where \(\langle \cdot , \cdot \rangle \) denotes the \(\ell _2(\mathbb {R}^n)\) inner product. We also define for any \(v \in \mathbb {R}^n\), \(\Vert v\Vert _p = \left( \sum _{i=1}^n |v_i|^p\right) ^{\frac{1}{p}}\) for \(1 \le p < + \infty \) and \(\Vert v\Vert _\infty = \max _{i=1,\ldots ,n} |v_i|\). Note that since \(J:\mathbb {R}^n\rightarrow \mathbb {R}\) is convex, \(J^*\) is 1-coercive [29, Prop. 1.3.9, p. 46], i.e., \(\lim _{\Vert x\Vert \rightarrow + \infty } \frac{J^*(x)}{\Vert x\Vert _2}= +\infty \). When the gradient \(\nabla _x \varphi (x,t)\) exists, it is precisely the unique minimizer of (4); in addition, the gradient will also provide the optimal control considered in this paper (see Sect. 2). For instance, this holds for any \(x\in \mathbb {R}^n\) and \(t>0\) when \(H : \mathbb {R}^n\rightarrow \mathbb {R}\) is convex and \(J:\mathbb {R}^n\rightarrow \mathbb {R}\) is strictly convex, differentiable and 1-coercive. The Hopf formula only requires the convexity of J and the continuity of H, but we will often require that H in (1) is also convex.

We note that the case \(H = \Vert \cdot \Vert _1\) corresponds to \(H(\nabla _x\varphi (x,t)) = \sum _{i=1}^n \left| \frac{\partial }{\partial x_i}\varphi (x,t)\right| \), used, e.g., to compute the \(\ell _\infty \) distance to the zero level set of \(x\mapsto J(x)\) [15]. This optimization is closely related to the \(\ell _1\) type minimization [6, 17],

where A is an \(m \times n\) matrix with real entries, \(m < n\), and \(\lambda > 0 \), arising in compressive sensing. Let \(\Omega \subset \mathbb {R}^n\) be a closed convex set. We denote by \(\mathrm{int}\, \Omega \) the interior of \(\Omega \). If \(J(x) < 0\) for \( x \in \mathrm{int}\, \Omega \), \(J(x) > 0\) for \(x \in (\mathbb {R}^n{\setminus } \Omega )\) and \(J(x) = 0\) for \(x \in (\Omega {\setminus } \mathrm{int}\, \Omega )\), then the solution \(\varphi \) of (1)–(2) with \(H = \Vert \cdot \Vert _1\) has the property that for any \(t>0\) the set \(\{x \in \mathbb {R}^n\;|\; \varphi (x,t) = 0\}\) is precisely the set of points in \(\mathbb {R}^n{\setminus } \Omega \) whose \(\ell _\infty \) distance to \(\Omega \) is equal to t. (The relevant distance is the one whose unit ball is \(\partial \Vert \cdot \Vert _1(0) = [-1,1]^n\), i.e., the unit ball of \(\Vert \cdot \Vert _\infty \).) This is an example of the use of the level set method [41].

Similarly, if we take \(H = \Vert \cdot \Vert _2\), then for any \(t>0\) the resulting zero level set of \(x \mapsto \varphi (x,t)\) consists of the points in \(\mathbb {R}^n{\setminus } \Omega \) whose Euclidean distance to \(\Omega \) is equal to t. This fact will be useful later when we compute the projection of a point \(x \in \mathbb {R}^n\) onto a compact convex set \(\Omega \).

We present here two simple but illustrative examples to show the potential power of our approach. Timing results are presented in Sect. 4 and show that we can compute solutions of some HJ PDEs in fairly high dimensions at a rate below a millisecond per evaluation on a standard laptop.

Let \(H = \Vert \cdot \Vert _1\) and \(J(x) = \frac{1}{2} \left( \sum _{i=1}^n \frac{x_i^2}{a_i^2} - 1\right) \) with \(a_i > 0\) for \(i=1,\ldots ,n\). Then, for a given \(t > 0\), the set \(\{x \in \mathbb {R}^n\;|\; \varphi (x,t) = 0\}\) will be precisely the set of points at \(\ell _\infty \) distance t outside of the ellipsoid determined by \(\{ x \in \mathbb {R}^n\;|\; J(x) \le 0\}\). Following [29, Prop. 1.3.1, p. 42] it is easy to see that \(J^*(v) = \frac{1}{2} \sum _{i=1}^n a_i^2 v_i^2 + \frac{1}{2}\). So:

\[ \varphi (x,t) = -\min _{v\in \mathbb {R}^n} \left\{ \frac{1}{2} \sum _{i=1}^n a_i^2 v_i^2 + \frac{1}{2} + t\Vert v\Vert _1 - \langle x, v\rangle \right\} . \]

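We note that the function to be minimized decouples into scalar minimizations of the form

\[ \min _{v_i\in \mathbb {R}} \left\{ \frac{a_i^2}{2}\, v_i^2 + t|v_i| - x_i v_i \right\} , \qquad i=1,\ldots ,n. \]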

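The unique minimizer is \(v_i = \frac{1}{a_i^2}\left( {\hbox {shrink}}_1(x,t)\right) _i\), where \({\hbox {shrink}}_1\) is the classical soft thresholding operator [14, 22, 33] defined for any component \(i=1,\ldots ,n\) by

\[ \left( {\hbox {shrink}}_1(x,t)\right) _i = \begin{cases} x_i - t & \text{if } x_i > t,\\ 0 & \text{if } |x_i| \le t,\\ x_i + t & \text{if } x_i < -t. \end{cases} \tag{6} \]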

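Therefore, for any \(x\in \mathbb {R}^n\), any \(t>0\) and any \(i=1,\ldots ,n\) we have

\[ \frac{\partial \varphi }{\partial x_i}(x,t) = \frac{1}{a_i^2}\left( {\hbox {shrink}}_1(x,t)\right) _i \]

and

\[ \varphi (x,t) = \frac{1}{2} \sum _{i \in \{1,\ldots ,n\}{\setminus } B(t)} \frac{(|x_i| - t)^2}{a_i^2} - \frac{1}{2}. \]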

Here \(B(t) \subseteq \{1,\ldots ,n\}\) consists of the indices for which \(|x_i| \le t\), and thus \(\{1,\ldots ,n\} {\setminus } B(t)\) corresponds to the indices for which \(|x_i| > t\); the zero level set moves outwards in this elegant fashion.
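As a minimal illustration of this closed-form solution, the following Python sketch evaluates \(\varphi (x,t)\) and \(\nabla _x\varphi (x,t)\) for \(H = \Vert \cdot \Vert _1\) and the ellipsoidal initial data J above. The function names `shrink1` and `hopf_ellipsoid_l1` are ours (hypothetical), and plain lists are used instead of an array library so that the sketch is self-contained.

```python
import math


def shrink1(x, t):
    """Soft thresholding (6), applied componentwise: the proximal map of t*||.||_1."""
    return [math.copysign(max(abs(xi) - t, 0.0), xi) for xi in x]


def hopf_ellipsoid_l1(x, t, a):
    """Closed-form Hopf solution for H = ||.||_1 and J(x) = 0.5*(sum_i x_i^2/a_i^2 - 1).

    Returns (phi(x, t), grad_x phi(x, t)); the gradient is the minimizer
    v_i = shrink1(x, t)_i / a_i^2 of the decoupled scalar problems.
    """
    s = shrink1(x, t)
    grad = [si / ai ** 2 for si, ai in zip(s, a)]
    # Only indices with |x_i| > t (outside B(t)) give nonzero terms in the sum.
    phi = 0.5 * sum((si / ai) ** 2 for si, ai in zip(s, a)) - 0.5
    return phi, grad


# Soft thresholding maps x = (2.3, 0.1, -0.2) to (2, 0, 0), a point of the ellipsoid
# with semi-axes a = (2, 1, 0.5), so phi(x, 0.3) vanishes up to rounding.
phi, grad = hopf_ellipsoid_l1([2.3, 0.1, -0.2], 0.3, [2.0, 1.0, 0.5])
```

Note that the dimension only enters through the length of the lists: the cost is linear in n.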

We note that in the above case we were able to compute the solution analytically and the dimension n played no significant role. Of course this is rather a special problem, but this gives us some idea of what to expect in more complicated cases, discussed in Sect. 3.

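We will often need another shrink operator, which arises when we solve, for \(\alpha > 0\) and \(x \in \mathbb {R}^n\), the optimization problem

\[ \min _{v\in \mathbb {R}^n} \left\{ \alpha \Vert v\Vert _2 + \frac{1}{2} \Vert v - x\Vert _2^2 \right\} . \]

Its unique minimizer is given by

\[ {\hbox {shrink}}_2(x,\alpha ) = \begin{cases} x - \alpha \, \frac{x}{\Vert x\Vert _2} & \text{if } \Vert x\Vert _2 > \alpha ,\\ 0 & \text{if } \Vert x\Vert _2 \le \alpha , \end{cases} \tag{7} \]

and thus its optimal value corresponds to the Huber function (see [50] for instance)

\[ \begin{cases} \alpha \Vert x\Vert _2 - \frac{\alpha ^2}{2} & \text{if } \Vert x\Vert _2 > \alpha ,\\ \frac{1}{2}\Vert x\Vert _2^2 & \text{if } \Vert x\Vert _2 \le \alpha . \end{cases} \]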
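Both closed forms are easy to check numerically. The sketch below (function names are ours) implements \({\hbox {shrink}}_2\) from (7) and the Huber value, and cross-checks the latter against the objective evaluated at the minimizer.

```python
import math


def shrink2(x, alpha):
    """Minimizer (7) of v -> alpha*||v||_2 + 0.5*||v - x||_2^2."""
    nx = math.sqrt(sum(xi * xi for xi in x))
    if nx <= alpha:
        return [0.0] * len(x)
    return [(1.0 - alpha / nx) * xi for xi in x]


def huber(x, alpha):
    """Optimal value of the same problem (the Huber function)."""
    nx = math.sqrt(sum(xi * xi for xi in x))
    return 0.5 * nx * nx if nx <= alpha else alpha * nx - 0.5 * alpha * alpha


def objective(v, x, alpha):
    """alpha*||v||_2 + 0.5*||v - x||_2^2, used to cross-check the two closed forms."""
    nv = math.sqrt(sum(vi * vi for vi in v))
    return alpha * nv + 0.5 * sum((vi - xi) ** 2 for vi, xi in zip(v, x))


x, alpha = [3.0, 4.0], 1.0
v = shrink2(x, alpha)  # approximately [2.4, 3.2]
```

Unlike \({\hbox {shrink}}_1\), this operator shrinks the whole vector radially, so it does not decouple across components.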

To move the unit sphere outwards with normal velocity 1, we use the following formula

and, unsurprisingly, the zero level set of \(x \mapsto \varphi (x,t)\) is the set of points x satisfying \(\Vert x\Vert _2 = t+1\), for \(t>0\).
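This can be reproduced with the Huber function. The sketch below assumes \(H = \Vert \cdot \Vert _2\) and the initial data \(J(x) = \frac{1}{2}(\Vert x\Vert _2^2 - 1)\) (our assumption for a level set function of the unit sphere); then \(J^*(v) = \frac{1}{2}(\Vert v\Vert _2^2 + 1)\) and completing the square in the Hopf formula expresses \(\varphi \) through the Huber value with parameter t. The name `phi_sphere` is hypothetical.

```python
import math


def phi_sphere(x, t):
    """Hopf solution for H = ||.||_2 and the assumed initial data J(x) = 0.5*(||x||_2^2 - 1).

    Since J*(v) = 0.5*||v||^2 + 0.5, completing the square in the Hopf formula gives
    phi(x, t) = 0.5*||x||^2 - 0.5 - huber_t(x).
    """
    r = math.sqrt(sum(xi * xi for xi in x))
    huber = 0.5 * r * r if r <= t else t * r - 0.5 * t * t
    return 0.5 * r * r - 0.5 - huber


# For ||x||_2 > t this equals 0.5*(||x||_2 - t)^2 - 0.5, which vanishes exactly when ||x||_2 = t + 1.
print(phi_sphere([1.5, 0.0, 0.0], 0.5))  # 0.0
```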

These two examples will be generalized below so that we can, with extreme speed, compute the signed distance, either Euclidean, Manhattan or various generalizations, to the boundary of the union of a finite collection of compact convex sets.

The remainder of this paper is organized as follows: Sect. 2 contains an introduction to optimal control and its connection to HJ PDE. Section 3 gives the details of our numerical methods. Section 4 presents numerical results with some details. We draw some concluding remarks and give future plans in Sect. 5. The “Appendix” links our approach to the concepts of gauge and support functions in convex analysis.

2 Introduction to optimal control

First, we give a short introduction to optimal control and its connection to the HJ PDE (11). We also introduce positively homogeneous of degree one Hamiltonians and describe their relationship to optimal control problems. We explain how to recover the optimal control from the solution of the HJ PDE. An “Appendix” describes further connections between these Hamiltonians and gauges in convex analysis. Second, we present some extensions of our work.

2.1 Optimal control and HJ PDE

We are largely following the discussion in [16], see also [20], about optimal control and its link with HJ PDE. We briefly present it formally, and we specialize it to the cases considered in this paper.

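Suppose we are given a fixed terminal time \(T \in \mathbb {R}\) and an initial time \(t < T\) along with an initial point \(x\in \mathbb {R}^n\). We consider the Lipschitz solution \(\mathrm {x}:[t, T] \rightarrow \mathbb {R}^n\) of the following ordinary differential equation

\[ \begin{cases} \frac{d\mathrm {x}}{ds}(s) = f(\beta (s)) & \text{for } s \in (t,T),\\ \mathrm {x}(t) = x, \end{cases} \tag{9} \]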

where \(f: C \rightarrow \mathbb {R}^n\) is a given bounded Lipschitz function and C some given compact set of \(\mathbb {R}^n\). The solution of (9) is affected by the measurable function \(\beta :(-\infty , T] \rightarrow C\), which is called a control. We denote \(\mathcal {A}= \{ \beta :(-\infty , T]\rightarrow C \; |\; \beta \mathrm{ is measurable}\}\). For a given initial time \(t<T\), \(x\in \mathbb {R}^n\) and control \(\beta \), we consider the functional

\[ K(x,t,\beta ) = \int _t^T L(\beta (s))\, ds + J(\mathrm {x}(T)), \]

where \(\mathrm {x}\) is the solution of (9). We assume that the terminal cost \(J:\mathbb {R}^n\rightarrow \mathbb {R}\) is convex. We also assume that the running cost \(L: \mathbb {R}^n\rightarrow \mathbb {R}\cup \{ + \infty \}\) is proper, lower semicontinuous, convex, 1-coercive and \(\mathrm{dom}\, L \subseteq C\), where \(\mathrm{dom}\, L\) denotes the domain of L defined by \(\mathrm{dom}\, L= \{x\in \mathbb {R}^n\,|\, L(x) < +\infty \}\). The minimization of K among all possible controls defines the value function \(v:\mathbb {R}^n\times (-\infty ,\, T] \rightarrow \mathbb {R}\) given for any \(x\in \mathbb {R}^n\) and any \(t < T\) by

\[ v(x,t) = \inf _{\beta \in \mathcal {A}} K(x,t,\beta ). \tag{10} \]

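The value function (10) satisfies the dynamic programming principle for any \(x \in \mathbb {R}^n\), any \(t < T\) and any \(\tau \in (t,T)\)

\[ v(x,t) = \inf _{\beta \in \mathcal {A}} \left\{ \int _t^\tau L(\beta (s))\, ds + v(\mathrm {x}(\tau ),\tau )\right\} . \]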

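The value function v also satisfies the following Hamilton–Jacobi–Bellman equation with terminal value

\[ \begin{cases} \frac{\partial v}{\partial t}(x,t) + \min _{c\in C} \left\{ \langle f(c), \nabla _x v(x,t)\rangle + L(c)\right\} = 0 & \text{in } \mathbb {R}^n\times (-\infty ,T),\\ v(x,T) = J(x) & \text{for all } x\in \mathbb {R}^n. \end{cases} \]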

Note that the control \(\beta (t)\) at time \(t \in (-\infty ,T)\) in (9) satisfies \(\langle \nabla _x v(x,t), f(\beta (t)) \rangle + L(\beta (t)) = \min _{c\in C}\{\langle \nabla _x v(x,t), f(c) \rangle + L(c)\}\) whenever \(v(\cdot ,t)\) is differentiable.

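Consider the function \(\varphi : \mathbb {R}^n\times [0, +\infty ) \rightarrow \mathbb {R}\) defined by \(\varphi \left( x,t \right) = v(x,T-t)\). We have that \(\varphi \) is the viscosity solution of

\[ \begin{cases} \frac{\partial \varphi }{\partial t}(x,t) + H(\nabla _x \varphi (x,t)) = 0 & \text{in } \mathbb {R}^n\times (0,+\infty ),\\ \varphi (x,0) = J(x) & \text{for all } x\in \mathbb {R}^n, \end{cases} \tag{11} \]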

where the Hamiltonian \(H: \mathbb {R}^n\rightarrow \mathbb {R}\cup \{ +\infty \}\) is defined by

\[ H(p) = \sup _{c\in C} \left\{ \langle -f(c), p\rangle - L(c)\right\} . \tag{12} \]

We note that the above HJ PDE is the same as the one we consider throughout this paper. In this paper, we use the Hopf formula (3) to solve (11). We wish the Hamiltonian \(H:\mathbb {R}^n\rightarrow \mathbb {R}\) to be not only convex but also positively 1-homogeneous, i.e., for any \(p\in \mathbb {R}^n\) and any \(\alpha > 0\)

\[ H(\alpha p) = \alpha H(p). \tag{13} \]

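We proceed as follows. Let us first introduce the characteristic function \(\mathcal {I}_\Omega :\mathbb {R}^n\rightarrow \mathbb {R}\cup \{+\infty \}\) of the set \(\Omega \), which is defined by

\[ \mathcal {I}_\Omega (x) = \begin{cases} 0 & \text{if } x\in \Omega ,\\ +\infty & \text{otherwise.} \end{cases} \]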

We recall that C is a compact convex set of \(\mathbb {R}^n\). We take \(f(c) = -c\) for any \(c \in C\) in (9) and

\[ L = \mathcal {I}_C. \]

Then, (12) gives the Hamiltonian \(H:\mathbb {R}^n\rightarrow \mathbb {R}\) defined by

\[ H(p) = \max _{c\in C} \langle c, p\rangle . \tag{14} \]

Note that the right-hand side of (14) is called the support function of the closed nonempty convex set C in convex analysis (see, e.g., [28, Def. 2.1.1, p. 208]). We check that H defined by (14) satisfies our requirements. Since C is bounded, we can invoke [28, Prop. 2.1.3, p. 208], which yields that the Hamiltonian is indeed finite everywhere. Combining [28, Def. 1.1.1, p. 197] and [28, Prop. 2.1.2, p. 208], we obtain that H is positively 1-homogeneous and convex. Of course, the Hamiltonian can also be expressed in terms of the Fenchel–Legendre transform; we have for any \(p \in \mathbb {R}^n\)

\[ H(p) = \mathcal {I}_C^*(p), \]

where we recall that the Fenchel–Legendre transform is defined by (5). The Hamiltonian is nonnegative when C contains the origin, i.e., \(0 \in C\), since then \(H(p) \ge \langle 0, p\rangle = 0\); this property is related to gauges, a connection described in the “Appendix.”

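Note that the control \(\beta (t)\) for \(t \in (-\infty ,T)\) in (9) is recovered from the solution \(\varphi \) of (11) since we have

\[ \langle \nabla _x \varphi (x,T-t), \beta (t)\rangle = \max _{c\in C} \langle \nabla _x \varphi (x,T-t), c\rangle = H(\nabla _x \varphi (x,T-t)) \]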

whenever \(\varphi (\cdot ,T-t)\) is differentiable. For any \(p \in \mathbb {R}^n\) such that \(\nabla H(p)\) exists, we also have \(H(p) = \langle p, \nabla H(p) \rangle \), and \(\nabla H(p)\) is then the unique maximizer of \(c \mapsto \langle c, p\rangle \) over C. Thus, the control is given by \(\beta (t) = \nabla H(\nabla _x \varphi (x,T-t))\).
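The recovery of the control can be illustrated on the simplest polytope. In the sketch below we assume, for illustration only, that C is the box \(\prod _i [-b_i, b_i]\); then (14) gives the weighted \(\ell _1\) norm \(H(p) = \sum _i b_i |p_i|\), and \(\nabla H(p)\) is the vertex of C selected by the signs of p. In practice p would be \(\nabla _x\varphi (x,T-t)\); the helper names are hypothetical.

```python
import math


def support_box(p, b):
    """H(p) = max_{c in C} <c, p> for the box C = prod_i [-b_i, b_i], i.e. a weighted l1 norm."""
    return sum(bi * abs(pi) for pi, bi in zip(p, b))


def control_box(p, b):
    """Control beta = grad H(p): the (unique) vertex of C maximizing <c, p>.

    H is differentiable at p only when every component p_i is nonzero.
    """
    if any(pi == 0.0 for pi in p):
        raise ValueError("H is not differentiable at p: a component of p is zero")
    return [bi * math.copysign(1.0, pi) for pi, bi in zip(p, b)]


# p plays the role of grad_x phi(x, T - t).
p, b = [0.5, -2.0, 1.0], [1.0, 2.0, 3.0]
beta = control_box(p, b)                        # [1.0, -2.0, 3.0]
euler = sum(pi * ci for pi, ci in zip(p, beta))
assert math.isclose(euler, support_box(p, b))   # H(p) = <p, grad H(p)>
```

This is a bang-bang control: each component sits at an extreme value of the box.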

We present in Sect. 3 our efficient algorithm, which computes not only \(\varphi (x,t)\) but also \(\nabla _x\varphi (x,t)\). We emphasize that we do not need any numerical approximation (such as finite differences) of the spatial gradient, since the gradient is the minimizer of the optimization problem we solve. In other words, our algorithm computes all the quantities we need to get the optimal control without using any such approximations.

It is sometimes convenient to use polar coordinates. Let us denote the \((n-1)\)-sphere by \(S^{n-1} = \{ x \in \mathbb {R}^n\;|\; \Vert x\Vert _2 =1 \}\). The set C can be described in terms of the Wulff shape [40] by the function \(W:S^{n-1} \rightarrow \mathbb {R}\). We set

The Hamiltonian H is then naturally defined via \(\gamma : S^{n-1} \rightarrow \mathbb {R}\) for any \(R>0\) and any \(\theta \in S^{n-1}\) by

and where \(\gamma \) is defined by

The main examples are \(H= \Vert \cdot \Vert _p\) for \(p \in [1,+\infty )\) and \(H = \Vert \cdot \Vert _\infty \). Others include the following two: \(H = \sqrt{\langle \cdot , A \cdot \rangle } = \Vert \cdot \Vert _A\) with A a symmetric positive definite matrix, and H defined as follows for any \(p \in \mathbb {R}^n\)

In future work, we will also consider Hamiltonians defined as the supremum of linear forms such as those that arise in linear programming.

We will devise very fast, low memory, totally parallelizable and apparently low time complexity methods for solving (11) with H given by (14) in the next section.

2.2 Some extensions and future work

In this section we show that we can solve the problem for a much more general class of Hamiltonians and initial data which arise in optimal control, including an interesting class of nonconvex initial data.

Let us first consider Hamiltonians that correspond to linear controls. Instead of (9), we consider the following ordinary differential equation

where M is an \(n \times n\) matrix with real entries and, for any \(s \in (-\infty ,T]\), N(s) is an \(n \times m\) matrix with real entries. We can make a change of variables

and we have

The resulting Hamiltonian now depends on t and (12) becomes:

If C is a closed convex set, and \(L = \mathcal {I}_{C}\) we have

which leads to a positively 1-homogeneous convex Hamiltonian as a function of p for any fixed \(t \ge 0\). If we have convex initial data, there is a simple generalization of the Hopf formulas [34], [32, Section 5.3.2, p. 215]

We intend to program and test this in our next paper.

We now review some well-known results about the types of convex initial value problems that lend themselves to max/min-plus algebra for optimal control problems (see, e.g., [1, 23, 35]). Suppose we have k different initial value problems \(i=1,\ldots ,k\)

where all initial data \(J_i:\mathbb {R}^n\rightarrow \mathbb {R}\) are convex and the Hamiltonian \(H:\mathbb {R}^n\rightarrow \mathbb {R}\) is convex and 1-coercive. Then, we may use the Hopf–Lax formula to get, for any \(x \in \mathbb {R}^n\) and any \(t > 0\)

so

So we can solve the initial value problem

by simply taking the pointwise minimum over the k solutions \(\phi _i(x,t)\), each of which has convex initial data. See Sect. 4 for numerical results. As an important example, suppose

and each \(J_i\) is a level set function for a convex compact set \(\Omega _i\) with nonempty interior, where the interiors of the \(\Omega _i\) may overlap with each other. We have \(J_i(x) < 0 \) inside \(\Omega _i\), \(J_i(x) >0 \) outside \(\Omega _i\), and \(J_i(x) = 0\) on the boundary of \(\Omega _i\). Then \(\min _{i=1,\ldots ,k} J_i\) is also a level set function for the union of the \(\Omega _i\). Thus, we can solve complicated level set motion involving merging fronts and compute a closest point and the associated proximal points to nonconvex sets of this type. See Sect. 3.
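To illustrate the union construction, the sketch below assumes \(H = \Vert \cdot \Vert _2\) and balls \(\Omega _i\) with center \(c_i\) and radius \(r_i\), encoded by \(J_i(x) = \frac{1}{2}(\Vert x - c_i\Vert _2^2 - r_i^2)\). Each \(\varphi _i\) then has a closed form through the Huber function, and the solution for the union is their pointwise minimum. We assume here that the Hopf–Lax argument above applies to this positively homogeneous H (whose conjugate is the indicator of the unit ball); the names are hypothetical.

```python
import math


def phi_ball(x, center, radius, t):
    """Hopf solution for H = ||.||_2 and J_i(x) = 0.5*(||x - c_i||^2 - r_i^2).

    With J_i*(v) = 0.5*||v||^2 + <c_i, v> + 0.5*r_i^2, the Hopf formula gives
    phi_i(x, t) = 0.5*||x - c_i||^2 - 0.5*r_i^2 - huber_t(x - c_i).
    """
    d = math.dist(x, center)
    huber = 0.5 * d * d if d <= t else t * d - 0.5 * t * t
    return 0.5 * d * d - 0.5 * radius * radius - huber


def phi_union(x, balls, t):
    """Solution for J = min_i J_i (union of the balls): pointwise minimum of the phi_i."""
    return min(phi_ball(x, c, r, t) for c, r in balls)


balls = [((0.0, 0.0), 1.0), ((3.0, 0.0), 1.0)]
# (1.5, 0) is at Euclidean distance 0.5 from the union, so it lies on the zero level set for t = 0.5.
print(phi_union((1.5, 0.0), balls, 0.5))  # 0.0
```

The two fronts meet at \(x_1 = 1.5\) when \(t = 0.5\); merging requires no special treatment.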

For completeness we add the following fact about the minimum of Hamiltonians. Let \(H_i:\mathbb {R}^n\rightarrow \mathbb {R}\), with \(i=1,\ldots ,k\), be k continuous Hamiltonians bounded from below by a common affine function. We consider for \(i=1,\ldots ,k\)

where \(J:\mathbb {R}^n\rightarrow \mathbb {R}\) is convex. Then,

that is

So we find the solution to

by solving k different initial value problems and taking the pointwise maximum. See Sect. 4 for numerical results.
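The max rule can also be checked numerically. The sketch below assumes \(J(x) = \frac{1}{2}(\Vert x\Vert _2^2 - 1)\), \(H_1 = \Vert \cdot \Vert _1\) and \(H_2 = c\Vert \cdot \Vert _2\), for which each \(\varphi _i\) has a closed form (soft thresholding and Huber, respectively). A brute-force grid evaluation of the Hopf formula with \(H = \min (H_1, H_2)\) in two dimensions serves as an independent check. All names and parameter values are ours.

```python
import math


def phi_l1(x, t):
    """H_1 = ||.||_1 with J(x) = 0.5*(||x||_2^2 - 1): phi_1 = 0.5*||shrink1(x, t)||^2 - 0.5."""
    return 0.5 * sum(max(abs(xi) - t, 0.0) ** 2 for xi in x) - 0.5


def phi_l2(x, t, c):
    """H_2 = c*||.||_2 with the same J: phi_2 = 0.5*||x||^2 - 0.5 - huber_{c t}(x)."""
    r, alpha = math.hypot(*x), c * t
    huber = 0.5 * r * r if r <= alpha else alpha * r - 0.5 * alpha * alpha
    return 0.5 * r * r - 0.5 - huber


def phi_min_hamiltonian(x, t, c):
    """Solution for H = min(H_1, H_2): the pointwise maximum of the two solutions."""
    return max(phi_l1(x, t), phi_l2(x, t, c))


def phi_brute_force(x, t, c, half_width=2.0, h=0.01):
    """Check only (2-D): -min over a grid of J*(v) + t*min(H_1, H_2)(v) - <x, v>."""
    m = int(round(half_width / h))
    best = math.inf
    for i in range(-m, m + 1):
        for j in range(-m, m + 1):
            v0, v1 = i * h, j * h
            hv = min(abs(v0) + abs(v1), c * math.hypot(v0, v1))
            val = 0.5 * (v0 * v0 + v1 * v1) + 0.5 + t * hv - (x[0] * v0 + x[1] * v1)
            best = min(best, val)
    return -best


# Usage: compare phi_min_hamiltonian((1.0, 0.5), 0.3, 1.2) with phi_brute_force((1.0, 0.5), 0.3, 1.2).
```

The closed-form side costs two evaluations regardless of n, whereas the brute-force check is only feasible in very low dimension.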

We end this section by showing that explicit formulas can be obtained for the terminal value \(\mathrm {x}(T)\) and the control \(\beta (t)\) for another class of running costs L. Suppose that C is a convex compact set containing the origin and take \(f(c) = -c \) for any \(c \in C\). Assume also that \(L: \mathbb {R}^n\rightarrow \mathbb {R}\cup \{+\infty \}\) is strictly convex, differentiable wherever its subdifferential is nonempty, and that \(\mathrm{dom}\, L\) has a nonempty interior with \(\mathrm{dom}\, L \subseteq C\). Then, the associated Hamiltonian is \(H=L^*\). Using the results of [13], the function \((x,t) \mapsto \varphi (x,t)\) which solves (11) is given by the Hopf–Lax formula \(\varphi (x,t) = \min _{y\in \mathbb {R}^n}\left\{ J(y) + tH^*(\frac{x-y}{t})\right\} \), where the minimizer is unique and denoted by \(\bar{y}(x,t)\). Note that the Hopf–Lax formula corresponds to a convex optimization problem, which allows us to compute \(\bar{y}(x,t)\). In addition, we can compute the gradient with respect to x since \(\nabla _x \varphi (x,t) = \nabla H^*\left( \frac{x-\bar{y}(x,t)}{t}\right) \in \partial J(\bar{y}(x,t))\) for any given \(x\in \mathbb {R}^n\) and \(t>0\). For any \(t \in (-\infty , T)\) and fixed \(x\in \mathbb {R}^n\), the control is given by \(\beta (t) = \nabla H(\nabla _x \varphi (x,T-t))\), while the terminal value satisfies \(\mathrm {x}(T) = \bar{y}(x,T-t) = x - (T-t) \nabla H(\nabla _x \varphi (x,T-t))\). Note that both the control and the terminal value can be easily computed. More details about these facts will be given in a forthcoming paper.

3 Overcoming the curse of dimensionality for convex initial data and convex homogeneous degree one Hamiltonians: optimal control

We first present our approach for evaluating the solution of the HJ PDE and its gradient using the Hopf formula [30], Moreau’s identity [38] and the split Bregman algorithm [25]. We note that the split Bregman algorithm can be replaced by other algorithms which converge rapidly for problems of this type. An example might be the primal-dual hybrid gradient method [8, 53]. Then, we show that our approach can be adapted to compute a closest point on a closed set, which is the union of disjoint closed convex sets with a nonempty interior, to a given point.

3.1 Numerical optimization algorithm

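We present the steps needed to solve

\[ \begin{cases} \frac{\partial \varphi }{\partial t}(x,t) + H(\nabla _x \varphi (x,t)) = 0 & \text{in } \mathbb {R}^n\times (0,+\infty ),\\ \varphi (x,0) = J(x) & \text{for all } x\in \mathbb {R}^n. \end{cases} \tag{19} \]

We take \(J:\mathbb {R}^n\rightarrow \mathbb {R}\) convex and \(H:\mathbb {R}^n\rightarrow \mathbb {R}\) convex and positively 1-homogeneous. We recall that solving (19), i.e., computing the viscosity solution, for a given \(x \in \mathbb {R}^n,\, t > 0\) using numerical approximations is essentially impossible for \(n \ge 4\) due to memory requirements, and the complexity is exponential in n.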

An evaluation of the solution at \(x\in \mathbb {R}^n\) and \(t>0\) for the examples we consider in this paper is of the order of \(10^{-8}\)–\(10^{-4}\) s on a standard laptop (see Sect. 4). The apparent time complexity seems to be polynomial in n with remarkably small constants.

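We will use the Hopf formula [30]:

\[ \varphi (x,t) = -\inf _{v\in \mathbb {R}^n} \left\{ J^*(v) + tH(v) - \langle x, v\rangle \right\} . \tag{20} \]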

Note that the infimum is always finite and attained (i.e., it is a minimum) since we have assumed that J is finite everywhere on \(\mathbb {R}^n\) and that H is continuous and bounded from below by an affine function.

The Hopf formula (20) requires only the continuity of H, but we will also require the Hamiltonian H to be convex. We recall that the previous section shows how to relax this condition.

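We will use the split Bregman iterative approach to solve this [25]:

\[ v^{k+1} = \arg \min _{v\in \mathbb {R}^n} \left\{ J^*(v) - \langle x, v\rangle + \frac{\lambda }{2} \Vert d^k - v - b^k\Vert _2^2 \right\} , \tag{21} \]

\[ d^{k+1} = \arg \min _{d\in \mathbb {R}^n} \left\{ tH(d) + \frac{\lambda }{2} \Vert d - v^{k+1} - b^k\Vert _2^2 \right\} , \tag{22} \]

\[ b^{k+1} = b^k + v^{k+1} - d^{k+1}. \tag{23} \]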

For simplicity we take \(\lambda = 1\), \(v^0 = x\), \(d^0=x\) and \(b^0 = 0\) in this paper. The algorithm still works for any positive \(\lambda \) and any finite values of \(v^0\), \(d^0\) and \(b^0\). The sequences \((v^k)_{k\in \mathbb {N}}\) and \((d^k)_{k\in \mathbb {N}}\) both converge to the same quantity, which is a minimizer of (20). We recall that when the minimizer of (20) is unique, it is precisely \(\nabla _x \varphi (x,t)\); in other words, both \((v^k)_{k\in \mathbb {N}}\) and \((d^k)_{k\in \mathbb {N}}\) converge to \(\nabla _x \varphi (x,t)\) under this uniqueness assumption. If the minimizer is not unique, then the sequences \((v^k)_{k\in \mathbb {N}}\) and \((d^k)_{k\in \mathbb {N}}\) converge to an element of the subdifferential \(\partial (y\mapsto \varphi (y,t))(x)\) (see below for the definition of a subdifferential). We need to solve (21) and (22). Note that, up to some changes of variables, both optimization problems can be reformulated as finding the unique minimizer of

\[ w \mapsto \alpha f(w) + \frac{1}{2} \Vert w - z\Vert _2^2 , \]

where \(z \in \mathbb {R}^n\), \(\alpha > 0\), and \(f:\mathbb {R}^n\rightarrow \mathbb {R}\cup \{+\infty \}\) is a convex, proper, lower semicontinuous function. Its unique minimizer \(\bar{w}\) satisfies the optimality condition

\[ 0 \in \bar{w} - z + \alpha \, \partial f(\bar{w}), \]

where \(\partial f (x)\) denotes the subdifferential (see, for instance, [28, p. 241], [44, Section 23]) of f at \(x \in \mathbb {R}^n\) and is defined by \(\partial f (x) = \{ s \in \mathbb {R}^n\,|\, \forall y \in \mathbb {R}^n,\, f(y)\, \ge \, f(x) + \langle s, y-x\rangle \}\). We have

\[ \bar{w} = \left( I + \alpha \, \partial f\right) ^{-1}(z), \]

where \(\left( I + {\partial f} \right) ^{-1}\) denotes the “resolvent” operator of f (see [2, Def. 2, chp. 3, p. 144], [5, p. 54] for instance). It is also called the proximal map of f following the seminal paper of Moreau [38] (see also [29, Def. 4.1.2, p. 318], [44, p. 339]). This mapping has been extensively studied in the context of optimization (see, for instance, [10, 18, 45, 48]).

Closed-form formulas exist for the proximal map in some specific cases. For instance, we have seen in the introduction that \(\left( I + {\alpha \ \partial \Vert \cdot \Vert _i} \right) ^{-1} = {\hbox {shrink}}_i(\cdot , \alpha )\) for \(i=1,2\), where we recall that \({\hbox {shrink}}_1\) and \({\hbox {shrink}}_2\) are defined by (6) and (7), respectively. Another classical example is the quadratic form \(\frac{1}{2}\Vert \cdot \Vert _A^2 = \frac{1}{2} \langle \cdot , A \cdot \rangle \), with A a symmetric positive definite matrix with real entries, which yields \(\left( I + {\alpha \, \partial \left( \frac{1}{2}\Vert \cdot \Vert _A^2\right) } \right) ^{-1}= \left( I_n + \alpha \, A\right) ^{-1}\), where \(I_n\) denotes the identity matrix of size n.
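The split Bregman iteration can be sketched for a case in which every step is explicit. The sketch below is our reading of (21)–(23) with \(\lambda = 1\), \(v^0 = d^0 = x\) and \(b^0 = 0\), under the assumption \(H = \Vert \cdot \Vert _1\) and the ellipsoidal initial data of Sect. 1. The v-step is then the quadratic-form proximal map above with \(A = \mathrm{diag}(a_i^2)\), i.e., a diagonal solve, and the d-step is \({\hbox {shrink}}_1\). Function names and the stopping rule are hypothetical; the output can be compared with the closed-form solution of Sect. 1.

```python
import math


def shrink1(z, alpha):
    """Soft thresholding: proximal map of alpha*||.||_1."""
    return [math.copysign(max(abs(zi) - alpha, 0.0), zi) for zi in z]


def split_bregman_hopf(x, t, a, max_iter=1000, tol=1e-12):
    """Split Bregman (lambda = 1) for the Hopf formula with H = ||.||_1 and
    J(x) = 0.5*(sum_i x_i^2/a_i^2 - 1), so that J*(v) = 0.5*sum_i a_i^2 v_i^2 + 0.5.

    Returns (phi(x, t), v), where v approximates grad_x phi(x, t).
    """
    n = len(x)
    v, d, b = list(x), list(x), [0.0] * n  # v^0 = x, d^0 = x, b^0 = 0
    for _ in range(max_iter):
        # v-step: minimize J*(v) - <x, v> + 0.5*||d - v - b||^2 (a diagonal linear solve here).
        v = [(xi + di - bi) / (ai * ai + 1.0) for xi, di, bi, ai in zip(x, d, b, a)]
        # d-step: minimize t*||d||_1 + 0.5*||d - v - b||^2, i.e. soft thresholding of v + b.
        d_new = shrink1([vi + bi for vi, bi in zip(v, b)], t)
        # Bregman update of b.
        b = [bi + vi - di for bi, vi, di in zip(b, v, d_new)]
        step = max(abs(p - q) for p, q in zip(d_new, d))
        gap = max(abs(p - q) for p, q in zip(v, d_new))
        d = d_new
        if step < tol and gap < tol:
            break
    j_star = 0.5 * sum((ai * vi) ** 2 for ai, vi in zip(a, v)) + 0.5
    # phi(x, t) = <x, v> - J*(v) - t*H(v) at the minimizer v of (20).
    phi = sum(xi * vi for xi, vi in zip(x, v)) - j_star - t * sum(abs(vi) for vi in v)
    return phi, v
```

For other Hamiltonians only the d-step changes: it becomes the proximal map of tH.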

If f is twice differentiable with a bounded Hessian, then the proximal map can be efficiently computed using Newton’s method. Algorithms based on Newton’s method require solving a linear system involving an \(n \times n\) matrix. Note that typical high dimensions for optimal control problems are about \(n=10\); for computational purposes, values of n of this order are small.

We describe an efficient algorithm to compute the proximal map of \(\Vert \cdot \Vert _\infty \) in Sect. 4.2 using parametric programming [46, Chap. 11, Section 11.M]. An algorithm to compute the proximal map for \(\frac{1}{2}\Vert \cdot \Vert _1^2\) is described in Sect. 4.4.

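The proximal maps for f and \(f^*\) satisfy the celebrated Moreau identity [38] (see also [44, Thm. 31.5, p. 338]), which reads as follows: for any \(w\in \mathbb {R}^n\) and any \(\alpha > 0\)

\[ w = \left( I + \alpha \,\partial f\right) ^{-1}(w) + \alpha \left( I + \frac{1}{\alpha }\,\partial f^*\right) ^{-1}\left( \frac{w}{\alpha }\right) . \tag{25} \]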

This shows that \(\left( I + {\alpha \,\partial f} \right) ^{-1}(w)\) can be easily computed from \(\left( I + {\frac{1}{\alpha } \, \partial f^*} \right) ^{-1}\left( \frac{w}{\alpha }\right) \). In other words, depending on the nature and properties of the mappings f and \(f^*\), we choose the one whose proximal point is “easier” to compute. Section 4.4 describes an algorithm that computes the proximal map of \(\frac{1}{2}\Vert \cdot \Vert _\infty ^2\) using only evaluations of \(\left( I + { \frac{\alpha }{2} \, \partial \Vert \cdot \Vert _1^2} \right) ^{-1}\), via Moreau’s identity (25).
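Moreau’s identity (25) is easy to check on the quadratic example given earlier in this section. The sketch assumes a diagonal A (entries `diag_a`), so that every proximal map is a componentwise division; the conjugate \(f^* = \frac{1}{2}\Vert \cdot \Vert _{A^{-1}}^2\) is handled by the same helper with the inverse diagonal. The names are ours.

```python
def prox_quadratic(w, diag_a, alpha):
    """(I + alpha * grad(0.5*||.||_A^2))^{-1}(w) = (I_n + alpha*A)^{-1} w for A = diag(diag_a)."""
    return [wi / (1.0 + alpha * ai) for wi, ai in zip(w, diag_a)]


def moreau_sum(w, diag_a, alpha):
    """Right-hand side of Moreau's identity (25) for f = 0.5*||.||_A^2.

    The conjugate is f* = 0.5*||.||_{A^{-1}}^2, so its proximal map uses the inverse diagonal.
    """
    inv_a = [1.0 / ai for ai in diag_a]
    prox_f = prox_quadratic(w, diag_a, alpha)
    prox_fstar = prox_quadratic([wi / alpha for wi in w], inv_a, 1.0 / alpha)
    return [pf + alpha * ps for pf, ps in zip(prox_f, prox_fstar)]


w = [1.0, -2.0, 0.5]
print(moreau_sum(w, [0.5, 2.0, 10.0], 0.7))  # equals w up to rounding
```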

We shall see that Moreau’s identity (25) can be very useful to compute the proximal maps of convex and positively 1-homogeneous functions.

We consider problem (22), which amounts to computing the proximal map of a convex positively 1-homogeneous function \(H:\mathbb {R}^n\rightarrow \mathbb {R}\). (We use H instead of f to emphasize that we are considering positively 1-homogeneous functions, and we set \(\alpha =1\) to simplify the notation.) We have that \(H^*\) is the characteristic function of a closed convex set \(C \subseteq \mathbb {R}^n\), i.e., the Wulff shape associated to H [40],

\[ H^* = \mathcal {I}_C, \]

and H corresponds to the support function of C, that is, for any \(p \in \mathbb {R}^n\)

\[ H(p) = \sup _{c\in C} \langle c, p\rangle . \]

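Following Moreau [38, Example 3.d], the proximal point of \(z \in \mathbb {R}^n\) relative to \(H^*\) is

\[ \left( I + \partial H^*\right) ^{-1}(z) = \arg \min _{w\in \mathbb {R}^n} \left\{ \mathcal {I}_C(w) + \frac{1}{2}\Vert w - z\Vert _2^2\right\} = \arg \min _{w\in C} \frac{1}{2}\Vert w - z\Vert _2^2 . \]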

In other words, \(\left( I + { \partial H^*} \right) ^{-1}(z)\) corresponds to the projection of z on the closed convex set C, which we denote by \(\pi _C(z)\), that is, for any \(z \in \mathbb {R}^n\)

\[ \left( I + \partial H^*\right) ^{-1}(z) = \pi _C(z). \]

Thus, using the Moreau identity (25), we see that \(\left( I + {\partial H} \right) ^{-1}\) can be computed from the projection on its associated Wulff shape, and we have for any \(z \in \mathbb {R}^n\)

\[ \left( I + \partial H\right) ^{-1}(z) = z - \pi _C(z). \tag{26} \]

In other words, computing the proximal map of H can be performed by computing the projection on its associated Wulff shape C. This formula is not new; see, e.g., [7, 39].
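As a concrete instance of (26), take \(H = \Vert \cdot \Vert _\infty \), whose Wulff shape C is the \(\ell _1\) unit ball. The sketch below computes \(\pi _C\) with the classical sort-and-threshold projection onto the \(\ell _1\) ball; this standard technique is substituted here for illustration and is not the parametric-programming algorithm of Sect. 4.2. The function names are hypothetical.

```python
import math


def project_l1_ball(z, radius=1.0):
    """Euclidean projection of z onto {y : ||y||_1 <= radius}, by sorting magnitudes."""
    if sum(abs(zi) for zi in z) <= radius:
        return list(z)
    u = sorted((abs(zi) for zi in z), reverse=True)
    cumsum, theta = 0.0, 0.0
    for j, uj in enumerate(u, start=1):
        cumsum += uj
        candidate = (cumsum - radius) / j
        if uj > candidate:
            theta = candidate  # threshold from the largest j with u_j > (sum_{i<=j} u_i - radius)/j
    return [math.copysign(max(abs(zi) - theta, 0.0), zi) for zi in z]


def prox_linf(z):
    """(I + d||.||_inf)^{-1}(z) = z - pi_C(z) by (26), C being the l1 unit ball."""
    return [zi - pi for zi, pi in zip(z, project_l1_ball(z))]


print(prox_linf([3.0, -1.0, 0.5]))  # [2.0, -1.0, 0.5]
```

The result clips the largest components to a common level, which is the expected behavior of the proximal map of the max norm.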

Let us consider an example: Hamiltonians of the form \(H = \Vert \cdot \Vert _A = \sqrt{\langle \cdot , A \cdot \rangle }\), where A is a symmetric positive definite matrix. Here the Wulff shape is the ellipsoid \(C = \left\{ y\in \mathbb {R}^n\,|\, \langle y, A ^{-1} y \rangle \; \le 1 \right\} \). We describe in Sect. 4.3 an efficient algorithm for computing the projection on an ellipsoid. This allows us to compute efficiently the proximal map of norms of the form \(\Vert \cdot \Vert _A\) using (26).
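For the ellipsoid, a simple (if not the fastest) way to obtain \(\pi _C\) is bisection on the Lagrange multiplier of the constraint. The sketch below assumes that A is diagonal with entries \(a_i > 0\), so that \(C = \{ y \,|\, \sum _i y_i^2/a_i \le 1\}\); it is not the algorithm of Sect. 4.3, and the names are ours.

```python
import math


def project_ellipsoid(z, a, tol=1e-14, max_iter=200):
    """Projection of z onto C = {y : sum_i y_i^2 / a_i <= 1}, i.e. A = diag(a), a_i > 0.

    KKT conditions give y_i = a_i z_i / (a_i + mu) with mu >= 0; mu is found by bisection
    on g(mu) = sum_i a_i z_i^2 / (a_i + mu)^2 - 1, which is decreasing in mu.
    """
    def g(mu):
        return sum(ai * zi * zi / (ai + mu) ** 2 for zi, ai in zip(z, a)) - 1.0

    if g(0.0) <= 0.0:
        return list(z)  # z already lies in C
    lo, hi = 0.0, 1.0
    while g(hi) > 0.0:  # bracket the root
        hi *= 2.0
    for _ in range(max_iter):
        mid = 0.5 * (lo + hi)
        if g(mid) > 0.0:
            lo = mid
        else:
            hi = mid
        if hi - lo <= tol * max(1.0, hi):
            break
    return [ai * zi / (ai + hi) for zi, ai in zip(z, a)]  # using hi keeps y feasible


def prox_norm_a(z, a):
    """(I + d||.||_A)^{-1}(z) = z - pi_C(z) by (26), with ||p||_A = sqrt(sum_i a_i p_i^2)."""
    return [zi - yi for zi, yi in zip(z, project_ellipsoid(z, a))]
```

A quick sanity check is the optimality condition of the proximal map: when \(w = \left( I + \partial \Vert \cdot \Vert _A\right) ^{-1}(z) \ne 0\), one must have \(z - w = A w / \Vert w\Vert _A\).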

3.2 Projection on closed convex set with the level set method

We now describe an algorithm based on the level set method [41] to compute the projection \(\pi _\Omega \) on a compact convex set \(\Omega \subset \mathbb {R}^n\) with a nonempty interior. This problem appears to be of great interest for its own sake.

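Let \(\psi :\mathbb {R}^n\times [0,+\infty ) \rightarrow \mathbb {R}\) be the viscosity solution of the eikonal equation

\[ \begin{cases} \frac{\partial \psi }{\partial t}(y,s) + \Vert \nabla _x \psi (y,s)\Vert _2 = 0 & \text{for } y \in \mathbb {R}^n,\ s>0,\\ \psi (y,0) = L(y) & \text{for } y \in \mathbb {R}^n, \end{cases} \tag{27} \]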

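where we recall that \(\frac{\partial \psi }{\partial t}(y,s)\) and \(\nabla _x \psi (y,s)\) respectively denote the partial derivative of \(\psi \) with respect to the time variable and its gradient with respect to the space variable at (y, s), and where \(L:\mathbb {R}^n\rightarrow \mathbb {R}\) satisfies for any \(y \in \mathbb {R}^n\)

\[ \begin{cases} L(y) < 0 & \text{if } y \in \mathrm{int}\, \Omega ,\\ L(y) = 0 & \text{if } y \in \Omega {\setminus } \mathrm{int}\, \Omega ,\\ L(y) > 0 & \text{if } y \in \mathbb {R}^n{\setminus } \Omega , \end{cases} \tag{28} \]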

where \(\mathrm{int}\, \Omega \) denotes the interior of \(\Omega \). Given \(s\ge 0\), we consider the set

\[ \Gamma (s) = \{ y\in \mathbb {R}^n \;|\; \psi (y,s) = 0\} , \]

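which, for \(s>0\), corresponds to all points outside \(\Omega \) that are at a (Euclidean) distance s from \(\Gamma (0)\). Moreover, for a given point \(y \in \Gamma (s)\), the closest point to y on \(\Gamma (0)\) is exactly the projection \(\pi _\Omega (y)\) of y on \(\Omega \), and we have

\[ \pi _\Omega (y) = y - s\, \frac{\nabla _x \psi (y,s)}{\Vert \nabla _x \psi (y,s)\Vert _2}. \tag{29} \]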

In this paper, we will assume that L is strictly convex, 1-coercive and differentiable so that \(\nabla _x \psi (s,y)\) exists for any \(y \in \mathbb {R}^n\) and \(s>0\). We note that if \(\Omega \) is the finite union of sets of this type then \(\nabla _x \psi (s,y)\) may have isolated jumps. This presents no serious difficulties. Note that (27) takes the form of (19) with \(H = \Vert \cdot \Vert _2\) and \(J = L\). We again use split Bregman to solve the optimization given by the Hopf formula (3). To avoid confusion we respectively replace J, v, d and b, by L, w, e and c in (21)–(23)

An important observation here is that \(e^{k+1}\) can be solved explicitly in (31) using the \({\hbox {shrink}}_2\) operator defined by (7). Note that the algorithm given by (21)–(23) allows us to evaluate not only \(\psi (y,s)\) but also \(\nabla _x\psi (y,s)\). Indeed for any \(s>0\) \(\nabla _x \psi (y,s) = \arg \min _{v\in \mathbb {R}^n} \{L^*(v) + s H(v)- \langle y, v\rangle \}\), the minimizer being unique. Thus, the above algorithm (30)–(32) generates sequences \((w^k)_{k \in \mathbb {N}}\) and \((e ^k)_{k \in \mathbb {N}}\) that both converge to \(\nabla _x \psi (z,s)\).

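The above considerations about the closest point and (29) give us a numerical procedure for computing \(\pi _\Omega (y)\) for any \(y \in \mathbb {R}^n{\setminus } \Omega \): find the value \(\bar{s}\) such that \(\psi (y, \bar{s}) = 0\), where \(\psi \) solves (27), and then compute
$$\begin{aligned} \pi _{\Omega }(y) = y - \bar{s}\, \frac{\nabla _x \psi (y,\bar{s})}{\Vert \nabla _x \psi (y,\bar{s})\Vert _2} \end{aligned}$$(33)

to obtain the projection \(\pi _{\Omega }(y)\). We compute \(\bar{s}\) using Newton’s method to find the zero of the function \((0, +\infty ) \ni s \mapsto \psi (y,s)\). Given an initial \(s_0 > 0\), the Newton iteration consists in computing, for integers \(l\ge 0\),
$$\begin{aligned} s_{l+1} = s_l - \frac{\psi (y,s_l)}{\frac{\partial \psi }{\partial t}(y,s_l)}. \end{aligned}$$(34)

From (27) we have \(\frac{\partial \psi }{\partial t} (y,s) = - \Vert \nabla _x \psi (y,s)\Vert _2\) for any \(s > 0\), and both \(\psi (y,s_l)\) and \(\nabla _x \psi (y,s_l)\) are provided by the split Bregman iterations. We can thus compute (34).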
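The Newton procedure can be sketched on a toy case, assuming \(\Omega \) is the unit ball with \(L(y) = \frac{1}{2}(\Vert y\Vert _2^2 - 1)\). There the Hopf minimizer is available in closed form as \(\mathrm{shrink}_2(y,s)\), so \(\psi \) and \(\nabla _x\psi \) need no inner iteration. This is only a sanity check under these assumptions (the exact answer is \(y/\Vert y\Vert _2\)); the function names are hypothetical, and y must lie outside \(\Omega \).

```python
import numpy as np


def psi_and_grad(y, s):
    """Closed-form Hopf solution for H = ||.||_2 and L(y) = (||y||^2 - 1) / 2.

    The minimizer of (||v||^2 + 1)/2 + s ||v||_2 - <y, v> is shrink_2(y, s),
    which is also grad_x psi(y, s).
    """
    v = max(1.0 - s / np.linalg.norm(y), 0.0) * y
    psi = -(0.5 * (v @ v + 1.0) + s * np.linalg.norm(v) - y @ v)
    return psi, v


def project_by_level_set(y, s0=1e-3, tol=1e-12, max_iter=100):
    """Project y (outside the unit ball) on the unit ball via Newton on s -> psi(y, s)."""
    y = np.asarray(y, dtype=float)
    s = s0
    for _ in range(max_iter):
        psi, g = psi_and_grad(y, s)
        # Newton step (34), using d psi/dt = -||grad_x psi||_2
        s_next = s + psi / np.linalg.norm(g)
        done = abs(s_next - s) <= tol
        s = s_next
        if done:
            break
    _, g = psi_and_grad(y, s)
    # Projection formula (33)
    return y - s * g / np.linalg.norm(g), s


p, s_bar = project_by_level_set([3.0, 4.0])   # expect p = (0.6, 0.8) and s_bar = 4
```

In this toy case the Newton map reduces, in the variable \(u = \Vert y\Vert _2 - s\), to Heron's iteration \(u \mapsto \frac{1}{2}(u + 1/u)\), which explains the fast convergence to \(\bar{s} = \Vert y\Vert _2 - 1\).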

It remains to choose the initial data L associated with the set \(\Omega \). We would like L to be smooth so that the proximal point in (30) can be computed efficiently using Newton’s method. (If L lacks differentiability, the approach can easily be modified using [46, Chap. 11, Section 11.M] or [9].) We consider sets \(\Omega \) that are Wulff shapes, expressed, thanks to (15), in terms of a function \(W:S^{n-1}\rightarrow \mathbb {R}\), that is

As a simple example, if \(W \equiv 1\), then we might try \(L(y) = \Vert y\Vert _2 - 1\), the signed distance to \(\Gamma (0)\). This does not suit our purposes, because its Hessian is singular and its Fenchel–Legendre transform is, up to the additive constant 1, the indicator function of the \(\ell _2\) unit ball of \(\mathbb {R}^n\). Instead, we take \(L(y) = \frac{1}{2}\left( \Vert y\Vert _2^2 - 1\right) \).

Note that \(L^*(y) = \frac{1}{2} \left( \Vert y\Vert _2^2 + 1\right) \). Both L and \(L^*\) are convex \(\mathcal {C}^2\) functions with nonvanishing gradients away from the origin, in particular near \(\Gamma (s)\). This gives us a hint as to how to proceed.

Recall that we need initial data that behave as a level set function should, i.e., as in (28). We also want either L or \(L^*\) to be smooth enough, namely twice differentiable with a Lipschitz continuous Hessian, so that Newton’s method can be used.

We might take

where \(R \ge 0\), \(\theta \in S^{n-1}\) and m is a positive integer. If we consider the important case \(W(\theta ) = \Vert \theta \Vert _A ^{-1} = \frac{1}{\sqrt{\langle \theta , A\, \theta \rangle }}\), where \(\Vert \theta \Vert _A = \sqrt{\langle \theta , A\, \theta \rangle }\) and A is a symmetric positive definite matrix, which corresponds to \(\Omega = \{x \in \mathbb {R}^n\,|\, \sqrt{\langle x, A\, x \rangle } \,\le \, 1\}\), then \(m\ge 2\) yields a sufficiently smooth Hessian for L. In fact, \(m=2\) will lead us to a linear Hessian. There will be situations where using \(L^*\) is preferable, because L cannot be made smooth enough this way.

We can obtain \(L^*\) using polar coordinates and taking \(1 \ge m > \frac{1}{2}\), that is for any \(R\ge 0\) and any \(\theta \in S^{n-1}\)

where we recall that \(\gamma \) is defined by (17). As we shall see, \(L^*\) is often preferable to L, since it yields a smooth Hessian for \( 1 \ge m > \frac{1}{2}\) with m close to \(\frac{1}{2}\).

Let us consider some important examples. If the set \(\Omega \) is defined by \(\Omega = \{x\in \mathbb {R}^n\,|\, \Vert x\Vert _p \le 1\}\) for \(1< p < \infty \), then we can consider two cases:

- (a) \(2 \le p < + \infty \),
- (b) \(1 < p \le 2\).

For case (a) we need only take

If \(m \ge 1\), it is easy to see that the Hessian of L is continuous and bounded for \(p\ge 2\), so we can use Newton’s method for the first choice above. For case (b), we construct the Fenchel–Legendre transform of the function

but this time for \(\frac{1}{2} < m \le 1\). It is easy to see that

where \(\frac{1}{p} + \frac{1}{q} = 1\) and if \(\frac{1}{2} < m \le 1\) the Hessian of \(L^*\) is continuous.

Other interesting examples include the following regions defined by

For \(\Omega \) to be convex, we require A to be a symmetric positive definite matrix with real entries whose maximal eigenvalue is bounded by twice its minimal eigenvalue. We can take

for \(m\ge 2\) and see that this has a smooth Hessian.

4 Numerical results

We will consider the following Hamiltonians

- \(H = \Vert \cdot \Vert _p\) for \(p = 1, 2, \infty \),
- \(H = \sqrt{\langle \cdot , A \cdot \rangle }\) with A a symmetric positive definite matrix,

and the following initial data

- \(J = \frac{1}{2}\Vert \cdot \Vert _p^2\) for \(p = 1, 2, \infty \),
- \(J = \frac{1}{2}\langle \cdot , A\cdot \rangle \) with A a positive definite diagonal matrix.

It will be useful to consider the spectral decomposition of A, i.e., \(A = P D P^{\dagger }\), where D is a diagonal matrix, P is an orthogonal matrix, and \(P^{\dagger }\) denotes the transpose of P. The identity matrix in \(\mathbb {R}^n\) is denoted by \(I_n\).

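First, we present the algorithms that compute the proximal points for the above Hamiltonians and initial data. We describe these algorithms using the following generic formulation of the proximal map of a convex function f: for any \(\alpha >0\) and any \(z\in \mathbb {R}^n\),
$$\begin{aligned} \left( I + \alpha \, \partial f\right) ^{-1}(z) = \arg \min _{w\in \mathbb {R}^n} \left\{ \alpha f(w) + \frac{1}{2}\Vert w - z\Vert _2^2\right\} . \end{aligned}$$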

Second, we report timings on a standard laptop, together with timings on a 16-core computer which show that our approach scales very well; this is due to its very low memory requirements. Finally, we present plots of the solutions of some HJ PDEs.

4.1 Some explicit formulas for simple specific cases

We are able to obtain explicit formulas for the proximal map for some specific cases of interest in this paper. For instance, as we have seen, considering \(f = \Vert \cdot \Vert _1\) gives for any \(i=1,\ldots ,n\)

where \(\mathrm{sign}(\beta ) = 1\) if \(\beta \ge 0\) and \(-1\) otherwise. The case \(f=\Vert \cdot \Vert _2\) yields a similar formula

with

The two above cases are computed in linear time with respect to the dimension n.
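Both maps are one-liners in NumPy. The sketch below (function names hypothetical) implements the standard soft-thresholding formulas for the proximal maps of \(\alpha \Vert \cdot \Vert _1\) and \(\alpha \Vert \cdot \Vert _2\). Note that `np.sign(0)` returns 0 rather than the convention sign(0) = 1 used above; this is harmless because the corresponding component is set to zero anyway.

```python
import numpy as np


def shrink1(z, alpha):
    """Prox of alpha * ||.||_1: componentwise soft thresholding, O(n)."""
    z = np.asarray(z, dtype=float)
    return np.sign(z) * np.maximum(np.abs(z) - alpha, 0.0)


def shrink2(z, alpha):
    """Prox of alpha * ||.||_2: shrink the whole vector towards 0, O(n)."""
    z = np.asarray(z, dtype=float)
    nz = np.linalg.norm(z)
    return np.zeros_like(z) if nz <= alpha else (1.0 - alpha / nz) * z
```

Both functions touch each entry a constant number of times, which matches the linear-time claim.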

Proximal maps for positive definite quadratic forms, i.e., \(f(w) = \frac{1}{2}\langle w, A w\rangle \) are also easy to compute since for any \(\alpha >0\) and any \(z\in \mathbb {R}^n\)

where we recall that \(A = P D P^{\dagger }\) with D a diagonal matrix and P an orthogonal matrix. The time complexity is dominated by the evaluation of the matrix-vector product involving P and \(P^{\dagger }\).
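A minimal NumPy sketch, assuming the spectral decomposition is computed once and reused: the proximal map solves \((I_n + \alpha A)w = z\), so with \(A = P\,\mathrm{diag}(d)\,P^{\dagger }\) each evaluation costs two matrix-vector products with P and \(P^{\dagger }\), i.e., \(O(n^2)\). The closure-based interface and its name are our own choices.

```python
import numpy as np


def make_prox_quadratic(A):
    """Return (z, alpha) -> (I + alpha * A)^{-1} z, the prox of f(w) = <w, A w> / 2.

    A (symmetric positive definite) is diagonalized once, A = P diag(d) P^T, so that
    each call costs two matrix-vector products with P and P^T, i.e. O(n^2).
    """
    d, P = np.linalg.eigh(A)

    def prox(z, alpha):
        return P @ ((P.T @ z) / (1.0 + alpha * d))

    return prox


A = np.array([[2.0, 1.0], [1.0, 2.0]])
prox = make_prox_quadratic(A)
z = np.array([1.0, -3.0])
w = prox(z, 0.5)   # solves (I + 0.5 A) w = z
```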

4.2 The case of \(\Vert \cdot \Vert _\infty \)

Let us now consider the case \(f=\Vert \cdot \Vert _\infty \). Since \(\Vert \cdot \Vert _\infty \) is a norm, its Fenchel–Legendre transform is the indicator function of the unit ball of its dual norm, that is, \((\Vert \cdot \Vert _\infty )^* = \mathcal {I}_C\) with \(C = \{ z \in \mathbb {R}^n\;|\; \Vert z\Vert _1 \le 1\}\). We use Moreau’s identity (26) to compute \(\left( I + \alpha \, \partial \Vert \cdot \Vert _\infty \right) ^{-1}\); that is, for any \(\alpha >0\) and any \(z \in \mathbb {R}^n\)

where we recall that \(\pi _{\alpha C}\) denotes the projection operator onto the closed convex set \(\alpha C\). We use a simple variation of parametric approaches that are well known in graph-based optimization algorithms (see [46, Chap. 11, Section 11.M] for instance).

Let us assume that \(z \notin \alpha C\). Computing the projection amounts to solving

Now we use Lagrange duality (see [28, Chap. VII] for instance). The Lagrange dual function \(g: [0,+\infty ) \rightarrow \mathbb {R}\) is defined by

that is

We denote by \(\bar{\mu }\) the value that realizes the maximum of g. Then, we obtain for any \(z \notin \alpha C\) that

where \(\bar{\mu } \) satisfies

Computing the projection thus reduces to computing the optimal value \(\bar{\mu }\) of the Lagrange multiplier. Consider the function \(h : [0,\Vert z\Vert _1] \rightarrow \mathbb {R}\) defined by \( h(\mu ) = \Vert \mathrm{shrink}_1(z, \mu )\Vert _1\). The function h is continuous, piecewise affine and nonincreasing, with \(h(0)=\Vert z\Vert _1 > \alpha \) (recall that we assume \(z \notin \alpha C\)) and \(h(\Vert z\Vert _1) = 0\); since the projection of z lies on the boundary of \(\alpha C\), we have \(h(\bar{\mu }) = \alpha \). Following [46, Chap. 11, Section 11.M], we call breakpoints the values at which h is not differentiable. The set of breakpoints of h is \(B = \left\{ 0 \right\} \cup \bigcup _{i=1,\ldots ,n} \left\{ |z_i|\right\} \). We sort all breakpoints in increasing order and denote this sequence by \((l_1, \ldots , l_{m}) \in B^{m}\), with \(l_i < l_{i+1}\) for \(i=1,\ldots ,m-1\), where \(m \le n+1\) is the number of distinct breakpoints. This operation takes \(O(n \log n)\) time. Since h is monotone, a binary search over the sorted breakpoints, in which each evaluation of h costs O(n), finds j such that \(h(l_j) \ge \alpha > h(l_{j+1})\) in \(O(n \log n)\) time. Since h is affine on \([l_j, l_{j+1}]\), a simple interpolation computed in constant time yields \(\bar{\mu }\) satisfying (37). We then use (36) to compute the projection. The overall time complexity is, therefore, \(O(n \log n)\).
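The procedure can be sketched as follows (NumPy; function names hypothetical). It sorts the breakpoints, runs a binary search on the monotone function h, interpolates on the final affine piece to obtain \(\bar{\mu }\), and then applies Moreau's identity in the form \((I + \alpha \partial \Vert \cdot \Vert _\infty )^{-1}(z) = z - \pi _{\alpha C}(z)\). It is a sketch of the breakpoint strategy described above, not a tuned implementation.

```python
import numpy as np


def project_l1_ball(z, radius):
    """Euclidean projection of z onto {x : ||x||_1 <= radius} via breakpoints of h."""
    z = np.asarray(z, dtype=float)
    if np.abs(z).sum() <= radius:
        return z.copy()

    def h(mu):                                   # h(mu) = ||shrink_1(z, mu)||_1
        return np.maximum(np.abs(z) - mu, 0.0).sum()

    l = np.unique(np.concatenate(([0.0], np.abs(z))))   # sorted breakpoints, O(n log n)
    lo, hi = 0, len(l) - 1                              # invariant: h(l[lo]) >= radius > h(l[hi])
    while hi - lo > 1:                                  # binary search: O(log n) evaluations of h
        mid = (lo + hi) // 2
        if h(l[mid]) >= radius:
            lo = mid
        else:
            hi = mid
    h_lo, h_hi = h(l[lo]), h(l[hi])
    # h is affine on [l[lo], l[hi]]: interpolate to get h(mu_bar) = radius
    mu_bar = l[lo] + (h_lo - radius) * (l[hi] - l[lo]) / (h_lo - h_hi)
    return np.sign(z) * np.maximum(np.abs(z) - mu_bar, 0.0)


def prox_linf(z, alpha):
    """(I + alpha d||.||_inf)^{-1}(z) = z - proj_{alpha C}(z), C the unit l1 ball (Moreau)."""
    z = np.asarray(z, dtype=float)
    return z - project_l1_ball(z, alpha)
```

For instance, with z = (3, −1, 0.5) and α = 1 the multiplier is \(\bar{\mu } = 2\), the projection is (1, 0, 0), and the proximal point is (2, −1, 0.5), i.e., z clipped at level 2.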

4.3 The case \(\Vert \cdot \Vert _A\) and projection on an ellipsoid

We follow the same approach as for \(\Vert \cdot \Vert _\infty \). We consider \(f = \Vert \cdot \Vert _A = \sqrt{\langle \cdot , A \cdot \rangle }\), which is a norm since A is assumed to be symmetric positive definite. Its dual norm is \(\Vert \cdot \Vert _{A ^{-1}}\) [19, Prop. 4.2, p. 19]. Thus, \(\left( \Vert \cdot \Vert _{A}\right) ^* = \mathcal {I}_{\mathcal {E}_A}\) with \(\mathcal {E}_A\) defined by

Using Moreau’s identity (26), we only need to compute the projection \(\pi _{\mathcal {E}_{A}}(w)\) of \(w\in \mathbb {R}^n\) on the ellipsoid \(\mathcal {E}_{A} \). Note that we have \(\pi _{\mathcal {E}_{A}} (w) = P\, \pi _{\mathcal {E}_{D }} (P^{\dagger } w)\) where we recall that \(A=PDP^{\dagger }\) with D and P a diagonal and orthogonal matrix, respectively. Thus, we only describe the algorithm for the projection on an ellipsoid involving positive definite diagonal matrices.

To simplify notation, we set \(d_i = D_{ii}\) for \(i=1,\ldots ,n\). We consider the ellipsoid \(\mathcal {E}_D\) defined by

Let \(w \notin \mathcal {E}_D\). One can easily show (see [27, Exercise III.8] for instance) that \(\pi _{\mathcal {E}_D}(w)\) satisfies, for any \(i=1,\ldots ,n\),

where the Lagrange multiplier \(\bar{\mu } > 0\) is the unique solution of \(\sum _{i=1}^n \frac{d_i^2 w_i^2}{(d_i^2 + \mu )^2} = 1\). We find \(\bar{\mu }\) by minimizing the convex function \([0,+\infty ) \ni \mu \mapsto \sum _{i=1}^{n} d_i ^2 w_i ^2 (d_i ^2 + \mu )^{-1} + \mu \), whose derivative vanishes exactly at \(\bar{\mu }\), using Newton’s method, which generates a sequence \((\mu _k)_{k \in \mathbb {N}}\) converging to \(\bar{\mu }\). We set the initial value to \(\mu _0 = 0\) and stop the Newton iterations at the first k satisfying \(|\mu _{k+1} - \mu _{k}| \le 10^{-8}\). Once we have the value of \(\bar{\mu }\), we use (38) to obtain the approximate projection.
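A minimal sketch of this Newton iteration (NumPy; function name hypothetical). Since the displayed definition of \(\mathcal {E}_D\) is not reproduced here, it assumes \(\mathcal {E}_D = \{x \;|\; \sum _i x_i^2/d_i^2 \le 1\}\) and that (38) reads \(x_i = d_i^2 w_i/(d_i^2+\bar{\mu })\), which is what the stated equation for \(\bar{\mu }\) corresponds to. Starting from \(\mu _0 = 0\), the iterates increase monotonically to \(\bar{\mu }\), because the derivative being zeroed is concave and increasing in \(\mu \).

```python
import numpy as np


def project_ellipsoid_diag(w, d, tol=1e-8, max_iter=100):
    """Project w on {x : sum_i x_i^2 / d_i^2 <= 1} (assumed form of E_D).

    For w outside, x_i = d_i^2 w_i / (d_i^2 + mu_bar), where mu_bar > 0 zeroes the
    derivative of the convex function mu -> sum_i d_i^2 w_i^2 / (d_i^2 + mu) + mu.
    """
    w = np.asarray(w, dtype=float)
    d2 = np.asarray(d, dtype=float) ** 2
    if np.sum(w**2 / d2) <= 1.0:
        return w.copy()                          # already inside: the projection is w
    mu = 0.0                                     # mu_0 = 0, as in the text
    for _ in range(max_iter):
        r = d2 + mu
        first = 1.0 - np.sum(d2 * w**2 / r**2)   # first derivative
        second = 2.0 * np.sum(d2 * w**2 / r**3)  # second derivative (> 0)
        mu_next = mu - first / second            # Newton step
        done = abs(mu_next - mu) <= tol          # stopping rule |mu_{k+1} - mu_k| <= tol
        mu = mu_next
        if done:
            break
    return d2 * w / (d2 + mu)
```

For a nondiagonal A, the same routine is applied to \(P^{\dagger } w\), and the result is mapped back with P.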

4.4 The cases \(\frac{1}{2}\Vert \cdot \Vert _1^2\) and \(\frac{1}{2}\Vert \cdot \Vert _\infty ^2\)

First, we consider the case of \(\frac{1}{2}\Vert \cdot \Vert _1^2\). We have for any \(\alpha >0\) and any \(z\in \mathbb {R}^n\)

Thus, assuming there exists \(\bar{\beta } \ge 0\) such that

we have for any \(\alpha >0\) and any \(z\in \mathbb {R}^n\)

The existence of \(\bar{\beta }\) and an algorithm to compute it follow. Let us assume that \(z\ne 0\) (otherwise, \(\bar{\beta }=0\) works and the solution is 0). Then, consider the function \(g:\left[ 0,\Vert z\Vert _1\right] \rightarrow \mathbb {R}\) defined by

It is continuous, and \(g(0)= \alpha \Vert z\Vert _1\) while \(g\left( \Vert z\Vert _1\right) = -\Vert z\Vert _1\). The intermediate value theorem tells us that there exists \(\bar{\beta }\) such that \(g(\bar{\beta }) = 0\), that is, satisfying (39).

The function g is decreasing and piecewise affine, and its breakpoints (i.e., the points where g is not differentiable) are \(B= \{0\} \cup \bigcup _{i=1,\ldots ,n} \{ |z_i|\}\). We now proceed as in the case of \(\Vert \cdot \Vert _\infty \). We denote by \((l_1,\ldots , l_{m}) \in B^{m}\) the breakpoints sorted in increasing order, i.e., such that \(l_i < l_{i+1}\) for \(i=1,\ldots ,m-1\), where \(m \le n+1\) is the number of distinct breakpoints. We use a binary search to find two consecutive breakpoints \(l_i\) and \(l_{i+1}\) such that \(g(l_i) \;\ge \; 0 \;>\; g(l_{i+1})\). Since g is affine on \([l_i, l_{i+1}]\), a simple interpolation yields the value \(\bar{\beta }\). We then compute \(\left( I + {\frac{\alpha }{2} \partial \Vert \cdot \Vert _1^2} \right) ^{-1}(z)\) using (40).

We now consider the case \(\frac{1}{2}\Vert \cdot \Vert _\infty ^2\). We have (for instance [19, Prop. 4.2, p. 19])

Then, Moreau’s identity (25) yields for any \(\alpha >0 \) and for any \(z \in \mathbb {R}^n\)

which can be easily computed using the above algorithm for evaluating \(\left( I + {\frac{\alpha }{2}\,\partial \Vert \!\cdot \!\Vert _1^2} \right) ^{-1}(z)\).
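Both proximal maps of this subsection can be sketched together (NumPy; function names hypothetical). We take \(g(\beta ) = \alpha \Vert \mathrm{shrink}_1(z,\beta )\Vert _1 - \beta \), an assumption consistent with the values \(g(0) = \alpha \Vert z\Vert _1\) and \(g(\Vert z\Vert _1) = -\Vert z\Vert _1\) given above and with the optimality condition \(w = \mathrm{shrink}_1(z, \alpha \Vert w\Vert _1)\). The \(\frac{1}{2}\Vert \cdot \Vert _\infty ^2\) case then uses Moreau's identity in the form \(\mathrm{prox}_{\alpha f}(z) = z - \alpha \, \mathrm{prox}_{f^*/\alpha }(z/\alpha )\) with \((\frac{1}{2}\Vert \cdot \Vert _\infty ^2)^* = \frac{1}{2}\Vert \cdot \Vert _1^2\).

```python
import numpy as np


def prox_half_l1_squared(z, alpha):
    """(I + (alpha/2) d||.||_1^2)^{-1}(z) = shrink_1(z, beta_bar).

    beta_bar is the root of g(beta) = alpha * ||shrink_1(z, beta)||_1 - beta, a
    decreasing piecewise affine function with breakpoints {0} U {|z_i|}.
    """
    z = np.asarray(z, dtype=float)
    if not np.any(z):
        return z.copy()                          # z = 0: the solution is 0

    def shrink1(beta):
        return np.sign(z) * np.maximum(np.abs(z) - beta, 0.0)

    def g(beta):
        return alpha * np.abs(shrink1(beta)).sum() - beta

    l = np.unique(np.concatenate(([0.0], np.abs(z))))   # sorted breakpoints
    lo, hi = 0, len(l) - 1                              # invariant: g(l[lo]) >= 0 > g(l[hi])
    while hi - lo > 1:                                  # binary search
        mid = (lo + hi) // 2
        if g(l[mid]) >= 0.0:
            lo = mid
        else:
            hi = mid
    g_lo, g_hi = g(l[lo]), g(l[hi])
    beta_bar = l[lo] + g_lo * (l[hi] - l[lo]) / (g_lo - g_hi)  # g is affine here
    return shrink1(beta_bar)


def prox_half_linf_squared(z, alpha):
    """(I + (alpha/2) d||.||_inf^2)^{-1}(z) via Moreau's identity.

    prox_{alpha f}(z) = z - alpha * prox_{f*/alpha}(z / alpha), with
    f = ||.||_inf^2 / 2 and f* = ||.||_1^2 / 2.
    """
    z = np.asarray(z, dtype=float)
    return z - alpha * prox_half_l1_squared(z / alpha, 1.0 / alpha)
```

For example, with z = (3, 1) and α = 1 we get \(\bar{\beta } = 1.5\), so the first map returns (1.5, 0) and the second returns (1.5, 1).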

4.5 Time results and illustrations

We now give numerical results for several Hamiltonians and initial data. We present timings on a standard laptop using a single core, which show that our approach evaluates HJ PDE solutions very rapidly. We also present timings on a 16-core computer to show that our approach scales very well, as well as plots that depict the solutions of some HJ PDEs.

We recall that we consider the following Hamiltonians

- \(H = \Vert \cdot \Vert _p\) for \(p = 1, 2, \infty \),
- \(H = \sqrt{\langle \cdot , D \cdot \rangle }\) with D a diagonal positive definite matrix,
- \(H = \sqrt{\langle \cdot , A \cdot \rangle }\) with A a symmetric positive definite matrix,

and the following initial data

- \(J = \frac{1}{2}\Vert \cdot \Vert _p^2\) for \(p = 1, 2, \infty \),
- \(J = \frac{1}{2}\langle \cdot , D^{-1}\cdot \rangle \) with D a positive definite diagonal matrix,

where the matrices D and A are defined as follows: D is the diagonal matrix of size \(n\times n\) defined by \(D_{ii} = 1 + \frac{i-1}{n-1}\) for \(i=1,\ldots ,n\), and the symmetric positive definite matrix A of size \(n\times n\) is defined by \(A_{ii} = 2\) for \(i=1,\ldots ,n\) and \(A_{ij} = 1 \) for \(i,j=1,\ldots ,n\) with \(i\ne j\).
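The test matrices are easy to build; a short NumPy sketch follows (function name hypothetical). Since \(A = I_n + \mathbf {1}\mathbf {1}^{\dagger }\), its eigenvalues are \(n+1\) (eigenvector \(\mathbf {1}\)) and 1 (multiplicity \(n-1\)), which confirms that A is symmetric positive definite and provides the spectral decomposition \(A = P\,\mathrm{diag}(d)\,P^{\dagger }\) used by the proximal maps above.

```python
import numpy as np


def make_test_matrices(n):
    """D_ii = 1 + (i - 1)/(n - 1) and A = I_n + (all-ones matrix), for n >= 2."""
    D = np.diag(1.0 + np.arange(n) / (n - 1))
    A = np.eye(n) + np.ones((n, n))          # 2 on the diagonal, 1 elsewhere
    return D, A


n = 8
D, A = make_test_matrices(n)
# eigh returns ascending eigenvalues d and orthonormal eigenvectors P: A = P diag(d) P^T.
# Here d = (1, ..., 1, n + 1), so A is symmetric positive definite.
d, P = np.linalg.eigh(A)
```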

All computations are performed using IEEE double-precision floating-point arithmetic with denormalized numbers disabled. The quantities (x, t) are drawn uniformly in \([-10,10]^n \times [0,10]\). We report the average time to evaluate a solution over 1,000,000 runs.

We set \(\lambda =1\) in the split Bregman algorithm (21)–(23) and stop the iterations when the following stopping criterion is met: \(\Vert v^k - v^{k-1}\Vert _2^2 \le 10^{-8}\) and \(\Vert d^k - d^{k-1}\Vert _2^2 \le 10^{-8}\) and \(\Vert d^k - v^{k}\Vert _2^2 \le 10^{-8}\).
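A minimal sketch of a full evaluation of \(\varphi (x,t) = -\min _v\{J^*(v) + tH(v) - \langle x,v\rangle \}\) and of its gradient, for \(H = \Vert \cdot \Vert _1\) and \(J = \frac{1}{2}\Vert \cdot \Vert _2^2\) (so that \(J^* = \frac{1}{2}\Vert \cdot \Vert _2^2\)), with \(\lambda = 1\) and the three stopping tests above. The split Bregman steps are written in the standard scaled-ADMM form with the splitting v = d; the exact signs and scaling of (21)–(23) may differ, and the function name is hypothetical. For this pair the exact solution is \(\varphi (x,t) = \frac{1}{2}\sum _i \max (|x_i|-t,0)^2\), which provides a convenient check.

```python
import numpy as np


def hopf_l1_split_bregman(x, t, lam=1.0, tol=1e-8, max_iter=100_000):
    """phi(x, t) and grad_x phi(x, t) for H = ||.||_1 and J = ||.||_2^2 / 2.

    Split Bregman on min_v J*(v) + t ||d||_1 - <x, v> subject to v = d,
    with J*(v) = ||v||_2^2 / 2; stops with the three criteria of the text.
    """
    x = np.asarray(x, dtype=float)
    v = np.zeros_like(x)
    d = np.zeros_like(x)
    b = np.zeros_like(x)
    for _ in range(max_iter):
        v_new = (x + lam * (d - b)) / (1.0 + lam)                  # v-step (closed form)
        u = v_new + b
        d_new = np.sign(u) * np.maximum(np.abs(u) - t / lam, 0.0)  # d-step: shrink_1
        b = b + v_new - d_new                                      # Bregman update
        stop = (np.sum((v_new - v) ** 2) <= tol
                and np.sum((d_new - d) ** 2) <= tol
                and np.sum((d_new - v_new) ** 2) <= tol)
        v, d = v_new, d_new
        if stop:
            break
    phi = -(0.5 * (v @ v) + t * np.abs(v).sum() - x @ v)
    return phi, v   # v approximates grad_x phi(x, t)


x, t = np.array([3.0, -0.5, 1.5]), 1.0
phi, grad = hopf_l1_split_bregman(x, t)
exact = 0.5 * np.sum(np.maximum(np.abs(x) - t, 0.0) ** 2)   # = 2.125
```

The exact gradient here is \(\mathrm{shrink}_1(x,t) = (2, 0, 0.5)\); with these tolerances the computed values agree to roughly \(10^{-4}\).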

We first carry out the numerical experiments on an Intel Core i5-5300U laptop running at 2.3 GHz. The implementation here is single-threaded, i.e., only one core is used. Tables 1, 2, 3 and 4 present timings for dimensions \(n=4,8,12,16\) with initial data \(J = \frac{1}{2}\Vert \cdot \Vert _2^2\), \(J = \frac{1}{2}\Vert \cdot \Vert _\infty ^2\), \(J = \frac{1}{2}\Vert \cdot \Vert _1^2\) and \(J = \frac{1}{2}\langle \cdot , D \cdot \rangle \), respectively. We see that it takes about \(10^{-8}\) to \(10^{-4}\) s per evaluation of the solution.

We now consider experiments carried out on a computer with two Intel Xeon E5-2690 processors running at 2.90 GHz, each with 8 cores. Table 5 presents the average time to compute the solution with Hamiltonian \(H=\Vert \cdot \Vert _\infty \) and initial data \(J=\Vert \cdot \Vert _1^2\) for several dimensions and various numbers of cores. We see that our approach scales very well. This is because our algorithm requires little memory, which easily fits in the L1 cache of each core; therefore, the cores do not compete for resources. This suggests that our approach is suitable for low-energy embedded systems.

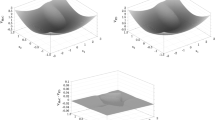

We now consider solutions of HJ PDEs in dimension \(n=8\) on a two-dimensional grid. We evaluate \(\phi (x_1, x_2,0,0,0,0,0,0)\) with \(x_i \in \cup _{k=0,\ldots ,99}\{-20 + k \frac{40}{99} \}\) for \(i=1,2\). Figures 1, 2 and 3 depict the solutions at various times with initial data \(J=\frac{1}{2}\Vert \cdot \Vert _\infty ^2\), \(J=\frac{1}{2}\Vert \cdot \Vert _1^2\) and \(J=\frac{1}{2}\Vert \cdot \Vert _1^2\), and with Hamiltonians \(H= \Vert \cdot \Vert _2\), \(H = \Vert \cdot \Vert _1\) and \(H=\sqrt{\langle \cdot , D \cdot \rangle }\), respectively. Figures 4 and 5 illustrate the max/min-plus algebra results described in Sect. 2.2. Figure 4 depicts the HJ solution at various times for the initial data \(J= \min {}\left( \frac{1}{2}\Vert \cdot \Vert _2^2 - \langle b, \cdot \rangle , \frac{1}{2}\Vert \cdot \Vert _2^2 + \langle b, \cdot \rangle \right) \) with \(b=(1,1,1,1,1,1,1,1)^{\dagger }\), and \(H=\Vert \cdot \Vert _1\). Figure 5 depicts the HJ solution at various times with \(J= \frac{1}{2}\Vert \cdot \Vert _2^2\) and \(H=\min {}\left( \Vert \cdot \Vert _1, \sqrt{\langle \cdot , \frac{4}{3}D \cdot \rangle } \right) \).

Evaluation of the solution \(\phi ((x_1,x_2,0,0,0,0,0,0)^{\dagger },t)\) of the HJ PDE with initial data \(J=\frac{1}{2}\Vert \cdot \Vert _\infty ^2\) and Hamiltonian \(H=\Vert \cdot \Vert _2\) for \((x_1,x_2) \,\in \, [-20,20]^2\) for different times t. Plots for \(t=0, 5,10,15\) are respectively depicted in a–d. Level lines at multiples of 5 are superimposed on the plots

Evaluation of the solution \(\phi ((x_1,x_2,0,0,0,0,0,0)^{\dagger },t)\) of the HJ PDE with initial data \(J=\frac{1}{2}\Vert \cdot \Vert _1^2\) and Hamiltonian \(H=\Vert \cdot \Vert _1\) for \((x_1,x_2) \,\in \, [-20,20]^2\) for different times t. Plots for \(t=0, 5,10,15\) are respectively depicted in a–d. Level lines at multiples of 20 are superimposed on the plots

Evaluation of the solution \(\phi ((x_1,x_2,0,0,0,0,0,0)^{\dagger },t)\) of the HJ PDE with initial data \(J=\frac{1}{2}\Vert \cdot \Vert _1^2\) and Hamiltonian \(H=\Vert \cdot \Vert _D\), for \((x_1,x_2) \,\in \, [-20,20]^2\) for different times t. Plots for \(t=0, 5,10,15\) are respectively depicted in a–d. Level lines at multiples of 20 are superimposed on the plots

Evaluation of the solution \(\phi ((x_1,x_2,0,0,0,0,0,0)^{\dagger },t)\) of the HJ PDE with initial data \(J= \min {}\left( \frac{1}{2}\Vert \cdot \Vert _2^2 - \langle b, \cdot \rangle , \frac{1}{2}\Vert \cdot \Vert _2^2 + \langle b, \cdot \rangle \right) \) with \(b=(1,1,1,1,1,1,1,1)^{\dagger }\) and Hamiltonian \(H=\Vert \cdot \Vert _1\), for \((x_1,x_2) \,\in \, [-20,20]^2\) for different times t. Plots for \(t=0, 5,10,15\) are respectively depicted in a–d. Level lines at multiples of 15 are superimposed on the plots

Evaluation of the solution \(\phi ((x_1,x_2,0,0,0,0,0,0)^{\dagger },t)\) of the HJ PDE with initial data \(J=\frac{1}{2}\Vert \cdot \Vert _1^2\) and Hamiltonian \(H=\min {}\left( \Vert \cdot \Vert _1, \sqrt{\langle \cdot , \frac{4}{3}D \cdot \rangle } \right) \), for \((x_1,x_2) \,\in \, [-20,20]^2\) for different times t. Plots for \(t=2, 5,9,12\) are respectively depicted in a–d. Level lines at multiples of 15 are superimposed on the plots

5 Conclusion

We have designed algorithms that enable us to solve certain Hamilton–Jacobi equations very rapidly, including equations arising in control theory whose Hamiltonians are convex and positively homogeneous of degree 1. Our algorithms not only evaluate the solution but also compute its gradient. We were motivated by ideas coming from compressed sensing: we borrowed algorithms devised to solve \(\ell _1\) regularized problems, which are known to converge rapidly. We appear to have extended this fast convergence to convex, positively 1-homogeneous regularized problems.

There are no grids involved. Instead of a complexity that is exponential in the dimension of the problem, which is typical of grid-based methods, ours appears to be polynomial in the dimension with very small constants. We can evaluate the solution on a laptop in about \(10^{-4}{-}10^{-8}\) s per evaluation for fairly high dimensions. Our algorithm requires very little memory and is totally parallelizable, which suggests that it is suitable for low-energy embedded systems. We have chosen to restrict the numerical experiments to norm-based Hamiltonians, and we emphasize that our approach naturally extends to more elaborate positively 1-homogeneous Hamiltonians (for instance, using the min/max-plus algebra results, as we did above).

As an important step in this procedure, we have also derived an equally fast method to find, for a given point, a closest point in \(\Omega = \cup _{i=1}^{k}\Omega _i\), a finite union of compact convex sets \(\Omega _i\) that has a nonempty interior.

We can also solve certain so-called fast marching [49] and fast sweeping [47] problems equally rapidly in high dimensions. If we wish to find \(\psi :\mathbb {R}^n\rightarrow \mathbb {R}\) with, say, \(\psi =0\) on the boundary of a set \(\Omega \) as defined above, satisfying

then, we can solve for \(u:\mathbb {R}^n\times [0,+\infty ) \rightarrow \mathbb {R}\)

with initial data

and locate the zero level set of \(u(\cdot , t)\) for any given \(t>0\). Indeed, any \(x \in \{y \in \mathbb {R}^n\;|\; u(y,t) =0\}\) satisfies \(\psi (x) = t\).

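Of course, the same approach could be used for any convex, positively 1-homogeneous Hamiltonian H (instead of \(\Vert \cdot \Vert _2\)), e.g., \(H = \Vert \cdot \Vert _1\). Since the \(\ell _1\) norm is dual to the \(\ell _\infty \) norm, this choice gives results related to computing the \(\ell _\infty \) distance, while \(H = \Vert \cdot \Vert _\infty \) is related to the Manhattan (\(\ell _1\)) distance.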

We expect to extend our work as follows:

1. We will do experiments involving linear controls, allowing dependence on x and \(t>0\), while the Hamiltonian \((p,x,t) \mapsto H(p,x,t)\) remains convex and positively 1-homogeneous in p. The procedure was described in Sect. 2.

2. We will extend our fast computation of the projection in several ways. We will consider in detail the case of polyhedral regions defined by the intersection of the sets \(\Omega _i = \{x\in \mathbb {R}^n\;|\; \langle a_i, x \rangle - b_i \,\le \,0\}\), with \(a_i \in \mathbb {R}^n\), \(b_i \in \mathbb {R}\) and \(\Vert a_i\Vert _2=1\), for \(i=1,\ldots ,k\). This is of interest in linear programming (LP) and related problems. We expect to develop alternative approaches to several issues arising in LP, including rapidly determining whether a feasible point exists and locating one.

3. We will consider nonconvex but positively 1-homogeneous Hamiltonians. These arise in differential games, as well as in the problem of finding the closest point on the boundary of a given compact convex set \(\Omega \) to an arbitrary point in the interior of \(\Omega \).

4. As an example of nonconvex Hamiltonians, we consider the following problems arising in differential games [21, 36, 37]. We need to solve the following problem for any \(x\in \mathbb {R}^n\) and any \(\alpha >0\):

$$\begin{aligned} \min _{y\in \mathbb {R}^n} \left\{ \frac{1}{2} \Vert y-x\Vert _2^2 - \alpha \Vert y\Vert _1\right\} . \end{aligned}$$It is easy to see that a minimizer is given by the \(\mathrm{stretch}_1\) operator, which we define for any \(i=1,\ldots ,n\) as:

$$\begin{aligned} \left( \hbox {stretch}_1 (x,\alpha )\right) _i = {\left\{ \begin{array}{ll} x_i+\alpha &{}\quad \hbox {if }\; \ x_i > 0, \\ \pm \alpha &{} \quad \hbox {if } \; x_i=0,\\ x_i-\alpha &{}\quad \hbox {if }\; \ x_i < 0. \end{array}\right. } \end{aligned}$$(41) We note that the discontinuity of the minimizer (which is multivalued at \(x_i = 0\)) will lead to a jump in the derivatives \((x,t)\mapsto \frac{\partial \varphi }{\partial x_i}(x,t)\). This is no surprise, given that the interface associated with the equation

$$\begin{aligned} \frac{\partial \varphi }{\partial t}(x,t) - \sum _{i=1}^n \left| \frac{\partial \varphi }{\partial x_i}(x,t)\right| = 0, \end{aligned}$$and the initial data \(\varphi (x,0) = \frac{1}{2}\sum _{i=1}^n \frac{x_i^2}{a_i^2} - \frac{1}{2}\), whose zero level set is an ellipsoid, will move inwards, and characteristics will intersect. The solution \(\varphi (x,t)\) will remain locally Lipschitz continuous, even though a point inside the ellipsoid may be equally close to two points on the boundary of the original ellipsoid in the \(\ell _\infty \) metric, which is the metric associated by duality with the \(\ell _1\) norm in the Hamiltonian. So we are solving

$$\begin{aligned} \varphi (x,t)= & {} -\frac{1}{2} - \min _{v\in \mathbb {R}^n}\left\{ \frac{1}{2} \sum _{i=1}^n a_i^2 v_i^2 - t \sum _{i=1}^n |v_i| - \langle x,v\rangle \right\} \\= & {} -\frac{1}{2} + \frac{1}{2} \sum _{i=1}^n \frac{x_i^2}{a_i^2} - \min _{v\in \mathbb {R}^n} \left\{ \frac{1}{2} \sum _{i=1}^n a_i^2 \left( v_i - \frac{x_i}{a_i^2}\right) ^2 - t \sum _{i=1}^n |v_i|\right\} \\= & {} -\frac{1}{2} + \frac{1}{2} \sum _{i=1}^n \frac{(|x_i| + t)^2}{a_i^2} \end{aligned}$$The zero level set disappears when \(t^2 \sum _{i=1}^n a_i^{-2} > 1\), i.e., as soon as the cube \([-t,t]^n\) no longer fits inside the original ellipsoid, as it should.

For completeness, we also consider the nonconvex optimization problem

$$\begin{aligned} \min _{v\in \mathbb {R}^n} \left\{ \frac{1}{2} \Vert v-x\Vert _2^2 - \alpha \,\Vert v\Vert _2\right\} . \end{aligned}$$Its minimizer is given by the \(\mathrm{stretch}_2\) operator formally defined by

$$\begin{aligned} \mathrm{stretch}_2(x, \alpha ) = {\left\{ \begin{array}{ll} x + \alpha \frac{x}{\Vert x\Vert _2} &{}\quad \mathrm{ if }\; x\ne 0,\\ \alpha \theta &{}\quad \mathrm{ with }\; \Vert \theta \Vert _2=1 \mathrm{ if } x=0. \end{array}\right. } \end{aligned}$$This formula, although multivalued at \(x=0\), is useful to solve the following problem: Move the unit sphere inwards with normal velocity 1. The solution comes from finding the zero level set of

$$\begin{aligned} \varphi (x,t)= & {} -\min _{v\in \mathbb {R}^n} \left\{ \frac{\Vert v\Vert _2^2}{2} - t\Vert v\Vert _2 - \langle x,v\rangle \right\} -\frac{1}{2}\\= & {} -\min _{v\in \mathbb {R}^n} \left\{ \frac{1}{2} \Vert v-x\Vert _2^2 - t\Vert v\Vert _2\right\} +\frac{1}{2} \left( \Vert x\Vert _2^2 - 1\right) \\= & {} -\frac{1}{2} t^2 + t\Vert x\Vert _2 \left( 1 + \frac{t}{\Vert x\Vert _2}\right) + \frac{1}{2} \left( \Vert x\Vert _2^2 - 1\right) \\= & {} \frac{1}{2} \left( \Vert x\Vert _2 + t\right) ^2 - \frac{1}{2} \end{aligned}$$and, of course, the zero level set is the set of x satisfying \(\Vert x\Vert _2 = 1-t\) if \(t \le 1\), and the zero level set vanishes for \(t > 1\).
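The two closed forms above can be checked numerically. The sketch below (NumPy; function names hypothetical) evaluates each Hopf formula through the stretch operators and compares the result with the final expressions. Where \(x_i = 0\) it picks the minimizer \(+\alpha \) (both \(\pm \alpha \) minimize, whereas 0 does not), and for the ellipsoid it assumes the initial data \(\frac{1}{2}\sum _i x_i^2/a_i^2 - \frac{1}{2}\) implied by the first line of that derivation.

```python
import numpy as np


def stretch1(x, alpha):
    """A minimizer of ||y - x||_2^2 / 2 - alpha ||y||_1 (alpha may be a vector).

    Where x_i = 0 both +alpha_i and -alpha_i are minimizers; we pick +alpha_i.
    """
    x = np.asarray(x, dtype=float)
    return x + alpha * np.where(x >= 0.0, 1.0, -1.0)


def stretch2(x, alpha):
    """A minimizer of ||v - x||_2^2 / 2 - alpha ||v||_2 (any unit direction if x = 0)."""
    x = np.asarray(x, dtype=float)
    nx = np.linalg.norm(x)
    if nx == 0.0:
        theta = np.zeros_like(x)
        theta[0] = 1.0
        return alpha * theta
    return x + alpha * x / nx


def phi_ellipsoid(x, t, a):
    """Hopf value for phi_t - sum_i |phi_{x_i}| = 0, phi(x, 0) = sum_i x_i^2 / (2 a_i^2) - 1/2."""
    x, a = np.asarray(x, dtype=float), np.asarray(a, dtype=float)
    c = x / a**2
    v = stretch1(c, t / a**2)  # minimizes a_i^2 (v_i - c_i)^2 / 2 - t |v_i| in each coordinate
    inner = np.sum(0.5 * a**2 * (v - c) ** 2 - t * np.abs(v))
    return -0.5 + 0.5 * np.sum(x**2 / a**2) - inner


def phi_sphere(x, t):
    """Hopf value for the unit sphere moving inwards with unit normal speed."""
    x = np.asarray(x, dtype=float)
    v = stretch2(x, t)
    return -(0.5 * np.sum((v - x) ** 2) - t * np.linalg.norm(v)) + 0.5 * (x @ x - 1.0)


x, a, t = np.array([0.5, -0.2, 0.0]), np.array([1.0, 2.0, 3.0]), 0.3
closed_form = -0.5 + 0.5 * np.sum((np.abs(x) + t) ** 2 / a**2)
```

Here `phi_ellipsoid(x, t, a)` agrees with `closed_form` (both equal −0.14375), and `phi_sphere` vanishes on \(\Vert x\Vert _2 = 1 - t\).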

References

Akian, M., Bapat, R., Gaubert, S.: Max-plus algebras. In: Hogben, L. (ed.) Handbook of Linear Algebra (Discrete Mathematics and Its Applications), Chapter 25, vol. 39. Chapman & Hall/CRC, Boca Raton (2006)

Aubin, J.-P., Cellina, A.: Differential Inclusions. Springer, Berlin (1984)

Bellman, R.: Adaptive Control Processes, A Guided Tour. Princeton University Press, Princeton (1961)

Bellman, R.: Dynamic Programming. Princeton University Press, Princeton (1957)

Brezis, H.: Opérateurs maximaux monotones et semi-groupes de contractions dans les espaces de Hilbert. North-Holland, Amsterdam (1973)

Candes, E.J., Romberg, J., Tao, T.: Robust uncertainty principles: exact signal reconstruction from highly incomplete frequency information. IEEE Trans. Inf. Theory 52(2), 489–509 (2006)

Chambolle, A., Darbon, J.: On total variation minimization and surface evolution using parametric maximum flows. Int. J. Comput. Vis. 84(3), 288–307 (2009)

Chambolle, A., Pock, T.: A first-order primal–dual algorithm for convex problems with applications to imaging. J. Math. Imaging Vis. 41(1), 120–145 (2011)

Cheng, L.T., Tsai, Y.H.: Redistancing by flow of time dependent eikonal equation. J. Comput. Phys. 227(8), 4002–4017 (2008)

Combettes, P.L., Pesquet, J.-C.: Proximal thresholding algorithm for minimization over orthonormal bases. SIAM J. Optim. 18(4), 1351–1376 (2007)

Crandall, M.G., Evans, L.C., Lions, P.-L.: Some properties of viscosity solutions of Hamilton–Jacobi equations. Trans. AMS 282(2), 487–502 (1984)

Crandall, M.G., Lions, P.-L.: Viscosity solutions of Hamilton–Jacobi equations. Trans. AMS 277(1), 1–42 (1983)

Darbon, J.: On convex finite-dimensional variational methods in imaging sciences, and Hamilton–Jacobi equations. SIAM J. Imaging Sci. 8(4), 2268–2293 (2015)

Daubechies, I., Defrise, M., De Mol, C.: An iterative thresholding algorithm for linear inverse problems with a sparsity constraint. Commun. Pure Appl. Math. 57(11), 1413–1457 (2004)

Dijkstra, E.W.: A note on two problems in connexion with graphs. Numer. Math. 1, 269–271 (1959)

Dolcetta, I.C.: Representations of solutions of Hamilton-Jacobi equations. In: Lupo, D., Pagani, C.D. (eds.) Nonlinear Equations: Methods, Models and Applications, pp. 79–90. Birkhäuser, Basel (2003)

Donoho, D.L.: Compressed sensing. IEEE Trans. Inf. Theory 52(4), 1289–1305 (2006)

Eckstein, J., Bertsekas, D.P.: On the Douglas–Rachford splitting method and the proximal point algorithm for maximal monotone operators. Math. Program. Ser. A 55(3), 293–318 (1992)

Ekeland, I., Temam, R.: Convex Analysis and Variational Problems. North-Holland, Amsterdam (1976)

Evans, L.C.: Partial Differential Equations, Graduate Studies in Mathematics, vol. 19. AMS, Providence (2010)

Evans, L.C., Souganidis, P.E.: Differential games and representation formulas for solutions of Hamilton–Jacobi–Isaacs equations. Indiana Univ. Math. J. 33, 773–797 (1984)

Figueiredo, M.A.T., Nowak, R.D.: Bayesian wavelet-based signal estimation using non-informative priors. In: Conference Record of the Thirty-Second Asilomar Conference on Signals, Systems and Computers, vol. 2, IEEE, pp. 1368–1373. Piscataway, NJ (1998)

Fleming, W.H.: Deterministic nonlinear filtering. Annali della Scuola Normale Superiore di Pisa - Classe di Scienze 23(3–4), 435–454 (1997)

Glowinski, R., Marrocco, A.: Sur l’approximation, par éléments finis d’ordre un, et la résolution, par pénalisation-dualité, d’une classe de problèmes de Dirichlet non linéaires. C.R. hebd. Séanc. Acad. Sci., Paris 278, série A, pp. 1649–1652 (1974)

Goldstein, T., Osher, S.: The split Bregman method for \(L_1\) regularized problems. SIAM J. Imaging Sci. 2(2), 323–343 (2009)

Hestenes, M.R.: Multiplier and gradient methods. J. Optim. Theory Appl. 4(3), 303–320 (1969)

Hiriart-Urruty, J.-B.: Optimisation et Analyse Convexe. Presses Universitaires de France, Paris (1998)

Hiriart-Urruty, J.-B., Lemaréchal, C.: Convex Analysis and Minimization Algorithms Part I. Springer, Heidelberg (1996)

Hiriart-Urruty, J.-B., Lemaréchal, C.: Convex Analysis and Minimization Algorithms Part II. Springer, Heidelberg (1996)

Hopf, E.: Generalized solutions of nonlinear equations of the first order. J. Math. Mech. 14, 951–973 (1965)

Hu, C., Shu, C.-W.: A discontinuous Galerkin finite element method for Hamilton–Jacobi equations. SIAM J. Sci. Comput. 21(2), 666–690 (1999)

Kurzhanski, A.B., Varaiya, P.: Dynamics and Control of Trajectory Tubes: Theory and Computation. Birkhäuser, Boston (2014)

Lions, P.-L., Mercier, B.: Splitting algorithms for the sum of two nonlinear operators. SIAM J. Numer. Anal. 16(6), 964–979 (1979)

Lions, P.-L., Rochet, J.-C.: Hopf formula and multitime Hamilton–Jacobi equations. Proc. Am. Math. Soc. 96(1), 79–84 (1986)

McEneaney, W.M.: Max-Plus Methods for Nonlinear Control and Estimation. Birkhäuser, Boston (2006)

Mitchell, I.M., Bayen, A.M., Tomlin, C.J.: A time dependent Hamilton–Jacobi formulation of reachable sets for continuous dynamic games. IEEE Trans. Autom. Control 50(7), 947–957 (2005)

Mitchell, I.M., Tomlin, C.J.: Overapproximating reachable sets by Hamilton–Jacobi projections. J. Sci. Comput. 19(1–3), 323–346 (2003)

Moreau, J.-J.: Proximité et dualité dans un espace hilbertien. Bull. Soc. Math. France 93, 273–299 (1965)

Oberman, A., Osher, S., Takei, R., Tsai, R.: Numerical methods for anisotropic mean curvature flow based on a discrete time variational formulation. Commun. Math. Sci. 9(3), 637–662 (2011)

Osher, S., Merriman, B.: The Wulff shape as the asymptotic limit of a growing crystalline interface. Asian J. Math. 1(3), 560–571 (1997)

Osher, S., Sethian, J.A.: Fronts propagating with curvature dependent speed: algorithms based on Hamilton–Jacobi formulations. J. Comput. Phys. 79(1), 12–49 (1988)

Osher, S., Shu, C.-W.: High order essentially nonoscillatory schemes for Hamilton–Jacobi equations. SIAM J. Numer. Anal. 28(4), 907–922 (1991)

Osher, S., Yin, W.: Error forgetting of Bregman iteration. J. Sci. Comput. 54(2), 684–695 (2013)

Rockafellar, R.T.: Convex Analysis. Princeton Landmarks in Mathematics. Princeton University Press, Princeton (1997). (reprint of the 1970 original, Princeton paperbacks)

Rockafellar, R.T.: Monotone operators and the proximal point algorithm. SIAM J. Control Optim. 14(5), 877–898 (1976)

Rockafellar, R.T.: Network Flows and Monotropic Optimization. Athena Scientific, Belmont (1998). (reprint of the 1984 original)

Tsai, Y.-H.R., Cheng, L.-T., Osher, S., Zhao, H.-K.: Fast sweeping algorithms for a class of Hamilton–Jacobi equations. SIAM J. Numer. Anal. 41(2), 673–694 (2003)

Teboulle, M.: Convergence of proximal-like algorithms. SIAM J. Optim. 7, 1069–1083 (1997)

Tsitsiklis, J.N.: Efficient algorithms for globally optimal trajectories. IEEE Trans. Autom. Control 40(9), 1528–1538 (1995)

Winkler, G.: Image Analysis, Random Fields and Dynamic Monte Carlo Methods. Applications of Mathematics, 2nd edn. Springer, Berlin (2006)

Yin, W., Osher, S., Goldfarb, D., Darbon, J.: Bregman iterative algorithms for \(\ell _1\) minimization with applications to compressed sensing. SIAM J. Imaging Sci. 1(1), 143–168 (2008)

Zhang, Y.-T., Shu, C.-W.: High order WENO schemes for Hamilton–Jacobi equations on triangular meshes. SIAM J. Sci. Comput. 24(3), 1005–1030 (2003)

Zhu, M., Chan, T.F.: An efficient primal–dual hybrid gradient algorithm for total variation image restoration. UCLA CAM report 08–34 (2008)

Author's contributions

JD and SO contributed equally to this work. Both authors read and approved the final manuscript.

Acknowledgements

The authors deeply thank Gary Hewer (Naval Air Weapon Center, China Lake) for fruitful discussions, for carefully reading drafts and for helping us improve the paper. This research was supported by ONR Grants N000141410683, N000141210838 and DOE Grant DE-SC00183838.

Competing interests

The authors declare that they have no competing interests.

Appendix: Gauge

Now suppose furthermore that we wish H to be nonnegative, i.e., \(H(p) \ge 0\) for any \(p \in \mathbb {R}^n\). Under this additional assumption, we shall see that the Hamiltonian H is not only the support function of C but also the gauge of a convex set that we will characterize. Let us define the gauge \(\gamma _D:\mathbb {R}^n\rightarrow \mathbb {R}\cup \{+\infty \}\) of a closed convex set D containing the origin [28, Def. 1.2.4, p. 202] by

\[ \gamma _D(x) = \inf \{ \lambda > 0 \,|\, x \in \lambda D\}, \]

where \(\gamma _D(x) = + \infty \) if \( x \notin \lambda D\) for all \(\lambda > 0\). By [28, Thm. 1.2.5 (i), p. 203], \(\gamma _D\) is lower semicontinuous, convex and positively 1-homogeneous. If \(0 \in \mathrm{int}\, D\), where \(\mathrm{int}\, D\) denotes the interior of the set D, then the gauge \(\gamma _D\) is finite everywhere [28, Thm. 1.2.5 (ii), p. 203], i.e., \(\gamma _D:\mathbb {R}^n\rightarrow \mathbb {R}\). Thus, taking \(H = \gamma _D\) satisfies our requirements. Note that if we further assume that D is symmetric (i.e., \(-d \in D\) for any \(d \in D\)), then \(\gamma _D\) is a seminorm. If in addition we wish \(H(p) > 0 \) for any \(p \in \mathbb {R}^n{\setminus } \{0\}\), then D has to be a compact set [28, Corollary 1.2.6, p. 204]. In the latter case, a symmetric D implies that \(\gamma _D\) is actually a norm.
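As a concrete illustration of this definition, the following Python sketch evaluates a gauge in two ways. It assumes, for illustration only, that D is a polytope written as \(D = \{x \,|\, a_i \cdot x \le 1,\ i = 1,\dots ,m\}\), so that the origin lies in its interior; the helper names are hypothetical. One function bisects on \(\lambda \) directly from the infimum in the definition, the other uses the closed form \(\gamma _D(x) = \max (0, \max _i a_i \cdot x)\), which holds for this class of polytopes. The symmetric compact example (the unit \(\ell _1\) ball) yields a norm, while the triangle is compact but not symmetric, so its gauge is positive away from the origin without being a norm.

```python
import numpy as np

# Hypothetical polytope D = {x | A @ x <= 1}: each row of A is one a_i.
# The right-hand side 1 > 0 puts the origin in the interior of D.

def in_polytope(A, x, tol=1e-12):
    """Membership test x in D, with a small tolerance for round-off."""
    return bool(np.all(A @ x <= 1.0 + tol))

def gauge_bisection(A, x, lam_max=1e6, iters=200):
    """gamma_D(x) = inf{lam > 0 | x in lam*D}, located by bisection on lam.

    x in lam*D  <=>  x / lam in D; since D is convex and contains the
    origin, this holds for every lam above the infimum.
    """
    if not in_polytope(A, x / lam_max):
        return np.inf  # no admissible lam up to lam_max: report +infinity
    lo, hi = 0.0, lam_max  # invariant: x / hi lies in D
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if in_polytope(A, x / mid):
            hi = mid
        else:
            lo = mid
    return hi

def gauge_closed_form(A, x):
    """For D = {x | A @ x <= 1}: gamma_D(x) = max(0, max_i a_i . x)."""
    return max(0.0, float(np.max(A @ x)))

# Symmetric compact D (unit l1 ball): the gauge is the l1 norm.
A_l1 = np.array([[1, 1], [1, -1], [-1, 1], [-1, -1]], dtype=float)
# Compact but non-symmetric triangle with vertices (1,1), (1,-2), (-2,1).
A_tri = np.array([[1, 0], [0, 1], [-1, -1]], dtype=float)

x = np.array([3.0, -4.0])
print(gauge_closed_form(A_l1, x), gauge_bisection(A_l1, x))  # both close to 7.0
print(gauge_closed_form(A_tri, np.array([1.0, 1.0])))    # 1.0
print(gauge_closed_form(A_tri, np.array([-1.0, -1.0])))  # 2.0, so not a norm
```

Bisection is used here only to mirror the infimum in the definition; for a polytope in this form the closed form is exact and costs a single matrix-vector product.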

We now describe the connections between the sets D and C, the gauge \(\gamma _D\), the support function of C and nonnegative, convex, positively 1-homogeneous Hamiltonians \(H:\mathbb {R}^n\rightarrow \mathbb {R}\). Given a closed convex set \(\Omega \) of \(\mathbb {R}^n\) containing the origin, we denote by \(\Omega ^\circ \) the polar set of \(\Omega \), defined by

\[ \Omega ^\circ = \{ s \in \mathbb {R}^n \,|\, \langle s, x\rangle \le 1 \text{ for all } x \in \Omega \}. \]

We have that \((\Omega ^\circ )^\circ = \Omega \). Using [28, Prop. 3.2.4, p. 223], the gauge \(\gamma _D\) is the support function of \(D^\circ \). In addition, [28, Corollary 3.2.5, p. 233] states that the support function of C is the gauge of \(C^\circ \). Since H is the support function of the closed convex set C and is nonnegative, C contains the origin; hence we can take \(D = C^\circ \), and H can be expressed as the gauge \(\gamma _D\). Also, using [28, Thm. 1.2.5(iii), p. 203], we have that \(C^\circ = \{ p \in \mathbb {R}^n\,|\, H(p) \le 1\}\).
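These identities can be checked numerically in a small case. The sketch below assumes, for illustration, that C is a polytope given by its vertices (the rows of a hypothetical array V) with the origin in its interior, so that \(H(p) = \max _j \langle v_j, p\rangle \) and \(C^\circ = \{p \,|\, \langle v_j, p\rangle \le 1 \text{ for all } j\}\); the function names are illustrative only. Taking C to be the unit \(\ell _1\) ball, H is the \(\ell _\infty \) norm, and the assertions confirm that H coincides with the gauge of \(C^\circ \) (computed by bisection from its definition) and that \(p / H(p)\) lies in \(\{p \,|\, H(p) \le 1\} = C^\circ \).

```python
import numpy as np

def support_function(V, p):
    """H(p) = sup_{x in C} <x, p>; for C = conv(rows of V) it is attained at a vertex."""
    return float(np.max(V @ p))

def in_polar(V, q, tol=1e-12):
    """q in C^o iff <v_j, q> <= 1 for every vertex v_j of C = conv(rows of V)."""
    return bool(np.all(V @ q <= 1.0 + tol))

def gauge_of_polar(V, p, lam_max=1e6, iters=200):
    """gamma_{C^o}(p) = inf{lam > 0 | p in lam*C^o}, located by bisection on lam."""
    if not in_polar(V, p / lam_max):
        return np.inf  # no admissible lam up to lam_max: report +infinity
    lo, hi = 0.0, lam_max  # invariant: p / hi lies in C^o
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if in_polar(V, p / mid):
            hi = mid
        else:
            lo = mid
    return hi

# C = unit l1 ball in R^3, whose vertices are +/- e_i; then H = ||.||_inf
# and C^o is the unit l_inf ball.
n = 3
V = np.vstack([np.eye(n), -np.eye(n)])
rng = np.random.default_rng(0)
for _ in range(5):
    p = rng.normal(size=n)
    H = support_function(V, p)
    assert np.isclose(H, np.max(np.abs(p)))     # support function = l_inf norm
    assert np.isclose(gauge_of_polar(V, p), H)  # support function of C = gauge of C^o
    assert in_polar(V, p / H)                   # p / H(p) is in {H <= 1} = C^o
```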

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Darbon, J., Osher, S. Algorithms for overcoming the curse of dimensionality for certain Hamilton–Jacobi equations arising in control theory and elsewhere. Res Math Sci 3, 19 (2016). https://doi.org/10.1186/s40687-016-0068-7
