Abstract

Background

Laboratory assistants in biology and medicine play a central role in the operation of laboratories in hospitals, research institutes, and industries. Their daily work routine is characterized by dealing with molecular structures/chemical substances (i.e. biochemistry) as well as cell cultures (i.e. cell biology). In both these fields of biochemistry and cell biology, laboratory assistants rely on knowledge about three laboratory tasks: responsible action, data management, and laboratory techniques. Focusing on these laboratory tasks, we developed a test instrument measuring the professional knowledge of prospective laboratory assistants (acronym: PROKLAS) about biochemistry and cell biology.

Methods

We designed a paper-and-pencil test measuring the professional knowledge of laboratory assistants required to fulfill daily laboratory tasks in biochemistry and cell biology. A sample of N = 284 Vocational Education and Training (VET) students [average age = 20.0 years (SD = 3.3)] was tested in a cross-sectional study. The sample comprised prospective biology laboratory assistants, biological technicians, and medical laboratory technicians.

Results

Confirmatory factor analysis (CFA) indicates that the test developed allows us to measure the professional knowledge of laboratory assistants in biochemistry and cell biology as two empirically separable constructs. CFA with covariates widely confirms the validity of PROKLAS in its respective subscales. Firstly, VET-related covariates predicted students' biochemistry and cell biology scores considerably better than the covariates related to general secondary school. Secondly, general biological knowledge predicted biochemistry and cell biology scores of PROKLAS. Finally, VET students’ self-efficacy in laboratory tasks and their opportunities to learn laboratory tasks are positively correlated with achievement in PROKLAS. However, we found a similar relationship for self-efficacy in English too.

Conclusion

Our analyses indicate that PROKLAS can be effectively used for summative and formative VET evaluation in assessing the professional knowledge of laboratory assistants in biochemistry and cell biology.


Introduction

Ever since fictitious shows like Crime Scene Investigation flooded television channels, everyone is aware of scientific laboratory assistants and forensic technicians working in laboratories who apply various scientific techniques to track down criminals. The popularity of these shows has impacted our perception of the abilities of laboratory assistants (Podlas 2006). While most of these shows are fictional, the professions of the scientific laboratory assistants in medicine and biology enacted are real. In this study, we focus on the professional knowledge of laboratory assistants in biology and medicine that is required to perform their daily laboratory work. Although we focus specifically on the German education system, the laboratory duties are similar across countries, and our study would be of interest to a wider audience.

Vocational Education and Training (VET) programs, like those to become a laboratory assistant, play an important role in training people outside university careers (Deissinger et al. 2011). They have been very successful in creating occupational opportunities for adolescents (Schmidt 2010; Shavit and Muller 2000). In Germany, 2.5 million students were enrolled across 330 different VET programs from 2013 to 2014 alone (Federal Statistical Office 2014). Recent efforts were initiated to compare and rank the quality of VET programs nationally and internationally, for example, in the National Quality Framework (NQF) and the European Quality Framework (EQF; Brockmann et al. 2011; Deissinger 2009). However, comparing international and national VET programs is difficult due to their lack of common standards for learning goals (Brockmann et al. 2011). To be able to compare VET programs, it is very important to first develop reliable instruments assessing the profession-specific requirements of the VET programs (Deissinger et al. 2011). We contribute to this effort with our newly developed instrument testing the professional knowledge of laboratory assistants. Recently, several educational research studies have started to assess the quality of VET programs, drawing attention to the construct of professional competences (Seeber 2014; Winther and Prenzel 2014). Professional competences are defined as the “…capability to perform; to use knowledge, skills and attitudes that are integrated in the professional repertoire of the individual…” (Mulder et al. 2006, p 23). They are described as work activity-oriented skills (in a broader sense) encompassing knowledge, skills (in a more specific sense), and attitudes (cf. KMK, Secretariat of the Standing Conference of the Ministers of Education and Cultural Affairs of the Länder in the Federal Republic of Germany 2011; Le Deist and Winterton 2005).

An important facet of professional competence is the professional knowledge acquired by the trainees. It is defined as the knowledge that people need to practice their profession, for example, to solve complex problems at the workplace (Kirschner 2013; Riedl 2003). Measuring professional knowledge is considered a suitable form of quality assessment for VET programs (Gschwendtner 2011; Nickolaus et al. 2012). Consequently, the first instruments for the evaluation of such programs addressed professional knowledge, e.g. for mechanical technicians (Abele et al. 2013) and for commercial business clerks (Achtenhagen and Winther 2014). However, to our knowledge, there are currently no validated instruments for VET programs in biology and medicine, despite the important role of laboratory assistants in hospitals, research institutes, and industries. To address this gap, we have developed a paper-and-pencil test (Professional Knowledge of Laboratory Assistants or PROKLAS) that allows for the assessment of professional knowledge of laboratory tasks in biochemistry and cell biology. We validated it in this study with N = 284 VET students. In this paper, we use the term “laboratory assistants” for “laboratory assistants in biology and medicine”, and “laboratory tasks” for “laboratory tasks in biochemistry and cell biology”.

Background

Common tasks of laboratory assistants in biology and medicine

Laboratory assistants are trained to work with complex biological systems performing technical or diagnostic tests in medical and scientific laboratories (Barley and Bechky 1994). Their duties include performing laboratory equipment maintenance and repair, operating instruments, and conducting experiments (Barley and Bechky 1994). The first laboratory work standard, the Good Laboratory Practice (GLP), was issued in the 1970s by the Food and Drug Administration (FDA) in the United States for laboratory work procedures in scientific and clinical research areas. The GLP standards were particularly introduced to reduce safety concerns in laboratories. In 1997, the GLP was internationally set as a standard by the Organization for Economic Cooperation and Development (OECD 1998). In 2003, the Good Clinical Laboratory Practice (GCLP) was introduced to extend the standards of GLP to the specialized clinical research laboratories worldwide (Ezzelle et al. 2008). The GLP and GCLP present guidelines for personnel and facility organization, quality assurance, lab equipment, and Standard Operating Procedures for standardized documentations (Cooper-Hannan et al. 1999).

When conceptualizing and designing our test instrument, we compared GLP and GCLP standards to current curricula and governmental regulations of VET programs of laboratory assistants in biology and medicine. Based on these guidelines we identified two content fields, i.e. biochemistry and cell biology that are mandatory for training laboratory assistants. Each handling procedure provided in the guidelines was assigned to one of the two content fields. For example, procedures involving non-living matter (e.g. weighing substances, or extracting DNA) were grouped under biochemistry, while procedures involving living organisms (e.g. growing cells in flasks) were classified under cell biology. Within biochemistry and cell biology, we distinguished between the following three laboratory tasks of daily work routine: (1) responsible action, (2) data management, and (3) laboratory techniques.

Responsible Action (RA) referred, for example in biochemistry, to the use of the Globally Harmonized System of Classification and Labelling of Chemicals for potentially toxic chemicals according to the United Nations Economic Commission for Europe (UNECE 2011). Data Management (DM) included information about analyzing measurement data outputs or retrieving information about substances used in the laboratory. Laboratory Techniques (LT) included information on working procedures of experiments, such as conducting a polymerase chain reaction (PCR) in biochemistry or growing tissue cells in cell biology.

In summary, considering the laboratory practice standards and the VET program curricula, we identified procedures that are performed daily by laboratory assistants in biology as well as in medicine. These common procedures allowed us to use PROKLAS for the assessment of professional knowledge of laboratory tasks of VET students in biology and medicine (e.g., biological technicians, biological laboratory assistants, and medical laboratory assistants, respectively).

Training as a laboratory assistant

In Germany, students can choose to enroll in different VET programs after finishing the secondary level of general school education and before entering the labor market (Müskens et al. 2009). Typically, these programs can be grouped into two types: (a) the dual system integrating industry and school education (e.g. VET programs for laboratory assistants in biology), and (b) a purely school-based education system (e.g. VET programs for technical assistants in medicine or biology). In the dual system, the laboratory assistants are employed in the industry and are trained at two places, their industrial workplace and a vocational school. Being employed at an organization, the dual VET students are directly integrated in the daily work processes of the industry and receive first-hand training and work experience this way. Their training follows official guidelines of school curricula (Bundesgesetzesblatt (BGBL) 2009; Harms et al. 2013; KMK 2011). However, laboratory working duties can be limited by the productivity and the size of the company (Hansson 2007).

In contrast, students in the school-based system are taught in classrooms and school laboratories alone (Müskens et al. 2009), thereby receiving less first-hand work experience. Despite these regulation differences, both types of VET programs pursue the common goal to train people in acquiring practical working skills for their future profession (KMK 2011; Nickolaus et al. 2012). Their training includes final written examinations (e.g., in science and language) and practical tests on laboratory tasks following the official guidelines of training, examination regulation, and school curricula (e.g. APO-BK 2015; BGBl 2009), in accordance with the German Chambers of Commerce and Industry (IHK, Industrie- und Handelskammer). We present here a newly developed test instrument to test laboratory assistants independent of their VET type or discipline in biology or medicine. To achieve this goal, we focused on the common ground of professional knowledge about laboratory practice in our tested VET programs in biology and medicine.

Assessing professional knowledge and conceptualizing the test instrument PROKLAS

Focusing on laboratory practice in healthcare and medical professions, there are only a few instruments available testing the professional knowledge required to perform laboratory tasks. They mostly deal with emergency responses in hospitals by applying computer-assisted simulations or video sequences (Tavares et al. 2014; Wickler et al. 2013). Few other instruments focus on regular clinical work duties (e.g., for nurses) by standardized role play (e.g., Objective Structured Clinical Examination (OSCE); Selim et al. 2012), or through paper-and-pencil test formats for medical students at universities (Cowin et al. 2008). However, as mentioned above, there are no instruments available that measure the professional knowledge of prospective laboratory assistants. We addressed this gap by developing PROKLAS.

When conceptualizing PROKLAS, we took into account that laboratory assistants require a broad range of scientific knowledge (e.g., in zoology, biotechnology, microbiology, etc.) and as described above, have common guidelines in laboratory practices. As outlined before we identified two different fields in the laboratory practice which we termed “biochemistry” and “cell biology”. Within these two groups, we focused on the three laboratory tasks: (1) RA, including safety regulations important for health and environmental protection, (2) DM, including office-desk work duties like analyzing measurements, and (3) LT, including experimental work in the laboratory.

Since theoretical and factual knowledge alone (so-called declarative knowledge; De Jong and Ferguson-Hessler 1996; König 2010) is not sufficient for mastering the laboratory tasks, we also took the so-called procedural knowledge (Ambrosini and Bowman 2001; Eraut 2000; Miller 1990) into consideration when developing PROKLAS. The terms “declarative” and “procedural knowledge” were introduced in 1983 by Anderson in his adaptive control of thought (ACT) theory (Anderson 1983). Declarative knowledge is defined as factual knowledge or ‘knowing that’ (Shavelson et al. 2005), which is memorized as a set of knowledge about facts, events, objects and people, can be verbalized, and is not situation-specific (König 2010). In contrast, procedural knowledge is defined as ‘knowing how’ (Shavelson et al. 2005) or ‘how it works’ and ‘how to do it’ (Ambrosini and Bowman 2001), and can be seen as the knowledge on how to execute action sequences to solve problems (Rittle-Johnson and Star 2007). Our items for declarative knowledge assessed memorized information and definitions on substances or equipment needed in laboratories, whereas those for procedural knowledge involved identifying handling errors or questioning the correct procedures. Since procedural knowledge is found to evolve gradually from declarative knowledge in the training of novices (Anderson 1983; McPherson and Thomas 1989), we covered both, although procedural knowledge cannot be assessed without detailed information on each individual’s cognitive ability (McPherson et al. 1989). The complete model for the item development to assess the professional knowledge of laboratory assistants is shown in Fig. 1.

Research questions

With regard to the aims outlined above, we examined the following research questions in our study:

1. Are the two fields of biochemistry and cell biology (and the three laboratory tasks of RA, DM, and LT) reflected in VET students’ professional knowledge about laboratory tasks?

2. Is the VET students’ professional knowledge of laboratory tasks in biochemistry and cell biology coherently related to the academic background, general biological knowledge, self-efficacy, and perceived opportunities to learn?

Hypotheses

For our first research question, we hypothesized that our instrument measures the professional knowledge about laboratory tasks in biochemistry and cell biology. Though there are many similarities of daily work routine in laboratories, tasks within biochemistry and cell biology differ in their methods and working techniques. For example, laboratory tasks about DNA extraction or analytical screening are typical techniques in biochemistry, and differ from sampling or cultivation of organisms in cell biology (Gantner et al. 2011). Accordingly, on the one hand we expected that students’ responses can be explained by their knowledge in biochemistry and cell biology as two latent abilities. On the other hand, we similarly expected that students’ responses can be explained by their knowledge of RA, DM, and LT as three latent abilities.

For our second research question, we used personal characteristics of the tested VET students, namely: (a) their academic background, (b) their general biological knowledge, (c) their self-efficacy, and (d) their perceived opportunities to learn. Based on these four personal characteristics, we framed our hypotheses as follows:

Academic background: As school grades reflect cognitive abilities (Trapmann et al. 2007), we hypothesized that VET students with better school and VET grades will perform better in PROKLAS. We also hypothesized that a higher VET students’ training year (VET year) will show a positive effect on the test performance. Studies involving university and school students have shown that the number of years spent in education has an effect on their test performance (Astin 1999; Kleickmann et al. 2013; Riese and Reinhold 2012). We assume to find a similar effect for students when they transition into their VET programs.

General biological knowledge: Ausubel (1968) stated that “the most important single factor influencing learning is what the learner already knows”. Meta-analysis studies have shown that subject-related pre-knowledge of school students predicts test performance (Hattie 2009). We hypothesized that pre-knowledge in biological contexts (e.g., about evolution or ecology), as taught in general secondary school, might positively influence students’ performance in our instrument.

Self-efficacy: According to Bandura (1977), a person’s willingness to initiate a coping behavior is affected by the confidence in his own abilities and efforts to do a particular task. Meta-analyses have shown that self-efficacy is moderately related to work-related performance (Stajkovic and Luthans 1998) and academic achievement (Carroll et al. 2009). We hypothesized that vocational students’ professional knowledge of laboratory tasks is positively related to their self-efficacy in performing those tasks. In contrast, there should be no substantial correlation between their professional knowledge of laboratory tasks and their self-efficacy in an unrelated subject such as English.

Perceived opportunities to learn: VET programs provide plenty of learning opportunities in work-based contexts (Tynjälä 2008). Since learning success depends on the use of opportunities to learn (e.g., Helmke and Schrader 2014), we hypothesized that using such learning opportunities will positively affect test performance.

Methods

Methodological approach for the domain analysis

Within these two fields of biochemistry and cell biology, we developed items to cover the three laboratory tasks. Item development was conducted and advised by two experts from the Leibniz Institute for Science and Mathematics Education in Kiel, 10 VET school teachers, and two VET educators from the industry.

For the assessment of procedural knowledge, we asked, for example, “State the proper procedures for sterile filtration of small amounts of samples using a sterile filter and a syringe.”, with five choices, one of which is correct (Item no. 44, Additional file 1). For the assessment of declarative knowledge, we asked, “State which method is used to determine the antibody titer in blood.”, again with five choices and one correct answer (Item no. 19, Additional file 1).

Measures

The test instrument (PROKLAS). Based on the model of professional knowledge used in Fig. 1, we developed 135 items to assess VET students’ knowledge of laboratory tasks. Except for one semi-open item, all items were designed in multiple-choice format. Some items contained pictograms or pictures to illustrate the context (e.g., biohazard characteristics of chemicals). Items developed stemmed from the two major fields of biochemistry (72 items, including those from pharmacology, molecular biology, and genetic engineering) and cell biology (63 items, including those from zoology, botany, and microbiology). Items addressed the three central laboratory tasks within each discipline, namely: RA (19 items for biochemistry and 29 items for cell biology), DM (20 items for biochemistry and 13 items for cell biology), and LT (33 items for biochemistry and 21 items for cell biology). The items developed addressed the two categories of knowledge in each laboratory task: declarative knowledge and procedural knowledge. Declarative knowledge was operationalized by items stimulating cognitive processes such as ‘remember and retrieve’. Procedural knowledge was operationalized by items stimulating cognitive processes such as ‘apply and understand’ according to Bloom’s taxonomy (Krathwohl 2002). The final set of 92 items is provided in the appendices in English and German (Additional files 1 and 2, respectively).

Personal characteristics of the VET students. We used the following personal characteristics of the VET students to validate the subscales biochemistry and cell biology: (a) academic background, (b) general biological knowledge, (c) self-efficacy, and (d) perceived opportunities to learn.

For academic background, we collected information about students’ final grades from general secondary school and the type of general secondary school they attended before entering the VET program. Final school grades potentially ranged from 1 (highest achievement) to 6 (lowest achievement). Two types of general secondary schools can be distinguished, those that train their students for a non-academic career (grades 5–9 [or 10]; nonacademic track), and those that train their students about 2–3 years longer for an academic career (grades 5–12 [or 13]; academic track; cf. Großschedl et al. 2015). We also collected information on students’ VET grades and their year in the VET program. The potential range of VET grades corresponded to the range of final school grade.

We assessed the general biological knowledge of the VET students using 12 items covering areas from morphology, physiology, evolution, genetics, and ecology that were developed by Großschedl et al. (2014). Ten items were administered in multiple-choice format and two in short answer format. Since all the items were scored dichotomously (0 = wrong answer vs. 1 = correct answer), the person’s ability was estimated with Rasch’s simple logistic model according to the WLE method (Weighted Likelihood Estimate; Wright and Mok 2000) using ACER Conquest software (version 1.1.0; Wu et al. 1998). Cronbach’s alpha and WLE reliability were estimated to be .61 and .54, respectively.
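To illustrate the estimation approach named above, the following is a minimal sketch of a Rasch response probability and a weighted likelihood estimate of ability in Python. It is not the ACER Conquest implementation: the function names, the bisection search, the search bounds, and the item difficulties in the example are illustrative assumptions, and item difficulties are treated as already known.

```python
import math


def rasch_probability(theta, difficulty):
    """Probability of a correct response under the Rasch model."""
    return 1.0 / (1.0 + math.exp(-(theta - difficulty)))


def wle_ability(responses, difficulties, lo=-8.0, hi=8.0, tol=1e-8):
    """Weighted likelihood estimate of ability for dichotomous items.

    Solves sum(x_i - P_i) + J / (2 * I) = 0 by bisection, where I is the
    test information and J is its derivative with respect to theta.
    The bounds lo and hi are assumed to bracket the solution.
    """
    raw_score = sum(responses)

    def estimating_equation(theta):
        probs = [rasch_probability(theta, b) for b in difficulties]
        info = sum(p * (1 - p) for p in probs)
        d_info = sum(p * (1 - p) * (1 - 2 * p) for p in probs)
        return raw_score - sum(probs) + d_info / (2 * info)

    while hi - lo > tol:
        mid = (lo + hi) / 2
        if estimating_equation(mid) > 0:
            lo = mid  # estimate lies above mid
        else:
            hi = mid  # estimate lies at or below mid
    return (lo + hi) / 2


# Hypothetical item difficulties (in logits), symmetric around zero.
difficulties = [-1.0, -0.5, 0.5, 1.0]
theta_half = wle_ability([1, 1, 0, 0], difficulties)  # half of the items correct
theta_all = wle_ability([1, 1, 1, 1], difficulties)   # perfect score
```

Unlike a plain maximum likelihood estimate, the weighted estimate stays finite for a perfect or zero score, which is one reason it is convenient for short tests.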

Self-efficacy of the VET students was assessed by a revised version of the “Berlin evaluation instrument for self-evaluated student competencies” (BEvaKomp; Braun et al. 2008). The revised instrument measured students’ self-reported competency for laboratory work procedures and English as a foreign language using 15 items on a 4-point scale, from “does not apply at all” (1) to “fully applies” (4). Additionally, students rated their self-perceived competency in laboratory tasks (e.g., laboratory safety and microbiological methods) and in English as a foreign language on a similar 4-point scale. An example of an item measuring professional knowledge is: “I can provide an overview on the topics in the subject area of laboratory tasks (vs. English)”. Cronbach’s alpha was .94 (M = 89.56, SD = 13.97) for self-efficacy in laboratory tasks and .95 (M = 37.00, SD = 9.80) for English, respectively.

We assessed the perceived opportunities to learn by asking VET students to indicate how intensively particular contents were reflected in their previous school career. For this, we used 12 items based on knowledge of laboratory tasks and 12 items based on general biological knowledge. All the items were on a 4-point scale from “not at all” (1) to “extremely” (4). Cronbach’s alphas for opportunities to learn laboratory tasks and general biology were .78 (M = 36.50, SD = 5.33) and .86 (M = 22.24, SD = 6.68), respectively.
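For readers less familiar with the reliability coefficient reported for the scales above, a minimal sketch of how Cronbach’s alpha can be computed from a respondent-by-item score matrix is given below. The function name and the ratings are hypothetical and are not taken from the study data.

```python
from statistics import variance


def cronbach_alpha(scores):
    """Cronbach's alpha for a list of respondents, each a list of item scores."""
    n_items = len(scores[0])
    # Sample variance of each item across respondents.
    item_variances = [
        variance([row[i] for row in scores]) for i in range(n_items)
    ]
    # Sample variance of the respondents' total scores.
    total_variance = variance([sum(row) for row in scores])
    return n_items / (n_items - 1) * (1 - sum(item_variances) / total_variance)


# Hypothetical ratings of four respondents on three 4-point items.
ratings = [
    [4, 3, 4],
    [2, 2, 3],
    [3, 3, 3],
    [1, 2, 1],
]
alpha = cronbach_alpha(ratings)
```

Alpha approaches 1 when the items rank respondents in the same way and drops as the items become less consistent with each other.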

Research design

Due to the large number of items, we implemented a planned missing data design with three booklets to evaluate our instrument (cf. Frey et al. 2009; Graham et al. 2006). Booklets 1 and 2 contained two distinct sets of items. These booklets were linked by booklet 3, which overlapped with about 50 % of the total number of items (linking items). Each participant completed only one booklet. The booklets were randomly distributed.

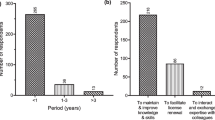

Sample and procedure

In August 2013, 284 students [62 % female, average age: 20.0 years (SD = 3.3)] participated in our cross-sectional study. 10 % were enrolled in the VET program as ‘laboratory biology assistants’ [52.4 % female; average age: 19.9 years (SD = 2.0)], 82 % as ‘biology technicians’ [62.8 % female; average age: 19.8 years (SD = 3.1)], and 8 % as ‘medical laboratory technicians’ [all female; average age: 23.2 years (SD = 5.2)]. We tested students from 14 classes at four VET schools in three different German states (Schleswig-Holstein, Lower Saxony, and Hamburg). Laboratory biology assistants participated in the dual VET program, whereas biology technicians and medical laboratory technicians were part of the school-based VET program. Trained test leaders administered the study, which was conducted on-site in the VET school classes. The test time was set for 3 h, with a 10-min break. All the participants answering PROKLAS also answered items about their academic background, and additional questions about their general biological knowledge. Moreover, a sub-sample of 38 VET students answered questions about self-efficacy and perceived opportunities to learn.

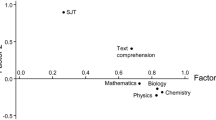

We used parcel scores to conduct a factorial analysis of our model. Even though there is no set rule for the minimum amount of data needed to run a CFA, a minimum ratio of 1:5 between estimated parameters and participants is suggested (Bentler 1989; Krauss et al. 2008). Because of the large number of items, we could not apply this ratio when using items as indicators. Therefore, we used subscale or parcel scores as manifest indicators to measure latent variables (e.g., instead of 92, there are just six factor loadings; see Fig. 2; cf. Little et al. 2002). ACER Conquest software (version 1.1.0; cf. Wu et al. 1998) allowed us to calculate the parcel scores for CFA.
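The sample-size argument can be made concrete with a small calculation. The sketch below counts only factor loadings, as the text does; a full count of free parameters (intercepts, residual variances, factor correlations) would be larger, so the figures are a lower bound. The function name is hypothetical.

```python
def minimum_sample_size(n_parameters, participants_per_parameter=5):
    """Smallest sample satisfying a 1:5 parameter-to-participant ratio."""
    return n_parameters * participants_per_parameter


N = 284  # participants in the study

# Counting only factor loadings, as in the text above.
needed_with_items = minimum_sample_size(92)   # items as indicators
needed_with_parcels = minimum_sample_size(6)  # parcels as indicators

items_feasible = N >= needed_with_items      # False: 460 participants needed
parcels_feasible = N >= needed_with_parcels  # True: 30 participants needed
```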

Model for the latent constructs of biochemistry and cell biology based on six parcels. Responsible action (RA), Data management (DM), Laboratory techniques (LT), Comparative fit index (CFI), Tucker–Lewis fit index (TLI), Akaike information criterion (AIC), Bayes Information criterion (BIC), Root-mean-square error of approximation (RMSEA). *** p < .001

Results

Statistical item analyses

In order to evaluate the measurement quality of our items, we calculated item difficulties and discrimination parameters in terms of Classical Test Theory. To overcome problems associated with missing data, calculations were conducted with ACER Conquest software (version 1.1.0; Wu et al. 1998). Item difficulties (and discrimination parameters) ranged between .14 and .79 (.11 and .60, respectively) for biochemistry, and between .04 and .82 (−.14 and .61, respectively) for cell biology. Our item selection was based on an item analysis, in which items with an item difficulty outside the range of .20 to .80 as well as items with discrimination indices less than .15 were removed. We removed 15 items for biochemistry and 28 items for cell biology, yielding item difficulties (and discrimination parameters) ranging between .21 and .55 (.15 and .78, respectively) for biochemistry and .22 and .50 (.20 and .81, respectively) for cell biology (see Additional file 3: Table S1). We checked the content of each of the removed items to ensure that all laboratory tasks were still covered by our instrument.
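The selection rule described above can be sketched as a simple filter. The item records below are hypothetical, and treating the boundary values (.20, .80, and .15) as acceptable is an assumption; only the item counts in the bookkeeping at the end come from the text.

```python
def select_items(items, min_difficulty=0.20, max_difficulty=0.80,
                 min_discrimination=0.15):
    """Keep items whose difficulty and discrimination meet the thresholds."""
    return [
        item for item in items
        if min_difficulty <= item["difficulty"] <= max_difficulty
        and item["discrimination"] >= min_discrimination
    ]


# Hypothetical item statistics (proportion correct, discrimination index).
candidate_items = [
    {"id": "A", "difficulty": 0.45, "discrimination": 0.32},
    {"id": "B", "difficulty": 0.14, "discrimination": 0.25},  # too hard
    {"id": "C", "difficulty": 0.60, "discrimination": 0.11},  # weak discrimination
    {"id": "D", "difficulty": 0.82, "discrimination": 0.40},  # too easy
]
kept_ids = [item["id"] for item in select_items(candidate_items)]

# Bookkeeping with the item counts reported in the text.
developed = {"biochemistry": 72, "cell biology": 63}
removed = {"biochemistry": 15, "cell biology": 28}
retained = {field: developed[field] - removed[field] for field in developed}
total_retained = sum(retained.values())
```

The bookkeeping reproduces the final set of 92 items mentioned in the Measures section.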

Factorial structure of PROKLAS

To answer our first research question, we explored the factorial structure of PROKLAS. We applied confirmatory factor analyses (CFA) by using the maximum likelihood procedure with robust standard errors using Mplus (version 7.11; cf. Muthén and Muthén 1998–2012). A three-dimensional Rasch model was specified for biochemistry and cell biology, respectively. Each dimension represented one of the three laboratory tasks (RA, DM, LT) considered in PROKLAS. Parcel scores were estimated with the WLE method, which is less biased than maximum likelihood estimation, and provides best point estimates of individual ability (Warm 1989). As VET students came from 14 different classes, we used the CFA calculation option ‘Type = complex’ to consider the nested structure of the data. To fix the metric of the latent variables, the factor variance was set to 1. Several goodness-of-fit indexes were calculated to determine model fit: the comparative fit index (CFI), the Tucker–Lewis Index (TLI), the Akaike information criterion (AIC), the Bayes information criterion (BIC), and the root mean square error of approximation (RMSEA; Jackson et al. 2009; Schreiber et al. 2006). CFI and TLI represent the ratio of the discrepancy between the data and the hypothesized model. Good model fit is described by values ≥.95 (Schreiber et al. 2006). The penalized model selection criteria, AIC and BIC, are used to describe the complexity of a model (Kuha 2004). When comparing different models, lower values indicate better model fit. The RMSEA represents the average of the covariance residuals between two models. A value of 0 indicates perfect fit, and satisfactory fit is indicated by values below .06 (Hu and Bentler 1999; Schreiber et al. 2006). Our analysis indicated an excellent model fit (CFI = .997, TLI = .995, AIC = 5097.11, BIC = 5166.44, RMSEA = .025) for the two-factor model which assumes a single latent ability for each discipline (i.e. biochemistry and cell biology; Fig. 2).
We compared the goodness-of-fit of the two-factor model to that of a one-factor model (CFI = .914, TLI = .856, AIC = 5160.19, BIC = 5225.88, RMSEA = .138) assuming a single latent ability for answering PROKLAS. The descriptive results indicate that the two-factor model clearly outperformed the one-factor model. We verified this finding with the Satorra–Bentler test, which calculates the differences of scaled (mean adjusted) Chi square values. The Satorra–Bentler test was significant (TRd = 1.97, p < .001, ∆df = 1, N = 284), indicating that assuming two latent abilities fitted the observed data better than assuming a single latent ability. The reliability of the subscales is described by the factor ρ coefficient, which overcomes the shortcoming of Cronbach’s alpha coefficient outlined by Raykov (2004). The factor ρ coefficients for biochemistry and cell biology were .93 each.

Subsequently, we specified a three-factor model assuming a single factor for each latent ability of laboratory task (RA, DM, and LT). In this model, each laboratory task is measured by two indicators, one indicator from biochemistry and one indicator from cell biology. Analysis shows that the three-factor model (CFI = .887, TLI = .718, AIC = 5161.26, BIC = 5237.89, RMSEA = .025) clearly misfits the data. Finally, we specified a multitrait-multimethod model to reflect both fields and laboratory tasks in structural equation modelling. To this end, we modified the two-factor model by allowing correlated residuals between the corresponding laboratory tasks of both fields (e.g. a residual correlation between the indicators RA-biochemistry and RA-cell biology; CFI = .990, TLI = .971, AIC = 5111.32, BIC = 5191.60, RMSEA = .062). However, the simple two-factor model outperformed the three-factor and the multitrait-multimethod model.
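The information criteria reported for the four models can be compared directly, and they also allow a rough consistency check. The sketch below assumes the standard definitions AIC = −2 ln L + 2k and BIC = −2 ln L + k ln N, and that every model was estimated on all N = 284 participants; under these assumptions the difference BIC − AIC implies the number of free parameters k. The dictionary and function names are illustrative.

```python
import math

N = 284  # assumed sample size for every model

# AIC and BIC values as reported in the text.
fit = {
    "two-factor": {"aic": 5097.11, "bic": 5166.44},
    "one-factor": {"aic": 5160.19, "bic": 5225.88},
    "three-factor": {"aic": 5161.26, "bic": 5237.89},
    "multitrait-multimethod": {"aic": 5111.32, "bic": 5191.60},
}


def implied_free_parameters(aic, bic, n):
    """Solve BIC - AIC = k * (ln(n) - 2) for the number of parameters k."""
    return (bic - aic) / (math.log(n) - 2)


implied = {
    name: round(implied_free_parameters(v["aic"], v["bic"], N))
    for name, v in fit.items()
}

# Lower values indicate better fit when comparing models.
best_by_aic = min(fit, key=lambda name: fit[name]["aic"])
best_by_bic = min(fit, key=lambda name: fit[name]["bic"])
```

Under the stated assumptions, the implied counts are 19, 18, 21, and 22 parameters, which would be consistent with six intercepts, six loadings, and six residual variances plus one, zero, three, or four additional covariance parameters. Both criteria favor the two-factor model.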

In order to check whether the dimensionality of the two-factor model depends on the students’ academic background, we tested several multiple-group models with two grouping variables: ‘type of general secondary school’ (0 = nonacademic track, 1 = academic track) and ‘VET-year’ as a dummy coded variable (0 = early period of studies, 1 = later period of studies). For each of the grouping variables, we compared two multiple-group models. In the first model, the factor correlation was allowed to vary across groups, whereas in the second it was constrained to be equal across groups. Loadings, intercepts, and residual variances of the indicators were set invariant across groups. With ‘type of general secondary school’ as the grouping variable, the factor correlation for the nonacademic track group (r = .52; SE = .31) did not differ significantly (TRd = 3.16, p = .075, ∆df = 1, N = 238) from the factor correlation for the academic track group (r = .84; SE = .06). With ‘VET-year’ as the grouping variable, the factor correlations also did not differ significantly (TRd = 2.52, p = .112, ∆df = 1, N = 271) between the early (r = .62; SE = .14) and the later period of studies (r = .76; SE = .06).
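The scaled difference tests used for these model comparisons can be sketched as follows. The formula for the scaled difference follows the commonly documented procedure for robust maximum likelihood chi-square values and is given here as an assumption about the computation, not as the authors’ script; the chi-square values and scaling correction factors it would need are not reported in the text. The p-value function is limited to one degree of freedom, where the chi-square survival function has a closed form.

```python
import math


def scaled_difference(t0, c0, d0, t1, c1, d1):
    """Satorra-Bentler scaled chi-square difference.

    Model 0 is the nested (more restricted) model, model 1 the comparison
    model. t: scaled chi-square, c: scaling correction factor,
    d: degrees of freedom.
    """
    cd = (d0 * c0 - d1 * c1) / (d0 - d1)  # correction for the difference test
    return (t0 * c0 - t1 * c1) / cd


def p_value_df1(chi_square):
    """Upper-tail probability of a chi-square statistic with 1 df."""
    return math.erfc(math.sqrt(chi_square / 2))


# Test statistics reported for the two multiple-group comparisons (df = 1).
p_school_type = p_value_df1(3.16)  # close to the reported p = .075
p_vet_year = p_value_df1(2.52)     # close to the reported p = .112
```

With scaling correction factors of 1, the scaled difference reduces to the ordinary chi-square difference between the two models.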

Relationship between PROKLAS subscales and VET students’ personal characteristics

For our second research question, we were interested in how the subscale scores of PROKLAS are related to particular personal characteristics of VET students, as measured by their (a) academic background, (b) general biological knowledge, (c) self-efficacy, and (d) perceived opportunities to learn. For this purpose, we performed a CFA with covariates (cf. MIMIC model; Muthén and Muthén 1998–2012), in which the relationship between the dependent variables (knowledge of biochemistry and cell biology) and a single covariate (Table 1) or a set of covariates (i.e. personal characteristics of VET students; Table 2) was studied. For the type of general secondary school (academic track vs. non-academic track), we expected, but did not find, that VET students from the academic track outperformed those from the non-academic track (Table 1). For VET year, we hypothesized and confirmed that advanced VET students outperformed beginners in their biochemistry and cell biology scores in PROKLAS (Table 1). We also hypothesized and confirmed a positive relationship between general biological knowledge and biochemistry/cell biology scores in PROKLAS. Furthermore, we confirmed our hypothesis that high-achieving VET students (operationalized by the general secondary school and VET grades) outperformed low-achieving students on the subscale scores of PROKLAS (Table 1). As some covariates depend on one another, we ran CFAs with sets of covariates to adjust for these dependencies. We adjusted general secondary school grades for type of general secondary school and VET grade for VET year (Table 2). Results show that VET-related covariates (year and grade) explained considerably more variance in biochemistry and cell biology scores than covariates related to general secondary school (type and grade). This strongly supports the validity of PROKLAS.

We also applied subscales representing self-efficacy in laboratory tasks and English to predict subscale scores of PROKLAS. We hypothesized that the subscale scores are better predicted by the self-efficacy in laboratory tasks than in English. CFAs with the respective covariates did not confirm our hypothesis (Tables 1 and 2). Although self-efficacy in laboratory tasks is significantly related to the subscale scores of PROKLAS, there is also a substantial relationship between self-efficacy in English and PROKLAS. Finally, we hypothesized that opportunities to learn laboratory tasks predicted subscale scores of PROKLAS much better than opportunities to learn general biology. Although this was observed for the biochemistry subscale, it was not the case for the cell biology subscale (Tables 1 and 2).

Discussion

Validity of the instrument

In this study, we developed an instrument that assesses the professional knowledge of laboratory assistants about laboratory tasks in biochemistry and cell biology. To our knowledge, this is the first empirically validated instrument available in this field. We distinguished between the three laboratory tasks: (1) the safety regulations important for health and environmental protection (RA), (2) the office-desk work when analyzing measurements (DM), and (3) conducting experiments in the laboratory (LT). We checked the validity of the PROKLAS subscales with a correlational approach (CFA and CFA with covariates). For this, we collected information about personal characteristics of VET students, which were related to the PROKLAS scores.

Based on our research questions, there are four findings supporting the validity of our instrument. Firstly, analyses indicated that the two latent abilities representing professional knowledge of laboratory tasks in biochemistry and cell biology were reflected by the VET students’ responses. Both abilities were correlated but empirically separable. Secondly, VET-related covariates (VET year and VET grade) predicted the students’ biochemistry and cell biology scores considerably better than the covariates related to general secondary school (type of general secondary school and final grade in general secondary school). We hypothesized that students from an academic track would score higher on PROKLAS than students from a non-academic track. Academic track students are educated 2–3 years longer, by teachers who are intensively trained to teach biological fields (e.g., molecular biology or cell biology) in more depth (KMK 2008). It has been shown that students’ achievement is linked to the subject knowledge of such well-trained teachers (Darling-Hammond 2000; Heller et al. 2012). Against this background, our finding of no difference between academic track and non-academic track students is surprising. However, it might be explained by a selection process after graduation from general secondary school. Well-performing academic track students are more likely to enroll in university studies than to enter VET programs, leaving relatively weaker academic track students and non-academic track students for VET programs (Bosch 2010). Regarding the VET year, our data showed that VET students who had advanced in their program outperformed VET program beginners, in line with a similar effect reported for the VET program of mechanical technicians (Nickolaus et al. 2013). Thirdly, general biological knowledge predicted biochemistry and cell biology scores of PROKLAS. Similar results have been reported in meta-analyses showing that the subject-related prior knowledge of school students predicts test performance (e.g. Hattie 2009). To assess the VET students’ prior knowledge in biology, we decided to apply a short test for reasons of test-time economy, leaving more test time for PROKLAS. The low reliability of .6 that we obtained is still in the acceptable range above .55 for a short test according to Rost (2007). This value can be explained by the broad range of items covering several biological disciplines, which is preferable to a narrow item set for reflecting the VET students’ knowledge (Rost 2007).

Finally, VET students’ self-efficacy in laboratory tasks and their opportunities to learn laboratory tasks are positively correlated to achievement in PROKLAS. However, we found a similar relationship for self-efficacy in English too. As many terms used in biological and medical laboratory practice are derived from the English language (e.g., PCR), we can speculate that understanding English might facilitate VET students’ performance in PROKLAS.

Our results indicate that neither the academic background nor the time the VET students had spent in their VET programs directly affects the dimensionality of PROKLAS. In the case of the academic track, we assume a selection of the VET students attending the programs, as explained above. Regarding the time spent in the VET program, we did not find a significant effect on the dimensionality of our model either. This indicates that PROKLAS can be applied at different stages of training for laboratory assistants and that the scores obtained are comparable. In the literature, a differentiation of subscales was observed during the training of mechanical technicians (Nickolaus et al. 2013). We have not seen this differentiation in our laboratory tasks; however, it could still develop at later training stages.

PROKLAS can be applied for assessing the professional knowledge of laboratory assistants in biology and medicine independent of their academic background. We designed PROKLAS in a paper-and-pencil format because it is easier and faster to administer in large-scale assessments and allows objective grading when standardized (Kane 1992). This format is commonly used in large-scale assessments like the Trends in International Mathematics and Science Study (TIMSS) and the Programme for International Student Assessment (PISA; Harlow and Jones 2004). Paper-and-pencil tests in multiple-choice formats are also applied in medical education, reflecting declarative and procedural knowledge (Abu-Zaid and Khan 2013). However, the paper-and-pencil format has been criticized with regard to how well it can reflect practical working situations (Erpenbeck 2009; Mulder et al. 2006). To what extent PROKLAS can accurately predict practical working skills in laboratory tasks, and potentially replace action-oriented tests to measure practical skills in VET programs, needs further investigation. Action-oriented individual tests are common practice for the examination of laboratory assistants’ knowledge in biology or medicine (BGBl 2009; KMK 2011). Although they can test problem-solving skills in real work situations, they are difficult to standardize (Kane 1992). Alternatively, simulation tests allow standardized testing by using computer software or video sequences (Achtenhagen and Winther 2014; Nickolaus et al. 2011). However, analyzing simulation tests is reported to be very time-consuming (Achtenhagen and Winther 2014; Gschwendtner et al. 2009). These limitations make those test formats impracticable for standardized large-scale assessments. Another concern with paper-and-pencil tests relates to possible booklet design effects. Designing booklets requires, for example, avoiding fatigue effects when too many items need to be handled (Hohensinn et al. 2008), and keeping a strong linkage between booklets when more than one is applied (Graham et al. 2006). Therefore, we applied an efficient 3-form design with a distinct set of items in three different booklets linked by a 50 % overlap (Graham et al. 2006). To minimize possible fatigue and item-position effects, we mixed the item difficulties in these booklets, included an equal amount of graphics and pictures in each booklet, and provided a half-time break to our participants. Testing for booklet effects revealed no significant differences between the booklet groups.
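A booklet layout of this kind can be illustrated with a minimal sketch. It assumes one arrangement that is consistent with "three booklets linked by a 50 % overlap": the item pool is split into three equally sized blocks (A, B, C), and each booklet combines two of them. The actual block assignment used for PROKLAS is not described in this section, and the item labels and pool size below are hypothetical.

```python
from itertools import combinations


def three_form_booklets(items):
    """Split items into blocks A, B, C and build three two-block booklets."""
    if len(items) % 3 != 0:
        raise ValueError("the item pool must be divisible into three equal blocks")
    size = len(items) // 3
    blocks = {
        "A": items[:size],
        "B": items[size:2 * size],
        "C": items[2 * size:],
    }
    # Each booklet shares exactly one block with each of the other booklets.
    layout = {1: ("A", "B"), 2: ("B", "C"), 3: ("C", "A")}
    return {form: blocks[x] + blocks[y] for form, (x, y) in layout.items()}


def overlap_share(booklet_1, booklet_2):
    """Share of items in booklet_1 that also appear in booklet_2."""
    return len(set(booklet_1) & set(booklet_2)) / len(booklet_1)


# Hypothetical pool of 12 items, used only to show the structure.
items = [f"item{i:02d}" for i in range(1, 13)]
booklets = three_form_booklets(items)

for (f1, b1), (f2, b2) in combinations(booklets.items(), 2):
    print(f"booklets {f1} and {f2}: overlap = {overlap_share(b1, b2):.0%}")
```

In this arrangement every participant answers two thirds of the item pool, and every pair of booklets is linked through one shared block, which is what makes scores from different booklets comparable.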

Limitations of PROKLAS

We applied item parcel scores as manifest indicators for the two latent variables reflecting the professional knowledge in biochemistry and cell biology. With the exception of the item parcel DM2 (of the subscale data management) loading on cell biology (Fig. 2), all standardized factor loadings of the item parcels exceeded the critical value of .70 (cf. Kline 2011). This indicates that the item parcels represent the appropriate latent abilities and thereby supports the validity of PROKLAS. Although we can infer that the parcels adequately indicated knowledge in biochemistry and cell biology, we cannot state that each of the items included in these parcels also indicates knowledge in the respective field. To address this, we would need to increase the sample size to reach the recommended ratio of items (as estimated parameters) to participants of 1:5 (Bentler 1989).
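The 1:5 ratio translates into simple arithmetic. The sketch below takes the sample size of N = 284 from the Methods section; the number of parameters in the second call is a hypothetical value chosen only for illustration, and the function names are not part of the original analysis.

```python
def max_parameters(n_participants, participants_per_parameter=5):
    """Largest number of estimated parameters supported by a given sample."""
    return n_participants // participants_per_parameter


def required_sample(n_parameters, participants_per_parameter=5):
    """Smallest sample size that satisfies the ratio for a given model."""
    return n_parameters * participants_per_parameter


# Sample size reported in the Methods section.
print(max_parameters(284))

# Hypothetical item-level model with 100 estimated parameters.
print(required_sample(100))
```

With 284 participants, the rule of thumb supports at most 56 estimated parameters, which is why parcels rather than single items served as indicators.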

For the design of PROKLAS, professional knowledge was classified as declarative knowledge and procedural knowledge. While the former was tested by items requiring facts, definitions, and information, the latter was tested by items asking for a deeper understanding of handling procedures. Both categories of knowledge have been reported as very closely associated dimensions (Nickolaus and Seeber 2013). This might be due to the operationalization of procedural knowledge, which in the professional context always requires declarative knowledge components (Nickolaus et al. 2012). Novices in training first form a declarative base when developing procedural knowledge (McPherson and Thomas 1989). Due to the smooth transition between declarative and procedural knowledge, we cannot clearly distinguish between the two categories of cognition. To be able to do so, we would need to compare VET students’ scores in PROKLAS with observations of these VET students in action-oriented tests for laboratory tasks. In this case, each dimension of laboratory tasks would need to be observed and then compared to the scores obtained in PROKLAS. This is currently outside the scope of this project. To compare VET students’ scores in PROKLAS, we could also apply a simulation test with video vignettes (cf. Seeber 2014) or computer-assisted simulations (cf. Achtenhagen and Winther 2014), which have not been developed yet.

Despite these limitations, PROKLAS could be a very useful tool to assess the professional knowledge of laboratory assistants in biochemistry and cell biology independent of their academic track and their VET year. It can therefore also be used as a prognostic tool to test professional knowledge at different stages in the VET program. Since its design covers a broad range of topics in biochemistry (including chemistry and genetics) and cell biology (including immunology and microbiology), it can also be used to assess professional knowledge in domain-related and ecological aspects. This might become useful when assessing the learning stage in a VET program during training.

Conclusions

In this study, we presented the test instrument PROKLAS, which assesses the professional knowledge of laboratory assistants about laboratory tasks. We checked the validity of PROKLAS using CFA and CFA with covariates. Our findings strongly support the view that PROKLAS allows empirically separable measurement of professional knowledge of laboratory tasks in biochemistry and cell biology. To our knowledge, it is the first empirically validated instrument available for laboratory assistants.

PROKLAS can be successfully used for summative and formative training evaluations. Since this test measures the professional knowledge needed to perform the daily work tasks or activities in the laboratory, it can have several applications, e.g.:

a. VET teachers can use PROKLAS to measure the learning outcomes of VET students.

b. VET students can self-evaluate their learning outcomes.

c. Researchers and governmental administrators can use PROKLAS to evaluate the professional knowledge of laboratory assistants. This might become a useful tool when considering the need to evaluate the working skills and quality of different VET programs, for example, for the National Qualifications Framework (NQF) and the European Qualifications Framework (EQF; Brockmann et al. 2011; Deissinger et al. 2011).

Currently, PROKLAS is being implemented in the longitudinal large-scale assessment project “Mathematics and Science Competencies in Vocational Education and Training” (ManKobE). ManKobE is conducted as a statewide effort in Germany to empirically assess the competencies in mathematics and sciences in several VET programs (e.g., electrical engineering, car mechatronics, industrial clerks, laboratory assistants in chemistry, and laboratory assistants). In this context, PROKLAS is being used to assess and compare the learning outcomes of the professional knowledge of laboratory tasks of laboratory assistants trained either in both kinds of school-based VET programs or in the dual system.

References

Abele S, Gschwendtner T, Nickolaus R (2013) Bringt uns eine genauere Vermessung der erreichten Kompetenzen weiter? Kompetenzmessung, Kompetenzmodelle, Kompetenzstrukturen und erreichte Kompetenzniveaus in der beruflichen Bildung [Does a more accurate measurement of the achieved professional skills help? Measurement of competences, competence models, competence structures, and achieved levels of competence in vocational training]. BLBS 65:40–45

Abu-Zaid A, Khan TA (2013) Assessing declarative and procedural knowledge using multiple-choice questions. Med Edu Online 18:21132. doi:10.3402/meo.v18i0.21132

Achtenhagen F, Winther E (2014) Workplace-based competence measurement: developing innovative assessment systems for tomorrow’s VET programmes. JVET 66(3):281–295

Ambrosini V, Bowman C (2001) Tacit knowledge: some suggestions for operationalization. J Manage Stud 38(6):811–829

Anderson JR (1983) A spreading activation theory of memory. J Verb Learn Verb Be 22(3):261–295

APO-BK (2015) Ausbildungs- und Prüfungsordnung Berufskolleg [Training and examination regulation]. 13-33 No. 1.1. Ministry of Education, NRW, issued on 26.05.1999, revised 09.12.2014. https://www.schulministerium.nrw.de/docs/RechtSchulrecht/APOen/BK/APOBK.pdf. Accessed 10 Sept 2015

Astin AW (1999) Student involvement: a developmental theory for higher education. J Coll Student Dev 40(5):518–529

Ausubel DP (1968) Educational psychology: A cognitive view. Holt, Rinehart and Winston, New York

Bandura A (1977) Self-efficacy: toward a unifying theory of behavioral change. Psychol Rev 84:191–215

Barley SR, Bechky BA (1994) In the backrooms of science the work of technicians in science labs. Work Occupation 21(1):85–126

Bentler PM (1989) EQS: Structural equations program manual. BMDP Statistical Software, Los Angeles

Bosch G (2010) Zur Zukunft der dualen Berufsausbildung in Deutschland. [About the future of dual vocational training and education in Germany] In: Bosch G, Krone S, Langer D (ed) Das Berufsbildungssystem in Deutschland. VS Verlag für Sozialwissenschaften, Wiesbaden, p 37–61

Braun E, Gusy B, Leidner B, Hannover B (2008) Das Berliner Evaluationsinstrument für selbsteingeschätzte, studentische Kompetenzen (BEvaKomp) [Berlin’s evaluation tool for self-assessed, student competencies (BEvaKomp)]. Diagnostica 54(1):30–42

Brockmann M, Clarke L, Winch C (2011) Knowledge, skills and competence in the European labour market: What’s in a vocational qualification? Routledge Taylor Francis Group, London and New York

Bundesgesetzesblatt (BGBL) (2009) Verordnung über die Berufsausbildung im Laborbereich Chemie, Biologie und Lack [Ordinance on the vocational training in the laboratory of chemistry, biology, and varnish]. http://www.bibb.de/dokumente/pdf/210809.pdf. Accessed 10 Sept 2015

Carroll A, Houghton S, Wood R, Unsworth K, Hattie J, Gordon L, Bower J (2009) Self-efficacy and academic achievement in Australian high school students: the mediating effects of academic aspirations and delinquency. J Adolescence 32(4):797–817

Cooper-Hannan R, Harbell JW, Coecke S, Balls M, Bowe G, Cervinka M, Clothier R, Hermann F, Klahm LK, de Lange J, Liebsch M, Vanparys P (1999) The principles of good laboratory practice: application to in vitro toxicology studies. ATLA-NOTTINGHAM 27:539–578

Cowin LS, Hengstberger-Sims C, Eagar SC, Gregory L, Andrew S, Rolley J (2008) Competency measurements: testing convergent validity for two measures. J Adv Nurs 64(3):272–277

Darling-Hammond L (2000) How teacher education matters. J Teach Educ 51(3):166–173

De Jong T, Ferguson-Hessler M (1996) Types and qualities of knowledge. Educ Psychol 31(2):105–113

Deissinger T (2009) The cultural foundations of VET and the European qualifications framework: a comparison of Germany and Britain. Aust TAFE Teach 2:20–22

Deissinger T, Heine R, Ott M (2011) The dominance of apprenticeships in the German VET system and its implications for Europeanisation: a comparative view in the context of the EQF and the European LLL strategy. J VET 63(3):397–416

Eraut M (2000) Non-formal learning and tacit knowledge in professional work. Brit J Educ Psychol 70(1):113–136

Erpenbeck J (2009) Kompetente Kompetenzerfassung in Beruf und Betrieb [Competent assessment of competence in profession and operation]. In: Münk D, Severing E (eds) Theorie und Praxis der Kompetenzfeststellung im Betrieb—Status quo und Entwicklungsbedarf. Bertelsmann Verlag, Bielefeld, pp 17–44

Ezzelle J, Rodriguez-Chavez IR, Darden JM, Stirewalt M, Kunwar N, Hitchcock R, Walter T, D’souza MP (2008) Guidelines on good clinical laboratory practice: bridging operations between research and clinical research laboratories. J Pharmaceut Biomed 46(1):18–29

Federal Statistical Office (2014) Bildung und Kultur: Berufliche Schulen [Education and culture: Vocational and education training school]. https://www.destatis.de/DE/Publikationen/Thematisch/BildungForschungKultur/Schulen/BeruflicheSchulen2110200157004.pdf?__blob=publicationFile. Accessed 21 Jan 2016

Frey A, Hartig J, Rupp AA (2009) An NCME instructional module on booklet designs in large-scale assessments of student achievement: theory and practice. Educ Meas Issues Pract 28(3):39–53

Gantner S, Andersson AF, Alonso-Sáez L, Bertilsson S (2011) Novel primers for 16S rRNA-based archaeal community analyses in environmental samples. J Microbiol Meth 84(1):12–18

Graham JW, Taylor BJ, Olchowski AE, Cumsille PE (2006) Planned missing data designs in psychological research. Psychol Methods 11(4):323–343

Großschedl J, Mahler D, Kleickmann T, Harms U (2014) Content-related knowledge of biology teachers from general secondary schools: structure and learning opportunities. Int J Sci Educ 36(14):2335–2366

Großschedl J, Harms U, Kleickmann T, Glowinski I (2015) Preservice biology teachers’ professional knowledge: structure and learning opportunities. J Sci Teacher Educ 26(3):291–318

Gschwendtner T (2011) Die Ausbildung zum Kraftfahrzeugmechatroniker im Längsschnitt. Analysen zur Struktur von Fachkompetenz am Ende der Ausbildung und Erklärung von Fachkompetenzentwicklungen über die Ausbildungszeit [The training of automotive mechatronics in a longitudinal section]. Zeitschrift für Berufs- und Wirtschaftspädagogik—Beihefte (Z B W-B) 25:55–76

Gschwendtner T, Abele S, Nickolaus R (2009) Computersimulierte Arbeitsproben: eine Validierungsstudie am Beispiel der Fehlerdiagnoseleistungen von Kfz-Mechatronikern [Computer simulated work samples: a validation study of the fault diagnosis performance of automotive mechatronics]. ZBW 105(4):556–578

Hansson B (2007) Company-based determinants of training and the impact of training on company performance: results from an international HRM survey. Pers Rev 36(2):311–331

Harlow A, Jones A (2004) Why students answer TIMSS science test items the way they do. Res Sci Ed 34(2):221–238

Harms U, Eckhardt M, Bernholt S (2013) Relevanz schulischer Kompetenzen für den Übergang in die Erstausbildung und für die Entwicklung beruflicher Kompetenzen: Biologie und Chemielaboranten. Mathematisch-naturwissenschaftliche Kompetenzen in der beruflichen Erstausbildung [Relevance of school skills for the initial transition to training and for the development of vocational skills: Biology and chemistry laboratory assistants. Mathematical and scientific skills in initial vocational training]. ZBW:111–134

Hattie J (2009) Visible learning: A synthesis of over 800 meta-analyses relating to achievement. Taylor Francis Group, Routledge, London and New York

Heller JI, Daehler KR, Wong N, Shinohara M, Miratrix LW (2012) Differential effects of three professional development models on teacher knowledge and student achievement in elementary science. J Res Sci Teach 49(3):333–362

Helmke A, Schrader F-W (2014) Angebots-Nutzungs-Modell [Utilization of learning opportunities model]. In: Wirtz MA (ed) Dorsch—Lexikon der Psychologie. Huber, Bern, pp 149–150

Hohensinn C, Kubinger KD, Reif M, Holocher-Ertl S, Khorramdel L, Frebort M (2008) Examining item-position effects in large-scale assessment using the Linear Logistic Test Model. Psychol Sci 50(3):391

Hu LT, Bentler PM (1999) Cutoff criteria for fit indexes in covariance structure analysis: conventional criteria versus new alternatives. Struct Equ Modeling 6(1):1–55

Jackson DL, Gillaspy JA Jr, Purc-Stephenson R (2009) Reporting practices in confirmatory factor analysis: an overview and some recommendations. Psychol Methods 14(1):6–23

Kane MT (1992) The assessment of professional competence. Eval Health Prof 15(2):163–182

Kirschner S (2013) Modellierung und Analyse des Professionswissens von Physiklehrkräften [Modeling and analysis of physics teachers’ professional knowledge]. In: Niedderer H, Fischer H, Sumfleth E (ed) Studien zum Physik- und Chemielernen, Vol 161. Logos Verlag GmbH, Berlin

Kleickmann T, Richter D, Kunter M, Elsner J, Besser M, Krauss S, Baumert J (2013) Teachers’ content knowledge and pedagogical content knowledge: the role of structural differences in teacher education. J Teach Educ 64(1):90–106

Kline RB (2011) Principles and practice of structural equation modeling. The Guilford Press, New York

KMK, Secretariat of the Standing Conference of the Ministers of Education and Cultural Affairs of the Länder in the Federal Republic of Germany (2008) Ländergemeinsame inhaltliche Anforderungen für die Fachwissenschaften und Fachdidaktiken in der Lehrerbildung [Content requirements for subject-related studies and subject-related didactics in teacher training which apply to all Länder] http://www.kmk.org/fileadmin/veroeffentlichungen_beschluesse/2004/2004_12_16-Standards-Lehrerbildung.pdf. Accessed 19 Jan 2016

KMK, Secretariat of the Standing Conference of the Ministers of Education and Cultural Affairs of the Länder in the Federal Republic of Germany (2011) Handreichung für die Erarbeitung von Rahmenlehrplänen der Kultusministerkonferenz für den berufsbezogenen Unterricht in der Berufsschule und ihre Abstimmung mit Ausbildungsordnungen des Bundes für anerkannte Ausbildungsberufe [Guidelines for the development of framework curricula of the KMK for the vocational classes at the vocational school and their coordination with the federal training regulations for recognized training occupations]. http://www.kmk.org/fileadmin/veroeffentlichungen_beschluesse/2011/2011_09_23_GEP-Handreichung.pdf. Accessed 29 Jun 2015

König J (2010) Lehrerprofessionalität-Konzepte und Ergebnisse der internationalen und deutschen Forschung am Beispiel fachübergreifender, pädagogischer Kompetenzen [Teacher professionalism concepts and results of international and German research on the example of interdisciplinary pedagogical skills]. In: König J, Hofmann B (eds) Professionalität von Lehrkräften—Was sollen Lehrkräfte im Lese-und Schreibunterricht wissen und können. Deutsche Gesellschaft für Lesen und Schreiben (DGLS), Berlin, pp 40–105

Krathwohl DR (2002) A revision of Bloom’s taxonomy: an overview. Theor Pract 41(4):212–218

Krauss S, Baumert J, Blum W (2008) Secondary mathematics teachers’ pedagogical content knowledge and content knowledge: validation of the COACTIV constructs. ZDM 40(5):873–892

Kuha J (2004) AIC and BIC comparisons of assumptions and performance. Sociol Method Res 33(2):188–229

Le Deist FD, Winterton J (2005) What is competence? Hum Res Dev 8(1):27–46

Little TD, Cunningham WA, Shahar G, Widaman KF (2002) To parcel or not to parcel: exploring the question, weighing the merits. Struct Equ Model 9(2):151–173

McPherson SL, Thomas JR (1989) Relation of knowledge and performance in boys’ tennis: age and expertise. J Exp Child Psychol 48(2):190–211

Miller GE (1990) The assessment of clinical skills/competence/performance. Acad Med 65(9):63–67

Mulder M, Weigel T, Collins K (2006) The concept of competence in the development of vocational education and training in selected EU member states: a critical analysis. JVET 59(1):67–88

Müskens W, Tutschner R, Wittig W (2009) Accreditation of prior learning in the transition from continuing vocational training to higher education in Germany. In: Tutschner R, Wittig W, Rami J (eds) Accreditation of vocational learning outcomes–perspectives for a European transfer. Institut Technik und Bildung, Bremen, pp 75–98

Muthén LK, Muthén BO (1998–2012) Mplus User’s Guide. 7th Edition. Muthén and Muthén, Los Angeles

Nickolaus R, Abele S, Gschwendtner T (2011) Prüfungsvarianten und ihre Güte: Simulationen beruflicher Anforderungen als Alternative zu bisherigen Prüfungsformen? [Variants of examinations and their quality simulations occupational requirements as an alternative to existing forms of examinations?]. In: Severing E, Weiß R (eds) Prüfungen und Zertifizierungen in der beruflichen Bildung. Bundesinstitut für Berufsbildung, Bonn, pp 83–95

Nickolaus R, Gschwendtner T, Abele S (2013) Bringt uns eine genauere Vermessung der erreichten Kompetenzen weiter? [Does an accurate measurement of achieved competencies help?]. BbSch 65(2):40–46

Nickolaus R, Lazar A, Norwig K (2012) Assessing professional competences and their development in vocational education in Germany: State of research and perspectives. making it tangible. In: Bernholt S, Neumann K, Nentwig P (eds) Learning outcomes in science education. Münster, Waxmann, pp 129–150

Nickolaus R, Seeber S (2013) Berufliche Kompetenzen: Modellierungen und diagnostische Verfahren [Professional competences: modeling and diagnostic procedures]. In: Frey A, Lissmann U, Schwarz B (eds) Handbuch berufspädagogischer Diagnostik. Beltz, Weinheim, pp 166–195

OECD (1998) Organization for Economic Cooperation and Development series on Principles of Good Laboratory Practice and compliance monitoring. Number 1. OECD Principles on Good Laboratory Practice (as revised in, OECD Environmental Health and Safety Publications, Environment Directorate: ENV/MC/CHEM(98)17. OECD, Paris

Podlas K (2006) CSI effect and other forensic fictions. Loyola Los Angeles Entertain Law Rev 27(87):87–125

Raykov T (2004) Behavioral scale reliability and measurement invariance evaluation using latent variable modeling. Behav Ther 35(2):299–331

Riedl A (2003) Lehr-Lern-Prozesse in technischem beruflichem Unterricht: Gestaltungsvarianten einer Lerneinheit [Teaching-learning processes in technical vocational education: designing variations of a learning unit]. In: Reinisch H, Beck K, Eckert M, Tramm T (eds) Didaktik beruflichen Lehrens und Lernens. Leske and Budrich, Opladen, pp 25–38

Riese J, Reinhold P (2012) Die professionelle Kompetenz angehender Physiklehrkräfte in verschiedenen Ausbildungsformen [The professional competence of prospective physics teachers in various forms of training]. Z Erziehwiss 15(1):111–143

Rittle-Johnson B, Star JR (2007) Does comparing solution methods facilitate conceptual and procedural knowledge? An experimental study on learning to solve equations. J Educ Psychol 99(3):561–574

Rost DH (2007) Interpretation und Bewertung pädagogisch-psychologischer Studien: eine Einführung. [Interpretation and evaluation of pedagogical-psychological studies: an introduction.]. Vol 3, Beltz, Weinheim, p 176–184

Schmidt C (2010) Vocational education and training (VET) for youths with low levels of qualification in Germany. Educ Train 52(5):381–390

Schreiber JB, Nora A, Stage FK, Barlow EA, King J (2006) Reporting structural equation modeling and confirmatory factor analysis results: a review. J Educ Res 99(6):323–338

Seeber S (2014) Struktur und kognitive Voraussetzungen beruflicher Fachkompetenz: am Beispiel Medizinischer und Zahnmedizinischer Fachangestellter [Structure and cognitive conditions of professional expertise: The example of medical and dental assistant]. Z Erziehwiss 17(1):59–80

Selim AA, Ramadan FH, El-Gueneidy MM, Gaafer MM (2012) Using objective structured clinical examination (OSCE) in undergraduate psychiatric nursing education: is it reliable and valid? Nurs Educ Today 32(3):283–288

Shavelson RJ, Ruiz-Primo MA, Wiley EW (2005) Windows into the mind. High Educ 49(4):413–430

Shavit Y, Muller W (2000) Vocational secondary education. Euro Soc 2(1):29–50

Stajkovic AD, Luthans F (1998) Self-efficacy and work-related performance: a meta-analysis. Psychol Bull 124(2):240

Tavares W, LeBlanc VR, Mausz J, Sun V, Eva KW (2014) Simulation-based assessment of paramedics and performance in real clinical contexts. Prehosp Emerg Care 18(1):116–122

Trapmann S, Hell B, Weigand S, Schuler H (2007) Die Validität von Schulnoten zur Vorhersage des Studienerfolgs: eine Metaanalyse [The validity of school grades for academic achievement: a meta-analysis]. Z Padagog Psychol 21(1):11–27

Tynjälä P (2008) Perspectives into learning at the workplace. Educ Res Rev 3(2):130–154

UNECE, United Nations Economic Commission for Europe (2011) Globally harmonized system of classification and labelling of chemicals. https://www.unece.org/fileadmin/DAM/trans/danger/publi/ghs/ghs_rev04/English/ST-SG-AC10-30-Rev4e.pdf. Accessed 10 Sept 2015

Warm TA (1989) Weighted likelihood estimation of ability in item response theory. Psychometrika 54(3):427–450

Wickler G, Tate A, Hansberger J (2013) Using shared procedural knowledge for virtual collaboration support in emergency response. IEEE Intell Syst 28(4):9–17

Winther E, Prenzel M (2014) Berufliche Kompetenz und Professionalisierung: Testverfahren und Ergebnisse im Spiegelbild ihrer Accountability [Professional competence and professionalisation: test procedures and results in the reflection of their accountability]. Z Erziehwiss 17(1):1–7

Wright BD, Mok M (2000) Understanding Rasch measurement: Rasch models overview. J Appl Meas 1(1):83–106

Wu ML, Adams RJ, Wilson MR (1998) ACER ConQuest: generalised item response modelling software. Australian Council for Educational Research Ltd., Camberwell

Authors’ contributions

All authors had full access to all data in the study. All authors contributed to drafting and revising the paper, approved the final manuscript for publication, and agreed to be accountable for ensuring that questions related to the accuracy or integrity of any part of the work are appropriately investigated and resolved. UH obtained the funding for the project ManKobE (Mathematics and Science Competencies in Vocational Education and Training). UH, JG, and SG conceptualized the test instrument PROKLAS. SG designed, developed, and conducted the research study. JG and SG analyzed the data. SG wrote the manuscript. JG and DC contributed to the writing, review, and revision of the manuscript.

Authors’ information

STEPHAN GANTNER (SG) is a postdoctoral researcher in the Department of Biology Education at the Leibniz Institute for Science and Mathematics Education (IPN) at the University of Kiel (Germany). He studied biology in Cologne, Tübingen, and Munich, where he obtained his PhD in molecular microbiology at the Ludwig-Maximilians-University. He started his career as a researcher in molecular microbiology at the CME, Michigan State University (MI, USA), and continued at the Department for Limnology at the University of Uppsala (Sweden) and at the Department for Forest Mycology and Pathology at the SLU in Uppsala (Sweden). In 2012, he joined the IPN. Since then, his research has focused on Vocational Education and Training (VET) for laboratory assistants in biology and medicine and on VET students’ competency development.

JÖRG GROßSCHEDL (JG) studied biology, chemistry, and education at the Ludwig-Maximilians-University in Munich. He received a PhD in biology education from the University of Kiel (Germany) on the topic of performance assessment and instructional strategies. Between 2007 and 2016, he was a researcher in biology education at the Leibniz Institute for Science and Mathematics Education (IPN) at the University of Kiel. Since 2016, he has been an Assistant Professor in the Department of Biology Education at the University of Cologne. His main field of research is the assessment of teachers’ professional competencies.

DEVASMITA CHAKRAVERTY (DC) is a postdoctoral researcher in Biology Education at the Leibniz Institute for Science and Mathematics Education (IPN) at the University of Kiel (Germany). She has advanced degrees in Science Education, Public Health, and Environmental Sciences. Her research focuses on Science, Technology, Engineering, and Mathematics (STEM) workforce development, with an emphasis on women and underrepresented racial/ethnic minorities. She earned her MPH in Toxicology from the University of Washington (2008) and her PhD in Science Education from the University of Virginia (2013). Her doctoral dissertation examined barriers for women and underrepresented racial/ethnic minorities in the fields of medicine and biomedical science research.

UTE HARMS (UH) studied biology, German literature and language, philosophy, and general education and received a PhD in botany from the University of Kiel (Germany). She started her research in science education at the Leibniz Institute for Science and Mathematics Education (IPN) at the University of Kiel and at the University of Oldenburg. In 2000, she became Full Professor for biology education at the Ludwig-Maximilians-University in Munich. In 2006, she took up a chair at the University of Bremen. In 2007, she received a chair for biology education at the University of Kiel and became Director at the IPN.

Acknowledgements

The development of PROKLAS was funded by the Leibniz Association (WGL; grant number SAW-2012-IPN-2), Joint Initiative for Research and Innovation (SAW), in the context of the project “Mathematics and Science Competencies in Vocational Education and Training (ManKobE)”.

Competing interests

The authors declare that they have no competing interests.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Gantner, S., Großschedl, J., Chakraverty, D. et al. Assessing what prospective laboratory assistants in biochemistry and cell biology know: development and validation of the test instrument PROKLAS. Empirical Res Voc Ed Train 8, 3 (2016). https://doi.org/10.1186/s40461-016-0029-9

DOI: https://doi.org/10.1186/s40461-016-0029-9