Abstract

In this paper, we study an initial-boundary value problem for a two-dimensional fourth-order hyperbolic equation. First, the fourth-order equation is written as a system of two second-order equations by introducing two new variables. Next, to design an implicit compact finite difference scheme for the problem, we apply compact finite difference operators to obtain a fourth-order discretization of the second-order spatial derivatives, and the Crank–Nicolson scheme to obtain a second-order discretization of the first-order time derivative. We prove the unconditional stability of the scheme by the Fourier method and give a convergence analysis by the energy method. Numerical results are provided to verify the accuracy and efficiency of the scheme.

1 Introduction

Let \(\varOmega =(0,a)\times (0,b)\). We consider the two-dimensional fourth-order hyperbolic equation with initial and boundary conditions:

where \(u_{tt}=\frac{\partial ^{2}u}{\partial t^{2}}\) and \(\Delta ^{2} u=\frac{ \partial ^{4}u}{\partial x^{4}}+2\frac{\partial ^{4}u}{\partial x^{2} \partial y^{2}}+\frac{\partial ^{4}u}{\partial y^{4}}\). Here \(f(x,y,t)\) is the given source term, \(f_{1}(x,y)\) and \(f_{2}(x,y)\) are the initial value functions, \(h_{1}(y,t)\), \(h_{2}(y,t)\), \(h_{3}(x,t)\), \(h_{4}(x,t)\) and \(g_{1}(y,t)\), \(g_{2}(y,t)\), \(g_{3}(x,t)\), \(g_{4}(x,t)\) are the boundary value functions, and ρ is a given positive constant.

Two-dimensional fourth-order hyperbolic equations have an important physical background and a wide range of applications. For example, they describe the vibration of a plate and arise in large-scale civil engineering, spaceflight, and active noise control (see [1,2,3,4,5]). Compared with second-order equations [6,7,8,9,10,11], computing numerical solutions of two-dimensional fourth-order equations usually requires higher-order finite element methods or thirteen-point difference schemes. The former are computationally demanding. The latter are difficult to apply near the boundary and achieve only second-order accuracy.

The compact finite difference method, compared to the traditional finite difference method, has a narrower bandwidth and achieves higher accuracy. Hence, compact schemes have long been studied, for example, in [12, 13]. In the last few years, high-order computational methods for different kinds of differential equations have been studied (see [6,7,8, 12,13,14,15,16,17]). In [14,15,16], fourth-order equations are written as a system of two second-order equations by introducing two new variables. Then, to design a high-order scheme for the problem, the spatial derivatives are discretized by the compact finite difference method or the compact finite volume method.

In this paper, we apply similar ideas to the two-dimensional fourth-order hyperbolic equation (1). First, the fourth-order equation is written as a system of two second-order equations by introducing two new variables. Next, we use the compact operators to approximate the second-order derivatives in the space variables and rewrite the problem as an initial value problem for a system of ordinary differential equations. Then we develop a two-time-level compact finite difference scheme. We prove the stability of the high-order compact difference scheme by the Fourier method and its convergence by the energy method.

The rest of the paper is arranged as follows. In Sect. 2 we formulate the fourth-order compact finite difference scheme for problem (1). A stability analysis is given by the Fourier method in Sect. 3, and a convergence analysis is given by the energy method in Sect. 4. Numerical experiments are performed in Sect. 5 to test the accuracy and efficiency of the proposed compact finite difference scheme. Conclusions are given in Sect. 6.

2 Compact finite difference scheme

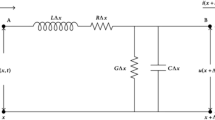

To design a proper finite difference scheme, we set \(v=-\rho \Delta u\), \(w=\frac{ \partial u}{\partial t}\) and reformulate problem (1) in terms of the coupled system of second-order equations

Obviously, u is a solution to (1), if and only if \((v,w)\) is a solution to (2).
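The equivalence can be checked by direct substitution. The following is only a sketch: it assumes that the governing equation in (1) reads \(u_{tt}+\rho \Delta ^{2}u=f\) (an assumption inferred from the definitions of ρ, \(\Delta ^{2}u\) and f given with (1)), so the exact form and labelling of (2a) and (2b) may differ.

$$ w_{t}=u_{tt}=f-\rho \Delta ^{2}u=f+\Delta (-\rho \Delta u)=f+\Delta v,\qquad v_{t}=-\rho \Delta u_{t}=-\rho \Delta w. $$

Under this assumption the equation, which is second order in time and fourth order in space, becomes the pair \(w_{t}-\Delta v=f\), \(v_{t}+\rho \Delta w=0\), which is first order in time and second order in space. This is what allows a Crank–Nicolson discretization in time and a compact discretization of the second-order spatial derivatives.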

Let \(h_{x}=\frac{a}{N_{x}+1}\), \(h_{y}=\frac{b}{N_{y}+1}\) be the spatial steps in the x and y directions, \(\tau =\frac{T}{N}\) be the time step and \(x_{i}=ih_{x}\), \(0\leq i\leq N_{x}+1\), \(y_{j}=jh_{y}\), \(0\leq j \leq N_{y}+1\), \(t_{k}=k\tau \), \(0\leq k\leq N\), \(h=\max \{h_{x},h_{y}\}\). The exact solutions u, v, w at the point \((x_{i},y_{j},t_{k})\) are denoted by \(u_{ij}^{k}\), \(v_{ij}^{k}\), \(w_{ij}^{k}\), and the numerical solutions at the same mesh point are denoted by \(U_{ij}^{k}\), \(V _{ij}^{k}\), \(W_{ij}^{k}\). At each time level the number of unknowns is \(N_{xy}=N_{x}\times N_{y}\). In addition, we set \(t_{k+\frac{1}{2}}= \frac{1}{2}(t_{k}+t_{k+1})\).

Our compact method for (1) is based on the system (2). To do this, we set

Using the Crank–Nicolson method to approximate (2a) and (2b) at the point \((x_{i},y_{j},t_{k+\frac{1}{2}})\), we get

where

Setting \(v_{xx}=\theta \), \(v_{yy}=\vartheta \) and \(w_{xx}=\varphi \), \(w_{yy}= \psi \), (3a) and (3b) can be rewritten as

Defining the difference operators

and applying a Taylor expansion, we get

where

Denote \(\mathbf{A}_{h}=\mathbf{A}_{x}\mathbf{A}_{y}\) and \(\mathbf{B} _{h}=\mathbf{A}_{y}\delta ^{2}_{x}+\mathbf{A}_{x}\delta ^{2}_{y}\). Hence, multiplying by \(\mathbf{A}_{h}\) both sides of (4), we get

where

Replacing \(v_{i,j}^{k}\), \(w_{i,j}^{k}\) by their approximations \(V_{i,j}^{k}\), \(W_{i,j}^{k}\) and neglecting the higher-order terms, we derive a finite difference scheme as follows:

where the discretized boundary values and initial values are denoted by

Remark 2.1

From (5) it is easy to see that the local truncation error for this scheme is \(O(\tau ^{2}+h^{4})\).
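The spatial part of this truncation error can be checked numerically. The sketch below assumes the standard compact operator \(\mathbf{A}_{x}=I+\frac{h_{x}^{2}}{12}\delta _{x}^{2}\), with \(\delta _{x}^{2}\) the usual second-order central difference (this is consistent with the bounds in Lemma 4.2, but it is an assumption here). The function name `compact_residual` and the test function \(\sin x\) are illustrative only. The code measures the residual \(\mathbf{A}_{x}u_{xx}-\delta _{x}^{2}u\), which should shrink by roughly a factor of 16 when the step is halved.

```python
import numpy as np

def compact_residual(h):
    """Max of |A_x u_xx - delta_x^2 u| at interior nodes for u = sin(x) on [0, pi]."""
    n = int(round(np.pi / h))
    x = np.linspace(0.0, np.pi, n + 1)
    h = x[1] - x[0]
    u = np.sin(x)
    uxx = -np.sin(x)  # exact second derivative
    # Second-order central difference delta_x^2 u at interior nodes.
    d2u = (u[2:] - 2.0 * u[1:-1] + u[:-2]) / h**2
    # (I + h^2/12 * delta_x^2) applied to u_xx equals the (1, 10, 1)/12 average.
    a_uxx = (uxx[2:] + 10.0 * uxx[1:-1] + uxx[:-2]) / 12.0
    return np.max(np.abs(a_uxx - d2u))

r1 = compact_residual(np.pi / 16)
r2 = compact_residual(np.pi / 32)
observed_order = np.log2(r1 / r2)
print(observed_order)
```

An observed order close to 4 is what the \(h^{4}\) term in the remark predicts. The \(\tau ^{2}\) term comes from the Crank–Nicolson averaging and is not tested by this snippet.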

3 Stability analysis

In this section, we adopt the Fourier method to analyze stability of the scheme (6). Assume that \(h=g(\tau )\), where \(g(\tau )\) is a continuous function and \(g(0)=0\). In order to prove stability of the scheme (6), we consider a difference scheme of the form

where \(A_{m}\) and \(B_{m}\) are \(2\times 2\) matrices, \(N_{0}\) and \(N_{1}\) are finite sets containing 0, positive integers and negative integers, \(\mathbf{U}_{j}^{m}\) is a two-dimensional column vector. Using the Fourier method we get the growth factor \(G(x_{i},y_{j})\). Then the scheme (7) is stable if and only if the family of matrices

is uniformly bounded. We introduce the following two lemmas.

Lemma 3.1

([18])

To prove that the family of matrices (8) is uniformly bounded, it is necessary and sufficient to prove that the family of matrices

is uniformly bounded.

Proof

Sufficiency is obvious; we now prove necessity. We use the meshes with \(N_{x}=2^{m}\), \(N_{y}=2^{k}\), \(m=1, 2,\ldots \), \(k=1,2,\ldots \). Denote by \((x_{p},y_{q})\) the grid points in the mesh for given m and k, where \(x_{p}=\frac{a}{2^{p}}\), \(y_{q}=\frac{b}{2^{q}}\), \(p=1,2,\ldots ,m\), \(q=1,2,\ldots ,k\). Assume

where M is a constant independent of the mesh. Letting \(\tau \rightarrow 0\), and therefore \(h\rightarrow 0\), we obtain

Noting that the bisecting points \(\{(x_{p},y_{q})\}\) are dense on \([0,a]\times [0,b]\), and \(G(x,y)\) is a continuous function, we get

□

Lemma 3.2

([18])

Assume \(G(x,y)\) is a \(2\times 2\) matrix, and let \(g_{ij}\) denote its element in the ith row and the jth column. Let the eigenvalues of G be \(\lambda _{1}\) and \(\lambda _{2}\). The family of matrices \(\{G^{n}(x,y)\}\) is uniformly bounded if and only if

Remark 3.1

(1) From the relationship between the roots and the coefficients of the quadratic equation \(\lambda ^{2}-b \lambda -c=0\) with real coefficients, the moduli of both roots are at most one if and only if

$$ |b|\leq 1-c \leq 2. \tag{11} $$

(2) In the condition \((\beta )\), we need to calculate the norm of a \(2\times 2\) matrix. We usually use the Frobenius norm, which is defined for a matrix \(\mathbf{K}=(k_{ij})\) as

$$ \|\mathbf{K}\|_{F}=\Biggl(\sum _{i,j=1}^{2}|k_{ij}|^{2} \Biggr)^{\frac{1}{2}}. \tag{12} $$
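The root condition (11) and the Frobenius norm (12) are easy to check numerically. The following is a minimal sketch, assuming real coefficients b and c; the helper names are hypothetical. It compares condition (11) with the roots computed by `numpy.roots` on random samples, skipping samples that lie within \(10^{-3}\) of the boundary of the admissible region so that rounding does not decide the comparison.

```python
import numpy as np

def satisfies_condition_11(b, c):
    """Condition (11): |b| <= 1 - c <= 2, for real b and c."""
    return abs(b) <= 1.0 - c <= 2.0

def max_root_modulus(b, c):
    """Largest modulus among the roots of lambda^2 - b*lambda - c = 0."""
    return float(np.max(np.abs(np.roots([1.0, -b, -c]))))

def frobenius_norm(K):
    """Frobenius norm (12) of a matrix given as a nested list or array."""
    return float(np.sqrt(np.sum(np.abs(np.asarray(K)) ** 2)))

rng = np.random.default_rng(0)
mismatches = 0
for b, c in rng.uniform(-3.0, 3.0, size=(2000, 2)):
    # slack > 0 exactly when both inequalities in (11) hold strictly.
    slack = min(1.0 - c - abs(b), 1.0 + c)
    if abs(slack) < 1e-3:
        continue  # too close to the boundary for a floating-point comparison
    if satisfies_condition_11(b, c) != (max_root_modulus(b, c) <= 1.0):
        mismatches += 1
print(mismatches)
```

For example, \(b=0\), \(c=-\frac{1}{4}\) gives the roots \(\pm \frac{1}{2}i\) and satisfies (11), while \(b=3\), \(c=0\) gives the roots 0 and 3 and violates it.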

Theorem 3.1

The scheme (6) is unconditionally stable.

Proof

We use the Fourier method to prove the stability of the scheme (6). Using the definitions of \({A}_{h}\) and \({B}_{h}\), the scheme (6) is written as

where

Let \(W_{jm}^{k}=v_{1}^{k}e^{i\sigma _{1} jh_{x}}e^{i\sigma _{2} mh_{y}}\), \(V_{jm}^{k}=v_{2}^{k}e^{i\sigma _{1}jh_{x}}e^{i\sigma _{2}mh_{y}}\), where \(i=\sqrt{-1}\), \(v_{1}^{k}\) and \(v_{2}^{k}\) are the amplitudes at time level k, and \(\sigma _{1}\) and \(\sigma _{2}\) are the wave numbers in the x and y directions. Inserting these expressions into the coupled scheme (13), we have

and

Dividing the above equations by \(e^{i\sigma _{1} jh_{x}}e^{i\sigma _{2} mh_{y}}\), we get

and

Equations (14) and (15) can be written as

where

Then from (16) we immediately get the growth matrix of the scheme (13),

By calculation, we obtain the quadratic equation for the eigenvalues of the growth matrix \(G(\sigma _{1} h_{x},\sigma _{2} h_{y})\) as follows:

Obviously, we have

That is, the condition (10α) in Lemma 3.2 is satisfied.

Next, we have

Hence, there exists a constant \(M \geq \frac{1}{2}\frac{\sqrt{1+ \rho ^{2}}}{\sqrt{\rho }}\) such that (10β) holds for any \(r_{x}>0\), \(r_{y}>0\). Then from Lemma 3.2 we know that the difference scheme (13) is stable. □
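The statement of Theorem 3.1 can be illustrated numerically. The sketch below is a reconstruction under stated assumptions, not the matrix G of the proof: it assumes that (1) reads \(u_{tt}+\rho \Delta ^{2}u=f\), so that for a single Fourier mode the homogeneous Crank–Nicolson scheme takes the form \((I+\mu J)\mathbf{z}^{k+1}=(I-\mu J)\mathbf{z}^{k}\) with \(\mathbf{z}=(v_{1},v_{2})^{T}\), \(J=\bigl({\begin{smallmatrix} 0&1 \\ -\rho &0 \end{smallmatrix}}\bigr)\), and a parameter \(\mu \geq 0\) that collects the step-size ratios and the symbols of \(\mathbf{A}_{h}\) and \(\mathbf{B}_{h}\). The names `growth_matrix` and `worst_case` are hypothetical.

```python
import numpy as np

def growth_matrix(mu, rho):
    """G = (I + mu*J)^{-1} (I - mu*J) for the assumed mode-wise scheme."""
    J = np.array([[0.0, 1.0], [-rho, 0.0]])
    I = np.eye(2)
    return np.linalg.solve(I + mu * J, I - mu * J)

def worst_case(rho, mus, n_max=200):
    """Largest spectral radius of G and largest Frobenius norm of G^n, n <= n_max."""
    worst_radius, worst_power_norm = 0.0, 0.0
    for mu in mus:
        G = growth_matrix(mu, rho)
        worst_radius = max(worst_radius, np.max(np.abs(np.linalg.eigvals(G))))
        P = np.eye(2)
        for _ in range(n_max):
            P = P @ G
            worst_power_norm = max(worst_power_norm, np.linalg.norm(P, "fro"))
    return worst_radius, worst_power_norm

mus = np.logspace(-3, 3, 25)  # from very small to very large step-size ratios
radius, power_norm = worst_case(rho=4.0, mus=mus)
print(radius, power_norm)
```

In this model the eigenvalues of G are \((1\mp i\mu \sqrt{\rho })/(1\pm i\mu \sqrt{\rho })\), which have modulus one for every \(\mu \), and the powers \(G^{n}\) stay bounded independently of \(\mu \). This matches the unconditional stability claimed in Theorem 3.1.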

4 Error analysis

In this section we give the convergence analysis by the energy method. We introduce the spaces \(S_{h}=\{u|u\in R^{(N_{x}+2)\times (N_{y}+2)} \}\), \(S_{h}^{0}=\{u|u\in R^{(N_{x}+2)\times (N_{y}+2)},u_{0,j}=u_{N_{x}+1,j}=u_{i,0}=u_{i,N_{y}+1}=0,0\leq i\leq N_{x}+1,0\leq j\leq N_{y}+1\}\). For all \(u, v\in S_{h}^{0}\), we define inner products and norms as follows:

Similarly, \((\delta _{y}^{2}u,v)\), \((\delta _{y}u,\delta _{y}v)\), \(\|\delta _{y}u\|^{2}\) and \(\|\delta _{y}\delta _{x}u\|^{2}\) can be defined.

For the error analysis, we first note that our numerical scheme is based on (5) with higher-order terms dropped,

where

with \(C_{1}\), \(C_{2}\) positive constants. Moreover, our numerical scheme (6) is equivalent to

Letting \(\xi _{i,j}^{k}=w_{i,j}^{k}-W_{i,j}^{k}\) and \(\eta _{i,j}^{k}=v_{i,j}^{k}-V_{i,j}^{k}\) denote the approximation errors, we get the error equations

Using the discrete Green formula, we know that on \(S_{h}^{0}\) the difference operators \(\delta _{x}^{2}\) and \(\delta _{y}^{2}\) are self-adjoint and that \(-\delta _{x}^{2}\) and \(-\delta _{y}^{2}\) are positive definite. Likewise, the difference operators \(\mathbf{A}_{h}\) and \(\mathbf{B}_{h}\) are self-adjoint, with \(\mathbf{A}_{h}\) and \(-\mathbf{B}_{h}\) positive definite. We first state the lemmas needed for the error estimate.

Lemma 4.1

([7])

For any grid function \(u, v\in S_{h}^{0}\), we have

(1) \((\mathbf{A}_{h}u,v)=(u,\mathbf{A}_{h}v)\), \((\mathbf{B}_{h}u,v)=(u,\mathbf{B}_{h}v)\),

(2) \((\delta _{x}^{2}u,v)=(u,\delta _{x}^{2}v)\), \((\delta _{y}^{2}u,v)=(u,\delta _{y}^{2}v)\).

Lemma 4.2

For any grid function \(u\in S_{h}^{0}\), we have

(1) \(\frac{2}{3}\|u\|^{2}\leq (\mathbf{A}_{x}u,u)\leq \|u\|^{2}\), \(\frac{2}{3}\|u\|^{2}\leq (\mathbf{A}_{y}u,u)\leq \|u\|^{2}\);

(2) \(\frac{4}{9}\|u\|^{2}\leq (\mathbf{A}_{h}u,u)\leq \|u\|^{2}\);

(3) \(h_{x}^{2}(\mathbf{A}_{y}\delta _{x}u,\delta _{x}u)\leq 4( \mathbf{A}_{y}u,u)\), \(h_{y}^{2}(\mathbf{A}_{x}\delta _{y}u,\delta _{y}u) \leq 4(\mathbf{A}_{x}u,u)\).
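Bounds (1) and (2) can be checked through the eigenvalues of the operator matrices, since \((\mathbf{A}u,u)/\|u\|^{2}\) is a Rayleigh quotient and the mesh weights in the inner product cancel. The sketch below assumes the standard compact operator \(\mathbf{A}_{x}=I+\frac{h_{x}^{2}}{12}\delta _{x}^{2}\) acting on grid functions that vanish on the boundary, in which case its matrix is tridiagonal with entries \(\frac{1}{12},\frac{10}{12},\frac{1}{12}\). The helper name is hypothetical, and the same number of interior nodes is used in both directions for brevity.

```python
import numpy as np

def compact_operator_matrix(n):
    """Matrix of A_x = I + (h^2/12) delta_x^2 on n interior nodes, zero boundary values."""
    off = np.ones(n - 1) / 12.0
    return np.diag(np.full(n, 10.0 / 12.0)) + np.diag(off, 1) + np.diag(off, -1)

n = 15
A1 = compact_operator_matrix(n)
eig_1d = np.linalg.eigvalsh(A1)
# A_h = A_x A_y on an n-by-n interior grid is the Kronecker product.
eig_2d = np.linalg.eigvalsh(np.kron(A1, A1))
print(eig_1d.min(), eig_1d.max(), eig_2d.min(), eig_2d.max())
```

The one-dimensional eigenvalues are \(\frac{5}{6}+\frac{1}{6}\cos \frac{k\pi }{n+1}\), \(k=1,\ldots ,n\), which lie strictly between \(\frac{2}{3}\) and 1. The eigenvalues of the two-dimensional operator are their pairwise products and therefore lie between \(\frac{4}{9}\) and 1.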

Theorem 4.1

Let \(\{w^{k},v^{k}\}\) be the solution of Eq. (2) and \(\{W^{k},V^{k} \}\) be the solution of scheme (6). For the compact finite difference scheme, assuming that both \(r_{x}\) and \(r_{y}\) are bounded, we have

Proof

Taking the inner product with \(\xi ^{k+1}+\xi ^{k}\) on both sides of (24), we have

Taking the inner product with \(\eta ^{k+1}+\eta ^{k}\) on both sides of (25), we have

From Lemma 4.1 we have

Multiplying by ρ both sides of (27), we have

Combining (28) with (30), we obtain

Using Lemma 4.1, we obtain

By the inequalities \(ab\leq \frac{1}{2}(a^{2}+b^{2})\) and \((a+b)^{2} \leq 2(a^{2}+b^{2})\), we get

Summing over k from 0 to n, we obtain

which implies that

From Lemma 4.2 and \(\xi _{i,j}^{0}=0\), \(\eta _{i,j}^{0}=0\) we have

which implies that

Applying the discrete Gronwall lemma to (32), we get

From (23) we obtain

Hence

This completes the proof. □

5 Numerical experiments

In this section we give some numerical results for the two-dimensional model problems given below. These results were obtained using MATLAB.

Example 1

We seek the numerical solution for the following problem:

The exact solution is taken as \(u(x,y,t)=e^{-\pi t}\sin (\pi x) \sin (\pi y)\). The source term \(f(x,y,t)\) and the initial and boundary value functions in (36) are obtained from \(u(x,y,t)\). We have \(v(x,y,t)=2\pi ^{2}e ^{-\pi t}\sin (\pi x)\sin (\pi y)\) and \(w(x,y,t)=-\pi e^{-\pi t} \sin (\pi x)\sin (\pi y)\). The compact difference scheme (6) is used to solve the problem (36). For comparison with our method, the central difference scheme is also used to solve this problem.
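A small experiment of this kind can be sketched in Python (the authors' MATLAB code is not available here). Everything in the sketch is an assumption labelled as such: problem (36) is taken to be \(u_{tt}+\rho \Delta ^{2}u=f\) on the unit square with \(\rho =1\) (consistent with the stated v), the compact operator is taken to be \(I+\frac{h^{2}}{12}\delta ^{2}\) in each direction, and the scheme is taken to be the Crank–Nicolson system \(\mathbf{A}_{h}\frac{W^{k+1}-W^{k}}{\tau }-\mathbf{B}_{h}\frac{V^{k+1}+V^{k}}{2}=\mathbf{A}_{h}f^{k+\frac{1}{2}}\), \(\mathbf{A}_{h}\frac{V^{k+1}-V^{k}}{\tau }+\rho \mathbf{B}_{h}\frac{W^{k+1}+W^{k}}{2}=0\). Because v, w and f vanish on the boundary for this solution, only interior unknowns are needed. The function name `solve_example` is hypothetical.

```python
import numpy as np

def solve_example(n, steps, T=1.0, rho=1.0):
    """Assumed Crank-Nicolson compact scheme on the unit square, zero boundary data.

    Returns the max-norm errors of W and V at time T against the exact
    w = u_t and v = -rho * Laplace(u) for u = exp(-pi t) sin(pi x) sin(pi y).
    """
    h = 1.0 / (n + 1)
    tau = T / steps
    x = np.linspace(h, 1.0 - h, n)                       # interior nodes
    X, Y = np.meshgrid(x, x, indexing="ij")
    S = (np.sin(np.pi * X) * np.sin(np.pi * Y)).ravel()  # spatial profile

    I = np.eye(n)
    D2 = (np.diag(np.full(n, -2.0)) + np.diag(np.ones(n - 1), 1)
          + np.diag(np.ones(n - 1), -1)) / h**2          # delta^2 with zero boundary
    A1 = I + (h**2 / 12.0) * D2                          # assumed compact operator
    Ah = np.kron(A1, A1)                                 # A_h = A_x A_y
    Bh = np.kron(D2, A1) + np.kron(A1, D2)               # B_h = A_y d_x^2 + A_x d_y^2

    w_exact = lambda t: -np.pi * np.exp(-np.pi * t) * S
    v_exact = lambda t: 2.0 * rho * np.pi**2 * np.exp(-np.pi * t) * S
    f = lambda t: (np.pi**2 + 4.0 * rho * np.pi**4) * np.exp(-np.pi * t) * S

    lhs = np.block([[Ah / tau, -Bh / 2.0], [rho * Bh / 2.0, Ah / tau]])
    rhs_mat = np.block([[Ah / tau, Bh / 2.0], [-rho * Bh / 2.0, Ah / tau]])
    lhs_inv = np.linalg.inv(lhs)  # acceptable for a small dense demo

    z = np.concatenate([w_exact(0.0), v_exact(0.0)])     # unknowns (W, V)
    m = n * n
    for k in range(steps):
        rhs = rhs_mat @ z
        rhs[:m] += Ah @ f((k + 0.5) * tau)               # source at t_{k+1/2}
        z = lhs_inv @ rhs
    err_w = np.max(np.abs(z[:m] - w_exact(T)))
    err_v = np.max(np.abs(z[m:] - v_exact(T)))
    return err_w, err_v

err_w, err_v = solve_example(n=7, steps=200)
print(err_w, err_v)
```

The dense Kronecker-product matrices keep the sketch short; a practical implementation would use sparse storage and a sparse or factorized solver. The errors printed here are not the values reported in the paper's tables.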

Errors and computational orders in the \(L^{2}\)-norm and \(L^{\infty }\)-norm for the compact difference scheme and the central difference scheme are given in Tables 1–4. These tables show that the compact difference scheme achieves higher accuracy and efficiency than the central difference scheme on the same mesh. The exact solutions \(v(x,y,t)\) and \(w(x,y,t)\) on a mesh with \(h_{x}=h_{y}=0.05\) are plotted in Figs. 1 and 2 for \(t=1\), respectively. The numerical solutions \(\{V_{ij}^{n+1}\}\) and \(\{W_{ij} ^{n+1}\}\) on a mesh with \(h_{x}=h_{y}=0.05\) are plotted in Figs. 3 and 4 for \(t=1\).
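The computational order between two meshes is commonly computed as \(\log (e_{1}/e_{2})/\log (h_{1}/h_{2})\), where \(e_{1}\), \(e_{2}\) are the errors for the step sizes \(h_{1}\), \(h_{2}\). The sketch below assumes this usual definition (the paper's formula is not shown in this text), and the helper name and the sample errors are synthetic, not data from the tables.

```python
import math

def observed_orders(step_sizes, errors):
    """Observed order between consecutive meshes: log(e1/e2) / log(h1/h2)."""
    pairs = list(zip(step_sizes, errors))
    return [math.log(e1 / e2) / math.log(h1 / h2)
            for (h1, e1), (h2, e2) in zip(pairs, pairs[1:])]

# Synthetic errors that behave exactly like C * h^4 (not data from the paper).
hs = [1 / 4, 1 / 8, 1 / 16]
errs = [3.0 * h**4 for h in hs]
orders = observed_orders(hs, errs)
print(orders)
```

For a scheme with truncation error \(O(\tau ^{2}+h^{4})\), the time step has to be refined together with the mesh, for instance with \(\tau \) proportional to \(h^{2}\), for the observed spatial order to approach 4.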

Example 2

We consider the numerical solution for the problem (36) with the exact solution

The source term \(f(x,y,t)\) and the initial and boundary value functions in (36) are then obtained from \(u(x,y,t)\). We also obtain the functions

The compact difference scheme (6) is used to solve the non-homogeneous problem with \(\beta =10\) and \(\beta =\frac{1}{10}\).

Errors and computational orders in the \(L^{2}\)-norm and \(L^{\infty }\)-norm for the compact difference scheme and the central difference scheme with \(\beta =10\) are given in Tables 5–8. Tables 9–12 show the errors and computational orders in the \(L^{2}\)-norm and \(L^{\infty }\)-norm for the two schemes with \(\beta =\frac{1}{10}\). These tables show that the compact difference scheme achieves higher accuracy than the central difference scheme on the same mesh when both are applied to the problem based on the Gaussian pulse.

6 Conclusions

In this article, we have developed a compact finite difference scheme for a two-dimensional fourth-order hyperbolic equation. The stability of the scheme is proved by Fourier analysis, and the convergence of the scheme is obtained by the energy method. The numerical results show that the scheme has a high order of accuracy and is efficient.

References

Conte, S.D.: Numerical solution of vibration problems in two space variables. Pac. J. Math. 4, 1535–1544 (1957)

Katsikadelis, J.T., Kandilas, C.B.: A flexibility matrix solution of the vibration problem of plates based on the boundary element method. Acta Mech. 83, 51–60 (1990)

Huang, Q.: Research on Sound Radiation of the Plate with Two Opposite Sides Simply Supported and Other Two Free. Southwest Jiaotong University, Chengdu (2007) (in Chinese)

Yuan, X., Li, S.: Natural vibration analysis of thin rectangular plate under different boundary conditions. Aeroengine 31, 39–43 (2005)

Luo, Z.D., Jin, S.J., Chen, J.: A reduced-order extrapolation central difference scheme based on POD for two-dimensional fourth-order hyperbolic equations. Appl. Math. Comput. 289, 396–408 (2016)

Ding, H.F., Zhang, Y.X.: A new fourth-order compact finite difference scheme for the two-dimensional second-order hyperbolic equation. J. Comput. Appl. Math. 230, 626–632 (2009)

Cui, M.R.: High order compact alternating direction implicit method for the generalized sine-Gordon equation. J. Comput. Appl. Math. 235, 837–849 (2010)

Mohebbi, A., Dehghan, M.: High-order solution of one-dimensional sine-Gordon equation using compact finite difference and DIRKN methods. Math. Comput. Model. 51, 537–549 (2010)

Dehghan, M., Salehi, R.: A method based on meshless approach for the numerical solution of the two-space dimensional hyperbolic telegraph equation. Math. Methods Appl. Sci. 35, 1220–1233 (2012)

Dehghan, M., Ghesmati, A.: Combination of meshless local weak and strong (MLWS) forms to solve the two dimensional hyperbolic telegraph equation. Eng. Anal. Bound. Elem. 34, 324–336 (2010)

Yang, Q.: Numerical analysis of partially penalized immersed finite element methods for hyperbolic interface problems. Numer. Math., Theory Methods Appl. 11, 272–298 (2018)

Lele, S.K.: Compact finite difference schemes with spectral-like resolution. J. Comput. Phys. 103, 16–42 (1992)

Sun, Z.Z.: Numerical Solutions of Partial Differential Equations. Science Press, Beijing (2012) (in Chinese)

Cheng, T., Liu, L.B.: Compact difference scheme with high accuracy for solving fourth order parabolic equation. Coll. Math. 2(26), 71–75 (2010) (in Chinese)

Fu, Y.Y., Yang, Q.: Compact finite volume scheme and its error analysis for fourth-order linear equation. J. Shandong Norm. Univ. 3(32), 16–21 (2017) (in Chinese)

Wang, Y.M., Guo, B.Y.: Fourth-order compact finite difference method for fourth-order nonlinear elliptic boundary value problems. J. Comput. Appl. Math. 221, 76–97 (2008)

Wang, Y.M.: Error analysis of a compact finite difference method for fourth-order nonlinear elliptic boundary value problems. Appl. Numer. Math. 120, 53–67 (2017)

Li, R.H., Liu, B.: Numerical Solutions of Partial Differential Equations. Higher Education Press, Beijing (2008) (in Chinese)

Deng, D., Zhang, C.: A new fourth-order numerical algorithm for a class of three-dimensional nonlinear evolution equations. Numer. Methods Partial Differ. Equ. 29, 102–130 (2013)

Qin, J., Wang, T.: A compact locally one-dimensional finite difference method for nonhomogeneous parabolic differential equations. Int. J. Numer. Methods Biomed. Eng. 27, 128–142 (2011)

Acknowledgements

The authors would like to thank the editor and the referees for their valuable advice on improving this article.

Funding

The work is supported by Shandong Provincial Natural Science Foundation, China (ZR2017MA020).

Contributions

The authors read and approved the final manuscript.

Ethics declarations

Competing interests

The authors declare that they have no competing interests.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Li, Q., Yang, Q. Compact difference scheme for two-dimensional fourth-order hyperbolic equation. Adv Differ Equ 2019, 328 (2019). https://doi.org/10.1186/s13662-019-2094-4
