Abstract

In this paper, we apply a new method, the delayed matrix exponential, to study P-type and D-type learning laws for controlled time-delay systems, so that a varying reference can be tracked accurately within a few iterations on a finite time interval. We present open-loop P-type and D-type asymptotic convergence results in the sense of the λ-norm by means of the spectral radius of a matrix. Finally, four examples are given to illustrate our theoretical results.


1 Introduction

In the past decades, delay differential equations have been widely used in economics, physics, and engineering control. Existence, stability, and periodic solutions have been studied extensively, and there are many interesting and important results; see, for example, [1–6].

Since Uchiyama [7] and Arimoto [8] put forward iterative learning control (ILC for short), a wide variety of ILC problems and related issues have been proposed and studied. Examples include ILC for varying reference trajectories [9, 10], ILC for fractional differential systems [11, 12], ILC for impulsive differential systems [13, 14], and the robustness of ILC [15, 16].

Time delays are common in practical engineering, and their prevalence gives rise to many practical engineering problems. Consequently, control problems for time-delay systems have attracted increasing attention. Sun [17–19] provided some effective methods for studying iterative learning control for time-delay systems.

After reviewing the previous works dealing with ILC problems for delay systems, we observe the following facts:

(i) A delay system \(\dot{x}(t)=Ax(t)+Bx(t-\tau)\), \(t>0\), where A and B are suitable matrices, is mostly treated as an integral system.

(ii) A uniform transition matrix associated with A and B is not derived directly, and the structure of the solution \(x(t)\) is not well characterized on each subinterval \([0,\tau], \ldots,[n\tau,(n+1)\tau]\), \(n\in \mathbb {N}\).

(iii) An extended Gronwall inequality is used to derive convergence results instead of a direct method.

Notably, Khusainov and Shuklin [20] first introduced the delayed matrix exponential to study the following linear differential equation with one delay:

\[
\begin{cases}
\dot{x}(t)=Ax(t)+Bx(t-\tau), & t\geq0,\ \tau>0,\\
x(t)=\varphi(t), & -\tau\leq t\leq0,
\end{cases}
\tag{1}
\]

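where A and B are matrices and φ is an arbitrary continuously differentiable vector function. For \(AB=BA\), a representation of the solution of system (1) is given in terms of the so-called delayed matrix exponential, defined by

\[
e_{\tau}^{Bt}=
\begin{cases}
\Theta, & -\infty<t<-\tau,\\
E, & -\tau\leq t<0,\\
E+B\dfrac{t}{1!}+B^{2}\dfrac{(t-\tau)^{2}}{2!}+\cdots+B^{k}\dfrac{(t-(k-1)\tau)^{k}}{k!}, & (k-1)\tau\leq t<k\tau,\ k=1,2,\ldots,
\end{cases}
\tag{2}
\]

for \(\tau>0\), where Θ and E are the \(n\times n\) zero and identity matrices, respectively.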
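The piecewise-polynomial structure of definition (2) is easy to evaluate directly. The following minimal NumPy sketch does so; NumPy and the function name `delayed_expm` are our own choices and do not come from the paper. It also checks numerically the identity \(\frac{d}{dt}e_{\tau}^{Bt}=Be_{\tau}^{B(t-\tau)}\), which follows from differentiating (2) term by term on each interval \((k-1)\tau<t<k\tau\).

```python
import math
import numpy as np


def delayed_expm(B, t, tau):
    """Evaluate the delayed matrix exponential e_tau^{Bt} of definition (2).

    B   : square matrix (array-like) or scalar
    t   : real time argument
    tau : delay, tau > 0
    """
    B = np.atleast_2d(np.asarray(B, dtype=float))
    n = B.shape[0]
    if t < -tau:
        return np.zeros((n, n))              # Theta for t < -tau
    if t < 0:
        return np.eye(n)                     # E for -tau <= t < 0
    k = int(math.floor(t / tau)) + 1         # (k - 1) tau <= t < k tau
    result = np.eye(n)
    power = np.eye(n)
    for i in range(1, k + 1):
        power = power @ B                    # B^i
        result = result + power * (t - (i - 1) * tau) ** i / math.factorial(i)
    return result


B = np.array([[0.2, 1.0], [-0.5, 0.3]])      # arbitrary test matrix
tau, h = 0.5, 1e-6
for t in (0.37, 0.81, 1.23):
    # central difference of e_tau^{Bt} compared with B e_tau^{B(t - tau)}
    lhs = (delayed_expm(B, t + h, tau) - delayed_expm(B, t - h, tau)) / (2 * h)
    rhs = B @ delayed_expm(B, t - tau, tau)
    print(t, np.max(np.abs(lhs - rhs)))
```

The function is continuous at the breakpoints \(t=k\tau\), because the new term \(B^{k+1}(t-k\tau)^{k+1}/(k+1)!\) vanishes there. Round-off in `math.floor(t / tau)` near a breakpoint is therefore harmless.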

For more recent contributions on oscillating systems with pure delay, relative controllability of systems with pure delay, and asymptotic stability of nonlinear multidelay differential equations, we refer to [21–27] and the references therein.

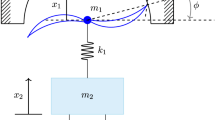

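Inspired by the references mentioned above, we discuss ILC for time-delay systems in this work. More precisely, we study the following linear controlled system with pure delay:

\[
\begin{cases}
\dot{x}_{k}(t)=Ax_{k}(t)+Bx_{k}(t-\tau)+u_{k}(t), & t\in[0,T],\\
x_{k}(t)=\varphi(t), & -\tau\leq t\leq0,\\
y_{k}(t)=Cx_{k}(t)+Du_{k}(t), & t\in[0,T],
\end{cases}
\tag{3}
\]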

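where \(T=N\tau\), \(N\in \mathbb {N}\), is the prescribed length of the iteration domain. Let \(\varphi\in\mathcal{C}_{\tau}^{1}:=\mathcal{C}^{1}([-\tau, 0], \mathbb {R}^{n})\), let A and B be \(n\times n\) matrices such that \(AB=BA\), and let C and D be \(m\times n\) matrices. The index k denotes the kth learning iteration, and the variables \(x_{k}, u_{k}\in \mathbb {R}^{n}\) and \(y_{k}\in \mathbb {R}^{m}\) denote the state, input, and output, respectively. By [20], Corollary 2.2, the state \(x_{k}(\cdot)\) has the form

\[
\begin{aligned}
x_{k}(t)={}&e^{A(t+\tau)}e_{\tau}^{B_{1}t}\varphi(-\tau)+\int_{-\tau}^{0}e^{A(t-s)}e_{\tau}^{B_{1}(t-\tau-s)}\bigl[\dot{\varphi}(s)-A\varphi(s)\bigr]\,ds\\
&+\int_{0}^{t}e^{A(t-s)}e_{\tau}^{B_{1}(t-\tau-s)}u_{k}(s)\,ds,\quad t\in[0,T],
\end{aligned}
\tag{4}
\]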

where \(e_{\tau}^{Bt}\) is defined in (2) and \(B_{1}=e^{-A\tau}B\).
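Representation (4) can be checked numerically in the scalar case. The sketch below makes several assumptions of our own: n = 1, the data later used in Example 5.1 (A = B = 1, τ = 0.5, φ(t) = t), u ≡ 0, and composite Simpson quadrature for the two integrals. It compares the right-hand side of (4) with a hand computation by the method of steps, which gives \(x(t)=0.5e^{t}-t-0.5\) on \([0,0.5]\) and \(x(t)=(0.5-2.75e^{-0.5})e^{t}+0.5te^{t-0.5}+t+1\) on \([0.5,1]\). All helper names are hypothetical.

```python
import math
import numpy as np


def delayed_exp_scalar(b, t, tau, tol=1e-12):
    """Scalar delayed exponential e_tau^{bt}, i.e. definition (2) with n = 1.

    `tol` treats arguments that fall just below -tau through round-off as -tau."""
    if t < -tau - tol:
        return 0.0
    if t < 0:
        return 1.0
    k = int(math.floor(t / tau)) + 1                 # (k - 1) tau <= t < k tau
    return sum(b**i * (t - (i - 1) * tau) ** i / math.factorial(i) for i in range(k + 1))


def simpson(f, a, b, n=2000):
    """Composite Simpson rule for f on [a, b] with an even number n of panels."""
    if b <= a:
        return 0.0
    s = np.linspace(a, b, n + 1)
    w = np.ones(n + 1)
    w[1:-1:2] = 4.0
    w[2:-1:2] = 2.0
    return (b - a) / (3 * n) * float(np.dot(w, [f(si) for si in s]))


def x_formula(t, a, b, tau, phi, dphi, u):
    """Right-hand side of (4) in the scalar case A = a, B = b."""
    b1 = math.exp(-a * tau) * b                      # B_1 = e^{-A tau} B

    def kernel(s):
        return math.exp(a * (t - s)) * delayed_exp_scalar(b1, t - tau - s, tau)

    term1 = math.exp(a * (t + tau)) * delayed_exp_scalar(b1, t, tau) * phi(-tau)
    term2 = simpson(lambda s: kernel(s) * (dphi(s) - a * phi(s)), -tau, 0.0)
    term3 = simpson(lambda s: kernel(s) * u(s), 0.0, t)
    return term1 + term2 + term3


def x_exact(t):
    """Method of steps for x' = x + x(t - 0.5), x(t) = t on [-0.5, 0], u = 0."""
    if t <= 0.5:
        return 0.5 * math.exp(t) - t - 0.5
    return (0.5 - 2.75 * math.exp(-0.5)) * math.exp(t) + 0.5 * t * math.exp(t - 0.5) + t + 1.0


for t in (0.0, 0.2, 0.45, 0.7, 1.0):
    approx = x_formula(t, 1.0, 1.0, 0.5, lambda s: s, lambda s: 1.0, lambda s: 0.0)
    print(f"t = {t:.2f}: formula (4) = {approx:.8f}, method of steps = {x_exact(t):.8f}")
```

The two columns should agree up to the quadrature error. That error comes mainly from the kink of \(e_{\tau}^{B_{1}(t-\tau-s)}\) at argument 0.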

By using the delayed matrix exponential \(e_{\tau}^{Bt}\) defined in (2), our approach offers the following possible advantages:

(i) The structure of the solution \(x(t)\) is characterized on each subinterval.

(ii) A direct method based on tools of mathematical analysis is used to deal with ILC problems.

Let \(y_{d}\) be a desired trajectory, and denote the output error by \(e_{k}=y_{d}-y_{k}\) and the input increment by \(\delta u_{k}=u_{k+1}-u_{k}\).

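For system (3), we consider the open-loop P-type ILC updating law

\[
u_{k+1}(t)=u_{k}(t)+P_{o}e_{k}(t),\quad t\in[0,T].
\tag{5}
\]

For system (3) with \(D=\Theta\), we consider the open-loop D-type ILC updating law

\[
u_{k+1}(t)=u_{k}(t)+D_{o}\dot{e}_{k}(t),\quad t\in[0,T],
\tag{6}
\]

where \(P_{o}, D_{o}\in \mathbb {R}^{n\times m}\) are learning gain matrices.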

The main objective of this paper is to use the delayed matrix exponential to generate control inputs \(u_{k}\) such that the output \(y_{k}\) of the time-delay system tracks the (time-varying) reference trajectory \(y_{d}\) as accurately as possible, with \(y_{k}\to y_{d}\) uniformly on \([0,T]\) as \(k\rightarrow\infty\). For both P-type and D-type ILC, convergence is established in the sense of the λ-norm.

We point out that our method differs from those in the previous references, although it leads to the same convergence results. Since our method relies on an explicit solution formula, it is constructive.

The rest of this paper is organized as follows. In Section 2, we give some notation, concepts, and lemmas. In Sections 3 and 4, we give convergence results for P-type and D-type ILC for system (3), respectively. Examples are given in Section 5 to demonstrate the applicability of our main results.

2 Preliminaries

Let \(J\subset \mathbb {R}\) be a finite interval and \(L(\mathbb {R}^{n})\) be the space of bounded linear operators on \(\mathbb {R}^{n}\). Denote by \(\mathcal{C}(J, \mathbb {R}^{n})\) the Banach space of vector-valued continuous functions from J to \(\mathbb {R}^{n}\) endowed with the ∞-norm \(\Vert x\Vert =\max_{t\in J}\vert x(t)\vert \) for a norm \(\vert \cdot \vert \) on \(\mathbb {R}^{n}\). We also consider on \(\mathcal{C}(J, \mathbb {R}^{n})\) the λ-norm \(\Vert x\Vert _{\lambda}=\sup_{t\in J} \{ e^{-\lambda t}\vert x(t)\vert \}\), \(\lambda>0\). We introduce the space \(\mathcal{C}^{1}(J, \mathbb {R}^{n})=\{x\in\mathcal{C}(J, \mathbb {R}^{n}): \dot{x}\in\mathcal{C}(J, \mathbb {R}^{n}) \}\). For a matrix \(A : \mathbb {R}^{n}\to \mathbb {R}^{n}\), we consider the matrix norm \(\Vert A\Vert =\max_{\vert x\vert =1}\vert Ax\vert \) generated by \(\vert \cdot \vert \).
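On \(J=[0,T]\) the two norms are equivalent, because \(e^{-\lambda T}\Vert x\Vert \leq\Vert x\Vert _{\lambda}\leq\Vert x\Vert \). This is the inequality that later lets the proofs return from λ-norm convergence to uniform convergence. The minimal sketch below illustrates it on a sampled grid for a scalar signal; the grid and the test signal are arbitrary choices of ours.

```python
import numpy as np


def sup_norm(x):
    """Grid version of ||x|| = max_{t in J} |x(t)| for scalar samples."""
    return float(np.max(np.abs(x)))


def lambda_norm(x, t, lam):
    """Grid version of ||x||_lambda = sup_{t in J} e^{-lambda t} |x(t)|."""
    return float(np.max(np.exp(-lam * t) * np.abs(x)))


T = 1.0
t = np.linspace(0.0, T, 1001)            # grid on J = [0, T]
x = np.sin(6.0 * t) + t**2               # hypothetical test signal
for lam in (0.5, 2.0, 10.0):
    # on J = [0, T]: e^{-lambda T} ||x|| <= ||x||_lambda <= ||x||
    print(lam, np.exp(-lam * T) * sup_norm(x), lambda_norm(x, t, lam), sup_norm(x))
```

Larger λ discounts late times more strongly. The equivalence constant \(e^{\lambda T}\) is finite only because J is bounded.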

Lemma 2.1

(see [28], Chapter 2, 2.2.8)

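Let \(A\in L(\mathbb {R}^{n})\). For a given \(\epsilon>0\), there is a norm \(\vert \cdot \vert \) on \(\mathbb {R}^{n}\) such that

\[
\Vert A\Vert \leq\rho(A)+\epsilon,
\]

where \(\Vert A\Vert \) is the matrix norm generated by \(\vert \cdot \vert \) and \(\rho(A)\) denotes the spectral radius of the matrix A.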
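A small sketch can show how such a norm is built. As a simplifying assumption, it treats only an upper-triangular matrix T; a general matrix would first be brought to (complex) Schur form, which this sketch does not do. Take \(D_{\delta}=\mathrm{diag}(1,\delta,\delta^{2},\ldots)\) and the vector norm \(\vert x\vert :=\vert D_{\delta}^{-1}x\vert _{\infty}\). The induced matrix norm of T is then \(\Vert D_{\delta}^{-1}TD_{\delta}\Vert _{\infty}\), in which every entry above the diagonal is multiplied by a positive power of δ. The function name is hypothetical.

```python
import numpy as np


def scaled_norm_matrix(T, eps):
    """For upper-triangular T, return (D, M) with M = D^{-1} T D, D = diag(1, delta, delta^2, ...),
    chosen so that ||M||_inf <= rho(T) + eps."""
    T = np.asarray(T, dtype=float)
    n = T.shape[0]
    off = np.sum(np.abs(np.triu(T, 1)))          # total size of the strictly upper part
    delta = 1.0 if off == 0 else min(1.0, eps / off)
    D = np.diag(delta ** np.arange(n))
    M = np.linalg.solve(D, T @ D)                # entries T_ij * delta^(j - i)
    return D, M


T = np.array([[0.5, 10.0, -3.0],
              [0.0, -0.4, 7.0],
              [0.0, 0.0, 0.2]])
rho = float(max(abs(np.linalg.eigvals(T))))
eps = 0.01
D, M = scaled_norm_matrix(T, eps)
# ordinary infinity norm of T, induced norm in the new vector norm, spectral radius
print(np.linalg.norm(T, np.inf), np.linalg.norm(M, np.inf), rho)
```

The ordinary ∞-norm of this T is far above \(\rho(T)=0.5\). In the rescaled norm, each row sum is at most \(\vert t_{ii}\vert +\delta\sum_{j>i}\vert t_{ij}\vert \leq\rho(T)+\epsilon\).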

Next, we give an alternative formula to compute the solution of linear system with pure delay, which is a direct corollary of [20], Corollary 2.2.

Lemma 2.2

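Let \(J=[0,T]\) and let \(f:J\rightarrow \mathbb {R}^{n}\) be a continuous function. The solution \(x\in \mathcal{C}^{1}(J,\mathbb {R}^{n})\) of

\[
\begin{cases}
\dot{x}(t)=Ax(t)+Bx(t-\tau)+f(t), & t\in J,\\
x(t)=\varphi(t), & -\tau\leq t\leq0,
\end{cases}
\tag{7}
\]

has the form

\[
\begin{aligned}
x(t)={}&e^{A(t+\tau)}\sum_{i=0}^{j}B_{1}^{i}\frac{(t-(i-1)\tau)^{i}}{i!}\varphi(-\tau)\\
&+\int_{-\tau}^{t-j\tau}e^{A(t-s)}\sum_{i=0}^{j}B_{1}^{i}\frac{(t-i\tau-s)^{i}}{i!}\bigl[\dot{\varphi}(s)-A\varphi(s)\bigr]\,ds\\
&+\int_{t-j\tau}^{0}e^{A(t-s)}\sum_{i=0}^{j-1}B_{1}^{i}\frac{(t-i\tau-s)^{i}}{i!}\bigl[\dot{\varphi}(s)-A\varphi(s)\bigr]\,ds\\
&+\sum_{i=0}^{j-2}\int_{t-(i+1)\tau}^{t-i\tau}e^{A(t-s)}\sum_{l=0}^{i}B_{1}^{l}\frac{(t-l\tau-s)^{l}}{l!}f(s)\,ds\\
&+\int_{0}^{t-(j-1)\tau}e^{A(t-s)}\sum_{l=0}^{j-1}B_{1}^{l}\frac{(t-l\tau-s)^{l}}{l!}f(s)\,ds,
\end{aligned}
\tag{8}
\]

where A and B commute, \(B_{1}=e^{-A\tau}B\), \((j-1)\tau \leq t< j\tau\), \(j=1,2,\ldots,N\), and a sum whose upper index is smaller than its lower index is zero.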

Proof

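According to formula (21) in [20], the solution of system (7) has the form

\[
\begin{aligned}
x(t)={}&e^{A(t+\tau)}e_{\tau}^{B_{1}t}\varphi(-\tau)+\int_{-\tau}^{0}e^{A(t-s)}e_{\tau}^{B_{1}(t-\tau-s)}\bigl[\dot{\varphi}(s)-A\varphi(s)\bigr]\,ds\\
&+\int_{0}^{t}e^{A(t-s)}e_{\tau}^{B_{1}(t-\tau-s)}f(s)\,ds.
\end{aligned}
\tag{9}
\]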

Without loss of generality, we consider \((j-1)\tau\leq t< j\tau\), \(j=1, 2,\ldots, N\).

Next, we substitute the delayed matrix exponential (2) into (9) to prove the result. We divide the proof into two steps.

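Step 1. We prove that

\[
\begin{aligned}
\int_{-\tau}^{0}e^{A(t-s)}e_{\tau}^{B_{1}(t-\tau-s)}\bigl[\dot{\varphi}(s)-A\varphi(s)\bigr]\,ds
={}&\int_{-\tau}^{t-j\tau}e^{A(t-s)}\sum_{i=0}^{j}B_{1}^{i}\frac{(t-i\tau-s)^{i}}{i!}\bigl[\dot{\varphi}(s)-A\varphi(s)\bigr]\,ds\\
&+\int_{t-j\tau}^{0}e^{A(t-s)}\sum_{i=0}^{j-1}B_{1}^{i}\frac{(t-i\tau-s)^{i}}{i!}\bigl[\dot{\varphi}(s)-A\varphi(s)\bigr]\,ds.
\end{aligned}
\tag{10}
\]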

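Since \(-\tau< s<0\), we have \(t-\tau< t-\tau-s< t\) and \((j-2)\tau\leq t-\tau< t-\tau-s< t< j\tau\). When \(-\tau< s< t-j\tau\), we have \((j-1)\tau< t-\tau-s< t< j\tau\); when \(t-j\tau< s<0\), we have \((j-2)\tau\leq t-\tau< t-\tau-s<(j-1)\tau\). Hence, by (2),

\[
e_{\tau}^{B_{1}(t-\tau-s)}=
\begin{cases}
\sum_{i=0}^{j}B_{1}^{i}\frac{(t-i\tau-s)^{i}}{i!}, & -\tau<s<t-j\tau,\\
\sum_{i=0}^{j-1}B_{1}^{i}\frac{(t-i\tau-s)^{i}}{i!}, & t-j\tau<s<0,
\end{cases}
\]

which gives (10).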

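Step 2. We check that

\[
\begin{aligned}
\int_{0}^{t}e^{A(t-s)}e_{\tau}^{B_{1}(t-\tau-s)}f(s)\,ds
={}&\sum_{i=0}^{j-2}\int_{t-(i+1)\tau}^{t-i\tau}e^{A(t-s)}\sum_{l=0}^{i}B_{1}^{l}\frac{(t-l\tau-s)^{l}}{l!}f(s)\,ds\\
&+\int_{0}^{t-(j-1)\tau}e^{A(t-s)}\sum_{l=0}^{j-1}B_{1}^{l}\frac{(t-l\tau-s)^{l}}{l!}f(s)\,ds.
\end{aligned}
\tag{11}
\]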

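Since \(0< s< t\), we have \(-\tau< t-\tau-s< t-\tau\). When \(t-(i+1)\tau< s< t-i\tau\), we have \((i-1)\tau< t-\tau-s< i\tau\), \(i=0,1,\ldots, j-2\); when \(0< s< t-(j-1)\tau\), we have \((j-2)\tau< t-\tau-s< t-\tau <(j-1)\tau\). Hence, by (2),

\[
e_{\tau}^{B_{1}(t-\tau-s)}=
\begin{cases}
\sum_{l=0}^{i}B_{1}^{l}\frac{(t-l\tau-s)^{l}}{l!}, & t-(i+1)\tau<s<t-i\tau,\ i=0,1,\ldots,j-2,\\
\sum_{l=0}^{j-1}B_{1}^{l}\frac{(t-l\tau-s)^{l}}{l!}, & 0<s<t-(j-1)\tau,
\end{cases}
\]

which gives (11).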

Combining (9), (10), and (11), and evaluating the first term of (9) directly by (2) (since \((j-1)\tau\leq t<j\tau\)), we obtain (8). The proof is finished. □
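The case analysis in Steps 1 and 2 can also be tested numerically. For sample points s inside each subinterval, the value of \(e_{\tau}^{b(t-\tau-s)}\) computed from (2) should coincide with the truncated sum \(\sum_{i=0}^{m}b^{i}(t-i\tau-s)^{i}/i!\), where m is the number of terms claimed in the proof. The sketch below runs this check in the scalar case with arbitrary values b = 0.7, τ = 0.5, N = 4, chosen by us. Function names are hypothetical.

```python
import math
import numpy as np


def delayed_exp_scalar(b, t, tau):
    """Scalar delayed exponential e_tau^{bt} from definition (2)."""
    if t < -tau:
        return 0.0
    if t < 0:
        return 1.0
    k = int(math.floor(t / tau)) + 1
    return sum(b**i * (t - (i - 1) * tau) ** i / math.factorial(i) for i in range(k + 1))


def truncated_sum(b, t, s, tau, m):
    """sum_{i=0}^{m} b^i (t - i tau - s)^i / i!, the polynomial claimed in Steps 1 and 2."""
    return sum(b**i * (t - i * tau - s) ** i / math.factorial(i) for i in range(m + 1))


def interior(lo, hi, count=19):
    """Points strictly inside (lo, hi), away from the breakpoints."""
    return lo + (hi - lo) * np.arange(1, count + 1) / (count + 1)


def max_case_error(b=0.7, tau=0.5, N=4):
    worst = 0.0
    for j in range(1, N + 1):
        t = (j - 1) * tau + 0.37 * tau                               # (j-1) tau <= t < j tau
        cases = [(-tau, t - j * tau, j), (t - j * tau, 0.0, j - 1)]  # Step 1
        cases += [(t - (i + 1) * tau, t - i * tau, i) for i in range(j - 1)]  # Step 2, i <= j-2
        cases.append((0.0, t - (j - 1) * tau, j - 1))                # Step 2, last piece
        for lo, hi, m in cases:
            for s in interior(lo, hi):
                exact = delayed_exp_scalar(b, t - tau - s, tau)
                worst = max(worst, abs(exact - truncated_sum(b, t, s, tau, m)))
    return worst


print(max_case_error())
```

The printed discrepancy should be at the level of floating-point round-off.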

3 Convergence analysis of P-type

In this section, we give the first convergence result of P-type.

Theorem 3.1

Let \(y_{d}(t)\), \(t\in[0,T]\) be a desired trajectory for system (3). If \(\rho(E-DP_{o})<1\), then the P-type ILC law (5) guarantees \(\lim_{k\rightarrow\infty }y_{k}(t)=y_{d}(t)\) uniformly on \([0,T]\).

Proof

Without loss of generality, we consider \((j-1)\tau\leq t< j\tau\), \(j=1,2,\ldots, N\). Combining (3) and (4), we have

According to (5) and Lemma 2.2, we have

Further, by Lemma 2.1, for a given \(\epsilon>0\) there is a norm \(\vert \cdot \vert \) on \(\mathbb {R}^{m}\) such that

\[
\Vert E-DP_{o}\Vert \leq\rho(E-DP_{o})+\epsilon.
\]

So by (12), we have

Hence, we obtain

For fixed i, \(i=0,1,\ldots,j-1\), we have

For any \(\lambda>\Vert A\Vert \), we use integration by parts and mathematical induction to derive

Combining (13), (14), and (15), we obtain

and, taking the λ-norm, we arrive at

Since \(\rho(E-DP_{o})<1\), for any \(\epsilon\in(0,\frac{1-\rho (E-DP_{o})}{4} )\) and \(\lambda>\Vert A\Vert \) sufficiently large, we have

which implies \(\lim_{k\rightarrow\infty} \Vert e_{k}\Vert _{\lambda}=0\), since \(\rho(E-DP_{o})+2\epsilon<1\). In addition, \(\Vert e_{k}\Vert \leq e^{\lambda T}\Vert e_{k}\Vert _{\lambda}\), because \(e^{-\lambda t}\geq e^{-\lambda T}\) for \(t\in[0,T]\). Hence \(\lim_{k\rightarrow\infty} \Vert e_{k}\Vert =0\). The proof is completed. □

Remark 3.2

We used the delayed matrix exponential to prove convergence of the P-type ILC algorithm. The norm \(\Vert \cdot \Vert _{\lambda}\) is used only for a technical reason: at the end of the above proof, it yields uniform convergence under the sole condition \(\rho(E-DP_{o})<1\). Moreover, fixing \(\epsilon\in(0,\frac {1-\rho(E-DP_{o})}{4} )\), the smallest suitable \(\lambda>\Vert A\Vert \) is given by the equation

which is rather awkward to solve. On the other hand, (17) has a unique solution for any \(\epsilon>0\). Moreover, by varying \(\Vert C\Vert \Vert P_{o}\Vert \), any \(\lambda>\Vert A\Vert \) can occur as its solution.

4 Convergence analysis of D-type

In this section, we discuss the ILC convergence of D-type.

Theorem 4.1

Let \(y_{d}(t)\), \(t\in[0, T]\), be a desired trajectory for system (3) with \(D=\Theta\). If \(\rho(E-CD_{o})<1\) and \(e_{k}(0)=0\), \(k=1,2,\ldots\), then the D-type ILC law (6) guarantees \(\lim_{k\rightarrow\infty}y_{k}(t)=y_{d}(t)\) uniformly on \([0,T]\).

Proof

First, we consider \((j-1)\tau\leq t< j\tau\), \(j=2,3,\ldots, N\). By (4) and (6), we have

So we have

As in the proof of Theorem 3.1, we apply Lemma 2.2 to derive

Obviously, we have

In analogy to the computation in (14)-(16), inequality (18) becomes

where

and

If \(j=1\), which means \(0\leq t<\tau\), then by (4) and (6), we have

We can repeat the above arguments to arrive at (19) with \(W_{2}=0\) and \(W_{1}=\frac{2\Vert CA\Vert }{\lambda-\Vert A\Vert }\). Hence (19) holds on the whole \([0,T]\), which implies

Since \(\rho(E-CD_{o})<1\), choosing ε small and λ sufficiently large as in the proof of Theorem 3.1, we obtain \(\lim_{k\rightarrow \infty} \Vert \dot{e}_{k}\Vert _{\lambda}=0\). Moreover, \(\Vert \dot{e}_{k}\Vert \leq e^{\lambda T}\Vert \dot{e}_{k}\Vert _{\lambda}\), so \(\Vert \dot {e}_{k}\Vert \rightarrow0\) as \(k\rightarrow\infty\). Since \(e_{k}(0)=0\), we have \(e_{k}(t)=\int_{0}^{t}\dot{e}_{k}(s)\,ds\), hence \(\Vert e_{k}\Vert \le T\Vert \dot{e}_{k}\Vert \), and consequently \(\Vert e_{k}\Vert \rightarrow0\) as \(k\rightarrow\infty\). The proof is completed. □

Remark 4.2

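Since \(e_{k}(0)=y_{d}(0)-y_{k}(0)=y_{d}(0)-Cx_{k}(0)=y_{d}(0)-C\varphi(0)\) for every k, the condition \(e_{k}(0)=0\) in Theorem 4.1 requires \(y_{d}(0)=C\varphi(0)\). This is a compatibility condition for φ. If \(y_{d}(0)\) is to be arbitrary, then C must be surjective.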

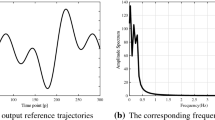

5 Simulation examples

In this section, four numerical examples are presented to demonstrate the validity of the proposed method. To measure the tracking errors, we use the \(L^{2}\)-norm in our simulations for simplicity. This is justified as follows. Since \(\Vert e_{k}\Vert \leq e^{\lambda T}\Vert e_{k}\Vert _{\lambda}\) for a suitable \(\lambda>\Vert A\Vert \), we obtain \(\Vert e_{k}\Vert \rightarrow0\) as \(k\rightarrow \infty\), i.e., \(\lim_{k\rightarrow\infty}\sup_{t\in J}\vert e_{k}(t)\vert =0\). Since \(\Vert e_{k}\Vert _{L^{2}}\leq\vert J\vert ^{1/2}\sup_{t\in J}\vert e_{k}(t)\vert \), this yields \(e_{k}\to0\) in \(L^{2}(J,\mathbb {R}^{m})\).

Example 5.1

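Consider

\[
\begin{cases}
\dot{x}_{k}(t)=x_{k}(t)+x_{k}(t-0.5)+u_{k}(t), & t\in[0,1],\\
x_{k}(t)=t, & -0.5\leq t\leq0,\\
y_{k}(t)=x_{k}(t)+0.3u_{k}(t), & t\in[0,1],
\end{cases}
\tag{20}
\]

and the P-type ILC law

\[
u_{k+1}(t)=u_{k}(t)+e_{k}(t).
\]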

The original reference trajectory is

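Here \(t\in[0,1]\), \(\tau=0.5\), \(\varphi(t)=t\), \(n=m=1\), \(A=1\), \(B=1\), \(C=1\), and \(D=0.3\). It is not difficult to find that \(B_{1}=e^{-A\tau}B=e^{-0.5}\) and

\[
e_{0.5}^{B_{1}t}=
\begin{cases}
1, & -0.5\leq t<0,\\
1+e^{-0.5}t, & 0\leq t<0.5,\\
1+e^{-0.5}t+e^{-1}\dfrac{(t-0.5)^{2}}{2}, & 0.5\leq t\leq1.
\end{cases}
\]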

Next, we set \(P_{o}=1\). Obviously, \(\rho(1-DP_{o})=0.7<1\). Thus, all conditions of Theorem 3.1 are satisfied, so \(y_{k}(t)\) uniformly converges to \(y_{d}(t)\), for \(t\in[0,1]\).

The upper panel of Figure 1 shows the output \(y_{k}\) of (20) at the 10th iteration together with the reference trajectory \(y_{d}\). The lower panel of Figure 1 shows the \(L^{2}\)-norm of the tracking error at each iteration (see also Table 1).

Figure 1. The system output and the tracking error for (20).
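A rough way to reproduce the qualitative behaviour of Example 5.1 is sketched below. It is not the authors' simulation code, and it rests on several assumptions of our own. System (20) is discretized by the forward Euler method of steps with step h = 0.001, so that τ = 0.5 is a whole number of steps. The reference trajectory is not reproduced in this text, so the sketch uses the hypothetical reference \(y_{d}(t)=\sin(2\pi t)\). The P-type law with \(P_{o}=1\) is applied starting from \(u_{0}=0\), and the discrete λ-norm (λ = 40) and a rectangle-rule \(L^{2}\)-norm of each error \(e_{k}\) are recorded.

```python
import numpy as np


def simulate(u, h, M, A=1.0, B=1.0, C=1.0, D=0.3, phi=lambda s: s):
    """Forward Euler method of steps for x' = A x + B x(t - tau) + u, tau = M h,
    x = phi on [-tau, 0]; returns y = C x + D u on the grid t_i = i h."""
    n = len(u)
    hist = [phi((i - M) * h) for i in range(M + 1)]   # samples of phi on [-tau, 0]
    x = np.empty(n)
    x[0] = hist[M]                                   # x(0) = phi(0)
    for i in range(n - 1):
        delayed = hist[i] if i < M else x[i - M]     # x(t_i - tau)
        x[i + 1] = x[i] + h * (A * x[i] + B * delayed + u[i])
    return C * x + D * u


def p_type_ilc(y_d, h, M, P_o=1.0, iterations=30):
    """Open-loop P-type law (5): u_{k+1} = u_k + P_o e_k, starting from u_0 = 0."""
    u = np.zeros_like(y_d)
    errors = []
    for _ in range(iterations):
        e = y_d - simulate(u, h, M)
        errors.append(e)
        u = u + P_o * e
    return errors


h, tau = 0.001, 0.5
M = round(tau / h)                           # delay measured in grid steps
t = np.arange(round(1.0 / h) + 1) * h        # grid on [0, T] = [0, 1]
y_d = np.sin(2 * np.pi * t)                  # hypothetical reference trajectory
errors = p_type_ilc(y_d, h, M)

lam = 40.0
lam_norms = [float(np.max(np.exp(-lam * t) * np.abs(e))) for e in errors]
l2_norms = [float(np.sqrt(h * np.sum(e**2))) for e in errors]   # rectangle-rule L2 norm
for k in (0, 1, 5, 10, 29):
    print(k, lam_norms[k], l2_norms[k])
```

With λ this large, the discrete λ-norm of \(e_{k}\) should shrink by a factor below 1 at every iteration, mirroring the contraction argument in the proof of Theorem 3.1. That argument alone does not guarantee a monotone decrease of the sup-norm or the \(L^{2}\)-norm.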

Example 5.2

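Consider

\[
\begin{cases}
\dot{x}_{k}(t)=x_{k}(t)+x_{k}(t-0.5)+u_{k}(t), & t\in[0,1],\\
x_{k}(t)=t, & -0.5\leq t\leq0,\\
y_{k}(t)=x_{k}(t), & t\in[0,1],
\end{cases}
\tag{21}
\]

and the D-type ILC law

\[
u_{k+1}(t)=u_{k}(t)+0.3\dot{e}_{k}(t).
\]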

The reference trajectory is the same as in Example 5.1. Here \(t\in[0,1]\), \(\tau=0.5\), \(\varphi(t)=t\), \(n=m=1\), \(A=1\), \(B=1\), \(C=1\), and \(D=0\). Then \(B_{1}\) and \(e_{0.5}^{B_{1}t}\) are the same as in Example 5.1. Next, we set \(D_{o}=0.3\). Obviously, \(\rho(1-CD_{o})=0.7<1\). Thus, all conditions of Theorem 4.1 are satisfied.

The upper panel of Figure 2 shows the output \(y_{k}\) of (21) at the 20th iteration together with the reference trajectory \(y_{d}\). The lower panel of Figure 2 shows the \(L^{2}\)-norm of the tracking error at each iteration (see also Table 2).

Figure 2. The system output and the tracking error for (21).
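A similar rough sketch applies to the D-type law of Example 5.2. It is again not the authors' code and uses our own assumptions: the same forward Euler discretization with h = 0.001, the derivative \(\dot{e}_{k}\) replaced by a forward difference on the grid, and the hypothetical reference \(y_{d}(t)=\sin(2\pi t)\). This reference satisfies the compatibility condition \(y_{d}(0)=C\varphi(0)=0\) of Remark 4.2, so \(e_{k}(0)=0\) for every k. The quantity that contracts in the proof of Theorem 4.1 is the λ-norm of \(\dot{e}_{k}\), so that is what the sketch records.

```python
import numpy as np


def simulate(u, h, M, A=1.0, B=1.0, C=1.0, D=0.0, phi=lambda s: s):
    """Forward Euler method of steps for x' = A x + B x(t - tau) + u, tau = M h,
    x = phi on [-tau, 0]; returns y = C x + D u on the grid t_i = i h."""
    n = len(u)
    hist = [phi((i - M) * h) for i in range(M + 1)]   # samples of phi on [-tau, 0]
    x = np.empty(n)
    x[0] = hist[M]
    for i in range(n - 1):
        delayed = hist[i] if i < M else x[i - M]     # x(t_i - tau)
        x[i + 1] = x[i] + h * (A * x[i] + B * delayed + u[i])
    return C * x + D * u


def d_type_ilc(y_d, h, M, D_o=0.3, iterations=30):
    """Open-loop D-type law (6), u_{k+1} = u_k + D_o e_k', with e_k' replaced by a
    forward difference on the grid (a discretization choice, not from the paper)."""
    u = np.zeros_like(y_d)
    errors, derivs = [], []
    for _ in range(iterations):
        e = y_d - simulate(u, h, M)
        de = np.diff(e) / h                   # forward difference approximating e_k'(t_i)
        errors.append(e)
        derivs.append(de)
        u[:-1] += D_o * de                    # the last sample of u never enters the Euler step
    return errors, derivs


h, tau = 0.001, 0.5
M = round(tau / h)
t = np.arange(round(1.0 / h) + 1) * h
y_d = np.sin(2 * np.pi * t)                   # hypothetical reference with y_d(0) = C phi(0) = 0
errors, derivs = d_type_ilc(y_d, h, M)

lam = 40.0
lam_norms = [float(np.max(np.exp(-lam * t[:-1]) * np.abs(de))) for de in derivs]
sup_errors = [float(np.max(np.abs(e))) for e in errors]
for k in (0, 1, 5, 10, 29):
    print(k, lam_norms[k], sup_errors[k])
```

Since \(e_{k}(0)=0\), the error is recovered from its difference quotients by summation. This is the discrete counterpart of the bound \(\Vert e_{k}\Vert \le T\Vert \dot{e}_{k}\Vert \) used at the end of the proof of Theorem 4.1.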

Example 5.3

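Consider

\[
\begin{cases}
\dot{x}_{k}(t)=x_{k}(t)+x_{k}(t-0.5)+u_{k}(t), & t\in[0,1],\\
x_{k}(t)=(e^{t},e^{t})^{T}, & -0.5\leq t\leq0,\\
y_{k}(t)=(1,2)\,x_{k}(t)+(2,1)\,u_{k}(t), & t\in[0,1],
\end{cases}
\tag{22}
\]

with \(x_{k}, u_{k}\in \mathbb {R}^{2}\), and the P-type ILC law

\[
u_{k+1}(t)=u_{k}(t)+(0.5,-0.4)^{T}e_{k}(t).
\]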

The original reference trajectory is

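Here \(t\in[0,1]\), \(\tau=0.5\), \(\varphi(t)=(e^{t}, e^{t})^{T}\), \(n=2\), \(m=1\), \(A=B=E\), where E is the \(2\times2\) identity matrix, \(C=(1, 2)\), and \(D=(2, 1)\). It is not difficult to find that \(e^{At}=e^{t}E\), \(B_{1}=e^{-A\tau}B=e^{-0.5}E\), and

\[
e_{0.5}^{B_{1}t}=
\begin{cases}
E, & -0.5\leq t<0,\\
(1+e^{-0.5}t)E, & 0\leq t<0.5,\\
\Bigl(1+e^{-0.5}t+e^{-1}\dfrac{(t-0.5)^{2}}{2}\Bigr)E, & 0.5\leq t\leq1.
\end{cases}
\]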

Next, we set \(P_{o}=(0.5, -0.4)^{T}\). Then \(DP_{o}=2\cdot0.5+1\cdot(-0.4)=0.6\), so \(\rho(E-DP_{o})=0.4<1\). Thus, all conditions of Theorem 3.1 are satisfied, and \(y_{k}(t)\) converges uniformly to \(y_{d}(t)\) for \(t\in[0,1]\).

The upper panel of Figure 3 shows the output \(y_{k}\) of (22) at the 10th iteration together with the reference trajectory \(y_{d}\). The lower panel of Figure 3 shows the \(L^{2}\)-norm of the tracking error at each iteration (see also Table 3).

Figure 3. The system output and the tracking error for (22).
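The gain conditions of Examples 5.1, 5.2, and 5.3 reduce to spectral-radius computations that are easy to check numerically. In Example 5.3, D = (2, 1) is \(1\times2\) and \(P_{o}=(0.5,-0.4)^{T}\) is \(2\times1\). Hence \(DP_{o}=2\cdot0.5+1\cdot(-0.4)=0.6\), and \(E-DP_{o}\) is the \(1\times1\) matrix 0.4. The helper name `spectral_radius` is ours, and the sketch uses only data stated in the examples.

```python
import numpy as np


def spectral_radius(M):
    """Largest eigenvalue modulus of a square matrix (scalars are treated as 1x1)."""
    return float(np.max(np.abs(np.linalg.eigvals(np.atleast_2d(M)))))


E1 = np.eye(1)                                   # identity on the output space R^m, m = 1

# Example 5.1 (P-type): D = 0.3, P_o = 1
rho_51 = spectral_radius(E1 - 0.3 * 1.0)
# Example 5.2 (D-type): C = 1, D_o = 0.3
rho_52 = spectral_radius(E1 - 1.0 * 0.3)
# Example 5.3 (P-type): D = (2, 1) is 1 x 2 and P_o = (0.5, -0.4)^T is 2 x 1
D = np.array([[2.0, 1.0]])
P_o = np.array([[0.5], [-0.4]])
rho_53 = spectral_radius(E1 - D @ P_o)

print(rho_51, rho_52, rho_53)
```

All three values are below 1, so the spectral-radius conditions of Theorems 3.1 and 4.1 hold for the stated gains.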

Example 5.4

For system

we take the D-type ILC

The original reference trajectory is the same as for Example 5.3. Obviously, all conditions of Theorem 4.1 are satisfied. Then \(y_{k}(t)\) uniformly converges to \(y_{d}(t)\), for \(t\in[0,1]\).

The upper panel of Figure 4 shows the output \(y_{k}\) of (23) at the 20th iteration together with the reference trajectory \(y_{d}\). The lower panel of Figure 4 shows the \(L^{2}\)-norm of the tracking error at each iteration (see also Table 4).

Figure 4. The system output and the tracking error for (23).

References

Balachandran, B, Kalmár-Nagy, T, Gilsinn, DE: Delay Differential Equations. Springer, Berlin (2009)

He, J: Variational iteration method for delay differential equations. Commun. Nonlinear Sci. Numer. Simul. 2, 235-236 (1997)

Wang, Q, Liu, XZ: Exponential stability for impulsive delay differential equations by Razumikhin method. J. Math. Anal. Appl. 309, 462-473 (2005)

Wen, Y, Zhou, XF, Zhang, Z, Liu, S: Lyapunov method for nonlinear fractional differential systems with delay. Nonlinear Dyn. 82, 1015-1025 (2015)

Abbas, S, Benchohra, M, Rivero, M, Trujillo, JJ: Existence and stability results for nonlinear fractional order Riemann-Liouville Volterra-Stieltjes quadratic integral equations. Appl. Math. Comput. 247, 319-328 (2014)

Zhang, GL, Song, MH, Liu, MZ: Exponential stability of the exact solutions and the numerical solutions for a class of linear impulsive delay differential equations. J. Comput. Appl. Math. 285, 32-44 (2015)

Uchiyama, M: Formulation of high-speed motion pattern of a mechanical arm by trial. Trans. Soc. Instrum. Control Eng. 14, 706-712 (1978)

Arimoto, S, Kawamura, S: Bettering operation of robots by learning. J. Robot. Syst. 1, 123-140 (1984)

Xu, JX, Xu, J: On iterative learning for different tracking tasks in the presence of time-varying uncertainties. IEEE Trans. Syst. Man Cybern. B 34, 589-597 (2004)

Ahn, HS, Moore, KL, Chen, YQ: Iterative Learning Control: Robustness and Monotonic Convergence in the Iteration Domain. Communications and Control Engineering Series. Springer, Berlin (2007)

Luo, Y, Chen, YQ: Fractional order controller for a class of fractional order systems. Automatica 45, 2446-2450 (2009)

Li, Y, Chen, YQ, Ahn, HS: On the \(PD^{\alpha}\)-type iterative learning control for the fractional-order nonlinear systems. In: Proc. Amer. Control Conference, pp. 4320-4325 (2011)

Bien, ZZ, Xu, JX (eds.): Iterative Learning Control: Analysis, Design, Integration and Applications. Springer Science & Business Media (2012)

Li, ZG, Chang, YW, Soh, YC: Analysis and design of impulsive control systems. IEEE Trans. Autom. Control 46, 894-897 (2001)

Sun, MX: Robust convergence analysis of iterative learning control systems. Control Theory Appl. 15, 320-326 (1998)

Lee, HS, Bien, Z: Design issues on robustness and convergence of iterative learning controller. Intell. Autom. Soft Comput. 8, 95-106 (2002)

Sun, MX, Chen, YQ, Huang, BJ: High order iterative learning control system for nonlinear time-delay systems. Acta Autom. Sin. 20, 360-365 (1994)

Sun, MX: Iterative learning control algorithms for uncertain time-delay systems (I). J. Xi’an Instit. Technol. 17, 259-266 (1997)

Sun, MX: Iterative learning control algorithms for uncertain time-delay systems (II). J. Xi’an Instit. Technol. 18, 1-8 (1998)

Khusainov, DY, Shuklin, GV: Linear autonomous time-delay system with permutation matrices solving. Stud. Univ. Žilina Math. Ser. 17, 101-108 (2003)

Khusainov, DY, Shuklin, GV: Relative controllability in systems with pure delay. Int. J. Appl. Math. 2, 210-221 (2005)

Medveď, M, Pospíšil, M, Škripková, L: Stability and the nonexistence of blowing-up solutions of nonlinear delay systems with linear parts defined by permutable matrices. Nonlinear Anal. TMA 74, 3903-3911 (2011)

Medveď, M, Pospíšil, M: Sufficient conditions for the asymptotic stability of nonlinear multidelay differential equations with linear parts defined by pairwise permutable matrices. Nonlinear Anal. TMA 75, 3348-3363 (2012)

Diblík, J, Fečkan, M, Pospíšil, M: Representation of a solution of the Cauchy problem for an oscillating system with two delays and permutable matrices. Ukr. Math. J. 65, 58-69 (2013)

Boichuk, A, Diblík, J, Khusainov, D, Růžičková, M: Fredholm’s boundary-value problems for differential systems with a single delay. Nonlinear Anal. TMA 72, 2251-2258 (2010)

Boichuk, A, Diblík, J, Khusainov, D, Růžičková, M: Boundary value problems for delay differential systems. Adv. Differ. Equ. 2010, Article ID 593834 (2010)

Boichuk, A, Diblík, J, Khusainov, D, Růžičková, M: Boundary-value problems for weakly nonlinear delay differential systems. Abstr. Appl. Anal. 2011, Article ID 631412 (2011)

Ortega, JM, Rheinboldt, WC: Iterative Solution of Nonlinear Equations in Several Variables. Academic Press, San Diego (1970)

Acknowledgements

The authors are grateful to the referees and Associate Professor Dr. Qian Chen for their careful reading of the manuscript and valuable comments. The authors also thank the editor for help. The first and third authors acknowledge National Natural Science Foundation of China (11661016; 11201091), Training Object of High Level and Innovative Talents of Guizhou Province ((2016)4006), United Foundation of Guizhou Province ([2015]7640) and Outstanding Scientific and Technological Innovation Talent Award of Education Department of Guizhou Province ([2014]240). The second author acknowledges the Slovak Grant Agency VEGA No. 1/0078/17 and the Slovak Research and Development Agency under the contract No. APVV-14-0378.


Additional information

Competing interests

The authors declare that they have no competing interests.

Authors’ contributions

The authors have made equal contributions. All authors have read and approved the final manuscript.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Luo, Z., Fečkan, M. & Wang, J. A new method to study ILC problem for time-delay linear systems. Adv Differ Equ 2017, 35 (2017). https://doi.org/10.1186/s13662-017-1080-y
