Abstract

This paper is concerned with the global projective synchronization of fractional-order neural networks in the sense of Mittag-Leffler stability. First, a fractional-order differential inequality from the existing literature for the Caputo fractional derivative with \(0<\alpha<1\) is improved; this inequality plays a central role in the synchronization analysis. Second, hybrid control strategies are designed by combining open-loop control and adaptive control, and the unknown control parameters are determined by adaptive fractional update laws to achieve global projective synchronization. In addition, by applying the fractional Lyapunov approach and the Mittag-Leffler function, projective synchronization conditions are derived in terms of linear matrix inequalities (LMIs). Finally, two examples are given to demonstrate the validity of the proposed method.


1 Introduction

Fractional calculus, which deals with differentiation and integration of arbitrary (noninteger) order, dates back more than 300 years. Despite this long history, it drew little attention from researchers for a long time because of its complexity and the difficulty of applying it. Although it developed mainly as a purely theoretical field of mathematics, in recent decades it has been applied in various fields such as rheology, viscoelasticity, electrochemistry, and diffusion processes; see, for instance, [1–7] and the references therein.

It is well known that, compared with integer-order models, fractional-order calculus provides a more accurate tool for describing the memory and hereditary properties of various processes. Taking these facts into account, incorporating fractional-order calculus into a neural network model could better describe the dynamical behavior of neurons, and many efforts have been made in this direction. In [8], a fractional-order cellular neural network model was first proposed by Arena et al., and chaotic behavior in noninteger-order cellular neural networks was discussed in [9]. In [10], the author proposed a fractional-order three-cell network that exhibits limit cycles and stable orbits for different parameter values. Besides, fractional-order neural networks are expected to play an important role in parameter estimation [11–13]. Therefore, as noted in [14], it is significant and interesting to study fractional-order neural networks both in theoretical research and in practical applications.

Recently, the dynamic analysis of fractional-order neural networks has received considerable attention, and some excellent results have been presented in [15–24]. Zhang et al. [15] discussed the chaotic behavior of fractional-order three-dimensional Hopfield neural networks. Moreover, a fractional-order four-cell cellular neural network was presented in [16], and its complex dynamical behavior was investigated using numerical simulations. Kaslik and Sivasundaram [17] considered nonlinear dynamics and chaos in fractional-order neural networks. There have also been advances in the stability analysis of fractional-order neural networks. The Mittag-Leffler stability and generalized Mittag-Leffler stability of fractional-order neural networks were investigated in [18–21]. The α-stability and α-synchronization of fractional-order neural networks were studied in [22]. Yang et al. [23] analyzed the finite-time stability of fractional-order neural networks with delay. Kaslik and Sivasundaram [24] investigated the dynamics of delay-free fractional-order Hopfield neural networks, including stability, multistability, bifurcations, and chaos. The stability of fractional-order Hopfield neural networks with discontinuous activation functions was analyzed in [25]. In [26] and [27], global Mittag-Leffler stability and asymptotic stability were considered for fractional-order neural networks with delays and impulsive effects. Uniform stability was investigated in [28]. In addition, Wu et al. [29] discussed the global stability of a fractional-order interval projection neural network.

Since Pecora and Carroll [30] first introduced chaos synchronization in 1990, synchronization has attracted increasing attention from researchers. This interest stems from its potential applications in bioengineering [31], secure communication [32], and cryptography [33]. Synchronization comes in various types, such as complete synchronization [34], anti-synchronization [35], lag synchronization [36], generalized synchronization [37], phase synchronization [38], and projective synchronization [39–41]. Among them, projective synchronization, in which two systems synchronize up to a scaling factor, is one of the most interesting. It can be used to extend binary digital communication to M-ary digital communication to achieve faster communication [42]. Very recently, some results on the synchronization of fractional-order neural networks have been proposed in [26, 43–49]. In [26], complete synchronization of fractional-order chaotic neural networks was achieved via a nonimpulsive linear controller. Several results on chaotic synchronization of fractional-order neural networks were proposed in [43–45]. In addition, Wang et al. [46] investigated the projective cluster synchronization of a fractional-order coupled-delay complex network via adaptive pinning control. In [47], the global projective synchronization of fractional-order neural networks was discussed, and several control strategies were given to realize complete synchronization, anti-synchronization, and stabilization of the addressed neural networks. Razminia et al. [48] considered the synchronization of the fractional-order Rössler system via active control. 
By using the approach in [47], Bao and Cao [49] considered the projective synchronization of fractional-order memristor-based neural networks and derived sufficient criteria to ensure synchronization. However, most reports on projective synchronization of neural network systems use the direct Lyapunov method, which can be rather complicated. In this paper, we apply Mittag-Leffler stability theory to achieve synchronization of fractional-order systems. In addition, the LMI analysis technique was not applied in the above works to develop the synchronization criteria, and hence those results are conservative to a certain degree.

Motivated by the previous work, in this paper we investigate the global Mittag-Leffler projective synchronization of fractional-order neural networks by using the LMI analysis approach. The main novelty of our contribution lies in three aspects: (1) a new differential inequality for the Caputo fractional derivative of a quadratic form, with \(0<\alpha<1\), is established and applied to derive the synchronization conditions; (2) hybrid control schemes are designed by combining open-loop control and adaptive control, and unknown control parameters are determined by adaptive fractional update laws; (3) by applying the Mittag-Leffler stability theorem in [50, 51], global Mittag-Leffler synchronization conditions are presented in terms of LMIs to ensure the synchronization of fractional-order neural networks.

The rest of this paper is organized as follows. In Section 2, some definitions and lemmas are introduced, and a new differential inequality for the Caputo fractional derivative of a quadratic form, with \(0<\alpha<1\), is presented. The model is described in Section 3. Sufficient conditions for Mittag-Leffler projective synchronization are derived in Section 4. Section 5 presents numerical simulations, and conclusions are drawn in Section 6.

2 Preliminaries

In this section, some basic definitions and lemmas of fractional calculus are presented.

Definition 2.1

([52])

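The fractional integral of order α of a function f is defined as

\[{}_{t_{0}}I^{\alpha}_{t}f(t)=\frac{1}{\Gamma(\alpha)}\int_{t_{0}}^{t}(t-\tau)^{\alpha-1}f(\tau)\,d\tau,\]

where \(t\geqslant t_{0}\), \(\alpha>0\), and \(\Gamma(\cdot)\) is the gamma function.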

Definition 2.2

([52])

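Caputo’s fractional derivative of order α of a function \(f\in C^{n}([t_{0},+\infty),R)\) is defined by

\[{}^{\mathrm{C}}_{t_{0}}D^{\alpha}_{t}f(t)=\frac{1}{\Gamma(n-\alpha)}\int_{t_{0}}^{t}(t-\tau)^{n-\alpha-1}f^{(n)}(\tau)\,d\tau,\]

where \(t\geqslant t_{0}\) and n is a positive integer such that \(n-1<\alpha<n\). In particular, when \(0<\alpha<1\),

\[{}^{\mathrm{C}}_{t_{0}}D^{\alpha}_{t}f(t)=\frac{1}{\Gamma(1-\alpha)}\int_{t_{0}}^{t}(t-\tau)^{-\alpha}f'(\tau)\,d\tau.\]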
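To make Definition 2.2 concrete for \(0<\alpha<1\), the Caputo derivative can be approximated on a uniform grid by the standard L1 scheme, which replaces \(f'\) on each subinterval by a difference quotient and integrates the kernel \((t-\tau)^{-\alpha}\) exactly. The sketch below is not part of the paper; the function name `caputo_l1` and the test function \(f(t)=t^{2}\), whose Caputo derivative from \(t_{0}=0\) is \(2t^{2-\alpha}/\Gamma(3-\alpha)\), are illustrative choices.

```python
import math


def caputo_l1(f_vals, h, alpha):
    """Approximate the Caputo derivative of order 0 < alpha < 1 at the last
    grid point t_n = t_0 + n*h, given samples f_vals = [f(t_0), ..., f(t_n)]
    on a uniform grid, using the L1 scheme (f' replaced by difference
    quotients, kernel (t - tau)^(-alpha) integrated exactly)."""
    if not 0.0 < alpha < 1.0:
        raise ValueError("this sketch assumes 0 < alpha < 1")
    n = len(f_vals) - 1
    coeff = h ** (-alpha) / math.gamma(2.0 - alpha)
    total = 0.0
    for k in range(n):
        # weight b_k of the increment f(t_{n-k}) - f(t_{n-k-1})
        b_k = (k + 1) ** (1.0 - alpha) - k ** (1.0 - alpha)
        total += b_k * (f_vals[n - k] - f_vals[n - k - 1])
    return coeff * total


if __name__ == "__main__":
    alpha, t_end, n = 0.5, 1.0, 2000
    h = t_end / n
    samples = [(j * h) ** 2 for j in range(n + 1)]  # f(t) = t^2 with t_0 = 0
    approx = caputo_l1(samples, h, alpha)
    exact = 2.0 * t_end ** (2.0 - alpha) / math.gamma(3.0 - alpha)
    print(f"L1 approximation: {approx:.6f}, exact: {exact:.6f}")
```

For smooth f the L1 error decreases like \(h^{2-\alpha}\), so halving the step noticeably tightens the agreement with the exact value.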

Lemma 2.1

([52])

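Let \(\Omega=[a,b]\) be an interval on the real axis R, and let \(n=[\alpha]+1\) for \(\alpha\notin N\) or \(n=\alpha\) for \(\alpha\in N\). If \(y\in C^{n}[a,b]\), then

\[{}_{a}I^{\alpha}_{t}\,{}^{\mathrm{C}}_{a}D^{\alpha}_{t}y(t)=y(t)-\sum_{k=0}^{n-1}\frac{y^{(k)}(a)}{k!}(t-a)^{k}.\]

In particular, if \(0<\alpha<1\) and \(y(t)\in C^{1}[a,b]\), then

\[{}_{a}I^{\alpha}_{t}\,{}^{\mathrm{C}}_{a}D^{\alpha}_{t}y(t)=y(t)-y(a).\]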

Lemma 2.2

([47])

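Assume that \(x\in C^{1}[a,b]\) satisfies

\[{}^{\mathrm{C}}_{a}D^{\alpha}_{t}x(t)\geq0\]

for all \(t\in[a,b]\). Then \(x(t)\) is nondecreasing for \(0<\alpha<1\). If

\[{}^{\mathrm{C}}_{a}D^{\alpha}_{t}x(t)\leq0\]

for all \(t\in[a,b]\), then \(x(t)\) is nonincreasing for \(0<\alpha<1\).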

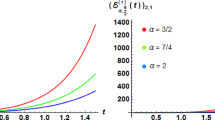

Aguila-Camacho et al. [53] established the fractional-order differential inequality \(\frac{1}{2}{}^{\mathrm{C}}_{t_{0}}D^{\alpha}_{t}x^{2}(t)\leq x(t){}^{\mathrm{C}}_{t_{0}}D^{\alpha}_{t}x(t)\) for the Caputo fractional derivative with \(0<\alpha<1\). In Lemma 2.3, following the line of proof in [53], we generalize this inequality: we prove that \(\frac{1}{2}D^{\alpha}(x^{T}(t)Px(t))\leq x^{T}(t)PD^{\alpha}x(t)\) for all \(\alpha\in(0,1)\), where P is a positive definite matrix. Since the scalar inequality of [53] is recovered by taking \(n=1\) and \(P=1\), Lemma 2.3 is more general.
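The inequality just described can also be probed numerically. The sketch below assumes a symmetric positive definite P and uses the L1 discretization of the Caputo derivative (both illustrative assumptions, not the paper's method). It evaluates both sides of \(\frac{1}{2}D^{\alpha}(x^{T}Px)\leq x^{T}PD^{\alpha}x\) at the final time of a hypothetical smooth trajectory. A check of this kind illustrates the inequality; it does not replace the proof.

```python
import math


def caputo_l1(samples, h, alpha):
    """L1 approximation of the Caputo derivative (0 < alpha < 1) at the last
    point of a uniform grid, for a list of scalar samples."""
    n = len(samples) - 1
    coeff = h ** (-alpha) / math.gamma(2.0 - alpha)
    return coeff * sum(
        ((k + 1) ** (1.0 - alpha) - k ** (1.0 - alpha))
        * (samples[n - k] - samples[n - k - 1])
        for k in range(n)
    )


def lemma23_sides(xs, P, h, alpha):
    """Return (lhs, rhs) = (0.5 * D^alpha(x^T P x), x^T P D^alpha x) at the
    final grid point, where xs[j] is the state vector x(t_j)."""
    dim = len(xs[0])
    quad = [sum(x[i] * P[i][j] * x[j] for i in range(dim) for j in range(dim)) for x in xs]
    lhs = 0.5 * caputo_l1(quad, h, alpha)
    # componentwise Caputo derivative of the vector x(t)
    dx = [caputo_l1([x[i] for x in xs], h, alpha) for i in range(dim)]
    x_end = xs[-1]
    rhs = sum(x_end[i] * P[i][j] * dx[j] for i in range(dim) for j in range(dim))
    return lhs, rhs


if __name__ == "__main__":
    P = [[2.0, 0.5], [0.5, 1.0]]  # hypothetical symmetric positive definite matrix
    h, steps = 0.01, 500
    # hypothetical smooth trajectory x(t) = (sin t + 0.5, cos 2t)
    xs = [[math.sin(j * h) + 0.5, math.cos(2 * j * h)] for j in range(steps + 1)]
    for alpha in (0.3, 0.6, 0.9):
        lhs, rhs = lemma23_sides(xs, P, h, alpha)
        print(f"alpha={alpha}: lhs={lhs:.5f}, rhs={rhs:.5f}, lhs <= rhs: {lhs <= rhs}")
```

For this particular scheme one can check by summation by parts that the discrete gap rhs minus lhs is a nonnegative weighted sum of the terms \((x(t_{n})-x(t_{j}))^{T}P(x(t_{n})-x(t_{j}))\). The check therefore passes for any step size, mirroring the continuous argument.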

Lemma 2.3

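Suppose \(x(t)=(x_{1}(t),x_{2}(t),\ldots,x_{n}(t))^{T}\in R^{n}\) is a vector, where \(x_{i}(t)\) are continuously differentiable functions for all \(i=1,2,\ldots,n\), and \(P\in R^{n\times n}\) is a positive definite matrix. Then, for the quadratic form \(x^{T}(t)Px(t)\), we have

\[\frac{1}{2}\,{}^{\mathrm{C}}_{t_{0}}D^{\alpha}_{t}\bigl(x^{T}(t)Px(t)\bigr)\leq x^{T}(t)P\,{}^{\mathrm{C}}_{t_{0}}D^{\alpha}_{t}x(t),\qquad\forall\alpha\in(0,1).\tag{1}\]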

Proof

To keep the proof self-contained, we recall some steps of the proof by Aguila-Camacho et al. [53], which should make the argument easier to follow.

It is easy to see that inequality (1) is equivalent to

According to Definition 2.2, we have

Substituting (3) and (4) into (2), we have

For convenience, we introduce the auxiliary variable \(y(\tau)=x(t)-x(\tau)\). Rewriting the integral in terms of \(y(\tau)\), we obtain

namely,

By applying integration by parts to (6) it follows that

Thus, proving Lemma 2.3 reduces to proving (7). The first term of (7) is singular at \(\tau=t\), so we consider the corresponding limit:

The limit in (8) is an indeterminate form, so L’Hôpital’s rule applies; applying it, we obtain

Hence, (7) reduces to

Inequality (9) clearly holds. This completes the proof. □
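The chain of steps outlined in this proof can be written out in one display. This is a reconstruction following the argument of [53], not a copy of the paper's equations (2)-(9); it assumes P is symmetric, so that \(\frac{d}{d\tau}\bigl(x^{T}Px\bigr)=2x^{T}P\dot{x}\), writes \(D^{\alpha}\) for \({}^{\mathrm{C}}_{t_{0}}D^{\alpha}_{t}\), and uses \(y(\tau)=x(t)-x(\tau)\), so \(\dot{y}(\tau)=-\dot{x}(\tau)\).

```latex
\begin{aligned}
x^{T}(t)P\,D^{\alpha}x(t)-\tfrac{1}{2}D^{\alpha}\bigl(x^{T}(t)Px(t)\bigr)
 &=\frac{1}{\Gamma(1-\alpha)}\int_{t_{0}}^{t}\frac{\bigl(x(t)-x(\tau)\bigr)^{T}P\,\dot{x}(\tau)}{(t-\tau)^{\alpha}}\,d\tau
  =-\frac{1}{\Gamma(1-\alpha)}\int_{t_{0}}^{t}\frac{y^{T}(\tau)P\,\dot{y}(\tau)}{(t-\tau)^{\alpha}}\,d\tau\\
 &=-\frac{1}{\Gamma(1-\alpha)}\left\{\left[\frac{y^{T}(\tau)Py(\tau)}{2(t-\tau)^{\alpha}}\right]_{\tau=t_{0}}^{\tau\to t}
   -\frac{\alpha}{2}\int_{t_{0}}^{t}\frac{y^{T}(\tau)Py(\tau)}{(t-\tau)^{\alpha+1}}\,d\tau\right\}\\
 &=\frac{1}{\Gamma(1-\alpha)}\left\{\frac{y^{T}(t_{0})Py(t_{0})}{2(t-t_{0})^{\alpha}}
   +\frac{\alpha}{2}\int_{t_{0}}^{t}\frac{y^{T}(\tau)Py(\tau)}{(t-\tau)^{\alpha+1}}\,d\tau\right\}\;\geq\;0 .
\end{aligned}
```

The boundary term at \(\tau\to t\) vanishes because \(y^{T}(\tau)Py(\tau)=O\bigl((t-\tau)^{2}\bigr)\) for continuously differentiable x, which is what the L’Hôpital step establishes. Both remaining terms are nonnegative because P is positive definite and \(\Gamma(1-\alpha)>0\) for \(0<\alpha<1\).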

Remark 2.1

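If the matrix P in Lemma 2.3 is taken to be the identity matrix E, then

\[\frac{1}{2}D^{\alpha}\bigl(x^{T}(t)x(t)\bigr)\leq x^{T}(t)D^{\alpha}x(t).\]

In particular, when \(x(t)\in R\) is a continuously differentiable scalar function (the case \(n=1\) of Lemma 2.3), we obtain

\[\frac{1}{2}D^{\alpha}x^{2}(t)\leq x(t)D^{\alpha}x(t),\]

which can be applied to each component of a vector separately.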

3 Model description

In this section, we introduce a class of vector fractional-order neural networks as the drive system, described by

\[D^{\alpha}x(t)=-Cx(t)+Af(x(t))+I,\tag{10}\]

where \(x(t)=[x_{1}(t),x_{2}(t),\ldots,x_{n}(t)]^{T}\in R^{n}\) is the state vector of the system, \(C=\operatorname{diag}(c_{1},c_{2},\ldots,c_{n})\) is the self-connection weight matrix with \(c_{i}\in R\), \(i\in l=\{1,2,\ldots,n\}\), \(A=(a_{ij})_{n\times n}\) is the interconnection weight matrix, and \(f(x(t))=[f_{1}(x_{1}(t)),f_{2}(x_{2}(t)),\ldots,f_{n}(x_{n}(t))]^{T}\in R^{n}\) and \(I=[I_{1},I_{2},\ldots,I_{n}]^{T}\) denote the activation function vector and the external input vector, respectively.

The response system is described by

\[D^{\alpha}y(t)=-Cy(t)+Af(y(t))+I+u(t),\tag{11}\]

where \(y(t)=[y_{1}(t),y_{2}(t),\ldots,y_{n}(t)]^{T}\in R^{n} \) is the state vector of the response system, and \(u(t)=(u_{1}(t),u_{2}(t),\ldots,u_{n}(t))^{T}\in R^{n}\) is a control input vector.

Assumption 1

The activation functions \(f_{j}\) are Lipschitz continuous on R; that is, there exist constants \(l_{j}>0\) (\(j\in l\)) such that

\[|f_{j}(u)-f_{j}(v)|\leq l_{j}|u-v|\]

for all \(u,v\in R\). For convenience, we define \(L=\operatorname{diag}(l_{1},l_{2},\ldots,l_{n})\).
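Assumption 1 only asks for Lipschitz constants \(l_{j}\). For the activation \(f_{j}=\tanh\) used in Section 5 one may take \(l_{j}=1\), since \(|\tanh'(s)|=1-\tanh^{2}(s)\leq1\). When an activation is available only as a black box, a crude sampled estimate can serve as a sanity check for the entries of L. The helper below is hypothetical, and a sampled estimate can only underestimate the true constant, so it cannot certify Assumption 1.

```python
import math
import random


def estimate_lipschitz(f, lo=-10.0, hi=10.0, samples=20000, seed=0):
    """Crude lower estimate of the Lipschitz constant of a scalar function f
    on [lo, hi]: the largest difference quotient |f(u) - f(v)| / |u - v|
    over randomly sampled pairs (u, v)."""
    rng = random.Random(seed)
    best = 0.0
    for _ in range(samples):
        u, v = rng.uniform(lo, hi), rng.uniform(lo, hi)
        if u != v:
            best = max(best, abs(f(u) - f(v)) / abs(u - v))
    return best


if __name__ == "__main__":
    # tanh is 1-Lipschitz, so the estimate should be close to 1
    print(estimate_lipschitz(math.tanh))
```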

Definition 3.1

We say that systems (10) and (11) are projectively synchronized if there exists a nonzero constant β such that, for any two solutions \(x(t)\) and \(y(t)\) of systems (10) and (11) with different initial values \(x_{0}\) and \(y_{0}\),

\[\lim_{t\to\infty}\|y(t)-\beta x(t)\|=0,\]

where \(\|\cdot\|\) denotes the Euclidean norm of a vector.

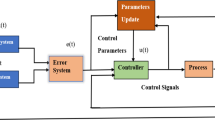

The synchronization error is defined by \(e(t)=y(t)-\beta x(t)\), where \(e(t)=(e_{1}(t),e_{2}(t),\ldots,e_{n}(t))^{T}\in R^{n}\). From systems (10) and (11), the error system can be described by

In what follows, we will design appropriate control schemes to derive the projective synchronization conditions between systems (10) and (11).

4 Main results

In this section, we solve the projective synchronization problem by converting it into a stability problem: the projective synchronization of systems (10) and (11) is equivalent to the stability of the error system (12). We prove the stability of the error system (12) under two different control schemes.

In the first control scheme, we choose the following control input \(u(t) \) in the response system:

with \(K=\operatorname{diag}(k_{1},k_{2},\ldots,k_{n})\), where \(k_{i}>0\) are the control gains.

Remark 4.1

Note that the control scheme (13) is a hybrid control: \(v(t)\) is an open-loop control, and \(w(t)\) is a linear feedback control.

Then, applying the control scheme (13) to the error system (12), we obtain that

Obviously, \(e(t)=0\) is a trivial solution of the error system (14). It remains to prove that this zero solution is stable.

Theorem 4.1

Let Assumption 1 be satisfied. Suppose that there exists a positive definite matrix P such that \(B=\frac{1}{2}(PC+C^{T}P^{T}-PAL-L^{T}A^{T}P^{T}+PK+K^{T}P^{T})>0\). Then systems (10) and (11) are globally Mittag-Leffler projectively synchronized under the control scheme (13).
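For a given candidate P, the condition of Theorem 4.1 is a plain positive-definiteness test on B. Finding P itself is an LMI feasibility problem that needs a semidefinite solver (the paper uses an LMI solver, in Example 2 the Matlab LMI toolbox); the sketch below only checks a given P. It computes B as the symmetric part of \(P(C-AL+K)\), which equals the expression in Theorem 4.1, and tests \(B>0\) by attempting a Cholesky factorization. The matrix A of Example 1 is not reproduced in this text, so the 2-neuron data below are hypothetical.

```python
import math


def matmul(X, Y):
    """Product of two matrices given as lists of rows."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y))) for j in range(len(Y[0]))]
            for i in range(len(X))]


def diag(values):
    """Diagonal matrix with the given diagonal entries."""
    n = len(values)
    return [[float(values[i]) if i == j else 0.0 for j in range(n)] for i in range(n)]


def theorem41_matrix(P, C, A, L, K):
    """B = 1/2 (PC + C^T P^T - PAL - L^T A^T P^T + PK + K^T P^T),
    computed as the symmetric part of X = P (C - A L + K)."""
    n = len(P)
    AL = matmul(A, L)
    inner = [[C[i][j] - AL[i][j] + K[i][j] for j in range(n)] for i in range(n)]
    X = matmul(P, inner)
    return [[0.5 * (X[i][j] + X[j][i]) for j in range(n)] for i in range(n)]


def is_positive_definite(M, tol=1e-12):
    """Cholesky test for a symmetric matrix: True iff every squared pivot exceeds tol."""
    n = len(M)
    R = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = M[i][j] - sum(R[i][k] * R[j][k] for k in range(j))
            if i == j:
                if s <= tol:
                    return False
                R[i][i] = math.sqrt(s)
            else:
                R[i][j] = s / R[j][j]
    return True


if __name__ == "__main__":
    A = [[1.0, -1.0], [2.0, 0.5]]  # hypothetical interconnection matrix
    I2 = diag([1.0, 1.0])          # used here for P, C and L
    for k in (0.0, 2.0):
        B = theorem41_matrix(I2, I2, A, I2, diag([k, k]))
        print(f"k = {k}: B = {B}, B > 0: {is_positive_definite(B)}")
```

With \(P=C=L=E\) and this A, one gets \(B=\begin{pmatrix}k&-0.5\\-0.5&k+0.5\end{pmatrix}\), so the test fails for \(k=0\) and succeeds for \(k=2\), illustrating how larger feedback gains \(k_{i}\) help satisfy the condition.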

Proof

Construct the Lyapunov function

Taking the Caputo fractional derivative of \(V(t)\) and using Lemma 2.3, we have

Substituting \(D^{\alpha}e(t)\) from (14) into (15) yields

Based on Assumption 1, we obtain

where B is the positive definite matrix defined in Theorem 4.1. Since B is positive definite, \(\lambda_{\mathrm{min}}(B)\|e\|^{2}\leq e^{T}(t)Be(t)\leq\lambda_{\mathrm{max}}(B)\|e\|^{2}\), where \(\lambda_{\mathrm{min}}(B)\) and \(\lambda_{\mathrm{max}}(B)\) are the minimum and maximum eigenvalues of B, respectively.

Hence,

Therefore, according to the Mittag-Leffler stability theorem [50, 51], system (14) is globally Mittag-Leffler stable; that is, systems (10) and (11) are globally Mittag-Leffler projectively synchronized. This completes the proof. □
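The Mittag-Leffler stability notion of [50, 51] measures decay through the Mittag-Leffler function \(E_{\alpha}(z)=\sum_{k\geq0}z^{k}/\Gamma(\alpha k+1)\), which reduces to \(e^{z}\) for \(\alpha=1\). A truncated power series is enough to see how \(E_{\alpha}(-\lambda t^{\alpha})\) decays for moderate arguments. The function name and the rate \(\lambda\) below are illustrative assumptions; for large \(|z|\) the series suffers from cancellation, and a dedicated algorithm should be used instead.

```python
import math


def mittag_leffler(alpha, z, tol=1e-16, max_terms=1000):
    """One-parameter Mittag-Leffler function E_alpha(z) = sum_k z^k / Gamma(alpha k + 1),
    evaluated by truncating its power series. Intended for moderate |z|
    (roughly |z| <= 10); large negative z causes severe cancellation."""
    terms = [1.0]  # k = 0 term
    for k in range(1, max_terms):
        arg = alpha * k + 1.0
        if arg > 170.0:
            break  # math.gamma overflows for arguments above about 171.6
        term = z ** k / math.gamma(arg)
        terms.append(term)
        if abs(term) < tol:
            break
    return math.fsum(terms)  # compensated summation of the series terms


if __name__ == "__main__":
    alpha, lam = 0.98, 1.5  # alpha as in Example 1; lam is a hypothetical decay rate
    for t in (0.0, 1.0, 2.0, 4.0):
        print(t, mittag_leffler(alpha, -lam * t ** alpha))
```

For \(0<\alpha<1\), \(E_{\alpha}(-\lambda t^{\alpha})\) decays monotonically but only algebraically for large t, which is why Mittag-Leffler stability is a weaker statement than exponential stability.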

In the second control scheme, we choose the following control input \(u_{1}(t)\) in the response system:

where \(K(t)=\operatorname{diag}(k_{1}(t),k_{2}(t),\ldots,k_{n}(t))\), and \(\gamma_{i}>0 \) are constants.

Remark 4.2

In fact, the control scheme (16) is also a hybrid control: \(v_{1}(t)\) is an open-loop control, and \(w_{1}(t)\) is an adaptive feedback control. Applying the control scheme (16), we obtain the error system

We now prove that the zero solution of system (17) is asymptotically stable.

Theorem 4.2

Let Assumption 1 be satisfied. Suppose that there exist a positive definite matrix P and a constant matrix \(K=\operatorname{diag}(k_{1},k_{2},\ldots,k_{n})\) such that \(\Omega=\frac{1}{2}(PC+C^{T}P^{T}-PAL-L^{T}A^{T}P^{T}+PK+K^{T}P^{T})>0\). Then systems (10) and (11) are globally asymptotically projectively synchronized under the control scheme (16).

Proof

Construct the auxiliary function

where \(U_{1}(t)=\frac{1}{2}e^{T}(t)Pe(t)\), and each \(k_{i}\) is a constant to be determined in the subsequent analysis.

It follows from Lemma 2.3 and Remark 2.1 that the Caputo fractional derivative of \(V_{1}(t)\) satisfies

Inserting (17) into (18) and applying Assumption 1 yield

where Ω is the positive definite matrix defined in Theorem 4.2. Clearly, \(D^{\alpha}V_{1}(t)\leq-e^{T}(t)\Omega e(t)\). Note that

Hence,

Let \(\lambda_{0}=\frac{2\lambda_{\mathrm{min}}(\Omega)}{\lambda_{\mathrm{max}}(P)}\). Then

According to Lemma 2.2, \(V_{1}(t)\) is nonincreasing, so \(V_{1}(t)\leq V_{1}(0)\) for \(t\geq0\). This implies that \(U_{1}(t)\) and \(k_{i}(t)\) are bounded on \(t\geq0\). It then follows that \(D^{\alpha}V_{1}(t)\) is also bounded on \(t\geq0\). Meanwhile, we know that

is bounded. So there exists a constant \(M>0\) such that

We further prove that \(\lim_{t\rightarrow\infty}U_{1}(t)=0\). Otherwise, there would exist a constant \(\varepsilon>0\) and an increasing sequence \(\{t_{i}\}\) with \(\lim_{i\rightarrow\infty}t_{i}=\infty\) such that

According to (20), we have

Let \(T=(\frac{\Gamma(\alpha+1)\varepsilon}{2M})^{\frac{1}{\alpha}}>0\). For \(t_{i}-T<t<t_{i}\), \(i=1,2,\ldots\), integrating both sides of (20) from t to \(t_{i}\), we get

which, together with (21), gives \(U_{1}(t)\geq\frac{\varepsilon}{2}\) for \(t_{i}-T<t<t_{i}\), \(i=1,2,\ldots\). Similarly, for \(t_{i}<t<t_{i}+T\), \(i=1,2,\ldots\), combining (20) with (21) yields

which shows that \(U_{1}(t)\geq\frac{\varepsilon}{2}\) for \(t_{i}<t<t_{i}+T\), \(i=1,2,\ldots\).

Based on the above description, we obtain

for \(t_{i} -T \leq t \leq t_{i}+T\), \(i =1,2,\ldots\) . Without loss of generality, we assume that these intervals are disjoint and \(t_{1}-T>0\). Namely,

where \(i =1,2,\ldots\) . It follows from (19) and (24) that, for \(t_{i}-T < t< t_{i}+T\), we have

Integrating both sides of (25), we obtain

In addition, by (24) we get

and \(V_{1}(t_{0}+T)\geq V_{1}(0)\).

It follows from (24) and (25) that

which reveals that \(V_{1}(t_{i}+T)\rightarrow-\infty\) as \(i\rightarrow+\infty\). This contradicts \(V_{1}(t)\geq0\). As a result, \(\lim_{t\rightarrow\infty}U_{1}(t)=0\), and hence \(\lim_{t\rightarrow\infty}e(t)=0\). Thus, the drive system (10) and the response system (11) are globally asymptotically projectively synchronized. □
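Two elementary estimates used in the proof of Theorem 4.2 can be written out explicitly. The first turns \(D^{\alpha}V_{1}\leq-e^{T}\Omega e\) into the bound in terms of \(\lambda_{0}\); it assumes P and Ω are symmetric, so that the Rayleigh-quotient bounds apply. The second shows where the value of T comes from; it assumes, as the definition of T suggests, that (20) is a bound of the form \(|D^{\alpha}U_{1}(t)|\leq M\), whose fractional integral over an interval of length \(t_{i}-t\) is computed directly.

```latex
\begin{aligned}
&\|e(t)\|^{2}\geq\frac{2U_{1}(t)}{\lambda_{\max}(P)}
 \quad\text{since}\quad U_{1}(t)=\tfrac{1}{2}e^{T}(t)Pe(t)\leq\tfrac{1}{2}\lambda_{\max}(P)\|e(t)\|^{2},\\
&D^{\alpha}V_{1}(t)\leq-e^{T}(t)\Omega e(t)\leq-\lambda_{\min}(\Omega)\|e(t)\|^{2}
 \leq-\frac{2\lambda_{\min}(\Omega)}{\lambda_{\max}(P)}\,U_{1}(t)=-\lambda_{0}U_{1}(t)\leq0,\\
&\frac{1}{\Gamma(\alpha)}\int_{t}^{t_{i}}(t_{i}-\tau)^{\alpha-1}M\,d\tau
 =\frac{M(t_{i}-t)^{\alpha}}{\Gamma(\alpha+1)}
 \leq\frac{MT^{\alpha}}{\Gamma(\alpha+1)}=\frac{\varepsilon}{2}
 \quad\text{for }0<t_{i}-t\leq T=\Bigl(\frac{\Gamma(\alpha+1)\varepsilon}{2M}\Bigr)^{1/\alpha}.
\end{aligned}
```

Thus T is exactly the interval length over which the accumulated change of \(U_{1}\) can be at most \(\varepsilon/2\), which is what keeps \(U_{1}\geq\varepsilon/2\) on the intervals around each \(t_{i}\).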

5 Illustrative examples

In this section, we give two examples to illustrate the validity and effectiveness of the proposed theoretical results.
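The text does not state which numerical scheme produced the figures. A common choice for Caputo systems with \(0<\alpha\leq1\) is the fractional Adams-Bashforth-Moulton predictor-corrector method of Diethelm, Ford and Freed; the sketch below is an illustrative implementation of that method, an assumption rather than necessarily what the authors used. Because the matrix A of Example 1 is not reproduced here, the sketch is exercised on the scalar problem \(D^{\alpha}x=-x\), \(x(0)=1\), whose exact solution is \(E_{\alpha}(-t^{\alpha})\). The name `fde_abm` is hypothetical.

```python
import math


def fde_abm(f, x0, alpha, h, n_steps):
    """Solve the Caputo system D^alpha x(t) = f(t, x), x(0) = x0, 0 < alpha <= 1,
    with the fractional Adams-Bashforth-Moulton predictor-corrector method
    of Diethelm, Ford and Freed on a uniform grid t_n = n*h.
    f(t, x) takes and returns lists; returns the states [x_0, ..., x_N]."""
    dim = len(x0)
    xs = [list(x0)]
    fs = [f(0.0, xs[0])]
    c_pred = h ** alpha / math.gamma(alpha + 1.0)
    c_corr = h ** alpha / math.gamma(alpha + 2.0)
    for n in range(n_steps):
        t_next = (n + 1) * h
        # Predictor: product rectangle rule over the whole history (memory term).
        pred = list(x0)
        for j in range(n + 1):
            b = (n + 1 - j) ** alpha - (n - j) ** alpha
            for i in range(dim):
                pred[i] += c_pred * b * fs[j][i]
        # Corrector: product trapezoidal rule, using f at the predicted state.
        f_pred = f(t_next, pred)
        corr = [x0[i] + c_corr * f_pred[i] for i in range(dim)]
        for j in range(n + 1):
            if j == 0:
                a = n ** (alpha + 1) - (n - alpha) * (n + 1) ** alpha
            else:
                a = ((n - j + 2) ** (alpha + 1) + (n - j) ** (alpha + 1)
                     - 2 * (n - j + 1) ** (alpha + 1))
            for i in range(dim):
                corr[i] += c_corr * a * fs[j][i]
        xs.append(corr)
        fs.append(f(t_next, corr))
    return xs


if __name__ == "__main__":
    # Scalar test problem D^alpha x = -x, x(0) = 1, with exact solution E_alpha(-t^alpha).
    xs = fde_abm(lambda t, x: [-x[0]], [1.0], 0.8, 0.01, 100)
    print(xs[-1][0])
```

The cost grows quadratically with the number of steps because every step revisits the full history, reflecting the memory of the fractional derivative. To simulate a drive-response pair such as (10)-(11), one would pass an f that returns the stacked right-hand sides of both systems.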

Example 1

In system (10), choose \(x=(x_{1},x_{2},x_{3})^{T}\), \(\alpha=0.98\), \(f_{j}(x_{j})=\tanh(x_{j})\) for \(j=1,2,3\), \(c_{1}=c_{2}=c_{3}=1\), \(I_{1}=I_{2}=I_{3}=0 \), and

Under these parameters, system (10) has a chaotic attractor, which is shown in Figure 1.

Figure 1. Chaotic behavior of system (10) with initial value \((0.1,-0.08,0.3)\).

In the control scheme (13), choose \(k_{1}=5.4837\), \(k_{2}=5.1937\), \(k_{3}=7.5837\). Then system (11) also has a chaotic attractor. Solving the LMI with an appropriate LMI solver, we obtain the feasible positive definite matrix

By Theorem 4.1 we see that systems (10) and (11) are Mittag-Leffler projectively synchronized, which is verified in Figures 2-4.

Figure 2 shows that the projective synchronization error converges to zero, that is, the drive and response systems are globally asymptotically projectively synchronized.

Similarly, projective synchronization with projective coefficients \(\beta=2\) and \(\beta=-1\) is simulated in Figures 5-10.

Example 2

In system (10), the parameters α, \(f(x)\), C, I, and A are chosen as in Example 1, so that system (10) has a chaotic attractor. For the response system (11) with the control scheme (16), we choose \(k_{1}(0)=0.05\), \(k_{2}(0)=0.06\), \(k_{3}(0)=0.08\), \(\gamma_{1}=\gamma_{2}=\gamma_{3}=1\), and \(k_{1}=k_{2}=k_{3}=2\). Using the Matlab LMI toolbox, we find that the linear matrix inequality is feasible, with feasible solution

Therefore, according to Theorem 4.2, we conclude that systems (10) and (11) are synchronized, which is verified in Figures 11-14.

Chaotic behavior of system (10) with initial value \((0.2,-0.5,0.8)\).

6 Conclusions

In this paper, the global Mittag-Leffler projective synchronization of fractional-order neural networks has been investigated. An inequality from the literature for the Caputo fractional derivative of a quadratic form has been generalized. Based on hybrid control schemes, Mittag-Leffler projective synchronization conditions have been presented in terms of LMIs, so the results obtained in this paper are easy to check and apply in practical engineering.

It would be interesting to extend the results proposed in this paper to fractional-order neural networks with delays. This issue will be the topic of our future research.

References

Hartley, T, Lorenzo, F: Chaos in fractional order Chua’s system. IEEE Trans. Circuits Syst. 42, 485-490 (1995)

Lu, J, Chen, G: A note on the fractional order Chen system. Chaos Solitons Fractals 27, 685-688 (2006)

Deng, W, Li, C: Chaos synchronization of the fractional Lu system. Physica A 353, 61-72 (2005)

Meral, F, Royston, T, Magin, R: Fractional calculus in viscoelasticity: an experimental study. Commun. Nonlinear Sci. Numer. Simul. 15, 939-945 (2010)

Boroomand, A, Menhaj, M: Fractional-order Hopfield neural networks. In: Koppen, M, Kasabov, N, Coghill, G (eds.) Advances in Neuro-Information Processing, pp. 883-890. Springer, Berlin (2009)

Zhang, W, Zhou, S, Li, H, Zhu, H: Chaos in a fractional-order Rössler system. Chaos Solitons Fractals 42, 1684-1691 (2009)

Chen, D, Wu, C, Herbert, H, Ma, X: Circuit simulation for synchronization of a fractional-order and integer-order chaotic system. Nonlinear Dyn. 73, 1671-1686 (2013)

Caponetto, R, Fortuna, L, Porto, D: Bifurcation and chaos in noninteger order cellular neural networks. Int. J. Bifurc. Chaos 8, 1527-1539 (1998)

Arena, P, Fortuna, L, Porto, D: Chaotic behavior in noninteger-order cellular neural networks. Phys. Rev. E 61, 776-781 (2000)

Petráš, I: A note on the fractional-order cellular neural networks. In: 2006 International Joint Conference on Neural Networks, Vancouver, BC, Canada, July 16–21, pp. 1021-1024 (2006)

Butzer, PL, Westphal, U: An Introduction to Fractional Calculus. World Scientific, Singapore (2000)

Beer, RD: Parameter space structure of continuous-time recurrent neural networks. Neural Comput. 18, 3009-3051 (2006)

Chon, KH, Hoyer, D, Armoundas, AA: Robust nonlinear autoregressive moving average model parameter estimation using stochastic recurrent artificial neural networks. Ann. Biomed. Eng. 27, 538-547 (1999)

Kilbas, AA, Srivastava, HM, Trujillo, JJ: Theory and Application of Fractional Differential Equations. Elsevier, Amsterdam (2006)

Zhang, R, Qi, D, Wang, Y: Dynamics analysis of fractional order three-dimensional Hopfield neural network. In: International Conference on Natural Computation, pp. 3037-3039 (2010)

Huang, X, Zhao, Z, Wang, Z, Li, Y: Chaos and hyperchaos in fractional-order cellular neural networks. Neurocomputing 94, 13-21 (2012)

Kaslik, E, Sivasundaram, S: Nonlinear dynamics and chaos in fractional-order neural networks. Neural Netw. 32, 245-256 (2012)

Ren, F, Cao, F, Cao, J: Mittag-Leffler stability and generalized Mittag-Leffler stability of fractional-order gene regulatory networks. Neurocomputing 160, 185-190 (2015)

Zhang, S, Yu, Y, Wang, H: Mittag-Leffler stability of fractional-order Hopfield neural networks. Nonlinear Anal. Hybrid Syst. 16, 104-121 (2015)

Chen, D, Zhang, R, Liu, X, Ma, X: Fractional order Lyapunov stability theorem and its applications in synchronization of complex dynamical networks. Commun. Nonlinear Sci. Numer. Simul. 19, 4105-4121 (2014)

Wang, H, Yu, Y, Wen, G, Zhang, S, Yu, J: Global stability analysis of fractional-order Hopfield neural networks with time delay. Neurocomputing 154, 15-23 (2015)

Yu, J, Hu, C, Jiang, H: α-Stability and α-synchronization for fractional-order neural networks. Neural Netw. 35, 82-87 (2012)

Yang, X, Song, Q, Liu, Y, Zhao, Z: Finite-time stability analysis of fractional-order neural networks with delay. Neurocomputing 152, 19-26 (2015)

Kaslik, E, Sivasundaram, S: Dynamics of fractional-order neural networks. In: Proceedings of International Joint Conference on Neural Networks, pp. 611-618 (2011)

Zhang, S, Yu, Y, Wang, Q: Stability analysis of fractional-order Hopfield neural networks with discontinuous activation functions. Neurocomputing 171, 1075-1084 (2016)

Stamova, I: Global Mittag-Leffler stability and synchronization of impulsive fractional-order neural networks with time-varying delays. Nonlinear Dyn. 77, 1251-1260 (2014)

Wang, F, Yang, Y, Hu, M: Asymptotic stability of delayed fractional-order neural networks with impulsive effects. Neurocomputing 154, 239-244 (2015)

Chen, L, Chai, Y, Wu, R, Ma, T, Zhai, H: Dynamic analysis of a class of fractional-order neural networks with delay. Neurocomputing 111, 190-194 (2013)

Wu, Z, Zou, Y, Huang, N: A system of fractional-order interval projection neural network. J. Comput. Appl. Math. 294, 389-402 (2016)

Pecora, L, Carroll, T: Synchronization in chaotic systems. Phys. Rev. Lett. 64, 821-824 (1990)

Magin, R: Fractional calculus in bioengineering, part 3. Crit. Rev. Biomed. Eng. 32, 195-377 (2004)

Yang, T, Chua, L: Impulsive stabilization of control and synchronization of chaotic systems: theory and application to secret communication. IEEE Trans. Circuits Syst. I, Fundam. Theory Appl. 44, 976-988 (1997)

García-Ojalvo, J, Roy, R: Spatiotemporal communication with synchronized optical chaos. Phys. Rev. Lett. 86, 5204-5207 (2001)

Landsman, AS, Schwartz, IB: Complete chaotic synchronization in mutually coupled time-delay systems. Phys. Rev. E 75, 026201 (2007)

El-Dessoky, MM: Anti-synchronization of four scroll attractor with fully unknown parameters. Nonlinear Anal., Real World Appl. 11, 778-783 (2010)

Zhan, M, Wei, G, Lai, C: Transition from intermittency to periodicity in lag synchronization in coupled Rössler oscillators. Phys. Rev. E 65, 036202 (2002)

Molaei, M, Umut, O: Generalized synchronization of nuclear spin generator system. Chaos Solitons Fractals 37, 227-232 (2008)

Rosenblum, MG, Pikovsky, AS, Kurths, J: Phase synchronization of chaotic oscillators. Phys. Rev. Lett. 76, 1804-1807 (1996)

Zhang, D, Xu, J: Projective synchronization of different chaotic time-delayed neural networks based on integral sliding mode controller. Appl. Math. Comput. 217, 164-174 (2010)

Han, M, Zhang, M, Zhang, Y: Projective synchronization between two delayed networks of different sizes with nonidentical nodes and unknown parameters. Neurocomputing 171, 605-614 (2016)

Gan, Q, Xu, R, Yang, P: Synchronization of non-identical chaotic delayed fuzzy cellular neural networks based on sliding mode control. Commun. Nonlinear Sci. Numer. Simul. 17, 433-443 (2012)

Mainieri, R, Rehacek, J: Projective synchronization in three dimensional chaotic systems. Phys. Rev. Lett. 82, 3042-3045 (1999)

Zhu, H, Zhou, S, Zhang, W: Chaos and synchronization of time-delayed fractional neuron network system. In: The 9th International Conference for Young Computer Scientists, pp. 2937-2941 (2008)

Zhou, S, Li, H, Zhu, Z: Chaos control and synchronization in a fractional neuron network system. Chaos Solitons Fractals 36, 973-984 (2008)

Zhou, S, Lin, X, Zhang, L, Li, Y: Chaotic synchronization of a fractional neurons network system with two neurons. In: International Conference on Communications, Circuits and Systems, pp. 773-776 (2010)

Wang, F, Yang, Y, Hu, M, Xu, X: Projective cluster synchronization of fractional-order coupled-delay complex network via adaptive pinning control. Phys. A, Stat. Mech. Appl. 434, 134-143 (2015)

Yu, J, Hu, C, Jiang, H, Fan, X: Projective synchronization for fractional neural networks. Neural Netw. 49, 87-95 (2014)

Razminia, A, Majd, VJ, Baleanu, D: Chaotic incommensurate fractional order Rössler system: active control and synchronization. Adv. Differ. Equ. 2011, 15 (2011). doi:10.1186/1687-1847-2011-15

Bao, H, Cao, J: Projective synchronization of fractional-order memristor-based neural networks. Neural Netw. 63, 1-9 (2015)

Sadati, SJ, Baleanu, D, Ranjbar, A, Ghaderi, R, Abdeljawad (Maraaba), T: Mittag-Leffler stability theorem for fractional nonlinear systems with delay. Abstr. Appl. Anal. 2010, Article ID 108651 (2010)

Li, Y, Chen, Y, Podlubny, I: Stability of fractional-order nonlinear dynamic systems: Lyapunov direct method and generalized Mittag-Leffler stability. Comput. Math. Appl. 59, 1810-1821 (2010)

Podlubny, I: Fractional Differential Equations. Academic Press, San Diego (1999)

Aguila-Camacho, N, Duarte-Mermoud, MA, Gallegos, JA: Lyapunov functions for fractional order systems. Commun. Nonlinear Sci. Numer. Simul. 19, 2951-2957 (2014)

Acknowledgements

The authors are extremely grateful to the Associate Editors and anonymous reviewers for their valuable comments and constructive suggestions, which helped to enrich the content and improve the presentation of this paper. This work was jointly supported by the National Natural Science Foundation of China (61573306), the Postgraduate Innovation Project of Hebei Province of China (00302-6370019), the Natural Science Foundation of Hebei Province of China (A2011203103) and High level talent project of Hebei Province of China (C2015003054).


Additional information

Competing interests

The authors declare that they have no competing interests.

Authors’ contributions

All authors contributed equally to the manuscript. All authors read and approved the final manuscript.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Wu, H., Wang, L., Wang, Y. et al. Global Mittag-Leffler projective synchronization for fractional-order neural networks: an LMI-based approach. Adv Differ Equ 2016, 132 (2016). https://doi.org/10.1186/s13662-016-0857-8


DOI: https://doi.org/10.1186/s13662-016-0857-8