Abstract

This paper is concerned with the problem of guaranteed \(\mathcal{H}_{\infty}\) performance state estimation for static neural networks with interval time-varying delay. Based on a modified Lyapunov-Krasovskii functional and the linear matrix inequality (LMI) technique, a novel delay-dependent criterion is presented such that the error system is globally asymptotically stable with guaranteed \(\mathcal{H}_{\infty}\) performance. In order to obtain less conservative results, Wirtinger’s integral inequality and the reciprocally convex approach are employed. The estimator gain matrix can be obtained by solving the LMIs. Numerical examples are provided to demonstrate the effectiveness of the proposed method.

1 Introduction

Neural networks can be modeled either as static neural networks or as local field neural networks, depending on the modeling approach [1, 2]. Typical static neural networks are the recurrent back-propagation networks and the projection networks, whereas the Hopfield neural network is a typical example of a local field neural network. Both types have found broad application in knowledge acquisition, combinatorial optimization, pattern recognition, and other areas [3]. However, the two types are not equivalent in general: static neural networks can be transformed into local field neural networks only under some preconditions, which are usually not satisfied. Hence, it is necessary to study static neural networks in their own right.

In practical implementations of neural networks, time delays are inevitably encountered and may lead to instability or significantly deteriorated performance [4]. Therefore, the dynamics of delayed systems, including delayed neural networks, have received considerable attention in recent years, and many results have been obtained [5–19]. As is well known, the Lyapunov-Krasovskii functional method is the most commonly used method for investigating the dynamics of delayed neural networks. The conservativeness of this approach lies mainly in two aspects: the construction of the Lyapunov-Krasovskii functional and the estimation of its time derivative. In order to get less conservative results, a variety of methods have been proposed. First, several types of Lyapunov-Krasovskii functionals have been presented, such as an augmented Lyapunov-Krasovskii functional [20] and a delay-decomposing Lyapunov-Krasovskii functional [21]. Second, novel integral inequalities have been proposed to obtain a tighter upper bound of the integrals occurring in the time derivative of the Lyapunov-Krasovskii functional. Wirtinger’s integral inequality [22], the free-matrix-based integral inequality [23], and the integral inequality involving a double integral [24] are typical examples.

In practice, it is often impossible to acquire the complete state information of all neurons in a neural network because of its complicated structure, so it is worthwhile to investigate the state estimation of neural networks. Recently, some results on the state estimation of neural networks have been obtained [25–27]. In addition, in analog VLSI implementations of neural networks, uncertainties, which can be modeled as an energy-bounded input noise, should be taken into account because of the tolerances of the electronic elements used. Therefore, it is of practical significance to study the \(\mathcal{H}_{\infty}\) state estimation of delayed neural networks. Some significant results on this issue have been reported [28–34]. For instance, in [28], the state estimation problem of the guaranteed \(\mathcal{H}_{\infty}\) performance of static neural networks was discussed. In [31], based on the reciprocally convex combination technique and a double-integral inequality, a delay-dependent condition was derived such that the error system was globally exponentially stable and a prescribed \(\mathcal{H}_{\infty}\) performance was guaranteed. In [32], further improved results were proposed by using zero equalities and a reciprocally convex approach [35].

In the above-mentioned results [28–33], the lower bound of the time-varying delay was always assumed to be 0. However, in the real world, the time-varying delay may be an interval delay, which means that its lower bound is not restricted to be 0. In this case, the criteria in [28–33] guaranteeing the \(\mathcal{H}_{\infty}\) performance of the state estimation cannot be applied because they do not use information on the lower bound of the delay. In [34], by constructing an augmented Lyapunov-Krasovskii functional, the guaranteed \(\mathcal{H}_{\infty}\) performance state estimation problem of static neural networks with interval time-varying delay was discussed. Slack variables were introduced in order to derive less conservative results, but the computational burden increased at the same time [34]. Thus, there remains room to improve the results reported in [34], which is one of the motivations of this paper.

In this paper, the problem of \(\mathcal{H}_{\infty}\) state estimation for static neural networks with interval time-varying delay is investigated. The activation function is assumed to satisfy a sector-bounded condition. On the one hand, a modified Lyapunov-Krasovskii functional is constructed which takes information on the lower bound of the time-varying delay into account. Compared with the Lyapunov-Krasovskii functional in [34], the one proposed in this paper is simpler, since some terms such as \(V_{4}(t)\) in [34] are removed. On the other hand, Wirtinger’s integral inequality, which provides a tighter upper bound than the ones derived from Jensen’s inequality, is employed to deal with the integrals appearing in the derivative. Based on the constructed Lyapunov-Krasovskii functional and this integral inequality, an improved delay-dependent criterion is derived such that the resulting error system is globally asymptotically stable with guaranteed \(\mathcal{H}_{\infty}\) performance. Compared with existing relevant results, the criterion in this paper is less conservative and has a lower computational burden. In addition, when the lower bound of the time-varying delay is 0, a new delay-dependent \(\mathcal{H}_{\infty}\) state estimation condition is also obtained, which can provide a better performance than the existing results. Simulation results are provided to demonstrate the effectiveness of the presented method.

Notations

The notation is quite standard. Throughout this paper, \(R^{n}\) and \(R^{n\times m}\) denote, respectively, the n-dimensional Euclidean space and the set of all \(n\times m\) real matrices. \(\operatorname{diag}(\cdot)\) denotes a diagonal matrix. For real symmetric matrices X and Y, the notation \(X\geq Y\) (respectively, \(X>Y\)) means that the matrix \(X-Y\) is positive semi-definite (respectively, positive definite). The symbol ∗ within a matrix represents a term induced by symmetry.

2 Problem description and preliminaries

In this paper, the following delayed static neural network subject to noise disturbance is considered:

where \(x(t)=[x_{1}(t), x_{2}(t), \ldots, x_{n}(t)]^{T}\in R^{n}\) is the state vector of the neural network associated with n neurons, \(y(t) \in R^{m}\) is the network output measurement, \(z(t) \in R^{p}\), to be estimated, is a linear combination of the states, \(w(t) \in R^{q}\) is the noise input belonging to \(\mathcal{L}_{2}[0, \infty)\), \(A=\operatorname{diag}\{a_{1}, a_{2}, \ldots, a_{n}\}>0\) describes the rate with which the ith neuron will reset its potential to the resting state in isolation when disconnected from the networks and external inputs, \(W=[w_{ij}]_{n\times n}\) is the delayed connection weight matrix, \(B_{1}\), \(B_{2}\), C, D, and H are real known constant matrices with appropriate dimensions. \(f(x(t))=[f_{1}(x_{1}(t)), f_{2}(x_{2}(t)), \ldots, f_{n}(x_{n}(t))]^{T}\) denotes the continuous activation function, \(J=[J_{1}, J_{2}, \ldots, J_{n}]^{T}\) is the exogenous input vector. \(\psi(t)\) is the initial condition. \(h(t)\) denotes the time-varying delay satisfying

\[0\leq h_{1}\leq h(t)\leq h_{2},\qquad \dot{h}(t)\leq\mu, \tag{2}\]

where \(h_{1}\), \(h_{2}\), μ are known constants.

The neuron activation function \(f_{i}(\cdot)\) satisfies

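\[k^{-}_{i}\leq\frac{f_{i}(x)-f_{i}(y)}{x-y}\leq k^{+}_{i},\quad \forall x, y\in R,\ x\neq y,\ i=1,2,\ldots,n, \tag{3}\]

where \(k^{-}_{i}\), \(k^{+}_{i}\) are some known constants.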

Remark 1

Compared with [29, 30, 34], the activation function considered in this paper is more general since \(k^{-}_{i}\), \(k^{+}_{i}\) in (3) may be positive, zero or negative.
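The sector condition can be illustrated numerically. The following Python sketch assumes that (3) bounds the difference quotients of \(f_{i}\) between \(k^{-}_{i}\) and \(k^{+}_{i}\), and it uses the activation \(f(x)=0.5\tanh(x)\) from Example 1. The bounds \(k^{-}=0\), \(k^{+}=0.5\), the sampling grid, and the helper name `sector_bounds` are assumptions made for this illustration only.

```python
import numpy as np

def f(x):
    """Activation used in Example 1: f(x) = 0.5 * tanh(x)."""
    return 0.5 * np.tanh(x)

def sector_bounds(func, lo=-5.0, hi=5.0, n=400):
    """Return the min and max difference quotient of func over all
    pairs of distinct grid points in [lo, hi] (hypothetical helper)."""
    x = np.linspace(lo, hi, n)
    xi, yi = np.meshgrid(x, x, indexing="ij")
    mask = xi != yi                       # exclude the pairs with x == y
    q = (func(xi[mask]) - func(yi[mask])) / (xi[mask] - yi[mask])
    return q.min(), q.max()

q_min, q_max = sector_bounds(f)
k_minus, k_plus = 0.0, 0.5                # assumed sector bounds for this f
print(f"difference quotients lie in [{q_min:.4f}, {q_max:.4f}]")
```

A sampled check of this kind only supports the sector bounds on the chosen grid; for \(0.5\tanh(x)\) they also follow from the mean value theorem, since the slope of tanh lies in \((0,1]\).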

For the neural network (1), we construct a state estimator for estimation of \(z(t)\):

where \(\hat{x}(t)\in R^{n}\) denotes the estimated state, \(\hat{z}(t)\in R^{p}\) denotes the estimated measurement of \(z(t)\), and K is the state estimator gain matrix to be determined.

Define the error by \(r(t)=x(t)-\hat{x}(t)\), and \(\bar{z}(t)=z(t)-\hat{z}(t)\). Then, based on (1) and (4), we can easily obtain the error system of the form

where \(g(Wr(t))=f(Wx(t)+J)-f(W\hat{x}(t)+J)\).
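To give a feel for the error dynamics, the following Python sketch simulates a scalar toy version with \(w(t)=0\). It assumes the dynamics take the form \(\dot{r}(t)=-a_{cl}r(t)+g(w_{c}r(t-h(t)))\), with no delayed measurement term, where \(a_{cl}\) stands for the closed-loop rate \(a+kc\). The values \(a_{cl}=3\), \(w_{c}=1\), the stand-in nonlinearity \(g(s)=0.5\tanh(s)\), the constant initial history, and the forward-Euler scheme are all assumptions of this illustration and are not taken from the paper's examples.

```python
import numpy as np

def simulate_error(r0=-1.0, a_cl=3.0, w_conn=1.0, t_end=10.0, dt=1e-3):
    """Forward-Euler simulation of the scalar delayed error dynamics
    r'(t) = -a_cl * r(t) + g(w_conn * r(t - h(t))) with w(t) = 0.

    a_cl and w_conn are hypothetical values chosen for illustration.
    """
    g = lambda s: 0.5 * np.tanh(s)               # sector-bounded stand-in for g
    h = lambda t: 0.7 + 0.2 * np.sin(6.0 * t)    # interval delay, 0.5 <= h(t) <= 0.9
    n = int(round(t_end / dt))
    r = np.empty(n + 1)
    r[0] = r0
    for k in range(n):
        d = int(round(h(k * dt) / dt))           # delay expressed in time steps
        # Constant initial history: r(s) = r0 for s <= 0.
        r_delayed = r[k - d] if k - d >= 0 else r0
        r[k + 1] = r[k] + dt * (-a_cl * r[k] + g(w_conn * r_delayed))
    return r

r = simulate_error()
print(f"|r(0)| = {abs(r[0]):.3f}, |r(10)| = {abs(r[-1]):.3e}")
```

In this toy setting the decay rate dominates the sector gain of the delayed term, so the error is expected to decay; the general matrix case is what the LMI conditions of Section 3 address.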

To proceed, we need the following useful definition and lemmas.

Definition 1

Given a prescribed level of noise attenuation \(\gamma> 0\), find a proper state estimator (4) such that the equilibrium point of the resulting error system (5) with \(w(t)=0\) is globally asymptotically stable, and

\[\|\bar{z}(t)\|_{2}<\gamma\|w(t)\|_{2} \tag{6}\]

under zero-initial conditions for all nonzero \(w(t)\in\mathcal {L}_{2}[0,\infty)\), where \(\|x(t)\|_{2}= \sqrt{\int_{0}^{\infty}x^{T}(t)x(t)\,dt}\). In this case, the error system (5) is said to be globally asymptotically stable with \(\mathcal{H}_{\infty}\) performance γ.
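As a worked illustration of the \(\mathcal{L}_{2}\) norm in Definition 1, the following Python sketch evaluates \(\|w(t)\|_{2}\) for the noise \(w(t)=\cos(t)e^{-12t}\) used in Example 1 and compares it with the closed-form value. The truncation of the integral at \(t=5\), the grid size, and the helper name `l2_norm` are assumptions of this sketch; the last line reads Definition 1 as the bound \(\|\bar{z}(t)\|_{2}<\gamma\|w(t)\|_{2}\) with the value \(\gamma=0.2555\) reported in Example 1.

```python
import numpy as np

def l2_norm(signal, t):
    """Approximate the L2 norm of a sampled scalar signal on a uniform
    grid t with the trapezoidal rule (hypothetical helper)."""
    y = signal ** 2
    dt = t[1] - t[0]
    integral = dt * (y.sum() - 0.5 * (y[0] + y[-1]))
    return np.sqrt(integral)

t = np.linspace(0.0, 5.0, 500_001)       # e^{-24 t} is negligible beyond t = 5
w = np.cos(t) * np.exp(-12.0 * t)        # noise input from Example 1
w_norm = l2_norm(w, t)

# Closed form: int_0^inf cos^2(t) e^{-24 t} dt = 1/48 + 12/580.
w_norm_exact = np.sqrt(1.0 / 48.0 + 12.0 / 580.0)

gamma = 0.2555                           # performance index reported in Example 1
print(f"||w||_2 ~= {w_norm:.6f} (closed form {w_norm_exact:.6f})")
print(f"implied bound on the estimation error norm: {gamma * w_norm:.6f}")
```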

Lemma 1

([35])

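Let \(f_{1}, f_{2}, \ldots , f_{N}: R^{m}\rightarrow R\) have positive values in an open subset D of \(R^{m}\). Then the reciprocally convex combination of \(f_{i}\) over D satisfies

\[\min_{\{\alpha_{i}\mid\alpha_{i}>0,\ \sum_{i}\alpha_{i}=1\}}\sum_{i}\frac{1}{\alpha_{i}}f_{i}(t)=\sum_{i}f_{i}(t)+\max_{g_{i,j}(t)}\sum_{i\neq j}g_{i,j}(t)\]

subject to

\[\left\{g_{i,j}: R^{m}\rightarrow R,\ g_{j,i}(t)=g_{i,j}(t),\ \begin{bmatrix}f_{i}(t)&g_{i,j}(t)\\ g_{i,j}(t)&f_{j}(t)\end{bmatrix}\geq0\right\}.\]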
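In delay-dependent analysis, the reciprocally convex approach of [35] is commonly applied in the following matrix form: for \(\alpha\in(0,1)\), \(R>0\), and any S such that the block matrix with diagonal blocks R and off-diagonal blocks S, \(S^{T}\) is positive semi-definite, \(\frac{1}{\alpha}x^{T}Rx+\frac{1}{1-\alpha}y^{T}Ry\) is bounded below by the quadratic form of that block matrix in \((x, y)\). The Python sketch below checks this bound on random samples. The matrices, the choice \(S=0.5R\), and the helper name `gap` are assumptions of this illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 3
B = rng.standard_normal((n, n))
R = B @ B.T + n * np.eye(n)            # R > 0
S = 0.5 * R                            # one admissible choice of S

Psi = np.block([[R, S], [S.T, R]])     # must be positive semi-definite
assert np.linalg.eigvalsh(Psi).min() >= -1e-10

def gap(alpha, x, y):
    """Left side minus right side of the reciprocally convex bound."""
    lhs = x @ R @ x / alpha + y @ R @ y / (1.0 - alpha)
    xy = np.concatenate([x, y])
    return lhs - xy @ Psi @ xy

gaps = [gap(a, rng.standard_normal(n), rng.standard_normal(n))
        for a in np.linspace(0.01, 0.99, 99)]
print(f"smallest gap over 99 samples: {min(gaps):.4f}")
```

The bound does not depend on \(\alpha\), which is what allows terms weighted by \(1/(h(t)-h_{1})\) and \(1/(h_{2}-h(t))\) to be handled without knowing \(h(t)\).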

Lemma 2

([22])

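For a given matrix \(R>0\), the following inequality holds for all continuously differentiable functions \(x: [a,b]\rightarrow R^{n}\):

\[\int_{a}^{b}\dot{x}^{T}(s)R\dot{x}(s)\,ds\geq\frac{1}{b-a}\bigl(x(b)-x(a)\bigr)^{T}R\bigl(x(b)-x(a)\bigr)+\frac{3}{b-a}\Omega^{T}R\Omega,\]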

where \(\Omega=x(b)+x(a)-\frac{2}{b-a}\int_{a}^{b}x(s)\,ds\).
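The gain of Wirtinger’s inequality over Jensen’s inequality can be seen numerically. The Python sketch below takes the Wirtinger-based bound of [22] as the Jensen term \(\frac{1}{b-a}(x(b)-x(a))^{T}R(x(b)-x(a))\) plus the correction \(\frac{3}{b-a}\Omega^{T}R\Omega\), and compares both bounds with the integral \(\int_{a}^{b}\dot{x}^{T}(s)R\dot{x}(s)\,ds\). The trajectory, the matrix R, the interval, and the helper name `trapz` are assumptions chosen for illustration.

```python
import numpy as np

def trapz(y, s):
    """Trapezoidal rule along axis 0 on a uniform grid s (hypothetical helper)."""
    ds = s[1] - s[0]
    return ds * (y.sum(axis=0) - 0.5 * (y[0] + y[-1]))

a, b = 0.0, 2.0
s = np.linspace(a, b, 200_001)
x = np.stack([np.sin(3.0 * s), np.exp(-s)], axis=1)            # sample trajectory x(s)
dx = np.stack([3.0 * np.cos(3.0 * s), -np.exp(-s)], axis=1)    # its derivative
R = np.array([[2.0, 0.5], [0.5, 1.0]])                         # R > 0

lhs = trapz(np.einsum("ki,ij,kj->k", dx, R, dx), s)            # integral of dx^T R dx
delta = x[-1] - x[0]                                           # x(b) - x(a)
omega = x[-1] + x[0] - 2.0 / (b - a) * trapz(x, s)             # Omega of Lemma 2
jensen = delta @ R @ delta / (b - a)
wirtinger = jensen + 3.0 / (b - a) * (omega @ R @ omega)
print(f"integral = {lhs:.4f}, Wirtinger = {wirtinger:.4f}, Jensen = {jensen:.4f}")
```

Because the correction term is a nonnegative quadratic form in Ω, the Wirtinger bound is never smaller than the Jensen bound.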

3 Main results

In this section, we first establish a delay-dependent sufficient condition under which system (5) is asymptotically stable with a prescribed \(\mathcal{H_{\infty}}\) performance γ.

Theorem 1

For given scalars \(0< h_{1}< h_{2}\), \(\mu, \gamma>0\), \(K_{1}=\operatorname{diag}\{k^{-}_{1}, k^{-}_{2}, \ldots, k^{-}_{n}\}\), and \(K_{2}=\operatorname{diag}\{k^{+}_{1}, k^{+}_{2}, \ldots, k^{+}_{n}\}\), the error system (5) is globally asymptotically stable with \(\mathcal{H_{\infty}}\) performance γ if there exist real matrices \(P>0\), \(Q>0\), \(Z_{1}>0\), \(Z_{2}>0\), \(Z_{3}>0\), \(Z_{4}>0\), \(R>0\), \(T_{1}=\operatorname{diag}\{t_{11}, t_{12}, \ldots, t_{1n}\}>0\), \(T_{2}=\operatorname{diag}\{t_{21}, t_{22}, \ldots, t_{2n}\}>0\), and matrices \(S_{11}\), \(S_{12}\), \(S_{21}\), \(S_{22}\), M, G with appropriate dimensions such that the following LMIs are satisfied:

where

Moreover, the gain matrix K of the state estimator of (4) can be designed as \(K=M^{-1}G\).
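Once an LMI solver has returned M and G, the gain follows from \(K=M^{-1}G\). The Python sketch below shows one way to recover K by solving \(MK=G\) rather than forming the inverse explicitly. The numerical values of M and G are hypothetical placeholders, not solutions of the LMIs in Theorem 1.

```python
import numpy as np

# Hypothetical LMI solution (illustrative values only, not from the paper).
M = np.array([[2.0, 0.3],
              [0.1, 1.5]])
G = np.array([[0.4],
              [-0.6]])

# Solve M K = G instead of computing M^{-1} explicitly.
K = np.linalg.solve(M, G)
print(K)
```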

Proof

Construct the following Lyapunov-Krasovskii functional:

with

where \(h_{12}=h_{2}-h_{1}\).

The time derivative of \(V(t)\) along the trajectory of system (5) is given by

where

where \(u=\frac{1}{h_{1}}\int_{t-h_{1}}^{t}r(s)\,ds\), \(v_{1}=\frac{1}{h(t)-h_{1}}\int_{t-h(t)}^{t-h_{1}}r(s)\,ds\), \(v_{2}=\frac {1}{h_{2}-h(t)}\int_{t-h_{2}}^{t-h(t)}r(s)\,ds\).

Based on Lemma 2, one has

By employing Lemma 1 and Lemma 2, we can derive

where

Calculating \(\dot{V}_{4}(t)\), \(\dot{V}_{5}(t)\) yields

According to (3), for any positive definite diagonal matrices \(T_{1}\), \(T_{2}\), the following inequalities hold:

Furthermore, for any matrix M with appropriate dimension, the following equation holds:

Under the zero-initial condition, it is obvious that \(V(r(t))|_{t=0}=0\). For convenience, let

Then for any nonzero \(w(t)\in\mathcal{L}_{2}[0,\infty)\), we obtain

where

and \(\Phi=[\Phi_{ij}]_{11\times11}\) with \(\Phi_{11}=\Pi_{11}+H^{T}H\), \(\Phi_{ij}=\Pi_{ij} \) (\(i\leq j\), \(1\leq i \leq11\), \(2 \leq j \leq11\)). By the Schur complement, \(\Phi<0\) is equivalent to \(\Pi^{*}<0\). Hence, if (10) holds, the error system (5) has the guaranteed \(\mathcal{H}_{\infty}\) performance in the sense of Definition 1.
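The Schur complement step can be checked on a small example. The Python sketch below uses the standard fact that \(\Pi+H^{T}H<0\) holds if and only if the block matrix with blocks Π, \(H^{T}\), H, and \(-I\) is negative definite. The toy matrices and the helper names `is_neg_def` and `schur_pair` are assumptions of this sketch and are unrelated to the \(11\times11\) matrix Φ of the proof.

```python
import numpy as np

def is_neg_def(X):
    """True if the symmetric matrix X is negative definite."""
    return bool(np.linalg.eigvalsh(X).max() < 0.0)

def schur_pair(Pi, H):
    """Return (Pi + H^T H < 0, [[Pi, H^T], [H, -I]] < 0)."""
    p = H.shape[0]
    big = np.block([[Pi, H.T], [H, -np.eye(p)]])
    return is_neg_def(Pi + H.T @ H), is_neg_def(big)

Pi = -2.0 * np.eye(2)                            # toy stand-in for Pi
small_H = np.array([[1.0, 0.0]])
large_H = np.array([[2.0, 0.0]])
print(schur_pair(Pi, small_H))                   # the two tests agree
print(schur_pair(Pi, large_H))                   # the two tests agree
```

The block form is the one an LMI solver needs, because it is linear in the unknowns even though \(H^{T}H\) enters Φ quadratically in H.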

In the sequel, we will show that the equilibrium point of (5) with \(w(t)=0\) is globally asymptotically stable if (10) holds. When \(w(t)=0\), the error system (5) becomes

We still consider the Lyapunov-Krasovskii functional candidate (12) and calculate its time-derivative along the trajectory of (27). We can easily obtain

where

and \(\bar{\Pi}^{*}=[\bar{\Pi}^{*}_{ij}]_{10\times10}\) with

It is obvious that if \(\Pi^{*}_{[h(t)=h_{1}]}<0\), \(\Pi ^{*}_{[h(t)=h_{2}]}<0\), then \(\bar{\Pi}^{*}_{[h(t)=h_{1}]}<0\), \(\bar{\Pi }^{*}_{[h(t)=h_{2}]}<0\). So system (27) is globally asymptotically stable. Therefore, if (10) holds, the state estimator (4) for the static neural network (1) achieves the guaranteed \(\mathcal{H}_{\infty}\) performance and ensures the global asymptotic stability of the error system (5). This completes the proof. □

Remark 2

The time-varying delay in [28–33] was always assumed to satisfy \(0\leq h(t)\leq h\), which is a special case of the condition (2) in this paper. Therefore, compared with [28–33], the time-varying delay discussed in this paper is less restrictive. In [30, 31], for the sake of converting a nonlinear matrix inequality into a linear matrix inequality, some inequalities such as \(-PT^{-1}P\leq-2P+T\), which lack freedom and may lead to some conservativeness for the derived results, were utilized in the discussion of the guaranteed \(\mathcal{H}_{\infty}\) performance state estimation problem. In our paper, the zero equality (23) is used to avoid this problem, which can give much flexibility in solving LMIs. In [32], Jensen’s integral inequality, which ignored some terms and may introduce conservativeness to some extent, was employed to estimate the upper bound of the time derivative of the Lyapunov-Krasovskii functional. In this paper, Wirtinger’s integral inequality, which takes information not only on the state and the delayed state of a system, but also on the integral of the state over the period of the delay into account, is exploited to give an estimation of the time derivative of the Lyapunov-Krasovskii functional.
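The inequality \(-PT^{-1}P\leq-2P+T\) mentioned in Remark 2 follows from \((P-T)T^{-1}(P-T)\geq0\) for symmetric P and \(T>0\), and it is tight only when \(P=T\), which is one way to see the conservativeness it may introduce. The Python sketch below checks both facts on random positive definite matrices. The sampling scheme and the helper names `random_pd` and `slack` are assumptions of this illustration.

```python
import numpy as np

rng = np.random.default_rng(1)

def random_pd(n):
    """Random symmetric positive definite n x n matrix."""
    B = rng.standard_normal((n, n))
    return B @ B.T + 0.1 * np.eye(n)

def slack(P, T):
    """Smallest eigenvalue of (-2P + T) - (-P T^{-1} P),
    which equals (P - T) T^{-1} (P - T)."""
    D = -2.0 * P + T + P @ np.linalg.solve(T, P)
    return np.linalg.eigvalsh(0.5 * (D + D.T)).min()

slacks = [slack(random_pd(4), random_pd(4)) for _ in range(100)]
P = random_pd(4)
print(f"min slack over samples: {min(slacks):.2e}; slack at T = P: {slack(P, P):.2e}")
```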

Remark 3

Based on a Lyapunov-Krasovskii functional with triple integrals involving augmented terms, the guaranteed \(\mathcal{H}_{\infty}\) performance state estimation problem of static neural networks with interval time-varying delay was investigated in [34], and a sufficient criterion guaranteeing the global asymptotic stability of the error system (5) for a given \(\mathcal{H}_{\infty}\) performance index was obtained. Since the augmented Lyapunov-Krasovskii functional contained more information, the criterion derived in [34] was less conservative than most of the previous results [28–33]. However, the computational burden increased at the same time because of the augmented Lyapunov-Krasovskii functional. Compared with the results in [34], the advantages of the method used in this paper lie mainly in two aspects. First, the Lyapunov-Krasovskii functional is simpler than that in [34], since the triple integrals and other augmented terms in [34] are not needed, which reduces the computational complexity. Second, in the proof of Theorem 1, Wirtinger’s integral inequality, which includes Jensen’s integral inequality as a special case, and a reciprocally convex approach are employed to estimate the upper bound of the derivative of the Lyapunov-Krasovskii functional, which yields less conservative results.

When \(0\leq h(t)\leq h\), that is, the lower bound of the time-varying delay is 0, we introduce the Lyapunov-Krasovskii functional as follows:

with

By a similar method to that employed in Theorem 1, we can obtain the following corollary.

Corollary 1

For given scalars h, μ, and \(\gamma>0\), the error system (5) is globally asymptotically stable with the \(\mathcal{H_{\infty}}\) performance γ if there exist real matrices \(P>0\), \(Q>0\), \(Z_{2}>0\), \(Z_{4}>0\), \(R>0\), \(T_{1}=\operatorname{diag}\{t_{11}, t_{12}, \ldots, t_{1n}\}>0\), \(T_{2}=\operatorname{diag}\{t_{21}, t_{22}, \ldots, t_{2n}\}>0\), and matrices \(S_{11}\), \(S_{12}\), \(S_{21}\), \(S_{22}\), M, G with appropriate dimensions such that the following LMIs are satisfied:

where

Moreover, the gain matrix K of the state estimator of (4) can be designed as \(K=M^{-1}G\).

Remark 4

As an effective approach to establishing delay-dependent stability criteria for delayed neural networks, the complete delay-decomposing approach was proposed in [21], which significantly reduced the conservativeness of the derived stability criteria. A novel Lyapunov-Krasovskii functional decomposing the delay in all integral terms was constructed. Since delay information can be taken fully into account by dividing the delay interval into several subintervals, less conservative results may be obtained. The computational burden of the complete delay-decomposing approach increases with the number of subintervals, so the number of subintervals should be chosen properly to balance conservativeness against computational burden. Jensen’s inequality was used to estimate the derivative of the Lyapunov-Krasovskii functional in [21]. The conservativeness of the result derived in this paper can be further reduced by combining our method with the complete delay-decomposing approach [21].

Remark 5

The integral inequality method and the free-weighting matrix method are the two main techniques for dealing with the bounds of the integrals that appear in the derivative of the Lyapunov-Krasovskii functional in the stability analysis of delayed neural networks. A free-matrix-based integral inequality was developed and applied to the stability analysis of systems with time-varying delay in [23]. This inequality includes Wirtinger’s inequality as a special case, and the free matrices provide freedom in reducing its conservativeness. It was used to derive improved delay-dependent stability criteria, although the computational burden increased because of the introduction of free-weighting matrices. The free-matrix-based integral inequality in [23] made use of information on only a single integral of the system state. In contrast, a new integral inequality based on information on a double integral of the system state was developed in [24]. It also includes the Wirtinger-based integral inequality. By employing the free-matrix-based integral inequality [23] or the novel integral inequality in [24], less conservative results than those obtained in this paper may be derived.

4 Examples

Example 1

Consider the system with the following parameters:

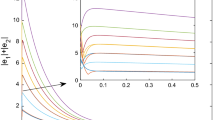

Based on Theorem 1, we can derive the optimal \(\mathcal{H}_{\infty}\) performance index for different \(h_{1}\), \(h_{2}\), and μ. The results are stated in Table 1. From Table 1, it is observed that Theorem 1 in this paper provides less conservative results than [34]. It is worth pointing out that the criterion in [34] fails when \(h_{1}=0.5\), \(h_{2}=0.9\), \(\mu=1.2\), whereas Theorem 1 in this paper still provides a feasible solution for the optimal \(\mathcal{H}_{\infty}\) performance index. It should also be noted that the number of variables involved in [34] is 207, whereas Theorem 1 needs only 72 variables. Now, let \(h(t)=0.7+0.2\sin(6t)\), \(f(x)=0.5\tanh(x)\), and \(w(t)=\cos(t)e^{-12t}\); then \(0.5\leq h(t) \leq0.9\) and \(\dot{h}(t)\leq1.2\). By employing the MATLAB LMI toolbox to solve the LMIs of Theorem 1, the gain matrix K of the guaranteed \(\mathcal{H}_{\infty}\) performance state estimator can be designed as

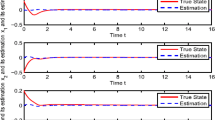

with the optimal \(\mathcal{H}_{\infty}\) performance index \(\gamma=0.2555\). Figure 1 shows the response of the error \(r(t)\) under the initial condition \(r(0)=[-1\ 1]^{T}\). It confirms the effectiveness of the presented approach to the design of the state estimator with guaranteed \(\mathcal{H}_{\infty}\) performance for delayed static neural networks.

Figure 1 The response of the error \(r(t)\) for the given initial value in Example 1.
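The delay bounds used in Example 1 can be verified directly. The Python sketch below samples \(h(t)=0.7+0.2\sin(6t)\) and its analytic derivative \(\dot{h}(t)=1.2\cos(6t)\) and compares them with \(h_{1}=0.5\), \(h_{2}=0.9\), and \(\mu=1.2\). The time horizon and grid size are arbitrary choices made for this check.

```python
import numpy as np

t = np.linspace(0.0, 10.0, 1_000_001)
h = 0.7 + 0.2 * np.sin(6.0 * t)        # delay from Example 1
h_dot = 1.2 * np.cos(6.0 * t)          # analytic derivative of h(t)

h1, h2, mu = 0.5, 0.9, 1.2             # bounds stated in Example 1
print(f"h(t) in [{h.min():.4f}, {h.max():.4f}], max h'(t) = {h_dot.max():.4f}")
```

Since \(\mu=1.2>1\) here, the delay is allowed to vary faster than time itself, a case in which criteria that require \(\dot{h}(t)<1\) would not apply.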

Example 2

Consider the system with the following parameters:

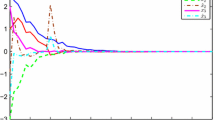

For different h and μ, the optimal \(\mathcal{H_{\infty}}\) performance index γ can be obtained by Theorem 1 in [31], Theorem 3.1 in [32], Theorem 3.1 in [34], and Corollary 1 in this paper. The results are summarized in Table 2. From Table 2, it is clear that a better performance can be derived by the approach proposed in our paper. When \(0\leq h(t) \leq1\), \(\dot {h}(t)\leq0.9\), \(\gamma=1.2361\), the state response of the error system under the initial condition \(r(0)=[-1\ 1\ {-}0.5]^{T}\) is shown in Figure 2.

Figure 2 The response of the error \(r(t)\) for the given initial value in Example 2.

5 Conclusions

In this paper, the problem of delay-dependent \(\mathcal{H}_{\infty}\) state estimation of static neural networks with interval time-varying delay has been investigated. Based on Lyapunov stability theory, Wirtinger’s integral inequality, and a reciprocally convex approach, some improved sufficient conditions which guarantee the global asymptotic stability of the error system with guaranteed \(\mathcal{H}_{\infty}\) performance have been proposed. The estimator gain matrix can be determined by solving the LMIs. The effectiveness of the theoretical results has been illustrated by two numerical examples. In addition, how to utilize more accurate inequalities, such as the integral inequalities in [23, 24], with less computational burden will be investigated in our future work.

References

1. Qiao, H, Peng, J, Xu, ZB, Zhang, B: A reference model approach to stability analysis of neural networks. IEEE Trans. Syst. Man Cybern., Part B, Cybern. 33, 925-936 (2003)

2. Xu, ZB, Qiao, H, Peng, J, Zhang, B: A comparative study of two modeling approaches in neural networks. Neural Netw. 17, 73-85 (2004)

3. Beaufay, F, Abdel-Magrid, Y, Widrow, B: Application of neural networks to load-frequency control in power systems. Neural Netw. 7, 183-194 (1994)

4. Niculescu, SI, Gu, K: Advances in Time-Delay Systems. Springer, Berlin (2004)

5. Liu, XG, Wu, M, Martin, RR, Tang, ML: Delay-dependent stability analysis for uncertain neutral systems with time-varying delays. Math. Comput. Simul. 75, 15-27 (2007)

6. Liu, XG, Wu, M, Martin, RR, Tang, ML: Stability analysis for neutral systems with mixed delays. J. Comput. Appl. Math. 202, 478-497 (2007)

7. Guo, SJ, Tang, XH, Huang, LH: Stability and bifurcation in a discrete system of two neurons with delays. Nonlinear Anal., Real World Appl. 9, 1323-1335 (2008)

8. Wang, Q, Dai, BX: Existence of positive periodic solutions for a neutral population model with delays and impulse. Nonlinear Anal. 69, 3919-3930 (2008)

9. Zhang, XM, Han, QL: New Lyapunov-Krasovskii functionals for global asymptotic stability of delayed neural networks. IEEE Trans. Neural Netw. 20, 533-539 (2009)

10. Xu, CJ, Tang, XH, Liao, MX: Stability and bifurcation analysis of a delayed predator-prey model of prey dispersal in two-patch environments. Appl. Math. Comput. 216, 2920-2936 (2010)

11. Luo, ZG, Dai, BX, Wang, Q: Existence of positive periodic solutions for a nonautonomous neutral delay n-species competitive model with impulses. Nonlinear Anal., Real World Appl. 11, 3955-3967 (2010)

12. Zhang, XM, Han, QL: Global asymptotic stability for a class of generalized neural networks with interval time-varying delays. IEEE Trans. Neural Netw. 22, 1180-1192 (2011)

13. Liao, MX, Tang, XH, Xu, CJ: Dynamics of a competitive Lotka-Volterra system with three delays. Appl. Math. Comput. 217, 10024-10034 (2011)

14. Zeng, HB, He, Y, Wu, M, Xiao, HQ: Improved conditions for passivity of neural networks with a time-varying delay. IEEE Trans. Cybern. 44, 785-792 (2014)

15. Xu, Y, He, ZM, Wang, PG: pth moment asymptotic stability for neutral stochastic functional differential equations with Lévy processes. Appl. Math. Comput. 269, 594-605 (2015)

16. Ji, MD, He, Y, Wu, M, Zhang, CK: Further results on exponential stability of neural networks with time-varying delay. Appl. Math. Comput. 256, 175-182 (2015)

17. Zeng, HB, He, Y, Wu, M, Xiao, SP: Stability analysis of generalized neural networks with time-varying delays via a new integral inequality. Neurocomputing 161, 148-154 (2015)

18. Qiu, SB, Liu, XG, Shu, YJ: New approach to state estimator for discrete-time BAM neural networks with time-varying delay. Adv. Differ. Equ. 2015, 189 (2015)

19. Xu, Y, He, ZM: Exponential stability of neutral stochastic delay differential equations with Markovian switching. Appl. Math. Lett. 52, 64-73 (2016)

20. Zhang, CK, He, Y, Jiang, L, Wu, QH, Wu, M: Delay-dependent stability criteria for generalized neural networks with two delay components. IEEE Trans. Neural Netw. Learn. Syst. 25, 1263-1276 (2014)

21. Zeng, HB, He, Y, Wu, M, Zhang, CF: Complete delay-decomposing approach to asymptotic stability for neural networks with time-varying delays. IEEE Trans. Neural Netw. 22, 806-812 (2011)

22. Seuret, A, Gouaisbaut, F: Wirtinger-based integral inequality: application to time-delay systems. Automatica 49, 2860-2866 (2013)

23. Zeng, HB, He, Y, Wu, M, She, J: Free-matrix-based integral inequality for stability analysis of systems with time-varying delay. IEEE Trans. Autom. Control 60, 2768-2772 (2015)

24. Zeng, HB, He, Y, Wu, M, She, J: New results on stability analysis for systems with discrete distributed delay. Automatica 60, 189-192 (2015)

25. He, Y, Wang, QG, Wu, M, Lin, C: Delay-dependent state estimation for delayed neural networks. IEEE Trans. Neural Netw. 17, 1077-1081 (2006)

26. Zheng, CD, Ma, M, Wang, Z: Less conservative results of state estimation for delayed neural networks with fewer LMI variables. Neurocomputing 74, 974-982 (2011)

27. Huang, H, Huang, T, Chen, X: Mode-dependent approach to state estimation of recurrent neural networks with Markovian jumping parameters and mixed delays. Neural Netw. 46, 50-61 (2013)

28. Huang, H, Feng, G: Delay-dependent \(\mathcal{H}_{\infty}\) and generalized \(\mathcal{H}_{2}\) filtering for delayed neural networks. IEEE Trans. Circuits Syst. 56, 846-857 (2009)

29. Huang, H, Feng, G, Cao, JD: Guaranteed performance state estimation of static neural networks with time-varying delay. Neurocomputing 74, 606-616 (2011)

30. Duan, QH, Su, HY, Wu, ZG: \(\mathcal{H}_{\infty}\) state estimation of static neural networks with time-varying delay. Neurocomputing 97, 16-21 (2012)

31. Huang, H, Huang, TW, Chen, XP: Guaranteed \(\mathcal{H}_{\infty}\) performance state estimation of delayed static neural networks. IEEE Trans. Circuits Syst. 60, 371-375 (2013)

32. Liu, YJ, Lee, SM, Kwon, OM, Park, JH: A study on \(\mathcal{H}_{\infty}\) state estimation of static neural networks with time-varying delays. Appl. Math. Comput. 226, 589-597 (2014)

33. Lakshmanan, S, Mathiyalagan, K, Park, JH, Sakthivel, R, Rihan, FA: Delay-dependent \(\mathcal{H}_{\infty}\) state estimation of neural networks with mixed time-varying delays. Neurocomputing 129, 392-400 (2014)

34. Syed Ali, M, Saravanakumar, R, Arik, S: Novel \(\mathcal{H}_{\infty}\) state estimation of static neural networks with interval time-varying delays via augmented Lyapunov-Krasovskii functional. Neurocomputing 171, 949-954 (2016)

35. Park, P, Ko, JW, Jeong, C: Reciprocally convex approach to stability of systems with time-varying delays. Automatica 47, 235-238 (2011)

Acknowledgements

This work is partly supported by the National Natural Science Foundation of China under grant numbers 61271355 and 61375063 and by the ZDXYJSJGXM 2015. The authors would like to thank the anonymous reviewers for their constructive comments, which have greatly improved the quality of this paper.

Additional information

Competing interests

The authors declare that they have no competing interests.

Authors’ contributions

All authors contributed equally to the writing of this paper. All authors read and approved the final manuscript.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Shu, Y., Liu, X. Improved results on \(\mathcal{H}_{\infty}\) state estimation of static neural networks with interval time-varying delay. J Inequal Appl 2016, 48 (2016). https://doi.org/10.1186/s13660-016-0990-7

DOI: https://doi.org/10.1186/s13660-016-0990-7