Abstract

In this paper, state estimation for discrete-time bidirectional associative memory (BAM) neural networks with time-varying delay is discussed. Under a weaker assumption on the activation functions, by constructing a novel Lyapunov-Krasovskii functional (LKF), a set of sufficient conditions is derived in terms of linear matrix inequalities (LMIs) for the existence of a state estimator such that the error system is globally exponentially stable. Based on the delay partitioning method and the reciprocally convex approach, less conservative stability criteria with lower computational complexity are obtained. Finally, a numerical example is given to show the effectiveness of the derived result.

1 Introduction

In the past few years, neural networks have been widely studied and applied in various fields such as load frequency control, pattern recognition, static image processing, associative memories, mechanics of structures and materials, optimization and other scientific areas (see [1–16]). The BAM neural network model is an extension of the unidirectional auto-associator of Hopfield. A BAM neural network is composed of neurons arranged in two layers, the X-layer and the Y-layer. The neurons in one layer are fully interconnected to the neurons in the other layer, while there is no interconnection among neurons in the same layer [17]. BAM neural networks have potential applications in many fields such as signal processing and artificial intelligence. In addition, time delays, such as signal transmission, propagation and signal processing delays, are inevitable in many biological and artificial neural networks. It is well known that time delays are a source of oscillation and instability in neural networks and can change their dynamic characteristics dramatically. The stability of neural networks has therefore drawn particular research interest. The stability of BAM neural networks has also been widely studied, and many results have been reported (see [18, 19] and the references cited therein).

In relatively large-scale neural networks, the state components of the neural network model are often unknown or not available for direct measurement; normally only partial information about the neuron states is available in the network outputs. In order to make use of the neural networks in practice, it is important and necessary to estimate the neuron states through available measurements. Recently, the state estimation problem for neural networks has received increasing research interest (see [20–26]). The state estimation problem for delayed neural networks was proposed in [20]. Mou et al. [21] studied state estimation for discrete-time neural networks with time-varying delays and derived a sufficient condition for the design of a state estimator that guarantees the global exponential stability of the error system. The problem of state estimation for discrete-time delayed neural networks with fractional uncertainties and sensor saturations was studied in [22]. In [26], the state estimation problem for a new class of discrete-time neural networks with Markovian jumping parameters as well as mode-dependent mixed time-delays was studied. New techniques were developed to deal with the mixed time-delays in the discrete-time setting, and a novel Lyapunov-Krasovskii functional was put forward to reflect the mode-dependent time-delays. Sufficient conditions were established in terms of linear matrix inequalities (LMIs) that guarantee the existence of the state estimators.

However, very little attention has been paid to state estimation for BAM neural networks with time-varying delay. The robust delay-dependent state estimation problem for a class of discrete-time BAM neural networks with time-varying delays is considered in [27]. By using a Lyapunov-Krasovskii functional together with the linear matrix inequality (LMI) approach, a new set of sufficient conditions is derived there for the existence of a state estimator such that the error system is asymptotically stable. The problem of state estimator design for BAM neural networks with leakage delays is studied in [28], where a sufficient condition is established to ensure that the error system is globally asymptotically stable. It should be pointed out that only the global asymptotic stability of the error system was discussed in [27, 28]. To the best of our knowledge, the exponential stability of the error system for discrete-time BAM neural networks with time-varying delay has not been fully addressed yet. Motivated by this consideration, in this paper we aim to establish some new sufficient conditions for the existence of state estimators which guarantee that the error system for discrete-time BAM neural networks with time-varying delays is globally exponentially stable. These conditions are expressed in the form of LMIs.

The rest of this paper is organized as follows. In Section 2, some notations and lemmas, which will be used in this paper, are given. In Section 3, by utilizing both the delay partitioning method and the reciprocally convex approach, we construct a novel Lyapunov-Krasovskii functional, and a new set of sufficient conditions is derived for the global exponential stability of the error system. In Section 4, an illustrative example is provided to demonstrate the effectiveness of the proposed result.

2 Problem formulation and preliminaries

For the sake of convenience, we first introduce some notations. ∗ denotes the symmetric term in a symmetric matrix. For an arbitrary matrix A, \(A^{T}\) stands for the transpose of A, and \(A^{-1}\) denotes the inverse of A. \(R^{n}\) denotes the n-dimensional Euclidean space, and \(R^{n\times n}\) denotes the set of all \(n\times n\) real matrices. If A is a symmetric matrix, \(A>0\) (\(A\geq0\)) means that A is positive definite (positive semidefinite). Similarly, \(A<0\) (\(A\leq0\)) means that A is negative definite (negative semidefinite). \(\lambda_{m}(A)\), \(\lambda_{M}(A)\) (also written \(\lambda_{\min}(A)\), \(\lambda_{\max}(A)\)) denote the minimum and maximum eigenvalues of a symmetric matrix A, respectively. \(\|A\|=\sqrt{\lambda_{M}(A^{T}A)}\) denotes the spectral norm of A.

In this paper, we consider the following class of discrete-time BAM neural networks with time-varying delays:

for \(k =1,2,\ldots\), where \(x(k)=(x_{1}(k),x_{2}(k),\ldots,x_{n}(k))^{T}\), \(y(k)=(y_{1}(k),y_{2}(k),\ldots,y_{n}(k))^{T}\) are the neuron state vectors; \(A=\operatorname{diag}(a_{1},a_{2},\ldots,a_{n})\), and \(B=\operatorname{diag} (b_{1},b_{2},\ldots,b_{n})\) are the state feedback coefficient matrices, \(|a_{i}|<1\), \(|b_{i}|<1\), \(i=1,2,\ldots,n\); \(C=[c_{ij}]_{n\times n}\), \(D=[d_{ij}]_{n\times n}\) are the connection weight matrices; \(E=[e_{ij}]_{n\times n}\), and \(W=[w_{ij}]_{n\times n}\) are the delayed connection weight matrices; \(f(y(k))=(f_{1}(y_{1}(k)), f_{2}(y_{2}(k)),\ldots,f_{n}(y_{n}(k)))^{T}\), and \(g(x(k))=(g_{1}(x_{1}(k)), g_{2}(x_{2}(k)),\ldots,g_{n}(x_{n}(k)))^{T}\) denote the neuron activation functions; \(h(k)\) and \(d(k)\) denote the time-varying delays satisfying \(0\leq h_{m} \leq h(k)\leq h_{M}\), \(0 \leq d_{m} \leq d(k)\leq d_{M}\), where \(h_{m}\), \(h_{M}\), \(d_{m}\), \(d_{M}\) are known positive integers; I and J denote the constant external inputs from outside the network.
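A form of system (1) consistent with the coefficient definitions above and with the entries of \(\Phi\) in Theorem 1 is sketched below. It is a reconstruction under the assumption that the network has the standard two-layer BAM structure, with the delay \(d(k)\) acting on the Y-layer states and \(h(k)\) on the X-layer states:

\[
\begin{aligned}
x(k+1) &= A x(k) + C f\bigl(y(k)\bigr) + E f\bigl(y(k-d(k))\bigr) + I, \\
y(k+1) &= B y(k) + D g\bigl(x(k)\bigr) + W g\bigl(x(k-h(k))\bigr) + J .
\end{aligned}
\]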

Throughout this paper, we make the following assumptions.

Assumption 1

There exist constants \(F_{i}^{-}\), \(F_{i}^{+}\), \(G_{i}^{-}\) and \(G_{i}^{+}\) such that

Remark 1

In Assumption 1, the constants \(F_{i}^{-}\), \(F_{i}^{+}\), \(G_{i}^{-}\) and \(G_{i}^{+}\) are allowed to be positive, negative, or zero. So the activation functions in this paper are more general than nonnegative sigmoidal functions used in [29, 30]. The stability condition developed in this paper is less restrictive than that in [29, 30].
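As an illustration of Remark 1, the sketch below estimates the sector bounds numerically for the two activation functions used in Section 4, \(0.8\tanh(\cdot)\) and \(-0.4\tanh(\cdot)\). It assumes that Assumption 1 takes the usual sector-bounded form \(F_{i}^{-}\leq (f_{i}(s_{1})-f_{i}(s_{2}))/(s_{1}-s_{2})\leq F_{i}^{+}\) for \(s_{1}\neq s_{2}\); the helper name `slope_range` and the grid are hypothetical choices made only for this check.

```python
import numpy as np

def slope_range(f, lo=-5.0, hi=5.0, num=401):
    """Return (min, max) of the difference quotient of f over distinct grid points."""
    s = np.linspace(lo, hi, num)
    s1, s2 = np.meshgrid(s, s, indexing="ij")
    mask = s1 != s2                      # exclude the diagonal s1 == s2
    q = (f(s1[mask]) - f(s2[mask])) / (s1[mask] - s2[mask])
    return float(q.min()), float(q.max())

# Activation functions taken from the illustrative example.
f1 = lambda s: 0.8 * np.tanh(s)
f2 = lambda s: -0.4 * np.tanh(s)

f1_lo, f1_hi = slope_range(f1)   # stays inside [0, 0.8]
f2_lo, f2_hi = slope_range(f2)   # stays inside [-0.4, 0]
print(f1_lo, f1_hi, f2_lo, f2_hi)
```

The second function has a negative lower bound and a zero upper bound, which is the situation a nonnegative sigmoidal assumption would exclude.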

For presentation convenience, we denote

For relatively large-scale neural networks, it is difficult to acquire complete information about the neuron states from the network outputs. So, one often needs to utilize the estimated neuron states to achieve certain design objectives. We assume that the network measurements are as follows:

where \(z_{x}(k)\), \(z_{y}(k)\) are the measurement outputs, and \(M_{1}\), \(M_{2}\) are the known constant matrices with appropriate dimensions. Therefore, the full-order state estimator is of the form

where \(\widehat{x}(k)\), \(\widehat{y}(k)\) are the estimates of \(x(k)\), \(y(k)\), respectively, and \(K_{1}\), \(K_{2}\) are the gain matrices of the estimator to be designed later.

Let the estimation error be \(e_{1}(k)=x(k)-\widehat{x}(k)\) and \(e_{2}(k)=y(k)-\widehat{y}(k)\). Then the error system can be written as

By defining \(\xi_{1}(k)=f(y(k))-f(\widehat{y}(k))\), \(\xi _{1}(k-d(k))=f(y(k-d(k)))-f(\widehat{y}(k-d(k)))\), \(\xi _{2}(k)=g(x(k))-g(\widehat{x}(k))\), \(\xi_{2}(k-h(k))=g(x(k-h(k)))-g(\widehat{x}(k-h(k)))\), system (4) can be rewritten as
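Assuming linear measurements \(z_{x}(k)=M_{1}x(k)\), \(z_{y}(k)=M_{2}y(k)\) and a Luenberger-type estimator that corrects each layer with \(K_{1}[z_{x}(k)-M_{1}\widehat{x}(k)]\) and \(K_{2}[z_{y}(k)-M_{2}\widehat{y}(k)]\) (both are assumptions, chosen to agree with the terms \(XM_{1}\) and \(YM_{2}\) in Theorem 1), the rewritten error system would take the form

\[
\begin{aligned}
e_{1}(k+1) &= (A-K_{1}M_{1})e_{1}(k) + C\xi_{1}(k) + E\xi_{1}\bigl(k-d(k)\bigr), \\
e_{2}(k+1) &= (B-K_{2}M_{2})e_{2}(k) + D\xi_{2}(k) + W\xi_{2}\bigl(k-h(k)\bigr).
\end{aligned}
\]

The external inputs I and J cancel in the subtraction, so the error dynamics depend only on the estimator gains, the weight matrices and the activation differences.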

Before presenting the main results of the paper, we need the following definition and lemmas.

Definition 1

The error system (5) is said to be globally exponentially stable if there exist two scalars \(\lambda>0 \) and \(r>1\) such that

Lemma 1

[31]

For any positive definite matrix \(J\in R^{n\times n}\), two positive integers r and \(r_{0}\) satisfying \(r\geq r_{0}\geq1\), and vector function \(x(i)\in R^{n}\), one has
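Taking Lemma 1 to be the discrete Jensen inequality in its usual form, \(-(r-r_{0}+1)\sum_{i=r_{0}}^{r}x^{T}(i)Jx(i)\leq-(\sum_{i=r_{0}}^{r}x(i))^{T}J(\sum_{i=r_{0}}^{r}x(i))\) (an assumption about the exact statement), the following sketch checks it numerically. The function name `jensen_gap` and the random test data are hypothetical.

```python
import numpy as np

def jensen_gap(J, xs):
    """Return N * sum_i x_i^T J x_i - (sum_i x_i)^T J (sum_i x_i), with N = len(xs)."""
    xs = np.asarray(xs, dtype=float)
    n_terms = xs.shape[0]
    weighted_sum = n_terms * np.einsum("ij,jk,ik->", xs, J, xs)
    total = xs.sum(axis=0)
    return float(weighted_sum - total @ J @ total)

rng = np.random.default_rng(0)
G = rng.standard_normal((3, 3))
J = G @ G.T + 3.0 * np.eye(3)       # symmetric positive definite
xs = rng.standard_normal((6, 3))    # hypothetical samples x(r0), ..., x(r)

gap = jensen_gap(J, xs)                        # nonnegative by the inequality
gap_equal = jensen_gap(J, np.ones((4, 3)))     # identical vectors: equality case
print(gap, gap_equal)
```

The gap vanishes when all summands coincide, which is why the bound is tight for slowly varying error increments and conservative otherwise.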

Lemma 2

[32]

Let \(f_{1}, f_{2}, \ldots, f_{N}: R^{m}\mapsto R\) have positive values in an open subset D of \(R^{m}\). Then the reciprocally convex combination of \(f_{i}\) over D satisfies
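In stability proofs the reciprocally convex lemma of [32] is typically applied in the following two-term matrix form: if \(R>0\) and S satisfy \(\bigl[\begin{smallmatrix}R&S\\S^{T}&R\end{smallmatrix}\bigr]\geq0\), then \(\frac{1}{\alpha}x^{T}Rx+\frac{1}{1-\alpha}y^{T}Ry\geq[x^{T}\ y^{T}]\bigl[\begin{smallmatrix}R&S\\S^{T}&R\end{smallmatrix}\bigr][x^{T}\ y^{T}]^{T}\) for every \(\alpha\in(0,1)\). The sketch below checks this form numerically; the matrices and the helper `reciprocal_gap` are hypothetical, and the specialization to two terms is an assumption about how the lemma is used here.

```python
import numpy as np

def reciprocal_gap(R, S, x, y, alpha):
    """(1/alpha) x^T R x + (1/(1-alpha)) y^T R y minus the alpha-free lower bound."""
    lhs = (x @ R @ x) / alpha + (y @ R @ y) / (1.0 - alpha)
    z = np.concatenate([x, y])
    block = np.block([[R, S], [S.T, R]])
    return float(lhs - z @ block @ z)

rng = np.random.default_rng(1)
n = 3
G = rng.standard_normal((n, n))
R = G @ G.T + n * np.eye(n)             # symmetric positive definite
S = 0.1 * rng.standard_normal((n, n))   # small, so that [[R, S], [S^T, R]] >= 0

# The lemma requires the block matrix to be positive semidefinite.
block_min_eig = float(np.linalg.eigvalsh(np.block([[R, S], [S.T, R]])).min())

gaps = [reciprocal_gap(R, S, rng.standard_normal(n), rng.standard_normal(n), a)
        for a in np.linspace(0.05, 0.95, 19)]
print(block_min_eig, min(gaps))
```

The lower bound does not depend on \(\alpha\), which is what removes the time-varying ratio of the delay from the resulting LMI.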

3 Main results

In this section, we construct a novel Lyapunov-Krasovskii functional and combine it with a free-weighting matrix technique. A delay-dependent condition is derived to estimate the neuron states through available output measurements such that the resulting error system (5) is globally exponentially stable.

Theorem 1

Under Assumption 1, the error system (5) is globally exponentially stable if there exist symmetric positive definite matrices \(P_{1}>0\), \(P_{2}>0\), \(Q_{i}>0\), \(R_{i}>0\) (\(i=1,2,3,4,5,6\)), positive diagonal matrices \(U_{j}>0\) (\(j=1,2,3,4\)), and matrices \(L_{1}\), \(L_{2}\), \(S_{1}\), \(S_{2}\), X, Y of appropriate dimensions such that the following LMIs hold:

where \(\Phi_{1,1}=P_{1}+h_{m}^{2}R_{1}+h_{M}^{2}R_{3}+(h_{M}-h_{m})^{2}R_{5}+L_{1}^{T}+L_{1}\), \(\Phi_{1,2}=-h_{m}^{2}R_{1}-h_{M}^{2}R_{3}-(h_{M}-h_{m})^{2}R_{5}-L_{1}A+XM_{1}\), \(\Phi_{1,6}=-L_{1}C\), \(\Phi_{1,7}=-L_{1}E\), \(\Phi_{2,2}=-P_{1}+(h_{M}-h_{m}+1)Q_{1}+Q_{3}+Q_{5}+(h_{m}^{2}-1)R_{1} +(h_{M}^{2}-1)R_{3}+(h_{M}-h_{m})^{2}R_{5}-G_{1}U_{3}\), \(\Phi_{2,4}=R_{1}\), \(\Phi_{2,5}=R_{3}\), \(\Phi_{2,13}=G_{2}U_{3}\), \(\Phi_{3,3}=-Q_{1}-2R_{5}+S_{1}^{T}+S_{1}-G_{1}U_{4}\), \(\Phi_{3,4}=R_{5}^{T}-S_{1}\), \(\Phi_{3,5}=-S_{1}^{T}+R_{5}\), \(\Phi_{3,14}=G_{2}U_{4}\), \(\Phi_{4,4}=-Q_{3}-R_{1}-R_{5}\), \(\Phi_{4,5}=S_{1}^{T}\), \(\Phi_{5,5}=-Q_{5}-R_{3}-R_{5}\), \(\Phi_{6,6}=-U_{1}\), \(\Phi_{6,9}=F_{2}U_{1}\), \(\Phi_{7,7}=-U_{2}\), \(\Phi_{7,10}=F_{2}U_{2}\), \(\Phi_{8,8}=P_{2}+d_{m}^{2}R_{2}+d_{M}^{2}R_{4}+(d_{M}-d_{m})^{2}R_{6}+L_{2}^{T}+L_{2}\), \(\Phi_{8,9}=-d_{m}^{2}R_{2}-d_{M}^{2}R_{4}-(d_{M}-d_{m})^{2}R_{6}-L_{2}B+YM_{2}\), \(\Phi_{8,13}=-L_{2}D\), \(\Phi_{8,14}=-L_{2}W\), \(\Phi_{9,9}=-P_{2}+(d_{M}-d_{m}+1)Q_{2}+Q_{4}+Q_{6}+(d_{m}^{2}-1)R_{2} +(d_{M}^{2}-1)R_{4}+(d_{M}-d_{m})^{2}R_{6}-F_{1}U_{1}\), \(\Phi_{9,11}=R_{2}\), \(\Phi_{9,12}=R_{4}\), \(\Phi_{10,10}=-Q_{2}-2R_{6}+S_{2}^{T}+S_{2}-F_{1}U_{2}\), \(\Phi_{10,11}=R_{6}^{T}-S_{2}\), \(\Phi_{10,12}=-S_{2}^{T}+R_{6}\), \(\Phi_{11,11}=-Q_{4}-R_{2}-R_{6}\), \(\Phi_{11,12}=S_{2}^{T}\), \(\Phi_{12,12}=-Q_{6}-R_{4}-R_{6}\), \(\Phi_{13,13}=-U_{3}\), \(\Phi_{14,14}=-U_{4}\), and all other terms are zero. Then the gain matrices \(K_{1}\), \(K_{2}\) of the state estimator are given by \(K_{1}=L_{1}^{-1}X\), \(K_{2}=L_{2}^{-1}Y\).
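Once an LMI solver returns feasible \(L_{1}\) and X, the gain follows from \(K_{1}=L_{1}^{-1}X\). Note that \(\Phi_{1,1}<0\) forces \(L_{1}+L_{1}^{T}<0\), so \(L_{1}\) is nonsingular whenever the LMI is feasible. The sketch below recovers the gain by solving a linear system; the numerical values of `L1` and `X` are hypothetical stand-ins, not a solution of the LMIs in this paper.

```python
import numpy as np

def recover_gain(L, X):
    """Solve L K = X for K, i.e. K = L^{-1} X, without forming the inverse."""
    return np.linalg.solve(L, X)

# Hypothetical values standing in for a feasible LMI solution.
L1 = np.array([[-2.0, 0.3],
               [-0.1, -1.5]])
X = np.array([[0.4, 0.0],
              [0.1, 0.6]])

# L1 + L1^T < 0, as implied by Phi_{1,1} < 0, guarantees that L1 is invertible.
sym_max_eig = float(np.linalg.eigvalsh(L1 + L1.T).max())

K1 = recover_gain(L1, X)
print(K1)
```

Solving the linear system is generally preferable to computing \(L_{1}^{-1}\) explicitly when \(L_{1}\) is poorly conditioned.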

Proof

Consider the LKFs for the error state system (5) as follows:

where

where \(\eta_{1}(k)=e_{1}(k+1)-e_{1}(k)\), and \(\eta_{2}(k)=e_{2}(k+1)-e_{2}(k)\). We define the forward difference of \(V_{i}(k)\) as \(\Delta V_{i}(k) =V_{i}(k+1)-V_{i}(k)\). Then the forward differences can be derived as follows:

From Lemma 1, we can obtain

Using Lemma 2 gives

where \(\zeta_{1}(k)=e_{1}(k-h(k))-e_{1}(k-h_{M})\), \(\zeta_{2}(k)=e_{1}(k-h_{m})-e_{1}(k-h(k))\), \(\zeta_{3}(k)=e_{2}(k-d(k))-e_{2}(k-d_{M})\), \(\zeta_{4}(k)=e_{2}(k-d_{m})-e_{2}(k-d(k))\).

Obviously, \(\zeta_{1}(k)=0\) if \(h(k)=h_{M}\), and \(\zeta_{2}(k)=0\) if \(h(k)=h_{m}\). Similarly, \(\zeta_{3}(k)=0\) if \(d(k)=d_{M}\), and \(\zeta_{4}(k)=0\) if \(d(k)=d_{m}\).

Hence, for any matrices \(L_{1}\) and \(L_{2}\) with appropriate dimensions, we get

Moreover, it follows from Assumption 1 that

which is equivalent to

where \(e_{i}\) denotes the unit column vector with 1 in its ith row and zeros elsewhere. For positive diagonal matrices \(U_{k}=\operatorname{diag}(u_{k1},u_{k2},\ldots,u_{kn})\), \(k=1,2,3,4\), we have

that is,

Similarly, for any positive diagonal matrices \(U_{2}\), \(U_{3}\), \(U_{4}\), we obtain
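The step from the sector condition to a quadratic form can be checked numerically. Assuming the sector condition reads \((\xi_{i}-F_{i}^{-}e_{i})(\xi_{i}-F_{i}^{+}e_{i})\leq0\) componentwise, weighting by a positive diagonal U gives a quadratic form in \([e^{T}\ \xi^{T}]^{T}\) that is never positive. The names `F_prod` and `F_mean` below are hypothetical labels for \(\operatorname{diag}(F_{i}^{-}F_{i}^{+})\) and \(\operatorname{diag}((F_{i}^{-}+F_{i}^{+})/2)\); they are not claimed to coincide with the paper's \(F_{1}\), \(F_{2}\).

```python
import numpy as np

F_minus = np.array([0.0, -0.4])   # lower sector bounds of 0.8*tanh and -0.4*tanh
F_plus = np.array([0.8, 0.0])     # upper sector bounds
F_prod = np.diag(F_minus * F_plus)
F_mean = np.diag((F_minus + F_plus) / 2.0)

def f(y):
    """Activation functions of the illustrative example."""
    return np.array([0.8 * np.tanh(y[0]), -0.4 * np.tanh(y[1])])

def sector_form(e, xi, U):
    """Quadratic form [e; xi]^T [[F_prod U, -F_mean U], [-F_mean U, U]] [e; xi]."""
    z = np.concatenate([e, xi])
    block = np.block([[F_prod @ U, -F_mean @ U],
                      [-F_mean @ U, U]])
    return float(z @ block @ z)

rng = np.random.default_rng(2)
U = np.diag([1.5, 0.7])           # hypothetical positive diagonal multiplier
values = []
for _ in range(200):
    y = 3.0 * rng.standard_normal(2)
    y_hat = 3.0 * rng.standard_normal(2)
    values.append(sector_form(y - y_hat, f(y) - f(y_hat), U))
print(max(values))
```

Because each such form is nonpositive, adding its negative to the bound on \(\Delta V(k)\) cannot decrease that bound, which is how the multipliers \(U_{j}\) enter \(\Phi\).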

Combining all the above inequalities, we deduce

where \(u(k)\)=\([e_{1}^{T}(k+1)\),\(e_{1}^{T}(k)\),\(e_{1}^{T}(k-h(k))\),\(e_{1}^{T}(k-h_{m})\),\(e_{1}^{T}(k-h_{M})\),\(\xi _{1}^{T}(k)\),\(\xi_{1}^{T}(k-d(k))\), \(e_{2}^{T}(k+1)\),\(e_{2}^{T}(k)\),\(e_{2}^{T}(k-d(k))\),\(e_{2}^{T}(k-d_{m})\),\(e_{2}^{T}(k-d_{M})\),\(\xi _{2}^{T}(k)\),\(\xi_{2}^{T}(k-h(k))]^{T}\) and Φ is defined as in (8).

Combining (23) with (7) and (8), it can be deduced that there exists a scalar \(\sigma< 0\) with \(|\sigma| <\lambda_{\max}(P_{1})+\lambda_{\max}(P_{2})\) such that

Now we are in a position to prove the global exponential stability of the error system (5). Firstly, from the definition of \(V(k)\), it can be verified that

where \(\sigma_{1}=(h_{M}-h_{m}+1)\lambda_{\max}(Q_{1})+\lambda_{\max}(Q_{3})+\lambda _{\max}(Q_{5})+4h_{m}^{2}\lambda_{\max}(R_{1})+4h_{M}^{2}\lambda_{\max}(R_{3}) +4(h_{M}-h_{m})^{2}\lambda_{\max}(R_{5})\), \(\sigma_{2}=(d_{M}-d_{m}+1)\lambda_{\max}(Q_{2})+\lambda_{\max}(Q_{4})+\lambda _{\max}(Q_{6})+4d_{m}^{2}\lambda_{\max}(R_{2})+4d_{M}^{2}\lambda_{\max}(R_{4}) +4(d_{M}-d_{m})^{2}\lambda_{\max}(R_{6})\).

Consider the following equation:

The solution of equation (26)

Obviously \(r>1\).

It follows from (24) and (25) that

where \(\sigma_{3}=\sigma r+(r-1)(\lambda_{\max}(P_{1})+\lambda_{\max}(P_{2}))\), \(\sigma_{4}=(r-1)(\sigma _{1}+\sigma_{2})\).

Moreover, for any positive integer \(n\geq \max\{h_{M}+1,d_{M}+1\}\), by summing up both sides of (27) from 0 to \(n-1\) with respect to k, we get the following inequality:

Noting that the delay bounds satisfy \(h_{M}\geq1\) and \(d_{M}\geq1\), we have

Similarly, we can get

Combining (28) with (29) and (30) yields

It follows from (25) that

where \(d=\max\{\lambda_{\max}(P_{1})+\sigma_{1}h_{M},\lambda_{\max}(P_{2})+\sigma_{2}d_{M}\} \). Again, from (9) we can observe that

Substituting (26), (32) and (33) into (31), we get

where \(\lambda=\frac{d+\sigma _{4}[h_{M}(h_{M}+1)r^{h_{M}}+d_{M}(d_{M}+1)r^{d_{M}}]}{\min\{\lambda_{\min}(P_{1}),\lambda _{\min}(P_{2})\}}\). Therefore, the error system (5) is globally exponentially stable. This completes the proof. □

Remark 2

In this paper, a state estimator for the neuron states is designed through available output measurements, and a new sufficient condition is established to ensure the global exponential stability of the error system (5). The numerical complexity of Theorem 1 is proportional to \(13n^{2}+11n\). By contrast, the sufficient condition in [27] ensures only the global asymptotic stability of the error system, and the numerical complexity of Theorem 3.1 in [27] is proportional to \(29n^{2}+25n\). Hence Theorem 1 in this paper has lower computational complexity.
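The count \(13n^{2}+11n\) can be recovered by tallying the scalar decision variables in Theorem 1, assuming that \(M_{1}\) and \(M_{2}\) are \(n\times n\) so that X and Y are full \(n\times n\) matrices (an assumption, since the dimensions of the measurement matrices are left open):

\[
\underbrace{14\cdot\frac{n(n+1)}{2}}_{P_{1},P_{2},\,Q_{1},\ldots,Q_{6},\,R_{1},\ldots,R_{6}}
+\underbrace{4n}_{U_{1},\ldots,U_{4}}
+\underbrace{6n^{2}}_{L_{1},L_{2},S_{1},S_{2},X,Y}
=13n^{2}+11n .
\]

For the two-neuron case \(n=2\) this gives \(13\cdot4+11\cdot2=74\) variables, against \(29\cdot4+25\cdot2=166\) for the count quoted from [27].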

Corollary 1

Suppose that \(h(k)=h\) and \(d(k)=d\) are constant scalars. Then, under Assumption 1, the error system (5) is globally exponentially stable if there exist symmetric positive definite matrices \(P_{i}\), \(Q_{i}\), \(R_{i}\), \(i=1,2\), positive diagonal matrices \(U_{j}>0\) (\(j=1,2,3,4\)), and matrices \(L_{1}\), \(L_{2}\), X, Y of appropriate dimensions such that the following LMIs hold:

where \(\Phi_{1,1}=P_{1}+h^{2}R_{1}+L_{1}+L_{1}^{T}\), \(\Phi_{1,2}=-h^{2}R_{1}-L_{1}A+XM_{1}\), \(\Phi_{1,4}=-L_{1}C\), \(\Phi_{1,5}=-L_{1}E\), \(\Phi_{2,2}=-P_{1}+Q_{1}+(h^{2}-1)R_{1}-G_{1}U_{3}\), \(\Phi_{2,3}=R_{1}\), \(\Phi_{2,9}=G_{2}U_{3}\), \(\Phi_{3,3}=-Q_{1}-R_{1}-G_{1}U_{4}\), \(\Phi_{3,10}=G_{2}U_{4}\), \(\Phi_{4,4}=-U_{1}\), \(\Phi_{4,7}=F_{2}U_{1}\), \(\Phi_{5,5}=-U_{2}\), \(\Phi_{5,8}=F_{2}U_{2}\), \(\Phi_{6,6}=P_{2}+d^{2}R_{2}+L_{2}+L_{2}^{T}\), \(\Phi_{6,7}=-d^{2}R_{2}-L_{2}B+YM_{2}\), \(\Phi_{6,9}=-L_{2}D\), \(\Phi_{6,10}=-L_{2}W\), \(\Phi_{7,7}=-P_{2}+Q_{2}+(d^{2}-1)R_{2}-F_{1}U_{1}\), \(\Phi_{7,8}=R_{2}\), \(\Phi_{8,8}=-Q_{2}-R_{2}-F_{1}U_{2}\), \(\Phi_{9,9}=-U_{3}\), \(\Phi_{10,10}=-U_{4}\), and all other terms are zero.

4 Illustrative example

In this section, we provide a numerical example to illustrate the effectiveness of our result.

Consider system (1) and the measurement outputs (2) with the following parameters:

The activation functions are described by \(f_{1}(y(k))=0.8\tanh(y(k))\), \(f_{2}(y(k))=-0.4\tanh(y(k))\), \(g_{1}(x(k))=0.8\tanh(x(k))\), \(g_{2}(x(k))=-0.4\tanh(x(k))\). Further, it follows from Assumption 1 that \(F_{1}=G_{1}=\operatorname{diag}\{0,0\}\), \(F_{2}=G_{2}=\operatorname{diag}\{0.8,-0.4\}\).

By using the MATLAB LMI toolbox, we can obtain a feasible solution, which is not given here due to the page constraint. If we choose the lower delay bounds as \(h_{m}=d_{m}=7.0\) and the upper delay bounds as \(h_{M}=d_{M}=90\), then the corresponding state estimation gain matrices \(K_{1}\) and \(K_{2}\) can be obtained as follows:

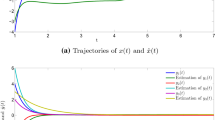

We choose the initial values \(x(0)=[0.5 ,0.9]^{T}\), \(\widehat {x}(0)=[-0.9 ,-0.4]^{T}\), \(y(0)=[0.7 ,0.9]^{T}\) and \(\widehat{y}(0)=[-0.2 ,-0.5]^{T}\). The simulation results for the state response and the estimation error are shown in Figures 1-6. Figures 1-4 show the response of the state dynamics of \((x(k),\widehat{x}(k))\) and \((y(k),\widehat{y}(k))\). The estimation errors \(e_{1}(k)\) and \(e_{2}(k)\) are shown in Figures 5 and 6. The results reveal that the error state goes to zero after a short period of time. We conclude that system (3) is a proper estimator of the BAM neural network (1), which also demonstrates the effectiveness of our design approach.
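A simulation of this kind can be sketched as follows. The activation functions and initial values are those stated above; every matrix, external input, estimator gain and delay bound in the code is hypothetical (the example's actual parameters are not used), and the estimator is assumed to be of Luenberger type with linear measurements \(z_{x}(k)=M_{1}x(k)\), \(z_{y}(k)=M_{2}y(k)\).

```python
import numpy as np

def act(v):
    """Example activations: 0.8*tanh on the first neuron, -0.4*tanh on the second."""
    return np.array([0.8 * np.tanh(v[0]), -0.4 * np.tanh(v[1])])

def simulate(steps=200, d_m=1, d_M=3):
    """Return the estimation-error norm at k = 1, ..., steps."""
    # All matrices, inputs, gains and delay bounds below are hypothetical.
    A, B = np.diag([0.4, 0.3]), np.diag([0.5, 0.2])
    C = np.array([[0.05, -0.03], [0.02, 0.04]])
    D = np.array([[0.04, 0.02], [-0.03, 0.05]])
    E = np.array([[0.03, 0.02], [-0.02, 0.05]])
    W = np.array([[0.05, -0.01], [0.02, 0.03]])
    I_ext, J_ext = np.array([0.1, -0.1]), np.array([-0.05, 0.2])
    M1 = M2 = np.eye(2)
    K1 = K2 = 0.2 * np.eye(2)

    # Constant initial history on [-d_M, 0]; initial values as in the text.
    x = [np.array([0.5, 0.9])] * (d_M + 1)
    xh = [np.array([-0.9, -0.4])] * (d_M + 1)
    y = [np.array([0.7, 0.9])] * (d_M + 1)
    yh = [np.array([-0.2, -0.5])] * (d_M + 1)

    errors = []
    span = d_M - d_m + 1
    for k in range(steps):
        d = d_m + k % span          # time-varying delay d(k) in [d_m, d_M]
        h = d_M - k % span          # time-varying delay h(k) in [d_m, d_M]
        x_new = A @ x[-1] + C @ act(y[-1]) + E @ act(y[-1 - d]) + I_ext
        y_new = B @ y[-1] + D @ act(x[-1]) + W @ act(x[-1 - h]) + J_ext
        xh_new = (A @ xh[-1] + C @ act(yh[-1]) + E @ act(yh[-1 - d]) + I_ext
                  + K1 @ (M1 @ x[-1] - M1 @ xh[-1]))
        yh_new = (B @ yh[-1] + D @ act(xh[-1]) + W @ act(xh[-1 - h]) + J_ext
                  + K2 @ (M2 @ y[-1] - M2 @ yh[-1]))
        x.append(x_new)
        y.append(y_new)
        xh.append(xh_new)
        yh.append(yh_new)
        err = np.concatenate([x_new - xh_new, y_new - yh_new])
        errors.append(float(np.linalg.norm(err)))
    return np.array(errors)

errors = simulate()
print(errors[0], errors[-1])
```

With these small hypothetical weights the error dynamics are contractive, so the error norm decays geometrically; this illustrates the qualitative behaviour described above rather than reproducing Figures 1-6.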

5 Conclusions

In this paper, the problem of state estimation for discrete-time BAM neural networks with time-varying delays has been studied. Based on the delay partitioning method, the reciprocally convex approach and a new Lyapunov-Krasovskii functional, a new set of sufficient conditions which guarantee the global exponential stability of the error system has been derived. A numerical example has been presented to demonstrate the effectiveness of the new criterion, which has lower computational complexity than previously reported criteria.

References

[1] Zhu, QX, Cao, JD: Robust exponential stability of Markovian impulsive stochastic Cohen-Grossberg neural networks with mixed time delays. IEEE Trans. Neural Netw. 21, 1314-1325 (2010)

[2] Li, Y, Shao, YF: Dynamic analysis of an impulsive differential equation with time-varying delays. Appl. Math. 59, 85-98 (2014)

[3] Guo, S, Tang, XH, Huang, LH: Stability and bifurcation in a discrete system of two neurons with delays. Nonlinear Anal., Real World Appl. 9, 1323-1335 (2008)

[4] Liu, XG, Wu, M, Martin, R, Tang, ML: Delay-dependent stability analysis for uncertain neutral systems with time-varying delays. Math. Comput. Simul. 75, 15-27 (2007)

[5] Liu, XG, Wu, M, Martin, R, Tang, ML: Stability analysis for neutral systems with mixed delays. J. Comput. Appl. Math. 202, 478-497 (2007)

[6] Chen, P, Tang, XH: Existence and multiplicity of solutions for second-order impulsive differential equations with Dirichlet problems. Appl. Math. Comput. 218, 11775-11789 (2012)

[7] Zang, YC, Li, JP: Stability in distribution of neutral stochastic partial differential delay equations driven by a-stable process. Adv. Differ. Equ. 2014, 13 (2014)

[8] Wu, YY, Li, T, Wu, YQ: Improved exponential stability criteria for recurrent neural networks with time-varying discrete and distributed delays. Int. J. Autom. Comput. 7, 199-204 (2010)

[9] Tang, XH, Shen, JH: New nonoscillation criteria for delay differential equations. J. Math. Anal. Appl. 290, 1-9 (2004)

[10] Zhao, HY, Cao, JD: New conditions for global exponential stability of cellular network with delays. Neural Netw. 18, 1332-1340 (2005)

[11] Liu, ZG, Chen, A, Cao, JD, Huang, LH: Existence and global exponential stability of periodic solution for BAM neural networks with periodic coefficients and time-varying delays. IEEE Trans. Circuits Syst. I 50, 1162-1173 (2003)

[12] Liu, J, Zhang, J: Note on stability of discrete-time time-varying delay systems. IET Control Theory Appl. 6, 335-339 (2012)

[13] Li, XA, Zhou, J, Zhu, E: The pth moment exponential stability of stochastic cellular neural network with impulses. Adv. Differ. Equ. 2013, 6 (2013)

[14] Zhang, BY, Xu, SY, Zou, Y: Improved delay-dependent exponential stability criteria for discrete-time recurrent neural networks with time-varying delays. Neurocomputing 72, 321-330 (2008)

[15] Wu, ZG, Su, HY, Chu, J: New results on exponential passivity of neural networks with time-varying delays. Nonlinear Anal., Real World Appl. 13, 1593-1599 (2012)

[16] Pan, LJ, Cao, JD: Robust stability for uncertain stochastic neural networks with delays and impulses. Neurocomputing 94, 102-110 (2012)

[17] Kosko, B: Bidirectional associative memories. IEEE Trans. Syst. Man Cybern. 18, 49-60 (1988)

[18] Liu, XG, Tang, ML, Martin, R, Liu, X: Discrete-time BAM neural networks with variable delays. Phys. Lett. A 367, 322-330 (2007)

[19] Liu, XG, Martin, R, Wu, M, Tang, ML: Global exponential stability of bidirectional associative memory neural network with time delays. IEEE Trans. Neural Netw. 19, 397-407 (2008)

[20] Wang, Z, Daniel, W, Liu, X: State estimation for delayed neural networks. IEEE Trans. Neural Netw. 16, 279-284 (2005)

[21] Mou, SH, Gao, HJ, Qiang, WY, Fei, ZY: State estimation for discrete-time neural networks with time-varying delays. Neurocomputing 72, 643-647 (2008)

[22] Kan, X, Wang, ZD, Shu, HS: State estimation for discrete-time delayed neural networks with fractional uncertainties and sensor saturations. Neurocomputing 17, 64-71 (2013)

[23] He, Y, Wu, QG, Lin, C: Delay-dependent state estimation for delayed neural networks. IEEE Trans. Neural Netw. 17, 1077-1081 (2006)

[24] Liang, JL, Lan, J: Robust state estimation for stochastic genetic regulatory networks. Int. J. Syst. Sci. 41, 47-63 (2010)

[25] Wang, Z, Liu, Y, Liu, X: State estimation for jumping recurrent neural networks with discrete and distributed delays. Neural Netw. 22, 41-48 (2009)

[26] Liu, YR, Wang, ZD, Liu, XH: State estimation for linear discrete-time Markovian jumping neural networks with mixed mode-dependent delays. Phys. Lett. A 372, 7147-7155 (2008)

[27] Arunkumar, A, Sakthivel, R, Mathiyalagan, K, Marshal Anthoni, S: Robust state estimation for discrete-time BAM neural networks with time-varying delay. Neurocomputing 131, 171-178 (2014)

[28] Sakthivel, R, Vadivel, P, Mathiyalagan, K, Arunkumar, A, Sivachitra, M: Design of state estimator bidirectional associative memory neural network with leakage delays. Inf. Sci. 296, 263-274 (2015)

[29] Lu, CY: A delay-range-dependent approach to design state estimators for discrete-time recurrent neural networks with interval time-varying delay. IEEE Trans. Circuits Syst. II, Express Briefs 55, 1163-1167 (2008)

[30] Lu, CY, Cheng, JC, Su, TJ: Design of delay-range-dependent state estimators for discrete time recurrent neural networks with interval time-varying delay. In: Press, I (ed.) Proceedings of the American Control Conference, Washington, 11-13 June 2008, pp. 4209-4231 (2008)

[31] Kwon, OM, Park, MJ, Park, JH, Lee, SM, Cha, EJ: New criteria on delay-dependent stability for discrete-time neural networks with time-varying delays. Neurocomputing 121, 185-194 (2013)

[32] Park, P, Ko, J, Jeong, C: Reciprocally convex approach to stability of systems with time-varying delays. Automatica 47, 235-238 (2011)

Acknowledgements

The authors would like to thank the reviewers for their valuable comments and constructive suggestions. This project is partly supported by the National Natural Science Foundation of China under Grants 61271355 and 61375063 and Zhong Nan Da Xue Foundation under Grant 2015JGB21.

Additional information

Competing interests

The authors declare that they have no competing interests.

Authors’ contributions

All authors contributed equally and significantly in writing this paper. All authors read and approved the final manuscript.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Qiu, S., Liu, X. & Shu, Y. New approach to state estimator for discrete-time BAM neural networks with time-varying delay. Adv Differ Equ 2015, 189 (2015). https://doi.org/10.1186/s13662-015-0498-3
