Abstract

This work introduces a large dataset comprising impulse responses of spatially distributed sources within a plane parallel to a planar microphone array. The dataset, named MIRACLE, encompasses 856,128 single-channel impulse responses and includes four different measurement scenarios. Three measurement scenarios were conducted under anechoic conditions. The fourth scenario includes an additional specular reflection from a reflective panel. The source positions were obtained by uniformly discretizing a rectangular source plane parallel to the microphone array for each scenario. The dataset contains three scenarios with a spatial resolution of \(23\,\textrm{mm}\) at two different source-plane-to-array distances, as well as a scenario with a resolution of \(5\,\textrm{mm}\) for the shorter distance. In contrast to existing room impulse response datasets, the accuracy of the provided source location labels is assessed and additional metadata, such as the directivity of the loudspeaker used for excitation, is provided. The MIRACLE dataset can be used as a benchmark for data-driven modelling and interpolation methods as well as for various acoustic machine learning tasks, such as source separation, localization, and characterization. Two timely applications of the dataset are presented in this work: the generation of microphone array data for data-driven source localization and characterization tasks and data-driven model order reduction.


1 Introduction

A room impulse response (RIR) characterizes the linear time-invariant acoustic propagation between a source and a receiver within a specific acoustic environment. RIRs are crucial for sound field auralization [1] as well as in the realm of room acoustics, where they are used for estimating acoustic properties of a room such as the reverberation time [2].

The emergence of data-driven methods in acoustics [3], particularly deep learning methods, has sparked increasing interest in the availability of rich, high-quality RIR datasets. These datasets play a pivotal role in the training of data-driven (interpolatory) sound field reconstruction methods [4,5,6,7], deep generative models [8, 9], and augmentation methods [10]. In addition, RIR datasets can be flexibly employed in order to synthesize acoustic training data for source localization and characterization [11], sound event detection, and speech separation tasks by convolving arbitrary source signals with RIRs [12,13,14]. The same synthesis procedure can be employed for data-driven acoustic parameter estimation problems, such as blind reverberation time estimation [15, 16] and others [17].

While data-driven methods often exhibit superior performance compared to conventional model-based methods, they require large amounts of realistic training data and are sensitive to variations of underlying probability distributions describing the data, also known as dataset shift [18]. Experimental data is oftentimes not available or too time-consuming to acquire. Many data-driven methods across various application areas, such as speech enhancement and recognition [19, 20], localization [11, 21], sound field reconstruction [22], room acoustic parameter estimation [23, 24], and acoustical engineering [25,26,27], are therefore trained with simulated data, whereby enhanced realism helps to improve generalization performance [28, 29]. However, without adaptation to or training with realistic data, the performance of data-driven methods can be significantly impaired [25, 30], which indicates the need for experimentally measured RIR datasets.

1.1 State of the art

In contrast to the diverse analytical and data-driven frameworks for the simulation of RIRs proposed in the past [8, 31,32,33,34], the number of openly available experimental RIR datasets is limited. Moreover, the landscape of RIR datasets is rather heterogeneous because the experimental data is usually acquired with a certain application in mind. This manifests in application-specific environments, customized source-receiver arrangements, and non-standardized dataset annotations.

In some datasets, the focus lies on environmental variability through different room geometries and reverberation times [35,36,37,38,39,40]. The SoundCam dataset [35] is an extreme example, in which the environmental characteristics of four rooms are manipulated by placing humans at hundreds of locations in the rooms, leading to an enormous number of environmental representations. These datasets are of particular interest in speech recognition, teleconferencing, and sound event detection applications. While the sensors are distributed over the room in some of these datasets [35, 36], others rely on compact microphone arrangements with only a few channels and aperture sizes that are small compared to the source distance [39,40,41]. The latter is often the case in speech and sound event localization applications. Only very few datasets provide additional annotations of the environmental characteristics, such as echo annotations, which enable their use for acoustic parameter estimation problems [40].

Conversely, there are application fields where spatial diversity and accurate positional annotations are as important as or even more important than environmental variability. In acoustical engineering applications, microphone array measurements for determining source locations and the individually induced sound pressure at a specific receiver position are widely used to implement noise reduction measures [42]. To accurately estimate these source characteristics, free-field conditions or laboratory environments with a short reverberation time are preferred. Usually, the utilized microphone arrangements are planar and contain many channels spread over a large area to ensure accurate spatial filtering capabilities over a wide frequency range. Typical examples include the measurement of machinery noise and wind turbines. Oftentimes, multiple closely neighbouring sound sources can be expected. Some datasets employed mechatronic positioning devices, which offer higher positional accuracy than manual positioning, to achieve a dense sampling of the acoustic space under reverberant [43,44,45] or anechoic conditions [46]. For example, the Pyramic dataset [46] was acquired by rotating a tetrahedral microphone array with a turntable device using fixed loudspeaker positions. However, the accuracy of the acquired positional information is rarely assessed in the literature [46]. A limitation of these datasets is that either the number of sources or the number of receivers is small [39, 43, 44, 46], which prohibits their use in acoustical engineering applications where a dense spatial sampling of both the source and the receiver space is required.

Another discipline with similar data requirements is the spatial interpolation of room impulse responses [5,6,7, 47]. Due to the large spatial variability of RIRs [47], dense sampling grids have to be employed in order to adequately capture the entire range of dynamics, especially at high frequencies. The absence of large and spatially dense RIR datasets entails that thorough assessment of interpolation methods has only been performed with synthetic data so far [5, 7].

Regardless of the application, only a few large-scale datasets encompassing several thousand RIRs exist, the largest of which is presented in this work. These datasets are outlined in Table 1. In addition to these larger datasets, there are several popular datasets with a smaller number of RIRs, for example [38,39,40, 48,49,50,51,52,53,54,55]. Large and realistic RIR datasets are of great interest to many scientific communities, especially those concerned with the development of novel data-driven modelling algorithms. The mathematical field of model order reduction (MOR) [56], for example, is rapidly developing data-driven methods [57,58,59]. However, in the majority of cases, new methods are validated only with synthetic data of common benchmark problems [60,61,62], and their validation in realistic scenarios with high-dimensional data is still pending. Providing well-documented high-dimensional measurement data is an essential element that can enable these communities to increase the applicability of their methods to real problems.

In this work, we introduce a large measured RIR dataset, which we call “Microphone Array Impulse Response Dataset for Acoustical Learning” (MIRACLE). The dataset is tailored to sound source localization and characterization in acoustical engineering applications and to the spatial interpolation of RIRs. Its focus lies on spatial variability and accurate positional annotations rather than on environmental variability.

Its key highlights are:

1. MIRACLE is the first RIR dataset with a dense sampling of the environment in both source and receiver space.

2. Particularly accurate spatial sampling is achieved by using a mechatronic positioning device to control the loudspeaker position. In contrast to most of the previously published datasets, the accuracy of the source positions (positional labels) is statistically validated. The assessment reveals highly accurate and precise source locations with positional uncertainties on the order of a few millimetres.

3. With a total of 856,128 captured room impulse responses and dense spatial sampling of the observation area, the dataset is well suited for machine learning and deep learning.

4. This paper presents two important applications of the dataset. As will be shown in Section 4.1, the dataset is well suited to construct acoustic source cases with possibly closely neighbouring sources by superimposing signals that have been convolved with the provided RIRs. In Section 4.2, reduced order state-space models are constructed from the MIRACLE dataset, demonstrating that it can serve as an excellent real-world benchmark problem for data-driven reduced order modelling methods.

2 Materials and methods

2.1 Experimental setup

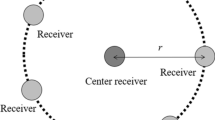

The experimental setup is illustrated in Fig. 1. Details on the utilized hardware are given in Table 2.

2.1.1 Microphone array

The setup features a planar phased microphone array comprising \(n_{\textrm{o}}=64\) channels mounted in a \(1.5\,\text {m} \times 1.5\,\text {m}\) aluminium plate. The microphone arrangement follows Vogel’s spiral [63]. The maximum pairwise distance between the array microphones is referred to as the aperture size \(d_{\textrm{a}} = 1.47\,\text {m}\). The microphone array data was acquired with a multichannel acquisition system at a sampling rate of \(51.2\,\text {kHz}\).
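The microphone layout can be illustrated with a textbook Vogel (sunflower) spiral. The following Python sketch is only an approximation under stated assumptions: it uses a plain spiral whose outer radius is half the aperture \(d_{\textrm{a}}=1.47\,\text{m}\), whereas the actual MIRACLE array may follow a modified spiral and its positions should be taken from the dataset itself. The function names are hypothetical.

```python
import numpy as np


def vogel_spiral(n_mics: int = 64, aperture: float = 1.47) -> np.ndarray:
    """Return (n_mics, 3) positions of a plain Vogel spiral in the z = 0 plane.

    Assumption: textbook spiral with outer radius aperture / 2; the real
    array may use a modified layout.
    """
    golden_angle = np.pi * (3.0 - np.sqrt(5.0))  # approx. 137.5 degrees
    n = np.arange(n_mics)
    # square-root radius scaling gives an approximately uniform areal density
    radius = 0.5 * aperture * np.sqrt((n + 0.5) / n_mics)
    phi = n * golden_angle
    return np.column_stack((radius * np.cos(phi), radius * np.sin(phi), np.zeros(n_mics)))


def aperture_size(positions: np.ndarray) -> float:
    """Maximum pairwise distance between microphones (the aperture size)."""
    diff = positions[:, None, :] - positions[None, :, :]
    return float(np.linalg.norm(diff, axis=-1).max())


mics = vogel_spiral()
d_a = aperture_size(mics)  # cannot exceed 1.47 m for this plain spiral
```

Because every point lies within a radius of \(d_{\textrm{a}}/2\), the maximum pairwise distance of this sketch is bounded by \(d_{\textrm{a}}\).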

2.1.2 Sound source and excitation signal

A dynamic 2” cone loudspeaker in a cylindrical 3D-printed enclosure was employed as the sound source. An exponential sine sweep was used as the excitation signal because of its favourable properties with regard to crest factor and rejection of non-linearities [64]. It was designed according to [65,66,67] in the frequency range of the loudspeaker, namely from \(100\,\text {Hz}\) to \(16\,\text {kHz}\). Because the anechoic chamber is nearly free of reflections and has very low noise levels, it was possible to choose a relatively short sweep time of \(3\,\text {s}\) for the measurement. In order to ensure that the entire system response after excitation is captured, a safety window of \(250\,\text {ms}\) was added to the recording duration, resulting in \(n_s=166{,}400\) samples per measurement. The loudspeaker excitation signal was also fed back directly to the AD-converter and was synchronously recorded with the microphone signals as a reference signal for post-processing. It is referred to as the loopback excitation signal in the following.
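As a sketch of the excitation design, the widely used exponential sine sweep formulation popularized by Farina can be generated in Python as follows. This is an assumption-based illustration: fade-in and fade-out windows and other refinements described in [65,66,67] are omitted, so the signal is not necessarily identical to the one used for MIRACLE. The sketch also confirms the sample count \((3\,\text{s} + 0.25\,\text{s}) \cdot 51.2\,\text{kHz} = 166{,}400\).

```python
import numpy as np


def exp_sine_sweep(f1: float, f2: float, duration: float, fs: float) -> np.ndarray:
    """Exponential sine sweep from f1 to f2 in Hz (no fade-in/fade-out)."""
    t = np.arange(int(round(duration * fs))) / fs
    rate = np.log(f2 / f1)
    # instantaneous frequency f1 * exp(t * rate / duration) rises from f1 to f2
    phase = 2.0 * np.pi * f1 * duration / rate * (np.exp(t * rate / duration) - 1.0)
    return np.sin(phase)


fs = 51_200                                   # acquisition sampling rate in Hz
sweep = exp_sine_sweep(100.0, 16_000.0, 3.0, fs)
safety = np.zeros(int(round(0.25 * fs)))      # 250 ms of silence for the system decay
excitation = np.concatenate((sweep, safety))  # 166,400 samples in total
```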

2.1.3 Positioning

A high-precision motor-driven 2D positioning system was employed for loudspeaker positioning. The positioning system and the microphone array were manually aligned using a laser distance meter and a cross-line laser, resulting in only minor alignment errors of a few millimetres at worst. The loudspeaker dust cap at the membrane centre was used as the reference in the manual alignment. During data post-processing, a spatial offset correction was applied based on the statistical evaluation given in Section 3.3. The corrected positions refer to the acoustical centre of the loudspeaker rather than the centre of the membrane.

2.1.4 Environment

All measurements were performed in the anechoic chamber of TU Berlin (room volume \(V=830\,\text {m}^3\), lower cut-off frequency \(f_{\textrm{c}}=63\,\text {Hz}\)). Neither heating nor air conditioning was active, and the temperature was monitored at the microphone array centre throughout the experiment. In one of the experimental scenarios, a ground plate was placed between the loudspeaker and the microphone array to introduce a specular reflection. The supporting grid platform and the positioning system were clad with absorptive foam to minimize reflections.

2.2 Experimental procedure

A customized and fully automated data acquisition procedure was implemented. Before each experiment, the loudspeaker was repeatedly excited with the excitation signal for a duration of 20 min (the duration was determined in a dedicated experiment). This warm-up phase accounts for the weakly non-stationary dynamics of the loudspeaker’s transfer function, e.g., changes in the properties of the loudspeaker magnet related to internal temperature fluctuations; see [68]. Subsequently, the actual measurement routine was started by positioning the loudspeaker at the desired source location while simultaneously measuring the room temperature. After positioning, two repetitions each of the background noise measurement (\(1\,\text {s}\) each) and the loudspeaker excitation measurement (\(3\,\text {s}\) each) were performed using all \(n_{\textrm{o}}\) microphones at once. Subsequently, the cross-correlation between the repeated measurements was evaluated for all \(n_{\textrm{o}}\) recorded channels according to the rule of two [69]. Based on the measured sweep signals and the noise signal, the rule of two defines a cross-correlation threshold above which a pair of measured sweeps can be regarded as free of corruption. In case of any violation, the measurement was repeated automatically.
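The automatic repetition check can be sketched as follows. The threshold derivation of the rule of two [69] is not reproduced here; the threshold is treated as a given input, and both the function names and the use of the peak normalized cross-correlation per channel are assumptions for illustration.

```python
import numpy as np
from scipy.signal import correlate


def peak_normalized_xcorr(a: np.ndarray, b: np.ndarray) -> float:
    """Peak of the normalized cross-correlation between two 1D recordings."""
    xc = correlate(a, b, mode="full", method="fft")
    return float(np.max(xc) / np.sqrt(np.sum(a**2) * np.sum(b**2)))


def repetitions_consistent(rep1: np.ndarray, rep2: np.ndarray, threshold: float) -> bool:
    """Compare two repetitions of shape (n_channels, n_samples) channel by channel.

    Hypothetical acceptance rule: every channel must reach the threshold,
    otherwise the measurement at this position would be repeated.
    """
    return all(peak_normalized_xcorr(x1, x2) >= threshold for x1, x2 in zip(rep1, rep2))
```

In an acquisition loop, a `False` result would trigger a new measurement at the same source position.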

Following the main measurement campaign, an additional measurement was conducted in the anechoic chamber to obtain the angle-dependent frequency response of the loudspeaker at discrete azimuth angles with a resolution of \(\Delta \theta = 2.5^\circ\). A microphone was placed at a distance of \(0.5\,\text {m}\) from the loudspeaker centre. The loudspeaker was mounted on a motor-driven dispersion measurement turntable. A photograph of the measurement setup can be found in Fig. 2. The same excitation signal and processing parameters as in the main measurement campaign were used to determine the loudspeaker impulse response. Due to the cylindrical loudspeaker enclosure, rotational symmetry around the z-axis can be assumed.

2.3 Post-processing

Several post-processing steps were performed to obtain a good estimate of the system impulse response from the measurements. First, the loopback excitation and microphone signals were averaged across the two measurement repetitions to obtain a single averaged excitation signal \(\tilde{u}_{i,j}\in \mathbb {R}^{n_s}\) and a single averaged microphone signal \(\tilde{y}_{i,j}\in \mathbb {R}^{n_s}\) for the i-th source and the j-th receiver location, respectively. Subsequently, all signals were resampled to a sampling rate of \(f_{\textrm{d}}=32\,\text {kHz}\), since the loudspeaker transmission capability and the excitation sweep have an upper frequency limit of \(16\,\text {kHz}\). We applied the polyphase method for resampling (see [70] for details).
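A minimal sketch of the averaging and polyphase resampling step, assuming the two repetitions are stacked along the first axis. SciPy’s `resample_poly` is used here as one available polyphase implementation; its default anti-aliasing filter is an assumption and may differ from the filter used for the dataset. Since \(32/51.2 = 5/8\), the 166,400 samples per measurement become \(n_{\textrm{d}}=104{,}000\) samples.

```python
from fractions import Fraction

import numpy as np
from scipy.signal import resample_poly

FS_IN = 51_200                   # acquisition sampling rate in Hz
FS_OUT = 32_000                  # target sampling rate f_d in Hz
RATIO = Fraction(FS_OUT, FS_IN)  # reduces to 5/8


def average_and_resample(repetitions: np.ndarray) -> np.ndarray:
    """Average over the first axis (repetitions), then resample along the last axis."""
    averaged = repetitions.mean(axis=0)
    return resample_poly(averaged, up=RATIO.numerator, down=RATIO.denominator, axis=-1)
```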

2.3.1 Deconvolution

In the following, let \(n_{\textrm{d}}=104{,}000\) denote the number of samples after resampling. An estimate of the frequency response was obtained by dividing the Discrete Fourier Transform (DFT) of the averaged and resampled measurement signals \(Y_{i,j}=\textrm{DFT}(\tilde{y}_{i,j})\in \mathbb {C}^{n_{\textrm{d}}}\) by the corresponding DFT of the averaged and resampled loopback excitation signals \(U_{i,j}=\textrm{DFT}(\tilde{u}_{i,j})\in \mathbb {C}^{n_{\textrm{d}}}\), i.e.

\[H_{i,j}\left( e^{\imath \omega _k}\right) = Y_{i,j}\left( e^{\imath \omega _k}\right) \, U_{i,j}^{-1}\left( e^{\imath \omega _k}\right) \]

for the angular frequency \(\omega _k=2\pi k/n_{\textrm{d}}\) with \({k\in [-n_{\textrm{d}}/2,n_{\textrm{d}}/2]\subset \mathbb {Z}}\). The inverse spectra \(U_{i,j}^{-1}\in \mathbb {C}^{n_{\textrm{d}}}\) were obtained by regularized inversion [67, 71,72,73,74,75]

where \(M=\max _{k\in \{1,\,\dots ,\,n_{\textrm{d}}\}}\{|U_{i,j}\left( e^{\imath \omega _k}\right) |^2\}=1\). Regularization is necessary to avoid instabilities in the deconvolved frequency response, which arise because the excitation is only persistently exciting within a limited frequency range. Practical considerations for choosing the regularization term in acoustic applications can be found in [67]. The regularization term \(\lambda \in \mathbb {R}^{n_{\textrm{d}}}\) was chosen as

such that the regularization term \(\lambda \left( e^{\imath \omega _k}\right)\) is equal to 0 above the cutoff frequency \(\omega _{\text {cut}}=2\pi \cdot 100\,\text {Hz}/f_{\textrm{d}}\), which corresponds to the lower limit of the loudspeaker’s frequency range, and equal to 1 below \(\omega _{\text {fade}}=\frac{\omega _{\text {cut}}}{\sqrt{2}}\). A cross-fade based on a Hann window (raised cosine) is used to smoothly transition in between. The estimate of the frequency response \(H_{i,j}\) was then transformed back to the time domain to finally obtain the impulse response

\[h_{i,j} = \textrm{DFT}^{-1}\left( H_{i,j}\right) \in \mathbb {R}^{n_{\textrm{d}}}.\]
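The regularized deconvolution can be sketched in Python as below. Two parts are assumptions because the exact expressions are not reproduced here: the inverse uses a common Kirkeby-type form \(U^{-1} = U^{*}/(|U|^2 + M\lambda)\) with \(M = \max_k |U|^2\), and the Hann cross-fade of \(\lambda\) is applied over linear frequency between \(100\,\text{Hz}/\sqrt{2}\) and \(100\,\text{Hz}\). One-sided spectra (`rfft`) are used since all signals are real; the function names are hypothetical.

```python
import numpy as np


def regularization_profile(n: int, fs: float, f_cut: float = 100.0) -> np.ndarray:
    """lambda per rfft bin: 1 below f_cut/sqrt(2), 0 above f_cut, Hann fade in between."""
    f = np.fft.rfftfreq(n, d=1.0 / fs)
    f_fade = f_cut / np.sqrt(2.0)
    lam = np.zeros_like(f)
    lam[f <= f_fade] = 1.0
    band = (f > f_fade) & (f < f_cut)
    # raised-cosine transition from 1 (at f_fade) to 0 (at f_cut)
    lam[band] = 0.5 * (1.0 + np.cos(np.pi * (f[band] - f_fade) / (f_cut - f_fade)))
    return lam


def deconvolve(y: np.ndarray, u: np.ndarray, fs: float, f_cut: float = 100.0) -> np.ndarray:
    """Impulse response estimate from recording y and loopback excitation u (same length)."""
    n = y.shape[-1]
    Y = np.fft.rfft(y, axis=-1)
    U = np.fft.rfft(u, axis=-1)
    power = np.abs(U) ** 2
    M = power.max()  # scales lambda relative to the excitation spectrum (assumed form)
    U_inv = np.conj(U) / (power + M * regularization_profile(n, fs, f_cut))
    return np.fft.irfft(Y * U_inv, n=n, axis=-1)
```

Above the cutoff, \(\lambda = 0\) and the estimate reduces to the plain spectral division \(Y/U\); below it, the added term suppresses the poorly excited bins.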

2.3.2 Truncation

The calculated impulse responses were subsequently truncated in order to limit the size of the final dataset. For user convenience, the impulse responses of all measurement scenarios were truncated identically. For this, the minimum cumulative energy \(e\in \mathbb {R}^{n_{\textrm{d}}}\) given by

was calculated for each scenario. The truncation index \(n_t\) was chosen to be the smallest power of two that is larger than the time index for which \(0.1\,\%\) of the energy is truncated at worst, namely
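The truncation rule can be sketched as follows, assuming that \(e\) is the cumulative energy of each impulse response normalized to its total energy and minimized over all source-receiver pairs of a scenario. Taking the maximum over scenarios to obtain one common length is also an assumption, and the function name is hypothetical.

```python
import numpy as np


def truncation_length(irs: np.ndarray, max_loss: float = 1e-3) -> int:
    """Smallest power of two larger than the worst-case index reaching 1 - max_loss of the energy.

    irs: array of shape (n_pairs, n_samples) with the impulse responses of one scenario.
    """
    energy = np.cumsum(irs**2, axis=-1)
    energy /= energy[:, -1:]                        # normalized cumulative energy per IR
    e_min = energy.min(axis=0)                      # worst case over all source-receiver pairs
    idx = int(np.argmax(e_min >= 1.0 - max_loss))   # first index with at most 0.1 % energy left
    return 1 << idx.bit_length()                    # smallest power of two strictly above idx


# One common length for all scenarios (assumed: the most demanding scenario decides):
# n_t = max(truncation_length(irs_a1), truncation_length(irs_a2), ...)
```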

3 Results and discussion

3.1 Impulse responses

A total of four different experimental scenarios were realized, which are summarized in Table 3. The acquisition time for each of the large-scale scenarios A1, A2, and R2 was about 20 h. The total number of single-channel impulse responses across all scenarios is 856,128. The scenarios differ regarding the environment as well as the spatial dimension (\(d_y = d_x\)), sampling resolution (\(\Delta d_y = \Delta d_x\)), and distance \(d_z\) of the source plane. The two large anechoic scenarios A1 and A2 each include 4096 measured source positions on an equidistantly spaced \(64\times 64\) grid at different source-plane distances \(d_z\). In addition, a densely sampled scenario D1 was acquired on a smaller \(33\times 33\) grid with a spacing of only \(5\,\text {mm}\). Scenario R2 is based on the same geometric setup as scenario A2, but an aluminium plate on the floor introduces a specular reflection.
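The total number of impulse responses follows directly from the source grid sizes and the \(n_{\textrm{o}}=64\) microphones, as the short Python check below illustrates.

```python
N_MICS = 64
source_grids = {"A1": (64, 64), "A2": (64, 64), "R2": (64, 64), "D1": (33, 33)}

n_sources = {name: nx * ny for name, (nx, ny) in source_grids.items()}
total_irs = N_MICS * sum(n_sources.values())
print(n_sources["D1"], total_irs)  # 1089 856128
```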

Figures 3 and 4 show the measured impulse response and its magnitude spectrum, respectively, for a single source-receiver combination in scenarios A1, A2, and R2. It can be readily verified that doubling the distance to the source also doubles the delay and attenuates the magnitude spectrum by approximately \(6\,\text {dB}\). Furthermore, the specular reflection in scenario R2 manifests as a prominent second peak in the impulse response and as comb filtering in its magnitude spectrum. Additional reflections, visible as spurious peaks in the impulse response, are caused by the structure of the positioning system and the supporting grid platform.
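The quoted delay and level changes are what free-field spherical spreading predicts: the propagation delay scales with \(r/c\) and the pressure amplitude with \(1/r\). A quick check with illustrative values (the distance and the speed of sound are assumptions, not dataset parameters):

```python
import math

c = 343.0   # speed of sound in m/s (illustrative)
r = 0.5     # hypothetical source-microphone distance in m

delay_ratio = (2 * r / c) / (r / c)             # delay doubles with the distance
level_change_db = 20 * math.log10(r / (2 * r))  # 1/r amplitude law
print(delay_ratio, round(level_change_db, 2))   # 2.0 -6.02
```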

The mean and standard deviation of the temperature and the speed of sound for each of the scenarios are given in Table 4. The speed of sound was calculated according to [76, 77]. Table 4 reveals that the temperature and the speed of sound are almost identical across all scenarios, with absolute differences of \(\Delta \mu <1\,^{\circ }\textrm{C}\) and \(\Delta \mu \le 0.6\,\text {m/s}\), respectively, which is expected given the fairly constant environmental conditions inside the anechoic chamber.
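The reported bound on the speed-of-sound difference is consistent with the common dry-air approximation \(c \approx 331.3\sqrt{1+\vartheta/273.15}\,\text{m/s}\), used here only as an illustrative stand-in for the more detailed model of [76, 77] (humidity and pressure are ignored). A temperature change of 1 °C around room temperature changes \(c\) by roughly 0.6 m/s.

```python
import math


def speed_of_sound_dry_air(theta_celsius: float) -> float:
    """Approximate speed of sound in dry air in m/s (simplified model, not the one from [76, 77])."""
    return 331.3 * math.sqrt(1.0 + theta_celsius / 273.15)


c20 = speed_of_sound_dry_air(20.0)
dc_per_kelvin = speed_of_sound_dry_air(21.0) - c20
print(round(c20, 1), round(dc_per_kelvin, 1))  # 343.2 0.6
```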

3.2 Loudspeaker directivity

Figure 5 shows the directivity \(\textrm{D}\) and the directivity index \(\textrm{DI}\) of the loudspeaker measured with a dispersion measurement turntable in the azimuthal plane. In this work, the directivity is defined as the ratio between the measured squared RMS sound pressure \(p_{\textrm{RMS}}^2(\theta , f)\) at an angle \(\theta\) and its maximum among all angles, i.e.

\[\textrm{D}(\theta , f) = \frac{p_{\textrm{RMS}}^2(\theta , f)}{\max _{\theta '} p_{\textrm{RMS}}^2(\theta ', f)}.\]

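The directivity index under the assumption of rotational symmetry is expressed as

\[\textrm{DI}(f) = 10\log _{10}\left( \frac{2\, p_{\textrm{RMS}}^2(0, f)}{\int _0^{\pi } p_{\textrm{RMS}}^2(\theta , f)\sin \theta \,\textrm{d}\theta }\right) ,\]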

where \(p_{\textrm{RMS}}^2(0, f)\) represents the squared sound pressure in front of the speaker.
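Both definitions can be evaluated numerically from the turntable data. The sketch below assumes that the squared RMS pressures are arranged as an array of shape (angles, frequencies) with angles from 0° (on-axis) to 180° in 2.5° steps, relies on rotational symmetry, and integrates with the trapezoidal rule; the array layout and function names are hypothetical.

```python
import numpy as np
from scipy.integrate import trapezoid


def directivity(p2: np.ndarray) -> np.ndarray:
    """D(theta, f): squared RMS pressure normalized by its maximum over all angles."""
    return p2 / p2.max(axis=0, keepdims=True)


def directivity_index(theta_deg: np.ndarray, p2: np.ndarray) -> np.ndarray:
    """DI(f) in dB for a rotationally symmetric source; theta_deg[0] must be 0 (on-axis)."""
    theta = np.deg2rad(theta_deg)
    # half of this integral equals the spherical mean of p2 for an axisymmetric field
    spherical_integral = trapezoid(p2 * np.sin(theta)[:, None], theta, axis=0)
    return 10.0 * np.log10(2.0 * p2[0] / spherical_integral)


theta_deg = np.arange(0.0, 180.0 + 1e-9, 2.5)        # 73 angles
monopole = np.ones((theta_deg.size, 1))               # omnidirectional: DI = 0 dB
dipole = np.cos(np.deg2rad(theta_deg))[:, None] ** 2  # analytically DI = 10*log10(3), about 4.77 dB
```

The two synthetic patterns serve as sanity checks: a monopole yields \(\textrm{DI}\approx 0\,\text{dB}\), and a \(\cos^2\theta\) pattern yields about \(4.77\,\text{dB}\).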

The loudspeaker exhibits a radiation pattern similar to that of a monopole up to \(2\,\text {kHz}\). Above this frequency, the directivity index increases. Still, the directivity observed by the microphone array is close to that of a monopole at the relevant radiation angles, i.e. \(\theta \le \theta _{\textrm{max}}=67.3^{\circ }\), as indicated by the dashed line in Fig. 5.

3.3 Positional validation

Several uncertainty factors affected the spatial alignment precision regarding the microphone array centre and the centre of the observation area. These factors include measurement uncertainties with regard to the utilized cross-line laser and distance meter as well as mechanical backlash, which occurred primarily with horizontal changes of direction. Therefore, a systematic spatial offset within the range of a few millimetres can be assumed.

Due to the anechoic environment and the use of a large-scale microphone array enabling an excellent spatial resolution, Conventional Frequency Domain Beamforming [42] serves as an appropriate method to obtain an estimate of the actual source location. The large number of acoustic cases also permits a statistical approach to determine the spatial offset for a measurement scenario and to quantify the uncertainty regarding the source position information.

3.3.1 Beamforming

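Let \(\omega _k=2\pi k/n_{\textrm{d}}\) with \({k\in [-n_{\textrm{d}}/2,n_{\textrm{d}}/2]\subset \mathbb {Z}}\) and let

\[\mathbf {H}_i(\omega _k) = \left[ H_{i,1}\left( e^{\imath \omega _k}\right) ,\,\dots ,\,H_{i,n_{\textrm{o}}}\left( e^{\imath \omega _k}\right) \right] ^{\textrm{T}}\in \mathbb {C}^{n_{\textrm{o}}}\]

denote the transfer function measurements from the i-th source at location \(x_{\textrm{s}}\) for \(i\in \{1,\,\dots ,\,n_{\textrm{i}}\}\) to each of the \(n_{\textrm{o}}\) microphones. The cross-spectral matrix induced by a sound source with unit strength is then given by

\[C(\omega _k) = \mathbf {H}_i(\omega _k)\, \mathbf {H}_i^{\textrm{H}}(\omega _k) \in \mathbb {C}^{n_{\textrm{o}}\times n_{\textrm{o}}}.\]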

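The beamforming result for an assumed source location \(x_{\textrm{s}}\in \mathbb {R}^{3}\) is then given by the square of the C-weighted norm of the steering vector \(a(x_{\textrm{s}},\omega _k)\in \mathbb {C}^{n_{\textrm{o}}}\), i.e.

\[b(x_{\textrm{s}}, \omega _k) = \left\| a(x_{\textrm{s}},\omega _k)\right\| _{C}^2 = a^{\textrm{H}}(x_{\textrm{s}},\omega _k)\, C(\omega _k)\, a(x_{\textrm{s}},\omega _k).\]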

Many formulations of the steering vector can be found in the literature. The formulations I and IV in [78] result in a coincidence of the beamformer’s steered response power maximum and the actual source location for a single monopole source radiating under free-field conditions. In this work, formulation IV was used, which defines the entries of a via

where \(r_j= \left\| x_{\textrm{s}}-x_j\right\| _2\) is the distance between the assumed source location \(x_{\textrm{s}}\) and the j-th microphone location \(x_j\), and \(r_0=\left\| x_{\textrm{s}}-x_0\right\| _2\) is the distance between \(x_{\textrm{s}}\) and the reference position, in this case the origin of the coordinate system.

Validation of each measured source position began with the spatial discretization of a neighbourhood around the assumed source position. A \(201\times 201\) equidistantly spaced focus grid with a resolution of \(\Delta x=0.5\,\text {mm}\) was employed. The beamforming map was computed on the discretized region for every frequency in the range

\[\Omega = \left\{ \omega _k : \frac{2\pi f_{\textrm{l}}}{f_{\textrm{d}}}\le \omega _k \le \frac{2\pi f_{\textrm{u}}}{f_{\textrm{d}}}\right\} ,\]

which was chosen such that the lower frequency limit \(f_{\textrm{l}}=2\,\text {kHz}\) yields a sufficiently high spatial resolution in the resulting beamforming map, and the upper frequency limit \(f_{\textrm{u}}=4\,\text {kHz}\) ensures that the wavelength is larger than the loudspeaker diameter. The latter is important to ensure that the loudspeaker has a radiation pattern close to that of a monopole at the relevant radiation angles, as required by the monopole assumption of the steering vector formulation. As indicated by the dashed line in Fig. 5, the radiation angle from the loudspeaker to any microphone in the array is bounded by \(\theta _{\textrm{max}}=67.3^{\circ }\). The global spatial maximum is then determined by

where \(\hat{b}(x_s, \omega )\) denotes the amplitude-normalized beamforming result

\[\hat{b}(x_s, \omega ) = \frac{b(x_s, \omega )}{b(\hat{x}_s, \omega )},\]

with \(b(\hat{x}_s, \omega )\) being the beamformer’s maximum output among all source locations \(x_s\) at a given frequency \(\omega\). The evaluation was conducted for different distances within a range of up to \(\pm 12\,\text {mm}\) around the assumed source distance with a sampling interval of \(\Delta z = 1\,\text {mm}\) to account for a potential mismatch of the source plane distance. Finally, the positional offset between the beamformer’s prediction and the assumed source position is determined by \(\Delta x_{i} = \hat{x}_{i} - x_{i}\).
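The validation procedure can be sketched for a single source as follows. The sketch assumes the commonly cited form of steering vector formulation IV, \(a_j = e^{-\imath k (r_j - r_0)} / (r_j \sqrt{n_{\textrm{o}} \sum_l r_l^{-2}})\) with wavenumber \(k = 2\pi f/c\), and it assumes that the amplitude-normalized maps are summed over the evaluated frequencies (2 kHz to 4 kHz) before the maximum is taken. Function names, the synthetic free-field monopole transfer functions, the spiral array, and the coarse 5 mm grid are illustrative only.

```python
import numpy as np


def steering_vectors_iv(grid: np.ndarray, mics: np.ndarray, k: float) -> np.ndarray:
    """Steering vectors (n_grid, n_mics), formulation IV as assumed above; reference at origin."""
    r = np.linalg.norm(grid[:, None, :] - mics[None, :, :], axis=-1)  # r_j per grid point
    r0 = np.linalg.norm(grid, axis=-1, keepdims=True)                 # distance to the origin
    norm = r * np.sqrt(mics.shape[0] * np.sum(r**-2.0, axis=1, keepdims=True))
    return np.exp(-1j * k * (r - r0)) / norm


def beamform(h: np.ndarray, grid: np.ndarray, mics: np.ndarray, k: float) -> np.ndarray:
    """b = a^H C a with C = h h^H, evaluated as |a^H h|^2 for every grid point."""
    a = steering_vectors_iv(grid, mics, k)
    return np.abs(a.conj() @ h) ** 2


def locate(h_per_freq, freqs, grid, mics, c: float = 343.0) -> int:
    """Index of the maximum of the frequency-summed, amplitude-normalized maps."""
    total = np.zeros(len(grid))
    for h, f in zip(h_per_freq, freqs):
        b = beamform(h, grid, mics, 2.0 * np.pi * f / c)
        total += b / b.max()  # normalize each frequency to its own maximum
    return int(np.argmax(total))


# Synthetic check with a hypothetical 64-channel spiral and free-field monopole data
n = np.arange(64)
phi = n * np.pi * (3.0 - np.sqrt(5.0))
rad = 0.735 * np.sqrt((n + 0.5) / 64)
mics = np.column_stack((rad * np.cos(phi), rad * np.sin(phi), np.zeros(64)))

offsets = np.linspace(-0.05, 0.05, 21)  # 5 mm focus grid spacing
gx, gy = np.meshgrid(0.10 + offsets, -0.05 + offsets, indexing="ij")
grid = np.column_stack((gx.ravel(), gy.ravel(), np.full(gx.size, 0.5)))

true_idx = 150
freqs = np.arange(2000.0, 4001.0, 500.0)  # 2 kHz ... 4 kHz
rho = np.linalg.norm(grid[true_idx] - mics, axis=-1)
h_per_freq = [np.exp(-1j * 2 * np.pi * f / 343.0 * rho) / rho for f in freqs]

est_idx = locate(h_per_freq, freqs, grid, mics)
delta_x = grid[est_idx] - grid[true_idx]  # positional offset estimate
```

For an ideal monopole, the Cauchy-Schwarz inequality guarantees that this steering vector attains its maximum exactly at the true location, which is why the synthetic offset vanishes; with measured data the offset reflects alignment errors.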

3.3.2 Statistical evaluation

The systematic positional offset between the centre of the observation area and the microphone array in the horizontal and vertical direction can be statistically determined by using the estimates \(\Delta x_{i} \in \mathbb {R}^2\) for each individual measured source position. Each estimated positional deviation \(\Delta x_{i}\) can be seen as a realization of the jointly distributed random variables \(R_x, R_y\) with the joint Probability Density Function (PDF) \(f_{R_x, R_y}(\Delta x_{i})\). It is assumed that the individual positional offset estimates \(\Delta x_i\) are symmetrically distributed around the true positional offset due to the approximate symmetry of the microphone array and the observation plane around the origin. Then, the true positional offset corresponds to the deviation with the highest probability density, i.e. the mode of the joint PDF. A simple method to estimate the joint PDF of jointly distributed random variables from a finite set of samples is kernel density estimation [79], denoted by

\[\hat{f}_{R_x, R_y}(\Delta x) = \frac{1}{N}\sum _{i=1}^{N} K_h\left( \Delta x - \Delta x_{i}\right) ,\]

where N refers to the sample size and \(K_h\) is the so-called kernel. A bivariate Gaussian kernel with bandwidth h was used, where h was chosen according to Silverman’s rule of thumb [80].
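Estimating the joint PDF and its mode can be sketched with SciPy’s `gaussian_kde` using `bw_method="silverman"`. Note that SciPy scales the Gaussian kernel with the sample covariance, which may differ in detail from the bandwidth choice used for the paper; the evaluation grid, the function name, and the synthetic offsets below are hypothetical.

```python
import numpy as np
from scipy.stats import gaussian_kde


def kde_mode(offsets: np.ndarray, extent: float = 6.0, step: float = 0.1) -> np.ndarray:
    """Mode of a 2D Gaussian KDE of positional offsets (N, 2) in mm, searched on a grid."""
    kde = gaussian_kde(offsets.T, bw_method="silverman")  # expects shape (d, N)
    axis = np.arange(-extent, extent + step / 2, step)
    gx, gy = np.meshgrid(axis, axis, indexing="ij")
    density = kde(np.vstack((gx.ravel(), gy.ravel())))
    best = np.argmax(density)
    return np.array([gx.ravel()[best], gy.ravel()[best]])


rng = np.random.default_rng(3)
true_offset = np.array([1.0, -0.5])                           # hypothetical offset in mm
samples = true_offset + rng.normal(0.0, 1.5, size=(4096, 2))  # one estimate per source position
mode = kde_mode(samples)
```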

3.3.3 Offset correction

The first step of the correction procedure was to determine the distance correction \(\Delta z\) between the loudspeaker and the microphone array plane for the experiments \(\{A1, D1\}\) and \(\{A2\}\). The joint PDF was estimated individually for each evaluated distance \(\Delta z\). Note that source cases from experiment R2 were excluded from the statistical evaluation since the ground plate reflections would introduce an additional disruptive factor in the positional estimation. It is assumed that the true distance minimizes the largest variance along any direction associated with \(\hat{f}_{R_x, R_y}(\Delta x_{i})\), i.e. the spectral norm of the covariance matrix \(\Sigma _{\Delta x_{i}}(\Delta z)\) is minimized, such that

\[\Delta z^{*} = \arg \min _{\Delta z} \left\| \Sigma _{\Delta x_{i}}(\Delta z)\right\| _2 .\]

Figure 6 shows the joint PDF with the smallest spectral norm for the experiments \(\{A1, D1\}\) and \(\{A2\}\). Based on the joint PDF corresponding to the optimal distance correction \(\Delta z\), the true positional offset in vertical and horizontal direction is determined from the maximum of the corresponding marginal distributions depicted in Fig. 7. Table 5 shows the positional offset correction values for each of the experiments.
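The selection of the distance correction \(\Delta z\) can be sketched as below: for each candidate, the sample covariance of the offsets is computed, and the candidate with the smallest spectral norm (the largest eigenvalue, i.e. the largest variance along any direction) is kept. The dictionary layout, the function name, and the synthetic data are assumptions for illustration.

```python
import numpy as np


def select_distance_correction(offsets_by_dz: dict) -> float:
    """Return the candidate dz whose offset covariance has the smallest spectral norm."""
    spectral_norms = {
        dz: np.linalg.norm(np.cov(offsets, rowvar=False), ord=2)  # largest singular value
        for dz, offsets in offsets_by_dz.items()
    }
    return min(spectral_norms, key=spectral_norms.get)


rng = np.random.default_rng(4)
# Hypothetical data: the spread of the offsets grows with the mismatch to dz = 3 mm
candidates = {
    dz: rng.normal(0.0, 1.0 + 0.3 * abs(dz - 3), size=(4096, 2)) for dz in range(-12, 13)
}
best_dz = select_distance_correction(candidates)
```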

Fig. 6 Estimated joint PDF of the positional deviations between the beamforming results and the assumed source positions. The inner black circle corresponds to the outer rim of the loudspeaker and the outer black circle indicates the outer rim of the enclosure box (left: Experiments {A1, D1}, right: Experiment A2)

Fig. 7 Marginal distribution functions characterizing the positional offset between the microphone array and the observation plane (left: Experiments {A1, D1}, right: Experiment A2). The dashed line indicates the positional offset corresponding to the maximum of the corresponding PDF. The dotted lines indicate the 2.5% and 97.5% percentiles

With the correction offset applied, one can conclude that the positional uncertainties regarding the true source positions are in the order of a few millimetres. Given the 2.5 and 97.5 percentiles of the marginal distributions, the positional uncertainty is in the range of \([-3.6\,\text {mm}, 3.4\,\text {mm}]\) in x-direction and \([-2.1\,\text {mm}, 3.5\,\text {mm}]\) in y-direction for the experiments \(\{A1, D1\}\). Regarding the experiments \(\{A2,R2\}\), the positional uncertainty is in the range of \([-4.9\,\text {mm}, 1.4\,\text {mm}]\) in x-direction and \([-2.6\,\text {mm}, 3.7\,\text {mm}]\) in y-direction.

4 Applications

Experimental measurement of acoustical systems is rarely an end in itself. Usually, one utilizes the measurements to infer system properties or data-driven models of the system at hand. Given its substantial size and precision, the MIRACLE dataset can be applied to a variety of research applications. In the following, two timely and crucial acoustic research areas are addressed: firstly, source localization and characterization, and secondly, data-driven reduced order modelling.

4.1 Acoustic source localization and characterization

In recent years, there has been extensive investigation into data-driven methods, particularly deep learning models, to solve source localization and characterization problems [11]. Localization focuses on determining the positions of one or multiple sound sources, whereas source characterization entails quantifying additional characteristics, such as their individual strength at a specific spatial reference position. Due to the scarcity of suitable experimental data, it is common to leverage synthetically generated microphone array data, with only a few studies considering real-world data already during training [25]. It remains an open research question how strongly the performance of data-driven methods is affected by this oversimplification and how this performance degradation could be mitigated.

4.1.1 Microphone array data generation

One way to overcome the lack of experimental data is to generate microphone array data via a convolution of experimental RIRs with arbitrary source signals in a Monte Carlo simulation. In order to facilitate reproducibility in machine learning, the training data has to be published alongside the source code. Providing the immutable raw microphone array data of the Monte Carlo simulation would lead to infeasibly large storage requirements. As a lightweight alternative, a code environment of the data simulation process can be published. This enables the generation of microphone array data on demand, which not only alleviates the problem of storage but can also greatly accelerate the training process. This approach is taken by the AcouPipe framework [81], a previous work of the authors.

AcouPipe is an open-source project written in the Python programming language that incorporates a default dataset generation method which constructs source cases with an arbitrary number of sound sources. As of AcouPipe v24.04 [82], the AcouPipe framework includes an additional experimental dataset generation method that uses the MIRACLE dataset by default. Both the synthetic and the experimental data generation methods rely on similar measurement setups. A Monte Carlo simulation is used to randomly generate multi-source scenarios by superposition. By default, the number of sources and their respective positions are drawn from a Poisson distribution \(I \sim \mathcal {P}(\lambda =3)\) and a bivariate normal distribution \((x_i, y_i) \sim \mathcal {N}(\mu =0, \sigma =0.1688\,d_{\textrm{a}})\), respectively, for each individual source case. Artificial white noise with a length of \(5\,\text {s}\) is used as the source signal. The signal amplitudes are chosen according to the desired squared RMS sound pressure at the reference position, which is drawn from a Rayleigh distribution such that \(p_{\textrm{RMS},i}^2 \sim \mathcal {R}(\sigma _{R}=5)\). Finally, the sound pressure signals at the microphones are obtained by convolving the source signals \(s_i(t)\) with the respective RIRs and superimposing the results

\[p_j(t) = \sum _{i=1}^{I} \left( h_{i,j} * s_i\right) (t) + n_j(t).\]

Uncorrelated white noise \(n_j(t)\) is added to each microphone channel. A more detailed description of the underlying dataset generation procedure can be found in [81].
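The sampling and synthesis steps described above can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not AcouPipe's implementation: the function names, the sampling rate, the redrawing of zero-source cases, and the scaling of each white-noise signal directly to the drawn squared RMS value (rather than to the value at a reference position) are hypothetical simplifications. Random arrays stand in for the measured RIRs.

```python
import numpy as np


def draw_source_case(rng, aperture, fs=16000, duration=5.0, lam=3.0,
                     pos_scale=0.1688, rayleigh_sigma=5.0):
    """Draw one hypothetical multi-source case from the stated distributions."""
    # Number of sources I ~ Poisson(lambda = 3); zero-source draws are
    # repeated here (an assumption of this sketch).
    n_src = 0
    while n_src == 0:
        n_src = int(rng.poisson(lam))
    # Positions (x_i, y_i) ~ N(0, sigma = 0.1688 * d_a), independent per axis.
    positions = rng.normal(0.0, pos_scale * aperture, size=(n_src, 2))
    # Squared RMS pressures p_rms^2 ~ Rayleigh(sigma_R = 5).
    p2 = rng.rayleigh(scale=rayleigh_sigma, size=n_src)
    # White noise rescaled so that signal i has mean square p2[i]
    # (simplification: scaling is applied to the source signal itself).
    n_samples = int(fs * duration)
    signals = rng.standard_normal((n_src, n_samples))
    signals *= np.sqrt(p2[:, None] / np.mean(signals**2, axis=1, keepdims=True))
    return positions, p2, signals


def synthesize_mic_signals(rirs, signals, noise_std, rng):
    """Convolve each source signal with its RIRs, superpose, and add noise.

    rirs: (n_src, n_mics, n_taps); signals: (n_src, n_samples).
    """
    n_src, n_mics, n_taps = rirs.shape
    p = np.zeros((n_mics, signals.shape[1] + n_taps - 1))
    for i in range(n_src):
        for j in range(n_mics):
            p[j] += np.convolve(signals[i], rirs[i, j])  # full linear convolution
    # Uncorrelated white noise n_j(t) on every microphone channel.
    p += noise_std * rng.standard_normal(p.shape)
    return p


rng = np.random.default_rng(0)
positions, p2, signals = draw_source_case(rng, aperture=1.0, duration=0.1)
# Hypothetical random "RIRs" (4 microphones, 32 taps) replace measured data.
rirs = 0.01 * rng.standard_normal((len(p2), 4, 32))
mics = synthesize_mic_signals(rirs, signals, noise_std=1e-3, rng=rng)
print(positions.shape, mics.shape)
```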

Listing 1 Python source code snippet demonstrating multi-source scenario generation based on the MIRACLE dataset

To demonstrate the ease of use, Listing 1 shows a Python source code example that generates multi-source scenarios based on the MIRACLE dataset. Here, a Dataset instance is constructed that uses the RIRs from scenario A1 to generate 1000 validation samples. With AcouPipe, it is possible not only to obtain the raw time data but also to extract several features, including a source map and the locations of the sources. By default, the source map is calculated with conventional beamforming. Figure 8 shows the beamforming map of the seventh sample of the validation dataset.
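As a hedged illustration of what conventional beamforming computes, the following NumPy sketch evaluates the map \(B(\mathbf{x}_g)=\mathbf{e}_g^{H}\mathbf{C}\,\mathbf{e}_g\) on a focus grid for a synthetic cross-spectral matrix \(\mathbf{C}\) of a single monopole. The array geometry, frequency, grid, and the normalized monopole steering vectors are illustrative assumptions. AcouPipe's implementation and its default steering vector formulation may differ.

```python
import numpy as np


def steering_vectors(mic_pos, grid_pos, k):
    """Normalized monopole transfer vectors from each grid point to each microphone."""
    # r[g, m]: distance between grid point g and microphone m
    r = np.linalg.norm(grid_pos[:, None, :] - mic_pos[None, :, :], axis=-1)
    a = np.exp(-1j * k * r) / r
    return a / np.linalg.norm(a, axis=1, keepdims=True)


def conventional_beamforming(csm, steer):
    """B(x_g) = e_g^H C e_g evaluated for every grid point g."""
    return np.real(np.einsum("gm,mn,gn->g", steer.conj(), csm, steer))


rng = np.random.default_rng(1)
c, f = 343.0, 4000.0               # speed of sound (m/s), frequency (Hz)
k = 2 * np.pi * f / c              # wavenumber
# Hypothetical planar array of 32 microphones in the plane z = 0
mic_pos = np.column_stack([rng.uniform(-0.25, 0.25, (32, 2)), np.zeros(32)])
# Focus grid in the plane z = 0.5 m with 1 cm spacing
xs = np.linspace(-0.3, 0.3, 61)
gx, gy = np.meshgrid(xs, xs, indexing="ij")
grid = np.column_stack([gx.ravel(), gy.ravel(), np.full(gx.size, 0.5)])

# Synthetic cross-spectral matrix of one monopole placed on a grid point
src_idx = 40 * 61 + 25             # grid point (0.1, -0.05, 0.5)
r_src = np.linalg.norm(mic_pos - grid[src_idx], axis=1)
p = np.exp(-1j * k * r_src) / r_src
csm = np.outer(p, p.conj())

bmap = conventional_beamforming(csm, steering_vectors(mic_pos, grid, k))
print(np.unravel_index(np.argmax(bmap), gx.shape))
```

By the Cauchy–Schwarz inequality, this formulation attains its maximum \(\lVert\mathbf{p}\rVert^2\) exactly at the true source position, which makes it a convenient sanity check.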

Theoretically, a very large number of experimental scenarios can be created by superimposing the individual measurements of the MIRACLE dataset. For example, when considering unordered sampling without replacement, the number of distinct combinations of \(k\) sources drawn from \(N\) available source positions is

\[ \binom{N}{k}=\frac{N!}{k!\,(N-k)!}, \]

so that more than eight million different source cases can be constructed from scenario A1 with two sources alone. The number of possible combinations increases significantly when sources of different strengths or more than two sources are combined.
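The figure of more than eight million can be checked with the binomial coefficient. The sketch below infers the number of source positions in scenario A1 from figures stated elsewhere in the paper (856,128 impulse responses, the 64-channel array, and 1089 source positions in D1). It assumes that all scenarios use the same array and that the three \(23\,\textrm{mm}\) scenarios share an equal number of positions. This count is an inference for illustration, not a value taken from the dataset documentation.

```python
import math

N_MICS = 64              # array channels (p = 64 in Sect. 4.2)
N_TOTAL_IRS = 856_128    # single-channel impulse responses in MIRACLE
N_D1 = 1089              # source positions of scenario D1 (m = 1089)

# Assumption: all scenarios use the 64-channel array, and the remaining
# positions are split equally over the three 23 mm scenarios.
n_positions = N_TOTAL_IRS // N_MICS
n_a1 = (n_positions - N_D1) // 3

two_source_cases = math.comb(n_a1, 2)    # unordered, without replacement
three_source_cases = math.comb(n_a1, 3)
print(n_positions, n_a1, two_source_cases, three_source_cases)
```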

We believe that both the synthetic and the experimental data provide an excellent basis to address up-to-date research questions related to data-driven source localization and characterization, such as: How strongly are synthetically trained source characterization models affected by the domain shift when evaluated with data acquired in a real environment? Which domain generalization and adaptation methods are suitable to overcome the performance degradation due to the domain shift? How do existing source localization and characterization methods generalize to real-world scenarios?

4.2 Reduced order modelling

System measurements are usually performed with a specific goal in mind, e.g. inferring system properties such as the reverberation time from the data. In many cases, one would like to obtain a model for the dynamical system transmission, i.e. the mapping from an input signal to an output signal. A common and obvious approach to obtaining such a model from RIR measurements is to employ them as a convolution kernel \(h\in \ell _2^{n_{\textrm{o}}\times n_{\textrm{i}}}\) via the convolution sum

\[ y(t) = (h * u)(t) = \sum_{k=0}^{t} h(k)\,u(t-k), \tag{21} \]

where \(u\in \ell _2^{n_{\textrm{i}}}\) and \(y\in \ell _2^{n_{\textrm{o}}}\) are the input and output signals, respectively, and \(\ell _2\) denotes the Hilbert space of square-summable sequences. This approach was also pursued in the previous section. However, it can be unfavourable with regard to computational effort and storage requirements, especially for larger systems with many inputs and/or outputs, such as densely sampled RIRs, because the computational effort scales with the product of the numbers of inputs and outputs [83]. Furthermore, systems whose dynamics are governed by a low number of weakly damped modes, e.g. small room acoustics, possess long impulse responses but are otherwise described by a simple model. A convolution-based approach offers no effective means to eliminate redundancies in the data, which makes the dynamical transmission of the aforementioned systems unnecessarily expensive to compute.
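To make the scaling argument concrete, the following sketch evaluates the MIMO convolution sum (21) directly and counts its multiply-accumulate operations (MACs) per output sample. The function names are hypothetical, and the 572-tap length in the test is borrowed from the ERA example only for illustration. FFT-based schemes such as UPOLS lower the cost per tap, but the spectral multiply-accumulate step still has to be carried out for every input-output pair.

```python
import numpy as np


def mimo_convolve(h, u):
    """Direct evaluation of y(t) = sum_k h(k) u(t - k) for a MIMO FIR kernel.

    h: (L, n_o, n_i) impulse response taps; u: (N, n_i) input signal.
    Returns y: (N, n_o), truncated to the input length.
    """
    L, n_o, n_i = h.shape
    N = u.shape[0]
    y = np.zeros((N, n_o))
    for t in range(N):
        for k in range(min(L, t + 1)):
            y[t] += h[k] @ u[t - k]  # one n_o x n_i matrix-vector product per tap
    return y


def direct_macs_per_sample(L, n_o, n_i):
    """Direct-form MACs per output sample: one per tap and input-output pair."""
    return L * n_o * n_i


rng = np.random.default_rng(0)
h = rng.standard_normal((5, 3, 2))   # 5 taps, 3 outputs, 2 inputs (illustrative)
u = rng.standard_normal((20, 2))
y = mimo_convolve(h, u)
print(y.shape, direct_macs_per_sample(572, 64, 1089))
```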

As argued in [83, 84], state-space models can be computationally advantageous, especially in real-time scenarios, and they provide access to powerful system-theoretic MOR methods [56]. MOR is a very active research field that aims to find small models that approximate the dynamics of a large and potentially infeasible so-called full order model as closely as possible. Instead of first identifying a large full order model from data and then applying MOR methods, a reduced order model can also be inferred directly from the data. This is often referred to as data-driven model order reduction or reduced order modelling.

In the following, discrete-time state-space models are briefly introduced, and the applicability of the Eigensystem Realization Algorithm (ERA) [85,86,87] to the MIRACLE dataset is demonstrated.

4.2.1 State-space models

A discrete-time linear time-invariant (LTI) dynamical system with \(m\in \mathbb {N}\) inputs and \(p\in \mathbb {N}\) outputs can be described by the matrix quadruple (A, B, C, D) with \(A\in \mathbb {R}^{n \times n}\), \(B\in \mathbb {R}^{n \times m}\), \(C\in \mathbb {R}^{p \times n}\), and \(D\in \mathbb {R}^{p \times m}\), where \(n\in \mathbb {N}\) is the order of the system. Its dynamics are governed by the discrete-time state equations

\[ \begin{aligned} x(t+1) &= A\,x(t) + B\,u(t),\\ y(t) &= C\,x(t) + D\,u(t), \end{aligned} \tag{22} \]

for input \(u\in \ell _{2}^{m}\), output \(y\in \ell _{2}^{p}\), and state \(x\in \ell _{2}^{n}\). In our concrete case, u denotes the \(n_{\textrm{i}}\)-dimensional input signal at the source locations, and y denotes the \(n_{\textrm{o}}\)-dimensional output signal at the array microphones. As an LTI system, the state-space system has an input-output behaviour that is fully described by the convolution sum (21), where the impulse response \(h\in \ell _{2}^{p\times m}\) is given by \(h(0)=D\) and \(h(t)=CA^{t-1}B\) for \(t\ge 1\). This can easily be verified from (22) by applying an impulse input \(u(t)=\delta _{t0}\) with zero initial condition \(x(0)=0\). A frequency domain description can be obtained by applying the z-transform to (22): the z-transform of the output \(Y(z)\in \mathbb {C}^{p}\) is given by multiplying the z-transform of the input \(U(z)\in \mathbb {C}^{m}\) with the transfer function

\[ Y(z) = H(z)\,U(z), \qquad H(z) = C\,(zI_{n}-A)^{-1}B + D. \tag{23} \]
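The claims above can be checked numerically on a small random system. The following sketch uses hypothetical helper names and a random stable system rather than MIRACLE data. It iterates the state equations (22) for an impulse input and compares the result with \(D\) and \(CA^{t-1}B\). It also compares one DFT bin of the impulse response with the transfer function (23) evaluated on the unit circle. The DFT match holds only approximately, because the impulse response is truncated after T samples.

```python
import numpy as np


def simulate(A, B, C, D, u):
    """Iterate x(t+1) = A x(t) + B u(t), y(t) = C x(t) + D u(t) from x(0) = 0."""
    x = np.zeros(A.shape[0])
    y = np.zeros((u.shape[0], C.shape[0]))
    for t in range(u.shape[0]):
        y[t] = C @ x + D @ u[t]
        x = A @ x + B @ u[t]
    return y


def transfer_function(A, B, C, D, z):
    """H(z) = C (zI - A)^{-1} B + D at a single complex point z."""
    return C @ np.linalg.solve(z * np.eye(A.shape[0]) - A, B) + D


rng = np.random.default_rng(0)
n, m, p = 6, 2, 3
A = rng.standard_normal((n, n))
A *= 0.9 / np.max(np.abs(np.linalg.eigvals(A)))  # spectral radius 0.9 (stable)
B = rng.standard_normal((n, m))
C = rng.standard_normal((p, n))
D = rng.standard_normal((p, m))

# Impulse applied to input channel 0: u(t) = delta_{t0} e_0
T = 400
u = np.zeros((T, m))
u[0, 0] = 1.0
y = simulate(A, B, C, D, u)
markov = [D[:, 0]] + [(C @ np.linalg.matrix_power(A, t - 1) @ B)[:, 0] for t in range(1, T)]

# DFT bin 5 of the impulse response versus H(z) at z = exp(j 2 pi 5 / T)
z = np.exp(2j * np.pi * 5 / T)
H_fft = np.fft.fft(y, axis=0)[5]
H_tf = transfer_function(A, B, C, D, z)[:, 0]
print(np.max(np.abs(H_fft - H_tf)))
```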

4.2.2 Eigensystem Realization Algorithm

The transfer function in (23) is a matrix-valued rational function that can be approximated by a Padé approximation [88]. The moments of the transfer function for an expansion at infinity, i.e. the coefficients \(h_i\) of the series \(H(z)=\sum_{i=0}^{\infty} h_i z^{-i}\) with \(h_0=D\) and \(h_i=CA^{i-1}B\) for \(i\ge 1\), are referred to as the Markov parameters of the system. Intriguingly, in discrete time the impulse response is identical to the sequence of Markov parameters, i.e. \(h(t)=h_t=CA^{t-1}B\). ERA leverages this connection in the following way: given a finite sequence \(h\in \ell _{2}^{p\times m}\) of \(2s-1\) Markov parameters, \(s\in \mathbb {N}\), a matching state-space model can be identified via the Hankel matrix of Markov parameters

\[ \mathcal{H} = \begin{bmatrix} h_{1} & h_{2} & \cdots & h_{s}\\ h_{2} & h_{3} & \cdots & h_{s+1}\\ \vdots & \vdots & \ddots & \vdots\\ h_{s} & h_{s+1} & \cdots & h_{2s-1} \end{bmatrix} \in \mathbb{R}^{ps \times ms}. \tag{24} \]

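For discrete-time systems, the Markov parameters can be conveniently obtained by measuring the system impulse response. The Hankel matrix can be factored into the observability matrix \(\mathcal {O}\in \mathbb {R}^{ps \times n}\) and the controllability matrix \(\mathcal {C}\in \mathbb {R}^{n \times ms}\):

\[ \mathcal{H} = \mathcal{O}\,\mathcal{C}, \qquad \mathcal{O} = \begin{bmatrix} C\\ CA\\ \vdots\\ CA^{s-1} \end{bmatrix}, \qquad \mathcal{C} = \begin{bmatrix} B & AB & \cdots & A^{s-1}B \end{bmatrix}. \tag{25} \]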
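The block structure of the Hankel matrix and its factorization into observability and controllability matrices can be checked on a small random system. The helper names in this NumPy sketch are hypothetical. Block \((i,j)\) of the product \(\mathcal{O}\mathcal{C}\) equals \(CA^{i+j-2}B=h_{i+j-1}\), so the product reproduces the Hankel matrix, and its rank cannot exceed the system order n.

```python
import numpy as np


def block_hankel(markov, s):
    """Stack h_1, ..., h_{2s-1} (each p x m) into the ps x ms block Hankel matrix."""
    p, m = markov[0].shape
    H = np.empty((p * s, m * s))
    for i in range(s):
        for j in range(s):
            # markov[0] holds h_1, so block (i, j) (0-based) is h_{i+j+1}
            H[i * p:(i + 1) * p, j * m:(j + 1) * m] = markov[i + j]
    return H


def observability(A, C, s):
    """Stack C, CA, ..., CA^{s-1} vertically (ps x n)."""
    return np.vstack([C @ np.linalg.matrix_power(A, i) for i in range(s)])


def controllability(A, B, s):
    """Stack B, AB, ..., A^{s-1}B horizontally (n x ms)."""
    return np.hstack([np.linalg.matrix_power(A, j) @ B for j in range(s)])


rng = np.random.default_rng(2)
n, m, p, s = 4, 2, 3, 5
A = 0.5 * rng.standard_normal((n, n)) / np.sqrt(n)
B = rng.standard_normal((n, m))
C = rng.standard_normal((p, n))
markov = [C @ np.linalg.matrix_power(A, t - 1) @ B for t in range(1, 2 * s)]  # h_1..h_{2s-1}
H = block_hankel(markov, s)
print(H.shape, np.linalg.matrix_rank(H))
```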

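From this factorization, a realization can be constructed with the Moore–Penrose pseudoinverse \((\cdot)^{\dagger}\) via

\[ A = \mathcal{O}_{f}^{\dagger}\,\mathcal{O}_{l}, \qquad B = \mathcal{C}\begin{bmatrix} I_{m}\\ 0 \end{bmatrix}, \qquad C = \begin{bmatrix} I_{p} & 0 \end{bmatrix}\mathcal{O}, \tag{26} \]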

where \(\mathcal {O}_{f}\) and \(\mathcal {O}_{l}\) denote the first and last \(p(s-1)\) rows of the observability matrix \(\mathcal {O}\in \mathbb {R}^{ps \times n}\), respectively [86, 87]. Classically, the factorization is computed from a (truncated) singular value decomposition (SVD), i.e. for a truncation order \(r\le \min \{ms,\,ps\}\)

\[ \mathcal{H} \approx U_{r}\,\Sigma_{r}\,V_{r}^{T}, \tag{27} \]

where \(U_{r}\in \mathbb {R}^{ps \times r}\) comprises the first r left singular vectors as columns, \(\Sigma _{r}=\text {diag} \left( {\sigma _{1},\,\dots ,\,\sigma _{r}}\right)\) contains the r largest singular values with \(\sigma _1\ge \dots \ge \sigma _r\), and \(V_{r}\in \mathbb {R}^{ms \times r}\) comprises the first r right singular vectors as columns. By choosing \(\mathcal {O}=U_{r}\Sigma _{r}^{1/2}\quad \text {and}\quad \mathcal {C} = \Sigma _{r}^{1/2}V_{r}^{T}\), a reduced order realization \((A_r,B_r,C_r)\) can be constructed via (26). For a basic stability and error analysis, the reader is referred to the classical sources [85,86,87]. A recent and thorough error analysis can be found in [89].
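The steps of classical ERA, (24) to (27), can be sketched compactly with NumPy on a small synthetic system. The sketch forms the Hankel matrix explicitly and computes a full SVD, which is only viable for small problems. The function and variable names are illustrative. With exact Markov parameters and r equal to the true system order, the identified realization reproduces the Markov parameters up to a similarity transformation.

```python
import numpy as np


def era(markov, s, r):
    """Eigensystem Realization Algorithm with a truncated SVD.

    markov: list of Markov parameters h_1, ..., h_{2s-1}, each p x m.
    Returns (A_r, B_r, C_r) of order r.
    """
    p, m = markov[0].shape
    H = np.block([[markov[i + j] for j in range(s)] for i in range(s)])  # ps x ms
    U, sv, Vt = np.linalg.svd(H, full_matrices=False)  # sv in descending order
    sqrt_sv = np.sqrt(sv[:r])
    O = U[:, :r] * sqrt_sv               # O = U_r Sigma_r^{1/2}
    Ctrb = sqrt_sv[:, None] * Vt[:r]     # C = Sigma_r^{1/2} V_r^T
    A_r = np.linalg.pinv(O[:-p]) @ O[p:]  # shift invariance: O_f A = O_l
    B_r = Ctrb[:, :m]                    # first m columns of the controllability matrix
    C_r = O[:p]                          # first p rows of the observability matrix
    return A_r, B_r, C_r


rng = np.random.default_rng(3)
n, m, p, s = 5, 2, 3, 8
A = rng.standard_normal((n, n))
A *= 0.8 / np.max(np.abs(np.linalg.eigvals(A)))  # stable test system
B = rng.standard_normal((n, m))
C = rng.standard_normal((p, n))
markov = [C @ np.linalg.matrix_power(A, t - 1) @ B for t in range(1, 2 * s)]

A_r, B_r, C_r = era(markov, s, r=n)
markov_r = [C_r @ np.linalg.matrix_power(A_r, t - 1) @ B_r for t in range(1, 2 * s)]
print(max(np.abs(a - b).max() for a, b in zip(markov, markov_r)))
```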

Using the above theory, ERA is applied to scenario D1 of the MIRACLE dataset with \(m=1089\) inputs and \(p=64\) outputs. In order to improve the model quality, as argued in [83], the common dead time in the measurements is conservatively removed by truncating the first 68 samples. Additionally, to avoid unnecessary computations, only the first \(20\,\text {ms}\) of the impulse responses are considered. Thus, after truncation, a total of \(2s-2=572\) samples are taken as input data for ERA. It is immediately obvious from (24) that the resulting Hankel matrix of dimension \(18{,}240\times 310{,}365\) can lead to computational problems with the outlined algorithm. Storing this matrix explicitly in double precision would already require \(45.3\,\)GB, and computing the SVD of such a large matrix is infeasible. There are several strategies [90,91,92] that can alleviate this computational burden in classical ERA by exploiting low-rank structures in the data and/or the Hankel matrix. Here, a randomized SVD algorithm [93] was employed instead of (27) as an approximate orthogonal decomposition, sampling the range of \(\mathcal {H}\) with 5000 normally distributed random vectors and performing a single subspace iteration. For details of this randomized ERA variant, see [83, 92].
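The storage figure follows from \(18{,}240\times 310{,}365\) entries of 8 bytes each. The randomized decomposition can be sketched as a Gaussian range finder with subspace iteration in the spirit of [93]. The helper names are hypothetical, and the matrix is formed densely here only for a small test. The variant used in [83, 92] may differ in details, for example by applying the Hankel matrix implicitly instead of forming it.

```python
import numpy as np


def dense_gigabytes(rows, cols, bytes_per_entry=8):
    """Storage of a dense float64 matrix in GB (10^9 bytes)."""
    return rows * cols * bytes_per_entry / 1e9


def randomized_svd(M, rank, n_samples, n_iter=1, rng=None):
    """Randomized range finder with subspace iterations.

    M is only accessed through the products M @ X and M.T @ Y.
    """
    rng = np.random.default_rng() if rng is None else rng
    Omega = rng.standard_normal((M.shape[1], n_samples))  # Gaussian test vectors
    Q, _ = np.linalg.qr(M @ Omega)                         # basis of the sampled range
    for _ in range(n_iter):                                # subspace iteration(s)
        Q, _ = np.linalg.qr(M.T @ Q)
        Q, _ = np.linalg.qr(M @ Q)
    U_small, sv, Vt = np.linalg.svd(Q.T @ M, full_matrices=False)
    return (Q @ U_small)[:, :rank], sv[:rank], Vt[:rank]


print(dense_gigabytes(18_240, 310_365))  # dense Hankel matrix of scenario D1

rng = np.random.default_rng(4)
M = rng.standard_normal((300, 20)) @ rng.standard_normal((20, 200))  # rank 20
U, sv, Vt = randomized_svd(M, rank=20, n_samples=40, n_iter=1, rng=rng)
```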

It is important to emphasize that multiple-input-multiple-output reduced order models are identified for all input-output transmissions simultaneously. In order to facilitate a qualitative analysis of the model quality, Fig. 9 depicts the magnitude responses of the measurement data alongside those of the identified reduced order models for the single-channel transmission from the centremost source to the centremost microphone. It can be seen that the accuracy increases with the model order and decreases with frequency. Furthermore, we have noticed that the single-channel approximation accuracy tends to deteriorate for source and receiver locations farther away from the centres of the source and receiver planes, respectively. Therefore, an appropriate quantitative error criterion must encompass all transmission channels simultaneously. To this end, denote

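\[ \bar{h}(t) = \sqrt{\frac{1}{pm}\sum_{j=1}^{p}\sum_{i=1}^{m} h_{ji}(t)^{2}} \]

and

\[ \varepsilon_{r}(t) = \sqrt{\frac{1}{pm}\sum_{j=1}^{p}\sum_{i=1}^{m} \Big(h_{ji}(t) - \big[C_{r}A_{r}^{t-1}B_{r}\big]_{ji}\Big)^{2}} \]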

as the RMS-averaged system and error impulse responses, respectively, where \(h(t)\in \ell _{2}^{64\times 1{,}089}\) denotes the measured multichannel impulse response of the D1 scenario and \(A_r,B_r,C_r\) are the reduced order system matrices obtained by ERA for model orders \(r=1000,\,2000,\,3000\). The averaged responses are shown in Fig. 10. It can be observed that the averaged error \(\varepsilon _r\) is still substantial around \(3\,\text {ms}\) for \(r=1000\), which is due to the aforementioned deterioration of the approximation quality towards the edges of the source and receiver planes. For the higher orders \(r=2000,\,3000\), this deviation is much less pronounced.
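A minimal sketch of the RMS-averaged responses, assuming that the averaging runs over all \(p\cdot m\) input-output channel pairs at each time step and that the measured array is aligned so that its first sample corresponds to \(t=1\). Both of these assumptions belong to this sketch, and the helper names are hypothetical. The reduced order impulse response \(C_rA_r^{t-1}B_r\) is generated recursively instead of with repeated matrix powers.

```python
import numpy as np


def rms_average(h):
    """RMS over all output/input channel pairs per time step; h has shape (T, p, m)."""
    return np.sqrt(np.mean(h**2, axis=(1, 2)))


def reduced_impulse_response(A_r, B_r, C_r, T):
    """h_r(t) = C_r A_r^{t-1} B_r for t = 1, ..., T, stored at index t - 1."""
    out = np.empty((T, C_r.shape[0], B_r.shape[1]))
    X = B_r.copy()              # holds A_r^{t-1} B_r
    for t in range(T):
        out[t] = C_r @ X
        X = A_r @ X
    return out


# Illustrative use with a random "measured" response (assumption: aligned at t = 1)
rng = np.random.default_rng(6)
A_r = 0.3 * rng.standard_normal((4, 4))
B_r = rng.standard_normal((4, 2))
C_r = rng.standard_normal((3, 4))
h_meas = reduced_impulse_response(A_r, B_r, C_r, 10) + 1e-3 * rng.standard_normal((10, 3, 2))
h_bar = rms_average(h_meas)
eps_r = rms_average(h_meas - reduced_impulse_response(A_r, B_r, C_r, 10))
```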

The potential benefit for model compression and real-time application is showcased in Table 6, in which the required memory and the computational cost in Mega Floating Point Operations (MFLOPs) are listed alongside the relative approximation error.

In order to compare the cost of the state-space approach (22) with that of the convolution sum (21), the memory demand and computational cost of the Uniformly Partitioned Overlap-save (UPOLS) method [94] are also listed (Footnote 2). The UPOLS method and its variants described in [94] are well established and solve (21) via spectral multiplication of overlapping signal blocks. It can be seen that the reduced order models are more memory efficient than the UPOLS method by factors between 5 and 30. A computational speedup for real-time simulation over the UPOLS method by factors of roughly 1.3 to 3.9 can be achieved for all models if the models are transformed into the so-called modal or (quasi-)diagonal form, which can be obtained via an eigenvalue decomposition of \(A_r\). Details on the transformation can be found in [83].
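The modal transformation can be sketched as follows, assuming a diagonalizable \(A_r\). For brevity the sketch works with complex eigenvalues. The real (quasi-)diagonal form instead pairs complex-conjugate modes into \(2\times 2\) blocks, and the implementation in [83] may differ. With a diagonal state matrix, the state update per sample needs r (complex) multiplications instead of \(r^2\) for a dense \(A_r\), while the products with the input and output matrices are unchanged. The helper names are hypothetical.

```python
import numpy as np


def to_modal(A, B, C):
    """Diagonalize A = V diag(lam) V^{-1}; return (lam, V^{-1} B, C V).

    Assumes A is diagonalizable; the result is complex-valued.
    """
    lam, V = np.linalg.eig(A)
    return lam, np.linalg.solve(V, B), C @ V


def simulate_modal(lam, Bm, Cm, u):
    """x(t+1) = diag(lam) x(t) + Bm u(t), y(t) = Re(Cm x(t)) with x(0) = 0."""
    x = np.zeros(lam.shape[0], dtype=complex)
    y = np.empty((u.shape[0], Cm.shape[0]))
    for t in range(u.shape[0]):
        y[t] = np.real(Cm @ x)
        x = lam * x + Bm @ u[t]    # elementwise update: O(r) instead of O(r^2)
    return y


rng = np.random.default_rng(5)
r, m, p = 8, 3, 2
A = rng.standard_normal((r, r))
A *= 0.9 / np.max(np.abs(np.linalg.eigvals(A)))
B = rng.standard_normal((r, m))
C = rng.standard_normal((p, r))
u = rng.standard_normal((50, m))

lam, Bm, Cm = to_modal(A, B, C)
y_modal = simulate_modal(lam, Bm, Cm, u)
```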

5 Conclusion

A publicly available large-scale RIR dataset of 856,128 single-channel impulse responses has been presented, which is particularly suited for applications in microphone array signal processing and sound field reconstruction because of the dense spatial sampling of the receiver and source spaces. To the authors’ knowledge, the MIRACLE dataset is the largest openly available RIR dataset to date.

The measured data have been shown to be spatially accurate and therefore provide an excellent basis for the development and statistical evaluation of data-driven modelling methods in acoustical engineering. The relevance, versatility, and applicability of the MIRACLE dataset have been demonstrated by means of two timely and diverging application examples.

In contrast to existing datasets, the MIRACLE dataset exhibits a notable limitation in environmental diversity, as it is primarily constrained to free-field sound propagation scenarios with and without a specular reflection. While the characteristics of the source and receiver predominantly shape the impulse response representation, environmental variability may be enhanced through a semi-synthetic approach, i.e. by synthesizing corresponding RIRs and integrating them with the measured RIRs. To enrich the dataset, it is planned to extend it in the near future with similar measurements in reverberant rooms. A persistent challenge lies in calibrating the source positions with comparable precision, mainly due to the diminished reliability of beamforming in reverberant environments. Addressing this challenge and broadening the dataset will be the focus of a forthcoming publication.

Availability of data and materials

The dataset presented in this paper, authored by Adam Kujawski, Art J. R. Pelling, and Ennes Sarradj, can be obtained from doi:10.14279/depositonce-20106 under the CC BY-NC-SA 4.0 license.

The AcouPipe framework utilizing the MIRACLE dataset can be obtained from doi:10.5281/zenodo.10908288.

Notes

An atmospheric pressure of \(101.325\,\text {kPa}\) and a carbon dioxide mole fraction of 0.0004 were used. A generic value of \(38\%\) was used for the relative humidity, approximating the humidity conditions throughout the experiments.

Abbreviations

- DFT: Discrete Fourier Transform
- ERA: Eigensystem Realization Algorithm
- MFLOPs: Mega Floating Point Operations
- MOR: Model order reduction
- PDF: Probability density function
- RIR: Room impulse response
- SVD: Singular value decomposition
- UPOLS: Uniformly Partitioned Overlap-save

References

K. Müller, F. Zotter, Auralization based on multi-perspective ambisonic room impulse responses. Acta Acustica 4, (2020). https://doi.org/10.1051/aacus/2020024

M.R. Schroeder, New Method of Measuring Reverberation Time. J. Acoust. Soc. Am. 37(3), 409–412 (1965). https://doi.org/10.1121/1.1909343

M.J. Bianco, P. Gerstoft, J. Traer, E. Ozanich, M.A. Roch, S. Gannot, C.-A. Deledalle, Machine learning in acoustics: theory and applications. J. Acoust. Soc. Am. 146(5), 3590–3628 (2019). https://doi.org/10.1121/1.5133944

E. Fernandez-Grande, X. Karakonstantis, D. Caviedes-Nozal, P. Gerstoft, Generative models for sound field reconstruction. J. Acoust. Soc. Am. 153(2), 1179–1190 (2023). https://doi.org/10.1121/10.0016896

A. Geldert, N. Meyer-Kahlen, S.J. Schlecht, in ICASSP 2023 - 2023 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP). Interpolation of Spatial Room Impulse Responses Using Partial Optimal Transport (IEEE, Rhodes Island, Greece, 2023), pp. 1–5. https://doi.org/10.1109/ICASSP49357.2023.10095452

Y. Haneda, Y. Kaneda, N. Kitawaki, Common-acoustical-pole and residue model and its application to spatial interpolation and extrapolation of a room transfer function. IEEE Trans. Speech Audio Process. 7(6), 709–717 (1999). https://doi.org/10.1109/89.799696

F. Katzberg, R. Mazur, M. Maass, M. Böhme, A. Mertins, in 2018 16th International Workshop on Acoustic Signal Enhancement (IWAENC). Spatial interpolation of room impulse responses using compressed sensing (Tokyo, 2018), pp. 426–430. https://doi.org/10.1109/IWAENC.2018.8521390

A. Ratnarajah, Z. Tang, R. Aralikatti, D. Manocha, in Proceedings of the 30th ACM International Conference on Multimedia. MESH2IR: Neural acoustic impulse response generator for complex 3D scenes (Association for Computing Machinery, New York; Lisboa, Portugal, 2022), pp. 924–933. https://doi.org/10.1145/3503161.3548253

S. Lee, H.-S. Choi, K. Lee, in 2023 IEEE Workshop on Applications of Signal Processing to Audio and Acoustics (WASPAA). Yet another generative model for room impulse response estimation (New Paltz, 2023), pp. 1–5. https://doi.org/10.1109/WASPAA58266.2023.10248189

N.J. Bryan, in ICASSP 2020 - 2020 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP). Impulse Response Data Augmentation and Deep Neural Networks for Blind Room Acoustic Parameter Estimation (IEEE, Barcelona, 2020), pp. 1–5. https://doi.org/10.1109/ICASSP40776.2020.9052970

P.-A. Grumiaux, S. Kitić, L. Girin, A. Guérin, A Survey of Sound Source Localization with Deep Learning Methods. J. Acoust. Soc. Am. 152(1), 107–151 (2022). https://doi.org/10.1121/10.0011809

E. Guizzo, R.F. Gramaccioni, S. Jamili, C. Marinoni, E. Massaro, C. Medaglia, G. Nachira, L. Nucciarelli, L. Paglialunga, M. Pennese, S. Pepe, E. Rocchi, A. Uncini, D. Comminiello, in Proceedings of the International Workshop on Machine Learning for Signal Processing (MLSP). L3DAS21 Challenge: Machine Learning for 3D Audio Signal Processing (IEEE, Gold Coast, 2021). https://doi.org/10.1109/MLSP52302.2021.9596248

E. Guizzo, C. Marinoni, M. Pennese, X. Ren, X. Zheng, C. Zhang, B. Masiero, A. Uncini, D. Comminiello, in Proceedings of the ICASSP. L3DAS22 Challenge: Learning 3D Audio Sources in a Real Office Environment (IEEE, Singapore, 2022), pp. 9186–9190. https://doi.org/10.1109/ICASSP43922.2022.9746872

K. Nagatomo, M. Yasuda, K. Yatabe, S. Saito, Y. Oikawa, in Proceedings of the ICASSP. Wearable SELD Dataset: Dataset For Sound Event Localization And Detection Using Wearable Devices Around Head (IEEE, Singapore, 2022), pp. 156–160. https://doi.org/10.1109/ICASSP43922.2022.9746544

M. Lee, J.-H. Chang, Deep neural network based blind estimation of reverberation time based on multi-channel microphones. Acta Acustica U. Acustica 104, 486–495 (2018). https://doi.org/10.3813/AAA.919191

H. Gamper, I.J. Tashev, in 2018 16th International Workshop on Acoustic Signal Enhancement (IWAENC). Blind reverberation time estimation using a convolutional neural network (Tokyo, 2018), pp. 136–140. https://doi.org/10.1109/IWAENC.2018.8521241

M. Cobos, J. Ahrens, K. Kowalczyk, A. Politis, An overview of machine learning and other data-based methods for spatial audio capture, processing, and reproduction. EURASIP J. Audio Speech Music. Process. 2022(1), 10 (2022). https://doi.org/10.1186/s13636-022-00242-x

J.G. Moreno-Torres, T. Raeder, R. Alaiz-Rodríguez, N.V. Chawla, F. Herrera, A unifying view on dataset shift in classification. Pattern Recog. 45(1), 521–530 (2012). https://doi.org/10.1016/j.patcog.2011.06.019

S. Gannot, E. Vincent, S. Markovich-Golan, A. Ozerov, A consolidated perspective on multimicrophone speech enhancement and source separation. IEEE/ACM Trans. Audio Speech Lang. Process. 25(4), 692–730 (2017). https://doi.org/10.1109/TASLP.2016.2647702

J. Huang, T. Bocklet, in Proc. Interspeech 2019. Intel Far-Field Speaker Recognition System for VOiCES Challenge 2019 (2019), pp. 2473–2477. https://doi.org/10.21437/Interspeech.2019-2894

G. Bologni, R. Heusdens, J. Martinez, in ICASSP 2021 - 2021 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP). Acoustic reflectors localization from stereo recordings using neural networks (2021), pp. 1–5. https://doi.org/10.1109/ICASSP39728.2021.9414473

F. Lluís, P. Martínez-Nuevo, M. Bo Møller, S. Ewan Shepstone, Sound field reconstruction in rooms: Inpainting meets super-resolution. J. Acoust. Soc. Am. 148(2), 649–659 (2020). https://doi.org/10.1121/10.0001687

S. Dilungana, A. Deleforge, C. Foy, S. Faisan, in INTER-NOISE and NOISE-CON Congress and Conference Proceedings. Learning-based estimation of individual absorption profiles from a single room impulse response with known positions of source, sensor and surfaces, vol. 263 (2021), pp. 5623–5630. https://doi.org/10.3397/IN-2021-3186

W. Yu, W.B. Kleijn, Room acoustical parameter estimation from room impulse responses using deep neural networks. IEEE/ACM Trans. Audio Speech Lang. Process. 29, 436–447 (2021). https://doi.org/10.1109/TASLP.2020.3043115

E.J.G. Arcondoulis, Q. Li, S. Wei, Y. Liu, P. Xu, in 28th AIAA/CEAS Aeroacoustics Conference. Experimental validation and performance analysis of deep learning acoustic source imaging methods (Southampton, 2022). https://doi.org/10.2514/6.2022-2852

A. Kujawski, E. Sarradj, Fast grid-free strength mapping of multiple sound sources from microphone array data using a Transformer architecture. J. Acoust. Soc. Am. 152(5), 2543–2556 (2022). https://doi.org/10.1121/10.0015005

T. Lobato, R. Sottek, M. Vorländer, Deconvolution with neural grid compression: A method to accurately and quickly process beamforming results. J. Acoust. Soc. Am. 153(4), 2073–2089 (2023). https://doi.org/10.1121/10.0017792

P. Srivastava, A. Deleforge, A. Politis, E. Vincent, in Proc. INTERSPEECH 2023. How to (Virtually) Train Your Speaker Localizer (ISCA, Dublin, 2023), pp. 1204–1208. https://doi.org/10.21437/Interspeech.2023-1065

P. Srivastava, Realism in virtually supervised learning for acoustic room characterization and sound source localization. Thesis, Université de Lorraine (2023). https://theses.hal.science/tel-04313405. Accessed 22 May 2024

A. Francl, J. McDermott, Deep neural network models of sound localization reveal how perception is adapted to real-world environments. Nat. Hum. Behav. 6, 111–133 (2022). https://doi.org/10.1101/2020.07.21.214486

R. Scheibler, E. Bezzam, I. Dokmanic, in 2018 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP). Pyroomacoustics: A Python Package for Audio Room Simulation and Array Processing Algorithms (IEEE, Calgary, 2018), pp. 351–355. https://doi.org/10.1109/ICASSP.2018.8461310

Z. Tang, R. Aralikatti, A.J. Ratnarajah, D. Manocha, in ACM SIGGRAPH 2022 Conference Proceedings. GWA: A large high-quality acoustic dataset for audio processing (Association for Computing Machinery, New York, 2022). https://doi.org/10.1145/3528233.3530731

C. Chen, C. Schissler, S. Garg, P. Kobernik, A. Clegg, P. Calamia, D. Batra, P.W. Robinson, K. Grauman, in Proceedings of the Neural Information Processing Systems Track on Datasets and Benchmarks, (Online Conference). SoundSpaces 2.0: A simulation platform for visual-acoustic learning (2022). https://doi.org/10.48550/arXiv.2206.08312

D. Diaz-Guerra, A. Miguel, J.R. Beltran, gpuRIR: A python library for room impulse response simulation with GPU acceleration. Multimedia Tools Appl. 80, 5653–5671 (2021). https://doi.org/10.1007/s11042-020-09905-3

M. Wang, S. Clarke, J.-H. Wang, R. Gao, J. Wu, in Advances in Neural Information Processing Systems, ed by A. Oh, T. Neumann, A. Globerson, K. Saenko, M. Hardt, S. Levine. SoundCam: A dataset for finding humans using room acoustics, vol. 36 (Curran Associates, Inc., New Orleans, 2023), pp. 52238–52264. https://doi.org/10.25740/xq364hd5023

K. Prawda, S.J. Schlecht, V. Välimäki, Dataset of impulse responses from variable acoustics room Arni at Aalto Acoustic Labs. Zenodo. (2022). https://doi.org/10.5281/zenodo.6985104

G. Götz, S.J. Schlecht, V. Pulkki, in 2021 Immersive and 3D Audio: from Architecture to Automotive (I3DA). A dataset of higher-order Ambisonic room impulse responses and 3D models measured in a room with varying furniture (IEEE, Bologna, 2021), pp. 1–8. https://doi.org/10.1109/I3DA48870.2021.9610933

E. Hadad, F. Heese, P. Vary, S. Gannot, in Proceedings of the IWAENC. Multichannel audio database in various acoustic environments (IEEE, Juan-les-Pins, 2014), pp. 313–317. https://doi.org/10.1109/IWAENC.2014.6954309

T. Dietzen, R. Ali, M. Taseska, T. van Waterschoot, MYRiAD: a multi-array room acoustic database. EURASIP J. Audio Speech Music Process. (2023). https://doi.org/10.1186/s13636-023-00284-9

D. Di Carlo, P. Tandeitnik, C. Foy, N. Bertin, A. Deleforge, S. Gannot, dEchorate: a calibrated room impulse response dataset for echo-aware signal processing. EURASIP J. Audio Speech Music Process. (2021). https://doi.org/10.1186/s13636-021-00229-0

S. Adavanne, J. Nikunen, A. Politis, T. Virtanen, TUT Tietotalo Ambisonic Impulse Response. Zenodo. (2018). https://doi.org/10.5281/zenodo.1443539

R. Merino-Martínez, P. Sijtsma, M. Snellen, T. Ahlefeldt, J. Antoni, C.J. Bahr et al., A review of acoustic imaging methods using phased microphone arrays. CEAS Aeronaut. J. 10, 197–230 (2019). https://doi.org/10.1007/s13272-019-00383-4

S. Koyama, T. Nishida, K. Kimura, T. Abe, N. Ueno, J. Brunnström, in 2021 IEEE Workshop on Applications of Signal Processing to Audio and Acoustics (WASPAA). MESHRIR: A dataset of room impulse responses on meshed grid points for evaluating sound field analysis and synthesis methods (New Paltz, 2021), pp. 1–5. https://doi.org/10.1109/WASPAA52581.2021.9632672

J. Čmejla, T. Kounovský, S. Gannot, Z. Koldovský, P. Tandeitnik, in 2020 28th European Signal Processing Conference (EUSIPCO). MIRaGe: Multichannel database of room impulse responses measured on high-resolution cube-shaped grid (2021), pp. 56–60. https://doi.org/10.23919/Eusipco47968.2020.9287646

A. Lu, E. Moore, A. Nallanthighall, K. Sarkar, M. Mittal, R.M. Corey, P. Smaragdis, A. Singer, in 2022 International Workshop on Acoustic Signal Enhancement (IWAENC). Mechatronic generation of datasets for acoustics research (IEEE, Bamberg, 2022), pp. 1–5. https://doi.org/10.1109/IWAENC53105.2022.9914771

R. Scheibler, J. Azcarreta, R. Beuchat, C. Ferry, in 2018 16th International Workshop on Acoustic Signal Enhancement (IWAENC). Pyramic: Full Stack Open Microphone Array Architecture and Dataset (IEEE, Tokyo, 2018), pp. 226–230. https://doi.org/10.1109/IWAENC.2018.8521337

T. Ajdler, L. Sbaiz, M. Vetterli, The plenacoustic function and its sampling. IEEE Trans. Signal Process. 54(10), 3790–3804 (2006). https://doi.org/10.1109/TSP.2006.879280

I. Szöke, M. Skácel, L. Mošner, J. Paliesek, J. Černocký, Building and evaluation of a real room impulse response dataset. IEEE J. Sel. Top. Signal Process. 13(4), 863–876 (2019). https://doi.org/10.1109/JSTSP.2019.2917582

R. Stewart, M. Sandler, in Proceedings of the ICASSP. Database of omnidirectional and B-format room impulse responses (IEEE, Dallas, 2010), pp. 165–168. https://doi.org/10.1109/ICASSP.2010.5496083

A.V. Venkatakrishnan, P. Pertilä, M. Parviainen, in Proceedings of the EUSIPCO. Tampere university rotated circular array dataset (IEEE, Dublin, 2021), pp. 201–205. https://doi.org/10.23919/EUSIPCO54536.2021.9616072

J. Eaton, N.D. Gaubitch, A.H. Moore, P.A. Naylor, Estimation of room acoustic parameters: The ACE challenge. IEEE/ACM Trans. Audio Speech Lang. Process. 24(10), 1681–1693 (2016). https://doi.org/10.1109/TASLP.2016.2577502

K. Kinoshita, M. Delcroix, T. Yoshioka, T. Nakatani, E. Habets, R. Haeb-Umbach, V. Leutnant, A. Sehr, W. Kellermann, R. Maas, S. Gannot, B. Raj, in 2013 IEEE Workshop on Applications of Signal Processing to Audio and Acoustics. The REVERB challenge: A common evaluation framework for dereverberation and recognition of reverberant speech (2013), pp. 1–4. https://doi.org/10.1109/WASPAA.2013.6701894

D.T. Murphy, S. Shelley, in Audio Engineering Society Convention 129. OpenAIR: an interactive auralization web resource and database (2010). https://secure.aes.org/forum/pubs/conventions/?elib=15648

M. Jeub, M. Schafer, P. Vary, in 2009 16th International Conference on Digital Signal Processing. A binaural room impulse response database for the evaluation of dereverberation algorithms (2009), pp. 1–5. https://doi.org/10.1109/ICDSP.2009.5201259

S. Nakamura, K. Hiyane, F. Asano, T. Endo, Sound scene data collection in real acoustical environments. J. Acoust. Soc. Jpn. (E) 20(3), 225–231 (1999). https://doi.org/10.1250/ast.20.225

P. Benner, A. Cohen, M. Ohlberger, K. Willcox (eds.) Model Reduction and Approximation: Theory And Algorithms. Computational Science and Engineering, vol. 15 (Society for Industrial and Applied Mathematics, Philadelphia, 2017). https://doi.org/10.1137/1.9781611974829

S.L. Brunton, J.N. Kutz, in Model Order Reduction, ed. by P. Benner, S. Grivet-Talocia, A. Quarteroni, G. Rozza, W. Schilders, L.M. Silveira. Data-driven methods for reduced-order modeling (De Gruyter, Berlin, Boston, 2021), pp. 307–344. https://doi.org/10.1515/9783110671490-007

I.V. Gosea, S. Gugercin, C. Beattie, Data-Driven Balancing of Linear Dynamical Systems. SIAM J. Sci. Comput. 44(1), 554–582 (2022). https://doi.org/10.1137/21M1411081

B. Peherstorfer, S. Gugercin, K. Willcox, Data-driven reduced model construction with time-domain Loewner models. SIAM J. Sci. Comput. 39(5), 2152–2178 (2017). https://doi.org/10.1137/16M1094750

The MORwiki Community: MORwiki - Model Order Reduction Wiki. http://modelreduction.org. Accessed 05 Oct 2021

J.G. Korvink, E.B. Rudnyi, in Dimension Reduction of Large-Scale Systems, ed. by P. Benner, D.C. Sorensen, V. Mehrmann. Lecture Notes in Computational Science and Engineering. Oberwolfach Benchmark Collection (Springer, Berlin, 2005), pp. 311–315. https://doi.org/10.1007/3-540-27909-1_11

Benchmark Examples for Model Reduction - SLICOT. http://slicot.org/20-site/126-benchmark-examples-for-model-reduction. Accessed 29 Sept 2021

E. Sarradj, in 6th Berlin Beamforming Conference. A Generic Approach To Synthesize Optimal Array Microphone Arrangements (Gesellschaft zur Förderung angewandter Informatik (GFaI), Berlin, 2016), pp. 1–12

M. Rébillat, R. Hennequin, É. Corteel, B.F.G. Katz, Identification of cascade of hammerstein models for the description of nonlinearities in vibrating devices. J. Sound Vib. 330(5), 1018–1038 (2011). https://doi.org/10.1016/j.jsv.2010.09.012

A. Farina, in 108th AES Convention. Simultaneous Measurement of Impulse Response and Distortion with Swept-sine technique (Paris, 2000). https://secure.aes.org/forum/pubs/conventions/?elib=10211

S. Müller, P. Massarani, Transfer-function measurement with sweeps. J. Audio Eng. Soc. 49(6), 443–471 (2001)

M. Müller-Trapet, On the practical application of the impulse response measurement method with swept-sine signals in building acoustics. J. Acoust. Soc. Am. 148(4), 1864–1878 (2020). https://doi.org/10.1121/10.0001916

L.L. Beranek, T.J. Mellow, Acoustics: Sound Fields and Transducers, 1st edn (Academic Press, an imprint of Elsevier, Amsterdam, 2012)

K. Prawda, S.J. Schlecht, V. Välimäki, Robust selection of clean swept-sine measurements in non-stationary noise. J. Acoust. Soc. Am. 151(3), 2117–2126 (2022). https://doi.org/10.1121/10.0009915

SciPy v1.11.4 Manual. https://docs.scipy.org/doc/scipy/reference/generated/scipy.signal.resample_poly.html. Accessed 18 Dec 2023

P.C. Hansen, Rank-Deficient and Discrete Ill-Posed Problems: Numerical Aspects of Linear Inversion. SIAM Monographs on Mathematical Modeling and Computation, p. 247. (SIAM, Philadelphia, 1998). https://doi.org/10.1137/1.9780898719697

A. Farina, in 122nd AES Convention. Advancements in impulse response measurements by sine sweeps (Vienna, 2007), p. 21. https://www.aes.org/e-lib/browse.cfm?elib=14106

M. Holters, T. Corbach, U. Zölzer, in 12th Int. Conference on Digital Audio Effects (DAFx-09). Impulse response measurement techniques and their applicability in the real world (2009). https://www.dafx.de/paper-archive/details.php?id=1u-OdqevtbweDYmNY2_kuA. Accessed 13 Mar 2024

H. Tokuno, O. Kirkeby, P.A. Nelson, H. Hamada, Inverse Filter of Sound Reproduction Systems Using Regularization. IEICE Trans. Fundam. A 80(5), 809–820 (1997)

S.G. Norcross, M. Bouchard, G.A. Soulodre, in Audio Engineering Society Convention 121. Inverse filtering design using a minimal-phase target function from regularization (Audio Engineering Society, San Francisco, 2006). https://www.aes.org/e-lib/browse.cfm?elib=13763. Accessed 13 Mar 2024

O. Cramer, The variation of the specific heat ratio and the speed of sound in air with temperature, pressure, humidity, and CO2 concentration. J. Acoust. Soc. Am. 93(5), 2510–2516 (1993). https://doi.org/10.1121/1.405827

R.S. Davis, Equation for the determination of the density of moist air (1981/91). Metrologia 29(1), 67 (1992). https://doi.org/10.1088/0026-1394/29/1/008

E. Sarradj, Three-dimensional acoustic source mapping with different beamforming steering vector formulations. Adv. Acoust. Vib. (2012). https://doi.org/10.1155/2012/292695

E. Parzen, On estimation of a probability density function and mode. Ann. Math. Stat. 33(3), 1065–1076 (1962)

B.W. Silverman, Density Estimation for Statistics and Data Analysis. Chapman & Hall/CRC monographs on statistics and applied probability (Chapman and Hall, London, 1986). https://cds.cern.ch/record/1070306

A. Kujawski, A.J.R. Pelling, S. Jekosch, E. Sarradj, A framework for generating large-scale microphone array data for machine learning. Multimed. Tools Appl. (2023). https://doi.org/10.1007/s11042-023-16947-w

A. Kujawski, A.J.R. Pelling, S. Jekosch, C. Kayser, E. Sarradj, adku1173/acoupipe: v23.11. Zenodo. (2023). https://doi.org/10.5281/zenodo.10405973

A.J.R. Pelling, E. Sarradj, Efficient forced response computations of acoustical systems with a state-space approach. Acoustics 3(3), 581–593 (2021). https://doi.org/10.3390/acoustics3030037

G. Nijsse, M. Verhaegen, B. De Schutter, D. Westwick, N. Doelman, in Proceedings of the 1999 International Symposium on Active Control of Sound and Vibration (ACTIVE 99), ed. by S. Douglas. State space modeling in multichannel active control systems (Fort Lauderdale, 1999), pp. 909–920. https://www.dcsc.tudelft.nl/~bdeschutter/pub/rep/99_11.pdf

L. Silverman, Realization of linear dynamical systems. IEEE Trans. Autom. Control. 16(6), 554–567 (1971). https://doi.org/10.1109/TAC.1971.1099821

S. Kung, in Proceedings of the 12th Asilomar Conference on Circuits, Systems and Computers. A new identification and model reduction algorithm via singular value decomposition (1978), pp. 705–714

J.-N. Juang, R.S. Pappa, An eigensystem realization algorithm for modal parameter identification and model reduction. J. Guid. Control. Dyn. 8(5), 620–627 (1985). https://doi.org/10.2514/3.20031

A.C. Antoulas, Approximation of Large-Scale Dynamical Systems. Advances in Design and Control (Society for Industrial and Applied Mathematics, Philadelphia, 2005). https://doi.org/10.1137/1.9780898718713

S. Oymak, N. Ozay, Revisiting Ho-Kalman-based system identification: robustness and finite-sample analysis. IEEE Trans. Autom. Control. 67(4), 1914–1928 (2022). https://doi.org/10.1109/TAC.2021.3083651

B. Kramer, S. Gugercin, Tangential interpolation-based eigensystem realization algorithm for MIMO systems. Math. Comput. Model. Dyn. Syst. 22(4), 282–306 (2016). https://doi.org/10.1080/13873954.2016.1198389

B. Kramer, A.A. Gorodetsky, System identification via CUR-factored Hankel approximation. SIAM J. Sci. Comput. 40(2), 848–866 (2018). https://doi.org/10.1137/17M1137632

R. Minster, A.K. Saibaba, J. Kar, A. Chakrabortty, Efficient algorithms for eigensystem realization using randomized SVD. SIAM J. Matrix Anal. Appl. 42(2), 1045–1072 (2021). https://doi.org/10.1137/20M1327616

N. Halko, P.G. Martinsson, J.A. Tropp, Finding Structure with Randomness: Probabilistic Algorithms for Constructing Approximate Matrix Decompositions. SIAM Rev. 53(2), 217–288 (2011). https://doi.org/10.1137/090771806

F. Wefers, Partitioned Convolution Algorithms for Real-Time Auralization. Aachener Beiträge Zur Technischen Akustik, vol. Band 20. (Logos Verlag Berlin GmbH, Berlin, 2015)

Acknowledgements

The authors also thank Arya Prasetya, Serdar Gareayaghi, Can Kurt Kayser, and Roman Tschakert for their help with the experimental measurements and Fabian Brinkmann for valuable insights into sweep synthesis and experiment design.

Author information

Contributions

Experimental design, measurements, and post-processing were performed jointly by AK and AP. The manuscript was written by AK and AP. ES supervised the project. All authors read and approved the final manuscript.

Ethics declarations

Competing interests

The authors declare that they have no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Kujawski, A., Pelling, A.J.R. & Sarradj, E. MIRACLE—a microphone array impulse response dataset for acoustic learning. J AUDIO SPEECH MUSIC PROC. 2024, 32 (2024). https://doi.org/10.1186/s13636-024-00352-8

DOI: https://doi.org/10.1186/s13636-024-00352-8