Abstract

Diabetes as a metabolic illness can be characterized by increased amounts of blood glucose. This abnormal increase can lead to critical detriment to the other organs such as the kidneys, eyes, heart, nerves, and blood vessels. Therefore, its prediction, prognosis, and management are essential to prevent harmful effects and also recommend more useful treatments. For these goals, machine learning algorithms have found considerable attention and have been developed successfully. This review surveys the recently proposed machine learning (ML) and deep learning (DL) models for the objectives mentioned earlier. The reported results disclose that the ML and DL algorithms are promising approaches for controlling blood glucose and diabetes. However, they should be improved and employed in large datasets to affirm their applicability.

Similar content being viewed by others

Introduction

Diabetes is a chronic metabolic disease that happens when the blood glucose is higher than usual. It has been predicted that the number of diabetic patients would reach about 642 million in 2040 [1]. Diabetes is categorized into three common types, namely, Type 1 Diabetes Mellitus (T1DM), Type 2 Diabetes Mellitus (T2DM), and Gestational Diabetes Mellitus (GDM). The mentioned categories are specified by dysregulation of protein metabolism, lipid, and carbohydrate [2]. In addition, there are some other particular kinds of diabetes such as maturity-onset diabetes of the young, neonatal diabetes, and also diabetes due to some diseases like the exocrine pancreas, and chemical- or drug-induced diabetes [3].

T1DM is classified as a chronic autoimmune disease that is a result of elevated blood glucose levels (hyperglycemia; glucose levels > 180 mg/dL) [4]. It causes insulin deficiency that arises from the loss of the pancreatic islet β-cells [5]. Previously, T1DM has been noticed as a disorder for children and adolescents, however, age at the symptomatic beginning has not been considered a limiting factor over the past decade [6]. T1DM is connected with the attendance of autoantibodies several years before the start of symptoms since they can be regarded as a biomarker of autoimmunity. The autoantibodies representing T1DM target insulin, zinc transporter 8 (ZNT8)6–8, or insulinoma-associated protein 2 (IA2). T1DM as a polygenic illness is impressed by environmental agents. In addition, genetic risk factors are essential but not adequate. People with HLA genotypes of HLADQ and HLADR have an elevated risk of progressing T1DM [4, 7, 8]. Classic signs of hyperglycemia are generally comprising weight loss, polydipsia, abdominal symptoms, polyuria, ketoacidosis, and headaches. The American Diabetes Association (ADA) introduced the diagnostic criteria for diabetes mellitus in 2016 [9]. They rely on symptoms of abnormal glucose metabolism such as insulinogenic and evidence of βcell-targeted autoimmunity, irrespective of age and diabetes type. If patients show clinical signs of T1DM without the presence of autoantibodies, the ADA diagnoses it as a group of idiopathic T1DM [4]. Furthermore, multiple environmental factors including the timing of the first introduction of food [10], viral infections [11], and gestational infections [12] can contribute to the development of T1DM.

T2DM is the most common type of diabetes so that it develops in more than 90% of all cases. The main reason for developing T2DM is the impaired secretion of insulin by pancreatic β‑cells, generally because of insulin resistance in adipose tissue 1 (BOX 1), liver, skeletal muscle, and liver [13].

Prediabetes occurs before hyperglycemia, which is a high-risk situation that predisposes subjects to the development of T2DM. Prediabetes can be determined by one of the following conditions: elevated glycated Hemoglobin A1c (HbA1c) levels, Impaired Fasting Glucose (IFG) levels, and Impaired Glucose Tolerance (IGT). Cases with IFG levels are specified by Fasting Plasma Glucose (FPG) measures greater than normal. IGT is characterized by impaired late insulin secretion after a meal and insulin resistance in muscles [14]. People with prediabetes have HbA1c amounts between 5.7 and 6.4%. The rates of annual conversion of prediabetes to T2DM are from 3 to 11% per year [2, 15].

T2DM is heritable and the probable risk of getting T2DM is higher among siblings of a T2DM patient than in families without any T2DM patient [16]. The risk of getting T2DM is higher when the mother has this disease in comparison with when the father has it. Also, the risk of T2DM is noticeably elevated with a non‑normal fasting glucose concentration of > 5.5 mmol.l–1 or a body mass index (BMI) of ≥ 30 [17]. The genetic studies revealed a Single‑Nucleotide Polymorphism (SNP) in TCF7L2, CDKAL1, SLC30A8, CDKN2A, FTO, HHEX, CDKN2B, GCKR, IGF2BP2, and many others in T2DM cases. However, these genetic variants only elevate the risk by 10–20% [2]. T2DM detection can be assessed based on FPG levels, increased plasma glucose test, 2‑hour post‑glucose‑load glucose level, or HbA1c. Moreover, there is a linear correlation between cardiovascular disease and glycemia with no obvious threshold. The risk of developing distal symmetric peripheral polyneuropathy and diabetic nephropathy is raised with hyperglycemia lower than those accompanied by diabetic retinopathy [18].

The US Preventive Services Task Force (USPSTF) recommended that adults higher than 45 years, people who have a first‑degree relative with diabetes, and ones who are obese or overweight should be screened in early care settings [19]. T2DM control is complex due to many pathophysiological disorders and the ‘ABCDE’ conditions (Age, Body weight, Complications, Duration, Education and Expense, and Etiology) [2, 20].

Gestational diabetes mellitus is defined as any occurrence of hyperglycemia identified in pregnancy ranging from mild IGT or IFG diagnosed in pregnancy to detecting higher glucose levels [21]. Several risk factors have been recognized for GDM including a history of gestational diabetes, a family history of T2DM, ethnicity, advanced maternal age, lifestyle factors, and diet [22]. Moreover, psychosocial and environmental factors such as endocrine disruptors, organic pollutants, and depression in the first and second trimesters have been proposed as possible risk factors for developing GDM [21]. Genetic factors can also be involved in progressing GDM, however, the data is limited and contradictory [21].

The metabolic irregularities underlying GDM comprise β-cell defects and elevated insulin resistance. These deficiencies probably exist before conception in many patients and are often quite asymptomatic. There is no unique diagnostic protocol or criteria that have been universally accepted for GDM. The International Association of Diabetes and Pregnancy Study Groups (IADPSG) published several recommendations in 2010 for the diagnosis and classification of hyperglycemia in pregnancy as follows: women with equal levels of glycemia-associated risk of detrimental pregnancy results should be grouped by the same procedure and the values of threshold blood glucose should be internationally standardized [23]. The IADPSG also recommended a ‘one-step’ approach containing an Oral Glucose Tolerance Test (OGTT) at 24–28 weeks of gestation and suggested GDM diagnostic thresholds. It relies on an adjusted odds ratio amount of 1.75 for delivering an infant impressed by critical fetal complications of maternal hyperglycemia, namely, increased cord blood C-peptide levels, elevated size at birth, and increased adiposity. The IADPSG also stated that undetected T2DM in pregnant women is dramatically prevalent in specified populations and recommended that these individuals should be diagnosed preliminarily in pregnancy and categorized as ‘overt diabetes’ [21].

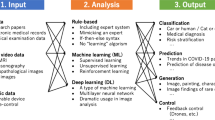

Machine learning (ML) is the application of different computer algorithms that can be ameliorated spontaneously through testing and by the utilization of data. The algorithms create a model that relies on sample data, called training data, in order to predict or make decisions [24, 25]. Deep Learning (DL) is a special subcategory of ML which is a neural network with three or more layers [26]. Moreover, DL algorithms try to learn high-level secessions in data by employing hierarchical architectures. The major properties of deep learning consist of adaptability to the features and learning from the data on their own. DL causes some developments including the fast elevating chip processing capabilities, a remarkable decreasing the cost of computing hardware, and significant improvements in the ML algorithms. Particularly, ML and DL algorithms have advantageous and are applicable in diagnosing and forecasting diseases [27, 28]. They typically include performing several steps. At first, the high-throughput data containing many features are introduced to the learning process. Afterward, data is pre-processed to remove outliers and diminish the space dimensionality by excluding the disjointed data or finding the desired data. In the next step, the algorithms are developed proportional to the aim of the study. Then, the model is tested in external data to compute the performance of the developed method using some metrics such as the Receiver Operating Characteristic Curve (ROC), the Area Under the Curve (AUC), Mean Absolute Relative Difference (MARD), Root-Mean-Square Error (RMSE), Mean Squared Error (MSE), accuracy, precision, recall, F-measure, log loss, etc. Multiple ML and DL methods have been applied to various aspects of diabetes. Among them, ML approaches including Logistic Regression (LR), Extremely Gradient Boosting (XGBoost), Gradient Boosting Machine (GBM), Random Forest (RF), AdaBoost, Support Vector Machine (SVM), Least Absolute Shrinkage And Selection Operator (LASSO) regression, Bayesian Network (BN), K-Nearest Neighbor (K-NN), Artificial Neural Networks (ANNs), and ensemble algorithms as well as DL methods comprising Recurrent Neural Networks (RNNs), Long Short-Term Memory Networks (LSTMs), Gated Recurrent Unit (GRU), Convolutional Neural Networks (CNNs), and reinforcement learning (LR) have been utilized more than others. Computational algorithms have been widely employed for handling diabetes data. However, the analysis of diabetes data is complicated because most of the relevant data are nonlinear, non-normal, and correlation-structured [29]. Therefore, ML algorithms have been employed for controlling, classification, predicting, and management of diabetes.

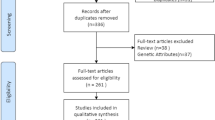

There are several reviews that overview various machine learning and deep learning models for the classification of data-driven blood glucose patterns as well as the prediction of diabetes and hypoglycemia [30,31,32]. In this review, we survey the most recently developed machine learning and deep learning algorithms for various aspects of prediction, diagnosis, and management of all diabetes types in more detail. To our best knowledge, there is no such comprehensive review to overview different aspects regarding all diabetes types. We tried to cover the most recent published papers that developed and applied ML and DL methods for the following purposes: early diagnosis and prediction of diabetes; prediction of blood glucose; detection of blood glucose; Insulin resistance predicting models; determination of the start and effect of treatment; risk assessment of Diabetes; dietary and insulin dose modifications; and diabetes management. Table 1 summarizes some applied ML and DL models to construct each model. Moreover, the publicly available datasets are specified in bold.

Common machine learning and deep learning algorithms

Logistic Regression

Logistic Regression (LR) is commonly used to allocate observations to a distinct set of classes. It transforms its output by employing the logistic sigmoid function to evoke a probability amount. Binary (e.g. sickly or healthy) and multi-linear functions failsClass (e.g. healthy, pre-disease, or sickly) are two common types of LR [19]. The LR can be called a linear regression model, however, it employs a more complicated cost function as the ‘sigmoid or logistic function’. In ML, the sigmoid is utilized to map predictions to probabilities. The hypothesis of LR is relied on the limitation of the cost function between zero and one, while the linear function may have an amount less than zero and higher than one. Moreover, LR can overestimate the prediction accuracy owing to sampling bias. It also may lead to unfavorable accuracy in presence of intricate relationships between input variables [33].

Decision Tree

Decision Tree (DT) algorithm is a non-parametric algorithm that can be employed for both aims of regression and classification problems. The tree can be defined by two concepts, namely decision nodes and leaves [34]. The leaves are the decisions or the eventual results. The decision nodes are the places where the data is split. The root of the tree is considered the starting point for forecasting a class label for a record. Then, the amounts of the root attribute are compared with the record’s attribute, and the branch corresponding to that amount will be followed and jump to the next node. One of the limitations of DTs is that they are widely labile in comparison with other decision predictors. A small alteration in the data may lead to a great alteration to the structure of the decision tree and a different outcome.

Gradient Boosting Machine

Gradient Boosting Machine (GBM) is an ensemble decision tree based on either regression or classification tree models. A GBM can combine the predictions from various DTs to give the ultimate prediction model. GBM is one of the strong approaches for constructing predictive models. Boosting assists in improving the tree’s accuracy. The gradient boosting approach generalizes tree boosting to increase speed and also interpretability. In this algorithm, the models are sequentially constructed. Moreover, it is tried to decrease the faults of the prior model by creating a new model on the residuals or the errors of the former model. Gradient Boosting Regressor (GBR) is used for the continuous targets while Gradient Boosting Classifier (GBC) is implemented for the classification problems. The purpose is to reduce the loss function by appending the feeble learners utilizing gradient descent. The only discrepancy between the above-mentioned algorithms is the loss function. GBM is highly flexible and customizable to any special data-driven role. Moreover, the GBMs are successful in different data-mining and machine-learning problems [35]. However, generally, boosting algorithms can lead to overfitting the outliers. Gradient boosting algorithm is also time-consuming and computationally costly.

Extremely Gradient Boosting (XGBoost)

Extremely Gradient Boosting (XGBoost) is an optimized GBM learning library that employs DT as the base appraiser. The trees are constructed utilizing residuals, not the real class labels. The base appraisers are regarded as regression trees instead of classification trees because the residuals are continuous. The maximum size of the trees can be determined to downgrade the risk of overfitting. The learning rate is applied to scale the quantity of each tree. The probability in each step is the one of an event computed at a prior stage. The probability of 0.5 is considered the primary probability, which is employed to construct the initial tree. For the further trees, the former probability is measured based on primary prediction and predictions from all previous trees. The similarity score is calculated for each leaf and then the Gain is computed. The node with the highest Gain is then selected as the best cleavage for the tree [36]. The disadvantages of XGBoost are its weak performance on unstructured and sparse data and its sensitivity to outliers because each classifier should resolve the errors in the predecessor learners.

Adaptive Boosting

Adaptive Boosting (AdaBoost) is an ensemble method. A decision tree with only one split is the most popular algorithm employed with AdaBoost. These trees are sometimes called by another name, decision stumps. This algorithm begins by constructing a decision stump and then ascribing identical weights to the whole of the data points. Afterward, it enhances the weights for the misclassified points and lowers the weights for the corrected classified points. The points with higher weights are given more significance in the further model. It can lead to improving the predictions that are constructed by the initial stump. Until achieving a lower fault, training models will be held. In AdaBoost, alteration of the base estimator is possible for developing the requirements [37]. When AdaBoost is considered a generalized additive model, the logistic regression can be applied for the cost function which is called the LogitBoost algorithm. AdaBoost needs high-quality data and is also very susceptible to noise and outliers in data. The speed of AdaBoost is also lower than the XGBoost algorithm.

Support Vector Machine

Support Vector Machine (SVM) is a powerful algorithm in which a hyperplane with an N-dimensional space exists corresponding to the number of features for classifying the data points. Hyperplanes denote the decision boundaries used to classify the data points. The dimension of each hyperplane is defined regarding the number of variables in the dataset. Support vectors are data points that are near the hyperplane and impress the location and orientation of the hyperplane. In general, the output of the linear function is taken in SVM. The output > 1 is placed in one class and the output of -1 is categorized in another class. This means that the reinforcement range of amounts (1, -1) acts as a margin. In the SVM approach, the margin of the classifier and the hyperplane should be maximized. The loss function that assists in the maximization of the margin is hinge loss. A regularization parameter is also used to balance the loss and margin maximization. Then, the partial derivatives concerning the weights are calculated to identify the gradients and also to update the weights. In the absence of misclassification, the model predicts the corrected class of data, and the gradient is updated by the regularization parameter. In the presence of misclassification, the model predicts wrongly the class of data, so the loss accompanied by the regularization parameter should be considered to accomplish gradient update [38]. SVM employs kernel functions to accomplish classification on non-linear data [39]. The utilization of SVM in regression is known as Support Vector Regression (SVR). The limitations of SVM are computationally costly for complex and large datasets, low performance in noisy data and when the number of variables is more than the number of training data samples, and classifying only two classes by the generic algorithms [33].

Random Forest

Random Forest (RF) is an ensemble of DTs that are generally trained with the “bagging” procedure. Each tree in the random forest represents a class of forecasting. Among them, the one with the utmost vote is considered the model’s prediction. The hyperparameters in RF are employed to make a rapid model and enhance the predictive power of the model. RF could be employed for both regression and classification problems. The major restriction of RF is due to the large quantities of trees that slow the algorithm and it can be ineffectual for real-time predictions [40]. It is also computationally expensive and is sensitive to trivial alterations in the data.

Least Absolute Shrinkage and Selection Operator Regression

Least Absolute Shrinkage and Selection Operator (LASSO) is a regression approach that carries out both regularization and feature selection to boost the accuracy of prediction and interpretability of the resulting model. It is a common kind of regularized linear regression comprising a penalty. It results in a decrease in the coefficients for those input features that do not assist the prediction task. This penalty also causes the assigning of zero values for some coefficient values and excluding some input features from the model. In addition, this algorithm reduces the absolute amount of the regression coefficients and ameliorates the accuracy of the designed linear regression models [41]. The main drawback of the LASSO method is that the regression coefficients can be unreliably interpretable in terms of independent risk factors. This is due to that it relies on the best-combined forecasting, not on the accuracy of the approximations.

Bayesian Networks

Bayesian Networks (BNs) are graphical models comprising information about the probability of relevance between features and assisting decision-making. The probability relationships can be proposed by the users or can be updated employing the Bayes theorem. The dependency of the inter-variable is shown by nodes and directed arcs (conditional relationships) in the shape of a Directed Acyclic Graph (DAG). Generally, two factors are implicated in learning a BN including structure learning, which contains detecting the DAG, and also parameter learning which includes learning regards to conditional probability distributions. The DAG search and K2 algorithms are the two most common approaches for specifying the structure of the DAG. These algorithms ascribe equivalent previous probabilities to the DAG structures and seek the structure to enhance the probability of the data. This probability is called the Bayesian score. It is important to combine previous information about causal structures in the process of parameter learning [42]. The automatic learning of the graph structure of a BN is a problem that is followed by machine learning. One of the main limitations of BN is that there is no generally accepted approach for building a network from data. It leads to the exploitation of only casual impressions by BN that are detected by its programmer.

K-Nearest Neighbor

The K-Nearest Neighbor (K-NN) algorithm is an easy-to-accomplishment machine learning algorithm that can be employed to resolve both regression and classification problems. K-NN attempts to forecast the true class for test data by computing the distance between them and all the training points. Next, the K number of points that are nearest to the test data would be chosen. The K-NN algorithm computes the probability of the test data appertained to the classes of ‘K’ training data and selects the class with the highest probability. In the case of regression, the amount is the average of the ‘K’ selected training points [43]. One of the limitations of K-NN is that it doesn’t make good forecasting for infrequent classes such as new diseases. It is also costly in terms of memory and time. In addition, Euclidean distance is very susceptible to magnitudes, so features with high magnitudes will weigh more than others with low magnitudes.

Artificial Neural Networks

Artificial Neural Networks (ANNs) are a series of algorithms that are developed to identify patterns and classifications. ANN is identified as a feed-forward neural network since inputs are considered just in the forward orientation. ANN comprises the input, hidden, and output layers. The input layer embraces the inputs, the hidden one surveys the inputs, and also the output one generates the outcome. While each layer endeavors to learn the specified weights, ANN can learn every nonlinear function. ANNs are commonly recognized as general function estimators. One of the major causes of generic estimation is the activation function, which presents nonlinear attributes to the network. It aids the network to learn any complex connection between output and input. ANNs are implemented for classification and clustering. Particularly, they are used to categorize the unlabeled data based on resemblances among the inputs. In addition, they can help for classifying the labeled data [44, 45]. Some of the limitations and disadvantages of ANNs are as follows: high computationally cost to construct and train a complex network, non-approachability of the decision-making process for the user, non-generalizability from limited training data, requiring the pre-processing of the independent variables or predictors, and proneness to overfitting [33, 46].

Recurrent Neural Networks

Recurrent Neural Network (RNN) models are a popular kind of deep learning neural network. RNN passes the backpropagation process through time for learning. It can be developed for handling the data when it loops back the previous information. The output originating from the past state provides the input to the current one. It means that the current state is updated in each time step to obtain the renewed information for arranging the time-dependent sequence data. The impressive success of RNN comes back to generating the sequence data that are supplied from many applications in dynamic systems such as time series, natural language processing, and speech recognition [47]. The limitations of RNN methods are as follows: the speed of calculations is low, training of RNN models may be tough, the model cannot process very long sequences if using relu and tanh as activation functions, and there are gradient disappearing and exploding problems.

Long Short-Term Memory Networks

Long Short-Term Memory (LSTM) networks are a category of RNNs that is able to learn order dependence. They comprise four neural networks and multiple memory blocks called cells in a chain structure. An ordinary LSTM unit includes a cell, an input gate, an output gate, and a forget gate. Three gates control the amount of information in and out of the cell since it remembers amounts over arbitrary time intervals. The LSTMs can hold information for a long time by default. The LSTM algorithm is well adapted to classify, analyze, and forecast time series of indistinct duration. However, LSTMs need a long time, resources, and high memory to train. The easy overfit is another limitation of LSTMs.

Gated Recurrent Unit

Gated Recurrent Unit (GRU) is a progressed format of the standard RNN. GRUs are the same as LSTM since use gates to control the flow of information. GRU has a simpler architecture than LSTM [48]. The update gate determines the value of prior information that needs to pass along the further state. The Reset Gate is responsible for the short-term memory of the network. It is employed from the model to determine how much of the prior information is needed to neglect. First, the reset gate stores related information from the past time stage into new memory content. Next, it multiplies the input vector and hidden state with their weights. Then, it computes element-wise multiplication between the previously hidden state and the reset gate. After summing up the above stages, the non-linear activation function is used and a further sequence is produced [49]. GRU models confront some problems including low learning efficiency and slow convergence rate, leading to too long training time and even under-fitting.

Convolutional Neural Network

Convolutional Neural Networks (CNNs) are one of the most popular DL models that work with backpropagation neural networks. The main success of CNNs is in the field of image processing and image recognition which are adapted to analyze visual imagery specifically with image or video data. The architecture of CNN is comprised of a convolutional, pooling, and fully connected layers. In the convolutional layer, the feature extraction occurs by the kernels. The pooling layer carries out a dimensionality diminution to minimize the network computational effort. The fully connected layer plays the classifier role with non-linear classification. By repeating the convolution-pooling sequences multiple times, the system is deepened and the desired accuracy can be obtained. The traditional NNs generally utilize the full connections between the layers leading to over-fit of train data and intensive time computations. Unlike NNs, CNNs avoid overfitting and general multiplications by using particular layers and consequently provide faster computations [50, 51]. However, CNN does not encode the orientation and position of objects. It is unable to be spatially invariant to the input data. Finally, it needs a large amount of training data.

Deep Reinforcement Learning

Deep reinforcement learning integrates artificial neural networks (deep learning) with a framework of reinforcement learning (RL) to learn software agents how to achieve their objectives. Reinforcement learning algorithms can start from a blank slate, and under the correct directions, reach superhuman performance. Different penalizes and rewards are considered for these algorithms when they make mistakes and the right decisions, respectively– this means reinforcement. The common steps of RL are as follows: (i) observation of the environment; (ii) selecting a strategy; (iii) acting based on the chosen strategy; (iv) receiving a reward or penalty; (v) learning from the experiences and improving the strategy; (vi) iterating to reach an optimal strategy. Three approaches for reinforcement learning are Policy-based. Value-based, and Model-based learning. Model-based RL utilizes experience to build an internal model of the transitions and instant results in the environment. Proper actions are then selected by exploring or planning in this world model. On the other hand, model-free RL uses experience to learn straightly one or both of two simpler quantities which can reach the same optimal behavior but without approximation or use of a world model. One of the most used learning models is Q learning which is a values-based and off-policy learner algorithm. Value-based algorithms update the value function according to an equation, especially the Bellman equation. Off-policy algorithms learn the value of the optimal policy apart from the agent’s actions. The ‘Q’ in Q-learning refers to quality. Quality indicates how beneficial a given function is in obtaining some future reward. There are some challenging drawbacks and limitations with RL: (i) too much reinforcement can lead to an overload and attenuate the outcomes; (ii) it is not a preferred model for simple problems; (iii) it needs a large number of data and contains plenty of computation; (iv) its preservation cost is high.

The application of ML and DL models for the management, prediction, and detection of diabetes

Early diagnosis and prediction of diabetes

Early diagnosis and forecasting of diabetes occurrence through the measurement of several baseline factors could diminish the complications in the future. However, the slight incidence rate of diabetes causes a major challenge in the accurate prediction of diabetes class against non-diabetic one. To overcome this difficulty, various ML and DL algorithms have been developed which are overviewed in the following sections for each diabetes type.

T2DM

One of the interesting methods for diabetes detection in Traditional Chinese Medicine (TCM) is characterized based on tongue features. It is performed by analyzing the extracted parameters from the panoramic tongue images such as tooth markings, shape, texture, color, and fur [52]. An experienced person as a specialist is required to do these visualized diagnosis processes. The yellow tongue is defined as a sign of diabetes [53]. For predicting the T2DM risk, an ML model was arranged in a combination with TCM. To this aim, TDA-1 Tongue Diagnosis Instrument was extended by a research group at Shanghai University of TCM to gather the tongue images. This information in association with the common knowledge and laboratory outcomes of the physical checkup was prepared to propose a model. Through the developed model, the texture and color characteristics of the tongue were computed by the Tongue Diagnosis Analysis System (TDAS) which was also improved by the same research group. TDAS performed the tongue diagnosis and the tongue division through the human-computer interplay. Afterward, it could automatically measure the texture and color features. In addition, deep learning techniques were also employed. To train DL models, Mask Region-Based Convolutional Neural Network (Mask R-CNN) was implemented because of its characteristics for the fast object detection of an image with the high-quality. Specifically, it carried out the instant semantic segmentation that led to being worked as a diagnostic support tool. Through image detection, Mask R-CNN could eliminate the background of the raw tongue images and had superior pre-trained models, swift training speed, and remarkable accuracy. Then, eight classical ML models, including LR, RF, Naive Bayes (NB), SVM, XGBoost, ANN, K-nearest neighbor (K-NN), and DT were applied to fully detection of the inherent regularities. To find the best classification impact, the model fusion was carried out by the stacking technique. The stacking process comprised two steps. In the first part, the operation of eight independent models was evaluated by using ten-fold cross-validation. The prediction outcomes constructed the further training data. Simultaneously, the test dataset was predicted and the next data test was built. In the subsequent step, a second test dataset was employed to fit the training data matrix that was integrated into step one using the LR algorithm. Finally, it could test the data matrix for the ultimate outcomes. To create a convenient and noninvasive diabetes risk forecasting model, tongue features and generic details were accomplished to assess the non-invasive stacking model. Moreover, DL methods including XceptionResNet50, DenseNet121, Vgg16, Vgg19, and InceptionV3 were used to train the model. The stochastic gradient descent was performed for optimization, while the loss function was cross-entropy. The best accuracy was obtained for the non-invasive stacking model and ResNet50 at 71% and 69%, respectively. In the critical BG group, the AUC was acquired at 0.84 for the stacking model and 0.88 for ResNet50. In the high BG group, the AUC was achieved as 0.87 and 0.80 for the non-invasive stacking model and ResNet50, respectively.

In another study [52], a deep autoencoder learning algorithm with CNN was developed to exploit deep variables from panoramic tongue images. Then, these parameters were trained with a deep Radial Basis Function Neural Network (RBFNN) classifier algorithm. The suggested model showed a better classification performance rather than the other models. The training accuracy of the ResNet50-RBFNN model was obtained at 92.3%.

The possibility of predicting diabetes could be found from the special patterns of body fat distribution using Magnetic Resonance Imaging (MRI) [54]. For constructing a powerful model, a novel ML method on more than 2000 whole-body MRI image data sets was developed. For this research, a network was constructed corresponding to the DenseNet architecture. The 3-dimensional volumes were used as the input. The first layer included a completely connected convolutional layer with a kernel size of 5 and 8 convolutional filters. The pooling layer was then applied to reduce the dimensions of the mediator feature maps in order to improve the computational performance. The output was entered into the initial dense block. Transition layers and dense blocks were consecutively appended to process the input. After the eventual transition layer, the activation maps were widened to a 1D array and transferred to 3 consecutive densely connected layers with dropout. The dimension of the resulting dense layers was 1 × 128 units, and it was considered the embedding layer for embodying the low-dimensional delegations of MRI voxels as “learned” by the neural network. The embedding layer was employed to forecast the favorable target labels and the unsupervised clustering analysis. Further dense layers were appended to the embedding layer to predict output nodes. Models were trained for age, sex, HbA1c, insulin sensitivity, BMI, prediabetes, and the occurrence of diabetes. The AUC was found at 87% for T2DM discernment and 68% for prediabetes, which were higher than the conventional models. Moreover, the lower visceral abdominal was found a critical region in the classification of diabetes.

To determine the undiagnosed T2DM in adults, the nutritional markers were found by ANN, LR, and RF models [55]. To overcome the impact of the class imbalance, resampling algorithms containing Random Oversampling Examples (ROSE), minority class over-sampling, and Synthetic Minority Oversampling Technique (SMOTE) were applied. In this process, four models were constructed with (1) original data, (2) oversampling, (3) SMOTE, and (4) ROSE per each algorithm. Undiagnosed T2DM was identified by a negative reply to the query “Have you ever been told by a doctor that you have diabetes?” and a positive glycaemic reply to one or more of the 3 detection tests FPG > 125 mg/dl, HbA1c > 6.4% or 2-hr post- oral glucose tolerance test (OGTT) > 200 mg/ dl. As a result, the prevalence of undiagnosed T2DM was determined as 5.26%. The best models classified 39 markers including 11 unique markers by the logistic technique and 28 via one or more of the three best-performing ensemble/non-linear models. They comprised 4 diet-associated, 9 socio-behavioral, 2 anthropometry-based, and 14 nutrient-based markers. The best-performing approach was a LR method on original, unbalanced training data without any resampling with an AUC of 0.74, a sensitivity of 0.77, a specificity of 0.61, and an accuracy of 0.62. This study suggested personalized clinical nutrition like the risk-stratified nutritional recommendations and the early preventive plans that were aimed at high-risk people as well as the nutritional handling of individuals with T2DM.

The results of a systematic review revealed that the optimal performance for the prediction of T2DM is obtained using tree-type models [56]. However, they need complementary approaches to decrease the dimensionality and balance data by choosing the optimal features. Therefore, SVM and KNN have been commonly preferred for prediction. Moreover, it was concluded that a minimum of three parameters from the confusion matrix (accuracy, precision, specificity, sensitivity, and F1-score) and the AUC should be calculated to reduce the heterogeneity in the validation parameters.

To predict T2DM incidence in obese women, ANN-based on anthropometric and adipocytokines variables were applied [57]. The Separability-Correlation Measure (SCM) was first utilized to select the substantial features. The FBS and HbA1c were found as the best discriminators; homeostatic models assessment as the moderate discriminator; adiponectin, visfatin, and insulin as the weak discriminators of diabetic women. The more chosen variables were apelin, visfatin, HbA1c, FBS, adiponectin, and total cholesterol. Moreover, the subsets of these parameters containing apelin, FBS, HbA1c, and visfatin were found to be the remarkable features that could perform the best discrimination between diabetic and non-diabetic groups.

Electronic Health Record (EHR) is a digital copy of a patient’s paper chart that makes information accessible securely and instantly for users. A novel prognostic method for the prediction of T2DM based on EHR was developed without employing the current invasive approaches [58]. The methodology was based on RF frameworks with data enrichment utilizing the temporal variables. The AUC of 84.2% was obtained with an RF classifier, 83.1% when temporal features were used, and 83.7% after using both the feature selection and the temporal features. It was concluded that pathology prediction was feasible and effective employing the information of a patient’s progression over the years and without utilizing invasive approaches.

The OGTT was also employed to create a predictive model for T2DM utilizing SVM [59]. The insulin concentrations were also considered before glucose intake at 30, 60, and 120 min, plasma glucose, personal information like ethnicity and age, as well as the BMI. All accessible combinations of the 10 best-ranked variables were employed to produce SVM-based models. The outcomes revealed that the glucose levels in plasma and the information obtained therefrom could provide the uppermost predictive performance for the future progress of T2DM. Moreover, demographic features and insulin ones did not present the additional performance advancement for diabetes forecasting. The average accuracy and sensitivity were acquired as 96.80% and 80.09%, respectively.

A novel adherence diagnosis method that employed deep learning approaches was developed for T2DM cases, based on simulated Continuous Glucose Monitoring (CGM) signals [60]. Various classification models including LR, CNN, Multi-Layer Perceptrons (MLPs), and ensembling methods were evaluated. The foremost performing models were acquired for CNN with accuracy of 77.5 ± 1.4% and MLP with the accuracy of 72.5 ± 3.5%.

The Artificial Immune Recognition System (AIRS) was another approach that was used for medical classification problems [61]. AIRS utilizes resources contest, clone choice, maturation, mutation, and generation of memory cells for the advancement of forecasting modeling. In addition, AIRS2 is a more effective version of the AIRS method. The modified AIRS2 called MAIRS2 was considered a developed K-NN algorithm. Using the AIRS2 learning algorithm, the size of the dataset named Memory Cells Pool was decreased. Then, the fuzzy K-NN was applied to dominate the constraints of the K-NN classifier by ascribing a class membership to each case. The highest classification accuracy was acquired as 89.10% and 82.69% for MAIRS2 and AIRS2, respectively.

Diabetic Sensorimotor Polyneuropathy (DSPN) is a remarkable consequence of diabetes mellitus, so early diagnosis or prediction of DSPN is essential for preventing foot ulcers and neuropathic pain [62]. Three machine learning methods including SVM, XGBoost, RF, and their combinations were considered to predict four classes containing normal, possible, probable, and confirmed based on the electrophysiological and clinical characteristics of the doubtful DSPN. RF showed the best AUC (0.82); and the average values of the International Federation of Clinical Chemistry (IFCC), serum glucose, albumin levels, and HbA1c were found as the major predictors. Therefore, it was concluded that ML techniques could help in predicting the DSPN and electrophysiological analysis in T2DM.

Three potent machine learning algorithms including XGBoost, DNN, and RF were applied to forecast the forthcoming occurrence of T2DM based on the biochemical, demographic, and anthropometric measures [63]. Furthermore, three strategies containing cost-sensitive learning, changing threshold, and sampling were used to overcome the imbalance challenge in the diabetes classes. Weighing and changing thresholds caused a reduction in the training time, enhancement of performance, and increased prediction accuracy in the minority diabetes classes. Although sampling led to better performance, it was not found as the best solution to resolve the imbalance distribution between healthy and diabetic classes.

XGBoost, SVM, LR, RF, and ensemble algorithms were also utilized to construct models to predict T2DM incidents in the following year (Y + 1) by employing the variable values in the ruling year (Y) [64]. Before constructing the prediction model, the major features were initially chosen utilizing a data-driven feature selection approach. It contained an analysis of a chi-squared test, variance (ANOVA) test, and the recursive feature elimination methods. The elements including Triglycerides (TG), HbA1c, FPG, gamma-GTP, BMI, uric acid, age, smoking, sex, Physical Activity (PA), drinking, and family history were selected as the variables. The prediction models accurately anticipated the normal (non-diabetic), prediabetes, or diabetes in the Korean population. The accuracy was identified as between 71% and 73%. Moreover, the ensemble models had better proficiency than the single models. The performance of the prediction models was improved by incorporating more medical history from the dataset. In another work, the DT and LR models were also used to predict T2DM [65]. As a result, five main predictors including pregnancy, glucose, age, BMI, and diabetes pedigree function were found as the best classifiers. The prediction accuracy was obtained as 78.26%.

T2DM in youth is more challenging to therapy because of a more quick reduction in the beta-cell function, and also the appearance of onset of complications. The NB, LR, LogitBoost, and DT methods were tested based on HbA1c, FPG, and 2hrPG to improve preDM/T2DM screening performance [66]. The results showed that they were statistically equivalent to or better than the screening guideline. The F-measure and specificity were obtained as 0.005 and 0.225, respectively.

To early predict T2DM by employing lifestyle indicators, various ML algorithms using ensemble methods including Boosting, Bagging, and Voting were employed [67]. Boosting converts weak learners into strong learners, in which weak classifiers are combined to constitute a strong model to ameliorate the predictive abilities of the eventual model. The base learning classifier is used multiple times to produce a novel forecasting rule. The steps will be performed n times iteratively and then the boosting method will incorporate the outcomes from weak learners and transform them into a single strong prediction model. The bagging method follows the bootstrap aggregation approach and creates several training sets for model-building purposes. After the construction of disparate training sets, different models are applied to the resampling process with an ensemble structure. Eventually, the outcomes of learners are aggregated to make the ultimate divination. In the voting method, the forecasting of base learners is aggregated to construct new meta-variables for final prediction.The output of base classifiers is combined based on the most votes and weighted approaches. Among all the models, the Bagged Decision Tree (BDT) algorithm achieved the highest testing accuracy rate of 99.14% followed by Stochastic Gradient Boosting (98.45%,), RF (93.63%), Extra Tree (91.41%), Adaboost (89.69%), and Voting classifiers (89.51%). However, according to the number of misclassifications on the test dataset, BDT obtained the lowest rate of 0.86% and Voting obtained the highest rate of 10.49%.

A patient network-based model containing the existing relationships among health situations for a category of subjects diagnosed with a similar disease using the graph theory was developed to predict T2DM [68]. For this purpose, a bipartite network graph whose vertices can be separated into two independent subsets was utilized to provide the diseases that a patient faces over time. Eight ML models including Naive Bayes, SVM, LR, KNN, RF, XGBoost, DT, and ANN were used to predict the T2DM risk. The RF model led to better results. In addition, closeness and eigenvector centralities as well as patient age were determined as the significant variables for the model.

In order to predict the future incidence of T2DM following pregnancy in women, an XGBoost model based on parity, age, gestational age at delivery, gravidity, glucose challenge test (GCT), oral glucose tolerance test results, OGTT, and birthweight was constructed [69]. The prediction model led to an AUC of 0.85 and an accuracy rate of 91%. The most predictive parameters were neonatal birthweight and the age at the index pregnancy.

A gradient boosting decision tree model was developed to predict the occurrence of T2DM 5 years ahead [70]. The model achieved a test AUC of 80.26 and was robust to immigration status, sex, area-level marginalization with regard to race/ethnicity and material deprivation, and low contact with the health care system.

A method called average-based weighted objective distance (AWOD) was developed for the prediction of T2DM [71]. This approach uses information based on average amounts of the expected amounts and acceptable levels to prioritize factors naming as weighing factors. AWOD has three main stages: i) determining important levels for weight calculation including the expected level and an acceptable level. Afterward, the weights for both significant and negligible factors are computed. Finally, AWOD is specified for the prediction. The AWOD-based approach provided 98.95% accuracy which was more accurate than RF, SVM, K-NN, and DL.

The Q-learning algorithm was used for the early detection of T2DM based on several variables such as glucose level, BMI, and age [72]. In the Q-learning algorithm, a set of q-values are approximated by employing an agent and a set of states. The agent will receive positive/negative rewards for each state pair. The agent maximizes the negative/positive reward in the long-term process by learning optimal policy elections for various unique states. The proposed model produced an off-policy-based RL and made the learning agent to identify an optimal policy for the variables. It achieved a better accuracy rate (84%) than the K-NN and DT algorithms.

T1DM

An XGBoost model was developed to recognize T1DM subjects misdiagnosed as T2DM using Ambulatory Electronic Medical Records (AEMR) data [73]. The model identified BMI/weight, age, HbA1c/blood glucose values, and therapy history as top predictors of misdiagnosis. The precision of the model at low levels of recall (10%) was 17%, in comparison with the < 1% occurrence rate of the misdiagnosis at the time of the first T2DM. This algorithm could diminish misdiagnosis of adult-onset T1DM.

GDM

The earlier diagnosis of GDM is important for barricading or remarkably decreasing the risk of detrimental pregnancy outcomes [74]. A gradient-boosting machine learning model constructed by decision-tree base-learners was applied based on the electronic health records. The models anticipated GDM with high accuracy even at the pregnancy beginning (AUC = 0.85) more than a baseline risk score (AUC = 0.68). The results were confirmed in both a geographical validation set and a future validation set. Eventually, a model was introduced based on the nine queries that a patient could reply to them for determining the early-stage intervention in high-risk women (AUC = 0.80). In another study, eight ML approaches including RF, logistic, XGB, GDBT, LGB, AdaBoost, and Vote as well as LR with RCS and stepwise logistic regression were examined to predict the incidence of GDM [75]. The maternal demographic specifications, the medical history, and also the laboratory amounts at early pregnancy were selected as the predictors. Variables were trained by discrete ML models and traditional LR models. In the validation dataset, the LR and ML models were carried out moderately (AUC: 0.59–0.74). Overall, the GBDT model had the best performance (AUC: 0.74) among the other ML models. BMI, HbA1c, FBS, and TG were found to have a strong contribution to GDM. A cut-off of 0.7 had a positive predictive amount of 93.2% and a specificity of 99%. Moreover, it was realized that machine learning approaches did not have superiority over LR in forecasting GDM. In another study, Light Gradient Boosting Machine (lightGBM) and SVM were utilized to develop the first 19 weeks risk prediction model for GDM [76]. The predictors included blood routine, coagulation function, and hepatic and renal functions were used. It was found that a cutoff of Activated Partial Thromboplastin Time and Prothrombin Time can accurately forecast GDM with a specificity of 99.47% and sensitivity of 88.3% (AUC = 94.2%). If only renal and hepatic functions were used, a cutoff of FBG and direct bilirubin with a specificity of 90.0% and sensitivity of 82.6% (AUC: 91.0%) were specified. And a negative correlation between prothrombin time and patients with the activated partial thromboplastin time was identified. A negative correlation with direct bilirubin and a positive correlation with FPG could neglect the coagulation function test. As a result, the outcomes could disclose the feasible functions of prothrombin time and activated partial thromboplastin time as biomarkers for the forecasting and early detection of GDM.

GDM is commonly affirmed with an OGTT during 24 to 28 weeks of gestation [77]. A cost-sensitive hybrid model (CSHM) and five machine learning approaches including BN, LR, chi-squared automatic interaction detector (CHAID) tree, SVM, and NN were utilized to construct the predictive models based on EHR. The accuracy of positive samples was (62.16%), however, the outcomes proposed that the wide majority (98.4%) of those forecasted positive cases were real positives.

A novel analytical platform (Reverse Engineering and Forward Simulation [REFS]) was developed to construct a prediction model for the development of prediabetes or T2DM using the EHR information [78]. REFS was based on a Bayesian scoring algorithm to follow a vast model space and produced a dispensation of risk approximates from an ensemble of prediction models. The model predicted the progression to T2DM (AUC = 0.76). Models of development to T2DM included primarily appointed risk factors including lipid disorders, triglycerides, socioeconomic factors, blood pressure, blood glucose, and hypertension. While, models of the development of prediabetes contained novel factors including C-reactive protein, alanine aminotransferase, high-density lipoprotein, and body temperature (AUC = 0.70).

Obesity and body fat dispensation are substantial risk factors for type 2 diabetes [79]. To forecast the FPG situation, a composition of different measures was considered. Based on thirty-seven anthropometric values, the predictions of the FPG using the NB classifier and LR were compared. The AUC was obtained as 0.739 and 0.741 for females as well as 0.686 and 0.687 for males using LR and NB classifiers, respectively. The outcome revealed the superiority of a combination of anthropometric measures over case measures alone in both males and females.

AIRS was also used to predict T2DM following GDM [80]. Despite the dataset having imbalanced data, the classification recall reached 62.8%. To develop a simple model to forecast GDM in early pregnancy, biochemical biomarkers and multivariate Bayesian logistic regression using the Markov Chain Monte Carlo simulation algorithm were applied [81]. At first, the predictive maternal factors were chosen through Bayesian adaptive sampling. From the 8th to 20th week of gestation, FPG levels diminished slightly and TG levels elevated slightly. The risk of GDM was predicted with prepregnancy BMI, maternal age, TG, and FPG with an accuracy of 0.64 and an AUC of 0.766.

In order to construct a preconception-based GDM predictor, game theory concepts were merged with genetic programming (GP)-based automated machine learning (AutoML) [82]. For this purpose, the Shapley additive explanations (SHAP) framework was combined with the GP-based Tree-Based Pipeline Optimization Tool (TPOT) to find significant features and choose optimal supervised machine learning models. GP trains the ML problems based on random mutation, crossover, fitness functions, and production to reach optimal solutions. The Shapley amount is the average expected marginal contribution of one player across all accessible permutations of players in game theory. In ML, game players are the variables, and the collective payout is the model forecasting. The SHAP framework supplies local explanations based on the Shapley amounts to find the global model structure. A stacked ensemble model with a linear SVM classifier and GB classifier was achieved utilizing GP. The resulting AUC was 0.93 based on four features (fasting insulin, glycated hemoglobin A1c (HbA1c), triglycerides/HDL ratio, and mean arterial blood pressure). The multivariate logistic regression model also revealed that each 1 mmol/mol rise in preconception HbA1c was positively associated with the appended risks of GDM and preterm birth. Therefore, the control of preconception HbA1c may help avoid GDM and decrease the occurrence of preterm birth.

All diabetes types

Some ML models have been presented for the prediction and diagnosis of diabetes regardless of its type. A filter approach based on the DT algorithm (Iterative Dichotomiser 3) was developed to select important features [83]. Two ensemble learning algorithms, RF and AdaBoost were employed for feature selection. Also, it was compared with wrapper-based feature selection algorithms. The superior performance of the suggested approach was due to the various combinations of the chosen feature set. Diabetes pedigree function, plasma glucose concentrations, and Blood mass index were the most substantial major features for the prediction of diabetes. The suggested method achieved 98.2%, 99.2%, and 99.6% with the cross-validation methods of hold out, k-folds, and LOSO, respectively. Furthermore, the proposed approach would impressively diagnose diabetes and could be utilized in an e-healthcare environment. In another study, an ensemble-based framework called eDiaPredict was suggested to predict diabetes [84]. This algorithm employs an ensemble of various ML algorithms including RF, XGBoost, SVM, NN, and DT. Recursive Feature Elimination (RFE) [85] was used to decrease the feature space in the dataset. The ensemble RF as a bagging method with the XGBoost as a boosting approach generated the best outcome by forecasting the diabetic patient with 95% accuracy. It is due to diminishing the bias recursively and identifying the best solution.

To predict the occurrence of diabetes by employing EHR, LightGBM approach was applied [86]. The predictive ability of this approach was compared with the LR model and led to 0.865–0.925 vs. 0.778–0.876 for various datasets. A novel computing scheme was proposed to categorize several types of diabetes, as they need different treatments [87]. It contained two steps: (1) the major features were found from the glucose concentration curve obtained by the CGM system; (2) a model of diabetes parameter regression called double-Class AdaBoost was constructed. The experiments revealed the coincidence rate of the proposed scheme and the clinical selection at 90.3%. In another work, the Gaussian Process (GP)-based classification approach was used employing three kernels including linear, polynomial, and radial basis [88]. The best performance was obtained for the GP-radial basis kernel with an AUC of 81.97%, a sensitivity of 91.79%, and a specificity of 63.3%.

In a study, RF was employed to detect the occurrence of diabetes in a large set of observational data and figure out the potential predictors of diabetes [89]. The full RF model assessed 93 features including demographic, blood biomarker, anthropometric, echocardiogram data, and medical history. In addition, RF metrics of feature significance were utilized to rank features according to their portion of the diabetes forecast. The performance of the RF full model was analogous (AUC = 0.82) to those of more stingy models. The top-ranked features according to RF included FPG, TG, hemoglobin A1C, adiponectin, waist circumference, c-reactive protein, left ventricular mass, aldosterone, leptin, and high-density lipoprotein cholesterol.

A fuzzy c-means-neuro-fuzzy rule-based classifier was developed for the classification of diabetes [90]. The accuracy of the classifier was calculated by the number of correctly identified diabetes records while its complications were computed by the number of extracted fuzzy rules. Experimental outcomes revealed that the suggested fuzzy classifier could reach a good tradeoff between accuracy and interpretability. The contribution of the Fuzzy C-Means (FCM) algorithm with the Adaptive Network-Based Fuzzy Inference System (ANFIS) decreased the learning time and size of models. ANFIS is a particular method in neuro-fuzzy modeling that used neural networks to adapt rule-based fuzzy systems. The best accuracy of the proposed method was acquired as 81.54%.

A non-invasive diabetes diagnosis system was also suggested dependent on the wristband basic Physiological Parameters (PhyP) and Photoplethysmography (PPG) signal [91]. The PhyP and Mel-Frequency Cepstral Coefficients (MFCC) were extracted from 5 s PPG signal parts and then applied as the input for the RF, SVM, K-NN, and XGBoost. This data was used to classify the patients into prediabetes, diabetes, and normal classes. Moreover, a Hybrid Feature Selection (Hybrid FS) approach was suggested to decrease the size of the entrance data. The Hybrid FS-based XGBoost system resulted in a significant accuracy (99.93%) for non-invasive diabetes diagnosis with numerable variables and less computational endeavor. The analysis showed that the PPG signal was a suitable substitute for ordinary non-invasive BG evaluation.

Laboratory information such as sex, age, body mass index, TG, FBS, high-density lipoprotein, low-density lipoprotein, and blood pressure was used to predict diabetes mellitus using GBM and LR techniques [92]. Moreover, the adjusted threshold and the class weight approaches were employed for ameliorating sensitivity. The AUC and sensitivity were 84.7% and 71.6% for the suggested GBM model as well as 84.0% and 73.4% for the LR method, respectively. The performance of the GBM and LR models was better than the DT and RF models. These approaches could be implemented in an online computer program to assist physicians in forecasting cases of the future incidence of diabetes and supplying essential preventive interventions.

The correct diagnosis of the diabetes type is sometimes challenging. To recognition of the prediabetic, T1DM, and T2DM types, RF, SVM, DT, KNN, Bagging, and Stacking algorithms were used. The outcomes showed that integration of Bagging K-NN, Bagging DT, and K-NN, with a K-NN meta-classifier, led to an accuracy of 94.48%. Moreover, 5 variables including sex, nutrition, insulin, antiDiab, and education were found that remarkably impressed the model accuracy [93]. In another work, a Decision Support System (DSS) was developed for the prediction of diabetes based on SVM and RF and fully CNN. The obtained accuracies using RF, SVM, and DL were 83.67%, 65.38%, and 76.81%, respectively. The experimental outcomes revealed that RF was more efficient for diabetes forecasting in comparison with other methods [94].

A Reinforcement Learning-based Evolutionary Fuzzy Rule-Based System (RLEFRBS) was developed for diabetes diagnosis. The proposed model comprised a Rule Base (RB) constructed by numerical data without initial rules and rule optimization. Following learning the rules, the surplus rules were excluded. Afterward, the superfluous conditions in the prior parts were pruned to yield modest rules with higher interpretability. Eventually, a proper subset of the rules was chosen to employ a Genetic Algorithm (GA), and the RB was built. To improve the performance of RLEFRBS, evolutionary tuning of the membership functions and weight adjusting using RL were employed. The accuracy of the suggested model revealed that it could be a proper alternative for the diagnosis of diabetes [95].

A pipeline based on deep learning methods was proposed to forecast diabetic people. It comprised data augmentation utilizing a variational autoencoder (VAE), feature augmentation utilizing a sparse autoencoder (SAE), and a CNN for classification. The input features were glucose or insulin level, the number of pregnancies, blood pressure, and age. After training the CNN classifier in association with the SAE for featuring augmentation, an accuracy of 92.31% was obtained. It was concluded that this pipeline was proper in diabetes diagnosis [96].

A model using hidden layers of a deep neural network model (DLPD) was proposed to forecast the incidence of diabetes in the future and also to specify the type of disease. The dropout regularization was applied to avoid overfitting and the binary cross-entropy loss function was used to reach high accuracy. The best training accuracy of the diabetes-type data set was determined as 94.02% [97].

Prediction of blood glucose

The level of blood glucose (BG) should be maintained within the normal range (70–120 mg/dL or 3.6–6.9 mmol/L) [98]. Keeping the BG amounts at the desired level in diabetic subjects is challenging because the precise glycemic control employing bolus insulin injections may lead to an elevated risk of having hypoglycemic incidents [99]. Therefore, closed-loop systems and computational methods have been developed to help in controlling BG levels. On the other hand, modeling and controlling are two major challenges to completing diabetes management. Modeling refers to learning a precise BG forecasting model based on several variables and controlling refers to applying a developed model to forecast the BG amount and propose some recommendations. The utilized algorithms for the prediction of BG are critical in the progress of closed-loop insulin delivery and decision support systems for the control of blood glucose in diabetes. Multitask learning is a simplified method for leveraging data from numerous cases while it could still do learning accurately the personalized models [100]. This approach showed stable performance in predictive metrics at both long-term and short-term prediction horizons. The predictive accuracy RMSE of 18.8, 25.3, 31.8, 41.2, and 47.2 mg/dL were obtained at 30, 45, 60, 90, and 120 min prediction horizons, respectively, with around 93% clinically admissible predictions employing the Clarke Error Grid Analysis (EGA). The outcomes showed the success of multitask learning compared with sequential transfer learning. In another study, a multisource adversarial transfer learning method was applied to provide the learning of a feature exhibition that was analogous to the sources [101]. For this purpose, a CNN model was employed. The evaluation was performed by tracking multiple transfer methods. This approach was applicable when data originated from the various datasets, or when there was too small data in the intra-dataset condition. Although the adversarial transfer did not differentiate the patients and datasets, it led to learning a general feature representation contrary to a standard transfer. Only insulin, glucose, and carbohydrates data were used for the forecasting of the future glucose amount. The RMSE was obtained between 18.94 and 19.27 for various datasets.

The Kernel Extreme Learning Machine (KELM) neural network, the Variational Mode Decomposition (VMD) method, and the AdaBoost algorithm were integrated to build a multi-scale blood glucose prediction model so-called VMD-KELM-AdaBoost [102]. The VMD approach was first applied to decompose a series of BG concentrations into a series of intrinsic modal functions (IMFs) with various scales. Afterward, in order to increase the prediction capability of the model, the AdaBoost and KELM neural networks were combined to model and also forecast the decomposed IMFs by VMD. The experimental results revealed that this model could perform the BG prediction (the mean values of RMSE was about 10.14); in Clarke error mesh analysis, the ratio of being in A zone was about 95.7% and the sensitivity was 94.8%.

A Monte Carlo (MC) photon simulation-based model was developed for assessing the concentration of blood glucose through PPG on the fingertip [103]. The MC method was selected for the simulation of a photon in the finger model due to its flexibility in calculating the optical interplays with the biological tissues. The intensities of the discovered photons after simulation with the model were employed to assess the BG concentrations using XGBoost. A heterogeneous finger model with the muscle, fat, skin, and bone layers was developed to propagate photons. Bio-optical characteristics such as scattering coefficient, absorption coefficient, refractive index, and anisotropy were determined at wavelengths of 660 and 940 nm to develop the finger model for photon simulations. The model achieved RMSE 16.1.

To predict BG levels utilizing time-series data of patients with T1DM, autoregression with exogenous inputs (ARX) model, ML-based regression models, and DL models including a temporal convolution network (TCN) and a vanilla LSTM Network were examined [104]. The ARX model obtained the lowest average RMSE for both direct and recursive approaches. No remarkable advantage was found from the ML models in comparison with the classic ARX one, except TCN’s performance. TCN was more robust than BG trajectories with false oscillations, for which ARX over-predicted peak BG and under-predicted valley BG amounts.

DL approaches have been also used for an accurate prediction of CGM. A DL algorithm was developed for glucose forecasting that employed a multi-layer Convolutional Recurrent Neural Network (CRNN) architecture [105]. The model was initially trained on data containing carbohydrates, CGM, and insulin data. The CRNN method contained three sections: a multi-layer convolutional neural network to extract the data variables using convolution and pooling, an RNN layer with LSTM cells, and fully-connected layers. The convolutional layer comprised a 1D Gaussian kernel filter to carry out the temporal convolution. The pooling layers were applied to decrease the features. The eventual output was a regression yield by fully connected layers. The model was able to predict glucose levels with superior accuracy for real patient subjects (RMSE = 21.07 mg/dL for 30-min and RMSE = 33.27 for 60-min). The proposed algorithm was implemented on an Android mobile phone, with a performance time of 6 ms on a phone in comparison with an execution time of 780 ms on a laptop. In another work, the CRNN model was used for the precise prediction of glucose levels in T1DM patients for a 30-minute horizon (MAE = 11.22 [mg/dL] and RMSE = 17.45 [mg/dL]), and for the 60- minute horizon (MAE = 23.25 [mg/dL] and RMSE = 33.67 [mg/dL]) [106].

The CGM measurements are sensitive to sensor faults and it would influence the BG prediction. A novel LSTM-based deep RNN model considering the sensor error was proposed for predicting the BG level [107]. A Kalman smoothing method for the modification of the inaccurate CGM readings was used because of the sensor fault. To this end, the various physiological information including bolus insulin, carbohydrates from the meal, Kalman smoothed CGM data, and cumulative step counts in a constant time interval were considered. The goal was to lessen the diversity between the fingerstick blood glucose measurements and the predicted CGM amounts.

Bluetooth Low Energy (BLE)-based sensors could be applied as a device to trace personal vital signs data [108]. A personalized healthcare monitoring system employs real-time data processing, a BLE-based sensor device, and ML-based approaches to improve the self-managing of chronic diabetes situations. BLEs were used for collecting the vital signs data like blood glucose, weight, heart rate, and blood pressure from the sensor nodes to the smartphones. Moreover, real-time data processing was used to handle a large value of continuously produced sensor data. The outcomes revealed that the suggested real-time data processing and commercial versions of the BLE-based sensors were adequately effective to follow the vital signs data of diabetic cases. Furthermore, ML-based classification approaches were examined and demonstrated that a multilayer perceptron could supply early forecasting of diabetes employing the gainer’s sensor data as input with the accuracy of 77.08% in comparison with 76.69%, 73.04%, 76.04%, and 76.56% for NB, RF, LR, and SVM, respectively. The outcomes also disclosed that LSTM can precisely foresee the future BG level.

A novel framework was suggested to incorporate several algorithms for BG prediction in patients with diabetes mellitus [109]. This framework had an adaptive weight which was specified for each approach where one algorithm’s weight was conversely proportional to the sum of the squared prediction errors. The suggested framework was employed to combine SVR, an Extreme Learning Machine (ELM), and an Autoregressive (AR) model. The new adaptive-weighted algorithm reached the best prediction performance of 92.5%.

Several linear black-box approaches (autoregressive, autoregressive moving average, and autoregressive integrated moving average (ARIMA)) and nonlinear ML procedures (SVR, feed-forward neural network (fNN), regression random forest, and LSTM-NN) were examined to forecast the glucose levels and hypoglycemia. The outcomes revealed that the individualized linear models were more efficient than the population ones. The best linear algorithm (individualized autoregressive integrated moving-average) obtained an accuracy analogous to that of the foremost nonlinear algorithm (individualized feed-forward neural network), with RMSE of 22.1 and 21.5 mg/dL, respectively. For the prediction of hypoglycemia, the individualized ARIMA provided precision = 64% and one incorrect alarm/day in comparison with the foremost nonlinear approach (population SVR) with precision = 63% and 0.5 incorrect alarms/day. Moreover, no remarkable benefits were found when nonlinear techniques were used for a 30 min prediction horizon [110].

Predicting glucose amounts based on food and insulin intake is a hard task that should be done daily for cases with diabetes [111]. In a study, the enhanced variants of grammatical evolution, random forest regression, K-NN regression, and tree-based Genetic Programming (tree-based GP) were utilized to build models. They were then examined to predict the glucose concentrations accompany by the approximation of both future insulin injections and carbohydrate intakes. Two new enhanced modeling approaches for glucose forecasting included (i) diverse grammatical progress using an optimized grammar, and (ii) various tree-based GP utilizing a three-compartment model for insulin and carbohydrate dynamics. The experimental outcomes applying the Clarke error grid metric showed that 90% of the predictions were correct (i.e., Clarke error categories A and B). However, it still produced 5 to 10% of drastic errors (category D) and nearly 0.5% of very critical errors (category E). Several ML-based prediction models such as XGBoost, RF, Glmnet, and LightGBM were applied for the prediction of undiagnosed T2DM that were comparable with the regression models [112]. The accuracy in the forecasting of the FPG level was calculated employing 100 bootstrap iterations in various subsets of data. For the six months of accessible data, a simple regression model was carried out with the undermost average RMSE of 0.838, followed by RF (0.842), LightGBM (0.846), Glmnet (0.859), and XGBoost (0.881). The highest level of variable election stability over time was determined with LightGBM models. The outcomes demonstrated no clinically relevant progress when more advanced prediction models were utilized.

Hypoglycemia is defined as a self-monitored blood glucose value < 70 mg/dL [113]. SVM, RF, NB, and K-NN were examined to construct models. The optimal number of self-monitored blood glucose (SMBG) amounts required by the model was almost ten per week. The specificity of the model for the forecasted hypoglycemia incidence in the next 24 h for patients with T2DM was 70% and the sensitivity was 92%. In the model that combined medication information, the forecasting window was for an hour of hypoglycemia, and the specificity ameliorated to 90%. Hypoglycemia occurs asymptomatic in some patients [114]. The precise clinical decision protection tools are required to recognize susceptible patients for iatrogenic hypoglycemia throughout hospitalization. To this end, RF classification, multivariable logistic regression, Stochastic Gradient Boosting (SGB), and NB were utilized. The SGB predicted the potentially serious results of iatrogenic hypoglycemia within 24 h after each BG measurement. In another study, the XGBoost model was utilized to forecast hypoglycemia employing the dataset of multicenter intensive care unit (ICU) electronic health records [115]. The results revealed the power of the model to predict the incidence of hypoglycemia (blood glucose < 72 mg/dL) during the settlement of patients in the ICU.

Predicting the incidence of postprandial hypoglycemia is a challenge because of the glucose fluctuations occurring around mealtimes [116]. To predict this event with a 30-min prediction horizon, four machine learning models including SVM using radial basis or linear functions, RF, LR, and K-NN were applied. The RF model demonstrated the best performance with an AUC of 0.966, specificity of 91.3%, and sensitivity of 89.6%.

Nocturnal Hypoglycemia (NH) (glucose levels < 50 mg/dL) is a challenging concern for individuals with T1DM, because it may not be diagnosed while sleeping [117]. However, predicting NH before sleep could aid to diminish nighttime hypoglycemia. An SVR model was applied to forecast the glucose before bedtime, the overnight minimum glucose, and overnight NH for individuals with T1DM. The least glucose threshold for declaring NH risk was taken by employing a decision-theoretic criterion to increase the expected net gain. The algorithm anticipated 94.1% of NH events (< 3.9 mmol/L) with an AUC curve of 0.86. In addition, the proposed algorithm could decrease NH by 77.0%.

In another study, thirty-two features based on CGM, insulin, meal, and demographics data from the T1DM cases were measured for three sequential days before the night [118]. The linear discriminant analysis was used to find the optimal feature subset, which led to only one feature subset from four features. The assessment resulted in an AUC of 0.79 leading to a specificity and sensitivity of 70% and 75%, respectively. In another work, SVM and MLP were utilized to predict NH based on CGM data and a PA tracker [119]. The predictions carried out by SVM achieved the best population results, with a specificity and sensitivity of 82.15% and 78.75%, respectively.

A model for foreseeing NH with a 6-hour horizon (midnight-6 am) was built using an RF model [120]. This model showed AUC = 0.75 for late-night (prediction at midnight, looking at 3–6 am window) and AUC = 0.90 for an early night (midnight-3 am). While the fluctuation and the lack of late night blood glucose patterns present predictability challenges, this 6-hour horizon model showed an acceptable performance in forecasting NH.