Abstract

Background

The prescribing of high-risk medications to older adults remains extremely common and results in potentially avoidable health consequences. Efforts to reduce such prescribing have had limited success, in part because they have been sub-optimally timed, poorly designed, or have not provided actionable information. Electronic health record (EHR)-based tools are commonly used but have had limited application in facilitating deprescribing in older adults. The objective is to determine whether designing EHR tools using behavioral science principles reduces inappropriate prescribing and improves clinical outcomes in older adults.

Methods

The Novel Uses of Designs to Guide provider Engagement in Electronic Health Records (NUDGE-EHR) project uses a two-stage, 16-arm adaptive randomized pragmatic trial with a “pick-the-winner” design to identify the most effective of many potential EHR tools among primary care providers and their patients ≥ 65 years chronically using benzodiazepines, sedative hypnotics (“Z-drugs”), or anticholinergics in a large integrated delivery system. In stage 1, we randomized providers and their patients to usual care (n = 81 providers) or one of 15 EHR tools (n = 8 providers per arm) designed using behavioral principles including salience, choice architecture, or defaulting. After 6 months of follow-up, we will rank the arms by their impact on the trial’s primary outcome (for both stages): reduction in inappropriate prescribing (via discontinuation or tapering). In stage 2, we will randomize (a) stage 1 usual care providers in a 1:1 ratio to one of up to the 5 most promising stage 1 interventions or to continued usual care and (b) stage 1 providers in the unselected arms in a 1:1 ratio to one of the 5 most promising interventions or usual care. Secondary and tertiary outcomes include quantities of medication prescribed and utilized and clinically significant adverse outcomes.

Discussion

Stage 1 launched in October 2020. We plan to complete stage 2 follow-up in December 2021. These results will advance understanding of how behavioral science can optimize EHR decision support to improve prescribing and health outcomes. Adaptive trials have rarely been used in implementation science, so these findings will also provide insight into how trials in this field could be conducted more efficiently.

Trial registration

Clinicaltrials.gov (NCT04284553, registered: February 26, 2020)


Background

Prescribing of potentially unsafe medications for older adults remains extremely common [1,2,3]. More than 20% of older adults are chronically using at least one of these medications [3,4,5]. Chronic use can result in adverse health consequences such as an increased risk of hospitalizations, falls, and fractures [3, 6]. For instance, benzodiazepines and sedative hypnotics are thought to increase the 1-year risk of falling by 30% in older adults, even among patients who have been using them [7, 8]. Despite strong clinical guidelines recommending reductions in their use, a gap persists in how to achieve deprescribing of high-risk medications in clinical practice.

Several prior studies have evaluated strategies to de-implement the prescribing of high-risk medications to older adults [4, 9,10,11]. Specific approaches have included in-person patient education, pharmacist medication or drug utilization reviews, clinician-facing education, or referral to specialist care [11,12,13]. The vast majority of these have been complex and multifaceted, and the timing of intervention delivery has varied; some were delivered at points in care that may be too late, such as hospital or nursing home admission [14]. Collectively, these interventions have been only modestly effective, perhaps in part due to issues of design or implementation, and substantial resources would be required to sustain their use.

Computerized clinical decision support tools in electronic health record (EHR) systems are a widely scalable strategy to influence physician behavior and have demonstrated effectiveness in implementation science interventions to increase the uptake of preventive health screenings, test ordering, and prescribing of guideline-indicated medications [9, 15, 16]. Several prior studies have evaluated the use of decision support to facilitate the deprescribing of high-risk medications to older adults, with inconsistent results [17,18,19,20,21]. The limited effectiveness of these EHR interventions may stem from aspects of their design: they may not have presented clinically actionable information to overcome true barriers to deprescribing, such as clinical inertia, patient pressure, or limited access to alternative treatments, or the decision support may have been insufficiently attuned to workflow and cognitive heuristics, for example by presenting large blocks of text to communicate risks [22,23,24]. Their usability and future success could also be hampered by barriers recognized by implementation frameworks, such as the representativeness of participating providers and the timing and cost of the interventions [25, 26].

Accordingly, the effectiveness of EHR decision support tools for reducing the prescribing of high-risk medications may be improved by incorporating design principles of behavioral sciences, such as framing, pre-commitment/consistency, or boostering, which have demonstrated impact on individual behavior in other contexts [21, 27,28,29,30,31,32,33]. For example, providing information in terms of risks rather than benefits can make patients more willing to fill a prescribed medication [27, 34]. Similarly, using “defaults” within EHRs can increase generic medication prescribing and ordering of recommended laboratory tests [32].

To fill this knowledge gap, we initiated the Novel Uses of adaptive Designs to Guide provider Engagement in Electronic Health Records (NUDGE-EHR) trial. Because there are numerous ways in which EHR tools that incorporate behavioral science principles could be designed, and because a direct comparison of approaches is typically difficult to accomplish with parallel-group trials [35, 36], NUDGE-EHR uses an adaptive design, a methodology that has rarely been used in implementation research [36]. This design will help identify the best way to deliver deprescribing tools within an EHR and will show which specific components of these tools are most effective at changing prescribing, which will inform implementation in other settings. Regardless of study findings, NUDGE-EHR could serve as a blueprint for how to anticipate and overcome the practical challenges of conducting adaptive trials in implementation science.

Methods/design

Overall study design

NUDGE-EHR is a two-stage adaptive randomized pragmatic trial with a “pick-the-winner” design that seeks to identify the optimal EHR tool for reducing the use of benzodiazepines, sedative hypnotics (“Z-drugs”), and anticholinergics among older adults (Fig. 1). In stage 1, primary care providers (PCPs) at a large integrated delivery network have been randomized to one of 15 active intervention arms or usual care. For stage 2, we will randomize (a) stage 1 usual care providers to one of up to the top 5 most promising stage 1 treatment arms or to continued usual care and (b) stage 1 providers in the unselected arms (i.e., those ranked below the top 5) to one of up to the top 5 most promising arms or usual care.

The study is funded by the NIH National Institute on Aging. The trial was approved by the Mass General Brigham institutional review board, which waived informed consent for all subjects. The study is registered with clinicaltrials.gov (NCT04284553, first registered February 26, 2020) and overseen by a Data and Safety Monitoring Board. The authors are solely responsible for the design and conduct of this study and for the drafting and editing of the paper and its final contents.

The trial was designed using Pragmatic Explanatory Continuum Indicator Summary (PRECIS-2) trial guidance and reported using SPIRIT reporting guidelines (full protocol in Supplement 1).

Study setting and subjects

NUDGE-EHR is being conducted at Atrius Health, a delivery network in Massachusetts that uses the Epic® EHR system, the platform used by > 35% of US ambulatory practices and 60% of large hospitals [37]. Atrius employs approximately 220 PCPs at 31 practices who care for approximately 745,000 patients. Eligible study participants include these PCPs (including physicians and PCP-designated nurse practitioners and physician assistants) and their patients.

Providers are eligible if they prescribed a high-risk medication to ≥ 1 older adult in the 180 days before stage 1 assignment. Eligible high-risk medications include benzodiazepines, Z-drugs (e.g., zolpidem), and anticholinergics; these were chosen because clinical guidelines such as Choosing Wisely and the Beers Criteria recommend reducing their use and because they continue to be over-prescribed [38,39,40]. For stage 1, eligible patients of these providers are those aged ≥ 65 years who have been prescribed ≥ 90 pills of a benzodiazepine or Z-drug in the last 180 days, which most guidelines consider “chronic” use [40]. For stage 2, eligibility will be identical to stage 1, except that patients (and their providers) will also be included in secondary analyses if they had been prescribed ≥ 90 pills of eligible anticholinergics in the last 180 days. We included only benzodiazepines and Z-drugs in stage 1 because these medications have similar prescribing patterns and likely show less variation, which improves statistical power. For stage 2, we also included anticholinergics to ensure generalizability to other classes.
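The eligibility rules above can be expressed as a small filter. The following Python sketch is illustrative only: the class labels, the `Fill` and `Patient` records, and the half-open 180-day window are hypothetical choices rather than the trial's data model, and it assumes that the 90-pill threshold is applied separately to each drug class.

```python
from dataclasses import dataclass, field
from datetime import date, timedelta

# Hypothetical class labels; the protocol's actual drug lists are not reproduced here.
STAGE1_CLASSES = frozenset({"benzodiazepine", "z_drug"})
STAGE2_SECONDARY_CLASSES = STAGE1_CLASSES | {"anticholinergic"}
LOOKBACK_DAYS = 180
CHRONIC_PILLS = 90
MIN_AGE = 65


@dataclass
class Fill:
    drug_class: str
    pills: int
    prescribed_on: date


@dataclass
class Patient:
    age: int
    fills: list = field(default_factory=list)


def pills_in_lookback(patient, drug_class, as_of):
    """Total pills of one class prescribed in [as_of - 180 days, as_of) (assumed window)."""
    start = as_of - timedelta(days=LOOKBACK_DAYS)
    return sum(
        f.pills
        for f in patient.fills
        if f.drug_class == drug_class and start <= f.prescribed_on < as_of
    )


def eligible_classes(patient, as_of, classes=STAGE1_CLASSES):
    """Classes for which an older patient meets the >= 90-pill 'chronic use' threshold."""
    if patient.age < MIN_AGE:
        return set()
    return {c for c in classes if pills_in_lookback(patient, c, as_of) >= CHRONIC_PILLS}


def provider_is_eligible(patients, as_of, classes=STAGE1_CLASSES):
    """True if the provider prescribed a high-risk class to >= 1 older adult in the lookback."""
    return any(
        p.age >= MIN_AGE and any(pills_in_lookback(p, c, as_of) > 0 for c in classes)
        for p in patients
    )
```

Passing `STAGE2_SECONDARY_CLASSES` instead of the default reproduces the broader stage 2 secondary-analysis population that also counts anticholinergics.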

Interventions

Our intervention design was based on a thorough review of the peer-reviewed literature and two focus groups with PCPs intended to understand barriers to and facilitators of appropriate prescribing. Based on their effectiveness in other settings, applicability to older adults, and ability to be adapted to EHRs [30, 41,42,43], we selected nine behavioral principles for testing: salience, default bias, social accountability, timing of tools (i.e., an aspect of choice architecture), boostering, “cold-state” priming, simplification, pre-commitment/consistency, and framing (Table 1) [28, 33, 42, 44, 45]. Using these principles, we co-designed and iteratively tested the EHR tools with our multidisciplinary team in the healthcare system.

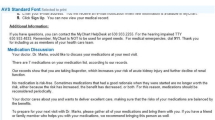

Arms 1 through 14 of stage 1 involve EHR tools that incorporate the selected behavioral science principles (Fig. 2). The central component of each intervention is an enhanced EHR alert (Best Practice Advisory [BPA] in Epic®). This alert provides information about the risks of continued medication use and contains an embedded hyperlink to tips that help providers discuss medication discontinuation with their patients (Supplement 2, eFigure 1). When the alert triggers, it defaults to an order set (a SmartSet in Epic®) that provides alternative treatments, templated patient instructions, and relevant referrals, such as to behavioral health. For the benzodiazepine and Z-drug alerts, the order set also includes tapering algorithms to limit the risk of withdrawal symptoms. These algorithms are customized to the specific drug, dose, and frequency that the patient is taking and include pre-filled directions to pharmacies dispensing the taper (Supplement 2, eFigure 2) as well as customizable, printable instructions for patients about how to gradually taper off these drugs (Fig. 3). The order set also allows providers to order alternative medications, refer patients to a behavioral health specialist, and share instructions with patients about lifestyle modifications to improve symptoms.

Enhanced electronic health record tool modified with behavioral principles that triggers when one of the high-risk medications is ordered. (a) Salience: presenting information about risks impactfully. (b) Defaults: defaulting options to (1) discontinuing the order and (2) opening an order set containing dose tapers, alternatives, and patient instructions. (c) Social accountability: requiring providers to select either “I accept the drug’s risks” or write a free-text response if they do not discontinue the medication or order a taper. (d) Choice architecture: modifying the timing of the tool so that it occurs at different points in the provider workflow.

Arms 1 and 2 are “base” interventions in which the alert fires either when the provider orders a medication (arm 1) or when the provider opens an encounter (arm 2) for an eligible patient during an in-person or telehealth visit; the alert must be addressed before the intended action can be completed (Supplement 2, eFigure 3). Additional principles are incorporated in arms 3–14. In arms 3 and 4, providers have the option of receiving a follow-up “booster” message (Supplement 2, eFigure 4). Arms 5 and 6 incorporate “cold-state” priming, in which providers receive a message in the EHR inbox 2 days before a scheduled visit with an eligible patient. Arms 7 and 8 use simplified language (Supplement 2, eFigure 5), whereas arms 9 and 10 add an alert that fires when the provider approves a medication that has been reordered or refilled by support staff such as a medical assistant (Supplement 2, eFigure 6). Arms 11 and 12 incorporate pre-commitment: providers are first prompted to discuss medication risks with their patient and then, at a subsequent visit, are prompted to actively deprescribe the relevant medication (Supplement 2, eFigure 7). Arms 13 and 14 test framing risks in terms of guidelines and evidence instead of presenting the risks only quantitatively (Supplement 2, eFigure 8).

By contrast, arm 15 is a basic EHR alert meant to mimic the clinical decision support most commonly given to providers today, i.e., without behavioral science principles (Supplement 2, eFigure 9). Providers randomized to usual care (arm 16) receive no new EHR alert. An existing system-generated informational alert currently fires for all providers upon ordering a benzodiazepine; it will fire non-differentially across all arms.
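To make the arm structure concrete, the following Python sketch encodes each arm as 0/1 behavioral-component indicators of the kind that could enter a regression model. It is not the factor coding in Supplement 2, eTable 1: the factor names are hypothetical, and the sketch assumes (the text does not say so) that in each pair from arms 3–14 the odd-numbered arm uses the order-time trigger of arm 1 and the even-numbered arm uses the encounter-open trigger of arm 2.

```python
# Hypothetical labels for the behavioral component added in each arm pair (arms 1-14).
PAIR_COMPONENTS = [
    None,                  # arms 1-2: base alert only
    "booster",             # arms 3-4
    "cold_state_priming",  # arms 5-6
    "simplification",      # arms 7-8
    "refill_alert",        # arms 9-10
    "pre_commitment",      # arms 11-12
    "framing",             # arms 13-14
]
FACTOR_NAMES = ["behavioral_alert", "basic_alert", "encounter_trigger"] + [
    c for c in PAIR_COMPONENTS if c
]
BASIC_ALERT_ARM = 15
USUAL_CARE_ARM = 16


def arm_factors(arm: int) -> dict:
    """Return 0/1 indicators for one arm; all zeros means usual care."""
    if not 1 <= arm <= USUAL_CARE_ARM:
        raise ValueError(f"unknown arm {arm}")
    factors = dict.fromkeys(FACTOR_NAMES, 0)
    if arm == BASIC_ALERT_ARM:
        factors["basic_alert"] = 1
    elif arm < BASIC_ALERT_ARM:
        factors["behavioral_alert"] = 1
        # ASSUMPTION: odd arms fire at ordering (like arm 1), even arms at encounter open (like arm 2).
        factors["encounter_trigger"] = int(arm % 2 == 0)
        component = PAIR_COMPONENTS[(arm - 1) // 2]
        if component is not None:
            factors[component] = 1
    return factors
```

Coding arms this way lets a model estimate a separate effect for each component, rather than only one effect per arm.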

If patients are eligible for multiple classes of interest (e.g., benzodiazepines and Z-drugs), the EHR tools will appear simultaneously. To supplement the two focus groups, we also incorporated feedback from the Internal Medicine Design team and clinical pharmacists at Atrius. Finally, to ensure user-centered design, we conducted pilot testing with several providers not in the trial to assess the usability, feasibility, and appropriate firing of the tools, following Agency for Healthcare Research and Quality principles [46].

Stage 1

Randomization

In stage 1, we stratified the 201 eligible PCPs into 4 blocks (with 50 PCPs in 3 of the blocks and 51 PCPs in the fourth) based on clinical practice size (i.e., number of providers) and baseline rates of high-risk prescribing (i.e., number of eligible patients). Within these blocks, 30 PCPs were randomly allocated using a random number generator to one of 15 active arms, and 20 PCPs were allocated to usual care (in the 4th block, the extra PCP was assigned to usual care). In this way, 120 PCPs were assigned to an active intervention (n = 8 per intervention), and 81 PCPs were assigned to usual care. Providers were randomized using data from March 1 to August 31, 2020, and randomization was performed in September 2020; stage 1 began in October 2020.
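The stage 1 allocation arithmetic (4 blocks × 2 providers per active arm = 8 per arm, with 20 + 20 + 20 + 21 = 81 in usual care) can be checked with a short sketch. This Python version assumes, as a hypothetical simplification, that blocks are formed by sorting providers on practice size and then on number of eligible patients; the protocol does not specify exactly how the blocks were cut.

```python
import random
from collections import Counter

ACTIVE_ARMS = range(1, 16)  # arms 1-15
USUAL_CARE = 16
BLOCK_SIZES = (50, 50, 50, 51)
PER_ARM_PER_BLOCK = 2


def stage1_randomize(providers, seed=None):
    """providers: list of (provider_id, practice_size, n_eligible_patients)."""
    if len(providers) != sum(BLOCK_SIZES):
        raise ValueError("this sketch assumes exactly 201 providers")
    rng = random.Random(seed)
    # ASSUMPTION: strata are formed by sorting on practice size, then baseline eligible patients.
    ordered = sorted(providers, key=lambda p: (p[1], p[2]))
    assignment, start = {}, 0
    for size in BLOCK_SIZES:
        block = ordered[start:start + size]
        start += size
        # Two slots per active arm (30 in all); the remaining 20 or 21 go to usual care.
        slots = [arm for arm in ACTIVE_ARMS for _ in range(PER_ARM_PER_BLOCK)]
        slots += [USUAL_CARE] * (size - len(slots))
        rng.shuffle(slots)
        for (provider_id, _, _), arm in zip(block, slots):
            assignment[provider_id] = arm
    return assignment


if __name__ == "__main__":
    demo = [(f"pcp{i}", i % 7, i % 13) for i in range(201)]  # synthetic providers
    print(Counter(stage1_randomize(demo, seed=1).values()))
```

Shuffling a fixed list of slots within each block, rather than drawing an arm independently for each provider, is what guarantees the exact 8-per-arm and 81-usual-care totals.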

Outcomes

The trial outcomes are shown in Table 2 and will be assessed at the patient level using routinely collected structured EHR data and/or administrative claims data. The primary outcome is a “reduction” in inappropriate prescribing, defined as either discontinuation of one of the medication classes of interest or ordering of a medication taper. If the provider takes either action for a specific patient during the 6-month follow-up, we will classify the patient as having had a reduction in inappropriate prescribing, even if there is an unexpected later escalation. If a patient is eligible for > 1 therapeutic class (e.g., a benzodiazepine and a Z-drug), we will consider the patient to have met the primary outcome if there was a reduction in any of their eligible medications. This primary outcome was chosen because it can be measured rapidly, which facilitates the adaptive trial. In secondary analyses, we will analyze outcomes stratified by the patient’s number of eligible therapeutic classes (i.e., one, two, or three).
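As an illustration of the patient-level outcome logic, the following Python sketch classifies a patient as having a reduction if any qualifying action occurs for any eligible class during follow-up. The `ProviderAction` record, its action labels, and the 182-day approximation of 6 months are assumptions. The `taper_classes` parameter also accommodates the stage 2 secondary definition, in which tapers count only for benzodiazepines and Z-drugs while discontinuation counts for all three classes.

```python
from dataclasses import dataclass

FOLLOW_UP_DAYS = 182  # ASSUMPTION: "6 months" approximated as 182 days
TAPERABLE = frozenset({"benzodiazepine", "z_drug"})


@dataclass(frozen=True)
class ProviderAction:
    drug_class: str
    kind: str  # hypothetical labels: "discontinue", "taper"; any other kind is ignored
    day: int   # days since the patient's follow-up began


def had_reduction(eligible_classes, actions, taper_classes=TAPERABLE):
    """Any discontinuation, or any taper of a taperable class, for any eligible class
    during follow-up counts as a reduction."""
    for a in actions:
        if a.drug_class not in eligible_classes or not 0 <= a.day < FOLLOW_UP_DAYS:
            continue
        if a.kind == "discontinue" or (a.kind == "taper" and a.drug_class in taper_classes):
            return True  # later escalations do not undo the outcome
    return False
```

For the stratified secondary analysis, `len(eligible_classes)` gives the patient's number of eligible therapeutic classes.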

Analysis plan

Six months after stage 1 launch, we will use multivariable regression to determine which behavioral components of the EHR tools (e.g., boostering) are associated with a reduction in inappropriate prescribing (primary outcome) among eligible patients who presented to care. The behavioral components are shown as model factors in Supplement 2, eTable 1. Specifically, we will account for physician-level clustering and multiple patient observations per physician using a generalized linear mixed model for binary outcomes. Based on this analysis, the 15 active arms will be ranked by their observed effect estimates compared with usual care. Sample size estimates are described under stage 2. We will present the study results only at the end of stage 2.
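The ranking step feeds directly into stage 2. The following is a minimal Python sketch of a pick-the-winner selection. It assumes that the stage 1 model yields one log odds ratio per active arm versus usual care and that an arm counts as “promising” only if its estimate is above zero; the protocol does not define a threshold, so `threshold` is a hypothetical parameter.

```python
def pick_winners(effects, max_winners=5, threshold=0.0):
    """effects: {arm: estimated log odds ratio vs usual care}.
    Return up to `max_winners` arms, best first, whose estimate exceeds `threshold`."""
    ranked = sorted(effects.items(), key=lambda item: item[1], reverse=True)
    return [arm for arm, estimate in ranked if estimate > threshold][:max_winners]
```

An empty result corresponds to the scenario in which no arm is promising versus usual care, where the protocol instead combines intervention components.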

Stage 2

Randomization

After the stage 1 analysis, we will randomize the 201 stage 1 providers as follows: (a) stage 1 usual care providers will be randomized to one of up to the top 5 most promising treatment arms or to continued usual care (1:1:1:1:1:1) (Fig. 1); (b) stage 1 providers who were previously in unselected treatment arms (i.e., ranked below the top 5) will be randomized to one of up to the top 5 most promising arms or to usual care (1:1:1:1:1:1); and (c) stage 1 providers who were previously in the most promising arms will be randomly assigned to continue receiving their original treatment or to usual care (1:1) to test holdover/persistency effects. If only 1 to 5 arms are identified as promising, we will choose those for testing in stage 2 and randomize PCPs to them in equal proportions. If none of the arms is promising versus usual care, we will combine intervention components within the arms to enhance effectiveness.
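The three stage 2 allocation rules can be sketched in Python as follows. This is a simplified illustration under stated assumptions: providers are shuffled and dealt round-robin across the options to approximate equal allocation, the stage 2 stratification by clinic size and baseline prescribing is omitted, and the function names are hypothetical.

```python
import random

USUAL_CARE = 16


def _round_robin(provider_ids, options, rng):
    """Shuffle providers, then deal them across options for (near-)equal allocation."""
    ids = sorted(provider_ids)
    rng.shuffle(ids)
    return {pid: options[i % len(options)] for i, pid in enumerate(ids)}


def stage2_randomize(stage1, winners, seed=None):
    """stage1: {provider_id: stage 1 arm}; winners: 1 to 5 selected arm numbers."""
    if not 1 <= len(winners) <= 5:
        raise ValueError("expected 1 to 5 selected arms")
    rng = random.Random(seed)
    options = list(winners) + [USUAL_CARE]
    usual = [p for p, arm in stage1.items() if arm == USUAL_CARE]
    unselected = [p for p, arm in stage1.items() if arm != USUAL_CARE and arm not in winners]
    stage2 = {}
    stage2.update(_round_robin(usual, options, rng))       # group (a)
    stage2.update(_round_robin(unselected, options, rng))  # group (b)
    for arm in winners:                                     # group (c): continue vs usual care, 1:1
        continuing = [p for p, a in stage1.items() if a == arm]
        stage2.update(_round_robin(continuing, [arm, USUAL_CARE], rng))
    return stage2
```

With 81 usual care providers and 5 selected arms, group (a) is spread across 6 options as evenly as possible (three options receive 14 providers and three receive 13).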

In stage 2, for secondary analyses, we will also randomize any additional providers who prescribed an eligible anticholinergic for ≥ 90 days to ≥ 1 older adult in the prior 180 days and include them in the analysis. Based on preliminary data, we expect an additional 10–15 providers. We will again stratify by clinic size and baseline prescribing rates in stage 2. Follow-up will last approximately 8 months, although its exact duration will depend on the average number of observations accrued.

Outcomes

As in stage 1, the primary outcome will be a reduction in inappropriate prescribing defined by discontinuation of high-risk medications or ordering a gradual dose taper. The measurement approach will be the same as for stage 1. In secondary analyses, we will include anticholinergics; the primary outcome for this definition will be a reduction in inappropriate prescribing defined by discontinuation of high-risk medications (benzodiazepines, Z-drugs, or anticholinergics) or ordering a gradual dose taper (benzodiazepines or Z-drugs).

At the end of stage 2, we will also measure other prescribing and clinical outcomes from the EHR including the cumulative number of milligram equivalents of high-risk medications prescribed to patients (secondary outcome). Because claims data are available for a subset of patients whose insurance is in risk-bearing contracts with Atrius, we will also measure tertiary outcomes including the quantity of high-risk medications dispensed in follow-up, measured in pharmacy claims, and the occurrence of clinically significant adverse drug events, specifically diagnoses of sedation, cognitive impairment, and falls or fractures, and all-cause hospitalizations, measured in medical claims data.
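Cumulative milligram equivalents are generally computed by converting each prescription's total milligrams to a common reference drug and summing. The following Python sketch shows only that arithmetic. The drug names and conversion factors are hypothetical placeholders, not clinical equivalence values, and the conversion table used in the trial is not described here.

```python
# HYPOTHETICAL placeholder factors for illustration only; a real analysis would use
# a published equivalence table for each drug.
EQUIVALENCE_FACTORS = {"drug_a": 1.0, "drug_b": 0.5}


def cumulative_mg_equivalents(prescriptions, factors=EQUIVALENCE_FACTORS):
    """prescriptions: iterable of (drug, strength_mg, quantity).
    Raises KeyError for a drug with no factor rather than silently dropping it."""
    return sum(
        strength_mg * quantity * factors[drug]
        for drug, strength_mg, quantity in prescriptions
    )
```

Failing loudly on an unmapped drug is a deliberate choice in this sketch, since silently skipping it would understate a patient's cumulative exposure.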

We will also measure implementation outcomes informed by the RE-AIM and CFIR implementation frameworks to provide insight into the intervention's implementation and scalability to other healthcare settings [25, 47]. These outcomes will include characteristics of providers evaluated in the trial compared with other Atrius providers, the percentage and frequency of decision support firing as intended, the frequency of use of the SmartSet order set, and feedback from clinics and providers about the intervention's acceptability and sustainability after completion of the trial.
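Two of these implementation measures reduce to simple proportions over an alert log. The following Python sketch assumes a hypothetical log of alert “opportunities” in which each record notes whether the rules said the alert should fire, whether it actually fired, and whether the order set was opened; neither the record structure nor the metric names come from the protocol.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AlertOpportunity:
    should_fire: bool      # per the eligibility rules
    fired: bool            # per a (hypothetical) EHR audit log
    smartset_opened: bool  # order set opened from the alert


def implementation_metrics(opportunities):
    """Percent of opportunities where firing matched intent, and order-set use per firing."""
    ops = list(opportunities)
    fired = [o for o in ops if o.fired]
    as_intended = sum(o.fired == o.should_fire for o in ops)
    return {
        "pct_firing_as_intended": 100 * as_intended / len(ops) if ops else float("nan"),
        "smartset_per_firing": (
            sum(o.smartset_opened for o in fired) / len(fired) if fired else float("nan")
        ),
    }
```

Counting both missed firings and spurious firings as deviations from intent is one reasonable reading of “firing as intended”; other definitions are possible.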

Analytic plan

We will analyze the primary outcome using a generalized linear mixed model for binary outcomes to determine whether any of the intervention arms is more effective than usual care. For secondary and tertiary outcomes, we will also use generalized linear mixed models. Primary analyses will be unadjusted; however, if there are strong patient-level predictors that are not balanced by stratified randomization, we will adjust for them. We will not include multiplicity adjustments in our statistical plan.
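One common way to write a generalized linear mixed model for a binary outcome with patients clustered within providers is a random-intercept logistic model. The specification below is a sketch under that assumption, since the protocol does not state the link function or random-effects structure. Here $Y_{ij}$ is the primary outcome for patient $i$ of provider $j$, $A_{kj}$ indicates that provider $j$ was assigned to active arm $k$ (of $K$ arms), and $u_j$ is a provider-level random intercept:

$$
\operatorname{logit} \Pr(Y_{ij} = 1) = \beta_0 + \sum_{k=1}^{K} \beta_k A_{kj} + u_j, \qquad u_j \sim \mathcal{N}(0, \sigma_u^2)
$$

Under this specification, $\exp(\beta_k)$ is the odds ratio for arm $k$ versus usual care, and the random intercept absorbs the correlation among multiple patients of the same provider.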

Sample size

Our null hypothesis is that the rate of the primary outcome (a reduction in prescribing of high-risk medications) in each active intervention arm will not differ from that in usual care. We determined our sample size to have 80% power, assuming that the baseline rate of the composite outcome among usual care physicians is 5% (i.e., that 5% of patients would have a medication discontinued or a taper ordered in follow-up independent of the intervention), that effective interventions will increase the relative rate of deprescribing by 10% (i.e., an odds ratio of 1.10), a type I error of 0.05, and a correlation of 0.3 [11, 18, 48]. We assumed an average cluster size of 20 patients per provider based on baseline data.
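The stated assumptions can be translated into an expected outcome rate and a clustering penalty. Assuming that the “correlation” refers to the intracluster correlation coefficient $\rho$ among patients of the same provider (the text does not say so explicitly), the odds ratio of 1.10 against a baseline rate $p_0 = 0.05$, and the standard design effect for a mean cluster size $m = 20$, work out as:

$$
p_1 = \frac{\mathrm{OR}\, p_0}{1 - p_0 + \mathrm{OR}\, p_0} = \frac{1.10 \times 0.05}{1 - 0.05 + 1.10 \times 0.05} = \frac{0.055}{1.005} \approx 0.0547
$$

$$
\mathrm{DE} = 1 + (m - 1)\rho = 1 + (20 - 1)(0.3) = 6.7
$$

At this low baseline, an odds ratio of 1.10 corresponds to an outcome rate of about 5.47%, close to the 5.5% implied by a 10% relative increase. Under the ICC reading, the design effect means that a sample size computed for individually randomized patients would need to be inflated roughly 6.7-fold to account for clustering.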

Limitations

There are several potential limitations. The trial is being conducted in a single health system. The results may also not be applicable to initial prescribing of these medications or to deprescribing of other high-risk medications. Patients with generalized anxiety or panic disorder or those followed by specialists may also be included, but this would be non-differential across arms. In addition, many of the tools are alert-based; while we hypothesize that incorporating behavioral science principles will reduce the likelihood that providers ignore them, it is possible that the tools could increase alert fatigue [49]. However, the design will more explicitly identify tools that may unnecessarily increase alert fatigue and whether certain nudges work for certain types of providers.

Discussion

Decision support in EHRs has shown promise in reducing high-risk prescribing for older adults yet has not fully met that potential. While EHR strategies are widely used to support the informational needs of providers, these tools have demonstrated only modest effectiveness at improving prescribing [49,50,51,52,53]. In a recent systematic review, 57% of studies found that decision support influenced provider behavior, yet effect sizes were small (i.e., mean change of < 5%), and many studies had a high risk of bias [54]. EHR tools may currently be ineffective in part because of insufficient focus on the factors behind clinical inertia, prescribing behavior, or workflow. To our knowledge, only three trials have specifically studied decision support for inappropriate prescribing in ambulatory older adults, and they found modest effectiveness [20, 21, 52, 55]. Other decision support approaches, such as prescribing defaults, have not, to our knowledge, been applied to inappropriate prescribing in older adults [32, 56]. Compared with adding a medication, discontinuing one may also pose additional behavioral challenges, such as loss aversion and the endowment effect.

Once the trial is completed, these results will also need to be considered in relation to other efforts to reduce inappropriate prescribing in older adults. Limited prior evidence suggests that providing information alone via decision support at the time of a patient encounter may be insufficient to reduce prescribing [20, 21, 55]. Other interventions that are not exclusively provider-facing, such as pharmacist interventions (including medication reviews) and in-person patient education, have also demonstrated some success in reducing inappropriate prescribing, yet these can be more resource intensive and may require actions outside of the typical clinical workflow [11, 21, 57]. As the capabilities of EHRs increase, substantial opportunity remains to improve deprescribing efforts aimed directly at providers, including leveraging different time points in the workflow and presenting different types of tools to facilitate deprescribing [58, 59].

Over the past decades, the behavioral sciences have provided great insight into how to change behaviors by better understanding individuals’ underlying motivations, how they mentally “account” for various options, and the processes necessary for sustained change [44, 45]. These observations and the application of their principles have effectively changed behavior in other settings and are likely applicable to EHR systems to improve the uptake of evidence-based care, such as improving prescribing in older adults [60]. The effectiveness of EHR tools could be enhanced by leveraging principles of behavioral economics and related sciences, but these principles have had very limited application in EHRs and, more specifically, in prescribing for older adults.

Because there are numerous ways these tools could be designed and delivered using behavioral science, we are using an adaptive trial. Adaptive trials are increasingly used in clinical medicine to test interventions with greater efficiency and at a larger scale than traditional parallel-group designs allow [61]. Accordingly, applying adaptive trials to other healthcare interventions, such as in implementation research, could allow faster and more efficient testing [36]. Because implementation research seeks to test how to promote uptake of evidence-based interventions and abandon strategies that are harmful, methods that generate evidence faster are central to the field. Specifically, NUDGE-EHR will determine which components of EHR tools are most impactful at changing provider behavior, which is fundamentally an implementation question. By using a study design that allows for the testing of different EHR implementation strategies, we will provide generalizable evidence both to healthcare practices about specific strategies to use in EHRs and to implementation researchers about the practicalities of testing different delivery strategies simultaneously [62, 63].

Therefore, this overall approach, regardless of ultimate outcomes of the study itself, could be replicated by others to enhance the conduct and evaluation of pragmatic trials in implementation science. NUDGE-EHR will advance our understanding about how behavioral science can optimize clinical decision support to reduce inappropriate prescribing and improve patient health outcomes as well as how to use adaptive trial designs in healthcare delivery and implementation science.

Availability of data and materials

Data sharing is not applicable to this article as no datasets were generated or analyzed during the current study.

Abbreviations

- BPA: Best practice advisory
- EHR: Electronic health record
- NUDGE-EHR: Novel uses of designs to guide provider engagement in electronic health records
- PCP: Primary care provider
- PRECIS-2: Pragmatic explanatory continuum indicator summary
- Z-drug: Sedative hypnotic Z-drug

References

Zhan C, Sangl J, Bierman AS, et al. Potentially inappropriate medication use in the community-dwelling elderly: findings from the 1996 Medical Expenditure Panel Survey. JAMA. 2001;286(22):2823–9.

Zhang YJ, Liu WW, Wang JB, Guo JJ. Potentially inappropriate medication use among older adults in the USA in 2007. Age Ageing. 2011;40(3):398–401.

Guaraldo L, Cano FG, Damasceno GS, Rozenfeld S. Inappropriate medication use among the elderly: a systematic review of administrative databases. BMC Geriatr. 2011;11:79.

Cooper JA, Cadogan CA, Patterson SM, et al. Interventions to improve the appropriate use of polypharmacy in older people: a Cochrane systematic review. BMJ Open. 2015;5(12):e009235.

Rhee TG, Choi YC, Ouellet GM, Ross JS. National prescribing trends for high-risk anticholinergic medications in older adults. J Am Geriatr Soc. 2018;66(7):1382–7.

By the American Geriatrics Society Beers Criteria Update Expert Panel. American Geriatrics Society 2015 updated Beers Criteria for potentially inappropriate medication use in older adults. J Am Geriatr Soc. 2015;63(11):2227–46.

Woolcott JC, Richardson KJ, Wiens MO, et al. Meta-analysis of the impact of 9 medication classes on falls in elderly persons. Arch Intern Med. 2009;169(21):1952–60.

Ham AC, Swart KM, Enneman AW, et al. Medication-related fall incidents in an older, ambulant population: the B-PROOF study. Drugs Aging. 2014;31(12):917–27.

Alldred DP, Kennedy MC, Hughes C, Chen TF, Miller P. Interventions to optimise prescribing for older people in care homes. Cochrane Database Syst Rev. 2016;2:CD009095.

Spinewine A, Schmader KE, Barber N, et al. Appropriate prescribing in elderly people: how well can it be measured and optimised? Lancet. 2007;370(9582):173–84.

Martin P, Tamblyn R, Benedetti A, Ahmed S, Tannenbaum C. Effect of a pharmacist-led educational intervention on inappropriate medication prescriptions in older adults: the D-PRESCRIBE randomized clinical trial. JAMA. 2018;320(18):1889–98.

Hanlon JT, Weinberger M, Samsa GP, et al. A randomized, controlled trial of a clinical pharmacist intervention to improve inappropriate prescribing in elderly outpatients with polypharmacy. Am J Med. 1996;100(4):428–37.

Tannenbaum C, Martin P, Tamblyn R, Benedetti A, Ahmed S. Reduction of inappropriate benzodiazepine prescriptions among older adults through direct patient education: the EMPOWER cluster randomized trial. JAMA Intern Med. 2014;174(6):890–8.

Kua CH, Yeo CYY, Char CWT, et al. Nursing home team-care deprescribing study: a stepped-wedge randomised controlled trial protocol. BMJ Open. 2017;7:e015293.

Wolfstadt JI, Gurwitz JH, Field TS, et al. The effect of computerized physician order entry with clinical decision support on the rates of adverse drug events: a systematic review. J Gen Intern Med. 2008;23(4):451–8.

Mostofian F, Ruban C, Simunovic N, Bhandari M. Changing physician behavior: what works? Am J Manag Care. 2015;21(1):75–84.

Page AT, Clifford RM, Potter K, Schwartz D, Etherton-Beer CD. The feasibility and effect of deprescribing in older adults on mortality and health: a systematic review and meta-analysis. Br J Clin Pharmacol. 2016;82(3):583–623.

Ng BJ, Le Couteur DG, Hilmer SN. Deprescribing benzodiazepines in older patients: impact of interventions targeting physicians, pharmacists, and patients. Drugs Aging. 2018;35(6):493–521.

Thillainadesan J, Gnjidic D, Green S, Hilmer SN. Impact of deprescribing interventions in older hospitalised patients on prescribing and clinical outcomes: a systematic review of randomised trials. Drugs Aging. 2018;35(4):303–19.

Rieckert A, Reeves D, Altiner A, et al. Use of an electronic decision support tool to reduce polypharmacy in elderly people with chronic diseases: cluster randomised controlled trial. BMJ. 2020;369:m1822.

Clyne B, Fitzgerald C, Quinlan A, et al. Interventions to address potentially inappropriate prescribing in community-dwelling older adults: a systematic review of randomized controlled trials. J Am Geriatr Soc. 2016;64(6):1210–22.

Liao JM, Emanuel EJ, Navathe AS. Six health care trends that will reshape the patient-provider dynamic. Healthcare. 2016;4(3):148–50.

Sequist TD. Clinical documentation to improve patient care. Ann Intern Med. 2015;162(4):315–6.

Maddox TM. Clinical decision support in statin prescription-what we can learn from a negative outcome. JAMA Cardiol. 2020.

Gaglio B, Shoup JA, Glasgow RE. The RE-AIM framework: a systematic review of use over time. Am J Public Health. 2013;103(6):e38–46.

Keith RE, Crosson JC, O’Malley AS, Cromp D, Taylor EF. Using the Consolidated Framework for Implementation Research (CFIR) to produce actionable findings: a rapid-cycle evaluation approach to improving implementation. Implement Sci. 2017;12(1):15.

Gong J, Zhang Y, Yang Z, Huang Y, Feng J, Zhang W. The framing effect in medical decision-making: a review of the literature. Psychol Health Med. 2013;18(6):645–53.

Sunstein CR. Nudging: a very short guide. J Consum Policy. 2014;37(4):583–8.

Thaler RH, Benartzi S. Save more tomorrow: using behavioral economics to increase employee saving. J Polit Econ. 2004;112:S164–87.

Keller PA. Affect, framing, and persuasion. J Mark Res. 2003;40(1):54–64.

Keller PA. Enhanced active choice: a new method to motivate behavior change. J Consum Psychol. 2011;21(4):376–83.

Patel MS, Day S, Small DS, et al. Using default options within the electronic health record to increase the prescribing of generic-equivalent medications: a quasi-experimental study. Ann Intern Med. 2014;161(10 Suppl):S44–52.

Meeker D, Linder JA, Fox CR, et al. Effect of behavioral interventions on inappropriate antibiotic prescribing among primary care practices: a randomized clinical trial. JAMA. 2016;315(6):562–70.

Levin IP, Schneider SL, Gaeth GJ. All frames are not created equal: a typology and critical analysis of framing effects. Organ Behav Hum Dec Process. 1998;76(2):149–88.

Bhatt DL, Mehta C. Adaptive designs for clinical trials. N Engl J Med. 2016;375(1):65–74.

Hatfield I, Allison A, Flight L, Julious SA, Dimairo M. Adaptive designs undertaken in clinical research: a review of registered clinical trials. Trials. 2016;17(1):150.

Health Care Professional Health IT Developers [press release]. 2017. https://dashboard.healthit.gov/quickstats/pages/FIG-Vendors-of-EHRs-to-Participating-Professionals.php.

O’Mahony D, O’Sullivan D, Byrne S, O’Connor MN, Ryan C, Gallagher P. STOPP/START criteria for potentially inappropriate prescribing in older people: version 2. Age Ageing. 2015;44(2):213–8.

Kuhn-Thiel AM, Weiss C, Wehling M; FORTA expert panel members. Consensus validation of the FORTA (Fit fOR The Aged) List: a clinical tool for increasing the appropriateness of pharmacotherapy in the elderly. Drugs Aging. 2014;31(2):131–40.

2019 American Geriatrics Society Beers Criteria® Update Expert Panel. American Geriatrics Society 2019 updated AGS Beers Criteria® for potentially inappropriate medication use in older adults. J Am Geriatr Soc. 2019;67(4):674–94.

Yokum D, Lauffenburger JC, Ghazinouri R, Choudhry NK. Letters designed with behavioural science increase influenza vaccination in Medicare beneficiaries. Nat Hum Behav. 2018;2(10):743–9.

Emanuel EJ, Ubel PA, Kessler JB, et al. Using behavioral economics to design physician incentives that deliver high-value care. Ann Intern Med. 2016;164(2):114–9.

Purnell JQ, Thompson T, Kreuter MW, McBride TD. Behavioral economics: “nudging” underserved populations to be screened for cancer. Prev Chronic Dis. 2015;12:E06.

Angner E, Loewenstein G. Behavioral economics. Handb Philos Sci Philos Econ. 2007;13:641–90. https://papers.ssrn.com/sol3/papers.cfm?abstract_id=957148.

Rice T. The behavioral economics of health and health care. Annu Rev Public Health. 2013;34:431–47.

Kim S, Trinidad B, Mikesell L, Aakhus M. Improving prognosis communication for patients facing complex medical treatment: a user-centered design approach. Int J Med Inform. 2020;141:104147.

Kirk MA, Kelley C, Yankey N, Birken SA, Abadie B, Damschroder L. A systematic review of the use of the Consolidated Framework for Implementation Research. Implement Sci. 2016;11:72.

Glynn RJ, Brookhart MA, Stedman M, Avorn J, Solomon DH. Design of cluster-randomized trials of quality improvement interventions aimed at medical care providers. Med Care. 2007;45(10 Suppl 2):S38–43.

Embi PJ, Leonard AC. Evaluating alert fatigue over time to EHR-based clinical trial alerts: findings from a randomized controlled study. J Am Med Inform Assoc. 2012;19(e1):e145–8.

Sequist TD, Morong SM, Marston A, et al. Electronic risk alerts to improve primary care management of chest pain: a randomized, controlled trial. J Gen Intern Med. 2012;27(4):438–44.

Field TS, Rochon P, Lee M, Gavendo L, Baril JL, Gurwitz JH. Computerized clinical decision support during medication ordering for long-term care residents with renal insufficiency. J Am Med Inform Assoc. 2009;16(4):480–5.

Tamblyn R, Huang A, Perreault R, et al. The medical office of the 21st century (MOXXI): effectiveness of computerized decision-making support in reducing inappropriate prescribing in primary care. CMAJ. 2003;169(6):549–56.

Pell JM, Cheung D, Jones MA, Cumbler E. Don’t fuel the fire: decreasing intravenous haloperidol use in high risk patients via a customized electronic alert. J Am Med Inform Assoc. 2014;21(6):1109–12.

Jaspers MW, Smeulers M, Vermeulen H, Peute LW. Effects of clinical decision-support systems on practitioner performance and patient outcomes: a synthesis of high-quality systematic review findings. J Am Med Inform Assoc. 2011;18(3):327–34.

Tamblyn R, Eguale T, Buckeridge DL, et al. The effectiveness of a new generation of computerized drug alerts in reducing the risk of injury from drug side effects: a cluster randomized trial. J Am Med Inform Assoc. 2012;19(4):635–43.

Malhotra S, Cheriff AD, Gossey JT, Cole CL, Kaushal R, Ancker JS. Effects of an e-Prescribing interface redesign on rates of generic drug prescribing: exploiting default options. J Am Med Inform Assoc. 2016;23(5):891–8.

Hanlon JT, Schmader KE. The medication appropriateness index at 20: where it started, where it has been, and where it may be going. Drugs Aging. 2013;30(11):893–900.

Barker PW, Heisey-Grove DM. EHR adoption among ambulatory care teams. Am J Manag Care. 2015;21(12):894–9.

Johnson CM, Johnston D, Crowley PK, et al. EHR usability toolkit: a background report on usability and electronic health records. 2011.

Lessons for health care from behavioral economics. NBER Bulletin on Aging and Health. 2008;(4):1–2. https://www.nber.org/bah/2008no4/lessons-health-care-behavioral-economics.

Spieth PM, Kubasch AS, Penzlin AI, Illigens BM, Barlinn K, Siepmann T. Randomized controlled trials - a matter of design. Neuropsychiatr Dis Treat. 2016;12:1341–9.

Brown CH, Curran G, Palinkas LA, et al. An overview of research and evaluation designs for dissemination and implementation. Annu Rev Public Health. 2017;38:1–22.

Nilsen P. Making sense of implementation theories, models and frameworks. Implement Sci. 2015;10:53.

Acknowledgements

Research reported in this publication was supported by the NIH. The content is solely the responsibility of the authors and does not necessarily represent the official views of the NIH.

Funding

This research was supported by a grant from the National Institute on Aging (NIA) of the National Institutes of Health (NIH) to Brigham and Women’s Hospital (R33AG057388). The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health.

Author information

Contributions

JCL and NKC drafted the manuscript. TI, LT, PK, TR, RJG, TDS, DHK, CPF, EWBC, NH, RAB, MM, and CG drafted and substantially revised it. All authors reviewed the paper and approved the final submitted version.

Ethics declarations

Ethics approval and consent to participate

The trial was approved by the Mass General Brigham institutional review board (IRB), which served as the IRB of record and the single IRB for the trial. The IRB reviewed and approved the protocol and waived informed consent for all subjects (2019P002167). The study is registered with ClinicalTrials.gov (NCT04284553) and is overseen by a Data and Safety Monitoring Board.

Consent for publication

Not applicable

Competing interests

The investigators report no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Additional file 1: Supplement 1

Clinical trial protocol

Additional file 2: Supplement 2

eFigure 1. Provider tapering information and talking points embedded in enhanced alerts.

eFigure 2. SmartSet order set embedded within enhanced alerts.

eFigure 3. Enhanced encounter opening alert used in Arms 2, 6, and 10.

eFigure 4. Arm 3: Enhanced order entry alert + follow-up message.

eFigure 5. Arm 7: Simplified enhanced order entry alert.

eFigure 6. Arms 9 and 10: Sign-off approval alert that is triggered when providers electronically sign off on refill medications ordered by support staff.

eFigure 7. Arm 11: Pre-commitment/consistency alert + enhanced order entry alert.

eFigure 8. Arm 13: Enhanced order entry alert with different risk framing.

eFigure 9. Arm 15: Non-enhanced order entry alert.

eTable 1. Behavioral principles in electronic health record tools tested in the regression model.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Lauffenburger, J.C., Isaac, T., Trippa, L. et al. Rationale and design of the Novel Uses of adaptive Designs to Guide provider Engagement in Electronic Health Records (NUDGE-EHR) pragmatic adaptive randomized trial: a trial protocol. Implementation Sci 16, 9 (2021). https://doi.org/10.1186/s13012-020-01078-9

DOI: https://doi.org/10.1186/s13012-020-01078-9