Abstract

The remarkable developments in computer vision and machine learning have changed the methodologies of many scientific disciplines. They have also created a new research field in wood science called computer vision-based wood identification, which is making steady progress towards the goal of building automated wood identification systems to meet the needs of the wood industry and market. Nevertheless, computer vision-based wood identification is still only a small area in wood science and is still unfamiliar to many wood anatomists. To familiarize wood scientists with the artificial intelligence-assisted wood anatomy and engineering methods, we have reviewed the published mainstream studies that used or developed machine learning procedures. This review could help researchers understand computer vision and machine learning techniques for wood identification and choose appropriate techniques or strategies for their study objectives in wood science.

Background

Every tree has clues that can help with its identification. Leaves, needles, bark, fruits, flowers, and twigs are important features for tree identification. However, most of these features are lost in harvested logs and processed lumber, so anatomical features are used as clues for wood identification. Fortunately, the International Association of Wood Anatomists (IAWA) has published lists of microscopic features for wood identification [1, 2]. These lists are the fruits of the work of wood anatomists and are well established, so they can be used with confidence to identify wood.

Conventional wood identification is performed by visual inspection of physical and anatomical features. In the field, wood identification is performed by observing macroscopic characteristics such as physical features, including color, figure, and luster, as well as macroscopic anatomical structures in cross sections, including size and arrangement of vessels, axial parenchyma cells, and rays [3]. In the laboratory, wood identification is performed by observing various anatomical features microscopically from thin sections cut in three orthogonal directions: cross, radial, and tangential [4]. Wood identification is a demanding task that requires specialized anatomical knowledge because there are huge numbers of tree species, as well as various patterns of inter-species variations and intra-species similarities. Therefore, visual inspection-based identification can result in misidentification through errors of judgment by the worker. Unsurprisingly, this is a major problem at the forefront of industries where large quantities of wood must be identified within a limited time.

The spread of personal computers has triggered a major turning point in wood identification. Wood anatomists have created a new system called computer-assisted wood identification [5] by computerizing the existing card key system [6, 7]. Several computerized key databases and programs have been developed to take advantage of the new system [8,9,10]. Because of the vast biodiversity of wood, the deployed databases generally cover only those species that are native to a country or a specific climatic zone [8, 9, 11, 12]. Although this system has made the identification of uncommon woods easier, traditional visual inspection was preferred for efficiency reasons in the identification of commercial woods [3]. The computer-aided wood identification systems used explicit programming, which required the user to program all the ways in which the software can work. That is, the user had to teach the software all the identification rules, which was never an efficient way because there are so many rules for wood identification. This programmatic nature made it difficult to spread the system globally.

Over time, computer-aided wood identification settled with web-based references such as ‘Inside Wood’ at North Carolina State University [13] and ‘Microscopic identification of Japanese woods’ at Forestry and Forest Products Research Institute (FFPRI), Japan [14]. These are very useful open wood identification systems that cover a wide variety of woods, but require expert knowledge of wood anatomy. As such, there are various obstacles to the further development of computer-aided wood identification, so this is where machine learning (ML) comes in.

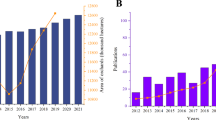

ML is a type of artificial intelligence (AI) where a system can learn and decide exactly what to do from input data alone using predesigned algorithms that do not require explicit instructions from a human [15, 16]. In a well-designed ML model, users no longer have to teach the model the rules for identifying wood, and even wood anatomists are not required to find wood features that are important for identification. Computer vision (CV) is a computer-based system that detects information from images and extracts features that are considered important [17,18,19]. Automated wood identification that combines CV and ML is called computer vision-based wood identification [20, 21]. AI systems based on CV and ML are making great strides in general image classification [22,23,24,25]. The same is true for wood identification and related studies have been increasing [20, 26,27,28,29].

Wood identification is a major concern for tropical countries with abundant forest resources, so there is a high demand for novel wood identification systems to address the wide biodiversity. There are various on-site needs for wood identification, such as preserving endangered species, regulating the trade of illegally harvested timbers, and screening for fraudulent species [30,31,32,33]. However, it is practically impossible to train a sufficient number of field identification workers to meet the demands of the field. Wood identification requires expert knowledge of wood anatomy and long experience, so even if a lot of money and time is spent, there are practical limits to the training of skilled workers [34].

To answer the demands in the field, various approaches have been proposed, such as mass spectrometry [35,36,37], near-infrared spectroscopy [38, 39], stable isotopes [40, 41], and DNA-based methods [42, 43]. However, these approaches have practical limitations as a tool to assist or replace the visual inspection due to their relatively high cost and procedural complexity. This is where CV-based identification techniques and ML models can be very important. Clearly, automated wood identification systems are urgently needed and CV-based wood identification has emerged as a promising system.

In this review, we provide an overview of CV-based wood identification from studies reported to date. CV techniques used in other contexts, such as wood grading, quality evaluation, and defect detection, are outside the scope of this review. This review covers CV-based identification procedures, provides key findings from each process, and introduces the emerging interests in CV-based wood anatomy.

Workflow of CV-based wood identification systems

Image recognition or classification is a major domain in AI and is generally based on supervised learning. Supervised learning is an ML technique that uses pairs of images and their labels as input data [44]. That is, the classification model learns from labeled images to determine classification rules, and then classifies the query data based on the rules. Conversely, in unsupervised learning the model itself discovers unknown information by learning from unlabeled data [45]. Classification is generally a task of supervised learning and clustering is generally a task of unsupervised learning.

CV-based wood identification systems follow the general workflow presented in Fig. 1. Image classification is divided methodologically into conventional ML and deep learning (DL), both of which are forms of AI. In conventional ML, feature extraction, the process of extracting important features from images (also called feature engineering), and classification, the process of learning the extracted features and classifying query images, are performed independently. First, all the images in a dataset are preprocessed using various image processing techniques to convert them into a form that can be used by a particular algorithm to extract features. Then, the dataset is separated into training and test sets, and the features are extracted from the training set images using feature extraction algorithms. A classifier establishes the classification model by learning the extracted features. Finally, the test set images are input to the classification model, which returns the predicted class of each image, thus completing the identification.

In DL, feature extraction and classification are performed in one integrated process [21, 29], which is end-to-end learning using annotated images [46]. The feature learning process using feature engineering techniques in conventional ML usually allows manual intervention by the user but manual intervention is limited in DL. Subsequent sections describe the process from image acquisition to classification, following the workflow of CV-based wood identification.

Image databases

Image acquisition

CV-based wood identification starts with image acquisition. It takes a considerable amount of time and effort to get enough wood samples to build a new dataset. For this reason, most studies have used Xylarium collections [26, 47,48,49,50,51,52]. Most studies only captured cross-sectional images of wood blocks except for a few studies using lumber surface [53] or three orthogonal sections [54, 55]. The surfaces of the blocks are cut with a knife or sanded with sandpapers to clearly reveal the anatomical characteristics. Macroscale images can be captured directly from the wood blocks using a digital camera or stereo microscope. To capture microscale images, meanwhile, microscope slides of wood samples must be prepared through standard procedures leading to softening, cutting, staining, dehydration, and mounting [56].

In image capturing, the quality of the obtained images can vary depending on lighting conditions. Imaging modules equipped with optical systems have been used to control the lighting uniformly [21, 51, 57], and image processing techniques such as filtering were applied to normalize the brightness of the captured images [58,59,60,61]. Digital image processing is covered in the Preprocessing section.

Image type

All wood image types can be used as data for identification. The most commonly used image types are macroscopic images [50, 54, 62,63,64,65], X-ray computed tomographic (CT) images [66, 67], stereograms [20, 21, 26, 68, 69], and micrographs [47, 70,71,72] (Fig. 2a). Macroscopic images are images taken without magnification by a regular digital camera. Stereograms are generally images taken at the hand lens magnification (10×), but higher magnifications may be used depending on the purpose. Micrographs are optical microscopic images and they are commonly used in conventional wood identification. X-ray CT images are a slice of the original images generated by X-ray CT scans.

Macroscopic images and stereograms were preferred in studies aimed at developing field-deployable systems because they were easily obtained only by smoothing the wood surface [49, 51, 57, 70]. Microscopic level features extracted from micrographs allow for an anatomical approach because the image scale is the same as that used in established wood anatomy [48, 73, 74]. X-ray CT images have been used to identify wooden objects with limited sampling, such as registered cultural properties, because of the non-destructive nature of the imaging [66, 67]. In one study, the morphological features of wood cells were extracted from scanning electron microscope images [75].

The extractable or effective image features that are used for wood identification can differ depending on the image type (Fig. 2b), so the most suitable image type for the research purpose and for the wood should be considered carefully. As shown in Fig. 2a, in macroscale images, including macroscopic images and X-ray CT images, large wood cells and cell aggregates such as annual rings, rays, and vessels are observed in the cross-sectional image. Stereograms provide more detailed anatomical characteristics, such as the type of vessel and axial parenchyma cell. Anatomical characteristics observed from the macroscale images are treated as a matter of texture classification (Fig. 2b) because they are represented as the spatial distribution of intensity between adjacent pixels and repetitive patterns [76]. Because macroscopic images and stereograms retain the unique color of wood, the color information was used for wood identification [58, 62, 63, 77, 78]. In micrographs that provide microscopic information such as wood fibers, local features for extracting morphological characteristics of cells were preferred [48, 74, 79], and statistics related to the size, shape, and distribution of cells were also used for identification [27, 59, 61, 80]. In contrast, convolutional neural networks (CNNs) were employed regardless of image type [29, 65, 72, 81].

Databases

A good identification ML model can be built only from a good reference image collection. Wood image data should contain the specific features of each species and provide sufficient scale for the features to be observed. A quantitatively rich database is required so that the ML model can learn the various biological variations that occur within a species.

Published wood image databases constructed for CV-based wood identification are listed in Table 1. CAIRO and FRIM, which contain stereogram images of commercial hardwood species in Malaysia, were among the first to be constructed and are still regularly updated [27, 82]. LignoIndo, which also contains stereogram images, was constructed for the development of a portable wood identification system [83]. It contains images of Indonesian commercial hardwood species.

UFPR, an open image database of Brazilian wood, contains macroscopic images and micrograph datasets. It was established to serve as a benchmark for automated wood identification studies and is accessible from the Federal University of Paraná website [84, 85]. Other publicly available wood image databases are RMCA, which contains micrograph images of Central-African commercial wood species [86], WOOD-AUTH, which contains macroscopic images of Greek wood [87], and the Xylarium Digital Database (XDD), which contains micrograph-based multiple datasets [88, 89] (Table 1). To measure or improve the performances of wood identification models, benchmarks for performance evaluation are essential. These open databases have contributed to the development of CV-based wood identification.

All the databases contain only cross-sectional images, regardless of image type. Barmpoutis et al. [54] compared the discriminative power of three orthogonal sections of wood and reported that the model trained with the cross-sectional image dataset had a higher classification performance than the same model trained with other sections or combinations of them.

Xylarium digital database for wood information science and education

The biggest obstacle currently faced by CV-based wood identification is the absence of large databases. The development of the ImageNet dataset, which contains 14.2 million images across more than 20,000 classes, ended the AI winter [90]. Similarly, large databases are essential for progressing CV-based wood identification. Historically, the construction of large image databases for wood science has always been a challenge [6, 81], mainly because wood images are cumbersome to make and only wood anatomists can annotate the images correctly. Hence, their construction requires extensive collaboration across many organizations in wood science.

A first step in constructing a large database could be the digitization of Xylaria data that is distributed around the world, accompanied by the establishment of standard protocols for image data generation [91]. Once digitization is complete, data sharing and/or integration systems would need to be discussed. Unlike the Xylaria data, a digital herbaria dataset that covers a wide range of biological diversity has already been established [92].

Under these circumstances, the recently released Xylarium Digital Database (XDD) for wood information science and education is notable. XDD is a digitized database based on the wood collection of the Kyoto University Xylarium database. It contains 16 micrograph datasets, covering the widest biological diversity among open digital databases released to date (Table 2). The datasets in XDD have been used in multiple studies [48, 73, 74, 93], and based on the findings of these studies, each wood family was divided into two datasets with two different pixel resolutions. A large digital database built by combining individual databases such as XDD will be an important contribution to the advancement of wood science with state-of-the-art DL techniques beyond CV-based wood identification.

How computer vision processes images

To identify woods, wood anatomists observe the anatomical characteristics of wood tissues such as wood fibers, axial parenchyma cells, vessels, and rays, as well as their size and arrangement from wood sections (Fig. 3a), whereas CV is used to extract features such as points, blobs, corners, and edges, and their patterns from images (Fig. 3b).

For computers, an image is a combination of many pixels and is recognized as a matrix of numbers with each number representing a pixel intensity (Fig. 4). Wood images are made up of various cell types. Different wood species have different patterns of anatomical elements, and the composition of the elements causes distinctions in pixel intensity, arrangement, distribution, and aggregation patterns. Such differences are detected by CV and learned by ML. This is the fundamental concept of CV-based wood identification.

Preprocessing

Image preprocessing is a preliminary step of feature extraction that facilitates extraction of predefined features and reduces computational complexity [94]. Various image preprocessing techniques have been used depending on the problem to be solved.

Simple tasks such as grayscale conversion, image cropping, and scaling are preprocessing techniques commonly used in conventional ML models [26,27,28, 52, 62, 71, 95]. The color of wood is generally not regarded as important information because it is easily changed by various factors. In conventional ML, RGB color images are converted to grayscale images, which provide enough information to recognize species specificity and also significantly reduce computational costs. In contrast, DL models use RGB images without conversion [29, 65, 81, 96].

Cropping is the process of extracting parts of the original image to remove unnecessary areas or to focus on specific areas [29, 65, 96,97,98,99]. Scaling is the process of changing the image size in relation to pixel resolution. High-resolution images have excessive computational costs [100], so the image size needs to be adjusted but remain within the range in which the expected features can be extracted. Information loss should be considered when resizing wood images, and information distortions inevitably occur when changing aspect ratios.
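The grayscale conversion and cropping steps described above can be sketched in a few lines. This is a minimal illustration, not the procedure of any reviewed study: the function names `rgb_to_gray` and `crop` are hypothetical, the image is a toy nested list rather than a real wood image, and the ITU-R BT.601 luma weights are an assumed choice because the source does not state which conversion was used.

```python
def rgb_to_gray(image):
    """Convert an RGB image (rows of (r, g, b) tuples, 0-255) to grayscale."""
    # ITU-R BT.601 luma weights: an assumption, individual studies may differ.
    return [
        [round(0.299 * r + 0.587 * g + 0.114 * b) for (r, g, b) in row]
        for row in image
    ]


def crop(image, top, left, height, width):
    """Return the sub-image of the given size whose upper-left corner is (top, left)."""
    return [row[left:left + width] for row in image[top:top + height]]


# Toy 2 x 3 RGB image standing in for a wood image.
rgb = [
    [(255, 0, 0), (0, 255, 0), (0, 0, 255)],
    [(255, 255, 255), (0, 0, 0), (128, 128, 128)],
]
gray = rgb_to_gray(rgb)          # one intensity value per pixel
patch = crop(gray, 0, 1, 2, 2)   # keep the right-hand 2 x 2 region
```

Converting to a single channel cuts the amount of data per pixel to one third, which is the computational saving mentioned above.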

Homomorphic filtering is a generalized image processing technique that is commonly used for correcting non-uniform illumination. This technique is preferred to normalize the brightness across an image and increase contrast in wood identification [58,59,60,61]. Homomorphic filtering also has the effect of sharpening the image [26, 101], and Gabor filters have also been used for sharpening [102]. Sharpening was used as a preprocessing step to segment notable cells such as vessels [59, 61, 103].

Denoising using a median or adaptive filter has been performed to remove noise or artifacts in images [75, 99, 104], and equalization of the gray level histogram was shown to improve the contrast [26, 75, 101]. For motion blurred images, deconvolution using the Richardson–Lucy algorithm was effective for deblurring [105]. For X-ray CT images, pixel intensities are directly related to wood density, so gray level calibration based on wood density is an important process for predicting physical and mechanical properties as well as for wood identification [67]. By preprocessing images from various sources, image data can be cleaned up and standardized, which reduces data complexity and improves algorithm accuracy.
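As an illustration of gray level histogram equalization, the following sketch remaps pixel intensities through the cumulative histogram so that they span the full gray range. The function name `equalize_histogram` and the toy 2 x 2 image are hypothetical, and this is the textbook form of the technique rather than the exact variant used in the cited studies.

```python
def equalize_histogram(image, levels=256):
    """Spread the gray levels of `image` (rows of ints in [0, levels)) over the full range."""
    pixels = [p for row in image for p in row]
    hist = [0] * levels
    for p in pixels:
        hist[p] += 1

    # Cumulative count of pixels at or below each gray level.
    cdf, running = [], 0
    for count in hist:
        running += count
        cdf.append(running)

    n = len(pixels)
    cdf_min = next(c for c in cdf if c > 0)
    if n == cdf_min:  # constant image: nothing to stretch
        return [row[:] for row in image]

    # Lookup table mapping each old gray level to its equalized value.
    lut = [round(max(c - cdf_min, 0) * (levels - 1) / (n - cdf_min)) for c in cdf]
    return [[lut[p] for p in row] for row in image]


# A low-contrast toy image whose values occupy only a narrow band.
low_contrast = [[100, 110], [120, 130]]
equalized = equalize_histogram(low_contrast)
```

After equalization the four gray levels are spread evenly between 0 and 255, which is the contrast improvement described above.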

Data splitting

When a wood image dataset has been processed and is ready for use, the next step is to split it into subsets. One of the goals of ML is to build a model with high prediction performance for unseen data [106]. Using the data without splitting (training data only) or splitting it only into training and test sets can result in poor prediction for unseen data, because the resulting models fit best only to the anatomical features of the training data or the test data, respectively. Therefore, the most common splitting method is to split the data into training, validation, and test sets.

The training set is used to construct a classification model. The classifier learns the features extracted from the training set images and their labels to build a classification model. The validation set is used to optimize the model by tuning its parameters during model building. The validation set can be specified as an independent set, or a part of the training set can be iteratively selected, as in k-fold cross-validation [107]. Although cross-validation is standard in conventional ML, it is not often used when training a large model in DL because the training itself is computationally expensive. The test set is used to evaluate the performance of the final model built by learning the training set.
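The iterative selection of a validation subset in k-fold cross-validation can be sketched as follows. The generator name `k_fold_indices` is hypothetical, and shuffling is omitted for brevity; in practice the sample order is usually randomized before the folds are formed.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_indices, validation_indices) pairs for k-fold cross-validation."""
    indices = list(range(n_samples))
    # Distribute any remainder over the first folds so sizes differ by at most one.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        validation = indices[start:start + size]
        train = indices[:start] + indices[start + size:]
        yield train, validation
        start += size


# Ten training images split into five folds: each image is used for
# validation exactly once and for training in the other four rounds.
folds = list(k_fold_indices(10, 5))
```

Because the model is retrained once per fold, the cost grows linearly with k, which is why the procedure is less common for large DL models.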

The split ratio for a dataset depends on the data. An 8-to-2 split into training and test sets was preferred, and this can be a good starting point. Guyon [108] suggested that the fraction of data reserved for the validation set should be inversely proportional to the square root of the number of free adjustable parameters, but it should be noted that there is no ideal ratio for splitting a dataset. It is important to find a balance between training and test sets because a small training set can result in high variance of parameter estimates and a small test set can result in high deviations in performance statistics.

In general classification problems, random dataset splitting is considered a good approach [109], but for biological image data such as wood images, especially microscopic scale images, it is not ideal. If multiple images obtained from an individual are divided into training and test sets, the classification model can correctly classify the test images because the model has already learned the characteristics of the individual from the training set, even if the images represent different areas of the sample. In such a case, the classification performance of the model will be high, but this result is caused by overfitting and does not guarantee the generalization performance of the model [44]. In CV-based wood identification, therefore, it is desirable to split a dataset by individual units, not by images, because the splitting process is important in determining the reliability of classification models. Many published studies used random splitting methods [29, 61, 66, 68, 79], but many studies did not provide details of the dataset splitting scheme.
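A split by individual units, as opposed to a split by images, can be sketched as follows. The function `split_by_individual`, the image names, and the individual labels are all hypothetical; the point is only that every image from one wood sample ends up on the same side of the split, so the test set contains individuals the model has never seen.

```python
import random


def split_by_individual(image_ids, individual_ids, test_fraction=0.2, seed=0):
    """Split images so that all images of one individual land in the same subset."""
    individuals = sorted(set(individual_ids))
    rng = random.Random(seed)          # fixed seed keeps the split reproducible
    rng.shuffle(individuals)

    n_test = max(1, round(len(individuals) * test_fraction))
    test_individuals = set(individuals[:n_test])

    train, test = [], []
    for image, individual in zip(image_ids, individual_ids):
        (test if individual in test_individuals else train).append(image)
    return train, test


# Two images from each of five hypothetical wood samples (A to E).
images = ["a1", "a2", "b1", "b2", "c1", "c2", "d1", "d2", "e1", "e2"]
individuals = ["A", "A", "B", "B", "C", "C", "D", "D", "E", "E"]
train, test = split_by_individual(images, individuals)
```

With a random split by image, `a1` could fall in the training set and `a2` in the test set, which is exactly the leakage between subsets that the paragraph above warns against.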

Conventional machine learning

Feature extraction

Image features are the information that is required to perform specific tasks related to CV applications. In general, the features refer to local structures such as points, corners, and edges, and global structures such as patterns, objects, and colors in an image. CV uses a diverse collection of feature extraction algorithms. In wood identification based on conventional ML, different feature types are selected depending on the type of image and classification problem; most of them are texture and local features.

Texture feature

Texture is the visual pattern of an image and it is expressed by the combination and arrangement of image elements. That is, texture is information about the spatial arrangement of pixel intensities in an image, and this information is quantified to obtain the texture feature. Early studies generally approached wood identification as a matter of texture classification because cross sections of wood represent different anatomical arrangements, namely different patterns, depending on the species [20, 26, 82, 101, 110]. Textures are the image features that were most preferred in published studies, and this is closely related to the finding that stereograms and macroscopic images were preferred for developing field-deployable systems [21, 49, 57, 69].

Gray level co-occurrence matrix (GLCM) is a statistical approach for determining the texture of an image by considering the spatial relationship of pixel pairs, and GLCM is the classic and most widely used texture feature in wood identification [20, 26, 28, 66, 69, 98]. The GLCM feature is quantified by statistical methods such as the Haralick texture feature [111,112,113] that takes into account the direction and distance of the co-occurrences of two adjacent pixels. In the early days of CV-based wood identification, Tou et al. [20] reported 72% identification accuracy using five GLCM texture features extracted from 50 images of five tropical wood species. Subsequently, they scaled up their datasets (CAIRO) and studied various GLCM-based identification strategies such as rotation invariant GLCM [110] and multiple feature combinations [62, 114,115,116]. GLCM is a feature that has high computational cost, so one-dimensional GLCM [117] and image blocking have been considered for computational efficiency [97, 98]. Kobayashi et al. [66, 67, 99] constructed identification models trained with GLCM features extracted from X-ray CT images and stereograms and demonstrated that GLCM-based methods were promising for the identification of wood cultural properties.
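To make the GLCM idea concrete, the sketch below counts co-occurrences of gray levels for one pixel offset and derives a few Haralick-style statistics. The functions `glcm` and `glcm_features` are hypothetical names, the 4 x 4 image with four gray levels is a toy example, and correlation is left out to keep the block short. Real studies typically quantize images to a chosen number of gray levels and combine several directions and distances.

```python
import math


def glcm(image, dx=1, dy=0, levels=4, symmetric=True):
    """Normalized gray level co-occurrence matrix for one pixel offset (dx, dy)."""
    counts = [[0] * levels for _ in range(levels)]
    rows, cols = len(image), len(image[0])
    for y in range(rows):
        for x in range(cols):
            y2, x2 = y + dy, x + dx
            if 0 <= y2 < rows and 0 <= x2 < cols:
                i, j = image[y][x], image[y2][x2]
                counts[i][j] += 1
                if symmetric:          # count the pair in both directions
                    counts[j][i] += 1
    total = sum(map(sum, counts))
    return [[c / total for c in row] for row in counts]


def glcm_features(p):
    """Contrast, energy, local homogeneity, and entropy of a normalized GLCM."""
    cells = [(i, j, p[i][j]) for i in range(len(p)) for j in range(len(p))]
    return {
        "contrast": sum(v * (i - j) ** 2 for i, j, v in cells),
        "energy": sum(v ** 2 for _, _, v in cells),  # sum of squared entries
        "homogeneity": sum(v / (1 + (i - j) ** 2) for i, j, v in cells),
        "entropy": -sum(v * math.log2(v) for _, _, v in cells if v > 0),
    }


# Toy image with gray levels 0 to 3; offset (1, 0) pairs each pixel with its right neighbor.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 2, 2, 2],
    [2, 2, 3, 3],
]
P = glcm(image)
features = glcm_features(P)
```

Each statistic reduces the whole matrix to a single number, so an image is described by only a handful of values per offset.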

To reduce the computational cost and improve the classification performance, the basic gray level aura matrix (BGLAM) was proposed [118]. This is the basis of the gray level aura matrix (GLAM) [119] developed to overcome the limitation of GLCM that it cannot contain information about the interaction between gray level sets in textures with large scale structure [120]. BGLAM is characterized by the co-occurrence probability distribution of gray levels in all possible displacement configurations and it has been actively used for wood identification [27, 28, 59, 61]. Zamri et al. [60] classified 52 species in the FRIM database using the improved-BGLAM algorithm, which realizes feature dimension reduction and rotational invariance from BGLAM, and the classification performance of their model using the improved-BGLAM far exceeded that using GLCM.

Local binary pattern (LBP) is a simple but efficient visual descriptor for representing image texture. LBP calculates the local texture of an image by comparing the value of a center pixel with those of the surrounding pixels in the grayscale image [121]. Nasirzadeh et al. [82] compared the performance of models trained with LBP-based features extracted from the CAIRO database and found that the LBP histogram Fourier features outperformed the conventional rotation-invariant LBP. Martins et al. [47] published their micrograph-based UFPR database and reported a 79% recognition rate for a classification model trained with LBP. In the same study, they also showed that when the original image was divided into sub-regions, the recognition rate of the model trained with LBP improved to 86%.
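The center-versus-neighbor comparison behind LBP can be sketched as follows. This is the basic 8-neighbor form with a radius of one pixel; the function name `lbp_histogram`, the bit ordering of the neighbors, and the toy 3 x 3 images are assumptions for illustration, and the rotation-invariant and Fourier variants mentioned above are not shown.

```python
def lbp_histogram(image):
    """256-bin histogram of basic 8-neighbor LBP codes over the interior pixels."""
    # Neighbor offsets (dy, dx), clockwise from the top-left pixel.
    # The starting point and direction are a convention, not part of the method.
    offsets = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]
    hist = [0] * 256
    for y in range(1, len(image) - 1):
        for x in range(1, len(image[0]) - 1):
            center = image[y][x]
            code = 0
            for bit, (dy, dx) in enumerate(offsets):
                # A neighbor at least as bright as the center sets its bit to 1.
                if image[y + dy][x + dx] >= center:
                    code |= 1 << bit
            hist[code] += 1
    return hist


flat = [[5, 5, 5], [5, 5, 5], [5, 5, 5]]    # uniform region: all bits set
peak = [[1, 1, 1], [1, 9, 1], [1, 1, 1]]    # bright spot: no bits set
corner = [[9, 1, 1], [1, 5, 1], [1, 1, 1]]  # only the top-left neighbor is brighter

flat_hist = lbp_histogram(flat)
peak_hist = lbp_histogram(peak)
corner_hist = lbp_histogram(corner)
```

Eight binary comparisons give 2^8 = 256 possible codes, which is where the 256-bin histogram of the basic descriptor comes from.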

In comparative studies of GLCM and LBP, classification models trained with LBP always achieved better performances than those trained with GLCM for all image types (Table 3). While GLCM quantifies an image with only one value per texture feature, LBP represents an image with a 256-bin histogram, although it contains many overlapping patterns. Conners and Harlow [112] demonstrated that among the 14 measures proposed by Haralick et al. [111] for GLCM texture feature extraction, only five of them (energy, entropy, correlation, local homogeneity, and contrast) were sufficient for texture classification. That is, GLCM required a lower dimension of feature vectors for texture description than LBP. In addition, LBP histograms can identify local patterns such as edges, flats, and corners, which may explain why LBP outperformed GLCM in wood identification. It has been pointed out that it may be difficult to describe large wood cells, such as vessels and resin canals, using LBP [95], but this problem can be solved by adjusting the radius of the LBP unit and combining units with different radii.

Local phase quantization (LPQ) is an algorithm for extracting blur insensitive textures using Fourier phase information. As shown in Table 3, LPQ was applied primarily to micrographs, where it performed better than GLCM and LBP [71, 95, 115, 122]. One study has investigated LPQ on macroscopic scale image datasets, but the discriminative power of LPQ was lower than that of LBP in a comparative study using the UFPR macroscopic image dataset [62].

In addition to the textures described above, other texture features such as higher-order local autocorrelation (HLAC) and Gabor filter-based features have been used for wood classification, and because of their high classification accuracy they have proved to be promising feature extractors for wood identification [68, 101, 123,124,125]. Texture fusion strategies for different types of texture features have always been superior to a single feature set in terms of classification accuracy [28, 80, 115].

Local feature

In contrast to textures, local features are distinct structural elements such as points, corners, and edges in an image. The biggest difference between local features and textures is that textures are descriptors that describe an image as a whole, whereas local features describe interesting or important local regions called keypoints. That is, the texture feature is an image descriptor and the local feature is a keypoint descriptor. Local feature extraction thus consists of two major processes, feature detection and feature description. In general, local features are better at handling image rotation, scale, and affine changes [18, 19].

Scale-invariant feature transform (SIFT), developed by Lowe in 2004 [18], is a local feature extraction algorithm that has been the benchmark for local feature collection in CV since its introduction. SIFT detects blobs, corners, and edges as keypoints in an image and represents local regions of the image as 128-dimensional vectors calculated based on the gradient orientations of the pixels around each keypoint.

Most of the local features used for wood identification have been collected using SIFT [48, 54, 73, 79, 93]. Hwang et al. [73] investigated the discriminative power of SIFT for image resolution using the XDD Lauraceae micrograph dataset. Taking into account the identification accuracy and the computational cost, they reported that a pixel resolution of about 3 µm was appropriate for wood identification. If the image was shrunk beyond the 3-µm pixel resolution, the accuracy was greatly reduced because information about the wood fibers was lost. However, from an identification study of Fagaceae species, Kobayashi et al. [48] reported that a satisfactory identification performance could be obtained at a pixel resolution of 4.4 µm, even though some of the wood fiber information was lost.

Hwang et al. [74] compared the wood identification performance of models trained with well-known local feature extraction algorithms: SIFT, speeded up robust features (SURF) [19], oriented features from accelerated segment test (FAST) and rotated binary robust independent elementary features (BRIEF) (ORB) [126], and accelerated-KAZE (AKAZE) [127]. Without considering the computational cost, SIFT had the highest discriminative power among the algorithms tested (Table 4). Visualization of the features extracted by each algorithm confirmed that SIFT detected cell corners more effectively than the other algorithms. Hwang et al. [74] noted that in cross-sectional images of wood, the cell corner is an important feature for identification because it contains information about the mode of aggregation of different cell elements. The superiority of SIFT appears to stem from the fact that it was designed to detect corners.

In comparative studies of local features and textures (Table 4), SIFT and SURF had higher discriminative power than GLCM and LBP, whereas LPQ had discriminative power similar to that of the local features [47, 128]. Histograms of oriented gradients (HOG) [129] are descriptors that represent a local region of an image, and they have been used to classify macroscopic image datasets [130].

Describing a whole image using only local features can limit the classification of images with complex structural elements. To solve this problem, a codebook-based framework has been used to quantify the extracted features. The bag-of-features (BOF) model, which uses codewords generated by the clustering of extracted features, is an effective method of quantifying features to represent an image [131]. The BOF model trained with codewords converted from SIFT descriptors produced higher classification performance than the model trained with SIFT features intact [93]. Even for a macro image dataset, the SIFT-based BOF model outperformed the models trained with texture features [128].
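
The encoding step of a BOF model can be sketched as follows. This is a minimal illustration under stated assumptions: the codebook is given directly rather than learned by clustering, the descriptors are 2-dimensional toy values instead of 128-dimensional SIFT vectors, and all names are hypothetical.

```python
def nearest_codeword(descriptor, codebook):
    """Return the index of the codeword closest to the descriptor (squared Euclidean distance)."""
    distances = [sum((d - c) ** 2 for d, c in zip(descriptor, word)) for word in codebook]
    return distances.index(min(distances))

def bof_histogram(descriptors, codebook):
    """Encode an image as a normalized histogram of codeword occurrences."""
    counts = [0] * len(codebook)
    for descriptor in descriptors:
        counts[nearest_codeword(descriptor, codebook)] += 1
    total = sum(counts)
    return [c / total for c in counts] if total else [0.0] * len(codebook)

# Hypothetical 3-word codebook; in practice it would come from clustering
# the descriptors of the training images.
codebook = [[0.0, 0.0], [1.0, 1.0], [5.0, 5.0]]
image_descriptors = [[0.1, 0.0], [0.9, 1.2], [1.1, 0.8], [4.8, 5.1]]
histogram = bof_histogram(image_descriptors, codebook)
print(histogram)  # [0.25, 0.5, 0.25]
```

Every image is reduced to a fixed-length histogram regardless of how many keypoints it contains, which is what allows a standard classifier to be trained on the result.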

Other features

In addition to the features described above, other feature types such as color and anatomical statistic features have been used for hardwood identification. Such features were used mainly in combination with other types of features because their discriminative power as a single feature set was relatively inadequate, and multiple feature set strategies that combined different types of features produced improved results for identification accuracy [58, 62, 77].

Color is the most intuitive feature for human vision, but it is unstable as a feature. Wood color varies not only with environmental factors such as tree growth conditions and atmospheric exposure time, but also within an individual tree, for example between heartwood and sapwood and between earlywood and latewood. Wood identification has been carried out based on color differences between species, but large intra-species color variations are a major obstacle. Zhao et al. [63, 78] proposed novel color recognition systems that efficiently distinguished between intra- and inter-species color variations using an improved snake model [132] and an active shape model [133] with a two-dimensional image measurement machine. They also built a classification model that outperformed their previous models using a fusion scheme of color, texture, and spectral features [116]. Color features have primarily been used in combination with textures as part of macro-level multi-feature sets to improve discrimination [58, 62, 77, 134].

Using image segmentation techniques, anatomical statistical features such as the shape, size, number, and distribution of specific wood cells can be extracted from a cross-sectional image. The vessel is the most characteristic anatomical element in macroscale images, so it was particularly preferred for statistical feature extraction. Yusof et al. [28, 59] extracted the statistical properties of pore distribution (SPPD) from the FRIM database and used them as features. Pre-classification of the SPPD features based on fuzzy logic [135] improved the accuracy of the identification system and reduced the processing time. Other similar studies have successfully used statistical features to identify wood [61, 80, 103].

Dimensionality reduction and feature selection

Large numbers of extracted features reduce the computational efficiency of classification models, so it is important to find a balance between classification accuracy and computational cost. Principal component analysis (PCA) [136] and linear discriminant analysis (LDA) [107] are representative methods for dimensionality reduction of data. da Silva et al. [71] reduced the dimensionality of LPQ and LBP features extracted from the RMCA database using PCA and LDA. Their classification model produced promising results, even though the feature data were significantly reduced. Kobayashi et al. [48] reduced the 128-dimensional SIFT feature vectors extracted from Fagaceae micrographs to 17 dimensions using LDA, and the wood identification using the reduced feature set was quite accurate.
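
As a rough illustration of dimensionality reduction, the sketch below estimates only the first principal component by power iteration on the covariance matrix and projects each feature vector onto it. This is an assumption made to keep the example dependency-free; PCA is normally computed with an eigendecomposition or singular value decomposition from a numerical library, and the toy feature values are hypothetical.

```python
import math

def covariance_matrix(rows):
    """Sample covariance matrix and column means of row-wise observations."""
    n, d = len(rows), len(rows[0])
    means = [sum(r[j] for r in rows) / n for j in range(d)]
    centered = [[r[j] - means[j] for j in range(d)] for r in rows]
    cov = [[sum(c[i] * c[j] for c in centered) / (n - 1) for j in range(d)]
           for i in range(d)]
    return cov, means

def first_principal_component(rows, iterations=200):
    """Approximate the leading eigenvector of the covariance matrix by power iteration."""
    cov, means = covariance_matrix(rows)
    d = len(cov)
    v = [1.0 / math.sqrt(d)] * d
    for _ in range(iterations):
        w = [sum(cov[i][j] * v[j] for j in range(d)) for i in range(d)]
        norm = math.sqrt(sum(x * x for x in w))
        if norm == 0:
            break
        v = [x / norm for x in w]
    return v, means

def project(row, component, means):
    """Project one centered feature vector onto the component (a 1-D reduced feature)."""
    return sum((x - m) * c for x, m, c in zip(row, means, component))

# Hypothetical 3-D features that vary mostly along one direction.
features = [[1.0, 2.0, 0.0], [2.0, 4.1, 0.1], [3.0, 5.9, 0.0], [4.0, 8.0, 0.1]]
pc1, means = first_principal_component(features)
reduced = [project(r, pc1, means) for r in features]
print(reduced)
```

The three original features collapse to a single value per sample while preserving the ordering of the samples along the dominant direction of variation.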

Whereas dimensionality reduction converts image features into new numerical features, feature selection takes the essence of the features without converting them. The genetic algorithm (GA) is an engineering model that borrows from the biological genetic and evolution mechanisms. The GA finds a better solution by repeating the cycle of feature selection, crossover, mutation, evaluation, and update [137]. Khairuddin et al. [138] applied the GA to texture features extracted from the FRIM database and used the selected features to train their model. They found that the model performance was 10% more accurate than the same model in a previous study [26], even though the GA reduced the dimensionality of the training data by half. Yusof et al. [28] further improved the feature selection by combining the GA with kernel discriminant analysis. Another feature selection algorithm is the Boruta algorithm, which selects key variables based on Z-score using random forest [139].
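
The GA cycle of selection, crossover, mutation, evaluation, and update can be sketched as below. This is a generic illustration, not the procedure of the cited studies: the fitness function is a hypothetical stand-in (in practice it would be something like cross-validated accuracy of a classifier trained on the selected features), and the population size, mutation rate, and other parameters are arbitrary assumptions.

```python
import random

def genetic_feature_selection(n_features, fitness, pop_size=20, generations=30,
                              mutation_rate=0.05, seed=0):
    """Search for a binary feature mask that maximizes fitness(mask)."""
    rng = random.Random(seed)
    population = [[rng.randint(0, 1) for _ in range(n_features)]
                  for _ in range(pop_size)]
    best_history = []
    for _ in range(generations):
        # Evaluation: rank masks by fitness, best first.
        population.sort(key=fitness, reverse=True)
        best_history.append(fitness(population[0]))
        # Selection: keep the better half unchanged as parents (elitism).
        parents = population[: pop_size // 2]
        children = []
        while len(parents) + len(children) < pop_size:
            a, b = rng.sample(parents, 2)
            cut = rng.randrange(1, n_features)       # one-point crossover
            child = a[:cut] + b[cut:]
            # Mutation: flip each bit with a small probability.
            child = [1 - g if rng.random() < mutation_rate else g for g in child]
            children.append(child)
        population = parents + children              # update
    population.sort(key=fitness, reverse=True)
    best_history.append(fitness(population[0]))
    return population[0], best_history

# Hypothetical fitness: features 0-4 are "informative" (+1 each) and every
# selected feature costs 0.2, mimicking accuracy minus a size penalty.
def toy_fitness(mask):
    return sum(mask[:5]) - 0.2 * sum(mask)

best_mask, history = genetic_feature_selection(10, toy_fitness)
print(best_mask, history[-1])
```

Because the parents are carried over unchanged, the best fitness in the population never decreases from one generation to the next.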

Classification

Classifiers create classification models by learning the features extracted from a training set and establishing classification rules. The training phase ends with the implementation of the classification model. In the test phase, image features are extracted from the test set through the same processes as the training set. The features are then entered into the classification model and the model classifies each image, which completes the classification of the test set.

The three most preferred classifiers in the wood identification studies were k-nearest neighbors (k-NN) [140], support vector machine (SVM) [141], and artificial neural network (ANN) [142, 143]. k-NN is the simplest ML algorithm; it simply stores the training data and defers all computation to the classification of query data. To classify a query, the algorithm finds the k data points in the training dataset that are closest to the query point based on the Euclidean distance [140]. Minimum distance-based classifiers [140, 144] such as k-NN work best on small datasets because the amount of required system space increases exponentially as the number of input features increases [145].
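
A minimal k-NN classifier using the Euclidean distance and a majority vote could look like the following sketch. The 2-dimensional feature vectors and the labels `species_A` and `species_B` are hypothetical.

```python
import math
from collections import Counter

def knn_predict(train_features, train_labels, query, k=3):
    """Classify `query` by majority vote among its k nearest training points."""
    distances = [(math.dist(x, query), label)
                 for x, label in zip(train_features, train_labels)]
    distances.sort(key=lambda pair: pair[0])
    votes = Counter(label for _, label in distances[:k])
    return votes.most_common(1)[0][0]

# Hypothetical training set: two well-separated groups of feature vectors.
train_features = [[1.0, 1.0], [1.2, 0.8], [0.9, 1.1],
                  [5.0, 5.0], [5.2, 4.9], [4.8, 5.1]]
train_labels = ["species_A"] * 3 + ["species_B"] * 3

print(knn_predict(train_features, train_labels, [1.1, 1.0]))  # species_A
print(knn_predict(train_features, train_labels, [5.0, 5.1]))  # species_B
```

No model is built in advance: the whole training set is kept and scanned for every query.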

SVM is an algorithm that classifies data points in N-dimensional space by finding a hyperplane with the maximum margin between classes of data points [141]. Basically, SVM is a linear model, but combining it with kernel methods enables nonlinear classification by mapping data into higher dimensional feature spaces [146]. SVM requires less space than k-NN because it learns the training data and builds a classification model in advance. SVM has been shown to outperform k-NN for wood identification [68, 73, 128, 147, 148]; in most studies that compared the two classifiers under the same classification strategy, SVM performed better (Table 5). Like other ML algorithms, SVM is very sensitive to its parameters, in particular gamma (or sigma), the Gaussian kernel parameter for nonlinear classification, and cost (C), which controls the penalty for misclassification of the training data [149]. Parameter optimization using techniques such as grid search or GA is therefore essential [150, 151].
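
The sketch below illustrates the Gaussian kernel and an exhaustive grid search over C and gamma. It does not train an SVM: `toy_cv_accuracy` is a hypothetical stand-in for the cross-validated accuracy that a real SVM implementation would report for each parameter pair, and the candidate values are arbitrary.

```python
import math
from itertools import product

def rbf_kernel(x, y, gamma):
    """Gaussian (RBF) kernel: exp(-gamma * ||x - y||^2)."""
    return math.exp(-gamma * sum((a - b) ** 2 for a, b in zip(x, y)))

def grid_search(score_fn, costs, gammas):
    """Evaluate every (C, gamma) pair and return the best pair and its score."""
    best_params, best_score = None, float("-inf")
    for cost, gamma in product(costs, gammas):
        score = score_fn(cost, gamma)
        if score > best_score:
            best_params, best_score = (cost, gamma), score
    return best_params, best_score

# Hypothetical stand-in for cross-validated accuracy, peaking at C=10, gamma=0.01.
def toy_cv_accuracy(cost, gamma):
    return 1.0 - 0.1 * abs(math.log10(cost) - 1) - 0.1 * abs(math.log10(gamma) + 2)

costs = [0.1, 1, 10, 100]
gammas = [0.001, 0.01, 0.1, 1]
best_params, best_score = grid_search(toy_cv_accuracy, costs, gammas)
print(best_params)  # (10, 0.01)

# A larger gamma makes the kernel more local: similarity decays faster with distance.
print(rbf_kernel([0.0, 0.0], [1.0, 1.0], 0.1) > rbf_kernel([0.0, 0.0], [1.0, 1.0], 1.0))  # True
```

Candidate values are usually spaced logarithmically, as here, because suitable values of C and gamma can differ by orders of magnitude between datasets.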

The ANN algorithm mimics the learning process of the human brain and is the foundation of DL, which is the mainstream of modern computer science [152, 153, 154, 155]. This algorithm has produced state-of-the-art performance as a classifier in wood identification as well as in various other classification problems. Regardless of the type of image and feature, ANN performed better than k-NN and SVM (Table 5). The relationships among wood features are nonlinear, and ANN is able to learn such complicated nonlinear relationships [156]. In addition, ANNs are resilient in the face of noise and unintended features in the data [106]. ANNs are trained by backpropagation, which refines the model by updating the weights through propagation of the prediction error to previous layers [157]. These characteristics make ANN suitable for wood identification. ANN has been used with large datasets [72, 97, 128], which supports the use of DL methods such as CNN that are able to build more sophisticated models with larger amounts of data [53, 55, 64, 96].

Although ANN is a good classifier, there is no single optimal classifier for wood identification. For new datasets, it is recommended to start with a simple model such as k-NN to develop an understanding of the data characteristics before moving to a more complex model such as SVM or ANN [158]. Depending on the purpose, ensembles of multiple classifiers may also be considered [53, 64, 93].

Deep learning

Convolutional neural networks

Convolutional neural networks (CNN) were first introduced to process images more effectively by applying filtering techniques to artificial neural networks [159]. Afterward, a modern CNN framework for DL was proposed by LeCun et al. [17]. Typical CNN architecture is shown in Fig. 5. There are many layers between input and output. Convolution and pooling layers extract features from images, and fully-connected layers are neural networks that learn features and classify images. In a convolution layer, a feature map is generated by applying a convolution filter to the input image. In a pooling layer, only the important information is extracted from the feature map and used as input to the next convolution unit. Convolution filters can start with very simple features, such as edges, and evolve into more specific features of objects, such as shapes [46]. Features extracted from the convolution and pooling layers are passed to the fully-connected layers and then classification is performed by a deep neural network.
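
The convolution and pooling steps can be made concrete with a small sketch. The filter here is a fixed, hand-written vertical edge detector and the image is a hypothetical 6 × 6 array; in a CNN the filter values are learned during training, and a rectified linear unit (ReLU) is assumed as the activation.

```python
def convolve2d(image, kernel):
    """Valid-mode 2-D filtering (cross-correlation, as used in CNN layers)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [[sum(image[y + i][x + j] * kernel[i][j]
                 for i in range(kh) for j in range(kw))
             for x in range(out_w)] for y in range(out_h)]

def relu(feature_map):
    """Set negative responses to zero."""
    return [[max(0.0, v) for v in row] for row in feature_map]

def max_pool(feature_map, size=2):
    """Non-overlapping max pooling; rows/columns that do not fill a window are dropped."""
    h, w = len(feature_map) // size, len(feature_map[0]) // size
    return [[max(feature_map[y * size + i][x * size + j]
                 for i in range(size) for j in range(size))
             for x in range(w)] for y in range(h)]

# Hypothetical 6x6 image with a vertical edge between columns 2 and 3.
image = [[0.0, 0.0, 0.0, 1.0, 1.0, 1.0] for _ in range(6)]
vertical_edge = [[-1.0, 0.0, 1.0]] * 3

feature_map = relu(convolve2d(image, vertical_edge))  # 4x4, rows of [0, 3, 3, 0]
pooled = max_pool(feature_map)                         # 2x2
print(pooled)  # [[3.0, 3.0], [3.0, 3.0]]
```

The feature map responds only where the filter overlaps the edge, and pooling keeps the strongest response in each 2 × 2 window while halving the spatial resolution.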

A deep neural network is composed of several layers stacked in a row. Each layer has units that are connected by weights to the units of the previous layer. The neural network finds the combinations of weights for each layer needed to make an accurate prediction. The process of finding the weights is called training the network. During the training process, a batch of images (the entire dataset or an equal-sized subset of it) is passed to the network and the output is compared to the answer. The prediction error propagates backward through the network and the weights are modified to improve prediction [157]. Each unit gradually becomes equipped with the ability to distinguish certain features and ultimately helps to make better predictions [46].
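
The training loop described above can be sketched with a very small network. This is an illustrative toy under stated assumptions (one hidden layer, sigmoid units, cross-entropy loss, full-batch gradient descent, and a four-sample logical-OR dataset); the function names and hyperparameters are hypothetical and far simpler than anything used for image classification.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def train(samples, targets, hidden=3, epochs=500, lr=0.5, seed=0):
    """Train a one-hidden-layer network with full-batch gradient descent."""
    rng = random.Random(seed)
    n_in, n = len(samples[0]), len(samples)
    w1 = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(hidden)]
    b1 = [0.0] * hidden
    w2 = [rng.uniform(-0.5, 0.5) for _ in range(hidden)]
    b2 = 0.0
    losses = []
    for _ in range(epochs):
        gw1 = [[0.0] * n_in for _ in range(hidden)]
        gb1, gw2, gb2, loss = [0.0] * hidden, [0.0] * hidden, 0.0, 0.0
        for x, t in zip(samples, targets):
            # Forward pass: input -> hidden layer -> output unit.
            h = [sigmoid(sum(w * xi for w, xi in zip(w1[j], x)) + b1[j])
                 for j in range(hidden)]
            y = sigmoid(sum(w * hj for w, hj in zip(w2, h)) + b2)
            loss += -(t * math.log(y) + (1 - t) * math.log(1 - y))
            # Backward pass: the output error is propagated to the hidden layer.
            delta_out = y - t
            gb2 += delta_out
            for j in range(hidden):
                delta_h = delta_out * w2[j] * h[j] * (1 - h[j])
                gw2[j] += delta_out * h[j]
                gb1[j] += delta_h
                for i in range(n_in):
                    gw1[j][i] += delta_h * x[i]
        losses.append(loss / n)
        # Weight update: step against the averaged gradient.
        b2 -= lr * gb2 / n
        for j in range(hidden):
            w2[j] -= lr * gw2[j] / n
            b1[j] -= lr * gb1[j] / n
            for i in range(n_in):
                w1[j][i] -= lr * gw1[j][i] / n
    return losses

# Toy dataset: logical OR of two binary inputs.
samples = [[0.0, 0.0], [0.0, 1.0], [1.0, 0.0], [1.0, 1.0]]
targets = [0, 1, 1, 1]
losses = train(samples, targets)
print(losses[0], losses[-1])
```

The recorded loss falls over the epochs as the weights are adjusted, which is the behavior the paragraph above describes as the network learning to make better predictions.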

CNN in wood identification

Table 6 lists wood identification studies using CNN models. All of these studies were reported within the last decade, and the pace of publication is accelerating. Hafemann et al. [29] used a CNN model combined with an image patch extraction strategy to classify macroscopic image and micrograph datasets in the UFPR database. The CNN model outperformed models trained with texture features. Notably, their CNN model was designed with only two convolution units. Kwon et al. [64, 96] successfully classified six Korean softwood species using the CNN-based models LeNet [17] and mini VGGNET [160], which have been successful in general image classification, and their ensemble models. Oliveira et al. [161] developed wood identification software based on CNN models trained with the UFPR database. The architecture of their CNN models was not disclosed, but the model that they chose as the basis for the software achieved the best performance in studies of both macroscopic and micrograph datasets that used the UFPR database as a benchmark.

CNNs generally require large databases. However, large wood image databases with correct labels are quite difficult to obtain. To achieve competitive performance, CNN-based models are known to require a database at least 10 times larger than that required for feature engineering-based methods [91]. Therefore, transfer learning was introduced as a network training method for small databases [162]. Transfer learning provides a path to building competitive models with a moderate amount of data by leveraging networks pre-trained on the ImageNet dataset [163].

Ravindran et al. [81] classified 10 neotropical species with high accuracy using the VGG16 [160] model with transfer learning. Tang et al. [49] developed a smartphone-based portable macroscopic wood identification system based on the SqueezeNet [164] model. In a comparative study of DL and conventional ML models [55], the CNN-based models Inception-v3 [165], SqueezeNet [164], ResNet [25], and DenseNet [166] all achieved better performance than k-NN models trained with LBP or LPQ features. Lens et al. [72] also reported that VGG16 and ResNet101 models had better classification performance for the UFPR dataset than models trained with texture features. The CNN with residual connections proposed by Fabijańska et al. [65] identified 14 European trees better than other popular CNN architectures.

Field-deployable wood identification systems

Many studies have aimed to develop CV-based automated wood identification systems, and some have been realized as field-deployable systems [21, 49, 51, 69]. XyloTron, developed by the Forest Products Laboratory, US Department of Agriculture, is a CNN-based wood identification system with a ResNet34 backbone [21]. It is the only system for which actual real-time field use has been reported, and it showed higher species and genus identification performance for the family Meliaceae than the mass spectrometry-based model [21].

Recently, smartphone-based systems have been developed that require minimal components. MyWood-ID can identify 100 Malaysian woods [49], and another smartphone-based system, AIKO, can identify major Indonesian commercial woods [83]. XyloPhone, a smartphone-based imaging platform, has been introduced as a field-use identification tool that is more affordable and scalable than other commercial products [57]. All the field-deployable systems are based on stereogram datasets [21, 49, 51, 57, 69, 83].

New aspects in wood science: CV-based wood anatomy

From the outset, CV-based wood identification was an informatics-driven research field, so most studies were focused only on improving the identification performance, and not on the wood itself. With the recent interest in AI, new aspects have emerged to understand CV based on the domain knowledge of wood science.

Feature-based anatomical approaches

Kobayashi et al. [99] conducted PCA on GLCM features extracted from hardwood stereograms to investigate the relationship between anatomical structures and texture features. From principal component loadings and analysis of macroscopic patterns of wood they found that each Haralick texture feature correlated with different anatomical structures such as vessel population, ray-to-ray spacing, and tylosis abundance.

A k-means clustering [167] analysis of local features extracted from micrographs suggested the possibility of matching feature clusters with anatomical elements [73]. This idea was extended to quantify anatomical elements by encoding local features into codewords. The BOF framework effectively visualized and assigned local feature-based codewords to anatomical elements of wood, and codeword histograms provided an indirect means of quantitative wood anatomy [74]. The finding that local features are the vehicles for access to CV from an anatomical point of view is the main reason that much attention is being paid to the applications of informatics in the wood science field.

Hierarchical clustering of a Fagaceae micrograph dataset with SIFT features confirmed that the clustering basically coincided with wood porosity. Moreover, the relationship of species groups that contradicted wood porosity was consistent with evolution based on molecular phylogeny [48]. An unexpected finding was that the subgenera Cerris (ring porous wood) and Ilex (diffuse porous wood) had common characteristics in the arrangement of the latewood vessels as predicted by a CV-based analysis (Fig. 6).

Feature importance measures

When an established wood identification model produces promising results, the features that contributed to the identification need to be assessed. Random forest [168], an ensemble algorithm and classification model that combines multiple decision trees [169], may provide an effective means to do this. This algorithm has been preferred in bioinformatics and biological data-based studies because it provides variable importance, a feature importance measure that evaluates and ranks the variables by their contribution to the predictive power of a model [170, 171, 172]. Feature importance measures can be used to translate the identification results produced by a model into domain knowledge of wood anatomy.

Another feature importance measurement technique using codewords is the term frequency–inverse document frequency (TFIDF) score [173]. TFIDF is derived from bag-of-words (BOW) [174], a model for document retrieval and the origin of BOF, and the TFIDF score provides keywords for document retrieval. Similarly, the BOF model uses image features and provides informative features for image classification. A codeword with a high TFIDF score indicates a rare feature present in a small number of species, whereas a low score denotes a feature shared by many species [74]. As shown in Fig. 7, the features with high TFIDF scores for each species are different, and can be used to infer species-specific features.
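
The scoring can be illustrated with a short sketch in which each species plays the role of a document and each codeword the role of a term. The formula used here, term frequency multiplied by log(N / document frequency), is one common TFIDF variant and is an assumption; the cited study may use a different weighting. The species names and counts are hypothetical.

```python
import math

def tfidf_scores(codeword_counts):
    """Compute TFIDF per class from codeword count vectors.

    codeword_counts maps a class name (e.g. a species) to a list of counts,
    one per codeword.
    """
    n_classes = len(codeword_counts)
    n_words = len(next(iter(codeword_counts.values())))
    # Document frequency: in how many classes does each codeword occur?
    doc_freq = [sum(1 for counts in codeword_counts.values() if counts[w] > 0)
                for w in range(n_words)]
    scores = {}
    for name, counts in codeword_counts.items():
        total = sum(counts)
        scores[name] = [
            (counts[w] / total) * math.log(n_classes / doc_freq[w]) if counts[w] else 0.0
            for w in range(n_words)
        ]
    return scores

# Hypothetical codeword counts for three species over four codewords.
counts = {
    "species_A": [10, 5, 0, 5],
    "species_B": [10, 0, 0, 10],
    "species_C": [10, 0, 5, 5],
}
scores = tfidf_scores(counts)
print(scores["species_A"])
```

Codewords 0 and 3 occur in every species and therefore score zero, whereas codeword 1 scores highly only for species_A and codeword 2 only for species_C, marking them as candidate species-specific features.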

Visualization of informative features with the highest TFIDF scores from the Lauraceae micrograph dataset. a Wood fibers adjacent to rays in Machilus pingii. b Intervessel walls in Lindera communis. c Large vessels in Sassafras tzumu. d Wood fibers in earlywood in Phoebe macrocarpa. The term frequency–inverse document frequency (TFIDF) scores run from high to low in the order red, yellow, green, blue, and purple. Scale bars = 200 µm

In CNN models, the class activation map (CAM) shows discriminative image regions that contribute to classifying images into specific classes [175, 176]. To produce a CAM, the model classifies images by performing global average pooling on feature maps in the last convolution layer, and then regions of interest are detected from the weights and the feature maps. Nakajima et al. [177] used a CAM to analyze important image regions for the dating of each annual ring in tree ring analysis.
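
The computation of a CAM can be sketched as a weighted sum of the feature maps of the last convolution layer, using the weights that connect the globally averaged feature maps to one output class. The tiny 2 × 2 feature maps and the weights below are hypothetical values chosen for illustration.

```python
def global_average_pool(feature_map):
    """Average all values of one feature map into a single number."""
    values = [v for row in feature_map for v in row]
    return sum(values) / len(values)

def class_activation_map(feature_maps, class_weights):
    """Weighted sum of the last-layer feature maps for one class."""
    height, width = len(feature_maps[0]), len(feature_maps[0][0])
    return [[sum(w * fmap[y][x] for w, fmap in zip(class_weights, feature_maps))
             for x in range(width)] for y in range(height)]

feature_maps = [
    [[1.0, 0.0], [0.0, 0.0]],  # responds to the top-left region
    [[0.0, 0.0], [0.0, 2.0]],  # responds to the bottom-right region
]
class_weights = [0.5, 1.5]      # hypothetical weights for one class

cam = class_activation_map(feature_maps, class_weights)
# The class score is the same weighted sum applied to the pooled feature maps.
score = sum(w * global_average_pool(f) for w, f in zip(class_weights, feature_maps))
print(cam)    # [[0.5, 0.0], [0.0, 3.0]]
print(score)  # 0.875
```

The class score equals the spatial average of the CAM, so regions with large CAM values are the ones that contributed most to the classification. In practice the map is upsampled to the input image size and overlaid on the image.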

Cell segmentation using deep learning

The anatomical composition of wood cells reflects environmental changes during the growth period of the tree [178, 179]; therefore, analysis of cell variability, that is, quantitative wood anatomy, helps answer questions related to tree functioning, growth, and environment [180]. A major obstacle is the laborious and tedious task of identifying and measuring hundreds of thousands of wood cells. Several studies have reported automated segmentation of wood cells using classical image processing techniques [75, 179, 181], but the results were highly dependent on image quality and manual editing by the operator.

With recent advances in CV and DL technologies, CNNs have shown remarkable achievements in segmenting cells from biomedical microscopic images [155, 182, 183]. These state-of-the-art techniques have also been applied to the segmentation of plant cells, including wood [184, 185]. Garcia-Pedrero et al. [185] segmented xylem vessels from cross-sectional micrographs using Unet [155], a multi-scale encoder-decoder model based on CNN. They reported that the vessel segmentation by Unet was closer to the results of an expert's work with the image analysis tool ROXAS [186] than were the results of two classical techniques, Otsu's thresholding method [187] and the morphological active contour method [188]. In a comparative study of three of the latest neural network models, Unet, Linknet [189], and Feature Pyramid Network (FPN) [190], for vessel segmentation, the models had a high pixel accuracy of about 90% as well as a shorter working time than the existing image analysis tool [186].
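
For reference, the classical baseline and the evaluation metric mentioned above can be sketched in a few lines. The sketch implements Otsu's thresholding on a flattened list of 8-bit gray levels and computes pixel accuracy against a reference mask. The pixel values and the reference mask are hypothetical, and the assumption that the objects of interest are the darker pixels is made only for this toy example.

```python
def otsu_threshold(pixels, levels=256):
    """Return the gray level t that maximizes between-class variance
    (classes: pixels <= t and pixels > t)."""
    hist = [0] * levels
    for p in pixels:
        hist[p] += 1
    total = len(pixels)
    sum_all = sum(i * h for i, h in enumerate(hist))
    best_t, best_var = 0, -1.0
    w0 = sum0 = 0
    for t in range(levels):
        w0 += hist[t]
        sum0 += t * hist[t]
        w1 = total - w0
        if w0 == 0 or w1 == 0:
            continue
        mean0, mean1 = sum0 / w0, (sum_all - sum0) / w1
        between = w0 * w1 * (mean0 - mean1) ** 2
        if between > best_var:
            best_t, best_var = t, between
    return best_t

def pixel_accuracy(predicted, reference):
    """Fraction of pixels whose predicted label matches the reference mask."""
    matches = sum(1 for p, r in zip(predicted, reference) if p == r)
    return matches / len(reference)

# Hypothetical flattened image: dark object pixels near 40, bright background
# near 200, and one ambiguous pixel (60) labeled as background by the expert.
pixels = [40, 42, 38, 41, 200, 198, 205, 202, 199, 60]
reference = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]  # 1 = object in the reference mask

t = otsu_threshold(pixels)
predicted = [1 if p <= t else 0 for p in pixels]
print(t, pixel_accuracy(predicted, reference))  # 60 0.9
```

The single global threshold misclassifies the ambiguous pixel, a small-scale version of the sensitivity to image quality that is reported for classical segmentation methods.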

The segmentation results produced from CNN-based models demonstrate the potential of DL to perform quantitative wood anatomy more effectively, overcoming obstacles such as the non-homogeneous illumination or staining of images, where conventional methods tend to yield unsatisfactory results [191]. DL is evolving rapidly and has provided excellent results in many fields of study. This can provide solutions to the questions of wood identification and anatomy and is an opportunity and challenge to bring new insights into wood science.

Conclusions

CV-based wood identification continues to evolve in the development of on-site wood identification systems that enable consistent judgment without human prejudice. Furthermore, by allowing an anatomical approach, CV-based wood identification may provide insights that have not yet been revealed by established wood anatomy methods. The first and most important task to progress CV-based wood identification is to build large digital databases where wood information can be accessed anytime, anywhere, and by anyone, and to bridge the current gap between informatics and wood science.

In recent years, DL has provided a technical foundation for more accurate wood identification and is expected to answer a variety of questions in wood anatomy in the near future. Advances in communication technologies provide a broad space for the use of CV-based wood identification in the field. This could ultimately be a solution to on-site demands by allowing wood identification by workers who are not trained in traditional identification. From the macro perspective of modern science, it is clear that CV, ML, and DL technologies will contribute to the development of the various subfields encompassed by wood science.

Availability of data and materials

Not applicable.

Abbreviations

- AI: Artificial intelligence
- AKAZE: Accelerated-KAZE
- ANN: Artificial neural network
- BGLAM: Basic gray level aura matrix
- BOF: Bag-of-features
- BOW: Bag-of-words
- BRIEF: Binary-robust-independent-elementary-feature
- CAM: Class activation map
- CNN: Convolutional neural network
- CT: Computed tomography
- CV: Computer vision
- DL: Deep learning
- FAST: Features-from-accelerated-segment-test
- GA: Genetic algorithm
- GLCM: Gray level co-occurrence matrix
- HLAC: Higher local order autocorrelation
- HOG: Histograms of oriented gradients
- IAWA: International Association of Wood Anatomists
- k-NN: k-Nearest neighbors
- LBP: Local binary pattern
- LDA: Linear discriminant analysis
- LPQ: Local phase quantization
- ML: Machine learning
- ORB: Oriented FAST and rotated BRIEF
- PCA: Principal component analysis
- SIFT: Scale-invariant feature transform
- SPPD: Statistical properties of pores distribution
- SURF: Speeded up robust features
- SVM: Support vector machine
- TFIDF: The term frequency–inverse document frequency
- XCT: X-ray computed tomography

References

Wheeler EA, Baas P, Gasson PE, IAWA Committee. IAWA list of microscopic features for hardwood identification. IAWA Bull. 1989;10:219–332.

Richter HG, Grosser D, Heinz I, Gasson P, IAWA Committee. IAWA list of microscopic features for softwood identification. IAWA J. 2004;25(1):1–70.

Wheeler EA, Baas P. Wood identification—a review. IAWA J. 1998;19(3):241–64.

Carlquist S. Comparative wood anatomy: systematic, ecological, and evolutionary aspects of dicotyledon wood. Berlin: Springer; 2013.

Miller RB. Wood identification via computer. IAWA Bull. 1980;1:154–60.

Brazier JD, Franklin GL. Identification of hardwoods: a microscope key. London: H M Stationery Office; 1961.

Pankhurst RJ. Biological identification: the principles and practice of identification methods in biology. Baltimore: University Park Press; 1978.

Pearson RG, Wheeler EA. Computer identification of hardwood species. IAWA Bull. 1981;2:37–40.

LaPasha CA, Wheeler EA. A microcomputer based system for computer-aided wood identification. IAWA Bull. 1987;8:347–54.

Miller RB, Pearson RG, Wheeler EA. Creation of a large database with IAWA standard list characters. IAWA J. 1987;8(3):219–32.

Kuroda K. Hardwood identification using a microcomputer and IAWA codes. IAWA Bull. 1987;8:69–77.

Ilic J. Computer aided wood identification using CSIROID. IAWA J. 1993;14:333–40.

Wheeler EA. Inside wood—a web resource for hardwood anatomy. IAWA J. 2011;32(2):199–211.

Forestry and Forest Products Research Institute. Microscopic identification of Japanese woods. 2008. http://db.ffpri.affrc.go.jp/WoodDB/IDBK-E/home.php. Accessed 26 Mar 2021.

Samuel AL. Some studies in machine learning using the game of checkers. IBM J Res Dev. 1959;3(3):210–29.

Jordan MI, Mitchell TM. Machine learning: trends, perspectives, and prospects. Science. 2015;349(6245):255–60.

LeCun Y, Bottou L, Bengio Y, Haffner P. Gradient-based learning applied to document recognition. Proc IEEE. 1998;86(11):2278–324.

Lowe DG. Distinctive image features from scale-invariant keypoints. Int J Comput Vis. 2004;60(2):91–110.

Bay H, Tuytelaars T, Van Gool L. SURF: speeded up robust features. In: Leonardis A, Bischof H, Pinz A, editors. Computer vision—European conference on computer vision 2006. Lecture notes in computer science. Berlin: Springer; 2006. p. 404–17.

Tou JY, Lau PY, Tay YH. Computer vision-based wood recognition system. In: Proceedings of international workshop on advanced image technology; 2007. p. 1–6.

Ravindran P, Wiedenhoeft AC. Comparison of two forensic wood identification technologies for ten Meliaceae woods: computer vision versus mass spectrometry. Wood Sci Technol. 2020;54(5):1139–50.

Lin Y, Lv F, Zhu S, Yang M, Cour T, Yu K, Cao L, Huang T. Large-scale image classification: fast feature extraction and svm training. In: Proceedings of the Institute of Electrical and Electronics Engineers (IEEE) conference on computer vision and pattern recognition; 2011. p. 1689–96.

Krizhevsky A, Sutskever I, Hinton GE. ImageNet classification with deep convolutional neural networks. In: Proceedings of the 25th international conference on neural information processing systems; 2012. p. 1097–105.

Szegedy C, Liu W, Jia Y, Sermanet P, Reed S, Anguelov D, Erhan D, Vanhoucke V, Rabinovich A. Going deeper with convolutions. In: Proceedings of the Institute of Electrical and Electronics Engineers (IEEE) conference on computer vision and pattern recognition; 2015. p. 1–9.

He K, Zhang X, Ren S, Sun J. Deep residual learning for image recognition. In: Proceedings of the Institute of Electrical and Electronics Engineers (IEEE) conference on computer vision and pattern recognition; 2016. p. 770–8.

Khalid M, Lee ELY, Yusof R, Nadaraj M. Design of an intelligent wood species recognition system. Int J Simul Syst Sci Technol. 2008;9(3):9–19.

Khalid M, Yusof R, Khairuddin ASM. Improved tropical wood species recognition system based on multi-feature extractor and classifier. Int J Electr Comput Eng. 2011;5(11):1495–501.

Yusof R, Khalid M, Khairuddin ASM. Application of kernel-genetic algorithm as nonlinear feature selection in tropical wood species recognition system. Comput Electron Agric. 2013;93:68–77.

Hafemann LG, Oliveira LS, Cavalin P. Forest species recognition using deep convolutional neural networks. In: Proceedings of the international conference on pattern recognition; 2014. p. 1103–7.

Gasson P. How precise can wood identification be? Wood anatomy’s role in support of the legal timber trade, especially CITES. IAWA J. 2011;32(2):137–54.

Koch G, Haag V, Heinz I, Richter HG, Schmitt U. Control of internationally traded timber-the role of macroscopic and microscopic wood identification against illegal logging. J Forensic Res. 2015;6(6):1000317. https://doi.org/10.4172/2157-7145.1000317.

Dormontt EE, Boner M, Braun B, Breulmann G, Degen B, Espinoza E, Gardner S, Guillery P, Hermanson JC, Koch G, Lee SL, Kanashiro M, Rimbawanto A, Thomas D, Wiedenhoeft AC, Yin Y, Zahnen J, Lowe AJ. Forensic timber identification: it’s time to integrate disciplines to combat illegal logging. Biol Conserv. 2015;191:790–8.

Brancalion PH, de Almeida DR, Vidal E, Molin PG, Sontag VE, Souza SE, Schulze MD. Fake legal logging in the Brazilian Amazon. Sci Adv. 2018;4(8):eaat1192. https://doi.org/10.1126/sciadv.aat1192.

Gomes AC, Andrade A, Barreto-Silva JS, Brenes-Arguedas T, López DC, de Freitas CC, Lang C, de Oliveira AA, Pérez AJ, Perez R, da Silva JB, Silveira AMF, Vaz MC, Vendrami J, Vicentini A. Local plant species delimitation in a highly diverse Amazonian forest: do we all see the same species? J Veg Sci. 2013;24(1):70–9.

Espinoza EO, Wiemann MC, Barajas-Morales J, Chavarria GD, McClure PJ. Forensic analysis of CITES-protected Dalbergia timber from the Americas. IAWA J. 2015;36(3):311–25.

McClure PJ, Chavarria GD, Espinoza E. Metabolic chemotypes of CITES protected Dalbergia timbers from Africa, Madagascar, and Asia. Rapid Commun Mass Spectrom. 2015;29(9):783–8.

Deklerck V, Mortier T, Goeders N, Cody RB, Waegeman W, Espinoza E, Van Acker J, Van den Bulcke J, Beeckman H. A protocol for automated timber species identification using metabolome profiling. Wood Sci Technol. 2019;53(4):953–65.

Brunner M, Eugster R, Trenka E, Bergamin-Strotz L. FT-NIR spectroscopy and wood identification. Holzforschung. 1996;50(2):130–4.

Pastore TCM, Braga JWB, Coradin VTR, Magalhães WLE, Okino EYA, Camargos JAA, de Muniz GIB, Bressan OA, Davrieux F. Near infrared spectroscopy (NIRS) as a potential tool for monitoring trade of similar woods: discrimination of true mahogany, cedar, andiroba, and curupixá. Holzforschung. 2011;65(1):73–80.

Horacek M, Jakusch M, Krehan H. Control of origin of larch wood: discrimination between European (Austrian) and Siberian origin by stable isotope analysis. Rapid Commun Mass Spectrom. 2009;23(23):3688–92.

Kagawa A, Leavitt SW. Stable carbon isotopes of tree rings as a tool to pinpoint the geographic origin of timber. J Wood Sci. 2010;56(3):175–83.

Muellner AN, Schaefer H, Lahaye R. Evaluation of candidate DNA barcoding loci for economically important timber species of the mahogany family (Meliaceae). Mol Ecol Resour. 2011;11(3):450–60.

Jiao L, Yin Y, Cheng Y, Jiang X. DNA barcoding for identification of the endangered species Aquilaria sinensis: comparison of data from heated or aged wood samples. Holzforschung. 2014;68(4):487–94.

Mohri M, Rostamizadeh A, Talwalkar A. Foundations of machine learning. 2nd ed. Cambridge: MIT press; 2018.

Hinton GE, Sejnowski TJ. Unsupervised learning: foundations of neural computation. Cambridge: MIT press; 1999.

Goodfellow I, Bengio Y, Courville A. Deep learning. Cambridge: MIT press; 2016.

Martins J, Oliveira LS, Nisgoski S, Sabourin R. A database for automatic classification of forest species. Mach Vis Appl. 2013;24(3):567–78.

Kobayashi K, Kegasa T, Hwang SW, Sugiyama J. Anatomical features of Fagaceae wood statistically extracted by computer vision approaches: some relationships with evolution. PLoS ONE. 2019;14(8):e0220762. https://doi.org/10.1371/journal.pone.0220762.

Tang XJ, Tay YH, Siam NA, Lim SC. MyWood-ID: Automated macroscopic wood identification system using smartphone and macro-lens. In: Proceedings of the international conference on computational intelligence and intelligent systems; 2018. p. 37–43.

Olschofsky K, Köhl M. Rapid field identification of cites timber species by deep learning. Trees For People. 2020;2:100016. https://doi.org/10.1016/j.tfp.2020.100016.

Lopes DJV, Burgreen GW, Entsminger ED. North American hardwoods identification using machine-learning. Forests. 2020;11(3):298.

Souza DV, Santos JX, Vieira HC, Naide TL, Nisgoski S, Oliveira LES. An automatic recognition system of Brazilian flora species based on textural features of macroscopic images of wood. Wood Sci Technol. 2020;54(4):1065–90.

Wu F, Gazo R, Haviarova E, Benes B. Wood identification based on longitudinal section images by using deep learning. Wood Sci Technol. 2021;55:553–63.

Barmpoutis P, Dimitropoulos K, Barboutis I, Grammalidis N, Lefakis P. Wood species recognition through multidimensional texture analysis. Comput Electron Agric. 2018;144:241–8.

de Geus AR, da Silva SF, Gontijo AB, Silva FO, Batista MA, Souza JR. An analysis of timber sections and deep learning for wood species classification. Multimed Tools Appl. 2020;79(45):34513–29.

Jansen S, Kitin P, De Pauw H, Idris M, Beeckman H, Smets E. Preparation of wood specimens for transmitted light microscopy and scanning electron microscopy. Belgian J Bot. 1998;131(1):41–9.

Wiedenhoeft AC. The XyloPhone: toward democratizing access to high-quality macroscopic imaging for wood and other substrates. IAWA J. 2020;41(4):699–719.

Yu H, Cao J, Luo W, Liu Y. Image retrieval of wood species by color, texture, and spatial information. In: Proceedings of the international conference on information and automation; 2009. p. 1116–9.

Yusof R, Khalid M, Khairuddin ASM. Fuzzy logic-based pre-classifier for tropical wood species recognition system. Mach Vis Appl. 2013;24(8):1589–604.

Zamri MIAP, Cordova F, Khairuddin ASM, Mokhtar N, Yusof R. Tree species classification based on image analysis using improved-basic gray level aura matrix. Comput Electron Agric. 2016;124:227–33.

Ibrahim I, Khairuddin ASM, Talip MSA, Arof H, Yusof R. Tree species recognition system based on macroscopic image analysis. Wood Sci Technol. 2017;51(2):431–44.

Paula Filho PL, Oliveira LS, Nisgoski S, Britto AS. Forest species recognition using macroscopic images. Mach Vis Appl. 2014;25(4):1019–31.

Zhao P. Robust wood species recognition using variable color information. Optik. 2013;124(17):2833–6.

Kwon O, Lee HG, Yang SY, Kim H, Park SY, Choi IG, Yeo H. Performance enhancement of automatic wood classification of Korean softwood by ensembles of convolutional neural networks. J Korean Wood Sci Technol. 2019;47(3):265–76.

Fabijańska A, Danek M, Barniak J. Wood species automatic identification from wood core images with a residual convolutional neural network. Comput Electron Agric. 2021;181:105941. https://doi.org/10.1016/j.compag.2020.105941.

Kobayashi K, Akada M, Torigoe T, Imazu S, Sugiyama J. Automated recognition of wood used in traditional Japanese sculptures by texture analysis of their low-resolution computed tomography data. J Wood Sci. 2015;61(6):630–40.

Kobayashi K, Hwang SW, Okochi T, Lee WH, Sugiyama J. Non-destructive method for wood identification using conventional X-ray computed tomography data. J Cult Herit. 2019;38:88–93.

Wang HJ, Zhang GQ, Qi HN. Wood recognition using image texture features. PLoS ONE. 2013;8(10):e76101. https://doi.org/10.1371/journal.pone.0076101.

de Andrade BG, Basso VM, de Figueiredo Latorraca JV. Machine vision for field-level wood identification. IAWA J. 2020;41(4):681–98.

Yadav AR, Anand RS, Dewal ML, Gupta S. Multiresolution local binary pattern variants based texture feature extraction techniques for efficient classification of microscopic images of hardwood species. Appl Soft Comput. 2015;32:101–12.

da Silva NR, De Ridder M, Baetens JM, Van den Bulcke J, Rousseau M, Bruno OM, Beeckman H, Van Acker J, De Baets B. Automated classification of wood transverse cross-section micro-imagery from 77 commercial Central-African timber species. Ann For Sci. 2017. https://doi.org/10.1007/s13595-017-0619-0.

Lens F, Liang C, Guo Y, Tang X, Jahanbanifard M, da Silva FSC, Ceccantini G, Verbeek FJ. Computer-assisted timber identification based on features extracted from microscopic wood sections. IAWA J. 2020;41(4):660–80.

Hwang SW, Kobayashi K, Zhai S, Sugiyama J. Automated identification of Lauraceae by scale-invariant feature transform. J Wood Sci. 2018;64(2):69–77.

Hwang SW, Kobayashi K, Sugiyama J. Detection and visualization of encoded local features as anatomical predictors in cross-sectional images of Lauraceae. J Wood Sci. 2020;66:16. https://doi.org/10.1186/s10086-020-01864-5.

Mallik A, Tarrío-Saavedra J, Francisco-Fernández M, Naya S. Classification of wood micrographs by image segmentation. Chemom Intell Lab Syst. 2011;107(2):351–62.

Armi L, Fekri-Ershad S. Texture image analysis and texture classification methods—a review. Int Online J Image Process Pattern Recognit. 2019;2(1):1–29.

Harjoko A, Seminar KB, Hartati S. Merging feature method on RGB image and edge detection image for wood identification. Int J Comput Sci Inf Technol. 2013;4(1):188–93.

Zhao P, Dou G, Chen GS. Wood species identification using improved active shape model. Optik. 2014;125(18):5212–7.

Huang S, Cai C, Zhang Y. Wood image retrieval using SIFT descriptor. In: Proceedings of the international conference on computational intelligence and software engineering; 2009. p. 1–4.

Yusof R, Khairuddin U, Rosli NR, Ghafar HA, Azmi NMAN, Ahmad A, Khairuddin AS. A study of feature extraction and classifier methods for tropical wood recognition system. In: Proceedings of the TENCON—IEEE region 10 conference; 2018. p. 2034–9.

Ravindran P, Costa A, Soares R, Wiedenhoeft AC. Classification of CITES-listed and other neotropical Meliaceae wood images using convolutional neural networks. Plant Methods. 2018. https://doi.org/10.1186/s13007-018-0292-9.

Nasirzadeh M, Khazael AA, Khalid M. Woods recognition system based on local binary pattern. In: Proceedings of the international conference on computational intelligence, communication systems and networks; 2010. p. 308–13.

Damayanti R, Prakasa E, Dewi LM, Wardoyo R, Sugiarto B, Pardede HF, Riyanto Y, Astutiputri VF, Panjaitan GR, Hadiwidjaja ML, Maulana YH, Mutaqin IN. LignoIndo: image database of Indonesian commercial timber. In: Proceedings of the international symposium for sustainable humanosphere; 2019. p. 012057.

Forest species database—macroscopic. Federal University of Parana, Curitiba. 2014. http://web.inf.ufpr.br/vri/databases/forest-species-database-macroscopic/. Accessed 26 Mar 2021.

Forest species database—microscopic. Federal University of Parana, Curitiba. 2013. http://web.inf.ufpr.br/vri/databases/forest-species-database-microscopic/. Accessed 26 Mar 2021.

da Silva NR, De Ridder M, Baetens JM, Van den Bulcke J, Rousseau M, Bruno OM, Beeckman H, Van Acker J, De Baets B. Automated classification of wood transverse cross-section micro-imagery from 77 commercial Central-African timber species. Zenodo. 2017. https://doi.org/10.5281/zenodo.235668. Accessed 26 Mar 2021.

Barmpoutis P. WOOD-AUTH dataset A (version 0.1). WOOD-AUTH. 2018. https://doi.org/10.2018/wood.auth. Accessed 26 Mar 2021.

Kobayashi K, Kegasa T, Hwang SW, Sugiyama J. Anatomical features of Fagaceae wood statistically extracted by computer vision approaches: some relationships with evolution. Data set. 2019. http://hdl.handle.net/2433/243328. Accessed 26 Mar 2021.

Sugiyama J, Hwang SW, Kobayashi K, Zhai S, Kanai I, Kanai K. Database of cross sectional optical micrograph from KYOw Lauraceae wood. Data set. 2020. http://hdl.handle.net/2433/245888. Accessed 26 Mar 2021.

Wainberg M, Merico D, Delong A, Frey BJ. Deep learning in biomedicine. Nat Biotechnol. 2018;36(9):829–38.

Figueroa-Mata G, Mata-Montero E, Valverde-Otárola JC, Arias-Aguilar D. Automated image-based identification of forest species: challenges and opportunities for 21st century xylotheques. In: Proceedings of the Institute of Electrical and Electronics Engineers (IEEE) international work conference on bioinspired intelligence; 2018. p. 1–8.

Soltis PS. Digitization of herbaria enables novel research. Am J Bot. 2017;104(9):1281–4.

Hwang SW, Kobayashi K, Sugiyama J. Evaluation of a model using local features and a codebook for wood identification. Institute of physics (IOP) conference series: earth and environmental science. IOP Publishing; 2020. p. 415. https://doi.org/10.1088/1755-1315/415/1/012029.

da Silva EAB, Mendonça GV. Digital image processing. In: Chen WK, editor. The electrical engineering handbook. 1st ed. Boston: Academic Press; 2005.

Martins JG, Oliveira LS, Britto AS, Sabourin R. Forest species recognition based on dynamic classifier selection and dissimilarity feature vector representation. Mach Vis Appl. 2015;26:279–93.

Kwon O, Lee HG, Lee MR, Jang S, Yang SY, Park SY, Choi IG, Yeo H. Automatic wood species identification of Korean softwood based on convolutional neural networks. J Korean Wood Sci Technol. 2017;45(6):797–808.

Gasim G, Harjoko A, Seminar KB, Hartati S. Image blocks model for improving accuracy in identification systems of wood type. Int J Adv Comput Sci Appl. 2013;4(6):48–53.

Mohan S, Venkatachalapathy K, Sudhakar P. An intelligent recognition system for identification of wood species. J Comput Sci. 2014;10(7):1231–7.

Kobayashi K, Hwang SW, Lee WH, Sugiyama J. Texture analysis of stereograms of diffuse-porous hardwood: identification of wood species used in Tripitaka Koreana. J Wood Sci. 2017;63(4):322–30.

Wang L, Liu Z, Zhang Z. Feature based stereo matching using two-step expansion. Math Probl Eng. 2014. https://doi.org/10.1155/2014/452803.

Yusof R, Rosli NR, Khalid M. Tropical wood species recognition based on Gabor filter. In: Proceedings of the international congress on image and signal processing; 2009. p. 1–5.

Yadav AR, Dewal ML, Anand RS, Gupta S. Classification of hardwood species using ANN classifier. In: Proceedings of the national conference on computer vision, pattern recognition, image processing and graphics; 2013. p. 1–5.

Ibrahim I, Khairuddin ASM, Arof H, Yusof R, Hanafi E. Statistical feature extraction method for wood species recognition system. Eur J Wood Wood Prod. 2018;76(1):345–56.

Brunel G, Borianne P, Subsol G, Jaeger M, Caraglio Y. Automatic identification and characterization of radial files in light microscopy images of wood. Ann Bot. 2014;114(4):829–40.

Rajagopal H, Khairuddin ASM, Mokhtar N, Ahmad A, Yusof R. Application of image quality assessment module to motion-blurred wood images for wood species identification system. Wood Sci Technol. 2019;53(4):967–81.

Mitchell T. Machine learning. New York: McGraw-Hill; 1997.

Hastie T, Tibshirani R, Friedman J. The elements of statistical learning: data mining, inference, and prediction. New York: Springer; 2009.

Guyon I. A scaling law for the validation-set training-set size ratio. AT&T Bell Laboratories; 1997. p. 1–11.

Zumel N, Mount J, Porzak J. Practical data science with R. New York: Manning; 2014.

Tou JY, Tay YH, Lau PY. Rotational invariant wood species recognition through wood species verification. In: Proceedings of the Asian conference on intelligent information and database systems; 2009. p. 115–20.

Haralick RM, Shanmugam K, Dinstein I. Textural features for image classification. IEEE Trans Syst Man Cybern. 1973;6:610–21.

Conners RW, Harlow CA. A theoretical comparison of texture algorithms. IEEE Trans Pattern Anal Mach Intell. 1980;3:204–22.

Albregtsen F. Statistical texture measures computed from gray level cooccurrence matrices. In: Technical note. Department of Informatics, University of Oslo, Norway; 1995.

Tou JY, Tay YH, Lau PY. A comparative study for texture classification techniques on wood species recognition problem. In: Proceedings of the international conference on natural computation; 2009. p. 8–12.

Cavalin PR, Kapp MN, Martins J, Oliveira LE. A multiple feature vector framework for forest species recognition. In: Proceedings of the annual ACM symposium on applied computing; 2013. p. 16–20.

Zhao P, Dou G, Chen GS. Wood species identification using feature-level fusion scheme. Optik. 2014;125(3):1144–8.

Tou JY, Tay YH, Lau PY. One-dimensional grey-level co-occurrence matrices for texture classification. In: Proceedings of the international symposium on information technology; 2008. p. 1–6.

Qin X, Yang YH. Basic gray level aura matrices: theory and its application to texture synthesis. In: Proceedings of the Institute of Electrical and Electronics Engineers (IEEE) international conference on computer vision; 2005. p. 128–35.

Elfadel IM, Picard RW. Gibbs random fields, co-occurrences, and texture modeling. IEEE Trans Pattern Anal Mach Intell. 1994;16(1):24–37.

Haliche Z, Hammouche K. The gray level aura matrices for textured image segmentation. Analog Integr Circ Sig Process. 2011;69:29–38.

Ojala T, Pietikainen M, Maenpaa T. Multiresolution gray-scale and rotation invariant texture classification with local binary patterns. IEEE Trans Pattern Anal Mach Intell. 2002;24(7):971–87.

Yadav AR, Anand RS, Dewal ML, Gupta S. Gaussian image pyramid based texture features for classification of microscopic images of hardwood species. Optik. 2015;126(24):5570–8.

Ma LJ, Wang HJ. A new method for wood recognition based on blocked HLAC. In: Proceedings of the international conference on natural computation; 2012. p. 40–3.

Yadav AR, Kumar J, Anand RS, Dewal ML, Gupta S. Binary Gabor pattern feature extraction technique for hardwood species classification. In: Proceedings of the international conference on recent advances in information technology; 2018.

Wang HJ, Qi HN, Wang XF. A new Gabor based approach for wood recognition. Neurocomputing. 2013;116:192–200.

Rublee E, Rabaud V, Konolige K, Bradski GR. ORB: an efficient alternative to SIFT or SURF. In: Proceedings of the international conference on computer vision; 2011. p. 2564–71.

Alcantarilla PF, Nuevo J, Bartoli A. Fast explicit diffusion for accelerated features in nonlinear scale spaces. In: Proceedings of the British machine vision conference; 2013.