Abstract

Background

Locked-in syndrome (LIS), due to a lesion in the pons, impedes communication. A similar situation can arise after severe brain injury or in advanced amyotrophic lateral sclerosis (ALS). In the most severe condition, complete oculomotor paralysis prevents any communication (complete LIS, or CLIS), and even prevents the detection of consciousness. Some studies suggest that an auditory brain–computer interface (BCI) could restore communication through a “yes–no” code.

Methods

We developed an auditory EEG-based interface that uses voluntary modulations of attention to restore a yes–no communication code in non-responding persons. This binary BCI uses repeated speech sounds (alternating “yes” in the right ear and “no” in the left ear) corresponding to either frequent (short) or rare (long) stimuli. Users are instructed to pay attention to the relevant stimuli only. We tested this BCI with 18 healthy subjects and 7 people with severe motor disability (3 persons with “classical” locked-in syndrome and 4 persons with ALS).

Results

We report online BCI performance and offline event-related potential analysis. On average in healthy subjects, online BCI accuracy reached 86% based on 50 questions. Only one out of 18 subjects could not perform above chance level. Ten subjects had an accuracy above 90%. However, most patients could not produce online performance above chance level, except for two people with ALS who obtained 100% accuracy. We report individual event-related potentials and their modulation by attention. In addition to the classical P3b, we observed a signature of sustained attention on responses to frequent sounds, but in healthy subjects and patients with good BCI control only.

Conclusions

Auditory BCI can be very well controlled by healthy subjects, but this does not guarantee that it can be readily used by the target population of persons in LIS or CLIS. This conclusion is supported by a few previous findings in BCI and should now trigger research into the reasons for this gap, in order to propose new and efficient solutions.

Clinical trial registrations: No. NCT02567201 (2015) and NCT03233282 (2013).

Introduction

Typical locked-in syndrome (LIS) is caused by a lesion in the pons, and patients are able to communicate only with movements of their eyes or eyelids [1]. This injury can be due to stroke or to other etiologies, such as trauma or tumor [2]. Total paralysis can also be encountered in amyotrophic lateral sclerosis (ALS), a neurodegenerative disease that mainly affects the motor neurons. At the end stage of the disease, patients who have chosen mechanical ventilation are at risk of losing all muscular control, including control of the eyes. In this state, named the “completely locked-in state” (CLIS), the person cannot communicate at all, which implies that the diagnosis of the state of consciousness is clinically impossible or very delayed [3]. More generally, the assessment of consciousness in non-responsive patients (after cerebral anoxia, trauma or major stroke) remains challenging: up to 40% of patients in a minimally conscious state may be misdiagnosed as being in a vegetative state by non-expert teams [4]. Even after a careful behavioral assessment, it remains possible that a patient cannot show any response to command because of complete motor impairment. The development of paraclinical assessments of patients with disorders of consciousness revealed that some of them, although diagnosed as being in a vegetative state or even in coma [5, 6], were able to prove their consciousness by willfully modulating their brain activity when asked to (command following), and thus should be considered to be in a complete locked-in state. The first striking demonstration of such a cognitive motor dissociation (a dissociation between awareness and motor capacity) was reported in 2006, using fMRI [7].

EEG-based brain–computer interfaces (BCI) are promising tools to detect a cognitive motor dissociation [8]. Indeed, they measure brain activity directly, in real time, and enable repeated assessments at the patient’s bedside. Furthermore, they may also be used as communication devices. However, restoring communication with these patients once command following has been established remains a major issue. The authors of two studies published in 2017 claimed that communication had been restored with people in CLIS [9, 10], but flaws were identified in their methodologies and their results remain controversial [11], which led to the retraction of one of them [12]. In another study [13], the authors used a steady-state visually evoked potential BCI, which they evaluated longitudinally over 27 months in a patient with ALS. This patient could train with the BCI for three months before entering CLIS. The reliability of the BCI fluctuated, with accuracies below chance level in 13 out of 40 sessions [13]. A recent publication using an intra-cortical electrode implanted in the dominant left motor cortex demonstrated both the feasibility and the striking limitations of communication with a CLIS patient at an advanced stage of ALS [14]. This patient was implanted when he was already in CLIS with no residual eye movements, as attested by EOG. During the first stages of training, no cortical response could be detected when the patient was instructed to attempt or imagine hand, tongue or foot movements. Reliable yes–no responses were finally obtained three months after implantation thanks to a neurofeedback protocol in which tones of two different frequencies were played according to the neural activity. During the 356 days following this training paradigm, he obtained an accuracy of 86.6% on 5700 trials.
During training sessions where his accuracy was above 80%, he could use an auditory speller to produce one letter per minute and freely spell intelligible sentences on 44 out of 107 days, which allowed him to express some of his needs. However, despite these encouraging results, invasive devices cannot be proposed to all patients, because the risks associated with implantation (infection, hemorrhage) have to be outweighed by the expected benefits, and these potential benefits remain very difficult to estimate. Indeed, as discussed above, when facing patients with complete paralysis, there is considerable uncertainty about their consciousness and their cognitive abilities. In this context, non-invasive BCI could help detect patients with residual voluntary mental activity and provide them with a first-line communication tool.

When targeting patients who, by definition, have no motor control, including oculomotor control, gaze-independent BCI have to be considered. Some visual BCI rely on steady-state visual evoked potentials, requiring the user to fixate a grid containing different colors and to focus on only one of them [15]. However, some patients with locked-in syndrome find such systems hard to control (for example, 4 out of 6 patients with LIS performed at chance level in Lesenfants et al., 2014 [15]). Indeed, visual impairments are very common in locked-in syndrome [16]. Hence, targeting other sensory modalities can help overcome these pitfalls. Some translational studies suggest that the auditory modality could provide a way to reach these patients [17,18,19,20]. Of these four studies, one used pure tones as stimuli, which required the patients to learn a “code” (there were two different frequency streams, one standing for “yes” and the other for “no”) [18]. This kind of code is quite difficult for patients with possible memory impairment, which is why other authors suggested the use of spoken words. Sellers et al. [19] and Lulé et al. [17] proposed an oddball protocol in which the four words “yes”, “no”, “stop” and “go” were delivered in a random order. The patients with LIS or with disorders of consciousness were asked to count the target words, in order to elicit a P300 event-related potential. In Lulé et al. [17], one out of two persons with LIS could control the BCI with an online accuracy of 60%. An offline analysis showed that one patient with disorders of consciousness had 8 correct answers out of 14. The only signal considered for classification was the P300 response to deviant stimuli, thus neglecting the potential information carried by responses to standard sounds. A study by Hill et al. [21] showed that it was possible to additionally use the attentional modulations of the N200 wave elicited by standard words (“yes” and “no”), on top of those associated with deviant stimuli. They obtained a fairly good binary classification with healthy subjects (77% ± 11 s.e. with 100 trials, chance level ~ 62% with an alpha risk of 1%). They tested this paradigm in two ALS patients at an advanced stage of the pathology, and obtained accuracies comparable to those in healthy subjects.

Considering these encouraging results, we implemented and tested a "yes/no" auditory-based BCI exploiting the attentional modulations of responses to both standard and deviant sounds. We first assessed the auditory BCI with healthy controls. Then, we tested it with a group of 7 patients with severe motor disability but with residual means of communication. This enabled us to (1) be sure that instructions were perceived and understood, (2) get feedback and adapt the paradigm to each patient whenever needed to maximize our chance of success. This is important at this stage, as gaze-independent BCI have rarely been tested in patients so far.

Methods

We used an oddball paradigm, expecting a salient P300-like response to (duration) deviant stimuli when subjects voluntarily pay attention to them. We also expected an attentional effect on responses to standard sounds in the attended stream of stimuli. For further analysis, we computed offline performance when considering fewer stimuli per block, fewer electrodes or different preprocessing pipelines. We also assessed the evoked potentials, at both the group and individual levels, in order to identify the electrophysiological responses associated with BCI control.

Experimental design

General presentation

We used spoken words (“yes” and “no”) pronounced by a synthesized male voice. The sound duration was 100 ms for standard sounds and 150 ms for deviant sounds. The stimulus onset asynchrony (SOA) was set to 250 ms for healthy subjects and adjusted for each patient (Table 1). The audio file of the stream of stimuli is provided in Additional file 2.

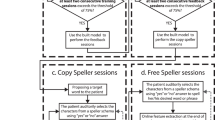

The “yes” sounds were delivered to the right ear and the “no” sounds to the left ear (Fig. 1). The two streams were interleaved, meaning that the “yes” and “no” sounds were never presented at the same time. The “yes” stream always started 250 ms before the “no” stream. One could certainly think of alternative strategies, as attending to one of two concurrent streams delivered to the two ears is a challenging task. For instance, one could present white noise in one ear and sounds in the other, and then alternate between the two ears and the two sound types. However, this would increase the time needed for a selection, which would reduce the Information Transfer Rate (ITR) and lengthen the attentional effort, while still requiring the user to focus on one side while ignoring the other.

There were 30 standard and 6 deviant sounds per block for each stream (i.e. 6 “yes” and 6 “no” deviants), yielding 18-s-long blocks in healthy subjects and blocks of up to 36 s in patients. The deviants and standards were presented in a pseudo-randomized order, with at least two standards between two deviants. Users were asked to focus their attention on one stream only (the “ATTENDED” stream) and to count the number of deviant sounds in that stream. For example, to convey a “yes” answer, the subjects had to focus on the right side, pay attention to all “yes” speech sounds and count the number of “yes” deviants. At the same time, they had to ignore the concurrent stream (the “IGNORED” stream). Stimuli were delivered through headphones (Fig. 1). The volume was standardized at around 75 dB for all participants. The sounds were sent using the “NBS Presentation®” software, controlled by a script written in Python which also processed the EEG signal online.
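As an illustration of the block structure described above, the following minimal sketch generates one pseudo-randomized stream and computes the block duration. It is not the authors' stimulation script: the function names, the way spare standards are distributed, and the reading of the SOA as the interval between consecutive sounds across both ears (which is what makes 72 sounds last 18 s at 250 ms) are assumptions made for this example.

```python
import random


def make_stream(n_standard=30, n_deviant=6, min_gap=2, seed=None):
    """Return a list of 'S'/'D' labels with at least `min_gap` standards
    between any two consecutive deviants (hypothetical helper)."""
    rng = random.Random(seed)
    # Standards that must sit between consecutive deviants.
    reserved = min_gap * (n_deviant - 1)
    spare = n_standard - reserved
    if spare < 0:
        raise ValueError("not enough standards to satisfy the gap constraint")
    # Spread the spare standards at random over the n_deviant + 1 slots:
    # before the first deviant, between deviants, and after the last one.
    extra = [0] * (n_deviant + 1)
    for _ in range(spare):
        extra[rng.randrange(n_deviant + 1)] += 1
    stream = []
    for i in range(n_deviant):
        gap = extra[i] + (min_gap if i > 0 else 0)
        stream += ["S"] * gap + ["D"]
    stream += ["S"] * extra[n_deviant]
    return stream


def block_duration_s(n_per_stream=36, n_streams=2, soa_ms=250):
    """Block duration in seconds, assuming one sound every `soa_ms`
    with the two streams strictly alternating."""
    return n_per_stream * n_streams * soa_ms / 1000.0


yes_stream = make_stream(seed=0)
print("".join(yes_stream), block_duration_s(), block_duration_s(soa_ms=500))
```

Under these assumptions, a 250 ms SOA gives the 18-s blocks used with healthy subjects, and a 500 ms SOA would give 36-s blocks.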

The EEG system was an Acticap™ (Brain Products) with 32 active gel electrodes (Fig. 2). The international 10–20 system was used for electrode placement, with the ground electrode on the forehead and the reference on the nose. The signal was sent to a BrainAmp™ amplifier and digitized at 1000 Hz for healthy subjects, or to a V-Amp™ amplifier (BrainVision) for the first three patients (also with a sampling frequency of 1000 Hz and the same type of gel electrodes). We used 16 electrodes for the first three patients, with the set-up shown in Fig. 2. Then, considering the poor online performance in these patients, we decided to go back to the 32-channel set-up used with healthy subjects for the last 4 patients.

Online signal processing

A band-pass filter between 0.5 and 20 Hz was applied. Spatial filtering, computed by the xDAWN algorithm [22], was then used to reduce the dimensionality of the data and maximize the distinction between the “ATTENDED” and “IGNORED” responses. xDAWN yields as many spatial filters (or virtual sensors) as there are EEG sensors. Importantly, the spatial filters are orthogonal to each other and ranked according to how well they separate the two signals. We used between 1 and 5 filters. The exact number was optimized for each subject based on the results of a leave-one-out cross-validation procedure performed on the calibration data.

In healthy subjects, 500 ms and 750 ms long epochs were considered to analyze the responses evoked by standard and deviant sounds, respectively. In patients, considering that they often have delayed event related potentials (ERPs), we considered larger windows, namely 800 ms for standard and 1000 ms for deviant sounds.

Averaging was performed for each of the four conditions: standard “yes”, standard “no”, deviant “yes” and deviant “no”. We used four supervised, probabilistic binary classifiers, one for each of the four conditions. We therefore used the calibration data to learn the likelihood distribution for each response type, knowing the target (either “yes” or “no”). Then online, considering that the two possible outcomes are equally probable a priori, and assuming conditional independence between the four evoked response types, we computed the posterior probability of each class as the normalized product of the four classifier posteriors. At each trial, the class with a posterior probability greater than 0.5 was selected as the outcome for online feedback (see Additional file 1 for more information).
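The combination rule described above (flat prior, conditional independence, normalized product of the four posteriors) can be sketched as follows. This is only an illustration of the rule as stated in the text, not the authors' code (which is described in Additional file 1); the function names and the example probabilities are hypothetical.

```python
import math


def combine_posteriors(p_yes_per_classifier):
    """Combine per-classifier posteriors P(yes | response type) into a
    single posterior, assuming equal priors and conditional independence.
    The result is the normalized product of the individual posteriors."""
    eps = 1e-12  # guards against log(0) for saturated classifiers
    # Sum of logs is the log of the product; this avoids numerical underflow.
    log_yes = sum(math.log(max(p, eps)) for p in p_yes_per_classifier)
    log_no = sum(math.log(max(1.0 - p, eps)) for p in p_yes_per_classifier)
    m = max(log_yes, log_no)
    w_yes = math.exp(log_yes - m)
    w_no = math.exp(log_no - m)
    return w_yes / (w_yes + w_no)


def decide(p_yes_per_classifier):
    """Select the class whose combined posterior exceeds 0.5.
    An exact tie falls to 'no', an arbitrary choice of this sketch."""
    return "yes" if combine_posteriors(p_yes_per_classifier) > 0.5 else "no"


# Hypothetical outputs of the four classifiers
# (standard yes, standard no, deviant yes, deviant no).
print(decide([0.7, 0.6, 0.55, 0.4]))
```

One consequence of the product rule is that several weakly informative responses pointing in the same direction yield a combined posterior that is more confident than any single one of them.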

Right after the calibration phase (14 blocks), a leave-one-out cross-validation procedure was used to evaluate the quality of this calibration. The cross-validation was performed by block (each block including 30 standards and 6 deviants for “yes”, and the same numbers for “no”). Depending on the result, we either proceeded with the online testing of the BCI or, if performance was no better than chance, performed another calibration.
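A leave-one-block-out procedure of this kind can be sketched as below. The generic loop is the point of the example; the one-dimensional nearest-mean classifier is only a stand-in chosen to keep the sketch self-contained, and does not represent the probabilistic classifiers used in the study. All names and the toy data are hypothetical.

```python
def leave_one_block_out(blocks, labels, fit, predict):
    """Hold out each block in turn, train on the others, and return the
    fraction of held-out blocks that are classified correctly."""
    correct = 0
    for i in range(len(blocks)):
        train_x = blocks[:i] + blocks[i + 1:]
        train_y = labels[:i] + labels[i + 1:]
        model = fit(train_x, train_y)
        correct += predict(model, blocks[i]) == labels[i]
    return correct / len(blocks)


def fit_nearest_mean(xs, ys):
    """Toy classifier: store the mean feature value of each class."""
    means = {}
    for label in set(ys):
        vals = [x for x, y in zip(xs, ys) if y == label]
        means[label] = sum(vals) / len(vals)
    return means


def predict_nearest_mean(means, x):
    """Return the class whose stored mean is closest to x."""
    return min(means, key=lambda label: abs(x - means[label]))


# 14 calibration blocks, each reduced here to a single made-up feature.
features = [1.0, 0.9, 1.1, 1.2, 0.8, 1.0, 0.95,
            -1.0, -0.9, -1.1, -1.2, -0.8, -1.0, -0.95]
targets = ["yes"] * 7 + ["no"] * 7
print(leave_one_block_out(features, targets,
                          fit_nearest_mean, predict_nearest_mean))
```

Holding out whole blocks, rather than single epochs, keeps all the responses of one block on the same side of the train/test split, which matches the unit on which the online decision is made.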

Healthy subjects

The study was approved by an ethical committee (ancillary project included in trial No. NCT03233282). Within a single session, 19 healthy subjects (10 females, 23.2 ± 4.4 years) performed a 14-block calibration, in which the responses to provide were instructed, followed by 50 very simple open questions for testing. The questions were displayed on a screen. Fourteen subjects were completely naive users, while 5 subjects (no. 1, 2, 16, 17 and 18) had already taken part in a fairly similar auditory BCI experiment about a month before. Only subject no. 2 had to be excluded, because it turned out that he did not perform the task as instructed, leaving 18 subjects for analysis.

Patients with severe motor impairment

Seven patients were recruited at the University Hospitals of Lyon and Saint-Etienne, in rehabilitation wards and reference centers for ALS. We informed the patients and their legal representatives about the protocol. Patients then gave their agreement using a “yes–no” motor code and, as they could not write, their legal representatives gave written consent. The study was approved by the ethical committee Sud-Est III (Clinical trial No. NCT02567201).

Clinical information for each patient is reported in Table 2.

The clinical investigators of the reference centers for ALS assessed the patients with the ALS Functional Rating Scale [23]. Each patient underwent a single recording session. To limit the cognitive load, we did not use questions but only direct instructions. For example, whereas healthy subjects were asked questions such as “Is Paris the capital of France?”, patients were given instructions such as “Pay attention to the right side, trying to count the “yes” that are longer” (see Fig. 1 for an example). Applying user-centered design principles [24], we individualized the protocol for each patient, taking into account their feedback on the workload during the experiment, especially regarding the speed of the streams, which led to different SOAs between patients. The SOA started at 300 ms and was increased if the patient complained that the pace of stimulus presentation was too fast. A calibration was usually based on 14 blocks, and tests were run in batches of 10 blocks, but only if the patient was willing to continue.

Standardized written instructions were read aloud by the experimenters to introduce the experimental protocol. We presented sequences of stimuli to the patients prior to the actual experiment, especially to highlight the difference between standard and deviant sounds. In this initial familiarization phase, all patients were asked to count the deviant sounds and to report the number, in order to check that they were able to detect them. As already mentioned, we adjusted the experimental procedure according to the results of the calibration. If performance, as estimated by the cross-validation procedure, was at chance level, a new calibration was performed. Otherwise, we proceeded directly to the test, during which the patient received online auditory feedback on their BCI performance (e.g. “The selected answer is yes”). The test consisted of batches of ten consecutive blocks. Pauses between blocks allowed us to check the patient's state of comfort and fatigue. We would also briefly interrupt the experiment if the patient needed respiratory care or felt uncomfortable for some other reason.

For patient ALS2, performance was still at chance level after a second calibration, but this subject was eager to try the BCI mode. We thus decided not to proceed with another cumbersome calibration but to move on to an online test, hoping to obtain enough data to reliably evaluate the overall performance of this patient a posteriori, offline. Table 1 summarizes the experimental conditions experienced by each patient.

Offline analysis (patients and controls)

Brain–computer interface offline simulation

Complementary offline analyses were performed to assess and predict BCI performance based on different numbers of spatial filters and different numbers of accumulated evoked responses per block. For the sake of homogeneity, these offline analyses were based on the 15-electrode set-up that all subjects (controls and patients) had in common, and on the same number of spatial filters (n = 2, which was optimal for most of the subjects). We also compared the classification accuracies based on one, several or all evoked response types combined. In particular, we compared the accuracies of classifications based on responses to “yes” and to “no”, respectively, to assess whether their different acoustical properties had an impact. This was done with the same classifier as used online. We also assessed whether the classification result would differ if independent component analysis (ICA) were used to remove artifacts (blinks, saccades and artifacts on the reference electrode).

At the end of the control study, before the clinical trial, an offline procedure was performed in order to select the most relevant sensors. We used a backward selection: we first computed the accuracies obtained with all electrodes, and then removed the least contributing electrode step by step in order to identify the most informative sensors at the group level. This analysis was motivated by the practical aim of optimizing our set-up, making it more portable and faster to install for our clinical tests in different clinical centers.
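The backward selection described above follows a standard greedy scheme, sketched below. The `score` callable stands for whatever accuracy estimate is used (for instance a cross-validated group accuracy); here it is replaced by a made-up additive score with hypothetical per-channel weights, so the numbers carry no physiological meaning.

```python
def backward_selection(channels, score, n_keep):
    """Greedy backward elimination: at each step, drop the channel whose
    removal leaves the highest score, until `n_keep` channels remain.
    Returns the kept channels and the (subset, score) history."""
    kept = list(channels)
    history = [(tuple(kept), score(kept))]
    while len(kept) > n_keep:
        best_subset, best_score = None, float("-inf")
        for ch in kept:
            # Candidate subset with this one channel removed.
            candidate = [c for c in kept if c != ch]
            s = score(candidate)
            if s > best_score:
                best_subset, best_score = candidate, s
        kept = best_subset
        history.append((tuple(kept), best_score))
    return kept, history


# Toy stand-in for an accuracy estimate (weights are invented).
weights = {"Fz": 0.3, "Cz": 0.5, "Pz": 0.4, "Oz": 0.1}


def toy_score(subset):
    return sum(weights[c] for c in subset)


kept, history = backward_selection(list(weights), toy_score, n_keep=2)
print(kept)
```

The history returned by the function is what would be plotted as accuracy against the number of removed electrodes.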

Processing of the evoked potentials

The MNE-Python software [25] was used to analyze event-related potentials (ERPs). Raw EEG data were pre-processed by ICA to remove artifacts due to eye movements (blinks and saccades) and common artifacts due to disturbances on the reference electrode. The pre-processed data were then filtered with the same band-pass filter as used online (0.5–20 Hz). For each stimulus type (standard “yes” and “no”, and deviant “yes” and “no”) and condition (target and non-target), single responses were epoched from −200 ms to +1000 ms with respect to stimulus onset. Because of the high variability of signal values between patients, trials could not be rejected based on a single threshold. Instead, we excluded the 15% of epochs with the highest peak-to-peak amplitude in every subject. No baseline correction was applied, both because of the short SOA and to conform to the processing steps used online. For each subject, we merged the calibration and test data. In controls, we also computed the grand average ERP for each condition, including only the subjects who performed best in controlling the BCI (n = 11, online accuracy > 88%; see Footnote 1), to better characterize the biomarkers of good BCI control. We also performed an individual ERP analysis for each subject. We looked for the presence of an attentional modulation, namely a significant difference between the “ATTENDED” and the “IGNORED” sounds. We further analyzed the presence of a specific evoked response to deviants compared to standards.
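The percentile-based rejection described above can be illustrated with a short, library-free sketch. It assumes that the peak-to-peak amplitude of an epoch is taken as the largest per-channel peak-to-peak value and that the number of rejected epochs is rounded to the nearest integer; neither detail is specified in the text, and the function name and data layout are hypothetical.

```python
def reject_highest_ptp(epochs, fraction=0.15):
    """Drop the `fraction` of epochs with the highest peak-to-peak amplitude.

    `epochs` is a list of epochs; each epoch is a list of channels; each
    channel is a list of samples. Returns the kept epochs (in their original
    order) and their indices."""
    def ptp(epoch):
        # Assumption: an epoch is scored by its worst (largest) channel.
        return max(max(ch) - min(ch) for ch in epoch)

    n_drop = int(round(len(epochs) * fraction))
    # Rank epochs from the smallest to the largest peak-to-peak amplitude.
    ranked = sorted(range(len(epochs)), key=lambda i: ptp(epochs[i]))
    keep_idx = sorted(ranked[: len(epochs) - n_drop])
    return [epochs[i] for i in keep_idx], keep_idx


# Twenty single-channel toy epochs whose amplitude grows with the index.
toy_epochs = [[[0.0, float(i)]] for i in range(20)]
kept, kept_idx = reject_highest_ptp(toy_epochs)
print(len(kept), kept_idx[-1])
```

Because the criterion is relative to each subject's own amplitude distribution, the same proportion of data is discarded in every subject regardless of the overall noise level.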

Auditory attention has been shown to modulate eye movements and blinks [26,27,28]. Despite careful signal processing of our patients’ data, residual signals related to eye movements or blinks might still contribute to the classification. We hypothesized that some subjects could use, even involuntarily, their eyes or their eyelids to control the BCI. As we did not record the electro-oculogram directly, we used indirect markers to assess this hypothesis. We extracted the ICA components of saccades and blinks. For each component, we averaged the “ATTENDED” and “IGNORED” stimuli separately, and we performed a statistical test to check whether there was an attentional modulation of these ICA components. In other words, the ICA was used here as a spatial filter on the sensors that were the most sensitive to ocular and eyelid artifacts.

Statistical analysis

BCI performance: accuracy and chance level threshold

Our primary criterion was BCI performance, assessed by classification accuracy, that is, the percentage of blocks for which the selected response was correctly identified. An accuracy below chance level was interpreted as a complete lack of BCI control. To test the statistical significance of the obtained accuracies, we assumed that the number of classification errors follows a binomial distribution and used its cumulative distribution, as described in [29]. Therefore, the empirical chance level depends on the total number of blocks. We performed this comparison with the accuracies obtained with the 15-channel set-up and 2 spatial filters used for the offline analysis.
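The dependence of the empirical chance level on the number of blocks can be made concrete with the binomial tail probability. The sketch below is one common way of deriving such a threshold; the significance level (`alpha = 0.05`) is an assumption of this example, as the text does not state the value used for this test, and the function names are hypothetical.

```python
from math import comb


def binomial_tail(n, k, p=0.5):
    """P(X >= k) for X ~ Binomial(n, p)."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))


def chance_threshold(n_blocks, alpha=0.05, p=0.5):
    """Smallest number of correct blocks whose probability of being reached
    by guessing is at most `alpha`. Returns None when even a perfect score
    is not significant (too few blocks)."""
    for k in range(n_blocks + 1):
        if binomial_tail(n_blocks, k, p) <= alpha:
            return k
    return None


for n in (10, 30, 50):
    k = chance_threshold(n)
    print(n, k, round(100 * k / n, 1))
```

With few blocks the threshold lies well above 50%, which is why accuracies obtained on a single batch of 10 blocks need to be interpreted with caution.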

Statistical comparison of evoked potentials

Both at the group (grand average) and at the individual level, we compared the attended versus ignored stimuli. We used a non-parametric cluster-level test for spatio-temporal data [30], with a threshold-free cluster enhancement [31], 10,000 permutations and a p-value threshold of 0.01. For the comparison of attended versus ignored evoked responses of the ICA components, we performed a cluster-level statistical permutation test with 10,000 permutations and a p-value threshold of 0.05.

Results

Healthy subjects

Online results

The online accuracy obtained at the group level was 86% on average (n = 18, s.d: 11.7%, median: 92%). Ten subjects obtained an accuracy above 90%. For the 14 naive subjects, the mean accuracy was 85% (s.d: 11%, median: 88%).

Offline results

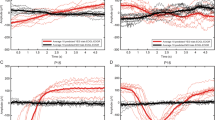

The offline performance was similar with and without ICA preprocessing (Fig. 3).

Offline classification results of ATTENDED versus IGNORED stimuli with and without ICA correction. Offline BCI simulations with 15 channels, for healthy subjects (a) and patients (b). Boxplots filled with light gray stand for accuracy results with no pre-processing of the data, except filtering. Boxplots filled with dark gray stand for the condition where the signal was preprocessed with ICA, removing blinks, saccades and a DC component. Stimulations: Total: Pool of responses to all stimuli, STD: Pool of all responses to standards, DEV: Pool of all responses to deviants, YES: Pool of all responses to “yes”, NO: Pool of all responses to “no”

We reanalyzed the group data (n = 18, 50 blocks each) for various block lengths. We observed that the accuracy of detection of ATTENDED versus IGNORED stimuli remained stable for block durations between 9 and 18 s, which is promising for future improvements of the paradigm, as shorter blocks would improve the information transfer rate (ITR) and require a shorter attentional effort (Fig. 4). We also assessed the classification performance when considering not all stimulus types together, but standard or deviant sounds separately (Fig. 4).

Offline classification results of ATTENDED versus IGNORED stimuli with different duration of blocks. Offline BCI simulations with 15 channels, for healthy subjects. Stimulations: Total: Pool of responses to all stimuli, STD: Pool of all responses to standards, DEV: Pool of all responses to deviants, YES: Pool of all responses to “yes”, NO: Pool of all responses to “no”
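To make the relation between block duration and ITR explicit, the sketch below uses the widely used Wolpaw definition of bits per selection. Which ITR formula the authors had in mind is not stated, so this choice is an assumption; the computation also ignores pauses between blocks, and accuracies at or below chance are set to zero bits by convention.

```python
from math import log2


def bits_per_selection(p, n=2):
    """Wolpaw bits per selection for accuracy `p` among `n` classes."""
    if p >= 1.0:
        return log2(n)
    if p <= 1.0 / n:
        return 0.0  # convention of this sketch: no information at or below chance
    return log2(n) + p * log2(p) + (1 - p) * log2((1 - p) / (n - 1))


def itr_bits_per_min(p, block_s, n=2):
    """Bits per minute when one selection takes `block_s` seconds."""
    return bits_per_selection(p, n) * 60.0 / block_s


# Mean online accuracy reported for healthy subjects, for two block durations.
for duration in (18.0, 9.0):
    print(duration, round(itr_bits_per_min(0.86, duration), 2))
```

Under this definition, halving the block duration at constant accuracy doubles the ITR, which is the sense in which stable accuracy between 9 and 18 s is encouraging.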

It appears that the best accuracies were obtained when accounting for the combination of standard and deviant responses, compared with accuracies based on standards or deviants only. We did not find a significant difference between yes and no responses, despite their different acoustic properties. Finally, the backward electrode selection procedure revealed that performance remained unaffected when reducing the EEG set down to 15 sensors (Fig. 5).

Backward selection of relevant electrodes. A Horizontal axis, from left to right: at each step of the backward selection, an additional electrode is removed (the one that minimizes the loss in accuracy). Vertical axis: Accuracy. B Spatial locations of the most relevant 15 electrodes in green rectangle. Spatial location of the most relevant 7 electrodes in red rectangle

This suggests that future experiments with healthy subjects could be optimized by using a more portable device (e.g. the V-Amp™ system) with only 15 channels.

Evoked potentials

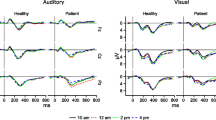

Average responses to standards, for the group of subjects who best controlled the BCI (n = 11, accuracy > 88%), revealed a positive peak at 65 ms and a negative one at 115 ms after stimulus onset. The latter is reminiscent of the auditory N1 component (Fig. 6a). At the group level, there was no attentional modulation on standard stimuli. However, at the individual level, 83% of the subjects showed an attentional modulation on standards. This modulation was highly variable in terms of latency and duration. For the deviant response, we observed a clear P3a component (Fig. 6b), followed by a large P3b when the subject paid attention to the target.

Effect of attention on event-related potentials (ERPs): group average for healthy subjects. Mean ERPs for attended and unattended sounds, standards (a) and deviants (b). The SOA is 250 ms, with an alternation of ATTENDED (Att) and IGNORED (Ign) sounds, hence a switch in the attentional modulation every 250 ms. Each stimulus onset is represented by a vertical dashed line. The solid curve depicts the response when the first stimulus is attended, while the dashed curve depicts the response when the first stimulus is ignored. The shaded area corresponds to the period when this difference is significant. This analysis was performed on the signals preprocessed using ICA. TFCE: Threshold-free cluster enhancement test for the difference between attended and ignored sounds. Each line represents one electrode. When significant, the clusters for one electrode appear in white (negative) or in gray (positive). There is no significant cluster for the standards

At the individual level, 72% of healthy subjects had an attentional modulation on deviants. Ninety-four percent of the subjects had a response to deviance (Table 3).

Patients with severe motor impairment

Online results

All patients could hear at least some of the deviant sounds. However, 5 out of 7 patients could not control the BCI: online performance was at chance level or below (Table 4). The other two patients (ALS3 and ALS4) did achieve a high degree of control of the BCI, with a 100% accuracy over 30 blocks.

Offline results

With a 15-channel set-up, patient ALS1, who performed at chance level online, improved offline to up to 87% accuracy. Patients ALS3 and ALS4, who performed with 100% accuracy online with 32 electrodes, maintained their performance when considering those 15 electrodes only (97% and 100%, respectively). Interestingly, the offline BCI simulation with ICA preprocessing (Fig. 3b) did not yield any difference compared to raw data, except for patient ALS1, whose performance in session 2 dropped by 24 percentage points (from 87 to 63%). We observed that relying on part of the data (e.g. deviants or standards only) yielded performance similar to that obtained with all the data.

Evoked potentials

Individual analysis revealed that the signal was contaminated by artifacts in all patients, compared to healthy subjects. There were different kinds of artifacts. In one patient (LIS2), they were clearly due to the pathology: he had a facial spasm that contaminated all channels and prevented the visualization of evoked potentials, which also prevented the BCI from functioning properly. In other patients, they were electrical artifacts likely due to mechanical ventilation or to the pump of the inflatable air mattress used to prevent bed sores. However, in the latter cases, signal preprocessing allowed the visualization of evoked potentials.

A significant attentional modulation of the evoked potentials could still be observed in patients who managed to control the BCI (ALS3 and 4) for both standards and deviants (Fig. 7).

Effect of attention on averaged event-related potentials (ERPs) to “yes” sounds in patients ALS3 and ALS4. Mean ERPs for attended and unattended sounds, standards (a) and deviants (b). The SOA is 400 ms, with an alternation of ATTENDED (Att) and IGNORED (Ign) sounds, hence a switch in the attentional modulation every 400 ms. Each stimulus onset is represented by a vertical dashed line. The solid curve depicts the response when the first stimulus is attended, while the dashed curve depicts the response when the first stimulus is ignored. The shaded area corresponds to the period when this difference is significant. This analysis was performed on the signals preprocessed using ICA. TFCE: Threshold-free cluster enhancement test for the difference between attended and ignored sounds. Each line represents one electrode. When significant, the clusters for one electrode appear in white (negative) or in gray (positive)

As in healthy subjects, the pattern of attentional modulations for standards varied from one patient to the next in terms of latency and topography. Figure 7 shows an example of this attentional modulation in response to “yes” standards in patients ALS3 and ALS4. In these two examples, the event-related responses to the attended standards tend to be more negative than the responses to the ignored ones. The topography of the significant difference between the attended and unattended conditions is mainly fronto-central, but the difference is present at almost every electrode except the temporal ones. It was maximal at frontal sites prior to ICA preprocessing. This difference remains and is widespread after removing the ICA components corresponding to blinks, saccades and a common offset. Among patients showing no BCI control, there was no attentional modulation at the classical location of the evoked responses (central and parietal electrodes).
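The attended-versus-ignored comparison above relies on a TFCE test, a statistic that is typically assessed by permutation. The core permutation idea can be illustrated on a single electrode and a single time point. The sketch below is a much simpler stand-in and not the TFCE procedure itself: it shuffles condition labels and compares the difference in mean amplitude. The function name, the number of permutations and the amplitude values are hypothetical and chosen for illustration only.

```python
import random

def permutation_p_value(attended, ignored, n_perm=2000, seed=0):
    """Two-sided p-value for a difference in means, obtained by shuffling labels.

    `attended` and `ignored` are single-trial amplitudes (e.g. in microvolts)
    taken at one electrode and one latency. This is an illustrative stand-in,
    not the TFCE test used for the figure.
    """
    rng = random.Random(seed)  # fixed seed so the sketch is reproducible
    n_att = len(attended)
    observed = sum(attended) / n_att - sum(ignored) / len(ignored)
    pooled = list(attended) + list(ignored)
    n_ign = len(pooled) - n_att
    extreme = 0
    for _ in range(n_perm):
        rng.shuffle(pooled)  # random reassignment of condition labels
        diff = sum(pooled[:n_att]) / n_att - sum(pooled[n_att:]) / n_ign
        if abs(diff) >= abs(observed):
            extreme += 1
    # Add one to numerator and denominator so the p-value is never exactly zero.
    return (extreme + 1) / (n_perm + 1)

# Synthetic amplitudes (assumed values): attended responses are more negative.
attended = [-1.9, -2.3, -1.4, -2.8, -2.0, -1.6, -2.5, -1.7]
ignored = [-0.4, 0.3, -0.9, 0.1, -0.2, 0.6, -0.7, 0.0]
print(permutation_p_value(attended, ignored))  # small for these well-separated samples
```

A full analysis would repeat this over all electrodes and time points and correct for multiple comparisons, which is what cluster-based approaches such as TFCE are designed for.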

Although all patients could behaviorally detect at least some deviant sounds, and contrary to what we observed in most of the healthy subjects, five out of seven patients did not show a significant response to deviance (Table 3). Patient ALS4 presented with a P300 response to attended deviants, while patient ALS3 also showed a response to attended deviants around 300 ms, but with a negative polarity (Fig. 7).

The analysis of individual ICA components did not reveal that the attentional modulation was captured by one or a few components more than by others. Interestingly though, some differences appeared in the ICA components between the group that controlled the BCI (healthy subjects and patients) and the patients who did not control the BCI. In the group with good control, all subjects presented with a typical saccadic component, indicating the usual presence of oculomotor movements. However, in the group that could not control the BCI, we found no obvious saccadic ICA component in three out of the five patients (i.e. LIS2, LIS3 and ALS2 had no saccadic ICA component). This suggests that those patients had a more severe oculomotor impairment.

Discussion

Seventeen of 18 healthy subjects proved able to control the proposed auditory BCI. In contrast, only 2 out of 7 severely motor impaired patients proved able to control the interface online, and 3 out of 7 after careful offline signal processing.

The analysis of deviant evoked responses revealed that the presence of a classical P300 and its attentional modulation was associated with good control of the interface, in both healthy subjects and patients. This could explain the poor BCI results observed in most patients, for whom no P300 was detected. Other studies have also reported this lower prevalence of P300 in patients with LIS [32]. However, this lack of P300 response is quite surprising, since all patients could hear at least some of the deviant sounds when presented with the different stimuli. Hence this auditory oddball protocol lacks robustness in patients with severe motor impairment, and relying only on deviant sounds would not allow sufficiently accurate BCI communication.

For standard stimuli, the effect of attention on evoked potentials is reminiscent of an “attentional phase shift”, similar to the one observed in [33, 34]. This attentional phase shift was robust and present in 15 of the 18 healthy subjects. However, it was not visible at the group level because the phase of the shift differed from one subject to another, a variability which is also described in [34]. This attentional shift, or marker of sustained attention orienting, was also present in patients who did control the BCI (Fig. 7). In these patients, there is probably a differential effect of attention on event-related responses, leading to a more negative event-related response when the subject pays attention to the sound, and/or a more positive response when the subject tries to ignore the “distractor” on the opposite side. Further studies will be needed to explore how the attentional modulation operates, whether by inhibiting distractor processing, by enhancing target processing, or both. This could be done, for instance, by contrasting active attention orienting with passive listening. We observed no obvious N100 evoked potential at the group level. This could be explained by the variability of the evoked potentials when using words as stimuli instead of sharp tones, as noticed by Hill et al. [21]. Peak latencies then indeed vary a lot between subjects, as well as within subjects.
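The reason why a modulation that is robust in individual subjects can vanish from the grand average may be illustrated with a toy model. The sketch below assumes, purely for illustration, that each subject's attentional modulation is a unit-amplitude sinusoid at a common frequency with a subject-specific phase; the function name and the phase values are hypothetical and not taken from the recorded data.

```python
import cmath
import math

def group_average_amplitude(phases_rad):
    """Amplitude of the grand average of unit-amplitude sinusoids that share
    one frequency but have subject-specific phases (in radians).

    Averaging sin(w*t + phi_s) over subjects gives a sinusoid whose amplitude
    is the modulus of the mean of exp(1j * phi_s).
    """
    resultant = sum(cmath.exp(1j * phi) for phi in phases_rad) / len(phases_rad)
    return abs(resultant)

n_subjects = 15  # number of healthy subjects showing the shift, used here as a toy group size

# Case 1: every subject has the same phase, so the modulation survives averaging.
aligned = [0.3] * n_subjects

# Case 2: phases spread evenly over a full cycle, so the modulation cancels out.
spread = [2 * math.pi * s / n_subjects for s in range(n_subjects)]

print(round(group_average_amplitude(aligned), 6))  # 1.0
print(round(group_average_amplitude(spread), 6))   # 0.0
```

Real data fall between these two extremes, but the example shows why individual-level analysis is needed to reveal this kind of effect.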

An important finding is that patients with severe motor disability, although clearly conscious and with residual means of communication, show poor BCI control. Only 3 out of 7 patients were able to control the BCI with an accuracy above chance level. Together with our offline analysis of their electrophysiological responses, this suggests that BCIs that are validated in healthy subjects are unfortunately not readily usable by the targeted end users. Bearing in mind that, in the long term, such interfaces are mostly meant to help people who have no means of communication, our findings raise crucial challenges for our community. The reasons behind the poor BCI performance in the majority of the patients have to be thoroughly explored in order to come up with efficient non-invasive solutions.
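What counts as “above chance level” depends on the number of questions asked: with few trials, accuracies well above 50% can still arise from guessing. The sketch below shows one common way to derive such a threshold, assuming a binomial model of guessing and a one-sided significance level of 0.05. Both are assumptions made for illustration, as are the function name and the use of 50 two-choice questions; they are not settings reported in this section.

```python
from math import comb

def binomial_chance_threshold(n_trials, n_classes=2, alpha=0.05):
    """Smallest accuracy k / n_trials such that P(X >= k) < alpha when
    X ~ Binomial(n_trials, 1 / n_classes), i.e. under pure guessing.

    Returns float('inf') if even a perfect score is not significant.
    """
    p = 1.0 / n_classes
    tail = 1.0  # P(X >= 0)
    for k in range(n_trials + 1):
        # At this point, `tail` holds P(X >= k).
        if tail < alpha:
            return k / n_trials
        # Remove P(X == k) to obtain P(X >= k + 1) for the next iteration.
        tail -= comb(n_trials, k) * p**k * (1 - p) ** (n_trials - k)
    return float("inf")

# Assumed setting: 50 yes/no questions, one-sided alpha of 0.05.
print(binomial_chance_threshold(50))  # 0.64
```

Under these assumptions, an accuracy must reach 64% over 50 binary questions before guessing becomes an unlikely explanation, and the threshold rises as the number of questions decreases.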

We can put forward several non-mutually exclusive hypotheses. First, the quality of the signal is, on average, lower in patients (due to several factors: mechanical ventilation, erratic muscle activity, electrical interference due to hospital beds, etc.). Second, there is growing evidence that motor impairments come with cognitive impairments, whatever the etiology [35,36,37]. Cognitive impairments are very prevalent in both the locked-in syndrome [38] and ALS [36], even early in the course of ALS [37]. In this context, it might be that our paradigm is cognitively too demanding for the patients: binaural listening requires not only focusing on the “ATTENDED” stream, but also inhibiting the “IGNORED” one. In addition, patients have to be able to understand fairly complex instructions and to sustain their attention for half an hour or so. However, all the patients included in this study could handle the complexity of communicating with a yes–no code using a letter board, which presupposes the preservation of some cognitive abilities, especially in terms of working memory and executive functions. Despite this ability, fewer than half of them proved able to control the BCI.

It seems difficult to further simplify the protocol given the intrinsic and technical limitations of EEG [35]. However, one possibly useful change to be tested would be to no longer present the "yes" and "no" streams concurrently, but alternately, in the form of short separate blocks. This non-lateralized paradigm could also be useful for patients with unilateral deafness. This approach could make the attentional task easier without unduly extending the duration of a block. Patients would concentrate on sounds during blocks where the relevant answer is presented, while during irrelevant blocks they would divert their attention away from sounds (e.g. by imagining navigating in a familiar environment [39]). This may reduce the mental workload and could help patients with cognitive impairments, especially frontal ones, which are quite frequent at an advanced stage of ALS and can occur in LIS too. Moreover, some studies suggest that it is possible for persons with motor impairment to improve their BCI performance with training over several sessions [40].

Beyond improving the protocols, there is a need to better understand the particularities of patients with severe motor impairment, which remain poorly explored at the moment, at both the neurophysiological and cognitive levels [36]. Here we chose an auditory protocol to overcome the oculomotor limitations of patients with severe motor impairment. Indeed, oculomotor impairments are known to be a predictor of weak control of visual BCIs [41], even when stimuli are all presented at the same place in an SSVEP paradigm [42]. A recent study with audio-visual stimulation also reported chance-level accuracy in a patient in CLIS (no voluntary control of eye movements), despite the possibility of detecting, offline, some differences between target and non-target responses, suggesting that the patient did try to do the task [43]. Other markers using mental imagery (e.g. sport imagery, navigation imagery) or motor attempt with people in LIS also failed to improve the control of BCI [44]. Studies exploring user-centered design methods found that temporal demand is considered to contribute most significantly to workload [44]. In addition, some studies have demonstrated the impact of mental workload on ERPs, with decreases in, for example, the N200 and the late re-orienting negativity [45].

In the same vein, it is striking to notice that, in our study, none of the patients with “classical” LIS, who present with oculomotor impairments more often than patients with ALS [16], managed to control the BCI. Likewise, none of the patients lacking an ICA component reflecting saccadic activity (LIS2, LIS3 and ALS2) could control the BCI. Concomitantly, there is substantial evidence in the literature that eye-movement planning and spatial attention are tightly related [46,47,48], although not completely similar [49, 50]. Most of these studies relate to visual spatial attention, but attention is a cross-modal effort: for example, orienting attention toward a tactile target also triggers an automatic displacement of spatial attention in the visual modality [51]. Hence it would be useful to test the impact of eye-movement impairments on spatial auditory attention. Future studies should provide finer clinical information regarding these patients, namely about their oculomotor limitations and their ability to turn their head. Adapted cognitive scales based on a yes–no code, which were developed for persons with LIS, could be very useful in the BCI domain to better assess the cognitive profiles of the patients [38]. This would help identify those who could actually benefit from BCI, as well as the factors that prevent its use.

Availability of data and materials

Data are available upon reasonable request, in compliance with national regulations.

Notes

This threshold was set arbitrarily, as a compromise between selecting enough subjects and keeping only those with a very high BCI accuracy.

References

Bauer G, Gerstenbrand F, Rumpl E. Varieties of the locked-in syndrome. J Neurol. 1979;221(2):77–91.

Laureys S, et al. The locked-in syndrome: what is it like to be conscious but paralyzed and voiceless? Prog Brain Res. 2005;150:495–511. https://doi.org/10.1016/S0079-6123(05)50034-7.

León-Carrión J, van Eeckhout P, Domínguez-Morales MDR, Pérez-Santamaría FJ. The locked-in syndrome: a syndrome looking for a therapy. Brain Inj. 2002;16(7):571–82. https://doi.org/10.1080/02699050110119781.

Schnakers C, et al. Diagnostic accuracy of the vegetative and minimally conscious state: clinical consensus versus standardized neurobehavioral assessment. BMC Neurol. 2009;9:35. https://doi.org/10.1186/1471-2377-9-35.

Claassen J, et al. Detection of brain activation in unresponsive patients with acute brain injury. N Engl J Med. 2019;380(26):26. https://doi.org/10.1056/NEJMoa1812757.

Morlet D, et al. Infraclinical detection of voluntary attention in coma and post-coma patients using electrophysiology. Clin Neurophysiol. 2022. https://doi.org/10.1016/j.clinph.2022.09.019.

Owen AM, Coleman MR, Boly M, Davis MH, Laureys S, Pickard JD. Detecting awareness in the vegetative state. Science. 2006;313(5792):1402. https://doi.org/10.1126/science.1130197.

Luauté J, Morlet D, Mattout J. BCI in patients with disorders of consciousness: clinical perspectives. Ann Phys Rehabil Med. 2015;58(1):29–34. https://doi.org/10.1016/j.rehab.2014.09.015.

Guger C, et al. Complete locked-in and locked-in patients: command following assessment and communication with vibro-tactile p300 and motor imagery brain–computer interface tools. Front Neurosci. 2017;11:251. https://doi.org/10.3389/fnins.2017.00251.

Chaudhary U, Xia B, Silvoni S, Cohen LG, Birbaumer N. Brain–computer interface-based communication in the completely locked-in state. PLoS Biol. 2017;15(1): e1002593. https://doi.org/10.1371/journal.pbio.1002593.

Spüler M. Questioning the evidence for BCI-based communication in the complete locked-in state. PLOS Biol. 2019;17(4): e2004750. https://doi.org/10.1371/journal.pbio.2004750.

The PLOS Biology Editors. Retraction: brain–computer interface-based communication in the completely locked-in state. PLOS Biol. 2019;17(12): e3000607. https://doi.org/10.1371/journal.pbio.3000607.

Okahara Y, et al. Long-term use of a neural prosthesis in progressive paralysis. Sci Rep. 2018;8(1):1. https://doi.org/10.1038/s41598-018-35211-y.

Chaudhary U, et al. Spelling interface using intracortical signals in a completely locked-in patient enabled via auditory neurofeedback training. Nat Commun. 2022;13(1):1236. https://doi.org/10.1038/s41467-022-28859-8.

Lesenfants D, et al. An independent SSVEP-based brain–computer interface in locked-in syndrome. J Neural Eng. 2014;11(3): 035002. https://doi.org/10.1088/1741-2560/11/3/035002.

Graber M, Challe G, Alexandre MF, Bodaghi B, LeHoang P, Touitou V. Evaluation of the visual function of patients with locked-in syndrome: report of 13 cases. J Fr Ophtalmol. 2016;39(5):5. https://doi.org/10.1016/j.jfo.2016.01.005.

Lulé D, et al. Probing command following in patients with disorders of consciousness using a brain–computer interface. Clin Neurophysiol. 2013;124(1):101–6. https://doi.org/10.1016/j.clinph.2012.04.030.

Pokorny C, et al. The auditory P300-based single-switch brain-computer interface: paradigm transition from healthy subjects to minimally conscious patients. Artif Intell Med. 2013;59(2):81–90. https://doi.org/10.1016/j.artmed.2013.07.003.

Sellers EW, Donchin E. A P300-based brain–computer interface: initial tests by ALS patients. Clin Neurophysiol. 2006;117(3):538–48. https://doi.org/10.1016/j.clinph.2005.06.027.

Kübler A, Furdea A, Halder S, Hammer EM, Nijboer F, Kotchoubey B. A brain–computer interface controlled auditory event-related potential (p300) spelling system for locked-in patients. Ann N Y Acad Sci. 2009;1157:90–100. https://doi.org/10.1111/j.1749-6632.2008.04122.x.

Hill NJ, et al. A practical, intuitive brain–computer interface for communicating ‘yes’ or ‘no’ by listening. J Neural Eng. 2014;11(3): 035003. https://doi.org/10.1088/1741-2560/11/3/035003.

Rivet B, Souloumiac A, Attina V, Gibert G. xDAWN algorithm to enhance evoked potentials: application to brain-computer interface. IEEE Trans Biomed Eng. 2009;56(8):Art. no. 8. https://doi.org/10.1109/TBME.2009.2012869.

Cedarbaum JM, et al. The ALSFRS-R: a revised ALS functional rating scale that incorporates assessments of respiratory function. J Neurol Sci. 1999;169(1–2):13–21. https://doi.org/10.1016/S0022-510X(99)00210-5.

Kübler A, et al. The user-centered design as novel perspective for evaluating the usability of BCI-controlled applications. PLoS ONE. 2014;9(12): e112392. https://doi.org/10.1371/journal.pone.0112392.

Gramfort A, et al. MEG and EEG data analysis with MNE-Python. Front Neurosci. 2013;7:267. https://doi.org/10.3389/fnins.2013.00267.

Braga RM, Fu RZ, Seemungal BM, Wise RJS, Leech R. Eye movements during auditory attention predict individual differences in dorsal attention network activity. Front Hum Neurosci. 2016. https://doi.org/10.3389/fnhum.2016.00164.

Abeles D, Amit R, Tal-Perry N, Carrasco M, Yuval-Greenberg S. Oculomotor inhibition precedes temporally expected auditory targets. Nat Commun. 2020;11(1):3524. https://doi.org/10.1038/s41467-020-17158-9.

Kadosh O, Bonneh YS. Involuntary oculomotor inhibition markers of saliency and deviance in response to auditory sequences. J Vis. 2022;22(5):8. https://doi.org/10.1167/jov.22.5.8.

Combrisson E, Jerbi K. Exceeding chance level by chance: the caveat of theoretical chance levels in brain signal classification and statistical assessment of decoding accuracy. J Neurosci Methods. 2015;250:126–36. https://doi.org/10.1016/j.jneumeth.2015.01.010.

Maris E, Oostenveld R. Nonparametric statistical testing of EEG- and MEG-data. J Neurosci Methods. 2007;164(1):177–90. https://doi.org/10.1016/j.jneumeth.2007.03.024.

Smith SM, Nichols TE. Threshold-free cluster enhancement: addressing problems of smoothing, threshold dependence and localisation in cluster inference. Neuroimage. 2009;44(1):83–98. https://doi.org/10.1016/j.neuroimage.2008.03.061.

Lugo ZR, et al. Cognitive processing in non-communicative patients: what can event-related potentials tell us? Front Hum Neurosci. 2016;10:569. https://doi.org/10.3389/fnhum.2016.00569.

Lakatos P, Karmos G, Mehta AD, Ulbert I, Schroeder CE. Entrainment of neuronal oscillations as a mechanism of attentional selection. Science. 2008. https://doi.org/10.1126/science.1154735.

Besle J, et al. Tuning of the human neocortex to the temporal dynamics of attended events. J Neurosci. 2011;31(9):3176–85. https://doi.org/10.1523/JNEUROSCI.4518-10.2011.

Séguin P, Maby E, Mattout J. Why BCIs work poorly with the patients who need them the most? Proc 8th Graz Brain Comput Interface Conf 2019 Bridg Sci Appl. https://doi.org/10.3217/978-3-85125-682-6-48.

Kellmeyer P, Grosse-Wentrup M, Schulze-Bonhage A, Ziemann U, Ball T. Electrophysiological correlates of neurodegeneration in motor and non-motor brain regions in amyotrophic lateral sclerosis—implications for brain–computer interfacing. J Neural Eng. 2018;15(4): 041003. https://doi.org/10.1088/1741-2552/aabfa5.

Finsel J, Uttner I, Vázquez Medrano CR, Ludolph AC, Lulé D. Cognition in the course of ALS-a meta-analysis. Amyotroph Lateral Scler Front Degener. 2023;24(1–2):2–13. https://doi.org/10.1080/21678421.2022.2101379.

Rousseaux M, Castelnot E, Rigaux P, Kozlowski O, Danzé F. Evidence of persisting cognitive impairment in a case series of patients with locked-in syndrome. J Neurol Neurosurg Psychiatry. 2009;80(2):2. https://doi.org/10.1136/jnnp.2007.128686.

Morlet D, Ruby P, André-Obadia N, Fischer C. The auditory oddball paradigm revised to improve bedside detection of consciousness in behaviorally unresponsive patients. Psychophysiology. 2017;54(11):1644–62. https://doi.org/10.1111/psyp.12954.

Halder S, Käthner I, Kübler A. Training leads to increased auditory brain-computer interface performance of end-users with motor impairments. Clin Neurophysiol. 2016;127(2):1288–96. https://doi.org/10.1016/j.clinph.2015.08.007.

Marchetti M, Priftis K. Brain–computer interfaces in amyotrophic lateral sclerosis: a metanalysis. Clin Neurophysiol. 2014. https://doi.org/10.1016/j.clinph.2014.09.017.

Lesenfants D, Habbal D, Chatelle C, Soddu A, Laureys S, Noirhomme Q. Toward an attention-based diagnostic tool for patients with locked-in syndrome. Clin EEG Neurosci. 2018;49(2):122–35. https://doi.org/10.1177/1550059416674842.

Pires G, Barbosa S, Nunes UJ, Gonçalves E. Visuo-auditory stimuli with semantic, temporal and spatial congruence for a P300-based BCI: an exploratory test with an ALS patient in a completely locked-in state. J Neurosci Methods. 2022;379: 109661. https://doi.org/10.1016/j.jneumeth.2022.109661.

Lugo ZR, et al. Mental imagery for brain-computer interface control and communication in non-responsive individuals. Ann Phys Rehabil Med. 2019. https://doi.org/10.1016/j.rehab.2019.02.005.

Ke Y, et al. Training and testing ERP-BCIs under different mental workload conditions. J Neural Eng. 2016;13(1): 016007. https://doi.org/10.1088/1741-2560/13/1/016007.

Craighero L, Nascimben M, Fadiga L. Eye position affects orienting of visuospatial attention. Curr Biol. 2004;14(4):4. https://doi.org/10.1016/j.cub.2004.01.054.

Gabay S, Henik A, Gradstein L. Ocular motor ability and covert attention in patients with Duane Retraction Syndrome. Neuropsychologia. 2010;48(10):10. https://doi.org/10.1016/j.neuropsychologia.2010.06.022.

Thompson K, Biscoe KL, Sato TR. Neuronal basis of covert spatial attention in the frontal eye field. J Neurosci. 2005. https://doi.org/10.1523/JNEUROSCI.0741-05.2005.

Smith DT, Schenk T. The Premotor theory of attention: time to move on? Neuropsychologia. 2012;50(6):6. https://doi.org/10.1016/j.neuropsychologia.2012.01.025.

Carrasco M, Hanning NM. Visual perception: attending beyond the eyes’ reach. Curr Biol. 2020;30(21):R1322–4. https://doi.org/10.1016/j.cub.2020.08.095.

Wu C-T, Weissman DH, Roberts KC, Woldorff MG. The neural circuitry underlying the executive control of auditory spatial attention. Brain Res. 2007;1134(1):1. https://doi.org/10.1016/j.brainres.2006.11.088.

Acknowledgements

We thank the people with LIS and ALS and their relatives for their active participation in this study and for their very valuable feedback. We thank Lesly Fornoni for her help in writing the ethical committee files. We thank Pr Jean-Christophe Antoine, Pr Jean-Philippe Camdessanché, and Nathalie Dimier from the university hospitals of Saint-Etienne and Dr Emilien Bernard from the university hospital of Lyon for their valuable feedback and their help in recruiting the persons with ALS. We thank Pauline Mandon for her help in conducting the experiments with patients.

Funding

PS, JM, EM, PG and JL were funded by two grants from the Fondation pour la Recherche Médicale (FRM, DEA20140629858 & FDM201906008524); PS and JM were also funded by the French government (ANR-17-CE40-0005, MindMadeClear & ANR-20-CE17-0023, ANR HiFi) and by the Perce-Neige Fondation. AO, JM, EM and DM were funded by the Fondation pour la Recherche Médicale (FRM, ING20121226307). The COPHY team is supported by the Labex Cortex (ANR-11-LABX-0042).

Author information

Contributions

EM, DM and JM conceived and designed the brain-computer interface and the experimental paradigm. EM, JM and AO designed and implemented the brain-computer interface. PG and JL recruited the patients. PS, MF and EM performed the experiments. PS, EM, DM and JM performed the offline data analysis. PS, EM and JM wrote the manuscript. All authors reviewed the manuscript.

Ethics declarations

Ethics approval and consent to participate

The study was approved by a national ethical committee. The healthy subjects were part of an ancillary project included in clinical trial N° NCT03233282. The patients were included in clinical trial N° NCT02567201.

Consent for publication

All authors and participants gave their consent for publication.

Competing interests

The authors declare no competing interests as defined by BMC, or other interests that might be perceived to influence the results and/or discussion reported in this paper.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Additional file 1: Appendix.

Computation of the classification posterior probability.

Additional file 2:

Auditory stimuli for healthy subjects (SOA= 250 ms).

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Séguin, P., Maby, E., Fouillen, M. et al. The challenge of controlling an auditory BCI in the case of severe motor disability. J NeuroEngineering Rehabil 21, 9 (2024). https://doi.org/10.1186/s12984-023-01289-3

DOI: https://doi.org/10.1186/s12984-023-01289-3