Abstract

Studies on the applications of infrared (IR) spectroscopy and machine learning (ML) in public health have increased greatly in recent years. These technologies show enormous potential for measuring key parameters of malaria, a disease that still causes about 250 million cases and 620,000 deaths annually. Multiple studies have demonstrated that the combination of IR spectroscopy and ML can yield accurate predictions of epidemiologically relevant parameters of malaria in both laboratory and field surveys. Proven applications now include determining the age, species, and blood-feeding histories of mosquito vectors as well as detecting malaria parasite infections in both humans and mosquitoes. As the World Health Organization encourages malaria-endemic countries to improve their surveillance-response strategies, it is crucial to consider whether IR and ML techniques are likely to meet the relevant feasibility and cost-effectiveness requirements, and how best they can be deployed. This paper reviews current applications of IR spectroscopy and ML approaches for investigating malaria indicators in both field surveys and laboratory settings, and identifies key research gaps relevant to these applications. Additionally, the article suggests initial target product profiles (TPPs) that should be considered when developing or testing these technologies for use in low-income settings.

Background

Effective control of malaria requires an in-depth understanding of its transmission. This entails estimating parasitological, entomological and epidemiological parameters in respective communities [1]. Specific activities may include detecting malaria infections in humans, estimating mosquito survival following deployment of interventions, identifying malaria-infected mosquitoes, and characterizing the human populations at risk [1]. As countries move towards malaria elimination in line with the strategic goals of the World Health Organization (WHO) [2], there is a need to develop simple, low-cost and scalable methods for assessing key entomological and parasitological indicators of malaria and for monitoring the impact of interventions [1, 3,4,5,6].

Proper management of suspected malaria cases requires confirmation through quality-assured laboratory tests [2, 7]. These tests may also include quantifying the number of asexual malaria parasites in blood samples to determine the severity of the infection, or identifying carriers through the detection of Plasmodium gametocytes [8, 9]. In population surveys, malaria prevalence can be estimated through various methods, such as observing malaria parasites under a microscope, using rapid diagnostic tests (RDTs) to detect parasite-derived proteins and by-products, or detecting parasite nucleic acid sequences through polymerase chain reactions (PCR) [4, 10]. These tools have greatly improved the diagnosis of malaria, guided effective case management, and enhanced the evaluation of key interventions.

In terms of entomological indicators, female Anopheles can transmit malaria only if they live long enough to pick up the infective stages of Plasmodium, and thereafter incubate those parasites until they mature into the infectious sporozoite stage. This process usually takes 10–14 days, but can be slower depending on climatic conditions [11, 12]. Proportions of female mosquitoes that are old enough to transmit malaria can, therefore, be used to estimate vectorial capacity (number of mosquito infective bites produced by a single malaria case) and assess the performance of vector control methods, such as insecticide-treated nets (ITNs) and indoor residual spray (IRS) [13,14,15,16].

The primary measure of malaria transmission intensity, the entomological inoculation rate (EIR), is calculated as the product of the human biting rates (number of bites per unit of time) and the proportion of mosquitoes infected with Plasmodium sporozoites. Estimating EIR requires detailed assessments of Anopheles biting rates, typically through mosquito trapping, and the proportion of female Anopheles that carry infective Plasmodium sporozoites, typically through enzyme-linked immunosorbent assays (ELISA) or PCR [17, 18]. In addition to these core entomological metrics, other measures can be used to estimate the natural survival and transmission potential of Anopheles populations. These may include ovarian dissections to assess parous proportions, analysis of vertebrate blood meals to estimate the proportion of mosquitoes biting humans, and estimation of the proportion of mosquitoes biting indoors and outdoors [1, 19].
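The definition of EIR given above can be illustrated with a short calculation. The Python sketch below is only a minimal illustration: the function name and the example values (10 bites per person per night, 2% of mosquitoes sporozoite-positive) are hypothetical and are not drawn from any study cited here.

```python
def entomological_inoculation_rate(bites_per_person_per_night, sporozoite_rate):
    """Return infectious bites per person per night.

    bites_per_person_per_night: human biting rate (hypothetical input)
    sporozoite_rate: proportion of mosquitoes carrying sporozoites (0 to 1)
    """
    if bites_per_person_per_night < 0:
        raise ValueError("biting rate must be non-negative")
    if not 0.0 <= sporozoite_rate <= 1.0:
        raise ValueError("sporozoite rate must be a proportion between 0 and 1")
    return bites_per_person_per_night * sporozoite_rate


# Illustrative values only: 10 bites per person per night, 2% sporozoite-positive.
nightly_eir = entomological_inoculation_rate(10, 0.02)
annual_eir = nightly_eir * 365  # scale to infectious bites per person per year
print(f"Nightly EIR: {nightly_eir:.2f}; annual EIR: {annual_eir:.0f}")
```

In practice both inputs are themselves estimates with sampling error, so a field analysis would normally report an interval around the EIR rather than a single product.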

Although these strategies for monitoring malaria transmission have contributed to progress against the disease [20], there are still considerable obstacles related to operational costs, performance accuracies, scalability, and human resource requirements [5, 6, 21]. In order to align with global priorities for malaria elimination, further advancements in both entomological and parasitological surveillance are just as important as the need for new drugs, vaccines, or vector control approaches [2, 6].

A recent advancement in malaria monitoring is the use of infrared (IR) spectroscopy in combination with machine learning (ML) techniques to assess key indicators of malaria. These indicators include the chronological age of mosquitoes (e.g. number of days post emergence) [22,23,24,25], blood-feeding histories of malaria vectors [26], Plasmodium infections in human blood [27,28,29] or mosquitoes [30], and identification of malaria vector species [22]. In this technique, biological samples are scanned with infrared radiation, and the energy absorbed by the covalent bonds in the target specimen causes its molecules to vibrate. An infrared spectrum generates information about the molecules that absorb the radiation and their intensity of absorption [31]. Despite the subtle biochemical differences between specimens with different biological traits, ML algorithms can disentangle these spectral changes and map them to specific phenotypes [27, 31, 32]. Together, IR and ML-based systems constitute robust, easy-to-use, reagent-free, non-invasive and low-cost approaches, making them attractive in low-income settings [22, 24, 26]. As a result, there has been a significant increase in the number of studies evaluating or validating these techniques for monitoring vector-borne diseases [23, 33, 34].

To ensure maximum benefits going forward, it is important to identify existing gaps and the essential and desirable characteristics that should be met for these technologies to be effectively integrated into routine malaria control programmes. The aim of this article is to review existing IR spectroscopy and ML applications for malaria surveillance and diagnostics, to identify gaps for field use, and to outline target product profiles that such technologies should meet to be suitable for low-income settings.

Current methods for measuring malaria transmission

Parasitological methods

The most common method for parasitological assessment of malaria is light microscopy, which is standard practice in many laboratories and relies on direct observations of malaria parasites on thick or thin smears of blood [35,36,37]. Although light microscopy is accessible even in low-resource settings, it requires highly experienced personnel and can generally detect only parasite densities above 50 parasites/μl of blood, with detection thresholds typically ranging between 50 and 500 parasites/μl [38, 39]. The method may, therefore, miss individuals with low parasitaemia levels or asymptomatic carriers [37, 38], and may perform poorly in low-transmission settings [42]. The diagnostic accuracy might also be compromised by poor preparation of thick or thin blood smears and by errors in visual identification [43, 44].

Another common approach is the use of malaria rapid diagnostic tests (RDTs), which have revolutionized malaria investigations in both clinical settings and community surveys due to their low cost and speed [45, 46]. Moreover, they do not require highly trained or experienced personnel to perform or interpret the tests, and can be used even in hard-to-reach areas and by community healthcare workers [45, 47]. Most RDTs target the parasite antigen, histidine-rich protein II (HRP-2), which is abundant in Plasmodium falciparum-infected red blood cells [38, 48]. Some RDTs also target glycolytic enzymes, such as Plasmodium aldolase and Plasmodium lactate dehydrogenase (pLDH) antigens, and can detect non-falciparum malaria parasites, such as Plasmodium ovale, Plasmodium malariae and Plasmodium vivax [38, 49].

The main disadvantages of RDTs include the lack of quantitative information and poor performance in asymptomatic cases or cases of low-level parasitaemia, such as those below 100 parasites/μl [4, 47]. In addition, genetic mutations of the HRP-2 genes, which are spreading around the world, compromise the sensitivity of RDTs [51,52,53,54,55,56,57,58]. These gene deletions, which have so far been detected in nearly 40 countries [59], make the malaria parasites undetectable by HRP-2 based RDTs even when the patients are severely ill. The WHO currently recommends that countries should withdraw these specific RDTs if more than 5% of malaria infections have HRP-2 mutations [59].

Nucleic acid-based diagnostics, such as PCR, have the highest sensitivity but are unaffordable in most malaria-endemic settings and are, therefore, rarely used [60,61,62]. PCR can detect parasitaemia as low as 1–5 parasites/μl of blood, but is used mostly in research settings because of its high cost and the need for specialized facilities and personnel [38, 62, 63]. Epidemiological surveys have also demonstrated that PCR assays can be used to identify areas with unusually high malaria transmission [37, 42]. Moreover, one analysis of methods for detecting malaria hotspots in coastal Kenya concluded that PCR was the most appropriate for mapping asymptomatic cases once overall prevalence had dropped significantly [42]. Lastly, PCR also provides detailed information on Plasmodium species based on the small subunit 18S rRNA or circumsporozoite protein genes, and can detect mixed infections [38, 62]. Unfortunately, as summarized in Fig. 1A, the techniques require highly skilled labour as well as expensive equipment and reagents, making them untenable for regular use in places with poor supply of laboratory materials [4].

Fig. 1 Applicability, strengths, and weaknesses of current methods used to measure key malaria indicators. Panel A compares the three most common methods for parasitological assessment [51, 64,65,66,67,68,69,70,71,72], while panel B compares the three main methods for entomological assessment [73,74,75,76,77,78,79,80,81,82,83], on a proportion score of 1–100. These scores are based on expert opinion of the authors of this article.

Entomological methods

The WHO has outlined several entomological indicators that malaria programmes may consider for monitoring transmission dynamics, guiding the selection or deployment of control strategies, and evaluating control efforts [1, 7]. These include: (i) mosquito blood-feeding histories, biting frequencies, and resting behaviours, (ii) vector species presence and densities, (iii) insecticide resistance status, (iv) proportion of mosquitoes with Plasmodium sporozoites, and (v) larval habitat profiles [1, 20]. The indicators may be prioritized differently depending on the local capabilities, malaria epidemiological profiles, financial constraints, and prevailing control strategies in the respective countries. However, the most central ones are biting rate, mosquito density and EIR.

Entomological surveys involve different sampling methods, after which the collected mosquitoes are sorted by taxa and physiological features. Adult female mosquitoes are frequently dissected for analysis of their internal organs (e.g. gut, reproductive systems and salivary glands) or retained for other laboratory analyses [19]. Mosquitoes are initially sorted by sex and species based on their exterior morphology using taxonomic keys [84, 85], but the morphologically indistinguishable members of species complexes, such as Anopheles gambiae sensu lato (s.l.) and the Anopheles funestus group, require further distinction by PCR [86,87,88]. As summarized in Fig. 1B, these methods are time-consuming, expensive, require specialized training, and are not always readily available locally [89].

Depending on the research goals, additional laboratory tests may be performed on the collected mosquitoes. These can include ELISA tests to detect malaria parasite proteins or PCR tests to find Plasmodium sporozoites in the heads and thoraces of female Anopheles mosquitoes [83, 90, 91]. Additionally, the stomach contents of the mosquitoes can be examined, using ELISA [92] or PCR [93], to identify the vertebrate sources of mosquito blood meals and thereby determine their preference for biting humans compared to other animals. The age of field-collected female mosquitoes is generally determined by dissection to examine changes in their ovaries, with age here being estimated in terms of reproductive history (e.g. whether parous or not, and if parous how many gonotrophic cycles have been completed) rather than in terms of chronological age (e.g. number of days post emergence) [19, 77, 94, 95]. Estimates of physiological age derived from these dissection methods are used to approximate chronological age based on a fixed assumption of the number of days required to complete a gonotrophic cycle in the field [76, 96]. Finally, a series of bio-efficacy and molecular assays can be done to determine the resistance of the mosquitoes to insecticides and to inform appropriate insecticidal interventions [97].

Entomological monitoring is complex and costly, and as a result, only a small number of malaria-endemic countries can monitor all the recommended entomological parameters on a large scale [20, 21, 98]. A recent analysis of vector surveillance programmes in malaria-endemic countries found that countries with the highest burden have far less surveillance capacity than countries nearing elimination [20]. Overall, most countries are not well-equipped to establish effective surveillance systems with the minimum essential data necessary to detect changes and adjust public health responses.

Applications of infrared spectroscopy and machine learning for parasitological and entomological surveys of malaria

The attributes of mosquitoes or human body tissues can be studied by analysing their infrared (IR) spectral signatures. These signatures contain complex biochemical information represented by absorbance intensities at different wavenumbers. Near-infrared (NIR) spectroscopy specifically measures absorption by vibrational overtones, i.e. vibrations that are excited from the ground state to the second or third energy level, in the 14,000–4000 cm−1 range. On the other hand, mid-infrared (MIR) spectroscopy measures absorption by fundamental vibrations of molecular bonds in the 4000–400 cm−1 range, which allows for more direct quantification of functional chemical groups present in substances, such as chitin, protein, or wax in the samples of interest [22, 33].
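Because spectrometer specifications are often quoted in wavelength rather than wavenumber, it can help to convert between the two. The sketch below uses the standard relation that wavelength in nanometres equals 10^7 divided by wavenumber in cm−1, and labels a wavenumber using the NIR and MIR ranges quoted above. The function names are illustrative, and assigning the shared 4000 cm−1 boundary to MIR is an arbitrary choice made here.

```python
def wavenumber_to_wavelength_nm(wavenumber_per_cm):
    """Convert a wavenumber in cm^-1 to a wavelength in nanometres."""
    if wavenumber_per_cm <= 0:
        raise ValueError("wavenumber must be positive")
    # 1 cm = 1e7 nm, so wavelength (nm) = 1e7 / wavenumber (cm^-1)
    return 1e7 / wavenumber_per_cm


def spectral_region(wavenumber_per_cm):
    """Label a wavenumber using the NIR and MIR ranges quoted in the text.

    Assumption: the shared boundary at 4000 cm^-1 is assigned to MIR.
    """
    if 4000 < wavenumber_per_cm <= 14000:
        return "NIR"
    if 400 <= wavenumber_per_cm <= 4000:
        return "MIR"
    return "outside NIR/MIR"


for wavenumber in (14000, 4000, 400):
    wavelength = wavenumber_to_wavelength_nm(wavenumber)
    print(f"{wavenumber} cm^-1 = {wavelength:.0f} nm ({spectral_region(wavenumber)})")
```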

Once the samples have been scanned, ML algorithms can be used to analyse the infrared spectral data and identify specific entomological and parasitological parameters [23,24,25, 28, 99]. Additional techniques, such as transfer learning, may be used to reduce errors and improve the accuracy of the analysis [100]. These algorithms can be used to determine features such as mosquito age, species identity, infection status, and blood meal types. Studies have shown that combining IR spectroscopy with machine learning (IR-ML) can provide accurate predictions and estimates of various transmission indicators [33]. For example, this approach has been used to classify malaria-transmitting mosquitoes by chronological age or number of gonotrophic cycles [22, 23], making it useful for studying the effects of vector control on mosquito populations. The potential of IR-ML techniques for measuring malaria transmission should be evaluated based on factors such as robustness, speed, validity, infrastructure needs, scalability, costs, and cost-effectiveness, and the techniques should only be adopted if they address the challenges of conventional methods.

The cost of IR spectroscopy equipment used for malaria research can vary greatly. Hand-held versions of NIR or MIR spectrometers can cost as little as $2000 [101], while desktop versions of these spectrometers range from $30,000 to $60,000 [27, 99]. Most equipment is durable enough for regular laboratory or field use and requires minimal maintenance. No additional reagents are needed for operation, apart from standard low-cost maintenance items, such as desiccants to limit humidity effects.

Parasitological surveys

The use of IR spectroscopy for disease screening and diagnostic purposes has been demonstrated in various branches of medicine, including the histopathological screening of breast cancers and the prediction of infections (e.g. enterococci), leukaemia, Alzheimer’s, epilepsy, skin carcinoma, brain oedema, and diabetes [102, 103]. An increasing number of studies are also using IR-ML techniques for parasitological screening [33].

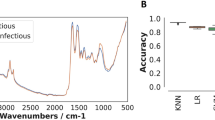

In one study in Tanzania, dried blood spots were scanned using an attenuated total reflection-Fourier transform infrared (ATR-FTIR) spectrometer and a logistic regression model was trained using the resulting MIR spectra [27]. This approach achieved 92% accuracy relative to PCR in identifying individuals infected with P. falciparum, and 85% accuracy for detecting mixed infections, such as those carrying both P. falciparum and P. ovale [27], which are also common in some parts of Tanzania [104]. Another study used synchrotron MIR Fourier transform infrared spectroscopy coupled with artificial neural networks (ANN) to achieve a prediction accuracy of 100% for distinguishing between different stages of cultured P. falciparum, i.e., rings, trophozoites and schizonts [29]. Additionally, support vector machine algorithms fitted with MIR spectra from an ATR-FTIR spectrometer were used to classify infected and uninfected individuals with a sensitivity of 92% and specificity of 97% [28]. Recently, it has been shown that the NIR absorption peaks of malaria parasites can be used for non-invasive detection of malaria infection through human skin using miniaturized hand-held spectrometers [101].

Entomological surveys

Using off-the-shelf hardware, both NIR and MIR spectroscopy can be used to analyse large numbers of mosquito samples at a relatively low cost compared to traditional methods [22, 33, 94, 105]. Studies have demonstrated direct applications to predict mosquito chronological age (e.g. number of days post emergence) and physiological age classes (e.g. whether parous or not), blood-feeding histories and species identity [22, 24,25,26, 105, 106]. These methods have also been used to detect mosquito endosymbionts, such as Wolbachia [107, 108], and mosquito-borne pathogens, such as Zika [34, 109] and malaria [110, 111]. Scientists have attempted to validate these laboratory findings in field settings, but so far only a small number of studies have succeeded [23, 25, 112].

There has been a particular interest in evaluating the potential of IR-ML systems for mapping the demographic characteristics of wild mosquito populations and using this to evaluate the performance of vector control interventions, or monitoring transmission risk. Following multiple successes in combining IR spectroscopy and ML for age-grading laboratory and semi-field vector populations [22, 105], field studies are now underway to validate the potential of this tool in malaria-endemic communities. It is expected that these ongoing efforts will deliver a scalable and operationally relevant IR-ML system that integrates off-the-shelf hardware and open-source software to simplify the technologies. Ultimately, IR-ML based approaches will be most desirable only if they constitute a simple set of routine activities that can be performed by researchers and National Malaria Control Programme (NMCP) staff.

Despite advancements in using IR-ML for malaria surveillance, several challenges remain that might limit its full potential. In entomological surveillance, existing algorithms show up to 99% accuracy with laboratory-reared mosquitoes, but this drops significantly with field data, due to variation in mosquito body composition arising from dietary, genetic, and environmental factors [22, 23, 33, 112, 113]. Fortunately, recent strides using convolutional neural networks (CNNs) have improved accuracy across diverse datasets, with CNNs achieving over 90% accuracy in laboratory and field assessments on specific mosquito species [24]. Transfer learning is also being explored to enhance algorithm generalizability in real-world settings [100]. Additionally, IR-ML faces logistical hurdles akin to those of existing surveillance methods, particularly in hardware maintenance and supply [20]. Moreover, unlike other diagnostic methods, IR-ML lacks built-in verification [42, 114], which is a vital area for future research to ensure reliable operations. The sections below discuss these gaps and potential solutions in detail.

Considerations for research and development of IR-ML approaches for malaria survey and diagnostics

As the WHO encourages malaria-endemic countries to scale up effective surveillance-response strategies for malaria, an important question that remains is what elements should be considered when developing or evaluating new approaches such as IR spectroscopy and ML. Moreover, while IR-ML technologies have the potential to aid in malaria surveillance, several gaps in research and development must be addressed to optimize their utility. This section identifies key research and development gaps in the applications of these technologies for malaria surveys in low-income settings. It further proposes a target product profile (TPP) consisting of both the essential characteristics and desirable characteristics that could improve its uptake, performance, and cost-effectiveness.

Research gaps to be addressed

Table 1 provides a summary of the key research gaps for the IR-ML applications relevant to malaria. For each of these gaps, additional details are provided below.

Gap 1: Need for a greater understanding of the biochemical and physicochemical basis of the IR signals relevant for malaria surveys and diagnosis.

IR spectral absorption intensities are determined by the chemical bonds within chitin, protein, and wax, which are the three most abundant components of the mosquito cuticle. Recent research has shown that these signals can be used to infer the age and species of mosquitoes [22, 23], as well as distinguish between Plasmodium-infected and uninfected human blood [27].

In the case of parasitological observations, it is apparent that the most dominant spectral features that influence ML model predictions are found in the fingerprint region (1730–883 cm−1), where most of the signal from biological samples is expected [27]. The breakdown of haemoglobin into haemozoin crystals may also show up in the IR spectra and can help detect infections in blood samples [27, 115]. The interpretation of IR spectra should, therefore, take into account the sample type and characteristics, and in the case of composite sample types such as parasite-infected blood, considerations on how the parasite interacts with and alters the biochemical makeup of the host tissues should be factored in. For example, changes in the carbohydrate regions at 1144, 1101 and 1085 cm−1 may be associated with differential glucose levels in infected red blood cells, since Plasmodium parasites metabolize glucose faster than normal cells [32, 116]. The presence of these direct and indirect signals of infection raises the possibility of non-specific detection and false positive diagnoses, which should be mitigated with carefully chosen controls when training machine learning (ML) models.
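Restricting a spectrum to the fingerprint region before model training is a simple filtering step. The following sketch shows one way this could be done; the function name is illustrative, and the toy spectrum uses hypothetical absorbance values at wavenumbers mentioned in the text, not real measurements.

```python
FINGERPRINT_REGION = (883, 1730)  # cm^-1 bounds, as quoted in the text


def select_region(wavenumbers, absorbances, region=FINGERPRINT_REGION):
    """Keep only (wavenumber, absorbance) pairs that fall inside the region."""
    if len(wavenumbers) != len(absorbances):
        raise ValueError("wavenumbers and absorbances must have equal length")
    low, high = region
    return [(w, a) for w, a in zip(wavenumbers, absorbances) if low <= w <= high]


# Toy spectrum with hypothetical absorbance values (not real data).
wavenumbers = [4000, 3000, 1730, 1144, 1101, 1085, 883, 400]
absorbances = [0.02, 0.10, 0.35, 0.41, 0.39, 0.40, 0.12, 0.05]

fingerprint = select_region(wavenumbers, absorbances)
for wavenumber, absorbance in fingerprint:
    print(f"{wavenumber} cm^-1: {absorbance:.2f}")
```

Applying the same region selection to training and validation spectra keeps the feature set consistent between the two.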

Given the many different applications of IR-ML for investigating malaria indicators, a generalized framework is essential and should be derived from an updated understanding of the bio-chemical and physico-chemical features of the samples.

Gap 2: Need to validate the performance of the IR-ML in different field settings and laboratories

So far, most of the successes in the application of IR-ML have been with laboratory specimens, but it has been difficult to apply these laboratory-trained algorithms to field-collected specimens due to environmental, laboratory, and genetic sources of variation, and/or limited training data. Only a small number of studies have achieved this with partial success [23, 25, 112]. This challenge is compounded by the limited generalizability of many existing ML models. Efforts towards field validation should be integrated with those that seek to improve the generalizability of the models, using more diverse datasets with greater genetic and environmental variability.

Field validation is also essential for IR-based parasitological surveys, as algorithms trained using spectral data from laboratory parasite cultures may not be applicable to other settings, in part due to different immunological and physiological profiles [117,118,119]. Consequently, early validation of IR-ML for parasitological surveillance is essential and the training data should capture representative signals associated with immunological and genetic composition from multiple populations [27, 120]. For clinical applications, determining the true effectiveness of these techniques may also require large-scale clinical studies.

Gap 3: Need for appropriate ML-frameworks that achieve maximum predictive accuracies with minimal computational power.

While IR-ML approaches for assessing malaria indicators can achieve high accuracies, there are many differences between the analytical methods and algorithms used. Currently, there is no consensus on the best ML frameworks for spectral analysis, either supervised or unsupervised. Ideally, the best framework would be that which provides minimum computational needs while also achieving accurate and generalizable predictions of the target traits.

Initially, multivariate statistics, including partial least squares (PLS) and principal component analysis (PCA), were the most widely used [32, 116, 119, 121, 122]. More recently, IR spectroscopy coupled with different ML classifiers has been used to link the signals of IR biochemical bands to specific biological traits [33]. A general approach is to compare and select from multiple model types. Diverse ML algorithms, such as support vector machines (SVMs), random forests (RFs), K-nearest neighbours (KNNs), naive Bayes (NB), gradient boosting (XGB), and multilayer perceptrons, have been tested for their ability to decipher IR spectra associated with malaria indicators [24, 25, 27,28,29].

Unsupervised learning is often utilized in spectra pre-processing to decrease dimensionality or cluster dominant features before algorithm training [23, 28, 29], but additional statistical techniques can be added to improve generalization. For example, unsupervised PCA was used to reduce the dimensionality of the data set, and an ANN was trained on the pre-processed data to accurately predict malaria parasite stages [29]. Moreover, transfer learning and dimensionality reduction techniques like PCA and t-SNE (t-distributed stochastic neighbour embedding) can significantly reduce computational power while maintaining robust accuracy in models [100].
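The pattern described above, unsupervised dimensionality reduction followed by a supervised classifier, can be sketched in a few lines of dependency-free Python. This is a simplified stand-in rather than the method of any cited study: it estimates only the first principal axis by power iteration instead of a full PCA, it replaces the ANN with a nearest-centroid rule, and the five-channel "spectra" are synthetic values invented for illustration.

```python
import math
import random


def center(rows):
    """Subtract per-channel means so every spectral channel has mean zero."""
    n = len(rows)
    means = [sum(column) / n for column in zip(*rows)]
    centered = [[x - m for x, m in zip(row, means)] for row in rows]
    return centered, means


def first_principal_axis(centered, iterations=100, seed=0):
    """Estimate the leading principal axis by power iteration on X^T X."""
    rng = random.Random(seed)
    dim = len(centered[0])
    axis = [rng.random() for _ in range(dim)]
    for _ in range(iterations):
        # scores = X @ axis, then update = X^T @ scores (i.e. X^T X @ axis)
        scores = [sum(x * a for x, a in zip(row, axis)) for row in centered]
        update = [sum(s * row[j] for s, row in zip(scores, centered))
                  for j in range(dim)]
        norm = math.sqrt(sum(u * u for u in update))
        axis = [u / norm for u in update]
    return axis


def project(spectrum, means, axis):
    """Score a single spectrum on the principal axis."""
    return sum((x - m) * a for x, m, a in zip(spectrum, means, axis))


def fit_centroids(scores, labels):
    """Mean score per class: a deliberately simple classifier."""
    centroids = {}
    for label in set(labels):
        members = [s for s, l in zip(scores, labels) if l == label]
        centroids[label] = sum(members) / len(members)
    return centroids


def predict(score, centroids):
    """Assign the class whose centroid is closest to the score."""
    return min(centroids, key=lambda label: abs(score - centroids[label]))


# Synthetic five-channel "spectra" (hypothetical numbers, not real measurements).
rng = random.Random(42)
BASELINE = [0.20, 0.35, 0.50, 0.40, 0.25]
INFECTION_SHIFT = [0.00, 0.00, 0.15, 0.10, 0.00]


def synthetic_spectrum(infected):
    """Baseline plus an optional class-specific shift and small Gaussian noise."""
    shift = INFECTION_SHIFT if infected else [0.0] * len(BASELINE)
    return [b + s + rng.gauss(0, 0.01) for b, s in zip(BASELINE, shift)]


labels = ["infected" if i % 2 else "uninfected" for i in range(40)]
spectra = [synthetic_spectrum(label == "infected") for label in labels]

centered, means = center(spectra)
axis = first_principal_axis(centered)
centroids = fit_centroids([project(s, means, axis) for s in spectra], labels)

new_spectrum = synthetic_spectrum(infected=True)
print(predict(project(new_spectrum, means, axis), centroids))
```

Real spectra have hundreds or thousands of channels and far subtler class differences, so an established library implementation and several retained components would normally be preferred over this single-axis sketch.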

Gap 4: Need to understand the malaria parasite detection thresholds for the IR-ML systems.

As conventional methods have a low likelihood of detecting malaria infections with low parasitaemia [61], it is necessary to understand the lower limits of detection (LLOD) for any novel diagnostic and screening tools. Unfortunately, only a small number of studies have examined such thresholds for IR-based malaria detection. One study which used serially diluted parasites grown in vitro demonstrated that ATR-FTIR data could be used to identify and quantify parasite densities as low as < 1 parasite/μl [32]. Other research has shown that NIR spectroscopy coupled with PCA and PLS can detect parasite densities down to 0.5 parasites/μl and quantify densities down to 50 parasites/μl in isolated RBCs [118].

Other studies have also shown that using wider spectral ranges, e.g. combining the ultraviolet (UV), visible and IR spectra, can accurately detect and measure malaria without the need for complex preservation methods [118, 123]. Nevertheless, most studies that established the LLODs of IR-based detection did not use ML as the framework for interpreting parasite signals in IR spectra. There has been no investigation of detection thresholds for malaria parasites using IR-ML approaches in field settings. Future research should, therefore, establish the absolute or relative LLOD of IR-ML techniques in both point-of-care applications and population surveys.
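One way such a threshold could be estimated for an IR-ML classifier is from a serial dilution series with replicate scans at each parasite density. The sketch below takes the LLOD as the lowest density at which the detection rate, at that density and at every higher one, stays at or above a chosen hit rate. The 95% criterion, the function name, and the replicate results are all assumptions made for illustration; none is specified in the studies cited here.

```python
def lower_limit_of_detection(dilution_results, min_hit_rate=0.95):
    """Return the lowest density meeting the hit-rate criterion, or None.

    dilution_results maps parasite density (parasites/ul) to a list of
    booleans, one per replicate, where True means the model called the
    sample positive. Densities are scanned from highest to lowest and the
    scan stops at the first density that falls below min_hit_rate.
    """
    llod = None
    for density in sorted(dilution_results, reverse=True):
        calls = dilution_results[density]
        hit_rate = sum(calls) / len(calls)
        if hit_rate < min_hit_rate:
            break
        llod = density
    return llod


# Hypothetical replicate calls for a dilution series (not real data).
series = {
    100: [True] * 20,
    10: [True] * 20,
    1: [True] * 20,
    0.5: [True] * 12 + [False] * 8,
}
print(lower_limit_of_detection(series))
```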

Gap 5: Understanding the performance and validity of the IR-ML techniques in settings with varying epidemiological profiles.

Malaria screening methods perform differently in settings with varying transmission intensities and parasitaemia. The most sensitive markers of malaria infections at low transmission intensities tend to be nucleic acids or antibodies to P. falciparum [124]. One study in coastal Kenya that compared RDTs, light microscopy, and PCR showed that malaria transmission hotspots detectable by PCR overlapped with those detectable by microscopy in moderate-transmission settings but not in low-transmission settings [42]. Elsewhere, the effectiveness of RDTs and microscopy was greatest in regions with high malaria transmission or in the presence of high parasitaemia [125]. These tests can, however, miss many infections in low-transmission regions, where microscopy-negative individuals may still contribute 20–50% of infections sustaining transmission [126].

According to the WHO-backed “High Burden, High Impact” malaria strategy [127], endemic countries are encouraged to implement sub-nationally tailored plans that differentially address high and low malaria burdens. This requires sensitive, high-throughput, and fast screening tools for malaria with comparable validity across transmission settings [2, 5]. Unfortunately, the performance of new tools such as IR-ML has not been compared across settings. Researchers have been able to identify malaria-positive MIR spectra with models trained on pooled data from both low and high transmission settings or from high-transmission settings only, but not across strata [27, 28]. Additionally, the positive predictive value (the proportion of positive predictions that are true positives) and negative predictive value (the proportion of negative predictions that are true negatives) should be clarified. One study in Tanzania estimated the positive and negative predictive values of IR-ML at 92.8 and 91.7%, respectively, for detecting malaria infections in field-collected dried blood spots relative to PCR [27], but this study had only a small number of samples. Future studies should include broad demonstrations of the performance of IR-ML approaches in different epidemiological strata.
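The dependence of predictive values on transmission setting can be made concrete with a short calculation. The sketch below computes standard diagnostic metrics from a confusion matrix and then uses Bayes' theorem to show how positive and negative predictive values shift with prevalence for a fixed sensitivity and specificity. The 92% sensitivity and 97% specificity are the figures quoted earlier for one MIR-based classifier [28]; the two prevalence levels and the confusion-matrix counts are hypothetical.

```python
def diagnostic_metrics(tp, fp, tn, fn):
    """Sensitivity, specificity, PPV and NPV from confusion-matrix counts."""
    return {
        "sensitivity": tp / (tp + fn),
        "specificity": tn / (tn + fp),
        "ppv": tp / (tp + fp),
        "npv": tn / (tn + fn),
    }


def predictive_values(sensitivity, specificity, prevalence):
    """Return (PPV, NPV) at a given prevalence using Bayes' theorem."""
    true_pos = sensitivity * prevalence
    false_pos = (1 - specificity) * (1 - prevalence)
    true_neg = specificity * (1 - prevalence)
    false_neg = (1 - sensitivity) * prevalence
    return true_pos / (true_pos + false_pos), true_neg / (true_neg + false_neg)


# Hypothetical counts, used only to exercise the function.
print(diagnostic_metrics(tp=90, fp=10, tn=80, fn=20))

# Same test characteristics, two hypothetical prevalence levels.
for prevalence in (0.30, 0.01):
    ppv, npv = predictive_values(0.92, 0.97, prevalence)
    print(f"prevalence {prevalence:.0%}: PPV {ppv:.1%}, NPV {npv:.1%}")
```

With sensitivity and specificity held fixed, the positive predictive value falls as prevalence falls, which is one reason why performance reported from a high-transmission site cannot be assumed to hold in a low-transmission one.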

Gap 6: The need for essential human resource training in malaria-endemic countries.

The implementation of effective malaria surveillance in endemic countries is hindered by shortages of trained personnel and facilities. A global survey found that only 8% of malaria-endemic countries had sufficient capacity for vector surveillance and nearly 50% had no capacity to implement core interventions [21]. To effectively implement IR-ML based surveillance at the country level, two forms of training are necessary: one for potential users, including researchers and malaria surveillance officers, and one for higher-level experts capable of tasks such as manipulating infrared and machine learning systems and creating new classification algorithms. Countries may also implement periodic refresher training to boost human resource capabilities [128].

To ensure sustainability and effectiveness, a comprehensive and strategic training plan involving the development of IR-ML training guidelines and partnerships with research and academic institutions is necessary.

Gap 7: Need to select the most appropriate hardware and software platforms.

Selecting suitable hardware and software platforms is crucial for enhancing the scalability of IR-ML systems for malaria surveys and diagnostics. A mix of hardware, such as sample collection devices and spectrometers, and software, such as spectral filters and ML models, is necessary. Portable devices are available for field surveys [22, 23, 26], but they are mostly used in clinical or research laboratory settings. To implement them on a large scale, spectrometers designed for areas with limited electricity access, such as solar-powered or battery-powered spectrometers, may be necessary. Other options may include miniaturized IR spectrometers, such as those recently used to detect and quantify malaria parasites in RBCs [118] and for non-invasive parasite detection through human skin [101].

Spectral data must also be easily interpretable for non-expert users in remote settings. This may require deploying trained algorithms on cloud-based platforms and designing user-friendly interfaces that work with simple internet connectivity. Systems based on mobile phone applications [28] or web interfaces [129] are already being tested, and can be enhanced to remain functional even under limited internet connectivity in remote settings. Lastly, the relevant source code should be made available (preferably via code-sharing platforms such as GitHub), together with training in its use.
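One possible way to keep such a system usable under intermittent connectivity is a store-and-forward design, in which spectra are held on the device and uploaded in order once a connection returns. The sketch below is only an illustration of that idea and does not describe any of the systems cited above. The class `StoreAndForwardQueue` and the `upload` callable are hypothetical names, and `upload` is assumed to return `True` on success and `False` when the network is unavailable.

```python
from collections import deque
from typing import Callable, Deque, List

Spectrum = List[float]


class StoreAndForwardQueue:
    """Hold spectra locally and upload them, oldest first, when a connection is available."""

    def __init__(self, upload: Callable[[Spectrum], bool]) -> None:
        # `upload` is a hypothetical callable: True means the server accepted the spectrum.
        self._upload = upload
        self._pending: Deque[Spectrum] = deque()

    def submit(self, spectrum: Spectrum) -> None:
        """Queue a new spectrum and try to send everything that is waiting."""
        self._pending.append(spectrum)
        self.flush()

    def flush(self) -> int:
        """Try to upload queued spectra in order; stop at the first failure.

        Returns the number of spectra sent during this call.
        """
        sent = 0
        while self._pending:
            if not self._upload(self._pending[0]):
                break  # still offline: keep the spectrum for the next attempt
            self._pending.popleft()
            sent += 1
        return sent

    @property
    def pending(self) -> int:
        """Number of spectra still waiting to be uploaded."""
        return len(self._pending)


# Simulated connectivity for demonstration; no real network calls are made.
network = {"online": False}
received: List[Spectrum] = []


def fake_upload(spectrum: Spectrum) -> bool:
    if not network["online"]:
        return False
    received.append(spectrum)
    return True


queue = StoreAndForwardQueue(fake_upload)
queue.submit([0.10, 0.20, 0.30])   # offline: stays in the queue
queue.submit([0.40, 0.50, 0.60])   # offline: stays in the queue
network["online"] = True
queue.flush()                      # back online: both spectra are sent in order
```

A real deployment would also need persistent storage so that queued spectra survive a restart, and possibly an on-device model for provisional predictions; both are outside the scope of this sketch.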

Gap 8: Need to standardize sample-handling procedures.

Standardized protocols for sample handling are needed to ensure the comparability of findings and to make IR-ML techniques more widely applicable in parasitological and entomological assessments. Unfortunately, little effort has been devoted to determining the optimal methods for storing and preserving samples for IR-ML investigations. For entomological studies, some protocols specify killing specimens with chloroform and storing them in silica gel for 2–3 days before scanning [22, 26], and NIR spectroscopy has been reported to perform well when samples are preserved with desiccants, RNAlater, or refrigeration [130]. Separately, a study using MIR spectroscopy and ML demonstrated the crucial need for standardized handling (storage or preservation) of both training and validation samples [113].

Proper sample storage and preservation are also essential for reducing spectral noise and preserving the biochemical composition of the specimens. For example, the use of anticoagulant materials can significantly affect model performance when using dried RBCs compared to wet RBCs or whole blood scanned using ATR-FTIR spectroscopy [119]. While some of these challenges can be addressed by statistical approaches, e.g. transfer learning [100], optimal performance requires a level of standardization in methods for handling different sample types destined for IR-ML analysis.

Target product characteristics of the IR and ML approaches

To guide further development and evaluation of the IR-ML based approaches for parasitological and entomological investigations of malaria, this article proposes an initial outline of key characteristics that should be met. This target product profile (TPP) consolidates the current thoughts and expertise of the authors as experts and early adopters of the application of this technology for malaria surveys. However, this TPP is subject to future modifications and should be considered as a preliminary version. To satisfy the global strategies for malaria monitoring, the draft describes the necessary and desirable qualities of emerging IR and ML-based techniques for use in both field surveys and clinical settings (Tables 2, 3).

Different TPPs have previously been proposed for future vector surveillance tools [95] and malaria diagnostic tools [131]. The article complements these by proposing relevant attributes for IR-ML techniques including both parasitological (Table 2) and entomological measures (Table 3). The proposed profile presents both the core characteristics, which are the minimum basic requirements for a functional system, as well as other desirable characteristics that could further improve the capabilities, scalability, and cost-effectiveness of this technology.

Conclusion

The combination of infrared spectroscopy and machine learning is being considered as a promising new method for predicting or estimating various entomological and parasitological indicators of malaria. The IR-ML platforms have the added advantage of being simple to use, reagent-free, high-throughput, low-cost, and applicable in rural and remote settings. As malaria-endemic countries seek to enhance their surveillance-response strategies to achieve elimination targets, an important question is how IR-ML-based approaches can complement ongoing processes and be integrated into routine surveillance. This paper has reviewed existing IR and ML applications for malaria surveys and parasite screening, along with their gaps, and has provided initial suggestions on target product profiles (TPPs) for such technologies in low-income settings. The TPPs outline both essential and desirable attributes to guide further development. The article also outlines key research and development gaps that should be addressed in the short and medium term, including the need for field validation, determination of minimum detection thresholds, capacity development and training in user countries, assessment of the validity of the tests in different epidemiological strata, and work on robust hardware and software to enable expanded use.

Availability of data and materials

Not applicable.

Abbreviations

- IR: Infrared spectroscopy
- ML: Machine learning
- AI: Artificial intelligence
- TPPs: Target product profiles
- ANN: Artificial neural networks
- SVM: Support vector machine
- ATR: Attenuated total reflectance
- LR: Logistic regression
- NIRs: Near infrared spectroscopy
- MIRs: Mid infrared spectroscopy

References

WHO. Malaria surveillance, monitoring and evaluation: a reference manual. Geneva: World Health Organization; 2018.

WHO. Global technical strategy for malaria 2016–2030. Geneva: World Health Organization; 2015.

WHO. Test, treat, track: scaling up diagnostic testing, treatment and surveillance for malaria. Geneva: World Health Organization; 2012.

UNITAID. Malaria diagnostics landscape update. Geneva: World Health Organization; 2015.

malERA Refresh Consultative Panel on Tools for Malaria Elimination. An updated research agenda for diagnostics, drugs, vaccines, and vector control in malaria elimination and eradication. PLoS Med. 2017;14:e1002455.

malERA: An updated research agenda for characterising the reservoir and measuring transmission in malaria elimination and eradication. PLoS Med. 2017;14:e1002452.

WHO. Guidelines for malaria. Geneva: World Health Organization; 2022.

Bousema T, Okell L, Shekalaghe S, Griffin JT, Omar S, Sawa P, et al. Revisiting the circulation time of Plasmodium falciparum gametocytes: molecular detection methods to estimate the duration of gametocyte carriage and the effect of gametocytocidal drugs. Malar J. 2010;9:136.

Lamptey H, Ofori MF, Kusi KA, Adu B, Owusu-Yeboa E, Kyei-Baafour E, et al. The prevalence of submicroscopic Plasmodium falciparum gametocyte carriage and multiplicity of infection in children, pregnant women and adults in a low malaria transmission area in Southern Ghana. Malar J. 2018;17:331.

Nankabirwa JI, Yeka A, Arinaitwe E, Kigozi R, Drakeley C, Kamya MR, et al. Estimating malaria parasite prevalence from community surveys in Uganda: a comparison of microscopy, rapid diagnostic tests and polymerase chain reaction. Malar J. 2015;14:528.

Smith DL, McKenzie FE. Statics and dynamics of malaria infection in Anopheles mosquitoes. Malar J. 2004;3:13.

Bellan SE. The importance of age dependent mortality and the extrinsic incubation period in models of mosquito-borne disease transmission and control. PLoS ONE. 2010;5:e10165.

Smith DL, Battle KE, Hay SI, Barker CM, Scott TW, McKenzie FE. Ross, Macdonald, and a theory for the dynamics and control of mosquito-transmitted pathogens. PLoS Pathog. 2012;8:e1002588.

Protopopoff N, Mosha JF, Lukole E, Charlwood JD, Wright A, Mwalimu CD, et al. Effectiveness of a long-lasting piperonyl butoxide-treated insecticidal net and indoor residual spray interventions, separately and together, against malaria transmitted by pyrethroid-resistant mosquitoes: a cluster, randomised controlled, two-by-two fact. Lancet. 2018;391:1577–88.

Garrett-Jones C, Grab B. The assessment of insecticidal impact on the malaria mosquito’s vectorial capacity, from data on the proportion of parous females. Bull World Health Organ. 1964;31:71–86.

Garrett-Jones C, Dranga A, Marinov R, Mihai M. Epidemiological entomology and its application to malaria. Geneva: World Health Organization; 1968.

Beier JC, Perkins PV, Koros JK, Onyango FK, Gargan TP, Wirtz RA, et al. Malaria sporozoite detection by dissection and ELISA to assess infectivity of Afrotropical Anopheles (Diptera: Culicidae). J Med Entomol. 1990;27:377–84.

Chaumeau V, Andolina C, Fustec B, Tuikue Ndam N, Brengues C, Herder S, et al. Comparison of the performances of five primer sets for the detection and quantification of Plasmodium in anopheline vectors by real-time PCR. PLoS ONE. 2016;11:e0159160.

Silver JB. Mosquito ecology: field sampling methods. Dordrecht: Springer; 2007.

Burkot TR, Farlow R, Min M, Espino E, Mnzava A, Russell TL. A global analysis of national malaria control programme vector surveillance by elimination and control status in 2018. Malar J. 2019;18:399.

Russell TL, Farlow R, Min M, Espino E, Mnzava A, Burkot TR. Capacity of national malaria control programmes to implement vector surveillance: a global analysis. Malar J. 2020;19:422.

González Jiménez M, Babayan SA, Khazaeli P, Doyle M, Walton F, Reedy E, et al. Prediction of mosquito species and population age structure using mid-infrared spectroscopy and supervised machine learning. Wellcome Open Res. 2019;4:76.

Siria DJ, Sanou R, Mitton J, Mwanga EP, Niang A, Sare I, et al. Rapid age-grading and species identification of natural mosquitoes for malaria surveillance. Nat Commun. 2022;13:1501.

Milali MP, Sikulu-Lord MT, Kiware SS, Dowell FE, Corliss GF, Povinelli RJ. Age grading An. gambiae and An. arabiensis using near infrared spectra and artificial neural networks. PLoS ONE. 2019;14:e0209451.

Milali MP, Kiware SS, Govella NJ, Okumu F, Bansal N, Bozdag S, et al. An autoencoder and artificial neural network-based method to estimate parity status of wild mosquitoes from near-infrared spectra. PLoS ONE. 2020;15:e0234557.

Mwanga EP, Mapua SA, Siria DJ, Ngowo HS, Nangacha F, Mgando J, et al. Using mid-infrared spectroscopy and supervised machine-learning to identify vertebrate blood meals in the malaria vector, Anopheles arabiensis. Malar J. 2019;18:187.

Mwanga EP, Minja EG, Mrimi E, Jiménez MG, Swai JK, Abbasi S, et al. Detection of malaria parasites in dried human blood spots using mid-infrared spectroscopy and logistic regression analysis. Malar J. 2019;18:341.

Heraud P, Chatchawal P, Wongwattanakul M, Tippayawat P, Doerig C, Jearanaikoon P, et al. Infrared spectroscopy coupled to cloud-based data management as a tool to diagnose malaria: a pilot study in a malaria-endemic country. Malar J. 2019;18:348.

Webster GT, de Villiers KA, Egan TJ, Deed S, Tilley L, Tobin MJ, et al. Discriminating the intraerythrocytic lifecycle stages of the malaria parasite using Synchrotron FT-IR microspectroscopy and an artificial neural network. Anal Chem. 2009;81:2516–24.

Maia MF, Kapulu M, Muthui M, Wagah MG, Ferguson HM, Dowell FE, et al. Detection of Plasmodium falciparum infected Anopheles gambiae using near-infrared spectroscopy. Malar J. 2019;18:85.

Fried A, Richter D. Infrared absorption spectroscopy. Anal Tech Atmos Meas. 2006;1:72–146.

Khoshmanesh A, Dixon MWA, Kenny S, Tilley L, McNaughton D, Wood BR. Detection and quantification of early-stage malaria parasites in laboratory infected erythrocytes by attenuated total reflectance infrared spectroscopy and multivariate analysis. Anal Chem. 2014;86:4379–86.

Goh B, Ching K, Soares Magalhães RJ, Ciocchetta S, Edstein MD, Maciel-De-freitas R, et al. The application of spectroscopy techniques for diagnosis of malaria parasites and arboviruses and surveillance of mosquito vectors: a systematic review and critical appraisal of evidence. PLoS Negl Trop Dis. 2021;15:e0009218.

Fernandes JN, Dos Santos LMB, Chouin-Carneiro T, Pavan MG, Garcia GA, David MR, et al. Rapid, noninvasive detection of Zika virus in Aedes aegypti mosquitoes by near-infrared spectroscopy. Sci Adv. 2018;4:0496.

WHO. Giemsa staining of malaria blood films. Malaria microscopy standard operating procedure. World Health Organization, Regional Office for the Western Pacific, 2016.

WHO. Policy brief on malaria diagnostics in low-transmission settings. Geneva: World Health Organization; 2014.

Tangpukdee N, Duangdee C, Wilairatana P, Krudsood S. Malaria diagnosis: a brief review. Korean J Parasitol. 2009;47:93.

Moody A. Rapid diagnostic tests for malaria parasites. Clin Microbiol Rev. 2002;15:66–78.

Milne LM, Kyi MS, Chiodini PL, Warhurst DC. Accuracy of routine laboratory diagnosis of malaria in the United Kingdom. J Clin Pathol. 1994;47:740–2.

Wongsrichanalai C, Barcus MJ, Muth S, Sutamihardja A, Wernsdorfer WH. A review of malaria diagnostic tools: microscopy and rapid diagnostic test (RDT). Am J Trop Med Hyg. 2007;77(Suppl 6):119–27.

Lo E, Zhou G, Oo W, Afrane Y, Githeko A, Yan G. Low parasitemia in submicroscopic infections significantly impacts malaria diagnostic sensitivity in the highlands of Western Kenya. PLoS ONE. 2015;10:e0121763.

Mogeni P, Williams TN, Omedo I, Kimani D, Ngoi JM, Mwacharo J, et al. Detecting malaria hotspots: a comparison of rapid diagnostic test, microscopy, and polymerase chain reaction. J Infect Dis. 2017;216:1091–8.

Pham NM, Karlen W, Beck HP, Delamarche E. Malaria and the “last” parasite: how can technology help? Malar J. 2018;17:260.

Odhiambo F, Buff AM, Moranga C, Moseti CM, Wesongah JO, Lowther SA, et al. Factors associated with malaria microscopy diagnostic performance following a pilot quality-assurance programme in health facilities in malaria low-transmission areas of Kenya, 2014. Malar J. 2017;16:371.

Cunningham J, Jones S, Gatton ML, Barnwell JW, Cheng Q, Chiodini PL, et al. A review of the WHO malaria rapid diagnostic test product testing programme (2008–2018): performance, procurement and policy. Malar J. 2019;18:387.

WHO. New perspectives: malaria diagnosis: report of a joint WHO/USAID informal consultation. Geneva: World Health Organization; 1999.

Shillcutt S, Morel C, Goodman C, Coleman P, Bell D, Whitty CJM, et al. Cost-effectiveness of malaria diagnostic methods in sub-Saharan Africa in an era of combination therapy. Bull World Health Organ. 2008;86:101–10.

Rosenthal PJ. How do we best diagnose malaria in Africa? Am J Trop Med Hyg. 2012;86:192–3.

Bualombai P, Satimai W, Rodnak D, Ruangsirarak P, Congpuong K, Boonpheng S. Detecting malaria using SD Bioline Malaria Pf/PAN (HRP2, pLDH). J Health Res. 2013;27:135–8.

Abuaku B, Amoah LE, Peprah NY, Asamoah A, Amoako EO, Donu D, et al. Malaria parasitaemia and mRDT diagnostic performances among symptomatic individuals in selected health care facilities across Ghana. BMC Public Health. 2021;21:239.

Gamboa D, Ho M-F, Bendezu J, Torres K, Chiodini PL, Barnwell JW, et al. A large proportion of P. falciparum isolates in the Amazon region of Peru lack pfhrp2 and pfhrp3: implications for malaria rapid diagnostic tests. PLoS ONE. 2010;5:e8091.

Mihreteab S, Anderson K, Pasay C, Smith D, Gatton ML, Cunningham J, et al. Epidemiology of mutant Plasmodium falciparum parasites lacking histidine-rich protein 2/3 genes in Eritrea 2 years after switching from HRP2-based RDTs. Sci Rep. 2021;11:21082.

Feleke SM, Reichert EN, Mohammed H, Brhane BG, Mekete K, Mamo H, et al. Plasmodium falciparum is evolving to escape malaria rapid diagnostic tests in Ethiopia. Nat Microbiol. 2021;6:1289–99.

Thomson R, Beshir KB, Cunningham J, Baiden F, Bharmal J, Bruxvoort KJ, et al. pfhrp2 and pfhrp3 gene deletions that affect malaria rapid diagnostic tests for Plasmodium falciparum: analysis of archived blood samples from 3 African countries. J Infect Dis. 2019;220:1444–52.

Maltha J, Gamboa D, Bendezu J, Sanchez L, Cnops L, Gillet P, et al. Rapid diagnostic tests for malaria diagnosis in the Peruvian Amazon: impact of pfhrp2 gene deletions and cross-reactions. PLoS ONE. 2012;7:e43094.

Koita OA, Doumbo OK, Ouattara A, Tall LK, Konaré A, Diakité M, et al. False-negative rapid diagnostic tests for malaria and deletion of the histidine-rich repeat region of the hrp2 gene. Am J Trop Med Hyg. 2012;86:194–8.

Parr JB, Verity R, Doctor SM, Janko M, Carey-Ewend K, Turman BJ, et al. Pfhrp2-deleted Plasmodium falciparum parasites in the Democratic Republic of the Congo: a national cross-sectional survey. J Infect Dis. 2017;216:36–44.

Beshir KB, Sepúlveda N, Bharmal J, Robinson A, Mwanguzi J, Busula AO, et al. Plasmodium falciparum parasites with histidine-rich protein 2 (pfhrp2) and pfhrp3 gene deletions in two endemic regions of Kenya. Sci Rep. 2017;7:14718.

WHO. False-negative RDT results and implications of new reports of P. falciparum histidine-rich protein 2/3 gene deletions. Geneva: World Health Organization; 2017.

Hänscheid T, Grobusch MP. How useful is PCR in the diagnosis of malaria? Trends Parasitol. 2002;18:395–8.

Okell LC, Ghani AC, Lyons E, Drakeley CJ. Submicroscopic infection in Plasmodium falciparum-endemic populations: a systematic review and meta-analysis. J Infect Dis. 2009;200:1509–17.

Snounou G. Detection and identification of the four malaria parasite species infecting humans by PCR amplification. Methods Mol Biol. 1996;50:263–91.

Perandin F, Manca N, Calderaro A, Piccolo G, Galati L, Ricci L, et al. Development of a real-time PCR assay for detection of Plasmodium falciparum, Plasmodium vivax, and Plasmodium ovale for routine clinical diagnosis. J Clin Microbiol. 2004;42:1214–9.

Sumari D, Mwingira F, Selemani M, Mugasa J, Mugittu K, Gwakisa P. Malaria prevalence in asymptomatic and symptomatic children in Kiwangwa, Bagamoyo district, Tanzania. Malar J. 2017;16:222.

Cottrell G, Moussiliou A, Luty AJF, Cot M, Fievet N, Massougbodji A, et al. Submicroscopic Plasmodium falciparum infections are associated with maternal anemia, premature births, and low birth weight. Clin Infect Dis. 2015;60:1481–8.

Morris U, Xu W, Msellem MI, Schwartz A, Abass A, Shakely D, et al. Characterising temporal trends in asymptomatic Plasmodium infections and transporter polymorphisms during transition from high to low transmission in Zanzibar, 2005–2013. Infect Genet Evol. 2015;33:110–7.

Baum E, Sattabongkot J, Sirichaisinthop J, Kiattibutr K, Davies DH, Jain A, et al. Submicroscopic and asymptomatic Plasmodium falciparum and Plasmodium vivax infections are common in western Thailand—molecular and serological evidence. Malar J. 2015;14:95.

Karl S, Gurarie D, Zimmerman PA, King CH, St. Pierre TG, Davis TME. A sub-microscopic gametocyte reservoir can sustain malaria transmission. PLoS ONE. 2011;6:e20805.

Makler MT, Palmer CJ, Ager AL. A review of practical techniques for the diagnosis of malaria. Ann Trop Med Parasitol. 1998;92:419–34.

Ogunfowokan O, Nwajei AI, Ogunfowokan BA. Sensitivity and specificity of malaria rapid diagnostic test (mRDT CareStatTM) compared with microscopy amongst under five children attending a primary care clinic in southern Nigeria. Afr J Prim Health Care Fam Med. 2020;12:e1–8.

Timbrook TT, Morton JB, McConeghy KW, Caffrey AR, Mylonakis E, LaPlante KL. The effect of molecular rapid diagnostic testing on clinical outcomes in bloodstream infections: a systematic review and meta-analysis. Clin Infect Dis. 2016;64:15–23.

Dowling MAC, Shute GT. A comparative study of thick and thin blood films in the diagnosis of scanty malaria parasitaemia. Bull World Health Organ. 1966;34:249.

Kamau L, Coetzee M, Hunt RH, Koekemoer LL. A cocktail polymerase chain reaction assay to identify members of the Anopheles funestus (Diptera: Culicidae) group. Am J Trop Med Hyg. 2017;66:804–11.

Bell AS, Ranford-Cartwright LC. A real-time PCR assay for quantifying Plasmodium falciparum infections in the mosquito vector. Int J Parasitol. 2004;34:795–802.

Service MW. Estimation of the mortalities of the immature stages and adults. In: Service MW, editor. Mosquito ecology: field sampling methods. Dordrecht: Springer; 1993. p. 752–889.

Davidson G. Estimation of the survival-rate of anopheline mosquitoes in nature. Nature. 1954;174:792–3.

Detinova TS. Age-grouping methods in Diptera of medical importance with special reference to some vectors of malaria. Monogr Ser World Health Organ. 1962;47:13–191.

Polovodova VP. The determination of the physiological age of female Anopheles by the number of gonotrophic cycles completed. Med Parazitol. 1949;18:352.

Beklemishev WN, Detinova TS, Polovodova VP. Determination of physiological age in anophelines and of age distribution in anopheline populations in the USSR. Bull World Health Organ. 1959;21:223–32.

Hoc TQ, Charlwood JD. Age determination of Aedes cantans using the ovarian oil injection technique. Med Vet Entomol. 1990;4:227–33.

Beier JC, Perkins PV, Wirtz RA, Whitmire RE, Mugambi M, Hockmeyer WT. Field evaluation of an enzyme-linked immunosorbent assay (ELISA) for Plasmodium falciparum sporozoite detection in anopheline mosquitoes from Kenya. Am J Trop Med Hyg. 1987;36:459–68.

Tassanakajon A, Boonsaeng V, Wilairat P, Panyim S. Polymerase chain reaction detection of Plasmodium falciparum in mosquitoes. Trans R Soc Trop Med Hyg. 1993;87:273–5.

Bass C, Nikou D, Blagborough AM, Vontas J, Sinden RE, Williamson MS, et al. PCR-based detection of Plasmodium in Anopheles mosquitoes: a comparison of a new high-throughput assay with existing methods. Malar J. 2008;7:177.

Erlank E, Koekemoer LL, Coetzee M. The importance of morphological identification of African anopheline mosquitoes (Diptera: Culicidae) for malaria control programmes. Malar J. 2018;17:43.

Coetzee M. Key to the females of Afrotropical Anopheles mosquitoes (Diptera: Culicidae). Malar J. 2020;19:70.

Koekemoer LL, Kamau L, Hunt RH, Coetzee M. A cocktail polymerase chain reaction assay to identify members of the Anopheles funestus (Diptera: Culicidae) group. Am J Trop Med Hyg. 2002;66:804–11.

Cohuet A, Toto J-C, Simard F, Kengne P, Fontenille D, Coetzee M. Species identification within the Anopheles funestus group of malaria vectors in Cameroon and evidence for a new species. Am J Trop Med Hyg. 2018;69:200–5.

Scott JA, Brogdon WG, Collins FH. Identification of single specimens of the Anopheles gambiae complex by the polymerase chain reaction. Am J Trop Med Hyg. 1993;49:520–9.

Jourdain F, Picard M, Sulesco T, Haddad N, Harrat Z, Sawalha SS, et al. Identification of mosquitoes (Diptera: Culicidae): an external quality assessment of medical entomology laboratories in the MediLabSecure Network. Parasit Vectors. 2018;11:553.

Wirtz RA, Zavala F, Charoenvit Y, Campbell GH, Burkot TR, Schneider I, et al. Comparative testing of monoclonal antibodies against Plasmodium falciparum sporozoites for ELISA development. Bull World Health Organ. 1987;65:39.

Burkot TR, Zavala F, Gwadz RW, Collins FH, Nussenzweig RS, Roberts DR. Identification of malaria-infected mosquitoes by a two-site enzyme-linked immunosorbent assay. Am J Trop Med Hyg. 1984;33:227–31.

Beier JC, Perkins PV, Wirtz RA, Koros J, Diggs D, Gargan TP II, et al. Bloodmeal identification by direct enzyme-linked immunosorbent assay (ELISA), tested on Anopheles (Diptera: Culicidae) in Kenya. J Med Entomol. 1988;25:9–16.

Kent RJ. Molecular methods for arthropod bloodmeal identification and applications to ecological and vector-borne disease studies. Mol Ecol Resour. 2009;9:4–18.

Johnson BJ, Hugo LE, Churcher TS, Ong OTW, Devine GJ. Mosquito age grading and vector-control programmes. Trends Parasitol. 2020;36:39–51.

Farlow R, Russell TL, Burkot TR. Nextgen vector surveillance tools: sensitive, specific, cost-effective and epidemiologically relevant. Malar J. 2020;19:432.

Davidson G, Draper CC. Field studies of some of the basic factors concerned in the transmission of malaria. Trans R Soc Trop Med Hyg. 1953;47:522–35.

WHO. Global report on insecticide resistance in malaria vectors: 2010–2016. Geneva: World Health Organization; 2018.

Okumu F, Gyapong M, Casamitjana N, Castro MC, Itoe MA, Okonofua F, et al. What Africa can do to accelerate and sustain progress against malaria. PLoS Glob Public Health. 2022;2:e0000262.

Sikulu M, Killeen GF, Hugo LE, Ryan PA, Dowell KM, Wirtz RA, et al. Near-infrared spectroscopy as a complementary age grading and species identification tool for African malaria vectors. Parasit Vectors. 2010;3:49.

Mwanga EP, Siria DJ, Mitton J, Mshani IH, González-Jiménez M, Selvaraj P, et al. Using transfer learning and dimensionality reduction techniques to improve generalisability of machine-learning predictions of mosquito ages from mid-infrared spectra. BMC Bioinformatics. 2023;24:11.

Garcia GA, Kariyawasam TN, Lord AR, da Costa CF, Chaves LB, da Lima-Junior JC, et al. Malaria absorption peaks acquired through the skin of patients with infrared light can detect patients with varying parasitemia. PNAS Nexus. 2022;1:272.

Beć KB, Grabska J, Huck CW. Near-infrared spectroscopy in bio-applications. Molecules. 2020;25:2948.

Ng EYK, Etehadtavakol M. Application of infrared to biomedical sciences. Singapore: Springer; 2017.

Tarimo BB, Nyasembe VO, Ngasala B, Basham C, Rutagi IJ, Muller M, et al. Seasonality and transmissibility of Plasmodium ovale in Bagamoyo District, Tanzania. Parasit Vectors. 2022;15:56.

Siria DJ, Sanou R, Mitton J, Mwanga EP, Niang A, Sare I, et al. Rapid ageing and species identification of natural mosquitoes for malaria surveillance. bioRxiv. 2020;15:613.

Sroute L, Byrd BD, Huffman SW. Classification of mosquitoes with infrared spectroscopy and partial least squares-discriminant analysis. Appl Spectrosc. 2020;74:900–12.

Khoshmanesh A, Christensen D, Perez-Guaita D, Iturbe-Ormaetxe I, O’Neill SL, McNaughton D, et al. Screening of Wolbachia endosymbiont infection in Aedes aegypti mosquitoes using attenuated total reflection mid-infrared spectroscopy. Anal Chem. 2017;89:5285–93.

Christensen D, Khoshmanesh A, Perez-Guaita D, Iturbe-Ormaetxe I, O’Neill S, Wood B. Detection and identification of Wolbachia pipientis strains in mosquito eggs using attenuated total reflection fourier transform infrared (ATR FT-IR) spectroscopy. Appl Spectrosc. 2021;75:1003–11.

Santos LMB, Mutsaers M, Garcia GA, David MR, Pavan MG, Petersen MT, et al. High throughput estimates of Wolbachia, Zika and chikungunya infection in Aedes aegypti by near-infrared spectroscopy to improve arbovirus surveillance. Commun Biol. 2021;4:67.

Esperança PM, Blagborough AM, Da DF, Dowell FE, Churcher TS. Detection of Plasmodium berghei infected Anopheles stephensi using near-infrared spectroscopy. Parasit Vectors. 2018;11:377.

Da DF, McCabe R, Somé BM, Esperança PM, Sala KA, Blight J, et al. Detection of Plasmodium falciparum in laboratory-reared and naturally infected wild mosquitoes using near-infrared spectroscopy. Sci Rep. 2021;11:10289.

Krajacich BJ, Meyers JI, Alout H, Dabiré RK, Dowell FE, Foy BD. Analysis of near infrared spectra for age-grading of wild populations of Anopheles gambiae. Parasit Vectors. 2017;10:1–13. https://doi.org/10.1186/s13071-017-2501-1.

Mgaya JN, Siria DJ, Makala FE, Mgando JP, Vianney J-MM, Mwanga EP, et al. Effects of sample preservation methods and duration of storage on the performance of mid-infrared spectroscopy for predicting the age of malaria vectors. Parasit Vectors. 2022;15:281.

Snounou G, Viriyakosol S, Jarra W, Thaithong S, Brown KN. Identification of the four human malaria parasite species in field samples by the polymerase chain reaction and detection of a high prevalence of mixed infections. Mol Biochem Parasitol. 1993;58:283–92.

Goldberg DE, Slater AF, Cerami A, Henderson GB. Hemoglobin degradation in the malaria parasite Plasmodium falciparum: an ordered process in a unique organelle. Proc Natl Acad Sci USA. 1990;87:2931–5.

Roy S, Perez-Guaita D, Andrew DW, Richards JS, McNaughton D, Heraud P, et al. Simultaneous ATR-FTIR based determination of malaria parasitemia, glucose and urea in whole blood dried onto a glass slide. Anal Chem. 2017;89:5238–45.

Lippi G. Machine learning in laboratory diagnostics: valuable resources or a big hoax? Diagnosis. 2021;8:133–5.

Adegoke JA, Kochan K, Heraud P, Wood BR. A near-infrared “Matchbox Size” spectrometer to detect and quantify malaria parasitemia. Anal Chem. 2021;93:5451–8.

Martin M, Perez-Guaita D, Andrew DW, Richards JS, Wood BR, Heraud P. The effect of common anticoagulants in detection and quantification of malaria parasitemia in human red blood cells by ATR-FTIR spectroscopy. Analyst. 2017;142:1192–9.

De Bruyne S, Speeckaert MM, Van Biesen W, Delanghe JR. Recent evolutions of machine learning applications in clinical laboratory medicine. Crit Rev Clin Lab Sci. 2021;58:131–52.

Perez-Guaita D, Andrew D, Heraud P, Beeson J, Anderson D, Richards J, et al. High resolution FTIR imaging provides automated discrimination and detection of single malaria parasite infected erythrocytes on glass. Faraday Discuss. 2016;187:341–52.

Wood BR, Bambery KR, Dixon MWA, Tilley L, Nasse MJ, Mattson E, et al. Diagnosing malaria infected cells at the single cell level using focal plane array Fourier transform infrared imaging spectroscopy. Analyst. 2014;139:4769–74.

Adegoke JA, De Paoli A, Afara IO, Kochan K, Creek DJ, Heraud P, et al. Ultraviolet/visible and near-infrared dual spectroscopic method for detection and quantification of low-level malaria parasitemia in whole blood. Anal Chem. 2021;93:13302–10.

Kangoye DT, Noor A, Midega J, Mwongeli J, Mkabili D, Mogeni P, et al. Malaria hotspots defined by clinical malaria, asymptomatic carriage, PCR and vector numbers in a low transmission area on the Kenyan Coast. Malar J. 2016;15:213.

Moyeh MN, Ali IM, Njimoh DL, Nji AM, Netongo PM, Evehe MS, et al. Comparison of the accuracy of four malaria diagnostic methods in a high transmission setting in coastal Cameroon. J Parasitol Res. 2019;2019:1417967.

Okell LC, Bousema T, Griffin JT, Ouédraogo AL, Ghani AC, Drakeley CJ. Factors determining the occurrence of submicroscopic malaria infections and their relevance for control. Nat Commun. 2012;3:1237.

WHO. High burden to high impact: a targeted malaria response. Geneva: World Health Organization; 2018.

Tetteh M, Dwomoh D, Asamoah A, Kupeh EK, Malm K, Nonvignon J. Impact of malaria diagnostic refresher training programme on competencies and skills in malaria diagnosis among medical laboratory professionals: evidence from Ghana 2015–2019. Malar J. 2021;20:255.

Sow BD, Mshani I, Neema Z, Sanou R, Mwanga E, Okumu FO, et al. An online platform for real-time age-grading and species determination of malaria vectors using artificial intelligence and infrared spectroscopy. PAMCA Conference. 2022; ABS-416. https://conference2022.pamca.org/conference/abstractbook

Dowell FE, Noutcha AEM, Michel K. The effect of preservation methods on predicting mosquito age by near infrared spectroscopy. Am J Trop Med Hyg. 2011;85:1093–6.

Bell D, Fleurent AE, Hegg MC, Boomgard JD, McConnico CC. Development of new malaria diagnostics: matching performance and need. Malar J. 2016;15:406.

Wilson ML. Malaria rapid diagnostic tests. Clin Infect Dis. 2012;54:1637–41.

Acknowledgements

Frank Mussa, Ezekiel Makala, Rukia Mohamed, Elihaika Minja, Najat Kahamba, Betwel John, Letus Muhyaga, Pinda Polius, Emmanuel Hape and Halfan Ngowo are sincerely thanked for their contributions to the development and review of early drafts of this article.

Funding

Support was received from the Bill and Melinda Gates Foundation (Grant No. OPP 1217647 to Ifakara Health Institute), the Royal Society (Grant No. ICA/R1/191238 to SAB, University of Glasgow and Ifakara Health Institute), the Rudolf Geigy Foundation through the Swiss Tropical & Public Health Institute (to Ifakara Health Institute), an Academy of Medical Sciences Springboard Award (ref: SBF007\100094, to FB) and the European Research Council (ERC) under the European Union’s Horizon 2020 research and innovation program (Grant agreement No. 832703, to KW).

Author information

Contributions

IHM, FO, FB and SAB developed the framework of the manuscript. IM wrote the first and subsequent drafts of the manuscript. FO, FB and SAB supervised the writing and reviewing of the manuscript. HMF, MTS, MGJ, FO, FB and SAB contributed to the reviewing and proofreading of the target product profile tables. DJS, EPM, KW, BS, MO, RS, AD, HMF, MTS and MGJ participated in reviewing the final draft of this manuscript. All authors reviewed and approved the final draft of this article.

Ethics declarations

Ethics approval and consent to participate

This review was approved by the Ifakara Health Institute Review Board (Ref: IHI/IRB/No: 1–2021) and the Medical Research Coordinating Committee (MRCC) of the National Institute for Medical Research (NIMR) (Ref: NIMR/HQ/R.8a/Vol. 1X/3735). Permission to publish this review was obtained from NIMR (Ref: NIMR/HQ/P.12 VOL XXXV/130).

Competing interests

All authors declare no competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Mshani, I.H., Siria, D.J., Mwanga, E.P. et al. Key considerations, target product profiles, and research gaps in the application of infrared spectroscopy and artificial intelligence for malaria surveillance and diagnosis. Malar J 22, 346 (2023). https://doi.org/10.1186/s12936-023-04780-3

DOI: https://doi.org/10.1186/s12936-023-04780-3