Abstract

Background

The development of machine learning models to aid in the diagnosis of mental disorders is recognized as a significant breakthrough in the field of psychiatry. However, translating such models into clinical practice remains a challenge, with poor generalizability being a major limitation.

Methods

Here, we conducted a pre-registered meta-research assessment of neuroimaging-based models in the psychiatric literature, quantitatively examining global and regional sampling issues over recent decades, a perspective that has been relatively underexplored. A total of 476 studies (n = 118,137) were included in the current assessment. Based on these findings, we built a comprehensive 5-star rating system to quantitatively evaluate the quality of existing machine learning models for psychiatric diagnoses.

Results

A global sampling inequality in these models was revealed quantitatively (sampling Gini coefficient (G) = 0.81, p < .01), varying across different countries (regions) (e.g., China, G = 0.47; the USA, G = 0.58; Germany, G = 0.78; the UK, G = 0.87). Furthermore, the severity of this sampling inequality was significantly predicted by national economic levels (β = −2.75, p < .001, R²adj = 0.40; r = −.84, 95% CI: −.41 to −.97), and plausibly predicted model performance, with higher sampling inequality associated with higher reported classification accuracy. Further analyses showed that lack of independent testing (84.24% of models, 95% CI: 81.0–87.5%), improper cross-validation (51.68% of models, 95% CI: 47.2–56.2%), and poor technical transparency (87.8% of models, 95% CI: 84.9–90.8%)/availability (80.88% of models, 95% CI: 77.3–84.4%) remain prevalent in current diagnostic classifiers despite improvements over time. Relatedly, model performance was found to be lower in studies with independent cross-country sampling validations (all p < .001, BF10 > 15). In light of this, we proposed a purpose-built quantitative assessment checklist, which demonstrated that the overall ratings of these models increased by publication year but were negatively associated with model performance.

Conclusions

Together, improving economic equality in sampling, and hence the quality of machine learning models, may be crucial to translating neuroimaging-based diagnostic classifiers into clinical practice.

Background

Machine learning (ML) models have been extensively utilized for classifying patients with mental illness to aid in clinical decision-making [1, 2]. By training machine learning models on neuroimaging-based features, diagnostic decisions could become more accurate and reliable with the aid of these objective and high-dimensional biomarkers [3, 4]. Furthermore, given the multivariate nature of brain features, machine learning techniques could capture the whole neural pattern across a high volume of dependent voxels to reveal pathophysiological signatures of these disorders, while the individualized predictions of neuroimaging-based ML models also help to address the increasing needs of precision psychiatry [5, 6]. Despite considerable efforts devoted to this end, the translation of machine learning classification for diagnostic and treatment recommendation into clinical practice remains challenging [7]. This is partly due to the poor generalizability of these neuroimaging-based classifiers in particular, which are often optimized within a specific sample and consequently fail to generalize to unseen patients in new samples [8,9,10]. Although these classifiers can be trained to achieve a desirably high accuracy in a specific cohort, they are not representative of a more general population across medical centers, geographic regions, socioeconomic statuses, and ethnic groups [11, 12]. Moreover, persisting concerns over generalizability imply potential sampling biases despite the substantially increased size of data over recent decades [13].

Noninvasive, in vivo neuroimaging techniques (e.g., magnetic resonance imaging, MRI; electroencephalogram, EEG; positron emission computed tomography, PET) are promising because they provide unique opportunities to assess brain structure, function, and metabolic anomalies for revealing the pathophysiological signatures of these psychiatric disorders as intermediate phenotypes, and they have hence fueled the enthusiasm for these machine learning diagnostic models [9, 14]. In addition, with the rapid development of big-data sharing initiatives (e.g., UK Biobank, Alzheimer’s Disease Neuroimaging Initiative), diagnostic studies utilizing neuroimaging-based methods for classifying psychiatric conditions have proliferated at an unprecedented speed over recent decades [9, 15]. Despite these technical merits and promising research insights, these approaches are nonetheless costly, somewhat non-scalable, and mostly not readily available or accessible in low-income countries and regions, especially high-field MRI and PET for neural system mapping. In this vein, probing into why and how sampling bias and relevant factors impede generalizability could be a potent avenue for promoting the translation of these neuroimaging-based machine learning models into clinical action. However, comprehensive knowledge about the degree of such sampling issues and what relevant factors incur poor generalizability in these models is still scarce.

The importance of replication in generalizing scientific conclusions has been increasingly stressed, and a “replication crisis” has been discussed for several decades within and beyond psychological science: multiple experimental findings fail to be replicated and generalized across populations and contexts [16, 17]. One possible underlying reason may be that the available data were predominantly drawn from WEIRD (western, educated, industrialized, rich, and democratic) societies, which mirrors a typical sampling bias [18, 19]. Specifically, in 2008, 96% of studies on human behavior relied on samples from WEIRD countries, with the remaining 82% of the global population being largely ignored [20, 21]. Recently, we conducted a systematic appraisal of neuroimaging-based machine learning models for psychiatric diagnosis using the PROBAST (Prediction model Risk Of Bias ASsessment Tool) criteria. Results demonstrated that 83.1% of these models are at high risk of bias (ROB), and further indicated a biased distribution of sampled populations [22]. Despite this descriptive evidence, no quantitative analyses have been conducted to clearly illustrate the extent of sampling biases at a global or regional level [22]. Moreover, the long-debated association between regional economic level and these sampling biases remains uncertain and requires reliable statistical evidence for clarification [22, 23]. Examining the status quo of sampling biases is particularly important for psychiatric neuroimaging-based classifiers, as generalizability is critical for translating models into clinical action [23, 24]. Patient groups, compared with non-clinical or healthy entities, are far more heterogeneous due to high inter-individual variability in psychopathology [25, 26]. This variability is affected not only by genetics but also by environment in a broad sense, covering socioeconomic status, family susceptibility, and living environment [27, 28]. Therefore, developing a generally applicable model remains challenging, as the issues raised by sampling biases may further compound poor generalizability in psychiatric classification experiments.

Apart from generalization failures due to sampling bias, other pitfalls can cause overfitting as a result of careless or intentional analysis optimization. Overfitting, accompanied by accuracy inflation in machine learning models, means that the results are valid only within the data used for optimization and can hardly generalize to other data drawn from the same distribution [29, 30]. In support of this notion, a recent large-scale methodological overview indicated that 87% of machine learning models for clinical prediction exhibited a high risk of bias (ROB) for overfitting, particularly in the domain of psychiatric classification [31]. In addition, a variety of methodological factors that may cause overfitting have been repeatedly discussed in prior review papers: sample-size limitation, in-sample validation, overhyping, data leakage, and especially “double-dipping” cross-validation (CV) methods [32, 33]. The cross-validation procedure evaluates the classification performance of the ML model by splitting the whole sample into independent training and testing sets [32, 34]. Nevertheless, improper CV schemes have been found to overestimate model performance through “double-dipping” dependence or data leakage, which is a main source of overfitting [8]. Besides, a recent review on the application of machine learning for gaining neurobiological and nosological insights in psychiatry underscored the need for cautious interpretation of accuracy in machine learning models [35]. That is, the analytic procedures used to obtain reliable model performance are even more critical. However, a comprehensive review that systematically determines these methodological issues in prior studies of psychiatric machine learning classification is currently lacking, and how data/model availability allows for replication analysis to ensure generalization remains unclear. Thus, conducting a meta-research review concerning this topic would facilitate the characterization of the shortcomings and limitations of current models. Moreover, developing a proof-of-concept assessment tool integrating these issues would facilitate the establishment of a favorable psychiatric machine learning ecosystem.

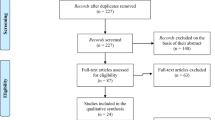

To systematically assess the generalizability issues, we conducted a pre-registered meta-research review of current studies that applied neuroimaging-based machine learning models to diagnose psychiatric populations. A total of 476 studies screened from PubMed (n total = 41,980) over the recent three decades (Jan 1990–July 2021) were included (see Additional file 1: Fig. S1-S2). First, geospatial mapping of the distribution patterns of the samples used in prior literature was depicted to illustrate the sampling biases. Furthermore, capitalizing on the sampling Gini model with the Dagum-Gini algorithm, we quantified the global and area-wide sampling inequality by taking both sampling biases and geospatial patterns into account. The underlying factors of these sampling inequalities were further explored, focusing on economic, social developmental, and educational developmental gaps and psychiatric disorder burdens, with a generalized additive model (GAM). Next, we focused on issues of poor generalizability by extending our examination to methodological issues that caused overfitting in previous psychiatric machine learning studies, which helped to uncover potential pitfalls that may undermine generalizability. Finally, we utilized the results of our meta-research review to propose a 5-star standardized rating system for assessing psychiatric machine learning quality considering five domains: sample representativeness, cross-validation method, validation scheme for generalization assessment, report transparency, and data/model availability. Associations of study quality scores with publication year, psychiatric category, and model performance were then established.

Methods

The proposal and protocol for the current study have been pre-registered at Open Science Framework to endorse transparency.

Search strategy for literature

We searched for eligible literature in accordance with the PRISMA 2020 statement (Preferred Reporting Items for Systematic reviews and Meta-Analyses, see Additional file 1: Fig. S2). We retrieved literature from the PubMed database with the following predefined criteria: (1) published from 1990 to 2021 (Jul); (2) peer-reviewed English-language article in a journal or conference; (3) building machine learning models for diagnosis (classification) of psychiatric disorders with neuroimaging-based biomarkers. By using Boolean codes and DSM classifications, we retrieved a total of 41,980 records from forty-eight second-level psychiatric categories. All records were imported into EndNote X9 for initial inspection and then underwent duplicate removal using custom code in Excel. Eligible papers were screened strictly following the inclusion and exclusion criteria detailed below. Furthermore, to avoid missing eligible records, we hand-inspected the reference lists of the newest articles (2021).

We implemented a three-stage validation to ensure the correctness of all processes. Stage 1: one reviewer performed all the work (e.g., literature searching, data extraction, and data coding) following a standard pipeline. Stage 2: a completely independent reviewer repeated all the work mentioned above for cross-check validation. Stage 3: another independent senior reviewer checked the discrepancies between the results of Stage 1 and Stage 2. If records were incongruent, the third reviewer redid this process independently to determine which one was correct.

Inclusion and exclusion

We included studies according to the following criteria: (1) machine learning models were built to diagnose (classify) psychiatric patients (defined by DSM-5) from healthy controls using neuroimaging-based biomarkers; (2) the ground-truth definition for patients was in accordance with clinical diagnoses performed by qualified staff (e.g., clinical psychiatrists, DSM-5 or ICD-10); (3) fundamental information was given, such as bibliometric information, classifier, model performance, and sample size for both the training set and testing set. More details can be found in Additional file 1: Fig. S1.

We excluded studies that provided no original machine learning models or reported non-peer-reviewed results, including reviews, abstract reports, meta-analyses, perspectives, comments, and preprints. Furthermore, studies were ruled out if they built models with non-machine learning algorithms or reported model performance with non-quantitative metrics. In addition, studies that trained machine learning models on non-neuroimaging-based features (e.g., genetics and blood markers) or in nonhuman participants were excluded. As mentioned above, we also discarded otherwise eligible studies if the patient group had not been diagnosed by qualified institutes or medical staff. Finally, studies aimed at non-diagnostic prediction (e.g., prognostic prediction and regressive prediction) were removed from the formal analysis.

Data extraction and coding

To ensure transparency and reproducibility, we extracted and coded data by referring to guidelines, including PRISMA [36], CHARMS checklist [37] (CHecklist for critical Appraisal and data extraction for systematic Reviews of prediction Modeling Studies), and TRIPOD [38] (Transparent Reporting of a multivariable prediction model for Individual Prognosis Or Diagnosis) statement. As mentioned above, the three-stage validation was adopted here to ensure the correctness of these data. We coded eligible studies from two parts, with one for metainformation (e.g., publication year, affiliation, and countries for first author and journals) and another one for the scientific contexts of machine learning models (e.g., sample population, model performance, toolkit, feature selection methods, data availability, and sample size). Full contexts on data extraction and coding can be found in Additional file 1: Fig. S1.

Data resources

Less economically developed countries (LEDC) and more economically developed countries (MEDC) were defined using the United Nations Development Programme (UNDP) criteria and the International Monetary Fund (IMF, 2020) classification [39, 40]. Following that, a total of 34 countries or regions were classified as MEDC, such as the USA, Germany, the UK, Japan, and Korea. Data for national development metrics were derived from the World Bank (WB) World Development Indicators (2021), including Gross Domestic Product (GDP), Human Development Index (HDI), total government expenditure on public education (GEE), and research and development expenditure (R&D). In addition, we extracted data recording mental health disease burden (MHDB) and prevalence of psychiatric diseases from the Global Burden of Disease Study 2019 (GBD 2019) and the Global Health Data Exchange (GHDx) database. Finally, we obtained metrics for evaluating journal impact from the Journal Citation Reports of Clarivate™ (2020).

Geospatial models

We built a global geospatial distribution model using R packages, including “ggplot2” and “maptools”. The global geospatial map was defined by 251 countries or regions, which was validated with the EasyShu suite. Furthermore, the geospatial maps for the USA, Germany, and the UK were built from a public dataset (CSDN communities). In addition, the geospatial pattern of China was built with the EasyShu software 3.2.2 for interactive visualization. Given the overlapping dataset, the global map visualizing the results of this geospatial model in the present study may be highly similar (but not identical) to the one in our previous work [22].

Sampling inequality coefficient

To quantify sampling bias and the geospatial pattern of the sampled population, we estimated sampling inequality based on the Dagum-Gini algorithm [41]. We estimated the Gini coefficient with the Dagum-Gini algorithm by fitting multiple Lorenz curves, with higher values indicating higher sampling inequality. Specifically, we assigned the relative total sample size to each grid cell (e.g., each state in a country or each country in the world) based on the data extracted from the eligible studies. Furthermore, the sub-modules were set by economic classification (i.e., MEDC and LEDC). Lastly, the Dagum-Gini model was used to decompose contributions from module-between variance, module-between-net variance, and intensity of transvariation. In this way, we could estimate the Gini coefficient while adjusting for the geospatial pattern and relative economic gap of a given economic entity, which improved statistical rigor by controlling for unexpected variance. To validate the robustness of the Gini coefficient, we also calculated the Theil index based on the information entropy algorithm.
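
As a minimal illustration of the two inequality measures named above, the sketch below computes a plain (undecomposed) Gini coefficient and a Theil T index over per-region sample sizes. It is not the Dagum-Gini decomposition used in this study and does not reproduce the reported values; the function names `gini` and `theil_index` are hypothetical, and treating each region as one equally weighted grid cell is an assumption.

```python
import math
from typing import Sequence


def gini(values: Sequence[float]) -> float:
    """Plain Gini coefficient (0 = perfectly equal; approaches 1 when concentrated).

    Assumption: every grid cell (e.g., country or state) carries equal weight.
    This is NOT the Dagum-Gini subgroup decomposition.
    """
    xs = sorted(values)
    n = len(xs)
    total = sum(xs)
    if n == 0 or total <= 0:
        raise ValueError("need at least one positive value")
    # Sorted-rank identity: G = sum_i (2i - n - 1) * x_i / (n * sum(x)), i = 1..n
    weighted = sum((2 * i - n - 1) * x for i, x in enumerate(xs, start=1))
    return weighted / (n * total)


def theil_index(values: Sequence[float]) -> float:
    """Theil T index (entropy-based); 0 = equal, log(n) = fully concentrated."""
    n = len(values)
    mean = sum(values) / n
    if mean <= 0:
        raise ValueError("need a positive mean")
    # Cells with zero samples contribute 0, following the limit x*log(x) -> 0.
    return sum((x / mean) * math.log(x / mean) for x in values if x > 0) / n
```

Both functions take one number per grid cell, so the same call works for countries worldwide or states within a country; cells with no sampled participants should be passed as zeros rather than omitted, since dropping them would understate inequality.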

Case–control skewness

We calculated case–control skewness to estimate the extent to which the sample sizes of the patient and healthy control (HC) groups were unbalanced, with a high value indicating high case–control skewness. Specifically, we took the ratio of the number of patients to the number of HCs when the patient group was larger than the HC group, and the inverse ratio otherwise, as the metric of case–control skewness.
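
The ratio described above can be sketched as follows, under the assumption that the metric is always the larger group divided by the smaller one, so that 1.0 means a perfectly balanced design. The function name `case_control_skewness` is hypothetical.

```python
def case_control_skewness(n_patients: int, n_controls: int) -> float:
    """Ratio of the larger group to the smaller group (1.0 = balanced).

    Assumption: the ratio is always taken larger-over-smaller, which is one
    reading of "patients to HC ... and vice versa".
    """
    if n_patients <= 0 or n_controls <= 0:
        raise ValueError("both groups must contain at least one participant")
    return max(n_patients, n_controls) / min(n_patients, n_controls)
```

Defined this way, the metric is symmetric: a study with 100 patients and 50 controls receives the same value as one with 50 patients and 100 controls.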

Statistics

To examine monotonic increasing trends in time-series data, we used the non-parametric Mann–Kendall trend test implemented in an R package [42]. Furthermore, we built both an ARIMA (autoregressive integrated moving average) model and an LSTM (long short-term memory) model to perform time-series prediction of the growth in the number of relevant studies over the coming decade, which were implemented with the Deep Learning Toolbox embedded in MATLAB 2020b (MathWorks® Inc.). Both models were trained on 90% of the whole dataset and tested on the remaining 10%. Notably, we tested this model with real-world data using the actual number of relevant studies at the end of 2021 (Dec. 30) (see Fig. 1b).
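
For readers unfamiliar with the Mann–Kendall test, the following is a minimal sketch of the standard statistic with tie correction and a normal approximation. The study itself used an R package, so this Python version and the function name `mann_kendall` are illustrative assumptions only.

```python
import math
from collections import Counter
from itertools import combinations
from typing import Sequence, Tuple


def mann_kendall(series: Sequence[float]) -> Tuple[int, float, float]:
    """Return (S, z, two-sided p) for a monotonic trend in `series`."""
    n = len(series)
    if n < 3:
        raise ValueError("need at least three observations")
    # S counts later-minus-earlier sign agreements over all ordered pairs.
    s = sum((later > earlier) - (later < earlier)
            for earlier, later in combinations(series, 2))
    # Variance of S under the null, corrected for groups of tied values.
    tie_term = sum(t * (t - 1) * (2 * t + 5) for t in Counter(series).values())
    var_s = (n * (n - 1) * (2 * n + 5) - tie_term) / 18
    # Continuity-corrected z score; S == 0 maps to z == 0.
    if s > 0:
        z = (s - 1) / math.sqrt(var_s)
    elif s < 0:
        z = (s + 1) / math.sqrt(var_s)
    else:
        z = 0.0
    p_two_sided = math.erfc(abs(z) / math.sqrt(2))
    return s, z, p_two_sided
```

A positive z indicates an increasing trend, such as yearly publication counts rising over time. The normal approximation is commonly considered adequate for series of roughly ten or more points.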

Fig. 1 Trends for research aiming at neuropsychiatric diagnostic prediction (classification) during the recent three decades (1990–2020). A illustrates the growth of the number of studies concerning neuropsychiatric classification from 1995 to 2020. B shows a prediction of the number of relevant studies for future decades based on both the autoregressive integrated moving average (ARIMA) model and the long short-term memory (LSTM) model. The number of relevant studies in 2021 was used as a real-world testing set. We trained these models with data from 1990 to 2020 and tested them using real data from 2021 to show their good generalizability. The models predicted that the number of relevant studies would increase to 114.13, and we found that the actual number of these publications in 2021 was 119. C presents trends for each psychiatric category during 1990–2021 (June). D shows the frequencies of first-author affiliation for all the included studies. E maps the countries of the first affiliation in these included studies using the R packages “maptools” and “ggplot2”. F illustrates which journals most often publish these studies. The top-to-bottom rank of these journals was determined by the number of these studies adjusted by the total number of publications per year. The length of each bar shows the proportion of these studies published in that journal relative to all journals

Because the prerequisites of parametric estimation were not fulfilled, the Spearman rank correlation was used by default for correlation analysis in the current study. Parametric models were also built to validate these correlations. Furthermore, the 95% confidence interval (CI) was estimated using a bootstrapping process with n = 1,000 resamples. Equivalent Bayesian analytic models were constructed as well to provide additional statistical evidence. We used the Jeffreys-Zellner-Siow Bayes factor (BF) with a prior Cauchy distribution (r = 0.34), with BF > 3 for strong evidence. To examine the non-linear associations of these variables of interest, we built generalized additive models (GAM) with natural shape-free spline functions using the R package “mgcv”. To avoid overfitting, shape-free splines (i.e., smooth functions) were used in these models. Finally, metrics of model performance (i.e., classification accuracy) for each study were precision-weighted rather than used as originally reported.
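
The Spearman correlation with a bootstrapped 95% CI described above can be sketched as follows. This is a standard-library illustration under stated assumptions: a percentile bootstrap over paired observations, average ranks for ties, and hypothetical function names (`spearman_rho`, `bootstrap_spearman_ci`). The exact bootstrap variant used in the study is not specified here.

```python
import random
from typing import List, Sequence, Tuple


def _average_ranks(values: Sequence[float]) -> List[float]:
    """1-based ranks; tied values share the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def _pearson(x: Sequence[float], y: Sequence[float]) -> float:
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        return float("nan")  # undefined when one variable is constant
    return sxy / (sxx * syy) ** 0.5


def spearman_rho(x: Sequence[float], y: Sequence[float]) -> float:
    """Spearman rho = Pearson correlation of the rank-transformed data."""
    return _pearson(_average_ranks(x), _average_ranks(y))


def bootstrap_spearman_ci(x: Sequence[float], y: Sequence[float],
                          n_boot: int = 1000, alpha: float = 0.05,
                          seed: int = 0) -> Tuple[float, float]:
    """Percentile bootstrap CI, resampling (x, y) pairs with replacement."""
    rng = random.Random(seed)
    n = len(x)
    stats = []
    for _ in range(n_boot):
        idx = [rng.randrange(n) for _ in range(n)]
        rho = spearman_rho([x[i] for i in idx], [y[i] for i in idx])
        if rho == rho:  # drop degenerate resamples where rho is NaN
            stats.append(rho)
    stats.sort()
    lower = stats[int((alpha / 2) * len(stats))]
    upper = stats[min(len(stats) - 1, int((1 - alpha / 2) * len(stats)))]
    return lower, upper
```

Resampling whole pairs, rather than each variable separately, preserves the dependence between the two variables that the interval is meant to describe.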

Checklist for quantitative assessment on quality

We evaluated study quality in terms of the following five facets that were integrated from these meta-analytic findings: sampling representativeness (item 1: sample size and sites), model performance estimation (item 2: CV scheme), model generalizability (item 3: external validation), reporting transparency (item 4: reports for model performance) and model reproducibility (item 5: data/model availability). By ExpertScape™ rank and peer recommendations, we attempted to reach out to peer experts in multidisciplinary domains to examine the validity of this checklist, including data/computer science, psychiatry, neuroscience, psychology, clinical science, and open science. To this end, we have received concerns or advice for item classification and scoring criteria from three independent experts, and have performed four rounds of revision to form a final 5-star rating system called “Neuroimaging-based Machine Learning Model Assessment Checklist for Psychiatry” (N-ML-MAP-P). Three-stage validation was used to ensure assessment quality as well. Scores for one study would be reevaluated by a third independent scorer once the absolute difference between two scorers was larger than 2 points.

Results

General information

Four hundred and seventy-six studies with 118,137 participants, drawn from the 41,980 papers, were eligible for this meta-research review (see Methods). These studies covered 66.67% (14/21) of the psychiatric disorders defined by the DSM-5 classification [43]. Diagnostic machine learning classifiers were mostly for schizophrenia (SZ, 24.57%, 117/476), autism spectrum disorder (ASD, 20.79%, 99/476), and attention deficit/hyperactivity disorder (ADHD, 17.85%, 85/476). To probe whether such research interests converged with healthcare needs, we examined the association between the total number of machine learning studies concerning each psychiatric disorder and their prevalence/disease burdens (Data source: Global Health Data Exchange, GHDx) [44, 45]. Our findings indicated that there was no significant association between the number of studies related to different psychiatric disorders and their real-world prevalence (rho = −0.24, p = 0.47; BF01 = 1.4, moderate evidence strength for supporting the null hypothesis). Furthermore, we found no significant association between the number of these studies for psychiatric disorders and the corresponding DALYs (i.e., disability-adjusted life-years)/YLDs (i.e., years lived with disability) that reflected disease burden (DALYs, rho = −0.05, p = 0.89; BF01 = 2.5; YLDs, rho = −0.06, p = 0.89; BF01 = 2.6, moderate evidence strength for null association). Based on the World Bank (WB) and International Monetary Fund (IMF 2021) classification, populations sampled in these studies were from 39 upper-middle-income to high-income countries, leaving populations from the remaining 84.46% (212/251) of countries worldwide unenrolled. In addition, 59.45% (283/476) of these studies used domestically collected samples, while 31.10% (148/476) reused open-access datasets (e.g., ABIDE and ADHD-200).

Historical trends

The total number of psychiatric machine learning studies for diagnostic classification of psychiatric disorders increased markedly in the past 30 years (z = 5.81, p = 6.41 × 10⁻⁹, Cohen d = 1.82, Mann–Kendall test) (see Fig. 1a and Additional file 1: Fig. S3). Based on time-series prediction models, we predicted a persistent increase in the number of studies pursuing brain imaging-based diagnostic classification for psychiatric disorders in the coming decade (e.g., k = 229.65 in 2030, 95% CI: 106.85–352.44) (see Fig. 1b and the “Methods” section).

Despite the accelerated increase in the number of psychiatric machine learning studies, the rate of increase differed across psychiatric disorders: the number of existing studies on SZ, ASD, ADHD, major depression disorder (MDD), and bipolar disorder (BP) is significantly larger than that on other high-disease-burden categories (e.g., eating disorder and intellectual disability) (see Fig. 1c). To quantify the increment pattern for different psychiatric categories, we used increment curve models. We found that the number of machine learning models for the neuropsychiatric diagnosis of SZ (b = 2.40, 95% CI: 2.05–2.74, p < 0.01) and ASD (b = 2.64, 95% CI: 2.25–3.02, p < 0.01) grew significantly faster than that for other disorders (see Additional file 1: Fig. S3 and Tab. S1).

Interestingly, a considerable number of first authors of these studies (46.42%, 221/476) seemed to be trained in computer and data science instead of psychiatry or neuroscience (see Fig. 1d). Institutes from China, the USA, Canada, Korea, and the UK contributed most to the total number of these machine learning studies (see Fig. 1e). Moreover, after adjusting for the total publications per year, we found that these studies were mostly published in journals with a special scope on neuroimaging, such as Human Brain Mapping and NeuroImage: Clinical (see Fig. 1f and Additional file 1: Tab. S2-S3).

Sampling bias and sampling inequality

Geospatial pattern of sampling bias

Geospatial maps were generated to visualize the distribution of the sampled populations (i.e., the number of participants). We found that the sample populations covered only the minority of upper-middle-income and high-income countries (UHIC) worldwide (n UHIC countries = 32; 12.74%). Even in UHIC, the across-country imbalance in sample population was striking (total sample size: n Chinese = 14,869, n Americans = 12,024, n Germans = 4,330; see Fig. 2a and Additional file 1: Tab. S4). Moreover, we found a similarly prominent within-country imbalance of sample populations (see Fig. 2b, Additional file 1: Tab. S5-S8 and Additional file 1: Fig. S4-S5). Furthermore, in the continent-based classification, populations of these machine learning models were largely enrolled from Asia (44.67%, adjusted by total population) and North America (26.76%, adjusted by total population). Notably, in the current meta-research review, no machine learning models were found to train classifiers on samples from Africa despite its large population.

Fig. 2 Geospatial model for the sample population of ML models for neuropsychiatric classification in the world (A) and the USA (B). Both maps were built at the first-level administrative grid cell, with each country/region for the globe (251 countries/regions) and each state for the USA (51 states). For better readability, we re-scaled the sample size by log-transformation. The sample size for a portion of countries/regions is shown in these maps. Panel A was depicted similarly to Fig. 2A in our previous article [22] because of the overlapping datasets between them

We examined whether the size of the sample population in these models could be determined by the national economic level. Results showed a strikingly positive association between nation-wide GDP (Gross Domestic Product, Data source: IMF 2021) and total sample size across the globe (r = 0.65, 95% CI: 0.40–0.81, conditions-adjusted, p < 0.001; BF10 = 1.10 × 10³, strong evidence) (see Fig. 3a). In support of this, such an association was also found within China (r = 0.47, 95% CI: 0.02–0.76, p < 0.05, conditions-adjusted; BF10 = 1.93, moderate evidence) and the USA (r = 0.47, 95% CI: 0.10–0.73, p < 0.05, conditions-adjusted; BF10 = 3.72, strong evidence), respectively (see Fig. 3b–c).

Fig. 3 Sampling bias and sampling inequalities in these trained ML models. A provides a scatter plot for the association between GDP and sample size for 32 countries/regions in the globe. B offers a scatter plot showing the association between GDP and sample size for 20 provinces within China. C shows the association between GDP and sample size for 25 states within the USA. D plots Gini sampling coefficients for the top 10% of countries with large sample sizes used to train ML models in existing studies, with a high Gini value indicating high sampling inequality. LEDC and MEDC were categorized by the World Bank (WB) and International Monetary Fund (IMF) classification. E illustrates the sampling bias and Gini coefficients for each continent. The left panel shows the proportion of the total sample size for training ML models in existing studies relative to the total sample population for each continent. The right panel shows the Gini coefficient for each continent

Sampling inequality

To quantitatively evaluate such sampling bias, we introduced a new concept, sampling inequality, which reflects both the sample-size gap and the geospatial bias of the sampled populations reported in existing psychiatric machine learning studies. We used the sampling Gini coefficient (G, ranging from 0 to 1.0), based on the Dagum-Gini algorithm, to quantify the degree of sampling bias (see Methods). We found severe sampling inequality in the samples of prior psychiatric machine learning studies (G = 0.81, p < 0.01, permutation test, see Fig. 3d). Furthermore, based on the IMF classification, we grouped countries into a More Economically Developed Country (MEDC) bloc and a Less Economically Developed Country (LEDC) bloc and found a significant difference in sampling inequality between them: the sampling Gini coefficient in LEDC was threefold higher than that in MEDC (G LEDC = 0.94, G MEDC = 0.33, p < 0.01, permutation test). In addition, we also examined within-country sampling inequality. Results showed a weak sampling inequality in China (G = 0.47) and the USA (G = 0.58), but severe inequality in Germany (G = 0.78), the UK (G = 0.87), Spain (G = 0.91), and Iran (G = 0.92) (see Fig. 3d and Additional file 1: Tab. S9). Furthermore, we found a relatively lower sampling inequality in Europe compared with other continents (G Europe = 0.63; see Fig. 3e and Additional file 1: Tab. S10-S11). Notably, a significantly positive association between these sampling Gini coefficients and averaged classification accuracy was uncovered (r = 0.60, p = 0.04, one-tailed; permutation test at n = 10,000), which possibly implies inflated estimates of model performance because of such sampling inequality.
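
A one-tailed permutation test of a correlation, as referred to above, can be sketched as follows. This is an illustration under assumptions rather than the authors' procedure: the null distribution is built by shuffling one variable, Pearson's r is used as the test statistic, and the names `pearson_r` and `permutation_pvalue` are hypothetical.

```python
import random
from typing import Sequence, Tuple


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation; raises if either input is constant."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for constant input")
    return sxy / (sxx * syy) ** 0.5


def permutation_pvalue(x: Sequence[float], y: Sequence[float],
                       n_perm: int = 10_000, seed: int = 0) -> Tuple[float, float]:
    """Return (observed r, one-tailed p) for the alternative r > 0."""
    rng = random.Random(seed)
    observed = pearson_r(x, y)
    shuffled = list(y)
    hits = 0
    for _ in range(n_perm):
        rng.shuffle(shuffled)  # break the pairing between x and y
        if pearson_r(x, shuffled) >= observed:
            hits += 1
    # Add-one correction keeps the estimated p-value strictly above zero.
    return observed, (hits + 1) / (n_perm + 1)
```

Here `x` would hold one Gini coefficient per country and `y` the matching mean classification accuracy. With only a small number of countries the attainable p-values are coarse, which is one reason such a result is best read as suggestive.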

To examine whether sampling inequality was further widened by the economic gap, that is, whether individuals (patients) living in richer countries (areas) were more likely to be recruited for building machine learning models specific to rich areas, we constructed a generalized additive model (GAM) with a natural shape-free spline function. Interestingly, the GDP of these countries accurately predicted the sampling inequality values (β = − 2.75, S.E. = 0.85, t = 4.75, p < 0.001, R2adj = 0.40; r = − 0.84, 95% CI: − 0.41 to − 0.97, p < 0.01; BF10 = 13.57, strong posterior evidence), with higher national income associated with weaker sampling inequality. The apparent sampling bias and high sampling economic inequality in the reviewed psychiatric machine learning studies may resonate with the generalization failure that has been a widespread concern in the field.
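The GAM itself is not reproduced here. As a simpler companion check, a linear association such as the reported r between GDP and sampling Gini coefficients could be tested by permutation, as sketched below. The function names, the two-sided test, and the data values are all assumptions for illustration; none of them are taken from the study.

```python
import random
from math import sqrt
from typing import Sequence


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def permutation_p_value(x: Sequence[float], y: Sequence[float],
                        n_perm: int = 10_000, seed: int = 0) -> float:
    """Two-sided permutation p-value for the Pearson correlation."""
    rng = random.Random(seed)
    observed = abs(pearson_r(x, y))
    shuffled = list(y)
    hits = 0
    for _ in range(n_perm):
        rng.shuffle(shuffled)  # break any pairing between x and y
        if abs(pearson_r(x, shuffled)) >= observed - 1e-12:
            hits += 1
    # Add-one correction so the estimate is never exactly zero.
    return (hits + 1) / (n_perm + 1)


# Hypothetical values: an economic index and a sampling Gini per country.
economic_index = [1, 2, 3, 4, 5, 6, 7, 8]
sampling_gini = [0.95, 0.90, 0.92, 0.80, 0.70, 0.60, 0.55, 0.40]

r = pearson_r(economic_index, sampling_gini)
p = permutation_p_value(economic_index, sampling_gini)
print(f"r = {r:.2f}, permutation p = {p:.4f}")
```

A permutation test is used in this sketch because, with only a few dozen countries, it avoids relying on distributional assumptions about the correlation coefficient.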

Methodological considerations on generalizability

Sample size, validation, technical shifting, and case–control skewness

We extended the investigations of sampling bias and sampling inequality to an analysis of other methodological facets that may likely lead to overfitting and hence magnify the generalization errors. A significant correlation was found between the sample size in psychiatric machine learning studies and publication year in the last three decades (r (total) = 0.75, 95% CI: 0.22–0.93, p = 0.013; BF10 = 5.83, Strong evidence) (see Fig. 4a and Additional file 1: Tab. S12-S14). Despite improvement over time, we observed a strikingly biased distribution skewing to a small sample size (n < 200) in these machine learning models (73.10%, 348/476) (see Fig. 4b and Additional file 1: Tab. S15).

Methodological considerations for existing ML models towards psychiatric diagnosis. A illustrates increment trends for sample size during the recent three decades by Gaussian kernel density plots. Labeling 2011 sums up all the sample size from 1990 to 2011. B shows the counts for subgroups by dividing these studies according to sample size. C plots the trends of using cross-validation (CV) schemes by accounting counts from all the included studies during the recent three decades. D shows model performance comparisons between independent-sample validation and within-sample validation. The non-parametric W test was used for statistical inferences, with *** for p < .00. Precision-weighted accuracy was estimated by Woo et al. E depicts Gardner-Altman estimation for the classification accuracy comparison between population-within sample and population-across sample. Black dot indicated the point estimate for the mean difference (delta) of the two groups, and the shadow areas showed the distribution estimated by delta. F presents a frequency plot to show case–control skewness

In addition, we found a prominently positive association between the proportion of studies using a k-fold cross-validation (CV) scheme and publication year in recent decades (r = 0.82, 95% CI: 0.40–0.95, p < 0.01; BF10 = 15.80, strong evidence) (see Additional file 1: Tab. S16-S17). As repeatedly recommended by didactic technical papers [8, 10, 34, 46], adopting a k-fold CV to validate model performance could outperform the popular leave-one-out CV (LOOCV) method in terms of model variance and bias. We thus compared model performance between them using a precision-weighted method [47] that adjusts for the effects of sample size and between-study heterogeneity. Results showed that model performance estimated by LOOCV was prominently higher than that estimated by k-fold CV (Acc LOOCV = 80.35%, Acc k-fold = 76.66%, precision-adjusted, w = 20,752, p < 0.001, Cohen d = 0.31; BF10 = 2289, strong evidence) (see Fig. 4c). Details of other methodological considerations can be found in Additional file 1: Tab. S18-22.
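The idea behind precision weighting can be sketched with a simple inverse-variance mean, in which accuracies from larger studies count for more. This is an illustrative simplification and not necessarily the exact procedure of [47]: it assumes a binomial variance p(1 − p)/n for each reported accuracy and does not model between-study heterogeneity. The function name and the example studies are hypothetical.

```python
from typing import Sequence, Tuple


def precision_weighted_accuracy(studies: Sequence[Tuple[float, int]],
                                eps: float = 1e-6) -> float:
    """Inverse-variance weighted mean of reported accuracies.

    Each study is (accuracy in [0, 1], sample size). The variance of an
    accuracy is approximated by the binomial formula p * (1 - p) / n.
    """
    if not studies:
        raise ValueError("need at least one study")
    numerator = 0.0
    denominator = 0.0
    for accuracy, n in studies:
        # Clip so that an accuracy of exactly 0 or 1 keeps a positive variance.
        p = min(max(accuracy, eps), 1.0 - eps)
        weight = n / (p * (1.0 - p))  # precision = 1 / variance
        numerator += weight * accuracy
        denominator += weight
    return numerator / denominator


# Hypothetical studies: a small one reporting 90% and a large one reporting 70%.
studies = [(0.90, 50), (0.70, 500)]
pooled = precision_weighted_accuracy(studies)
print(f"unweighted mean = 0.80, precision-weighted mean = {pooled:.3f}")
```

In this toy case the pooled estimate sits much closer to the large study's 70% than the unweighted mean of 80% does, which is the behavior that makes small, optimistic studies less influential.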

As for independent-sample validation, we found a significantly positive association between the proportion of studies validating model performance in an independent sample (site) and publication year in recent decades (r = 0.88, 95% CI: 0.63–0.97, p < 0.01; BF10 = 234.93, strong evidence). Nevertheless, the majority of these machine learning studies (84.24%, 401/476) still lacked validation of model generalizability in independent sample(s). Furthermore, we found that the classification performance of models tested in independent samples was more “conservative” than that of models tested in internal samples (Acc independent-sample validation = 72.71%, Acc others = 77.75%, precision-adjusted, w = 3,041, p < 0.001, Cohen d = 0.32; BF10 = 29.43, strong evidence) (see Fig. 4d and Additional file 1: Tab. S23). To directly test the impact of sampling bias on model generalizability, we compared model performance between cross-country samples (i.e., training a model in a sample from one country and testing it in a sample from other countries) and within-country samples (i.e., training and testing a model in samples from the same country). Results showed that model performance was more “conservative” in the cross-country samples than in the within-country samples (Acc cross-country sample = 72.83%, Acc within-country sample = 82.69%, precision-adjusted, w = 2,008, p < 0.001, Cohen d = 0.54; BF10 = 150.90, strong evidence; see Fig. 4e).
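For readers who want to set up a cross-country test of their own, one possible scheme is a leave-one-country-out split, in which every country in turn serves as the untouched test set. The sketch below only generates the index splits. The function name and country labels are hypothetical, and the reviewed studies may have defined their cross-country validation differently.

```python
from collections import defaultdict
from typing import Dict, Iterator, List, Sequence, Tuple


def leave_one_country_out(
    countries: Sequence[str],
) -> Iterator[Tuple[str, List[int], List[int]]]:
    """Yield (held_out_country, train_indices, test_indices) per country."""
    by_country: Dict[str, List[int]] = defaultdict(list)
    for index, country in enumerate(countries):
        by_country[country].append(index)
    for held_out in sorted(by_country):
        test_idx = by_country[held_out]
        # Train only on participants recruited in the other countries.
        train_idx = [i for i, c in enumerate(countries) if c != held_out]
        yield held_out, train_idx, test_idx


# Hypothetical country label for each of six participants.
labels = ["CN", "CN", "US", "DE", "US", "DE"]
for held_out, train_idx, test_idx in leave_one_country_out(labels):
    print(held_out, "train:", train_idx, "test:", test_idx)
```

Because no participant from the held-out country ever appears in the training indices, accuracy measured on the test indices reflects transfer to a population the model has not seen.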

Furthermore, we specifically examined shifts in the mainstream neuroimaging modalities and features of these models in recent decades. Results showed that (functional) MRI remained the mainstream neuroimaging technique for building these models over the last three decades (i.e., on average 73.70% of these models used (functional) MRI, 21.57% EEG/ERP, 2.69% fNIRS, 2.02% MEG, and 0.21% PET). Nevertheless, an increasing trend of using multiple modalities to train these neuroimaging-based ML models was observed, from 3.22% to 19.19% of these models over time. In addition, with the development of ML techniques, the proportion of deep learning models or complicated parameterized models relative to “shallow learning models” increased during recent decades, particularly after 2019 (i.e., 0% in 2012, 10.40% in 2016, and 32.32% in 2020). As for the feature selection strategy, we found that algorithmic techniques were increasingly applied in place of pre-engineered selections in building these models (i.e., 0% before 2012, and on average 30.90% after 2012). However, we found no shift of paradigm from a single-snapshot case–control cohort to repeated scanning of the same participants in these models. Although shifts in the main neuroimaging modalities, model complexity, and feature selection strategy were observed over time, we found no prominent trend in model performance (i.e., precision-weighted accuracy) over time (Accuracy: 84.43%, 95% CI: 81.84–87.88% at 2011; 84.38%, 95% CI: 80.79–87.86% at 2015; 84.78%, 95% CI: 82.82–87.49% at 2020). Full results for these findings can be found in Additional file 1: Fig. S6-S8.

Finally, by calculating the standardized case–control ratio (see the “Methods” section), we observed a case–control skewness (i.e., the number of patients is larger than healthy control, and vice versa) in a quarter of all the included studies (25.37%, 121/476) (see Fig. 4f). The case–control skewness was significantly (but weakly) associated with the reported classification accuracy, which may imply inflated accuracy due to the imbalanced case–control distribution in the data (r = 0.15, 95% CI: 0.04–0.27, p < 0.05; BF10 = 2.04, moderate evidence).
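Why an imbalanced case–control distribution can inflate raw accuracy is easy to show with a toy example: a classifier that labels everyone as a patient looks accurate on a skewed sample, while balanced accuracy exposes it. The sketch below uses hypothetical data and a hypothetical function name, and it is not the standardized case–control ratio defined in the Methods.

```python
from typing import Dict, Sequence


def classification_report(y_true: Sequence[int],
                          y_pred: Sequence[int]) -> Dict[str, float]:
    """Accuracy, sensitivity, specificity, and balanced accuracy.

    Labels are 1 for patients and 0 for healthy controls.
    """
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    if tp + fn == 0 or tn + fp == 0:
        raise ValueError("both classes must be present in y_true")
    sensitivity = tp / (tp + fn)
    specificity = tn / (tn + fp)
    return {
        "accuracy": (tp + tn) / len(pairs),
        "sensitivity": sensitivity,
        "specificity": specificity,
        "balanced_accuracy": (sensitivity + specificity) / 2.0,
    }


# Hypothetical skewed sample: 75 patients and 25 healthy controls.
y_true = [1] * 75 + [0] * 25
y_pred = [1] * 100  # trivial classifier: everyone is labeled a patient

report = classification_report(y_true, y_pred)
print(report["accuracy"])           # 0.75
print(report["balanced_accuracy"])  # 0.5
```

The trivial classifier reaches 75% accuracy purely because of the 3:1 skew, whereas its balanced accuracy stays at chance level.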

Technical transparency and reproducibility

We further determined whether existing studies provided sufficiently transparent reports to evaluate potential overfitting and reproducibility. We found that fewer than a quarter of them (23.94%, 114/476) fulfilled the minimum requirements for reporting model results (i.e., balanced accuracy, sensitivity, specificity, and area under the curve) according to the criteria proposed by Poldrack [8, 48] (see Fig. 5a).

Reporting transparency and technical (data and model) availability. A presents patterns of reporting model performance across sensitivity, specificity, balanced accuracy, and area under curve (AUC) by a Venn plot. B sums up the proportion of having actual model availability, data availability, and datasets

As for model reproducibility, only 12.25% (58/476) of studies shared trained classifiers (full-length code). Furthermore, only 19.12% (91/476) of studies claimed to provide the original data. Notably, we manually checked, one by one, the validity of the resources stated in these studies, but found that only a small portion of the trained classifiers (32.27%, 19/58) or data (15.38%, 14/91) were actually available/accessible (see Fig. 5b). Thus, incomplete reporting of model results and poor technical reproducibility may be among the factors that hamper the assessment of generalizability, and hence the “generalization crisis” remains.
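Proportions such as these are usually accompanied by a confidence interval. The sketch below computes a normal-approximation (Wald) 95% interval for the share of studies that did not claim data availability (476 − 91 = 385 of 476). Using the Wald interval is an assumption made for illustration; this section does not state which interval method the study used.

```python
from math import sqrt
from typing import Tuple


def wald_interval(successes: int, total: int,
                  z: float = 1.96) -> Tuple[float, float, float]:
    """Return (proportion, lower, upper) using the normal approximation."""
    if total <= 0 or not 0 <= successes <= total:
        raise ValueError("need 0 <= successes <= total and total > 0")
    p = successes / total
    half_width = z * sqrt(p * (1.0 - p) / total)
    # Clamp to the valid range of a proportion.
    return p, max(0.0, p - half_width), min(1.0, p + half_width)


# 91 of 476 studies claimed data availability, so 385 did not.
p, lower, upper = wald_interval(476 - 91, 476)
print(f"{p:.2%} (95% CI: {lower:.1%} to {upper:.1%})")
```

Under this assumption the interval runs from roughly 77% to 84%, close to the interval given in the abstract for poor availability.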

Five-star quality rating system

To promote the establishment of unbiased, fair, and generalizable diagnostic models, we proposed a 5-star quality rating system called the “Neuroimaging-based Machine Learning Model Assessments Checklist for Psychiatry (N-ML-MAP-P)” by integrating the aforementioned meta-research findings with up-to-date guidelines provided by multidisciplinary experts (see Methods). This rating system incorporates five elements: sample representativeness, CV methods, independent-sample validation, reporting of model performance, and data/model availability (see Fig. 6a).
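As a rough illustration of how a five-element checklist could be turned into a star rating, the sketch below awards one star per satisfied element. This one-star-per-element rule, the class name, and the field names are assumptions made for illustration; the actual N-ML-MAP-P scoring criteria are those given in Fig. 6a and the Methods and may differ.

```python
from dataclasses import dataclass, fields


@dataclass
class StudyChecklist:
    """Hypothetical pass/fail flags for the five elements named in the text."""
    representative_sample: bool
    proper_cross_validation: bool
    independent_sample_validation: bool
    complete_performance_report: bool
    data_or_model_available: bool


def star_rating(study: StudyChecklist) -> int:
    """Count satisfied elements (assumed rule: one star per element)."""
    return sum(bool(getattr(study, f.name)) for f in fields(study))


# Hypothetical study: sound CV and full reporting, but a single-site
# sample, no external validation, and no shared data or model.
example = StudyChecklist(
    representative_sample=False,
    proper_cross_validation=True,
    independent_sample_validation=False,
    complete_performance_report=True,
    data_or_model_available=False,
)
print(star_rating(example))  # 2
```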

Neuroimaging-based machine learning model assessment checklist for psychiatry (N-ML-MAP-P). A provides details for five items and scoring criteria in this checklist for evaluating the study quality of all the included studies. B presents a scatter plot for showing the trends of improving study quality during the recent decade (2011–2021). C shows the overall study quality for each psychiatric category in existing studies. This plot is ranked by total quality score, and bars indicate standard error (S.E.). C provides a frequency plot for overall quality scores. D shows the trajectories of study quality for different affiliations, including data/computer science, neuroscience, psychiatry, and others. E draws a scatter plot showing the association between journal quality (i.e., journal impact factor, JIF) and overall quality scores. D provides a scatter plot to show the association of overall quality scores with model accuracy as reported in these studies

Based on this N-ML-MAP-P rating system, we found that overall quality scores for these models have increased consistently over the last decade (r = 0.77, 95% CI: 0.25–0.99, p < 0.01; BF10 = 7.04, strong evidence), demonstrating that study quality for machine learning models on psychiatric diagnosis has been increasingly improved (see Fig. 6b). In addition, we also examined study quality for each item and revealed that ratings for sample size, CV methods, independent validation, and reporting transparency have been gradually improved (see Additional file 1: Tab. S24). However, we found no prominent increase in quality scores on technical (data and model) availability (see Additional file 1: Tab. S25). Furthermore, we found a considerably strong positive correlation between the number of disorder-specific studies and their quality scores (r = 0.69, 95% CI: 0.13–0.88, p < 0.05; BF10 = 4.10, strong evidence), with relatively high quality for machine learning studies concerning SZ, ASD, and ADHD.

Despite the increase, the overall quality scores remained relatively low in the vast majority of these models (see Fig. 6c–d). Intriguingly, we found a weak but statistically significant association between journal impact factors/journal citation indicator (JIF/JCI) and the scores of model quality rated by N-ML-MAP-P assessment (r (JIF) = 0.18, 95% CI: 0.08–0.30, p < 0.001; BF10 = 41.90, strong evidence; r (JCI) = 0.15, 95% CI: 0.06–0.25, p < 0.01; BF10 = 8.60, strong evidence) (see Fig. 6e). Furthermore, we also observed a weakly negative association between the JIF/JCI and model performance (r = − 0.19, 95% CI: − 0.10 to − 0.28, p < 0.001; BF10 = 4697.67, strong evidence) (see Fig. 6f).

In summary, our purpose-built N-ML-MAP-P system for quantitatively assessing the quality of these models revealed prominent improvements for them over time, possibly indicating that efforts made by scientific communities [8, 10, 49] to address overfitting issues in diagnostic machine learning models for psychiatric conditions may be effective. However, existing machine learning studies may still face several challenges, e.g., low overall quality and poor technical reproducibility, which still characterize a majority of these studies. A full list of these models can be found in Additional file 2 [50–510].

Discussion

We conducted a pre-registered meta-research review and quantitative appraisal to clarify the generalizability and quality of existing machine learning models for neuroimaging-based psychiatric diagnosis (k = 476), with insights into sampling issues, methodological flaws, and technical availability/transparency. In doing so, we quantified a severe sampling economic inequality in existing machine learning models. By further examining methodological issues, we found that sample-size limitations, improper CV methods, lack of independent-sample validation, and case–control skewness still contributed to an inflation of model performance. Furthermore, we found poor technical availability/transparency, which may in turn critically hamper efforts to examine the generalizability of these models. Based on these findings, we developed a checklist to quantitatively assess the quality of existing machine learning models. We found that, despite continuing improvement, the overall quality of the vast majority of these machine learning models was still low (88.68% of models in the existing literature were rated as low quality). Taken together, the results indicate that ameliorating sampling inequality and improving model quality may facilitate building unbiased and generalizable classifiers for future clinical practice.

One critical finding that warrants further discussion is the severe global sampling inequality in existing machine learning models. Despite rapid proliferation, we found that the samples were predominantly recruited from upper-middle-income and high-income countries (444/476 models, 93.28%). We also observed regional sampling inequalities, with the Gini coefficient in LEDC being 3-fold higher than that in MEDC. To make matters worse, both sampling inequalities were found to be enlarged by regional economic gaps. While supporting the descriptive observations of previous studies [22, 35, 511], the present study provides unique statistical evidence that clearly reveals the severity of sampling bias in these extant models globally and across countries (regions), which advances our knowledge by making sampling bias quantitatively comparable rather than a merely conceptual concern. Beyond that, the predictive role of national (regional) economic level in these sampling biases has been quantitatively verified, possibly indicating a sampling economic inequality in neuroimaging-based ML-aided diagnosis. For instance, in China, machine learning models were predominantly trained on samples from mid-eastern Chinese populations with high incomes, whereas there was no evidence to validate whether these trained models could be generalized to western Chinese populations with lower incomes. Notably, the same holds for predictive models: recent works have revealed generalization failure for cross-ethnicity/race samples in neuroimaging-based predictive models [512, 513]. Compared to previous studies using qualitative inferences or conclusions [514, 515], we provided preliminary evidence to quantify the extent to which sampling inequality impacted model generalizability; this result may indicate how much sampling inequality should be limited to build generalizable neuroimaging-based diagnostic classifiers. 
As a recent didactic example, Marek and colleagues (2022) provided quantitative empirical evidence that thousands of subjects are needed to attain reliable brain-behavior associations, although concerns about high overfitting in neuroimaging-based models with small sample sizes have been debated for a long time [10, 34, 46, 516]. Thus, by quantitatively revealing the association between sampling issues and inflated classification accuracy, the present study may provide valuable insights into how far sampling equality should be increased to achieve good model generalizability in future empirical studies. To tackle this issue, diversifying sample representation (i.e., racial balance or socioeconomic balance) in neuroimaging-based predictive models has been increasingly advocated [517, 518]. That is, the generalizability of existing “population-specific models” trained with few or even no samples from LEDC and Africa across intersectional populations is questionable in practice. More importantly, beyond the commonly recognized disadvantages of global economic gaps for scientific development, “leaving the poor ones out” in training machine learning models may not only render psychiatric diagnostic models poorly generalizable but also exacerbate global inequalities in clinical healthcare. Relating to this consideration, future studies could explore whether and how economic gaps contribute to biases in clinical diagnostic measures, such as neuroimaging-based precision diagnosis in high-income countries, as opposed to more subjective symptom-dependent diagnosis in low-income countries. Such investigation could further our understanding of how economic disparities affect inequalities in the development and implementation of diagnostic bases. 
Nonetheless, another insightful viewpoint worth noting is that an overly broad emphasis on generalizability may impede clinical applications of these machine learning models in specific medical systems (e.g., healthcare) [519, 520], with high generalizability coming at the expense of optimal model performance within specific cohorts. In other words, despite poor geospatial or socioeconomic generalizability, machine learning models showing high performance within specific contexts (e.g., a machine learning model trained on data from hospital A could accurately predict patients within hospital A rather than those in other hospitals) may still be reliable in a given clinical practice.

Another factor contributing to poor generalizability was rooted in methodological issues. We found that, with consistent efforts made by scientific communities [8, 521], the proportion of studies using k-fold CV to estimate model performance gradually increased during recent decades, which may partly mirror effective control of the overestimation of diagnostic accuracy caused by flawed CV schemes [23, 34]. However, LOOCV was still widely used (40.33%) in recent decades, which may overfit models compared to k-fold CV (precision-weighted classification Acc LOOCV, 80.35%; Acc k-fold CV, 76.66%, p < 0.001). Thus, although the repeated technical recommendations and calls may be effective in changing our practices to rectify model overfitting, this issue has not been fully addressed to date [522, 523]. Compared to the CV method, testing model performance in external (independent) samples could provide more accurate estimates of interpretability and generalizability [524, 525]. Nevertheless, only 15.76% of models were validated in independent sample(s). More importantly, we observed that model performance may be highly overestimated in within-country independent samples compared to cross-country ones (precision-weighted classification Acc cross-country, 72.83%; Acc within-country, 82.69%, p < 0.001). Thus, not only “independent-sample” validation but also well-established “intersectional-population-cross” validation is needed to strengthen generalizability in future studies [526]. Moreover, few machine learning models (< 5%) provided adequate technical availability, which diluted our confidence in the generalizability and reproducibility of these models, especially in the “big data” era [527]. 
On balance, we found that methodological flaws of these machine learning models were increasingly ameliorated to prompt model generalizability in recent decades, but sample limitation, improper CV methods, lack of “cross-population” independent-sample validation, and poor technical availability still exposed these models to high risks of overfitting.

In the current study, we proposed a quantitative framework for evaluating the quality of these models, covering sample, CV, independent-sample validation, transparency, and technical availability. We found that the overall quality of these models improved over time. As mentioned above, some didactic methodological papers [8, 9, 23] have contributed considerably to prompting the scientific communities to rectify these methodological flaws in machine learning models. Furthermore, reporting benchmarks and guidelines have also been developed in recent years to increase the transparency of the information needed to accurately evaluate model performance [38, 528]. However, despite encouraging improvements, low-quality machine learning models still seem to dominate this field, as we observed in the current study that single-site samples and poor data/model availability remained largely unchanged [15]. Together, the findings may imply that existing machine learning models, in their current form, are not as solid as claimed in terms of generalizability and reproducibility in clinical practice. It is noteworthy that the high-quality models rated in the current study have not yet been tested for generalizability. Thus, testing the generalizability or reproducibility of these models from the originally trained samples to different populations (e.g., countries, ethnicities, income levels) could be a more reliable and valid way to validate generalizability in future studies.

To tackle these generalizability issues, we recommend several practical tips. Beyond sample size, recruiting a diverse, economically equal, case–control balanced, and representative sample is one of the best avenues to obviate sampling biases. Technically speaking, a cross-ethnicity/race or cross-country independent sample should be prepared for the generalizability test. At the very least, the nested k-fold CV method is clearly warranted. Moreover, we also recommend transparent and complete reports of model performance to facilitate translating these models into clinical insights. In addition, improving the data/model availability for these models is one way to provide venues to validate clinical applicability. To conceptualize and streamline these recommendations, we have preliminarily built the “Reporting guideline for neuroimaging-based machine learning studies for psychiatry” (RNIMP 2020) checklist and diagram (see Additional file 3: Tab. S1-2); this suite was developed by encapsulating the above tips and currently promising benchmarks [8, 23].
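The nested k-fold CV recommended above can be sketched as follows: an inner loop selects a hyperparameter using only the outer-training data, and an outer loop scores the refitted model on a fold that played no part in that selection. The toy one-dimensional k-nearest-neighbor classifier, the function names, and the data are all assumptions chosen to keep the example self-contained; they do not come from any reviewed study.

```python
import random
from typing import List, Sequence, Tuple

Row = Tuple[float, int]  # (feature value, label in {0, 1})


def knn_predict(train: Sequence[Row], x: float, k: int) -> int:
    """Majority vote among the k training rows nearest to x."""
    nearest = sorted(train, key=lambda row: abs(row[0] - x))[:k]
    votes = sum(label for _, label in nearest)
    return 1 if 2 * votes > len(nearest) else 0


def score(data: Sequence[Row], fit_idx: Sequence[int],
          eval_idx: Sequence[int], k: int) -> float:
    """Accuracy on eval_idx of a k-NN model fitted on fit_idx."""
    train = [data[i] for i in fit_idx]
    hits = sum(knn_predict(train, data[i][0], k) == data[i][1] for i in eval_idx)
    return hits / len(eval_idx)


def split_folds(indices: Sequence[int], k: int) -> List[List[int]]:
    """Deal the given indices into k folds."""
    return [list(indices[i::k]) for i in range(k)]


def nested_cv(data: Sequence[Row], outer_k: int = 5, inner_k: int = 3,
              candidates: Sequence[int] = (1, 3, 5), seed: int = 0) -> List[float]:
    """Return one outer-fold accuracy per outer split."""
    order = list(range(len(data)))
    random.Random(seed).shuffle(order)
    outer_folds = split_folds(order, outer_k)
    outer_scores = []
    for held_out, test_idx in enumerate(outer_folds):
        train_idx = [i for f, fold in enumerate(outer_folds)
                     if f != held_out for i in fold]
        inner_folds = split_folds(train_idx, inner_k)

        def inner_score(k: int) -> float:
            # Mean validation accuracy using outer-training rows only.
            total = 0.0
            for v, val_idx in enumerate(inner_folds):
                fit_idx = [i for f, fold in enumerate(inner_folds)
                           if f != v for i in fold]
                total += score(data, fit_idx, val_idx, k)
            return total / inner_k

        best_k = max(candidates, key=inner_score)
        # The outer test fold is touched only here, after k is chosen.
        outer_scores.append(score(data, train_idx, test_idx, best_k))
    return outer_scores


# Hypothetical, well-separated toy data: 15 controls and 15 patients.
toy = [(float(x), 0) for x in range(15)] + [(100.0 + x, 1) for x in range(15)]
print(nested_cv(toy))
```

The design point is that hyperparameter selection never sees the outer test fold, so the outer accuracies are not biased by tuning. In practice, an established library implementation would normally be preferred over a hand-rolled loop like this one.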

This study has several limitations. First, we narrowed the research scope to diagnosis, and not all the categories were sampled evenly. Predictive machine learning models for psychiatric conditions include (at least) three forms of prediction: diagnosis (i.e., predicting the current psychiatric condition), prognosis (i.e., predicting outcome in future onsets), and prediction (i.e., predicting response or outcome for a given treatment) [529]. Second, this study may not cover all eligible data, especially literature published in African areas or written in non-English languages, as data were screened from English-written peer-reviewed papers. Therefore, we stress that all the findings are grounded in these studies and do not completely represent the real-world situation. Third, the current study did not probe model generalizability from a biological perspective, but focused on sampling bias and methodological issues only; this remains to be explored in future work. Fourth, we empirically inferred the academic training experiences of the first authors from their affiliations. However, such assumptions may not be solid, and extending the conclusions from this section elsewhere warrants caution. Fifth, the present study has not thoroughly analyzed the factors that contribute to changes in the methodological and technical underpinnings of machine learning models for psychiatric diagnosis. For instance, the increased sample size in these models over the last decades may be attributed to improvements in imaging techniques/infrastructures, developments in machine learning knowledge, and decreases in data costs, which were not explored in the current study. In other words, future studies could benefit greatly from delving into the specific roles of these factors in the advances of these models, particularly in sample size. 
Lastly, given that the neuroimaging-based signatures are not practically applicable for diagnosing all psychiatric conditions, the statistics for unbalanced developments and qualities across these DSM categories should be explained more prudently.

Conclusions

On balance, we provided meta-research evidence to quantitatively verify the sampling economic inequality in existing machine learning models for psychiatric diagnosis. Such biases may lead to poor generalizability that impedes their clinical translation. Furthermore, we found that the methodological flaws have been increasingly ameliorated because of the repeated efforts of these technical papers and recommendations. Nonetheless, the present study found that limitations including small sample size, flawed CV methods (i.e., LOOCV), no independent-sample validation, case–control skewness, and poor technical availability still remained, and demonstrated quantitative associations between such limitations and inflated model performance, which may indicate model overfitting. In addition, poor reporting transparency and technical availability were also observed as hurdles to translating these models into real-world clinical actions. Finally, we developed a 5-star rating system to provide a purpose-built and quantitative quality assessment of existing machine learning models and found that the overall quality of the vast majority of them may still be low. In conclusion, while these models show a promising direction and have made well-established contributions to this field, enhancing sampling equality, methodological rigor, and technical availability/reproducibility may help to build unbiased, fair, and generalizable classifiers for neuroimaging-based machine learning-aided diagnosis of psychiatric conditions.

Availability of data and materials

The datasets generated and/or analyzed during the current study are available in the Open Science Framework (OSF) repository, https://osf.io/4zhsp/.

Abbreviations

- Acc: Accuracy
- ADHD: Attention deficit/hyperactivity disorder
- ARIMA: Autoregressive integrated moving average
- ASD: Autism spectrum disorder
- BP: Bipolar disorder
- CV: Cross-validation
- DALYs: Disability-adjusted life-years
- GAM: Generalized additive model
- GBD: Global Burden of Disease
- GDP: Gross Domestic Product
- GEE: Total Government Expenditure on public Education
- HC: Healthy control
- HDI: Human Development Index
- LEDC (MEDC): Less (more) economic developed countries
- LOOCV: Leave-one-out cross-validation
- LSTM: Long short-term memory
- MDD: Major depressive disorder
- MHDB: Mental health disease burden
- ML: Machine learning
- OSF: Open Science Framework
- R & D: Research and Development expenditure
- ROB: Risk of bias
- SZ: Schizophrenia
- UHIC: High-income countries
- WB: World Bank
- WEIRD: Western, Educated, Industrialized, Rich, and Democratic
- YLDs: Years lived with disability

References

Jordan MI, Mitchell TM. Machine learning: trends, perspectives, and prospects. Science. 2015;349(6245):255–60.

Eyre HA, Singh AB, Reynolds C 3rd. Tech giants enter mental health. World Psychiatry. 2016;15(1):21–2.

Walter M, Alizadeh S, Jamalabadi H, Lueken U, Dannlowski U, Walter H, Olbrich S, Colic L, Kambeitz J, Koutsouleris N, et al. Translational machine learning for psychiatric neuroimaging. Prog Neuropsychopharmacol Biol Psychiatry. 2019;91:113–21.

Rutherford S. The promise of machine learning for psychiatry. Biol Psychiatry. 2020;88(11):e53–5.

Sui J, Jiang R, Bustillo J, Calhoun V. Neuroimaging-based individualized prediction of cognition and behavior for mental disorders and health: methods and promises. Biol Psychiatry. 2020;88(11):818–28.

Bzdok D, Varoquaux G, Steyerberg EW. Prediction, not association, paves the road to precision medicine. JAMA Psychiat. 2021;78(2):127–8.

Vayena E, Blasimme A. A systemic approach to the oversight of machine learning clinical translation. Am J Bioeth. 2022;22(5):23–5.

Poldrack RA, Huckins G, Varoquaux G. Establishment of best practices for evidence for prediction: a review. JAMA Psychiat. 2020;77(5):534–40.

Nielsen AN, Barch DM, Petersen SE, Schlaggar BL, Greene DJ. Machine learning with neuroimaging: evaluating its applications in psychiatry. Biol Psychiatry Cogn Neurosci Neuroimaging. 2020;5(8):791–8.

Varoquaux G, Raamana PR, Engemann DA, Hoyos-Idrobo A, Schwartz Y, Thirion B. Assessing and tuning brain decoders: cross-validation, caveats, and guidelines. Neuroimage. 2017;145(Pt B):166–79.

Dwyer DB, Falkai P, Koutsouleris N. Machine learning approaches for clinical psychology and psychiatry. Annu Rev Clin Psychol. 2018;14:91–118.

Mihalik A, Ferreira FS, Moutoussis M, Ziegler G, Adams RA, Rosa MJ, Prabhu G, de Oliveira L, Pereira M, Bullmore ET, et al. Multiple holdouts with stability: improving the generalizability of machine learning analyses of brain-behavior relationships. Biol Psychiatry. 2020;87(4):368–76.

Meehan AJ, Lewis SJ, Fazel S, Fusar-Poli P, Steyerberg EW, Stahl D, Danese A. Clinical prediction models in psychiatry: a systematic review of two decades of progress and challenges. Mol Psychiatry. 2022;27(6):2700–8.

Yuan J, Ran X, Liu K, Yao C, Yao Y, Wu H, Liu Q. Machine learning applications on neuroimaging for diagnosis and prognosis of epilepsy: a review. J Neurosci Methods. 2022;368:109441.

Davatzikos C. Machine learning in neuroimaging: progress and challenges. Neuroimage. 2019;197:652–6.

Shrout PE, Rodgers JL. Psychology, science, and knowledge construction: broadening perspectives from the replication crisis. Annu Rev Psychol. 2018;69:487–510.

Maxwell SE, Lau MY, Howard GS. Is psychology suffering from a replication crisis? What does “failure to replicate” really mean? Am Psychol. 2015;70(6):487–98.

Henrich J, Heine SJ, Norenzayan A. Most people are not WEIRD. Nature. 2010;466(7302):29.

Muthukrishna M, Bell AV, Henrich J, Curtin CM, Gedranovich A, McInerney J, Thue B. Beyond Western, Educated, Industrial, Rich, and Democratic (WEIRD) psychology: measuring and mapping scales of cultural and psychological distance. Psychol Sci. 2020;31(6):678–701.

Rad MS, Martingano AJ, Ginges J. Toward a psychology of Homo sapiens: making psychological science more representative of the human population. Proc Natl Acad Sci U S A. 2018;115(45):11401–5.

Arnett JJ. The neglected 95%: why American psychology needs to become less American. Am Psychol. 2008;63(7):602–14.

Chen Z, Liu X, Yang Q, Wang YJ, Miao K, Gong Z, Yu Y, Leonov A, Liu C, Feng Z, et al. Evaluation of risk of bias in neuroimaging-based artificial intelligence models for psychiatric diagnosis: a systematic review. JAMA Netw Open. 2023;6(3):e231671.

Cearns M, Hahn T, Baune BT. Recommendations and future directions for supervised machine learning in psychiatry. Transl Psychiatry. 2019;9(1):271.

Tiwari P, Verma R. The pursuit of generalizability to enable clinical translation of radiomics. Radiol Artif Intell. 2021;3(1):e200227.

Wolfers T, Doan NT, Kaufmann T, Alnæs D, Moberget T, Agartz I, Buitelaar JK, Ueland T, Melle I, Franke B, et al. Mapping the heterogeneous phenotype of schizophrenia and bipolar disorder using normative models. JAMA Psychiat. 2018;75(11):1146–55.

Schultebraucks K, Choi KW, Galatzer-Levy IR, Bonanno GA. Discriminating heterogeneous trajectories of resilience and depression after major life stressors using polygenic scores. JAMA Psychiat. 2021;78(7):744–52.

Lee HB, Lyketsos CG. Depression in Alzheimer’s disease: heterogeneity and related issues. Biol Psychiatry. 2003;54(3):353–62.

Arguello PA, Gogos JA. Genetic and cognitive windows into circuit mechanisms of psychiatric disease. Trends Neurosci. 2012;35(1):3–13.

Ying X. An overview of overfitting and its solutions. J Phys: Conf Ser. 2019;1168(2):022022.

Peng Y, Nagata MH. An empirical overview of nonlinearity and overfitting in machine learning using COVID-19 data. Chaos Solitons Fractals. 2020;139:110055.

Andaur Navarro CL, Damen JAA, Takada T, Nijman SWJ, Dhiman P, Ma J, Collins GS, Bajpai R, Riley RD, Moons KGM, et al. Risk of bias in studies on prediction models developed using supervised machine learning techniques: systematic review. BMJ. 2021;375:n2281.

Hosseini M, Powell M, Collins J, Callahan-Flintoft C, Jones W, Bowman H, Wyble B. I tried a bunch of things: the dangers of unexpected overfitting in classification of brain data. Neurosci Biobehav Rev. 2020;119:456–67.

Varoquaux G, Cheplygina V. Machine learning for medical imaging: methodological failures and recommendations for the future. NPJ Digit Med. 2022;5(1):48.

Varoquaux G. Cross-validation failure: small sample sizes lead to large error bars. Neuroimage. 2018;180(Pt A):68–77.

Chen J, Patil KR, Yeo BTT, Eickhoff SB. Leveraging machine learning for gaining neurobiological and nosological insights in psychiatric research. Biol Psychiatry. 2023;93(1):18–28.

Page MJ, McKenzie JE, Bossuyt PM, Boutron I, Hoffmann TC, Mulrow CD, Shamseer L, Tetzlaff JM, Akl EA, Brennan SE, et al. The PRISMA 2020 statement: an updated guideline for reporting systematic reviews. BMJ. 2021;372:n71.

Moons KG, de Groot JA, Bouwmeester W, Vergouwe Y, Mallett S, Altman DG, Reitsma JB, Collins GS. Critical appraisal and data extraction for systematic reviews of prediction modelling studies: the CHARMS checklist. PLoS Med. 2014;11(10):e1001744.

Collins GS, Reitsma JB, Altman DG, Moons KG. Transparent reporting of a multivariable prediction model for individual prognosis or diagnosis (TRIPOD): the TRIPOD statement. BMJ. 2015;350:g7594.

Nam CW. World Economic Outlook for 2020 and 2021. CESifo Forum. 2020;21(2):58–9. https://www.proquest.com/openview/2b714d1282ff098661c0d252c4db128b/1?cbl=43805&pq-origsite=gscholar&parentSessionId=7a4xwuy%2B60cPGopgOGEQ6SUez3gxXxwiOjjkxULCRuI%3D.

How does the World Bank classify countries? https://papers.ssrn.com/sol3/papers.cfm?abstract_id=2741183.

Dagum C. A new approach to the decomposition of the Gini income inequality ratio. Empir Econ. 1997;22:515–31.

Hamed KH, Ramachandra Rao A. A modified Mann-Kendall trend test for autocorrelated data. J Hydrol. 1998;204(1):182–96.

Battle DE. Diagnostic and Statistical Manual of Mental Disorders (DSM). CoDAS. 2013;25(2):191–2.

Huang Y, Wang Y, Wang H, Liu Z, Yu X, Yan J, Yu Y, Kou C, Xu X, Lu J, et al. Prevalence of mental disorders in China: a cross-sectional epidemiological study. Lancet Psychiatry. 2019;6(3):211–24.

Ormel J, VonKorff M. Reducing common mental disorder prevalence in populations. JAMA Psychiat. 2021;78(4):359–60.

Flint C, Cearns M, Opel N, Redlich R, Mehler DMA, Emden D, Winter NR, Leenings R, Eickhoff SB, Kircher T, et al. Systematic misestimation of machine learning performance in neuroimaging studies of depression. Neuropsychopharmacology. 2021;46(8):1510–7.

Woo CW, Chang LJ, Lindquist MA, Wager TD. Building better biomarkers: brain models in translational neuroimaging. Nat Neurosci. 2017;20(3):365–77.

Collins GS, Dhiman P, Andaur Navarro CL, Ma J, Hooft L, Reitsma JB, Logullo P, Beam AL, Peng L, Van Calster B, et al. Protocol for development of a reporting guideline (TRIPOD-AI) and risk of bias tool (PROBAST-AI) for diagnostic and prognostic prediction model studies based on artificial intelligence. BMJ Open. 2021;11(7):e048008.

Poldrack RA, Baker CI, Durnez J, Gorgolewski KJ, Matthews PM, Munafò MR, Nichols TE, Poline JB, Vul E, Yarkoni T. Scanning the horizon: towards transparent and reproducible neuroimaging research. Nat Rev Neurosci. 2017;18(2):115–26.

Knoth IS, Lajnef T, Rigoulot S, Lacourse K, Vannasing P, Michaud JL, Jacquemont S, Major P, Jerbi K, Lippé S. Auditory repetition suppression alterations in relation to cognitive functioning in fragile X syndrome: a combined EEG and machine learning approach. J Neurodev Disord. 2018;10(1):4.

Pedersen M, Curwood EK, Archer JS, Abbott DF, Jackson GD. Brain regions with abnormal network properties in severe epilepsy of Lennox-Gastaut phenotype: multivariate analysis of task-free fMRI. Epilepsia. 2015;56(11):1767–73.

Wang Y, Yuan L, Shi J, Greve A, Ye J, Toga AW, Reiss AL, Thompson PM. Applying tensor-based morphometry to parametric surfaces can improve MRI-based disease diagnosis. Neuroimage. 2013;74:209–30.

Hoeft F, Walter E, Lightbody AA, Hazlett HC, Chang C, Piven J, Reiss AL. Neuroanatomical differences in toddler boys with fragile X syndrome and idiopathic autism. Arch Gen Psychiatry. 2011;68(3):295–305.

Parisot S, Ktena SI, Ferrante E, Lee M, Guerrero R, Glocker B, Rueckert D. Disease prediction using graph convolutional networks: application to autism spectrum disorder and Alzheimer’s disease. Med Image Anal. 2018;48:117–30.

Matlis S, Boric K, Chu CJ, Kramer MA. Robust disruptions in electroencephalogram cortical oscillations and large-scale functional networks in autism. BMC Neurol. 2015;15:97.

Ingalhalikar M, Parker D, Bloy L, Roberts TP, Verma R. Diffusion based abnormality markers of pathology: toward learned diagnostic prediction of ASD. Neuroimage. 2011;57(3):918–27.

Shahamat H, Saniee Abadeh M. Brain MRI analysis using a deep learning based evolutionary approach. Neural Netw. 2020;126:218–34.

Bajestani GS, Behrooz M, Khani AG, Nouri-Baygi M, Mollaei A. Diagnosis of autism spectrum disorder based on complex network features. Comput Methods Programs Biomed. 2019;177:277–83.

Lanka P, Rangaprakash D, Dretsch MN, Katz JS, Denney TS Jr, Deshpande G. Supervised machine learning for diagnostic classification from large-scale neuroimaging datasets. Brain Imaging Behav. 2020;14(6):2378–416.

Zhang L, Wang XH, Li L. Diagnosing autism spectrum disorder using brain entropy: a fast entropy method. Comput Methods Programs Biomed. 2020;190:105240.

Rakić M, Cabezas M, Kushibar K, Oliver A, Lladó X. Improving the detection of autism spectrum disorder by combining structural and functional MRI information. NeuroImage Clin. 2020;25:102181.

Ahmadlou M, Adeli H, Adeli A. Fractality and a wavelet-chaos-neural network methodology for EEG-based diagnosis of autistic spectrum disorder. J Clin Neurophysiol. 2010;27(5):328–33.

Libero LE, DeRamus TP, Lahti AC, Deshpande G, Kana RK. Multimodal neuroimaging based classification of autism spectrum disorder using anatomical, neurochemical, and white matter correlates. Cortex. 2015;66:46–59.

Pham TH, Vicnesh J, Wei JKE, Oh SL, Arunkumar N, Abdulhay EW, Ciaccio EJ, Acharya UR. Autism spectrum disorder diagnostic system using HOS bispectrum with EEG signals. Int J Environ Res Public Health. 2020;17(3):971.

Graa O, Rekik I. Multi-view learning-based data proliferator for boosting classification using highly imbalanced classes. J Neurosci Methods. 2019;327:108344.

Ingalhalikar M, Smith AR, Bloy L, Gur R, Roberts TP, Verma R. Identifying sub-populations via unsupervised cluster analysis on multi-edge similarity graphs. Med Image Comput Comput Assist Interv. 2012;15(Pt 2):254–61.

Khosla M, Jamison K, Kuceyeski A, Sabuncu MR. Ensemble learning with 3D convolutional neural networks for functional connectome-based prediction. Neuroimage. 2019;199:651–62.

Li H, Parikh NA, He L. A novel transfer learning approach to enhance deep neural network classification of brain functional connectomes. Front Neurosci. 2018;12:491.

Sen B, Borle NC, Greiner R, Brown MRG. A general prediction model for the detection of ADHD and Autism using structural and functional MRI. PLoS One. 2018;13(4):e0194856.

Xu L, Hua Q, Yu J, Li J. Classification of autism spectrum disorder based on sample entropy of spontaneous functional near infra-red spectroscopy signal. Clin Neurophysiol. 2020;131(6):1365–74.

Ma X, Wang XH, Li L. Identifying individuals with autism spectrum disorder based on the principal components of whole-brain phase synchrony. Neurosci Lett. 2021;742:135519.

Rakhimberdina Z, Liu X, Murata AT. Population graph-based multi-model ensemble method for diagnosing autism spectrum disorder. Sensors (Basel). 2020;20(21):6001.

Tsiaras V, Simos PG, Rezaie R, Sheth BR, Garyfallidis E, Castillo EM, Papanicolaou AC. Extracting biomarkers of autism from MEG resting-state functional connectivity networks. Comput Biol Med. 2011;41(12):1166–77.

Wang H, Chen C, Fushing H. Extracting multiscale pattern information of fMRI based functional brain connectivity with application on classification of autism spectrum disorders. PLoS One. 2012;7(10):e45502.

Hu J, Cao L, Li T, Liao B, Dong S, Li P. Interpretable learning approaches in resting-state functional connectivity analysis: the case of autism spectrum disorder. Comput Math Methods Med. 2020;2020:1394830.

Jung M, Tu Y, Park J, Jorgenson K, Lang C, Song W, Kong J. Surface-based shared and distinct resting functional connectivity in attention-deficit hyperactivity disorder and autism spectrum disorder. Br J Psychiatry. 2019;214(6):339–44.

Heinsfeld AS, Franco AR, Craddock RC, Buchweitz A, Meneguzzi F. Identification of autism spectrum disorder using deep learning and the ABIDE dataset. NeuroImage Clin. 2018;17:16–23.

Bhaumik R, Pradhan A, Das S, Bhaumik DK. Predicting autism spectrum disorder using domain-adaptive cross-site evaluation. Neuroinformatics. 2018;16(2):197–205.

Wang L, Wee CY, Tang X, Yap PT, Shen D. Multi-task feature selection via supervised canonical graph matching for diagnosis of autism spectrum disorder. Brain Imaging Behav. 2016;10(1):33–40.

Ecker C, Rocha-Rego V, Johnston P, Mourao-Miranda J, Marquand A, Daly EM, Brammer MJ, Murphy C, Murphy DG. Investigating the predictive value of whole-brain structural MR scans in autism: a pattern classification approach. Neuroimage. 2010;49(1):44–56.

Payabvash S, Palacios EM, Owen JP, Wang MB, Tavassoli T, Gerdes M, Brandes-Aitken A, Cuneo D, Marco EJ, Mukherjee P. White matter connectome edge density in children with autism spectrum disorders: potential imaging biomarkers using machine-learning models. Brain Connect. 2019;9(2):209–20.

Price T, Wee CY, Gao W, Shen D. Multiple-network classification of childhood autism using functional connectivity dynamics. Med Image Comput Comput Assist Interv. 2014;17(Pt 3):177–84.

Haweel R, Shalaby A, Mahmoud A, Seada N, Ghoniemy S, Ghazal M, Casanova MF, Barnes GN, El-Baz A. A robust DWT-CNN-based CAD system for early diagnosis of autism using task-based fMRI. Med Phys. 2021;48(5):2315–26.

Chen CP, Keown CL, Jahedi A, Nair A, Pflieger ME, Bailey BA, Müller RA. Diagnostic classification of intrinsic functional connectivity highlights somatosensory, default mode, and visual regions in autism. NeuroImage Clin. 2015;8:238–45.

Anderson JS, Nielsen JA, Froehlich AL, DuBray MB, Druzgal TJ, Cariello AN, Cooperrider JR, Zielinski BA, Ravichandran C, Fletcher PT, et al. Functional connectivity magnetic resonance imaging classification of autism. Brain. 2011;134(Pt 12):3742–54.

Uddin LQ, Supekar K, Lynch CJ, Khouzam A, Phillips J, Feinstein C, Ryali S, Menon V. Salience network-based classification and prediction of symptom severity in children with autism. JAMA Psychiat. 2013;70(8):869–79.

Nielsen JA, Zielinski BA, Fletcher PT, Alexander AL, Lange N, Bigler ED, Lainhart JE, Anderson JS. Multisite functional connectivity MRI classification of autism: ABIDE results. Front Hum Neurosci. 2013;7:599.

Jahedi A, Nasamran CA, Faires B, Fan J, Müller RA. Distributed intrinsic functional connectivity patterns predict diagnostic status in large autism cohort. Brain Connect. 2017;7(8):515–25.