Abstract

Background

Valid cause of death data are essential for health policy formation. The quality of medical certification of cause of death (MCCOD) by physicians directly affects the utility of cause of death data for public policy and hospital management. Whilst training in correct certification has been provided for physicians and medical students, the impact of training is often unknown. This study was conducted to systematically review and meta-analyse the effectiveness of training interventions to improve the quality of MCCOD.

Methods

This review was registered in the International Prospective Register of Systematic Reviews (PROSPERO; Registration ID: CRD42020172547) and followed Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) guidelines. CENTRAL, Ovid MEDLINE and Ovid EMBASE databases were searched using pre-defined search strategies covering the eligibility criteria. Studies were selected with four screening questions in the DistillerSR software. Risk of bias assessments were conducted with GRADE recommendations and ROBINS-I criteria for randomised and non-randomised studies, respectively. Study selection, data extraction and bias assessments were performed independently by two reviewers, with a third reviewer to resolve conflicts. Clinical, methodological and statistical heterogeneity assessments were conducted. Meta-analyses were performed with Review Manager 5.4 software using the ‘generic inverse variance method’ with risk difference as the pooled estimate. A ‘summary of findings’ table was prepared using the ‘GRADEproGDT’ online tool. Sensitivity analyses and narrative synthesis of the findings were also performed.

Results

After de-duplication and restriction to studies published from 1994 onwards, 616 articles were identified and 21 were subsequently selected for synthesis of findings; four interventions underwent meta-analysis. The meta-analyses indicated that selected training interventions significantly reduced error rates among participants, with pooled risk differences of 15–33%. Sensitivity analyses supported the robustness of these estimates. The findings of the narrative synthesis were similarly suggestive of favourable outcomes for both physicians and medical trainees.

Conclusions

Training physicians in correct certification improves the accuracy and policy utility of cause of death data. Investment in MCCOD training activities should be considered as a key component of strategies to improve vital registration systems given the potential of such training to substantially improve the quality of cause of death data.


Background

The death certificate is a permanent, legal record of death that provides important information about the circumstances and cause of death [1]. For deaths that occur in hospitals, or other settings where a doctor is present, death certification is initiated by a medical officer, after which the certificate usually undergoes registration by a national civil registration system [2].

Accurate and timely cause of death reporting is essential for health policy and research purposes [3]. Individual death certificates are routinely aggregated into vital statistics by national civil registration systems, providing the most widely verified sources of mortality data in the form of standardised, comparable, cause-specific mortality figures [4]. These statistics provide essential insights for government policymakers, health managers, healthcare providers, donors and research institutes into common causes of death by age, sex, location and time. The data inform the allocation of resources across an array of stakeholders and disciplines, including medical research and education, disease control, social welfare and development and health promotion [5].

Cause of death

The ‘gold standard’ for cause of death statistics is complete civil registration where each death has an underlying cause assigned by a physician and is coded according to International Classification of Diseases (ICD) rules. Causes of death reported in death certificates are defined by the World Health Organization (WHO) as ‘all those diseases, morbid conditions or injuries which either resulted in or contributed to death and the circumstances of the accident or violence which produced any such injuries’ [6]. Importantly, this definition does not include symptoms and modes of dying.

Medical certification of cause of death

The Medical Certificate of Cause of Death (Fig. 1) is a standardised universal form recommended by the WHO for international use, which has been adopted by most WHO member states [6]. The WHO also provides instructions on correct cause of death reporting to improve the quality of medical certification and subsequent data [7].

When a single cause of death is reported on the death certificate, this becomes the underlying cause of death used for tabulation. When more than one cause of death is reported, the disease or injury which initiated the sequence of events that produced the fatal event becomes the underlying cause of death [6].
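The selection logic described above can be sketched in a few lines. This is a simplified illustration only, assuming that Part I is entered as lines (a) to (d), each holding the conditions written on that line, and that the certifier has reported a correct causal sequence. It models only the basic idea that a condition reported alone on the lowest used line starts the sequence; the further selection and modification rules of ICD-10 Volume 2 are deliberately not modelled. The function name `select_underlying_cause` is hypothetical.

```python
from typing import List, Optional


def select_underlying_cause(part1_lines: List[List[str]]) -> Optional[str]:
    """Simplified sketch of underlying-cause selection from Part I.

    part1_lines holds lines (a) to (d) in order; each line is a list of the
    conditions written on it. The condition reported alone on the lowest used
    line is returned, assuming the certifier's causal sequence is correct.
    None is returned when Part I is empty or when the lowest used line holds
    more than one condition (a case needing the full ICD-10 selection rules,
    which are not modelled here).
    """
    used_lines = [line for line in part1_lines if line]  # skip blank lines
    if not used_lines:
        return None
    lowest = used_lines[-1]
    return lowest[0] if len(lowest) == 1 else None


# Hypothetical certificate: (a) due to (b) due to (c), line (d) left blank.
certificate = [
    ["Pulmonary oedema"],
    ["Acute myocardial infarction"],
    ["Coronary atherosclerosis"],
    [],
]
print(select_underlying_cause(certificate))  # Coronary atherosclerosis
```

Returning `None` rather than guessing keeps the sketch honest: ambiguous certificates are exactly the cases where coders must apply the full ICD rules.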

Despite the availability of guidance, errors in cause of death certification have been observed across all geographical regions, with inadequate certification by doctors remaining the principal reason for inaccurate death data [8, 9]. Over the past few decades, therefore, training medical doctors in death certification has become a key intervention employed by health services and national governments to improve mortality statistics. Interventions have included improvements in death certificate formats, training programmes on completion of death certificates, development of self-learning educational materials, implementation of cause of death query systems, periodic peer auditing of death certificates and increasing autopsy rates [10,11,12].

Intervention studies on death certification

Several studies have investigated the effectiveness of interventions to improve the quality of death certification [13,14,15]. Whilst improvement in death certification accuracy is often reported, negative findings have also been published [16]. Moreover, there are few randomised controlled trials (RCTs) or similar studies that have produced high-quality evidence. A 2010 literature review identified 129 studies on the effectiveness of educational interventions for death certification, ultimately reviewing 14, including three RCTs [8]. All educational interventions identified in the review improved certain aspects of death certification, although the statistical significance of evaluation results varied with the type of intervention.

Given the absence of any systematic review and meta-analysis of death certification training interventions, as well as the increase in experimental data produced in the past decade and the need—made even more urgent by the COVID-19 pandemic—to strengthen national vital registration and cause of death data systems, further evaluation is essential. In this study, we systematically review and meta-analyse the effectiveness of training interventions for improving the quality of medical certification of cause of death (MCCOD). To our knowledge, no study has specifically investigated interventions intended to reduce errors in MCCOD in a systematic review.

Methods

Preparation and search strategy

This review was registered in the International Prospective Register of Systematic Reviews (PROSPERO; Registration ID: CRD42020172547). Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) guidelines were followed throughout the review process [17].

A comprehensive literature search was conducted to identify published articles investigating the effectiveness of training and education interventions to improve death certification (additional file 1: Fig. S1). The search was conducted on the CENTRAL, Ovid MEDLINE and Ovid EMBASE electronic databases and returned 1060 results, which were exported to the EndNote X9 citation manager and de-duplicated. The remaining 676 records were then restricted to those published from 1994 onwards (the year in which ICD-10 was implemented), leaving 616 studies for screening.

Eligibility criteria and study selection

This study aimed to assess the effectiveness of training interventions for current and prospective physicians in improving the quality of MCCOD, compared with generic academic training in existing curricula (in randomised studies) or with pre-intervention quality parameters (in non-randomised studies) [8]. Two reviewers (BPK and JS) independently reviewed each study against the inclusion/exclusion criteria (additional file 2: Fig. S2), screening titles and abstracts with the DistillerSR online screening software. Full texts of 44 records were then reviewed, together with an additional eight records identified from the study reference lists. Reviewers were blinded to each other’s decisions, and all disputes were resolved by an expert third reviewer (LM). A total of 21 studies were included for data extraction and final analysis (Fig. 2). One reviewer (BPK) extracted data from the selected studies, and the findings were then reviewed by a second reviewer (JS). Disputes were resolved independently by the third reviewer (LM).
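The record flow reported above can be checked with simple arithmetic. The sketch below uses only the counts stated in this section. The derived numbers (for example, 31 exclusions at the full-text stage) are back-calculations under the assumption stated in the code comments, and Fig. 2 remains the authoritative flow diagram.

```python
# Counts reported in the Methods text.
retrieved = 1060                  # CENTRAL + Ovid MEDLINE + Ovid EMBASE
after_dedup = 676                 # after EndNote X9 de-duplication
screened = 616                    # published from 1994 onwards
full_text_from_search = 44
full_text_from_references = 8
included = 21

# Derived counts. Assumption: every full text came either from the screened
# set or from reference lists, and every included study from those full texts.
full_text_assessed = full_text_from_search + full_text_from_references
flow = {
    "duplicates removed": retrieved - after_dedup,
    "excluded as pre-1994": after_dedup - screened,
    "excluded at title/abstract": screened - full_text_from_search,
    "full texts assessed": full_text_assessed,
    "excluded at full text": full_text_assessed - included,
}
for step, count in flow.items():
    print(f"{step}: {count}")
```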

Risk of bias, meta-analysis and narrative synthesis

Selected studies were categorised under ‘randomised’ and ‘non-randomised’, and risk of bias was assessed by two reviewers (BPK and JS) with disputes resolved by the third reviewer (LM). Randomised trials were assessed using the seven domains of the GRADE recommendations, and non-randomised studies were assessed using the seven domains of ROBINS-I criteria [18, 19].

All studies were initially assessed for clinical and methodological heterogeneity [20]. Four interventions were eligible for meta-analysis in relation to five outcomes. As these were before-and-after studies without control groups, the ‘generic inverse variance method’ was used for pooling [21]. Review Manager 5.4 software was used for the meta-analysis, and the effect measure was the ‘risk difference’ (i.e. the difference between the pre- and post-intervention percentages of death certificates with each error). Statistical heterogeneity was assessed using the I² statistic and the chi-square test. When potential outliers were removed to address statistical heterogeneity, sensitivity analyses were performed with and without the excluded studies [22]. The robustness of the effect measures was further explored with sensitivity analyses under both fixed- and random-effects assumptions [22]. Potential publication bias was explored with funnel plots.
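The pooling step described above can be sketched as follows. This is a minimal illustration, not the Review Manager implementation. It assumes that pre- and post-intervention certificates are treated as independent samples when computing each standard error, and it shows only the fixed-effect (inverse-variance) case, with Cochran's Q (the chi-square heterogeneity statistic) and I². The counts are made up.

```python
import math
from typing import List, Tuple


def risk_difference(err_pre: int, n_pre: int, err_post: int, n_post: int) -> Tuple[float, float]:
    """Risk difference (pre minus post error proportion) and its standard error.

    Assumes pre- and post-intervention certificates are independent samples.
    """
    p_pre, p_post = err_pre / n_pre, err_post / n_post
    rd = p_pre - p_post
    se = math.sqrt(p_pre * (1 - p_pre) / n_pre + p_post * (1 - p_post) / n_post)
    return rd, se


def fixed_effect(rds: List[float], ses: List[float]) -> Tuple[float, float, float, float]:
    """Generic inverse-variance (fixed-effect) pooling with Cochran's Q and I²."""
    weights = [1 / se ** 2 for se in ses]
    total = sum(weights)
    pooled = sum(w * rd for w, rd in zip(weights, rds)) / total
    pooled_se = math.sqrt(1 / total)
    # Q is compared with a chi-square distribution on k - 1 degrees of freedom.
    q = sum(w * (rd - pooled) ** 2 for w, rd in zip(weights, rds))
    df = len(rds) - 1
    i2 = max(0.0, (q - df) / q) * 100 if q > 0 else 0.0
    return pooled, pooled_se, q, i2


# Made-up (errors, certificates) before and after training, for illustration only.
studies = [(120, 400, 60, 400), (90, 300, 50, 310), (200, 500, 110, 480)]
rds, ses = zip(*(risk_difference(*s) for s in studies))
pooled, se, q, i2 = fixed_effect(list(rds), list(ses))
print(f"RD = {pooled:.3f} (95% CI {pooled - 1.96 * se:.3f} to {pooled + 1.96 * se:.3f}); "
      f"Q = {q:.2f}; I² = {i2:.0f}%")
```

Weighting by the inverse of each study's variance lets larger, more precise studies contribute more to the pooled risk difference. This is why the method only needs an estimate and its standard error from each study.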

The meta-analysis findings were imported into the ‘GRADEproGDT’ online tool. A ‘summary of findings’ table was prepared, and related narrative components were added to the table [23]. Starting from the study design, certainty was assessed against eight criteria: risk of bias, potential publication bias, imprecision, inconsistency, indirectness, magnitude of effect, dose-response gradient and effect of plausible confounders [24]. Studies or sub-groups that were not included in the meta-analysis were included in a narrative synthesis of findings.
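The GRADE rating logic can be summarised as moving down or up from a starting level. The sketch below is a simplification that assumes each criterion reduces to a whole number of levels. GRADE itself relies on structured judgement rather than a formula, and the starting level depends on the study design and the risk-of-bias tool used. Under the original GRADE approach, randomised trials start at ‘high’ and observational studies at ‘low’. The function and domain names are hypothetical, and the example ratings are not those reported in Table 3.

```python
from typing import Dict, Optional

LEVELS = ("very low", "low", "moderate", "high")
DOWNGRADE_DOMAINS = {"risk_of_bias", "inconsistency", "indirectness",
                     "imprecision", "publication_bias"}
UPGRADE_DOMAINS = {"large_effect", "dose_response", "plausible_confounding"}


def grade_certainty(start_level: str,
                    downgrades: Optional[Dict[str, int]] = None,
                    upgrades: Optional[Dict[str, int]] = None) -> str:
    """Move down or up the GRADE levels from a design-based starting point.

    Each domain value is the number of levels to move (typically 0, 1 or 2).
    The result is clamped between 'very low' and 'high'.
    """
    downgrades = downgrades or {}
    upgrades = upgrades or {}
    unknown = (set(downgrades) - DOWNGRADE_DOMAINS) | (set(upgrades) - UPGRADE_DOMAINS)
    if unknown:
        raise ValueError(f"unknown GRADE domain(s): {sorted(unknown)}")
    score = LEVELS.index(start_level) - sum(downgrades.values()) + sum(upgrades.values())
    return LEVELS[min(max(score, 0), len(LEVELS) - 1)]


# Hypothetical ratings for illustration only.
print(grade_certainty("high", {"risk_of_bias": 1}))                     # moderate
print(grade_certainty("high", {"risk_of_bias": 1, "inconsistency": 1}))  # low
```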

Results

Within the 21 selected articles [13,14,15, 25,26,27,28,29,30,31,32,33,34,35,36,37,38,39,40,41,42], there were 24 distinct interventions, with one article describing four interventions across four countries [30]. In another, findings were stratified under two study populations [27]. Three were randomised controlled trials [13, 35, 37] and 21 were non-randomised interventions. Amongst the latter, one was a non-randomised controlled study [31] whilst the remainder were non-controlled before-after studies. Characteristics of the selected studies are shown in Table 1.

Study populations, interventions and outcomes

In seven interventions, the study populations consisted of medical students [14, 15, 27, 29, 35, 39, 41]. These included first-year students (UK) [35], medical trainees in teaching hospitals (Spain) [41], third-year students (USA) [14] and final-year students (Fiji and Spain) [15, 29]. Generally, however, the study populations were physicians or doctors, referred to as residents (Canada, USA, India) [13, 28, 34, 36], medical interns (South Africa, Spain) [37, 39], postgraduates (USA, India) [31, 36, 40], secondary healthcare physicians (Bahrain) [26], family doctors (Spain, Canada) [27, 33, 39] or Senior House Officers (England) [38].

Seminars, interactive workshops, teaching programmes and training sessions were the terms most commonly used to describe the interventions. These ranged in duration from 45 min [13] to 5 h [27], and some interventions included follow-up sessions on additional days [36]. Other formats included ‘training of trainers’ (Philippines, Myanmar, Sri Lanka) [30], a video (UK) [35] and web-based or online training (USA, Fiji) [14, 15, 31]. In Peru, training complemented an online death certification system [32].

For the majority of interventions, a comparison of certification errors pre- and post-intervention was used as the measure of impact, although some studies developed a special knowledge test or used a quality index. These included the Mid-America-Heart Institute (MAHI) Death-Certificate-Scoring System (two interventions) [13, 14], knowledge assessment tests developed by the investigators (three interventions) [31, 35, 37], and quality indexes providing numerical scores based on ICD volume 2 best-practice certification guidelines [15].

Risk of bias assessments

The risk of bias assessments are shown in Fig. 3a for the randomised studies [13, 35, 37] and in Fig. 3b for the non-randomised studies.

For all randomised studies, ‘blinding of participants and personnel’ was assessed as high-risk given the difficulty of maintaining blinding for training interventions. All three studies had pre-determined outcomes and were rated low risk for ‘selective reporting’.

All but one of the non-randomised studies were before-after studies without a separate control group. Owing to the method of recruitment, none of these studies was rated as low-risk for confounding or selection bias. However, since the intervention periods were clearly defined, all were rated as low-risk for ‘bias in classification of interventions’.

Meta-analysis

Since the interventions targeting medical students were found to be clinically heterogeneous, potential meta-analyses were restricted to those targeting physicians. In anticipation of substantial methodological heterogeneity, meta-analysis of the non-randomised studies was planned separately. Findings of the studies and sub-groups initially entered into the meta-analysis are summarised in additional file 3: Tables S1–S5.

As the initial meta-analyses showed statistical heterogeneity, sensitivity analyses were performed after excluding a potential outlier in each comparison, with both fixed and random effect assumptions (Table 2). Except for ‘ill-defined underlying cause of death’ [43], the direction and significance of the estimates did not change with these sensitivity analyses.
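The sensitivity analysis described above, which re-pools after excluding a potential outlier under both fixed- and random-effects assumptions, can be sketched as a leave-one-out loop. The sketch assumes the random-effects model is DerSimonian–Laird, which is the method Review Manager 5 applies for random-effects inverse-variance analyses. It drops each study in turn, whereas the review excluded one pre-identified outlier per comparison. ‘Significant’ here simply means that the 95% confidence interval excludes zero, and the input values are made up.

```python
import math
from typing import List, Tuple


def pool(rds: List[float], ses: List[float], random_effects: bool = False) -> Tuple[float, float]:
    """Inverse-variance pooled estimate and SE; DerSimonian-Laird if random_effects."""
    w = [1 / s ** 2 for s in ses]
    fixed = sum(wi * y for wi, y in zip(w, rds)) / sum(w)
    if not random_effects:
        return fixed, math.sqrt(1 / sum(w))
    q = sum(wi * (y - fixed) ** 2 for wi, y in zip(w, rds))
    c = sum(w) - sum(wi ** 2 for wi in w) / sum(w)
    # Between-study variance (tau²), truncated at zero.
    tau2 = max(0.0, (q - (len(rds) - 1)) / c) if c > 0 else 0.0
    w_re = [1 / (s ** 2 + tau2) for s in ses]
    est = sum(wi * y for wi, y in zip(w_re, rds)) / sum(w_re)
    return est, math.sqrt(1 / sum(w_re))


def leave_one_out(rds: List[float], ses: List[float]) -> list:
    """Re-pool without each study in turn, under both model assumptions."""
    rows = []
    for i in range(len(rds)):
        rest_rd, rest_se = rds[:i] + rds[i + 1:], ses[:i] + ses[i + 1:]
        for model in (False, True):
            est, se = pool(rest_rd, rest_se, random_effects=model)
            lo, hi = est - 1.96 * se, est + 1.96 * se
            rows.append((i, "random" if model else "fixed", est, lo, hi, lo > 0 or hi < 0))
    return rows


# Made-up risk differences; the last study looks like a potential outlier.
example_rds = [0.15, 0.18, 0.20, 0.45]
example_ses = [0.02, 0.025, 0.03, 0.03]
for row in leave_one_out(example_rds, example_ses):
    print("excluded study %d, %s: RD %.3f (%.3f to %.3f), significant=%s" % row)
```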

The forest plots of the five outcomes (i.e. after excluding the outliers) included in the meta-analyses are shown in Fig. 4a–e. Three interventions were included in each meta-analysis [30].

The lowest pooled risk difference (15%) was observed for ‘multiple causes per line’ and ‘ill-defined underlying cause of death’ whereas the highest was for ‘no disease time interval’ (33%).

Funnel plots exploring potential publication bias are shown in Fig. 5a–e.

All funnel plots were generally symmetrical. A cautious interpretation of these is included in the “Discussion” section.
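A funnel plot places each comparison at its risk difference (x-axis) against its standard error (y-axis, usually inverted), with pseudo 95% confidence limits drawn around the pooled estimate. The sketch below only computes these coordinates and leaves plotting to any charting library. The values are made up. With so few comparisons per outcome, any visual judgement of symmetry remains tentative.

```python
from typing import Dict, List


def funnel_points(rds: List[float], ses: List[float], pooled: float) -> List[Dict[str, object]]:
    """Coordinates for a funnel plot of risk differences.

    Each study is plotted at (RD, SE), with SE on an inverted y-axis; the
    pseudo 95% limits at that SE are pooled ± 1.96 × SE.
    """
    points = []
    for rd, se in zip(rds, ses):
        lower, upper = pooled - 1.96 * se, pooled + 1.96 * se
        points.append({"rd": rd, "se": se, "lower": lower, "upper": upper,
                       "inside_limits": lower <= rd <= upper})
    return points


# Made-up values for three comparisons around a pooled RD of 0.20.
for p in funnel_points([0.18, 0.26, 0.50], [0.03, 0.05, 0.10], pooled=0.20):
    print(p)
```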

In the ‘summary of findings’ table (Table 3), the certainty assessments of these five outcomes are presented. ‘Moderate certainty’ was assigned to four outcomes and ‘low certainty’ to one. Findings of related additional studies have also been summarised as comments in Table 3.

Narrative synthesis of other findings

Findings of randomised studies

In two of the three randomised studies conducted on medical interns, overall scores improved with the intervention (p < 0.05) [13, 37]. In the third study, which was conducted on medical students, there was weak evidence for an improvement in the overall performance score (p = 0.046), as well as a ‘skill score’ (p = 0.066) [35]. In one study, ‘correct identification of the COD’ improved more in the intervention group (15% to 91%) compared to the control group (16% to 55%), and ‘erroneous identification of cardiac deaths’ decreased more with the intervention (56% to 6%) compared to the controls (64% to 43%) [13]. In a South African study, three errors (‘mechanism only’, ‘improper sequence’ and ‘absence of time interval’) were significantly reduced in the intervention group only, whereas ‘competing causes’ and ‘abbreviations’ were reduced in both groups [37].

Non-randomised study findings on medical students

Degani et al. (2009) showed improvements in the modified-MAHI score following the intervention (mean difference of 7.1; p < 0.0001) [14]. Vilar and Perez (2007) reported improvements in ‘at least one error’ (p < 0.0001), including ‘mechanism of death only’ (p < 0.0001), ‘improper sequence’ (p < 0.0001), ‘listing cause of death in Part 2’ (p < 0.0001) and ‘mechanism as UCOD’ (p < 0.0001) [41]. In the same study, two error types (‘abbreviations’ and ‘listing two causally related causes as COD’) did not show evidence of improvement (p = 0.413 and p = 0.290) [41]. In a Fijian study, training produced improvements of 1.67% to 19.4% in the following: ‘quality index score’, ‘average error rate’, ‘abbreviations’, ‘sequence’, ‘one cause per line’, ‘not reporting a mode of death’ and ‘legibility’ [15]. In two Spanish studies, the intervention improved performance in ‘sequence’, ‘cause of death’, ‘precision of terms’, ‘abbreviations’ and ‘legibility’ [29, 39].

Other comparisons

Case-wise comparisons with a set of errors were conducted in two studies [25, 27]; most errors decreased following the intervention. In one non-randomised controlled study, a custom performance score increased post-intervention [31]. One study in England explored ‘mentioning consultant’s name’ and ‘completion by a non-involved doctor’, both of which improved following the intervention [38]. In a Canadian study, ‘increased use of specific diseases as UCOD’ and ‘knowledge that conditions such as old age should not be used’ improved in the intervention group [33]. ‘Competing causes’ were less common post-intervention in two Indian studies, with varying strength of evidence (p = 0.001 and p = 0.069) [28, 36], but not in a Canadian study (p = 0.81) [34]. ‘Mechanism of death followed by a legitimate UCOD’ showed non-significant reductions in three studies (45.9% to 36.1%, 13.5% to 7.8% and 16% to 6.6%) [28, 34, 36]. Other studies that assessed ‘presence of at least one major error’ and ‘keeping blank lines’ in the sequence generally showed a reduction following the intervention [30, 34].

Discussion

We conducted a systematic review of the impact of 24 selected interventions to improve the quality of MCCOD. Our meta-analysis suggests that selected training interventions significantly reduced error rates amongst participants, with moderate certainty (four outcomes), and low certainty (one outcome). Similarly, the findings of the narrative synthesis suggest a positive impact on both physicians and medical trainees. These findings highlight the feasibility and importance of strengthening the training of current and prospective physicians in correct MCCOD, which will in turn increase the quality and policy utility of data routinely produced by vital statistics systems in countries.

The systematic approach we followed distinguishes this study from the more common ‘narrative reviews’, whilst the meta-analysis provides pooled and more precise estimates of training impact [44]. Rigorous heterogeneity and ‘certainty of evidence’ assessments were performed. To enable a better comparison of the quality of the selected studies, risk of bias assessments were performed using different criteria for randomised and non-randomised studies [18, 19]. Because conventional direct comparison methods for before-after studies are controversial in the literature, owing to the non-independence of pre- and post-intervention measurements [45], the less controversial ‘generic inverse variance method’ was used in this review.

Irrespective of the study design (i.e. randomised or not) and population (i.e. physicians or medical students), training interventions were shown to reduce diagnostic errors, either as lower error frequencies or as higher scaled scores. Risk differences were used as pooled effect measures and typically suggested that certification errors decreased by 15 to 33 percentage points as a result of the training. Our findings also suggest that refresher training and regular dissemination of MCCOD quality assessment findings can further reduce diagnostic errors. However, due to the inherent limitations of absolute risk estimates such as risk differences, we place greater emphasis on the direction of the effect measure than on its size [46].
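The caution about absolute measures can be made concrete: the same risk difference corresponds to very different relative reductions depending on the baseline error rate. The sketch below is purely illustrative. It assumes, hypothetically, that the 33-point pooled risk difference for ‘no disease time interval’ applied uniformly at both ends of the baseline range reported for that error (37% and 93%). The included studies make no such claim.

```python
def relative_reduction(pre: float, post: float) -> float:
    """Relative reduction in an error proportion: (pre - post) / pre."""
    return (pre - post) / pre


# Illustration only: one absolute risk difference (33 percentage points)
# applied to two hypothetical baseline error rates.
rd = 0.33
for pre in (0.37, 0.93):
    post = pre - rd
    print(f"baseline {pre:.0%} -> {post:.0%}: "
          f"relative reduction {relative_reduction(pre, post):.0%}")
```

At a low baseline, a 33-point drop nearly eliminates the error. At a high baseline, the same drop leaves most of it in place, which is one reason to weigh the direction of the pooled risk difference more heavily than its size.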

The pre-intervention percentages of all error categories selected for meta-analyses were below 51%, except for the category ‘absence of time intervals’, which ranged from 37 to 93% [30]. Based on post-intervention percentages, we therefore conclude that the intervention had a markedly favourable impact. For example, post-intervention errors were reduced to between 6.0 and 20.8% for ‘multiple causes in a single line’ and between 5.8 and 20.3% for ‘improper sequence’. For all interventions reviewed under the meta-analysis, post-training assessments were conducted between 6 months and 2 years after the intervention. Hence, the observed risk differences reflect the impact of the intervention over a longer time period, which is likely to be a more useful measure of the sustainability and effectiveness of training interventions than the more commonly used immediate post-training assessments.

The classification of errors into ‘minor’ or ‘major’ varies between studies. For example, ‘absence of time intervals’ was considered a major error in one study [32], but minor in several others [28, 30, 34, 36]. Some studies, although not all, classified ‘mechanism of death followed by a legitimate UCOD’ as an error [26, 28, 34, 36, 40]. Furthermore, the scoring method and content of the assessment varied between studies [13, 14, 31, 35, 37]. Given this heterogeneity, it is important to focus on the patterns of individual errors and to be clear about how errors are defined before comparing results across studies.

Interestingly, we found greater variation across studies for post-intervention composite error indicators than for specific errors. Across the six interventions considered, post-intervention measures of ‘at least one major error’ ranged from 3.75 to 44.8% [30, 34, 40] whilst the fraction of cases with ‘at least one error’ ranged from 9 to 74.8% [30, 38, 41]. It is also interesting to note that doctors appeared to benefit less from the interventions compared to interns. This may in part reflect lower priority given by doctors to certification compared to patient management, possibly due to limited understanding of the public policy utility of data derived from individual death certificates.

In some studies, it is possible that a small proportion of post-intervention death certificates were actually completed by doctors who had not undergone training, which would dilute the estimated impact of the training interventions. Further, constructing the causal sequence on the death certificate may involve a degree of public health and epidemiological consideration, in addition to clinical reasoning, which some doctors may find challenging to incorporate into the certification process. This could explain the generally lower improvement scores reported for the causal sequence. Finally, correct certification practices depend heavily on doctors’ attitudes towards the process, as well as on the level of monitoring, accountability and feedback related to their certification performance.

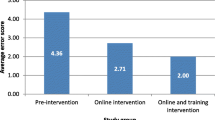

Most interventions were conducted as interactive workshops that enabled participants to undergo ‘on-the-spot’ training [13, 25,26,27,28,29,30, 33, 34, 36, 37, 41]. There is a paucity of studies with control groups that compare different interventions. One study concluded that a ‘face-to-face’ intervention was more effective than ‘printed instructions’ [13]. However, another concluded that an added ‘teaching session’ did not improve performance compared to an ‘education handout’, although both strategies were independently effective [13, 37]. More research is required to test the relative effectiveness of training methods, such as online interventions, compared to those requiring face-to-face interaction.

Our analysis suggests several cost-effective options for improving the quality of medical certification. To the extent that individual-level training of doctors in correct medical certification is costly, strengthening the curricula in medical schools designed to teach medical students how to correctly certify causes of death, and ensuring that these curricula are universally applied, is likely to be the most economical and sustainable way to improve the quality of medical certification. How and when this training is applied prior to completion of medical training is likely to vary from one context to another and will depend on local requirements for internship training. Training smaller groups of physicians as master trainers in medical certification and subsequently rolling out the training in provincial and district hospitals is likely to be an effective and economical interim measure to improve certification accuracy, as has been demonstrated in a number of countries [30].

In some countries, electronic death certification has been used as a means to standardise and improve the quality of cause of death data [32]. Electronic death certification can help avoid certain errors, such as illegible handwriting and reporting multiple causes on a single line (by not allowing the certifier to report more than one condition per line) [47]. An electronic certification system can also generate pop-up messages reminding the certifier not to report modes of dying, or symptoms and signs, as the underlying cause. However, electronic certification cannot improve the accuracy of the causal sequence or prevent the reporting of competing causes, unspecified neoplasms or the non-reporting of external causes. Furthermore, whilst entering cause of death data in free-text format could improve the quality of medical certification [48], enhancing electronic certification with suggested text options and ‘pick’ lists can lead to systematic errors.
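The rule-based checks described above can be sketched as a validation step run before a certificate is submitted. This is a hypothetical illustration, not the logic of any particular national system: the term list, separators and messages are assumptions. A real system would more likely enforce one condition per line through the form design than by parsing text. The sketch flags discouraged terms (modes of dying and ill-defined conditions such as ‘old age’), apparent multiple conditions on one line and blank lines within the sequence. As noted above for electronic certification generally, it cannot judge whether the causal sequence itself is medically correct.

```python
from typing import List

# Hypothetical, deliberately short term list for illustration; a real system
# would rely on a curated, ICD-based list maintained by the registration authority.
DISCOURAGED_TERMS = {"cardiac arrest", "cardiorespiratory arrest",
                     "respiratory arrest", "old age"}
SEPARATORS = (",", ";", " and ")  # crude signs of several conditions on one line


def validate_part1(lines: List[str]) -> List[str]:
    """Return warning messages for Part I lines (a) to (d) of a certificate."""
    lines = lines[:4]
    used = [i for i, text in enumerate(lines) if text.strip()]
    if not used:
        return ["Part I: no cause of death reported."]
    messages = []
    for i, (label, text) in enumerate(zip("abcd", lines)):
        entry = text.strip()
        if not entry:
            # A blank line above the lowest used line breaks the sequence.
            if i < used[-1]:
                messages.append(f"Line ({label}) is blank within the causal sequence.")
            continue
        if any(sep in entry.lower() for sep in SEPARATORS):
            messages.append(f"Line ({label}): report only one condition per line.")
        if entry.lower() in DISCOURAGED_TERMS:
            messages.append(f"Line ({label}): '{entry}' is not an acceptable cause; "
                            "report the disease or injury that caused it.")
    return messages


for msg in validate_part1(["Cardiac arrest", "", "Pneumonia, diabetes mellitus", ""]):
    print(msg)
```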

This review has several limitations. The studies examined in this review included a diverse range of participants and intervention methods and were conducted in various cultural settings. The duration and modality of the training interventions varied substantially across studies. Only three interventions were randomised, and due to the diversity in non-randomised studies, the potential influence of confounding factors on the quality parameters assessed cannot be excluded. These factors were, however, considered in risk of bias and heterogeneity assessments.

There is also considerable subjectivity in the assessment of some criteria, including ‘legibility’ and ‘incorrect sequence’, which could lead to bias in the assessments. Although outcomes were usually pre-defined, adherence to risk-lowering strategies, such as ‘blinding the assessor’, was often not described [14, 15, 25, 26, 28,29,30,31,32,33, 36, 38,39,40,41,42]. Despite the inclusion of only three interventions, each meta-analysis included an adequate number of at least 1500 observations per group. Although funnel plots were presented for a gross exploration of publication bias, their interpretation is generally recommended only for meta-analyses with more than 10 comparisons. Furthermore, little evidence is available on the appropriateness of funnel plots drawn with risk differences [49].

Conclusions

Both pooled estimates and narrative findings demonstrate the effectiveness of training interventions in improving the accuracy of death certification. Meta-analyses showed that these interventions are effective in reducing diagnostic errors, including ‘no time interval’, ‘using abbreviations’, ‘improper sequence’ and ‘multiple causes per line’ (moderate certainty), and ‘ill-defined underlying COD’ (low certainty). In general, ‘no time interval’ was the most common pre-intervention error and ‘illegibility’ the least common. ‘No time interval’ also appeared to be the error that improved most following intervention, as evidenced by both the pooled and narrative findings.

Strategic investment in MCCOD training activities will enable long-term improvements in the quality of cause of death data in civil registration and vital statistics (CRVS) systems, thus improving the utility of these data for health policy. Whilst these findings strengthen the evidence base for improving the quality of MCCOD, more research is needed on the relative effectiveness of different training methods in different study populations. From the limited evidence thus far, our meta-analysis indicates that training doctors and interns in correct cause of death certification can increase the accuracy of certification and should be routinely implemented in all settings as a means of improving the quality of cause of death data.

Availability of data and materials

All data generated or analysed during this study are included in this published article (and its supplementary information files).

References

Huffman GB. Death certificates: why it matters how your patient died. Am Fam Physician. 1997;56(5):1287–8, 90.

World Health Organization, University of Queensland. Improving the quality and use of birth, death and cause-of-death information: guidance for a standards-based review of country practices. 2010.

NCHS. Physicians’ Handbook on Medical Certification of Death. DHHS Publication No. (PHS) 2003-1108. Hyattsville, Maryland: U.S. Department of Health and Human Services, Centers for Disease Control and Prevention, National Center for Health Statistics; 2004.

Byass P. Who needs cause-of-death data? PLoS Med. 2007;4(11):e333.

Sibai AM. Mortality certification and cause-of-death reporting in developing countries. Bull World Health Organ. 2004;82(2):83.

WHO. International Statistical Classification of Diseases and Related Health Problems. 10th Revision. Vol. 2. Geneva: World Health Organization; 1993.

World Health Organization. ICD-10 International statistical classification of diseases and related health problems, volume 2: instruction manual. 5th ed. Geneva: World Health Organization; 2016.

Aung E, Rao C, Walker S. Teaching cause-of-death certification: lessons from international experience. Postgrad Med J. 2010;86(1013):143–52.

Jimenez-Cruz A, Leyva-Pacheco R, Bacardi-Gascon M. Errors in the certification of deaths from cancer and the limitations for interpreting the site of origin. Salud Publica Mex. 1993;35(5):487–93.

Jougla E, Pavillon G, Rossollin F, De Smedt M, Bonte J. Improvement of the quality and comparability of causes-of-death statistics inside the European Community. EUROSTAT Task Force on “causes of death statistics”. Rev Epidemiol Sante Publique. 1998;46(6):447–56.

Rosenberg HM. Improving cause-of-death statistics. Am J Public Health. 1989;79(5):563–4.

Tangcharoensathien V, Faramnuayphol P, Teokul W, Bundhamcharoen K, Wibulpholprasert S. A critical assessment of mortality statistics in Thailand: potential for improvements. Bull World Health Organ. 2006;84(3):233–8.

Lakkireddy DR, Basarakodu KR, Vacek JL, Kondur AK, Ramachandruni SK, Esterbrooks DJ, et al. Improving death certificate completion: a trial of two training interventions. J Gen Intern Med. 2007;22(4):544–8.

Degani AT, Patel RM, Smith BE, Grimsley E. The effect of student training on accuracy of completion of death certificates. Med Educ Online. 2009;14:17.

Walker S, Rampatige R, Wainiqolo I, Aumua A. An accessible method for teaching doctors about death certification. Health Inf Manag. 2012;41(1):4–10.

Davaridolatabadi N, Sadoughi F, Meidani Z, Shahi M. The effect of educational intervention on medical diagnosis recording among residents. Acta Inform Med. 2013;21(3):173–5.

Liberati A, Altman DG, Tetzlaff J, Mulrow C, Gotzsche PC, Ioannidis JP, et al. The PRISMA statement for reporting systematic reviews and meta-analyses of studies that evaluate healthcare interventions: explanation and elaboration. BMJ. 2009;339:b2700.

Guyatt GH, Oxman AD, Vist G, Kunz R, Brozek J, Alonso-Coello P, et al. GRADE guidelines: 4. Rating the quality of evidence--study limitations (risk of bias). J Clin Epidemiol. 2011;64(4):407–15.

Sterne JA, Hernan MA, Reeves BC, Savovic J, Berkman ND, Viswanathan M, et al. ROBINS-I: a tool for assessing risk of bias in non-randomised studies of interventions. BMJ. 2016;355:i4919.

Higgins JPT, Green S (editors). What is heterogeneity? The Cochrane Collaboration; 2011. Available from: https://handbook-5-1.cochrane.org/chapter_9/9_5_1_what_is_heterogeneity.htm. Accessed 30 Jun 2020.

Higgins JPT, Green S (editors). Effect estimates and generic inverse variance meta-analysis. The Cochrane Collaboration; 2011. Available from: https://handbook-5-1.cochrane.org/chapter_7/7_7_7_1_effect_estimates_and_generic_inverse_variance.htm. Accessed 30 Jun 2020.

Higgins JPT, Green S (editors). Strategies for addressing heterogeneity. The Cochrane Collaboration; 2011. Available from: https://handbook-5-1.cochrane.org/chapter_9/9_5_3_strategies_for_addressing_heterogeneity.htm. Accessed 30 Jun 2020.

Guyatt GH, Oxman AD, Santesso N, Helfand M, Vist G, Kunz R, et al. GRADE guidelines: 12. Preparing summary of findings tables-binary outcomes. J Clin Epidemiol. 2013;66(2):158–72.

Balshem H, Helfand M, Schunemann HJ, Oxman AD, Kunz R, Brozek J, et al. GRADE guidelines: 3. Rating the quality of evidence. J Clin Epidemiol. 2011;64(4):401–6.

Abós R, Pérez G, Rovira E, Canela J, Domènech J, Bardina JR. Programa piloto para la mejora de la certificación de las causas de muerte en atención primaria en Cataluña (A pilot program to improve causes of death certification in primary care of Catalonia, Spain). Gac Sanit. 2006;20(6):450–6.

Ali NMA, Hamadeh RR. Improving the accuracy of death certification among secondary care physicians. Bahrain Med Bull. 2013;35(2):56–9.

Alonso-Sardon M, Iglesias-de-Sena H, Saez-Lorenzo M, Chamorro Fernandez AJ, Salvat-Puig J, Miron-Canelo JA. B-learning training in the certification of causes of death. J Forensic Leg Med. 2015;29:1–5.

Azim A, Singh P, Bhatia P, Baronia AK, Gurjar M, Poddar B, et al. Impact of an educational intervention on errors in death certification: an observational study from the intensive care unit of a tertiary care teaching hospital. J Anaesthesiol Clin Pharmacol. 2014;30(1):78–81.

Canelo JAM, Gonzalez MCS. Eficacia de un seminario informativo en la certificacion de causas de muerte (Efficacy of an informative seminar in the certification of causes of death). Rev Esp Salud Publica. 1995;69:227–32.

Hart JD, Sorchik R, Bo KS, Chowdhury HR, Gamage S, Joshi R, et al. Improving medical certification of cause of death: effective strategies and approaches based on experiences from the Data for Health Initiative. BMC Med. 2020;18(1):74.

Hemans-Henry C, Greene CM, Koppaka R. Integrating public health-oriented e-learning into graduate medical education. Am J Prev Med. 2012;42(6 Suppl 2):S103–6.

Miki J, Rampatige R, Richards N, Adair T, Cortez-Escalante J, Vargas-Herrera J. Saving lives through certifying deaths: assessing the impact of two interventions to improve cause of death data in Peru. BMC Public Health. 2018;18(1):1329.

Myers KA, Eden D. Death duties: workshop on what family physicians are expected to do when patients die. Can Fam Physician. 2007;53(6):1035–8.

Myers KA, Farquhar DR. Improving the accuracy of death certification. CMAJ. 1998;158(10):1317–23.

Pain CH, Aylin P, Taub NA, Botha JL. Death certification: production and evaluation of a training video. Med Educ. 1996;30(6):434–9.

Pandya H, Bose N, Shah R, Chaudhury N, Phatak A. Educational intervention to improve death certification at a teaching hospital. Natl Med J India. 2009;22(6):317–9.

Pieterse D, Groenewald P, Bradshaw D, Burger EH, Rohde J, Reagon G. Death certificates: let's get it right! S Afr Med J. 2009;99(9):643–4.

Selinger CP, Ellis RA, Harrington MG. A good death certificate: improved performance by simple educational measures. Postgrad Med J. 2007;83(978):285–6.

Suarez LC, Lopez CM, Gil JC, Sanchez CN. Aprendizaje y satisfaccion de los talleres de pre y postgrado de medicina para la mejora en la certificacion de las causas de defuncion, 1992-1996 (Learning and Satisfaction of the Workshops for Pre and Postgraduates of Medicine for the Improvement in the Certification of the Causes of Death, 1992-1996). Rev Esp Salud Publica. 1998;72(3):185–95.

Sudharson T, Karthikeyan KJM, Mahender G, Mestri SC, Singh BM. Assessment of standards in issuing cause of death certificate before and after educational intervention. Indian J Forensic Med Toxicol. 2019;13(3):25–8.

Villar J, Perez-Mendez L. Evaluating an educational intervention to improve the accuracy of death certification among trainees from various specialties. BMC Health Serv Res. 2007;7:183.

Wood KA, Weinberg SH, Weinberg ML. Death certification in Northern Alberta: error occurrence rate and educational intervention. Am J Forensic Med Pathol. 2020;41(1):11–7.

University of Melbourne. Avoiding ill-defined and unusable underlying causes. 2018. Available from: https://crvsgateway.info/Avoiding-ill-defined-and-unusable-underlying-causes~343.

Garg AX, Hackam D, Tonelli M. Systematic review and meta-analysis: when one study is just not enough. Clin J Am Soc Nephrol. 2008;3(1):253–60.

Cuijpers P, Weitz E, Cristea IA, Twisk J. Pre-post effect sizes should be avoided in meta-analyses. Epidemiol Psychiatr Sci. 2017;26(4):364–8.

Newcombe RG, Bender R. Implementing GRADE: calculating the risk difference from the baseline risk and the relative risk. Evid Based Med. 2014;19(1):6–8.

Pinto CS, Anderson RN, Martins H, Marques C, Maia C, do Carmo Borralho M. Mortality Information System in Portugal: transition to e-death certification. Eurohealth (Lond). 2016;22(2):1–53.

Lassalle M, Caserio-Schönemann C, Gallay A, Rey G, Fouillet A. Pertinence of electronic death certificates for real-time surveillance and alert, France, 2012-2014. Public Health. 2017;143:85–93.

Higgins JPT, Green S (editors). Recommendations on testing for funnel plot asymmetry. The Cochrane Collaboration; 2011. Available from: https://handbook-5-1.cochrane.org/chapter_10/10_4_3_1_recommendations_on_testing_for_funnel_plot_asymmetry.htm.

Acknowledgements

The authors would like to acknowledge Sara Hudson and Avita Streatfield of the University of Melbourne for proofreading and editing the manuscript.

Funding

The DistillerSR subscription was funded by the Bloomberg Philanthropies Data for Health Initiative at the University of Melbourne.

Author information

Contributions

USHG and ADL conceptualised the review. ADL supervised and guided the overall review. USHG, PKBM, JS, HC, HL, DM and ADL contributed to developing the protocol. USHG registered the review. PKBM and JS conducted the literature search. PKBM, JS and LM contributed to study selection and risk of bias assessments. PKBM, JS and JH contributed to data extraction. PKBM conducted the meta-analysis. DM and USHG coordinated the overall review. All authors contributed to drafting the initial manuscript. JH, HC, HL, LM, DM and ADL were involved in revising the manuscript. All authors read and approved the final manuscript.

Ethics declarations

Ethics approval and consent to participate

Ethics approval is not applicable for this review of previously conducted studies.

Consent for publication

Not applicable

Competing interests

The authors declare that they have no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Additional file 1: Figure S1. Search strategy used in the review of literature.

Additional file 2: Figure S2. Selection criteria used in study selection.

Additional file 3: Tables S1-S5. Data used for meta-analysis.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Gamage, U.S.H., Mahesh, P.K.B., Schnall, J. et al. Effectiveness of training interventions to improve quality of medical certification of cause of death: systematic review and meta-analysis. BMC Med 18, 384 (2020). https://doi.org/10.1186/s12916-020-01840-2

DOI: https://doi.org/10.1186/s12916-020-01840-2