Abstract

Background

Symptoms in patients with advanced cancer are often inadequately captured during encounters with the healthcare team. Emerging evidence demonstrates that weekly electronic home-based patient-reported symptom monitoring with automated alerts to clinicians reduces healthcare utilization, improves health-related quality of life, and lengthens survival. However, oncology practices have lagged in adopting remote symptom monitoring into routine practice, and specific patient populations may face unique barriers. One approach to overcoming these barriers is to use resources from value-based payment models, such as patient navigators, who are ideally positioned to assume a leadership role in remote symptom monitoring implementation. This implementation approach has not been tested as standard of care, and optimal implementation strategies are therefore needed to support large-scale roll-out.

Methods

This hybrid type 2 study design evaluates the implementation and effectiveness of remote symptom monitoring for all patients and for diverse populations in two Southern academic medical centers from 2021 to 2026. This study will utilize a pragmatic approach, evaluating real-world data collected during routine care for quantitative implementation and patient outcomes. The Consolidated Framework for Implementation Research (CFIR) will be used to conduct a qualitative evaluation at key time points to assess barriers and facilitators, implementation strategies, fidelity to implementation strategies, and perceived utility of these strategies. We will use a mixed-methods approach for data interpretation to finalize a formal implementation blueprint.

Discussion

This pragmatic evaluation of real-world implementation of remote symptom monitoring will generate a blueprint for future efforts to scale interventions across health systems with diverse patient populations within value-based healthcare models.

Trial registration

NCT04809740; date of registration 3/22/2021.


Background

Patients with cancer experience a myriad of symptoms, many of which are inadequately assessed during encounters with the healthcare team. Previous studies showed that patients with cancer report symptom severity up to 40% higher than their physician’s report and that a substantial proportion of symptoms are missed entirely [1,2,3]. Patient-reported outcomes (PROs) are emerging as a potential solution to address this gap in symptom identification and ultimately to improve patient management. PROs are defined as “information about a patient’s health status that comes directly from the patient,” which can include symptoms as well as other key patient-reported data such as quality of life and physical function [4]. In patients with advanced cancer, Basch and colleagues assessed weekly electronic home-based PRO symptom monitoring with automated alerts to clinicians (remote symptom monitoring) within a single-site randomized clinical trial, finding reduced emergency department (ED) and hospital visits, improved health-related quality of life, and a 5-month increase in overall survival [5]. Other studies of electronic PROs (ePROs) have similarly found benefits in terms of efficiency of symptom assessment [6,7,8,9]; patient-clinician communication and satisfaction [10,11,12]; symptom control and well-being [9, 13,14,15,16,17,18]; frequency of hospitalizations [19]; and survival [20].

Despite evidence of benefit, general oncology practices have lagged in adopting remote symptom monitoring into routine practice [21]. Prior studies used research funding to support remote symptom monitoring administration and achieved high compliance, with 80–85% of patients in remote symptom monitoring trials willing and able to self-report symptoms [16]. However, patients enrolling in randomized trials of remote symptom monitoring may not represent the general cancer population, and patients who are not on clinical trials may face additional barriers to participation. For example, only 9% of one study’s participants were Black, and few lived in rural areas [5]. Limited data are available on the use of remote symptom monitoring in diverse populations. Moreover, incorporating remote symptom monitoring into routine clinical care at scale is expected to require considerable staff engagement and modifications to the healthcare delivery system, given the complex nature of the intervention. Thus, a substantial knowledge gap remains regarding optimal strategies for implementing remote symptom monitoring in real-world settings where all patients in a cancer center participate, including diverse populations that may differ in their participation, barriers, and outcomes.

One emergent trend that could support the implementation of remote symptom monitoring in real-world settings is the transition toward value-based health care. In 2016, the Center for Medicare and Medicaid Innovation launched the Oncology Care Model (OCM), a nationwide payment reform demonstration project [22]. The OCM and the proposed Oncology Care First Model (Medicare’s proposed payment reform project) require the use of navigators for cancer care coordination in all participating practices, which number more than 100 across the US [22]. The patient navigation workforce is ideally positioned to assume a leadership role in remote symptom monitoring implementation, because collecting and responding to PROs aligns with navigators’ designated roles and responsibilities, such as assessing functional status, depression, and distress while patients are present in clinic [23]. The goals of navigation include enhancing care coordination and proactively managing patient concerns [24]. Patient navigation programs have proven efficacious for increasing access to care [25, 26], improving care coordination [27] and symptom management [28, 29], and reducing cost [30, 31]. These demonstrated benefits have contributed to the growing number of nurse and lay (non-clinical) navigation programs, particularly in practices serving communities with limited resources [32].

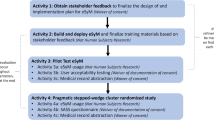

The OCM recommendation to use navigators in cancer centers was based, in part, on a 2012 Center for Medicare and Medicaid Innovation project conducted by the University of Alabama at Birmingham (UAB) and the University of South Alabama Mitchell Cancer Institute (MCI), which showed lower cost and higher quality metrics following implementation of patient navigation services across the southeastern U.S. [24, 33]. We developed a lay navigator-led approach to remote symptom monitoring, which is being implemented as part of routine care at UAB and MCI with support from payment reform initiatives and grant funding. Remote symptom monitoring is predicated on the hypothesis that electronically captured patient-reported symptoms will increase clinical team awareness and prompt clinical action, resulting in improved symptom management and reduced symptom burden. These improvements are expected to have the downstream effects of lowering distress and improving physical function, which will translate to improved tolerance of chemotherapy, reduced hospital utilization and cost, and improved survival (Fig. 1). This pragmatic study will evaluate implementation of navigator-delivered remote symptom monitoring for all patients with cancer across two practice sites (Aim 1); examine the barriers, facilitators, and implementation strategies used in implementing navigator-delivered remote symptom monitoring (Aim 2); and assess the impact of remote symptom monitoring on clinical and utilization outcomes for a general cancer population receiving medical therapies (e.g., chemotherapy, immunotherapy, targeted therapy) (Aim 3), in each case for all patients and for diverse populations. This study will ultimately generate a blueprint of implementation strategies for sustainable, navigator-delivered remote symptom monitoring that does not rely on research funding.

Methods

Study design

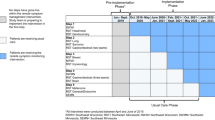

This hybrid type 2 study design [34] simultaneously evaluates the implementation and the effectiveness of remote symptom monitoring as standard of care. Recruitment to the system-wide phase of implementation began in May 2021. At the time of manuscript submission, remote symptom monitoring implementation and program evaluation are actively ongoing and will continue through May 2026. This mixed methods study has two key components: (1) secondary data analysis to evaluate key implementation outcomes (e.g., service penetrance and provider adoption/penetrance) and patient outcomes (e.g., symptom burden, healthcare utilization, end-of-life care, cost of care, survival); and (2) qualitative evaluation at key time points to assess barriers and facilitators to remote symptom monitoring, implementation strategies, fidelity to implementation strategies, and perceived utility of these strategies (Table 1). For quantitative outcomes, this study will use a pragmatic approach, evaluating real-world data collected as part of routine care. We will use a mixed methods approach (QUAL + QUAN) [35] to triangulate the qualitative and quantitative findings, integrating both data sources through parallel side-by-side comparisons to interpret findings and to finalize a formal implementation blueprint. The study schema is shown in Fig. 2.

This study was approved by a central Institutional Review Board (IRB) at UAB, which served as the IRB of record for this project, with secondary approval from MCI and the University of North Carolina at Chapel Hill. The trial, Evaluating the Implementation and Impact of Navigator-Delivered Remote Symptom Monitoring, is supported by the National Institute of Nursing Research (1R01NR019058–01).

Implementation setting

UAB and MCI are academic medical institutions in Alabama that treat largely nonoverlapping but similar populations. These institutions serve historically diverse populations, including Black patients (25%), rural residents (30%), socioeconomically disadvantaged people (10% dually eligible for Medicare and Medicaid), and people with a high school education or less (15%) [36]. Alabama has a large number of census block groups with high Area Deprivation Index scores, reflecting the state’s low socioeconomic status [37]. Internet connectivity has been a challenge in Alabama; in May 2019, the state passed House Bill 400, which allows electrical providers to use existing and future power networks to deliver high-speed internet in rural communities [38]. In recent years, more than $62 million in grants has been awarded to community partners to provide high-speed internet in Alabama communities with limited access [39]. For this study in routine care, Blue Cross Blue Shield of Alabama (BCBS-AL) provides philanthropic support for mobile phones for patients without high-speed internet access at home.

Standard-of-care components

Remote symptom monitoring implementation

Remote symptom monitoring will be implemented as standard of care, with all patients offered the intervention; therefore, no consent will be required. The remote symptom monitoring process and technology components are shown in Fig. 3. Physicians will introduce the program to new patients as an approach for routine monitoring. Lay navigators will approach patients who are on chemotherapy, targeted therapy, or immunotherapy for enrollment. The navigator will explain the rationale for remote symptom monitoring, help the patient select the e-mail or text option for symptom surveys, and initiate the video-based self-enrollment process. Navigators will guide patients through the technical aspects of participation, and patients will be allowed to opt out. After enrollment, patients will be asked to complete a weekly symptom assessment using the Patient-Reported Outcomes version of the Common Terminology Criteria for Adverse Events (PRO-CTCAE), administered electronically [40]. If a patient does not complete a survey, two reminders will be sent, followed by a notification to the lay navigator to call the patient. Lay navigators will be responsible for retention in remote symptom monitoring, which includes monitoring for survey non-completion and assessing reasons for lack of participation. If a patient reports severe symptoms or a change in symptoms, an automatic notification will be sent to the nurse or nurse navigator as a message in the electronic medical record (EMR). The nurse will call the patient, coordinate care, and communicate with the physician as needed. In clinic, members of the clinical team will review symptoms within an integrated dashboard in the EMR and adjust management as needed.
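The reminder and alert rules in the workflow above can be sketched as a small state machine. This is a minimal illustration, not the PROmpt™ platform’s actual logic: the 0 to 4 severity coding, the “severe” threshold, and the 2-point worsening rule are hypothetical placeholders; only the two-reminders-then-navigator-call sequence comes from the text.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional

# Assumed 0-4 severity coding for PRO-CTCAE-style items:
# 0 = none, 1 = mild, 2 = moderate, 3 = severe, 4 = very severe.
SEVERE_THRESHOLD = 3  # hypothetical: "severe" or worse triggers an alert
WORSENING_DELTA = 2   # hypothetical: a rise of >= 2 points counts as a change
MAX_REMINDERS = 2     # from the protocol: two reminders before escalation


@dataclass
class WeeklySurvey:
    patient_id: str
    reminders_sent: int = 0
    responses: Optional[Dict[str, int]] = None  # item -> severity score
    events: List[str] = field(default_factory=list)

    def tick_without_response(self) -> None:
        """Advance one reminder interval while the survey is incomplete."""
        if self.responses is not None:
            return  # survey completed; nothing to escalate
        if self.reminders_sent < MAX_REMINDERS:
            self.reminders_sent += 1
            self.events.append(f"reminder_{self.reminders_sent}")
        elif "navigator_call" not in self.events:
            # After two unanswered reminders, notify the lay navigator once.
            self.events.append("navigator_call")


def symptom_alerts(current: Dict[str, int],
                   previous: Optional[Dict[str, int]] = None) -> List[str]:
    """Return the symptom items that would generate a nurse alert in the EMR."""
    alerts = []
    for item, score in current.items():
        prior = previous.get(item) if previous else None
        severe = score >= SEVERE_THRESHOLD
        worsened = prior is not None and score - prior >= WORSENING_DELTA
        if severe or worsened:
            alerts.append(item)
    return alerts


if __name__ == "__main__":
    survey = WeeklySurvey("pt-001")
    for _ in range(3):
        survey.tick_without_response()
    print(survey.events)  # ['reminder_1', 'reminder_2', 'navigator_call']
    print(symptom_alerts({"nausea": 3, "fatigue": 2, "pain": 1},
                         {"nausea": 1, "fatigue": 0, "pain": 1}))  # ['nausea', 'fatigue']
```

Keeping escalation (navigator call) separate from symptom alerts (nurse message) mirrors the division of responsibility described above: navigators own retention, nurses own clinical response.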

Staff training

We will offer multiple remote symptom monitoring training sessions for providers and keep detailed logs of the number of providers participating in training. The initial training will be led by the PI or site PI and will include a 30-min didactic presentation on the purpose of remote symptom monitoring, the planned workflow, preliminary data from participating institutions, and a brief overview of the project evaluation plan. If a provider does not attend training, we will document the reason for declining participation. The frequency and percentage of clinical teams (physicians, nurses, navigators) participating in training will be recorded. We expect > 90% staff participation because training will be integrated into routine staff education. The didactic sessions will be complemented by hands-on, practical training led by a super user of the platform. For lay navigators, this includes shadowing followed by observed navigator-led program enrollment over the course of 2–3 weeks. For nurses, hands-on training takes approximately 15–20 min and focuses on locating alerts in the medical record, closing alerts, and reviewing summary dashboards. The super user will also complete routine check-ins with the clinical teams and provide additional review of technology components as needed.

Research components on implementation

Implementation frameworks

The Consolidated Framework for Implementation Research (CFIR) by Damschroder and colleagues is ideally positioned to frame evaluation of multi-level barriers to implementation and of the implementation strategies deployed as part of remote symptom monitoring [41]. The CFIR starts with key intervention characteristics but also hypothesizes that the inner setting, outer setting, characteristics of the individuals involved, and implementation process all influence successful implementation [41]. The CFIR will also guide qualitative evaluations. Proctor’s Implementation Outcome Framework [42] will be used to assess implementation outcomes, providing practical outcome measures both for ongoing quality monitoring and for informing future practice. We will operationalize Proctor’s outcomes using Stover’s PRO implementation science metrics for evaluating PRO initiatives in usual care settings. These metrics integrate prior frameworks to assess the relationships among remote symptom monitoring implementation strategies, proximal variables or mediators, implementation science outcomes, and patient clinical outcomes [43].

Implementation strategy selection

We anticipate barriers (e.g., potential for staff burden) to be encountered during implementation, resulting in the need for additional implementation strategies. We will use the CFIR-ERIC (Expert Recommendations for Implementing Change) Barrier Busting Query Tool V 1.0 and Intervention Mapping [44] to identify appropriate implementation strategies to overcome additional barriers encountered [45]. This approach has been successfully used by Howell and colleagues in implementation of self-management support for symptoms in patients with cancer [46]. Intervention mapping is a rigorous strategy design approach combining theory, evidence, and practice characteristics, which seeks to identify any additional implementation strategies necessary for targeting barriers identified within the proposed study’s qualitative interviews.

Implementation outcome evaluation (quantitative)

Evaluate implementation of navigator-delivered remote symptom monitoring for all cancer patients across multiple practice sites (aim 1)

Sampling and recruitment

This study will include two groups of participants identified through medical record review: (1) patients and (2) clinical team members. The patient group will comprise all adults (aged 18 years or older) receiving their initial treatment with chemotherapy, targeted therapies, or immunotherapies at UAB or MCI from 2021 to 2026, regardless of whether they complete remote symptom monitoring. Patients may receive concurrent treatments (e.g., radiation, surgery). Patients of all races and ethnicities and all insurance types will be included for the duration of their treatment. Patients seen for a second opinion or receiving hormone therapy alone will be excluded. For clinical teams, we will include all medical and gynecologic oncologists, nurses, and navigators (nurse and lay) who care for patients receiving chemotherapy. There are no exclusion criteria for providers.

Data collection

Data from this study will be collected at multiple levels, including the cancer center, clinical team, and patients. Training materials, logs, and minutes will be captured and reviewed on an ongoing basis. The following data will be abstracted from the EMR: age, sex, race and ethnicity, insurance status, home address, cancer type, cancer stage, diagnosis date, treatments received, and dates of treatments. Home address will be used to classify urban or rural residence using Rural-Urban Commuting Area (RUCA) codes and to assign Area Deprivation Index scores, a composite measure of socioeconomic disadvantage [47]. Providers’ roles (e.g., physician, clinic nurse, nurse navigator, lay navigator) will be identified and recorded using personnel lists available through clinic supervisors. Remote symptom monitoring data will be abstracted monthly from the PROmpt™ system (Patient Reported Outcome mobile platform technology), which is integrated into the EMR, and will include: the team member responsible for survey compliance, the individual responsible for alert management, physician, date enrolled, reason for not enrolling, survey administration date, dates of reminders for survey completion, survey completion date, survey responses, alerts, time to close alert, and responses to alerts. Additional chart review will be completed as needed to supplement missing data.
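Urban or rural residence could be derived from RUCA codes roughly as follows. This sketch assumes each home address has already been geocoded to a census tract with an assigned RUCA code, and it uses the common dichotomy of primary codes 1–3 as urban and 4–10 as rural; the protocol does not state which cut point will be used, so that choice is an assumption.

```python
from typing import Optional


def classify_ruca(ruca_code: float) -> Optional[str]:
    """Map a census-tract RUCA code to 'urban' or 'rural'.

    Assumes the common dichotomy in which primary codes 1-3 (metropolitan)
    are urban and primary codes 4-10 (micropolitan, small town, rural) are
    rural. Code 99 (not coded) and any other value return None.
    """
    primary = int(ruca_code)  # secondary codes such as 4.1 keep primary code 4
    if 1 <= primary <= 3:
        return "urban"
    if 4 <= primary <= 10:
        return "rural"
    return None


if __name__ == "__main__":
    # Hypothetical tract-level codes obtained after geocoding home addresses.
    for code in (1.0, 2.1, 4.1, 7.0, 10.0, 99):
        print(code, classify_ruca(code))
```

Returning None rather than guessing keeps uncoded tracts visible as missing data, which matters given the chart-review step described above.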

Outcomes

System changes

Given the multi-level influences on our implementation outcomes, we will record in real time the date and details of any changes in team staffing or organization, institutional policies, or national policies. Staffing and institutional policy changes may be reported by members of the service line or OCM leadership teams.

Implementation outcomes

Implementation outcomes are based on Proctor’s Implementation Outcome Framework [42], tailored using Stover’s PRO implementation science outcome metrics [43]. Patient service penetrance will be evaluated using the following outcomes: (1) percentage of all new adult patients initiating treatment who are approached and who enroll; (2) percentage completing at least one remote symptom monitoring assessment; and (3) percentage completing assessments at 3 months and at 6 months, and percentage of all expected surveys completed. We expect a 75% completion rate, approximately 10 percentage points lower than the 80–85% observed in randomized trials [16], reflecting the application of ePRO surveys in a real-world setting. Provider adoption and penetration will be assessed using training logs and medical record documentation, with the following outcomes: (1) percentage of alerts with a response (> 90% expected, because remote symptom monitoring will be integrated into the clinical team’s workflow and EMR); (2) response time for alerts; and (3) types of responses to alerts. Reports on selected outcomes will be provided monthly to the implementation team as an audit and feedback mechanism to identify implementation challenges.
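A minimal sketch of how the monthly audit-and-feedback metrics might be computed from abstracted records. The record fields are hypothetical, and using enrolled patients as the denominator for completion metrics is an assumption where the text leaves the denominator open; the 3- and 6-month completion metrics would follow the same pattern restricted to surveys due by those time points.

```python
from dataclasses import dataclass
from statistics import median
from typing import Dict, List, Optional


@dataclass
class PatientRecord:
    # Hypothetical per-patient fields abstracted from the EMR and PRO platform.
    approached: bool
    enrolled: bool
    surveys_expected: int
    surveys_completed: int


@dataclass
class Alert:
    responded: bool
    hours_to_close: Optional[float]  # None if the alert was never closed


def pct(numerator: int, denominator: int) -> float:
    """Percentage, or NaN when the denominator is zero."""
    return 100.0 * numerator / denominator if denominator else float("nan")


def service_penetration(patients: List[PatientRecord]) -> Dict[str, float]:
    enrolled = [p for p in patients if p.enrolled]
    return {
        # Denominator: all new adult patients initiating treatment.
        "pct_approached": pct(sum(p.approached for p in patients), len(patients)),
        "pct_enrolled": pct(len(enrolled), len(patients)),
        # Denominator (assumed): enrolled patients.
        "pct_at_least_one": pct(sum(p.surveys_completed >= 1 for p in enrolled), len(enrolled)),
        "pct_expected_completed": pct(sum(p.surveys_completed for p in enrolled),
                                      sum(p.surveys_expected for p in enrolled)),
    }


def provider_adoption(alerts: List[Alert]) -> Dict[str, float]:
    times = [a.hours_to_close for a in alerts
             if a.responded and a.hours_to_close is not None]
    return {
        "pct_alerts_responded": pct(sum(a.responded for a in alerts), len(alerts)),
        "median_hours_to_close": median(times) if times else float("nan"),
    }
```

The median is used for response time because alert-closing times are typically right-skewed; the protocol itself does not specify a summary statistic.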

Intervention fidelity

Recognizing that minor adaptations will be necessary to move implementation from a clinical trial to real-world clinics, we will track intervention fidelity through monthly reviews. Adaptations will be reviewed with the research team at least quarterly and reported using the Framework for Reporting Adaptations and Modifications-Enhanced (FRAME) by Stirman and colleagues [48]. We will include details about adaptations to the intervention in the final implementation blueprint.
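One way to keep the monthly fidelity reviews ready for quarterly FRAME-based reporting is a structured adaptation log. The field names below are hypothetical and only loosely follow FRAME’s reporting elements (timing, planned versus unplanned, who decided, what was modified, level of delivery, fidelity consistency, and reasons).

```python
from dataclasses import asdict, dataclass
from datetime import date
from enum import Enum
from typing import List


class Planning(Enum):
    PLANNED = "planned/proactive"
    UNPLANNED = "unplanned/reactive"


@dataclass(frozen=True)
class Adaptation:
    # Hypothetical field names that loosely mirror FRAME reporting elements.
    recorded_on: date
    when_in_process: str       # e.g., "early implementation", "maintenance"
    planning: Planning
    decided_by: str            # who determined the modification
    what_modified: str         # e.g., content, context, training
    delivery_level: str        # e.g., "site", "navigator team"
    fidelity_consistent: bool  # preserves core elements of the intervention?
    reason: str                # goal and contextual reason for the change


def quarterly_review(log: List[Adaptation], start: date, end: date) -> List[dict]:
    """Return adaptations recorded in [start, end), oldest first, for team review."""
    in_window = [a for a in log if start <= a.recorded_on < end]
    return [asdict(a) for a in sorted(in_window, key=lambda a: a.recorded_on)]
```

Recording each change as an immutable entry at the monthly review, and only filtering at the quarterly meeting, keeps the audit trail intact for the final implementation blueprint.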

Analysis

We will first descriptively summarize baseline health system characteristics, participant demographics, and study outcomes. We will examine differences in patient characteristics between those who participate and those who do not using bivariate measures of association (e.g., Cohen’s d, Cramér’s V). For patient outcomes, site and navigation team will be treated as fixed effects when needed, because all navigation teams will be included. The primary analysis will use logistic regression models to estimate the service penetration proportions of interest throughout the project. Model-predicted means and inverse-link transformations will be used to estimate these proportions and their 95% confidence intervals. Secondary analyses will use logistic regression models to evaluate the association between patient characteristics and penetration outcomes. Patient characteristics will include age, sex, race and ethnicity, rurality (estimated using RUCA codes), driving distance from the cancer care site, and socioeconomic disadvantage (estimated using the Area Deprivation Index). For clinician metrics, generalized linear mixed models with a random effect for clinician team will be used to estimate monthly alert response rates and time to response. A False Discovery Rate approach [49] will be used to correct for multiple inference when appropriate (10% FDR).
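The two computations named in the analysis plan can be sketched for the simplest case. For an intercept-only logistic model, the fitted intercept is the logit of the observed proportion, so the 95% confidence interval can be formed on the logit scale and back-transformed with the inverse link; models with covariates would instead use predicted values and standard errors from a fitted model in statistical software. The second function implements the Benjamini–Hochberg step-up procedure at q = 0.10, assumed here to be the FDR approach cited.

```python
import math
from typing import List, Tuple

Z_975 = 1.959963984540054  # standard normal 97.5th percentile


def logit(p: float) -> float:
    return math.log(p / (1.0 - p))


def expit(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def penetration_ci(successes: int, n: int) -> Tuple[float, float, float]:
    """Proportion and 95% CI from an intercept-only logistic model.

    The intercept MLE is logit(p_hat) with standard error
    1 / sqrt(n * p_hat * (1 - p_hat)); the interval is built on the logit
    scale and mapped back with the inverse link (expit).
    """
    if not 0 < successes < n:
        raise ValueError("need 0 < successes < n for a finite logit")
    p_hat = successes / n
    se = 1.0 / math.sqrt(n * p_hat * (1.0 - p_hat))
    eta = logit(p_hat)
    return p_hat, expit(eta - Z_975 * se), expit(eta + Z_975 * se)


def benjamini_hochberg(p_values: List[float], q: float = 0.10) -> List[bool]:
    """Flag each hypothesis as rejected (True) while controlling the FDR at q."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    cutoff_rank = 0
    for rank, idx in enumerate(order, start=1):
        # Step-up rule: keep the largest rank whose p-value clears rank/m * q.
        if p_values[idx] <= rank / m * q:
            cutoff_rank = rank
    rejected = set(order[:cutoff_rank])
    return [i in rejected for i in range(m)]
```

Building the interval on the logit scale keeps both limits inside (0, 1) and makes them slightly asymmetric around the estimate, which is the practical reason for using the inverse link rather than a symmetric interval on the proportion itself.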

Sample size considerations

UAB and MCI see approximately 4000 and 2600 new patients per year, respectively. We anticipate that approximately 35% of these patients will receive chemotherapy; thus, > 2000 patients will be eligible to participate in remote symptom monitoring each year. We expect implementation to increase over the 5-year study period. In Year 1, we anticipate that at least 30% of eligible patients will be approached (n = 600), increasing by 10 percentage points each year to 70% (n = 1400) in Year 5, for a total of 5000 patients over the funding period. Because this is an opt-out program, we predict, based on our prior PRO work and a recent pilot, that 75% of patients approached will be willing to complete ePROs. Thus, we anticipate that at least 3750 patients will enroll in remote symptom monitoring. Under these assumptions, the expected large sample size provides high power and precision; however, inferences apply only to patient populations from similar systems and in adjacent geographical areas. For the expected 40-percentage-point increase in patients approached between Year 1 and Year 5 (from 30 to 70%), the 95% confidence interval is 36–44%. For the expected 75% participation among patients approached in Years 1–5, the 95% confidence interval is 73–77%.
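The accrual arithmetic above can be restated as a short calculation. This sketch assumes a fixed denominator of 2000 eligible patients per year and uses simple Wald (normal-approximation) intervals, which is our choice rather than a method stated in the protocol; under these assumptions the computed intervals fall within the ranges reported above.

```python
import math

Z_975 = 1.959963984540054  # standard normal 97.5th percentile

# Planning inputs restated from the protocol text; the fixed yearly
# denominator of 2000 eligible patients is an assumption.
ELIGIBLE_PER_YEAR = 2000
APPROACH_PCT = [30, 40, 50, 60, 70]  # Years 1-5, +10 percentage points/year
ENROLL_PCT = 75                      # expected opt-in among those approached

approached = [ELIGIBLE_PER_YEAR * pct // 100 for pct in APPROACH_PCT]
total_approached = sum(approached)
expected_enrolled = total_approached * ENROLL_PCT // 100


def wald_ci(p: float, n: int) -> tuple:
    """Normal-approximation 95% CI for a single proportion."""
    half = Z_975 * math.sqrt(p * (1 - p) / n)
    return p - half, p + half


def wald_diff_ci(p1: float, n1: int, p2: float, n2: int) -> tuple:
    """Normal-approximation 95% CI for p2 - p1 from independent samples."""
    half = Z_975 * math.sqrt(p1 * (1 - p1) / n1 + p2 * (1 - p2) / n2)
    return (p2 - p1) - half, (p2 - p1) + half


if __name__ == "__main__":
    print(approached, total_approached, expected_enrolled)
    print("Year 1 vs Year 5 approach rate:", wald_diff_ci(0.30, 2000, 0.70, 2000))
    print("Participation among approached:", wald_ci(0.75, total_approached))
```

Integer percentages are used for the accrual counts so that the totals (5000 approached, 3750 enrolled) are exact rather than subject to floating-point rounding.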

Implementation outcome evaluation (qualitative)

Examine the barriers, facilitators, and implementation strategies used in implementing navigator-delivered remote symptom monitoring (aim 2)

Sampling and recruitment

For patients, each health system will identify up to 20 patients in Years 1 (baseline), 2 (early implementation), and 4 (maintenance) to participate in individual interviews. Patient participants will be selected using purposive sampling [50] to capture variation in perspectives; we expect to engage patients who differ in race and ethnicity, distance traveled for cancer care, level of prior computer use, education level, and insurance type. For providers, each site will identify a convenience sample of up to ten staff members for interviews. The interviewed staff will include the nursing supervisor and champions representing physicians, nurses, lay navigators, nurse navigators, and administrators. Different patients and providers will be selected at each time point. The sample will be expanded in Year 4 if thematic saturation is not reached for the key themes of barriers, implementation strategies, and benefit of strategies to address barriers [51].

Data collection and outcomes

A multi-disciplinary team including experts in medical oncology, gynecologic oncology, psychology, social and behavioral science, and implementation science will generate an a priori thematic schema. Interviews will be designed to elucidate (1) acceptability of remote symptom monitoring and (2) barriers to its implementation, using CFIR determinants; (3) approaches to operationalizing implementation strategies, drawing on existing literature on implementation strategies and on ePRO delivery within clinical trials; and (4) perceptions of how well selected implementation strategies addressed barriers, along with recommendations for improvement. In-depth interviews will be led jointly by the site PIs and experts in program implementation using interview guides developed by the multidisciplinary research team. The interviews will elucidate important contextual factors based on the CFIR, barriers to implementation, and how implementation strategies are used to overcome these barriers, all of which may influence health systems’ ability to optimally implement and sustain remote symptom monitoring and ultimately improve outcomes. Examples of implementation strategies and associated CFIR targets, barriers, responsible team members, and actions are shown in Table 2. All audio files will be uploaded to a study computer, stored in password-protected files, and transcribed verbatim.

Changes to the base implementation strategy package will be considered adaptations and will be recorded using FRAME, the framework by Stirman and colleagues for reporting adaptations to evidence-based interventions [48]. Implementation strategy fidelity will be assessed through formal tracking of each site’s base implementation strategies, added implementation strategies, adaptations made and the reasons for them, and perceptions of the utility of the strategies deployed; this information will be included in the implementation blueprint.

Analysis

The analytic strategy is primarily informed by content analysis, which examines language to classify text into categories that represent key concepts within the interviews [52]. Qualitative coding and content analysis will consist of identifying quotations that express themes related to remote symptom monitoring barriers, use of implementation strategies, fidelity to implementation strategies, and perceptions of specific implementation strategy benefits [53]. In the initial stages of coding, two independent coders with health behavior and medical anthropology expertise will read the transcripts and develop an open coding scheme, labeling portions of text to identify all ideas, themes, and issues suggested by the data [54]. Analytic codes constructed during open coding are provisional and will be grounded in the data [55]. The final coding schema will be reviewed and finalized by the multi-disciplinary team, which will include the two primary coders, an oncologist, a gynecologic oncologist, a nurse researcher, and implementation scientists. The two primary coders will then use NVivo software (QSR International) to conduct “focused coding,” a detailed analysis of themes identified during open coding. Any discrepancies will be resolved by a third coder. The process will be repeated until thematic saturation is reached, that is, until no new categories or relevant themes emerge [51]. Data from interviews will be analyzed at the aggregate and health system-specific levels. Summaries will be reviewed with the multi-disciplinary team and used to modify the implementation blueprint by adding or removing implementation strategies.

Sample size considerations

Sampling for qualitative inquiries is sequential and targeted to individuals who can provide insights on the study processes. Qualitative studies typically involve < 50 participants [56]. If thematic saturation is not reached, we will increase the number of participants. Access to a diverse group of stakeholders in the two health systems will provide a sufficient participant pool to carry out the inquiry. If differences in qualitative or quantitative outcomes are observed in Years 1–3 for a specific sub-population (e.g., older adults, patients with low socioeconomic status), we will expand the Years 4–5 qualitative interviews to include an additional 10–20 participants from these sub-populations.

Patient outcome evaluation (quantitative)

Assess the impact of remote symptom monitoring on clinical and utilization outcomes (aim 3)

Sampling and recruitment

Patients included in Aim 1 will be included in Aim 3.

Data collection

In addition to the data described above, Aim 3 data will include claims data provided quarterly by Medicare and every 6 months by BCBS-AL. These data will be used to assess patient characteristics, clinical characteristics, healthcare utilization, survival, and cost to the payer.

Patient outcomes

Outcomes will include patient-reported functioning and distress (patient surveys); rates of healthcare utilization (emergency department visits, hospitalizations, intensive care unit admissions), treatment duration, and total cost to payer (claims data); and overall survival (claims data).

Analysis

Patient-reported outcomes and utilization trends will be described for all patients, including those receiving and not receiving remote symptom monitoring. Latent class models stratified by cancer type will be used to explore 6-month symptom trajectory groups and the relationships between group membership and relevant covariates such as age, cancer type/stage, and socioeconomic disadvantage. We will estimate a propensity score (the probability of being a remote symptom monitoring participant given relevant patient characteristics) and use it to match remote symptom monitoring participants with historical controls using radius matching. To minimize potential confounding from changes or improvements in cancer therapy over time, we will restrict the pool of controls to patients initiating treatment up to 3 years before implementation of the remote symptom monitoring intervention. Given this time restriction, matching with replacement (i.e., a control patient could be matched with more than one remote symptom monitoring patient) may be needed to include as many remote symptom monitoring patients as possible in the analyses. We will then use generalized linear or generalized linear mixed models, as appropriate, to conduct between-group comparisons of ePROs, healthcare utilization, and cost of care. For survival analysis, we will recode and censor the survival time of controls as appropriate to match the potential follow-up time of the remote symptom monitoring patients, and we will use time-to-event methods to estimate and provide inferences on survival differences. A False Discovery Rate approach will be used to correct for multiple inference when appropriate (10% FDR).
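Radius matching with replacement on the propensity score can be sketched as follows. The propensity scores are assumed to be precomputed (for example, from a logistic regression of participation on patient characteristics), the 3-year eligibility window for historical controls is assumed to be applied beforehand, and the 0.05 radius is a hypothetical value rather than one specified in the protocol.

```python
from bisect import bisect_left, bisect_right
from typing import Dict, List


def radius_match(treated: Dict[str, float],
                 controls: Dict[str, float],
                 radius: float = 0.05) -> Dict[str, List[str]]:
    """Radius (caliper) matching on precomputed propensity scores, with replacement.

    Every control whose score lies within `radius` of a treated patient's
    score is matched to that patient, and a control may be reused across
    treated patients. Treated patients with no control in range are dropped.
    """
    ranked = sorted(controls.items(), key=lambda kv: kv[1])
    scores = [score for _, score in ranked]
    matches: Dict[str, List[str]] = {}
    for pid, ps in treated.items():
        # Binary search for the block of controls inside [ps - radius, ps + radius].
        lo = bisect_left(scores, ps - radius)
        hi = bisect_right(scores, ps + radius)
        if hi > lo:
            matches[pid] = [cid for cid, _ in ranked[lo:hi]]
    return matches


def control_weights(matches: Dict[str, List[str]]) -> Dict[str, float]:
    """Weight each control by the sum of 1/k over the treated patients it serves,
    where k is that treated patient's number of matched controls."""
    weights: Dict[str, float] = {}
    for cids in matches.values():
        for cid in cids:
            weights[cid] = weights.get(cid, 0.0) + 1.0 / len(cids)
    return weights
```

Weighting each matched control by 1/k gives every matched participant equal total weight in subsequent outcome models, while controls reused across participants accumulate weight, which is how matching with replacement is typically reflected in the comparison.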

Synthesis into implementation blueprint

An initial set of implementation strategies for use by sites was identified for the initial “formal implementation blueprint” [57, 58]. Table 2 includes CFIR targets, planned implementation strategies to address barriers, and qualitative evaluation prompts. The selected strategies focus on building buy-in, educating both stakeholders and participants in the intervention, restructuring the workforce and technology to facilitate uptake, and developing quality management strategies to facilitate successful implementation [57, 58]. To promote intervention sustainability, the investigative team will invite key external stakeholders to annual meetings to discuss implementation progress, review implementation strategies, and assess available data on patient and health system outcomes. Stakeholders will include members of the investigative team, the electronic health record vendor, the patient-reported outcome platform vendor, BCBS-AL, the UAB and MCI cancer center directors, key administrative leadership, the director of the Office of Community Outreach, and a patient advocate. Detailed meeting notes will be captured. These discussions are intended to sustain engagement and support for the intervention and to improve the implementation blueprint. We will provide stakeholders with a final implementation blueprint that other health systems can use to implement navigator-led remote symptom monitoring.

Discussion

Both Medicare’s proposed Oncology Care First Model and the American Society of Clinical Oncology’s Oncology Medical Home demonstration project [59, 60] would require implementation of patient-reported outcomes in routine care. This policy context provides a tremendous opportunity, offering both resources and cultural pressure for implementation. At the same time, including remote symptom monitoring in payment models exposes a vulnerability: rigorous data on implementation outcomes are lacking for oncology practices. Without such data to guide implementation, timely integration of this intervention into practice will be challenging. Applying implementation science methodology to the practice transformation activities required under payment reform addresses a substantial knowledge gap. In addition, the unique alignment of financial incentives for payers, practices, and technology companies creates an opportunity to educate these stakeholders about the benefits of implementation science and to support the sustainability and scalability of interventions and implementation strategies.

This project has a major focus on increasing health equity through evaluation of implementation and patient outcomes for Black patients, rural residents, and/or patients living in poverty. Including these subset analyses will help uncover potential disparities that may emerge during implementation. This is particularly important for remote symptom monitoring, which relies on technology that may not be readily accessible to all patients. Although the project leverages philanthropic funding for telephones and navigator support to overcome such barriers at the outset, additional strategies will likely be needed to support dissemination of this intervention to diverse populations. Findings on implementation strategies that support these patients may also apply to future technology-supported interventions.

A limitation of this study is the potential for missing data: EMR data often have missing values, and claims data will not be available for all patients. However, the benefits of a pragmatic evaluation are anticipated to outweigh these limitations for two key reasons. First, this approach allows inclusion of all patients at the participating institutions, which would not be logistically feasible if primary data collection were required. Second, the project provides a measurement strategy with clearly delineated real-world data sources that any practice could abstract without excessive cost. This offers substantial value to future practices that will track their own implementation and the effectiveness of implementation strategies as part of local quality improvement efforts. Another potential limitation is the reliance on non-study personnel, who may have high turnover or be assigned other duties, particularly in light of the ongoing pandemic. We will monitor and document these real-world challenges and, where appropriate, identify implementation strategies to address them; these strategies will be disseminated through the implementation blueprint. Future studies will consider how implementation strategies differ across a national cohort of sites implementing remote symptom monitoring as part of standard of care.

Availability of data and materials

No data are available for this protocol; data from the project are anticipated to be made available upon request.

Abbreviations

- BCBS-AL: Blue Cross Blue Shield of Alabama
- CFIR: Consolidated Framework for Implementation Research
- ED: Emergency Department
- EMR: Electronic Medical Record
- ePRO: Electronic Patient-Reported Outcomes
- ERIC: Expert Recommendations for Implementing Change
- FRAME: Framework for Modifications and Adaptations
- MCI: University of South Alabama Mitchell Cancer Institute
- OCM: Oncology Care Model
- PRO-CTCAE: Patient-Reported Outcomes version of the Common Terminology Criteria for Adverse Events
- PROmpt: Remote Symptom Monitoring Platform
- RUCA: Rural-Urban Commuting Area
- UAB: University of Alabama at Birmingham

References

Basch E, Iasonos A, McDonough T, et al. Patient versus clinician symptom reporting using the National Cancer Institute common terminology criteria for adverse events: results of a questionnaire-based study. Lancet Oncol. 2006;7:903–9.

Basch E. The missing voice of patients in drug-safety reporting. N Engl J Med. 2010;362:865–9.

Atkinson TM, Ryan SJ, Bennett AV, et al. The association between clinician-based common terminology criteria for adverse events (CTCAE) and patient-reported outcomes (PRO): a systematic review. Support Care Cancer. 2016;24:3669–76.

National Cancer Institute. PRO. In: NCI Dictionary of Cancer Terms. n.d. https://www.cancer.gov/publications/dictionaries/cancer-terms/def/pro.

Basch E, Deal AM, Kris MG, et al. Symptom monitoring with patient-reported outcomes during routine cancer treatment: a randomized controlled trial. J Clin Oncol. 2016;34:557–65.

Seow H, Sussman J, Martelli-Reid L, Pond G, Bainbridge D. Do high symptom scores trigger clinical actions? An audit after implementing electronic symptom screening. J Oncol Pract. 2012;8:e142–8.

Santana MJ, Feeny D, Johnson JA, et al. Assessing the use of health-related quality of life measures in the routine clinical care of lung-transplant patients. Qual Life Res. 2010;19:371–9.

Kroenke K, Krebs EE, Wu J, Yu Z, Chumbler NR, Bair MJ. Telecare collaborative management of chronic pain in primary care: a randomized clinical trial. JAMA. 2014;312:240–8.

Howell D, Li M, Sutradhar R, et al. Integration of patient-reported outcomes (PROs) for personalized symptom management in "real-world" oncology practices: a population-based cohort comparison study of impact on healthcare utilization. Support Care Cancer. 2020;28:4933–42.

Cleeland CS, Wang XS, Shi Q, et al. Automated symptom alerts reduce postoperative symptom severity after cancer surgery: a randomized controlled clinical trial. J Clin Oncol. 2011;29:994–1000.

Gilbert JE, Howell D, King S, et al. Quality improvement in cancer symptom assessment and control: the provincial palliative care integration project (PPCIP). J Pain Symptom Manag. 2012;43:663–78.

Basch E, Stover AM, Schrag D, et al. Clinical utility and user perceptions of a digital system for electronic patient-reported symptom monitoring during routine cancer care: findings from the PRO-TECT trial. JCO Clin Cancer Inform. 2020;4:947–57.

Valderas JM, Kotzeva A, Espallargues M, et al. The impact of measuring patient-reported outcomes in clinical practice: a systematic review of the literature. Qual Life Res. 2008;17:179–93.

Chen J, Ou L, Hollis SJ. A systematic review of the impact of routine collection of patient reported outcome measures on patients, providers and health organisations in an oncologic setting. BMC Health Serv Res. 2013;13:211.

Detmar SB, Muller MJ, Schornagel JH, Wever LD, Aaronson NK. Health-related quality-of-life assessments and patient-physician communication: a randomized controlled trial. JAMA. 2002;288:3027–34.

Basch E, Artz D, Dulko D, et al. Patient online self-reporting of toxicity symptoms during chemotherapy. J Clin Oncol. 2005;23:3552–61.

Velikova G, Booth L, Smith AB, et al. Measuring quality of life in routine oncology practice improves communication and patient well-being: a randomized controlled trial. J Clin Oncol. 2004;22:714–24.

Berry DL, Blumenstein BA, Halpenny B, et al. Enhancing patient-provider communication with the electronic self-report assessment for cancer: a randomized trial. J Clin Oncol. 2011;29:1029–35.

Howell D, Rosberger Z, Mayer C, et al. Personalized symptom management: a quality improvement collaborative for implementation of patient reported outcomes (PROs) in 'real-world' oncology multisite practices. J Patient Rep Outcomes. 2020;4:47.

Denis F, Basch E, Septans AL, et al. Two-year survival comparing web-based symptom monitoring vs routine surveillance following treatment for lung cancer. JAMA. 2019;321:306–7.

Zhang B, Lloyd W, Jahanzeb M, Hassett MJ. Use of patient-reported outcome measures in quality oncology practice initiative-registered practices: results of a National Survey. J Oncol Pract. 2018;14:e602–e11.

Centers for Medicare & Medicaid Services. Oncology Care Model. 2015. http://innovation.cms.gov/initiatives/Oncology-Care/. Accessed 16 Jan 2020.

Rocque GB, Cadden A. Creation of Institute of Medicine care plans with an eye on up-front care. J Oncol Pract. 2017;13:512–4.

Rocque GB, Partridge EE, Pisu M, et al. The Patient Care Connect Program: transforming health care through lay navigation. J Oncol Pract. 2016;12(6):e633–42.

DeGroff A, Schroy PC 3rd, Morrissey KG, et al. Patient navigation for colonoscopy completion: results of an RCT. Am J Prev Med. 2017;53:363–72.

Rohan EA, Slotman B, DeGroff A, Morrissey KG, Murillo J, Schroy P. Refining the patient navigation role in a colorectal cancer screening program: results from an intervention study. J Natl Compr Canc Netw. 2016;14:1371–8.

Gorin SS, Haggstrom D, Han PKJ, Fairfield KM, Krebs P, Clauser SB. Cancer care coordination: a systematic review and meta-analysis of over 30 years of empirical studies. Ann Behav Med. 2017;51:532–46.

Liang H, Tao L, Ford EW, Beydoun MA, Eid SM. The patient-centered oncology care on health care utilization and cost: a systematic review and meta-analysis. Health Care Manage Rev. 2020;45(4):364–76.

Cobran EK, Merino Y, Roach B, Bigelow SM, Godley PA. The independent specialty medical advocate model of patient navigation and intermediate health outcomes in newly diagnosed cancer patients. J Oncol Navig Surviv. 2017;8:454–62.

Rocque GB, Pisu M, Jackson BE, et al. Resource use and Medicare costs during lay navigation for geriatric patients with cancer. JAMA Oncol. 2017;3(6):817–25.

Gerves-Pinquie C, Girault A, Phillips S, Raskin S, Pratt-Chapman M. Economic evaluation of patient navigation programs in colorectal cancer care, a systematic review. Health Econ Rev. 2018;8:12.

Hedlund N, Risendal BC, Pauls H, et al. Dissemination of patient navigation programs across the United States. J Public Health Manag Pract. 2014;20:E15–24.

Rocque GB, Taylor RA, Acemgil A, et al. Guiding lay navigation in geriatric patients with cancer using a distress assessment tool. J Natl Compr Canc Netw. 2016;14:407–14.

Curran GM, Bauer M, Mittman B, Pyne JM, Stetler C. Effectiveness-implementation hybrid designs: combining elements of clinical effectiveness and implementation research to enhance public health impact. Med Care. 2012;50:217–26.

Creswell JW, Plano Clark VL. Designing and conducting mixed methods research. 2nd ed. Washington DC: Sage Publications, Inc.; 2011.

U.S. Census Bureau. Educational attainment: 2013–2017 American Community Survey 5-year estimates. 2017.

Kind AJH, Buckingham WR. Making neighborhood-disadvantage metrics accessible - the neighborhood atlas. N Engl J Med. 2018;378:2456–8.

Alabama Political Reporter. Ivey signs rural broadband initiative into law. 2019.

AL.com. USDA grants to bring high speed Internet to rural Alabama. 2019.

National Cancer Institute. Patient-Reported Outcomes version of the Common Terminology Criteria for Adverse Events (PRO-CTCAE™). http://healthcaredelivery.cancer.gov/pro-ctcae/. Accessed 12 Jul 2016.

Damschroder LJ, Aron DC, Keith RE, Kirsh SR, Alexander JA, Lowery JC. Fostering implementation of health services research findings into practice: a consolidated framework for advancing implementation science. Implement Sci. 2009;4:50.

Proctor E, Silmere H, Raghavan R, et al. Outcomes for implementation research: conceptual distinctions, measurement challenges, and research agenda. Adm Policy Ment Health. 2011;38:65–76.

Stover AM, Haverman L, van Oers HA, Greenhalgh J, Potter CM; ISOQOL PROMs/PREMs in Clinical Practice Implementation Science Work Group. Using an implementation science approach to implement and evaluate patient-reported outcome measures (PROM) initiatives in routine care settings. Qual Life Res. 2021;30(11):3015–33. https://doi.org/10.1007/s11136-020-02564-9.

Bartholomew Eldredge LK. Planning health promotion programs: an intervention mapping approach. 4th ed. San Francisco: Jossey-Bass & Pfeiffer Imprints, Wiley; 2016.

Waltz TJ, Powell BJ, Fernandez ME, Abadie B, Damschroder LJ. Choosing implementation strategies to address contextual barriers: diversity in recommendations and future directions. Implement Sci. 2019;14:42.

Howell D, Powis M, Kirkby R, et al. Improving the quality of self-management support in ambulatory cancer care: a mixed-method study of organisational and clinician readiness, barriers and enablers for tailoring of implementation strategies to multisites. BMJ Qual Saf. 2022;31(1):12–22. https://doi.org/10.1136/bmjqs-2020-012051.

Kind AJ, Jencks S, Brock J, et al. Neighborhood socioeconomic disadvantage and 30-day rehospitalization: a retrospective cohort study. Ann Intern Med. 2014;161:765–74.

Wiltsey Stirman S, Baumann AA, Miller CJ. The FRAME: an expanded framework for reporting adaptations and modifications to evidence-based interventions. Implement Sci. 2019;14:58.

Glickman ME, Rao SR, Schultz MR. False discovery rate control is a recommended alternative to Bonferroni-type adjustments in health studies. J Clin Epidemiol. 2014;67:850–7.

Creswell JW. Qualitative inquiry and research design: choosing among five approaches. 3rd ed. Los Angeles: SAGE Publications; 2013.

Strauss A, Corbin J. Basics of qualitative research. Newbury Park: Sage Publications, Inc.; 1990.

Hsieh HF, Shannon SE. Three approaches to qualitative content analysis. Qual Health Res. 2005;15:1277–88.

Charmaz K. Constructing grounded theory. London; Thousand Oaks, CA: Sage Publications; 2006.

Pope C, Mays N. Reaching the parts other methods cannot reach: an introduction to qualitative methods in health and health services research. BMJ. 1995;311:42–5.

Miles MB, Huberman AM. Qualitative data analysis: an expanded sourcebook. 2nd ed. Thousand Oaks: Sage Publications; 1994.

Polit DF, Beck CT. Nursing research: generating and assessing evidence for nursing practice. 10th ed. Philadelphia: Wolters Kluwer; 2017.

Powell BJ, McMillen JC, Proctor EK, et al. A compilation of strategies for implementing clinical innovations in health and mental health. Med Care Res Rev. 2012;69:123–57.

Powell BJ, Waltz TJ, Chinman MJ, et al. A refined compilation of implementation strategies: results from the expert recommendations for implementing change (ERIC) project. Implement Sci. 2015;10:21.

Centers for Medicare & Medicaid Services. Oncology Care First Model: informal request for information. 2019.

Woofter K, Kennedy EB, Adelson K, et al. Oncology medical home: ASCO and COA standards. JCO Oncol Pract. 2021;17:475–92.

Acknowledgements

Not applicable.

Funding

This work is supported by the National Institute of Nursing Research (R01NR019058). In addition, Dr. Stover is supported by the North Carolina Translational and Clinical Science Institute (1UL1TR002489).

Author information

Contributions

All authors (GR, JD, AS, CD, AA, CH, SI, JF, NC, DD, EB, BJ, DH, BW, JP) participated in the conception and design, assisted with drafting the manuscript, and approved the final version of the manuscript.

Ethics declarations

Ethics approval and consent to participate

This study was approved by the University of Alabama at Birmingham Institutional Review Board for Human Use (IRB-300007406), which served as the IRB of record for this project. No consent is required for this study protocol.

Consent for publication

Not applicable.

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Additional file 1.

Provider Semi-structured Interview Guide – This file contains the interview guide that will be used for each of the provider interviews to be conducted.

Additional file 2.

ePRO Patient Interview Guide – This file contains the interview guide that will be used for each of the patient interviews that will be conducted.

Additional file 3.

UAB IRB Stamped Consent Form for the Patient Interview – This file contains the IRB approved consent form that patients will review and sign if they agree to participate in the patient interview.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Rocque, G.B., Dionne-Odom, J.N., Stover, A.M. et al. Evaluating the implementation and impact of navigator-supported remote symptom monitoring and management: a protocol for a hybrid type 2 clinical trial. BMC Health Serv Res 22, 538 (2022). https://doi.org/10.1186/s12913-022-07914-6

DOI: https://doi.org/10.1186/s12913-022-07914-6