Abstract

Background

Implementation research has emerged as part of evidence-based decision-making efforts to plug current gaps in the translation of research evidence into health policy and practice. While there has been a growing number of initiatives promoting the uptake of implementation research in Africa, its role and effectiveness remain unclear, particularly in the context of universal health coverage (UHC). Hence, this scoping review aimed to identify and characterise the use of implementation research initiatives for assessing UHC-related interventions or programmes in Africa.

Methods

The review protocol was developed based on the methodological framework proposed by Arksey and O’Malley, as enhanced by the Joanna Briggs Institute. The review is reported in accordance with the Preferred Reporting Items for Systematic Reviews and Meta-Analyses Extension for Scoping Reviews (PRISMA-ScR). MEDLINE, Scopus and the Cochrane Library were searched. The search also included a hand search of relevant grey literature and reference lists. Literature sources involving the application of implementation research in the context of UHC in Africa were eligible for inclusion.

Results

The database search yielded 2153 records. We identified 12 additional records from hand search of reference lists. After the removal of duplicates, we had 2051 unique records, of which 26 studies were included in the review. Implementation research was used within ten distinct UHC-related contexts, including HIV; maternal and child health; voluntary male medical circumcision; healthcare financing; immunisation; healthcare data quality; malaria diagnosis; primary healthcare quality improvement; surgery and typhoid fever control. The consolidated framework for implementation research (CFIR) was the most frequently used framework. Qualitative and mixed-methods study designs were the commonest methods used. Implementation research was mostly used to guide post-implementation evaluation of health programmes and the contextualisation of findings to improve future implementation outcomes. The most commonly reported contextual facilitators were political support, funding, sustained collaboration and effective programme leadership. Reported barriers included inadequate human and other resources; lack of incentives; perception of implementation as additional work burden; and socio-cultural barriers.

Conclusions

This review demonstrates that implementation research can be used to achieve UHC-related outcomes in Africa. It has identified important facilitators and barriers to the use of implementation research for promoting UHC in the region.


Background

The need for health decision making to be informed by empirical evidence has been identified as a vital step for achieving universal health coverage (UHC) and equitable access to high-quality health care [1, 2]. It has been recognised that decisions informed by research evidence have the potential to promote equitable service delivery and improve health outcomes at population level, while strengthening health systems [2]. The World Health Organization (WHO) defines UHC as “ensuring that all people have access to needed health services (including prevention, promotion, treatment, rehabilitation and palliation) of sufficient quality to be effective while also ensuring that the use of these services does not expose the user to financial hardship” [3]. Since the 1978 Alma-Ata Declaration and the 1986 Ottawa Charter for Health Promotion, the right to the highest attainable standard of physical and mental health has gained increasing attention [4]. As a result of this prioritisation, UHC was adopted as a target of the Sustainable Development Goals (SDGs), with the aspiration that countries will achieve it by 2030 [5].

With the increasing momentum of global efforts towards the attainment of UHC, countries are often faced with difficult choices regarding the most effective use of available health resources, particularly in contexts of resource limitation, competing healthcare needs and political priorities [6]. Given this inherent complexity, UHC decision making requires adequate consideration of best available and contextually applicable research evidence [6, 7]. While investment in health research and research outputs have grown considerably in Africa over the years, there remain enormous gaps in translating available research evidence into health policy and practice [8]. This so-called ‘know–do gap’ has resulted in suboptimal gains from allocated health resources, in spite of growing investment towards the actualisation of UHC in Africa [2, 9]. The gap is accentuated by the region’s high burden of communicable and non-communicable diseases [10, 11].

Implementation science has emerged in response to this critical gap [12]. Implementation science is an integral part of the broader Evidence-informed Decision Making (EIDM) enterprise. EIDM involves processes of distilling and disseminating the best available evidence from research, practice and experience and using that evidence to inform and improve public health policy and practice [13, 14]. Knowledge translation, knowledge transfer and translational research are EIDM concepts that are closely related to implementation science, used to refer to the processes of moving research-based evidence into policy and practice, through the synthesis, dissemination, exchange and application of knowledge to improve the health of the population [13, 15,16,17]. Although there may be nuanced differences in their conceptualisation, these terms essentially have similar goals and practical implications for improving health outcomes [15,16,17].

There has been no clear consensus on the definition of implementation science [18]. In 2015, Odeny and colleagues published a review of the literature that found 73 unique definitions [19]. Broadly, implementation science has been defined as “the scientific study of methods to promote the systematic uptake of research findings and other evidence-based practices into routine practice, and, hence, to improve the quality and effectiveness of health services” [16]. Because implementation science has cogent applications in both clinical and public health settings, this broad definition suits the field well. Inquiry in implementation science proceeds through research that builds on traditional scientific methods but focuses on a distinct set of questions about improving the use of research in implementation [16, 19]. Thus, implementation science offers a toolkit for addressing the know–do gap [16, 20, 21].

In 2006, Eccles and Mittman proposed a working definition for the emerging field of implementation research, defining it as the “scientific study of methods to promote the adoption and integration of evidence based practices, interventions and policies into routine health care and public health settings” [21]. More recently, in 2013, the WHO’s Alliance for Health Policy and Systems Research (AHPSR) defined it as “the scientific study of the processes used in the implementation of initiatives as well as the contextual factors that affect these processes” [18]. This definition highlights a defining feature of implementation research: it goes beyond the study of methods for promoting the uptake of evidence into routine practice to examine the contextual facilitators of, and barriers to, evidence-based implementation [17, 18]. For this reason, implementation research has been regarded as the heart and soul of implementation science [17]. While the terms implementation science and implementation research have been used interchangeably in the literature, implementation research is the reference term for this review.

The role of implementation research encompasses health policy development and policy communication, as well as programme planning, implementation and evaluation [17, 18]. Various conceptual theories and frameworks have been used to guide implementation research across diverse settings. Some of the most commonly used include the Consolidated Framework for Implementation Research (CFIR), the Reach, Effectiveness, Adoption, Implementation, Maintenance (RE-AIM) framework and the Theoretical Domains Framework (TDF) [22, 23]. To facilitate the use of implementation research in health system decision making and routine practice, three conditions need to be met: (a) availability of rigorous, robust, relevant and reliable evidence; (b) decision-makers’ appreciation of the value and importance of empirical evidence in decision-making processes; and (c) a trusting, mutually respectful and enduring engagement between evidence producers and decision-makers [6, 13, 24].

Various implementation research initiatives and efforts for improving health outcomes have emerged in the African region in the last decade [13, 17, 25,26,27,28]. In spite of this substantial growth, the uptake, effectiveness and scale-up of implementation research in the region are challenged by numerous barriers [25,26,27]. These include inadequate research funding; limited availability of, and access to, research training opportunities; and a paucity of contextually relevant implementation research models [27]. Another major barrier is the lack of political will or commitment to health-related implementation research and to the broader UHC agenda, both of which are intrinsically political pursuits that cannot succeed without adequate political support [27, 29]. Other reported barriers include the untimeliness of research and fragile collaboration between researchers and users of evidence, such as policy-makers and frontline programme implementers [2, 7, 30, 31].

Study rationale

Globally, evidence-based health decision making and implementation models are being adopted as approaches for improving the health of populations [7, 16, 32]. While there has been a growing number of institutions and initiatives promoting the uptake of implementation science and implementation research in Africa, the characteristics and role of these initiatives remain unclear [33, 34].

There is a dearth of synthesised evidence on the role of implementation research in Africa’s health systems and on the extent to which it has been used in the context of UHC on the continent. With limited funding and institutional research capacity to drive implementation research in Africa, there is an urgent need to seek out cross-country learning opportunities that can bolster understanding of implementation research and broader EIDM strategies in the region [11, 35]. A better understanding is important to stimulate greater synergy and collaboration between evidence producers and users, while optimising the overall impact of implemented programmes and of health systems strengthening efforts in the region.

Scoping reviews are an appropriate methodology for thematically reviewing large bodies of literature in order to generate an overview of existing knowledge and practice and to identify existing evidence gaps [36, 37]. Like full systematic reviews, scoping reviews employ methods that are transparent and reproducible, using pre-defined search strategies and inclusion criteria [38, 39]. However, unlike systematic reviews, which often target specific and narrow research questions, scoping reviews typically have a broader focus, including the nature, volume and characteristics of the literature, in order to identify, describe and categorise available evidence on the topic of interest [37,38,39].

Therefore, this scoping review seeks to fill existing gaps in the availability of synthesised evidence on implementation research in the context of UHC, health equity and health systems strengthening within the African region. It maps the region’s implementation research strategies, major actors, reported outcomes, facilitators, and barriers from a diverse body of literature. Ultimately, it seeks to provide a holistic and user-friendly evidence summary of implementation research and key issues in the region for researchers, policymakers and implementers, while identifying lingering knowledge and practice gaps to inform future implementation research efforts.

Methods

Protocol design

An a priori protocol for this review, which has been published elsewhere [40], was designed in accordance with the Arksey and O’Malley scoping review methodology [41], as enhanced by the Joanna Briggs Institute (JBI) [42]. The JBI’s enhanced framework expands the six stages of Arksey and O’Malley into nine distinct stages for undertaking a scoping review: (1) defining the research question; (2) developing the inclusion and exclusion criteria; (3) describing the search strategy; (4) searching for the evidence; (5) selecting the evidence; (6) extracting the evidence; (7) charting the evidence; (8) summarising and reporting the evidence; and (9) consulting with relevant stakeholders. The protocol was disseminated throughout the extensive professional networks of the author group and the World Health Organization (WHO) to solicit feedback. Findings of the review are reported using the Preferred Reporting Items for Systematic reviews and Meta-Analyses extension for Scoping Reviews (PRISMA-ScR) checklist [43].

Conceptual framework

This scoping review used the WHO’s UHC Cube conceptual framework for mapping the processes and outcomes linking implementation research and UHC [44]. This framework uses a cube (see Fig. 1) to depict the multidimensional nature and outcomes of UHC. The cube illustrates three core dimensions of UHC: population coverage of health-related social security systems, financial protection, and access to quality health care according to need [44, 45]. These dimensions provide an assessment framework for UHC-targeted initiatives, reflecting how many (or what proportion of) people received various needed health services of sufficient quality, while being protected from undue financial risks [44]. Although the framework does not take into account specific contextual factors, it has been widely used globally for conceptualising UHC across diverse health systems and contexts [45,46,47].

Defining the research question

Through consultation with the research team and key stakeholders, the main research question was defined as: ‘What are the nature and scope of implementation research initiatives for improving equitable access to quality promotive, preventive, curative, rehabilitative and palliative health services in Africa?’ For the purpose of this review, implementation research is defined within the broader frameworks of implementation science, knowledge translation and evidence-informed decision making. Based on the primary research question, the following specific research questions were defined:

1. How has implementation research been used to assess or evaluate UHC-related interventions and programmes in the African Region?

2. What are the facilitators and barriers to the application, uptake and sustainability of implementation research in UHC-related contexts in Africa?

Inclusion and exclusion criteria

Inclusion criteria

These were generated using the PCC (Population, Concept and Context) framework proposed by Peters and colleagues [48]. This framework is more appropriate for scoping reviews than the commonly used PICO (Population, Intervention, Comparator and Outcome) framework, as it allows for the consideration of publications that may not feature all four PICO elements (e.g. lacking an outcome or a comparator/control). The eligible population included evidence producers (health researchers), intermediaries (such as knowledge brokers and implementation research institutions) and evidence users (such as health policymakers, programme implementers like non-governmental organisations, and healthcare providers). There are two concepts of interest for this review: an intervention concept (implementation research) and an outcome concept (UHC). To be considered for inclusion, implementation research initiatives had to be activities using a specified implementation research framework or theory-based design to facilitate the use of research in UHC-related planning, decision making and implementation. Studies with or without a comparison between implementation research strategies and a control were eligible for inclusion. Outcomes included health service coverage, access (service utilisation and quality of care) and financial risk protection, in line with the UHC Cube framework [44]. Studies that evaluated specific health programme implementation outcomes, barriers or facilitators were included, provided the implementation involved the use of specific implementation research approaches, frameworks or theories. Health systems in Africa were the context of interest. All primary study designs were eligible for inclusion. Further details about the eligibility criteria have been published elsewhere [40].

Exclusion criteria

Publications focused solely or mainly on the theoretical and conceptual development of implementation research were excluded, as were those evaluating implementation research knowledge and practice outcomes without interventions, those evaluating implementation outcomes without using specific implementation research frameworks, and those discussing implementation research strategies that were not UHC-related. Multinational publications involving African and non-African countries that met the inclusion criteria were excluded if country-specific information could not be extracted.

Searching the evidence

The search strategy was developed and applied in accordance with the Peer Review of Electronic Search Strategies (PRESS) guidelines [49]. It was adapted for each database using the appropriate controlled vocabulary and syntax. The strategy combined search terms sensitive enough to capture literature relevant to implementation research, taking due account of the field’s diverse and overlapping nomenclature, with search filters for African countries. An initial exploration of the currently available literature on implementation research and UHC guided the selection of search terms, ensuring they were inclusive enough to capture any UHC-related implementation research intervention. Details of the search strategies for each database are outlined in Additional file 1.

A comprehensive literature search was conducted on the following electronic databases: MEDLINE (via PubMed), Scopus and the Cochrane Library (including the Cochrane Central Register of Controlled Trials (CENTRAL) and the Database of Abstracts of Reviews of Effects (DARE)). Each database was searched from inception until August 15, 2020. Additionally, relevant grey literature, including the website of the WHO Alliance for Health Policy and Systems Research (AHPSR), was searched for implementation research-related reports, and the websites of known implementation research institutions, networks and collaborations were explored. We also conducted a hand search of the reference lists of relevant literature to identify potentially eligible publications. No language restriction was applied; we planned for translation if a potentially eligible publication was in a language other than English. Further details of the planned search strategies are described in the published review protocol [40].

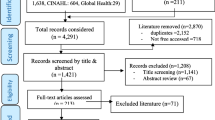

Selecting the evidence

The review process consisted of two levels of screening: title and abstract screening to identify potentially eligible publications, and full-text review to select those to be included based on pre-defined inclusion/exclusion criteria. At the first level, the titles and abstracts of all citations retrieved by the search were screened, and articles deemed relevant proceeded to full-text review. At the second level, the retrieved full texts were assessed against the inclusion/exclusion criteria.

Extracting the evidence

A pre-tested data extraction tool was used to extract relevant information from the included literature. Extracted information included study characteristics (author, year of publication and country context), study design, implementation research details (platform, framework, strategies and target participants), UHC-related target outcomes, and identified contextual facilitators and barriers. All extracted data were validated against the full texts before analysis.

Results

Our database search yielded 2153 records. We identified 12 additional records from a hand search of reference lists. After removal of duplicates, we had 2051 unique records. The titles and abstracts of these records were screened, and 1967 clearly ineligible records were excluded. We conducted a full-text review of the remaining 84 articles, of which 58 were excluded: 54 because they cited but did not use any implementation research framework, two because they did not report UHC-related outcomes and two because they were not based in an African context. Figure 2 describes the study selection process.
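The selection counts above can be cross-checked with simple arithmetic. The sketch below is a minimal illustration, not part of the review's methods: it derives each stage of the PRISMA-ScR flow from the reported figures. The function name `prisma_flow` and the shortened exclusion labels are hypothetical, and the number of duplicates (114) is derived rather than reported.

```python
def prisma_flow(database, hand_search, unique, excluded_screening, excluded_full_text):
    """Derive each stage of a PRISMA-ScR flow from reported counts (hypothetical helper)."""
    identified = database + hand_search
    duplicates = identified - unique
    full_text = unique - excluded_screening
    included = full_text - sum(excluded_full_text.values())
    return {
        "identified": identified,
        "duplicates_removed": duplicates,
        "screened": unique,
        "full_text_assessed": full_text,
        "included": included,
    }


# Counts as reported in the text; exclusion labels are paraphrased.
flow = prisma_flow(
    database=2153,
    hand_search=12,
    unique=2051,
    excluded_screening=1967,
    excluded_full_text={
        "framework cited but not used": 54,
        "no UHC-related outcomes": 2,
        "not an African context": 2,
    },
)

for stage, n in flow.items():
    print(f"{stage:>20}: {n}")

# The derived number of included studies should match the 26 reported.
assert flow["included"] == 26
```

Working backwards from the included studies in this way is a quick check that the stage totals in a flow diagram are internally consistent.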

We included 26 studies in the review (see Table 1: summary of characteristics of included studies). Findings were reported using the Preferred Reporting Items for Systematic reviews and Meta-Analyses extension for Scoping Reviews (PRISMA-ScR) checklist [43], and summarised narratively based on identified themes.

The studies’ publication years ranged from 2013 to 2019. The articles addressed implementation research and UHC in 14 different African countries, including Nigeria (n = 6), Kenya (n = 5), South Africa (n = 5), Mozambique (n = 4), Zambia (n = 3), Uganda (n = 2), Benin (n = 1), Côte d’Ivoire (n = 1), Ghana (n = 1), Malawi (n = 1), Mali (n = 1), Rwanda (n = 1), Tanzania (n = 1) and Zimbabwe (n = 1). Five of the included studies were conducted in multi-country contexts. See Fig. 3.
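Because five studies covered more than one country, the per-country counts sum to more than the 26 included studies. The sketch below shows where the surplus comes from, assuming that each single-country study is counted exactly once; the variable names are illustrative only.

```python
# Study counts per country, as reported above.
country_counts = {
    "Nigeria": 6, "Kenya": 5, "South Africa": 5, "Mozambique": 4,
    "Zambia": 3, "Uganda": 2, "Benin": 1, "Côte d'Ivoire": 1,
    "Ghana": 1, "Malawi": 1, "Mali": 1, "Rwanda": 1,
    "Tanzania": 1, "Zimbabwe": 1,
}
n_studies = 26
n_multi_country = 5

n_countries = len(country_counts)
country_mentions = sum(country_counts.values())

# Assumption: each single-country study contributes exactly one mention,
# so the remaining mentions must come from the multi-country studies.
single_country_mentions = n_studies - n_multi_country
multi_country_mentions = country_mentions - single_country_mentions

print(f"Countries: {n_countries}; country mentions: {country_mentions}")
print(f"Mentions from multi-country studies: {multi_country_mentions}")

# Each multi-country study must cover at least two countries.
assert multi_country_mentions >= 2 * n_multi_country
```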

There were ten distinct UHC-related themes of focus across the articles, including HIV (n = 8) [54, 56, 60, 61, 65, 67, 68, 70], maternal and child health (n = 4) [55, 59, 69, 73], immunisation (n = 3) [50, 51, 72], voluntary male medical circumcision (n = 2) [63, 71], healthcare financing (n = 2) [66, 75], healthcare data quality (n = 2) [62, 64], primary health care quality improvement (n = 2) [57, 58], malaria diagnosis (n = 1) [52], surgery (n = 1) [74] and typhoid fever (n = 1) [53].
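As a consistency check, the citations listed for each theme can be tallied to confirm that every included study falls under exactly one theme. This is a hypothetical sketch; the assumption that references 50 to 75 are exactly the 26 included studies is inferred from the citations in the paragraph above.

```python
# Reference numbers cited for each UHC-related theme in the text above.
themes = {
    "HIV": [54, 56, 60, 61, 65, 67, 68, 70],
    "maternal and child health": [55, 59, 69, 73],
    "immunisation": [50, 51, 72],
    "voluntary male medical circumcision": [63, 71],
    "healthcare financing": [66, 75],
    "healthcare data quality": [62, 64],
    "primary health care quality improvement": [57, 58],
    "malaria diagnosis": [52],
    "surgery": [74],
    "typhoid fever": [53],
}

# Flatten the citations across all themes.
all_refs = [ref for refs in themes.values() for ref in refs]

print(f"Themes: {len(themes)}; studies cited: {len(all_refs)}")

# No study is assigned to two themes, and together the themes cover
# references 50-75 (assumed here to be the 26 included studies).
assert len(set(all_refs)) == len(all_refs)
assert sorted(all_refs) == list(range(50, 76))
```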

All studies addressed at least one of the three dimensions of the UHC Cube, with the majority using implementation research to improve access to health services and the quality of health care. Only two studies specifically addressed the healthcare financing dimension [66, 75].

Qualitative (n = 12) and mixed-methods (n = 12) designs were the most commonly used implementation research study designs, while only two studies used solely quantitative designs. Common qualitative methods across the studies included focus group discussions and key informant interviews. Mixed-methods studies combined these with analysis of quantitative data from routine health facility and programme records and with health worker questionnaire surveys. The Consolidated Framework for Implementation Research (CFIR) was the most commonly used implementation research framework (n = 17). Other frameworks used included the Mid-Range Theoretical framework (n = 1), the Model for Understanding Success in Quality (n = 1), Normalisation Process Theory (n = 1), the Quality Implementation Framework (n = 1), the Reach, Effectiveness, Adoption, Implementation, Maintenance (RE-AIM) framework (n = 1), the Theoretical Domains Framework (TDF) (n = 1) and the Theoretical Framework of Acceptability (n = 1). One study used both the CFIR and the TDF, while another did not report the specific implementation research framework used.
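Expressing the design counts as shares of the 26 included studies makes the tie between qualitative and mixed-methods designs explicit. A minimal sketch using the counts reported above; the dictionary keys are illustrative labels.

```python
# Study design counts as reported above.
designs = {"qualitative": 12, "mixed methods": 12, "quantitative only": 2}

total = sum(designs.values())

# Print each design's count and share of all included studies.
for design, n in designs.items():
    print(f"{design}: {n}/{total} ({100 * n / total:.1f}%)")
```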

Broadly, implementation research was used to guide the design, implementation and evaluation of health programmes and services, as well as the contextualisation of evaluation findings to improve future implementation outcomes. It was most frequently used for post-implementation evaluation of health programmes or activities [51, 53, 55, 56, 61, 62, 66,67,68, 70,71,72,73, 75]. Four studies used implementation research approaches for pre-implementation assessment, piloting and planning [50, 59, 65, 69], while four involved mid-implementation evaluation [54, 60, 63, 74]. Specific implementation research activities included the use of implementation research frameworks or theories to guide planning, stakeholder engagement, data collection, data analysis and implementation evaluation, as well as to identify implementation facilitators and barriers. The majority of the studies (n = 16) targeted health care workers. Others targeted policymakers and health system leaders at national or subnational levels (n = 5), community or lay health workers (n = 3), non-profit organisations implementing health programmes (n = 2) and patients or individuals seeking health services (n = 2). Eleven of the studies focused on more than one category of target participants.

The analytical domains, themes and constructs of the implementation research frameworks varied across studies. Of the studies that used the CFIR, most reported at least one domain or theme, with the corresponding constructs. The most commonly evaluated domains were intervention characteristics, outer setting, inner setting, characteristics of individuals/teams, process and outcomes. Complexity, and networks and communication, were the most commonly used constructs. Finocchario-Kessler and colleagues used the RE-AIM model and reported findings in the reach, effectiveness, adoption, implementation and maintenance domains [60]. Nabyonga-Orem and colleagues used the MDT to guide thematic content analysis and the reporting of findings [66].

The most commonly reported contextual facilitators were political support for programme funding and implementation [51, 53, 55, 56, 61, 62, 66,67,68, 70,71,72,73, 75]; strong and sustained collaborations among stakeholders [59, 65, 69]; goal sharing among stakeholders [51, 55]; effective leadership and administrative support for frontline personnel; peer support [73]; and staff motivation [74, 75]. Others included intervention flexibility [51, 55]; health workers’ confidence in the intervention [51]; supportive leadership [59, 70]; implementing a pilot project before the actual implementation [75]; and performance appraisal with remedial feedback [59, 63]. The most commonly reported barriers included inadequate human and other health care resources, infrastructural gaps, lack of leadership [74], lack of incentives, personnel’s perception of implementation as an additional work burden [74] and socio-cultural barriers. Others included hierarchical relationships between staff [70]; reliance on volunteer-based implementation by actors outside the formal health system, with limited retention, and adverse hierarchical relationships and tension among healthcare providers [55].

As the purpose of the scoping review was to aggregate evidence and present a summary of the evidence rather than to evaluate the quality of the individual evidence, a formal quality appraisal of included literature was not undertaken.

Discussion

This review identified 26 studies that utilised implementation research to address UHC-related issues, ranging from specific diseases to performance-based financing and evidence-based decision-making. This may reflect growing use of implementation research to promote UHC-related outcomes in the African region. Our review shows that qualitative and mixed methods were the most commonly applied designs (12 studies each), while quantitative methods were the least commonly used, consistent with the findings of previous reviews [76, 77]. The increasing use of qualitative methods in implementation research has been driven by the suitability of qualitative enquiry for eliciting the perspectives of implementation stakeholders and gaining a deeper understanding of the implementation context. Although none of the studies explicitly used implementation research in the context of UHC, the implementation outcomes reported in the included studies all related to at least one dimension of the UHC Cube, with the majority aiming to improve access to health services and quality of health care, while only two specifically addressed the healthcare financing dimension.

This review also found that implementation research can be applied at multiple stages: before, during and after implementation. Pre-implementation application can be used to prospectively assess organisational readiness and potential implementation barriers or facilitators, which are important for informing UHC policy making, programme design, planning and implementation. During implementation, implementation research can be used to monitor progress, track the utilisation of resources and identify implementation gaps. At the post-implementation stage, implementation research can be used to evaluate what worked (effectiveness and facilitators) and what did not work (failures and implementation barriers), as well as to interpret and contextualise those findings.

Specific implementation research activities depended on study design. In qualitative studies, implementation research frameworks were mostly used to guide the development of semi-structured interview guides or focus group protocols, data collection and the development of qualitative coding templates for analysis. In quantitative studies, frameworks were often used to guide survey question development and quantitative data analysis. Mixed-methods approaches used qualitative and quantitative methods in a complementary way. While qualitative interviews of implementation stakeholders were the most common implementation research activity, specific activities depended on the stage of implementation at which they were conducted. For example, McRobie and colleagues applied the CFIR to guide pre-implementation baseline data collection and analysis, and to identify potential enablers of, and barriers to, the implementation of national HIV policies on testing, treatment and retention in Uganda [65]. In Zambia, Jones and colleagues used a mid-implementation design to identify and analyse predictors of the success or failure of a voluntary male medical circumcision programme, in order to create an ‘early warning’ system that enables remedial action during implementation [63]. Naidoo and colleagues applied the CFIR post-implementation to map contextual barriers to, and facilitators of, the implementation of community-based HIV programmes, producing actionable findings to improve these programmes within the South African context [67].

The diversity of target participants and UHC-related contexts across studies in this review reflects the multi-dimensional, multi-stakeholder and multi-level use of implementation research to promote UHC-related outcomes. It also reflects the adaptation of implementation research approaches to the complexity of the health systems in the study settings. Another important finding of this review is that the CFIR was the most commonly used implementation research framework, which may reflect its suitability for use in African contexts, as previously noted by Means and colleagues in their review [76].

The most commonly reported contextual facilitators, such as political support, sustained funding, supportive institutional leadership, financial incentives, clarity of goals and strong collaboration among stakeholders, are consistent with those reported in previous reviews [76, 78]. Similarly, the most frequently reported barriers, such as insufficient funding, inadequate human and other health care resources, infrastructural gaps, lack of incentives, perception of implementation as an additional work burden and socio-cultural barriers, have also been previously reported [76]. Weak political commitment has also been reported as a major implementation barrier [74]. Given its economic costs and social implications, the UHC agenda is intensely political, with contested scope and diverse stakeholders capable of facilitating or hindering its progress [29, 79]. As such, advancing the use of implementation research in the context of UHC will require strong political support for funding, for the mobilisation of stakeholders and for the uptake of the evidence generated to inform UHC-oriented policy making, governance, implementation and monitoring. It is imperative to take these facilitators and barriers into account when designing contextually appropriate implementation research strategies for promoting the attainment of UHC-related goals.

This review highlights the growing interest in the use of implementation research and data-driven decision making to improve population-level health outcomes in African contexts. However, further uptake of implementation research is constrained by the barriers outlined above. It is also constrained by the scarcity of good-quality, consistently available, complete and reliable health data, particularly at facility and programme levels [80]. Implementation research presents a practical opportunity to invest in routine health service and programme data collection, with a view to improving the quality and availability of essential health services. Overall, the lessons learnt from the various ways in which implementation research has been applied can inform future efforts to plan, implement and track the performance of health programmes in achieving UHC-related outcomes. These outcomes include improving service delivery, increasing population coverage and widening access, while fostering health system strengthening and resilience in the African region.

One way of increasing the uptake and use of implementation research is to leverage existing monitoring and evaluation systems, which are already substantially established across African countries. However, integrating implementation research into conventional monitoring and evaluation systems will require addressing the challenges posed by sometimes onerous donor reporting requirements. Donor-driven reporting requirements often result in duplicate reporting systems, which burden the limited human resources at health facility and programme levels [62]. In addition, the human resource gaps and inadequate research capacity, knowledge and skills that often constrain the conduct of implementation research need to be addressed. Failure to address human resource shortages can lead health workers to perceive implementation research activities as additional work rather than as an opportunity to learn and improve health service outcomes, as reported by many of the studies included in this review. Thus, personnel recruitment, regular training, guidance and mentoring are all essential for successful implementation research. Efforts to bridge the structural divide between policy makers, implementers and researchers are equally essential.

Limitations

As with any scoping review, our review has limitations. Although the search strategy was designed to be sensitive enough to capture the relevant literature, it may still have missed some sources. To minimise this risk, we reviewed and refined the search terms iteratively to incorporate related terminology as we became more familiar with the literature, and we manually reviewed reference lists. We searched a relevant grey literature database, but such sources are difficult to search comprehensively and locate, and some may have been missed. Others may not have been accessible at the time of the literature search. To ensure the feasibility of the review, only one reviewer (CAN) screened and selected the literature and extracted all the data. However, every step of these processes, as well as the extracted data, was reviewed and verified by the review team. Another important limitation is that, as in most scoping reviews, no formal quality appraisal of the included literature was undertaken. As such, the strength of the evidence cannot be ascertained. It is also important to acknowledge that the included studies did not directly or explicitly aim to assess the UHC outcomes of implementation research. However, all reported outcomes were related, albeit implicitly, to at least one of the three UHC dimensions.

Conclusion

Although still limited, a body of evidence exists on the use of implementation research to attain UHC-related outcomes in Africa. These outcomes include improved routine data for decision-making, more efficient resource allocation, and improvements in the availability, accessibility, affordability and quality of health services. There is therefore a need for greater attention to, and investment in, this type of research. This review has also identified important facilitators of and barriers to the use of implementation research in UHC-related contexts in the African region, which need to be considered when designing future implementation research strategies.

Availability of data and materials

All data analysed in this review are available in the included published articles.

Abbreviations

- AHPSR: Alliance for Health Policy and Systems Research
- CFIR: Consolidated Framework for Implementation Research
- CHW: Community Health Workers
- EIDM: Evidence-informed Decision Making
- MRT: Middle range theory
- MUSIQ: Model for Understanding Success in Quality
- NPT: Normalization Process Theory
- PRESS: Peer Review of Electronic Search Strategies
- PRISMA-ScR: Preferred Reporting Items for Systematic Reviews and Meta-Analyses Extension for Scoping Reviews
- QIF: Quality Implementation Framework
- RE-AIM: Reach, Effectiveness, Adoption, Implementation, Maintenance
- TDF: Theoretical Domains Framework
- UHC: Universal Health Coverage

References

Conalogue DM, Kinn S, Mulligan J-A, et al. International consultation on long-term global health research priorities, research capacity and research uptake in developing countries. Health Res Policy Syst. 2017;15:24.

Edwards A, Zweigenthal V, Olivier J. Evidence map of knowledge translation strategies, outcomes, facilitators and barriers in African health systems. Health Res Policy Syst. 2019;17(1):16. https://doi.org/10.1186/s12961-019-0419-0.

World Health Organization (WHO). World Health Report 2010. Health systems financing: the path to universal coverage. Available via: https://www.who.int/whr/2010/10_summary_en.pdf?ua=1 Accessed 1 Apr 2021.

Puras D. Universal health coverage: a return to Alma-Ata and Ottawa. Health Hum Rights. 2016;18(2):7–10.

Hogan DR, Stevens GA, Hosseinpoor AR, Boerma T. Monitoring universal health coverage within the sustainable development goals: development and baseline data for an index of essential health services. Lancet Glob Health. 2018;6(2):e152–e68. https://doi.org/10.1016/S2214-109X(17)30472-2.

Jessani NS, Siddiqi SM, Babcock C, Davey-Rothwell M, Ho S, Holtgrave DR. Factors affecting engagement between academic faculty and decision-makers: learnings and priorities for a school of public health. Health Res Policy Syst. 2018;16(1):65. https://doi.org/10.1186/s12961-018-0342-9.

Brownson RC, Fielding JE, Green LW. Building capacity for evidence-based public health: reconciling the pulls of practice and the push of research. Annu Rev Public Health. 2018;39(1):27–53. https://doi.org/10.1146/annurev-publhealth-040617-014746.

Grimshaw JM, Eccles MP, Lavis JN, et al. Knowledge translation of research findings. Implement Sci. 2012;7:50.

Tetroe JM, Graham ID, Foy R, et al. Health research funding agencies’ support and promotion of knowledge translation: an international study. Milbank Q. 2008;86(1):125–55. https://doi.org/10.1111/j.1468-0009.2007.00515.x.

Poot CC, van der Kleij RM, Brakema EA, Vermond D, Williams S, Cragg L, et al. From research to evidence-informed decision making: a systematic approach. J Public Health. 2018;40(suppl_1):i3–i12. https://doi.org/10.1093/pubmed/fdx153.

Shroff Z, Aulakh B, Gilson L, et al. Incorporating research evidence into decision-making processes: researcher and decision-maker perceptions from five low- and middle-income countries. Health Res Policy Syst. 2015;13:70.

National Academies of Sciences, Engineering, and Medicine. Applying an implementation science approach to genomic medicine: workshop summary. Washington: The National Academies Press; 2016. https://doi.org/10.17226/23403.

Jessani NS, Hendricks L, Nicol L, et al. University Curricula in Evidence-Informed Decision Making and Knowledge Translation: Integrating Best Practice, Innovation, and Experience for Effective Teaching and Learning. Front Public Health. 2019;7:313.

Yousefi-Nooraie R, Dobbins M, Brouwers M, et al. Information seeking for making evidence-informed decisions: a social network analysis on the staff of a public health department in Canada. BMC Health Serv Res. 2012;12:118.

Graham ID, Logan J, Harrison MB, Straus SE, Tetroe J, Caswell W, et al. Lost in knowledge translation: time for a map? J Contin Educ Health Prof. 2006;26(1):13–24. https://doi.org/10.1002/chp.47.

Bauer MS, Damschroder L, Hagedorn H, et al. An introduction to implementation science for the non-specialist. BMC Psychol. 2015;3:32.

Hoke T. Implementation research: The unambiguous cornerstone of implementation science. Research for evidence. FHI 360 Research. Available via: https://researchforevidence.fhi360.org/implementation-research-the-unambiguous-cornerstone-of-implementation-science Accessed 27 Dec 2019.

Peters DH, Tran NT, Adam T. Implementation research in health: a practical guide. Alliance for Health Policy and Systems Research, World Health Organization; 2013. Available via: https://apps.who.int/iris/bitstream/handle/10665/91758/9789241506212_eng.pdf;jsessionid=A55D0E6349536138CC3C24C865C15991?sequence=1.

Odeny TA, Padian N, Doherty MC, Baral S, Beyrer C, Ford N, et al. Definitions of implementation science in HIV/AIDS. Lancet HIV. 2015;2(5):e178–e80. https://doi.org/10.1016/S2352-3018(15)00061-2.

Kao LS. Implementation science and quality improvement. In: Dimick JB, Greenberg CC, editors. Success in Academic Surgery: Health Services Research. London: Springer London; 2014. p. 85–100.

Eccles MP, Mittman BS. Welcome to implementation science. Implement Sci. 2006;1:1.

Birken SA, Powell BJ, Shea CM, Haines ER, Alexis Kirk M, Leeman J, et al. Criteria for selecting implementation science theories and frameworks: results from an international survey. Implement Sci. 2017;12(1):124. https://doi.org/10.1186/s13012-017-0656-y.

Foy R, Ovretveit J, Shekelle PG, Pronovost PJ, Taylor SL, Dy S, et al. The role of theory in research to develop and evaluate the implementation of patient safety practices. BMJ Qual Saf. 2011;20(5):453–9. https://doi.org/10.1136/bmjqs.2010.047993.

Ross S, Lavis J, Rodriguez C, Woodside J, Denis JL. Partnership experiences: involving decision-makers in the research process. J Health Serv Res Policy. 2003;8(Suppl 2):26–34. https://doi.org/10.1258/135581903322405144.

Oliver K, Innvar S, Lorenc T, et al. A systematic review of barriers to and facilitators of the use of evidence by policymakers. BMC Health Serv Res. 2014;14:2.

Oliver TR. The politics of public health policy. Annu Rev Public Health. 2006;27(1):195–233. https://doi.org/10.1146/annurev.publhealth.25.101802.123126.

Ongolo-Zogo P, Lavis JN, Tomson G, Sewankambo NK. Initiatives supporting evidence informed health system policymaking in Cameroon and Uganda: a comparative historical case study. BMC Health Serv Res. 2014;14(1):612. https://doi.org/10.1186/s12913-014-0612-3.

World Health Organization (WHO). Supporting the Use of Research Evidence (SURE) for Policy in African Health Systems. Available via: https://www.who.int/evidence/partners/SURE.pdf Accessed 12 Jan 2020.

Rizvi SS, Douglas R, Williams OD, Hill PS. The political economy of universal health coverage: a systematic narrative review. Health Policy Plan. 2020;35(3):364–72. https://doi.org/10.1093/heapol/czz171.

Langlois EV, Becerril Montekio V, Young T, et al. Enhancing evidence informed policymaking in complex health systems: lessons from multi-site collaborative approaches. Health Res Policy Syst. 2016;14:20.

Kothari A, Wathen CN. Integrated knowledge translation: digging deeper, moving forward. J Epidemiol Community Health. 2017;71(6):619–23. https://doi.org/10.1136/jech-2016-208490.

Vasan A, Mabey DC, Chaudhri S, Brown Epstein HA, Lawn SD. Support and performance improvement for primary health care workers in low- and middle-income countries: a scoping review of intervention design and methods. Health Policy Plan. 2017;32(3):437–52. https://doi.org/10.1093/heapol/czw144.

Tricco AC, Zarin W, Rios P, et al. Barriers, facilitators, strategies and outcomes to engaging policymakers, healthcare managers and policy analysts in knowledge synthesis: a scoping review protocol. BMJ Open. 2016;6:e013929.

Uzochukwu B, Onwujekwe O, Mbachu C, Okwuosa C, Etiaba E, Nyström ME, et al. The challenge of bridging the gap between researchers and policy makers: experiences of a health policy research group in engaging policy makers to support evidence informed policy making in Nigeria. Glob Health. 2016;12(1):67. https://doi.org/10.1186/s12992-016-0209-1.

Kirigia JM, Pannenborg CO, Amore LGC, et al. Global Forum 2015 dialogue on “From evidence to policy - thinking outside the box”: perspectives to improve evidence uptake and good practices in the African Region. BMC Health Serv Res. 2016;16(Suppl 4):215.

Bussiek P-BV, De Poli C, Bevan G. A scoping review protocol to map the evidence on interventions to prevent overweight and obesity in children. BMJ Open. 2018;8:e019311.

Wickremasinghe D, Kuruvilla S, Mays N, Avan BI. Taking knowledge users’ knowledge needs into account in health: an evidence synthesis framework. Health Policy Plan. 2016;31(4):527–37. https://doi.org/10.1093/heapol/czv079.

Bragge P, Clavisi O, Turner T, et al. The Global Evidence Mapping Initiative: scoping research in broad topic areas. BMC Med Res Methodol. 2011;11:92.

Munn Z, Peters MDJ, Stern C, et al. Systematic review or scoping review? Guidance for authors when choosing between a systematic or scoping review approach. BMC Med Res Methodol. 2018;18:143.

Nnaji CA, Wiysonge CS, Okeibunor J, Malinga T, Adamu AA, Tumusiime P, et al. Protocol for a scoping review of implementation research approaches to universal health coverage in Africa. BMJ Open. 2021;11(2):e041721. https://doi.org/10.1136/bmjopen-2020-041721.

Arksey H, O'Malley L. Scoping studies: towards a methodological framework. Int J Soc Res Methodol. 2005;8(1):19–32. https://doi.org/10.1080/1364557032000119616.

Peters M, Godfrey C, McInerney P, et al. Scoping Reviews. In: Aromataris E, Munn Z, editors. Joanna Briggs Institute reviewer's manual. The Joanna Briggs Institute; 2017. Available via: https://wiki.joannabriggs.org/display/MANUAL/11.1.3+The+scoping+review+framework Accessed 16 Feb 2020.

Tricco AC, Lillie E, Zarin W, O'Brien KK, Colquhoun H, Levac D, et al. PRISMA extension for scoping reviews (PRISMA-ScR): checklist and explanation. Ann Intern Med. 2018;169(7):467–73. https://doi.org/10.7326/M18-0850.

World Health Organization (WHO). The universal health coverage (UHC) cube. Health financing for universal coverage and health system performance: concepts and implications for policy. Available via: https://www.who.int/bulletin/volumes/91/8/12-113985/en/. Accessed 15 June 2020.

Abiiro GA, De Allegri M. Universal health coverage from multiple perspectives: a synthesis of conceptual literature and global debates. BMC Int Health Hum Rights. 2015;15(1):17. https://doi.org/10.1186/s12914-015-0056-9.

Nygren-Krug H. The right(s) road to universal health coverage. Health Hum Rights. 2019;21(2):215–28.

Obermann K, Chanturidze T, Glazinski B, et al. The shaded side of the UHC cube: a systematic review of human resources for health management and administration in social health protection schemes. Health Econ Rev. 2018;8:4.

Peters MDJ, Godfrey CM, Khalil H, McInerney P, Parker D, Soares CB. Guidance for conducting systematic scoping reviews. Int J Evid Based Healthc. 2015;13(3):141–6. https://doi.org/10.1097/XEB.0000000000000050.

McGowan J, Sampson M, Salzwedel DM, Cogo E, Foerster V, Lefebvre C. PRESS peer review of electronic search strategies: 2015 guideline statement. J Clin Epidemiol. 2016;75:40–6. https://doi.org/10.1016/j.jclinepi.2016.01.021.

Adamu AA, Uthman OA, Gadanya MA, Cooper S, Wiysonge CS. Using the theoretical domains framework to explore reasons for missed opportunities for vaccination among children in Kano, Nigeria: a qualitative study in the pre-implementation phase of a collaborative quality improvement project. Expert Rev Vaccines. 2019;18(8):847–57. https://doi.org/10.1080/14760584.2019.1643720.

Adamu AA, Uthman OA, Gadanya MA, Wiysonge CS. Using the consolidated framework for implementation research (CFIR) to assess the implementation context of a quality improvement program to reduce missed opportunities for vaccination in Kano, Nigeria: a mixed methods study. Hum Vaccin Immunother. 2020;16(2):465–75. https://doi.org/10.1080/21645515.2019.1654798.

Anaba MK, Ibisomi L, Owusu-Agyei S, Chirwa T, Ramaswamy R. Determinants of health workers intention to use malaria rapid diagnostic test in Kintampo north municipality, Ghana - a cross-sectional study. BMC Health Serv Res. 2019;19(1):491. https://doi.org/10.1186/s12913-019-4324-6.

Barac R, Als D, Radhakrishnan A, Gaffey MF, Bhutta ZA, Barwick M. Implementation of interventions for the control of typhoid fever in low- and middle-income countries. Am J Trop Med Hyg. 2018;99(3_Suppl):79–88. https://doi.org/10.4269/ajtmh.18-0110.

Bardosh KL, Murray M, Khaemba AM, Smillie K, Lester R. Operationalizing mHealth to improve patient care: a qualitative implementation science evaluation of the WelTel texting intervention in Canada and Kenya. Glob Health. 2017;13(1):87. https://doi.org/10.1186/s12992-017-0311-z.

Cole CB, Pacca J, Mehl A, Tomasulo A, van der Veken L, Viola A, et al. Toward communities as systems: a sequential mixed methods study to understand factors enabling implementation of a skilled birth attendance intervention in Nampula Province, Mozambique. Reprod Health. 2018;15(1):132. https://doi.org/10.1186/s12978-018-0574-8.

Cooke A, Saleem H, Hassan S, Mushi D, Mbwambo J, Lambdin B. Patient and provider perspectives on implementation barriers and facilitators of an integrated opioid treatment and HIV care intervention. Addict Sci Clin Pract. 2019;14(1):3. https://doi.org/10.1186/s13722-019-0133-9.

Eboreime EA, Nxumalo N, Ramaswamy R, Eyles J. Strengthening decentralized primary healthcare planning in Nigeria using a quality improvement model: how contexts and actors affect implementation. Health Policy Plan. 2018;33(6):715–28. https://doi.org/10.1093/heapol/czy042.

Eboreime EA, Eyles J, Nxumalo N, et al. Implementation process and quality of a primary health care system improvement initiative in a decentralized context: a retrospective appraisal using the quality implementation framework. Int J Health Plann Manag. 2019;34:e369–e86.

English M. Designing a theory-informed, contextually appropriate intervention strategy to improve delivery of paediatric services in Kenyan hospitals. Implement Sci. 2013;8(1):39. https://doi.org/10.1186/1748-5908-8-39.

Finocchario-Kessler S, Odera I, Okoth V, et al. Lessons learned from implementing the HIV infant tracking system (HITSystem): A web-based intervention to improve early infant diagnosis in Kenya. Healthcare. 2015;3:190–5.

Gimbel S, Rustagi AS, Robinson J, Kouyate S, Coutinho J, Nduati R, et al. Evaluation of a systems analysis and improvement approach to optimize prevention of mother-to-child transmission of HIV using the consolidated framework for implementation research. J Acquir Immune Defic Syndr. 2016;72(Suppl 2):S108–16. https://doi.org/10.1097/QAI.0000000000001055.

Gimbel S, Mwanza M, Nisingizwe MP, et al. Improving data quality across 3 sub-Saharan African countries using the consolidated framework for implementation research (CFIR): results from the African health initiative. BMC Health Serv Res. 2017;17(S3):828. https://doi.org/10.1186/s12913-017-2660-y.

Jones DL, Rodriguez VJ, Butts SA, Arheart K, Zulu R, Chitalu N, et al. Increasing acceptability and uptake of voluntary male medical circumcision in Zambia: implementing and disseminating an evidence-based intervention. Transl Behav Med. 2018;8(6):907–16. https://doi.org/10.1093/tbm/iby078.

Maruma TW. Factors influencing the collection of information by community health workers for tuberculosis contact tracing in Ekurhuleni, Johannesburg. Wits University thesis repository. 2018. Available via: http://wiredspace.wits.ac.za/handle/10539/25549 Accessed 16 Aug 2020.

McRobie E, Wringe A, Nakiyingi-Miiro J, Kiweewa F, Lutalo T, Nakigozi G, et al. HIV policy implementation in two health and demographic surveillance sites in Uganda: findings from a national policy review, health facility surveys and key informant interviews. Implement Sci. 2017;12(1):47. https://doi.org/10.1186/s13012-017-0574-z.

Nabyonga-Orem J, Ssengooba F, Mijumbi R, Kirunga Tashobya C, Marchal B, Criel B. Uptake of evidence in policy development: the case of user fees for health care in public health facilities in Uganda. BMC Health Serv Res. 2014;14(1):639. https://doi.org/10.1186/s12913-014-0639-5.

Naidoo N, Zuma N, Khosa NS, Marincowitz G, Railton J, Matlakala N, et al. Qualitative assessment of facilitators and barriers to HIV programme implementation by community health workers in Mopani district, South Africa. PLoS One. 2018;13(8):e0203081. https://doi.org/10.1371/journal.pone.0203081.

Newman Owiredu M, Bellare NB, Chakanyuka Musanhu CC, Oyelade TA, Thom EM, Bigirimana F, et al. Building health system capacity through implementation research: experience of INSPIRE-A multi-country PMTCT implementation research project. J Acquir Immune Defic Syndr. 2017;75(Suppl 2):S240–S7. https://doi.org/10.1097/QAI.0000000000001370.

Petersen Williams P, Petersen Z, Sorsdahl K, Mathews C, Everett-Murphy K, Parry CDH. Screening and brief interventions for alcohol and other drug use among pregnant women attending midwife obstetric units in Cape Town, South Africa: a qualitative study of the views of health care professionals. J Midwifery Women’s Health. 2015;60(4):401–9. https://doi.org/10.1111/jmwh.12328.

Rodriguez VJ, LaCabe RP, Privette CK, et al. The Achilles’ heel of prevention to mother-to-child transmission of HIV: protocol implementation, uptake, and sustainability. SAHARA J. 2017;14(1):38–52. https://doi.org/10.1080/17290376.2017.1375425.

Rodriguez VJ, Chahine A, de la Rosa A, Lee TK, Cristofari NV, Jones DL, et al. Identifying factors associated with successful implementation and uptake of an evidence-based voluntary medical male circumcision program in Zambia: the Spear and Shield 2 Program. Transl Behav Med. 2020;10(4):970–7. https://doi.org/10.1093/tbm/ibz048.

Soi C, Gimbel S, Chilundo B, Muchanga V, Matsinhe L, Sherr K. Human papillomavirus vaccine delivery in Mozambique: identification of implementation performance drivers using the consolidated framework for implementation research (CFIR). Implement Sci. 2018;13(1):151. https://doi.org/10.1186/s13012-018-0846-2.

Warren CE, Ndwiga C, Sripad P, Medich M, Njeru A, Maranga A, et al. Sowing the seeds of transformative practice to actualize women’s rights to respectful maternity care: reflections from Kenya using the consolidated framework for implementation research. BMC Womens Health. 2017;17(1):69. https://doi.org/10.1186/s12905-017-0425-8.

White MC, Randall K, Capo-Chichi NFE, Sodogas F, Quenum S, Wright K, et al. Implementation and evaluation of nationwide scale-up of the surgical safety checklist. Br J Surg. 2019;106(2):e91–e102. https://doi.org/10.1002/bjs.11034.

Zitti T, Gautier L, Coulibaly A, Ridde V. Stakeholder perceptions and context of the implementation of performance-based financing in district hospitals in Mali. Int J Health Policy Manag. 2019;8(10):583–92. https://doi.org/10.15171/ijhpm.2019.45.

Means AR, Kemp CG, Gwayi-Chore MC, Gimbel S, Soi C, Sherr K, et al. Evaluating and optimizing the consolidated framework for implementation research (CFIR) for use in low- and middle-income countries: a systematic review. Implement Sci. 2020;15(1):17. https://doi.org/10.1186/s13012-020-0977-0.

Birken SA, Powell BJ, Presseau J, Kirk MA, Lorencatto F, Gould NJ, et al. Combined use of the consolidated framework for implementation research (CFIR) and the theoretical domains framework (TDF): a systematic review. Implement Sci. 2017;12(1):2. https://doi.org/10.1186/s13012-016-0534-z.

Kirk MA, Kelley C, Yankey N, et al. A systematic review of the use of the consolidated framework for implementation research. Implement Sci. 2016;11:72.

Greer SL, Méndez CA. Universal health coverage: a political struggle and governance challenge. Am J Public Health. 2015;105(Suppl 5):S637–S9. https://doi.org/10.2105/AJPH.2015.302733.

Lewis CC, Fischer S, Weiner BJ, Stanick C, Kim M, Martinez RG. Outcomes for implementation science: an enhanced systematic review of instruments using evidence-based rating criteria. Implement Sci. 2015;10(1):155. https://doi.org/10.1186/s13012-015-0342-x.

Acknowledgements

The authors gratefully acknowledge the valuable comments and inputs from the following colleagues at Cochrane South Africa: Chinwe Iwu-Jaja, Selvan Naidoo, Phelele Njenje, Jill Ryan and Alison Wiyeh.

Funding

The WHO Regional Office for Africa and the South African Medical Research Council (SAMRC), through Cochrane South Africa, provided funding for this study. The funders had no role in the design, execution and reporting of the study. The views expressed are those of the authors and do not necessarily reflect those of WHO, the SAMRC, Cochrane or any other organisation that the authors are affiliated with.

Author information

Contributions

The study was conceived by CSW, JCO, and HK. CAN wrote the first draft of the manuscript with guidance from CSW. CSW, JCO, TM, AAA, PT, and HK contributed to writing the final version of the manuscript. All the authors read and approved the final manuscript.

Ethics declarations

Ethics approval and consent to participate

Because the scoping review involved reviewing and collecting data from publicly available materials, with no direct involvement of human participants, this study did not require ethics approval or consent to participate.

Consent for publication

Not applicable.

Competing interests

None declared.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Additional file 1.

PubMed/MEDLINE search strategy.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Nnaji, C.A., Wiysonge, C.S., Okeibunor, J.C. et al. Implementation research approaches to promoting universal health coverage in Africa: a scoping review. BMC Health Serv Res 21, 414 (2021). https://doi.org/10.1186/s12913-021-06449-6

DOI: https://doi.org/10.1186/s12913-021-06449-6