Abstract

Background

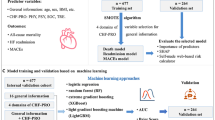

The goal of this study was to assess the effectiveness of machine learning models and create an interpretable machine learning model that adequately explained 3-year all-cause mortality in patients with chronic heart failure.

Methods

The data in this paper were selected from patients with chronic heart failure who were hospitalized at the First Affiliated Hospital of Kunming Medical University, from 2017 to 2019 with cardiac function class III-IV. The dataset was explored using six different machine learning models, including logistic regression, naive Bayes, random forest classifier, extreme gradient boost, K-nearest neighbor, and decision tree. Finally, interpretable methods based on machine learning, such as SHAP value, permutation importance, and partial dependence plots, were used to estimate the 3-year all-cause mortality risk and produce individual interpretations of the model's conclusions.

Result

In this paper, random forest was identified as the optimal aools lgorithm for this dataset. We also incorporated relevant machine learning interpretable tand techniques to improve disease prognosis, including permutation importance, PDP plots and SHAP values for analysis. From this study, we can see that the number of hospitalizations, age, glomerular filtration rate, BNP, NYHA cardiac function classification, lymphocyte absolute value, serum albumin, hemoglobin, total cholesterol, pulmonary artery systolic pressure and so on were important for providing an optimal risk assessment and were important predictive factors of chronic heart failure.

Conclusion

The machine learning-based cardiovascular risk models could be used to accurately assess and stratify the 3-year risk of all-cause mortality among CHF patients. Machine learning in combination with permutation importance, PDP plots, and the SHAP value could offer a clear explanation of individual risk prediction and give doctors an intuitive knowledge of the functions of important model components.

Similar content being viewed by others

Introduction

Chronic heart failure (CHF), which is characterized by cardiac systolic or diastolic dysfunction [1], is the advanced manifestation of various cardiovascular diseases and has become one of the deadliest cardiovascular conditions of the twenty-first century [2]. It is estimated that 64.3 million people worldwide suffer from heart failure [3]. In developed countries, the prevalence of heart failure is generally estimated to be 1% to 2% [4]. An epidemiological survey conducted many years ago showed that the prevalence of heart failure in China was about 0. 9% (0. 7% in men and 1. 0% in women) [5]. It is projected that the number of heart failure patients in China is about 8.9 million now, and the age of heart failure inpatients is 67 ± 14, with men accounting for 60.8% [6].Over the past several years, although drugs and instrumental agents for CHF have continued to emerge, the mortality rate of CHF remains high, causing a serious economic and social burden [7]. Increasing patient prognosis and lowering mortality have been essential therapeutic objectives for CHF. Finding targeted treatments in clinical treatment requires accurate mortality risk estimations for patients with CHF and an understanding of what influences these predictions.

Several researchers have developed risk score models to stratify HF patients, such as the Seattle Heart Failure Model (SHFM) and Meta-Analysis Global Group in Chronic Heart Failure (MAGGIC) [8,9,10,11,12]. The above prediction models have been successfully applied in clinical practice for the management of patients with varying degrees of heart failure. However, the data of the above survival prediction models are from clinical trials [7, 13]. These data have small sample sizes and are less representative of the population. Therefore, even if such a model is constructed with high accuracy, it is not very useful for real-world research.

In recent years, with the rapid development of artificial intelligence, machine learning technology has been used to build cardiovascular disease prediction models more and more widely. To more accurately predict mortality in HF patients, artificial intelligence,specifically machine learning (ML) [9, 14], may be a useful tool because ML algorithms can improve accuracy by analyzing large amounts of medical data.Meanwhile, with the gradual spread of electronic medical records (EHRs) in clinical research, it has become increasingly necessary to use electronic medical records rather than just clinical trial data to predict heart failure prognosis [10]. Recently, several studies have shown that ML methods superior traditional risk models. However, the lack of interpretability and intuitive understanding of ML models is one of the major barriers to incorporating ML into the cardiovascular field.

To solve these disadvantages, we introduce six machine learning methods for training, including logistic regression (LR), naive bayes(NB), random forest classifier, extreme gradient boost(XGBoost), k-nearest neighbors (KNN), and decision tree [15, 16]. Meanwhile, this study combined the advanced ML algorithm with a framework based on SHapley Additive exPlanations (SHAP) [13, 17,18,19], permutation importance, and partial dependence plots. The above three ML interpretable tools and techniques help to improve the accuracy of 3-year mortality risk prediction in patients with heart failure, and also provide intuitive explanations for patients to predict risk. Hence, This helps clinicians to better understand and assess the severity of the disease and provides a basis for early intervention and subsequent treatment. This is an important step forward for ML in medicine and will help researchers continue to develop personalized and interpretable risk prediction models.

Methods

Study population

The goal of this retrospective study is to forecast the 3-year risk of all-cause mortality in patients with CHF. The data in this paper were selected from patients with CHF (NYHA class III or IV) who were hospitalized at the First Affiliated Hospital of Kunming Medical University, Yunnan Province, from 2017 to 2019. Patients were screened through the electronic medical record system according to the inclusion and exclusion criteria.

Data collection

Patient data were gathered in accordance with the Chronic heart failure case report form, which was created by this study group based on the case records and CHF guidelines [20].The report contained basic information of patients, past medical history, vital signs of admission, drugs they were taking at that time, the echocardiography and electrocardiogram results, and laboratory examination results. After gathering a total of 1222 individuals with CHF brought on by various forms of cardiovascular illness, we were able to identify 626 patients who had been followed up for more than three years or had passed away [21].

A total of 104 indicators were collected per patient.According to previous studies [22, 23]. Related to heart failure, we selected 45 indicators that were potentially clinically related to heart failure [24,25,26]. The indicators we chose were mentioned in almost all risk prediction models for chronic heart failure in the past [27, 28]. For example, a case study by Ahmad T, Munir A et al. confirmed that age, renal dysfunction, blood pressure, ejection fraction and anemia were significant risk factors for mortality among heart failure patients [22]. Pocock SJ's CHARM program showed that older age, diabetes, and lower left ventricular ejection fraction were the three most powerful predictors [27]. Other independent predictors included higher NYHA class, cardiomegaly, hospitalization, male sex, lower body mass index, and lower diastolic blood pressure [27]. A study showed that abnormal potassium levels were linked to a higher risk of death [29, 30].

Data preprocessing

-

1.

There were 27 groups of selected data with a small amount of missing data (see Fig. 1). For numerical variables, the filling method of k nearest neighbors is used (KNNImputer(n neighbors = 3)). For nominal variables, we used the mode method to fill in missing values (SimpleImputer(strategy = "most_frequent")).

-

2.

Since the survival rate and mortality rate are basically the same, the problem of data imbalance is not addressed.

-

3.

Data normalization: We normalized the data to ensure that the data variance per dimension was 1 and that the mean was 0. This treatment ensures that the results will not be dominated by excessively large eigenvalues of some dimensions.

Model development

To predict 3-year all-cause mortality, we created six ML models utilizing follow-up data. They are logistic regression, K-nearest neighbor, random forest, naive Bayes, decision tree, and extreme gradient boost. Logistic Regression(LR): The logistic regression algorithm is a probabilistic nonlinear regression, which is a multivariate analysis method that examines the relationship between two or more categorical outcome variables and influencing factors [31,32,33]. K-Nearest Neighbor (KNN): The K-nearest neighbor algorithm is a classification algorithm that estimates the target categories with k neighbors in a sample. KNN is suitable for overlapping or repeating samples because KNN does not rely on identifying the class domain but mainly determines the attribution category based on the limited adjacent samples nearby. Random Forest(RF): The basic idea is to construct multiple decision trees to form a forest and then use these decision trees to jointly decide the output category [34]. Naive Bayes(NB): This is a probabilistic method borrowed from statistics and is one of the few probability-based algorithms [35]. It not only works well with many samples but also works well with little data and can handle multiclass classification tasks [31, 32]. Decision Tree(DT): A decision tree is a top-down tree consisting of nodes and directed edges. The node is an attribute, and the branch is the corresponding attribute value. The more information data there are, the more branches there are, and the larger the tree. The decision-generating path from the root to the leaf node can be used to derive the categorization criteria [34, 36]. Extreme Gradient Boost (XGBoost): XGBoost is a tree-based boosting algorithm designed to be portable, efficient and flexible. Its basic idea is to combine multiple trees with low classification accuracy into a model with relatively improved accuracy [15, 37, 38]. XGBoost can solve not only classification but also regression problems [39].

Interpretability in machine learning

From the six ML models, the optimal algorithm for this dataset was identified as random forest. At the same time, because the interpretability of machine learning is generally poor, it is not conducive to the formulation of diagnostic strategies for doctors and the understanding and cooperation of patients. We used ML interpretable tools and techniques, permutation importance, PDP plots and SHAP values for analysis.

Results

Dataset

In this paper, we located 626 patients who had been followed up for more than three years or had passed away; 332 patients were alive, and 294 had died. The average age of the enrolled patients was 66.27 ± 12.25 years, of whom 402 were men and 224 were women (for detailed information, see Table 1).

Data were presented by continuous variables (as means and standard deviation) or categorical variables (as frequencies and percentages) (Table1). To identify the diferences, the Kolmogorov–Smirnov test was used for continuous variables of normal distribution, and the Mann–Whitney U test was used for continuous variables of non-normal distribution. Te diferences of categorical variables between groups were tested with a Chi-squared test.

ML models select

The hyperparameters of the ML models were optimized using a grid search method with five-fold cross-validation (CV) (details in Table 2). Finally, the effectiveness of each model was assessed and contrasted. The six models were thoroughly evaluated using several assessment markers, and the model with the highest performance was chosen for additional in-depth study.

The accuracy of each model is shown in the table above (Table 2). The accuracy of logistic regression was 72.44%, that of naive Bayes was 72.63%, that of random forest was 78.96%, that of extreme gradient boost was 77.33%, that of K-nearest neighbor was 62.28%, and that of decision tree was 72.43%. We used accuracy as the main evaluation index and ROC (Fig. 2) as the secondary evaluation index. Among the six models, random forest was the best, with an accuracy of 78.96%, precision of 98% and recall of 99.44%. We also provide a tree map of the random forest decision tree model (see Fig. 3).

The random forest is formed by multiple decision trees. Each decision tree calculates patient outcomes by splitting them into groups with similar characteristics [34, 40]. This is determined by the principle of entropy, which is the parent node and which node needs to be split. For a given set of data, a decreased entropy indicates a better categorization outcome. Therefore, the state with the lowest classification impact is one where entropy is 1, while the state with complete classification is one where entropy is 0. The process of increasing classification accuracy is called continuous entropy minimization. From Fig. 3, we can see that the entropy of age is the largest, which is 1, followed by that of BNP at 0.97 and that of triglycerides at 0.78. Conversely, lower entropy has smaller values until the entropy is 0 for complete classification [36].

ML-based interpretability

Permutation importance

Permutation importance is the first tool for understanding an ML model; it is a useful technique for revealing each variable's predictive potential in ML models, and it entails changing individual variables in the validation data and observing how the accuracy changes. In permutation importance, the top value is the most important feature, while the bottom value is relatively less important. Among them, number of hospitalizations, age, glomerular filtration rate, BNP, and NYHA cardiac function classification were the top five important factors. In terms of permutation, the most important feature was the number of hospitalizations, and its weight was 0.116 (Table 3). The number of hospitalizations is an important factor that affects the prognosis of patients with CHF. More hospitalizations indicate severe heart failure and a worse prognosis. Second, age (0.034) and glomerular filtration rate (0.029) are also important factors for prognosis. A case study on survival analysis of heart failure patients also confirmed that age, renal dysfunction and anemia were significant risk factors for mortality among heart failure patients [22, 41].

Partial Dependence Plot (PDP)

The PDP plots is a visual post hoc explainability approach that illustrates the marginal impact of a given feature on the projected outcome [42]. In the PDP diagram, the black line represents the change in risk of mortality after three years after sweeping through all potential values of the variable of interest while holding other factors constant. We selected seven variables, including the number of hospitalizations, age, glomerular filtration rate, BNP, NYHA cardiac function classification, absolute neutrophil value and RBC volume distribution width, to draw a partial dependence graph, as shown in Fig. 4-A-G. In the first figure (Fig. 4-A), we kept the other variables constant. When the number of hospitalizations was less than 4, the patient's survival rate decreased, and when the number of hospitalizations was more than 4, the patient's survival rate gradually increased. This does not seem consistent with our previous studies; however, considering that patients with more hospitalizations may have better compliance, patients hospitalized in a timely manner can receive effective intervention, and the survival rate will be improved. For the New York Heart Function Class (Fig. 4-B), when the New York Heart Function was grade IV, the survival rate decreased. In the same way, for BNP (Fig. 4-C), there was a change trend at approximately 0–1800. We can see that with the increase in BNP content in the blood from 0 to 1800, the adverse survival prognosis rapidly increased with increasing BNP values, so the survival rate decreased, and the curve above 1800 became flat. This interval had no extra effect on the final prognosis as the index increased. An absolute neutrophil value (Fig. 4-D) interval of 0–7.5 had a better effect on survival prognosis, and a value greater than 7.5 had a slightly adverse effect on survival prognosis, but this effect did not increase rapidly with increasing value. For RBC volume distribution width (Fig. 4-E), the adverse effects in the 13–15 range increased rapidly, and the effects remained basically unchanged after 15. For age (Fig. 4-F), 60 years is a critical value, before which age has almost no effect on survival prognosis, and after 60 years, the adverse effect on survival prognosis increases rapidly with increasing age. Glomerular filtration rate (Fig. 4-G) is a protective factor for survival prognosis, especially for GFR values between 30 and 40, where survival increases rapidly and tends to stabilize after 40. According to the PDP diagram, we can speculate that the number of hospitalizations, NYHA cardiac function classification, age, glomerular filtration rate, BNP, absolute neutrophil value and RBC volume distribution width are important predictors of survival prognosis in patients with chronic heart failure. Many previous studies have also confirmed that these factors are closely related to the prognosis of chronic heart failure [22, 23, 27, 28].

SHAP values

We use SHAP to highlight how the chosen factors impact the mortality rate in the model to provide an intuitive explanation of the variables [43]. The SHAP value is an all-encompassing index that reacts to a model feature's influence. A SHAP plot is obtained by taking the mean of the absolute SHAP values based on the magnitude of each feature attribute as the importance of the feature (see Fig. 5) or by plotting a scatter plot of all the training data and looking at the positive and negative relationship between the contribution of the feature values and the predicted impact by color (see Fig. 6). The prediction model’s significance is denoted by the feature ranking (y-axis). A unified index that responds to the impact of a certain model feature is the SHAP value (x-axis) [43]. As with the mean SHAP value bar chart, it is also ordered by importance, although each point in the scatter plot represents a sample, where the blue dot denotes a low risk rating and the red dot a high risk value. The higher the SHAP value of a given feature is, the higher the risk of death the patient would have [33, 42, 44].

The number of hospitalizations has the highest importance, followed by age, glomerular filtration rate, and BNP. Among them, the number of hospitalizations and glomerular filtration rate have high and positive effects. For the number of hospitalizations, blue points are mainly concentrated in areas where SHAP is more than 0. It is evident that a greater number of hospitalizations increases patient survival. This is consistent with the results obtained with the PDP (Fig. 4-A), considering that patients with more hospitalizations may have better compliance, those who are hospitalized in a timely manner can receive effective intervention, and the survival rate will be improved. For the glomerular filtration rate, blue points are mainly concentrated in areas where SHAP is less than 0. It is evident that low GFR values reduce patient survival. Age and BNP are negatively correlated with the target variable, and its red dots are concentrated in the areas where SHAP is less than 0. If age and BNP levels are too high, the survival rate decreases.

This shows that the number of hospitalizations, age, glomerular filtration rate, BNP, diastolic blood pressure, systolic blood pressure, NYHA cardiac function classification, serum uric acid, low-density lipoprotein cholesterol, BMI, lymphocyte absolute value, total cholesterol, hemoglobin, serum urea, absolute neutrophil value, blood sodium, LVEF, white blood cells, triglycerides, and serum albumin were associated with a higher predicted probability of CHF-related mortality. The importance of the impact of these factors is also shown in Figs. 5 and 6.

Finally, we selected two representative patients, one who died and one who survived from the dataset, to observe their prediction score and main influencing factor and see how the different variables affect their outcomes. This plot shows the respective contribution of each feature. Blue indicates a negative effect on the forecast (left arrow, SHAP reduction), and red represents a positive effect on the forecast (right, increased SHAP value). The baseline (mean predicted value) was 0.53; that is, the mean survival rate for all study patients was 0.53. The first patient was 0.55 and was higher than the baseline. The number of hospitalizations was a more important factor (Fig. 7). The reason this score was above the baseline is that although the number of hospitalizations (4 times) provided a relatively unfavorable prognostic effect, the patient's age (53 years old), diastolic blood pressure (85 mmHg), and glomerular filtration rate (56.72 ml/min) provided better prognostic support. The second patient was 0.33 and was lower than the baseline (Fig. 8). The reason is that age (89 years old) provided a relatively unfavorable prognostic effect, although the patient's serum uric acid (314.3 mmol/L) and glomerular filtration rate (43.14 ml/min) predicted a better prognosis.

Discussion

ML technology does not require assumptions about input variables and their relationship with output. The advantage of this completely data-driven learning without relying on rule-based programming makes ML a reasonable and feasible approach. An increasing number of studies are applying ML to predict cardiovascular disease. In recent studies, it has also been used to predict adverse outcomes in patients with HF by integrating clinical and other data [7, 45, 46]. Several models have been used to predict the risk of death in patients with HF, such as random forest (RF) and Gradient Boosting Decision Tree [47, 48]. Furthermore, Decision Tree model was able to provide a ranking of feature importance and identify important factors in predicting all-cause mortality in patients with heart failure [17]. At the same time, logistic regression (LR) can tell the user whether these important factors are protective or dangerous. XGBoost algorithm has been widely favored recently due to its fast calculation speed, strong generalization ability and high prediction performance. The RF algorithm involves multiple decision tree creations that identify important predictive features with better accuracy in processing large numbers of highly nonlinear data. However, despite the promising performance of ML in previous studies, evidence on its application in a real-world clinical setting and explainable risk prediction models to assist disease prognosis are limited.

To identify the optimal prediction model for prediction, this paper employs six machine learning algorithms, including logistic regression, naive Bayes, random forest classifier, extreme gradient boost, K-nearest neighbor, and decision tree. During a 3-year follow-up period, we created and evaluated an interpretable machine learning-based risk forecasting tool to predict all-cause death in CHF patients. The machine learning risk score was produced using the random forest model because it performed the best out of the six models. This model risk score greatly exceeded the other risk scores currently available, with an average AUC of 0.82.

Moreover, because the interpretability of machine learning is generally poor, it is not conducive to the formulation of diagnostic strategies by doctors and the understanding and cooperation of patients. We used ML interpretable tools and techniques, including permutation importance, PDP plots and SHAP values, for analysis. The permutation importance showed that the number of hospitalizations, age, glomerular filtration rate, BNP, and NYHA cardiac function classification were the top five important factors.We selected seven variables, namely, number of hospitalizations, age, glomerular filtration rate, BNP, NYHA cardiac function classification, absolute neutrophil value and RBC volume distribution width, to draw its PDP, which allowed us to intuitively see the impact of the change trend of each feature on survival prognosis. Finally, it is clear from SHAP values and SHAP plots that the number of hospitalizations, followed by age, glomerular filtration rate, and BNP, has the greatest significance. We demonstrated how machine learning can be applied to create a high-accuracy mortality prediction model in CHF patients and predicted the crucial features. The graphical description may help physicians understand the key components intuitively.

The significant factors that predict all-cause mortality in patients with HF were further identified in this investigation. According to the significance of the features, it was clear that a good risk assessment required consideration of clinical traits, demographic traits, and treatment status. Age, BNP concentration, NYHA classification,glomerular filtration rate, and other factors are still significant in predicting death for CHF patients, in line with prior research and clinical practice [22, 27].

Conclusion

To create a survival prediction model for patients with CHF, this study uses a more recent survival analysis algorithm. Based on the confusion matrix analysis of each algorithm model, the optimal random forest model was selected as the prediction model. The model was investigated based on ML interpretable tools and techniques. The importance of variables based on permutation importance, partial dependence plot and SHAP value showed that number of hospitalizations, age, glomerular filtration rate, BNP, diastolic blood pressure, systolic blood pressure, and NYHA cardiac function classification were the most important factors in predicting survival after 3 years of follow-up in heart failure patients.

Availability of data and materials

The datasets during and/or analysed during the current study available from the corresponding author on reasonable request.

References

Yan L, Zirui H, Chun X, et al. Association of serum total cholesterol and left ventricular ejection fraction in patients with heart failure caused by coronary heart disease. Arch Med Sci. 2017;14(5):988–94.

Alba A, Agoritsas T, Jankowski M, et al. Risk prediction models for mortality in ambulatory patients with heart failure: a systematic review. Circ Heart Fail. 2013;6(5):881–9.

Lippi G. Sanchis-Gomar F (2020) Global epidemiology and future trends of heart failure. AME Med J. 2020;5:15.

Orso F, Fabbri G, Maggioni AP. Epidemiology of Heart Failure. Handb Exp Pharmacol. 2017;243:15–33.

Jun H. Epidemiological characteristics and prevention strategies of heart failure in China. Chinese Heart and Heart Rhythm Elec J. 2015;3(02):2–3.

Summary of China Cardiovascular Health and Disease Report 2021. Chinese Journal of Circulation,2022,37(06):553–578.

Savarese G, Lund LH. Global public health burden of heart failure. Card Fail Rev. 2017;3(1):7–11.

Pocock SJ, Ariti CA, McMurray JJV, Maggioni A, Køber L, Squire IB, Swedberg K, Dobson J, Poppe KK, Whalley GA, Doughty RN, Meta-Analysis Global Group in Chronic Heart Failure. Predicting survival in heart failure: a risk score based on 39 372 patients from 30 studies. Eur Heart J. 2013;34:1404–13.

Levy WC, Mozaffarian D, Linker DT, Sutradhar SC, Anker SD, Cropp AB, Anand I, Maggioni A, Burton P, Sullivan MD, Pitt B, Poole-Wilson PA, Mann DL, Packer M. The Seattle Heart Failure Model: Prediction of survival in heart failure. Circulation. 2006;113:1424–33.

Collier TJ, Pocock SJ, McMurray JJV, Zannad F, Krum H, van Veldhuisen DJ, 4084 T. Tohyama et al. ESC Heart Failure 2021; 8: 4077–4085

Swedberg K, Shi H, Vincent J, Pitt B. The impact of eplerenone at different levels of risk in patients with systolic heart failure and mild symptoms: Insight from a novel risk score for prognosis derived from the EMPHASIS-HF trial. Eur Heart J. 2013; 34: 2823–2829.[15] Mortazavi BJ, Downing NS,

Anderson JL, Heidenreich PA, Barnett PG, et al. ACC/AHA statement on cost/value methodology in clinical practice guidelines and performance measures: A report of the American college of cardiology/American heart association task force on performance measures and task force on practice guidelines[J]. J Am Coll Cardiol. 2014;(63-21). https://doi.org/10.1016/j.jacc.2014.03.016.

Bucholz EM, et al. Analysis of machine learning techniques for heart failure readmissions. Circ Cardiovasc Qual Outcomes. 2016;9:629–40.

Lyle M, Wan SH, Murphree D, Bennett C, Wiley BM, Barsness G, et al. Predictive value of the get with the guidelines heart failure risk score in unselected cardiac intensive care unit patients. J Am Heart Assoc. 2020;9:e012439.

Lundberg SM, Nair B, Vavilala MS, et al. Explainable machine-learning predictions for the prevention of hypoxaemia during surgery. Nat Biomed Engi. 2018;2(10):749–60.

Ahmad FS, Ning H, Rich JD, Yancy CW, Lloyd-Jones DM, Wilkins JT. Hypertension, obesity, diabetes, and heart failure-free survival: the cardiovascular disease lifetime risk pooling project. JACC Heart Fail. 2016;4(12):911–9.

Allen LA, Matlock DD, Shetterly SM, Xu S, Levy WC, Portalupi LB, McIlvennan CK, Gurwitz JH, Johnson ES, Smith DH, Magid DJ. Use of risk models to predict death in the next year among individual ambulatory patients with heart failure. JAMA Cardiol. 2017;2:435–41.

Lv H, Yang X, Wang B, Wang S, Du X, Tan Q, Hao Z, Liu Y, Yan J, Xia Y. Machine learning-driven models to predict prognostic outcomes in patients hospitalized with heart failure using electronic health records: retrospective study. J Med Internet Res. 2021;23(4):e24996. https://doi.org/10.2196/24996.PMID:33871375;PMCID:PMC8094022.

Wang K, Tian J, Zheng C, Yang H, Ren J, Liu Y, Han Q, Zhang Y. Interpretable prediction of 3-year all-cause mortality in patients with heart failure caused by coronary heart disease based on machine learning and SHAP. Comput Biol Med. 2021;137:104813. https://doi.org/10.1016/j.compbiomed.2021.104813. (Epub 2021 Aug 28 PMID: 34481185).

Yancy CW, Jessup M, Bozkurt B, et al. ACC/AHA/HFSA focused update of the 2013 ACCF/AHA guideline for the management of heart failure: a report of the American college of cardiology/American heart association task force on clinical practice guidelines and the heart failure society of America. J Am Coll Cardiol. 2017;136:1476–88.

Vermond RA, Geelhoed B, Verweij N, Tieleman RG, Van der Harst P, Hillege HL, Van Gilst WH, Van Gelder IC, Rienstra M. Incidence of atrial fibrillation and relationship with cardiovascular events, heart failure, and mortality: a community-based study from the Netherlands. J Am Coll Cardiol. 2015;66(9):1000–7.

Ahmad T, Munir A, Bhatti SH, Aftab M, Raza MA. Survival analysis of heart failure patients: a case study. PLoS ONE. 2017;12(7):e0181001.

Kawasoe S, Kubozono T, Ojima S, Miyata M, Ohishi M. Combined assessment of the red cell distribution width and b-type natriuretic peptide: a more useful prognostic marker of cardiovascular mortality in heart failure patients. Intern Med. 2018;57(12):1681–8.

Varbo A, Nordestgaard BG. Nonfasting triglycerides, low-density lipoprotein cholesterol, and heart failure risk: two cohort studies of 113554 individuals. Arterioscler Thromb Vasc Biol. 2018;38(2):464–72. https://doi.org/10.1161/ATVBAHA.117.310269. (Epub 2017 Nov 2 PMID: 29097364).

Alfraidi H, Seifer CM, Hiebert BM, Torbiak L, Zieroth S. McIntyre WF relation of increasing QRS duration over time and cardiovascular events in outpatients with heart failure. Am J Cardiol. 2019;124(12):1907–11.

Niedziela JT, Hudzik B, Szygula-Jurkiewicz B, Nowak JU, Polonski L, Gasior M, Rozentryt P. Albumin-to-globulin ratio as an independent predictor of mortality in chronic heart failure. Biomark Med. 2018;12(7):749–57.

Pocock SJ, Wang D, Pfeffer MA, Yusuf S, McMurray JJ, Swedberg KB, Ostergren J, Michelson EL, Pieper KS, Granger CB. Predictors of mortality and morbidity in patients with chronic heart failure. Eur Heart J. 2006;27(1):65–75.

Kwon HJ, Park JH, Park JJ, Lee JH, Seong IW. Improvement of left ventricular ejection fraction and pulmonary hypertension are significant prognostic factors in heart failure with reduced ejection fraction patients. J Cardiovasc Imaging. 2019;27(4):257–65.

Núñez J, Bayés-Genís A, Zannad F, Rossignol P, Núñez E, Bodí V, Miñana G, Santas E, Chorro FJ, Mollar A, Carratalá A, Navarro J, Górriz JL, LupLupón J, Husser O, Metra M, Sanchis J. Long-term potassium monitoring and dynamics inheart failure and risk of mortality. Circulation. 2018;137(13):1320–30.

Madan VD, Novak E, Rich MW. Impact of change in serum sodium concentration on mortality in patients hospitalized with heart failure and hyponatremia. Circ Heart Fail. 2011;4(5):637–43.

Zhao Juan Juan, Qiang Yan. Machine Learning in Python [M]. Mechanical Industry Press, 2019. 51–145

Mpanya D, Celik T, Klug E, Ntsinjana H. Machine learning and statistical methods for predicting mortality in heart failure. Heart Fail Rev. 2021;26(3):545–52.

Lundberg S, Lee SI. A unified approach to interpreting model predictions. Adv Neural Information Processing Syst. 2017;30:4765–74.

Yu Mei, Yu Jian, Wang Jianrong, etc. Data Analysis and Data Mining. Tsinghua University Press, 2018.114–189

Hackenberger BK. Bayes or not Bayes, is this the question? Croat Med J. 2019;60(1):50–2.

Shiyou L. Introduction to Artificial Intelligence. Tsinghua University Press. 2020;570:205–12.

Liu L. Research and application of recommendation technology based on logistic regression. Univ Elect Sci Technol. 2013;18:30.

Dong X. Research on logistic regression algorithm and its GPU parallel implementation. Harbin Institute Technol. 2016;12:11.

Zhanshan Li, Zhaogeng Li. Feature selection algorithm based on XGBoost. Journal of Communications. 2019;40(10):102.

Hastie T, Tibshirani R, Friedman J. The elements of statistical learning. Available from: https://statweb stanford.edu/~tibs/ElemStatLearn/.

Lundberg SM, Erion G, Chen H, DeGrave A, Prutkin JM, Nair B, et al. From local explanations to global understanding with explainable AI for trees. Nat Mach Intell. 2020;2:56–67.

S.M. Lundberg, G.G. Erion, S-IJapa Lee, Consistent Individualized Feature Attribution for Tree Ensembles, 2018.

Yang H, Tian J, Meng B, Wang K, Zheng C, Liu Y, Yan J, Han Q, Zhang Y. Application of extreme learning machine in the survival analysis of chronic heart failure patients with high percentage of censored survival time. Front Cardiovasc Med. 2021;29(8):726516.

.M. Athanasiou, K. Sfrintzeri, K. Zarkogianni, et al., An Explainable XGBoost–Based Approach towards Assessing the Risk of Cardiovascular Disease in Patients with Type 2 Diabetes Mellitus[C]//2020 IEEE 20th International Conference on Bioinformatics and Bioengineering (BIBE), IEEE, 2020.

Mathur P, Srivastava S, Xu X, Mehta JL. Artificial intelligence, machine learning, cardiovascular disease. Clin Med Insights Cardiol. 2020;14:1179546820927404.

Wang Y, Zhu K, Li Y, Lv Q, Fu G, Zhang W. A machine learningbased approach for the prediction of periprocedural myocardial infarction by using routine data. Cardiovasc Diagn Ther. 2020;10:1313–24.

Melchio R, Rinaldi G, Testa E, Giraudo A, Serraino C, Bracco C, Spadafora L, Falcetta A, Leccardi S, Silvestri A, Fenoglio L. Red cell distribution widthpredicts mid-term prognosis in patients hospitalized with acute heart failure:the RDW in Acute Heart Failure (RE-AHF) study. Intern Emerg Med. 2019;14(2):239–47.

Strassheim D, Dempsey EC, Gerasimovskaya E, Stenmark K, Karoor V. Role of inflammatory cell subtypes in heart failure. J Immunol Res. 2019;2(2019):2164017.

Funding

The investigation was subsidized by the Yunnan Provincial Health Commission Clinical Medical Center (ZX2019-03–01) and by the Applied Basic Research Pro-gram of the Science and Technology Hall of Yunnan Province and Kunming Medical University (Project No.202301AY070001-130).

Author information

Authors and Affiliations

Contributions

Chenggong Xu, Hongxia Li and Lixing Chen conceptualized and designed the survey, conducted the statistical analyses, drafted the first manuscript and approved the final manuscript as submitted. Jianping Yang and Lixing Chen performed the statistical analysis and machine learning model building, were conducive to explaining the data and drafted the first manuscript. Yunzhu Peng, Hongyan Cai and Jianping Yang were involved in drafting the manuscript and conducted the statistical analyses. Chenggong Xu, Hongxia Li, Jing Zhou and Wenyi Gu collected the data and performed the statistical analyses. All authors agreed to the submission of the final manuscript.

Corresponding author

Ethics declarations

Ethics approval and consent to participate

We obtained informed consent from every patient included in the study.The study protocol was approved by the medical ethics committee of the First Affiliated Hospital of Kunming Medical University, in accordance with the guidelines of the Declaration of Helsinki of the World Medical Association, and the ethics number of the study is: (2022) Ethics L No. 173.

Consent for publication

Not applicable.

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Xu, C., Li, H., Yang, J. et al. Interpretable prediction of 3-year all-cause mortality in patients with chronic heart failure based on machine learning. BMC Med Inform Decis Mak 23, 267 (2023). https://doi.org/10.1186/s12911-023-02371-5

Received:

Accepted:

Published:

DOI: https://doi.org/10.1186/s12911-023-02371-5