Abstract

Background

Train-the-trainer (TTT) programs are widely applied to disseminate knowledge within healthcare systems, but evidence of the effectiveness of this educational model remains unclear. We systematically reviewed studies evaluating the impact of train-the-trainer models on the learning outcomes of nurses.

Methods

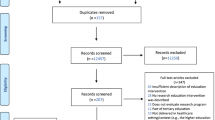

The reporting of our systematic review followed the PRISMA 2020 checklist. Records identified from MEDLINE, Embase, CINAHL, and ERIC were independently screened by two researchers and deemed eligible if studies evaluated learning outcomes of a train-the-trainer intervention for trainers or trainees targeting nurses. Study quality was assessed with the Joanna Briggs Institute’s critical appraisal tools, and data on study characteristics were extracted (objective, design, population, outcomes, results). Heterogeneity of outcomes ruled out meta-analysis; a narrative synthesis and vote counting based on direction of effects (p < 0.05) were used to synthesize the results.

All records were uploaded and organized in EPPI-Reviewer.

Results

Of the 3800 identified records, 11 studies were included. The included studies were published between 1998 and 2021 and mostly performed in the US or Northern Europe. Nine studies had quasi-experimental designs and two were randomized controlled trials. All evaluated effects on nurses, and two also included nurses’ assistants. The directions of effect of the 13 outcomes (knowledge, n = 10; skills, n = 2; practice, n = 1) measured in the 11 included studies were all beneficial. The statistical analysis of the vote counting showed that train-the-trainer programs could significantly (p < 0.05) improve trainees’ knowledge, but the number of outcomes measuring impact on skills or practice was insufficient for synthesis.

Conclusions

Train-the-trainer models can successfully disseminate knowledge to nurses within healthcare systems. Considering the nurse shortages faced by most Western healthcare systems, train-the-trainer models can be a timesaving and sustainable way of delivering education. However, new comparative studies that evaluate practice outcomes are needed to conclude whether TTT programs are more effective, affordable and timesaving alternatives to other training programs.

Trial registration

The protocol was registered in Research Registry (https://www.researchregistry.com, unique identifying number 941, 29 June 2020).

Background

Train-the-trainer (TTT) programs were originally used by non-governmental organisations and universities in the 1970s as an educational model delivering cost-effective education to hard-to-reach populations in settings with limited resources [1, 2]. Drawing on the assumption that social capital from relationships within a community optimizes the learning process [3], local trainers familiar with the local language, culture, and economic realities were employed to educate their peers [4, 5]. TTT models have subsequently been applied across disciplinary fields and within various healthcare contexts and clinical settings [5,6,7,8,9] to update healthcare professionals’ knowledge and skills and implement evidence-based medical practices [10, 11].

Although TTT programs can draw on a wide range of educational and implementation strategies, several steps characterize knowledge dissemination in healthcare contexts (Fig. 1).

Master trainers with appropriate expertise educate selected professionals, preparing them to train others. Traditionally, trainers are often nurses or social workers working in the organization where the TTT program is implemented. Trainers learn about new expert knowledge, instructional tools, and guidelines, which they then disseminate to ‘trainees’, i.e., their professional peers [1]. No single gatekeeper of knowledge exists because expert knowledge, skills and evidence-based practices are disseminated across many professionals [1]. Ultimately, the application of trainees’ newly acquired skills can help ensure quality of care and better treatment for recipients of healthcare services. Advantages of using professional peer trainers include availability of support during the workday and insight into organization characteristics that can help trainees overcome barriers to applying new knowledge and skills in practice [3].

Since TTT model elements have been linked to improved clinical teamwork [12], higher job satisfaction, and decreased staff turnover [13], the TTT model may be a more efficient alternative to traditional direct trainer models in which more experienced professionals provide informal training in specific skills. Also, given their potential to deliver continual peer-to-peer support throughout the workday, TTT models may prove more sustainable and cost-effective than other training models [14].

An increasing number of studies investigating the impact of TTT models in healthcare settings have been published in the past decade and three systematic reviews have synthesized findings across studies [2, 15, 16]. Anderson and Taira [2] found evidence showing that TTT models could propagate knowledge and skills for providers in limited resource settings, but further research was needed to infer whether the model was sustainable for the long term. Two other reviews focused on health and social care workers. In a narrative synthesis, Pearce et al. [15] found that TTT programs applying a blended learning approach that combined interactive and multifaceted methods were the most effective at disseminating knowledge to healthcare and social care professionals. A meta-analysis concluded that TTT programs improved trainers’ health and social care knowledge domains [16]. However, this review did not focus on the impact on trainees’ knowledge, which is an essential feature that distinguishes TTT programs from other training models. Although these systematic reviews provide insights into the effectiveness of TTT programs, to our knowledge, no systematic review has considered the claimed potential of TTT programs to disseminate knowledge, like a waterfall, from expert to trainee, through the different steps that require different training elements and qualities of teachers (Fig. 1).

Today, most Western healthcare systems face staff shortages and high work pressure [17,18,19]. The qualities inherent in TTT models may make them an effective and sustainable way of disseminating knowledge. However, an updated review is needed to clarify the evidence for these qualities and, in so doing, help healthcare providers make evidence-based decisions regarding the best way of delivering and implementing education in strained healthcare systems. The aim of this systematic review was to synthesize findings about the impact of TTT models, disseminating knowledge from trainers to trainees, on nurses’ learning outcomes.

Methods

The reporting of our systematic review followed the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) 2020 checklist, and the reporting of the literature search followed the extension PRISMA Statement for Reporting Literature Searches in Systematic Reviews (PRISMA-S) [20]. The protocol was registered in Research Registry, 29 June 2020 (https://www.researchregistry.com, unique identifying number 941). The reporting of the analysis followed Synthesis Without Meta-analysis (SWiM) reporting guidelines [21].

Eligibility criteria

The research question, eligibility criteria and search strings were structured using the Population, Intervention, Comparison, Outcome (PICO) framework. Records were eligible for inclusion if they: 1) targeted nurses, social and healthcare assistants, or healthcare assistants alone (P), 2) described a TTT intervention or program and specified how knowledge was transferred from master trainer to trainer or from trainer to trainee (I), and 3) evaluated intervention learning outcomes (i.e., attitudes, knowledge, skills and practice) for nurses in a healthcare context (O). A preliminary search identified no randomized controlled trials (RCTs), and controlled trials and pre-/post-intervention studies were thus also included. Consequently, a comparison (C) was not required; where one was present, it could be another educational model. For inclusion in the synthesis, records had to represent primary studies published in peer-reviewed journals.

Search strategy

We conducted a literature search for studies published from inception to 21 January 2020 in MEDLINE (Ovid), Embase (Ovid), CINAHL (EBSCO) and ERIC (EBSCO). The search was updated in all four databases on 10 September 2021. The literature was searched for the two key concepts of ‘train-the-trainer’ and ‘health personnel’ using controlled vocabularies (e.g., medical subject headings), free-text terms, and keywords when possible (i.e. title, abstract, keywords and MeSH terms).

We applied an RCT filter that was adapted to include a broader range of studies evaluating impact. A filter removing animal studies was used in MEDLINE and Embase. Due to the relatively low number of studies retrieved in CINAHL and ERIC, we chose not to apply filters in those databases. A limit excluding MEDLINE journals was applied in Embase to avoid duplicate journals. Two information specialists (THA and ON) developed and conducted the literature search. The search strategy was evaluated by testing its ability to identify known key articles. The complete search strategy is available in Additional file 1.

All records were uploaded to and organized in EPPI-Reviewer [22]. Deduplication was carried out in EPPI-Reviewer using the built-in automated deduplication function supplemented by a manual search for duplicate records.

Study selection

All records were screened in duplicate and independently by title and abstract in EPPI-Reviewer, each by two authors (NK, SHE, EMK). Included full-text reports were assessed for eligibility by two authors (NK, SHE). Disagreements were resolved by discussion and in two cases resolved by a third author (THA).

Full-text reports were retrieved electronically. If no electronic version was available or could not be retrieved via a research library (Royal Danish Library), we e-mailed the corresponding author. If no response was received within a month, the report was excluded. References of included studies were searched to identify any additional relevant references. Records in languages other than English, Danish, Swedish and Norwegian (languages understood by the review team) that we considered relevant based on title and abstract were not included in the synthesis but have been listed in Additional file 2 for others to analyze.

Data extraction

Two authors (SHE and NK) designed a data extraction form which was pilot tested and adapted accordingly before final data extraction. One author (SHE) extracted data from included reports which was checked by a second author (NK). In the case of missing data, an e-mail inquiry was sent to the first or corresponding author. If missing data were essential to include in the synthesis and no response was received after 1 month, the report was excluded.

Quality assessment

Reports included after the full-text screening were assessed for methodological quality by two authors (SHE, NK) using the Joanna Briggs Institute (JBI) critical appraisal tools (CAT) for quasi-experimental studies and RCTs [11]. A pilot search revealed few RCTs and many quasi-experimental studies with a wide range of study designs. To allow for a robust synthesis, quasi-experimental studies that failed to meet criteria for comparison due to insufficient reporting of data were excluded before analysis. More specifically, studies that were rated ‘no’ or ‘unclear’ on JBI quality assessment checklist items 7 (similar outcome measurements for compared groups), 8 (reliable outcome measures), and 9 (appropriate statistical analysis) were excluded (Table 2). To provide an overview of the quality assessment scores, we calculated a percentage score for each study by dividing the number of CAT items with ‘yes’ responses by the total number of items [23].
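The percentage score described above can be illustrated with a minimal sketch. The function name `quality_score`, the response labels, and the example ratings are hypothetical and are not taken from the review's data; excluding not-applicable items from the denominator is an assumption that mirrors how the non-applicable blinding items are reported as handled for the RCTs.

```python
from typing import Iterable


def quality_score(responses: Iterable[str]) -> float:
    """Return the percentage of appraisal items answered 'yes'.

    Items rated 'n/a' are dropped from the denominator (an assumption).
    """
    scored = [r.strip().lower() for r in responses]
    scored = [r for r in scored if r != "n/a"]
    if not scored:
        raise ValueError("no scorable appraisal items")
    yes_count = sum(r == "yes" for r in scored)
    return 100.0 * yes_count / len(scored)


# Hypothetical ratings for a nine-item checklist: six 'yes' out of nine.
example = ["yes"] * 6 + ["no", "unclear", "no"]
print(round(quality_score(example)))  # 67
```

With nine scorable items, six and eight ‘yes’ responses correspond to rounded scores of 67% and 89%, respectively.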

Data synthesis

To distinguish between the impact of TTT interventions on learning for trainers and trainees, we grouped studies by their target population groups. Any impact on trainers’ learning was regarded as the result of training by master trainers. Any impact on nurses’ (trainees’) learning was attributed to training by trainers. We therefore also regarded successful training of trainees as a result of successful training of trainers by the master trainers (Fig. 1).

Characteristics of included studies ruled out conducting a meta-analysis. Only four of eleven included studies reported measures of precision. Effect measures varied across studies and nine studies did not report P values, precluding summarizing effect estimates or combining P values. In addition, outcome definitions differed substantially across studies. As an alternative, we synthesized findings by vote counting based on direction of effect, as specified in the Cochrane Handbook for Systematic Reviews of Interventions [24]. Regardless of statistical significance, the direction of the effect of the TTT intervention on each independent study outcome was counted as beneficial if data indicated a positive effect (‘1’) or as not beneficial if data indicated no effect or a negative effect on the outcome (‘0’). Effect direction was based on pre- and post-intervention measures, not the results of comparison with any control group. To examine the statistical significance of effects by vote count and help clarify the certainty of the findings, we conducted binomial tests (one-sample, non-parametric tests) comparing the number of beneficial and not beneficial directions of effect for individual outcomes (e.g., knowledge) and all outcomes (knowledge, skills, and practice) on trainers, trainees, and trainers and trainees together. Statistical significance was set at P < 0.05 (two-tailed), and 95% confidence intervals were calculated using the Clopper-Pearson method. Data were analyzed using SPSS Statistics for Windows version 25.
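The vote counting and binomial test described above can be sketched as follows. This is an illustrative implementation in plain Python, not a reproduction of the SPSS procedure used in the review; the function names are hypothetical, the null proportion of 0.5 is an assumption, and the two-sided P value is computed as the total probability of outcomes that are no more likely than the observed count.

```python
from math import comb


def vote_count(pre_post_changes):
    """Code each outcome 1 if the pre-to-post change is positive, else 0."""
    return [1 if change > 0 else 0 for change in pre_post_changes]


def _pmf(i, n, p):
    """Binomial probability of exactly i successes in n trials."""
    return comb(n, i) * p**i * (1 - p) ** (n - i)


def binomial_two_sided_p(k, n, p0=0.5):
    """Exact two-sided binomial P value for k beneficial votes out of n."""
    probs = [_pmf(i, n, p0) for i in range(n + 1)]
    cutoff = probs[k] * (1 + 1e-9)  # tolerance for floating-point ties
    return min(1.0, sum(q for q in probs if q <= cutoff))


def _solve_increasing(f, target):
    """Bisection on [0, 1] for an increasing function f."""
    lo, hi = 0.0, 1.0
    for _ in range(100):
        mid = (lo + hi) / 2
        if f(mid) < target:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2


def clopper_pearson(k, n, alpha=0.05):
    """Exact (Clopper-Pearson) confidence interval for a proportion."""
    # Lower bound: the p at which P(X >= k) equals alpha / 2.
    lower = 0.0 if k == 0 else _solve_increasing(
        lambda p: sum(_pmf(i, n, p) for i in range(k, n + 1)), alpha / 2
    )
    # Upper bound: the p at which P(X <= k) equals alpha / 2.
    upper = 1.0 if k == n else _solve_increasing(
        lambda p: 1 - sum(_pmf(i, n, p) for i in range(0, k + 1)), 1 - alpha / 2
    )
    return lower, upper


# Hypothetical pre-to-post changes for six outcomes, all positive.
votes = vote_count([2.1, 0.4, 5.0, 1.2, 0.8, 3.3])
k, n = sum(votes), len(votes)
print(k, n, binomial_two_sided_p(k, n), clopper_pearson(k, n))
```

When every vote is beneficial, the two-sided P value under a null proportion of 0.5 reduces to 2 × 0.5^n, so six of six beneficial votes give 0.03125 and four of four give 0.125.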

Results

The literature search yielded 3800 records. Duplicates automatically marked by EPPI-Reviewer (n = 229) were manually verified; manual screening detected an additional 31 duplicate records. Of the remaining records (n = 3540) screened by title and abstract, 3332 were excluded. Of the resulting 208 records, 18 were not assessed for eligibility: four studies were in languages other than English, Danish, Swedish and Norwegian, 12 were not journal articles, and two full-text reports could not be retrieved, even through correspondence with the authors.

Of 190 records assessed for eligibility, 16 met inclusion criteria. After critical appraisal, five were excluded [25,26,27,28,29]. Eleven studies were included for data extraction and synthesis. Figure 2 provides additional details about the screening and selection process.
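The record counts reported in this section can be checked with simple arithmetic. The sketch below only restates the numbers given in the text; the variable names are illustrative.

```python
# Counts as reported in the text.
identified = 3800
auto_duplicates = 229
manual_duplicates = 31

# Records screened by title and abstract after deduplication.
screened = identified - auto_duplicates - manual_duplicates

excluded_on_title_abstract = 3332
full_text_sought = screened - excluded_on_title_abstract

# Records not assessed for eligibility, by reason.
not_assessed = {
    "language outside the review team's range": 4,
    "not a journal article": 12,
    "full text unavailable": 2,
}
assessed = full_text_sought - sum(not_assessed.values())

met_inclusion_criteria = 16
excluded_after_appraisal = 5
included = met_inclusion_criteria - excluded_after_appraisal

print(screened, full_text_sought, assessed, included)  # 3540 208 190 11
```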

Study characteristics

Demographics

The 11 included studies were published between 1998 and 2021 (Table 1). Four studies were conducted in the US [13, 30,31,32], three in Northern Europe [33,34,35], one in South Asia [6], and one in the Middle East [36]. Two studies did not report the country where the TTT intervention took place [37, 38]. Nine studies were quasi-experimental [6, 13, 30,31,32, 34, 36,37,38] of which two included a control group [31, 34].

Two studies were cluster RCTs [33, 35]. One quasi-experimental study compared the TTT intervention to a direct trainer teaching intervention [31] and the remaining studies had no comparison intervention [33,34,35].

All studies investigated the effect on nurses [6, 13, 30,31,32,33,34,35,36,37,38] and two studies also included nursing assistants or aides [31, 34].

The collective study population of included studies was 1808 (range of individual study populations: 8–428). Eight studies were conducted in hospital settings [6, 13, 30, 32, 35,36,37,38]; two of these also included health centers [36, 37]. Two studies took place in psychiatric settings [33, 34] and one was conducted in long-term care facilities [31].

The specific knowledge or skills targeted by the TTT programs varied, but most studies examined psychiatric knowledge or skills [30, 31, 33, 34] or prevention of low back pain [35, 38]. Other topics included palliative care [37], HIV counselling [6], and infant safe sleep practices [32].

Outcomes

Knowledge was the most common outcome measure, used in ten studies [6, 13, 30,31,32,33,34,35,36, 38]. One study assessed clinical practice [33] and two studies measured skills [37, 38]. No studies investigated the effect of the TTT intervention on attitudes. In included quasi-experimental studies, outcomes were most commonly measured by items testing attendees’ knowledge pre- and post-intervention.

Six studies defined knowledge as the score on knowledge tests [6, 13, 31, 32, 36, 38]. In one of these studies, the score was defined as the number of correct answers [13], whereas three other studies calculated knowledge scores in various ways [32, 36, 38]. In the remaining studies defining knowledge as a test score, it was unclear if the score was calculated or reflected the number of correct answers [6, 31].

The remaining four studies measured knowledge through self-evaluation [33], perceptions of being sufficiently trained or lacking knowledge [34], correct responses about what to do in various situations [35], and correct completion of cases [30]. Practice was defined as guideline adherence and measured by correct responses to videoclips [33], whereas skills were measured as self-evaluation of skills on different tasks [37] or as a score based on observation [38].

Impact on outcomes

Seven studies measured the effect of the TTT programs on trainees [6, 13, 32,33,34,35, 37] and four studies measured the effect on trainers [30, 31, 36, 38].

Six reports included mean pre- and post-intervention scores for the intervention group [6, 31, 32, 35, 36, 38]. Two of these also included mean pre- and post-intervention scores for a control group [31, 35]. Another study reported the mean pre- and post-intervention difference in scores for the intervention group [13]. Only one study calculated the mean difference-in-difference [33]. The remaining three studies measured outcomes as percentages. Two reported only pre- and post-intervention percentages of the desired outcome for either the intervention group [37] or both the intervention and control groups [34]. The third study reported a percentage increase from pre- to post-intervention for the intervention group [30].

Six studies measured pre- and immediate post-intervention outcomes [6, 30, 31, 34, 36, 37]. One of these studies measured post-intervention outcomes 1.5 years into a 2-year program [34]. Three studies measured outcomes before and 1, 3 or 12 months after the intervention, rather than immediately post-intervention [33, 35, 38]. The remaining two studies measured pre-, post- and follow-up outcomes at 2 and 3 months after the TTT intervention [13, 32].

Quality assessment

Quality appraisal of all 16 studies is shown in Table 2 (quasi-experimental studies) and Table 3 (RCTs). In accordance with the pre-defined quality assessment criteria, we excluded five quasi-experimental studies due to poor quality [25,26,27,28,29] (Table 2) and included both RCTs [33, 35] (Table 3). Consequently, of the 16 studies, 11 were included in the final synthesis. The nine quasi-experimental studies included in the synthesis were of good quality, with 67–89% positive responses to quality appraisal items. Studies with the lowest scores did not apply a control group, use multiple measures, or present complete follow-up data or results (Table 2).

The two RCTs were of lower overall quality than the quasi-experimental studies (Table 3). It was unclear whether randomization could be regarded as ‘true’ and whether treatment allocation was concealed (Table 3). Two questions regarding blinding of participants or those delivering treatment were not scored because they were not applicable to a TTT intervention, and they were therefore not counted in the final percentage scores. In addition to JBI appraisal criteria, it is worth noting that only one quasi-experimental study and one RCT [33, 38] adjusted estimates for important confounders (e.g., department, baseline score, sex, age, education, length of employment, and job title) and both RCTs included in the synthesis conducted intention-to-treat analyses [33, 35].

Synthesis

The 11 included studies collectively reported 13 effect directions (standardized metrics), all of which were beneficial, indicating that the TTT model can increase knowledge, skills and practice in all target groups we identified (Table 4).

Trainers significantly improved knowledge for trainees (6 metrics, P < 0.031) or both trainees and trainers (10 metrics, P < 0.002). Although all four included metrics were beneficial, knowledge was not significantly transferred from master trainers to trainers (P = 0.125). Too few metrics were reported for the outcomes of skills (two metrics) and practice (one metric) to conduct binomial testing. However, pooling skills and practice with knowledge yielded similar results. TTT models significantly improved outcomes for trainees (P < 0.008) and both trainers and trainees (P < 0.001) but not trainers (P < 0.125).

Discussion

All effect directions of included studies suggested that TTT interventions can improve knowledge, skills, or practice. However, only for knowledge transfer between trainer and trainee was the number of effect directions sufficient to detect a statistically significant increase. Too few effect directions to permit testing for statistical significance were reported for knowledge transfer from master trainer to trainer and the impact of TTT interventions on skills and practice.

Although our findings are consistent with those of a previous systematic review by Pearce et al. [15], neither that review nor the two other previous reviews [2, 16] distinguished between the different levels of the TTT program (e.g., knowledge dissemination from master trainer to trainer, from trainer to trainee). Also, our synthesis was based on an updated literature search and the vote count methodology enabled us to account for the summarised direction of effects.

Most learning outcomes of TTT programs can be evaluated in light of Kirkpatrick’s framework [39], which distinguishes between their impact on trainees’ reactions (e.g., feelings about the program), learning (e.g., knowledge, skills, attitudes), behavior (e.g., performance in practice), and results (e.g., organizational benefits or patient outcomes). Most studies included in our synthesis evaluated learning outcomes, primarily knowledge, presumably because knowledge outcomes are easy to measure with self-reported questionnaires and require only a limited follow-up period. Only a single study assessed behavioral outcomes (performance in practice) and none evaluated results. Changes in knowledge may not lead to changed behavior or better care [40]. Study designs that include long-term follow-up measurements could provide insights into whether TTT interventions lead to behavior change or improve care, generating more robust findings about their effects on practice.

None of the included studies investigated how the program was taught, and the studies generally lacked transparency about their assumptions regarding how knowledge is best transferred. Improved methodological, theoretical and pedagogical frameworks in future evaluations are warranted to further illuminate the effectiveness of TTT programs on different aspects of practice and care. For example, study designs that include theoretical frameworks (e.g., Kirkpatrick’s learning model) can help explain how and why TTT programs impact outcomes at different levels of learning (e.g., reaction, learning, behavior or results).

Our synthesis distinguished between the impact of TTT interventions on trainers and trainees. It can be argued that the impact on the learning of TTT trainers is the same as in a direct trainer intervention. However, trainers in TTT programs are both subjects and agents of change, which introduces complexity that distinguishes TTT models from direct training models [41]. Also, trainers are often selected because they already have a high level of experience or knowledge and would therefore be expected to improve less on tests of knowledge. One study included in our review found that direct training was superior to the TTT model on trainees’ knowledge test scores [31], suggesting that other ways of transferring knowledge to trainees may be more effective [15].

Strengths and limitations

To the best of our knowledge, our review is the first to distinguish between outcomes for trainers and trainees in synthesising the findings, which can help decision makers evaluate the benefits of TTT models (e.g., cost-effectiveness or peer-facilitation) in light of limited evidence of their effects on nurses’ performance and practice. Other methodological strengths include the updated systematic and comprehensive literature search in several databases. Because the included studies proved too heterogeneous for meta-analysis, we chose the vote count method, in accordance with recommendations [21], to enhance transparency and clarity of synthesis. However, this method also introduces some limitations. A vote is counted as beneficial based on direction of the effect on study outcomes without considering the statistical significance or magnitude of the results. Three of the included studies had control groups, but the vote count methodology limited the possibility of comparing TTT models to alternative models. Although the synthesis suggested that TTT interventions can increase nurses’ knowledge, we were unable to synthesise whether alternative training models improved knowledge more effectively. Finally, most included studies were conducted in European or North American healthcare settings and the findings may not be generalizable to countries with different healthcare systems or educational traditions.

Conclusions

Our systematic review synthesis showed that TTT programs targeting nurses, social and healthcare assistants, or nurse aides can effectively disseminate knowledge from trainers to trainees, supporting the underlying assumption of the model that local professionals can be trained to train their peers. Given the nurse shortages and high work pressures, TTT models may be a timesaving and sustainable way of delivering education. However, the methodological limitations identified in this review (e.g., study design, outcome measurements) indicate that there is not yet sufficient evidence to conclude whether TTT programs are more effective than other programs. New studies that use high-quality measurements to compare the effectiveness of TTT programs with that of other programs (e.g., e-learning) can clarify whether TTT programs are more sustainable and cost-effective. Qualitative studies can further illuminate how TTT programs may change practice outcomes. In light of the limited evidence, our findings can nevertheless give healthcare providers insights into the advantages and disadvantages of implementing TTT models in strained healthcare systems.

Availability of data and materials

Search strings are included in Additional file 1 and other data in the current study are available from the corresponding author on request.

Abbreviations

- CAT: Critical appraisal tools

- JBI: Joanna Briggs Institute

- PICO: Population, Intervention, Comparison, Outcome

- PRISMA: Preferred Reporting Items for Systematic Reviews and Meta-Analyses

- PRISMA-S: Statement for Reporting Literature Searches in Systematic Reviews

- RCTs: Randomized controlled trials

- SWiM: Synthesis Without Meta-analysis

- TTT: Train-the-trainer

References

Orfaly RA, Frances JC, Campbell P, Whittemore B, Joly B, Koh H. Train-the-trainer as an educational model in public health preparedness. J Public Health Manag Pract. 2005;Suppl:S123-127.

Anderson CR, Taira BR. The train the trainer model for the propagation of resuscitation knowledge in limited resource settings: a systematic review. Resuscitation. 2018;127:1–7.

Balatti J, Falk I. Socioeconomic contributions of adult learning to community: a social capital perspective. Adult Educ Quart. 2002;52:281–98.

Knettel BA, Slifko SE, Inman AG, Silova I. Training community health workers: an evaluation of effectiveness, sustainable continuity, and cultural humility in an educational program in rural Haiti. Int J Health Promot Educ. 2017;55(4):177–88.

Tobias CR, Downes A, Eddens S, Ruiz J. Building blocks for peer success: lessons learned from a train-the-trainer program. AIDS Patient Care STDS. 2012;26(1):53–9.

Nyamathi A, Vatsa M, Khakha DC, McNeese-Smith D, Leake B, Fahey JL. HIV knowledge improvement among nurses in India using a train-the-trainer program. J Assoc Nurses AIDS Care. 2008;19(6):443–9.

Hiner CA, Mandel BG, Weaver MR, Bruce D, McLaughlin R, Anderson J. Effectiveness of a training-of-trainers model in a HIV counseling and testing program in the Caribbean Region. Hum Resour Health. 2009;7(1):11.

Burr CK, Storm DS, Gross E. A faculty trainer model: increasing knowledge and changing practice to improve perinatal HIV prevention and care. AIDS Patient Care STDS. 2006;20(3):183–92.

Booth-Kewley S, Gilman PA, Shaffer RA, Brodine SK. Evaluation of a sexually transmitted disease/human immunodeficiency virus prevention train-the-trainer program. Mil Med. 2001;166(4):304–10.

Yarber L, Brownson C, Jacob R, Baker E, Jones E, Baumann C, et al. Evaluating a train-the-trainer approach for improving capacity for evidence-based decision making in public health. BMC Health Serv Res. 2015;15:547.

Tufanaru C, Munn Z, Aromataris E, Campbell J, Hopp L. Chapter 3: Systematic reviews of effectiveness. In: Aromataris E, Munn Z, editors. JBI Manual for Evidence Synthesis. JBI; 2020. Available from: https://synthesismanual.jbi.global. https://doi.org/10.46658/JBIMES-20-04.

Clancy C, Tornberg D. TeamSTEPPS: assuring optimal teamwork in clinical settings. Am J Med Qual. 2019. Available from: https://pubmed.ncbi.nlm.nih.gov/31479300/. Cited 2023 Feb 8.

Kalisch BJ, Xie B, Ronis DL. Train-the-trainer intervention to increase nursing teamwork and decrease missed nursing care in acute care patient units. Nurs Res. 2013;62(6):405–13.

Hinds PJ, Patterson M, Pfeffer J. Bothered by abstraction: the effect of expertise on knowledge transfer and subsequent novice performance. J Appl Psychol. 2001;86(6):1232–43.

Pearce J, Mann MK, Jones C, van Buschbach S, Olff M, Bisson JI. The most effective way of delivering a train-the-trainers program: a systematic review. J Contin Educ Health Prof. 2012;32(3):215–26.

Poitras ME, Bélanger E, Vaillancourt VT, Kienlin S, Körner M, Godbout I, et al. Interventions to improve trainers’ learning and behaviors for educating health care professionals using train-the-trainer method: a systematic review and meta-analysis. J Contin Educ Health Prof. 2021;41(3):202–9.

WHO and partners call for urgent investment in nurses. Available from: https://www.who.int/news/item/07-04-2020-who-and-partners-call-for-urgent-investment-in-nurses. Cited 2022 Dec 5.

Scheffler RM, Arnold DR. Projecting shortages and surpluses of doctors and nurses in the OECD: what looms ahead. Health Econ Policy Law. 2019;14(2):274–90.

Haddad LM, Annamaraju P, Toney-Butler TJ. Nursing shortage. In: StatPearls. Treasure Island: StatPearls Publishing; 2022. Available from: http://www.ncbi.nlm.nih.gov/books/NBK493175/. Cited 2023 Feb 17.

Page MJ, McKenzie JE, Bossuyt PM, Boutron I, Hoffmann TC, Mulrow CD, et al. The PRISMA 2020 statement: an updated guideline for reporting systematic reviews. BMJ. 2021;372:n71.

Campbell M, McKenzie J, Sowden A, Katikireddi S, Brennan S, Ellis S, et al. Synthesis without meta-analysis (SWiM) in systematic reviews: reporting guideline. 2020.

Thomas J, Brunton J, Graziosi S. EPPI-Reviewer 4: software for research synthesis. EPPI-Centre Software. Social Science Research Unit, UCL Institute of Education; 2010. Available from: https://eppi.ioe.ac.uk/cms/er4/Features/tabid/3396/Default.aspx.

Altman DG. Practical statistics for medical research. 1st ed. London: Chapman & Hall; 1991.

McKenzie JE, Brennan SE. Synthesizing and presenting findings using other methods. In: Cochrane handbook for systematic reviews of interventions. Wiley; 2019. p. 321–47. Available from: https://onlinelibrary.wiley.com/doi/abs/10.1002/9781119536604.ch12. Cited 2023 Feb 8.

Goucke CR, Jackson T, Morriss W, Royle J. Essential pain management: an educational program for health care workers. World J Surg. 2015;39(4):865–70.

Hanssens Y, Woods D, Alsulaiti A, Adheir F, Al-Meer N, Obaidan N. Improving oral medicine administration in patients with swallowing problems and feeding tubes. Ann Pharmacother. 2006;40(12):2142–7.

Hardy SA, Kingsnorth R. Mental health nurses can increase capability and capacity in primary care by educating practice nurses: an evaluation of an education programme in England. J Psychiatr Ment Health Nurs. 2015;22(4):270–7.

Jacobs-Wingo JL, Schlegelmilch J, Berliner M, Airall-Simon G, Lang W. Emergency preparedness training for hospital nursing staff, New York City, 2012–2016. J Nurs Scholarsh. 2019;51(1):81–7.

Lombardo NBE, Wu B, Hohnstein JK, Chang K. Chinese dementia specialist education program: training Chinese American health care professionals as dementia experts. Home Health Care Serv Q. 2002;21(1):67–86.

Sinvani L, Delle Site C, Laumenede T, Patel V, Ardito S, Ilyas A, et al. Improving delirium detection in intensive care units: multicomponent education and training program. J Am Geriatr Soc. 2021;69(11):3249–57.

Smith. From the rural midwest to the rural southeast: evaluation of the generalizability of a successful geriatric mental health training program. University of Iowa. Available from: https://iro.uiowa.edu/esploro/outputs/9983557168302771. Cited 2023 Feb 8.

Rholdon R, Lemoine J, Templet T, Stueben F. Effects of implementing a simulation-learning based training using a train-the-trainer model on the acquisition and retention of knowledge about infant safe sleep practices among licensed nurses. J Pediatr Nurs. 2020;55:224–31.

de Beurs DP, de Groot MH, de Keijser J, Mokkenstorm J, van Duijn E, de Winter RFP, et al. The effect of an e-learning supported Train-the-Trainer programme on implementation of suicide guidelines in mental health care. J Affect Disord. 2015;175:446–53.

Ramberg IL, Wasserman D. Benefits of implementing an academic training of trainers program to promote knowledge and clarity in work with psychiatric suicidal patients. Arch Suicide Res. 2004;8(4):331–43.

Warming S, Ebbehøj NE, Wiese N, Larsen LH, Duckert J, Tønnesen H. Little effect of transfer technique instruction and physical fitness training in reducing low back pain among nurses: a cluster randomised intervention study. Ergonomics. 2008;51(10):1530–48.

Karayurt O, Gürsoy AA, Taşçi S, Gündoğdu F. Evaluation of the breast cancer train the trainer program for nurses in Turkey. J Cancer Educ. 2010;25(3):324–8.

Anderson WG, Puntillo K, Cimino J, Noort J, Pearson D, Boyle D, et al. Palliative care professional development for critical care nurses: a multicenter program. Am J Crit Care. 2017;26(5):361–71.

Carta A, Parmigiani F, Roversi A, Rossato R, Milini C, Parrinello G, et al. Training in safer and healthier patient handling techniques. Br J Nurs. 2010;19(9):576–82.

Kirkpatrick JD, Kirkpatrick WK. Kirkpatrick’s four levels of training evaluation. Alexandria: ATD Press; 2016.

Walczak J, Kaleta A, Gabryś E, Kloc K, Thangaratinam S, Barnfield G, et al. How are ‘teaching the teachers’ courses in evidence based medicine evaluated? A systematic review. BMC Med Educ. 2010;10(1):64.

Gask L, Coupe N, Green G. An evaluation of the implementation of cascade training for suicide prevention during the ‘Choose Life’ initiative in Scotland - utilizing Normalization Process Theory. BMC Health Serv Res. 2019;19(1):588.

Acknowledgements

None

Funding

Open access funding provided by Copenhagen University. The study was part of Steno Diabetes Center Copenhagen’s larger initiative to improve the quality of diabetes care, ‘Supplementary treatment initiatives’, which is supported by the Novo Nordisk Foundation (award/grant number not available). The Novo Nordisk Foundation had no influence on the study design, conduct or interpretation of findings.

Author information

Contributions

NRK, SHE, THA and MAN wrote the manuscript, and MAN was responsible for the final version. ON and THA developed the search strategy, and EMK, SHE, NRK and THA reviewed the primary studies. SHE, THA and MAN carried out the statistical analyses. All authors were involved in formulating the research questions and methods and in interpreting the results, and all commented on and approved the final manuscript.

Ethics declarations

Ethics approval and consent to participate

Not applicable.

Consent for publication

Not applicable.

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Additional file 1. Search documentation.

Additional file 2. Studies in languages other than English, Danish, Swedish and Norwegian (the languages understood by the review team).

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Nexø, M.A., Kingod, N.R., Eshøj, S.H. et al. The impact of train-the-trainer programs on the continued professional development of nurses: a systematic review. BMC Med Educ 24, 30 (2024). https://doi.org/10.1186/s12909-023-04998-4
