Abstract

Background

Medical imaging related knowledge and skills are widely used in clinical practice. However, radiology teaching methods and the resultant knowledge among medical students and junior doctors are variable. A systematic review and meta-analysis was performed to compare the impact of different components of radiology teaching methods (active versus passive teaching, eLearning versus traditional face-to-face teaching) on the radiology knowledge and skills of medical students.

Methods

PubMed and Scopus databases were searched for articles published in English over a 15-year period ending in June 2021 that quantitatively compared the effectiveness of undergraduate medical radiology education programs regarding acquisition of knowledge and/or skills. Study quality was appraised by Medical Education Research Study Quality Instrument (MERSQI) scoring, and analyses were performed to assess for risk of bias. A random effects meta-analysis was performed to pool weighted effect sizes across studies, and I² statistics quantified heterogeneity. A meta-regression analysis was performed to assess for sources of heterogeneity.

Results

From 3,052 articles, 40 articles involving 6,242 medical students met inclusion criteria. The median MERSQI score of the included articles was 13 out of a possible 18, and heterogeneity between studies was high (I² = 93.42%). Thematic analysis suggests trends toward synergisms between radiology and anatomy teaching, active learning producing superior knowledge gains compared with passive learning, and eLearning producing equivalent learning gains to face-to-face teaching. No significant differences were detected in the effectiveness of methods of radiology education. However, when considered with the thematic analysis, eLearning is at least equivalent to traditional face-to-face teaching and could be synergistic.

Conclusions

Studies of educational interventions are inherently heterogeneous and contextual, typically tailored to specific groups of students. Thus, we could not draw definitive conclusions about the effectiveness of the various radiology education interventions based on the currently available data. Better standardisation in the design and implementation of radiology educational interventions and in the design of radiology education research is needed to understand which aspects of educational design and delivery are optimal for learning.

Trial registration

Prospero registration number CRD42022298607.

Background

Diagnostic imaging interpretation is an essential skill for medical graduates, as imaging is frequently utilised in medical practice. However, radiology is often under-represented in medical curricula [1, 2]. Exposure to radiology education in medical school could result in better understanding of the role of imaging, leading to benefits such as enhanced selection of imaging, timely diagnosis and, subsequently, improved patient care [1]. There is no consensus as to how radiology should be taught in undergraduate medical programs, and methods vary widely across the globe [3,4,5,6]. Great diversity exists in radiology topics taught, the stage of learning at which radiology is introduced to students, and the training of those teaching radiology [7, 8]. In addition to traditional lectures, small group tutorials, case conferences or clerkship models, many newer methods of delivering radiology education have been described [1, 5, 9, 10]. These include eLearning, flipped classrooms and diagnostic reasoning simulator programs [1, 11].

Radiology is particularly suited to eLearning, given the digitisation of medical imaging and its ease of incorporation into eLearning resources [1]. eLearning can provide easy access to radiology education, regardless of students’ location and has been increasingly utilized, particularly following the onset of the COVID-19 pandemic [10, 12,13,14]. In addition to delivery methods, other factors may influence effectiveness of radiology education, including active or passive method of instruction, instructor expertise and content complexity.

Many studies looked at individual educational interventions, typically confined to a single cohort with limited sample size, making it difficult to make recommendations on how radiology education should be delivered. Thus, we conducted a systematic review and meta-analysis to determine the factors associated with effective radiology knowledge or skill acquisition by undergraduate medical students. We analysed teaching methods, modes of delivery, instructor expertise, content taught, medical student experience / seniority, and the methods of assessment as outlined in Supplementary Material 1.

Methods

This study utilised a prospectively designed protocol which is in concordance with the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) Statement [15]. Ethics approval was not required as this is a systematic review and meta-analysis.

Search identification

A systematic search strategy was designed to identify articles evaluating knowledge and / or skill acquisition following radiology education interventions for medical students. With the assistance of a university librarian, PubMed and Scopus databases were searched for articles dating from January 2006 to the time of review in June 2021 using the search terms listed in Table 1. Initial screening was performed by a single reviewer (SW), limiting the studies to articles written in English. Abstracts were screened and where ambiguity existed, the full articles were reviewed. Where full text articles were not available, the corresponding authors were contacted for a copy. Duplicate articles and those where full text versions could not be obtained were removed.

Study eligibility and inclusion

The shortlisted articles were reviewed by two authors (SW, NT) according to the inclusion and exclusion criteria summarised in Table 2. The articles were discussed by the authors and where ambiguity existed, consensus was achieved following discussion with a third author (MM). Where missing data precluded calculation of the effect size of an educational intervention, several attempts were made to contact corresponding authors via email. If no response was received, the article was excluded. Cohen’s D effect sizes were calculated from available data, then independently reviewed by a statistician.

Quality assessment

The methodological quality of all articles meeting the inclusion criteria was quantitatively assessed using the Medical Education Research Study Quality Instrument (MERSQI) [16]. Risk of bias was assessed according to the Cochrane Collaboration risk of bias assessment tools: the Revised Cochrane risk-of-bias tool for randomized trials (RoB 2) [17] and Risk Of Bias In Non-randomized Studies of Interventions (ROBINS-I) [18]. Tabulated representations were constructed using the Risk-of-bias visualization (robvis) [19] package.

Data extraction

Data extracted included publication details, sample sizes, medical students’ seniority, instructor expertise, educational delivery methods, radiology content and methods of assessment. A more comprehensive description can be found in Supplementary Material 1. Extracted data points are defined in Supplementary Material 2. Cohen’s D effect sizes were recorded when published or calculated from available data.

Many studies employed several methods of educational delivery. If the intervention group received an educational resource (e.g. eLearning) in addition to an educational activity shared by both intervention and control groups (e.g. lecture), then only the additional activity (i.e. eLearning) was included in the comparison analysis. When two reviewers were undecided about how to classify data extracted from a study, the outcome would be resolved by consensus after review by a third author (MM). If disagreement remained, attempts to contact the authors for additional information were made. If a final determination was unable to be made, the study was excluded.

Data synthesis

A random effects meta-analysis model was used to obtain the pooled estimate of the standardised mean difference (SMD) based on Cohen’s D effect size calculations. Heterogeneity was quantified by I² statistics, which estimate the percentage of variability across studies not due to chance. Evidence of publication bias was assessed by visual inspection of funnel plots and regression tests. A meta-regression analysis was performed to examine the possible sources of heterogeneity and the association between study factors and the intervention effect (SMD). All statistical analyses were performed with R version 4.1.2 (R Foundation for Statistical Computing, Vienna, Austria) using the R package metafor (2010).
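As a rough illustration of the pooling step described above, the following Python sketch implements a DerSimonian-Laird random-effects model and derives I² from Cochran's Q. It is not the authors' analysis, which was run in R with metafor. The text does not state which between-study variance estimator was used, so DerSimonian-Laird is an assumption here, and the function name `random_effects_pool` and the example inputs are hypothetical.

```python
import math


def random_effects_pool(effects, variances):
    """Pool effect sizes with a DerSimonian-Laird random-effects model.

    effects   -- per-study standardised mean differences
    variances -- per-study sampling variances of those effects
    """
    k = len(effects)
    if k < 2 or k != len(variances):
        raise ValueError("need at least two studies with matching variances")

    # Fixed-effect (inverse-variance) weights and pooled estimate.
    w = [1.0 / v for v in variances]
    sum_w = sum(w)
    fixed = sum(wi * yi for wi, yi in zip(w, effects)) / sum_w

    # Cochran's Q: weighted squared deviations from the fixed-effect estimate.
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, effects))
    df = k - 1

    # DerSimonian-Laird estimate of between-study variance (tau^2), floored at 0.
    c = sum_w - sum(wi ** 2 for wi in w) / sum_w
    tau2 = max(0.0, (q - df) / c)

    # I^2: percentage of total variability attributed to heterogeneity.
    i2 = max(0.0, (q - df) / q) * 100.0 if q > 0 else 0.0

    # Random-effects weights add tau^2 to each study's sampling variance.
    w_star = [1.0 / (v + tau2) for v in variances]
    pooled = sum(wi * yi for wi, yi in zip(w_star, effects)) / sum(w_star)
    se = math.sqrt(1.0 / sum(w_star))

    return {"pooled_smd": pooled, "se": se, "tau2": tau2, "q": q, "i2_percent": i2}


if __name__ == "__main__":
    # Hypothetical inputs for illustration only; not data from the review.
    result = random_effects_pool([0.2, 0.5, 0.9, 1.4], [0.04, 0.05, 0.03, 0.06])
    print(result)
```

Because the random-effects weights include the between-study variance, large and small studies are weighted more evenly than under a fixed-effect model when heterogeneity is high.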

All effect sizes were expressed as Cohen’s D and interpreted as small (0.2), moderate (0.5) or large (≥ 0.8).
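For readers unfamiliar with the effect size calculation, a minimal Python sketch follows. It assumes the common pooled-standard-deviation form of Cohen's d for two independent groups; the text does not state which formula was applied to each study. The function names, the treatment of values falling between the quoted thresholds, and the "negligible" label for values below 0.2 are illustrative assumptions.

```python
import math


def cohens_d(mean_int, sd_int, n_int, mean_ctl, sd_ctl, n_ctl):
    """Cohen's d for intervention vs. control using the pooled standard deviation."""
    pooled_var = ((n_int - 1) * sd_int ** 2 + (n_ctl - 1) * sd_ctl ** 2) / (
        n_int + n_ctl - 2
    )
    return (mean_int - mean_ctl) / math.sqrt(pooled_var)


def d_variance(d, n_int, n_ctl):
    """Common large-sample approximation to the sampling variance of d."""
    return (n_int + n_ctl) / (n_int * n_ctl) + d ** 2 / (2 * (n_int + n_ctl))


def interpret(d):
    """Label an effect size using the 0.2 / 0.5 / 0.8 thresholds.

    Assumption: each threshold is treated as the lower bound of its band.
    """
    size = abs(d)
    if size >= 0.8:
        return "large"
    if size >= 0.5:
        return "moderate"
    if size >= 0.2:
        return "small"
    return "negligible"


if __name__ == "__main__":
    # Hypothetical test scores for illustration only; not data from the review.
    d = cohens_d(mean_int=75.0, sd_int=10.0, n_int=30,
                 mean_ctl=70.0, sd_ctl=10.0, n_ctl=30)
    print(d, d_variance(d, 30, 30), interpret(d))
```

The sampling variance is included because inverse-variance pooling needs it alongside each effect size.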

Results

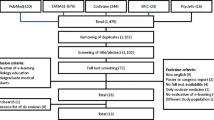

The search terms yielded 3052 articles. Initial screening of titles and abstracts excluded 2684 articles due to irrelevance, leaving 368 for further screening. Of these articles, 82 were removed as 74 were duplicates and 8 were unavailable in our library and unable to be obtained via interlibrary loans, resulting in 286 articles for review. Of these, 246 articles were excluded as 238 did not meet inclusion criteria and 8 had insufficient information to calculate effect sizes despite attempts to contact the corresponding authors. A majority of studies were excluded as they did not address undergraduate medical student populations, assessed only subjective measures (e.g., student opinions) or involved research interventions without a control group. In many cases, studies were excluded because they failed several inclusion criteria at once. In total, 40 articles were included for final review. A summary of the process is outlined in Fig. 1.

Flowchart of the systematic literature search. The search terms yielded 3052 articles where 2684 were excluded on review of titles and abstracts due to lack of relevance leaving 368 articles. 82 of these articles were not retrieved as 74 were duplicates and 8 were inaccessible in our library or via interlibrary loans. Of the remaining 286 articles, 246 were excluded as 238 did not meet inclusion criteria and 8 had insufficient information to calculate effect sizes despite attempting to contact the corresponding authors. The remaining 40 articles were included for final review.

Study characteristics

From the 40 articles reviewed, 30 consisted of randomised controlled trials (RCTs) and 10 were non-randomised studies. Most were published in 2014 or later, with the greatest number of articles published in 2019 (n = 7, 18%). A large proportion of the studies were conducted in Europe (n = 18, 45%) followed by North America (n = 14, 35%) with USA being the single country with the most studies (n = 11, 28%), see Fig. 2. More studies focused on senior medical students (n = 17, 42.5%), rather than junior medical students (n = 16, 40%). Of the remaining studies, 4 had combined populations of senior and junior medical students (10%) while 3 did not specify the seniority of the cohort (7.5%). The combined studies involved a total of 6242 medical students where population sizes ranged from 17 to 845 (median 101.5; IQR 125.5).

Publication year (A) and location of studies (B). The majority of the 40 shortlisted studies were published from 2014 onwards (n=32) with the highest number published in 2019 (n=7). Most studies were conducted in Europe (n=18, 45%), followed by North America (n=14, 35%) with USA being the single country with the most studies (n=11, 28%).

Other extracted study parameters included: active versus passive education delivery; whether eLearning was employed; the imaging modalities taught; and radiology training of the teacher (Supplementary Material 1). Distinctions between the content subgroups of radiologic anatomy, radiation protection and indications for imaging and imaging interpretation were abandoned due to considerable overlap between articles. Many articles did not provide sufficient detail regarding methods of assessment, so that parameter was also omitted from the meta-analysis.

The studies meeting inclusion criteria were generally of good quality with MERSQI scores ranging from 10.5 to 15.5 out of 18, the median score being 13. However, half of the included studies (n = 20) were judged to be at serious risk of bias while 16 were judged to be at low risk of bias (40%) and 4 at moderate risk of bias (10%). Among the included randomised controlled trials, missing data resulted in a serious risk of bias in 10 of 12 studies and some concerns in 1 study. This was a feature of all three randomised crossover controlled trials. The main contributor was missing data due to attrition in study groups between phases of these trials. In the non-randomised trials, bias was predominantly due to confounding variables. This featured in all 8 studies deemed at serious risk of bias and contributed to moderate risk in 1 study. A summary of the risk of bias assessments is shown in Fig. 3. A full breakdown of the risk of bias assessment for each included article can be found in Supplementary Material 3 and 4.

Risk of bias assessment summary for randomised controlled trials (A) and non-randomised trials (B). In the randomised trials, there was a serious risk of bias in 12 of 30 randomised studies and moderate risk in 3 of 30 studies. This was predominantly due to missing outcome data and issues from randomisation. In non-randomised trials, 8 of 10 studies were considered at serious risk and 1 study was considered at moderate risk of bias. This was predominantly due to confounding variables followed by missing data and bias in participant selection.

A funnel plot analysis demonstrated that studies with high variability and effect sizes near 0 are not present, with multiple studies lying outside the funnel (Fig. 4). In particular, small studies that have been published showed relatively large effect sizes. When overlaid with the p-values of the included studies, the only studies with p > 0.1 were larger studies. Egger’s test indicated there was evidence of publication bias (intercept = -0.2841, p < 0.05). A summary of the included articles’ study characteristics and educational interventions is outlined in Tables 3 and 4 respectively. Brief descriptions of included studies can be found in Supplementary Material 5.

Assessment for publication bias of included studies. The funnel plot shows the relationship between the effect size and the sample size of the studies included in the systematic review. Studies with high variability and effect sizes near 0 are missing and there are a number of studies which lie outside the expected funnel. In particular, it is clear that small studies that have been published are those with relatively large effect sizes (those points on the lower right of the plot). This funnel plot asymmetry suggests publication bias.
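The regression test for funnel plot asymmetry can be sketched as follows. This is the classical form of Egger's test, in which each study's standardised effect (effect divided by its standard error) is regressed on its precision (one over the standard error) and the intercept is examined. The text does not state which variant of the regression test was run, so this form is an assumption, and the sketch is not expected to reproduce the reported intercept. The function name `egger_intercept` and the example inputs are hypothetical.

```python
import math


def egger_intercept(effects, standard_errors):
    """Ordinary least squares fit of (effect / SE) on (1 / SE).

    Returns (intercept, slope, intercept_se, t_statistic). An intercept far
    from zero suggests small-study effects. Obtaining a p-value requires a
    t distribution with k - 2 degrees of freedom, which is not computed here.
    """
    k = len(effects)
    if k < 3 or k != len(standard_errors):
        raise ValueError("need at least three studies with matching standard errors")

    x = [1.0 / se for se in standard_errors]                   # precision
    y = [d / se for d, se in zip(effects, standard_errors)]    # standardised effect

    x_bar = sum(x) / k
    y_bar = sum(y) / k
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    if sxx == 0:
        raise ValueError("standard errors must not all be identical")
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))

    slope = sxy / sxx
    intercept = y_bar - slope * x_bar

    # Residual variance and the standard error of the intercept.
    sse = sum((yi - intercept - slope * xi) ** 2 for xi, yi in zip(x, y))
    resid_var = sse / (k - 2)
    intercept_se = math.sqrt(resid_var * (1.0 / k + x_bar ** 2 / sxx))
    t_stat = intercept / intercept_se if intercept_se > 0 else math.nan

    return intercept, slope, intercept_se, t_stat


if __name__ == "__main__":
    # Hypothetical inputs for illustration only; not data from the review.
    print(egger_intercept([0.9, 0.7, 0.4, 0.35, 0.3], [0.45, 0.35, 0.2, 0.15, 0.1]))
```

When effect sizes do not depend on study size, the fitted line passes close to the origin and the slope approximates the common effect.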

Meta-analysis

Considerable heterogeneity between studies (I² = 93.42%) limited the capacity to draw conclusions in this analysis. In subgroup analyses, including comparing eLearning vs. other methods, senior vs. junior medical students, passive vs. active learning, cross-sectional imaging vs. other imaging, radiology-trained vs. non-radiology-trained teaching staff and RCT vs. non-randomised studies, heterogeneity remained high. This suggests none of these were significant contributors to the heterogeneity. A forest plot of the included studies reveals that a majority of the educational interventions increased medical students’ radiology knowledge and/or skills, as evidenced by a majority demonstrating a shift to the right (Fig. 5). This trend was also demonstrated in all forest plots of subgroup analyses, which can be found in Supplementary Material 6-11. However, there were no significant differences encountered in the subgroup analyses. It is worthwhile to note that a greater proportion of studies utilising active learning had a shift to the right in Supplementary Material 6. This resulted in a higher standardised mean difference (0.57 vs. 0.51); however, the difference was not statistically significant.

Random effects meta-analysis of studies comparing radiology education interventions. The majority of education interventions increased students’ radiology knowledge or skills, evidenced by a majority demonstrating a shift to the right. However, in general, studies with higher standardised mean differences had wider confidence intervals. High heterogeneity (I² = 93.4%) limited the capacity to draw conclusions from this analysis and a cause was not found in the subgroup analyses.

Thematic analysis

The meta-analysis demonstrated high heterogeneity with no statistically significant differences encountered in the subgroup analyses to account for this. This would suggest educational interventions were highly contextual and thematic analysis was performed to further explore this.

Active vs. passive learning

Active learning has been shown to produce superior gains in knowledge acquisition compared with passive learning [20,21,22,23,24,25]. In particular, active learning utilising interactive eLearning in several student cohorts demonstrated superior knowledge gains compared with passive methods of instruction [20, 22, 23, 25]. Three of these studies were judged to be at potential serious risk of bias due to missing outcome data. This resulted from participants dropping out between phases of the study, which is likely an inherent risk in randomised crossover controlled trials [20, 22, 23]. Otherwise these studies were judged to have a low risk of bias in the remaining domains. This attrition could be in part explained by active / interactive learning being associated with greater levels of student satisfaction or intrinsic motivation [22, 23, 26].

eLearning vs. face-to-face learning

Multiple studies demonstrated eLearning is at least equivalent to ‘traditional’ face-to-face education [12, 22, 23, 27,28,29,30]. Blending eLearning with ‘traditional’ learning pedagogies was reported to have a synergistic effect [31, 32]. Moreover, guided interactive eLearning has been shown to be effective in radiology education and is well accepted by participants [20, 22, 23, 25, 33]. The use of worked examples or clinical scenarios with feedback to demonstrate imaging concepts was effective. However, knowledge gains in these guided eLearning resources appeared to diminish with increasing medical student experience / seniority [22, 23, 33].

Specialist vs. non-specialist radiology educators

Most articles (n = 26, 65%) employed radiologists as teachers, with topics that varied and often overlapped. A majority of these taught a medical imaging indications or interpretation component (n = 21 of 26, 81%). In 6 articles educators were non-imaging trained specialists (15%) and in 8 articles instructor training was unspecified (20%). Non-imaging trained specialists were primarily involved in anatomy teaching (n = 3 of 6, 50%) [34,35,36], followed by ultrasound scanning (n = 2 of 6, 33%) [26, 37] and, in one article, interpreting orthopaedic imaging [38]. Considering the meta-analysis, this could suggest a trend toward non-imaging trained teachers being equivalent to imaging trained specialists in teaching basic imaging anatomy and ultrasound scanning. However, there was heterogeneity in the student cohorts and topics taught. This suggests these findings are likely contextual.

Medical student seniority

There were 17 studies involving senior medical students, 16 studies involving junior medical students, 4 in a combined group of medical students and 3 were unspecified. Junior students were mostly taught basic imaging interpretation (n = 12/16, 75%), followed by anatomy (n = 8/16, 50%). Imaging as part of anatomy teaching to senior students was relatively less common (n = 7/17, 41%), however more content covering imaging indications was taught to that cohort. Risks and radiation protection were only specified in 4 of 17 studies (24%) involving exclusively senior students and 1 study with a combination of senior and junior students. An overview is provided in Supplementary Material 9.

Imaging modalities

Imaging modalities employed were divided into cross-sectional imaging (CT and MRI) or non-cross-sectional imaging (x-ray and ultrasound). In 4 studies it was indeterminate whether cross-sectional imaging was taught. Nearly half of the studies utilised multiple imaging modalities to teach (n = 17/37, 46%), and cross-sectional imaging featured in 23 of 36 studies (64%). Cross-sectional imaging teaching was employed proportionately more in studies with only senior students (n = 11/14, 79%) compared to studies with only junior students (n = 6/15, 40%).

Learning anatomy using imaging

Cross-sectional imaging was frequently used to teach anatomy; however, the way in which the anatomy was displayed affected learning [39,40,41]. 3D representations have been shown to produce significantly superior knowledge gains compared to 2D [39,40,41]. The use of augmented reality, e.g., 3D CT hologram displays to teach head and neck anatomy, yielded a large effect size when compared with 2D CT images [42]. Using x-rays to teach radiological anatomy yielded only a relatively small effect size in a 2013 study by Webb and Choi; however, this should be interpreted with caution due to potential bias in this study [43]. In a single study by Knudsen et al. there was no significant difference between the group using ultrasound scanning (hands-on group) and a group which utilised ultrasound images, 3D models and prosections (hands-off group) for learning anatomy [26]. The ultrasound scanning group had significantly higher intrinsic motivation compared to the ‘hands-off’ group, which had a greater degree of didactic teaching [26].

Indications for imaging

Learning indications for imaging using face-to-face collaborative learning and didactic teaching was equally effective in a cohort of 3rd year medical students [44]. However, collaborative learning was perceived as more enjoyable [44].

eLearning has been successfully used to teach indications for imaging [20, 22, 23, 25, 29]. Engaging interactive eLearning which utilised clinical scenarios and provided feedback has been shown to produce significantly improved knowledge of imaging indications when compared to non-interactive eLearning [20, 22, 23].

Imaging interpretation

Learning how to interpret imaging investigations enabled students to detect suboptimal imaging and to identify abnormalities [45]. However, following an eLearning educational intervention using active learning, students were less likely to detect normal imaging compared with abnormal imaging [45]. This could be mitigated by providing comparisons between normal studies and studies showing diseases, as demonstrated by Kok and colleagues [46]. When the ratio of normal to abnormal studies in the imaging sets used for instruction was varied, there was a trade-off between sensitivity and specificity in imaging interpretation by students [47].

Sequencing of educational interventions also had implications for knowledge acquisition. In groups where expert instruction was provided prior to practice (deductive learning), students demonstrated higher specificity than those who were allowed to practice cases prior to instruction (inductive learning) [47]. The type of learning did not significantly affect sensitivity for detecting pathologies [47].

Mandatory vs. voluntary participation

There was a correlation between the number of educational sessions attended and test performance [12, 32]. Overall, students performed significantly better when participation in educational interventions was mandatory [32].

Assessment of learning

Most articles did not include a copy of the assessments used, and the type of assessment, or part of it, was not specified in 9 of 40 articles (23%). Delayed testing several months after the educational intervention was present in only 4 of 40 studies (10%). The most common mode of assessment was multiple choice questions (MCQ) followed by short answer questions. Objective Structured Clinical Exams (OSCE) featured in 3 studies, all involving senior medical students. ‘Drag and drop’ or identifying features on imaging was present in one study of junior medical students (6%) and 4 of 17 studies of senior medical students (24%). This suggests that assessments more closely mirroring clinical practice are predominantly used in senior years. An overview is provided in Supplementary Material 5.

Discussion

There has been increasing interest in undergraduate radiology education, as evidenced by the number of published articles per annum. This review covered a wide variety of educational delivery methods related to several radiology-related topics. This is reflected in the high degree of heterogeneity in the meta-analysis, which did not reduce after subgroup analyses, suggesting these were not significant contributors to heterogeneity.

Educational design aspects addressed in the meta-analysis were active or passive learning and eLearning vs. other forms of delivery. A more granular analysis stratified according to delivery methods such as readings, lectures, flipped or non-flipped classrooms was not possible due to the insufficient number of articles in each sub-category meeting the inclusion criteria for this study. There were many examples in the literature for both medical education in general, and radiology in particular, where active learning has resulted in superior outcomes for knowledge acquisition and/or engagement by participants, compared with didactic approaches [5, 22, 23, 48, 49]. This finding is reinforced by our analysis, where all articles directly comparing the outcomes of active versus passive approaches had effect sizes favouring active learning. Likewise, all studies which evaluated combined active and passive approaches versus passive learning only had effect sizes favouring groups utilizing active learning. However, there was no significant difference between groups exposed to active learning versus passive learning methods in subgroup analyses. This finding could be related to the confounding effect of studies which compared a combination of active and passive approaches with passive learning.

Another factor impacting these findings is the instructional design of educational interventions. In eLearning, for example, effective strategies included use of multimedia learning principles, i.e., relevant graphics to accompany text, arrows to direct attention in complex graphics (signalling principle), using simple graphics to promote understanding while avoiding irrelevant information to maintain coherence and breaking down topics into small logical segments [50]. Teaching of imaging concepts prior to practical applications such as worked examples which fade to full practice scenarios accompanied by feedback is also effective [50]. These principles can all be integrated into teaching anatomy or basic imaging interpretation, the two most commonly addressed topics by articles included in this study.

There are conflicting findings in the literature comparing the efficacy of eLearning versus face-to-face learning in healthcare education [51,52,53,54,55,56,57]. This study demonstrated that eLearning is at least equivalent to traditional face-to-face instruction and may be synergistic with face-to-face teaching. However, several forms of guided eLearning in this study appear to have diminishing effects with increasing medical student experience / seniority [59]. In this scenario, worked examples could impede learning in more experienced participants through the ‘expertise reversal effect’ [58]. Gradually fading ‘worked examples’ into ‘practice questions’ could overcome this concern [58]. These findings are particularly relevant with the massive expansion of eLearning in medical education, including radiology, during the COVID-19 pandemic. Furthermore, there are ongoing barriers to engaging radiologists in education of medical students due to competing clinical demands, thereby increasing the attractiveness of employing eLearning for radiology education [59]. In designing an eLearning intervention, interactivity, practice exercises, repetition and feedback have been shown to improve learning outcomes [22, 23, 52, 60].

This study included articles demonstrating synergies can be achieved between radiology education and the broader medical curriculum [21,22,23, 33]. For example, there are many instances where cross sectional imaging has been used to teach anatomy [39, 61,62,63].

Instructional design of e-learning materials influences learning. An example of this includes the use of ‘worked examples’ in e-learning tutorials which were designed for a cohort of senior medical students [23]. This format highlighted relevant clinical information, which likely contributed to greater learning efficiency through greater mean scores and/or less time spent interacting with resources by the intervention group [22, 23, 58]. According to cognitive load theory, cognitive overload can occur when information exceeds the learner’s capacity for processing information in their working memory [22, 23, 58, 64]. The result is incomplete or disorganised information [22, 23, 58, 64]. The way information is presented can influence extraneous load imposed by instructional design [64]. To avoid cognitive overload in these e-learning modules, information was concise, pitched at the level of the learner and appropriately segmented [22, 23, 58, 64]. Participants favoured the concise, case-based nature of the tutorials, which promoted interactivity and engagement [23, 58]. These studies provide evidence to suggest that students’ learning would benefit from greater integration of radiology into modern medical curricula in a way which is relevant to clinical practice.

Implications for radiology education and study design

The implications of this review for design of interventions and evaluative studies of radiology education are summarised in Table 5.

Study strengths

To the authors’ knowledge, this is the first systematic review and meta-analysis aimed at quantitatively comparing the effectiveness of different methods of radiology education for medical students. This review captured a large number of articles published over a 15-year period, involving a large medical student population.

Through the application of stringent search criteria, only comparative effectiveness studies which were generally of high quality were shortlisted, as evidenced by high MERSQI scores. This study also excluded qualitative studies assessing perceived gains in knowledge or skills, because perceptions can differ from objectively measured attainment of knowledge or skills [65, 66].

Study limitations

The main limitation of this study is the high level of heterogeneity between studies. Significant heterogeneity existed between the shortlisted articles regarding the topics studied, study methods, data collection and reporting. This is unsurprising, because medical curricula vary widely, and educational interventions are typically contextual [3,4,5]. The interventions in many cases were designed for specific populations to address specific educational needs related to radiology. The high heterogeneity in this meta-analysis has also been demonstrated in other medical and health sciences-related meta-analyses of educational effectiveness [60, 67, 68].

Descriptions of interventions and reporting of data in some studies were ambiguous which complicated data extraction. This more commonly occurred in descriptions of control groups. Frequently, critical aspects of studies were reported in insufficient detail, which has been encountered in other reviews [52, 57, 60, 68]. While the authors tried to ensure categorisation was as accurate as possible, in some instances their ability to do so was limited due to the ambiguities in reporting of the data.

Moderate to high levels of bias and evidence of publication bias in the shortlisted articles are further limitations, which affect the ability to draw conclusions from the meta-analysis. The evidence of publication bias suggests that the published literature may be skewed towards studies reporting effectiveness of the interventions, with negative results potentially under-reported. Prevalent sources of bias such as missing data and confounding variables highlight the need to be vigilant when evaluating education interventions. This paper is limited to peer-reviewed articles in the PubMed and Scopus databases. Articles identified in the reference lists of included articles, as well as grey literature and unpublished sources, were not included. This restriction was intended to maintain the reliability of this review's method.
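For readers unfamiliar with how publication bias can be examined quantitatively, one widely used check is Egger's regression, which tests for funnel plot asymmetry. The sketch below is a minimal Python illustration under stated assumptions: it is not confirmed as the method used in this review, it computes only the regression intercept and slope (a full test would also report a confidence interval or p-value for the intercept), and the function name and input values are hypothetical.

```python
def egger_intercept(effects, std_errors):
    """Return (intercept, slope) of Egger's regression.

    Each study's standardised effect (effect / SE) is regressed on its
    precision (1 / SE) by ordinary least squares. An intercept far from
    zero suggests small-study effects such as publication bias.
    """
    n = len(effects)
    if n < 3 or n != len(std_errors):
        raise ValueError("need at least three studies with matching standard errors")

    precision = [1.0 / se for se in std_errors]
    standardised = [y / se for y, se in zip(effects, std_errors)]

    mean_x = sum(precision) / n
    mean_z = sum(standardised) / n
    sxx = sum((x - mean_x) ** 2 for x in precision)
    if sxx == 0:
        raise ValueError("standard errors must not all be identical")
    sxz = sum((x - mean_x) * (z - mean_z) for x, z in zip(precision, standardised))

    slope = sxz / sxx                      # estimate of the underlying effect
    intercept = mean_z - slope * mean_x    # asymmetry (bias) term
    return intercept, slope


# Hypothetical studies in which smaller (less precise) studies report larger effects
ses = [0.10, 0.20, 0.40, 0.50]
effects = [0.35, 0.60, 0.95, 1.00]
bias, effect = egger_intercept(effects, ses)
print(f"Egger intercept = {bias:.2f}, slope = {effect:.2f}")
```

If every study estimated the same effect regardless of its size, the standardised effects would be proportional to precision and the intercept would be zero.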

Future directions

The authors recommend better standardisation of the design of studies examining educational interventions in general, and radiology in particular, to help determine the most effective methods for teaching undergraduate medical students. Greater use of delayed testing is needed to evaluate the long-term effectiveness of educational interventions. This could inform educators regarding the reinforcement required to maintain knowledge for future clinical practice.

Further research is needed to analyse the effectiveness of integration of radiology education with other disciplines in medical curricula. It stands to reason that integration of radiology with basic sciences and clinical experiences would lead to synergistic benefits for students’ learning. Disciplines such as anatomy, pathology, and clinical reasoning might all benefit from integration with radiology [2, 9, 69,70,71,72,73,74]. When combined with delayed testing, this could inform curriculum planners on when to incorporate radiology topics into medical curricula.

Conclusion

There has been increasing research interest in radiology education for medical students. However, methods of educational delivery and evaluation vary widely, thus contributing to significant heterogeneity between studies. A comprehensive subgroup analysis did not reveal a cause for this heterogeneity, suggesting that it could be due to tailoring of educational interventions for specific curricular contexts.

While heterogeneity precluded any firm conclusions being drawn from the meta-analysis, this systematic review has explored scenarios where certain educational interventions and specific improvements in future study design can be of benefit. For example, eLearning has been shown to be at least equivalent to traditional face-to-face instruction and may be synergistic. Better standardisation in the design of studies to evaluate radiology education interventions, and in the nature of the interventions themselves, is needed to help provide evidence for the optimisation of radiology education in medical curricula. Other potential research directions include evaluating long-term knowledge retention through delayed testing, as well as further work to demonstrate the effect of integrating radiology education with other disciplines within medical curricula.

Data availability

The datasets used and/or analysed during the current study are available from the corresponding author on reasonable request.

Abbreviations

- CT: Computed Tomography
- DSA: Digital Subtraction Angiography
- MERSQI: Medical Education Research Study Quality Instrument
- MCQ: Multiple Choice Question
- MRI: Magnetic Resonance Imaging
- OSCE: Objective Structured Clinical Examination
- PBL: Problem Based Learning
- PRISMA: Preferred Reporting Items for Systematic Reviews and Meta-Analyses
- RCT: Randomised Controlled Trial
- SMD: Standardised Mean Difference
- US: Ultrasound
- VIVA: Viva voce / oral examination

References

Naeger DM, Webb EM, Zimmerman L, Elicker BM. Strategies for incorporating radiology into early medical school curricula. J Am Coll Radiol. 2014;11(1):74–9.

Kushdilian MV, Ladd LM, Gunderman RB. Radiology in the study of bone physiology. Acad Radiol. 2016;23(10):1298–308.

Kourdioukova EV, Valcke M, Derese A, Verstraete KL. Analysis of radiology education in undergraduate medical doctors training in Europe. Eur J Radiol. 2011;78(3):309–18.

Subramaniam RM, Gibson RN. Radiology teaching: essentials of a quality teaching programme. Australas Radiol. 2007;51(1):42–5.

Awan OA. Analysis of common innovative teaching methods used by Radiology Educators. Curr Probl Diagn Radiol. 2022;51(1):1–5.

Rohren SA, Kamel S, Khan ZA, et al. A call to action; national survey of teaching radiology curriculum to medical students. J Clin Imaging Sci. 2022;12:57.

Oris E, Verstraete K, Valcke M. Results of a survey by the European Society of Radiology (ESR): undergraduate radiology education in Europe—influences of a modern teaching approach. Insights into Imaging. 2012;3(2):121–30.

Mirsadraee S, Mankad K, McCoubrie P, Roberts T, Kessel D. Radiology curriculum for undergraduate medical studies–a consensus survey. Clin Radiol. 2012;67(12):1155–61.

Undergraduate education in radiology. A white paper by the European Society of Radiology. Insights into Imaging. 2011;2(4):363–74.

Sivarajah RT, Curci NE, Johnson EM, Lam DL, Lee JT, Richardson ML. A review of innovative teaching methods. Acad Radiol. 2019;26(1):101–13.

ESR statement on new approaches to undergraduate teaching in Radiology. Insights into Imaging. 2019;10(1):109.

Alamer A, Alharbi F. Synchronous distance teaching of radiology clerkship promotes medical students’ learning and engagement. Insights into Imaging. 2021;12(1):41.

Belfi LM, Dean KE, Bartolotta RJ, Shih G, Min RJ. Medical student education in the time of COVID-19: a virtual solution to the introductory radiology elective. Clin Imaging. 2021;75:67–74.

Durfee SM, Goldenson RP, Gill RR, Rincon SP, Flower E, Avery LL. Medical Student Education Roadblock due to COVID-19: virtual Radiology Core Clerkship to the rescue. Acad Radiol. 2020;27(10):1461–6.

Moher D, Shamseer L, Clarke M, et al. Preferred reporting items for systematic review and meta-analysis protocols (PRISMA-P) 2015 statement. Syst Reviews. 2015;4(1):1.

Reed DA, Cook DA, Beckman TJ, Levine RB, Kern DE, Wright SM. Association between funding and quality of published medical education research. JAMA. 2007;298(9):1002–9.

Sterne JAC, Savović J, Page MJ, et al. RoB 2: a revised tool for assessing risk of bias in randomised trials. BMJ. 2019;366:l4898.

Sterne JA, Hernán MA, Reeves BC, et al. ROBINS-I: a tool for assessing risk of bias in non-randomised studies of interventions. BMJ. 2016;355:i4919.

McGuinness LA, Higgins JPT. Risk-of-bias VISualization (robvis): An R package and Shiny web app for visualizing risk-of-bias assessments. Research Synthesis Methods 2020;n/a(n/a).

Velan GM, Goergen SK, Grimm J, Shulruf B. Impact of interactive e-Learning modules on appropriateness of imaging referrals: a Multicenter, Randomized, crossover study. J Am Coll Radiol. 2015;12(11):1207–14.

Tshibwabwa ET, Cannon J, Rice J, Kawooya MG, Sanii R, Mallin R. Integrating Ultrasound Teaching into Preclinical Problem-based Learning. J Clin Imaging Sci 2016;6(3).

Wong V, Smith AJ, Hawkins NJ, et al. Adaptive tutorials Versus web-based resources in Radiology: a mixed methods comparison of Efficacy and Student Engagement. Acad Radiol. 2015;22(10):1299–307.

Wade SWT, Moscova M, Tedla N, et al. Adaptive tutorials Versus web-based resources in Radiology: a mixed methods analysis of Efficacy and Engagement in Senior Medical Students. Acad Radiol. 2019;26(10):1421–31.

Ebert J, Tutschek B. Virtual reality objects improve learning efficiency and retention of diagnostic ability in fetal ultrasound. Ultrasound Obstet Gynecol. 2019;53(4):525–8.

Willis MH, Newell AD, Fotos J, et al. Multisite implementation of Radiology-TEACHES (Technology-Enhanced appropriateness Criteria Home for Education Simulation). J Am Coll Radiol. 2020;17(5):652–61.

Knudsen L, Nawrotzki R, Schmiedl A, Mühlfeld C, Kruschinski C, Ochs M. Hands-on or no hands-on training in ultrasound imaging: a randomized trial to evaluate learning outcomes and speed of recall of topographic anatomy. Anat Sci Educ. 2018;11(6):575–91.

Sendra-Portero F, Torales-Chaparro OE, Ruiz-Gomez MJ, Martinez-Morillo M. A pilot study to evaluate the use of virtual lectures for undergraduate radiology teaching. Eur J Radiol. 2013;82(5):888–93.

Poland S, Frey JA, Khobrani A, et al. Telepresent focused assessment with sonography for trauma examination training versus traditional training for medical students: a simulation-based pilot study. J Ultrasound Med. 2018;37(8):1985–92.

Viteri Jusué A, Tamargo Alonso A, Bilbao González A, Palomares T. Learning how to Order Imaging tests and make subsequent clinical decisions: a Randomized Study of the effectiveness of a virtual learning environment for medical students. Med Sci Educ. 2021;31(2):469–77.

Tam MDBS, Hart AR, Williams SM, Holland R, Heylings D, Leinster S. Evaluation of a computer program (‘disect’) to consolidate anatomy knowledge: a randomised-controlled trial. Med Teach. 2010;32(3):e138–42.

Tshibwabwa E, Mallin R, Fraser M et al. An Integrated Interactive-Spaced Education Radiology Curriculum for Preclinical Students. J Clin Imaging Sci 2017;7(1).

Mahnken AH, Baumann M, Meister M, Schmitt V, Fischer MR. Blended learning in radiology: is self-determined learning really more effective? Eur J Radiol. 2011;78(3):384–7.

Burbridge B, Kalra N, Malin G, Trinder K, Pinelle D. University of Saskatchewan Radiology Courseware (USRC): an Assessment of its utility for Teaching Diagnostic Imaging in the Medical School Curriculum. Teach Learn Med. 2015;27(1):91–8.

James HK, Chapman AWP, Dhukaram V, Wellings R, Abrahams P. Learning anatomy of the foot and ankle using sagittal plastinates: a prospective randomized educational trial. Foot. 2019;38:34–8.

Rajprasath R, Kumar V, Murugan M, Goriparthi B, Devi R. Evaluating the effectiveness of integrating radiological and cross-sectional anatomy in first-year medical students - a randomized, crossover study. J Educ Health Promotion 2020;9(1).

Saxena V, Natarajan P, O’Sullivan PS, Jain S. Effect of the use of instructional anatomy videos on student performance. Anat Sci Educ. 2008;1(4):159–65.

Le CK, Lewis J, Steinmetz P, Dyachenko A, Oleskevich S. The use of ultrasound simulators to strengthen scanning skills in medical students: a randomized controlled trial. J Ultrasound Med. 2019;38(5):1249–57.

Lydon S, Fitzgerald N, Gannon L et al. A randomised controlled trial of SAFMEDS to improve musculoskeletal radiology interpretation. Surgeon: J Royal Colleges Surg Edinb Irel 2021.

Petersson H, Sinkvist D, Wang C, Smedby Ö. Web-based interactive 3D visualization as a tool for improved anatomy learning. Anat Sci Educ. 2009;2(2):61–8.

Beermann J, Tetzlaff R, Bruckner T, et al. Three-dimensional visualisation improves understanding of surgical liver anatomy. Med Educ. 2010;44(9):936–40.

Nickel F, Hendrie JD, Bruckner T, et al. Successful learning of surgical liver anatomy in a computer-based teaching module. Int J Comput Assist Radiol Surg. 2016;11(12):2295–301.

Weeks JK, Pakpoor J, Park BJ, et al. Harnessing augmented reality and CT to teach first-Year Medical Students Head and Neck anatomy. Acad Radiol. 2021;28(6):871–6.

Webb AL, Choi S. Interactive radiological anatomy eLearning solution for first year medical students: development, integration, and impact on learning. Anat Sci Educ. 2014;7(5):350–60.

Stein MW, Frank SJ, Roberts JH, Finkelstein M, Heo M. Integrating the ACR appropriateness Criteria into the Radiology Clerkship: comparison of Didactic Format and Group-based learning. J Am Coll Radiol. 2016;13(5):566–70.

Pusic MV, Leblanc VR, Miller SZ. Linear versus web-style layout of computer tutorials for medical student learning of radiograph interpretation. Acad Radiol. 2007;14(7):877–89.

Kok EM, de Bruin AB, Leppink J, van Merrienboer JJ, Robben SG. Case comparisons: an efficient way of learning Radiology. Acad Radiol. 2015;22(10):1226–35.

Geel KV, Kok EM, Aldekhayel AD, Robben SGF, van Merrienboer JJG. Chest X-ray evaluation training: impact of normal and abnormal image ratio and instructional sequence. Med Educ. 2019;53(2):153–64.

Wu Y, Theoret C, Burbridge BE. Flipping the Passive Radiology Elective by including active learning. Can Association Radiol J = J l’Association canadienne des radiologistes. 2020. 846537120953909.

Redmond CE, Healy GM, Fleming H, McCann JW, Moran DE, Heffernan EJ. The integration of active learning teaching strategies into a Radiology Rotation for Medical Students improves Radiological Interpretation skills and attitudes toward Radiology. Curr Probl Diagn Radiol. 2020;49(6):386–91.

Clark RC, Mayer RE. E-learning and the science of instruction: proven guidelines for consumers and designers of multimedia learning. 4th ed. Hoboken: Wiley; 2016.

Fontaine G, Cossette S, Maheu-Cadotte MA, et al. Efficacy of adaptive e-learning for health professionals and students: a systematic review and meta-analysis. BMJ open. 2019;9(8):e025252.

Lahti M, Hätönen H, Välimäki M. Impact of e-learning on nurses’ and student nurses knowledge, skills, and satisfaction: a systematic review and meta-analysis. Int J Nurs Stud. 2014;51(1):136–49.

Voutilainen A, Saaranen T, Sormunen M. Conventional vs. e-learning in nursing education: a systematic review and meta-analysis. Nurse Educ Today. 2017;50:97–103.

George PP, Papachristou N, Belisario JM, et al. Online eLearning for undergraduates in health professions: a systematic review of the impact on knowledge, skills, attitudes and satisfaction. J Global Health. 2014;4(1):010406.

Zafar S, Safdar S, Zafar AN. Evaluation of use of e-Learning in undergraduate radiology education: a review. Eur J Radiol. 2014;83(12):2277–87.

Wentzell S, Moran L, Dobranowski J, et al. E-learning for chest x-ray interpretation improves medical student skills and confidence levels. BMC Med Educ. 2018;18(1):256.

Vaona A, Banzi R, Kwag KH, et al. E-learning for health professionals. Cochrane Database Syst Rev. 2018;1(1):Cd011736.

Wade SWT, Moscova M, Tedla N, et al. Adaptive tutorials versus web-based resources in radiology: a mixed methods analysis in junior doctors of efficacy and engagement. BMC Med Educ. 2020;20(1):303.

Serhan LA, Tahir MJ, Irshaidat S, et al. The integration of radiology curriculum in undergraduate medical education. Annals of Medicine and Surgery. 2022;80:104270.

Cook DA, Levinson AJ, Garside S, Dupras DM, Erwin PJ, Montori VM. Instructional design variations in internet-based learning for health professions education: a systematic review and meta-analysis. Acad Medicine: J Association Am Med Colleges. 2010;85(5):909–22.

Kolossváry M, Székely AD, Gerber G, Merkely B, Maurovich-Horvat P. CT images are noninferior to anatomic specimens in teaching cardiac Anatomy—A randomized quantitative study. J Am Coll Radiol. 2017;14(3):409–415e402.

Lufler RS, Zumwalt AC, Romney CA, Hoagland TM. Incorporating radiology into medical gross anatomy: does the use of cadaver CT scans improve students’ academic performance in anatomy? Anat Sci Educ. 2010;3(2):56–63.

Phillips AW, Smith SG, Straus CM. The role of Radiology in Preclinical anatomy. A critical review of the past, Present, and Future. Acad Radiol. 2013;20(3):297–304e291.

van Merriënboer JJ, Sweller J. Cognitive load theory in health professional education: design principles and strategies. Med Educ. 2010;44(1):85–93.

Kada S. Awareness and knowledge of radiation dose and associated risks among final year medical students in Norway. Insights into Imaging. 2017;8(6):599–605.

Faggioni L, Paolicchi F, Bastiani L, Guido D, Caramella D. Awareness of radiation protection and dose levels of imaging procedures among medical students, radiography students, and radiology residents at an academic hospital: results of a comprehensive survey. Eur J Radiol. 2017;86:135–42.

Vallée A, Blacher J, Cariou A, Sorbets E. Blended learning compared to traditional learning in Medical Education: systematic review and Meta-analysis. J Med Internet Res. 2020;22(8):e16504.

Cook DA, Levinson AJ, Garside S, Dupras DM, Erwin PJ, Montori VM. Internet-based learning in the health professions: a meta-analysis. JAMA. 2008;300(10):1181–96.

Pascual TN, Chhem R, Wang SC, Vujnovic S. Undergraduate radiology education in the era of dynamism in medical curriculum: an educational perspective. Eur J Radiol. 2011;78(3):319–25.

Schober A, Pieper CC, Schmidt R, Wittkowski W. Anatomy and imaging: 10 years of experience with an interdisciplinary teaching project in preclinical medical education - from an elective to a curricular course. RoFo Fortschr Geb Rontgenstrahlen Bildgeb Verfahr. 2014;186(5):458–65.

Grignon B, Oldrini G, Walter F. Teaching medical anatomy: what is the role of imaging today? Surg Radiol Anat. 2016;38(2):253–60.

Pathiraja F, Little D, Denison AR. Are radiologists the contemporary anatomists? Clin Radiol. 2014;69(5):458–61.

Hartman M, Silverman J, Spruill L, Hill J. Radiologic-pathologic Correlation-An Advanced Fourth-year Elective: how we do it. Acad Radiol. 2016;23(7):889–93.

Nay JW, Aaron VD, Gunderman RB. Using radiology to teach physiology. J Am Coll Radiol. 2011;8(2):117–23.

Courtier J, Webb EM, Phelps AS, Naeger DM. Assessing the learning potential of an interactive digital game versus an interactive-style didactic lecture: the continued importance of didactic teaching in medical student education. Pediatr Radiol. 2016;46(13):1787–96.

Di Salvo DN, Clarke PD, Cho CH, Alexander EK. Redesign and implementation of the radiology clerkship: from traditional to longitudinal and integrative. J Am Coll Radiol. 2014;11(4):413–20.

Gibney B, Kassab GH, Redmond CE, Buckley B, MacMahon PJ. Pareidolia in Radiology Education: a Randomized Controlled Trial of Metaphoric signs in Medical Student Teaching. Acad Radiol. 2020.

Kok EM, Abed A, Robben SGF. Does the use of a Checklist Help Medical students in the detection of abnormalities on a chest radiograph? J Digit Imaging. 2017;30(6):726–31.

Lorenzo-Alvarez R, Rudolphi-Solero T, Ruiz-Gomez MJ, Sendra-Portero F. Medical Student Education for abdominal radiographs in a 3D virtual Classroom Versus Traditional Classroom: a Randomized Controlled Trial. AJR Am J Roentgenol. 2019;213(3):644–50.

Rozenshtein A, Pearson GD, Yan SX, Liu AZ, Toy D. Effect of massed Versus interleaved teaching method on performance of students in Radiology. J Am Coll Radiology: JACR. 2016;13(8):979–84.

Shaffer K, Ng JM, Hirsh DA. An Integrated Model for Radiology Education. Development of a year-long Curriculum in Imaging with Focus on Ambulatory and Multidisciplinary Medicine. Acad Radiol. 2009;16(10):1292–301.

Smeby SS, Lillebo B, Slørdahl TS, Berntsen EM. Express Team-based learning (eTBL): a time-efficient TBL Approach in Neuroradiology. Acad Radiol 2019.

Thompson M, Johansen D, Stoner R, et al. Comparative effectiveness of a mnemonic-use approach vs. self-study to interpret a lateral chest X-ray. Adv Physiol Educ. 2017;41(4):518–21.

Vollman A, Hulen R, Dulchavsky S, et al. Educational benefits of fusing magnetic resonance imaging with sonograms. J Clin Ultrasound. 2014;42(5):257–63.

Yuan Q, Chen X, Zhai J et al. Application of 3D modeling and fusion technology of medical image data in image teaching. BMC Med Educ 2021;21(1).

Cook DA. Randomized controlled trials and meta-analysis in medical education: what role do they play? Med Teach. 2012;34(6):468–73.

Nourkami-Tutdibi N, Tutdibi E, Schmidt S, Zemlin M, Abdul-Khaliq H, Hofer M. Long-Term Knowledge Retention after peer-assisted abdominal Ultrasound Teaching: is PAL a successful model for Achieving Knowledge Retention? Ultraschall Med. 2020;41(1):36–43.

Feigin DS, Magid D, Smirniotopoulos JG, Carbognin SJ. Learning and retaining normal radiographic chest anatomy. Does preclinical exposure Improve Student performance? Acad Radiol. 2007;14(9):1137–42.

Doomernik DE, van Goor H, Kooloos JGM, Ten Broek RP. Longitudinal retention of anatomical knowledge in second-year medical students. Anat Sci Educ. 2017;10(3):242–8.

Ropovik I, Adamkovic M, Greger D. Neglect of publication bias compromises meta-analyses of educational research. PLoS ONE. 2021;16(6):e0252415.

RCR. Undergraduate Radiology Curriculum 2022. The Royal College of Radiologists; 2022.

Gadde JA, Ayoob A, Carrico CWT, Falcon S, Magid D, Miller-Thomas MM, Naeger D. AMSER National Medical Student Curriculum. 2020.

Reddy S, Straus CM, McNulty NJ, et al. Development of the AMSER standardized examinations in radiology for medical students. Acad Radiol. 2015;22(1):130–4.

Acknowledgements

The authors would like to thank Ms Zhixin Liu for her valuable recommendations regarding statistical analysis in this review and Ms Jennifer Whitfield for her valuable assistance devising the literature search strategy.

Funding

Not applicable.

Author information

Contributions

SW worked on project design, data collection and analysis, manuscript write up and revision. GM, NT and MM worked on project design, data analysis and manuscript revision. NB worked on project design and data analysis.

Ethics declarations

Ethics approval and consent to participate

Not applicable as this was a systematic review and meta-analysis of published research.

Consent for publication

Not applicable.

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Wade, S.W., Velan, G.M., Tedla, N. et al. What works in radiology education for medical students: a systematic review and meta-analysis. BMC Med Educ 24, 51 (2024). https://doi.org/10.1186/s12909-023-04981-z

DOI: https://doi.org/10.1186/s12909-023-04981-z