Abstract

Background

Although electrocardiography is considered a core learning outcome for medical students, there is currently little curricular guidance for undergraduate ECG training. Owing to the absence of expert consensus on undergraduate ECG teaching, curricular content is subject to individual opinion. The aim of this modified Delphi study was to establish expert consensus amongst content and context experts on an ECG curriculum for medical students.

Methods

The Delphi technique, an established method of obtaining consensus, was used to develop an undergraduate ECG curriculum. Specialists involved in ECG teaching were invited to complete three rounds of online surveys. An undergraduate ECG curriculum was formulated from the topics of ECG instruction for which consensus (i.e. ≥75% agreement) was achieved.

Results

The panellists (n = 131) had a wide range of expertise (42.8% Internal Medicine, 22.9% Cardiology, 16% Family Medicine, 13.7% Emergency Medicine and 4.6% Health Professions Education). Topics that reached consensus for inclusion in the undergraduate ECG curriculum were classified under the technical aspects of performing ECGs, basic ECG analysis, recognition of the normal ECG and of abnormal rhythms and waveforms, and the use of electrocardiography as part of clinical diagnosis. This study emphasises that ECG teaching should be framed within the clinical context. Course conveners should not overload students with complex and voluminous content, but should rather focus on commonly encountered and life-threatening conditions for which accurate diagnosis affects patient outcome. A list of 23 “must know” ECG diagnoses is therefore proposed.

Conclusion

A multidisciplinary expert panel reached consensus on the ECG training priorities for medical students.


Background

The first step in the development of an outcomes-based undergraduate medical curriculum is the performance of a needs assessment to ascertain what junior doctors are expected to know [1, 2]. The results of such a needs assessment serve to inform those involved with curricular design of the core knowledge and skills that medical students need to acquire during their undergraduate training [1]. In the absence of expert consensus, however, curricular content is subject to the opinion of individual lecturers and, therefore, variable between academic institutions [3].

Worldwide, graduating medical trainees lack adequate ECG competence [4,5,6,7], i.e. the ability to accurately analyse and interpret an electrocardiogram (ECG) [8]. Yet, ECG competency is considered an Entrustable Professional Activity (EPA) that medical students need to master prior to graduation [9]. It is important to consolidate ECG knowledge and skills before qualifying, as there is usually little formal training in electrocardiography once medical students graduate [10].

Even though electrocardiography forms part of core undergraduate medical training [11], there is a lack of guidance as to which ECG diagnoses should be taught to medical students [5]. In a recent systematic review, it was found that there was significant variation in topics of undergraduate ECG instruction [12]. This could be explained by the inconsistency in undergraduate ECG curricular recommendations in the literature [9, 13]. Central to the process of addressing the lack of ECG competence is the establishment of a mutually agreed curriculum.

Establishing consensus using the Delphi method

Delphi studies are a recognised method for establishing expert consensus in curricular development [14]. The Delphi technique is an iterative process through which expert opinion is transformed into consensus amongst experts [15]. Experts in the field are invited to complete multiple rounds of questionnaires. These questionnaires are completed anonymously, and the collective results are shared with participants in subsequent rounds [16, 17].

The classical Delphi method starts with a set of open-ended questions (to collect qualitative data) in the first round. Participants’ responses are then summarised and used to create closed-ended questions (to collect quantitative data) for the subsequent rounds [18, 19]. However, multiple studies in health professions education have adopted a modified Delphi technique wherein the first round already starts with closed-ended questions that are carefully selected by the convener through literature reviews and expert consultation [20,21,22]. As the methodology is flexible, a modified Delphi study can still collect input through open-ended questions, by asking participants if they have any additions to the list prepared by the convener [14, 23].

In a Delphi study, quantitative data is collected by means of directed questions, in the form of Likert-type questions, through which participants indicate how strongly they agree or disagree with each statement on the list in the survey of each round [16, 24]. Likert-type questions typically ask, “please select how strongly you agree with the following statement…”. Most studies use five response categories (i.e. “strongly disagree”, “disagree”, “uncertain”, “agree”, “strongly agree”), with a central point (i.e. uncertain) to allow participants to opt out if they are not sure about the statement [3, 15, 22, 25, 26]. Frequencies and mode are appropriate descriptive statistics for the categorical data collected by Likert-type questions [22, 24, 27]. Frequencies indicate the distribution of the data, i.e. the level of agreement for each statement in the survey [28], whereas the mode indicates the central tendency of the data (i.e. the response most commonly selected).
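To make the difference between frequencies and the mode concrete, the sketch below computes both for a single survey statement. It assumes that responses are coded 1 (“strongly disagree”) to 5 (“strongly agree”); the function names and the example responses are hypothetical and are not taken from the study data or from any survey tool.

```python
from collections import Counter

# Assumed numeric coding of the five Likert response categories.
LIKERT_LABELS = {
    1: "strongly disagree",
    2: "disagree",
    3: "uncertain",
    4: "agree",
    5: "strongly agree",
}


def frequencies(responses: list[int]) -> dict[int, float]:
    """Percentage of responses in each Likert category (1-5), including empty ones."""
    counts = Counter(responses)
    n = len(responses)
    return {category: 100 * counts[category] / n for category in LIKERT_LABELS}


def modes(responses: list[int]) -> list[int]:
    """Most frequently selected category, or all tied categories in ascending order."""
    counts = Counter(responses)
    top = max(counts.values())
    return sorted(category for category, k in counts.items() if k == top)


# Invented responses to one statement, for illustration only.
example = [5, 5, 4, 5, 3, 4, 5, 2, 5, 4]
print(frequencies(example))  # {1: 0.0, 2: 10.0, 3: 10.0, 4: 30.0, 5: 50.0}
print(modes(example))        # [5]
```

Returning a list from `modes` is a design choice: a Likert item can have two equally popular responses, and reporting a single mode would hide that tie.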

The level of agreement amongst participants that is considered to represent consensus varies between 51 and 80% in the literature on Delphi studies [14]. Investigators decide a priori on the level of agreement that would be considered as having reached consensus [29]. Although there is no universal value that is used for this purpose, many studies use 75% agreement between experts as the cut-off value to establish consensus in Delphi studies [29]. Surveys are administered through multiple rounds until the predetermined level of consensus for each statement is reached. This usually occurs after the third round of the study [14, 16].

There are no rigid criteria for the selection of participants in a Delphi study, nor for the number of participants who should be recruited [30]. The investigator needs to take great care in selecting potential participants [31]. Participants who are invited to take part in a Delphi study should be content and context experts, so that the results are accurate and reliable [32, 33]. The panel of experts invited to take part should have a keen interest in the subject matter [26]. Also, because of the risk of losing participants between successive rounds, those invited to take part in the study should be willing to take part in a multi-stage surveying process [15].

The aim of this study was to establish consensus on an outcomes-based undergraduate electrocardiography curriculum for medical students amongst specialists who regularly analyse and interpret ECGs in clinical practice (i.e. content experts) and who are involved in ECG training (i.e. context experts).

Methods

This study used a modified Delphi technique to establish consensus on a curriculum for undergraduate ECG training.

Delphi expert panel

Cardiologists, Specialist Physicians, Emergency Physicians, Family Physicians and Medical Education Specialists at the eight medical schools of South Africa were invited to take part in this modified Delphi study. The purpose of the study was explained in the letter of invitation. Once an invitee accepted, an email with a link to the online survey was sent to them. Consent for participation in the study was obtained electronically prior to accessing the online survey in the first round. Invitees were also asked to nominate other colleagues who were responsible for ECG teaching of undergraduate medical students and/or worked closely with junior doctors at the academic institutions or hospitals that are considered intern training sites.

Participants were only included as part of the expert panel if they fulfilled all of the following criteria:

- Participants had to be content experts (i.e. have specialist level knowledge of electrocardiography and/or medical education). Therefore, we included participants if they were either
  - registered as a specialist with the Health Professions Council of South Africa (HPCSA) in Cardiology, Internal Medicine, Emergency Medicine or Family Medicine and practised in an environment that required regular ECG analysis and interpretation (i.e. coronary care unit, medical wards, outpatient department, and/or emergency unit), or
  - a qualified medical doctor with a postgraduate qualification or fellowship in medical education
- Participants required context expertise (i.e. be familiar with the environment in which junior doctors work and/or train in South Africa). We included participants if they were either
  - working in a hospital or clinic where they do ward rounds or review patients with junior doctors (interns, medical officers), or
  - involved in ECG teaching by either giving formal ECG lectures to undergraduate students or reviewing ECGs with junior doctors (interns, medical officers) on ward rounds

In South Africa, medical students undergo six years of undergraduate training before graduating as medical doctors, with the exception of one medical school offering a five-year course. South African undergraduate medical programmes include both pre-clinical and clinical training. Although there is no national or international guideline on which undergraduate ECG training or assessment is based, the eight medical schools in South Africa offer comprehensive undergraduate ECG teaching, as demonstrated in Table 1. Medical students receive formal ECG tuition during pre-clinical (typically second and third year) and clinical training (typically fourth to sixth year) and are exposed to real-life ECG analysis and interpretation during various clinical clerkships. However, each academic institution chooses its own curriculum and appoints lecturers (from various departments) who are available and show an interest in the subject. For the most part, ECG competence is assessed by means of multiple-choice questions (MCQ), objective structured clinical examinations (OSCE) and as part of clinical examinations.

After graduation, South African medical graduates do a two-year internship at an accredited hospital where they practise under supervision. All medical interns rotate through Family Medicine (with dedicated time in Emergency Medicine and Psychiatry), Internal Medicine, Paediatrics, Obstetrics, Orthopaedics, Surgery and Anaesthetics. Although there is little formal ECG training during their internship, they are required to perform and interpret ECGs in most of these rotations. In the third year after graduation, they are required to work independently as community service medical officers in the public sector, often at sites where there is limited supervision. Once they have completed this year of community service, they are registered as independent practitioners, are eligible to work in the public or private sector and may then enrol for specialist training.

Delphi survey development

The investigators carefully selected the ECG diagnoses included on the pre-selected list in the first round by considering the content of undergraduate ECG lectures, suggested and prescribed textbooks for ECG learning [34, 35], and a thorough literature search of topics of undergraduate ECG teaching [4, 6, 7, 9, 13, 36,37,38,39,40,41,42,43,44,45,46] as well as of postgraduate ECG training [47,48,49,50,51].

Delphi survey administration

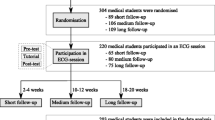

The study comprised three rounds of online surveys that were completed by the participants in the study (Fig. 1). The surveys were administered through REDCap (Research Electronic Data Capture), which is a secure (password protected) online database manager hosted at the University of Cape Town (UCT) [52]. Participants had access to the online surveys through an emailed link specific to the survey of each round and unique to the participant. If, after three weeks, no responses were received, reminder emails were sent to all participants who had not yet completed the online survey by that time.

The first round of the modified Delphi study

In June 2017, a link to the online survey of the first round was sent to all consenting participants. The survey consisted of directed questions and open-ended questions:

- directed questions: participants were asked to reply to a set of 5-point Likert-type questions (Supplementary Table 1) using a pre-selected list of topics of instruction (Supplementary Table 2)
- open-ended questions: participants were given the opportunity to suggest additional ECG diagnoses that were not included in the pre-selected list.

The expert panel continued to nominate other colleagues to also participate in this modified Delphi study throughout the course of the first round. The last of these invitations was sent in May 2018 and the last response to the survey of the first round was received in June 2018.

Analysis of the first round’s results and preparation for the second round

In June 2018, after three weeks without any new responses from participants, the first round was closed. The investigators subsequently analysed the data collected. The following criteria were used to determine consensus for each ECG diagnosis in the survey:

- inclusion in the proposed undergraduate ECG curriculum: ≥ 75% of the expert panel indicated that they agreed, or strongly agreed, that a junior doctor should be able to make the ECG diagnosis. These items were removed from the list used in the next round of the modified Delphi study.
- exclusion from the proposed undergraduate ECG curriculum: ≥ 75% of the expert panel indicated that they disagreed, or strongly disagreed, that a junior doctor should be able to make the ECG diagnosis. These items were removed from the list used in the next round of the modified Delphi study.
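The two criteria above, together with the rule that items meeting neither are carried forward, can be written as a small decision function. This is a minimal sketch assuming responses coded 1 to 5 (5 = “strongly agree”); `classify` and the example responses are hypothetical, and “uncertain” (3) counts towards neither agreement nor disagreement.

```python
AGREE = {4, 5}     # "agree", "strongly agree"
DISAGREE = {1, 2}  # "strongly disagree", "disagree"


def classify(responses: list[int], threshold: float = 75.0) -> str:
    """Apply the inclusion/exclusion rules to one item's Likert responses (1-5)."""
    n = len(responses)
    pct_agree = 100 * sum(r in AGREE for r in responses) / n
    pct_disagree = 100 * sum(r in DISAGREE for r in responses) / n
    if pct_agree >= threshold:
        return "include"        # removed from the next round; added to the curriculum
    if pct_disagree >= threshold:
        return "exclude"        # removed from the next round; left out of the curriculum
    return "carry forward"      # re-surveyed in the next round


print(classify([5, 5, 4, 4, 3]))     # include (80% agree)
print(classify([1, 2, 2, 1, 5]))     # exclude (80% disagree)
print(classify([5, 4, 3, 3, 2, 1]))  # carry forward
```

Because the agreement and disagreement shares are taken over the same respondents and cannot together exceed 100%, a 75% cut-off means no item can satisfy both rules at once.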

The survey in the second round was prepared and consisted of all the items that had not reached consensus, as well as the additional items suggested by the expert panel (Supplementary Table 3).

The second round of the modified Delphi study

In July 2018, a link to the second round’s online survey was sent to all those who participated in the first round of the modified Delphi study. Participants were given collective feedback from the first round. Frequencies of participant responses to each Likert-type question were presented to the participants (Supplementary Table 4) before they completed the Likert-type questions of the second round. After completing all the directed questions (Supplementary Table 1), the expert panel was given the opportunity to comment on the feedback they had seen. The last response to the survey of the second round was received in December 2018.

Analysis of the second round’s results and preparation for the third round

Subsequently, the investigators analysed the data collected from the second round. The same inclusion and exclusion criteria that were used in the first round were applied to the responses to the closed-ended questions. The survey in the third round was prepared and consisted of all the items that did not reach consensus in the second round.
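The round-to-round flow described here, where items resolved in one round are dropped and only unresolved items are re-surveyed, can be sketched as follows. This is an illustrative model under stated assumptions, not the study’s analysis code: `run_delphi`, `share` and the items “Item A” to “Item D” with their responses are hypothetical, responses are assumed to be coded 1 to 5, and newly suggested items (as in the second round of this study) are assumed to join the pool when they are first surveyed.

```python
AGREE, DISAGREE = {4, 5}, {1, 2}


def share(responses: list[int], levels: set[int]) -> float:
    """Percentage of responses falling in the given Likert levels."""
    return 100 * sum(r in levels for r in responses) / len(responses)


def run_delphi(rounds: list[dict[str, list[int]]], threshold: float = 75.0):
    """Return (resolved, unresolved) after applying the rules round by round.

    rounds[k] maps each item surveyed in round k + 1 to its Likert responses.
    resolved maps item -> (decision, round in which consensus was reached).
    """
    unresolved: set[str] = set()
    resolved: dict[str, tuple[str, int]] = {}
    for number, survey in enumerate(rounds, start=1):
        # Newly suggested items join the pool the first time they are surveyed.
        unresolved.update(item for item in survey if item not in resolved)
        for item in sorted(unresolved):
            responses = survey[item]
            if share(responses, AGREE) >= threshold:
                resolved[item] = ("include", number)
            elif share(responses, DISAGREE) >= threshold:
                resolved[item] = ("exclude", number)
        # Items that reached consensus are not carried into the next round.
        unresolved.difference_update(resolved)
    return resolved, unresolved


rounds = [
    {"Item A": [5, 5, 4, 4], "Item B": [4, 3, 3, 5], "Item C": [3, 3, 2, 4]},
    {"Item B": [4, 4, 5, 3], "Item C": [3, 4, 2, 3], "Item D": [2, 1, 1, 2]},
    {"Item C": [4, 3, 3, 2]},
]
resolved, unresolved = run_delphi(rounds)
print(resolved)    # Item A included in round 1; Item B included and Item D excluded in round 2
print(unresolved)  # {'Item C'}
```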

The third round of the modified Delphi study

In May 2019, a link to the online survey of the third round of the modified Delphi study was sent to all those who participated in the first round. Participants were given collective feedback from the second round. Frequencies of participant responses for each Likert-type question were presented to the participants (Supplementary Table 4) before they completed the Likert-type questions of the third round (Supplementary Table 1). The last response to the survey of the third round was received in October 2019.

Analysis of the third round's results

The investigators subsequently analysed the data collected during the third round. From these results, and those of the prior rounds, an undergraduate curriculum was formulated from the topics of ECG instruction for which consensus was established (i.e. ≥ 75% agreement) amongst the expert panel. Thereafter, the mode was calculated for each item in all the rounds to indicate the response most frequently selected by the expert panel. A final list of ECG diagnoses was compiled, including only those ECG diagnoses that had a mode of 5 (i.e. “strongly agree” was the most frequently selected response) and that can only be made by means of an ECG recording.
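A sketch of this final filtering step is shown below. Several details are assumptions because the text does not specify them: each item’s mode is taken from the responses of the round in which consensus was reached, the “can only be made by means of an ECG recording” judgement is stored as a yes/no flag, and an item whose top two responses are tied does not qualify. `ConsensusItem`, `unique_mode`, `must_know` and the example items are hypothetical.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Optional


@dataclass
class ConsensusItem:
    name: str
    responses: list[int]  # Likert responses (1-5); which round's responses is an assumption
    ecg_only: bool        # investigators' judgement: diagnosable only from an ECG recording


def unique_mode(responses: list[int]) -> Optional[int]:
    """Most frequent response, or None if two or more categories tie for first place."""
    ranked = Counter(responses).most_common()
    if len(ranked) > 1 and ranked[0][1] == ranked[1][1]:
        return None
    return ranked[0][0]


def must_know(items: list[ConsensusItem]) -> list[str]:
    """Keep items whose mode is 5 ("strongly agree") and that require an ECG."""
    return [item.name for item in items if unique_mode(item.responses) == 5 and item.ecg_only]


items = [
    ConsensusItem("Item A", [5, 5, 5, 4, 3], ecg_only=True),   # mode 5, ECG-only: kept
    ConsensusItem("Item B", [5, 5, 5, 4, 4], ecg_only=False),  # mode 5, not ECG-only
    ConsensusItem("Item C", [4, 4, 4, 5, 5], ecg_only=True),   # mode 4
    ConsensusItem("Item D", [5, 5, 4, 4, 3], ecg_only=True),   # tied mode
]
print(must_know(items))  # ['Item A']
```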

Qualitative content analysis

Qualitative content analysis was performed by two investigators (CAV, VCB). An inductive approach was used to identify themes and subthemes from the free-text comments made by expert panellists at the end of the second and third rounds of the modified Delphi study [53, 54]. Themes and subthemes were refined through an iterative process of reviewing the panellists’ responses [55]. Disagreement was resolved through discussions with a third investigator (RSM). A deductive approach was used to quantify the frequency with which the themes and subthemes emerged in the feedback from the expert panel [56].
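The deductive, counting step can be pictured as tallying how many coded comments mention each theme. The sketch below assumes that each free-text comment has already been tagged with the agreed theme(s); the theme labels follow the themes reported in the Results, but the codings themselves are invented for illustration and do not reproduce the study’s data.

```python
from collections import Counter

# Hypothetical final codings: one set of agreed themes per free-text comment.
coded_comments = [
    {"issues with curriculum development", "contextualised learning"},
    {"issues with curriculum development"},
    {"knowing when to seek advice"},
    {"recognition of the importance of the work", "issues with curriculum development"},
]

# Count, for each theme, the number of comments in which it appears.
theme_counts = Counter(theme for themes in coded_comments for theme in themes)
for theme, count in theme_counts.most_common():
    print(f"{theme}: {count} of {len(coded_comments)} comments")
```

Storing each comment’s themes as a set means a theme is counted at most once per comment, so the counts reflect how many panellists’ comments raised it rather than how often a word recurs.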

Results

The modified Delphi expert panel

This modified Delphi study had a large expert panel (n = 131), with good retention in the second (80.9%) and third (77.1%) rounds (Fig. 2). Of the 249 specialists who were invited to take part, five declined the invitation and 111 did not respond. Two participants consented to take part but never completed the surveys.
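The panel figures in this paragraph can be reconciled with a few lines of arithmetic. The sketch below confirms that the invitation outcomes add up to the 131 panellists and back-calculates which whole-number round sizes are compatible with the reported retention percentages. The helper `counts_matching` is hypothetical, and the round sizes it returns are inferred from the percentages rather than quoted from the text.

```python
def counts_matching(percent: float, denominator: int, decimals: int = 1) -> list[int]:
    """Return every whole-number count whose share of `denominator` rounds to `percent`."""
    return [
        n
        for n in range(denominator + 1)
        if round(100 * n / denominator, decimals) == percent
    ]


invited = 249
declined, no_response, consented_but_did_not_complete = 5, 111, 2
panel = invited - declined - no_response - consented_but_did_not_complete
print(panel)  # 131

# Round sizes consistent with the reported retention figures (inferred, not reported).
print(counts_matching(80.9, panel))  # [106]
print(counts_matching(77.1, panel))  # [101]
```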

As shown in Table 2, the composition of the expert panel remained stable between the rounds with regard to speciality, years of experience, the settings in which the panellists encountered ECGs in their own practice and where they taught ECGs. The panellists had a wide range of expertise (42.8% Internal Medicine, 22.9% Cardiology, 16% Family Medicine, 13.7% Emergency Medicine and 4.6% Health Professions Education). A third of the expert panel had more than 15 years’ experience as academic physicians. Most of the panellists consulted in the emergency department (70.2%) and in-patient wards (66.4%), and more than half (55.7%) interpreted an ECG at least once a day. About two thirds were affiliated with a university as a lecturer or senior lecturer. Whereas only 15.3% of the panel were responsible for large group teaching of ECGs (i.e. lectures), 91.6% were involved in workplace-based teaching (i.e. teaching ECGs on ward rounds, etc.).

Items that achieved consensus

Of the 53 items on the pre-selected list used in the first round, 46 (86.8%) reached consensus for inclusion in an undergraduate curriculum over the three rounds of the modified Delphi study (Supplementary Table 2). At the end of the first round, the expert panel suggested an additional 76 items for subsequent rounds, of which 34 (44.7%) reached consensus for inclusion in the curriculum by the end of the final round (Supplementary Table 3). None of the topics reached consensus for exclusion. The outcomes of the first, second and third rounds are presented in Supplementary Tables 5, 6 and 7 respectively, indicating overall agreement amongst the expert panellists as well as agreement within each specialty.
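The proportions in this paragraph can be recomputed directly from the stated counts: 46 of 53 is about 86.8% and 34 of 76 is about 44.7%, and the two included groups together give the 80 topics referred to in the Discussion. The variable names below are illustrative only.

```python
preselected, preselected_included = 53, 46
suggested, suggested_included = 76, 34

print(round(100 * preselected_included / preselected, 1))  # 86.8
print(round(100 * suggested_included / suggested, 1))      # 44.7
print(preselected_included + suggested_included)           # 80
```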

As shown in Table 3, there was consensus amongst the panellists that a new graduate should know the indications for performing an ECG (i.e. chest pain, dyspnoea, palpitations, syncope, depressed level of consciousness), and that they should be au fait with the technical aspects of performing and reporting a 12-lead ECG.

There was consensus that medical graduates should be able to perform basic analysis of the ECG (Table 4) and recognise the normal ECG (Table 5). Most panellists strongly agreed that young doctors should be able to diagnose sinus rhythm, sinus arrhythmia, sinus tachycardia and sinus bradycardia. Regarding atrial rhythms, atrial fibrillation and atrial flutter were considered important by most. None of the junctional rhythms reached consensus. The life-threatening ventricular rhythms, i.e. ventricular tachycardia, torsades de pointes and ventricular fibrillation all reached consensus. Conduction abnormalities such as left and right bundle branch block, as well as all the atrioventricular (AV) blocks were considered important. Left and right ventricular hypertrophy reached consensus, as well as transmural (STEMI) and subendocardial ischaemia (NSTEMI). As shown in Table 6, most panellists strongly agreed that medical graduates should be able to recognise ECG features such as AV dissociation and pathological Q waves. Consensus was also reached for the recognition of clinical diagnoses such as pericarditis and electrolyte abnormalities (such as hyperkalaemia) on the ECG. Most panellists strongly agreed that medical graduates should have an approach to regular and irregular, narrow and wide complex tachycardias.

Feedback from participants

Feedback was received in free-text form from 25 and 28 participants at the end of the second and third rounds’ surveys respectively (Supplementary Table 8). Themes that emerged from the inductive analysis were issues with curriculum development, knowing when to seek advice, contextualised learning and a recognition of the importance of the work studied in this modified Delphi study (Table 7).

Issues with curriculum development

An important sub-theme that emerged under curriculum development was the need to prioritise the different topics taught in electrocardiography. Students should be taught “the firm basics and emergencies” to ensure that they are able to diagnose conditions that are life-threatening, or often encountered in clinical practice, once they graduate. Expert panellists cautioned against an undergraduate ECG curriculum that is too difficult (i.e. including ECGs that are too complex for the level of training of young graduates) and also voiced concern about an undergraduate ECG curriculum that is too extensive and covers too much work.

Knowing when to seek advice

Participants advised that students should be encouraged to seek advice from more experienced colleagues when they have diagnostic uncertainty and to be taught how to make use of electronic support, such as smartphone applications (“apps”) as points of reference, in the workplace.

Contextualised learning

It was recommended that ECGs should be taught within a given clinical context. However, with regard to ECG diagnoses, panellists suggested that the focus of an ECG curriculum should be on conditions that can only be diagnosed by an ECG. With regard to workplace teaching, there was concern that students might not encounter all the ECG diagnoses recommended by the Delphi study in the workplace during their training.

Recognition of the importance of this Delphi study

Stakeholder engagement was predominantly positive. Participants were often appreciative of being invited to be part of the expert panel. Occasionally, comments criticised the Delphi process with regard to the composition of the panel and the interval between the rounds of the study. However, it was felt that the results of this study should be disseminated, as they would have a positive impact on undergraduate ECG training.

Final list of “must know” ECG diagnoses

In response to concerns about curriculum overload, we compiled a consolidated list of “must know” diagnoses that can only be made by means of an ECG recording (Table 8).

Discussion

This modified Delphi study was a first attempt to obtain consensus on an ECG curriculum for medical students. The variable training opportunities offered by medical schools and the lack of national and international guidance for an undergraduate ECG curriculum were the rationale for performing this study. Through an iterative process of systematically measuring agreement amongst ECG experts, 80 topics reached consensus for inclusion in undergraduate ECG teaching. These topics included the clinical indications for, and technical aspects of, performing and reporting an ECG, basic ECG analysis (rate, rhythm, interval measurements, QRS axis), recognition of the normal ECG, abnormal ECG rhythms and waveforms, and use of the ECG to make or support a clinical diagnosis. From this list of “should know” topics, it was possible to identify 23 “must know” conditions, which are considered essential ECG knowledge. These 23 conditions should serve as the core of an undergraduate ECG curriculum, because they encompass important life-threatening conditions (such as ischaemia, ventricular arrhythmias, atrial fibrillation and high-degree AV blocks) that can only be diagnosed by means of an ECG, and for which urgent intervention is likely to make a significant difference to outcome.

The validity of the results of any Delphi study depends on the expertise of the panel [16, 26]. Our study had a large expert panel working in a broad range of clinical practice settings. Delphi study literature has cautioned that large expert panels are difficult to manage and offer little improvement in the quality of the results [16, 57]. Indeed, we did encounter delays in obtaining responses from the expert panel. However, there was a high response rate and little attrition between rounds. Moreover, the positive stakeholder engagement by participants endorsed the importance of the study. As the surveys were done online, the study was a cost-effective way of gathering the opinion of experts [15], and it saved the participants the time and expense of face-to-face meetings [25]. Furthermore, anonymous participation and feedback limited the influence of panel members on each other [15].

Over and above the list of topics that should be taught, the responses by participants in this study highlight several important issues regarding ECG curriculum development. The long list of topics that was suggested, over and above the original pre-selected list, illustrates the tendency for curricular overload and the demand for diagnostic expertise beyond the reach of new medical graduates. Overwhelming novices with ECG content that is “too much” and/or “too difficult” paradoxically results in less learning [58]. It is therefore important that course conveners refrain from overloading students.

A theme that emerged strongly from the feedback by the expert panel was the need for prioritisation within a curriculum (Fig. 3). Despite the concerns about curricular overload, 80 topics of ECG instruction achieved expert consensus. These “should know” topics are proposed to guide undergraduate ECG instruction. ECG lecturers and tutors are discouraged from including “nice to know” topics in undergraduate curricula. However, reducing the list of 80 “should know” topics to a list of 23 “must know” conditions allows for a core ECG curriculum that does not overwhelm the student. This condensed list is well aligned with the current recommendation in the literature that ECG teaching should focus on enabling medical graduates to safely diagnose life-threatening conditions, so that emergency management can be implemented promptly [59]. Training that ensures that medical graduates are competent at diagnosing the conditions included in the core ECG curriculum would therefore allow for safe practice. However, in the event of diagnostic uncertainty, graduates should be encouraged to seek assistance from more senior colleagues. Current medical education opinion also increasingly recognises the supportive role of information technology in the process of clinical reasoning and diagnosis [60]. The expert panel’s suggestion that smartphone applications be used to support cognitive diagnostic processes in ECG training is well aligned with this opinion.

It has been suggested that tuition should be geared towards the understanding of vectors [61], and the basics of electrocardiography [62,63,64]. If students are familiar with the features of a normal ECG, they may be more able to identify abnormal rhythms and waveforms by means of analysis and pattern recognition [65,66,67].

The need for clinically contextualised ECG training was reaffirmed by this study. This observation is consistent with previous reports that students and clinicians make more accurate ECG diagnoses when the clinical context is known [38, 68]. While this underscores the importance of learning in the workplace, our modified Delphi study identified ECGs that may not be routinely observed in clinical training settings. The participants therefore expressed concern that undergraduate ECG training must be comprehensive and not driven by opportunistic learning encounters only [69, 70].

Lessons learnt from this modified Delphi study

Although expert consensus on an undergraduate ECG curriculum could be derived from the quantitative data collection, the modified Delphi process also allowed for the collection of qualitative data, which helped to put the results of this study in perspective. The quantitative results should therefore not be appraised in isolation or seen as the final arbiter, but rather be considered along with the important remarks by the expert panellists as highlighted by the qualitative content analysis. The suggestions from the quantitative and qualitative analyses should also be implemented according to local context.

The large expert panel’s enthusiasm to participate in this study highlighted their acceptance of the Delphi technique as an appropriate means of establishing consensus. The low attrition rate (despite the iterative rounds of the Delphi study) testifies to the support for an expert consensus document for undergraduate ECG training.

Study limitations

Although this modified Delphi study was conducted in only one country, it does represent a broad spectrum of opinion amongst a large group of specialists engaged in undergraduate ECG education and is, therefore, worthy of consideration in the international community. The proposed list of 23 “must know” conditions, consisting of life-threatening and commonly encountered conditions, is applicable to medical school training in any part of the world, including those where specialist training commences straight after undergraduate studies. The list encompasses conditions that are commonly encountered by clinicians, not limited to those who only work in Cardiology or Internal Medicine. For example, a septic and dehydrated patient awaiting bowel surgery is at high risk of developing atrial fibrillation; or a femoral neck fracture might be the result of a syncopal event associated with third degree AV block.

A limitation to this Delphi study is the absence of Anaesthetists and Paediatricians on the expert panel for devising this undergraduate ECG curriculum. These groups of clinicians, and potentially others, should be involved in future Delphi studies for the development of ECG curricula tailored to their practice.

This modified Delphi study established consensus for a list of conditions that we propose for the tuition of medical students. These topics are apt for pre-clinical and clinical phases of training of medical students. However, this study did not aim to achieve consensus on the teaching modalities that should be used for ECG instruction or assessment of ECG competence.

Conclusion

We have identified undergraduate ECG teaching priorities by means of a modified Delphi study with an expert panel that consisted of specialists with a wide range of expertise. Instead of teaching long lists and complex conditions, we propose focusing on the basics of electrocardiography, life-threatening arrhythmias and waveforms, as well as conditions commonly encountered in daily practice.

Glossary terms

- ‘ECG analysis’ refers to the detailed examination of the ECG tracing, which requires the measurement of intervals and the evaluation of the rhythm and each waveform [8].
- ‘ECG competence’ refers to the ability to accurately analyse as well as interpret the ECG [8].
- ‘ECG interpretation’ refers to the conclusion reached after careful ECG analysis, i.e. making a diagnosis of an arrhythmia, ischaemia, etc. [61].
- ‘ECG knowledge’ refers to the understanding of ECG concepts, e.g. knowing that transmural ischaemia or pericarditis can cause ST-segment elevation [71, 72].
- An ‘Entrustable Professional Activity’ (EPA) is a task of everyday clinical practice that could be delegated to a medical school graduate as soon as they can perform the task competently and unsupervised [73, 74].

Availability of data and materials

The datasets used and/or analysed during the current study are available in the “Determining electrocardiography training priorities for medical students using a modified Delphi method” repository, which can be accessed at https://doi.org/10.25375/uct.12412724.

Abbreviations

- AF: Atrial fibrillation
- AV: Atrioventricular
- AVJRT: Atrioventricular junctional re-entrant tachycardia
- AVNRT: Atrioventricular nodal re-entrant tachycardia
- AVRT: Atrioventricular re-entrant tachycardia
- ECG: Electrocardiogram
- EPA: Entrustable Professional Activity
- HPCSA: Health Professions Council of South Africa
- HREC: Human Research Ethics Committee
- LAFB: Left anterior fascicular block
- LBBB: Left bundle branch block
- LVH: Left ventricular hypertrophy
- MCQ: Multiple-choice question
- MMVT: Monomorphic ventricular tachycardia
- NSTEMI: Non-ST-segment Elevation Myocardial Infarction
- OSCE: Objective structured clinical examination
- PAC: Premature atrial complex
- PJC: Premature junctional complex
- PMVT: Polymorphic ventricular tachycardia
- PVC: Premature ventricular complex
- RBBB: Right bundle branch block
- RVH: Right ventricular hypertrophy
- SA: Sino-atrial
- SD: Standard deviation
- STEMI: ST-segment Elevation Myocardial Infarction
- SVT: Supraventricular tachycardia
- TCA: Tricyclic antidepressant
- TdP: Torsades de Pointes
- UCT: University of Cape Town
- VF: Ventricular fibrillation
- WPW: Wolff-Parkinson-White

References

Gonsalves CL, Ajjawi R, Rodger M, Varpio L. A novel approach to needs assessment in curriculum development: going beyond consensus methods. Med Teach. 2014;36(5):422–9.

Prideaux D. ABC of learning and teaching in medicine. Curriculum design. BMJ. 2003;326(7383):268–70.

Johnston LM, Wiedmann M, Orta-Ramirez A, Oliver HF, Nightingale KK, Moore CM, et al. Identification of Core competencies for an undergraduate food safety curriculum using a modified Delphi approach. J Food Sci Educ. 2014;13(1):12–21.

Kopeć G, Magoń W, Hołda M, Podolec P. Competency in ECG interpretation among medical students. Med Sci Monit. 2015;21:3386–94.

Jablonover RS, Lundberg E, Zhang Y, Stagnaro-Green A. Competency in electrocardiogram interpretation among graduating medical students. Teach Learn Med. 2014;26(3):279–84.

McAloon C, Leach H, Gill S, Aluwalia A, Trevelyan J. Improving ECG competence in medical trainees in a UK district general hospital. Cardiol Res. 2014;5(2):51–7.

Lever NA, Larsen PD, Dawes M, Wong A, Harding SA. Are our medical graduates in New Zealand safe and accurate in ECG interpretation? N Z Med J. 2009;122(1292):9–15.

Viljoen CA, Scott Millar R, Engel ME, Shelton M, Burch V. Is computer-assisted instruction more effective than other educational methods in achieving ECG competence among medical students and residents? Protocol for a systematic review and meta-analysis. BMJ Open. 2017;7(12):e018811.

Jablonover RS, Stagnaro-Green A. ECG as an entrustable professional activity: CDIM survey results, ECG teaching and assessment in the third year. Am J Med. 2016;129(2):226–30.e1.

de Jager J, Wallis L, Maritz D. ECG interpretation skills of south African emergency medicine residents. Int J Emerg Med. 2010;3(4):309–14.

O'Brien KE, Cannarozzi ML, Torre DM, Mechaber AJ, Durning SJ. Training and assessment of ECG interpretation skills: results from the 2005 CDIM survey. Teach Learn Med. 2009;21(2):111–5.

Viljoen CA, Scott Millar R, Engel ME, Shelton M, Burch V. Is computer-assisted instruction more effective than other educational methods in achieving ECG competence amongst medical students and residents? A systematic review and meta-analysis. BMJ Open. 2019;9(11):e028800.

Keller D, Zakowski L. An effective ECG curriculum for third-year medical students in a community-based clerkship. Med Teach. 2000;22(4):354–8.

Hasson F, Keeney S, McKenna H. Research guidelines for the Delphi survey technique. J Adv Nurs. 2000;32(4):1008–15.

Rohan D, Ahern S, Walsh K. Defining an anaesthetic curriculum for medical undergraduates. A Delphi study. Med Teach. 2009;31(1):e1–5.

de Villiers MR, de Villiers PJ, Kent AP. The Delphi technique in health sciences education research. Med Teach. 2005;27(7):639–43.

Rowe M, Frantz J, Bozalek V. Beyond knowledge and skills: the use of a Delphi study to develop a technology-mediated teaching strategy. BMC Med Educ. 2013;13:51.

Hasson F, Keeney S. Enhancing rigour in the Delphi technique research. Technol Forecast Soc Chang. 2011;78(9):1695–704.

Skulmoski GJ, Hartman FT, Krahn J. The Delphi method for graduate research. J Inform Technol Educ. 2007;6(1):1–21.

Nayyar B, Yasmeen R, Khan RA. Using language of entrustable professional activities to define learning objectives of radiology clerkship: a modified Delphi study. Med Teach. 2019:1–8.

Nayahangan LJ, Stefanidis D, Kern DE, Konge L. How to identify and prioritize procedures suitable for simulation-based training: experiences from general needs assessments using a modified Delphi method and a needs assessment formula. Med Teach. 2018;40(7):676–83.

Clayton R, Perera R, Burge S. Defining the dermatological content of the undergraduate medical curriculum: a modified Delphi study. Br J Dermatol. 2006;155(1):137–44.

Ogden SR, Culp WC Jr, Villamaria FJ, Ball TR. Developing a checklist: consensus via a modified Delphi technique. J Cardiothorac Vasc Anesth. 2016;30(4):855–8.

Boone HN Jr, Boone DA. Analyzing Likert data. J Extension. 2012;50(2):1–5.

Ruetschi U, Olarte Salazar CM. An e-Delphi study generates expert consensus on the trends in future continuing medical education engagement by resident, practicing, and expert surgeons. Med Teach. 2019:1–7.

Clayton MJ. Delphi: a technique to harness expert opinion for critical decision-making tasks in education. Educ Psychol. 1997;17(4):373–86.

von der Gracht HA. Consensus measurement in Delphi studies. Technol Forecast Soc Chang. 2012;79(8):1525–36.

Holey EA, Feeley JL, Dixon J, Whittaker VJ. An exploration of the use of simple statistics to measure consensus and stability in Delphi studies. BMC Med Res Methodol. 2007;7:52.

Diamond IR, Grant RC, Feldman BM, Pencharz PB, Ling SC, Moore AM, et al. Defining consensus: a systematic review recommends methodologic criteria for reporting of Delphi studies. J Clin Epidemiol. 2014;67(4):401–9.

Hordijk R, Hendrickx K, Lanting K, MacFarlane A, Muntinga M, Suurmond J. Defining a framework for medical teachers' competencies to teach ethnic and cultural diversity: results of a European Delphi study. Med Teach. 2019;41(1):68–74.

Keeney S, Hasson F, McKenna H. Consulting the oracle: ten lessons from using the Delphi technique in nursing research. J Adv Nurs. 2006;53(2):205–12.

Carley S, Shacklady J, Driscoll P, Kilroy D, Davis M. Exposure or expert? Setting standards for postgraduate education through a Delphi technique. Emerg Med J. 2006;23(9):672–4.

Hsu C-C, Sandford BA. The Delphi technique: making sense of consensus. Pract Assess Res Eval. 2007;12(10):1–8.

Houghton A, Gray D. Making sense of the ECG: a hands-on guide. CRC Press; 2014.

Hampton J, Hampton J. The ECG made easy. E-book. Elsevier Health Sciences; 2019.

Little B, Mainie I, Ho KJ, Scott L. Electrocardiogram and rhythm strip interpretation by final year medical students. Ulster Med J. 2001;70(2):108–10.

Fent G, Gosai J, Purva M. A randomized control trial comparing use of a novel electrocardiogram simulator with traditional teaching in the acquisition of electrocardiogram interpretation skill. J Electrocardiol. 2016;49(2):112–6.

Hatala R, Norman GR, Brooks LR. Impact of a clinical scenario on accuracy of electrocardiogram interpretation. J Gen Intern Med. 1999;14(2):126–9.

Kingston ME. Electrocardiograph course. J Med Educ. 1979;54(2):107–10.

Lessard Y, Sinteff JP, Siregar P, Julen N, Hannouche F, Rio S, et al. An ECG analysis interactive training system for understanding arrhythmias. Stud Health Technol Inform. 2009;150:931–5.

Montassier E, Hardouin JB, Segard J, Batard E, Potel G, Planchon B, et al. E-learning versus lecture-based courses in ECG interpretation for undergraduate medical students: a randomized noninferiority study. Eur J Emerg Med. 2016;23(2):108–13.

Raupach T, Harendza S, Anders S, Schuelper N, Brown J. How can we improve teaching of ECG interpretation skills? Findings from a prospective randomised trial. J Electrocardiol. 2016;49(1):7–12.

Rolskov Bojsen S, Rader SB, Holst AG, Kayser L, Ringsted C, Hastrup Svendsen J, et al. The acquisition and retention of ECG interpretation skills after a standardized web-based ECG tutorial: a randomised study. BMC Med Educ. 2015;15:36.

Rubinstein J, Dhoble A, Ferenchick G. Puzzle based teaching versus traditional instruction in electrocardiogram interpretation for medical students: a pilot study. BMC Med Educ. 2009;9:4.

Rui Z, Lian-Rui X, Rong-Zheng Y, Jing Z, Xue-Hong W, Chuan Z. Friend or foe? Flipped classroom for undergraduate electrocardiogram learning: a randomized controlled study. BMC Med Educ. 2017;17(1):53.

Zeng R, Yue RZ, Tan CY, Wang Q, Kuang P, Tian PW, et al. New ideas for teaching electrocardiogram interpretation and improving classroom teaching content. Adv Med Educ Pract. 2015;6:99–104.

Balady GJ, Bufalino VJ, Gulati M, Kuvin JT, Mendes LA, Schuller JL. COCATS 4 task force 3: training in electrocardiography, ambulatory electrocardiography, and exercise testing. J Am Coll Cardiol. 2015;65(17):1763–77.

Kadish AH, Buxton AE, Kennedy HL, Knight BP, Mason JW, Schuger CD, et al. ACC/AHA clinical competence statement on electrocardiography and ambulatory electrocardiography: a report of the ACC/AHA/ACP-ASIM task force on clinical competence (ACC/AHA Committee to develop a clinical competence statement on electrocardiography and ambulatory electrocardiography) endorsed by the International Society for Holter and noninvasive electrocardiology. Circulation. 2001;104(25):3169–78.

Auseon AJ, Schaal SF, Kolibash AJ Jr, Nagel R, Lucey CR, Lewis RP. Methods of teaching and evaluating electrocardiogram interpretation skills among cardiology fellowship programs in the United States. J Electrocardiol. 2009;42(4):339–44.

Fisch C. Clinical competence in electrocardiography. A statement for physicians from the ACP/ACC/AHA task force on clinical privileges in cardiology. Circulation. 1995;91(10):2683–6.

Myerburg RJ, Chaitman BR, Ewy GA, Lauer MS. Task force 2: training in electrocardiography, ambulatory electrocardiography, and exercise testing. J Am Coll Cardiol. 2008;51(3):348–54.

Harris PA, Taylor R, Thielke R, Payne J, Gonzalez N, Conde JG. Research electronic data capture (REDCap): a metadata-driven methodology and workflow process for providing translational research informatics support. J Biomed Inform. 2009;42(2):377–81.

Hashemnezhad H. Qualitative content analysis research: a review article. J ELT Appl Linguistics. 2015;3(1):54–62.

Tavakol M, Sandars J. Quantitative and qualitative methods in medical education research: AMEE guide no 90: part I. Med Teach. 2014;36(9):746–56.

Tavakol M, Sandars J. Quantitative and qualitative methods in medical education research: AMEE guide no 90: part II. Med Teach. 2014;36(10):838–48.

Walling A, Istas K, Bonaminio GA, Paolo AM, Fontes JD, Davis N, et al. Medical student perspectives of active learning: a focus group study. Teach Learn Med. 2017;29(2):173–80.

Powell C. The Delphi technique: myths and realities. J Adv Nurs. 2003;41(4):376–82.

van den Berge K, van Gog T, Mamede S, Schmidt HG, van Saase JLCM, Rikers RMJP. Acquisition of visual perceptual skills from worked examples: learning to interpret electrocardiograms (ECGs). Interact Learn Environ. 2013;21(3):263–72.

Varvaroussis DP, Kalafati M, Pliatsika P, Castren M, Lott C, Xanthos T. Comparison of two teaching methods for cardiac arrhythmia interpretation among nursing students. Resuscitation. 2014;85(2):260–5.

Wartman SA, Combs CD. Medical education must move from the information age to the age of artificial intelligence. Acad Med. 2018;93(8):1107–9.

Hurst JW. Methods used to interpret the 12-lead electrocardiogram: pattern memorization versus the use of vector concepts. Clin Cardiol. 2000;23(1):4–13.

Kashou A, May A, DeSimone C, Noseworthy P. The essential skill of ECG interpretation: how do we define and improve competency? Postgrad Med J. 2019.

Okreglicki A, Scott MR. ECG: PQRST morphology – clues and tips. A guide to practical pattern recognition. SA Heart J. 2006;3:27–36.

Larson CO, Bezuidenhout J, van der Merwe LJ. Is community-based electrocardiography education feasible in the early phase of an undergraduate medical curriculum? Health SA Gesondheid. 2017;22:61–9.

Eva KW, Hatala RM, Leblanc VR, Brooks LR. Teaching from the clinical reasoning literature: combined reasoning strategies help novice diagnosticians overcome misleading information. Med Educ. 2007;41(12):1152–8.

Simpson SA, Gilhooly KJ. Diagnostic thinking processes: evidence from a constructive interaction study of electrocardiogram (ECG) interpretation. Appl Cognit Psychol. 1997;11:543–54.

Ark TK, Brooks LR, Eva KW. Giving learners the best of both worlds: do clinical teachers need to guard against teaching pattern recognition to novices? Acad Med. 2006;81(4):405–9.

Grum CM, Gruppen LD, Woolliscroft JO. The influence of vignettes on EKG interpretation by third-year students. Acad Med. 1993;68(10 Suppl):S61–3.

Hirsh DA, Ogur B, Thibault GE, Cox M. “Continuity” as an organizing principle for clinical education reform. N Engl J Med. 2007;356(8):858–66.

Johnston BT, Valori R. Teaching and learning on the ward round. Frontline Gastroenterol. 2012;3(2):112–4.

Burke JF, Gnall E, Umrudden Z, Kyaw M, Schick PK. Critical analysis of a computer-assisted tutorial on ECG interpretation and its ability to determine competency. Med Teach. 2008;30(2):e41–8.

Kopeć G, Magoń W, Hołda M, Podolec P. Competency in ECG interpretation among medical students. Med Sci Monit. 2015;21:3386–94.

Ten Cate O, Chen HC, Hoff RG, Peters H, Bok H, van der Schaaf M. Curriculum development for the workplace using Entrustable professional activities (EPAs): AMEE guide no. 99. Med Teach. 2015;37(11):983–1002.

Ten Cate O. A primer on entrustable professional activities. Korean J Med Educ. 2018;30(1):1–10.

Acknowledgements

The authors wish to thank Dr. Heleen Emmer at Groote Schuur Hospital, Ms. Sylvia Dennis and Dr. Julian Hövelmann from the Hatter Institute for Cardiovascular Research in Africa (HICRA) for their very valuable input and assistance, as well as Ms. Annemie Stewart and Ms. Chedwin Grey at the Clinical Research Centre (CRC), Faculty of Health Sciences, University of Cape Town for their support with REDCap. We are grateful to Ms. Elani Muller and Dr. Heike Geduld for their advice on conducting the modified Delphi study, Mr. Charle Viljoen for analytical support and the medical interns at Groote Schuur Hospital for their willingness to provide insight into their undergraduate ECG training. We would like to extend special thanks to the expert panellists for the time they took to complete the iterative online surveys and for their valuable input throughout the study.

Funding

RSM is a lecturer and host of the AO Memorial Advanced ECG and Arrhythmia Course and receives an honorarium from Medtronic Africa. The authors did not receive a specific grant for this research from any funding agency in the public, commercial or not-for-profit sectors.

Author information


Contributions

CAV conceived the study protocol, with advice from RSM and VCB regarding study design. CAV collected the data. CAV performed the statistical analysis under the guidance of KM. CAV, RSM and VCB interpreted the results. CAV drafted the manuscript, which was critically revised by RSM, KM and VCB. All authors have read and approved the manuscript.

Authors’ information

CAV, MBChB MMed FCP(SA), is a fellow in Cardiology at Groote Schuur Hospital and a PhD student at the University of Cape Town.

RSM, MBChB FCP(SA), is an emeritus associate professor at the University of Cape Town. He continues to play a key role in postgraduate ECG teaching in South Africa.

KM, BS PG Dip (Diet) MSc (Med), is a statistical analyst at the University of Cape Town.

VCB, MBChB FCP(SA) MMed PhD, is an honorary professor at the University of Cape Town. She has a leading position in curricular design for postgraduate teaching in South Africa.


Ethics declarations

Ethics approval and consent to participate

Approval was obtained from the Human Research Ethics Committee (HREC) at the Faculty of Health Sciences (HREC reference number 680/2016), as well as institutional permission from Groote Schuur Hospital and the Human Resources Department at the University of Cape Town. All participants provided consent prior to participation in the study.

Consent for publication

All study participants provided informed consent for the anonymised analysis of their responses in the three rounds of the modified Delphi study and a possible scientific publication of the results.

Competing interests

The authors report no conflicts of interest. The authors alone are responsible for the content and writing of the article.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Additional file 1:

Supplementary Table 1. Example of a Likert-type question in the first round.

Additional file 2:

Supplementary Table 2. The pre-selected list that was used in the first round was based on the undergraduate ECG curriculum at UCT and prescribed textbooks. This list consisted of 53 items, of which 46 items (87.0%) reached consensus amongst the panellists during the course of three rounds of the modified Delphi study.

Additional file 3:

Supplementary Table 3. At the end of the first round, the expert panel suggested an additional 76 items to be included in the subsequent rounds of the modified Delphi study, of which 34 (44.7%) reached consensus by the end of the third round.

Additional file 4:

Supplementary Table 4. Example of the feedback of the first round given in the second round.

Additional file 5:

Supplementary Table 5. First round results.

Additional file 6:

Supplementary Table 6. Second round results.

Additional file 7:

Supplementary Table 7. Third round results.

Additional file 8:

Supplementary Table 8. Participant feedback classified according to themes and subthemes.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Viljoen, C.A., Millar, R.S., Manning, K. et al. Determining electrocardiography training priorities for medical students using a modified Delphi method. BMC Med Educ 20, 431 (2020). https://doi.org/10.1186/s12909-020-02354-4


DOI: https://doi.org/10.1186/s12909-020-02354-4