Abstract

Background

It is difficult for orthodontists to accurately predict the growth trend of the mandible in children with anterior crossbite. This study aims to develop a deep learning model that uses cephalometric radiographs to automatically classify the mandibular growth outcome as normal or overdeveloped.

Methods

A deep convolutional neural network (CNN) model based on the ResNet50 architecture was constructed and trained on 256 cephalometric radiographs. Its predictions were tested on 40 cephalograms and visualized with Grad-CAM. The prediction performance of the CNN model was compared with that of three junior orthodontists.

Results

The deep learning model achieved a prediction accuracy of 85%, much higher than the 54.2% achieved by the junior orthodontists. The sensitivity and specificity of the model were 0.95 and 0.75, respectively, higher than those of the junior orthodontists (0.62 and 0.47, respectively). The area under the curve (AUC) of the deep learning model was 0.9775. Visual inspection showed that the model mainly focused on the chin, the lower edge of the mandible, the incisors, the airway and the condyle when making its predictions.

Conclusions

The deep learning CNN model could predict the growth trend of the mandible in children with anterior crossbite from cephalometric images with relatively high accuracy. The model made its predictions mainly by identifying characteristics of the chin, the lower edge of the mandible, the incisor area, the airway and the condyle in cephalometric images.

Background

Class III malocclusion is a frequently observed clinical problem, mostly manifested as anterior crossbite, and occurs in 4–14% of East Asian populations [1]. Anterior crossbite not only impairs occlusal function but also disturbs the balance of the facial profile and increases the child's social and psychological burden [2]. Among the components of Class III malocclusion, the combination of an underdeveloped maxilla and an overdeveloped mandible was the most common (about one third of cases), whereas a normal maxilla with an overdeveloped mandible accounted for about one fifth [3]. Children whose Class III malocclusion results from excessive growth of the mandible are extremely difficult to treat. Prediction of the future growth trend of the mandible therefore strongly influences the choice of treatment, and inaccurate prediction may lead to insufficient or unnecessary treatment.

In practice, it is difficult for orthodontists to accurately predict the remaining growth of the mandible. Similar anterior crossbites in the mixed dentition may develop into malocclusions with different skeletal patterns in the permanent dentition [4]. Therefore, accurate early prediction of future mandibular growth would be very useful for treatment planning and prognosis.

Various mathematical methods have been proposed over the past few decades to predict the growth of the mandible. Sato et al. [5] established a linear equation to predict mandibular growth potential from bone age assessed on hand-wrist radiographs. This method was not widely adopted because the additional hand-wrist radiograph exposes children to an unnecessary radiation dose. Later, Mito et al. [6] developed a formula that predicts mandibular growth potential by relating the actual growth of the mandible (condyle-gnathion) to cervical vertebral bone age evaluated on cephalometric radiographs. However, this method was not suitable for individual prediction because the formula was derived from pooled data of children aged 7–13 years, spanning different stages of the growth period. Moshfeghi et al. [7] derived a regression equation to predict mandibular length (articulare-pogonion) from the morphological changes of the cervical vertebrae on lateral cephalograms. More recently, Franchi et al. [8] developed a mixed-effects model of mandibular growth and showed that cervical stage, chronological age and gender were significant predictors of annualized increments in mandibular growth.

Among these studies, regression analysis is the most frequently used method for predicting mandibular growth potential. Regression equations are used to identify and verify factors that may reflect the growth potential of the mandible [9]. However, because a regression equation models the outcome as a linear combination of covariates, it may be too simplistic to capture the complex growth outcome of the mandible.
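To make this limitation concrete, the sketch below fits the kind of model these studies rely on: an ordinary least-squares regression in which the predicted growth increment is a fixed linear combination of covariates. It is a minimal, standard-library illustration under stated assumptions; the covariates (cervical stage, age) and the noise-free example values are hypothetical and are not taken from the cited studies.

```python
"""Minimal OLS sketch: growth increment as a linear combination of covariates.

Hypothetical illustration only; covariates and values are not from any cited study.
"""
from typing import List, Sequence


def solve_linear_system(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve the square system a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    # Augmented matrix so that row operations act on a and b together.
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        # Partial pivoting: bring the row with the largest |value| in this column up.
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        if abs(m[col][col]) < 1e-12:
            raise ValueError("singular system: covariates are collinear")
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    # Back substitution on the upper-triangular system.
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x


def fit_ols(rows: Sequence[Sequence[float]], y: Sequence[float]) -> List[float]:
    """Return [intercept, beta_1, ..., beta_p] minimising the squared error.

    Solves the normal equations (X^T X) beta = X^T y, where X has a leading
    column of ones for the intercept.
    """
    x = [[1.0, *map(float, r)] for r in rows]
    p = len(x[0])
    xtx = [[sum(row[i] * row[j] for row in x) for j in range(p)] for i in range(p)]
    xty = [sum(row[i] * yi for row, yi in zip(x, y)) for i in range(p)]
    return solve_linear_system(xtx, xty)


def predict(beta: Sequence[float], covariates: Sequence[float]) -> float:
    """Linear predictor: intercept plus a weighted sum of the covariates."""
    return beta[0] + sum(b * v for b, v in zip(beta[1:], covariates))
```

Whatever the data, the fitted model can only express an additive, straight-line dependence on each covariate, which is the simplification the paragraph above points to.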

Deep learning is a major breakthrough in the machine learning field and has shown great application potential in medicine in recent years. Deep learning models have achieved high accuracy in medical image classification by learning automatically from datasets manually annotated by clinical experts [10, 11]. Among deep learning models, the convolutional neural network (CNN) has attracted the most attention and has been widely studied because of its strong performance in detection tasks on medical images [12]. CNN models automatically learn to extract characteristic features and structures from training images and then classify and make predictions for new images.
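As an illustration of what a single convolutional layer computes, the following minimal sketch slides a small kernel over an image patch and applies a ReLU, producing a feature map that responds to a vertical intensity edge. The kernel is hand-set here for clarity; in a trained CNN the kernel weights are learned from the data and many such kernels are stacked in depth. The function names are illustrative and not taken from any library.

```python
from typing import List

Matrix = List[List[float]]


def conv2d_valid(image: Matrix, kernel: Matrix) -> Matrix:
    """'Valid' 2D cross-correlation, the operation a CNN convolution layer computes.

    The kernel slides over the image without padding, so the output shrinks by
    (kernel size - 1) in each dimension.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + u][j + v] * kernel[u][v] for u in range(kh) for v in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def relu(fmap: Matrix) -> Matrix:
    """Element-wise rectified linear unit: keep positive responses, zero the rest."""
    return [[max(0.0, x) for x in row] for row in fmap]


# A dark-to-bright vertical boundary and a hand-set vertical-edge kernel.
patch = [[0, 0, 1, 1]] * 4
edge_kernel = [[-1, 1], [-1, 1]]
feature_map = relu(conv2d_valid(patch, edge_kernel))  # peaks in the middle column
```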

In the past few years, deep learning techniques have been successfully applied in dentistry with significant achievements. Deep learning models have shown a reliable ability to identify and classify several types of dental images, even surpassing human experts [13, 14]. Kim et al. [15] used a deep CNN model to automatically classify skeletal malocclusions into three classes from 3D cone-beam computerized tomography (CBCT) craniofacial images and achieved an accuracy of over 93%. Yu et al. [16] constructed a multimodal CNN model that provided vertical and sagittal skeletal diagnosis from cephalometric radiographs with a classification accuracy of 96.4%. CNN models have thus shown great potential for the analysis of orthodontic X-ray images, including CBCT scans and cephalograms. However, to our knowledge, CNN-based prediction of the growth potential of the mandible has not yet been reported.

In this study, we aimed to train a deep learning CNN model to automatically classify the mandibular growth outcome of children with anterior crossbite into two types, normal and overdeveloped, using cephalometric radiographs. We also aimed to reveal the critical regions in the cephalometric images on which the model based its classification.

Methods

Patients inclusion

This retrospective study was approved by the Research Ethics Committee of Sir Run Run Shaw Hospital, Zhejiang University School of Medicine (No. 20210729-122). In this study, 512 patients who visited our Orthodontic Center between January 2010 and December 2016 with a chief complaint of anterior crossbite were screened for eligibility.

The inclusion criteria were as follows: (1) anterior crossbite; (2) Class III or Class I molar relationship; (3) ANB < 0°; (4) the mandible could not be functionally retruded to an edge-to-edge incisor position; (5) age 8–14 years; (6) availability of good-quality lateral cephalograms taken before treatment (T1) and after 18 years of age (T2).

The exclusion criteria were as follows: (1) maxillary retrusion; (2) anterior crossbite caused by misaligned teeth; (3) congenital deformity such as cleft lip and palate, or a history of infection or trauma.

A total of 296 patients were included in this study (142 males and 154 females; age range 8.08–13.92 years; mean age 10.8 years).

Cephalometric analysis and skeletal classification

All T1 and T2 cephalometric radiographs were imported into Dolphin software (Version 11.9, Dolphin Digital Imaging, Chatsworth, Calif, USA). Two orthodontic experts traced the anatomic contours and located the cephalometric landmarks together. Any disagreement about a landmark location was resolved by retracing the anatomic contours until both experts agreed on the point.

The cephalometric measurements used to evaluate mandibular growth included SNB, ANB, Wits appraisal, FMA (mandibular plane to FH), SNPog (facial plane to SN), NSGn (Y-axis), NSAr (sella angle), ArGoMe (gonial angle), Ar-Gn (effective mandibular length), Co-Gn (total mandibular length), Go-Gn (mandibular body length) and Co-Go (mandibular ramus length).

According to Kerr et al. [17], anterior crossbite with a prognathic mandible is a skeletal malocclusion that may require orthognathic surgery, whereas anterior crossbite with a normal mandible can be treated orthodontically. Based on the cephalometric analysis at T2, a subject with SNB > 86°, ANB < −2° and a Wits value < −2.0 mm [17] was classified as having an overdeveloped mandible and assigned to Group A; all other subjects were assigned to Group B (normal mandible). In total, 102 patients (49 males and 53 females; age range 8.08–13.92 years; mean age 11.5 years) were assigned to Group A and 194 patients (93 males and 101 females; age range 8.08–13.83 years; mean age 10.4 years) to Group B.
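The Group A criterion can be written as a simple rule. This is a minimal sketch, assuming all three conditions must hold (as the text states) and using the strict inequalities exactly as written; the function name `assign_group` is hypothetical.

```python
def assign_group(snb_deg: float, anb_deg: float, wits_mm: float) -> str:
    """Label a T2 cephalogram: 'A' = overdeveloped mandible, 'B' = normal mandible.

    All three thresholds must be met (SNB > 86 deg, ANB < -2 deg, Wits < -2.0 mm);
    failing any one of them places the subject in Group B.
    """
    overdeveloped = snb_deg > 86.0 and anb_deg < -2.0 and wits_mm < -2.0
    return "A" if overdeveloped else "B"
```

Because the inequalities are strict, a subject exactly at a threshold (for example, SNB = 86°) falls into Group B.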

Datasets build and annotation

The T1 lateral cephalometric images of the 296 subjects were collected to train and test the deep learning-based CNN model. Of these, 256 cephalograms (82 from Group A and 174 from Group B) were randomly selected as the training dataset, and tenfold cross-validation was applied during training to select the best model parameters. The remaining 40 cephalograms (20 from Group A and 20 from Group B) formed the testing dataset used to evaluate the performance of the model.
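The paper does not state whether the tenfold cross-validation folds were stratified by group. The sketch below assumes they were, so that each fold keeps roughly the 82:174 ratio of the training set; the function name and the round-robin dealing scheme are illustrative assumptions, not the authors' code (scikit-learn's `StratifiedKFold` provides comparable behavior).

```python
import random
from typing import Dict, List, Sequence, Tuple


def stratified_kfold(
    labels: Sequence[str], k: int = 10, seed: int = 0
) -> List[Tuple[List[int], List[int]]]:
    """Split sample indices into k folds that preserve the class proportions.

    Returns one (train_indices, validation_indices) pair per fold; every index
    appears in exactly one validation fold.
    """
    rng = random.Random(seed)
    by_class: Dict[str, List[int]] = {}
    for idx, label in enumerate(labels):
        by_class.setdefault(label, []).append(idx)
    folds: List[List[int]] = [[] for _ in range(k)]
    offset = 0
    for label in sorted(by_class):
        idxs = by_class[label][:]
        rng.shuffle(idxs)
        # Deal indices round-robin so each fold gets a near-equal share of this class.
        for i, idx in enumerate(idxs):
            folds[(offset + i) % k].append(idx)
        # Continue dealing where the previous class stopped, to balance fold sizes.
        offset += len(idxs)
    splits = []
    for f in range(k):
        val = sorted(folds[f])
        val_set = set(val)
        train = [i for i in range(len(labels)) if i not in val_set]
        splits.append((train, val))
    return splits


training_labels = ["A"] * 82 + ["B"] * 174
splits = stratified_kfold(training_labels, k=10, seed=42)
```

With 82 Group A and 174 Group B training images, each validation fold receives 25 or 26 images, of which 8 or 9 are from Group A.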

The cephalometric images were cropped to square regions before model generation. Because redundant information in the cephalograms would reduce the efficiency of training, the input images were zoomed out, cropped and resized to 512 × 512 pixels without changing their aspect ratio. The cropping borders were defined as follows: the right border included the complete nose with as little surrounding air (background) as possible; the bottom border included the complete chin with as little surrounding air as possible; the top border included the complete temporomandibular joint and part of the skull base; and the left border included the complete upper airway and part of the cervical vertebrae.
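The anatomical borders above define a bounding box that is generally not square. One way to reach 512 × 512 pixels without changing the aspect ratio, assumed here, is to enlarge the shorter side of the box to a square before resizing. The sketch computes only the box geometry; the box coordinates and function names are hypothetical, and the pixel operations themselves would be done by an imaging library (for example, Pillow's `Image.crop` followed by `Image.resize((512, 512))`, which use the same (left, top, right, bottom) box convention).

```python
from typing import Tuple

Box = Tuple[int, int, int, int]  # (left, top, right, bottom); right/bottom exclusive


def square_crop_box(box: Box, image_w: int, image_h: int) -> Box:
    """Grow the shorter side of `box` so the crop is square, keeping it inside the image.

    A square crop can then be resized to 512 x 512 without distorting anatomy.
    """
    left, top, right, bottom = box
    w, h = right - left, bottom - top
    side = max(w, h)
    if side > min(image_w, image_h):
        raise ValueError("region is larger than the image's shorter side")
    # Centre the enlarged square on the original anatomical region.
    cx, cy = left + w / 2, top + h / 2
    new_left = int(round(cx - side / 2))
    new_top = int(round(cy - side / 2))
    # Shift (rather than shrink) the square if it crosses an image border.
    new_left = min(max(new_left, 0), image_w - side)
    new_top = min(max(new_top, 0), image_h - side)
    return new_left, new_top, new_left + side, new_top + side


def resize_scale(box: Box, target: int = 512) -> float:
    """Uniform scale factor that maps a square crop onto a target x target image."""
    left, top, right, bottom = box
    if right - left != bottom - top:
        raise ValueError("crop is not square; resizing would change the aspect ratio")
    return target / (right - left)
```

Using a single scale factor for both axes is what keeps the aspect ratio unchanged.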

Then, to reduce overfitting, the images were randomly augmented with transformations including rotation, horizontal and vertical flipping, width and height shifting, shearing and zooming.
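The listed transformations are standard augmentation operations; in Keras they are exposed, for example, as the `rotation_range`, `width_shift_range`, `height_shift_range`, `shear_range`, `zoom_range`, `horizontal_flip` and `vertical_flip` arguments of `ImageDataGenerator`, although the paper does not report the ranges used. The dependency-free sketch below implements only the flips and integer shifts on a 2D pixel grid; the 10% maximum shift is a hypothetical setting, and rotation, shear and zoom (which need interpolation) are omitted.

```python
import random
from typing import List

Image = List[List[float]]


def hflip(img: Image) -> Image:
    """Mirror the image left-right."""
    return [row[::-1] for row in img]


def vflip(img: Image) -> Image:
    """Mirror the image top-bottom."""
    return [row[:] for row in img[::-1]]


def shift(img: Image, dx: int, dy: int, fill: float = 0.0) -> Image:
    """Translate by dx columns (positive = right) and dy rows (positive = down).

    Pixels that move in from outside the image are set to `fill`.
    """
    h, w = len(img), len(img[0])
    out = [[fill] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            sy, sx = y - dy, x - dx
            if 0 <= sy < h and 0 <= sx < w:
                out[y][x] = img[sy][sx]
    return out


def random_augment(img: Image, rng: random.Random, max_shift_frac: float = 0.1) -> Image:
    """Randomly flip and shift one image; max_shift_frac is a hypothetical setting."""
    if rng.random() < 0.5:
        img = hflip(img)
    if rng.random() < 0.5:
        img = vflip(img)
    h, w = len(img), len(img[0])
    dx = rng.randint(-int(w * max_shift_frac), int(w * max_shift_frac))
    dy = rng.randint(-int(h * max_shift_frac), int(h * max_shift_frac))
    return shift(img, dx, dy)
```

A fresh random draw per image and per epoch means the network rarely sees exactly the same pixels twice, which is the mechanism by which augmentation counters overfitting.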

Based on the Group A and Group B assignments, the T1 cephalograms were annotated with reference labels of the mandibular growth trend (overdeveloped vs normal).

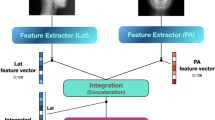

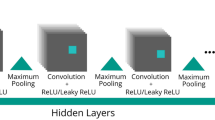

Architecture of the deep-learning model

We developed a neural network based on ResNet50, which is well known for its excellent performance in image classification and object detection [18]. The model consists of stacked residual blocks followed by a softmax layer: the residual blocks extract features from the input images, and the softmax layer predicts the class of each image. Figure 1 shows the workflow of this deep learning-based CNN model. Training was performed on a Linux machine with a GPU accelerator, with an initial learning rate of 0.01 for 300 epochs.
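The defining element of ResNet50 is the residual block with an identity shortcut, y = ReLU(F(x) + x). The toy sketch below shows that structure on plain vectors, with two small fully connected layers standing in for F. In ResNet50 itself, F is a bottleneck of 1 × 1, 3 × 3 and 1 × 1 convolutions with batch normalization, so this illustrates the shortcut idea only and is not the authors' network.

```python
from typing import List, Sequence

Vector = List[float]
Weights = Sequence[Sequence[float]]


def linear(weights: Weights, x: Sequence[float]) -> Vector:
    """Matrix-vector product: one fully connected layer without bias."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]


def relu(x: Sequence[float]) -> Vector:
    """Element-wise rectified linear unit."""
    return [max(0.0, v) for v in x]


def residual_block(x: Sequence[float], w1: Weights, w2: Weights) -> Vector:
    """y = ReLU(F(x) + x) with residual branch F(x) = W2 . ReLU(W1 . x).

    The identity shortcut adds the input back to the learned residual F(x):
    if F learns zero, the block simply passes its (rectified) input through,
    the property credited with making very deep ResNets trainable.
    """
    fx = linear(w2, relu(linear(w1, x)))
    return relu([f + xi for f, xi in zip(fx, x)])
```

With all-zero weights the block reduces to ReLU(x), so stacking many blocks does not by itself destroy the signal.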

Visualization of region of interest

The analysis behavior of the model was visualized by marking a region of interest (ROI) on each input image with a method called gradient-weighted class activation mapping (Grad-CAM) [19]. Grad-CAM is a gradient-based visualization technique applied to the trained network: it weights the feature maps of the last convolutional layer by the gradients of the predicted class score and combines them into a coarse heatmap that marks the ROI. Because particular spatial elements of the feature maps contribute most to the model's calculation and prediction, Grad-CAM can localize the most discriminative region of the whole image for the classification.
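The Grad-CAM computation itself is short. Given the K feature maps A^k of the last convolutional layer and the gradients of the class score with respect to them, each map is weighted by the spatial mean of its gradient, the weighted maps are summed, and a ReLU keeps only positive evidence for the class. The sketch below assumes these arrays have already been obtained from a deep learning framework (for example, through automatic differentiation); it uses plain nested lists and rescales the map to [0, 1] for display. In practice the map is then upsampled to the input size (here 512 × 512) and overlaid on the cephalogram.

```python
from typing import List, Sequence

Map = List[List[float]]


def grad_cam(feature_maps: Sequence[Map], gradients: Sequence[Map]) -> Map:
    """Grad-CAM localisation map for one class.

    feature_maps[k] is activation map A^k of the last convolutional layer and
    gradients[k] is d(class score)/dA^k, both H x W. The weight alpha_k is the
    spatial mean of gradients[k]; the heatmap is ReLU(sum_k alpha_k * A^k),
    rescaled so that its maximum is 1.
    """
    h, w = len(feature_maps[0]), len(feature_maps[0][0])
    # Global-average-pool each gradient map to get one importance weight per channel.
    alphas = [sum(sum(row) for row in g) / (h * w) for g in gradients]
    cam = [
        [max(0.0, sum(a * fm[i][j] for a, fm in zip(alphas, feature_maps))) for j in range(w)]
        for i in range(h)
    ]
    peak = max(max(row) for row in cam)
    if peak > 0:
        cam = [[v / peak for v in row] for row in cam]
    return cam
```

A channel whose gradient is negative on average (alpha_k < 0) suppresses the regions where it is active, which is why only the upper-left cell survives in a two-channel example with opposite-signed gradients.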

Mandibular growth prediction and statistical analysis

After training, the testing dataset (20 images from Group A and 20 from Group B) was classified by the CNN model and by junior orthodontists. For CNN classification, each test image was input to the model, which output a probability for each class, and the image was assigned to the class with the higher probability. For example, as shown in Fig. 2, the output for Image X was 0.9963781 for Class A (overdeveloped mandible) and 0.0036219 for Class B (normal mandible), so Image X was classified as Class A. For classification by junior orthodontists, three junior orthodontists (less than 5 years of clinical experience) each gave an individual prediction (Class A or Class B) of the future mandibular growth of each subject, based on cephalometric measurements and analysis of the testing cephalogram.
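The decision rule amounts to taking the class with the larger softmax output. A minimal sketch follows; the function names are illustrative, the logits in any call to `softmax` are hypothetical, and the probabilities passed to `classify` in the check are the ones reported for Image X.

```python
import math
from typing import Dict, List, Sequence


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax: subtract the largest logit before exponentiating."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def classify(probabilities: Dict[str, float]) -> str:
    """Return the class label with the highest predicted probability."""
    return max(probabilities, key=probabilities.get)
```

For two classes, taking the larger probability is the same as thresholding the Class A probability at 0.5.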

The predictions of the deep learning-based CNN model were compared with those of the junior orthodontists using the following indices: classification accuracy, true positive rate (sensitivity), false negative rate, false positive rate, true negative rate (specificity) and area under the curve (AUC) [20]. These calculations were performed in Python using the Keras framework.
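A minimal, dependency-free sketch of these indices follows, with label 1 for an overdeveloped mandible (Group A, the positive class) and 0 for a normal mandible. The AUC uses the rank-based (Mann–Whitney) formulation, which equals the area under the empirical ROC curve; it needs the model's continuous scores (for example, the Class A probability), not only the hard labels. This is not the authors' Keras-based code, and the function names are illustrative; `sklearn.metrics.roc_auc_score` should return the same AUC value where scikit-learn is available.

```python
from typing import Dict, Sequence


def binary_metrics(y_true: Sequence[int], y_pred: Sequence[int]) -> Dict[str, float]:
    """Confusion-matrix rates with 1 = overdeveloped (positive), 0 = normal."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    return {
        "accuracy": (tp + tn) / len(pairs),
        "TPR": tp / (tp + fn),  # sensitivity
        "FNR": fn / (tp + fn),
        "FPR": fp / (fp + tn),
        "TNR": tn / (fp + tn),  # specificity
    }


def auc_mann_whitney(y_true: Sequence[int], scores: Sequence[float]) -> float:
    """ROC AUC as the probability that a random positive outscores a random negative.

    Ties count as one half, matching the trapezoidal area under the empirical ROC curve.
    """
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))
```

The junior orthodontists gave only hard labels, so they contribute single (FPR, TPR) points rather than a curve, which is how they appear in the ROC figure.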

Results

Comparison of prediction results

The performance of the deep learning CNN model and the junior orthodontists is summarized in Table 1. The deep learning model achieved higher accuracy (85.0%) than the three junior orthodontists (54.2%). The mean sensitivity/specificity was 0.95/0.75 for the model and 0.62/0.47 for the junior orthodontists. The receiver operating characteristic (ROC) curve of the model and the performance points of the junior orthodontists are shown in Fig. 3. The AUC of the deep learning model was 0.9775.

Receiver operating characteristic (ROC) curve of the deep learning model and the performance points of the junior orthodontists. The solid blue line shows the trajectory of the deep learning model with respect to sensitivity (true positive rate) and 1 − specificity (false positive rate). The colored points represent the performance of the junior orthodontists. None of the junior orthodontists outperformed the deep learning model.
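Because the testing dataset contained 20 positive (Group A) and 20 negative (Group B) images, the reported rates fix the model's confusion-matrix counts, and the reported accuracy follows directly from them. The worked arithmetic below uses only the reported values; the false negative and false positive rates are the complements of sensitivity and specificity.

```latex
\begin{aligned}
TP &= 0.95 \times 20 = 19, &\quad FN &= 20 - 19 = 1,\\
TN &= 0.75 \times 20 = 15, &\quad FP &= 20 - 15 = 5,\\
\text{Accuracy} &= \frac{TP + TN}{40} = \frac{34}{40} = 0.85,\\
\text{FNR}_{\text{model}} &= 1 - 0.95 = 0.05, &\quad \text{FPR}_{\text{model}} &= 1 - 0.75 = 0.25,\\
\text{FNR}_{\text{junior}} &= 1 - 0.62 = 0.38, &\quad \text{FPR}_{\text{junior}} &= 1 - 0.47 = 0.53.
\end{aligned}
```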

Visualization of localization results

Figure 4 shows examples of heatmaps that illustrate the learning and classification behavior of the deep learning model. Visual inspection showed that the model mainly focused on the following areas: the chin (40/40), the lower edge of the mandible (28/40) and the incisors (7/40). Interestingly, the airway, an area traditionally considered unimportant for predicting mandibular growth, was recognized as an ROI by the model in some subjects (2/40) (Table 2).

Discussion

Class III malocclusion can be of dental or skeletal origin, so accurate classification is crucial for managing it on a sound clinical basis. Class III malocclusion resulting from an overdeveloped mandible is extremely difficult to treat. The mandible has the longest growth period among the craniofacial bones [21], so early prediction of mandibular growth is very challenging. Severe skeletal Class III malocclusion with a prognathic mandible requires orthognathic surgery; if camouflage orthodontic treatment with extractions is carried out at an early stage in such cases, the result may be unstable or may even deteriorate during treatment.

Since the key to successful treatment of anterior crossbite lies in the mandible, early and accurate prediction of its growth trend, followed by appropriate intervention, can benefit patients [22]. Clinically, the choice of treatment and its timing for Class III patients relies mainly on the orthodontist's clinical experience, which varies considerably between practitioners. Moreover, even experienced orthodontists find it challenging to accurately predict the remaining growth of the mandible when facing anterior crossbite in the mixed or early permanent dentition.

In this study, a deep learning-based CNN model was trained to predict the mandibular growth trend of children with anterior crossbite from pre-treatment cephalometric radiographs. The model achieved 85% accuracy in predicting whether the mandible would grow into an overdeveloped or a normal mandible, much higher than the 54.2% accuracy of the junior orthodontists. Its sensitivity and specificity were also higher than those of the human predictions (0.95 vs 0.62 and 0.75 vs 0.47). The good performance of the model may be related to its different prediction process, which replaces the analysis of individual linear and angular measurements with a direct and comprehensive analysis of the image [23]. The model was built on ResNet, an architecture recognized as a breakthrough for its ability to train extremely deep neural networks, which allows it to capture more structural, relational and detailed information from images [24]. In the deep learning field, the AUC is considered an important index for evaluating model performance [25]; the AUC of 0.9775 achieved here demonstrates the model's reliable performance on the testing dataset.

Two of the results deserve particular attention. First, the false positive rate of the deep learning model was much lower than that of the junior orthodontists. This suggests that orthodontists with less clinical experience tend to be overcautious when predicting future mandibular growth, in order to reduce clinical risk and avoid medical disputes. Second, the false negative rate of the model was also much lower than that of the junior orthodontists. This implies that junior orthodontists are more likely to wrongly predict that the mandible will develop normally when it will in fact become overdeveloped. This type of error may lead to unsuccessful camouflage orthodontic treatment in cases that actually require orthognathic surgery, and to relapse of the anterior crossbite after treatment.

In addition, we integrated Grad-CAM with the deep learning model to visualize the features it used to predict mandibular growth in children with anterior crossbite, revealing the mechanism behind its predictions. The output heatmaps identify the main areas of the cephalometric images on which the model based its decisions; these included the chin, the lower edge of the mandible, the incisor area, the airway and the condyle. The chin and the lower edge of the mandible are easy to understand as ROIs because they are closely related to the regulation of mandibular growth [26, 27]. However, the airway was unexpected, as it is rarely taken into consideration when predicting mandibular growth in children with anterior crossbite. This finding may be explained by reports that the morphological characteristics of the airway are correlated with the craniofacial skeletal pattern, such as skeletal Class II malocclusion (mainly manifested as mandibular retrusion) [28] and skeletal Class III malocclusion (mainly manifested as mandibular protrusion) [29, 30]. These findings provide new clues for growth prediction.

Despite the good performance of the deep learning-based CNN model, our approach has several limitations. First, both the training and testing datasets were small. Second, no other deep learning models were applied for comparison of prediction performance. Last, clinical characteristics and family history were not included in the algorithm, so it is unknown whether adding these factors would improve the model's performance.

Conclusions

1. The deep learning model performed well and achieved much higher accuracy than the junior orthodontists in predicting the mandibular growth trend of children with anterior crossbite.

2. The deep learning model made its predictions mainly by identifying characteristics of the chin, the lower edge of the mandible, the incisor area, the airway and the condyle in cephalometric images.

Availability of data and materials

The datasets used and/or analyzed during the current study are available from the corresponding author on reasonable request.

Abbreviations

- CNN: Convolutional neural network
- CBCT: Cone-beam computerized tomography
- Conv: Convolution
- BN: Batch norm
- ReLU: Rectified linear unit
- ROI: Region of interest
- AUC: Area under the curve
- TPR: True positive rate
- FNR: False negative rate
- FPR: False positive rate
- TNR: True negative rate
- ROC: Receiver-operating characteristics

References

Ngan P, Moon W. Evolution of class III treatment in orthodontics. Am J Orthod Dentofac Orthop. 2015;148(1):22–36.

Vasilakos G, Koniaris A, Wolf M, Halazonetis D, Gkantidis N. Early anterior crossbite correction through posterior bite opening: a 3D superimposition prospective cohort study. Eur J Orthod. 2018;40(4):364–71.

Ellis E 3rd, McNamara JA Jr. Components of adult class III malocclusion. J Oral Maxillofac Surg. 1984;42(5):295–305.

Ngan P. Early treatment of Class III malocclusion: is it worth the burden? Am J Orthod Dentofac Orthop. 2006;129(4 Suppl):S82–5.

Sato K, Mito T, Mitani H. An accurate method of predicting mandibular growth potential based on bone maturity. Am J Orthod Dentofac Orthop. 2001;120(3):286–93.

Mito T, Sato K, Mitani H. Predicting mandibular growth potential with cervical vertebral bone age. Am J Orthod Dentofac Orthop. 2003;124(2):173–7.

Moshfeghi M, Rahimi H, Rahimi H, Nouri M, Bagheban AA. Predicting mandibular growth increment on the basis of cervical vertebral dimensions in Iranian girls. Prog Orthod. 2013;14(1):3.

Franchi L, Nieri M, McNamara JA Jr, Giuntini V. Predicting mandibular growth based on CVM stage and gender and with chronological age as a curvilinear variable. Orthod Craniofac Res. 2021;24(3):414–20.

Buschang PH, Tanguay R, LaPalme L, Demirjian A. Mandibular growth prediction: mean growth increments versus mathematical models. Eur J Orthod. 1990;12(3):290–6.

Gao XW, Hui R, Tian Z. Classification of CT brain images based on deep learning networks. Comput Methods Programs Biomed. 2017;138:49–56.

Czajkowska J, Badura P, Korzekwa S, Płatkowska-Szczerek A, Słowińska M. Deep learning-based high-frequency ultrasound skin image classification with multicriteria model evaluation. Sensors (Basel). 2021;21(17):5846.

Anwar SM, Majid M, Qayyum A, Awais M, Alnowami M, Khan MK. Medical image analysis using convolutional neural networks: a review. J Med Syst. 2018;42(11):226.

Cantu AG, Gehrung S, Krois J, Chaurasia A, Rossi JG, Gaudin R, Elhennawy K, Schwendicke F. Detecting caries lesions of different radiographic extension on bitewings using deep learning. J Dent. 2020;100:103425.

Fu Q, Chen Y, Li Z, Jing Q, Hu C, et al. A deep learning algorithm for detection of oral cavity squamous cell carcinoma from photographic images: a retrospective study. EClinicalMedicine. 2020;27:100558.

Kim I, Misra D, Rodriguez L, Gill M, Liberton DK, Almpani K, Lee JS, Antani S. Malocclusion classification on 3D cone-beam CT craniofacial images using multi-channel deep learning models. Annu Int Conf IEEE Eng Med Biol Soc. 2020;2020:1294–8.

Yu HJ, Cho SR, Kim MJ, Kim WH, Kim JW, Choi J. Automated skeletal classification with lateral cephalometry based on artificial intelligence. J Dent Res. 2020;99(3):249–56.

Kerr WJ, Miller S, Dawber JE. Class III malocclusion: surgery or orthodontics? Br J Orthod. 1992;19(1):21–4.

Yu H, Li J, Zhang L, Cao Y, Yu X, Sun J. Design of lung nodules segmentation and recognition algorithm based on deep learning. BMC Bioinform. 2021;22(Suppl 5):314.

Jiang H, Xu J, Shi R, Yang K, Zhang D, Gao M, Ma H, Qian W. A multi-label deep learning model with interpretable grad-CAM for diabetic retinopathy classification. Annu Int Conf IEEE Eng Med Biol Soc. 2020;2020:1560–3.

England JR, Cheng PM. Artificial intelligence for medical image analysis: a guide for authors and reviewers. AJR Am J Roentgenol. 2019;212(3):513–9.

Reyes BC, Baccetti T, McNamara JA Jr. An estimate of craniofacial growth in Class III malocclusion. Angle Orthod. 2006;76(4):577–84.

Tai K, Park JH, Ohmura S, Okadakage-Hayashi S. Timing of Class III treatment with unfavorable growth pattern. J Clin Pediatr Dent. 2014;38(4):370–9.

Chan HP, Samala RK, Hadjiiski LM, Zhou C. Deep learning in medical image analysis. Adv Exp Med Biol. 2020;1213:3–21.

Yu X, Kang C, Guttery DS, Kadry S, Chen Y, Zhang YD. ResNet-SCDA-50 for breast abnormality classification. IEEE/ACM Trans Comput Biol Bioinform. 2021;18(1):94–102.

Poplin R, Varadarajan AV, Blumer K, Liu Y, McConnell MV, Corrado GS, Peng L, Webster DR. Prediction of cardiovascular risk factors from retinal fundus photographs via deep learning. Nat Biomed Eng. 2018;2(3):158–64.

Buschang PH, Gandini Júnior LG. Mandibular skeletal growth and modelling between 10 and 15 years of age. Eur J Orthod. 2002;24(1):69–79.

Patcas R, Herzog G, Peltomäki T, Markic G. New perspectives on the relationship between mandibular and statural growth. Eur J Orthod. 2016;38(1):13–21.

Brito FC, Brunetto DP, Nojima MCG. Three-dimensional study of the upper airway in different skeletal Class II malocclusion patterns. Angle Orthod. 2019;89(1):93–101.

Iwasaki T, Hayasaki H, Takemoto Y, Kanomi R, Yamasaki Y. Oropharyngeal airway in children with Class III malocclusion evaluated by cone-beam computed tomography. Am J Orthod Dentofac Orthop. 2009;136(3):318.

Zhang J, Liu W, Li W, Gao X. Three-dimensional evaluation of the upper airway in children of skeletal class III. J Craniofac Surg. 2017;28(2):394–400.

Acknowledgements

Not applicable.

Funding

This study was supported by three research projects: the National Natural Science Foundation of China (81200806), which funded data collection and statistical interpretation of data; the Ningxia Hui Autonomous Region Key Research and Development Program (2022BEG02031), which funded development of the deep learning model; and the Medical Science and Technology Project of Zhejiang Province (2023KY122), which funded open access publishing. The funders had no role in the design of the study; the collection, analysis and interpretation of data; or the writing of the manuscript.

Author information

Contributions

JNZ: writing original draft, data curation, investigation, methodology. HPL: writing review and editing, validation, formal analysis. JH: software. QW: data curation, investigation, methodology. FYY: investigation, methodology. CZ: investigation, methodology. CYH: data curation, investigation, methodology, resources, supervision. SC: conceptualization, writing review and editing, funding acquisition, project administration. All authors have read and approved the final manuscript.

Ethics declarations

Ethics approval and consent to participate

This retrospective study was conducted according to the guidelines of the Declaration of Helsinki and approved by the Research Ethics Committee of Sir Run Run Shaw Hospital, Zhejiang University School of Medicine (No. 20210729-122). All methods in the study were carried out in accordance with the relevant guidelines and regulations. Informed consent was signed by a parent or guardian of each participant.

Consent for publication

Not applicable.

Competing interests

The authors declare that they have no competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Zhang, JN., Lu, HP., Hou, J. et al. Deep learning-based prediction of mandibular growth trend in children with anterior crossbite using cephalometric radiographs. BMC Oral Health 23, 28 (2023). https://doi.org/10.1186/s12903-023-02734-4

DOI: https://doi.org/10.1186/s12903-023-02734-4