Abstract

Background

Chest pain is among the most common presenting complaints in the emergency department (ED). Swift and accurate risk stratification of chest pain patients in the ED may improve patient outcomes and reduce unnecessary costs. Traditional logistic regression with stepwise variable selection has been used to build risk prediction models for ED chest pain patients. In this study, we aimed to investigate if machine learning dimensionality reduction methods can improve performance in deriving risk stratification models.

Methods

A retrospective analysis was conducted on the data of patients > 20 years old who presented to the ED of Singapore General Hospital with chest pain between September 2010 and July 2015. Variables used included demographics, medical history, laboratory findings, heart rate variability (HRV), and heart rate n-variability (HRnV) parameters calculated from five to six-minute electrocardiograms (ECGs). The primary outcome was 30-day major adverse cardiac events (MACE), which included death, acute myocardial infarction, and revascularization within 30 days of ED presentation. We used eight machine learning dimensionality reduction methods and logistic regression to create different prediction models. We further excluded cardiac troponin from candidate variables and derived a separate set of models to evaluate the performance of models without using laboratory tests. Receiver operating characteristic (ROC) and calibration analyses were used to compare model performance.

Results

Seven hundred ninety-five patients were included in the analysis, of which 247 (31%) met the primary outcome of 30-day MACE. Patients with MACE were older and more likely to be male. All eight dimensionality reduction methods achieved comparable performance with the traditional stepwise variable selection; the multidimensional scaling algorithm performed the best with an area under the curve of 0.901. All prediction models generated in this study outperformed several existing clinical scores in ROC analysis.

Conclusions

Dimensionality reduction models showed marginal value in improving the prediction of 30-day MACE for ED chest pain patients. Moreover, they are black box models, making them difficult to explain and interpret in clinical practice.

Background

Chest pain is among the most common chief complaints presenting to the emergency department (ED) [1,2,3]. The assessment of chest pain patients poses a diagnostic challenge in balancing risk and cost. Inadvertent discharge of acute coronary syndrome (ACS) patients is associated with higher mortality rates while inappropriate admission of patients with more benign conditions increases health service costs [4, 5]. Hence, the challenge lies in recognizing low-risk chest pain patients for safe and early discharge from the ED. There has been increasing focus on the development of risk stratification scores. Initially, risk scores such as the Thrombolysis in Myocardial Infarction (TIMI) score [6, 7] and the Global Registry of Acute Coronary Events (GRACE) score [8] were developed from post-ACS patients to estimate short-term mortality and recurrence of myocardial infarction. The History, Electrocardiogram (ECG), Age, Risk factors, and initial Troponin (HEART) score was subsequently designed for ED chest pain patients [9], and it demonstrated superior performance in many comparative studies on the identification of low-risk chest pain patients [10,11,12,13,14,15,16,17]. Nonetheless, the HEART score has its disadvantages. Many potential factors can affect its diagnostic and prognostic accuracy, such as variation in patient populations, provider determination of low-risk HEART score criteria, and the specific troponin reagent used, all of which contribute to clinical heterogeneity [18,19,20,21]. In addition, most risk scores still require variables, such as troponin, that may not be available during the initial presentation of the patient to the ED. There remains a need for a more efficient risk stratification tool.

We had previously developed a heart rate variability (HRV) prediction model using readily available variables at the ED, in an attempt to reduce both diagnostic time and subjective components [22]. HRV characterizes beat-to-beat variation using time, frequency domain, and nonlinear analysis [23] and has proven to be a good predictor of major adverse cardiac events (MACE) [22, 24, 25]. Most HRV-based scores were reported to be superior to TIMI and GRACE scores while achieving comparable performance with HEART score [17, 24, 26, 27]. Recently, we established a new representation of beat-to-beat variation in ECGs, the heart rate n-variability (HRnV) [28]. HRnV utilizes variation in sampling RR-intervals and overlapping RR-intervals to derive additional parameters from a single strip of ECG reading. As an extension to HRV, HRnV potentially supplements additional information about adverse cardiac events while reducing unwanted noise caused by abnormal heartbeats. Moreover, HRV is a special case of HRnV when n = 1. The HRnV prediction model, developed from multivariable stepwise logistic regression, outperformed the HEART, TIMI, and GRACE scores in predicting 30-day MACE [28]. Nevertheless, multicollinearity, in which supposedly independent predictor variables are correlated, is a common problem in logistic regression models. Correlated predictors tend to inflate the variance of the estimated regression parameters and hinder the determination of the exact effect of each parameter, which could potentially result in inaccurate identification of significant predictors [29, 30]. In that paper, 115 HRnV parameters were derived but only seven variables were left in the final prediction model, which implies the possible elimination of relevant information [28].
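To make the idea of HRnV sequences more concrete, the following is a minimal sketch rather than the HRnV-Calc implementation. It assumes that an RRnI sequence is formed by summing n consecutive RR intervals in non-overlapping windows, and that the overlapping variant moves the window by fewer than n intervals; the mapping of the hypothetical `stride` argument to the m in HRnVm is an assumption. The NN50n and pNN50n definitions follow the abbreviation list of this article. The function names and the example RR intervals are illustrative only.

```python
import numpy as np

def rr_n_intervals(rri_ms, n, stride=None):
    """Sum n consecutive RR intervals per window.

    stride=n (the default) gives non-overlapping windows; stride<n gives
    overlapping windows. How `stride` maps to the paper's m is an assumption.
    """
    rri = np.asarray(rri_ms, dtype=float)
    stride = n if stride is None else stride
    starts = range(0, len(rri) - n + 1, stride)
    return np.array([rri[s:s + n].sum() for s in starts])

def nn50n(seq_ms, n):
    """Count successive differences exceeding 50 * n ms, plus the proportion."""
    diffs = np.abs(np.diff(seq_ms))
    count = int(np.sum(diffs > 50 * n))
    return count, count / len(seq_ms)

# Made-up RR intervals in milliseconds, for illustration only.
rri = [800, 810, 950, 790, 805, 930]

hrv_seq = rr_n_intervals(rri, n=1)                 # n = 1 reproduces the RR intervals
rr2i = rr_n_intervals(rri, n=2)                    # non-overlapping pairs
rr2i_overlap = rr_n_intervals(rri, n=2, stride=1)  # overlapping pairs

print(nn50n(hrv_seq, 1), nn50n(rr2i, 2), nn50n(rr2i_overlap, 2))
```

With n = 1 the function returns the original RR intervals, which matches the statement that HRV is a special case of HRnV.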

Within the general medical literature, machine learning dimensionality reduction methods are uncommon and limited to a few specific areas, such as bioinformatics studies on genetics [31, 32] and diagnostic radiological imaging [33, 34]. Despite this, dimensionality reduction in HRV has been investigated and shown to effectively compress multidimensional HRV data for the assessment of cardiac autonomic neuropathy [35]. In this paper, we attempted to investigate several machine learning dimensionality reduction algorithms in building predictive models, hypothesizing that these algorithms could be useful in preserving useful information while improving prediction performance. We aimed to compare the performance of the dimensionality reduction models against the traditional stepwise logistic regression model [28] and conventional risk stratification tools such as the HEART, TIMI, and GRACE scores, in the prediction of 30-day MACE in chest pain patients presenting to the ED.

Methods

Study design and clinical setting

A retrospective analysis was conducted on data collected from patients > 20 years old who presented to Singapore General Hospital ED with chest pain between September 2010 and July 2015. These patients were triaged using the Patient Acuity Category Scale (PACS) and those with PACS 1 or 2 were included in the study. Patients were excluded if they were lost to the 30-day follow-up or if they presented with ST-elevation myocardial infarction (STEMI) or non-cardiac etiology chest pain such as pneumothorax, pneumonia, and trauma as diagnosed by the ED physician. Patients with ECG findings that precluded quality HRnV analysis such as artifacts, ectopic beats, paced or non-sinus rhythm were also excluded.

Data collection

For each patient, HRV and HRnV parameters were calculated using the HRnV-Calc software suite [28, 36] from a five to six-minute single-lead (lead II) ECG performed via the X-series Monitor (ZOLL Medical Corporation, Chelmsford, MA). Table 1 shows the full list of HRV and HRnV parameters used in this study. In addition, the first 12-lead ECGs taken during patients’ presentation to the ED were interpreted by two independent clinical reviewers and any pathological ST changes, T wave inversions, and Q-waves were noted. Patients’ demographics, medical history, first set of vital signs, and troponin-T values were obtained from the hospital’s electronic health records (EHR). In this study, high-sensitivity troponin-T was selected as the cardiac biomarker and an abnormal value was defined as > 0.03 ng/mL.

The primary outcome measured was any MACE within 30 days, including acute myocardial infarction, emergent revascularization procedures such as percutaneous coronary intervention (PCI) or coronary artery bypass graft (CABG), or death. The primary outcome was captured through a retrospective review of patients’ EHR.

Machine learning dimensionality reduction

Dimensionality reduction in machine learning and data mining [37] refers to the process of transforming high-dimensional data into lower dimensions such that fewer features are selected or extracted while preserving essential information of the original data. Two types of dimensionality reduction approaches are available, namely variable selection and feature extraction. Variable selection methods generally reduce data dimensionality by choosing a subset of variables, while feature extraction methods transform the original feature space into lower-dimensional space through linear or nonlinear feature projection. In clinical predictive modeling, variable selection techniques such as stepwise logistic regression are popular for constructing prediction models [38]. In contrast, feature extraction approaches [39] are less commonly used in medical research, although they have been widely used in computational biology [40], image analysis [41, 42], physiological signal analysis [43], among others. In this study, we investigated the implementation of eight feature extraction algorithms and evaluated their contributions to prediction performance in risk stratification of ED chest pain patients. We also compared them with a prediction model that was built using conventional stepwise variable selection [28]. Henceforth, we use the terms “dimensionality reduction” and “feature extraction” interchangeably.

Consider a dataset \( \left(\boldsymbol{X},\boldsymbol{y}\right) \) of n samples \( \left({\boldsymbol{x}}_i,{y}_i\right) \), i = 1, 2, …, n, where each sample \( {\boldsymbol{x}}_i \) has D original features and a label \( {y}_i \) of 1 or 0, with 1 indicating a positive primary outcome, i.e., MACE within 30 days. We applied dimensionality reduction algorithms to project \( {\boldsymbol{x}}_i \) into a d-dimensional space (d < D). As a result, the original dataset \( \boldsymbol{X}\in {\mathbb{R}}^{n\times D} \) became \( \hat{\boldsymbol{X}}\boldsymbol{\in}{\mathbb{R}}^{n\times d} \). There were a total of D = 174 candidate variables in this study. As suggested in Liu et al. [28], some variables were less statistically significant in terms of contributions to the prediction performance. Thus, we conducted univariable analysis and preselected the subset of \( \overset{\sim }{D} \) variables with \( p<\overset{\sim }{P} \). In this study, we determined \( \overset{\sim }{P} \) by running principal component analysis (PCA) [44] and logistic regression through 5-fold cross-validation; we plotted a curve to visualize the choice of a threshold and its impact on predictive performance. PCA was used for demonstration because of its simplicity and fast running speed. In addition to PCA, we implemented seven other dimensionality reduction algorithms: kernel PCA (KPCA) [45] with a polynomial kernel function, latent semantic analysis (LSA) [46], Gaussian random projection (GRP) [47], sparse random projection (SRP) [48], multidimensional scaling (MDS) [49], Isomap [50], and locally linear embedding (LLE) [51]. All these algorithms are unsupervised learning methods, meaning the transformation of feature space does not rely on sample labels y. Among the eight methods, MDS, Isomap, and LLE are manifold learning-based techniques for nonlinear dimensionality reduction. Table 2 gives a brief introduction to these eight methods.
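As an illustration of how the eight methods named above can be applied, here is a minimal sketch using scikit-learn on synthetic data. The paper does not state which library or hyperparameters were used, so the choice of scikit-learn, the use of `TruncatedSVD` as the LSA implementation, the standardization step, the neighbor counts, and the target dimension `d = 5` are all assumptions made for this example.

```python
from sklearn.datasets import make_classification
from sklearn.decomposition import PCA, KernelPCA, TruncatedSVD
from sklearn.manifold import MDS, Isomap, LocallyLinearEmbedding
from sklearn.preprocessing import StandardScaler
from sklearn.random_projection import (GaussianRandomProjection,
                                       SparseRandomProjection)

# Synthetic stand-in for the preselected data matrix (n = 200 samples, 30 features).
X, y = make_classification(n_samples=200, n_features=30, n_informative=8,
                           random_state=0)
X = StandardScaler().fit_transform(X)  # assumption: features are standardized

d = 5  # illustrative target dimension, with d smaller than the input dimension
reducers = {
    "PCA": PCA(n_components=d),
    "KPCA": KernelPCA(n_components=d, kernel="poly"),
    "LSA": TruncatedSVD(n_components=d, random_state=0),
    "GRP": GaussianRandomProjection(n_components=d, random_state=0),
    "SRP": SparseRandomProjection(n_components=d, random_state=0),
    "MDS": MDS(n_components=d, random_state=0),
    "Isomap": Isomap(n_components=d, n_neighbors=10),
    "LLE": LocallyLinearEmbedding(n_components=d, n_neighbors=10,
                                  random_state=0),
}

# Every method is unsupervised: the labels y are never passed to fit_transform.
embedded = {name: reducer.fit_transform(X) for name, reducer in reducers.items()}

for name, X_hat in embedded.items():
    print(f"{name}: {X.shape} -> {X_hat.shape}")
```

Note that scikit-learn's `MDS` offers `fit_transform` but no separate `transform` for unseen samples, which is one reason this sketch embeds the full data matrix in a single call.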

Predictive and statistical analysis

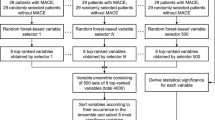

In this study, we chose logistic regression as the classification algorithm to predict the MACE outcome. As described earlier, we determined the threshold \( \overset{\sim }{P} \) to preselect a subset of \( \overset{\sim }{D} \) variables, ensuring the removal of less significant variables as indicated by univariable analysis, after which \( \boldsymbol{X}\in {\mathbb{R}}^{n\times D} \) became \( \overset{\sim }{X}\in {\mathbb{R}}^{n\times \overset{\sim }{D}} \). In summary, the inputs to all dimensionality reduction algorithms were in \( \overset{\sim }{D} \)-dimensional space. Subsequently, conventional logistic regression was implemented to take d-dimensional \( \hat{\boldsymbol{X}} \) to predict y, where 5-fold cross-validation was used.
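The preselection and modeling steps described above can be sketched as follows. This is an illustrative example on synthetic data, not the study code: the univariable test (`f_classif`, a one-way ANOVA F-test), the standardization step, the fixed dimension of 5, and the candidate thresholds are assumptions. The sketch preselects and projects on the full dataset before cross-validating the classifier, which is also an assumption about the order of operations.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.decomposition import PCA
from sklearn.feature_selection import f_classif
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import roc_auc_score
from sklearn.model_selection import StratifiedKFold, cross_val_predict
from sklearn.preprocessing import StandardScaler

# Synthetic stand-in for the candidate variables (60 here; the study had 174).
X, y = make_classification(n_samples=400, n_features=60, n_informative=6,
                           n_redundant=10, random_state=0)

def preselect(X, y, p_threshold):
    """Return column indices whose univariable p-value is below the threshold."""
    _, p_values = f_classif(X, y)
    return np.flatnonzero(p_values < p_threshold)

def cv_auc(X_tilde, y, d, seed=0):
    """Project to d dimensions with PCA, then score logistic regression by 5-fold CV."""
    X_hat = PCA(n_components=d).fit_transform(StandardScaler().fit_transform(X_tilde))
    cv = StratifiedKFold(n_splits=5, shuffle=True, random_state=seed)
    prob = cross_val_predict(LogisticRegression(max_iter=1000), X_hat, y,
                             cv=cv, method="predict_proba")[:, 1]
    return roc_auc_score(y, prob)

results = {}
for p_threshold in (0.02, 0.05, 0.2, 1.01):  # 1.01 keeps every variable
    keep = preselect(X, y, p_threshold)
    if keep.size == 0:
        continue  # nothing passed the threshold
    d = min(5, keep.size)
    results[p_threshold] = (keep.size, cv_auc(X[:, keep], y, d))

for p_threshold, (n_kept, auc) in results.items():
    print(f"threshold {p_threshold}: {n_kept} variables kept, AUC {auc:.3f}")
```

Plotting the AUC and the number of retained variables against the threshold gives the kind of curve used to choose \( \overset{\sim }{P} \).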

We compared the models built with machine learning dimensionality reduction with our previous stepwise model [28], in which the following 16 variables were used: age, diastolic blood pressure, pain score, ST-elevation, ST-depression, Q wave, cardiac history (the “History” component in the HEART score), troponin, HRV NN50, HR2V skewness, HR2V SampEn, HR2V ApEn, HR2V1 ApEn, HR3V RMSSD, HR3V skewness, and HR3V2 HF power. As described in [28], we selected candidate variables with p < 0.2 in univariable analysis and subsequently conducted multivariable analysis using backward stepwise logistic regression. In the current study, we further built eight dimensionality reduction models without using the cardiac troponin and compared them with the stepwise model without the troponin component. This analysis enabled us to check the feasibility of avoiding the use of laboratory results for quick risk stratification.

In evaluating the modeling performance, we performed receiver operating characteristic (ROC) curve analysis and reported the corresponding area under the curve (AUC), sensitivity, specificity, positive predictive value (PPV), and negative predictive value (NPV) measures. Moreover, we generated calibration plots for the prediction models. In describing the data, we reported continuous variables as the median and interquartile range (IQR) and assessed statistical significance using the two-sample t-test. We reported categorical variables as frequency and percentage and assessed statistical significance using the chi-square test. All analyses were conducted in Python version 3.8.0 (Python Software Foundation, Delaware, USA).
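For reference, the reported measures can be computed from predicted probabilities as in the sketch below. The labels, probabilities, and the cutoff of 0.5 are made up for illustration; the paper does not state how operating cutoffs were chosen, so the cutoff here is an assumption.

```python
import numpy as np
from sklearn.metrics import roc_auc_score

def binary_metrics(y_true, y_prob, cutoff):
    """Sensitivity, specificity, PPV, and NPV at a given probability cutoff."""
    y_true = np.asarray(y_true).astype(bool)
    y_pred = np.asarray(y_prob) >= cutoff
    tp = int(np.sum(y_pred & y_true))    # predicted positive, outcome present
    fp = int(np.sum(y_pred & ~y_true))   # predicted positive, outcome absent
    fn = int(np.sum(~y_pred & y_true))   # predicted negative, outcome present
    tn = int(np.sum(~y_pred & ~y_true))  # predicted negative, outcome absent
    return {
        "sensitivity": tp / (tp + fn),
        "specificity": tn / (tn + fp),
        "ppv": tp / (tp + fp),
        "npv": tn / (tn + fn),
    }

# Made-up outcomes (1 = MACE) and predicted probabilities.
y_true = [1, 1, 1, 0, 0, 0, 0, 1]
y_prob = [0.9, 0.8, 0.4, 0.3, 0.6, 0.1, 0.2, 0.7]

metrics = binary_metrics(y_true, y_prob, cutoff=0.5)
auc = roc_auc_score(y_true, y_prob)
print(metrics, auc)
```

Unlike the other four measures, the AUC does not depend on the cutoff, which is why it is the main quantity used to compare models.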

Results

We included 795 chest pain patients in this study, of which 247 (31%) patients had MACE within 30 days of presentation to the ED. Table 3 presents the baseline characteristics of the patient cohort. Patients with MACE were older (median age 61 years vs. 59 years, p = 0.035) and more likely to be male (76.1% vs. 64.6%, p = 0.002). History of diabetes, current smoking status, and pathological ECG changes such as ST elevation, ST depression, T wave inversion, pathological Q waves, and QTc prolongation were significantly more prevalent in patients with the primary outcome. Troponin-T and creatine kinase-MB levels were also significantly elevated in patients with the primary outcome. There was no statistically significant difference in patient ethnicity between MACE and non-MACE groups.

Figure 1a depicts the PCA-based predictive performance versus the threshold \( \overset{\sim }{P} \) (for preselection of variables) and Fig. 1b shows the number of preselected variables versus threshold \( \overset{\sim }{P} \). The predictive performance peaked at \( \overset{\sim }{P}=0.02 \), where a total of 30 variables were preselected, including gender, diastolic blood pressure, pain score, ST-elevation, ST-depression, T-wave inversion, Q wave, cardiac history, ECG, and risk factor components of the HEART score, troponin, HRV RMSSD, HRV NN50, HRV pNN50, HRV HF power, HRV Poincaré SD1, HR2V RMSSD, HR2V NN50, HR2V pNN50, HR2V HF power, HR2V Poincaré SD1, HR2V1 RMSSD, HR2V1 NN50, HR2V1 HF power, HR2V1 Poincaré SD1, HR3V1 RMSSD, HR3V1 HF power, HR3V1 Poincaré SD1, HR3V2 RMSSD, and HR3V2 Poincaré SD1. These were used as inputs to all dimensionality reduction algorithms, whose outputs were linear or nonlinear combinations of these 30 variables.

Figure 2 shows the predictive performance (in terms of AUC value) versus feature dimension (i.e., number of “principal components”) for all eight dimensionality reduction algorithms. The AUC values of GRP, SRP, and KPCA gradually increased with the increment of feature dimension, while the AUC values of PCA, LSA, MDS, Isomap, and LLE drastically jumped to more than 0.8 when feature dimension d ≥ 3 and plateaued in the curves when d ≥ 15. The highest AUC values of PCA, KPCA, LSA, GRP, SRP, MDS, Isomap, and LLE were 0.899, 0.896, 0.899, 0.896, 0.898, 0.901, 0.888, and 0.898, achieved with feature dimensions of 15, 30, 15, 22, 20, 27, 23, and 30, respectively.
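A sweep over the feature dimension, of the kind summarized above, can be sketched as follows. The example uses synthetic data and only two of the eight methods to stay short. Averaging the per-fold AUC (rather than pooling predictions across folds) is an assumption, as are the helper name `auc_by_dimension` and the data-generation settings.

```python
from sklearn.datasets import make_classification
from sklearn.decomposition import PCA
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score
from sklearn.random_projection import GaussianRandomProjection

# Synthetic stand-in for the 30 preselected variables.
X_tilde, y = make_classification(n_samples=300, n_features=30,
                                 n_informative=10, random_state=1)

def auc_by_dimension(make_reducer, X, y, dims):
    """Map each feature dimension d to the mean 5-fold cross-validated AUC."""
    curve = {}
    for d in dims:
        X_hat = make_reducer(d).fit_transform(X)
        scores = cross_val_score(LogisticRegression(max_iter=1000), X_hat, y,
                                 cv=5, scoring="roc_auc")
        curve[d] = scores.mean()
    return curve

dims = range(1, 31)
curves = {
    "PCA": auc_by_dimension(lambda d: PCA(n_components=d), X_tilde, y, dims),
    "GRP": auc_by_dimension(
        lambda d: GaussianRandomProjection(n_components=d, random_state=0),
        X_tilde, y, dims),
}

# Dimension at which each method reaches its highest AUC.
best = {name: max(curve, key=curve.get) for name, curve in curves.items()}
for name, d_best in best.items():
    print(f"{name}: best d = {d_best}, AUC = {curves[name][d_best]:.3f}")
```

Plotting each curve against d produces the kind of AUC-versus-dimension view described for Figure 2, from which the best-performing dimension per method can be read off.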

Figure 3 shows the ROC curves of the eight dimensionality reduction algorithms, the stepwise logistic regression [28], and three clinical scores. All eight dimensionality reduction methods performed comparably with the stepwise variable selection, and MDS achieved the highest AUC of 0.901. Table 4 presents ROC analysis results of all 12 methods/scores where sensitivity, specificity, PPV, and NPV are reported with 95% confidence intervals (CIs), noting that the performance of the stepwise model in this paper was slightly different from that reported in [28] due to the choice of cross-validation scheme, i.e., 5-fold (AUC of 0.887) versus leave-one-out (AUC of 0.888). Figure 4 presents the calibration curves of predictions by all methods/scores. The stepwise model and seven dimensionality reduction models (PCA, KPCA, LSA, GRP, SRP, MDS, and Isomap) showed reasonable model calibrations, in which their curves fluctuated along the diagonal line, meaning these models only slightly overestimated or underestimated the predicted probability of 30-day MACE. The LLE model was unable to achieve good calibration. In comparison, all three clinical scores (HEART, TIMI, and GRACE) generally underpredicted the probability of 30-day MACE.
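The calibration comparison described above can be approximated with a binned calibration curve. The sketch below uses synthetic data and scikit-learn's `calibration_curve`; the number of bins and the use of cross-validated probabilities are assumptions, since the paper does not describe how its calibration plots were constructed.

```python
from sklearn.calibration import calibration_curve
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_predict

# Synthetic stand-in for a reduced feature matrix and binary outcome.
X, y = make_classification(n_samples=500, n_features=10, random_state=0)

# Out-of-fold predicted probabilities from 5-fold cross-validation.
prob = cross_val_predict(LogisticRegression(max_iter=1000), X, y,
                         cv=5, method="predict_proba")[:, 1]

# Observed event fraction and mean predicted probability in each bin.
frac_pos, mean_pred = calibration_curve(y, prob, n_bins=5)

for observed, predicted in zip(frac_pos, mean_pred):
    # A mean prediction above the observed fraction means overestimated risk.
    direction = "over" if predicted > observed else "under"
    print(f"predicted {predicted:.2f}, observed {observed:.2f} ({direction}estimates)")
```

A well-calibrated model yields points close to the diagonal, where the mean predicted probability equals the observed fraction; a score that underpredicts risk has points above it.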

Figure 5 shows the ROC curves of prediction models without using cardiac troponin. At feature dimensions of 13, 21, 13, 29, 24, 17, 18, and 18, the highest AUC values of PCA, KPCA, LSA, GRP, SRP, MDS, Isomap, and LLE were 0.852, 0.852, 0.852, 0.852, 0.851, 0.852, 0.845, and 0.849, respectively. The stepwise model without troponin yielded an AUC of 0.834 compared to 0.887 with troponin. All prediction models outperformed both the TIMI and GRACE scores while achieving comparable results with the HEART score.

Discussion

In this study, we showed that machine learning dimensionality reduction yielded only marginal, non-significant improvements compared to the stepwise model in predicting the risk of 30-day MACE among chest pain patients in the ED. This is consistent with observations that traditional statistical methods can perform comparably to machine learning algorithms [52, 53]. Among the dimensionality reduction models integrated with cardiac troponin, the MDS model had the highest discriminative performance (AUC of 0.901, 95% CI 0.874–0.928) but did not significantly outperform the traditional stepwise model (AUC of 0.887, 95% CI 0.859–0.916). Among the models without using troponin, PCA, KPCA, LSA, GRP, and MDS performed equally well, achieving an AUC of 0.852, compared with the stepwise model without troponin, which had an AUC of 0.834. In general, the traditional stepwise approach proved to be comparable to machine learning dimensionality reduction methods in risk prediction, while benefiting from the model simplicity, transparency, and interpretability that are desired in real-world clinical practice.

High-dimensional data suffers from the curse of dimensionality, which refers to the exponentially increasing sparsity of data and sample size required to estimate a function to a given accuracy as dimensionality increases [54]. Dimensionality reduction has successfully mitigated the curse of dimensionality in the analysis of high-dimensional data in various domains such as computational biology and bioinformatics [31, 32]. However, clinical predictive modeling typically considers relatively few features, limiting the effects of the curse of dimensionality. This may account for the relatively limited benefit of dimensionality reduction in our analysis.

Additionally, with comparable performance to the traditional stepwise model, transparency and interpretability of machine learning dimensionality reduction models are constrained by complex algorithmic transformations of variables, leading to obstacles in the adoption of such models in real-world clinical settings. In contrast, traditional biostatistical approaches like logistic regression with stepwise variable selection deliver a simple and transparent model, in which the absolute and relative importance of each variable can be easily interpreted and explained from the odds ratio. Marginal performance improvements should be weighed against these limitations in interpretability, which is an important consideration in clinical predictive modeling.

Comparing the eight dimensionality reduction algorithms, PCA and LSA use common linear algebra techniques to create principal components in a compressed data space, while MDS, Isomap, and LLE are nonlinear, manifold learning-based dimensionality reduction methods. As observed from our results, complex nonlinear algorithms did not show an obvious advantage over simple PCA and LSA methods in enhancing the predictive performance. Yet, nonlinear algorithms are more computationally complex and require more computing memory. For example, KPCA and Isomap have computational complexity of \( O\left({n}^3\right) \) and memory complexity of \( O\left({n}^2\right) \), while PCA has computational complexity of \( O\left({\overset{\sim }{D}}^3\right) \) and memory complexity of \( O\left({\overset{\sim }{D}}^2\right) \) [39]. In applications of clinical predictive modeling, n (the number of patients) is usually larger than \( \overset{\sim }{D} \) (the number of variables); in our study, n is 795 and \( \overset{\sim }{D} \) is 29 or 30, depending on the inclusion of troponin. This suggests that linear algorithms may be preferred due to reduced computational complexity and memory while retaining comparable performance. Another observation in this study was that the impact of preselection (as shown in Fig. 1) on predictive performance was more substantial than that of dimensionality reduction, indicating the importance of choosing statistically significant candidate variables.
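To give a sense of scale, the leading terms of these complexity expressions can be evaluated for the values in this study (n = 795 and \( \overset{\sim }{D}=30 \)). Asymptotic notation hides constant factors and lower-order terms, so the figures below indicate orders of magnitude only and should not be read as runtimes or memory footprints.

\[
n^3 = 795^3 \approx 5.02\times 10^{8}, \qquad {\overset{\sim }{D}}^3 = 30^3 = 2.7\times 10^{4}, \qquad \frac{n^3}{{\overset{\sim }{D}}^3} \approx 1.9\times 10^{4}
\]

\[
n^2 = 795^2 \approx 6.32\times 10^{5}, \qquad {\overset{\sim }{D}}^2 = 30^2 = 900, \qquad \frac{n^2}{{\overset{\sim }{D}}^2} \approx 7.0\times 10^{2}
\]

Under these leading terms, the gap between the sample-driven and variable-driven costs is roughly four orders of magnitude in computation and nearly three in memory.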

Our study also reiterates the value of HRnV-based prediction models for chest pain risk stratification. Among chest pain risk stratification tools in the ED, clinical scores like HEART, TIMI, and GRACE are currently the most widely adopted and validated [55, 56]. However, a common barrier to quick risk prediction using these traditional clinical scores is the requirement of cardiac troponin, which can take hours to obtain. To address these difficulties, machine learning-based predictive models that integrate HRV measures and clinical parameters have been proposed [17, 22, 25, 26]. These include our development of HRnV, a novel alternative measure to HRV that has shown promising results in predicting 30-day MACE [28] and that underlies the stepwise model in this paper. Both the dimensionality reduction-based predictive models and the stepwise model with troponin performed better than the HEART, TIMI, and GRACE scores. When troponin was not used, several dimensionality reduction-based models such as PCA, KPCA, and MDS still yielded marginally better performance than the original HEART score, while generating the predictive scores in merely 5 to 6 min.

Additionally, Table 4 shows that all HRnV-based predictive models had higher specificities than the HEART score while all HRnV-based models except Isomap also improved on the already high sensitivity of the HEART score [21, 57]. The specificities of KPCA, Isomap, and MDS were significantly higher by an absolute value of almost 10%. Substantial improvements to the specificity of MACE predictive models may reduce unnecessary admission and thus minimize costs and resource usage [5]. This is particularly relevant in low-resource settings, for example, the overburdened EDs in the current coronavirus disease 2019 (COVID-19) pandemic, where novel methods in resource allocation and risk stratification could alleviate the strain on healthcare resources [58].

There remains a need for further investigation into methods that utilize information from the full set of HRV and HRnV variables. From 174 variables in the initial data set, dimensionality reduction performed the best with a preselection of 30 variables, of which 19 were HRV and HRnV parameters. That is, the majority of the newly constructed HRnV parameters were removed based on the strict significance threshold of p < 0.02 on univariable analysis. Therefore, novel HRnV measures were not fully used in prediction models of 30-day MACE, leaving room for further investigation of alternative ways of using them. Moving forward, it may be valuable to develop and evaluate deep learning frameworks [59] to synthesize novel low-dimensional representations of multidimensional information. Alternatively, building point-based, interpretable risk scores [60] can also be beneficial to implementation and adoption in real-world clinical settings, since designing inherently interpretable models is more favorable than explaining black box models [61].

We acknowledge the following limitations of this study. First, the clinical application (i.e., risk stratification of ED chest pain patients) was only one example of clinical predictive modeling, thus our conclusion on the effectiveness of machine learning dimensionality reduction algorithms may not be generalizable to other applications, particularly those with a larger number of variables. Second, only eight dimensionality reduction algorithms were investigated, while many other methods are available. Third, given the small sample size, we were unable to determine the threshold \( \overset{\sim }{P} \) and build predictive models with a separate training set; this also limited the stability check [62] for both logistic regression and machine learning models. Last, we did not build a workable predictive model for risk stratification of ED chest pain patients, although several models built in this study showed promising results compared to existing clinical scores. We aim to conduct further investigations.

Conclusions

In this study, we found that machine learning dimensionality reduction models showed marginal value in improving the prediction of 30-day MACE for ED chest pain patients. Being black box models, they are further constrained in clinical practice due to low interpretability. In contrast, the traditional stepwise prediction model offered simplicity and transparency, making it feasible for clinical use. To fully utilize the available information in building high-performing predictive models, we suggest additional investigations such as exploring deep representations of the input variables and creating interpretable machine learning models to facilitate real-world clinical implementation.

Availability of data and materials

The datasets used and/or analyzed during the current study are available from the corresponding author on reasonable request.

Abbreviations

- ACS: Acute coronary syndrome
- AMI: Acute myocardial infarction
- AUC: Area under the curve
- ApEn: Approximate entropy
- CI: Confidence intervals
- CABG: Coronary artery bypass graft
- COVID-19: Coronavirus disease 2019
- DFA: Detrended fluctuation analysis
- ECG: Electrocardiogram
- EHR: Electronic health records
- ED: Emergency department
- GRP: Gaussian random projection
- GRACE: Global registry of acute coronary events
- HRnV: Heart rate n-variability
- HRV: Heart rate variability
- HF: High frequency
- HEART: History, ECG, Age, Risk factors, and initial Troponin
- IQR: Interquartile range
- KPCA: Kernel principal component analysis
- LSA: Latent semantic analysis
- LLE: Locally linear embedding
- LF: Low frequency
- MACE: Major adverse cardiac events
- Mean NN: Average of R-R intervals
- MDS: Multidimensional scaling
- NPV: Negative predictive value
- NN50: The number of times that the absolute difference between 2 successive R-R intervals exceeds 50 ms
- NN50n: The number of times that the absolute difference between 2 successive RRnI/RRnIm sequences exceeds 50 × n ms
- PACS: Patient acuity category scale
- PCI: Percutaneous coronary intervention
- pNN50: NN50 divided by the total number of R-R intervals
- pNN50n: NN50n divided by the total number of RRnI/RRnIm sequences
- PPV: Positive predictive value
- PCA: Principal component analysis
- ROC: Receiver operating characteristic
- RMSSD: Square root of the mean squared differences between R-R intervals
- RRI: R-R interval
- SampEn: Sample entropy
- SD: Standard deviation
- SDNN: Standard deviation of R-R intervals
- SRP: Sparse random projection
- STEMI: ST-elevation myocardial infarction
- TIMI: Thrombolysis in myocardial infarction
- VLF: Very low frequency

References

Long B, Koyfman A. Best clinical practice: current controversies in evaluation of low-risk chest pain-part 1. J Emerg Med. 2016;51(6):668–76. https://doi.org/10.1016/j.jemermed.2016.07.103.

Long B, Koyfman A. Best clinical practice: current controversies in the evaluation of low-risk chest pain with risk stratification aids. Part 2. J Emerg Med. 2017;52(1):43–51. https://doi.org/10.1016/j.jemermed.2016.07.004.

Januzzi JL Jr, McCarthy CP. Evaluating chest pain in the emergency department: searching for the optimal gatekeeper. J Am Coll Cardiol. 2018;71(6):617–9. https://doi.org/10.1016/j.jacc.2017.11.065.

Pope JH, Aufderheide TP, Ruthazer R, Woolard RH, Feldman JA, Beshansky JR, et al. Missed diagnoses of acute cardiac ischemia in the emergency department. N Engl J Med. 2000;342(16):1163–70. https://doi.org/10.1056/NEJM200004203421603.

Hollander JE, Than M, Mueller C. State-of-the-art evaluation of emergency department patients presenting with potential acute coronary syndromes. Circulation. 2016;134(7):547–64. https://doi.org/10.1161/CIRCULATIONAHA.116.021886.

Antman EM, Cohen M, Bernink PJ, McCabe CH, Horacek T, Papuchis G, et al. The TIMI risk score for unstable angina/non-ST elevation MI: a method for prognostication and therapeutic decision making. JAMA. 2000;284(7):835–42. https://doi.org/10.1001/jama.284.7.835.

Morrow DA, Antman EM, Charlesworth A, Cairns R, Murphy SA, de Lemos JA, et al. TIMI risk score for ST-elevation myocardial infarction: a convenient, bedside, clinical score for risk assessment at presentation: an intravenous nPA for treatment of infarcting myocardium early II trial substudy. Circulation. 2000;102(17):2031–7. https://doi.org/10.1161/01.CIR.102.17.2031.

Fox KA, Dabbous OH, Goldberg RJ, Pieper KS, Eagle KA, Van de Werf F, et al. Prediction of risk of death and myocardial infarction in the six months after presentation with acute coronary syndrome: prospective multinational observational study (GRACE). BMJ (Clinical research ed). 2006;333(7578):1091.

Six AJ, Backus BE, Kelder JC. Chest pain in the emergency room: value of the HEART score. Neth Hear J. 2008;16(6):191–6. https://doi.org/10.1007/BF03086144.

Backus BE, Six AJ, Kelder JC, Bosschaert MA, Mast EG, Mosterd A, et al. A prospective validation of the HEART score for chest pain patients at the emergency department. Int J Cardiol. 2013;168(3):2153–8. https://doi.org/10.1016/j.ijcard.2013.01.255.

Six AJ, Cullen L, Backus BE, Greenslade J, Parsonage W, Aldous S, et al. The HEART score for the assessment of patients with chest pain in the emergency department: a multinational validation study. Crit Path Cardiol. 2013;12(3):121–6. https://doi.org/10.1097/HPC.0b013e31828b327e.

Chen X-H, Jiang H-L, Li Y-M, Chan CPY, Mo J-R, Tian C-W, et al. Prognostic values of 4 risk scores in Chinese patients with chest pain: prospective 2-centre cohort study. Medicine. 2016;95(52):e4778. https://doi.org/10.1097/MD.0000000000004778.

Jain T, Nowak R, Hudson M, Frisoli T, Jacobsen G, McCord J. Short- and long-term prognostic utility of the HEART score in patients evaluated in the emergency department for possible acute coronary syndrome. Crit Path Cardiol. 2016;15(2):40–5. https://doi.org/10.1097/HPC.0000000000000070.

Sakamoto JT, Liu N, Koh ZX, Fung NX, Heldeweg ML, Ng JC, et al. Comparing HEART, TIMI, and GRACE scores for prediction of 30-day major adverse cardiac events in high acuity chest pain patients in the emergency department. Int J Cardiol. 2016;221:759–64. https://doi.org/10.1016/j.ijcard.2016.07.147.

Sun BC, Laurie A, Fu R, Ferencik M, Shapiro M, Lindsell CJ, et al. Comparison of the HEART and TIMI risk scores for suspected acute coronary syndrome in the emergency department. Crit Path Cardiol. 2016;15(1):1–5. https://doi.org/10.1097/HPC.0000000000000066.

Poldervaart JM, Langedijk M, Backus BE, Dekker IMC, Six AJ, Doevendans PA, et al. Comparison of the GRACE, HEART and TIMI score to predict major adverse cardiac events in chest pain patients at the emergency department. Int J Cardiol. 2017;227:656–61. https://doi.org/10.1016/j.ijcard.2016.10.080.

Sakamoto JT, Liu N, Koh ZX, Guo D, Heldeweg MLA, Ng JCJ, et al. Integrating heart rate variability, vital signs, electrocardiogram, and troponin to triage chest pain patients in the ED. Am J Emerg Med. 2018;36(2):185–92.

Engel J, Heeren MJ, van der Wulp I, de Bruijne MC, Wagner C. Understanding factors that influence the use of risk scoring instruments in the management of patients with unstable angina or non-ST-elevation myocardial infarction in the Netherlands: a qualitative study of health care practitioners' perceptions. BMC Health Serv Res. 2014;14(1):418. https://doi.org/10.1186/1472-6963-14-418.

Wu WK, Yiadom MY, Collins SP, Self WH, Monahan K. Documentation of HEART score discordance between emergency physician and cardiologist evaluations of ED patients with chest pain. Am J Emerg Med. 2017;35(1):132–5. https://doi.org/10.1016/j.ajem.2016.09.058.

Ras M, Reitsma JB, Hoes AW, Six AJ, Poldervaart JM. Secondary analysis of frequency, circumstances and consequences of calculation errors of the HEART (history, ECG, age, risk factors and troponin) score at the emergency departments of nine hospitals in the Netherlands. BMJ Open. 2017;7(10):e017259. https://doi.org/10.1136/bmjopen-2017-017259.

Laureano-Phillips J, Robinson RD, Aryal S, Blair S, Wilson D, Boyd K, et al. HEART score risk stratification of low-risk chest pain patients in the emergency department: a systematic review and meta-analysis. Ann Emerg Med. 2019;74(2):187–203. https://doi.org/10.1016/j.annemergmed.2018.12.010.

Ong MEH, Goh K, Fook-Chong S, Haaland B, Wai KL, Koh ZX, et al. Heart rate variability risk score for prediction of acute cardiac complications in ED patients with chest pain. Am J Emerg Med. 2013;31(8):1201–7. https://doi.org/10.1016/j.ajem.2013.05.005.

Rajendra Acharya U, Paul Joseph K, Kannathal N, Lim CM, Suri JS. Heart rate variability: a review. Med Biol Eng Comput. 2006;44(12):1031–51. https://doi.org/10.1007/s11517-006-0119-0.

Liu N, Koh ZX, Chua ECP, Tan LML, Lin Z, Mirza B, et al. Risk scoring for prediction of acute cardiac complications from imbalanced clinical data. IEEE J Biomed Health Inform. 2014;18(6):1894–902. https://doi.org/10.1109/JBHI.2014.2303481.

Liu N, Lin Z, Cao J, Koh ZX, Zhang T, Huang G-B, et al. An intelligent scoring system and its application to cardiac arrest prediction. IEEE Trans Inf Technol Biomed. 2012;16(6):1324–31. https://doi.org/10.1109/TITB.2012.2212448.

Heldeweg ML, Liu N, Koh ZX, Fook-Chong S, Lye WK, Harms M, et al. A novel cardiovascular risk stratification model incorporating ECG and heart rate variability for patients presenting to the emergency department with chest pain. Crit Care. 2016;20(1):179. https://doi.org/10.1186/s13054-016-1367-5.

Liu N, Koh ZX, Goh J, Lin Z, Haaland B, Ting BP, et al. Prediction of adverse cardiac events in emergency department patients with chest pain using machine learning for variable selection. BMC Med Inform Decis Mak. 2014;14(1):75. https://doi.org/10.1186/1472-6947-14-75.

Liu N, Guo D, Koh ZX, Ho AFW, Xie F, Tagami T, et al. Heart rate n-variability (HRnV) and its application to risk stratification of chest pain patients in the emergency department. BMC Cardiovasc Disord. 2020;20(1):168. https://doi.org/10.1186/s12872-020-01455-8.

Meloun M, Militký J, Hill M, Brereton RG. Crucial problems in regression modelling and their solutions. Analyst. 2002;127(4):433–50. https://doi.org/10.1039/b110779h.

Dormann CF, Elith J, Bacher S, Buchmann C, Carl G, Carré G, et al. Collinearity: a review of methods to deal with it and a simulation study evaluating their performance. Ecography. 2013;36(1):27–46. https://doi.org/10.1111/j.1600-0587.2012.07348.x.

Gui J, Andrew AS, Andrews P, Nelson HM, Kelsey KT, Karagas MR, et al. A robust multifactor dimensionality reduction method for detecting gene-gene interactions with application to the genetic analysis of bladder cancer susceptibility. Ann Hum Genet. 2011;75(1):20–8. https://doi.org/10.1111/j.1469-1809.2010.00624.x.

Ritchie MD, Hahn LW, Roodi N, Bailey LR, Dupont WD, Parl FF, et al. Multifactor-dimensionality reduction reveals high-order interactions among estrogen-metabolism genes in sporadic breast cancer. Am J Hum Genet. 2001;69(1):138–47. https://doi.org/10.1086/321276.

Akhbardeh A, Jacobs MA. Comparative analysis of nonlinear dimensionality reduction techniques for breast MRI segmentation. Med Phys. 2012;39(4):2275–89. https://doi.org/10.1118/1.3682173.

Balvay D, Kachenoura N, Espinoza S, Thomassin-Naggara I, Fournier LS, Clement O, et al. Signal-to-noise ratio improvement in dynamic contrast-enhanced CT and MR imaging with automated principal component analysis filtering. Radiology. 2011;258(2):435–45. https://doi.org/10.1148/radiol.10100231.

Tarvainen MP, Cornforth DJ, Jelinek HF. Principal component analysis of heart rate variability data in assessing cardiac autonomic neuropathy. In: 2014 36th Annual International Conference of the IEEE Engineering in Medicine and Biology Society; 2014. p. 6667–70.

Vest AN, Da Poian G, Li Q, Liu C, Nemati S, Shah AJ, et al. An open source benchmarked toolbox for cardiovascular waveform and interval analysis. Physiol Meas. 2018;39(10):105004. https://doi.org/10.1088/1361-6579/aae021.

Maimon O, Rokach L. Data mining and knowledge discovery handbook. Berlin: Springer Publishing Company, Incorporated; 2010. https://doi.org/10.1007/978-0-387-09823-4.

Zhang Z. Variable selection with stepwise and best subset approaches. Ann Transl Med. 2016;4(7):136. https://doi.org/10.21037/atm.2016.03.35.

van der Maaten LJP, Postma EO, van den Herik HJ. Dimensionality reduction: a comparative review. In: Tilburg University Technical Report TiCC-TR 2009–005. Tilburg: Tilburg University; 2009.

Nguyen LH, Holmes S. Ten quick tips for effective dimensionality reduction. PLoS Comput Biol. 2019;15(6):e1006907. https://doi.org/10.1371/journal.pcbi.1006907.

Liu N, Wang H. Weighted principal component extraction with genetic algorithms. Appl Soft Comput. 2012;12(2):961–74. https://doi.org/10.1016/j.asoc.2011.08.030.

Pan Y, Ge SS, Al Mamun A. Weighted locally linear embedding for dimension reduction. Pattern Recogn. 2009;42(5):798–811. https://doi.org/10.1016/j.patcog.2008.08.024.

Artoni F, Delorme A, Makeig S. Applying dimension reduction to EEG data by principal component analysis reduces the quality of its subsequent independent component decomposition. Neuroimage. 2018;175:176–87. https://doi.org/10.1016/j.neuroimage.2018.03.016.

Diamantaras KI, Kung SY. Principal component neural networks: theory and applications. New Jersey: Wiley; 1996.

Schölkopf B, Smola AJ, Müller KR. Kernel principal component analysis. In: Advances in kernel methods: support vector learning. Cambridge: MIT Press; 1999. p. 327–52.

Landauer TK, Foltz PW, Laham D. An introduction to latent semantic analysis. Discourse Process. 1998;25(2–3):259–84. https://doi.org/10.1080/01638539809545028.

Dasgupta S. Experiments with random projection. In: Proceedings of the sixteenth conference on uncertainty in artificial intelligence. Stanford: Morgan Kaufmann Publishers Inc; 2000. p. 143–51.

Li P, Hastie TJ, Church KW. Very sparse random projections. In: Proceedings of the 12th ACM SIGKDD international conference on knowledge discovery and data mining. Philadelphia: Association for Computing Machinery; 2006. p. 287–96.

Mead A. Review of the development of multidimensional scaling methods. J Royal Stat Soc Ser D (The Statistician). 1992;41(1):27–39.

Tenenbaum JB, de Silva V, Langford JC. A global geometric framework for nonlinear dimensionality reduction. Science (New York, NY). 2000;290(5500):2319–23.

Roweis ST, Saul LK. Nonlinear dimensionality reduction by locally linear embedding. Science (New York, NY). 2000;290(5500):2323–6.

Gravesteijn BY, Nieboer D, Ercole A, Lingsma HF, Nelson D, van Calster B, et al. Machine learning algorithms performed no better than regression models for prognostication in traumatic brain injury. J Clin Epidemiol. 2020;122:95–107. https://doi.org/10.1016/j.jclinepi.2020.03.005.

Nusinovici S, Tham YC, Chak Yan MY, Wei Ting DS, Li J, Sabanayagam C, et al. Logistic regression was as good as machine learning for predicting major chronic diseases. J Clin Epidemiol. 2020;122:56–69. https://doi.org/10.1016/j.jclinepi.2020.03.002.

Lee JA, Verleysen M. Nonlinear dimensionality reduction. New York: Springer; 2007. https://doi.org/10.1007/978-0-387-39351-3.

D'Ascenzo F, Biondi-Zoccai G, Moretti C, Bollati M, Omedè P, Sciuto F, et al. TIMI, GRACE and alternative risk scores in acute coronary syndromes: a meta-analysis of 40 derivation studies on 216,552 patients and of 42 validation studies on 31,625 patients. Contemp Clin Trials. 2012;33(3):507–14. https://doi.org/10.1016/j.cct.2012.01.001.

Liu N, Ng JCJ, Ting CE, Sakamoto JT, Ho AFW, Koh ZX, et al. Clinical scores for risk stratification of chest pain patients in the emergency department: an updated systematic review. J Emerg Crit Care Med. 2018;2:16.

Byrne C, Toarta C, Backus B, Holt T. The HEART score in predicting major adverse cardiac events in patients presenting to the emergency department with possible acute coronary syndrome: protocol for a systematic review and meta-analysis. Syst Rev. 2018;7(1):148.

Liu N, Chee ML, Niu C, Pek PP, Siddiqui FJ, Ansah JP, et al. Coronavirus disease 2019 (COVID-19): an evidence map of medical literature. BMC Med Res Methodol. 2020;20(1):177. https://doi.org/10.1186/s12874-020-01059-y.

Xie J, Girshick R, Farhadi A. Unsupervised deep embedding for clustering analysis. In: Proceedings of the 33rd international conference on machine learning - volume 48. New York: JMLR.org; 2016. p. 478–87.

Xie F, Chakraborty B, Ong MEH, Goldstein BA, Liu N. AutoScore: a machine learning-based automatic clinical score generator and its application to mortality prediction using electronic health records. JMIR Med Inform. 2020;21798.

Rudin C. Stop explaining black box machine learning models for high stakes decisions and use interpretable models instead. Nat Machine Intelligence. 2019;1(5):206–15. https://doi.org/10.1038/s42256-019-0048-x.

Heinze G, Wallisch C, Dunkler D. Variable selection – a review and recommendations for the practicing statistician. Biom J. 2018;60(3):431–49. https://doi.org/10.1002/bimj.201700067.

Acknowledgments

We thank the doctors, nurses, and clinical research coordinators from the Department of Emergency Medicine, Singapore General Hospital, for their contributions.

Funding

This work was supported by the Duke-NUS Signature Research Programme funded by the Ministry of Health, Singapore. The funder of the study had no role in study design, data collection, data analysis, data interpretation, or writing of the report.

Author information

Contributions

NL conceived the study and supervised the project. NL and MLC performed the analyses and drafted the manuscript. NL, MLC, ZXK, SLL, AFWH, DG, and MEHO made substantial contributions to results interpretation and critical revision of the manuscript. All authors read and approved the final manuscript.

Ethics declarations

Ethics approval and consent to participate

Ethical approval was obtained from the Centralized Institutional Review Board (CIRB, Ref: 2014/584/C) of SingHealth, which waived the requirement for patient consent.

Consent for publication

Not applicable.

Competing interests

NL and MEHO hold patents related to using heart rate variability and artificial intelligence for medical monitoring. NL, ZXK, DG, and MEHO are advisers to TIIM SG. The other authors report no conflicts.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Liu, N., Chee, M.L., Koh, Z.X. et al. Utilizing machine learning dimensionality reduction for risk stratification of chest pain patients in the emergency department. BMC Med Res Methodol 21, 74 (2021). https://doi.org/10.1186/s12874-021-01265-2
