Abstract

Background

Atrial fibrillation (AF) is caused by different mechanisms but current treatment strategies do not target these mechanisms. Stratified therapy based on mechanistic drivers and biomarkers of AF have the potential to improve AF prevention and management outcomes. We will integrate mechanistic insights with known pathophysiological drivers of AF in models predicting recurrent AF and prevalent AF to test hypotheses related to AF mechanisms and response to rhythm control therapy.

Methods

We will harmonise and combine baseline and outcome data from 12 studies collected by six centres from the United Kingdom, Germany, France, Spain, and the Netherlands which assess prevalent AF or recurrent AF. A Delphi process and statistical selection will be used to identify candidate clinical predictors. Prediction models will be developed in patients with AF for AF recurrence and AF-related outcomes, and in patients with or without AF at baseline for prevalent AF. Models will be used to test mechanistic hypotheses and investigate the predictive value of plasma biomarkers.

Discussion

This retrospective, harmonised, individual patient data analysis will use information from 12 datasets collected in five European countries. It is envisioned that the outcome of this analysis would provide a greater understanding of the factors associated with recurrent and prevalent AF, potentially allowing development of stratified approaches to prevention and therapy management.

Similar content being viewed by others

Background

Atrial fibrillation (AF) is a common multifactorial disease that often remains undiagnosed until its first complication [1, 2]. It affects 2–3% of the population with growing incidence especially in the ageing Western population [3,4,5], with current estimates suggesting 150,000–200,000 newly diagnosed AF patients per year worldwide [2, 6, 7]. Patients with AF are at increased risk of stroke, cardiovascular death, heart failure, and cardiovascular hospitalisations [8,9,10,11,12]. Although anticoagulation can now prevent most strokes in patients with AF, other cardiovascular complications remain common even in optimally-treated patients [13]. Complications are also caused by side effects of therapy, and in addition, approximately 2% of AF patients still suffer from a major bleed even on modern anticoagulants [14, 15].

Early management of AF to restore and maintain sinus rhythm for preventing cardiovascular complications is vital [16]. Unfortunately, there is little evidence guiding rhythm control therapy approaches to prevent recurrent AF. Current treatment to prevent AF from recurring is often unsuccessful, with 30–70% of patients experiencing a recurrence within a year after initiation of antiarrhythmic drug therapy or catheter ablation [17,18,19]. Clinical interventions aiming to prevent AF recurrence, or to maintain sinus rhythm in patients with established AF, are largely based on “trial and error” approaches [20]. As a result, effective rhythm control is difficult to achieve and complications related to recurrent AF are common. It is clear that stratified approaches to AF prevention and management are needed to improve outcomes in AF patients. As AF is caused by many different mechanisms in different patients, there is potential for markers indicating these underlying mechanistic drivers of AF to aid the selection of an optimal, individualised therapeutic approach [21, 22].

In a variety of cardiovascular conditions, blood biomarkers have been successfully used to identify patients at risk of adverse outcomes. In AF, biomarkers can identify patients at risk of developing AF (e.g. natriuretic peptides [23,24,25]), and patients at risk of AF-related complications (e.g. troponin and GDF-15 relating to stroke and bleeding in anticoagulated patients with AF [26,27,28,29]).

The CATCH ME consortium (www.catch-me.info) was established with the aim of identifying and validating markers reflecting the major drivers of AF in patients to provide evidence for a mechanism-based therapeutic approach. This manuscript describes the methods that will be used to integrate markers related to potential causal mechanisms into prediction models for prevalent AF and recurrent AF in a large dataset harmonised from 12 distinct studies. Our analysis will include blood biomarkers quantified from plasma of a subset of patients.

Analytical approach

The analytical approach has three stages. In stage 1, models will be developed to predict (a) recurrent AF, (b) prevalent AF, (c) stroke, (d) cardiovascular death and (e) worsening of heart failure. These models will include clinical characteristics as predictors. In stage 2, further predictors reflecting the major drivers of AF as established in the literature as well as those identified in a differential gene expression analysis conducted by the CATCH ME consortium will be added to the model to evaluate their predictive value over and above clinical characteristics. Seven a priori hypotheses will be tested in stage 2 (see below). In stage 3, the predictive value of the blood biomarkers quantified from plasma samples in a subset of patients will be assessed.

Analyses will be undertaken in a combined dataset created by harmonising and merging individual patient-level clinical data [30] collected in 12 clinical research projects. The included studies are led by the CATCH ME consortium partners and reflect patients treated with varied treatment strategies in different health care systems. External validation of predictive models will be undertaken in a separate dataset. We will also compare the performance of the model for recurrent AF with 4 existing scores: the CHADS2 score [31], the HATCH score [32], the APPLE score [33], and the ATLAS score [34].

Specific hypotheses to be tested

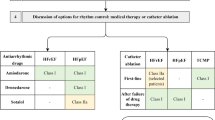

Different mechanisms of AF should translate into different patterns of recurrence, and therefore into different responses to rhythm control therapy [22]. The following seven a priori hypotheses were formulated based on published research.

-

1.

The recurrence of AF differs in patients with and without a genetic or genomic predisposition to AF [35] (defined as AF occurring first in those aged < 60 or with a family history of AF). In addition, we hypothesise that sodium channel blockers are more effective in preventing AF recurrence than other antiarrhythmic drugs in patients with a genetic or genomic predisposition to AF. This is based on experimental data on PITX2 levels and on the resting membrane potential [36].

-

2.

The recurrence of AF is more common in patients with concomitant heart failure than in those without heart failure (defined as elevated BNP, a surrogate marker for heart failure [37]). For patients with heart failure, we hypothesise that catheter ablation is more effective than antiarrhythmic drugs at preventing AF recurrence [38,39,40].

-

3.

The recurrence of AF is more common in obese patients (BMI ≥ 30). There is experimental and clinical evidence that increased fatty infiltration and activation of adipocytes in the atria cause AF [22, 41,42,43], while weight reduction reduces recurrent AF in obese patients with AF [44, 45].

-

4.

The recurrence of AF is more common in patients with severe hypertension (defined as left ventricular hypertrophy on echocardiogram or uncontrolled hypertension at baseline (blood pressure ≥ 160/90)) [46].

-

5.

The recurrence of AF is more common in patients with chronic kidney disease (defined as elevated levels of fibroblast growth factor-23 as a surrogate marker for atrial fibrosis [47,48,49]).

-

6.

The prevalence of AF is associated with exercise intensity, which has been shown to modulate the relationship between physical activity and AF. Exercise load has been shown to correlate with AF incidence through a U-shaped curve [50].

-

7.

The prevalence of AF is associated with height. Preliminary data suggests that the autonomic tone could mediate this relationship. The association between stature, sex, heart rate and AF will be tested.

Methods

Studies are eligible for inclusion if they include patients with AF, or who were at risk of AF, identified within the health care system. Longitudinal studies (both observational and randomised trials) are eligible to be included for assessment of recurrent AF, stroke, cardiovascular death and worsening of heart failure. Both prospective and retrospective (including case-control) studies are eligible. Longitudinal and cross-sectional studies are eligible for inclusion for assessment of prevalent AF. Studies have to document diagnoses of AF, key patient characteristics and interventions (where given), and provide individual patient datasets in a form suitable for harmonisation. Twelve studies have been identified from the CATCH ME collaborators which meet these criteria. Our rational for taking this approach instead of searching for eligible studies in a systematic review is that ours is a feasible method in which individual patient data can be obtained in a useful way.

Description of studies

Individual patient data from the 12 studies will be merged into a single combined dataset (see Additional file 1: Supplementary S1). Data relevant to the design and analysis of the studies were collated from ongoing and closed studies from five European countries (United Kingdom, Germany, France, Netherlands and Spain), contributed by the CATCH ME consortium partners (See Table 1). To achieve comparability of the same latent concepts measured in different studies, the original data coding will be retrospectively harmonised using a semi-automated transformation process during which the source data is assessed and recoded to a common format. The data owners have identified a total of 270 variables (179 baseline, 91 follow-up) covering a wide breadth of information (e.g. patient demographics, rhythm history, cardiovascular disease history, medications, study logistics etc.; see Additional file 1: Supplementary S2). During the harmonisation process, variables from the original dataset will be mapped to their respective target variables. Patient records from seven studies will be augmented by biomarker data obtained by analysing plasma samples from these patients. All biomarkers will be quantified centrally in a core lab using quality-controlled, standardised processes. Ethical approval for repurposing the data will be monitored and overseen by the Atrial Fibrillation NETwork (AFNET, DE).

Candidate variables for inclusion

One hundred and twenty of the 179 baseline variables are patient characteristics potentially suitable for inclusion in the analysis. The most important patient characteristics will be identified using a Delphi process conducted within the Consortium. These patient characteristics will include variables previously associated with AF as well as markers arising from experiments conducted by CATCH ME partners [22] and others. The Delphi process will rank patient characteristics according to their expected relative importance in predicting recurrent AF and prevalent AF. Similar predictors which assess common clinical concepts will be combined into single variables to minimise the possibility of collinearity within the analysis and lessen the impact of missing data. The highest ranked characteristics will go forward into the statistical selection process (below); the number included will be determined by the sample size calculations (see below) which will establish a subset of characteristics (the candidate predictors). In subsequent statistical steps, candidate predictors will be assessed to determine if they are predictive for the outcomes of recurrent AF. This subset of candidate predictors, deemed both clinically and statistically important are referred to as the confirmed predictors (Fig. 1).

Sample size considerations

The number of candidate predictors that can be included in model development is determined by the total number of events for the outcome of interest. We will follow general guidelines that suggest a minimum of 10 events per parameter be considered in a development model [54]. This estimate is known to be conservative for larger lists of parameters, where five events per parameter may suffice [54,55,56,57]. Categorical predictors are counted as multiple predictors in this assessment, with the number of indicator variables added to the total count of predictors.

Stage 1: development of predictive models

Cox regression will be used to develop a predictive model for time-to-recurrence of AF in patients previously diagnosed with AF. For other follow-up outcomes (stroke, cardiovascular death, worsening of heart failure), Cox regression models will be used if follow-up time is available, otherwise logistic regression models will be used. Logistic regression will be used for prevalent AF prediction (Fig. 2).

Missing data

It is expected that the CATCH ME combined database will have missing data for candidate predictors which were not measured in all studies. A detailed assessment of the quantity of missing data across studies and predictor variables will be carried out. We will use multiple imputation using chained equations to account for missing data, assuming data is missing at random (MAR). Multiple imputation will be completed separately for each of the studies after candidate predictors have been identified [58]. Candidate predictors with more than 70% missing (in any study) will be excluded. Characteristics with less than 70% missing data which are not candidate predictors will be included in the multiple imputation procedure as auxiliary characteristics but will not be included as predictors in the models.

The percentage of participants with incomplete data on the candidate predictors will be used to determine the number of imputations required. For example, if in one study 37% of individual participants have incomplete data across all of the eligible candidate predictors then, the number of imputations required would be 37 [59, 60]. Required numbers of imputations will be computed for each study, and we will use the maximum value to ensure it is possible to combine the multiple imputed datasets across all studies.

Statistical selection and non-linearity

To develop each model we will use backward elimination (BE) to select confirmed predictors from the list of eligible candidate predictors using a p-value of 0.157. This significance level has been chosen as it is considered a good proxy to use in place of the Akaike information criterion (AIC) approach [61]. Age and sex will be forced to remain in the statistical selection process irrespective of whether they are statistically significant. The original study will also be included in the model. All continuous predictors will be kept in their original format and will not be dichotomised or categorised to minimise information loss [40]. Continuous characteristics will be assessed for non-linearity. Predictors found to be non-linear will be incorporated using multivariable fractional polynomials (MFP) to allow non-linear relationships. Transforming the continuous predictors using MFP will produce models that are more precise and are more likely to satisfy model assumptions [41].

Combining multiple imputation and statistical selection procedure

The statistical selection procedure and non-linearity assessments will be performed on the combined imputed datasets (combined across studies and imputations). Multiple imputation datasets are usually combined using Rubin’s rule [40]. However when using statistical selection on multiply imputed data it is likely that for each imputed dataset different predictors will be selected. Hence, the repeated use of Rubin’s Rule across these different selected predictors is computationally challenging. We therefore will use an approximation to Rubin’s rule outlined in Wood, White [62].

Statistical analysis model and performance measures

Model performance will be assessed, primarily looking at the calibration and discrimination of the model. The calibration of the model will compare the probability of the observed risk to the expected risk using a calibration plot. For determining the models discriminatory ability we will assess using the C-statistic (Harrell’s C-statistic) and its corresponding 95% confidence interval [63].

Internal validation

To assess optimism and overfitting, the bootstrap method will be used to internally validate the development models. One hundred bootstraps will be used on each of the imputed datasets [64, 65]: each of the imputed and bootstrapped datasets will be analysed individually to determine the calibration and C-statistic values and these will be appropriately combined across the imputed datasets to produce a single value of calibration and C-statistic. The calibration value will be used to adjust the coefficients to ‘shrink’ their value (shrinkage factor) hence allowing us to adjust for over-optimism within our development models. Completing internal validation will affect both the calibration and discrimination values in addition to the coefficient values found in the development model, as they will all be adjusted for the over-optimism. The values reported for the final models will be corrected by the shrinkage factor, along with both optimism adjusted and original C-statistic and calibration values.

Comparison of developed models to currently available models

We will compare predictions from our new models with those of the CHADS2 score [31], HATCH score [32], APPLE score [33], and ATLAS score [34] for recurrence of AF within the CATCH ME combined dataset. Models will be compared using C-statistics.

Stage 2: tests of seven specified hypotheses

The seven a priori hypotheses outlined above will be tested, adjusting for differences in case-mix using the prediction models developed for prevalent and recurrent AF. Cox-regression models will be used for recurrent AF statements and logistic regression for prevalent AF statements. Each model will include a calculation of the predictive risk for each patient computed using the prediction model as a fixed offset term, and additional terms for study to allow for baseline differences in risk. The hypotheses will be tested by adding covariates and interactions to these models. Statements 1–5 pertaining to recurrent AF will use the predicted risk of AF obtained from the predictive model as an offset term. The remaining statements relate to prevalent AF and will use the predicted risk of AF in the same way.

Specifically the first and second hypotheses statements will be assessed by testing for an interaction between treatment and patient status (genetic predisposition for AF/heart failure). Further hypotheses will compare outcomes between two or more groups. These hypothesis tests will be performed on complete data for treatment and these covariates. In summary, the models addressing each hypothesis statement will consist of the predictive risk of AF, the appropriate characteristics and interactions as per the hypothesis and will also adjust for study.

Stage 3: plasma biomarkers

The statistical predictive ability of selected biomarkers quantified in a subset of patients will be investigated for recurrent AF and prevalent AF. The predictive value of each of the biomarkers will be assessed univariately and in addition to the predicted risk of AF recurrence and prevalent AF obtained from the developed models (included as an offset term to adjust for differences in case-mix) whilst adjusting for study. Biomarkers will be considered individually and in combination (see Additional file 2: Supplementary Statistical Analysis Plan for full details).

Discussion

AF requires chronic and multidimensional management. Providing all treatment options is challenging and ineffective for optimal patient care. While stroke prevention therapy can be selected based on a few clinical parameters in most patients with AF, selecting rhythm control therapy is currently based on personal preferences, local protocols, or availability of treatments. Evidence supporting stratification of rhythm control therapy options in patients with AF would thus meet a clinical need. Similarly, knowledge of the major drivers of recurrent AF could inform strategies to prevent AF, e.g. through targeted modification of risk factors. This retrospective, harmonised, individual patient data analysis will use information from 12 datasets collected in five European countries. Both expert consensus (using a Delphi process) and statistical selection will be applied for a rigorous identification of relevant predictors. Our novel approach will combine clinical characteristics with plasma biomarkers to identify the best predictors of recurrent AF and prevalent AF and use the models to test mechanistic hypotheses and investigate the predictive value of the biomarkers. The heterogeneity of the data will pose analytical and interpretive challenges, however, it will simultaneously drive the pioneering of novel methods for interrogating existing datasets while maintaining the quality and integrity of the data. It is envisioned that the outcome of this analysis would provide a greater understanding of the factors associated with recurrence of AF, potentially allowing development of stratified approaches to prevention of recurrent AF.

Abbreviations

- AF:

-

Atrial fibrillation

References

Chugh SS, Havmoeller R, Narayanan K, Singh D, Rienstra M, Benjamin EJ, et al. Worldwide epidemiology of atrial fibrillation: a global burden of disease 2010 study. Circulation. 2014;129(8):837–47.

Colilla S, Crow A, Petkun W, Singer DE, Simon T, Liu X. Estimates of current and future incidence and prevalence of atrial fibrillation in the U.S. adult population. Am J Cardiol. 2013;112(8):1142–7.

Heeringa J, van der Kuip DA, Hofman A, Kors JA, van Herpen G, Stricker BH, et al. Prevalence, incidence and lifetime risk of atrial fibrillation: the Rotterdam study. Eur Heart J. 2006;27(8):949–53.

Lloyd-Jones DM, Wang TJ, Leip EP, Larson MG, Levy D, Vasan RS, et al. Lifetime risk for development of atrial fibrillation: the Framingham heart study. Circulation. 2004;110(9):1042–6.

Go AS, Hylek EM, Phillips KA, Chang Y, Henault LE, Selby JV, et al. Prevalence of diagnosed atrial fibrillation in adults: national implications for rhythm management and stroke prevention: the AnTicoagulation and risk factors in atrial fibrillation (ATRIA) study. JAMA. 2001;285(18):2370–5.

Krijthe BP, Kunst A, Benjamin EJ, Lip GY, Franco OH, Hofman A, et al. Projections on the number of individuals with atrial fibrillation in the European Union, from 2000 to 2060. Eur Heart J. 2013;34(35):2746–51.

Zoni-Berisso M, Lercari F, Carazza T, Domenicucci S. Epidemiology of atrial fibrillation: European perspective. Clinical epidemiology. 2014;6:213–20.

Wolf PA, Abbott RD, Kannel WB. Atrial fibrillation as an independent risk factor for stroke: the Framingham study. Stroke. 1991;22(8):983–8.

Krahn AD, Manfreda J, Tate RB, Mathewson FA, Cuddy TE. The natural history of atrial fibrillation: incidence, risk factors, and prognosis in the Manitoba follow-up study. Am J Med. 1995;98(5):476–84.

Stewart S, Hart CL, Hole DJ, McMurray JJ. A population-based study of the long-term risks associated with atrial fibrillation: 20-year follow-up of the Renfrew/Paisley study. Am J Med. 2002;113(5):359–64.

Benjamin EJ, Wolf PA, D'Agostino RB, Silbershatz H, Kannel WB, Levy D. Impact of atrial fibrillation on the risk of death: the Framingham heart study. Circulation. 1998;98(10):946–52.

Andersson T, Magnuson A, Bryngelsson IL, Frobert O, Henriksson KM, Edvardsson N, et al. All-cause mortality in 272,186 patients hospitalized with incident atrial fibrillation 1995-2008: a Swedish nationwide long-term case-control study. Eur Heart J. 2013;34(14):1061–7.

Kotecha D, Holmes J, Krum H, Altman DG, Manzano L, Cleland JG, et al. Efficacy of beta blockers in patients with heart failure plus atrial fibrillation: an individual-patient data meta-analysis. Lancet. 2014;384(9961):2235–43.

Camm AJ, Amarenco P, Haas S, Hess S, Kirchhof P, Kuhls S, et al. XANTUS: a real-world, prospective, observational study of patients treated with rivaroxaban for stroke prevention in atrial fibrillation. Eur Heart J. 2016;37(14):1145–53.

Heidbuchel H, Verhamme P, Alings M, Antz M, Diener HC, Hacke W, et al. Updated European heart rhythm association practical guide on the use of non-vitamin-K antagonist anticoagulants in patients with non-valvular atrial fibrillation: executive summary. Eur Heart J. 2016.

Nattel S, Guasch E, Savelieva I, Cosio FG, Valverde I, Halperin JL, et al. Early management of atrial fibrillation to prevent cardiovascular complications. Eur Heart J. 2014;35(22):1448–56.

Hohnloser SH, Crijns HJ, van Eickels M, Gaudin C, Page RL, Torp-Pedersen C, et al. Effect of dronedarone on cardiovascular events in atrial fibrillation. N Engl J Med. 2009;360(7):668–78.

Kirchhof P, Andresen D, Bosch R, Borggrefe M, Meinertz T, Parade U, et al. Short-term versus long-term antiarrhythmic drug treatment after cardioversion of atrial fibrillation (Flec-SL): a prospective, randomised, open-label, blinded endpoint assessment trial. Lancet. 2012;380(9838):238–46.

Verma A, Jiang CY, Betts TR, Chen J, Deisenhofer I, Mantovan R, et al. Approaches to catheter ablation for persistent atrial fibrillation. N Engl J Med. 2015;372(19):1812–22.

Kirchhof P, Benussi S, Kotecha D, Ahlsson A, Atar D, Casadei B, et al. 2016 ESC guidelines for the management of atrial fibrillation developed in collaboration with EACTS. Eur Heart J. 2016;37(38):2893–962.

Kirchhof P. The future of atrial fibrillation management: integrated care and stratified therapy. Lancet. 2017;390(10105):1873–87.

Fabritz L, Guasch E, Antoniades C, Bardinet I, Benninger G, Betts TR, et al. Expert consensus document: defining the major health modifiers causing atrial fibrillation: a roadmap to underpin personalized prevention and treatment. Nat Rev Cardiol. 2016;13(4):230–7.

Arakawa M, Miwa H, Noda T, Ito Y, Kambara K, Kagawa K, et al. Alternations in atrial natriuretic peptide release after DC cardioversion of non-valvular chronic atrial fibrillation. Eur Heart J. 1995;16(7):977–85.

Rossi A, Enriquez-Sarano M, Burnett JC Jr, Lerman A, Abel MD, Seward JB. Natriuretic peptide levels in atrial fibrillation: a prospective hormonal and Doppler-echocardiographic study. J Am Coll Cardiol. 2000;35(5):1256–62.

Santema BT, Kloosterman M, Van Gelder IC, Mordi I, Lang CC, Lam CSP, et al. Comparing biomarker profiles of patients with heart failure: atrial fibrillation vs. sinus rhythm and reduced vs. preserved ejection fraction. Eur Heart J. 2018.

Hijazi Z, Wallentin L, Siegbahn A, Andersson U, Christersson C, Ezekowitz J, et al. NT-proBNP for risk assessment in patients with atrial fibrillation: insights from the ARISTOTLE trial. J Am Coll Cardiol. 2013.

Hijazi Z, Wallentin L, Siegbahn A, Andersson U, Alexander JH, Atar D, et al. High-sensitivity troponin T and risk stratification in patients with atrial fibrillation during treatment with apixaban or warfarin. J Am Coll Cardiol. 2014;63(1):52–61.

Hijazi Z, Lindback J, Alexander JH, Hanna M, Held C, Hylek EM, et al. The ABC (age, biomarkers, clinical history) stroke risk score: a biomarker-based risk score for predicting stroke in atrial fibrillation. Eur Heart J. 2016.

Hijazi Z, Oldgren J, Lindback J, Alexander JH, Connolly SJ, Eikelboom JW, et al. The novel biomarker-based ABC (age, biomarkers, clinical history)-bleeding risk score for patients with atrial fibrillation: a derivation and validation study. Lancet. 2016;387(10035):2302–11.

Fortier I, Raina P, Van den Heuvel ER, Griffith LE, Craig C, Saliba M, et al. Maelstrom research guidelines for rigorous retrospective data harmonization. Int J Epidemiol. 2017;46(1):103–5.

Gage BF, Waterman AD, Shannon W, Boechler M, Rich MW, Radford MJ. Validation of clinical classification schemes for predicting stroke: results from the National Registry of atrial fibrillation. JAMA. 2001;285(22):2864–70.

de Vos CB, Pisters R, Nieuwlaat R, Prins MH, Tieleman RG, Coelen RJ, et al. Progression from paroxysmal to persistent atrial fibrillation clinical correlates and prognosis. J Am Coll Cardiol. 2010;55(8):725–31.

Kornej J, Hindricks G, Shoemaker MB, Husser D, Arya A, Sommer P, et al. The APPLE score: a novel and simple score for the prediction of rhythm outcomes after catheter ablation of atrial fibrillation. Clin Res Cardiol. 2015;104(10):871–6.

Mesquita J, Ferreira AM, Cavaco D, Moscoso Costa F, Carmo P, Marques H, et al. Development and validation of a risk score for predicting atrial fibrillation recurrence after a first catheter ablation procedure - ATLAS score. Europace. 2017.

Roselli C, Chaffin MD, Weng LC, Aeschbacher S, Ahlberg G, Albert CM, et al. Multi-ethnic genome-wide association study for atrial fibrillation. Nat Genet. 2018.

Syeda F, Holmes AP, Yu TY, Tull S, Kuhlmann SM, Pavlovic D, et al. PITX2 modulates atrial membrane potential and reduced PITX2 potentiates the antiarrhythmic effects of sodium-channel blockers. JACC. 2016;68:59–72. https://doi.org/10.1016/j.jacc.2016.07.766.

Levin ER, Gardner DG, Samson WK. Natriuretic Peptides. N Engl J Med. 1998;339(5):321–8.

Hsu LF, Jais P, Sanders P, Garrigue S, Hocini M, Sacher F, et al. Catheter ablation for atrial fibrillation in congestive heart failure. N Engl J Med. 2004;351(23):2373–83.

Khan MN, Jais P, Cummings J, Di Biase L, Sanders P, Martin DO, et al. Pulmonary-vein isolation for atrial fibrillation in patients with heart failure. N Engl J Med. 2008;359(17):1778–85.

Marrouche NF, Brachmann J, Andresen D, Siebels J, Boersma L, Jordaens L, et al. Catheter ablation for atrial fibrillation with heart failure. N Engl J Med. 2018;378(5):417–27.

Haemers P, Hamdi H, Guedj K, Suffee N, Farahmand P, Popovic N, et al. Atrial fibrillation is associated with the fibrotic remodelling of adipose tissue in the subepicardium of human and sheep atria. Eur Heart J. 2015.

Venteclef N, Guglielmi V, Balse E, Gaborit B, Cotillard A, Atassi F, et al. Human epicardial adipose tissue induces fibrosis of the atrial myocardium through the secretion of adipo-fibrokines. Eur Heart J. 2013.

Suffee N, Moore-Morris T, Farahmand P, Rucker-Martin C, Dilanian G, Fradet M, et al. Atrial natriuretic peptide regulates adipose tissue accumulation in adult atria. Proc Natl Acad Sci U S A. 2017;114(5):E771–E80.

Pathak RK, Middeldorp ME, Meredith M, Mehta AB, Mahajan R, Wong CX, et al. Long-term effect of goal-directed weight Management in an Atrial Fibrillation Cohort: A Long-Term Follow-Up Study (LEGACY). J Am Coll Cardiol. 2015;65(20):2159–69.

Wong CX, Abed HS, Molaee P, Nelson AJ, Brooks AG, Sharma G, et al. Pericardial fat is associated with atrial fibrillation severity and ablation outcome. J Am Coll Cardiol. 2011;57(17):1745–51.

Schotten U, Verheule S, Kirchhof P, Goette A. Pathophysiological mechanisms of atrial fibrillation: a translational appraisal. Physiol Rev. 2011;91(1):265–325.

Seiler S, Cremers B, Rebling NM, Hornof F, Jeken J, Kersting S, et al. The phosphatonin fibroblast growth factor 23 links calcium-phosphate metabolism with left-ventricular dysfunction and atrial fibrillation. Eur Heart J. 2011;32(21):2688–96.

Geach T. Atrial fibrillation: FGF-23 associated with incident AF--a link with CKD? Nat Rev Cardiol. 2014;11(8):436.

Chua W, Purmah Y, Cardoso VR, Gkoutos GV, Tull SP, Neculau G, Thomas MR, Kotecha D, Lip GYH, Kirchhof P, Fabritz L. Data-driven discovery and validation of circulating blood-based biomarkers associated with prevalent atrial fibrillation. Eur Heart J. 2019;40(16):1268–76. https://doi.org/10.1093/eurheartj/ehy815. PMID: 30615112.

Guasch E, Mont L. Diagnosis, pathophysiology, and management of exercise-induced arrhythmias. Nat Rev Cardiol. 2017;14(2):88–101.

Kirchhof P, Fetsch T, Hanrath P, Meinertz T, Steinbeck G, Lehmacher W, et al. Targeted pharmacological reversal of electrical remodeling after cardioversion--rationale and design of the Flecainide short-long (Flec-SL) trial. Am Heart J. 2005;150(5):899.

Mont L, Tamborero D, Elosua R, Molina I, Coll-Vinent B, Sitges M, et al. Physical activity, height, and left atrial size are independent risk factors for lone atrial fibrillation in middle-aged healthy individuals. Europace. 2008;10(1):15–20.

Berruezo A, Tamborero D, Mont L, Benito B, Tolosana JM, Sitges M, et al. Pre-procedural predictors of atrial fibrillation recurrence after circumferential pulmonary vein ablation. Eur Heart J. 2007;28(7):836–41.

Peduzzi P, Concato J, Kemper E, Holford TR, Feinstein AR. A simulation study of the number of events per variable in logistic regression analysis. J Clin Epidemiol. 1996;49(12):1373–9.

Green SB. How many subjects does it take to do a regression analysis. Multivariate Behav Res. 1991;26(3):499–510.

Vittinghoff E, McCulloch CE. Relaxing the rule of ten events per variable in logistic and cox regression. Am J Epidemiol. 2007;165(6):710–8.

Austin PC, Steyerberg EW. The number of subjects per variable required in linear regression analyses. J Clin Epidemiol. 2015;68(6):627–36.

Resche-Rigon M, White IR, Bartlett JW, Peters SA, Thompson SG, Group P-IS. Multiple imputation for handling systematically missing confounders in meta-analysis of individual participant data. Stat Med. 2013;32(28):4890–905.

Bodner TE. What improves with increased missing data imputations? Struct Equ Modeling. 2008;15(4):651–75.

White IR, Royston P, Wood AM. Multiple imputation using chained equations: issues and guidance for practice. Stat Med. 2011;30(4):377–99.

Sauerbrei W. The use of resampling methods to simplify regression models in medical statistics. J Roy Stat Soc C-App. 1999;48:313–29.

Wood AM, White IR, Royston P. How should variable selection be performed with multiply imputed data? Stat Med. 2008;27(17):3227–46.

Harrell FE, Lee KL, Mark DB. Multivariable prognostic models: issues in developing models, evaluating assumptions and adequacy, and measuring and reducing errors. Stat Med. 1996;15.

Altman DG, Andersen PK. Bootstrap investigation of the stability of a cox regression model. Stat Med. 1989;8.

Baneshi MR, Talei A. Assessment of internal validity of prognostic models through bootstrapping and multiple imputation of missing data. Iran J Public Health. 2012;41(5):110–5.

Acknowledgements

Not applicable.

Funding

This work was primarily supported by European Union (grant agreement No 633196 [CATCH ME] to PK, LF, EG, LM, US, HJGMC, SK, MFS, BC, SH, DH). Further support came from British Heart Foundation (FS/13/43/30324 to PK and LF; German Centre for Cardiovascular Research to PK, US, SK, MFS; the Netherlands Heart Foundation (CVON2014–09, RACE V: Reappraisal of Atrial Fibrillation: Interaction between hyperCoagulability, Electrical remodeling, and Vascular Destabilisation in the Progression of AF) to US; AGAUR (Agència de Gestió d’Ajuts Universitaris i de Recerca, 2017SGR1548) to EG and LM, Instituto de Salud Carlos III (PI16/00703 to EG; PI16/00435 to LM). The funding bodies did not play any roles in the design of the study and collection, analysis, and interpretation of data and in writing the manuscript.

Availability of data and materials

There is no data in this protocol manuscript.

Disclaimer

This paper presents independent research supported by the NIHR Birmingham Biomedical Research Centre at the University Hospitals Birmingham NHS Foundation Trust and the University of Birmingham. The views expressed are those of the author(s) and not necessarily those of the NHS, the NIHR or the Department of Health and Social Care.

Author information

Authors and Affiliations

Contributions

The manuscript was drafted by WC, CE, JJD, PK, LF and critically revised by all authors. The analysis plan was designed by CE, AS, KH, JJD. The idea of the work was conceived by BC, HJGMC, LF, EG, SH, PK, SK, LM, MFS, US. Ethics was overseen by DH. Access to data was provided by HJGMC, LF, EG, SH, PK, SK, LM, MFS, US. All authors reviewed and agreed for the manuscript to be published.

Corresponding author

Ethics declarations

Ethics approval and consent to participate

This analysis protocol is approved by the University of Birmingham, UK (ERN_15–0062), and complies with ethical requirements by the European Commission for projects funded by Horizon 2020. All institutional ethical and legal regulations and requirements applicable to individual studies were adhered to during the process of data compilation and biomarker quantification. The ethics committee waived the need for consent.

Consent for publication

Not applicable.

Competing interests

BC, HJGMC, WC, CE, DH, KH, SH, EG, LM, SK, AS, MFS have nothing to disclose. JJD reports being an NIHR Senior Investigator Emeritus and is supported by the NIHR Birmingham Biomedical Research Centre at the University Hospitals Birmingham NHS Foundation Trust and the University of Birmingham. US is co-founder and shareholder of YourRhythmics BV, a spin-off company of the University Maastricht and received consultancy fees or honoraria from Roche Diagnostics (Switzerland) and Bayer Healthcare (Germany). PK has received support for research from the European Union, British Heart Foundation, Leducq Foundation, Medical Research Council (UK), and German Centre for Heart Research, from several drug and device companies active in atrial fibrillation, honoraria from several such companies; and is listed as inventor on two patents held by University of Birmingham (Atrial Fibrillation Therapy WO 2015140571, Markers for Atrial Fibrillation WO 2016012783). LF has received institutional research grants and non-financial support from European Union, British Heart Foundation, Medical Research Council (UK), and DFG and Gilead; and is listed as inventor on two patents held by University of Birmingham (Atrial Fibrillation Therapy WO 2015140571, Markers for Atrial Fibrillation WO 2016012783).

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Additional files

Additional file 1:

Supplementary S1: Individual dataset descriptions. Supplementary S2: Variables extracted from datasets for the combined database (DOCX 75 kb)

Additional file 2:

Statistical analysis plan – CATCH ME –Prognostic development models (DOCX 281 kb)

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated.

About this article

Cite this article

Chua, W., Easter, C.L., Guasch, E. et al. Development and external validation of predictive models for prevalent and recurrent atrial fibrillation: a protocol for the analysis of the CATCH ME combined dataset. BMC Cardiovasc Disord 19, 120 (2019). https://doi.org/10.1186/s12872-019-1105-4

Received:

Accepted:

Published:

DOI: https://doi.org/10.1186/s12872-019-1105-4